Abstract

When our school organized the curriculum around a core set of medical student competencies in 2004, it was clear that more numerous and more varied student assessments were needed. To oversee a systematic approach to the assessment of medical student competencies, the Office of College-wide Assessment was established, led by the Associate Dean of College-wide Assessment. The mission of the Office is to ‘facilitate the development of a seamless assessment system that drives a nimble, competency-based curriculum across the spectrum of our educational enterprise.’ The Associate Dean coordinates educational initiatives, develops partnerships to solve common problems, and enhances synergy within the College. The Office also works to establish data collection and feedback loops to guide rational intervention and continuous curricular improvement. Aside from feedback, implementing a systems approach to assessment provides a means for identifying performance gaps, promotes continuity from undergraduate medical education to practice, and offers a rationale for some assessments to be located outside of courses and clerkships. Assessment system design, data analysis, and feedback require leadership, a cooperative faculty team with medical education expertise, and institutional support. The guiding principle is ‘Better Data for Teachers, Better Data for Learners, Better Patient Care.’ Better data empowers faculty to become change agents and learners to create evidence-based improvement plans, and it increases accountability to our most important stakeholders, our patients.

Keywords: competencies, assessment, medical education, outcomes, systems

Introduction

In recent years there has been increasing recognition of the need to focus on learner assessment within the context of the medical curriculum. While medical school curricula continue to be dominated by the delivery of content and experience, the Association of American Medical Colleges' (AAMC) Medical School Objectives Project (1) and the Accreditation Council for Graduate Medical Education (ACGME) Outcomes Project (2) and Milestones initiative (3) have challenged medical educators by redirecting the curricula toward competency rather than content. Certainly, the recent AMA-AAMC New Horizons in Medical Education (4) was predicated on the need to reform medical education, emphasizing a focus on assessment and outcomes. If medical education has a minimum goal of competency described in terms of performance outcomes, then it is incumbent on schools to move in the direction of assessing for competency and outcomes.

Assessing competencies and the broader outcomes of medical education requires more complex tools than the multiple choice examinations and summative clinical rotation evaluations traditionally used by medical schools. This traditional model of content and ‘dwell time’ used tests of medical knowledge as a proxy for skills and preceptor evaluations as a proxy for just about everything else. It is now widely recognized that these tools are inadequate for assessing such things as communication skills, technical skills, professionalism, systems-based practice, and critical thinking.

To address these needs, medical schools have been challenged to be more thoughtful about incorporating a variety of assessment methods, many of which have been used in other fields but are generally less familiar in medical education (5). The options available to a curriculum committee include portfolios, a variety of multiple choice tests, oral examinations, 360-degree reviews, patient or peer assessments, journaling, objective structured clinical examinations (OSCEs), performance-based assessments, virtual cases, virtual clinics, simulated patient encounters, and simulated environments. Each method has strengths and weaknesses with respect to fidelity, cost, faculty involvement, and reliability.

Clearly, the public expects medical schools to graduate competent physicians, and it is only reasonable for a medical school to be able to demonstrate that its graduates are competent for their level of training. Identifying those who are not competent is not only a matter of public safety and trust: determining which students are struggling is a necessary first step toward helping them improve and work toward competence.

Establishing the Office of College-wide Assessment

When our school decided to organize the curriculum around a core set of medical student competencies in 2004, it was clear that the curriculum would need more numerous and varied assessments. While the curriculum committee endorsed a variety of potential changes, it became obvious that a key missing element was people dedicated to the task. The creation and meaningful implementation of an integrated assessment system required the support of the Dean and the medical education administration, as well as the continuous focus of the curriculum committee. Furthermore, the successful creation and implementation of new assessments would require considerable faculty time, particularly from course and clerkship directors. With this in mind, the Dean created the Office of College-wide Assessment in 2008, led by the Associate Dean of College-wide Assessment.

The expressed mission of the Office of College-wide Assessment is ‘to facilitate the development of a seamless assessment system that drives a nimble, competency-based curriculum across the spectrum of our educational enterprise.’ This Office has the responsibility to create an assessment continuum from the postbaccalaureate program through the medical education program and on to residency training. The goal is that the College will be able to track the competency of students and the effectiveness of the curriculum throughout a learner's medical education career.

Creating and implementing a meaningful assessment system required a deep commitment to competency-based education from faculty and the administration in terms of space, faculty development resources, faculty effort, and curricular time. Aside from institutional commitment, a number of structural features of the Office of College-wide Assessment were important for maximizing the likelihood of success in achieving its mission.

Leadership

The success of this office and the vision guiding its many initiatives is in large part a result of the leadership provided by the Associate Dean for College-wide Assessment. The founding Associate Dean for College-wide Assessment is an experienced physician–educator. It is important to note that the person picked to create the position (DPW) had been a course and clerkship director in all years of the curriculum, had been an associate residency director and the associate dean for graduate medical education, and had a long history of educational innovation and excellent interpersonal skills. The dean developed a faculty development plan that included a number of national and international conferences, mentoring from the Office of Medical Education Research and Development (OMERAD), and encouragement to partner with other colleges at Michigan State University and around the country.

Visibility

The Associate Dean sits on all core curricular governance committees. As a result, assessment has become a recurring agenda item at standing, project, and ad hoc committees. This creates a placeholder for ongoing discussions about general and specific assessment problems and plans.

Integration

A major role of the Office of College-wide Assessment is to enhance synergy within the College. Much expertise and energy were already in place throughout the College, but the faculty and departments needed focus and expertise. Rather than bringing existing resources and expertise into this office to create a large centralized unit within the College, the Associate Dean has been able to use existing resources to great effect, creating efficiencies and reducing duplication. The Associate Dean has played a significant role in coordinating initiatives throughout the College and developing partnerships to solve common problems.

Competency-driven

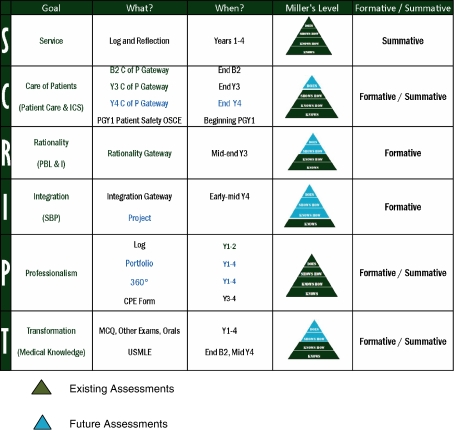

By 2005, the College of Human Medicine had adopted a set of core competencies for organizing the undergraduate medical school curriculum. The CHM competencies, abbreviated as the acronym SCRIPT, have analogs with each of the ACGME competencies but include specific knowledge, skills, and attitudes more central to the CHM mission (Table 1). Orienting the existing college curriculum to the competency-based SCRIPT obviously required curriculum reform, but the curriculum committee determined that curricular reform could be directed by assessment rather than a reworking of courses and their content.

Table 1.

Curricular competencies (SCRIPT) for the College of Human Medicine

| CHM competency (SCRIPT) | Related ACGME competency |
| --- | --- |
| SERVICE | (no ACGME-related competency) |
| CARE OF PATIENTS | Patient care and interpersonal and communication skills |
| RATIONALITY | Practice-based learning and improvement |
| INTEGRATION | Systems-based practice |
| PROFESSIONALISM | Professionalism |
| TRANSFORMATION | Medical knowledge |

Engaging faculty expertise

A dedicated group of experienced faculty–educators was convened to provide systematic analysis of required summative and formative performance assessments as well as to address specific problems identified within the educational program. The members of this group, known as the competence committee, provided wide representation and influence from across the curriculum (6). As a group they have broad knowledge of and experience with many assessment methodologies available to medical educators.

Continuum

Since the ACGME competencies were mandated for graduate medical education and the college had created competencies that mapped onto those of the ACGME, it was obvious that any coherent assessment system should form a continuum from undergraduate through graduate medical education. Faculty observed that many significant educational challenges occurred when students transitioned from one environment to another (7, 8). Any new assessment system would need to assure faculty that students were transition-ready. Within this context, the work of the Office of College-wide Assessment places a special emphasis on the learning transitions across the medical school continuum from undergraduate medical education through graduate and continuing medical education.

The rationale for a systematic approach

With the establishment of the Office of College-wide Assessment, our medical school was well positioned to address a variety of concerns, many of which were not unique to our institution. While helping students become competent was reason enough to pay closer attention to assessment, good assessment is also a key step toward continuous curricular improvement. The Office of College-wide Assessment establishes data collection and feedback loops about what our students can actually do. Discovering what students can and cannot do is necessary for any rational intervention in the curriculum. Similarly, once a curricular change has been implemented, assessment provides the information necessary for determining whether the change has been effective. Another advantage of a systems approach to assessment is that it can be designed from a developmental vantage point, resulting in longitudinal information as learners progress through the curriculum. By providing multiple measures of key competencies, the system can serve both as feedback to guide learning and as program evaluation to guide continuous program improvement.

Nearly all medical schools require their students to take the United States Medical Licensing Examinations (USMLE), which have high external validity, but these examinations are infrequent and the data are so general that it is difficult for medical schools to make evidence-based decisions about curricular change. Even more importantly, these examinations provide limited information about performance beyond medical knowledge. Schools of medicine and the residency programs they feed now strive to impart exemplary communication skills, patient care competencies, the attributes of professionalism, the use of evidence, and the behaviors of a fail-safe team. Our well-crafted and nationally normed system of USMLE examinations does not provide our learners, our educational enterprises, or our public with information on the acquisition (or lack of acquisition) of many critical competencies.

A curriculum has a tendency to take on a life of its own; a systematic approach supports curricular reform and continuous quality improvement. Individual faculty and departments often take ownership of specific courses and experiences. While that sense of ownership promotes faculty investment in the educational program, it can lead to stagnation. The institution needs good data to help faculty and departments improve their educational offerings, data that are critical to any continuous improvement program. For too long the best data available have been assessments of medical knowledge and evaluation of student satisfaction. While both of these are important, the students, faculty, and college need and deserve better data on outcomes of broader scope.

The implementation of an assessment system also provided the college with a means of reviewing existing assessments within courses and clerkships and identifying gaps. Upon review, it became clear that assessments implemented within courses and clerkships did not address the cumulative effect of the curriculum or the erosion of knowledge and skills after the completion of a course or clerkship. To understand why students have poor presentation skills or appear to lose their patient-centered interviewing skills, a longitudinal, developmentally based series of assessments needs to be located throughout the curriculum. While placing assessments outside of courses risked alienating faculty and disengaging assessment from course content, the existing system did not provide enough quality data on student performance to lead to meaningful continuous improvement cycles for the curriculum as a whole.

Our learners benefit from a coherent, systematic approach to assessment. Many have committed to a long and difficult professional journey, often deferring important personal goals such as marriage and children in order to complete an arduous educational process. Though we have developed excellent mechanisms to provide them with formative and summative feedback about their medical knowledge, we have not systematically provided frequent, high-quality sources of formative or summative feedback on the other critical competencies already mentioned. This is a failure of our fiduciary responsibility to our learners and creates a significant burden of stress, especially at transitions to increased patient care responsibility and decreased supervision (7–9). This circumstance cried out for change.

The general public also stands to benefit from a more systematic approach to assessment, in that the system provides the foundation for accountability. The public expects medical school graduates to be at least competent for their level of training, and medical faculties have higher aspirations for their students. Medical educators are accountable to students, the medical community, and the public for the quality of their school's program and the competence of their graduates. The only way schools can be accountable for the competency of their graduates is to assess for competency. We believe it is the duty of medical schools to accomplish this assessment, and the College needed a more robust system to meet this duty.

Developing the systems approach

Any system of educational assessment should reflect the objectives and goals of the curriculum and needs to integrate with the methodologies and timing of the educational program. While each institution will have to work out many details idiosyncratic to its own curriculum and structure, there are a few challenges common to all programs: the conceptual framework, the scope and timing of assessments within the system, continuity, resources, feedback loops to the curriculum, and program evaluation.

Conceptual framework

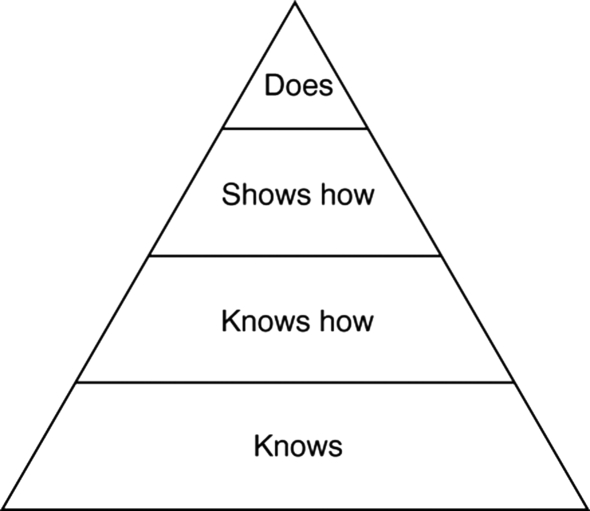

To directly address the limitations of multiple choice questions and global observational evaluations, the Office of College-wide Assessment utilized Miller's Pyramid (10) as an assessment framework that works particularly well in medical education (Fig. 1). It is intuitive to faculty that students need to ‘know’ science content; ‘know how’ to explain and apply their knowledge; ‘show how’ they integrate knowledge, skills, and behaviors in the care of patients; and hopefully ‘do’ the right things when no one is looking throughout their career. Faculty can easily translate Miller's framework into their own assessment strategies, demonstrating each of these levels of achievement. Equally important, this framework enables faculty to analyze what assessment is already being done in their course or clerkship and identify possible gaps. It is not uncommon that some areas of competence are well assessed while others have little organized assessment.

Fig. 1.

Miller's framework for developing competency [Miller 1990 (10)].

Scope of assessments

All Liaison Committee on Medical Education (LCME) accredited schools will have some set of objectives that define their educational program; the College of Human Medicine uses the SCRIPT acronym to organize its general curricular goals and objectives (Table 1). The scope of an assessment system depends on the institutional vision for competency assessment. At CHM the SCRIPT competencies expected of medical students parallel the ACGME competencies expected of residents, seamlessly linking expectations from medical school through to residency.

One of the reasons to have an Associate Dean was to lead the analysis of the existing college assessments and make recommendations to the College Curriculum Committee. At CHM those recommendations put a priority on establishing better assessment of four of the six CHM competencies (Care of Patients, Rationality, Integration, and Professionalism). The Curriculum Committee agreed with the need to improve assessment of these four competencies and that the other two, Service and Transformation (Medical Knowledge), already were systematically evaluated by a portfolio for the former and a large series of internal and National Board of Medical Examiners (NBME) examinations for the latter.

When to be course/clerkship based?

Assessment that takes place within a course does so with more departmental ownership but may be less generalizable (e.g., performance on the family medicine clerkship contrasted with performance on a surgery clerkship). There is often a trade-off between the case and content knowledge of departmental faculty and the more generalizable nature of competency assessment. As an example, departments (e.g., Surgery and Internal Medicine) often have different expectations for the length, breadth, and level of detail in patient notes and presentations. It is important that each clerkship assesses students' written and oral communication skills so that the assessment system provides the opportunity to look globally at key medical skills such as delivering bad news or writing a discharge summary.

Assessment outside of a course or clerkship can be more interdisciplinary, more collaborative, and more generalizable. However, finding a ‘home’ for such assessment requires thinking differently about the educational infrastructure. Such assessment may not feel comfortable to departmental faculty because of ambiguity about ownership of its development and responsibility for its outcomes. However, such overarching assessment can fuel better curricular feedback by explicitly looking at learners' ability to integrate competencies and at their readiness to move forward to their next level of responsibility such as preclinical to clerkship training or from medical school to residency.

Timing of assessments

Many of these competencies require integration across and beyond courses and clerkships and comprise broad curricular themes. The assessment of a learner's ability to ‘use evidence in the care of patients’ is an example of a competency that crosses not only courses and clerkships but years of medical education curriculum. Similarly, professionalism can and should be assessed not only in courses but also outside of courses or clerkships, for example, when a student interacts with administrative staff or participates in a volunteer activity.

A comprehensive and systematic view across courses and clerkships allows the medical school to set performance levels for some competencies beyond what individual courses and clerkships might expect. Clerkships might emphasize different skills, and due to lack of practice, students taking a subsequent course or clerkship that does not emphasize specific skills might see a decline in their performance level. As an example of this, two of our required clerkships, Family Medicine and Internal Medicine, have specific shared decision-making content. The faculty set up a study in which the students were assessed on their ability to use a shared decision-making model in whichever of the two clerkships they took later in their third year. The faculty found that the more time there was between the clerkships, the worse the students' performance (11). Placing a shared decision-making assessment in the year three OSCE provides students important feedback on this skill at a particular time in their training rather than in a particular subject, which might occur early or late in their training. The program evaluation data that come from assessments outside of the courses and clerkships can also encourage the teaching of competencies that were traditionally not emphasized by some disciplines.

Continuity across UME, GME, and CME

While assessment outside of a single course or clerkship feels ‘uncomfortable’ to faculty, it is even more challenging to envision assessments that cross from medical schools to residency training sites and into unsupervised practice. Yet data on incoming PGY-1 residency trainees at this institution and others (7–9) were instrumental in making a case for paying attention to the transitions between undergraduate medical education (UME), the supervised practice of residency (GME), and unsupervised practice (CME). In much the same way that patient care handoffs have been recognized as times when errors are more likely to occur, educational handoffs – during which trainees transition to more responsibility and less supervision – create setups for error. Our educational silos can create high levels of stress for our trainees as they strive to take the very best care of patients. At our institution, the PGY-1 Patient Safety OSCE was designed to establish the presence of patient care skills necessary in the first weeks of residency. Participating residents demonstrated a wide range of performance; this formative assessment provided them with immediate, performance-based feedback on their strengths and weaknesses, and provided a basis for formulating a personal learning plan early in their residency. Program directors are able to use aggregate information about their residents' performance to tailor initial orientation and other learning activities. While program directors continue to determine the best ways to incorporate this information into their residency assessment structure, the participating trainees are almost unfailingly grateful for this ‘good data’ at a very critical time.

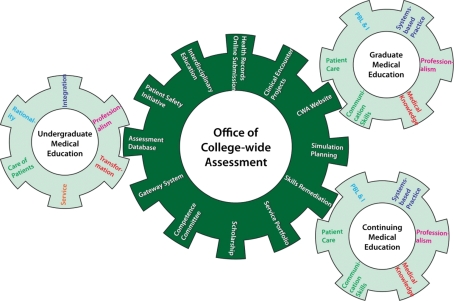

Academic faculties value their interactions with trainees – many have come to academia because they enjoy the challenge and stimulation that learners provide. Yet as learners change and medical practice evolves, faculty are challenged to keep up. Faculty skill sets are not always up to the challenges of teaching medical students and residents, and there is a lack of curricular standardization both nationally and internationally. There is an assumption of basic knowledge, skills, and attitudes at the transition from undergraduate medical student to resident; the same is true for faculty across the medical education continuum. At any point in time, assumptions about the competency of learners or faculty might be found to be erroneous. Most faculty teach and guide learners without formal educational training. Assessments of faculty have been, primarily, assessments of their medical knowledge. Continuing medical education efforts are being redirected toward competencies beyond medical knowledge, and novel methods of assessing practitioner competencies are changing the way maintenance of certification is done. Increasingly, tests of medical knowledge have been augmented with quality improvement requirements, practice audits, and patient satisfaction surveys. It is logical that a robust assessment strategy can help faculty identify their own strengths and weaknesses and decrease stress along their professional journey. As a result of the Office of College-wide Assessment's efforts at the undergraduate and residency levels, faculty development topics and tools have been created. This is a small part of college-wide assessment activities thus far, but will hopefully continue to grow in importance as critical linkages between undergraduate, graduate, and continuing medical education activities are strengthened.
In this way, a competency-based approach to assessment, with the Office of College-wide Assessment at the core, helps drive a coordinated approach for all learners (see Fig. 2).

Fig. 2.

The Office of College-wide Assessment has a central role in assessing learner competencies.

Implementation resources

The Office of College-wide Assessment has placed less emphasis on improving the quality of the College's multiple choice examinations. The need to improve existing performance-based assessments and to develop new assessment methodologies for SCRIPT competencies has been given highest priority. Predating the founding of the Office, an HRSA grant-funded effort through our Department of Family Medicine (12) was the lynchpin for much of the design and initial implementation of an early blueprint for a ‘Gateway Assessment System.’ This funding provided resources to pilot test assessments, train faculty, and demonstrate the need for a system to the rest of the college and the university. A centerpiece of this grant funding was the development of the first phase of the blueprint, a Year 3 Care-of-Patients Gateway OSCE, which began as a formative clinical skills examination required at the end of the third year. This gateway OSCE was followed by a required formative Year 3 Rationality (Practice-Based Learning and Improvement) Gateway exercise as well as a required formative Year 4 Integration Gateway (Systems-Based Practice) test, the development of which also was funded as part of the HRSA Gateway grant activities.

Additional pieces of the assessment system have been implemented, including a new Year 2 Care of Patients Gateway, which takes place just before students begin their required clerkships. The Year 3 Care of Patients Gateway has gone from a formative experience to a summative examination that requires students to demonstrate minimal competency in patient care skills; students who do not pass the first time must remediate and then demonstrate competence. Our new Service Learning curriculum has an accompanying assessment based on a reflective essay and discussion with a faculty mentor. A formative Professionalism log is now utilized within the preclinical curriculum to collate incidents of either problematic or outstanding professionalism and to provide feedback to students as they strive to internalize relevant attributes. Building on this infrastructure, the Office of College-wide Assessment is planning a SCRIPT competency-based Overarching Gateway Assessment Program (O-GAP) that will eventually include the evaluation of each SCRIPT competency at the ‘knows, knows how, shows how, and (ideally) does’ (10) levels of acquisition (see Fig. 3).

Fig. 3.

Adaptation of Miller's model to College of Human Medicine competencies.

The institution can provide many kinds of support for assessment but there is no substitute for funding. There is a good deal to pay for in the creation of an assessment system. Often faculty and leadership will need development through additional training. Our college had considerable internal expertise but still needed to send faculty to national and international training sessions. In addition, the strength of the assessment system is the variety of different assessment modalities upon which it is built. Many assessment modalities have infrastructure requirements like computerized testing, standardized patients, and simulation facilities, each of which requires resources that might not otherwise be readily available.

What is irreplaceable is intellectual capital and the recognition that faculty need time to develop, implement, and evaluate assessments. It takes considerable time to review the curriculum and do the analysis required to design and place appropriate assessments. Likewise, it takes time to design, validate, and implement new assessments. While this ‘start-up’ is required for any implementation, time also is required to provide feedback to learners as well as departments and course/clerkship directors.

Feedback to the curriculum and learner

While there is great value in creating better assessment of students, there is equal value in using assessment data to improve the curriculum. In addition, any functional assessment office should be a vehicle for delivering data back to learners and to relevant curricular, course, and clerkship faculty. This is made possible by a close working relationship with medical education experts. At CHM, the Office of College-wide Assessment is a hub of data analysis. This work is only possible through collaboration with the Office of Medical Education Research and Development (OMERAD), whose faculty provide critical instructional design, psychometric, statistical, and educational expertise. The expertise in OMERAD augments the ‘clinician educator’ skill set of the Associate Dean and strengthens the messages she is able to take back to the faculty.

The power of those messages – good data on what our learners are doing well and what they are not doing well – has fueled several ongoing curricular improvement projects that had met resistance only a few years earlier. Ongoing faculty projects include interventions to improve the teaching of the written health record and the focused clinical encounter, our preceptors' approach to teaching in the ambulatory setting (13), and the videotaping of student–patient interactions during clerkships. An HRSA-funded medication safety curriculum (14) has been developed and disseminated throughout the clerkship year. Each of these initiatives responds to specific deficits revealed by our system of summative and formative required performance assessments. Good data have changed skeptical faculty into change agents.

The power of good data has also benefitted our students who fail to demonstrate minimal competency. Those who do not pass the Care of Patients Gateways are asked to watch videotapes of their patient encounters. This ‘good data’ has enabled the development of personal learning plans and resulted in improved self-efficacy ratings from students required to remediate (15). In spite of the inherent unpleasantness of required remediation, many students thanked the involved faculty for enabling them to engage in their own ‘quality improvement’ efforts.

Evaluation of assessment system

The vision statement of the Office of College-wide Assessment – ‘Better Data for Teachers, Better Data for Learners, Better Patient Care’ – distills the most important activities of the Office. It is the belief of the Associate Dean that ‘good people given good data will do great things.’

A robust assessment system is driven by competencies that make sense to its stakeholders; in medical education those stakeholders are trainees, faculty, and the public. A robust assessment system should provide helpful data to its learners, teachers, and patients – data that they can use to make informed decisions and necessary improvements. The Office of College-wide Assessment is continually assaying our students and our faculty to determine if the CHM performance assessments are accomplishing those goals. Students have always been frustrated by ‘dwell time’ strategies that often leave them guessing about how they are doing. Student performance has responded to the increased clarity of a competency-based assessment strategy, though we must continue to improve the transparency of our expectations for our students. Our faculty can be similarly frustrated by uncertainty about how best to discharge their duties as teachers and assessors, and the Office of College-wide Assessment has been able to use data on student performance to inform faculty development efforts. With expertise from OMERAD, we are actively evaluating each effort. The Office of College-wide Assessment will be able to measure its success to the extent it is able to accomplish its central goals: better data for teachers (Do the faculty have the information they need to improve the curriculum?), and better data for learners (Do our learners understand their strengths and weaknesses?).

Lessons learned

As a result of the creation of an Office of College-wide Assessment, a group of centralized, standardized assessments is being developed, guided by our SCRIPT competencies and the desire to push our assessments as high on Miller's Pyramid as possible. These assessments make sense (multiple choice tests for knowledge, performance-based assessments for skills) and will continually improve in validity and reliability. We are fueling continuous quality improvement loops of both our learners and our curriculum, and working to improve the ways that we deliver authentic feedback to our learners as well as the frequency with which we deliver it. Assessments placed at transitions between the preclinical and clinical years and at the beginning of residency have yielded invaluable data on knowledge and skill gaps. Authentic feedback and personal learning plans based on that feedback enable trainees to feel confident in their strengths and to identify things they must quickly review or master. These data can be used by the ‘receiving’ faculty to tailor early curricular offerings as well as to determine safe levels of supervision.

Critical principles/lessons learned

Accreditation mandates and quality assurance efforts are emphasizing improved assessment of both trainee and curricular outcomes.

Better assessment requires a systems approach: the development of a wide variety of learner assessment strategies, ongoing data analysis and efficient data delivery to stakeholders, and attention to educational transitions.

Better assessment provides an evidence base for ongoing curricular change that can guide and activate faculty efforts to continuously improve the curriculum.

Better assessment provides more authentic feedback to trainees, who can then develop personal learning plans for their own continuous improvement.

Assessments that exist outside of specific courses or clerkships can afford improved curricular feedback by explicitly looking at learners' ability to integrate competencies and at their readiness to move forward to their next level of responsibility.

Assessment system design, data analysis, and data delivery (especially when components of that system exist outside of courses or clerkships) require leadership, a cooperative faculty team with medical education expertise, and significant institutional support in order to execute needed initiatives.

Better data for teachers can convert faculty into change agents. Better data for learners enable those faculty to draw on an evidence base when individualizing remediation or a trainee's personal learning plan.

Improved accountability to faculty and to trainees through better assessment of outcomes can also provide evidence to our patients that we are paying attention to important aspects of our educational system. The link to improving patient care is an aspirational one – and awaits our most important outcomes work.

Conflict of interest and funding

The authors have not received any funding or benefits from industry or elsewhere to conduct this study.

References

- 1. Anderson MB, Cohen JJ, Hallock JA, Kassebaum DG, Turnbull J, Whitcomb ME, Med School Objectives Writing Group. Learning objectives for medical student education – guidelines for medical schools: Report I of the Medical School Objectives Project. Acad Med. 1999. doi: 10.1097/00001888-199901000-00010.

- 2. Accreditation Council for Graduate Medical Education. Available from: http://www.acgme.org/acWebsite/home/home.asp [cited 28 October 2010]

- 3. Nasca TJ. The CEO's first column: the next step in the outcomes-based accreditation project. ACGME Bull. 2008 May:2–4.

- 4. New Horizons in Medical Education conference proceedings. Available from: http://ama-assn-media.org/AMC-Conference2010/intro.mp3 [cited 3 December 2010]

- 5. Accreditation Council for Graduate Medical Education. Available from: http://www.acgme.org/Outcome/assess/Toolbox.pdf [cited 7 December 2010]

- 6. Wagner D, Mavis B, Henry R, College of Human Medicine Competence Committee. The College of Human Medicine Competence Committee: validation becomes value-added; Ottawa Conference on the Assessment of Competence in Medicine and the Healthcare Professions, May 2010.

- 7. Wagner D, Hoppe R, Lee CP. The patient safety OSCE for PGY-1 residents: a centralized response to the challenge of culture change. Teach Learn Med. 2009;21:8–14. doi: 10.1080/10401330802573837.

- 8. Lypson M, Frohna J, Gruppen L, Wooliscroft J. Assessing residents' competencies at baseline; identifying the gaps. Acad Med. 2004;79:564–70. doi: 10.1097/00001888-200406000-00013.

- 9. Wagner D, Lypson M. Centralized assessment in graduate medical education: cents and sensibilities. J Grad Med Educ. 2009;1:21–27. doi: 10.4300/01.01.0004.

- 10. Miller G. The assessment of clinical skills/competence/performance. Acad Med. 1990;65:63–7.

- 11. Solomon DJ, Laird-Fick HS, Keefe CW, Thompson ME, Noel MM. Using a formative simulated patient exercise for curriculum evaluation. BMC Med Educ. 2004;4:8. doi: 10.1186/1472-6920-4-8.

- 12. Noel MB, Reznich C, Henry R. Gateway assessments of medical students for program development, ‘Really Good Stuff.’ Med Educ. 2006;40:1123–47. doi: 10.1111/j.1365-2929.2006.02616.x.

- 13. Demuth R, Phillips J, Wagner D, Winklerprins V. Development of a preceptor tool to help students learn diagnostic reasoning; Forthcoming at the 37th Annual STFM Conference on Medical Student Education, 20–23 January 2011, Houston, TX, USA.

- 14. Barry H, Reznich C. System-wide implementation of a medication error curriculum in a community-based family medicine clerkship; Society of Teachers of Family Medicine Annual Spring Conference, April 2010, Vancouver, BC.

- 15. Demuth R, Phillips J, Wagner D. Teaching students to think like doctors: development of a hands-on remediation curriculum in diagnostic reasoning; RIME presentation, AAMC Annual Meeting, November 2010, Washington, DC.