Abstract

The present study investigated age-related differences in the amygdala and other nodes of face-processing networks in response to facial expression and familiarity. fMRI data were analyzed from 31 children (3.5–8.5 years) and 14 young adults (18–33 years) who viewed pictures of familiar (mothers) and unfamiliar emotional faces. Results showed that amygdala activation for faces over a scrambled image baseline increased with age. Children, but not adults, showed greater amygdala activation to happy than angry faces; in addition, amygdala activation for angry faces increased with age. In keeping with growing evidence of a positivity bias in young children, our data suggest that children find happy faces to be more salient or meaningful than angry faces. Both children and adults showed preferential activation to mothers’ over strangers’ faces in a region of rostral anterior cingulate cortex associated with self-evaluation, suggesting that some nodes in frontal evaluative networks are active early in development. This study presents novel data on neural correlates of face processing in childhood and indicates that preferential amygdala activation for emotional expressions changes with age.

Keywords: development, amygdala, fMRI, face processing, social-emotional processing

INTRODUCTION

Navigating the social world requires the ability to discriminate and respond appropriately to salient faces. In recent years, the adult brain networks involved in processing such motivationally meaningful aspects of faces as expression and identity have been extensively mapped (Palermo and Rhodes, 2007; Vuilleumier and Pourtois, 2007). In particular, convergent evidence suggests that in the adult brain the amygdala functions as a ‘motivational relevance detector’ (Adolphs et al., 2005; Cunningham et al., 2008; Todd and Anderson, 2009) and that amygdala activation can serve as a marker of the relative salience of a stimulus. Yet little is known about the development of the amygdala’s role in processing salient stimuli in young children. The goal of the present study was to compare patterns of amygdala activation to emotional expressions in kindergarten and early school-aged children with those found in young adults.

In adults, diverse evidence indicates that the amygdala functions as a hub of brain networks mediating social–emotional processing (Adolphs, 2008; Pessoa, 2008; Kennedy et al., 2009; Todd and Anderson, 2009), including processing of facial emotion (Sergerie et al., 2008). Imaging and lesion data indicate that the amygdala responds to both positive and negative arousing stimuli (Anderson and Sobel, 2003; Phan et al., 2003; Paton et al., 2006; Sergerie et al., 2008), as well as ambiguous events (Whalen, 2007). There is evidence that the amygdala facilitates rapid perception of emotionally important stimuli (Anderson and Phelps, 2001; Anderson et al., 2003) and also influences more extended processing of their motivational relevance (Tsuchiya et al., 2009). Thus, convergent data point to the amygdala as a key region for processing the motivational relevance of events and thereby influencing implicit and explicit processes at a range of time scales (Adolphs, 2008; Cunningham et al., 2008; Todd and Anderson, 2009). Moreover, if the amygdala functions as a ‘motivational relevance detector’, then patterns of amygdala activation can be taken to mark the relative salience of stimuli.

For humans, motivationally relevant stimuli include social stimuli, and the amygdala plays an important role in social learning (Davis et al., 2009). Recent data indicate that amygdala responses to specific classes of stimuli are modulated with a change in social and motivational context (Cunningham et al., 2008; Van Bavel et al., 2008). For example, in a study where participants were asked to evaluate famous people, Cunningham and colleagues (2008) found that the amygdala responded preferentially to names of celebrities participants felt positive about when they were asked to evaluate positivity, and to names of celebrities they felt negative about when asked to rate negativity. Thus, the amygdala’s response pattern shifted as the motivational relevance of stimuli changed according to momentary task demands.

Increasing evidence indicates that, at a longer time-scale, preferential activation to emotional stimuli also changes over the lifespan. For example, older adults show greater relative amygdala activation to positive vs negative stimuli (Mather et al., 2004), a finding in keeping with behavioral data that show a general bias toward positive emotion in older adults (Charles et al., 2003; Mather and Carstensen, 2003). The same study found that young adults showed equal levels of amygdala activation to positive and negative stimuli (Mather et al., 2004), a finding consistent with a number of studies in young adults showing no amygdala discrimination between angry and happy faces [for review, see Sergerie et al. (2008)]. Developmental shifts in amygdala activation pattern have also been observed between adolescence and young adulthood. In adolescence, although the amygdala shows adult-like activation to facial emotion in general, its responsiveness to specific emotions differs from that in adults (Thomas et al., 2001b; Yurgelun-Todd and Killgore, 2006; Killgore and Yurgelun-Todd, 2007).

Although little functional imaging data exist on patterns of amygdala activation in young children, young children and adults do show differences in behavioral responses to emotional valence. Recent developmental studies indicate that, relative to young adults, young children show a positivity bias similar to that of older adults (Qu and Zelazo, 2007; Boseovski and Lee, 2008; van Duijvenvoorde et al., 2008). For example, Boseovski and Lee (2008) found that 3–6-year-olds showed a positivity bias in personality judgments and van Duijvenvoorde and colleagues (2008) found that, compared to young adults, 8–9 year olds’ learning was enhanced following positive rather than negative feedback. Patterns of amygdala activation might also be expected to develop in tandem with the capacity to recognize facial emotion. Convergent behavioral evidence indicates that, whereas infants can discriminate happy from frowning expressions at 3 months, especially on their mothers’ faces (Barrera and Maurer, 1981), the capacity to correctly categorize angry faces may not reach adult levels until age 9 or 10 years, especially at lower levels of intensity (Durand et al., 2007; Gao and Maurer, 2009; Vida and Mondloch, 2009). In contrast, children can discriminate happy faces almost as well as adults by 3–5 years (Camras and Allison, 1985; Boyatzis et al., 1993; Durand et al., 2007). Thus, positive expressions may be more salient and more recognizable than angry expressions to young children.

A primary goal of the present study was to extend research on amygdala sensitivity to facial emotion to early childhood by investigating amygdala responses to emotional expression during the kindergarten/early-school years. We expected that, as with older adults, preferential behavioral responses to positive stimuli should be reflected in preferential amygdala activation for happy vs angry faces in young children.

A secondary goal of the study was to compare broader activation patterns for children and adults in response to personally familiar and unfamiliar emotional faces. Haxby and colleagues have proposed a set of ‘core’ and ‘extended’ networks involved in face perception and recognition in adults (Haxby et al., 2000; Gobbini and Haxby, 2007). Core networks include face-sensitive regions of fusiform gyrus and superior temporal sulcus (STS) involved in visual perception. Extended networks include ventromedial prefrontal cortex (VM-PFC), ventrolateral prefrontal cortex (VL-PFC), insula and the cingulate cortex. These frontal and limbic regions are also thought to mediate evaluative and regulatory processes, including emotional feeling, evaluation of social feedback, self-regulation and empathy (Blair et al., 1999; Allman et al., 2001; Mather et al., 2004; Ochsner et al., 2004; Gobbini and Haxby, 2007; Rolls, 2007).

Building on evidence that face processing continues to develop well into adolescence (Itier and Taylor, 2004; Taylor et al., 2004; Batty and Taylor, 2006), recent fMRI studies suggest that specialized processing of faces in core visual cortex regions is similarly slow to develop (Aylward et al., 2005; Golarai et al., 2007; Scherf et al., 2007). Much less is known about the early development of functional brain activation in extended networks for processing salient aspects of facial familiarity and emotion. Nonetheless, structural imaging and event-related potential (ERP) research allows us to make some predictions about the development of extended face processing networks. Studies examining the structural development of the brain have shown that maturation of specific frontal regions occurs along different timelines (Giedd et al., 1999; Gogtay et al., 2004); ventral and midline prefrontal regions mature earlier than dorsal and lateral regions, which continue to mature into late adolescence (Gogtay et al., 2004). Thus, ventromedial regions associated with evaluative processing should be expected to show relatively mature patterns of functional activation in young children. Studies using ERPs and other imaging methods suggest that ventral and midline frontal regions are implicated in evaluation of personally familiar faces from early in development. Children show frontal activation when discriminating mothers’ from unfamiliar faces in infancy as well as in the pre-school years (Carver et al., 2003; Strathearn et al., 2008; Todd et al., 2008). Thus, above and beyond our focus on amygdala activation, we wished to use fMRI to compare specific regions implicated in the extended processing of facial familiarity and emotion in young children and adults. 
Despite the overall protracted development of frontal brain regions, we expected that ventral regions of the prefrontal cortex associated with emotional evaluation and self-relevance would be sensitive to personally familiar faces in young children.

To investigate age-related amygdala responses to facial emotion with stimuli that would be salient to young children, we designed an fMRI task using photographs of participants’ mothers and strangers with emotional expressions. Data were analyzed from 31 young children (aged 3.8–8.9 years) and 14 young adults (18–33 years) who viewed blocks of photographs of their mother and an unknown woman with angry and smiling expressions. To investigate age-related differences in amygdala activation, we employed region of interest (ROI) analyses focused on the amygdala. We hypothesized that children would show preferential activation for happy vs angry faces whereas young adults would either show equal activation to positive and negative expressions [for review, see Sergerie et al. (2008)] or show preferential responses to angry faces (Zald, 2003).

The experimental design also allowed us to examine age-related responses in extended face processing networks to personal familiarity and expression, both between children and adults, and within the group of children. Voxelwise whole-brain analyses were employed to probe processing of salient aspects of faces in a wider network of regions. Specifically, we predicted that ventral or midline regions would be sensitive to personal familiarity in young children as well as in adults.

MATERIALS AND METHODS

Subjects

Forty-three children aged 3.8–8.9 years (27 females, mean age 6.0 years), and 14 young adults, aged 18–33 years (7 females, mean age 26.1 years), participated in the study after being screened for uncorrected visual impairments and psychiatric disorders. Participants were recruited through flyers, advertisements and word of mouth, and were from a variety of cultural and socio-economic backgrounds. Each family received $40 for participation and each child received a toy. Informed written consent was obtained from all adults and from the parents of the children, children gave verbal assent, and the study was approved by the Research Ethics Board at the Hospital for Sick Children.

Stimuli

Mothers’ emotionally expressive faces were photographed against a white background while looking straight at the camera. For each participant, five happy and five angry photographs of his/her mother, as well as five happy and five angry photographs of another mother, were chosen. Contrast and luminance levels across photographs were equated.

Procedure

Mothers of participants either initially visited the laboratory to be photographed or emailed digital photos of themselves based on written instructions. The goal was to obtain faces expressing anger or disapproval that were typical of children’s daily experience. Mothers were instructed to include the face that, ‘when they see it, the children know they’re in trouble or had better stop what they are doing’. Mothers of adults were asked to make the face they had made when their children were young, and the instructions were phrased in the past tense. Instructions included a request to make both angry and happy faces with mouth open and mouth closed, to control for confounds between expression and amount of tooth showing. Photographs were rated by three adult raters for emotion type and intensity level. Raters were asked to indicate whether each photo was angry/displeased, happy or other, and if angry or happy to rate its intensity on a scale of 1–5. Photos that were not identified by all raters as angry/displeased or happy were rejected, and mean intensity ratings were calculated for each remaining photograph. The five photos with the highest mean ratings for angry or displeased expressions, including photos with mouth open and mouth closed, were chosen first, and then happy faces were chosen that matched the angry faces in intensity. Next, photos of another mother were chosen that were matched for age, appearance and affective intensity. The same five happy and five angry photos that were used as mother’s faces for one participant were used as stranger’s faces for another participant. Finally, scrambled images were created from the face images by randomizing their phase information in the Fourier domain.
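Phase scrambling of this kind can be sketched in a few lines of NumPy. The sketch below is an illustration under stated assumptions, not the procedure used to build the stimuli: the function name `phase_scramble` is hypothetical, the image is assumed to be a 2-D grayscale array, and a random phase field is added to the original phase rather than substituted for it.

```python
import numpy as np

def phase_scramble(image, rng=None):
    """Return a phase-scrambled copy of a 2-D grayscale image (illustrative)."""
    rng = np.random.default_rng() if rng is None else rng
    image = np.asarray(image, dtype=float)
    spectrum = np.fft.fft2(image)
    # The phase of the FFT of real-valued noise is a random phase field with
    # the conjugate symmetry needed for the result to stay real-valued.
    noise_phase = np.angle(np.fft.fft2(rng.standard_normal(image.shape)))
    noise_phase[0, 0] = 0.0  # leave the DC term alone so mean luminance is kept
    scrambled = np.abs(spectrum) * np.exp(1j * (np.angle(spectrum) + noise_phase))
    # Any imaginary part left here is numerical rounding error only.
    return np.real(np.fft.ifft2(scrambled))
```

Because only the phase is altered, the scrambled image keeps the amplitude spectrum and mean luminance of the face photograph while losing its recognizable structure.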

Children were familiarized with pictures of the scanner and scanner sounds. Using a ‘practice scanner’ made up of a child’s play tube, they were then coached to remain still while lying on their backs and pressing a button. In the scanner a response box was placed at each participant’s dominant hand. Foam padding was used to constrain the participants’ heads. Participants watched cartoons through MRI-compatible goggles while structural images were obtained. The 7-min block-design task was presented after acquisition of the structural images (6 min).

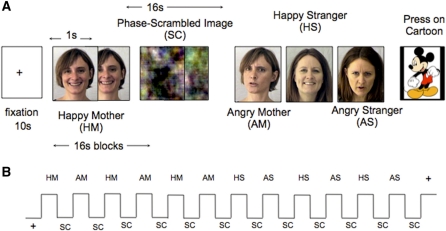

Trial structure

Stimuli were presented through LCD goggles (Resonance Technology, Northridge, CA) using Presentation software (Neurobehavioral Systems, Albany, CA). The task consisted of twelve 16-s blocks of emotional faces alternating with twelve 16-s blocks of phase-scrambled images. As the youngest children were able to remain still in the scanner only for short time periods, we used a block design for maximum power to detect BOLD effects. There were three blocks each of four face types: happy mother, angry mother, happy stranger and angry stranger. Each block of faces consisted of 16 photographs of one of the face types listed above, presented in pseudorandom order for 1 s each and drawn from a set of five different photographs of that face type (e.g., happy mother) (Figure 1). Blocks of angry and happy faces were alternated. Free viewing tasks have been consistently successful in tapping amygdala response to facial emotion (Adolphs et al., 2005) and have been effective in developmental studies of amygdala activation (Killgore and Yurgelun-Todd, 2001). Here, in order to create as close to a free-viewing task as possible while maintaining young children’s attention to the task, familiar cartoon characters were presented occasionally, at random, for 500 ms among the faces and scrambled images. Participants were told to press the button when the cartoon character appeared. Key press responses were recorded with a handheld fiber optic keypad (Lumitouch, Burnaby, Canada).
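The block structure described above can be made concrete with a short scheduling sketch. It is an illustration only: the function name `build_schedule`, the choice to start with a face block, the way happy and angry blocks are ordered within the alternation, and the way 16 presentations are drawn from five photographs are all assumptions, since the text does not specify them. Cartoon catch trials are omitted.

```python
import random

FACE_TYPES = ["happy mother", "angry mother", "happy stranger", "angry stranger"]
BLOCK_SECONDS = 16      # each face or scrambled block lasts 16 s
IMAGES_PER_BLOCK = 16   # 16 images per face block, 1 s each
PHOTOS_PER_TYPE = 5     # five photographs per face type
BLOCKS_PER_TYPE = 3     # three blocks of each face type

def build_schedule(seed=0):
    """Return a list of 24 blocks: face blocks alternating with scrambled blocks."""
    rng = random.Random(seed)
    happy = [t for t in FACE_TYPES if t.startswith("happy")] * BLOCKS_PER_TYPE
    angry = [t for t in FACE_TYPES if t.startswith("angry")] * BLOCKS_PER_TYPE
    rng.shuffle(happy)  # assumed: mother/stranger order is random within valence
    rng.shuffle(angry)
    schedule, onset = [], 0
    for happy_type, angry_type in zip(happy, angry):
        for face_type in (happy_type, angry_type):  # happy and angry alternate
            # Assumed sampling scheme: cycle through the five photos, then shuffle.
            photos = [i % PHOTOS_PER_TYPE for i in range(IMAGES_PER_BLOCK)]
            rng.shuffle(photos)
            schedule.append({"onset": onset, "condition": face_type, "photos": photos})
            onset += BLOCK_SECONDS
            schedule.append({"onset": onset, "condition": "scrambled", "photos": []})
            onset += BLOCK_SECONDS
    return schedule
```

Under these assumptions the 24 blocks span 24 × 16 s = 384 s, which fits within the 7-min task.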

Fig. 1.

Experimental design. (A) 16-s blocks of affective faces were alternated with 16-s blocks of phase-scrambled images. There were four types of affective faces: Happy Mother (HM), Angry Mother (AM), Happy Stranger (HS) and Angry Stranger (AS). For each face type there were five photographs rated and matched for intensity and appearance. Each block contained 16 one-second images of a single face type presented in random order. A cartoon image appeared at random intervals and participants were instructed to press a button when they saw the cartoon. (B) Schematic of block presentation. There were three blocks of each face type for a total of 12 blocks of faces and 12 blocks of scrambled images.

fMRI data acquisition and analysis

Participants were scanned with a standard quadrature head coil on a 1.5 Tesla GE Excite HD scanner (GE Medical Systems, Milwaukee, WI). High-resolution anatomical images were obtained using an axial FSPGR sequence, with 106 slices, TR = 8 ms; TE = 23 ms; flip angle = 30°; field of view = 240 mm and voxel size = 1 × 1 × 1.5 mm. Functional images were acquired using a spiral in/out sequence, 24 slices, 206 TRs, TE = 40 ms; TR = 2 s; flip angle = 90°; field of view = 240 mm, and voxel size = 3.75 × 3.75 × 5 mm.

Data were analyzed using AFNI software (Cox, 1996). The first and last five volumes of the single run were discarded. The remaining volumes were spatially registered to the first volume. At this point data from nine children with movement parameters larger than 2 mm or whose log files revealed that they had misunderstood the task (children who pressed the button on every image or on scrambled images rather than just on cartoons) were removed from further analysis. Data for the remaining participants were treated for outliers, and each volume was spatially smoothed with an 8-mm Gaussian filter (full width at half maximum). Individual participants’ data were analyzed using the General Linear Model (GLM) in AFNI. GLM analysis proceeded in two stages: we first defined individual activation for faces in relation to baseline, then examined activation associated with facial expression and familiarity.

To examine the BOLD response to faces vs scrambled images, a single regressor was created for all of the face blocks together, which served as the regressor of interest (for results see Supplementary Tables S4 and S5). At this point data from three more children were removed from further analysis due to visible artifacts not removed by the motion correction algorithm. Thus, the final analyses were on 31 children (21 females) and 14 adults (7 females).

To examine responses related to mothers’ and strangers’ happy and angry faces, multiple regressions were performed for each individual. Regressors were created for each face type—happy mother, happy stranger, angry mother and angry stranger. These served as regressors of interest in a model that used the scrambled image blocks as the baseline.
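The logic of this regression can be illustrated with a minimal NumPy sketch. It is a stand-in for the general technique, not AFNI's implementation: the single-gamma response shape, the function names and the block onsets in the usage example are all assumptions introduced for illustration. Scrambled-image blocks are left unmodelled so that they form the implicit baseline absorbed by the intercept.

```python
import numpy as np

TR = 2.0            # repetition time in seconds, from the acquisition parameters
BLOCK_SECONDS = 16  # block length in seconds

def gamma_hrf(tr, duration=32.0):
    """Simple single-gamma haemodynamic response (assumed shape, peak near 5 s)."""
    t = np.arange(0.0, duration, tr)
    h = t ** 5 * np.exp(-t)
    return h / h.sum()

def design_matrix(onsets_by_condition, n_volumes, tr=TR):
    """One HRF-convolved boxcar per face type, plus an intercept column."""
    hrf = gamma_hrf(tr)
    columns = []
    for onsets in onsets_by_condition.values():
        boxcar = np.zeros(n_volumes)
        for onset in onsets:
            start = int(round(onset / tr))
            boxcar[start:start + int(BLOCK_SECONDS / tr)] = 1.0
        columns.append(np.convolve(boxcar, hrf)[:n_volumes])
    columns.append(np.ones(n_volumes))  # intercept: scrambled-image baseline
    return np.column_stack(columns)

def fit_glm(y, X):
    """Ordinary least-squares beta weights for one voxel's time series."""
    betas, *_ = np.linalg.lstsq(X, y, rcond=None)
    return betas

# Hypothetical onsets (in seconds) for the three blocks of each face type.
onsets = {
    "happy mother": [0, 128, 256],
    "angry mother": [32, 160, 288],
    "happy stranger": [64, 192, 320],
    "angry stranger": [96, 224, 352],
}
X = design_matrix(onsets, n_volumes=192)
```

Each of the first four beta weights then estimates the response to one face type relative to the scrambled-image baseline, and contrasts such as happy vs angry are differences between these weights.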

For group analyses, parameter estimates (beta weights) from the GLM and anatomical maps for each individual were transformed into a common template. To allow direct comparison, both adult and child data were transformed into the standard coordinate space of Talairach and Tournoux (Talairach and Tournoux, 1988). However, there is evidence that children’s brain maps may be subject to distortion when normalized to an adult template (Gaillard et al., 2001; Wilke et al., 2002), especially for children under 5 years. As a check, the children’s data were therefore also normalized using a version of the Cincinnati Pediatric Atlas based on an average of 49 children aged 5–9 years (Wilke et al., 2003). All reported results were consistent across both atlases.

For the amygdala, bilateral ROIs were defined anatomically from each individual’s non-normalized anatomical map, and time series were averaged separately in right and left amygdala for all voxels activated over baseline. Because the amygdala is susceptible to signal dropout, which can bias results toward false negatives (Zald, 2003), we used a lenient threshold (P < 0.1) for extracting active amygdala voxels. We employed a multiple regression that included variables for happy mother, happy stranger, angry mother and angry stranger conditions and extracted beta coefficients for each individual. These were analyzed at the group level in SPSS.
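The voxel selection step can be sketched as follows. This is a hedged illustration rather than the authors' pipeline: the function name `roi_mean_timeseries` and the array layout are assumptions, and the P values are taken to come from the faces vs baseline model.

```python
import numpy as np

def roi_mean_timeseries(data, roi_mask, p_values, p_threshold=0.1):
    """Average the time series of ROI voxels that pass a lenient threshold.

    data     : array of shape (x, y, z, time) holding the BOLD signal
    roi_mask : boolean array of shape (x, y, z), True inside the anatomical ROI
    p_values : array of shape (x, y, z), P values for faces over baseline
    """
    selected = roi_mask & (p_values < p_threshold)
    if not selected.any():
        return None  # no voxel in this ROI passed the threshold
    # Boolean indexing yields (n_selected_voxels, time); average over voxels.
    return data[selected].mean(axis=0)
```

Calling the function once with a left-amygdala mask and once with a right-amygdala mask gives the two hemisphere-specific time series that enter the regression.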

Motion data

In order to control for age-related differences in motion within the scanner, motion parameters were analyzed within the group of children and between children and adults (see supplementary Analyses 1 in Supplementary Data). These analyses were used to create motion-equated groupings between children and adults. Motion parameters were included as covariates in comparisons of groups that significantly differed in motion.

RESULTS

ROI analyses

For a region of interest (ROI) analysis of the amygdala, mean activations were extracted and repeated measures ANCOVAs were performed in SPSS on right and left amygdala ROIs. Children experience numerous developmental and experiential changes between the preschool/kindergarten and early grade school years (Eccles et al., 1984). To create two categorical age groups and probe patterns of amygdala activation between preschool-aged and school-aged children, we used a median split to divide the children into older (early grade school, 6.5–8.9 years, mean = 6.9 years) and younger (junior and senior kindergarten, 3.8–6.2 years, mean = 4.8 years) age groups. Expression (angry vs happy), familiarity (mother vs stranger) and laterality (right vs left) were within-subject factors, and age group (adults, n = 14; younger children, n = 14, 8 female; older children, n = 17, 12 female) was a between-subject factor. As females have been reported to show greater sensitivity to facial expression than males from infancy through adulthood (McClure, 2000), we also included sex (2) as a between-subject factor in an initial analysis. However, sex was non-significant and did not interact with any factors of interest, so it was excluded from further analysis. Order of stimulus presentation (mother first vs stranger first) and maximum positive and negative motion were included as covariates. All contrasts were Bonferroni corrected. For the sake of brevity only significant or marginal results are reported here, but see Supplementary Table S3 for full results.

Results of the ANCOVA showed two significant/marginally significant results: there was a trend toward a main effect of age group, and an interaction between age group and expression.

The main effect of age group reflected greater overall amygdala activation relative to baseline for adults than children at the level of a trend, F(2, 34) = 2.92, P = 0.07, partial η² = 0.15. Corrected pair-wise comparisons revealed that adults showed greater activation than younger children at the level of a trend, P = 0.07, but the difference between adults and older children was not significant, P > 0.1, suggesting a linear increase in amygdala activation over baseline with age.
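The effect size reported for the age-group main effect can be recovered from the F statistic and its degrees of freedom using the standard identity partial η² = (F × df_effect) / (F × df_effect + df_error). A minimal sketch follows; the function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared computed from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# Age-group main effect: F(2, 34) = 2.92
effect_size = partial_eta_squared(2.92, 2, 34)
```

Here `effect_size` is approximately 0.147, which rounds to the reported value of 0.15.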

To further probe the effect of age group seen in the ANCOVA we regressed amygdala activation on age in the combined group of 14 adults and 31 children, including motion as a covariate. Results revealed that activation for faces relative to scrambled images increased linearly with age, ΔR² = 0.10, P < 0.05. Within the group of children alone there was also a moderate effect of age, showing greater activation relative to baseline with age, ΔR² = 0.11, P < 0.05.
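The change in R² attributable to age can be illustrated as the difference in variance explained between a model containing only the covariate and a model that also contains age. The sketch below assumes a single motion covariate and uses hypothetical function names; it illustrates the general technique and does not reproduce the SPSS analysis.

```python
import numpy as np

def r_squared(y, predictors):
    """Proportion of variance in y explained by an OLS model with an intercept."""
    X = np.column_stack([np.ones(len(y))] + list(predictors))
    betas, *_ = np.linalg.lstsq(X, y, rcond=None)
    residuals = y - X @ betas
    return 1.0 - residuals.var() / y.var()

def delta_r_squared(activation, age, motion):
    """Variance in activation explained by age beyond the motion covariate."""
    reduced = r_squared(activation, [motion])     # step 1: covariate only
    full = r_squared(activation, [motion, age])   # step 2: covariate plus age
    return full - reduced
```

Because the two models are nested, the difference cannot be negative apart from rounding error, and it can be read as the share of variance uniquely associated with age.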

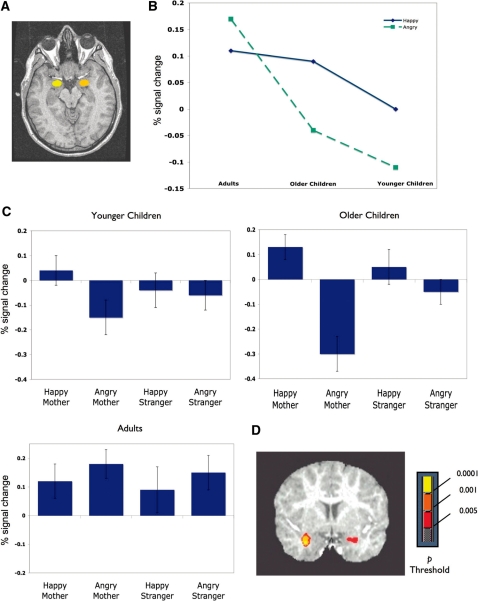

The 3 × 2 × 2 × 2 ANCOVA also revealed an age group × expression interaction, F(2, 34) = 4.48, P < 0.05, partial η² = 0.20. Planned contrasts showed greater activation for happy than angry faces for both the older children (P < 0.005) and the younger children (P < 0.05) (Figure 2). Adults showed the opposite pattern of greater activation for angry than happy faces, but the contrast was not significant, P = 0.22. Contrasts also revealed a linear increase in amygdala response to angry faces, with greatest activation for adults and least activation for the younger children, P < 0.01 (Figure 2).

Fig. 2.

Amygdala ROI. (A) Example amygdala ROI in a representative participant. Yellow and orange denote masks drawn over left and right amygdala, respectively. (B) Age Group by Expression interaction: Both older (N = 17, mean age = 6.9 years) and younger children (N = 14, mean age = 4.8 years) showed significantly greater activation for happy than angry faces. The y-axis shows percent signal change from the scrambled image baseline. (C) Activation for happy and angry mothers and strangers in the two groups of children and in adults. Both groups of children show greater activation to happy than angry faces. (D) Results of voxelwise analysis of 14 adults and 14 motion-equated children (4.3–8.9 years, mean age = 6.3 years) showing the contrast happy–angry in the children.

As a confirmatory measure we performed a follow-up ANCOVA comparing only those adults (N = 10, 4 female) and children (N = 18, 12 female, aged 4.3–8.3 years, mean age = 6.6 years) whose overall amygdala activation was greater for faces than the scrambled image baseline. Again the same pattern of effects emerged. The marginal main effect of age group, F(1, 20) = 4.03, P = 0.06, partial η² = 0.17, showing greater amygdala activation over baseline with age, was qualified by an age group × expression interaction, F(1, 20) = 4.76, P < 0.05, partial η² = 0.19. Children showed greater activation to happy than angry faces, P = 0.005, whereas adults did not.

Thus, convergent analyses revealed that children, but not adults, showed greater amygdala activation to happy than angry faces. In addition, amygdala activation over baseline, in particular in response to angry faces, increased linearly with age.

Voxelwise analyses

To further investigate extended network responses to facial expression and familiarity, we used four-way mixed model ANOVAs using AFNI’s GroupAna program implemented in MATLAB (Mathworks Inc., Natick, MA, USA), with age group as a between-subject factor, expression (angry vs happy) and familiarity (mother vs stranger) as fixed factors, and subject as a random factor. We performed two separate analyses so that comparison groups would be equated for motion. In Analysis 1, which compared adults and children, age groups were composed of 14 adults and 14 children whose motion parameters did not differ significantly from those of adults (4.3–8.9 years, mean age = 6.3 years, 12 female). In Analysis 2, which investigated age-related activation within the group of children, age groups were again composed of 14 younger children (3.8–6.2 years, mean age = 4.8 years, 8 female) and 17 older children (6.5–8.9 years, mean age = 6.9 years, 12 female).

Voxelwise analyses in adults and low-motion children revealed a main effect of age group in a number of regions, primarily right lateralized (Table 1), including a robust activation in the right putamen. Contrasts revealed that all of these regions showed greater activation for adults than children. At a relatively lenient threshold of P < 0.005, typically face-sensitive regions of right fusiform gyrus also showed greater activation for adults.

Table 1.

Results of ANOVA with age group, familiarity and expression as factors in 14 adults and 14 children matched for motion

| Volume (mm³) | Direction | Hemisphere | Brain region (peak activation) | F | x | y | z |
|---|---|---|---|---|---|---|---|
| Main effect of group | | | | | | | |
| 1215 | A > C | R | Precentral gyrus/BA 6, extending to medial frontal gyrus | 18.32 | 44 | −7 | 44 |
| 1053 | A > C | R | Inferior parietal lobule/BA 40 | 22.87 | 44 | −52 | 47 |
| 675 | A > C | R | Putamen** | 27.25 | 14 | 5 | 5 |
| 594 | A > C | R | Superior frontal gyrus/BA 6 | 17.45 | 23 | −7 | 59 |
| 486 | A > C | R | Superior frontal gyrus | 23.32 | 23 | −16 | 44 |
| 459 | A > C | R | Mid-cingulate/superior frontal gyrus | 18.67 | 5 | 2 | 50 |
| 270 | A > C | R | Middle frontal gyrus/BA 8 | 20.31 | 41 | 26 | 41 |
| 243 | A > C | L | Posterior cingulate gyrus | 16.64 | −19 | −40 | 29 |
| 216 | A > C | L | Superior frontal gyrus | 16.46 | −22 | −13 | 50 |
| 189 | A > C | R | Anterior cingulate cortex/BA 24 | 15.93 | 5 | 23 | 23 |
| 162 | A > C | L | Anterior cingulate cortex | 15.51 | −10 | 11 | 41 |
| 1917 | A > C | R | Middle temporal gyrus, extending to STS and fusiform gyrus* | 13.19 | 62 | −55 | −1 |
| Main effect of familiarity | | | | | | | |
| 1323 | M > S | L | ACC/BAs 24 and 32, extending to left middle frontal gyrus** | 30.21 | −7 | 35 | 8 |
| 999 | S > M | R | Occipital gyrus | 20.4 | 23 | −88 | 8 |
| 324 | S > M | R | Striate cortex/BA 18 | 17.39 | 5 | −82 | −7 |
| 216 | S > M | R | Striate cortex/BA 18 | 16.48 | 8 | −94 | 17 |
| 162 | S > M | L | Occipital gyrus | 16.98 | −16 | −73 | −7 |
| Main effect of expression | | | | | | | |
| 1080 | A > H | L | Inferior frontal sulcus/inferior frontal gyrus | 18.2 | −43 | 26 | 14 |
| 243 | H > A | R | Insula | 19.43 | 47 | 8 | 5 |
| 216 | H > A | L | Occipital gyrus | 18.07 | −19 | −91 | 2 |
| Group × expression interaction | | | | | | | |
| 486 | C: H > A | L | Amygdala | 13.59 | −25 | −3 | −19 |
| 405 | A: A > H; C: H > A | R | Putamen** | 20.61 | 26 | −7 | −7 |

Unless otherwise noted, clusters are significant at P < 0.001, uncorrected for multiple comparisons. Effect of Group: A = Adult, C = Child. Effect of Familiarity: M = Mother, S = Stranger. Effect of Expression: H = Happy, A = Angry. All tables use the LPI coordinate system, with positive numbers indicating right, anterior and superior in relation to the midpoint (0), and negative numbers indicating left, posterior and inferior.

Significant at **P < 0.0001 and *P < 0.005.

There was a main effect of familiarity in a region of left genual anterior cingulate cortex (ACC), which was preferentially activated for mothers across all participants (Table 1).

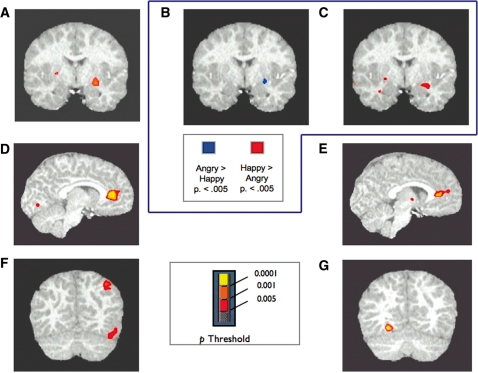

An age group × expression interaction (Figure 2D) showed greater amygdala activation in children for happy than angry faces (Table 1), as found in the ROI analysis. The right putamen also showed an age group × expression interaction (Figure 3A), with children showing greater activation for happy than angry faces. In this region, adults showed the opposite pattern of greater activation for angry than happy faces (Figure 3B and C).

Fig. 3.

(A) Results of voxelwise analysis of 14 adults and 14 motion-matched children showing the interaction between age group and expression in a region centered on the putamen. (B) Contrast between angry and happy faces in the group of 14 adults revealing greater putamen activation for angry than happy faces at a threshold of P < 0.005. (C) Contrast between angry and happy faces in the group of 14 children revealing greater putamen activation for happy than angry faces at a threshold of P < 0.005. (D) Results of voxelwise analysis of 14 adults and 14 motion-matched children showing greater activation in anterior cingulate cortex for mothers than strangers. (E) Results of voxelwise analysis of 17 older and 14 younger children, showing greater activation in anterior cingulate cortex for mothers than strangers. (F) Results of voxelwise analysis with 14 adults and 14 motion-matched children showing greater activation in the right fusiform gyrus and inferior parietal lobule for adults than children. (G) Results of voxelwise analysis with 17 older children and 14 younger children showing greater activation in the left fusiform gyrus for younger than older children.

In Analysis 2, investigating older vs younger children, few regions showed an effect of age group; however, at a threshold of P < 0.005, a region of left fusiform gyrus showed greater activation in younger than older children (Table 2). The amygdala and parahippocampal gyrus showed a main effect of expression, and contrasts revealed greater activation for happy than angry faces (Table 2), again confirming results found in the ROI analysis. Notably, a main effect of familiarity was found for the same region of genual ACC found in the adult/child analysis, and contrasts showed this region to be more responsive to mothers than strangers (Table 2).

Table 2.

Results of ANOVA with age group, familiarity and expression as factors in 17 older children (mean age = 6.9 years) and 14 younger children (mean age = 4.8 years)

| Volume | Direction | Hemisphere | Brain region (peak activation) | F | x | y | z |
|---|---|---|---|---|---|---|---|
| Main effect of group | | | | | | | |
| 189 | Y > O | L | Fusiform gyrus | 14.7 | −22 | 55.5 | −10 |
| Main effect of familiarity | | | | | | | |
| 1782 | M > S | L | ACC/BA 24** | 14.27 | −16 | 29 | 14 |
| 162 | M > S | L | Precentral gyrus/middle frontal gyrus | 16.19 | −28 | 2 | 35 |
| Main effect of expression | | | | | | | |
| 675 | H > A | L | Extended amygdala/parahippocampal gyrus** | 23.32 | −28 | 2 | −22 |
| 540 | H > A | L | Parahippocampal gyrus/entorhinal cortex/BA 28 | 20.58 | −22 | −19 | −22 |
| 270 | H > A | R | Parahippocampal gyrus/entorhinal cortex/BA 36 | 17.65 | 26 | −28 | −16 |
| 243 | H > A | L | Fusiform gyrus | 19.06 | 31.5 | 37.5 | −15.5 |

Unless otherwise noted, all clusters are significant at P < 0.001, uncorrected. Effect of Group: Y = Younger, O = Older. Effect of Familiarity: M = Mother, S = Stranger. Effect of Expression: H = Happy, A = Angry.

Significant at **P < 0.0001.

Thus, the same region of ACC responded preferentially to mothers’ faces across all participants both in the analysis of adults and motion-matched children and in the analysis of younger and older children. To confirm that this effect could be consistently observed in the youngest and the oldest age groups, we performed follow-up analyses in the 14 youngest children and the 14 adults separately. Results again showed greater activation for mothers than strangers in this region both for the younger children (P < 0.005, peak activation x = −1, y = 29, z = 8) and for the adults (P < 0.005, x = −7, y = 38, z = 8), indicating that the effect was present independently in each of these groups.

DISCUSSION

Age-related patterns of amygdala activation

The primary goal of the present study was to compare amygdala activation to personally salient emotional stimuli in young children vs adults. Our results show that even in the youngest children the amygdala responds differentially to happy and angry facial expressions. To our knowledge, the present study is the first to report amygdala sensitivity to facial emotion in a population of children this young. Notably, in the comparison of children and adults, preferential amygdala response to valence depended on age group, with children and adults showing different response patterns to specific emotions: Children showed greater amygdala activation for happy than angry faces, whereas amygdala activation in young adults did not discriminate significantly between the two expressions. In addition, amygdala activation for faces over baseline, and in particular for angry faces, increased linearly with age.

By indicating that the amygdala is sensitive to positive emotion in kindergarten-aged children, these data add to a growing body of literature demonstrating the sensitivity of the amygdala and extended amygdalar complex to positive emotion (Liberzon et al., 2003; Paton et al., 2006; Sergerie et al., 2008). Recent studies have also found that preferential amygdala activation can be modulated by contextual factors, such as top-down attentional processes (Pessoa et al., 2002; Ochsner et al., 2004; Bishop et al., 2007) and personal goals (Cunningham et al., 2008). Our data add to a growing body of research suggesting that patterns of preferential amygdala activation may also shift with development.

The age-related differences we found in amygdala activation to positive vs negative valence suggest a similar pattern to that revealed by studies comparing aging populations with young adults. These studies have shown that whereas young adults show greater attentional capture by negative events, and equal amygdala response to positive and negative stimuli (Mather et al., 2004; Sergerie et al., 2008), older adults show equal capture by positive and negative arousing images, and show relatively greater amygdala activation for positive images (Mather et al., 2004; Rosler et al., 2005). Developmental research has also shown that amygdala responses to facial expression change between adolescence and adulthood. Previous studies have shown that adolescents and adults exhibit similar levels of amygdala activation in response to facial emotion, but that adolescents respond preferentially to certain emotional expressions somewhat differently than do adults (Baird et al., 1999; Thomas et al., 2001a,b; Yurgelun-Todd and Killgore, 2006; Killgore and Yurgelun-Todd, 2007). For example, adolescents have been found to respond more to sad faces than do adults (Killgore and Yurgelun-Todd, 2007), and pre-adolescent children have been found to show preferential activation for neutral over fearful faces, unlike adults who showed the opposite pattern of response (Thomas et al., 2001b). Thus a growing body of evidence suggests that amygdala responses to specific emotional expressions differ across developmental periods.

Adding to this developmental picture, our results demonstrate how the amygdala responds preferentially to specific emotional expressions in young children compared to young adults. Thus the present study extends research on development of amygdala sensitivity to facial emotion to younger children, and suggests that 3–6- and 6–8-year-old children show distinct patterns of response to positive and negative facial emotion compared to young adults. This pattern of neural response may reflect behavioral evidence of a positivity bias similar to that found in older adults, as recent studies suggest (Boseovski and Lee, 2008; van Duijvenvoorde et al., 2008). It may also reflect an immature capacity to evaluate angry facial expressions: 4–8-year-old children are better at recognizing and categorizing happy than angry faces, especially at low levels of emotional intensity (Durand et al., 2007; Gao and Maurer, 2009; Vida and Mondloch, 2009). In our study, we asked mothers to pose lower-intensity expressions that were reprimanding rather than extremely angry, in order to avoid responses based on novelty or bizarreness. As recent research has implicated the amygdala in explicit evaluation of facial expressions (Tsuchiya et al., 2009), as well as implicit responses to salient stimuli, it is possible that reduced amygdala activation to angry vs happy faces in young children partly reflects their better ability to evaluate and categorize lower-intensity happy faces.

Alternatively, our results may reflect developmental processes unique to childhood. As preferential amygdala activation for faces over baseline increased with age, and more markedly for angry than happy faces, it is possible that our results reflect an earlier development of preferential responses to happy faces that may be linked to the earlier-developing capacity to categorize positive expressions. Thus happy faces may be more meaningful to young children because they are more recognizable. It is also possible that the non-significant preferential response to angry faces we observed reflects the low intensity of the angry expressions we used. Finally, we cannot rule out the explanation that, as the mothers of adults were older than the mothers of young children, the effects of age for faces over baseline may be influenced by the difference in age of the faces posing the expressions, although we believe that the age of the participants themselves, not the mothers, is the more critical factor.

Overall, our results suggest that the amygdala responds differentially to positive and negative facial emotions in the kindergarten/early school years. At the same time, the data indicate that, with age, the amygdala responds increasingly to faces over scrambled images, a finding in keeping with evidence of the protracted structural development of the amygdala (Giedd, 2008). Thus, although young children discriminate facial emotion, their overall pattern of activation in response to emotional faces is still developing.

Whole-brain analysis of extended face processing

The two main voxelwise analyses explored age-related activation in response to facial familiarity and emotion by comparing adults and motion-matched children, and by comparing older and younger children. In the adult/child comparison, an interaction between group and emotional valence was found, with greater extended amygdala activation in children for happy than angry faces, confirming the results of the ROI analyses. This analysis also revealed a region of right putamen that showed greater activation for happy than angry faces in children and greater activation for angry than happy faces in adults (Figure 3A–C). The putamen plays a role in dopaminergic systems mediating reward prediction and saliency processing (Downar et al., 2003; McClure et al., 2003; Pessiglione et al., 2006; Seeley et al., 2007), has been linked to evaluation of salient aspects of faces, including trustworthiness (Todorov et al., 2008) and maternal attachment (Strathearn et al., 2008), and may be co-activated with the amygdala within extended saliency networks (Seeley et al., 2007; Popescu et al., 2009). Here the putamen shows a similar pattern of age-related differences to the amygdala, consistent with a picture of either differential evaluation of reward or changing salience for negative and positive expressions over development. In the older/younger children comparison, as in the ROI analysis, greater activation was found in the amygdala/parahippocampal gyrus for happy over angry faces for all children.

In addition, both comparisons revealed a region of left rostral ACC (Figure 3D and E) that showed a remarkably consistent response across age, from 3 to 33 years of age. This region at the genu of the ACC has been reliably implicated in viewing and evaluating both oneself and similar others, including mothers (Craik et al., 1999; Ramasubbu et al., 2007; Zhu et al., 2007; Mitchell et al., 2008; Taylor et al., 2008; Vanderwal et al., 2008). To our knowledge, the present study is the first to report a similar pattern of activation in young children. In adults, convergent findings indicate a graded pattern of response, in which genual ACC is activated more for self than for mothers, and more for mothers than for familiar others (Mitchell et al., 2006; Ramasubbu et al., 2007; Zhu et al., 2007; Vanderwal et al., 2008). Such results are often interpreted in terms of overlapping self/other representations or person-knowledge networks (but see Legrand and Ruby, 2009).

Developmental ERP research suggests that while frontal neural activity may shift in response to mothers’ vs unfamiliar faces between late infancy and early childhood (Carver et al., 2003), electrophysiological activity over frontal regions discriminates between mothers’ and strangers’ faces from early in development (Carver et al., 2003; Todd et al., 2008). Building on these data, we conclude that activity in prefrontal regions subserving self/other evaluation can be consistently observed by ages 3–8 years, at least when stimuli are sufficiently meaningful. This conclusion is consistent both with recent evidence suggesting that ventral prefrontal regions associated with seeing important others are active from infancy (Strathearn et al., 2008), and with models predicting prefrontal activation in response to salient stimuli early in development (Johnson et al., 2009).

Finally, although our focus was not on core face processing regions, in the voxelwise comparison of adults with children, we found more activation for adults than children in canonical face-sensitive regions in the right fusiform gyrus at a more lenient threshold. This finding is consistent with existing research suggesting protracted development of specialization within these regions (Aylward et al., 2005; Golarai et al., 2007; Scherf et al., 2007). Furthermore, younger children showed greater left fusiform activation than did older children, a finding consistent with ERP evidence suggesting increasing right lateralization of face processing with age (Taylor et al., 1999). Apart from this finding, age-related differences in responses to faces over baseline were found between the ages of 8 years and adulthood, and not within the range of 3–8 years. This overall pattern is consistent with studies that indicate that major developmental changes in the neural substrates of face recognition and evaluation take place between mid-childhood and mid-adolescence (Kolb et al., 1992; Batty and Taylor, 2006).

It should be noted that our design included repeated presentations of an individual posing a single emotion within a block, although in each block we included five exemplars of each individual. It is possible that the amygdala and anterior cingulate results reported here may at least partly reflect differential habituation to repetition of different expressions as a function of age (amygdala) or related to familiarity across all age groups (anterior cingulate). Currently, the question of how emotional expression and repetition interact in the amygdala is unresolved. While some groups have found that, in adults, the amygdala is differentially sensitive to repetition of fearful vs neutral faces (Ishai et al., 2004), other groups have found that amygdala habituation does not differ between fearful and neutral expressions (Fischer et al., 2003). Repetition effects related to personal familiarity (using different exemplars of the same face) have been found in medial frontal regions (Pourtois et al., 2005), although not in the anterior cingulate region we report. Our block design did not allow us to directly measure effects of repetition suppression in order to probe mechanisms behind the patterns of differential activation that we found. Since the present study indicates that age-related differences in response to valence can be observed in the amygdala and putamen, and differential activation can be observed across age groups in the anterior cingulate, an important area for future research will be to investigate mechanisms underlying such differences. Such studies will add considerably to our understanding of how neural processing of salient faces changes over development.

CONCLUSIONS

This study presents novel data on neural responses to facial expression and familiarity in early childhood, in particular data on age-related differences in amygdala activation to facial emotion. Our data show that, whereas amygdala activation to emotional faces develops gradually over childhood, ACC sensitivity to mothers’ faces emerges early and remains stable. Furthermore, taken with research on aging and adolescence, our data contribute to an emerging developmental picture in which preferential amygdala processing of specific emotions changes over the lifespan.

SUPPLEMENTARY DATA

Supplementary data are available at SCAN online.

Acknowledgments

The authors are very grateful to Luiz Pessoa, Srikanth Padmala and the Laboratory for Cognition and Emotion at Indiana University for generously devoting their expertise and resources to the data analysis. They would also like to thank Evan Thompson for editorial help, their anonymous reviewers, and Tammy Rayner for her expert help with scanning their young subjects. The research was funded by a Natural Sciences and Engineering Research Council (NSERC) postgraduate scholarship to RMT, a Restracomp scholarship from the Sick Kids Foundation Student Scholarship Program to JWE, and a Canadian Institutes of Health Research (CIHR) grant to MJT (MOP-81161).

REFERENCES

- Adolphs R. Fear, faces, and the human amygdala. Current Opinion in Neurobiology. 2008;18:166–72. doi: 10.1016/j.conb.2008.06.006.

- Adolphs R, Gosselin F, Buchanan TW, et al. A mechanism for impaired fear recognition after amygdala damage. Nature. 2005;433:68–72. doi: 10.1038/nature03086.

- Allman JM, Hakeem A, Erwin JM, et al. The anterior cingulate cortex. The evolution of an interface between emotion and cognition. Annals of the New York Academy of Sciences. 2001;935:107–17.

- Anderson AK, Christoff K, Stappen I, et al. Dissociated neural representations of intensity and valence in human olfaction. Nature Neuroscience. 2003;6:196–202. doi: 10.1038/nn1001.

- Anderson AK, Phelps EA. Lesions of the human amygdala impair enhanced perception of emotionally salient events. Nature. 2001;411:305–9. doi: 10.1038/35077083.

- Anderson AK, Sobel N. Dissociating intensity from valence as sensory inputs to emotion. Neuron. 2003;39:581–3. doi: 10.1016/s0896-6273(03)00504-x.

- Aylward EH, Park JE, Field KM, et al. Brain activation during face perception: evidence of a developmental change. Journal of Cognitive Neuroscience. 2005;17:308–19. doi: 10.1162/0898929053124884.

- Baird AA, Gruber SA, Fein DA, et al. Functional magnetic resonance imaging of facial affect recognition in children and adolescents. Journal of the American Academy of Child and Adolescent Psychiatry. 1999;38:195–9. doi: 10.1097/00004583-199902000-00019.

- Barrera ME, Maurer D. The perception of facial expressions by the three-month-old. Child Development. 1981;52:203–6.

- Batty M, Taylor MJ. The development of emotional face processing during childhood. Developmental Science. 2006;9:207–20. doi: 10.1111/j.1467-7687.2006.00480.x.

- Bishop SJ, Jenkins R, Lawrence AD. Neural processing of fearful faces: effects of anxiety are gated by perceptual capacity limitations. Cerebral Cortex. 2007;17:1595–603. doi: 10.1093/cercor/bhl070.

- Blair RJ, Morris JS, Frith CD, et al. Dissociable neural responses to facial expressions of sadness and anger. Brain. 1999;122:883–93. doi: 10.1093/brain/122.5.883.

- Boseovski JJ, Lee K. Seeing the world through rose-colored glasses? Neglect of consensus information in young children's personality judgments. Social Development. 2008;17:399–416. doi: 10.1111/j.1467-9507.2007.00431.x.

- Boyatzis CJ, Chazan E, Ting CZ. Preschool children's decoding of facial emotions. Journal of Genetic Psychology. 1993;154:375–82. doi: 10.1080/00221325.1993.10532190.

- Camras LA, Allison K. Children's understanding of emotional facial expressions and verbal labels. Journal of Nonverbal Behavior. 1985;9:84–94.

- Carver LJ, Dawson G, Panagiotides H, et al. Age-related differences in neural correlates of face recognition during the toddler and preschool years. Developmental Psychobiology. 2003;42:148–59. doi: 10.1002/dev.10078.

- Charles ST, Mather M, Carstensen LL. Aging and emotional memory: the forgettable nature of negative images for older adults. Journal of Experimental Psychology: General. 2003;132:310–24. doi: 10.1037/0096-3445.132.2.310.

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Computers and Biomedical Research. 1996;29:162–73. doi: 10.1006/cbmr.1996.0014.

- Craik FIM, Moroz TM, Moscovitch M, et al. In search of the self: a positron emission tomography study. Psychological Science. 1999;10:26–34.

- Cunningham WA, Van Bavel JJ, Johnsen IR. Affective flexibility: evaluative processing goals shape amygdala activity. Psychological Science. 2008;19:152–60. doi: 10.1111/j.1467-9280.2008.02061.x.

- Davis FC, Johnstone T, Mazzulla EC, et al. Regional response differences across the human amygdaloid complex during social conditioning. Cerebral Cortex. 2009. doi: 10.1093/cercor/bhp126. [Epub ahead of print]

- Downar J, Mikulis DJ, Davis KD. Neural correlates of the prolonged salience of painful stimulation. Neuroimage. 2003;20:1540–51. doi: 10.1016/s1053-8119(03)00407-5.

- Durand K, Gallay M, Seigneuric A, et al. The development of facial emotion recognition: the role of configural information. Journal of Experimental Child Psychology. 2007;97:14–27. doi: 10.1016/j.jecp.2006.12.001.

- Eccles J, Midgely C, Adler TF. Grade-related changes in the school environment: effects on achievement motivation. In: Nichols JG, editor. The Development of Achievement and Motivation. Greenwich, CT: JAI Press; 1984. pp. 283–331.

- Fischer H, Wright CI, Whalen PJ, et al. Brain habituation during repeated exposure to fearful and neutral faces: a functional MRI study. Brain Research Bulletin. 2003;59:387–92. doi: 10.1016/s0361-9230(02)00940-1.

- Gaillard WD, Grandin CB, Xu B. Developmental aspects of pediatric fMRI: considerations for image acquisition, analysis, and interpretation. Neuroimage. 2001;13:239–49. doi: 10.1006/nimg.2000.0681.

- Gao X, Maurer D. Influence of intensity on children's sensitivity to happy, sad, and fearful facial expressions. Journal of Experimental Child Psychology. 2009;102:503–21. doi: 10.1016/j.jecp.2008.11.002.

- Giedd JN. The teen brain: insights from neuroimaging. Journal of Adolescent Health. 2008;42:335–43. doi: 10.1016/j.jadohealth.2008.01.007.

- Giedd JN, Blumenthal J, Jeffries NO, et al. Brain development during childhood and adolescence: a longitudinal MRI study. Nature Neuroscience. 1999;2:861–3. doi: 10.1038/13158.

- Gobbini MI, Haxby JV. Neural systems for recognition of familiar faces. Neuropsychologia. 2007;45:32–41. doi: 10.1016/j.neuropsychologia.2006.04.015.

- Gogtay N, Giedd JN, Lusk L, et al. Dynamic mapping of human cortical development during childhood through early adulthood. Proceedings of the National Academy of Sciences USA. 2004;101:8174–9. doi: 10.1073/pnas.0402680101.

- Golarai G, Ghahremani DG, Whitfield-Gabrieli S, et al. Differential development of high-level visual cortex correlates with category-specific recognition memory. Nature Neuroscience. 2007;10:512–22. doi: 10.1038/nn1865.

- Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends in Cognitive Sciences. 2000;4:223–33. doi: 10.1016/s1364-6613(00)01482-0.

- Ishai A, Pessoa L, Bikle PC, et al. Repetition suppression of faces is modulated by emotion. Proceedings of the National Academy of Sciences USA. 2004;101:9827–32. doi: 10.1073/pnas.0403559101.

- Itier RJ, Taylor MJ. Face recognition memory and configural processing: a developmental ERP study using upright, inverted, and contrast-reversed faces. Journal of Cognitive Neuroscience. 2004;16:487–502. doi: 10.1162/089892904322926818.

- Johnson MH, Grossmann T, Cohen Kadosh K. Mapping functional brain development: Building a social brain through interactive specialization. Developmental Psychology. 2009;45:151–9. doi: 10.1037/a0014548.

- Kennedy DP, Glascher J, Tyszka JM, et al. Personal space regulation by the human amygdala. Nature Neuroscience. 2009;12:1226–7. doi: 10.1038/nn.2381.

- Killgore WD, Yurgelun-Todd DA. Sex differences in amygdala activation during the perception of facial affect. Neuroreport. 2001;12:2543–7. doi: 10.1097/00001756-200108080-00050.

- Killgore WD, Yurgelun-Todd DA. Unconscious processing of facial affect in children and adolescents. Social Neuroscience. 2007;2:28–47. doi: 10.1080/17470910701214186.

- Kolb B, Wilson B, Taylor L. Developmental changes in the recognition and comprehension of facial expression: implications for frontal lobe function. Brain and Cognition. 1992;20:74–84. doi: 10.1016/0278-2626(92)90062-q.

- Legrand D, Ruby P. What is self-specific? Theoretical investigation and critical review of neuroimaging results. Psychological Review. 2009;116:252–82. doi: 10.1037/a0014172.

- Liberzon I, Phan KL, Decker LR, et al. Extended amygdala and emotional salience: a PET activation study of positive and negative affect. Neuropsychopharmacology. 2003;28:726–33. doi: 10.1038/sj.npp.1300113.

- Mather M, Canli T, English T, et al. Amygdala responses to emotionally valenced stimuli in older and younger adults. Psychological Science. 2004;15:259–63. doi: 10.1111/j.0956-7976.2004.00662.x.

- Mather M, Carstensen LL. Aging and attentional biases for emotional faces. Psychological Science. 2003;14:409–15. doi: 10.1111/1467-9280.01455.

- McClure EB. A meta-analytic review of sex differences in facial expression processing and their development in infants, children, and adolescents. Psychological Bulletin. 2000;126:424–53. doi: 10.1037/0033-2909.126.3.424.

- McClure SM, Daw ND, Montague PR. A computational substrate for incentive salience. Trends in Neurosciences. 2003;26:423–8. doi: 10.1016/s0166-2236(03)00177-2.

- Mitchell JP, Macrae CN, Banaji MR. Dissociable medial prefrontal contributions to judgments of similar and dissimilar others. Neuron. 2006;50:655–63. doi: 10.1016/j.neuron.2006.03.040.

- Ochsner KN, Ray RD, Cooper JC, et al. For better or for worse: neural systems supporting the cognitive down- and up-regulation of negative emotion. Neuroimage. 2004;23:483–99. doi: 10.1016/j.neuroimage.2004.06.030.

- Palermo R, Rhodes G. Are you always on my mind? A review of how face perception and attention interact. Neuropsychologia. 2007;45:75–92. doi: 10.1016/j.neuropsychologia.2006.04.025.

- Paton JJ, Belova MA, Morrison SE, et al. The primate amygdala represents the positive and negative value of visual stimuli during learning. Nature. 2006;439:865–70. doi: 10.1038/nature04490.

- Pessiglione M, Seymour B, Flandin G, et al. Dopamine-dependent prediction errors underpin reward-seeking behaviour in humans. Nature. 2006;442:1042–5. doi: 10.1038/nature05051.

- Pessoa L. On the relationship between emotion and cognition. Nature Reviews Neuroscience. 2008;9:148–58. doi: 10.1038/nrn2317.

- Pessoa L, Kastner S, Ungerleider LG. Attentional control of the processing of neutral and emotional stimuli. Brain Research: Cognitive Brain Research. 2002;15:31–45. doi: 10.1016/s0926-6410(02)00214-8.

- Phan KL, Taylor SF, Welsh RC, et al. Activation of the medial prefrontal cortex and extended amygdala by individual ratings of emotional arousal: a fMRI study. Biological Psychiatry. 2003;53:211–5. doi: 10.1016/s0006-3223(02)01485-3.

- Popescu AT, Popa D, Pare D. Coherent gamma oscillations couple the amygdala and striatum during learning. Nature Neuroscience. 2009;12:801–7. doi: 10.1038/nn.2305.

- Pourtois G, Schwartz S, Seghier ML, et al. View-independent coding of face identity in frontal and temporal cortices is modulated by familiarity: an event-related fMRI study. Neuroimage. 2005;24:1214–24. doi: 10.1016/j.neuroimage.2004.10.038.

- Qu L, Zelazo PD. The facilitative effect of positive stimuli on childrens' flexible rule use. Cognitive Development. 2007;22:456–73.

- Ramasubbu R, Masalovich S, Peltier S, et al. Neural representation of maternal face processing: a functional magnetic resonance imaging study. Canadian Journal of Psychiatry. 2007;52:726–34. doi: 10.1177/070674370705201107.

- Rolls ET. The representation of information about faces in the temporal and frontal lobes. Neuropsychologia. 2007;45:124–43. doi: 10.1016/j.neuropsychologia.2006.04.019.

- Rosler A, Ulrich C, Billino J, et al. Effects of arousing emotional scenes on the distribution of visuospatial attention: changes with aging and early subcortical vascular dementia. Journal of Neurological Science. 2005;229–230:109–16. doi: 10.1016/j.jns.2004.11.007. [DOI] [PubMed] [Google Scholar]

- Scherf KS, Behrmann M, Humphreys K, et al. Visual category-selectivity for faces, places and objects emerges along different developmental trajectories. Developmental Science. 2007;10:F15–30. doi: 10.1111/j.1467-7687.2007.00595.x. [DOI] [PubMed] [Google Scholar]

- Seeley WW, Menon V, Schatzberg AF, et al. Dissociable intrinsic connectivity networks for salience processing and executive control. Journal of Neuroscience. 2007;27:2349–56. doi: 10.1523/JNEUROSCI.5587-06.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sergerie K, Chochol C, Armony JL. The role of the amygdala in emotional processing: a quantitative meta-analysis of functional neuroimaging studies. Neuroscience and Biobehavioral Reviews. 2008;32:811–30. doi: 10.1016/j.neubiorev.2007.12.002. [DOI] [PubMed] [Google Scholar]

- Strathearn L, Li J, Fonagy P, et al. What's in a smile? Maternal brain responses to infant facial cues. Pediatrics. 2008;122:40–51. doi: 10.1542/peds.2007-1566. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Talairach J, Tournoux P. Co-planar Stereotactic Atlas of the Human Brain. Stuttgart: Beorg Thieme Verlag; 1988. [Google Scholar]

- Taylor MJ, Arsalidou M, Bayless SJ, et al. Neural correlates of personally familiar faces: parents, partner and own faces. Human Brain Mapping. 2008;30:2008–20. doi: 10.1002/hbm.20646. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Taylor MJ, Batty M, Itier RJ. The faces of development: A review of early face processing over childhood. Journal of Cognitive Neuroscience. 2004;16:1426–42. doi: 10.1162/0898929042304732. [DOI] [PubMed] [Google Scholar]

- Taylor MJ, McCarthy G, Saliba E, et al. ERP evidence of developmental changes in processing of faces. Clinical Neurophysiology. 1999;110:910–5. doi: 10.1016/s1388-2457(99)00006-1. [DOI] [PubMed] [Google Scholar]

- Thomas KM, Drevets WC, Dahl RE, et al. Amygdala response to fearful faces in anxious and depressed children. Archives of General Psychiatry. 2001a;58:1057–63. doi: 10.1001/archpsyc.58.11.1057. [DOI] [PubMed] [Google Scholar]

- Thomas KM, Drevets WC, Whalen PJ, et al. Amygdala response to facial expressions in children and adults. Biological Psychiatry. 2001b;49:309–16. doi: 10.1016/s0006-3223(00)01066-0. [DOI] [PubMed] [Google Scholar]

- Todd RM, Anderson AK. Six degrees of separation: the amygdala regulates social behavior and perception. Nature Neuroscience. 2009;12:1217–8. doi: 10.1038/nn1009-1217.

- Todd RM, Lewis MD, Meusel LA, et al. The time course of social-emotional processing in early childhood: ERP responses to facial affect and personal familiarity in a Go-Nogo task. Neuropsychologia. 2008;46:595–613. doi: 10.1016/j.neuropsychologia.2007.10.011.

- Todorov A, Baron SG, Oosterhof NN. Evaluating face trustworthiness: a model based approach. Social Cognitive and Affective Neuroscience. 2008;3:119–27. doi: 10.1093/scan/nsn009.

- Tsuchiya N, Moradi F, Felsen C, et al. Intact rapid detection of fearful faces in the absence of the amygdala. Nature Neuroscience. 2009;12:1224–5. doi: 10.1038/nn.2380.

- Van Bavel JJ, Packer DJ, Cunningham WA. The neural substrates of in-group bias: a functional magnetic resonance imaging investigation. Psychological Science. 2008;19:1131–9. doi: 10.1111/j.1467-9280.2008.02214.x.

- van Duijvenvoorde AC, Zanolie K, Rombouts SA, et al. Evaluating the negative or valuing the positive? Neural mechanisms supporting feedback-based learning across development. Journal of Neuroscience. 2008;28:9495–503. doi: 10.1523/JNEUROSCI.1485-08.2008.

- Vanderwal T, Hunyadi E, Grupe DW, et al. Self, mother and abstract other: an fMRI study of reflective social processing. Neuroimage. 2008;41:1437–46. doi: 10.1016/j.neuroimage.2008.03.058.

- Vida MD, Mondloch CJ. Children's representations of facial expression and identity: identity-contingent expression aftereffects. Journal of Experimental Child Psychology. 2009;104:326–45. doi: 10.1016/j.jecp.2009.06.003.

- Vuilleumier P, Pourtois G. Distributed and interactive brain mechanisms during emotion face perception: evidence from functional neuroimaging. Neuropsychologia. 2007;45:174–94. doi: 10.1016/j.neuropsychologia.2006.06.003.

- Whalen PJ. The uncertainty of it all. Trends in Cognitive Sciences. 2007;11:499–500. doi: 10.1016/j.tics.2007.08.016.

- Wilke M, Schmithorst VJ, Holland SK. Assessment of spatial normalization of whole-brain magnetic resonance images in children. Human Brain Mapping. 2002;17:48–60. doi: 10.1002/hbm.10053.

- Wilke M, Schmithorst VJ, Holland SK. Normative pediatric brain data for spatial normalization and segmentation differs from standard adult data. Magnetic Resonance in Medicine. 2003;50:749–57. doi: 10.1002/mrm.10606.

- Yurgelun-Todd DA, Killgore WD. Fear-related activity in the prefrontal cortex increases with age during adolescence: a preliminary fMRI study. Neuroscience Letters. 2006;406:194–9. doi: 10.1016/j.neulet.2006.07.046.

- Zald DH. The human amygdala and the emotional evaluation of sensory stimuli. Brain Research: Brain Research Reviews. 2003;41:88–123. doi: 10.1016/s0165-0173(02)00248-5.

- Zhu Y, Zhang L, Fan J, et al. Neural basis of cultural influence on self-representation. Neuroimage. 2007;34:1310–6. doi: 10.1016/j.neuroimage.2006.08.047.