Abstract

Various kinds of normative judgments are an integral part of everyday life. We extended the scrutiny of social cognitive neuroscience into the domain of legal decisions, investigating two groups, lawyers and other academics, during moral and legal decision-making. While we found activation of brain areas comprising the so-called ‘moral brain’ in both conditions, there was stronger activation in the left dorsolateral prefrontal cortex and middle temporal gyrus particularly when subjects made legal decisions, suggesting that these were made with respect to more explicit rules and demanded more complex semantic processing. Comparing both groups, our behavioral data show that lawyers perceived themselves as emotionally less involved during normative decision-making in general. A group × condition interaction in the dorsal anterior cingulate cortex suggests that attention modulates normative decision-making depending on subjects’ normative expertise.

Keywords: legal decision-making, moral decision-making, neurolaw, fMRI, prefrontal cortex, neuroethics

INTRODUCTION

Normative judgments are ubiquitous in everyday life. For example, judging people as tall or short, or as beautiful or unsightly, refers to corresponding norms. Besides these examples of normativity in a wide sense, there is a particularly strong sense of normativity that concerns norms of right or wrong human conduct. One kind of such norms, namely moral norms, has previously been the subject of research in experimental psychology and in social, cognitive and affective neuroscience. Some researchers speak of a ‘moral brain’ comprising areas in the frontal, temporal and parietal lobes as well as limbic structures (see, e.g. Greene and Haidt, 2002; Moll and de Oliveira-Souza, 2007), that is, brain areas associated with a variety of social cognitive tasks. But moral norms are not the only norms of right or wrong human conduct. The domain of law, as it is formulated and applied, is another example of high relevance to our social life. What happens at the neural level when subjects engage in legal reasoning and judgment? Does brain activation during legal and moral decision-making overlap, or are different regions involved in the legal condition? And does such neuroscientific knowledge imply anything for our understanding of normative decision-making?

The tension between moral and legal norms is illustrated by debates in the scholarly literature, where the application of legal rules is contrasted with the reliance on moral intuitions (Goodenough, 2001; Goodenough and Prehn, 2004). The predominant view ideally conceives of law as purely rational, free from emotion and passion (Gewirtz, 1996). In light of recent scientific evidence emphasizing the role of emotion and intuition in moral perception and judgment (Haidt, 2001; Greene and Haidt, 2002; Heekeren et al., 2003; Greene et al., 2004; Moll et al., 2005; Koenigs et al., 2007; Ciaramelli et al., 2007), it is pertinent to ask whether legal judgments are also subject to people’s emotions and intuitions. Although we do not think that neuroimaging data can prove or disprove positions in the philosophy of law, we are convinced that such empirical investigations can shed new light on these rather theoretical debates.

Considering the recent discussion about the neuroscientific implications for the legal system (Goodenough and Prehn, 2004; Greene and Cohen, 2004; Garland, 2005; Zeki and Goodenough, 2006; Mobbs et al., 2007; Tovino, 2007; Gazzaniga, 2008), sometimes even referred to as ‘neurolaw’ (Wolf, 2008; Schleim et al., 2009), it is apparent that neuroimaging research has so far concentrated on investigating criminals and psychopaths (Blair, 2008; Yang et al., 2008) or on developing forensic applications such as lie detection (Sip et al., 2008; Spence et al., 2004). By contrast, we were interested in the impact that legal expertise has on the neural mechanisms of normative cognition and thus investigated two groups, experienced lawyers and other academics.

While a recent neuroimaging study addressed punishment of legal transgressions (Buckholtz et al., 2008), we focused on a more basic step in the process of legal decision-making that precedes the assessment of punishment, namely the decision whether an action is legally right or wrong. We constructed short stories that could be evaluated from a moral as well as a legal point of view, and subjects were confronted with these stories in our fMRI experiment. They had to decide whether the behavior described was right or wrong from one of these points of view. Because the assignment of stories to conditions was randomized across subjects, we were able to investigate the impact of context or framing (moral vs legal) independent of each story’s concrete content.

The rationale of our investigation was to test the following three hypotheses. First, we wanted to test whether activations within the ‘moral brain’ could also be found during legal judgment. Given that the evaluation of normative behaviors depends on the attribution of beliefs and intentions, as suggested by the so-called ‘Rawlsian’ model in moral psychology (Hauser, 2006; Huebner et al., 2009), we expected an overlap in brain regions related to mentalizing and theory of mind (TOM), such as the medial prefrontal cortex (Walter et al., 2004; Amodio and Frith, 2006; Singer, 2006; Lieberman, 2007) and the temporo-parietal junction (Gallagher et al., 2000; Saxe and Kanwisher, 2003; Frith and Singer, 2008; Adolphs, 2009), when comparing the moral and legal conditions with the neutral condition. With our second hypothesis, we wanted to investigate the differences between moral and legal judgment in light of the traditional understanding of law, which separates moral intuitions from the rational application of legal rules (Gewirtz, 1996; Goodenough, 2001). We thus expected stronger activation for legal decisions in areas related to rule-based decision-making, such as the dorsolateral prefrontal cortex (Miller and Cohen, 2001; Bunge, 2004). Third, we assumed that, as a function of expertise, lawyers would pay more attention to normatively salient features than other academics and would thus show less activation in areas related to the processing of emotions, such as the amygdala (Dalgleish, 2004), during normative decision-making.

MATERIALS AND METHODS

Participants

Our participants were 46 healthy adults with no reported history of psychiatric or neurological disorders. Four of them did not complete the experiment and two had incidental findings that were dealt with according to our ethical guidelines (Schleim et al., 2007). Of the 40 remaining subjects (22 male; 31.05 ± 4.02 years of age; 20.31 ± 1.91 years of education; all right-handed; mean ± s.d.), 20 were qualified lawyers who had passed the German second state examination and 20 were other academics matched for age (31.95 ± 3.69 vs 30.15 ± 4.22 years, respectively; P > 0.1), education (20.48 ± 1.31 vs 20.13 ± 1.91 years, respectively; P > 0.1) and gender (nine female in each group). Experimental procedures were approved by the local ethics committee and all subjects gave written informed consent.

Experimental design

We developed 36 target stories adapted from moral and legal issues in the media and in the scholarly literature, and 18 control stories taken from everyday life experience (see Supplementary Data), similar to the control stimuli of Greene and colleagues (Greene et al., 2001, 2004). Importantly, target stories were constructed such that they could be understood from the moral as well as the legal point of view. Instructions were randomized between subjects and were used to assign the normative cases to either the moral or the legal condition as follows. Each trial began with a 2 s presentation of a cue indicating the experimental condition (‘neutral’, ‘moral’ or ‘legal’), followed by the story presented together with the question whether the behavior was right from the personal, moral or legal point of view, respectively. As in previous research (Greene et al., 2001, 2004; Borg et al., 2006), subjects had as much time as necessary to make a decision and could answer either ‘yes, rightly’ or ‘no, not rightly’ using response buttons held in both hands. The decision ended the trial, which was then followed by a centered crosshair for 12 s to allow the blood-oxygen-level-dependent (BOLD) signal to return to baseline.

The stimuli were presented on a computer screen using fMRI-compatible video goggles (Nordic Neurolab, Bergen, Norway) and Presentation (Neurobehavioral Systems, Albany, CA, USA). The stories were split equally into two blocks of data acquisition (i.e. 27 stories per block) and presented in an individually randomized order for each subject. Before entering the MRI scanner, subjects received written instructions and practiced the task with four additional stories, two in the moral and two in the legal condition. They had to confirm that they understood the difference between both views before proceeding with the experiment.

After the fMRI experiment, we presented all 54 stories, together with each subject’s individual answers, in random order on a PC and asked subjects to rate whether the story touched them emotionally (emotion), how realistic they found it (reality), how difficult they found their decision (difficulty) and how certain they were about it (certainty). Answers were recorded on five-point Likert scales ranging from ‘not at all’ to ‘very much’ for each question.

We calculated condition × group ANOVAs for reaction time, emotion, reality, difficulty and certainty in order to test for main effects of condition (neutral, moral and legal), group (lawyers, other academics) and group × condition interactions. To ascertain whether normative context shaped the subjects’ decision, we calculated an endorsement score for each subject and condition by dividing the number of given yes-responses by the number of possible yes-responses. These were analysed with a condition × group ANOVA. Paired tests were used to find significant differences between the individual experimental conditions, corrected for multiple comparisons with Sidak’s method (Sidak, 1967). SPSS Statistics 17 (SPSS Inc., Chicago, IL, USA) was used for these statistical tests.
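The endorsement score and the Sidak correction described above can be sketched as follows. This is a minimal illustration, not the SPSS procedure used in the study; the function names and the example answers are hypothetical, and we assume that the number of possible yes-responses equals the number of trials a subject answered in a given condition (18 per condition in this design).

```python
from typing import Sequence


def endorsement_score(answers: Sequence[bool]) -> float:
    """Share of 'yes, rightly' answers among all trials of one condition.

    Assumption: every trial in the condition could have received a yes-response,
    so the denominator is simply the number of trials.
    """
    if not answers:
        raise ValueError("no trials for this condition")
    return sum(answers) / len(answers)


def sidak_adjust(p: float, m: int) -> float:
    """Sidak-adjusted p-value for one of m comparisons: 1 - (1 - p) ** m."""
    return 1.0 - (1.0 - p) ** m


# Hypothetical subject: 8 of 18 moral stories judged 'rightly'.
moral_answers = [True] * 8 + [False] * 10
print(round(endorsement_score(moral_answers), 3))  # 0.444

# Three pairwise condition comparisons (m/n, l/n, l/m).
print(round(sidak_adjust(0.01, 3), 4))  # 0.0297
```

Equivalently, each of the m comparisons can be tested against the per-comparison level 1 − (1 − α)^(1/m) instead of adjusting the p-values.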

MRI acquisition

Images were acquired using a 3 T Siemens (Erlangen, Germany) TRIO whole-body scanner and an eight-channel head-coil. A high-resolution T1-weighted whole-brain anatomical scan (1 mm³ voxel resolution, MPRAGE) was acquired prior to functional imaging. Functional images were acquired in 31 axial slices using an echo planar imaging (EPI) pulse sequence, with a TR of 1700 ms, a TE of 25 ms, a flip angle of 80°, a field of view of 192 × 192 mm², isotropic voxels of 3.03 mm and 0.75 mm interslice spacing. The first six images of each run were discarded for equilibration. On average, 620.89 (±81.78) volumes were recorded per functional run.

fMRI preprocessing and analysis

Data were preprocessed and analysed using BrainVoyager QX 1.9; the analysis of covariance (ANCOVA) was performed with the updated version 1.10.4 (Brain Innovation, Maastricht, The Netherlands). Each subject’s anatomical scan was manually transformed into Talairach space. Functional images were corrected for slice scan time and 3D motion, spatially smoothed with a 12 mm FWHM Gaussian filter to reduce differences in intersubject localization, and temporally filtered by removing linear trends and applying a high-pass filter (three cycles). Functional images were co-registered to the anatomical images using BrainVoyager’s alignment algorithms, individually improved by manual adjustment, and then transformed into Talairach space.
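Two of these preprocessing parameters can be made concrete with a little arithmetic. The sketch below converts the 12 mm FWHM of the Gaussian kernel into its standard deviation and, assuming that "three cycles" means three sinusoidal cycles per run, derives the approximate high-pass cutoff for an average run (TR = 1.7 s, about 620.89 volumes, both taken from the acquisition parameters). The helper names are our own, and BrainVoyager's internal implementation may differ.

```python
from math import log, sqrt


def fwhm_to_sigma(fwhm: float) -> float:
    """Standard deviation of a Gaussian with the given full width at half maximum."""
    return fwhm / (2.0 * sqrt(2.0 * log(2.0)))


def highpass_cutoff_hz(n_cycles: float, n_volumes: float, tr_s: float) -> float:
    """Frequency of the highest removed drift component, assuming n_cycles per run."""
    run_duration_s = n_volumes * tr_s
    return n_cycles / run_duration_s


sigma_mm = fwhm_to_sigma(12.0)
cutoff = highpass_cutoff_hz(3, 620.89, 1.7)
print(f"sigma = {sigma_mm:.2f} mm")  # sigma = 5.10 mm
print(f"cutoff = {cutoff:.5f} Hz (period {1 / cutoff:.0f} s)")  # cutoff = 0.00284 Hz (period 352 s)
```

Under this reading, drifts slower than roughly one cycle per six minutes are removed, which leaves the much faster trial-related signal of the slow event-related design untouched.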

In analysis I, we defined one predictor for each of the three conditions, extending from stimulus onset until 500 ms before the button press. One additional predictor was defined for the cues and two for left and right button presses, comprising the last 500 ms of each trial. The 12 s interstimulus interval (ISI) from button press until onset of the next cue served as the low-level baseline; ours was thus a slow event-related fMRI design. After calculating each subject’s individual design matrix by convolving our predictors with BrainVoyager’s hemodynamic response function, we computed a random-effects general linear model, excluding voxels in the eyes with a mask, and used false discovery rate adjustment (Benjamini and Hochberg, 1995) to correct for multiple comparisons at the q(FDR) < 0.005 level across the whole brain.
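The false discovery rate adjustment of Benjamini and Hochberg (1995) can be sketched as follows for a list of voxel-wise p-values. This is a plain illustration of the step-up procedure, not BrainVoyager's implementation; the function name and the toy p-values are hypothetical.

```python
from typing import List, Sequence


def benjamini_hochberg(p_values: Sequence[float], q: float = 0.005) -> List[bool]:
    """Return a significance flag per test under the BH step-up procedure.

    Sort the m p-values, find the largest rank k with p_(k) <= k / m * q,
    and declare every test with a p-value up to p_(k) significant.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    largest_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            largest_rank = rank
    if largest_rank == 0:
        return [False] * m
    threshold = p_values[order[largest_rank - 1]]
    return [p <= threshold for p in p_values]


toy = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205, 0.212, 0.216]
print(benjamini_hochberg(toy, q=0.05))  # first two True, remaining eight False
```

Note that a q(FDR) < 0.005 criterion bounds the expected proportion of false positives among the suprathreshold voxels, not the probability of obtaining any false positive at all.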

Because the stimuli of both normative conditions were identical and the task was specified individually by the question at the end of each story, we expected differences between moral and legal decisions to occur closest to the time of decision. We thus performed analysis II, in which we defined one predictor (‘decision phase’) for each of the three conditions, comprising the last 10 s prior to the decision, similar to the 16 s time window of Greene and colleagues (Greene et al., 2001, 2004). The remaining variable time between stimulus onset and decision phase was assigned to three other predictors (‘reading phase’ of each condition; results not reported here). Cues, button presses and ISI were modeled as in analysis I, and differences between moral and legal conditions were calculated as described before. Signal time courses for decision phases were extracted using BrainVoyager’s event-related averaging function. To test for the influence of subjects’ differential (i.e. moral vs legal) mean ratings of certainty, reality, difficulty and emotion, we extracted the mean beta values of each significant cluster found in this analysis and calculated correlations using SPSS Statistics 17.
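The split of each trial into a variable ‘reading phase’ and a fixed 10 s ‘decision phase’ can be sketched as follows. This illustrates the timing logic only and is not the BrainVoyager protocol used in the study; the function name and example times are hypothetical, and the sketch does not model whether the final 500 ms before the button press (a separate predictor in analysis I) was excluded from the decision phase. Since mean reaction times in Table 1 ranged from about 18 to 24 s, most trials would contain both phases.

```python
from typing import Optional, Tuple

Interval = Tuple[float, float]


def split_trial(story_onset_s: float, decision_s: float,
                window_s: float = 10.0) -> Tuple[Optional[Interval], Interval]:
    """Split one trial into a 'reading phase' and a 'decision phase'.

    The decision phase covers the last `window_s` seconds before the decision,
    clipped at story onset; any earlier remaining time is the reading phase.
    Assumption: the handling of the 500 ms button-press period is not modeled.
    """
    if decision_s <= story_onset_s:
        raise ValueError("decision must follow story onset")
    decision_start = max(story_onset_s, decision_s - window_s)
    reading = (story_onset_s, decision_start) if decision_start > story_onset_s else None
    return reading, (decision_start, decision_s)


# Hypothetical trial: 2 s cue, story shown at t = 2 s, answer 20 s later.
print(split_trial(2.0, 22.0))  # ((2.0, 12.0), (12.0, 22.0))
# A fast answer leaves no reading phase.
print(split_trial(2.0, 8.0))  # (None, (2.0, 8.0))
```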

To investigate the effect of group and group × condition interactions, we performed a whole-brain ANCOVA with one between-subjects factor (lawyer or other academic). For the main effect of group, subjects’ mean ratings of certainty, reality, difficulty and emotion for the legal and moral conditions were entered as covariates; for the group × condition interaction, we calculated an ANCOVA on the beta contrast map of both normative conditions (moral vs legal), with the subjects’ differential mean ratings entered as covariates. To control for multiple comparisons, we first set an uncorrected voxel-level threshold of F = 14.83 (P < 0.0005) and then used Monte Carlo simulations with 10,000 iterations, which yielded a cluster-level false-positive rate of α < 0.005 for a minimum cluster size of k = 4 voxels (27 mm³ each). These calculations were performed with BrainVoyager’s cluster-level statistical threshold estimator (Goebel et al., 2006).
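The Monte Carlo logic behind a cluster-size threshold can be illustrated with a deliberately simplified sketch. It is not BrainVoyager's cluster-level statistical threshold estimator: it works on a small 2D grid instead of the 3D brain, assumes a known smoothness (a hypothetical 4-voxel FWHM), thresholds one-sided z-values rather than F-values, and uses 4-connectivity. Each iteration smooths Gaussian noise, applies the voxel-level threshold (P < 0.0005) and records the largest surviving cluster; the returned k is the smallest cluster size that such noise reaches in fewer than α = 0.005 of the iterations. All function names and defaults are illustrative.

```python
import random
from collections import deque
from math import exp, log, sqrt
from statistics import NormalDist, fmean, pstdev
from typing import List

Grid = List[List[float]]


def gaussian_kernel(fwhm_vox: float) -> List[float]:
    """Normalized 1D Gaussian kernel for the given FWHM (in voxels)."""
    sigma = fwhm_vox / (2.0 * sqrt(2.0 * log(2.0)))
    radius = max(1, int(3 * sigma))
    weights = [exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def smooth(field: Grid, kernel: List[float]) -> Grid:
    """Separable 2D convolution; voxels outside the grid count as zero."""
    n, r = len(field), len(kernel) // 2
    rows = [[sum(kernel[k + r] * field[i][j + k] for k in range(-r, r + 1) if 0 <= j + k < n)
             for j in range(n)] for i in range(n)]
    return [[sum(kernel[k + r] * rows[i + k][j] for k in range(-r, r + 1) if 0 <= i + k < n)
             for j in range(n)] for i in range(n)]


def max_cluster_size(mask: List[List[bool]]) -> int:
    """Size of the largest 4-connected cluster of True voxels in a square mask."""
    n = len(mask)
    seen = [[False] * n for _ in range(n)]
    best = 0
    for i in range(n):
        for j in range(n):
            if not mask[i][j] or seen[i][j]:
                continue
            seen[i][j] = True
            queue, size = deque([(i, j)]), 0
            while queue:
                a, b = queue.popleft()
                size += 1
                for x, y in ((a + 1, b), (a - 1, b), (a, b + 1), (a, b - 1)):
                    if 0 <= x < n and 0 <= y < n and mask[x][y] and not seen[x][y]:
                        seen[x][y] = True
                        queue.append((x, y))
            best = max(best, size)
    return best


def cluster_threshold(n: int = 24, fwhm_vox: float = 4.0, p_voxel: float = 0.0005,
                      alpha: float = 0.005, iterations: int = 200, seed: int = 0) -> int:
    """Smallest cluster size k that smoothed pure noise reaches in < alpha of runs."""
    rng = random.Random(seed)
    kernel = gaussian_kernel(fwhm_vox)
    z_crit = NormalDist().inv_cdf(1.0 - p_voxel)  # one-sided voxel-level threshold
    maxima = []
    for _ in range(iterations):
        noise = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(n)]
        field = smooth(noise, kernel)
        values = [v for row in field for v in row]
        mu, sd = fmean(values), pstdev(values)  # re-standardize after smoothing
        mask = [[(v - mu) / sd > z_crit for v in row] for row in field]
        maxima.append(max_cluster_size(mask))
    k = 1
    while sum(m >= k for m in maxima) / iterations >= alpha:
        k += 1
    return k


if __name__ == "__main__":
    print(cluster_threshold())
```

With only a few hundred iterations the estimate is noisy: at α = 0.005, a run of 200 iterations contains on average a single exceedance, which is why many more iterations (10,000 in the study) are needed for a stable threshold.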

All coordinates are reported in Talairach space and anatomical regions have been delineated manually according to the atlas of Talairach and Tournoux (1988).

RESULTS

Behavioral results

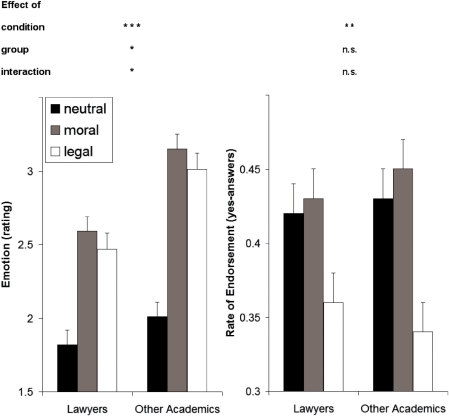

A complete overview of behavioral results from the fMRI experiment and the post-scan rating procedure is reported in Table 1. For the emotion ratings, there was a significant main effect of condition [F(2,76) = 89.83, P < 0.001; see also Figure 1], with both target conditions being judged as significantly more emotional than the control condition (P < 0.001 each). Furthermore, there was a significant group difference [F(1,38) = 5.19, P < 0.05], with lawyers rating the stimuli on average as less emotional than other academics did. The group × condition interaction also reached significance [F(2,76) = 3.60, P < 0.05]. Post hoc tests showed that the groups did not differ significantly in the neutral condition [t(38) = −0.98, P > 0.1], but that lawyers were emotionally less involved in both normative conditions [moral: t(38) = −2.45, P < 0.05; legal: t(38) = −2.68, P < 0.05].

Table 1.

Behavioral results of our 40 subjects from fMRI experiment and post-scan rating procedure

| Measure | Neutral: lawyers | Neutral: other academics | Moral: lawyers | Moral: other academics | Legal: lawyers | Legal: other academics | F: condition | F: group | F: interaction | P: m/n | P: l/n | P: l/m |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Reaction time | 18.21 ± 4.37 | 19.74 ± 3.12 | 19.16 ± 4.73 | 22.02 ± 4.30 | 20.66 ± 6.41 | 23.59 ± 4.80 | 18.8*** | 3 | 1.5 | <0.01 | <0.001 | <0.01 |
| Endorsement | 0.42 ± 0.07 | 0.43 ± 0.13 | 0.43 ± 0.13 | 0.45 ± 0.15 | 0.36 ± 0.15 | 0.34 ± 0.13 | 5.9** | 0 | 0.4 | n.s. | <0.05 | <0.05 |
| Emotion | 1.82 ± 0.58 | 2.01 ± 0.66 | 2.59 ± 0.66 | 3.14 ± 0.65 | 2.47 ± 0.76 | 3.01 ± 0.64 | 89.8*** | 5.2* | 3.6* | <0.001 | <0.001 | n.s. |
| Reality | 3.88 ± 0.74 | 3.84 ± 0.54 | 3.75 ± 0.62 | 3.50 ± 0.48 | 3.86 ± 0.55 | 3.56 ± 0.52 | 5.0** | 1.5 | 1.8 | <0.05 | n.s. | n.s. |
| Difficulty | 2.25 ± 0.55 | 2.09 ± 0.47 | 2.51 ± 0.61 | 2.58 ± 0.43 | 2.49 ± 0.56 | 2.92 ± 0.47 | 30.3*** | 0.7 | 8.9*** | <0.001 | <0.001 | n.s. |
| Certainty | 4.08 ± 0.42 | 3.99 ± 0.44 | 4.17 ± 0.42 | 3.81 ± 0.37 | 4.07 ± 0.36 | 3.54 ± 0.48 | 6.1** | 10.5** | 5.4** | n.s. | <0.01 | <0.05 |

neutral (n), moral (m), and legal (l) condition; reaction times reported in seconds, endorsement in rate of yes-answers, other values referring to five-point Likert-scales from 1 to 5; ± s.d., *P < 0.05, **P < 0.01, ***P < 0.001.

Fig. 1.

Behavioral effects. The difference between groups in reported emotional involvement was significant, with lawyers reporting less involvement than other academics. While there was no significant group difference in the endorsement (yes-answers) of the normative issues presented in the stimulus material, subjects endorsed them significantly less often in the legal condition (all error bars + 1 SE; *P < 0.05, **P < 0.01, ***P < 0.001).

Regarding the outcome of the decisions, i.e. whether the person in the presented story acted rightly, we found a significant main effect of condition when comparing moral and legal judgments [F(1,38) = 7.05, P < 0.05; see also Figure 1], with significantly higher endorsement in the moral (0.44 ± 0.14 s.d.) than in the legal (0.35 ± 0.14 s.d.) condition (P < 0.05). We observed no significant condition × group interaction [F(1,38) = 0.41] or group difference [F(1,38) = 0.01]. That is, subjects were significantly less permissive of the behaviors described in the legal condition and thus judged fewer of these actions to be right.

The rating of certainty also deserves special attention, for there was a significant effect of condition [F(2,76) = 6.1, P < 0.01; see also Figure 1], where subjects felt significantly less certain of their decisions in the legal condition than in the other two (P < 0.01 and P < 0.05, respectively). There was also a significant effect of group [F(1,38) = 10.5, P < 0.01] and a significant group × condition interaction [F(2,76) = 5.4, P < 0.01], because other academics were generally less certain of their judgments than lawyers. Post hoc tests showed that groups did not differ significantly for the neutral condition [t(38) = 0.66, P > 0.1], but only for the two normative conditions [moral: t(38) = 2.83, P < 0.01; legal: t(38) = 3.96, P < 0.001].
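The post hoc group comparisons are independent-samples t-tests with 20 subjects per group, hence 38 degrees of freedom. As a rough check, the t-values can be recomputed from the summary statistics in Table 1. The sketch below does this for the certainty ratings, where the recomputed values agree with the reported ones to within the rounding of the tabulated means and standard deviations. The function name is ours, and equal group sizes with pooled variance are assumed.

```python
from math import sqrt


def two_sample_t(mean1: float, sd1: float, mean2: float, sd2: float, n: int) -> float:
    """Student's t for two independent groups of equal size n (pooled variance).

    With equal group sizes the pooled standard error reduces to
    sqrt((sd1**2 + sd2**2) / n); degrees of freedom are 2 * n - 2.
    """
    return (mean1 - mean2) / sqrt((sd1 ** 2 + sd2 ** 2) / n)


# Certainty ratings from Table 1: (lawyers mean, s.d., other academics mean, s.d.).
certainty = {
    "neutral": (4.08, 0.42, 3.99, 0.44),
    "moral": (4.17, 0.42, 3.81, 0.37),
    "legal": (4.07, 0.36, 3.54, 0.48),
}
for condition, (m1, s1, m2, s2) in certainty.items():
    print(condition, round(two_sample_t(m1, s1, m2, s2, n=20), 2))
# neutral 0.66, moral 2.88, legal 3.95 (reported: 0.66, 2.83, 3.96)
```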

Imaging results—analysis I: whole trial

As a first step, we calculated the condition effects for all subjects, i.e. independent of the groups (Table 2). For the moral vs the neutral condition, we found activations in the medial prefrontal cortex (PFC), i.e. the anterior medial frontal gyrus, and in the left dorsolateral PFC, i.e. the middle frontal gyrus. We also found stronger activation in the left superior temporal gyrus (STG) extending into the inferior parietal lobe (IPL) and thus encompassing the temporo-parietal junction (TPJ), in the posterior cingulate gyrus (PCG) extending into the precuneus, and in the right cerebellum.

Table 2.

Main effects of normative conditions

| Contrast | Putative brain area | x | y | z | t-max |
|---|---|---|---|---|---|
| moral > neutral | l Medial frontal gyrus | −5 | 46 | 38 | 5.12 |
| | l Middle frontal gyrus | −35 | 8 | 50 | 6.03 |
| | l Superior temporal gyrus | −45 | −56 | 19 | 5.98 |
| | l Inferior parietal lobe | −34 | −58 | 23 | 7.26 |
| | l Precuneus/PCG | −3 | −59 | 25 | 10.79 |
| | r Cerebellum | 34 | −62 | −37 | 7.44 |
| | r Cerebellum | 30 | −61 | −36 | 7.16 |
| legal > neutral | l Medial frontal gyrus | −3 | 59 | 1 | 5.57 |
| | l Superior frontal gyrus/frontal pole | −14 | 55 | 31 | 5 |
| | l Superior frontal gyrus | −17 | 36 | 50 | 5.66 |
| | l Superior frontal gyrus | −5 | 14 | 51 | 6.13 |
| | l Middle frontal gyrus | −38 | 8 | 50 | 7.26 |
| | l Cingulate gyrus/corpus callosum | −15 | −5 | 31 | 5.82 |
| | l Middle temporal gyrus | −60 | −10 | 18 | 5.38 |
| | l Precuneus/PCG | −3 | −59 | 25 | 13.25 |
| | r Cerebellum | 30 | −61 | −36 | 7.78 |
| | l Superior temporal gyrus | −47 | −61 | 21 | 6.65 |
| | l Inferior parietal lobe | −33 | −69 | 36 | 8.11 |
| normative > neutral (conjunction) | l Superior frontal gyrus/frontal pole | −14 | 55 | 31 | 4.92 |
| | l Superior frontal gyrus | −17 | 36 | 47 | 5.28 |
| | l Middle frontal gyrus | −38 | 8 | 50 | 5.96 |
| | l Superior temporal gyrus | −45 | −55 | 19 | 5.98 |
| | l Inferior parietal lobe | −33 | −59 | 24 | 7.23 |
| | l Precuneus/PCG | −3 | −59 | 25 | 10.79 |
| legal > moral (decision phase) | l Middle frontal gyrus | −41 | 49 | 11 | 6.25 |
| | l Middle temporal gyrus | −60 | −35 | −10 | 7.2 |
| | l Angular gyrus | −35 | −75 | 33 | 6.2 |
| condition × group (decision phase)* | Anterior cingulate gyrus | 0 | 18 | 22 | 22.93 |

Results are significant at the q(FDR) < 0.005 level; l = left, r = right; x, y, z = coordinates in Talairach space; *corrected at the α < 0.005 level and controlling for ratings of difficulty, reality, certainty and emotion; value from F-statistics.

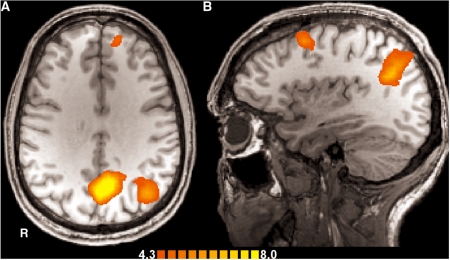

For the legal vs the neutral condition, we found stronger activations in the orbitomedial PFC, the dorsomedial PFC (superior frontal gyrus) and the left dorsolateral PFC, i.e. in the superior frontal and the middle frontal gyrus. Again, we found stronger activation in the left STG extending into the IPL and encompassing the TPJ, in the PCG extending into the precuneus, and in the right cerebellum. Additionally, we found stronger activation in the left middle temporal gyrus. In summary, findings for both normative conditions as compared with neutral decisions were very similar, which was confirmed by the conjunction analysis (see Figure 2). Indeed, a direct statistical comparison between the moral and the legal condition revealed no significant differences at our chosen level of significance in analysis I.

Fig. 2.

Brain regions related to normative judgment as contrasted with the control condition (conjunction analysis). (A) Transverse view (z = 31) showing stronger activations in the anterior medial prefrontal cortex, the PCG extending into the precuneus and the left superior temporal gyrus extending into the inferior parietal lobe, encompassing the left temporo-parietal junction (TPJ); scale denotes t-values. (B) Sagittal view (x = −34) of the left hemisphere with activations in the middle frontal gyrus and the left TPJ; statistics as in (A).

Imaging results—analysis II: decision phase

A comparison between the moral and legal conditions independent of group yielded stronger activation for the legal condition in the left dorsolateral prefrontal cortex (DLPFC), i.e. in the middle frontal gyrus, in the left middle temporal gyrus and in the left angular gyrus. These findings are also illustrated by signal time courses extracted from the respective brain areas, which show a larger BOLD signal increase in the legal than in the other two conditions during this period, particularly when approaching the time of decision (see Figure 3). We checked whether differential (i.e. moral vs legal) activations in this contrast correlated with differential ratings of certainty, reality, difficulty and emotion but found no significant results (r < 0.2 and P > 0.1 for all correlations). There were no significant differences for the opposite contrast, i.e. moral > legal.
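The correlation check described above can be sketched as follows: for each subject, the legal-minus-moral difference in a cluster's mean beta value is correlated with the corresponding difference in one of the ratings. The direction of the differences (legal minus moral) and the four-subject numbers are hypothetical; the study computed these correlations in SPSS across its 40 subjects.

```python
from math import sqrt
from typing import List, Sequence


def differential(legal: Sequence[float], moral: Sequence[float]) -> List[float]:
    """Per-subject difference, legal minus moral (direction assumed)."""
    return [leg - mor for leg, mor in zip(legal, moral)]


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation of two equally long samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two samples of equal length >= 2")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical data for four subjects.
beta_diff = differential([0.9, 1.2, 0.7, 1.1], [0.5, 0.6, 0.6, 0.4])
difficulty_diff = differential([3.0, 2.8, 2.5, 3.1], [2.6, 2.7, 2.4, 2.5])
print(round(pearson_r(beta_diff, difficulty_diff), 2))  # 0.57
```

A correlation below 0.2 in absolute value, as reported, corresponds to less than 4% shared variance (r² < 0.04) between a rating difference and the activation difference.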

Fig. 3.

Differences in neural processing comparing the legal to the moral condition. (A) Cluster of stronger activation in the left dorsolateral prefrontal cortex (left middle frontal gyrus) during legal judgment in a transverse slice at z = 13; scale denotes t-values, L = left. The corresponding signal time courses (right) from this area illustrate an increasing difference between the legal and the other two conditions as the point of decision at zero (red vertical line) is approached; time courses are not shifted for the BOLD delay; error bars ± 1 SE. (B) Cluster of stronger activation in the left middle temporal gyrus in a sagittal slice at y = −36; statistics as in (A), P = posterior.

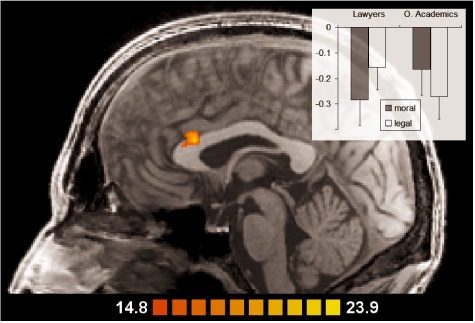

The ANCOVAs performed to investigate group differences and group × condition interaction effects yielded a significant interaction effect in the dorsal anterior cingulate cortex (ACC), more precisely in the anterior cingulate gyrus (see Figure 4). Inspection of the ROI GLM data showed a crossed interaction: lawyers had stronger BOLD responses (less deactivation) in this area during legal judgment, whereas other academics had stronger responses during moral judgment.
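The crossed interaction can be made explicit with a small sketch operating on mean beta values per group and condition. The beta values below are hypothetical placeholders (the actual values are shown only in the inlay of Fig. 4), and both helpers are our own illustration: one computes the interaction contrast as a difference of differences, the other checks whether the group difference reverses sign between the legal and the moral condition, which is what makes the interaction ‘crossed’.

```python
from typing import Dict

Betas = Dict[str, Dict[str, float]]


def interaction_contrast(betas: Betas) -> float:
    """Difference of differences: (lawyers legal - moral) - (others legal - moral)."""
    law, oth = betas["lawyers"], betas["other academics"]
    return (law["legal"] - law["moral"]) - (oth["legal"] - oth["moral"])


def is_crossed(betas: Betas) -> bool:
    """True if the lawyers-minus-others difference flips sign between conditions."""
    law, oth = betas["lawyers"], betas["other academics"]
    diff_legal = law["legal"] - oth["legal"]
    diff_moral = law["moral"] - oth["moral"]
    return diff_legal * diff_moral < 0


# Hypothetical mean betas (negative values = deactivation relative to the low-level baseline).
acc = {
    "lawyers": {"legal": -0.1, "moral": -0.4},
    "other academics": {"legal": -0.5, "moral": -0.2},
}
print(round(interaction_contrast(acc), 2), is_crossed(acc))  # 0.6 True
```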

Fig. 4.

Group × condition interaction effect: Activation in the anterior cingulate gyrus was modulated by subjects’ expertise (analysis II, decision phase; slice at x = 0); scale denotes F-values; mean beta values are shown in the inlay, error bars −1 SE.

DISCUSSION

With our experiment we could show that normative judgments recruit a common set of brain areas irrespective of the context (moral or legal judgments), comprising the dorsomedial prefrontal cortex (DMPFC), the PCG/precuneus and the left temporo-parietal junction (TPJ). These areas are typically active when people think about the beliefs and intentions of others. Moreover, legal judgments were associated with significantly stronger activation in the left dorsolateral prefrontal cortex, suggesting that legal decisions were made with regard to explicit rules and less intuitively than moral decisions. Finally, professional lawyers and other academics showed differential involvement of the dorsal ACC during normative judgments depending on whether these were made in a moral or a legal context.

Hypothesis 1—overlap of brain activations during moral and legal judgments

Since activations in the frontal, temporal and parietal lobes as well as in limbic structures have consistently been found in several fMRI experiments of moral cognition, some researchers speak of a ‘moral brain’ (see, e.g. Greene and Haidt, 2002; Moll and de Oliveira-Souza, 2007). Given that normative decisions in both of our target conditions imply the attribution of beliefs and intentions, as predicted by the ‘Rawlsian’ model in moral psychology (Hauser, 2006; Huebner et al., 2009), we expected an overlap of activations between the moral and the legal condition, particularly of those brain areas related to mentalizing and TOM, such as the DMPFC (Walter et al., 2004; Amodio and Frith, 2006; Singer, 2006; Lieberman, 2007) and the TPJ (Gallagher et al., 2000; Saxe and Kanwisher, 2003; Frith and Singer, 2008; Adolphs, 2009).

Comparing either of the two normative conditions with the control task, as well as the conjunction analysis, indeed identified stronger activation in the DMPFC, consistent with our hypothesis. Furthermore, activations in the superior temporal gyrus emphasize the importance of perceiving and analysing goals and intentions for normative judgment, since this area has previously been associated with this process of social cognition (Schultz et al., 2004; Young et al., 2007). However, since this area has traditionally been related to language processing as well and we used a verbal task, our finding could also reflect a difference in semantic processing between the normative and the neutral conditions. Nevertheless, a recent meta-analysis of studies investigating TOM reported stronger STG activation in 18 out of 40 studies, 11 of which used nonverbal paradigms (Carrington and Bailey, 2009). The activation in the STG extended into the inferior parietal lobe, encompassing the TPJ, whose role in belief attribution has been emphasized frequently (Fletcher et al., 1995; Gallagher et al., 2000; Saxe and Kanwisher, 2003; Singer, 2006; Frith and Singer, 2008). Confirming our hypothesis, our data show neural similarities between moral and legal judgments, suggesting a considerable overlap in cognitive processing between both normative tasks.

Hypothesis 2—differential activation of legal vs moral judgments

We were interested not only in similarities between moral and legal decisions but also in their differences. In particular, we hypothesized that legal judgments are more closely related to the application of rules, as follows from the idealistic understanding of law (Gewirtz, 1996; Goodenough, 2001), and therefore predicted stronger activation in the DLPFC during legal judgment. Focusing on the decision period of our normative judgment task, where differences between the moral and the legal condition are most likely to occur, we could confirm this prediction. The DLPFC has previously been related to reflecting on explicit rules (MacDonald et al., 2000; Miller and Cohen, 2001; Bunge, 2004). Considering that reaction times for the legal condition were longer than those for the moral condition and that the reverse contrast, moral > legal, did not yield any significant differences, we suggest that moral judgments are made more intuitively and automatically even in the legal condition, but that subjects additionally engage in rule-based decision-making when they are prompted to make a legal judgment. According to this view, making a legal decision in our task resembles overcoming a prepotent response, as in a Stroop task or a go/no-go paradigm, for which activation in the DLPFC has also been found previously (MacDonald et al., 2000; Hester et al., 2004). This interpretation could apply particularly to instances in which subjects consider an action to be morally right, yet legally wrong. Such situations did occur, as indicated by the significant condition effect on task outcome (i.e. endorsement of normative behaviors from the moral or the legal point of view), which reflects that subjects were more prohibitive of behaviors in the legal condition. The DLPFC has also been found by Greene and colleagues when subjects made ‘utilitarian’ decisions (Greene et al., 2004), i.e. deciding to sacrifice few lives in order to save many. According to their interpretation, these decisions are more rational (but see Kahane and Shackel, 2008; Schleim, 2008), which is consistent with the idealistic understanding of law we referred to earlier (Gewirtz, 1996; Goodenough, 2001). We would like to emphasize, though, that the difference between the two kinds of normative decisions we describe here is a matter of degree and not absolute. On the grounds of our findings, legal decision-making thus cannot be reduced to the application of black letter law. A further caveat to our interpretation is that we did not test rule application explicitly.

Buckholtz and colleagues also found stronger activation in the DLPFC in an fMRI study investigating legal decisions (Buckholtz et al., 2008). However, their finding was located in the right rather than the left hemisphere. We would like to highlight the difference between their task and ours to show that the two results do not contradict each other. The subjects in the study by Buckholtz and colleagues had to determine the degree of punishment a certain actor deserved for his action, while our subjects had to make the more fundamental decision of whether an action was legally right or not. The judgment of legal rightness precedes that of legal punishment, since nobody can be legally punished for an action that is not legally wrong. Both judgments are thus different steps in the legal decision-making process, and activation in the right DLPFC has previously been related to punishment in a social interaction task (Sanfey et al., 2003).

We also found stronger activation in the left middle temporal gyrus for the legal compared with the moral condition. In a recent meta-analysis, this region has been associated with semantic tasks (Patterson et al., 2007). More specifically, Zahn and colleagues related the anterior temporal cortex to the representation of abstract social semantic knowledge, comprising concepts such as ‘tactless’ or ‘honorable’, regardless of emotional valence (Zahn et al., 2007). Taken together with the significantly longer reaction times and higher difficulty ratings for legal cases, this finding suggests that processing semantic knowledge from the legal stance is more complex.

Hypothesis 3—are lawyers emotionally less involved in normative judgment?

Our study was designed not only to investigate two kinds of normative judgment but also to compare two groups differing in normative expertise, namely lawyers and other academics. In particular, we hypothesized that lawyers would pay more attention to normatively salient features than other academics and would consequently show less activation in regions related to emotional processing, such as the amygdala (Dalgleish, 2004). While we found such a pattern in the subjects’ ratings of emotional involvement, with lawyers perceiving themselves as significantly less involved during normative judgment, we could not confirm this hypothesis on the neural level. This null result does not, however, show that our hypothesis is wrong. One possibility is that the emotions induced by our normative cognition task were not strong enough to elicit significant neural differences between lawyers and other academics. In this regard, it is important to note that, unlike previous studies employing emotionally dramatic dilemmas such as choosing to kill one family member in order to save the rest of the family (Greene et al., 2001, 2004; Borg et al., 2006), our scenarios were much less dramatic, and our participants did not have to imagine themselves as actors in the plot but evaluated the situations from a third-person perspective. Another possibility is that the positive finding for the subjective ratings of emotional involvement reflects the lawyers’ wish to conform to a socially desirable ideal type of legal experts who are less influenced by their passions (George, 1996; Goodenough, 2001), and that lawyers and other academics do not in fact differ in how they process their decisions neurally in our paradigm. If this were true, it would mean that lawyers reacted just as emotionally as other academics, contrary to their training to be less emotional and to their belief that they were.
We tried to investigate these questions with additional analyses, but splitting both groups into high- and low-emotional subgroups did not yield any evidence to resolve them (see Supplementary Analysis—Emotional processing).

Leaving aside the question of emotional involvement, we found an interaction effect of condition and group in the dorsal ACC when controlling for the differences measured in the post-experimental rating procedure. The dorsal ACC has been associated with attention modulation (Kondo et al., 2004; Crottaz-Herbette and Menon, 2006), and, more specifically, the ACC subregion we found, which belongs to the posterior part of the rostral MFC according to Amodio and Frith (2006), has frequently been related to cognitive tasks (Bush et al., 2000; Amodio and Frith, 2006). This response pattern is hard to interpret, as the dACC is involved in multiple functions and we had no specific a priori hypothesis on its activation. As a possible account, we propose a task-switching explanation that associates the difference in ACC activation with the subjects’ capacity to apply their expertise to the normative judgment task, which requires a shift in attention from the respective case to their learned knowledge. By contrast, when subjects perform the judgment for which they have less expertise, they have to rely more on their intuitions and thus engage in less cognitive processing. However, a post hoc analysis probing whether legal expertise modulates DLPFC activation did not yield significant results (see Supplementary Analysis—Level of expertise). While our explanation thus remains speculative at this point, we hope that our observation inspires future research to consider the ACC as a region of interest and to test its precise role in the modulation of normative judgment by expertise.

CONCLUSION

We could show that the normative context in which subjects evaluate a situation matters on the behavioral as well as on the neural level. Our hypotheses concerning the specificity of the ‘moral brain’ and the differences between moral and legal decisions could be confirmed. This suggests that several brain regions previously associated with moral cognition, particularly the DMPFC, the left STG and the TPJ, are also related to legal judgment, and that legal decisions rely more on applying explicit rules, and less on intuitions, than moral decisions do, as indicated by stronger activation in the left DLPFC. Our hypothesis concerning group differences in emotional involvement between lawyers and other academics could only be confirmed on the self-assessed behavioral level, not on the neural level. Additionally, we found a significant group × condition interaction effect in the ACC, possibly suggesting that the subjects’ expertise triggers attention shifts. This finding calls for further research to clarify the impact of expertise on normative judgments. Since both groups in our study were strictly matched for education and our stimulus material was adapted from real issues reported in the media, it is likely that the other academics were also familiar with the legal issues, as supported by the similar ratings of how realistic the presented cases were. We would thus like to emphasize that other experimental designs might identify more differences related to the subjects’ expertise than we were able to find.

While others have argued that neuroimaging results related to normative issues will change the way we think about law and morals (Greene and Cohen, 2004; Singer, 2005), we think that it is currently too early to draw any firm normative conclusions from our findings. Our study shows that there is more to learn about the way the brain processes normatively relevant information than can be understood by focusing on morals alone. Both domains comprise norms of right and wrong human conduct, and, as our data suggest, it matters in which light we see normative issues.

SUPPLEMENTARY DATA

Supplementary Data are available at SCAN Online.

Acknowledgments

This work was supported by grants to H.W. from the Volkswagen Foundation, Germany (AZ: II/80 777) and the BMBF (German Ministry of Education and Research, AZ 01GP0804). We would like to thank Hauke Heekeren, Stephanie Melzig, Christiane Rieke, Knut Schnell, Markus Staudinger, two anonymous reviewers and the technical staff of the Life and Brain Center, Bonn for their kind support.

REFERENCES

- Adolphs R. The social brain: neural basis of social knowledge. Annual Review of Psychology. 2009;60:693–716. doi: 10.1146/annurev.psych.60.110707.163514.

- Amodio DM, Frith CD. Meeting of minds: the medial frontal cortex and social cognition. Nature Reviews Neuroscience. 2006;7(4):268–77. doi: 10.1038/nrn1884.

- Benjamini Y, Hochberg Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. Journal of the Royal Statistical Society Series B: Methodological. 1995;57(1):289–300.

- Blair RJR. The amygdala and ventromedial prefrontal cortex in morality and psychopathy. Trends in Cognitive Sciences. 2007;11(9):387–92. doi: 10.1016/j.tics.2007.07.003.

- Blair J. Cognitive neuroscience of psychopathy. Journal of Neurology Neurosurgery and Psychiatry. 2008;79(8):968–978.

- Borg JS, Hynes C, Van Horn J, Grafton S, Sinnott-Armstrong W. Consequences, action, and intention as factors in moral judgments: an fMRI investigation. Journal of Cognitive Neuroscience. 2006;18(5):803–17. doi: 10.1162/jocn.2006.18.5.803.

- Buckholtz JW, Asplund CL, Dux PE, et al. The neural correlates of third-party punishment. Neuron. 2008;60(5):930–40. doi: 10.1016/j.neuron.2008.10.016.

- Bunge SA. How we use rules to select actions: a review of evidence from cognitive neuroscience. Cognitive, Affective and Behavioral Neuroscience. 2004;4(4):564–79. doi: 10.3758/cabn.4.4.564.

- Bush G, Luu P, Posner MI. Cognitive and emotional influences in anterior cingulate cortex. Trends in Cognitive Sciences. 2000;4(6):215–22. doi: 10.1016/s1364-6613(00)01483-2.

- Carrington SJ, Bailey AJ. Are there theory of mind regions in the brain? A review of the neuroimaging literature. Human Brain Mapping. 2009;30(8):2313–35. doi: 10.1002/hbm.20671.

- Ciaramelli E, Muccioli M, Làdavas E, di Pellegrino G. Selective deficit in personal moral judgment following damage to ventromedial prefrontal cortex. Social Cognitive and Affective Neuroscience. 2007;2:84–92. doi: 10.1093/scan/nsm001.

- Crottaz-Herbette S, Menon V. Where and when the anterior cingulate cortex modulates attentional response: combined fMRI and ERP evidence. Journal of Cognitive Neuroscience. 2006;18(5):766–80. doi: 10.1162/jocn.2006.18.5.766.

- Dalgleish T. The emotional brain. Nature Reviews Neuroscience. 2004;5(7):582–9. doi: 10.1038/nrn1432.

- Fletcher PC, Happe F, Frith U, et al. Other minds in the brain—a functional imaging study of theory of mind in story comprehension. Cognition. 1995;57(2):109–28. doi: 10.1016/0010-0277(95)00692-r.

- Frith CD, Singer T. The role of social cognition in decision making. Philosophical Transactions of the Royal Society B: Biological Sciences. 2008;363(1511):3875–86. doi: 10.1098/rstb.2008.0156.

- Gallagher HL, Happe F, Brunswick N, Fletcher PC, Frith U, Frith CD. Reading the mind in cartoons and stories: an fMRI study of ‘theory of mind’ in verbal and nonverbal tasks. Neuropsychologia. 2000;38(1):11–21. doi: 10.1016/s0028-3932(99)00053-6.

- Garland B, editor. Neuroscience and the Law: Brain, Mind, and the Scales of Justice. New York, NY: Dana Press; 2005.

- Gazzaniga MS. The law and neuroscience. Neuron. 2008;60(3):412–15. doi: 10.1016/j.neuron.2008.10.022.

- George RP, editor. The autonomy of law: essays on legal positivism. Oxford: Clarendon Press; 1996.

- Gewirtz P. On ‘I know it when I see it’. Yale Law Journal. 1996;105(4):1023–47.

- Goebel R, Esposito F, Formisano E. Analysis of functional image analysis contest (FIAC) data with BrainVoyager QX: from single-subject to cortically aligned group general linear model analysis and self-organizing group independent component analysis. Human Brain Mapping. 2006;27(5):392–401. doi: 10.1002/hbm.20249.

- Goodenough OR. Mapping cortical areas associated with legal reasoning and moral intuition. Jurimetrics. 2001;41:429–42.

- Goodenough OR, Prehn K. A neuroscientific approach to normative judgment in law and justice. Philosophical Transactions of the Royal Society B: Biological Sciences. 2004;359(1451):1709–26. doi: 10.1098/rstb.2004.1552.

- Greene J, Cohen J. For the law, neuroscience changes nothing and everything. Philosophical Transactions of the Royal Society of London Series B: Biological Sciences. 2004;359(1451):1775–85. doi: 10.1098/rstb.2004.1546.

- Greene J, Haidt J. How (and where) does moral judgment work? Trends in Cognitive Sciences. 2002;6(12):517–23. doi: 10.1016/s1364-6613(02)02011-9.

- Greene JD, Nystrom LE, Engell AD, Darley JM, Cohen JD. The neural bases of cognitive conflict and control in moral judgment. Neuron. 2004;44(2):389–400. doi: 10.1016/j.neuron.2004.09.027.

- Greene JD, Sommerville RB, Nystrom LE, Darley JM, Cohen JD. An fMRI investigation of emotional engagement in moral judgment. Science. 2001;293(5537):2105–8. doi: 10.1126/science.1062872.

- Haidt J. The emotional dog and its rational tail: a social intuitionist approach to moral judgment. Psychological Review. 2001;108(4):814–34. doi: 10.1037/0033-295x.108.4.814.

- Hauser MD. The liver and the moral organ. Social Cognitive and Affective Neuroscience. 2006;1(3):214–20. doi: 10.1093/scan/nsl026.

- Heekeren HR, Wartenburger I, Schmidt H, Schwintowski HP, Villringer A. An fMRI study of simple ethical decision-making. Neuroreport. 2003;14(9):1215–19. doi: 10.1097/00001756-200307010-00005.

- Hester RL, Murphy K, Foxe JJ, Foxe DM, Javitt DC, Garavan H. Predicting success: patterns of cortical activation and deactivation prior to response inhibition. Journal of Cognitive Neuroscience. 2004;16(5):776–85. doi: 10.1162/089892904970726.

- Huebner B, Dwyer S, Hauser M. The role of emotion in moral psychology. Trends in Cognitive Sciences. 2009;13(1):1–6. doi: 10.1016/j.tics.2008.09.006.

- Kahane G, Shackel N. Do abnormal responses show utilitarian bias? Nature. 2008;452(7185):E5–E5. doi: 10.1038/nature06785.

- Koenigs M, Young L, Adolphs R, et al. Damage to the prefrontal cortex increases utilitarian moral judgements. Nature. 2007;446(7138):908–11. doi: 10.1038/nature05631.

- Kondo H, Osaka N, Osaka M. Cooperation of the anterior cingulate cortex and dorsolateral prefrontal cortex for attention shifting. Neuroimage. 2004;23(2):670–9. doi: 10.1016/j.neuroimage.2004.06.014.

- Lieberman MD. Social cognitive neuroscience: a review of core processes. Annual Review of Psychology. 2007;58:259–89. doi: 10.1146/annurev.psych.58.110405.085654.

- MacDonald AW, Cohen JD, Stenger VA, Carter CS. Dissociating the role of the dorsolateral prefrontal and anterior cingulate cortex in cognitive control. Science. 2000;288(5472):1835–8. doi: 10.1126/science.288.5472.1835.

- Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Annual Review of Neuroscience. 2001;24:167–202. doi: 10.1146/annurev.neuro.24.1.167.

- Mobbs D, Lau HC, Jones OD, Frith CD. Law, responsibility, and the brain. PLoS Biology. 2007;5(4):693–700. doi: 10.1371/journal.pbio.0050103.

- Moll J, de Oliveira-Souza R. Moral judgments, emotions and the utilitarian brain. Trends in Cognitive Sciences. 2007;11(8):319–21. doi: 10.1016/j.tics.2007.06.001.

- Moll J, Zahn R, de Oliveira-Souza R, Krueger F, Grafman J. The neural basis of human moral cognition. Nature Reviews Neuroscience. 2005;6(10):799–809. doi: 10.1038/nrn1768.

- Patterson K, Nestor PJ, Rogers TT. Where do you know what you know? The representation of semantic knowledge in the human brain. Nature Reviews Neuroscience. 2007;8(12):976–87. doi: 10.1038/nrn2277.

- Sanfey AG, Rilling JK, Aronson JA, Nystrom LE, Cohen JD. The neural basis of economic decision-making in the ultimatum game. Science. 2003;300(5626):1755–8. doi: 10.1126/science.1082976.

- Saxe R, Kanwisher N. People thinking about thinking people—The role of the temporo-parietal junction in ‘theory of mind’. Neuroimage. 2003;19(4):1835–42. doi: 10.1016/s1053-8119(03)00230-1.

- Schleim S. Moral physiology, its limitations and philosophical implications. Jahrbuch für Wissenschaft und Ethik. 2008;13:51–80.

- Schleim S, Spranger T, Urbach H, Walter H. Accidental findings in the imaging brain research—Empirical, legal and ethical aspects. Nervenheilkunde. 2007;26(11):1041–5.

- Schleim S, Spranger TM, Walter H, editors. Von der Neuroethik zum Neurorecht? [From Neuroethics to Neurolaw?] Göttingen, Germany: Vandenhoeck and Ruprecht; 2009.

- Schultz J, Imamizu H, Kawato M, Frith CD. Activation of the human superior temporal gyrus during observation of goal attribution by intentional objects. Journal of Cognitive Neuroscience. 2004;16(10):1695–705. doi: 10.1162/0898929042947874.

- Sidak Z. Rectangular confidence regions for the means of multivariate normal distributions. Journal of the American Statistical Association. 1967;62:626–33.

- Singer P. Ethics and intuitions. The Journal of Ethics. 2005;9:331–52.

- Singer T. The neuronal basis and ontogeny of empathy and mind reading: review of literature and implications for future research. Neuroscience and Biobehavioral Reviews. 2006;30(6):855–63. doi: 10.1016/j.neubiorev.2006.06.011.

- Sip KE, Roepstorff A, McGregor W, Frith CD. Detecting deception: the scope and limits. Trends in Cognitive Sciences. 2008;12(2):48–53. doi: 10.1016/j.tics.2007.11.008.

- Spence SA, Hunter MD, Farrow TFD, et al. A cognitive neurobiological account of deception: evidence from functional neuroimaging. Philosophical Transactions of the Royal Society of London Series B: Biological Sciences. 2004;359(1451):1755–62. doi: 10.1098/rstb.2004.1555.

- Talairach J, Tournoux P. Coplanar Stereotaxic Atlas of the Human Brain. Stuttgart: Thieme Verlag; 1988.

- Tovino SA. Functional neuroimaging and the law: trends and directions for future scholarship. American Journal of Bioethics. 2007;7(9):44–56. doi: 10.1080/15265160701518714.

- Walter H, Adenzato M, Ciaramidaro A, Enrici I, Pia L, Bara BG. Understanding intentions in social interaction: the role of the anterior paracingulate cortex. Journal of Cognitive Neuroscience. 2004;16(10):1854–63. doi: 10.1162/0898929042947838.

- Wolf SM. Neurolaw: the big question. American Journal of Bioethics. 2008;8(1):21–2. doi: 10.1080/15265160701828485.

- Yang YL, Glenn AL, Raine A. Brain abnormalities in antisocial individuals: implications for the law. Behavioral Sciences and the Law. 2008;26(1):65–83. doi: 10.1002/bsl.788.

- Young L, Cushman F, Hauser M, Saxe R. The neural basis of the interaction between theory of mind and moral judgment. Proceedings of the National Academy of Sciences of the United States of America. 2007;104(20):8235–40. doi: 10.1073/pnas.0701408104.

- Zahn R, Moll J, Krueger F, Huey ED, Garrido G, Grafman J. Social concepts are represented in the superior anterior temporal cortex. Proceedings of the National Academy of Sciences of the United States of America. 2007;104(15):6430–5. doi: 10.1073/pnas.0607061104.

- Zeki S, Goodenough OR, editors. Law and the Brain. Oxford: Oxford University Press; 2006.
