Abstract

Within the neural face-processing network, the right occipital face area (rOFA) plays a prominent role, and it has been suggested that it receives both feed-forward and re-entrant feedback from other face sensitive areas. Its functional role is less well understood and whether the rOFA is involved in the initial analysis of a face stimulus or in the detailed integration of different face properties remains an open question. The present study investigated the functional role of the rOFA with regard to different face properties (identity, expression, and gaze) using transcranial magnetic stimulation (TMS). Experiment 1 showed that the rOFA integrates information across different face properties: performance for the combined processing of identity and expression decreased after TMS to the rOFA, while no impairment was seen in gaze processing. In Experiment 2 we examined the temporal dynamics of this effect. We pinpointed the impaired integrative computation to 170 ms post stimulus presentation. Together the results suggest that TMS to the rOFA affects the integrative processing of facial identity and expression at a mid-latency processing stage.

Keywords: face processing, occipital face area, transcranial magnetic stimulation

INTRODUCTION

Faces represent a special category amongst visual stimuli (Kanwisher et al., 1997; Yin, 1969). They are processed by viewers in order to extract a variety of social information, such as the identity of a person, the emotional state, or a cue to attention via the direction of eye gaze. In addition to processing each face property independently, it is essential to integrate across different face properties in order to, for example, recognize a friend in spite of a change in expression, or detect a fearful expression independent of eye gaze direction.

In the human brain, face processing relies on a widespread cortical network, which encompasses multiple regions in the occipital, temporal and parietal lobes (Allison et al., 1994; Haxby et al., 2000). The functional specialization of the different network regions for different face properties has been the subject of an ongoing debate. This debate is particularly important for the right occipital face area (rOFA) in the inferior occipital gyrus (Rossion et al., 2003), which has been shown to be involved in the processing of different face properties (Cohen Kadosh et al., in press; Ganel et al., 2005; Maurer et al., 2002; Pitcher et al., 2007; Rotshtein et al., 2007).

The rOFA is an integral part of the face network, and it has been suggested that it receives feed-forward and re-entrant feedback from face-sensitive areas, including the fusiform face area (FFA) (Cohen Kadosh et al., in press; Rotshtein et al., 2007; Schiltz and Rossion, 2006). This raises the possibility that this region may be involved not only in the initial detection and categorization but also in the integrative analysis of face stimuli (DeGutis et al., 2007). However, little is currently known about the rOFA’s functional specialization for specific face properties such as identity, expression, and eye gaze, or about the underlying time course of the integration of these face properties.

Several event-related potential (ERP) studies have provided evidence regarding the timing of different processing steps, thus helping to pinpoint the relevant time window for the processing of each face property. Sagiv and Bentin (2001) compared neural processing for natural and schematic faces and found evidence for structural encoding of faces in the time range starting from 170 ms post stimulus presentation. With regard to specific face properties, Jacques and Rossion (2006) used ERP adaptation to show that the N170 amplitude was larger when facial identity changed than when it was repeated. With regard to emotional expression, while some evidence is available for a rapid detection of emotional expressions in faces [for fearful in comparison to neutral faces at fronto–central electrodes at around 120 ms (Eimer and Holmes, 2002)], several studies have shown that in-depth processing of emotional expressions occurs at a later time point and at more posterior electrodes. For example, Blau et al. (2007) showed that the N170 is also modulated by emotional expressions, while differential responses to emotional intensities were found in a slightly later time window (190–290 ms; Leppaenen et al., 2007). Caharel and colleagues (Caharel et al., 2005) showed that both facial identity and emotional expression modulate N170 amplitude, with facial identity effects appearing at slightly shorter latencies. Finally, ERP effects of gaze processing have been pinpointed to a slightly later time window, starting from 250 ms (Klucharev and Sams, 2004; Senju et al., 2006). Therefore, it seems that the temporal processing for identity and expression overlaps, while gaze is processed slightly later in time. These ERP findings are of particular interest for our study as they may guide us in finding possible time windows for the modulation of different face property processing in the brain.

The current study investigated the functional anatomy of the rOFA by combining a same–different matching task with transcranial magnetic stimulation (TMS). The matching task probed the interaction of simultaneously changing face properties, as it required participants to match face property information across two faces while preventing the use of an exclusive processing strategy. It has been shown in the behavioural literature that different cognitive processing strategies are employed to process face properties (e.g. Calder et al., 2000; Mondloch et al., 2002). Therefore, it is likely that face aspects that rely on the same or similar facial information, and consequently on the same processing mechanism, influence each other (for a similar idea in other cognitive domains, see: Cohen Kadosh and Henik, 2006; Fias et al., 2001; Posner et al., 1990). For example, if both facial identity and emotional expression are processed by looking at the configuration of facial features, then changes to the overall face layout could be due to changes in the expression or the identity and would require the processing of both face aspects interdependently (Ganel and Goshen–Gottstein, 2004). We hypothesized that TMS to the rOFA would interfere with the analysis and integration of specific face properties, such as identity and expression, which have been suggested to rely on the same processing strategy (Mondloch et al., 2003). This was based on behavioural and neuroimaging findings, which suggest that these two face properties also overlap in their processing in the brain (Caharel et al., 2005; Cohen Kadosh et al., in press; Ganel and Goshen–Gottstein, 2004). In a follow-up experiment (Experiment 2) we explored the timing at which this interference occurs. Based on the ERP findings discussed above, we hypothesized that any impairment would be strongest in the 170–300 ms range, possibly reflecting the effects of re-entrant feedback processing from FFA and other higher order face processing areas at a later processing stage (Rossion et al., 2003).

EXPERIMENT 1

Participants

Eight participants (mean age: 20.8 years old, s.d.: 9.3) with normal or corrected-to-normal vision gave informed consent to participate in the experiment.

Stimuli

A stimulus set was created from eight colour photographs taken under standard lighting conditions [two women, two expressions (happy and angry), two directions of eye gaze (directed to the right or the left)]. All pictures were cropped to show the face in frontal view and to exclude the neck and haircut of the person, and any differences in hue and saturation were adjusted using Adobe Photoshop 7. The stimulus size of 6.3 × 7 cm corresponded to a visual angle of 6.3° × 7° at the viewing distance of 57 cm. Stimulus pairs in each trial varied with regard to the repetition of none, one, two, or three face properties (identity, expression, gaze), thus yielding a 2 × 2 × 2 factorial design [identity (same, different), expression (same, different), gaze (same, different)]. Each experimental block consisted of 224 trials, with 50% of the trials requiring a ‘same’ decision.
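The stimulus geometry and the change conditions described above can be checked with a short sketch. The function and variable names are illustrative assumptions and not part of the original study materials; the code only recomputes the visual angle from the reported size and viewing distance and enumerates the eight cells of the 2 × 2 × 2 design.

```python
import math
from itertools import product


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Visual angle (in degrees) subtended by a stimulus of a given size."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


VIEWING_DISTANCE_CM = 57.0  # reported viewing distance
width_deg = visual_angle_deg(6.3, VIEWING_DISTANCE_CM)   # reported width: 6.3 cm
height_deg = visual_angle_deg(7.0, VIEWING_DISTANCE_CM)  # reported height: 7 cm

# Each face property is either repeated ("same") or changed ("different")
# between the first and the second face of a trial.
PROPERTIES = ("identity", "expression", "gaze")
conditions = [
    dict(zip(PROPERTIES, levels))
    for levels in product(("same", "different"), repeat=len(PROPERTIES))
]


def n_changed(condition: dict) -> int:
    """Number of face properties that change within a stimulus pair."""
    return sum(level == "different" for level in condition.values())


if __name__ == "__main__":
    print(f"visual angle: {width_deg:.2f} x {height_deg:.2f} degrees")
    for condition in conditions:
        print(condition, "properties changed:", n_changed(condition))
```

At a viewing distance of 57 cm, 1 cm on the screen subtends roughly 1°, which is why the size in centimetres and the visual angle in degrees are numerically almost identical.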

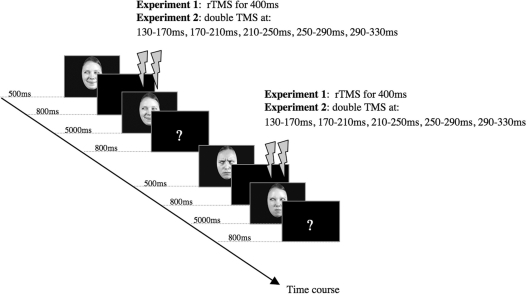

Procedure

Participants performed a same–different task while repetitive TMS (rTMS) was delivered to the rOFA or to the vertex. We also included a baseline block without TMS. The order of TMS conditions varied between participants according to a balanced 3 × 3 Latin square design. Each trial consisted of a face presented for 500 ms in the centre of the computer screen followed by a fixation cross for 800 ms, which was followed by a second face. The second face (test stimulus) remained on the screen for a maximum of 5000 ms or until a button press was registered, and was followed by a question mark for 800 ms, indicating the beginning of a new trial (Figure 1). In each trial, the participants had to decide whether the two consecutively presented faces were the same or different. Participants were asked to respond as quickly as possible while avoiding mistakes. The participants indicated their choices by pressing one of two keys (i.e. ‘F’ or ‘J’ on a QWERTY keyboard). The assignment of the keys to same and different was counterbalanced across blocks, and the number of responses to ‘same’ or ‘different’ was equal.
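As an illustration of the block ordering and trial timing described above, the following sketch builds a cyclic 3 × 3 Latin square and derives event onsets from the reported durations. The cyclic construction, the participant-to-row assignment (`order_for`) and all names are assumptions made for illustration; the text specifies only that a balanced 3 × 3 Latin square was used.

```python
TMS_CONDITIONS = ["no TMS", "vertex", "rOFA"]


def latin_square(items):
    """Cyclic Latin square: row i is the item list rotated left by i positions."""
    n = len(items)
    return [[items[(i + j) % n] for j in range(n)] for i in range(n)]


orders = latin_square(TMS_CONDITIONS)


def order_for(participant_index: int):
    """Hypothetical assignment: participants cycle through the rows of the square."""
    return orders[participant_index % len(orders)]


# Reported durations in ms; the second face stays on screen for at most 5000 ms.
TRIAL_EVENTS_MS = [
    ("first face", 500),
    ("fixation cross", 800),
    ("second face", 5000),
    ("question mark", 800),
]


def event_onsets(events):
    """Onset of each event relative to trial start, assuming maximal durations."""
    onsets, t = {}, 0
    for name, duration in events:
        onsets[name] = t
        t += duration
    return onsets


onsets_ms = event_onsets(TRIAL_EVENTS_MS)

if __name__ == "__main__":
    for row in orders:
        print(row)
    print(onsets_ms)
```

Because the second face disappears as soon as a response is registered, the question-mark onset computed here is an upper bound rather than a fixed value.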

Fig. 1.

Experimental time course of two exemplar trials in the same–different task. TMS onset times are shown for both experiments.

TMS stimulation and site localization

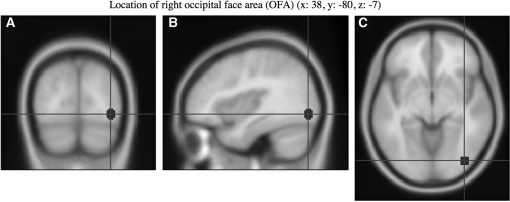

A Magstim Super Rapid Stimulator (Magstim, UK, ∼2 T maximum output) was used to deliver the TMS via a figure-of-eight coil with a wing diameter of 70 mm. TMS was delivered at 10 Hz for 500 ms (0, 100, 200, 300 and 400 ms starting from stimulus onset) at 60% of the maximal output, with the coil handle pointing upwards and parallel to the midline. FSL software (FMRIB, Oxford) was used to transform coordinates for the rOFA for each subject individually. Each subject’s high resolution MRI scan was normalized against a standard template and each transformation was used to convert the appropriate Talairach coordinates to the untransformed (structural) space coordinates, yielding subject specific localization of the sites. The Talairach coordinates for rOFA (38, −80, −7) were the averages from eleven neurologically normal participants in an fMRI study of face processing (Rossion et al., 2003) (Figure 2). The TMS site (rOFA) was located for each individual using the Brainsight TMS–MRI co-registration system (Rogue Research, Montreal, Canada), utilizing individual MRI scans for each subject. The vertex was defined according to the 10–20 EEG system (see Footnote 1).
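The pulse timing of the rTMS train follows directly from the reported frequency and train duration. A minimal sketch, assuming pulses are evenly spaced and the first pulse coincides with stimulus onset (the function name and parameters are illustrative):

```python
def pulse_onsets_ms(frequency_hz: int, train_duration_ms: int, start_ms: int = 0):
    """Onsets of evenly spaced pulses, relative to stimulus onset (in ms)."""
    interval_ms = round(1000 / frequency_hz)       # 10 Hz gives one pulse every 100 ms
    n_pulses = train_duration_ms // interval_ms    # pulses that fit into the train
    return [start_ms + k * interval_ms for k in range(n_pulses)]


rtms_train = pulse_onsets_ms(frequency_hz=10, train_duration_ms=500)

if __name__ == "__main__":
    print(rtms_train)
```

With the reported parameters this gives five pulses per trial, matching the onsets listed in the text.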

Fig. 2.

Location of the rOFA stimulation site [(A) coronal view, (B) sagittal view, (C) axial view]. Montreal Neurological Institute (MNI) coordinates were taken from a previous fMRI study (Rossion et al., 2003).

RESULTS

Accuracy

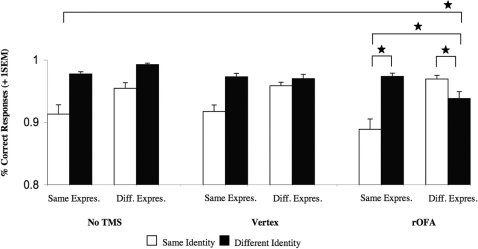

In a first step, all responses were subjected to a repeated measures ANOVA, with the within-subject factors TMS condition (no TMS, vertex, rOFA), identity (same, different), expression (same, different), and gaze (same, different) (Table 1). The only significant effects were a main effect for identity [F(1,7) = 7.02, P = 0.03] and a three-way interaction between TMS condition × identity × expression [F(2,14) = 5.18, P = 0.02, Figure 3].

Table 1.

Statistical result for the accuracy rates in Experiment 1

| Effect | F | P |
|---|---|---|
| TMS condition | F(2,14) = 1.17 | P = 0.340 |
| Identity | F(1,7) = 7.02 | P = 0.033 |
| Expression | F(1,7) = 1.73 | P = 0.230 |
| Gaze | F(1,7) = 1.04 | P = 0.341 |
| Identity × TMS condition | F(2,14) = 1.27 | P = 0.312 |
| Expression × TMS condition | F(2,14) = 0.234 | P = 0.794 |
| Identity × Expression | F(1,7) = 3.54 | P = 0.102 |
| Identity × Expression × TMS condition | F(2,14) = 5.18 | P = 0.021 |
| Gaze × TMS condition | F(2,14) = 0.159 | P = 0.842 |
| Identity × Gaze | F(1,7) = 0.325 | P = 0.586 |
| Identity × Gaze × TMS condition | F(2,14) = 0.452 | P = 0.645 |
| Expression × Gaze | F(1,7) = 0.142 | P = 0.717 |
| Expression × Gaze × TMS condition | F(2,14) = 1.27 | P = 0.312 |
| Identity × Expression × Gaze | F(1,7) = 4.01 | P = 0.085 |
| Identity × Expression × Gaze × TMS condition | F(2,14) = 0.006 | P = 0.994 |

Bold values indicate P < 0.005.

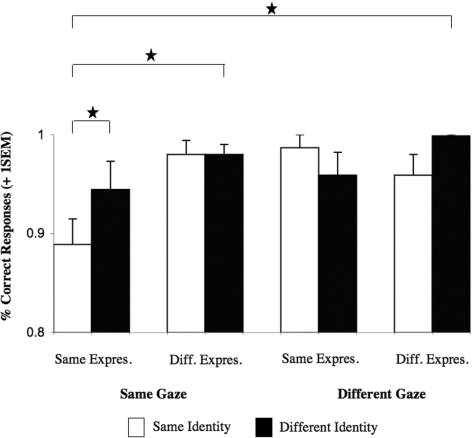

Fig. 3.

Experiment 1: Graph depicting the significant three-way interaction between TMS condition × identity × expression [F(2,14) = 5.18, P = 0.02] in the no TMS, Vertex, and rOFA condition. Note that this presentation is collapsed across gaze. All significant effects (P < 0.05) are marked with an asterisk. Abbreviations: Same Expres. = Same Expression, Diff. Expres. = Different Expression, No TMS= No TMS condition, Vertex= TMS to Vertex condition, rOFA= TMS to rOFA condition. Error bars indicate one standard error of the mean.

To further our understanding of the sources of the interaction between TMS condition, identity, and expression, additional analyses of the two-way interaction between identity and expression were conducted separately for each TMS stimulation site (Keppel, 1991). We found that the observed three-way interaction was due to a significant interaction between identity × expression at rOFA [F(1,7) = 7.2, P = 0.03], but not at the other sites, which yielded a trend toward main effects for identity only [vertex: F(1,7) = 14.87, P = 0.06; no TMS: F(1,7) = 11.96, P = 0.11]. For the rOFA, planned comparisons revealed that participants were accurate in detecting a change in expression or identity, but it was the combined change, or the lack of change in both face properties, that yielded decreased accuracy rates [no change in identity and expression versus change in expression only: t(7) = 2.16, P = 0.03; changes in both identity and expression versus change in identity only: t(7) = 1.92, P = 0.05]. This suggests that participants found it more difficult to compare faces when these two face properties changed simultaneously or remained unchanged, while a single property change in identity or expression was sufficient to make an accurate decision.
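The logic of testing an identity × expression interaction within a single stimulation site can be sketched as follows. In a 2 × 2 within-subject design, the interaction F(1, n − 1) equals the squared one-sample t statistic computed on each participant's difference of differences. The accuracy values below are hypothetical placeholders invented for illustration, not the study's data, and the error term used in the original follow-up analyses (Keppel, 1991) may differ from this per-site computation.

```python
import math
from statistics import mean, stdev

# HYPOTHETICAL proportions correct for 8 participants (not the reported data).
# Column order: (same identity, same expression), (same identity, diff. expression),
#               (diff. identity, same expression), (diff. identity, diff. expression)
HYPOTHETICAL_ACCURACY = [
    (0.78, 0.93, 0.95, 0.84),
    (0.82, 0.90, 0.91, 0.80),
    (0.75, 0.95, 0.94, 0.88),
    (0.85, 0.92, 0.96, 0.83),
    (0.80, 0.89, 0.90, 0.86),
    (0.77, 0.94, 0.93, 0.81),
    (0.83, 0.91, 0.95, 0.79),
    (0.79, 0.96, 0.92, 0.85),
]


def interaction_contrast(cells):
    """Difference of differences for one participant in a 2 x 2 design."""
    same_same, same_diff, diff_same, diff_diff = cells
    return (same_same - same_diff) - (diff_same - diff_diff)


def one_sample_t(values):
    """One-sample t statistic against zero and its degrees of freedom."""
    n = len(values)
    return mean(values) / (stdev(values) / math.sqrt(n)), n - 1


contrasts = [interaction_contrast(row) for row in HYPOTHETICAL_ACCURACY]
t_value, df = one_sample_t(contrasts)
f_value = t_value ** 2  # interaction F with (1, df) degrees of freedom

if __name__ == "__main__":
    print(f"t({df}) = {t_value:.2f}, F(1,{df}) = {f_value:.2f}")
```

With eight participants the contrast has 7 degrees of freedom, which corresponds to the F(1,7) and t(7) statistics reported above.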

Reaction times

Mean reaction times were calculated for all responses and subjected to a repeated-measures ANOVA with the same factorial design as for the accuracy analysis. Only the main effect for identity was significant [F(1,7) = 8.91, P = 0.02]; none of the other effects or interactions reached significance [all Fs = 0.018–4.8, all Ps = 0.065–0.924].

DISCUSSION

Experiment 1

Experiment 1 revealed a specific processing impairment for the TMS to rOFA condition only. More specifically, the participants were highly accurate in detecting single face property changes in identity or expression. Accuracy rates decreased for combined identity and expression changes, or when both remained the same, suggesting that TMS affected mainly the combined processing and the integration across these face properties. Notably, participants did not seem to use gaze information to detect a face change (no main effect for gaze, and gaze did not interact with any other factor), indicating that the results were not due to low-level perceptual effects. Experiment 2 was conducted to modulate rOFA activation at different time windows in order to pinpoint the precise occurrence of the integration between different face properties. This follow-up experiment could also reveal any additional influences of each face property that may have been masked by the stimulation time range.

Experiment 2

Experiment 2 was identical to Experiment 1 with the exception of the following details: Nine participants (mean age: 24.1 years old, s.d. 2.8) participated in Experiment 2. Three participants who participated in Experiment 1 also participated in Experiment 2; the measurements for both experiments were taken 5 months apart.

Procedure

During the same–different task, double-pulse TMS was delivered at rOFA with 40 ms between pulses at five different time windows (130–170, 170–210, 210–250, 250–290 and 290–330 ms; O’Shea et al., 2004). These time windows were chosen based on the ERP findings presented in the introduction, which showed that differential effects for face properties appear as early as 170 ms post stimulus presentation. Due to the increased length of the experiment (∼2.5 h in total), the five experimental blocks were divided into two sessions, which the participants completed on two different days. The order of the different time windows was counterbalanced across participants.
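The five double-pulse windows form a contiguous series, each starting where the previous one ends. A minimal sketch that regenerates them from the first pulse onset and the inter-pulse interval (function and parameter names are illustrative):

```python
def double_pulse_windows(first_onset_ms: int = 130,
                         inter_pulse_ms: int = 40,
                         n_windows: int = 5):
    """(first pulse, second pulse) onsets in ms for each stimulation window."""
    return [
        (first_onset_ms + k * inter_pulse_ms,
         first_onset_ms + (k + 1) * inter_pulse_ms)
        for k in range(n_windows)
    ]


windows = double_pulse_windows()

if __name__ == "__main__":
    for first, second in windows:
        print(f"{first}-{second} ms")
```

Together the windows cover the period from 130 to 330 ms post stimulus onset in 40 ms steps.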

RESULTS

Accuracy

The data were subjected to a repeated measures ANOVA, with the within-subject factors time window (130–170, 170–210, 210–250, 250–290, 290–330 ms), identity (same, different), expression (same, different), and gaze (same, different). We replicated the TMS to the rOFA findings in Experiment 1 by obtaining a significant two-way interaction between identity and expression [F(1,8) = 12.87, P = 0.007]. In the present experiment, a non-significant trend showed that this interaction was modulated by the time window [time window × identity × expression: F(4,32) = 2.56, P = 0.057]. Finally, the four-way interaction between time window × identity × expression × gaze was significant [F(4,32) = 3.28, P = 0.02] (Table 2). Therefore, in a second analysis step we decomposed this four-way interaction by analyzing the simple three-way interaction between identity, expression, and gaze for each time window separately (Keppel, 1991).

Table 2.

Statistical results for the accuracy rates in Experiment 2

| Effect | F | P |
|---|---|---|
| Time window | F(4,32) = 0.733 | P = 0.576 |
| Identity | F(1,8) = 34.5 | P = 0.001 |
| Expression | F(1,8) = 18.3 | P = 0.003 |
| Gaze | F(1,8) = 8.92 | P = 0.017 |
| Identity × Time window | F(4,32) = 0.202 | P = 0.935 |
| Expression × Time window | F(4,32) = 0.965 | P = 0.440 |
| Identity × Expression | F(1,8) = 12.8 | P = 0.007 |
| Identity × Expression × Time window | F(4,32) = 2.56 | P = 0.057a |
| Gaze × Time window | F(4,32) = 0.092 | P = 0.984 |
| Identity × Gaze | F(1,8) = 3.61 | P = 0.094 |
| Identity × Gaze × Time window | F(4,32) = 0.883 | P = 0.485 |
| Expression × Gaze | F(1,8) = 0.157 | P = 0.703 |
| Expression × Gaze × Time window | F(4,32) = 1.87 | P = 0.139 |
| Identity × Expression × Gaze | F(1,8) = 2.79 | P = 0.133 |
| Identity × Expression × Gaze × Time window | F(4,32) = 3.28 | P = 0.023 |

a Marginally significant. Bold values indicate P < 0.005.

130–170 ms

In this time window, only the main effects for identity [F(1,8) = 75.0, P < 0.001] and expression [F(1,8) = 16.8, P = 0.003] were significant, while the main effect for gaze and all higher interactions, including the interaction between identity and expression, remained non-significant.

170–210 ms

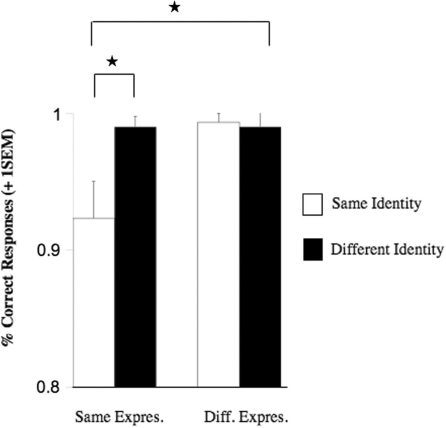

Our analysis revealed significant main effects for identity [F(1,8) = 6.6, P = 0.033], expression [F(1,8) = 16.1, P = 0.004], and gaze [F(1,8) = 13.32, P = 0.006], as well as significant interactions for identity × expression [F(1,8) = 13.19, P = 0.007; Figure 4] and identity × gaze [F(1,8) = 6.14, P = 0.038]. Planned comparisons showed that, similar to Experiment 1, the interaction between identity × expression was due to significantly decreased accuracy rates when neither identity nor expression changed [t(8) = 3.13, P = 0.014]. Moreover, accuracy rates decreased similarly for the interaction between identity × gaze, in that participants were less accurate when neither identity nor gaze changed [t(8) = 3.29, P = 0.011].

Fig. 4.

Experiment 2: Graph depicting the significant two-way interaction between identity × expression [F(1,8) = 13.19, P = 0.007] in the time window from 170 to 210 ms. Note that this presentation is collapsed across gaze. All significant effects (P < 0.05) are marked with an asterisk. Abbreviations: Same Expres. = Same Expression, Diff. Expres. = Different Expression. Error bars indicate one standard error of the mean.

210–250 ms

Overall analysis revealed main effects for identity [F(1,8) = 6.6, P = 0.033], expression [F(1,8) = 16.1, P = 0.004], and gaze [F(1,8) = 13.32, P = 0.006], a significant two-way interaction between identity × expression [F(1,8) = 7.54, P = 0.025], as well as a significant three-way interaction between identity × expression × gaze (Figure 5), [F(1,8) = 25.6, P = 0.001]. Further analysis conducted for each level of gaze change separately showed that the three-way interaction was due to a significant interaction between identity × expression under same gaze [F(1,8) = 22.98, P = 0.001], but not under different gaze [F < 1]. Planned comparisons showed that this interaction was due to a significant decrease in accuracy when neither identity nor expression changed [t(8) = 4.18, P = 0.003]. Neither of the simple main effects nor the interaction was significant for the different gaze condition.

Fig. 5.

Experiment 2: Graph depicting the significant three-way interaction between identity × expression × gaze [F(1,8) = 25.6, P = 0.001] in the time window from 210 to 250 ms. All significant effects (P < 0.05) are marked with an asterisk. Abbreviations: Same Expres. = Same Expression, Diff. Expres. = Different Expression. Error bars indicate one standard error of the mean.

250–290 and 290–330 ms

Both time windows showed the same effects: significant main effects for identity and expression (250–290 ms: identity [F(1,8) = 11.02, P = 0.011], expression [F(1,8) = 7.44, P = 0.026]; 290–330 ms: identity [F(1,8) = 19.8, P = 0.002], expression [F(1,8) = 9.80, P = 0.014]) as well as a significant interaction between identity × expression (250–290 ms: [F(1,8) = 6.73, P = 0.032]; 290–330 ms: [F(1,8) = 6.61, P = 0.033]). Planned comparisons found a significant decrease in accuracy for the condition when neither identity nor expression changed (250–290 ms: [t(8) = 3.86, P = 0.005]; 290–330 ms: [t(8) = 4.51, P = 0.002]).

Reaction times

None of the main effects or the higher interactions were significant [all Fs = 0.002–1.37, all Ps = 0.275–0.970].

Additional analysis

As the second experiment was conducted to provide detailed timing information on the effects found in the first experiment, we conducted an additional analysis to examine whether the two experiments yielded similar results and whether using a longer stimulation protocol in Experiment 1 had masked the effects found in the differential timing experiment. To this end, an ANOVA was conducted with the factors experiment (Experiment 1, Experiment 2), identity (same, different), and expression (same, different). This analysis included the rOFA trials of Experiment 1, and all time windows in Experiment 2 were collapsed. We found a significant interaction for identity × expression [F(1,15) = 10.64, P < 0.05]. Most importantly, however, for the question of whether the two experiments yielded different results, none of the other interactions were significant [all Fs = 0.060–1.79, all Ps = 0.201–0.810]. This additional analysis shows that the two experiments did not differ with regard to the differential effects on facial identity and emotional expression processing.

DISCUSSION

Experiment 2

Experiment 2 probed the time line of face property processing by rOFA. The impairment occurred from 170 ms post stimulus presentation onwards, suggesting that it affected a later processing stage, possibly related to re-entrant feedback processing. We also found that at a specific time window (210–250 ms) the interaction between identity and expression was modulated by gaze information.

GENERAL DISCUSSION

In the current study we predicted that (i) TMS to the rOFA would not disrupt face processing per se (in this case, accuracy rates would decrease irrespective of the different face property changes), but impair the analysis and integration of specific face properties, such as identity and expression. (ii) Based on recent ERP findings, we also hypothesized that this impairment would be most pronounced in the 170–300 ms range, most likely reflecting re-entrant feedback processing from FFA and other higher order face processing areas (Rotshtein et al., 2007). Our results provide evidence supporting both hypotheses.

In both experiments TMS to the rOFA led to decreased accuracy in the processing of identity and expression. Our results showed that rather than having difficulty with all face properties, our participants were impaired in computing face-specific information that relies on the overall configural relations between facial features, in this case identity and expression. More specifically, they had difficulty determining whether both changed or remained the same, but not when only one face property changed, suggesting that the impairment might have concerned a common processing mechanism (Calder et al., 2000). We note that this interpretation is somewhat limited to the specific set of stimuli used in the current study, which comprised two identities, two expressions and two directions of eye gaze. Future studies are needed to establish whether the results can be extended to other facial features, and are independent of specific stimulus surface properties, such as orientation or lighting. The suggestion of a common processing mechanism for facial identity and emotional expression is in line with findings from recent computational models. Dailey and colleagues (Dailey et al., 2002) used a neural network model with principal component analysis (PCA) to assess whether emotional expression processing relies on discrete categories or rather a continuous multidimensional space and found support for both accounts. Calder and Young’s PCA model (2005) of identity and emotional expression processing went a step further and found principal components that processed each facial feature either separately or in combination, thus supporting the multidimensional space account and rejecting the suggestion that both facial features are processed in strongly segregated pathways.

This virtual impairment also mirrors the behavioural difficulties found in patients suffering from prosopagnosia, who have incurred brain injuries to the face processing network and particularly the rOFA. While prosopagnosics are usually aware of looking at a face, they are unable to compute the relations between different face properties that would allow them to identify it (DeGutis et al., 2007; Rossion et al., 2003). In addition, some evidence is available that prosopagnosic participants have similar difficulties in processing emotional expressions (Humphreys et al., 2006, see also Calder and Young, 2005 for a review), although not all prosopagnosics show impaired emotional expression processing (Le Grand et al., 2006).

It has been shown that the rOFA is well connected within the cortical face network, receiving both feed-forward (Fairhall and Ishai, 2007) and re-entrant feedback connections (Rotshtein et al., 2007). Based on the results of Experiment 1 it is unclear whether the TMS to the rOFA interfered with the feed-forward sweep of information, or rather with re-entrant feedback processing (Kotsoni et al., 2007). With the help of the timing information obtained in Experiment 2, we narrowed down the time point of interference to 170 ms post stimulus presentation (with participants being less accurate in detecting a change or no change in these properties from this time point). We suggest that TMS to the rOFA did not interfere with the feed-forward sweep of information as the participants detect the presence of a face. Rather, TMS impaired the processing of re-entrant feedback information, affecting face-specific mechanisms that process configural face information. This is supported by findings of a recent training study, which found that increased functional connectivity between rOFA and FFA in a prosopagnosic patient was prognostic of improved face recognition skills (DeGutis et al., 2007). Finally, the suggestion of re-entrant feedback also receives support from intracranial recording studies in humans, which showed late top–down modulation of activity (N210) in the inferior occipital gyrus (Olson et al., 2001), in an area similar to the rOFA stimulated here.

In contrast to expression and identity, gaze processing effects were found only during the time windows from 170 to 210 and 210 to 250 ms, when gaze influenced the interaction between identity and expression. More specifically, a change in eye gaze made it easier for the participants to detect a face change. This suggests that within this time range, participants can rely on featural cues to help with face perception, a suggestion that is in line with earlier research findings (Klucharev and Sams, 2004; Senju et al., 2006). Based on previous studies, it seems plausible that before 170 ms eye gaze is processed independently from facial identity and expression (Bruce and Young, 1986; Haxby et al., 2000; Klucharev and Sams, 2004), while after 250 ms, information about the direction of eye gaze is then further processed in special dedicated areas, such as the superior temporal sulcus (Puce et al., 1998; Haxby et al., 2000; Hoffman and Haxby, 2000).

In the current study, the first signs of face-specific impairments were found at around 170–210 ms. This is in line with the evidence from ERP studies, which showed that identity and expression modulate the N170 component (Caharel et al., 2005). Thus, it seems possible that the integration of different face properties in the rOFA increases gradually in response to continual waves of feedback from FFA and other higher face processing areas (Cohen Kadosh et al., in press; Schiltz and Rossion, 2006). Future studies should extend these findings to populations that exhibit partial or substantial deficits in face processing skills, such as young children or patients suffering from prosopagnosia.

Acknowledgments

The authors acknowledge support from the following funding bodies: KCK: Marie Curie Fellowship (MEST-CT-2005-020725), Economic and Social Research Council (ESRC) postdoctoral fellowship (PTA-026-27-2329); VW: Biotechnology and Biological Sciences Research Council (BBSRC) grant (BB/FO22875/1); RCK: Wellcome Trust RCD Fellowship (WT88378).

Footnotes

1 This approach of localizing the rOFA based on a functional average and the individual structural scans instead of functionally localized coordinates may require a more cautious interpretation of the results in case of a null result, due to possible anatomical variations in the individual participants. Nevertheless, a recent study has shown that different localization methods (e.g. fMRI-TMS neuronavigation, MRI-guided TMS neuronavigation, TMS based on the 10–20 EEG system, etc.) yield a similar behavioural effect given a sufficient sample size (Sack et al., 2009). In turn, using maximally precise site localization can limit the generalizability of the observed effects to the functional activation site used in a particular paradigm, and the particular set of subjects.

REFERENCES

- Allison T, Ginter H, McCarthy G, Nobre AC, Puce A, Luby M, et al. Face recognition in the human extrastriate cortex. Journal of Neurophysiology. 1994;71(2):821–5. doi: 10.1152/jn.1994.71.2.821. [DOI] [PubMed] [Google Scholar]

- Blau VC, Maurer U, Tottenham N, McCandliss BD. The face-specific N170 component is modulated by emotional facial expression. Behavioural and Brain Functions. 2007;3(7) doi: 10.1186/1744-9081-3-7. doi:10.1186/1744-9081-3-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bruce V, Young A. Understanding face recognition. British Journal of Psychology. 1986;77:305–27. doi: 10.1111/j.2044-8295.1986.tb02199.x. [DOI] [PubMed] [Google Scholar]

- Caharel S, Courtay N, Bernard C, Lalonde R, Rebai M. Familiarity and emotional expression influence an early stage of face processing: an electrophysiological study. Brain and Cognition. 2005;59:96–100. doi: 10.1016/j.bandc.2005.05.005. [DOI] [PubMed] [Google Scholar]

- Calder AJ, Young AW. Understanding the recognition of facial identity and facial expression. Nature Reviews Neuroscience. 2005;6:641–51. doi: 10.1038/nrn1724.

- Calder AJ, Young AW, Keane J, Dean M. Configural information in facial expression perception. Journal of Experimental Psychology: Human Perception and Performance. 2000;26(2):527–51. doi: 10.1037//0096-1523.26.2.527.

- Cohen Kadosh K, Henson RNA, Cohen Kadosh R, Johnson MH, Dick F. Task-dependent activation of face-sensitive cortex: an fMRI adaptation study. Journal of Cognitive Neuroscience. In press. doi: 10.1162/jocn.2009.21224.

- Cohen Kadosh R, Henik A. A common representation for semantic and physical properties: A cognitive-anatomical approach. Experimental Psychology. 2006;53:87–94. doi: 10.1027/1618-3169.53.2.87.

- Dailey MN, Cottrell GW, Padgett C, Adolphs R. EMPATH: A neural network that categorizes facial expressions. Journal of Cognitive Neuroscience. 2002;14(8):1158–73. doi: 10.1162/089892902760807177.

- DeGutis JM, Bentin S, Robertson LC, D'Esposito M. Functional plasticity in ventral temporal cortex following cognitive rehabilitation of a congenital prosopagnosic. Journal of Cognitive Neuroscience. 2007;19(11):1790–802. doi: 10.1162/jocn.2007.19.11.1790.

- Eimer M, Holmes A. An ERP study on the time course of emotional face processing. NeuroReport. 2002;13(4):1–5. doi: 10.1097/00001756-200203250-00013.

- Fairhall SL, Ishai A. Effective connectivity within the distributed cortical network for face perception. Cerebral Cortex. 2007;17:2400–6. doi: 10.1093/cercor/bhl148.

- Fias W, Lauwereyns J, Lammertyn J. Irrelevant digits affect feature-based attention depending on the overlap of neural circuits. Cognitive Brain Research. 2001;12:415–23. doi: 10.1016/s0926-6410(01)00078-7.

- Ganel T, Goshen-Gottstein Y. Effects of familiarity on the perceptual integrality of the identity and expression of faces: the parallel-route hypothesis revisited. Journal of Experimental Psychology: Human Perception and Performance. 2004;30(3):583–97. doi: 10.1037/0096-1523.30.3.583.

- Ganel T, Valyear KF, Goshen-Gottstein Y, Goodale MA. The involvement of the “fusiform face area” in processing facial expression. Neuropsychologia. 2005;43:1645–54. doi: 10.1016/j.neuropsychologia.2005.01.012.

- Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends in Cognitive Sciences. 2000;4(6):223–33. doi: 10.1016/s1364-6613(00)01482-0.

- Hoffman EA, Haxby JV. Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nature Neuroscience. 2000;3:80–4. doi: 10.1038/71152.

- Humphreys K, Avidan G, Behrmann M. A detailed investigation of facial expression processing in congenital prosopagnosia as compared to acquired prosopagnosia. Experimental Brain Research. 2006;176(2):356–73. doi: 10.1007/s00221-006-0621-5.

- Jacques C, Rossion B. The speed of individual face categorization. Psychological Science. 2006;17(6):485–92. doi: 10.1111/j.1467-9280.2006.01733.x.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. Journal of Neuroscience. 1997;17(11):4302–11. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Keppel G. Design and analysis: A researcher's handbook. 3rd ed. Upper Saddle River: Prentice Hall; 1991.

- Klucharev V, Sams M. Interaction of gaze direction and facial expression processing: ERP study. NeuroReport. 2004;15(4):621–5. doi: 10.1097/00001756-200403220-00010.

- Kotsoni E, Csibra G, Mareschal D, Johnson MH. Electrophysiological correlates of common-onset visual masking. Neuropsychologia. 2007;45(10):2285–93. doi: 10.1016/j.neuropsychologia.2007.02.023.

- Le Grand R, Cooper PA, Mondloch CJ, Lewis TL, Sagiv N, de Gelder B, et al. What aspects of face processing are impaired in developmental prosopagnosia? Brain and Cognition. 2006;61(2):139–58. doi: 10.1016/j.bandc.2005.11.005.

- Leppänen JM, Kauppinen P, Peltola MJ, Hietanen JK. Differential electrocortical responses to increasing intensities of fearful and happy emotional expressions. Brain Research. 2007;1166:103–9. doi: 10.1016/j.brainres.2007.06.060.

- Mondloch CJ, Geldart S, Maurer D, Le Grand R. Developmental changes in face processing skills. Journal of Experimental Child Psychology. 2003;86:67–84. doi: 10.1016/s0022-0965(03)00102-4.

- Mondloch C, Le Grand R, Maurer D. Configural face processing develops more slowly than featural face processing. Perception. 2002;31:553–66. doi: 10.1068/p3339.

- Maurer D, Le Grand R, Mondloch CJ. The many faces of configural processing. Trends in Cognitive Sciences. 2002;6(6):255–60. doi: 10.1016/s1364-6613(02)01903-4.

- O’Shea J, Muggleton NG, Cowey A, Walsh V. Timing of target discrimination in human frontal eye fields. Journal of Cognitive Neuroscience. 2004;16(6):1060–7. doi: 10.1162/0898929041502634.

- Olson IR, Chun MM, Allison T. Contextual guidance of attention: Human intracranial event-related potential evidence for feedback modulation in anatomically early, temporally late stages of visual processing. Brain. 2001;124:1417–25. doi: 10.1093/brain/124.7.1417.

- Pitcher D, Walsh V, Yovel G, Duchaine BC. TMS evidence for the involvement of the right occipital face area in early face processing. Current Biology. 2007;17:1568–73. doi: 10.1016/j.cub.2007.07.063.

- Posner MI, Sandson J, Dhawan M, Shulman GL. Is word recognition automatic? A cognitive-anatomical approach. Journal of Cognitive Neuroscience. 1990;1:50–60. doi: 10.1162/jocn.1989.1.1.50.

- Puce A, Allison T, Bentin S, Gore JC, McCarthy G. Temporal cortex activation in humans viewing eye and mouth movements. Journal of Neuroscience. 1998;18(6):2188–99. doi: 10.1523/JNEUROSCI.18-06-02188.1998.

- Rossion B, Caldara R, Seghier M, Schuller AM, Lazeyras F, Mayer E. A network of occipito-temporal face sensitive areas besides the right middle fusiform gyrus is necessary for normal face processing. Brain. 2003;126:1–15. doi: 10.1093/brain/awg241.

- Rotshtein P, Vuilleumier P, Winston J, Driver J, Dolan R. Distinct and convergent visual processing of high and low spatial frequency information in faces. Cerebral Cortex. 2007;17:2713–24. doi: 10.1093/cercor/bhl180.

- Sack AT, Cohen Kadosh R, Schuhmann T, Moerel M, Walsh V, Goebel R. Optimizing functional accuracy of TMS in cognitive studies: a comparison of methods. Journal of Cognitive Neuroscience. 2009;21(2):207–21. doi: 10.1162/jocn.2009.21126.

- Sagiv N, Bentin S. Structural encoding of human and schematic faces: holistic and part-based processes. Journal of Cognitive Neuroscience. 2001;13(7):937–51. doi: 10.1162/089892901753165854.

- Schiltz C, Rossion B. Faces are represented holistically in the human occipito-temporal cortex. NeuroImage. 2006;32:1385–94. doi: 10.1016/j.neuroimage.2006.05.037.

- Senju A, Johnson MH, Csibra G. The development and neural basis of referential gaze perception. Social Neuroscience. 2006;1:220–34. doi: 10.1080/17470910600989797.

- Yin RK. Looking at upside-down faces. Journal of Experimental Psychology: General. 1969;81:141–5.