Abstract

According to theories of emotional complexity, individuals low in emotional complexity encode and represent emotions in visceral or action-oriented terms, whereas individuals high in emotional complexity encode and represent emotions in a differentiated way, using multiple emotion concepts. During functional magnetic resonance imaging, participants viewed valenced animated scenarios of simple ball-like figures while attending either to social or to spatial aspects of the interactions. Participants' emotional complexity was assessed using the Levels of Emotional Awareness Scale. We found that a distributed set of brain regions, previously implicated in processing emotion from facial, vocal and bodily cues, in processing social intentions and in emotional response, was sensitive to emotion conveyed by motion alone. Attention to social meaning amplified the influence of emotion in a subset of these regions. Critically, increased emotional complexity correlated with enhanced processing in a left temporal polar region implicated in detailed semantic knowledge, with a diminished effect of social attention and with increased differentiation of brain activity between films of differing valence. Decreased emotional complexity was associated with increased activity in regions of premotor cortex. Thus, neural coding of emotion in semantic vs action systems varies as a function of emotional complexity, helping to reconcile puzzling inconsistencies in neuropsychological investigations of emotion recognition.

Keywords: animacy, embodiment, emotion, empathy, functional magnetic resonance imaging

INTRODUCTION

Our knowledge of others’ emotions clearly depends on their perceptible reactions, i.e. facial, vocal and bodily expressions (Darwin, 1872). It is unclear, however, what the fundamental building blocks of emotional expression and perception are. Michotte (1950) asserted that social perception is grounded in the analysis of motion cues (e.g. approach, avoidance, contact intensity). Indeed, 1-year-old infants can attribute emotional valence (±) to interactions between ball-like figures in simplistic two-dimensional animations, based on simple motion cues, e.g. collision intensity (hard contact–negative, soft contact–positive) (Premack and Premack, 1997). Such cues are used pan-culturally (Rimé et al., 1985).

Neuroscience has implicated several brain structures in emotion recognition, including the amygdala, occipitotemporal neocortex, insula, somatosensory cortex, basal ganglia, ventral premotor cortex, and medial and orbital prefrontal cortex (Heberlein and Adolphs, 2007). Some regions, e.g. the amygdala, are activated by minimal emotion cues and in the absence of attention (Adolphs, 2008), although the extent to which these brain regions are sensitive to the basic motion cues emphasised by Michotte is unclear.

Understanding of emotions is, however, knowledge driven as well as rooted in structure detection: we discern others’ emotions via a complex combination of structure-detection skills and relevant knowledge (Baldwin and Baird, 2001; Marshall and Cohen, 1988). That there are potentially large individual differences in emotion knowledge may help account for the puzzling finding that, even when tested using the same emotion-recognition tasks, patients with comparable brain lesions do not always show similar deficits, a finding for which there has been no satisfying explanation (Heberlein and Adolphs, 2007).

Here, we address the influence of one key individual difference, namely propositional knowledge for emotion, or emotional complexity (Lindquist and Feldman-Barrett, 2008), as measured by the Levels of Emotional Awareness Scale (LEAS; Lane et al., 1990). According to Lane and colleagues (Lane and Pollerman, 2002), individuals low in emotional complexity encode and represent emotion knowledge in visceral or action-oriented terms, whereas individuals high in emotional complexity encode and represent it in a nuanced and differentiated fashion, using multiple emotion concepts. Increased emotional complexity predicts more normative identification of emotion cues in others and greater empathy (Ciarrochi et al., 2003; Lane et al., 1996, 2000).

In the current study, during functional magnetic resonance imaging (fMRI), participants viewed animated scenarios of simple ball-like figures that were open to interpretation in terms of emotional attributes. Participants were cued to attend to social or spatial aspects of the figures' behaviour. Given that individuals with greater emotional complexity are hypothesised to have more detailed and more differentiated emotion concepts, and to rely less on visceral or action-oriented emotion coding, we predicted that increased emotional complexity would be associated with (i) greater activation in neural regions linked to detailed conceptual knowledge [left temporal poles (TP)] (Rogers et al., 2006); (ii) reduced activity in brain regions linked to processing visceral/action-oriented components of emotion (hypothalamus, premotor cortex) (Leslie et al., 2004; Thompson and Swanson, 2003); and (iii) greater differentiation between positively and negatively valenced animations in brain regions coding emotional valence and discrete emotion categories (Murphy et al., 2003; Vytal and Hamann, 2009). In addition, by manipulating attention to social meaning, we could examine the extent to which activity in key neural structures depended on attention, and how this interacted with individual differences in emotional complexity.

MATERIALS AND METHODS

During fMRI, participants were asked to watch a series of short animations. Each showed two ‘sprites’ moving with properties that support varying emotional interpretations (affiliative, antagonistic, neutral social behaviours) in a two-dimensional ‘sprite world’ (Figure 1). Participants were pre-cued to attend either to the social behaviour that could underlie the movement of the sprites or to the spatial aspects of the same movement. Following the fMRI session, participants completed the LEAS (Lane et al., 1990) (see below for details).

Fig. 1.

Schematic of animations.

Participants

Sixteen healthy, right-handed, female native English speakers (mean age = 24 years, s.d. = 4 years) participated. The study was approved by the Cambridgeshire Local Research Ethics Committee and performed in compliance with its guidelines. Written informed consent was obtained from all participants. Individuals with a history of inpatient psychiatric care, neurological disease or head injury were excluded, as were individuals on medication for anxiety or depression. Only female volunteers were scanned, as previous work has found considerable sex differences in LEAS scores, with women outperforming men (Barrett et al., 2000; Ciarrochi et al., 2003). We did not control for menstrual cycle phase. In future studies, it will be important to examine both potential menstrual cycle phase effects in women and potential neural sex differences in the effects of LEAS (McRae et al., 2008).

Stimuli

The stimuli consisted of 14-s animations displaying the motion of two sprites (one green circle and one blue circle) in a two-dimensional environment, the sprite world, which included some obstacles to straight-line motion (see Figure 1; example movies can be viewed at http://www.mrc-cbu.cam.ac.uk/research/emotion/cemhp/tavaresaffant.html). Animations were created using the software package Blender version 2.1 (Blender Foundation, http://www.blender.org). Three categories of animation were constructed to convey the impression of three types of interpersonal situation: (i) affiliative interactions between the two sprites; (ii) antagonistic interactions between the two sprites; and (iii) neutral (non-)interactions between the two sprites.

In the affiliative animations, the two sprites entered from different sides of the sprite world. They approached each other and touched gently, suggesting affection, then moved in a mutually coordinated fashion for the rest of the animation. In the antagonistic animations, the two sprites entered from different sides of the sprite world and approached each other. They initially maintained a distance from each other while moving around slowly. In half of the antagonistic animations, they then approached each other, making brief, hard contact and retreating rapidly, suggesting physical aggression. In the other half, they never touched but circled each other in a manner suggestive of hostility. At the end of the sequence, the two sprites left the scene quickly and in different directions. In the emotionally neutral animations, the two sprites entered from different sides of the sprite world. They moved around, sometimes stopping and changing direction, but never approaching or withdrawing from each other. The sprites then left the scene independently at a gentle pace.

Task

During scanning, each animation (affiliative, antagonistic or neutral) was preceded by a cue word, either 'behavioural' or 'spatial', specifying how participants should attend to the scenario. For the behavioural cue, participants were instructed to identify what type of interaction might be taking place between the two sprites (affiliative, antagonistic or neutral). For the spatial cue, participants were instructed to attend to aspects of the sprites' motion such as speed, trajectory and the positions at which they entered and exited the scene. As a manipulation check, each animation was followed by a summary statement describing its contents, and participants judged (true/false) whether the statement could appropriately describe the preceding animation. Examples of behavioural statements include 'A woman met her partner in an art gallery' (affiliative), 'Street kids were insulting each other' (antagonistic) and 'Two joggers were exercising around a park' (neutral). Examples of spatial statements include 'The blue circle stayed in the bottom left-hand corner' and 'The circles completed a figure of eight movement.' The statement accurately described the preceding animation in 50% of cases. Each animation and each statement was presented only once. The cue word was presented for 1.8 s, the animation for 14 s and the statement for 7.5 s. In addition, a baseline fixation condition was used, in which the cue word 'cross' appeared, followed by a fixation cross for 14 s and then a statement instructing participants to press either the left or the right response button. There were 12 examples of each animation type (affiliative, antagonistic and neutral) in each of the two cue conditions (behavioural or spatial), plus the baseline condition. Animation type and task cue were presented in a pseudorandomised, counterbalanced order.
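The trial structure above can be laid out as a timeline, which is what later defines the onsets of the 14-s animation regressors. The following is a minimal sketch, assuming that trials follow one another with no inter-trial interval (the text does not state one) and using a hypothetical three-trial order; `Trial`, `animation_onsets` and the `iti_s` parameter are illustrative names, not part of the study's software.

```python
from dataclasses import dataclass

# Phase durations in seconds, as given in the Task description.
CUE_S = 1.8
ANIMATION_S = 14.0
STATEMENT_S = 7.5
TRIAL_S = CUE_S + ANIMATION_S + STATEMENT_S  # 23.3 s per trial


@dataclass
class Trial:
    cue: str        # 'behavioural', 'spatial' or 'cross' (fixation baseline)
    animation: str  # 'affiliative', 'antagonistic', 'neutral' or 'fixation'


def animation_onsets(trials, iti_s=0.0):
    """Return (trial, onset in s) pairs for each 14-s animation/fixation period.

    Assumes back-to-back trials separated only by an optional, hypothetical
    inter-trial interval `iti_s`.
    """
    onsets = []
    t = 0.0
    for trial in trials:
        onsets.append((trial, t + CUE_S))  # animation starts after the cue word
        t += TRIAL_S + iti_s
    return onsets


# Hypothetical order, for illustration only.
order = [Trial("behavioural", "affiliative"),
         Trial("spatial", "neutral"),
         Trial("cross", "fixation")]
for trial, onset in animation_onsets(order):
    print(trial.cue, trial.animation, round(onset, 1))
```

With these assumptions, the three animation periods start at 1.8 s, 25.1 s and 48.4 s; any inter-trial jitter would simply shift later onsets by `iti_s` per trial.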

Post-task ratings of the animations

Following scanning, participants rated each animation seen during fMRI for valence and emotional intensity using visual analogue scales consisting of 11.5-cm lines. For valence, the scale ranged from positive (0 cm) through neutral (5.75 cm) to negative (11.5 cm). For intensity, the scale ranged from low (0 cm) to high (11.5 cm). The interval between the imaging session and the ratings task ranged from 0 days to 6 weeks, depending on participant availability (mean ∼2 weeks).
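Because the valence line runs from positive (0 cm) to negative (11.5 cm), a mark's position must be re-expressed before it can be read as signed valence. One possible convention, which is an assumption and not a procedure described in the text, maps valence marks to [-1, 1] with the neutral midpoint at 0 and +1 meaning most positive, and rescales intensity marks to [0, 1]. The function names are hypothetical.

```python
SCALE_CM = 11.5
MIDPOINT_CM = SCALE_CM / 2  # 5.75 cm, the neutral anchor of the valence line


def _check(mark_cm: float) -> None:
    if not 0.0 <= mark_cm <= SCALE_CM:
        raise ValueError(f"mark must lie on the {SCALE_CM}-cm line")


def signed_valence(mark_cm: float) -> float:
    """Map a valence mark (0 cm = positive, 11.5 cm = negative) to [-1, 1], +1 = most positive."""
    _check(mark_cm)
    return (MIDPOINT_CM - mark_cm) / MIDPOINT_CM


def normalised_intensity(mark_cm: float) -> float:
    """Map an intensity mark (0 cm = low, 11.5 cm = high) to [0, 1]."""
    _check(mark_cm)
    return mark_cm / SCALE_CM
```

Under this convention, for example, a mark at 1.3 cm on the valence line corresponds to a signed valence of about +0.77.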

Levels of emotional awareness

Emotional awareness is the ability to recognise and describe emotion in oneself and others. According to Lane and colleagues' Piagetian model (Lane and Pollerman, 2002; Lane and Schwartz, 1987), the development of emotional awareness comprises five stages, ranging from awareness of physical sensations to the capacity to appreciate complexity in the emotional experiences of self and other. The LEAS (Lane et al., 1990) is a written performance measure that asks an individual to describe her anticipated emotions and those of another person in each of 20 short scenarios, each described in two to four sentences. Each page presents one scenario at the top, followed by two questions, 'How would you feel?' and 'How would the other person feel?'. Participants write their responses on the remainder of the page and are instructed to use as much or as little of the page as they need to answer the two questions.

Scoring follows specific criteria that assess the specificity of the emotion terms used, the range of emotions described and the differentiation of self from other. Each of the 20 scenarios receives a score of 0–5, corresponding to the stages of the developmental model underpinning the LEAS. A score of 0 is assigned when non-affective words are used; 1 when words indicating physiological cues are used (e.g. 'I'd feel tired'); 2 when words conveying undifferentiated emotion are used (e.g. 'I'd feel bad') or when the word 'feel' is used to convey an action tendency (e.g. 'I'd feel like punching the wall'); 3 when one word conveying a typical differentiated emotion is used (e.g. angry, happy); and 4 when two or more level-3 words are used in a way that conveys greater emotional differentiation than either word alone. Participants receive separate scores for the 'self' and 'other' responses, each ranging from 0 to 4. Each scenario is then given a total score equal to the higher of the 'self' and 'other' scores, except that a total of 5 is assigned when 'self' and 'other' each receive a score of 4. The maximum total LEAS score is therefore 100 (20 scenarios × 5), with higher totals indicating greater awareness of emotional complexity in self and other. A computerized LEAS scoring program has recently been developed (Barchard et al., in press).
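The aggregation rules above can be stated compactly in code. This is a minimal sketch, assuming the 'self' and 'other' ratings (0–4) for each response have already been assigned by a trained rater; it encodes only how those ratings are combined into scenario and total scores, not the lexical criteria used to rate each response, and it is unrelated to the computerized scoring program of Barchard et al. The function names are hypothetical.

```python
N_SCENARIOS = 20


def scenario_total(self_score: int, other_score: int) -> int:
    """Total LEAS score for one scenario from its 'self' and 'other' ratings (each 0-4)."""
    for s in (self_score, other_score):
        if s not in range(5):
            raise ValueError("'self' and 'other' ratings must be integers 0-4")
    if self_score == 4 and other_score == 4:
        return 5  # both responses at level 4 earn the level-5 total
    return max(self_score, other_score)


def leas_total(responses) -> int:
    """Sum scenario totals over the 20 scenarios; the maximum possible is 100."""
    if len(responses) != N_SCENARIOS:
        raise ValueError(f"the LEAS has {N_SCENARIOS} scenarios")
    return sum(scenario_total(s, o) for s, o in responses)


# A respondent scoring 3 ('self') and 2 ('other') on every scenario totals 20 * 3 = 60.
print(leas_total([(3, 2)] * N_SCENARIOS))
```

Note that level 5 can only be reached at the scenario-total stage, which is why the per-response ratings stop at 4.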

The LEAS has high inter-rater reliability, internal consistency (e.g. α = 0.89; Ciarrochi et al., 2003) and test-retest reliability. It is only weakly correlated with measures of verbal ability: Ramponi et al. (2004) found a non-significant correlation (r = 0.02) between LEAS scores and the National Adult Reading Test; Lane et al. (1995) found a correlation of r = 0.17 between LEAS scores and the Shipley Institute of Living Scale Vocabulary Subtest; and in a large sample of students (n = 869), Romero et al. (2008) found a correlation of 0.15 between LEAS and Thurstone's Reading Test scores. Furthermore, sex differences in LEAS remain significant when controlling for verbal ability (Barrett et al., 2000). These data indicate that the LEAS is not merely a measure of verbal ability. Nor is the LEAS correlated with scores on the affective intensity, manifest anxiety or Beck Depression scales (Lane and Pollerman, 2002) or with the Big Five personality dimensions (Ciarrochi et al., 2003), and there are only low or non-significant correlations between the LEAS and the Toronto Alexithymia Scale (Ciarrochi et al., 2003; Lane et al., 1998; Lumley et al., 2005; Suslow et al., 2000). LEAS scores are related to the understanding-emotions section of the MSC Emotional Intelligence Test and to the attention-to-mood scale of the Trait Meta-Mood Scale (Lumley et al., 2005), to the perceiving-emotions-in-stories section of the Multifactor Emotional Intelligence Scale (MEIS) (Ciarrochi et al., 2003) and to the Range and Differentiation of Emotional Experience Scale (RDEES) (Kang and Shaver, 2004), and they have been shown to predict accuracy of facial emotion recognition (Lane et al., 1996, 2000) and self-reported empathy (Ciarrochi et al., 2003).

Two raters scored the LEAS independently, and inter-rater reliability was good (tau-b = 0.893, N = 100, P < 0.01). Participants' LEAS scores ranged from 65 to 90 (mean = 77, s.d. = 6.7). These scores are similar to published values for young university student populations (Ciarrochi et al., 2003) but somewhat higher than those reported for broader community samples (Barrett et al., 2000).
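Inter-rater agreement was summarised with Kendall's tau-b, which corrects for the many tied scores that an ordinal 0–5 scale produces. A small pure-Python sketch of the statistic follows, for illustration only: in practice a library routine such as SciPy's `scipy.stats.kendalltau` (which computes tau-b by default) would normally be used, the p-value is not computed here, and the scores in the test are made up.

```python
import math
from itertools import combinations


def kendall_tau_b(x, y):
    """Kendall's tau-b between two raters' scores, corrected for ties.

    tau_b = (C - D) / sqrt((n0 - T_x) * (n0 - T_y)), where n0 is the number of
    pairs and T_x, T_y count pairs tied on x and on y respectively.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 scores")
    concordant = discordant = ties_x = ties_y = 0
    for i, j in combinations(range(len(x)), 2):
        dx = x[i] - x[j]
        dy = y[i] - y[j]
        if dx == 0:
            ties_x += 1  # includes pairs tied on both ratings
        if dy == 0:
            ties_y += 1
        if dx == 0 or dy == 0:
            continue  # tied pairs are neither concordant nor discordant
        if (dx > 0) == (dy > 0):
            concordant += 1
        else:
            discordant += 1
    n0 = len(x) * (len(x) - 1) // 2
    denom = math.sqrt((n0 - ties_x) * (n0 - ties_y))
    if denom == 0:
        raise ValueError("tau-b is undefined when one rater gives identical scores throughout")
    return (concordant - discordant) / denom
```

Identical ratings give 1.0 and fully reversed ratings give -1.0; with ties, for example `kendall_tau_b([1, 2, 2, 3], [1, 2, 3, 3])` evaluates to 0.8.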

Image acquisition

Blood oxygenation level-dependent (BOLD) contrast functional images were acquired with echo-planar T2*-weighted (EPI) imaging on a Medspec (Bruker, Ettlingen, Germany) 3-T MR system with a head coil gradient set. Each image volume consisted of 21 interleaved 4-mm-thick slices (inter-slice gap: 1 mm; in-plane resolution: 2.2 × 2.2 mm; field of view: 20 × 20 cm; matrix size: 90 × 90; flip angle: 74°; echo time: 27.5 ms; voxel bandwidth: 143 kHz; repetition time: 1.6 s). Slice acquisition was transverse oblique, angled to avoid the eyeballs, and covered most of the brain. Six hundred and ninety-five volumes were acquired in one continuous run, and the first six volumes were discarded to allow for T1 equilibration.
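Several of the stated acquisition parameters can be cross-checked with simple arithmetic: in-plane resolution is field of view divided by matrix size, and slab coverage and run length follow from the slice and volume counts. The sketch below assumes that coverage is measured from the outer edge of the first slice to the outer edge of the last; the variable names are illustrative.

```python
# Parameters as stated in the Image acquisition paragraph.
FOV_MM = 200.0    # 20 x 20 cm field of view
MATRIX = 90       # 90 x 90 acquisition matrix
N_SLICES = 21
SLICE_MM = 4.0
GAP_MM = 1.0
TR_S = 1.6
N_VOLUMES = 695
N_DUMMIES = 6     # discarded for T1 equilibration

in_plane_mm = FOV_MM / MATRIX                               # ~2.22 mm, reported as 2.2 mm
slab_mm = N_SLICES * SLICE_MM + (N_SLICES - 1) * GAP_MM     # 104 mm (assumed edge to edge)
run_s = N_VOLUMES * TR_S                                    # ~1112 s of scanning
analysed_volumes = N_VOLUMES - N_DUMMIES                    # 689 volumes enter the analysis
analysed_s = analysed_volumes * TR_S                        # ~1102 s

print(f"in-plane voxel size: {in_plane_mm:.2f} mm")
print(f"slab coverage: {slab_mm:.0f} mm")
print(f"run length: {run_s:.0f} s ({run_s / 60:.1f} min)")
print(f"volumes analysed: {analysed_volumes} ({analysed_s:.1f} s)")
```

The 104-mm slab is consistent with the statement that the slices covered most, but not all, of the brain.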

Image analysis

fMRI data were analysed using statistical parametric mapping software (SPM; Wellcome Trust Centre for Neuroimaging, London, UK). Standard pre-processing comprised slice-timing correction, realignment, undistortion (Cusack et al., 2003) and masked normalisation of each participant's EPI data to the Montreal Neurological Institute (MNI) template of the International Consortium for Brain Mapping (Brett et al., 2001). Images were resampled into this space with 2-mm isotropic voxels and smoothed with an 8-mm full-width at half-maximum Gaussian kernel. Condition effects were estimated for each participant at each voxel in a general linear model, using boxcar regressors for the 14-s animation period convolved with a canonical hemodynamic response function (HRF), with the spatial realignment parameters included as regressors to account for residual movement-related variance. A high-pass filter was used to remove low-frequency signal drift, and the data were also low-pass filtered with the canonical HRF. Activation contrasts between conditions were estimated for each participant at each voxel, producing statistical parametric maps. Group-level random-effects analyses were then conducted, with modulation by levels of emotional awareness assessed by simple regression against LEAS scores.
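The first-level regressors described above can be sketched as follows: a 14-s boxcar at each animation onset, convolved with a double-gamma HRF and sampled once per TR. This is an illustration, not SPM's code. The HRF uses SPM-like default shape parameters (response delay 6 s, undershoot delay 16 s, response-to-undershoot ratio 6, unit dispersions, 32-s kernel), the convolution runs at an assumed 'microtime' resolution of 16 bins per TR, and the high-pass filter and realignment regressors are omitted.

```python
import math


def double_gamma_hrf(dt, length_s=32.0, peak_delay=6.0, undershoot_delay=16.0, ratio=6.0):
    """Double-gamma HRF sampled every `dt` seconds and scaled to sum to 1.

    With unit-dispersion gamma densities the response peaks near
    peak_delay - 1 s and the undershoot near undershoot_delay - 1 s.
    """
    def gamma_pdf(t, shape):
        # Gamma density with unit scale; zero at and before t = 0.
        if t <= 0.0:
            return 0.0
        return math.exp((shape - 1.0) * math.log(t) - t - math.lgamma(shape))

    n = int(round(length_s / dt)) + 1
    h = [gamma_pdf(i * dt, peak_delay) - gamma_pdf(i * dt, undershoot_delay) / ratio
         for i in range(n)]
    total = sum(h)
    return [v / total for v in h]


def boxcar_regressor(onsets_s, duration_s, n_scans, tr, microtime=16):
    """Boxcar of `duration_s` at each onset, convolved with the HRF, one value per scan."""
    dt = tr / microtime
    n_fine = n_scans * microtime
    box = [0.0] * n_fine
    for onset in onsets_s:
        start = max(0, int(round(onset / dt)))
        stop = min(n_fine, int(round((onset + duration_s) / dt)))
        for k in range(start, stop):
            box[k] = 1.0
    hrf = double_gamma_hrf(dt)
    conv = [0.0] * n_fine
    for k, b in enumerate(box):
        if b == 0.0:
            continue
        for j, hv in enumerate(hrf[: n_fine - k]):  # truncate at the end of the run
            conv[k + j] += b * hv
    # Sample at the start of each scan (SPM instead samples at a chosen reference bin).
    return [conv[s * microtime] for s in range(n_scans)]


# One hypothetical 14-s animation starting at t = 0, TR = 1.6 s, 40 scans.
regressor = boxcar_regressor([0.0], 14.0, n_scans=40, tr=1.6)
print([round(v, 2) for v in regressor[:12]])
```

The predicted response rises over the first few seconds of the animation, plateaus, and falls back with a small undershoot after the 14-s period ends; one such column per condition would enter the design matrix.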

A priori regions of interest (ROIs) were selected on the basis of areas activated by emotion, social perception and social cognition. Within these ROIs, we applied small volume correction (SVC) for multiple comparisons at P < 0.05 (family-wise error), following an initial threshold of P < 0.05 uncorrected. The amygdala, basal ganglia, posterior and anterior cingulate, insula, postcentral gyrus, inferior and medial orbitofrontal cortex (OBFC) and hypothalamus were defined using structural templates derived by automated anatomical labelling (Tzourio-Mazoyer et al., 2002). Spherical ROIs (10-mm radius) were created to sample the lateral fusiform gyrus (FG) (centres −42, −49, −19 and 40, −48, −16), dorsal medial prefrontal cortex (DMPFC) (−4, 60, 32 and 7, 55, 28) and ventral medial prefrontal cortex (VMPFC) (±2, 48, −12); each centre was computed as the average of the activation coordinates reported for that region across previous imaging studies of animate motion (Castelli et al., 2000, 2002; Martin and Weisberg, 2003; Ohnishi et al., 2004; Schultz et al., 2003). For the superior temporal sulcus (STS), we used the coordinates (±54, −34, 4) from Schultz et al. (2004); for the TPs, (±44, 14, −27) from Rogers et al. (2006); for the premotor cortex, (±54, 5, 40) from Grèzes et al. (2007); and for the periaqueductal grey (PAG), (2, −32, −24) from Bartels and Zeki (2004). In addition, for completeness, we report all activations in the vicinity of our ROIs that survived an uncorrected threshold of P < 0.001 with a minimum cluster size of five voxels.
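The construction of the spherical ROIs can be illustrated with a short sketch: a centre is taken as the mean of previously reported peaks, and every grid point within 10 mm of it is included. This assumes a 2-mm grid whose points lie on multiples of 2 mm from the coordinate origin (consistent with the 2-mm resampling described above) and works in millimetre coordinates rather than voxel indices; the peak list in the usage line is hypothetical.

```python
import math


def average_coordinate(coords):
    """Mean of a list of (x, y, z) peak coordinates, in mm."""
    n = len(coords)
    return tuple(sum(c[i] for c in coords) / n for i in range(3))


def sphere_points(centre_mm, radius_mm=10.0, voxel_mm=2.0):
    """Grid points (mm) lying within `radius_mm` of `centre_mm`.

    Assumes grid points sit on integer multiples of `voxel_mm` on each axis.
    """
    cx, cy, cz = centre_mm
    r = radius_mm

    def axis(c):
        lo = math.floor((c - r) / voxel_mm)
        hi = math.ceil((c + r) / voxel_mm)
        return [k * voxel_mm for k in range(lo, hi + 1)]

    return [
        (x, y, z)
        for x in axis(cx) for y in axis(cy) for z in axis(cz)
        if (x - cx) ** 2 + (y - cy) ** 2 + (z - cz) ** 2 <= r * r
    ]


# Hypothetical peaks from two earlier studies, averaged into one ROI centre.
centre = average_coordinate([(-40, -50, -20), (-44, -48, -18)])
roi = sphere_points(centre)
print(centre, len(roi))
```

A real pipeline would usually build the same mask on the image grid via its affine matrix; the millimetre version is shown only because it makes the 10-mm criterion explicit.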

For anatomical labelling, activation coordinates were transformed into the Talairach and Tournoux coordinate system using an automated non-linear transform (Brett et al., 2001) and labelled with reference to the Talairach Daemon database (http://www.talairach.org) and the atlas of Talairach and Tournoux (1988). For visualisation, group maps are overlaid on the ICBM 152 structural template, an average T1-weighted image of 152 individuals co-registered to MNI space. Activations are reported as (x, y, z) coordinates in Talairach (not MNI) space.
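The MNI-to-Talairach conversion attributed to Brett et al. is usually implemented as the `mni2tal` transform, which applies one linear transform above the AC-PC plane (MNI z ≥ 0) and a slightly different one below it. A sketch of that widely circulated transform follows, assuming it is the variant used here; other conversions (e.g. Lancaster's icbm2tal) give somewhat different coordinates.

```python
import math


def mni_to_talairach(x, y, z):
    """Brett's piecewise-linear MNI -> Talairach approximation (mni2tal).

    A 0.05-rad rotation about the x axis combined with zooms of 0.99 (x),
    0.97 (y) and 0.92 (z >= 0) or 0.84 (z < 0).
    """
    zoom_z = 0.92 if z >= 0 else 0.84
    c, s = math.cos(0.05), math.sin(0.05)
    tx = 0.99 * x
    ty = c * 0.97 * y + s * zoom_z * z
    tz = -s * 0.97 * y + c * zoom_z * z
    return tx, ty, tz


# Above the AC-PC plane the coefficients round to the commonly quoted
# X' = 0.9900X, Y' = 0.9688Y + 0.0460Z, Z' = -0.0485Y + 0.9189Z.
print([round(v, 2) for v in mni_to_talairach(10, 20, 30)])
```

Because the two branches differ only in the z zoom, the largest discrepancies between MNI and reported Talairach coordinates arise for ventral structures such as the temporal poles and amygdala.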

RESULTS

Film ratings and post-film probe questions

Post-scan visual analogue scale (VAS) valence ratings confirmed that the films were perceived as intended (affiliative, positive; antagonistic, negative; neutral, neutral): affiliative mean = 1.3, s.d. = 0.50; antagonistic mean = 9.7, s.d. = 0.60; neutral mean = 5.8, s.d. = 0.21 (all in cm; lower values indicate more positive valence). For emotional intensity, the antagonistic animations were rated the most intense of the three, followed at some distance by the affiliative animations, while the neutral animations were judged to be of low intensity (affiliative mean = 5.2, s.d. = 2.97; antagonistic mean = 8.9, s.d. = 1.00; neutral mean = 2.7, s.d. = 1.76). All these intensity differences were significant [affiliative vs antagonistic: t(15) = −7.88, P < 0.001; affiliative vs neutral: t(15) = 4.13, P < 0.001; antagonistic vs neutral: t(15) = 16.62, P < 0.001]. LEAS scores were not correlated with intensity or valence ratings.
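The reported 15 degrees of freedom for 16 participants are consistent with paired (within-participant) t-tests. A minimal sketch of the paired t statistic follows, assuming each participant contributes one mean rating per film category; the p-value would then be read from the t distribution with n − 1 degrees of freedom (e.g. via a statistics library), and the numbers in the test are made up.

```python
import math
from statistics import mean, stdev


def paired_t(a, b):
    """Paired-samples t statistic and degrees of freedom for equal-length samples a, b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [x - y for x, y in zip(a, b)]
    se = stdev(diffs) / math.sqrt(len(diffs))  # standard error of the mean difference
    return mean(diffs) / se, len(diffs) - 1
```

A negative t, as for affiliative vs antagonistic intensity, simply means the first condition received the lower mean rating.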

Participants gave the expected response to 92% of the post-film probe statements, and error rates were comparable across the affiliative, antagonistic and neutral films (χ² = 2.9, df = 2, P = 0.24), across the two viewing tasks (behavioural, spatial; χ² = 0.072, df = 1, P = 0.79) and across the two response options (true, false; χ² = 0.13, df = 1, P = 0.72). The high accuracy in both task conditions indicates that participants attended to the cued aspects of the animations, i.e. that the attention manipulation was successful. Error rates were unrelated to LEAS scores.

fMRI data

Cross-participant results

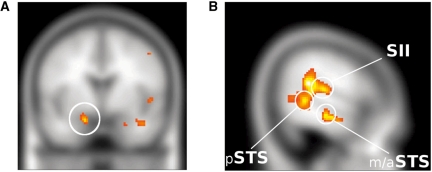

Main effect of emotion—For the contrast of antagonistic and affiliative vs neutral animations, collapsed across task (behavioural vs spatial), we found increased activity in the right secondary somatosensory cortex (SII) at P < 0.05 whole-brain corrected (with a similar trend in left SII), and at P < 0.05 SVC in the left and right amygdala, hypothalamus, left and right lateral fusiform gyrus, right inferior orbitofrontal gyrus, right precentral gyrus (with a similar trend in left precentral gyrus), left postcentral gyrus, and left and right STS (Figure 2). There was also trend-level activation of the right insula. In addition, at an uncorrected P < 0.001 and a cluster extent of five voxels, there was activation in the dorsomedial prefrontal cortex and in the right TP (Table 1).

Fig. 2.

Main effect of emotional content of animations. (A) Activation in the left amygdala. (B) Activation in the right posterior STS (pSTS), SII and mid/anterior STS extending into the temporal pole.

Table 1.

Main effect of emotional content of animations

| Brain region | Hemisphere | Talairach coordinates (x, y, z) | Cluster size (voxels) | Z-score | P |
|---|---|---|---|---|---|
| P < 0.05, whole-brain corrected | | | | | |
| SII | R | 57, −34, 18 | 29 | 5.51 | 0.005 |
| P < 0.05, small volume corrected (SVC) | | | | | |
| Amygdala | L | −18, −1, −17 | 193 | 4.06 | 0.002 |
| | R | 24, −1, −20 | 215 | 3.19 | 0.026 |
| Hypothalamus | L | −2, −6, −11 | 97 | 3.45 | 0.033 |
| | R | 0, −6, −11 | 132 | 3.23 | 0.058 |
| Lateral fusiform gyrus | L | −42, −40, −18 | 144 | 3.84 | 0.011 |
| | R | 48, −47, −14 | 162 | 3.89 | 0.009 |
| Inferior orbitofrontal gyrus | R | 46, 32, −12 | 474 | 3.75 | 0.031 |
| Precentral gyrus | R | 50, 0, 46 | 328 | 3.85 | 0.044 |
| Postcentral gyrus | L | −59, −19, 18 | 107 | 4.36 | 0.01 |
| Superior temporal sulcus | L | −53, −30, 13 | 198 | 4.3 | 0.002 |
| | R | 55, −39, 4 | 468 | 4.64 | 0.001 |
| Insula | R | 50, 0, 0 | 99 | 3.5 | 0.067 |
| P < 0.001, uncorrected | | | | | |
| Medial frontal gyrus | R | 10, 49, 42 | 7 | 3.54 | <0.001 |
| Temporal pole | R | 38, 1, −20 | 254 | 3.63 | <0.001 |

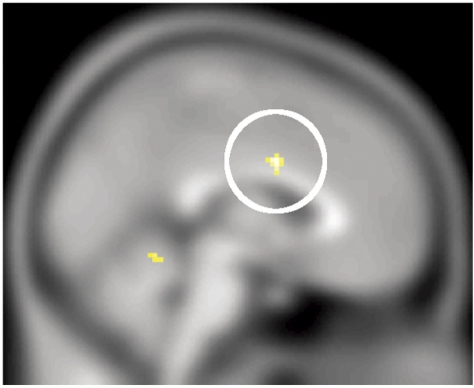

Effect of emotion: amplification by social attention—For the contrast of antagonistic and affiliative vs neutral animations, for the behavioural vs spatial task, we found increased activity in the anterior cingulate cortex (0, 3, 27, Z = 3.6, Psvc = 0.037) (Figure 3). In addition, at an uncorrected P < 0.001 and a cluster extent of five voxels, there was activation in the following ROIs: dorsomedial prefrontal cortex (−14, 44, 27, Z = 3.66), left insula (−46, −1, 13, Z = 3.33), left postcentral gyrus (−48, −23, 38, Z = 3.58) and right SII (51, −30, 20, Z = 3.48).

Fig. 3.

Dorsal ACC activation for the interaction between emotional content and selective attention.

Antagonistic vs affiliative animations—At P < 0.05 whole-brain corrected, activations were seen in early visual cortex (−6, −99, 9, Z = 5.74; 12, −90, 21, Z = 5.28), and at P < 0.05 SVC in the right STS (51, −29, 2, Z = 3.82, Psvc = 0.011) and right postcentral gyrus (28, −42, 51, Z = 4.07, Psvc = 0.024). At an uncorrected P < 0.001 and an extent threshold of five voxels, there was also activation in left SII (−55, −34, 18, Z = 3.25).

Antagonistic vs affiliative animations: amplification by social attention—For the contrast of antagonistic vs affiliative animations, for the behavioural vs spatial task, there was increased activity in the medial orbitofrontal gyrus (12, 32, −12, Z = 3.75, Psvc = 0.015).

Affiliative vs antagonistic animations—There were no areas showing significantly increased activation for the contrast of affiliative vs antagonistic animations.

Affiliative vs antagonistic animations: amplification by social attention—The contrast of affiliative vs antagonistic animations, for the behavioural vs spatial task, did, however, reveal trend-level differences in the left putamen (−20, 14, 9, Z = 3.38, Psvc = 0.051) and right inferior orbitofrontal gyrus (32, 32, −13, Z = 3.55, Psvc = 0.052).

Correlations with levels of emotional awareness

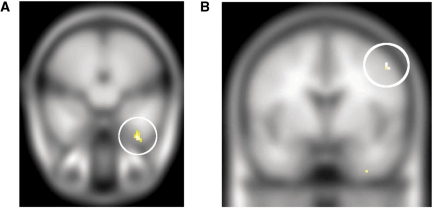

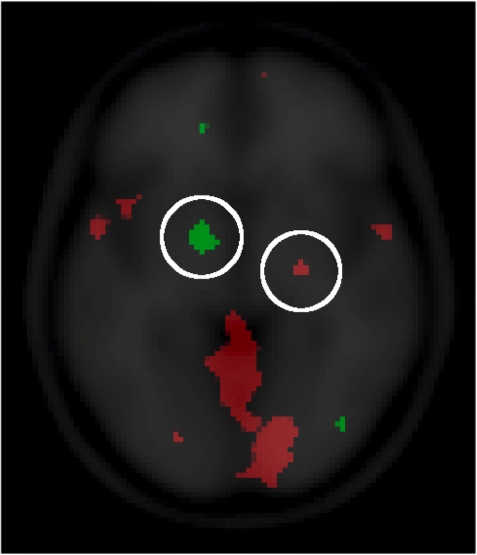

Main effect of emotion—For the main effect of emotion, activity in the left TP correlated positively with LEAS (−36, 16, −26, Z = 3.54, Psvc = 0.027) (Figure 4). In addition, negative correlations (i.e. increased activation with lower LEAS) were seen in the right precentral gyrus (51, 2, 40, Z = 3.55, Psvc = 0.027) and, at trend level, in the hypothalamus (0, −2, −10, Z = 3.12, Psvc = 0.078).
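The LEAS analyses amount to a voxel-wise simple regression of each participant's first-level contrast value on her LEAS score: a positive slope means that the emotion effect grows with emotional awareness, a negative slope that it shrinks. The sketch below performs that regression for a single voxel, assuming one contrast value per participant; it omits the small volume correction applied across voxels, and the function name and test values are hypothetical.

```python
import math
from statistics import mean


def regress_on_leas(contrast, leas):
    """Slope, Pearson r and t (df = n - 2) for contrast values regressed on LEAS scores."""
    n = len(contrast)
    if n != len(leas) or n < 3:
        raise ValueError("need one contrast value per participant and at least 3 participants")
    mx, my = mean(leas), mean(contrast)
    sxx = sum((x - mx) ** 2 for x in leas)
    syy = sum((y - my) ** 2 for y in contrast)
    sxy = sum((x - mx) * (y - my) for x, y in zip(leas, contrast))
    slope = sxy / sxx
    r = sxy / math.sqrt(sxx * syy)
    if abs(r) >= 1.0:
        return slope, r, math.copysign(math.inf, r)  # perfect fit: t is unbounded
    t = r * math.sqrt((n - 2) / (1.0 - r * r))       # t-test of the slope against zero
    return slope, r, t
```

With 16 participants, the resulting t would be evaluated on 14 degrees of freedom before any small volume correction.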

Fig. 4.

Increased levels of emotional awareness are associated with increased left temporal polar activation (A), and decreased levels of emotional awareness are associated with increased right premotor activation (B) whilst viewing emotional relative to neutral animations.

Effect of emotion: amplification by social attention—No significant positive correlations were seen for this contrast. There were significant negative correlations with LEAS for this contrast in the globus pallidus (28, −8, −3, Z = 2.99, Psvc = 0.043) and periaqueductal gray (6, −34, −15, Z = 2.96, Psvc = 0.034), a trend-level negative correlation in the right STS (50, −37, 7, Z = 3.25, Psvc = 0.051) and, at an uncorrected P < 0.001 and a cluster extent of five voxels, negative correlations in the left insula (−40, −6, 12, Z = 3.32), right postcentral gyrus (54, −18, 44, Z = 3.74), left STS (−56, −24, 0, Z = 3.39) and left SII (−58, −16, 26, Z = 3.35). That is, with higher levels of emotional awareness, the effect of social attention in these regions was reduced.

Antagonistic vs affiliative animations—For the contrast of antagonistic with affiliative animations, higher LEAS was associated with increased activity in the left globus pallidus/hypothalamus (−14, −2, −2, Z = 3.63, Psvc = 0.008) and, at trend level, in the left anterior cingulate (−12, 43, 2, Z = 3.23). This latter finding is consistent with McRae et al. (2008), who found that LEAS scores in women (but not men) were positively correlated with anterior cingulate activity to high-arousal, but not low-arousal, emotional images.

Antagonistic vs affiliative animations: amplification by social attention—No significant correlations were seen for this contrast.

Affiliative vs antagonistic animations—For the contrast of affiliative with antagonistic animations, higher LEAS was correlated with increased activity in the insula bilaterally (−36, −1, −13, Z = 3.95, Psvc = 0.019; 48, 2, 2, Z = 3.68, Psvc = 0.041) and the periaqueductal gray (8, −36, −20, Z = 3.33, Psvc = 0.045). In addition, at an uncorrected P < 0.001 and a cluster extent of five voxels, LEAS correlated positively with activity in the globus pallidus for this contrast (24, −16, −4, Z = 3.49). Notably, this pallidal correlation for affiliative animations lay more posterior than the pallidal correlation for antagonistic animations (Figure 5). An analogous anterior–posterior division in the pallidum has been observed in the rat brain (Smith and Berridge, 2005; see Discussion section). At the same threshold, positive correlations were also seen in the cingulate gyrus (−2, 25, 30, Z = 3.44) and posterior cingulate (2, −41, 4, Z = 3.44).

Fig. 5.

In red, the correlation of LEAS with the contrast affiliative vs antagonistic animations (social and spatial tasks). In green, the correlation of LEAS with the contrast antagonistic vs affiliative animations (social and spatial tasks). Highlighted with the white circles are the left and right pallidum, respectively.

Affiliative vs antagonistic animations: amplification by social attention—For the contrast of affiliative vs antagonistic animations under social relative to spatial attention, LEAS correlated positively with activity in the periaqueductal gray (0, −34, −17, Z = 3.01, Psvc = 0.031).

DISCUSSION

To locate the neural structures involved in processing emotion from motion dynamics, and to examine how these were influenced by selective attention and levels of emotional awareness, we had healthy volunteers view animations of affiliative, antagonistic and neutral interactions. Our findings suggest that neural representation of emotion is critically dependent on individual differences in emotional complexity.

Brain regions sensitive to emotion (antagonistic and affiliative vs neutral interactions)

According to Michotte (1950), the detection of emotion is grounded in the analysis of motion cues, e.g. approach, avoidance, contact intensity. Consistent with this, we found that participants could extract not just emotional valence (±) and arousal, but complex social meanings from our simple animations. Regardless of task, viewing emotional animations, relative to neutral animations, resulted in activation across a distributed set of neural structures implicated in processing emotions and social intentions from facial, vocal and bodily expressions, and in the representation of one's own emotions (amygdala, lateral FG, posterior STS, SII, OBFC, premotor cortex, TP, hypothalamus, insula and DMPFC) (Frith and Frith, 2003; Heberlein and Adolphs, 2007; Kober et al., 2008; Heberlein and Atkinson, 2009). Our results refine previous findings that lesions encompassing, but not restricted to, the amygdala (Heberlein and Adolphs, 2004) and somatosensory cortices (Heberlein et al., 2003) result in a diminished use of affective and social words to describe the original Heider and Simmel animation.

Interaction between social attention and emotion

The influence of attention on the neural processing of emotion cues is a key issue (Adolphs, 2008). We manipulated attention by directly cueing participants to attend to social or spatial attributes of the sprites' motion. Attention to social meaning resulted in increased activity in the dorsal ACC, together with SII, insula and DMPFC. Previous studies have shown heightened activity in these regions when attending to facial expressions. These include the dACC (Vuilleumier et al., 2001), which might supervise 'top–down' allocation of attention to salient emotional and social stimuli (Peers and Lawrence, 2009); SII and insula (Winston et al., 2003), which are involved in processing the somatic and visceral aspects of one's own and others' emotions (Damasio et al., 2000; Heberlein and Adolphs, 2007) and lesions to which impair explicit emotion recognition (Adolphs et al., 2000, 2002; Heberlein et al., 2004; Pitcher et al., 2008); and the DMPFC, which is involved in processing the affective meanings that generate emotions by the cognitive route (Teasdale et al., 1999; Ochsner et al., 2009) and in mental state attribution, or theory of mind, more generally (Amodio and Frith, 2006; Frith and Frith, 2003; Moriguchi et al., 2006; van Overwalle, 2009).

Brain regions sensitive to social interaction type (antagonistic vs affiliative)

Compared with affiliative animations, antagonistic animations activated bilateral early visual cortex (BA 17–19), right pSTS and right postcentral gyrus. This is consistent with previous studies of dynamic facial and bodily expression processing (Grosbras and Paus, 2006; Grèzes et al., 2007; Kilts et al., 2003; Pelphrey et al., 2007; Sato et al., 2004). These activations might reflect differences between the animations in motion content (the circles in the antagonistic animations moved consistently more, and faster) or in emotional valence or arousal (in the post-scanning behavioural ratings, the antagonistic animations were rated as more emotionally intense). Early visual areas, pSTS and premotor cortex are all sensitive to biological motion (Casile et al., 2009; Jastorff and Orban, 2009; Pelphrey et al., 2003), as well as to emotional valence and arousal (Lane et al., 1999; Surguladze et al., 2003). The relative contributions of kinematics and arousal may be impossible to separate: consistent with Michotte’s (1950) proposals, the kinematic properties (e.g. velocity) of biological motion stimuli relate very directly to perceivers’ ratings of arousal (Aronoff, 2006; Chouchourelou et al., 2006; Ikeda and Watanabe, 2009; Pavlova et al., 2005; Pollick et al., 2001; Roether et al., 2009). We found no increase in amygdala activity for antagonistic relative to affiliative films. This contrasts with some studies (Carter and Pelphrey, 2008; Sinke et al., 2010), but is consistent with lesion work (Heberlein and Adolphs, 2004), and likely relates to the complexity of the affiliative interactions or to the lack of imminent threat to the viewer in our antagonistic interactions, which were viewed ‘from above’ (Sinke et al., 2010).

Interaction between social attention and social interaction type

Under social attention, we observed differential activation of the OBFC: the antagonistic animations activated medial OBFC, whereas the affiliative animations activated an inferior and more lateral OBFC region. This finding is consistent with a recent study reporting that increasingly negative word valence was associated with increased activity in medial OBFC/subgenual cingulate, whereas increasingly positive valence was associated with increased activity in lateral OBFC (Lewis et al., 2006). It is unlikely, however, that these differences simply reflect differences in emotional valence (±) between the films, since a meta-analysis by Kringelbach and Rolls (2004) showed that both medial and lateral OBFC can code rewarding (i.e. positive valence) and punishing (i.e. negative valence) information. Rather, the OBFC differences may relate to a more specific aspect of the type of social interaction (antagonistic vs affiliative) and its implications for the viewer. For example, Mobbs et al. (2007) found that medial OBFC/subgenual cingulate was specifically activated by remote rather than imminent threats. By contrast, Nitschke et al. (2004) found that lateral OBFC was activated when mothers viewed their own infants (a specifically affiliative condition), and Sollberger et al. (2009) found that, in individuals with neurodegenerative diseases, alterations in warm, affiliative personality traits, but not in other interpersonal traits, correlated with structural changes in lateral orbitofrontal cortex. The specific nature of emotion coding in medial vs lateral OBFC, which form distinct neural networks (Price, 2007), is an important topic for future investigation.

Correlations with LEAS

LEAS and brain regions responsive to emotional content

Lane and Schwartz (1987) proposed that a person’s ability to recognise and describe emotion in oneself and others, termed emotional awareness or complexity, undergoes a five-stage structural development. A fundamental tenet of this model is that individual differences in emotional awareness reflect variations in the degree and differentiation of the ‘schema’ used to process emotional information. The first two stages of development, bodily sensations and action tendencies, are ‘action-oriented’ stages of emotion processing. The three remaining stages (single emotions, blends of emotion and combinations of blends) are associated with more highly differentiated emotional knowledge for self and others (Lane, 2000, 2008; Lane and Pollermann, 2002), that is, with propositional (e.g. conceptual) knowledge of emotion (Lindquist and Barrett, 2008). In our study, for the main effect of emotion (i.e. independent of attentional set), LEAS scores correlated positively with activity in the left TP, and negatively with activity in the hypothalamus and the right precentral gyrus. Left TP activity has been associated with detailed emotional perspective taking (Derntl et al., 2010; Ruby and Decety, 2004), with narrative and story processing (Maguire et al., 1999) and, more generally, with the specificity of retrieved semantic knowledge (Grabowski et al., 2001; Rogers et al., 2006), as part of a semantic ‘hub’ (Patterson et al., 2007). Burnett et al. (2009) found that left TP activity during the processing of social emotions increased from adolescence to adulthood, consistent with the increasing refinement of emotion categories during development (Widen and Russell, 2008). Thus, consistent with LEAS theory, greater emotional complexity is linked to greater neural coding of emotion in the semantic system.

Conversely, lower emotional complexity was associated with increased activity in the hypothalamus, a visceromotor structure (Thompson and Swanson, 2003), and in premotor cortex; this peak (Z = 40) fell within ventral premotor cortex (Tomassini et al., 2007). This region has been identified as a key node in a putative human ‘mirror neuron system’ (Morin and Grèzes, 2008), is implicated in goal-directed action observation (Rizzolatti and Craighero, 2004), and was activated in previous studies of the perception and production of emotional actions (Grèzes et al., 2007; Grosbras and Paus, 2006; Hennenlotter et al., 2005; Leslie et al., 2004; van der Gaag et al., 2007; Warren et al., 2006; Zaki et al., 2009). The negative correlation with LEAS suggests, again consistent with Lane’s model of emotional awareness, that low LEAS is associated with a greater tendency to encode emotional information in an action-oriented and visceral fashion. Because the recruitment of these motor and visceromotor regions during emotion perception varied with emotional complexity, these findings place critical constraints on ‘motor’ theories of empathy (Gallese et al., 2004; Pfeifer et al., 2008; Montgomery et al., 2009).
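The LEAS correlations above are between-participant analyses. As a minimal sketch, and not the authors’ actual analysis pipeline (which this section does not describe), the Python below assumes one contrast estimate per participant and condition for a single region (the hypothetical lists `emotional` and `neutral`), computes each participant’s main effect of emotion as emotional minus neutral, and returns its Pearson correlation with LEAS score. A positive r would correspond to the left TP pattern, a negative r to the hypothalamic and premotor pattern.

```python
import math
from typing import List, Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equal-length samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("samples must be of equal length, with at least 2 values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a sample is constant")
    return cov / math.sqrt(var_x * var_y)


def emotion_effect(emotional: Sequence[float], neutral: Sequence[float]) -> List[float]:
    """Per-participant main effect of emotion: emotional minus neutral estimate."""
    if len(emotional) != len(neutral):
        raise ValueError("need one estimate per participant in each condition")
    return [e - n for e, n in zip(emotional, neutral)]


def leas_emotion_correlation(leas: Sequence[float],
                             emotional: Sequence[float],
                             neutral: Sequence[float]) -> float:
    """Correlate LEAS scores with each participant's emotion effect in one region."""
    return pearson_r(leas, emotion_effect(emotional, neutral))
```

In a whole-brain analysis, such a correlation would typically be assessed at every voxel (for example, with LEAS entered as a second-level covariate) with correction for multiple comparisons. The sketch covers one region only.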

LEAS, social attention and social interaction type

Higher LEAS scores did not predict greater activity in any region during the social vs spatial attention condition. Rather, LEAS score correlated negatively with the amplification of activity by social attention in several cortical (STS, insula, SII) and subcortical (globus pallidus, periaqueductal gray) regions. That is, the attentional amplification of emotional content in STS and insula was attenuated in individuals with greater emotional complexity, presumably because heightened emotional complexity is associated with a greater default tendency to attend to emotional and social information, which reduces the impact of an instructed attentional set (Ciarrochi et al., 2003, 2005). The finding that LEAS score correlated positively with activity in a region linked to detailed semantic processing (left TP) regardless of task is also consistent with this suggestion. Ongoing work is examining the extent to which individual differences in emotional complexity influence patterns of brain activity during explicit retrieval of detailed emotional knowledge.
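The attenuated amplification described above concerns an interaction: how much larger the emotion effect is under social than under spatial attention. A minimal sketch, again assuming one hypothetical contrast estimate per participant, condition and region, and not the authors’ actual pipeline, computes this index as (emotional minus neutral under social attention) minus (emotional minus neutral under spatial attention) and correlates it with LEAS. A negative r means that amplification shrinks as LEAS increases, as reported for STS and insula.

```python
import math
from typing import List, Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equal-length samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("samples must be of equal length, with at least 2 values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a sample is constant")
    return cov / math.sqrt(var_x * var_y)


def amplification_index(emo_social: Sequence[float], neu_social: Sequence[float],
                        emo_spatial: Sequence[float], neu_spatial: Sequence[float]) -> List[float]:
    """Per-participant attention x emotion interaction (hypothetical estimates)."""
    n = len(emo_social)
    if not (len(neu_social) == len(emo_spatial) == len(neu_spatial) == n):
        raise ValueError("need one estimate per participant in each condition")
    return [(soc_e - soc_n) - (spa_e - spa_n)
            for soc_e, soc_n, spa_e, spa_n
            in zip(emo_social, neu_social, emo_spatial, neu_spatial)]


def leas_amplification_correlation(leas: Sequence[float],
                                   emo_social: Sequence[float], neu_social: Sequence[float],
                                   emo_spatial: Sequence[float], neu_spatial: Sequence[float]) -> float:
    """Negative values: less attentional amplification at higher LEAS."""
    index = amplification_index(emo_social, neu_social, emo_spatial, neu_spatial)
    return pearson_r(leas, index)
```

Taking the difference of differences isolates the part of the emotion effect that depends on attentional set, so a region whose emotion response is equally strong under both tasks contributes an index near zero regardless of its overall level of activity.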

Increased emotional differentiation, as indexed by higher LEAS scores, was accompanied by increased neural differentiation between the positive and negative animations within the pallidum: activation to antagonistic animations correlated positively with LEAS in the anterior pallidum, whereas activation to affiliative animations correlated positively with LEAS in the posterior pallidum. Smith and Berridge (2005) found evidence for an anterior–posterior ‘hedonic gradient’ in the rat pallidum reflecting increased ‘liking’ of foods. Our findings suggest that, with increasing LEAS, emotions of different valence become more differentiated in regions coding basic ‘building blocks’ of emotional valence. This accords with the hierarchical organisation of levels of emotional awareness theory, in which functioning at each level adds to and modifies the functioning of previous levels (Lane, 2000, 2008). The greater differences between affiliative and antagonistic animations with increasing LEAS score in regions implicated in processing affiliative signals (the insula; Hennenlotter et al., 2005) and basic affiliative behaviours (PAG; Bartels and Zeki, 2004; Lonstein and Stern, 1997) are also consistent with Lane’s theory, and with the finding that higher LEAS scores are associated with heightened interpersonal closeness (Lumley et al., 2005).

In conclusion, simple motion cues alone communicate rich information about emotional interactions and trigger widespread activity across a range of structures implicated in processing emotion and social information. Activity in regions including dACC was modulated by selective attention to social meaning. Critically, individual differences in emotional complexity profoundly influenced the neural coding of such cues and the effect of attention on that coding, as predicted by Lane’s model of Levels of Emotional Awareness (Lane, 2000; Lane et al., 1990). Higher levels of emotional awareness predicted greater semantic coding of emotion, whereas lower levels predicted visceral and action-oriented coding. If extended and confirmed, results of this type could help explain why the effects of lesions to specific regions on emotion processing vary across individuals. Indeed, future fMRI studies in this domain would benefit from routinely including measures of individual differences in emotional complexity.

Acknowledgments

P.T. would like to thank Fundação para a Ciência e Tecnologia (Portugal) for their support in the form of a fellowship (PRAXISXXI/BD/21369/1999). P.B.’s involvement in this research was funded under MRC project code U.1055.02.003.00001.01. A.L. is funded by the Wales Institute of Cognitive Neuroscience.

REFERENCES

- Adolphs R. Fear, faces, and the human amygdala. Current Opinion in Neurobiology. 2008;18:166–72. doi: 10.1016/j.conb.2008.06.006.

- Adolphs R, Damasio H, Tranel D. Neural systems for recognition of emotional prosody: a 3-D lesion study. Emotion. 2002;2:23–51. doi: 10.1037/1528-3542.2.1.23.

- Adolphs R, Damasio H, Tranel D, Cooper G, Damasio A. A role for somatosensory cortices in the visual recognition of emotion as revealed by three-dimensional lesion mapping. Journal of Neuroscience. 2000;20:2683–2690. doi: 10.1523/JNEUROSCI.20-07-02683.2000.

- Amodio DM, Frith CD. Meeting of minds: the medial frontal cortex and social cognition. Nature Reviews Neuroscience. 2006;7:268–77. doi: 10.1038/nrn1884.

- Aronoff J. How we recognize angry and happy emotion in people, places and things. Cross-Cultural Research. 2006;40:83–105.

- Baldwin DA, Baird JA. Discerning intentions in dynamic human action. Trends in Cognitive Sciences. 2001;5:171–8. doi: 10.1016/s1364-6613(00)01615-6.

- Barchard KA, Bajgar J, Leaf DE, Lane RD. Computer scoring of the Levels of Emotional Awareness Scale. Behavior Research Methods. In press. doi: 10.3758/BRM.42.2.586.

- Barrett LF, Lane RD, Sechrest L, Schwartz GE. Sex differences in emotional awareness. Personality and Social Psychology Bulletin. 2000;26:1027–35.

- Bartels A, Zeki S. The neural correlates of maternal and romantic love. Neuroimage. 2004;21:1155–66. doi: 10.1016/j.neuroimage.2003.11.003.

- Brett M, Christoff K, Cusack R, Lancaster J. Using the Talairach atlas with the MNI template. Neuroimage. 2001;13:S85.

- Brett M, Leff A, Rorden C, Ashburner J. Spatial normalization of brain images with focal lesions using cost function masking. Neuroimage. 2001;14:486–500. doi: 10.1006/nimg.2001.0845.

- Burnett S, Bird G, Moll J, Frith C, Blakemore SJ. Development during adolescence of the neural processing of social emotion. Journal of Cognitive Neuroscience. 2009;21:1736–50. doi: 10.1162/jocn.2009.21121.

- Carter EJ, Pelphrey KA. Friend or foe? Brain systems involved in the perception of dynamic signals of menacing and friendly social approaches. Social Neuroscience. 2008;3:151–63. doi: 10.1080/17470910801903431.

- Casile A, Dayan E, Caggiano V, Hendler T, Flash T, Giese M. Neuronal encoding of human kinematic invariants during action observation. Cerebral Cortex. 2009 Nov 20 [Epub ahead of print]. doi: 10.1093/cercor/bhp229.

- Castelli F, Frith C, Happé F, Frith U. Autism, Asperger Syndrome and brain mechanisms for the attribution of mental states to animated shapes. Brain. 2002;125:1839–49. doi: 10.1093/brain/awf189.

- Castelli F, Happé F, Frith U, Frith C. Movement and mind: a functional imaging study of perception and interpretation of complex intentional movement patterns. Neuroimage. 2000;12:314–32. doi: 10.1006/nimg.2000.0612.

- Chouchourelou A, Matsuka T, Harber K, Shiffrar M. The visual analysis of emotional actions. Social Neuroscience. 2006;1:63–74. doi: 10.1080/17470910600630599.

- Ciarrochi J, Caputi P, Mayer JD. The distinctiveness and utility of a measure of trait emotional awareness. Personality and Individual Differences. 2003;34:1477–1490.

- Ciarrochi J, Hynes K, Crittenden N. Can men do better if they try harder? Sex and motivational effects on emotional awareness. Cognition and Emotion. 2005;19:133–41.

- Cusack R, Brett M, Osswald K. An evaluation of the use of magnetic field maps to undistort echo-planar images. Neuroimage. 2003;18:127–42. doi: 10.1006/nimg.2002.1281.

- Damasio A, Grabowski T, Bechara A, et al. Subcortical and cortical brain activity during the feeling of self-generated emotions. Nature Neuroscience. 2000;3:1049–56. doi: 10.1038/79871.

- Darwin C. The Expression of the Emotions in Man and Animals. London: John Murray; 1872.

- Derntl B, Finkelmeyer A, Eickhoff S, et al. Multidimensional assessment of empathic abilities: neural correlates and gender differences. Psychoneuroendocrinology. 2010;35:67–82. doi: 10.1016/j.psyneuen.2009.10.006.

- Frith U, Frith C. Development and neurophysiology of mentalizing. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences. 2003;358:459–73. doi: 10.1098/rstb.2002.1218.

- Gallese V, Keysers C, Rizzolatti G. A unifying view of the basis of social cognition. Trends in Cognitive Sciences. 2004;8:396–403. doi: 10.1016/j.tics.2004.07.002.

- Grabowski TJ, Damasio H, Tranel D, Ponto LL, Hichwa RD, Damasio A. A role for left temporal pole in the retrieval of words for unique entities. Human Brain Mapping. 2001;13:199–212. doi: 10.1002/hbm.1033.

- Grèzes J, Pichon S, de Gelder B. Perceiving fear in dynamic body expressions. Neuroimage. 2007;35:959–67. doi: 10.1016/j.neuroimage.2006.11.030.

- Grosbras MH, Paus T. Brain networks involved in viewing angry hands or faces. Cerebral Cortex. 2006;16:1087–96. doi: 10.1093/cercor/bhj050.

- Heberlein AS, Adolphs R. Impaired spontaneous anthropomorphising despite intact perception and social knowledge. Proceedings of the National Academy of Sciences of the United States of America. 2004;101:7487–91. doi: 10.1073/pnas.0308220101.

- Heberlein AS, Adolphs R. Neurobiology of emotion recognition: current evidence for shared substrates. In: Harmon-Jones E, Winkielman P, editors. Social Neuroscience: Integrating Biological and Psychological Explanations of Social Behavior. New York: Guilford Press; 2007. pp. 31–55.

- Heberlein AS, Adolphs R, Tranel D, Damasio H. Cortical regions for judgements of emotions and personality traits from point-light walkers. Journal of Cognitive Neuroscience. 2004;16:1143–58. doi: 10.1162/0898929041920423.

- Heberlein AS, Atkinson AP. Neuroscientific evidence for simulation and shared substrates in emotion recognition: beyond faces. Emotion Review. 2009;1:162–77.

- Heberlein AS, Pennebaker JW, Tranel D, Adolphs R. Effects of damage to right-hemisphere brain structures on spontaneous emotional and social judgments. Political Psychology. 2003;24:705–26.

- Hennenlotter A, Schroeder U, Erhard P, et al. A common neural basis for receptive and expressive communication of pleasant facial affect. Neuroimage. 2005;26:581–91. doi: 10.1016/j.neuroimage.2005.01.057.

- Ikeda H, Watanabe K. Anger and happiness are linked differently to the explicit detection of biological motion. Perception. 2009;38:1002–11. doi: 10.1068/p6250.

- Jastorff J, Orban GA. Human functional magnetic resonance imaging reveals separation and integration of shape and motion cues in biological motion processing. Journal of Neuroscience. 2009;29:7315–29. doi: 10.1523/JNEUROSCI.4870-08.2009.

- Kang SM, Shaver PR. Individual differences in emotional complexity: their psychological implications. Journal of Personality. 2004;72:687–726. doi: 10.1111/j.0022-3506.2004.00277.x.

- Kilts CD, Egan G, Gideon DA, Ely TD, Hoffman JM. Dissociable neural pathways are involved in the recognition of emotion in static and dynamic facial expressions. Neuroimage. 2003;18:156–68. doi: 10.1006/nimg.2002.1323.

- Kober H, Barrett LF, Joseph J, Bliss-Moreau E, Lindquist K, Wager TD. Functional grouping and cortical-subcortical interactions in emotion: a meta-analysis of neuroimaging studies. Neuroimage. 2008;42:998–1031. doi: 10.1016/j.neuroimage.2008.03.059.

- Kringelbach ML, Rolls ET. The functional neuroanatomy of the human orbitofrontal cortex: evidence from neuroimaging and neuropsychology. Progress in Neurobiology. 2004;72:341–72. doi: 10.1016/j.pneurobio.2004.03.006.

- Lane RD. Neural correlates of conscious emotional experience. In: Lane RD, Nadel L, editors. Cognitive Neuroscience of Emotion. New York: Oxford University Press; 2000. pp. 345–70.

- Lane RD. Neural substrates of implicit and explicit emotional processes: a unifying framework for psychosomatic medicine. Psychosomatic Medicine. 2008;70:214–31. doi: 10.1097/PSY.0b013e3181647e44.

- Lane RD, Chua PM, Dolan RJ. Common effects of emotional valence, arousal and attention on neural activation during visual processing of pictures. Neuropsychologia. 1999;37:989–97. doi: 10.1016/s0028-3932(99)00017-2.

- Lane RD, Kivley LS, Du Bois A, Shamasundara P, Schwartz GE. Levels of emotional awareness and the degree of right hemispheric dominance in the perception of facial emotion. Neuropsychologia. 1995;33:525–38. doi: 10.1016/0028-3932(94)00131-8.

- Lane RD, Pollermann B. Complexity of emotion representations. In: Barrett LF, Salovey P, editors. The Wisdom in Feeling: Psychological Processes in Emotional Intelligence. New York: Guilford Press; 2002. pp. 271–94.

- Lane RD, Quinlan DM, Schwartz GE, Walker PA, Zeitlin SB. The levels of emotional awareness scale: a cognitive-developmental measure of emotion. Journal of Personality Assessment. 1990;55:124–34. doi: 10.1080/00223891.1990.9674052.

- Lane RD, Schwartz GE. Levels of emotional awareness: a cognitive-developmental theory and its application to psychopathology. American Journal of Psychiatry. 1987;144:133–43. doi: 10.1176/ajp.144.2.133.

- Lane RD, Sechrest L, Riedel R. Sociodemographic correlates of alexithymia. Comprehensive Psychiatry. 1998;39:377–85. doi: 10.1016/s0010-440x(98)90051-7.

- Lane RD, Sechrest L, Riedel R, Shapiro D, Kaszniak A. Pervasive emotion recognition deficit common to alexithymia and the repressive coping style. Psychosomatic Medicine. 2000;62:492–501. doi: 10.1097/00006842-200007000-00007.

- Lane RD, Sechrest L, Reidel R, Weldon V, Kaszniak A, Schwartz GE. Impaired verbal and nonverbal emotion recognition in alexithymia. Psychosomatic Medicine. 1996;58:203–10. doi: 10.1097/00006842-199605000-00002.

- Leslie KR, Johnson-Frey SH, Grafton ST. Functional imaging of face and hand imitation: towards a motor theory of empathy. Neuroimage. 2004;21:601–7. doi: 10.1016/j.neuroimage.2003.09.038.

- Lewis PA, Critchley HD, Rotshtein P, Dolan RJ. Neural correlates of processing valence and arousal in affective words. Cerebral Cortex. 2006;17:742–48. doi: 10.1093/cercor/bhk024.

- Lindquist KA, Barrett LF. Emotional complexity. In: Lewis M, Haviland-Jones JM, Barrett LF, editors. Handbook of Emotions. New York: Guilford Press; 2008. pp. 513–30.

- Lonstein JS, Stern JM. Role of the midbrain periaqueductal gray in maternal nurturance and aggression: c-fos and electrolytic lesion studies in lactating rats. Journal of Neuroscience. 1997;17:3364–78. doi: 10.1523/JNEUROSCI.17-09-03364.1997.

- Lumley MA, Gustavson BJ, Partridge RT, Labouvie-Vief G. Assessing alexithymia and related emotional ability constructs using multiple methods: interrelationships among measures. Emotion. 2005;5:329–42. doi: 10.1037/1528-3542.5.3.329.

- Maguire EA, Frith CD, Morris RGM. The functional neuroanatomy of comprehension and memory: the importance of prior knowledge. Brain. 1999;122:1839–50. doi: 10.1093/brain/122.10.1839.

- Marshall SK, Cohen AJ. Effects of musical soundtracks on attitudes toward animated geometric figures. Music Perception. 1988;6:95–112.

- Martin A, Weisberg J. Neural foundations for understanding social and mechanical concepts. Cognitive Neuropsychology. 2003;20:575–87. doi: 10.1080/02643290342000005.

- McRae K, Reiman EM, Fort CL, Chen K, Lane RD. Association between trait emotional awareness and dorsal anterior cingulate activity during emotion is arousal-dependent. Neuroimage. 2008;41:648–55. doi: 10.1016/j.neuroimage.2008.02.030.

- Michotte A. The emotions regarded as functional connections. In: Reymert ML, editor. Feelings and Emotions: the Mooseheart Symposium. New York: McGraw-Hill; 1950. pp. 114–25.

- Mobbs D, Petrovic P, Marchant JL, et al. When fear is near: threat imminence elicits prefrontal-periaqueductal gray shifts in humans. Science. 2007;317:1079–83. doi: 10.1126/science.1144298.

- Montgomery KJ, Seeherman KR, Haxby JV. The well-tempered social brain. Psychological Science. 2009;20:1211–3. doi: 10.1111/j.1467-9280.2009.02428.x.

- Moriguchi Y, Ohnishi T, Lane RD, et al. Impaired self-awareness and theory of mind: an fMRI study of mentalizing in alexithymia. Neuroimage. 2006;32:1472–82. doi: 10.1016/j.neuroimage.2006.04.186.

- Morin O, Grèzes J. What is “mirror” in the premotor cortex? A review. Clinical Neurophysiology. 2008;38:189–95. doi: 10.1016/j.neucli.2008.02.005.

- Murphy F, Nimmo-Smith I, Lawrence AD. Functional neuroanatomy of emotions: a meta-analysis. Cognitive, Affective and Behavioral Neuroscience. 2003;3:207–33. doi: 10.3758/cabn.3.3.207.

- Nitschke JB, Nelson EE, Rusch BD, Fox AS, Oakes TR, Davidson RJ. Orbitofrontal cortex tracks positive mood in mothers viewing pictures of their newborn infants. Neuroimage. 2004;21:583–92. doi: 10.1016/j.neuroimage.2003.10.005.

- Ochsner K, Ray RR, Hughes B, et al. Bottom-up and top-down processes in emotion generation: common and distinct neural mechanisms. Psychological Science. 2009;20:1322–31. doi: 10.1111/j.1467-9280.2009.02459.x.

- Ohnishi T, Moriguchi Y, Matsuda H, et al. The neural network for the mirror system and mentalizing in normally developed children: an fMRI study. Neuroreport. 2004;15:1483–7. doi: 10.1097/01.wnr.0000127464.17770.1f.

- Patterson K, Nestor PJ, Rogers TT. Where do you know what you know? The representation of semantic knowledge in the human brain. Nature Reviews Neuroscience. 2007;8:976–87. doi: 10.1038/nrn2277.

- Pavlova M, Sokolov AA, Sokolov A. Perceived dynamics of static images enables emotional attribution. Perception. 2005;34:1107–16. doi: 10.1068/p5400.

- Peers PV, Lawrence AD. Attentional control of emotional distraction in rapid serial visual presentation. Emotion. 2009;9:140–5. doi: 10.1037/a0014507.

- Pelphrey KA, Mitchell TV, McKeown MJ, Goldstein J, Allison T, McCarthy G. Brain activity evoked by the perception of human walking: controlling for meaningful coherent motion. Journal of Neuroscience. 2003;23:6819–25. doi: 10.1523/JNEUROSCI.23-17-06819.2003.

- Pelphrey KA, Morris JP, McCarthy G, LaBar KS. Perception of dynamic changes in facial affect and identity in autism. Social Cognitive and Affective Neuroscience. 2007;2:140–9. doi: 10.1093/scan/nsm010.

- Pfeifer JH, Iacoboni M, Mazziotta JC, Dapretto M. Mirroring others’ emotions relates to empathy and interpersonal competence in children. Neuroimage. 2008;39:2076–85. doi: 10.1016/j.neuroimage.2007.10.032.

- Pitcher D, Garrido L, Walsh V, Duchaine BC. Transcranial magnetic stimulation disrupts the perception and embodiment of facial expressions. Journal of Neuroscience. 2008;28:8929–33. doi: 10.1523/JNEUROSCI.1450-08.2008.

- Pollick FE, Paterson HM, Bruderlin A, Sanford AJ. Perceiving affect from arm movement. Cognition. 2001;82:B51–61. doi: 10.1016/s0010-0277(01)00147-0.

- Premack D, Premack A. Infants attribute value +/- to the goal-directed actions of self-propelled objects. Journal of Cognitive Neuroscience. 1997;9:848–56. doi: 10.1162/jocn.1997.9.6.848.

- Price JL. Definition of the orbital cortex in relation to specific connections with limbic and visceral structures and other cortical regions. Annals of the New York Academy of Sciences. 2007;1121:54–71. doi: 10.1196/annals.1401.008.

- Ramponi C, Barnard PJ, Nimmo-Smith I. Recollection deficits in dysphoric mood: an effect of schematic models and executive mode? Memory. 2004;12:655–70. doi: 10.1080/09658210344000189.

- Rimé B, Boulanger B, Laubin P, Richir M, Stroobant K. The perception of interpersonal emotions originated by patterns of movement. Motivation and Emotion. 1985;9:241–60.

- Rizzolatti G, Craighero L. The mirror-neuron system. Annual Review of Neuroscience. 2004;27:169–92. doi: 10.1146/annurev.neuro.27.070203.144230.

- Roether CL, Omlor L, Christensen A, Giese MA. Critical features for the perception of emotion from gait. Journal of Vision. 2009;9:1–32. doi: 10.1167/9.6.15.

- Rogers TT, Hocking J, Noppeney U, et al. Anterior temporal cortex and semantic memory: reconciling findings from neuropsychology and functional imaging. Cognitive, Affective and Behavioral Neuroscience. 2006;6:201–13. doi: 10.3758/cabn.6.3.201.

- Romero GA, Cannon MB, Bartlett JC, Potts BT, Barchard KA. The relationship between verbal ability and levels of emotional awareness. Paper presented at the Western Psychological Association Annual Convention, Irvine, CA; 2008.

- Ruby P, Decety J. How would you feel versus how do you think she would feel? A neuroimaging study of perspective-taking with social emotions. Journal of Cognitive Neuroscience. 2004;16:988–99. doi: 10.1162/0898929041502661.

- Sato W, Kochiyama T, Yoshikawa S, Naito E, Matsumura M. Enhanced neural activity in response to dynamic facial expressions of emotion: an fMRI study. Brain Research. Cognitive Brain Research. 2004;20:81–91. doi: 10.1016/j.cogbrainres.2004.01.008.

- Schultz RT, Grelotti DJ, Klin A, et al. The role of the fusiform face area in social cognition: implications for the pathobiology of autism. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences. 2003;358:415–27. doi: 10.1098/rstb.2002.1208.

- Schultz J, Imamizu H, Kawato M, Frith CD. Activation of the human superior temporal gyrus during observation of goal attribution by intentional objects. Journal of Cognitive Neuroscience. 2004;16:1695–705. doi: 10.1162/0898929042947874.

- Sinke CB, Sorger B, Goebel R, de Gelder B. Tease or threat? Judging social interactions from bodily expressions. Neuroimage. 2010;49:1717–27. doi: 10.1016/j.neuroimage.2009.09.065.

- Smith KS, Berridge KC. The ventral pallidum and hedonic reward: neurochemical maps of sucrose "liking" and food intake. Journal of Neuroscience. 2005;25:8637–49. doi: 10.1523/JNEUROSCI.1902-05.2005.

- Sollberger M, Stanley CM, Wilson SM, et al. Neural basis of interpersonal traits in neurodegenerative diseases. Neuropsychologia. 2009;47:2812–27. doi: 10.1016/j.neuropsychologia.2009.06.006.

- Surguladze SA, Brammer MJ, Young AW, et al. A preferential increase in the extrastriate response to signals of danger. Neuroimage. 2003;19:1317–28. doi: 10.1016/s1053-8119(03)00085-5.

- Suslow T, Donges US, Kersting A, Arolt V. 20-item Toronto Alexithymia Scale: do difficulties describing feelings assess proneness to shame instead of difficulties symbolizing emotions? Scandinavian Journal of Psychology. 2000;41:329–34. doi: 10.1111/1467-9450.00205.

- Talairach J, Tournoux P. Co-planar Atlas of the Human Brain: 3-Dimensional Proportional System – An Approach to Cerebral Imaging. New York: Thieme Medical Publishers; 1988. [Google Scholar]

- Teasdale J, Howard R, Cox S, et al. Functional MRI study of the cognitive generation of affect. American Journal of Psychiatry. 1999;156:209–15. doi: 10.1176/ajp.156.2.209.

- Thompson RH, Swanson LW. Structural characterization of a hypothalamic visceromotor pattern generator network. Brain Research. Brain Research Reviews. 2003;41:153–202. doi: 10.1016/s0165-0173(02)00232-1.

- Tomassini V, Jbabdi S, Klein JC, et al. Diffusion-weighted imaging tractography-based parcellation of the human lateral premotor cortex identifies dorsal and ventral subregions with anatomical and functional specializations. Journal of Neuroscience. 2007;27:10259–69. doi: 10.1523/JNEUROSCI.2144-07.2007.

- Tzourio-Mazoyer N, Landeau B, Papathanassiou D, et al. Automated anatomical labeling of activation in SPM using a macroscopic parcellation of the MNI MRI single-subject brain. Neuroimage. 2002;15:273–89. doi: 10.1006/nimg.2001.0978.

- Van der Gaag C, Minderaa RB, Keysers C. Facial expressions: what the mirror neuron system can and cannot tell us. Social Neuroscience. 2007;2:179–222. doi: 10.1080/17470910701376878.

- Van Overwalle F. Social cognition and the brain: a meta-analysis. Human Brain Mapping. 2009;30:829–58. doi: 10.1002/hbm.20547.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron. 2001;30:829–41. doi: 10.1016/s0896-6273(01)00328-2.

- Vytal K, Hamann S. Neuroimaging support for discrete neural correlates of basic emotions: a voxel-based meta-analysis. Journal of Cognitive Neuroscience. 2009 Nov 25 [Epub ahead of print]. doi: 10.1162/jocn.2009.21366.

- Warren JE, Sauter DA, Eisner F, et al. Positive emotions preferentially engage an auditory-motor mirror system. Journal of Neuroscience. 2006;26:13067–75. doi: 10.1523/JNEUROSCI.3907-06.2006.

- Widen SC, Russell JA. Children acquire emotion categories gradually. Cognitive Development. 2008;23:291–312.

- Winston JS, O'Doherty J, Dolan RJ. Common and distinct neural responses during direct and incidental processing of multiple facial emotions. Neuroimage. 2003;20:84–97. doi: 10.1016/s1053-8119(03)00303-3.

- Zaki J, Weber J, Bolger N, Ochsner K. The neural bases of empathic accuracy. Proceedings of the National Academy of Sciences of the United States of America. 2009;106:11382–7. doi: 10.1073/pnas.0902666106.