Summary

Prolonged viewing of a stimulus results in a subsequent perceptual bias [1], [2] and [3]. This perceptual adaptation and the resulting aftereffect reveal important characteristics regarding how perceptual systems are tuned [2], [4], [5] and [6]. These aftereffects occur not only for simple stimulus features but also for high-level stimulus properties [7], [8], [9] and [10]. Here we report a novel cross-category adaptation aftereffect demonstrating that prolonged viewing of a human body without a face shifts the perceptual tuning curve for face gender and face identity. This contradicts a central assumption underlying perceptual adaptation: that adaptation depends on physical similarity between how the adapting and the adapted features are perceived [5]. Additionally, this aftereffect was not due to response bias, because its dependence on adaptation duration resembled traditional perceptual aftereffects. These body-to-face adaptation results demonstrate that bodies alone can alter the tuning properties of neurons that code for the gender and identity of faces. More generally, these results reveal that high-level perceptual adaptation can occur when the property or features being adapted are automatically inferred rather than perceived in the adapting stimulus.

Results

It has been known since the time of Aristotle that prolonged viewing of a stimulus causes a subsequent perceptual bias [1]. For example, after viewing a moving stimulus, a subsequent stationary stimulus appears to drift in the opposite direction. This perceptual adaptation, and its subsequent contrastive aftereffect, reflects the process of neural systems rapidly and dynamically recalibrating their responses to more efficiently react to current conditions [4], [5] and [11]. Perceptual adaptation is thought to reflect the recalibration of the tuning of neurons sensitive to the properties of the adapting stimulus as a result of prolonged activation of these neurons [2] and [6]. The aftereffect is the measurable result of perceptual changes induced by this recalibration of neural tuning.

It was long thought that only simple stimulus features could be adapted, such as motion and color. However, recent results demonstrate that adaptation occurs for high-level stimulus properties as well, such as object shape [9], perceived numerosity [7], and face properties [8], [10], [12], [13], [14] and [15]. For example, prolonged viewing of a male face can make subsequently seen faces appear more feminine than they normally would [10]. A similar type of perceptual aftereffect occurs when judging the identity of a face [8] and [12].

These face adaptation aftereffects have typically been interpreted as revealing a “face space” in the brain that represents the visual features, and their configuration, used to determine face gender and identity. A central premise arising from this interpretation is that the neurons adapted by this behavioral technique, i.e., the neurons that represent the face space, respond selectively to faces and not other objects [8] and [16]. This premise is based on a central property of adaptation: perceptual adaptation depends on the similarity between the perception of the features in the adapting stimulus and features that are adapted [5]. (It is worth noting that neither adaptation to an illusory stimulus [e.g., illusory contours] nor adaptation to an imagined stimulus contradicts this property of adaptation. This is because in both of these cases, the features in the adapting stimulus and features that are adapted are perceived as similar.) Here we present data that challenge the premise that neurons that represent the face space respond exclusively to faces by demonstrating that face aftereffects result from adaptation to nonface stimuli.

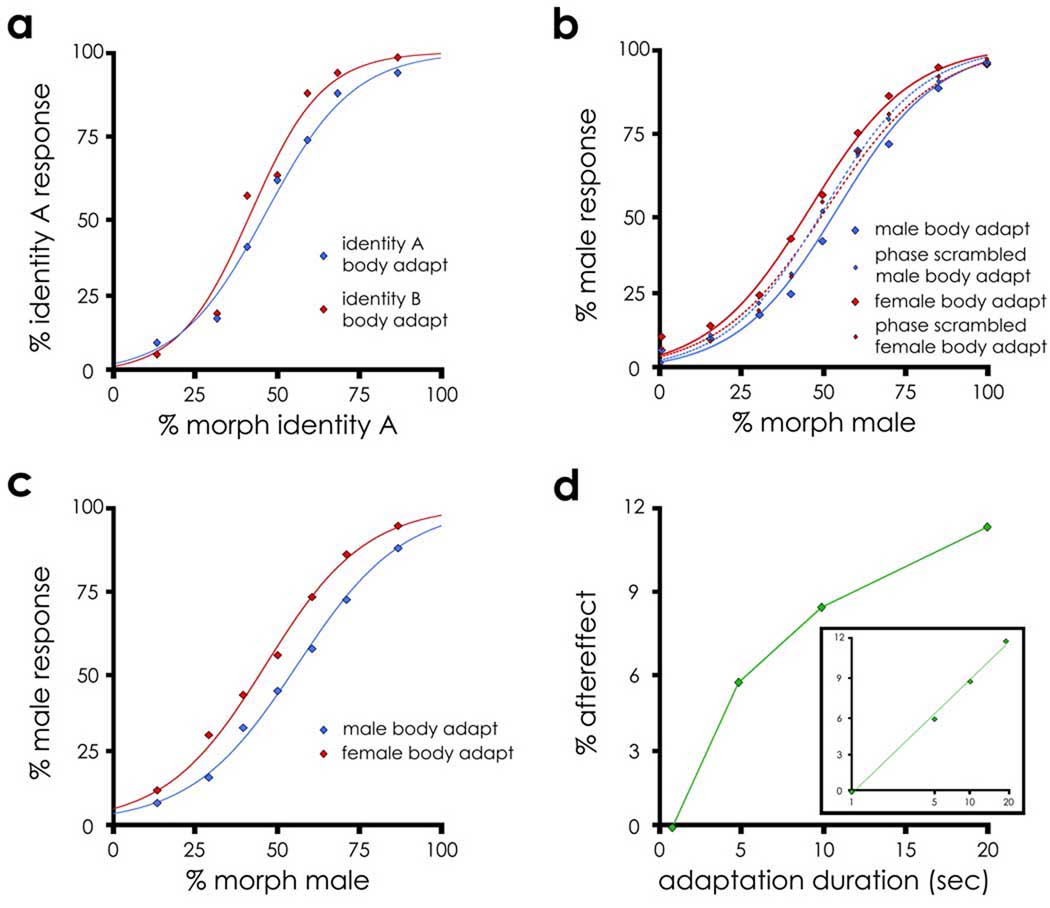

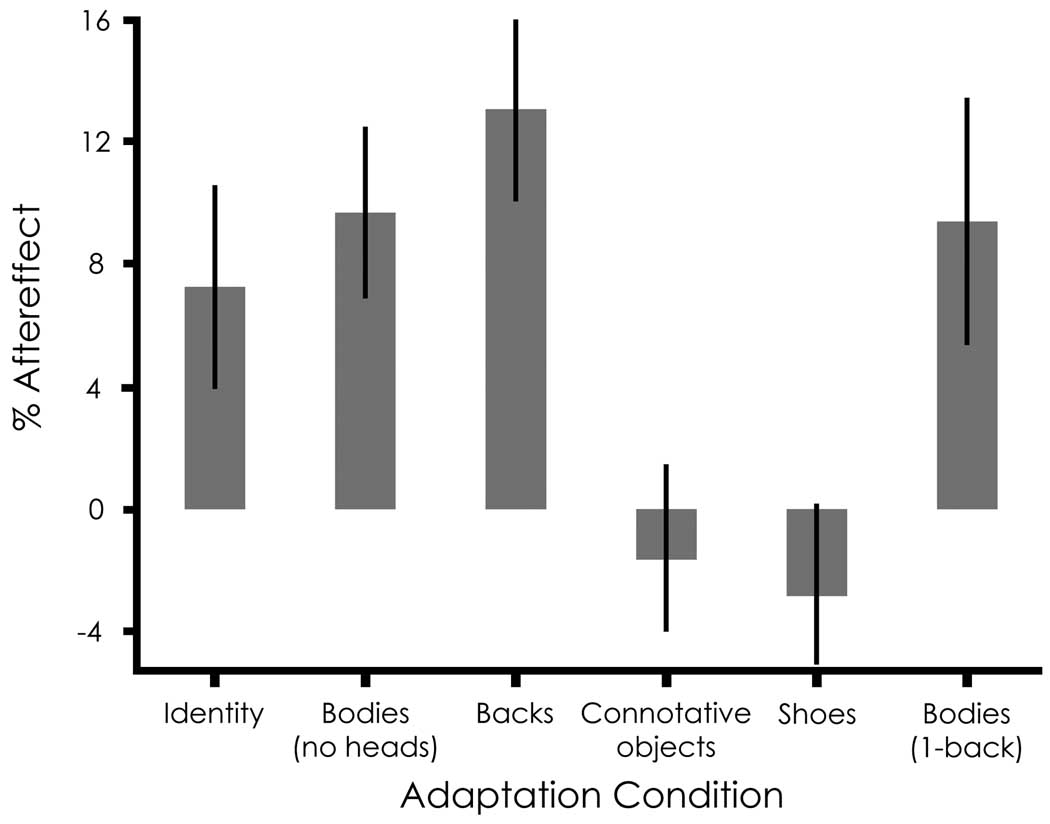

In experiment 1, subjects were trained to identify two individuals by studying photographs that included their faces and bodies (Figure 1A). Following training, subjects were asked to identify faces created by morphing along a continuum between the two previously learned individuals (Figure 1B). Prior to each face identification trial, subjects saw a photograph of only the body of one of the individuals for 5 s (Figure 1C). We found that after adapting to the body of one individual, perception was biased such that the morphed faces were more likely to be judged as depicting the other, nonadapted individual (Figure 2A). The magnitude of this aftereffect was 7.9% (Figure 3; main effect of adaptation condition, F(1,11) = 4.89, p = 0.049). This change in the perceived identity of the faces is consistent with a shift in the sensitivity to features of the face associated with the adapting body. This sensitivity shift, or renormalization [4] and [11], was similar to the aftereffect that would occur if subjects had been adapted to that individual's face (albeit to a lesser extent [8]). Thus, adaptation to a body altered the face space even though the adapting stimulus did not contain a face.

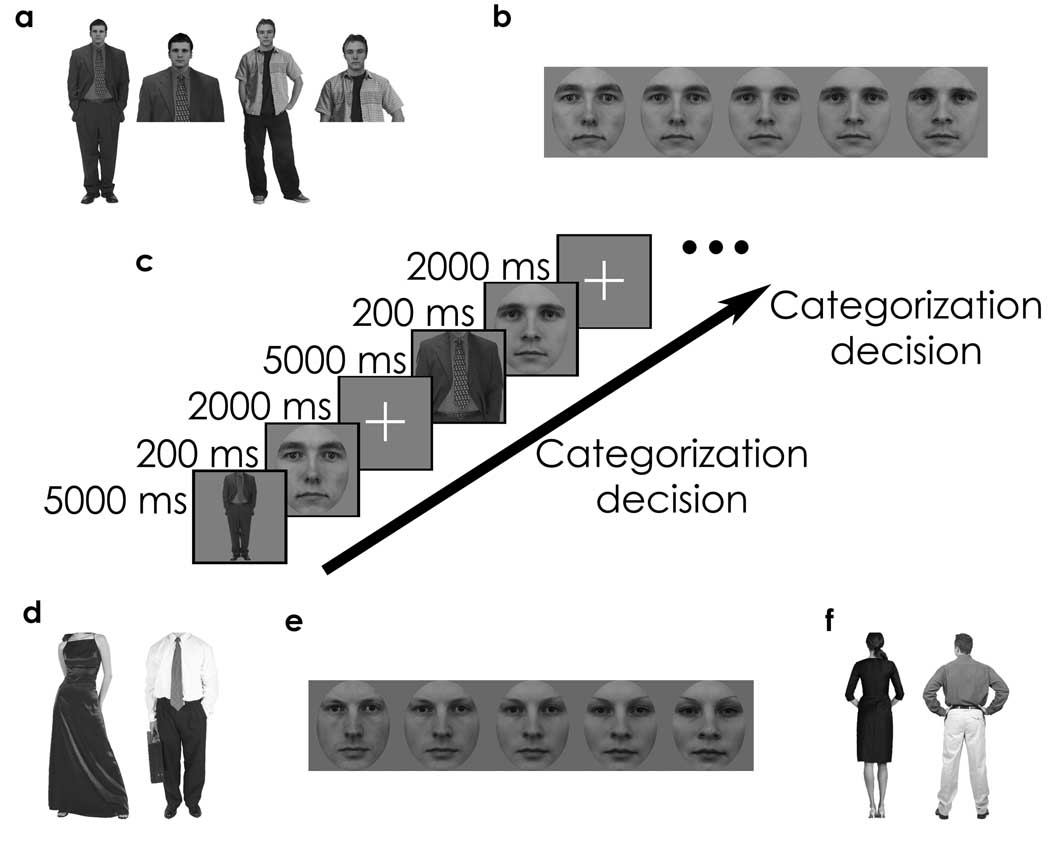

Figure 1. Examples of Stimuli and Paradigm.

(A) Training stimuli for the body-to-face identity adaptation experiment (experiment 1). During the adaptation phase, the same images were cropped so that the face was not visible (see C).

(B) Examples of 0%, 25%, 50%, 75%, and 100% identity face morphs (experiment 1).

(C) Examples of two adaptation trial sequences. Each trial consisted of an adapting body presented for 5000 ms followed by a face presented for 200 ms. Subjects were asked to make an identity or gender decision of the face during the presentation of the fixation cross.

(D) Gender-specific bodies from the neck down (experiments 2A, 3, and 5).

(E) Examples of 0%, 25%, 50%, 75%, and 100% male-to-female face morphs (experiments 2A, 2B, 3, 4A, 4B, and 5).

(F) Gender-specific bodies photographed from behind (experiment 2B).

Figure 2. Prolonged Exposure to Bodies Adapts Face Perception.

(A) Face identity discrimination performance after adapting to bodies (experiment 1). Best-fit cumulative Gaussian curves are shown as well.

(B) Gender discrimination performance after adapting to headless male or female bodies and to their phase-scrambled control images (experiment 2A).

(C) Gender discrimination performance after adapting to back views of male or female bodies (experiment 2B).

(D) Magnitude of gender adaptation aftereffect with respect to adaptation duration shown on a linear scale and on a semilog scale (inset) (experiment 3).

Figure 3. Summary of Aftereffect Magnitude across Experiments.

Mean and standard error of aftereffects for each adaptation condition. Specifically, body-to-face identity adaptation (experiment 1) and the six gender adaptation experiments: body photographs from the neck down (experiment 2A), body photographs taken from behind (experiment 2B), connotative objects (experiment 4A), men's and women's shoes (experiment 4B) as adaptors, and body photographs from the neck down viewed while subjects performed a 1-back task (experiment 5). Evaluation of aftereffects with the point of subjective equality yielded the same results (see Table S1).
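The cumulative Gaussian fits in Figure 2 and the point of subjective equality (PSE) reported in Table S1 can be illustrated with a short sketch. The Python below is not the authors' analysis code. The response proportions are synthetic, the coarse grid search stands in for whatever fitting routine was actually used, the morph axis is assumed to run from 0% to 100% male, and expressing the aftereffect as the PSE difference between the two adaptation conditions is an assumption made for illustration.

```python
import math

# Morph levels of the target faces (assumed here to encode % male).
MORPH_LEVELS = [0, 25, 50, 75, 100]


def cum_gauss(x, mu, sigma):
    """Cumulative Gaussian: modeled proportion of 'male' responses at level x."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def fit_cum_gauss(levels, proportions):
    """Coarse least-squares grid search over (mu, sigma); mu is the PSE."""
    best_err, best_mu, best_sigma = float("inf"), None, None
    for i in range(0, 201):          # mu = 0.0, 0.5, ..., 100.0
        mu = i * 0.5
        for sigma in range(1, 61):   # sigma = 1, 2, ..., 60
            err = sum((cum_gauss(x, mu, sigma) - p) ** 2
                      for x, p in zip(levels, proportions))
            if err < best_err:
                best_err, best_mu, best_sigma = err, mu, float(sigma)
    return best_mu, best_sigma


# Synthetic (hypothetical) data: after adapting to a male body, faces look
# less male, so a higher morph level is needed to reach 50% 'male' responses.
after_male_body = [cum_gauss(x, 55.0, 15.0) for x in MORPH_LEVELS]
after_female_body = [cum_gauss(x, 45.0, 15.0) for x in MORPH_LEVELS]

pse_male, _ = fit_cum_gauss(MORPH_LEVELS, after_male_body)
pse_female, _ = fit_cum_gauss(MORPH_LEVELS, after_female_body)

# Assumed aftereffect measure: PSE shift between conditions, in morph units.
pse_shift = pse_male - pse_female
print(f"PSE (male body): {pse_male}, PSE (female body): {pse_female}, "
      f"shift: {pse_shift}")
```

A contrastive aftereffect appears in this sketch as a positive PSE shift: the psychometric curve moves away from the gender of the adapting body.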

One possible explanation for this result is that seeing a body evoked a highly detailed mental image of the specific face that subjects had learned to associate with that body. To address this possibility, we examined adaptation via a paradigm that did not require subjects to learn an association between particular bodies and faces. Specifically, we asked whether the gender of a body would influence the perceived gender of a subsequently viewed face. Subjects viewed photographs of headless male or female bodies for 5 s (experiment 2A; Figure 1D). In addition, phase-scrambled images of male or female bodies were used to provide neutral adaptation control conditions. We then measured whether the perceived gender of faces (Figure 1E) was altered depending on whether subjects had been adapted to male or female bodies. Similar to the body-to-face identity adaptation effect described above, face perception was significantly biased away from the gender of the adapting body. The magnitude of this aftereffect was 9.7% (Figure 2B; Figure 3; main effect of adaptation condition, F(1,11) = 11.76, p = 0.006). This shift suggests that viewing bodies adapts the perception of face-specific visual features and their configuration, in this case adapting the perception of the facial properties involved in determining gender, even when there was no specific face in memory associated with the adapting body. No aftereffect was seen for male and female phase-scrambled bodies (Figure 2B; aftereffect magnitude = −0.72%, main effect of adaptation condition, F(1,11) < 1.0). In addition, to test whether body-to-face adaptation was due to face information being conspicuously cropped out of the adapting photographs, the same paradigm was repeated with photographs of the back view of males and females (including the back of the head; experiment 2B; Figure 1F). Again, face perception was biased away from the gender of the adapted body (Figure 2C; Figure 3; aftereffect magnitude = 13.06%, main effect of adaptation condition, F(1,11) = 21.36, p < 0.001). Taken together, these results suggest that bodies without visible faces can adapt neurons that represent face-specific visual features, even when there is no learned association between those bodies and any specific face.

A critical issue is whether these aftereffects were truly a result of perceptual adaptation or whether they were due to some other, perhaps strategic, process that could produce a response or decision bias that was not perceptual in origin. A defining characteristic of perceptual adaptation is that the magnitude of adaptation builds up logarithmically with longer exposure to the adapting stimulus [3], [17], [18] and [19]. The magnitude of a strategic process, on the other hand, is unlikely to be logarithmically related to the exposure duration of the adapting stimulus. Therefore, we repeated the gender body-to-face adaptation paradigm, except that the adapting bodies were viewed for 1, 5, 10, and 20 s and the male-body and female-body adaptation trials were randomly presented rather than being blocked by gender (experiment 3). Consistent with the perceptual adaptation account, the magnitude of the aftereffect increased with adaptation duration (F(3,33) = 3.14, p = 0.038), and the growth of the aftereffect across adaptation durations was well fit by a logarithmic function (Figure 2D). The logarithmic relationship between adaptation duration and the magnitude of the aftereffect strongly suggests that body-to-face adaptation leads to a genuine perceptual aftereffect whose temporal dynamics resemble those of the tilt or motion aftereffects [3], [17] and [18], as well as more standard face adaptation aftereffects [19].
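The logic of the duration test can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the four aftereffect magnitudes are hypothetical placeholders (the per-duration values are not given in the text), and comparing the R² of a fit against log duration with the R² of a fit against raw duration is one simple way to check for logarithmic buildup, not necessarily the procedure used for Figure 2D.

```python
import math


def linear_fit(xs, ys):
    """Ordinary least squares for y = a + b*x; returns (a, b, r_squared)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = my - b * mx
    ss_res = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - my) ** 2 for y in ys)
    return a, b, 1.0 - ss_res / ss_tot


durations_s = [1, 5, 10, 20]         # adaptation durations used in experiment 3
magnitudes = [3.0, 7.0, 9.0, 10.5]   # HYPOTHETICAL aftereffect magnitudes (%)

# Logarithmic model: magnitude = a + b * ln(duration).
a_log, b_log, r2_log = linear_fit([math.log(t) for t in durations_s], magnitudes)
# Linear model for comparison: magnitude = a + b * duration.
a_lin, b_lin, r2_lin = linear_fit(durations_s, magnitudes)

print(f"log model:    a={a_log:.2f}, b={b_log:.2f}, R^2={r2_log:.3f}")
print(f"linear model: a={a_lin:.2f}, b={b_lin:.2f}, R^2={r2_lin:.3f}")
```

With data that saturate as duration increases, the logarithmic model accounts for more variance than the linear one, which is the pattern the perceptual adaptation account predicts.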

One possible explanation for our findings is that bodies adapt a general, abstract representation of identity and/or gender that then biases face perception. To evaluate this possibility, we repeated the adaptation paradigm described above with photographs of gender-specific objects (football helmet, purse, etc. [20]; experiment 4A). If a nonspecific representation of gender is being adapted, then any gender-specific object should bias face gender perception, not just bodies. Prolonged exposure to gender-connotative pictures, however, failed to produce a face gender aftereffect (Figure 3; aftereffect magnitude = −1.64%, main effect of adaptation condition, F < 1.0). Moreover, a failure to elicit a gender-specific face aftereffect was also found when a single, strongly gender-biased object category (shoes) was used as the adapting stimulus (experiment 4B; Figure 3; aftereffect magnitude = −2.8%, main effect of adaptation condition, F(1,11) = 1.41, p = 0.24). These negative results occurred even though the subjects in these gender-biased object studies unanimously reported being aware that the adapting stimuli were gender specific. These results suggest that this face aftereffect was specific to body perception in experiments 2 and 3 rather than gender per se. Thus, it appears that cross-category face-gender adaptation is dependent on preexposure to stimuli for which gender is an intrinsic rather than a culturally determined property.

An additional question that remains is: could body-to-face adaptation simply be a by-product of subjects explicitly imagining a face when they viewed the bodies? Recent studies are mixed on whether explicit face imagery can induce face adaptation [21], [22] and [23]. Regardless, there are three points that make it unlikely that explicit imagery could explain body-to-face adaptation. First, in those previous studies, subjects were given a specific face to explicitly imagine. In our studies, subjects were asked to passively view a body for which there was no associated face (i.e., the adapting stimuli were unfamiliar bodies in experiments 2A, 2B, and 3). Second, we asked subjects in a postexperimental debrief whether they imagined a face when they saw the bodies. A small minority of subjects (5 out of 24 in experiments 2A and 2B) said that they were aware of occasionally imagining faces. However, the body-to-face adaptation results remained significant even when these subjects were excluded. Finally, we ran a control experiment (experiment 5) where subjects' attention and working memory were occupied to help suppress explicit imagery. In this experiment, we replicated the gender body-to-face adaptation experiment (experiment 2A), except that subjects viewed a series of male or female bodies during adaptation (instead of a single body shown for 5 s). Each body was shown for 950 ms, with a 50 ms blank screen presented between successive bodies during the adaptation period. Subjects performed a 1-back (repetition detection) task on these bodies. For this experiment (and experiment 3, which used the same task), no subject reported imagining a face during the presentation of the body. Nonetheless, significant body-to-face adaptation was seen (Figure 3; aftereffect magnitude = 8.4%, main effect of adaptation condition, F(1,11) = 6.93, p = 0.023). These results strongly suggest that body-to-face adaptation is not dependent on subjects explicitly imagining faces while viewing the bodies.

Discussion

What does body-to-face adaptation tell us about the architecture of the body- and face-processing systems? Perceptual aftereffects are assumed to occur because of changes in response properties of the neurons sensitive to the features of the adapted stimulus resulting from their sustained activation [2], [4], [5] and [11]. Body-to-face adaptation demonstrates that bodies alone can activate the network that codes for the visual information used to determine the gender and identity of faces.

There are two types of mechanisms that could account for this effect. One possibility is that viewing bodies may lead to a top-down activation of the neural representation of face-specific visual features and their configuration automatically inferred from the body. This activation leads to adaptation of the neural circuitry that processes these face-specific visual properties, through a similar mechanism as that used when viewing a face. Alternatively, visual information about bodies and faces is coded together in a single representational space in the brain, and this face-body space is adapted as a result of prolonged viewing of the body alone.

One possible neural locus of the body-to-face adaptation effect is in the fusiform gyrus. The fusiform gyrus contains both a brain region critical for face processing (the fusiform face area [FFA] [24] and [25]) and an abutting region involved in body processing (the fusiform body area [FBA] [26] and [27]). The close proximity of these body- and face-responsive regions may facilitate the lateral or top-down neural interactions required for body-to-face adaptation effects. However, the literature is mixed regarding whether the neural substrate that codes for face identity and gender is in the FFA or is downstream from the FFA (e.g., [28] and [29]). Therefore, it may be that these effects occur downstream of the FFA/FBA, reflect adaptation at multiple processing stages, and/or depend on interactions between multiple processing stages and brain regions [12], [19] and [30]. Regardless of its neural substrate, based on biophysical models of perceptual adaptation [2], [4], [5] and [11], bodies without faces activate, and adapt the tuning of, the neurons that underlie face, body, and gender perception.

Body-to-face adaptation demonstrates that high-level perceptual aftereffects can occur when the to-be-adapted features are not present in the adapting stimulus but rather are automatically inferred from the presentation of a conceptually related object. This aftereffect does not occur in response to any conceptual relationship. Instead, it seems to depend on the intrinsic relationship between bodies and faces. That is, the aftereffect is dependent on an inherent, rather than an arbitrary, association. In a more general sense, our findings provide compelling evidence for a central tenet of embodied cognition: the conceptual system is grounded in perception [31] and [32].

Experimental Procedures

Target face stimuli for all experiments were constructed from photographs of 18 male and 18 female frontal-view faces with neutral expressions from the Karolinska Directed Emotional Faces stimulus set [33]. Morpheus Photo Morpher was used to morph between pairs of male faces for experiment 1 (Figure 1B) and to create male-to-female face morph continua (Figure 1E) for all other experiments. Body (Figures 1D and 1F) and face stimuli were resized with Photoshop to best fill a gray square presented in the middle of the screen that subtended approximately 6.5° of visual angle. Photographs of gender-connotative objects (experiment 4A) were taken from a previously normed set [20]; shoe images (experiment 4B) were taken from the Internet.

For all experiments except experiments 3 and 5, each adaptation trial began with subjects viewing an adaptation image (a body or an object) for 5 s. Following adaptation, a target face was presented for 200 ms followed by a 2000 ms fixation cross in the center of the screen. Subjects made a two-alternative forced-choice response to classify the face as quickly and accurately as possible (Figure 1C). Each experimental session was divided into blocks of trials. The adaptation condition (i.e., gender or identity) was held constant in each block of trials with a 1 min break between blocks (except for experiment 3; see below).

In experiment 1, the adaptation trials were preceded by a training session. During training, subjects learned to identify two individuals by viewing images of their faces and bodies (Figure 1A) paired with their names.

In experiment 3, the duration of the adaptation period and the gender of the body varied in pseudorandom order from trial to trial. Adaptation durations were set at 1, 5, 10, and 20 s, during which the body photographs flashed on for 950 ms and off for 50 ms. During these adaptation periods, subjects performed a repetition-detection task via button press. Presentation conditions and task when viewing the morphed target faces were identical to the previously described procedure.

In experiment 5, the paradigm was identical to experiment 2A, except that the body photographs flashed on for 950 ms and off for 50 ms and the repetition-detection task was used, as in experiment 3.
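The adaptation stream used in experiments 3 and 5 can be sketched as a simple schedule generator. The on and off times (950 ms and 50 ms) and the adaptation durations come from the text; everything else in this Python sketch is a hypothetical choice made for illustration, including the function name `adaptation_stream`, the body identifiers, and the probability of a 1-back repeat, none of which are specified in this section.

```python
import random

ON_MS, OFF_MS = 950, 50  # from the text: body on for 950 ms, blank for 50 ms


def adaptation_stream(duration_s, body_ids, repeat_prob=0.2, rng=None):
    """Build one adaptation period as a list of timed body presentations.

    repeat_prob (hypothetical) is the chance that an item repeats the
    previous body, which is the event the 1-back task asks subjects to detect.
    body_ids must contain at least two distinct identifiers.
    """
    rng = rng or random.Random()
    n_items = (duration_s * 1000) // (ON_MS + OFF_MS)
    stream = []
    for i in range(n_items):
        previous = stream[-1]["body"] if stream else None
        if previous is not None and rng.random() < repeat_prob:
            body, is_repeat = previous, True
        else:
            # Pick any body other than the previous one, so repeats are
            # always deliberate and correctly flagged.
            body = rng.choice([b for b in body_ids if b != previous])
            is_repeat = False
        onset = i * (ON_MS + OFF_MS)
        stream.append({"body": body, "onset_ms": onset,
                       "offset_ms": onset + ON_MS, "is_repeat": is_repeat})
    return stream


# Example: a 5 s adaptation period drawn from three hypothetical male bodies.
example = adaptation_stream(5, ["male_01", "male_02", "male_03"],
                            rng=random.Random(0))
for item in example:
    print(item)
```

Because each presentation occupies 1000 ms, a 1 s adaptation period holds a single body and therefore cannot contain a repeat, whereas the 20 s period holds 20 presentations.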

See Supplemental Experimental Procedures available online for further details regarding the methods.

Acknowledgments

We thank D. Leopold and C. Baker for their insightful comments. This work was supported by the Intramural Program of the National Institutes of Health, National Institute of Mental Health.

References

- 1. Aristotle. Aristotle: Parva Naturalia. New York: Clarendon Press; 2001.

- 2. Barlow HB, Hill RM. Evidence for a physiological explanation of the waterfall phenomenon and figural aftereffects. Nature. 1963;200:1345–1347. doi: 10.1038/2001345a0.

- 3. Gibson JJ, Radner M. Adaptation, aftereffect, and contrast in the perception of tilted lines. I. Quantitative studies. J. Exp. Psychol. 1937;20:453–467.

- 4. Clifford CWG, Rhodes G, editors. Fitting the Mind to the World, Volume 1. Oxford, England: Oxford University Press; 2005.

- 5. Kohn A. Visual adaptation: physiology, mechanisms, and functional benefits. J. Neurophysiol. 2007;97:3155–3164. doi: 10.1152/jn.00086.2007.

- 6. Kohn A, Movshon JA. Adaptation changes the direction tuning of macaque MT neurons. Nat. Neurosci. 2004;7:764–772. doi: 10.1038/nn1267.

- 7. Burr D, Ross J. A visual sense of number. Curr. Biol. 2008;18:425–428. doi: 10.1016/j.cub.2008.02.052.

- 8. Leopold DA, O'Toole AJ, Vetter T, Blanz V. Prototype-referenced shape encoding revealed by high-level aftereffects. Nat. Neurosci. 2001;4:89–94. doi: 10.1038/82947.

- 9. Suzuki S, Cavanagh P. A shape-contrast effect for briefly presented stimuli. J. Exp. Psychol. Hum. Percept. Perform. 1998;24:1315–1341. doi: 10.1037//0096-1523.24.5.1315.

- 10. Webster MA, Kaping D, Mizokami Y, Duhamel P. Adaptation to natural facial categories. Nature. 2004;428:557–561. doi: 10.1038/nature02420.

- 11. Clifford CW, Webster MA, Stanley GB, Stocker AA, Kohn A, Sharpee TO, Schwartz O. Visual adaptation: neural, psychological and computational aspects. Vision Res. 2007;47:3125–3131. doi: 10.1016/j.visres.2007.08.023.

- 12. Afraz SR, Cavanagh P. Retinotopy of the face aftereffect. Vision Res. 2008;48:42–54. doi: 10.1016/j.visres.2007.10.028.

- 13. Fang F, He S. Viewer-centered object representation in the human visual system revealed by viewpoint aftereffects. Neuron. 2005;45:793–800. doi: 10.1016/j.neuron.2005.01.037.

- 14. Rhodes G, Jeffery L, Watson TL, Clifford CW, Nakayama K. Fitting the mind to the world: face adaptation and attractiveness aftereffects. Psychol. Sci. 2003;14:558–566. doi: 10.1046/j.0956-7976.2003.psci_1465.x.

- 15. Webster MA, MacLin OH. Figural aftereffects in the perception of faces. Psychon. Bull. Rev. 1999;6:647–653. doi: 10.3758/bf03212974.

- 16. Kanwisher N, Yovel G. The fusiform face area: a cortical region specialized for the perception of faces. Philos. Trans. R. Soc. Lond. B. Biol. Sci. 2006;361:2109–2128. doi: 10.1098/rstb.2006.1934.

- 17. Greenlee MW, Magnussen S. Saturation of the tilt aftereffect. Vision Res. 1987;27:1041–1043. doi: 10.1016/0042-6989(87)90017-4.

- 18. Hershenson M. Linear and rotation motion aftereffects as a function of inspection duration. Vision Res. 1993;33:1913–1919. doi: 10.1016/0042-6989(93)90018-r.

- 19. Leopold DA, Rhodes G, Muller KM, Jeffery L. The dynamics of visual adaptation to faces. Proc. R. Soc. B. 2005;272:897–904. doi: 10.1098/rspb.2004.3022.

- 20. Lemm KM, Dabady M, Banaji MR. Gender picture priming: It works with denotative and connotative pictures. Soc. Cogn. 2005;23:218–241.

- 21. Hills PJ, Elward RL, Lewis MB. Identity adaptation is mediated and moderated by visualisation ability. Perception. 2008;37:1241–1257. doi: 10.1068/p5834.

- 22. Moradi F, Koch C, Shimojo S. Face adaptation depends on seeing the face. Neuron. 2005;45:169–175. doi: 10.1016/j.neuron.2004.12.018.

- 23. Ryu JJ, Borrmann K, Chaudhuri A. Imagine Jane and identify John: face identity aftereffects induced by imagined faces. PLoS ONE. 2008;3:e2195. doi: 10.1371/journal.pone.0002195.

- 24. Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J. Neurosci. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- 25. Puce A, Allison T, Asgari M, Gore JC, McCarthy G. Differential sensitivity of human visual cortex to faces, letterstrings, and textures: a functional magnetic resonance imaging study. J. Neurosci. 1996;16:5205–5215. doi: 10.1523/JNEUROSCI.16-16-05205.1996.

- 26. Peelen MV, Downing PE. Selectivity for the human body in the fusiform gyrus. J. Neurophysiol. 2005;93:603–608. doi: 10.1152/jn.00513.2004.

- 27. Schwarzlose RF, Baker CI, Kanwisher N. Separate face and body selectivity on the fusiform gyrus. J. Neurosci. 2005;25:11055–11059. doi: 10.1523/JNEUROSCI.2621-05.2005.

- 28. Hadjikhani N, de Gelder B. Neural basis of prosopagnosia: an fMRI study. Hum. Brain Mapp. 2002;16:176–182. doi: 10.1002/hbm.10043.

- 29. Kriegeskorte N, Formisano E, Sorger B, Goebel R. Individual faces elicit distinct response patterns in human anterior temporal cortex. Proc. Natl. Acad. Sci. USA. 2007;104:20600–20605. doi: 10.1073/pnas.0705654104.

- 30. Kovacs G, Zimmer M, Harza I, Antal A, Vidnyanszky Z. Position-specificity of facial adaptation. Neuroreport. 2005;16:1945–1949. doi: 10.1097/01.wnr.0000187635.76127.bc.

- 31. Barsalou LW. Grounded cognition. Annu. Rev. Psychol. 2008;59:617–645. doi: 10.1146/annurev.psych.59.103006.093639.

- 32. Martin A. The representation of object concepts in the brain. Annu. Rev. Psychol. 2007;58:25–45. doi: 10.1146/annurev.psych.57.102904.190143.

- 33. Lundqvist D, Flykt A, Öhman A. The Karolinska Directed Emotional Faces. 1998.
