Abstract

Our sense of relative timing is malleable. For instance, visual signals can be made to seem synchronous with earlier sounds following prolonged exposure to an environment wherein auditory signals precede visual ones. Similarly, actions can be made to seem to precede their own consequences if an artificial delay is imposed for a period, and then removed. Here, we show that our sense of relative timing for combinations of visual changes is similarly pliant. We find that direction reversals can be made to seem synchronous with unusually early colour changes after prolonged exposure to a stimulus wherein colour changes precede direction changes. The opposite effect is induced by prolonged exposure to colour changes that lag direction changes. Our data are consistent with the proposal that our sense of timing for changes encoded by distinct sensory mechanisms can adjust, at least to some degree, to the prevailing environment. Moreover, they reveal that visual analyses of colour and motion are sufficiently independent for this to occur.

Keywords: timing perception, perceptual binding, colour, motion

1. Introduction

Cross-modal temporal recalibration refers to a shift in the point of subjective simultaneity (PSS) between two types of event following prolonged exposure to asynchronous inputs. For instance, repeated exposure (adaptation) to sights that lead corresponding sounds can shift the PSS for vision and audition towards earlier sights. Conversely, adaptation to sights that lag sounds can have the opposite effect [1–3]. These data seem to suggest that our sense of relative timing can adjust according to the prevailing environment. Thus, if sounds typically lag sights in the environment, this relationship will be judged as typical, and therefore as synchronous. Likewise, if the environment changes such that sounds are made to lead sights, this new relationship will come to be judged as typical, and therefore as synchronous.

Cross-modal temporal recalibration has been demonstrated for all combinations of signals paired from vision, audition and touch [4,5]. However, it is unclear if temporal recalibration can be observed for events encoded within a single sensory modality.

Temporal recalibration necessitates that the timing of the affected events be encoded somewhat independently, thereby allowing for relative shifts. Combinations of events from separate sensory modalities might therefore be ideally suited. However, there is ample evidence for functional specialization within the human visual system. For instance, distinct cortical structures seem to be specialized for the analysis of different stimulus properties, such as colour and movement [6,7]. The fact that these functionally specialized areas are located in different regions of the brain is presumably a prerequisite for the selective visual deficits that can arise following focal brain damage. Such deficits have been documented for both colour [8] and motion perception [9]. However, while there is ample evidence for independent coding of colour and motion, it is also well established that at least some visual neurons are sensitive to both colour and direction of motion [10–12]. Thus, it is unclear whether analyses of colour and motion are sufficiently independent to allow for adaptation-induced shifts in relative timing.

While there is some debate concerning whether functional specialization within the human visual system [7] creates a need for integrative processes [11], several perceptual phenomena seem to confirm this necessity. For instance, when a stimulus contains multiple colours and directions of motion, perceptual pairings of colour and motion can be systematically and persistently erroneous [13]. This not only confirms the existence of an integrative process, by which colour and motion signals are combined to form a coherent whole, but establishes that this process is error prone. Our sense of relative timing for colour and direction changes is similarly susceptible to error, with colour changes often seeming to precede physically synchronous direction changes ([14–18]; but see [19]).

The independence of colour and motion processing suggested by previous studies [8,9,13,17,18] encouraged us to examine whether our sense of timing for these attributes might, at least to some extent, adjust according to the dynamics of the prevailing environment. The results of the following experiment suggest that it can.

2. Methods

Stimuli were generated using Matlab software to drive a VSG 2/3F stimulus generator (Cambridge Research Systems) and were displayed on a gamma-corrected Sony Trinitron G420 monitor at a resolution of 1024 × 768 pixels and a refresh rate of 120 Hz. All stimuli were viewed from 57 cm, with the observer's head restrained by a chinrest.

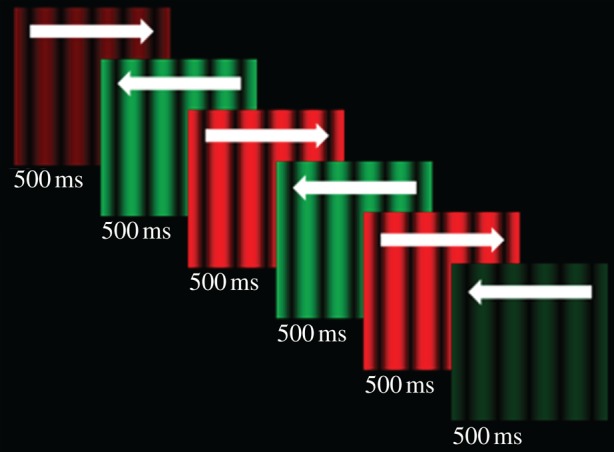

The stimulus, depicted in figure 1a, consisted of a sinusoidal luminance-modulated grating with a Michelson contrast of 100 per cent and a spatial frequency of one cycle per degree of visual angle, presented against a black background. The grating waveform drifted at a rate of 10 Hz and reversed direction (left/right) at a rate of 1 Hz. The grating also alternated in colour at a rate of 1 Hz, between a peak red (CIE x = 0.614, y = 0.342, Y = 17) and a peak green (CIE x = 0.276, y = 0.607, Y = 17). This basic stimulus was used as both the adapting and the test stimulus.

Figure 1.

Depiction of a test stimulus presentation. The stimulus oscillated in colour (green/red) and direction of motion (left/right). The peak luminance of the stimulus was linearly ramped on and off over 500 ms at the beginning and the end of each test presentation. Here, we have depicted a situation wherein changes in direction and colour were physically synchronous, but in most trials colour changes were offset relative to direction changes. We have also depicted the start of the animation cycle as synchronized with the start of the test presentation; in the experiment, however, the starting point in the animation cycle was determined at random on a trial-by-trial basis.
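The stimulus timing can be sketched in Python as below. This is a minimal illustration, not the authors' code (they used MATLAB driving a VSG stimulus generator). It assumes that "1 Hz" denotes one complete animation cycle per second, so that direction reversals and colour changes each occur every 500 ms; that interpretation, the function names and the sign convention (negative `colour_offset_s` means colour leads) are assumptions. With a spatial frequency of 1 cycle/deg and a temporal frequency of 10 Hz, the grating drifts at 10 Hz / (1 cycle/deg) = 10 deg/s.

```python
import math

CHANGE_INTERVAL_S = 0.5   # assumption: 1 Hz cycle -> a direction (or colour) change every 500 ms
SPATIAL_FREQ_CPD = 1.0    # cycles per degree of visual angle
TEMPORAL_FREQ_HZ = 10.0   # drift rate of the grating waveform


def direction(t: float) -> int:
    """+1 while drifting rightward, -1 while drifting leftward (reverses every 500 ms)."""
    return 1 if math.floor(t / CHANGE_INTERVAL_S) % 2 == 0 else -1


def colour(t: float, colour_offset_s: float = 0.0) -> str:
    """Grating colour at time t; colour changes are displaced by colour_offset_s
    relative to direction changes (negative = colour leads, positive = colour lags)."""
    k = math.floor((t - colour_offset_s) / CHANGE_INTERVAL_S)
    return "red" if k % 2 == 0 else "green"


def displacement_s(t: float) -> float:
    """Signed drift time accumulated up to t >= 0, so the phase stays continuous at reversals."""
    n_full = math.floor(t / CHANGE_INTERVAL_S)
    # Completed segments alternate +1, -1, ...; they sum to one segment if n_full is odd.
    completed = (n_full % 2) * CHANGE_INTERVAL_S
    return completed + direction(t) * (t - n_full * CHANGE_INTERVAL_S)


def luminance(x_deg: float, t: float) -> float:
    """Relative luminance (0..1) of a 100 % Michelson-contrast sinusoidal grating."""
    phase = 2 * math.pi * (SPATIAL_FREQ_CPD * x_deg - TEMPORAL_FREQ_HZ * displacement_s(t))
    return 0.5 * (1.0 + math.cos(phase))
```

With `colour_offset_s = -0.2`, for example, colour changes fall at 0.3 s, 0.8 s, and so on, 200 ms before each direction reversal, matching the colour-lead adapting stimulus.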

There were three types of runs of trials. In baseline runs, participants simply viewed and judged test stimuli. During adaptation runs, participants passively viewed an adapting stimulus prior to each test stimulus presentation. On the first trial, the adapting stimulus was presented for 45 s, whereas on most subsequent trials it was presented for 6 s. There was, however, another 45 s presentation of the adapting stimulus on the 33rd trial of each adaptation run. A 1 s blank interstimulus interval (ISI) separated each adaptation and test stimulus presentation. In separate runs, colour changes in the adapting stimulus either preceded (colour lead) or succeeded (colour lag) direction changes by 200 ms. All other features of the adapting stimulus animation were as for the test stimulus.
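The adaptation schedule can be made concrete with a short calculation. The sketch below (function names are hypothetical) returns the adapting duration before each test trial and totals the stimulus time in one 66-trial adaptation run; it ignores the time participants took to respond, which is not reported. By this count a run contains 2 × 45 s + 64 × 6 s = 474 s of adaptation, 66 s of ISIs and 66 × 3 s = 198 s of test stimuli, 738 s in all.

```python
ADAPT_INITIAL_S = 45.0      # first trial, and again on trial 33
ADAPT_TOPUP_S = 6.0         # all other trials
ISI_S = 1.0                 # blank interval between adaptor and test
TEST_S = 3.0                # test presentation, including fade-in and fade-out
LONG_ADAPT_TRIALS = {1, 33}


def adaptation_duration_s(trial: int) -> float:
    """Adapting-stimulus duration preceding test trial `trial` (1-indexed)."""
    return ADAPT_INITIAL_S if trial in LONG_ADAPT_TRIALS else ADAPT_TOPUP_S


def run_stimulus_time_s(n_trials: int = 66) -> float:
    """Total stimulus time in one adaptation run, excluding response time."""
    return sum(adaptation_duration_s(k) + ISI_S + TEST_S for k in range(1, n_trials + 1))
```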

Each presentation of the test stimulus lasted 3 s. The luminance of the test stimulus was linearly ramped on and off, from black, over 500 ms at the start and the end of each test presentation, so the test stimulus seemed to fade in and out. Participants saw two cycles of the test stimulus animation at full luminance on each trial. The starting point in the test stimulus animation cycle was randomized on a trial-by-trial basis. After viewing each test stimulus, participants indicated whether the colour and direction changes had been synchronous or asynchronous.
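The fade-in and fade-out of the test stimulus amount to a trapezoidal luminance envelope. The sketch below assumes that the envelope multiplies the peak luminance and that the random starting point is drawn uniformly from one 1 s animation cycle (the distribution is not stated); the names are hypothetical. With 500 ms ramps on a 3 s presentation, 3 − 2 × 0.5 = 2 s remain at full luminance, that is, two 1 s cycles.

```python
import random

TEST_DURATION_S = 3.0   # whole test presentation
RAMP_S = 0.5            # linear fade-in and fade-out from black
CYCLE_S = 1.0           # assumption: one animation cycle lasts 1 s


def envelope_at(t: float) -> float:
    """Multiplier (0..1) applied to peak luminance, t seconds after test onset."""
    if t < 0.0 or t > TEST_DURATION_S:
        return 0.0
    if t < RAMP_S:
        return t / RAMP_S                         # fade in
    if t > TEST_DURATION_S - RAMP_S:
        return (TEST_DURATION_S - t) / RAMP_S     # fade out
    return 1.0                                    # full luminance


def random_start_phase_s(rng: random.Random) -> float:
    """Hypothetical: starting point within the animation cycle, drawn uniformly."""
    return rng.uniform(0.0, CYCLE_S)
```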

During each run of trials, the physical timing of test colour changes relative to direction changes was manipulated across a range of ±250 ms according to the method of constant stimuli. A complete run involved six presentations of each of 11 colour/direction change timing relationships, 66 trials in total.
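The method-of-constant-stimuli design can be sketched as follows. The text gives only the range (±250 ms) and the number of levels (11); equal 50 ms spacing and a fully shuffled trial order are assumptions, as is the function name.

```python
import random


def make_trial_list(max_offset_ms: int = 250, n_levels: int = 11,
                    n_repeats: int = 6, seed=None) -> list:
    """Shuffled colour-minus-direction offsets (ms), one entry per trial.

    Negative offsets mean colour changes lead direction changes.
    """
    step = 2 * max_offset_ms // (n_levels - 1)   # 50 ms with the defaults (assumed spacing)
    levels = [-max_offset_ms + i * step for i in range(n_levels)]
    trials = levels * n_repeats                  # every level repeated n_repeats times
    random.Random(seed).shuffle(trials)          # assumed: randomly interleaved order
    return trials
```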

There were five observers, all naive as to the purpose of the study. On each of two separate days, each observer completed two baseline runs of trials followed by two adaptation runs of trials, in which colour changes either preceded or lagged direction changes. The order in which the adaptation conditions were completed was pseudo-randomized: two observers completed the colour-leading condition first and the colour-lagging condition second, and the remaining three observers completed the conditions in the reverse order.

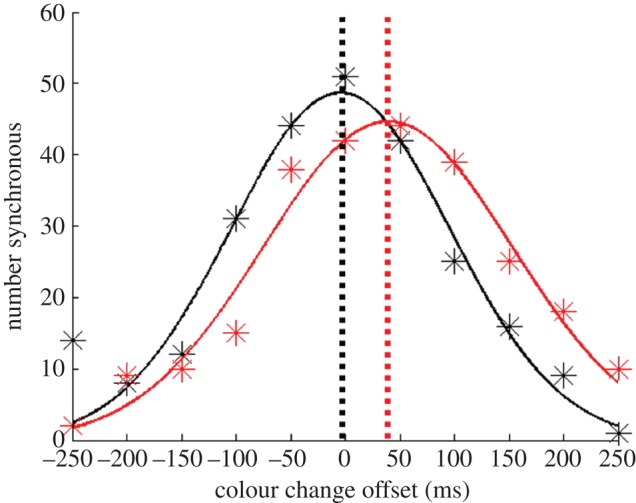

Data from each run of trials provided a distribution of perceived colour/direction change synchrony as a function of physical relative timing. Data from the two baseline runs of trials were collated and compared with data from the subsequent two adaptation runs of trials completed by the same observer. Gaussian functions were fitted to these data, and the peaks of the fitted functions taken as individual estimates of the PSS for colour and direction changes during the relevant runs of trials (figure 2).

Figure 2.

Gaussian functions fitted to distributions of reported colour/motion synchrony, summed across all participants, as a function of the physical timing of colour changes relative to direction changes. Direction changes occurred at 0 ms. Data are derived from trial runs involving adaptation to colour changes that preceded (black) and lagged (red) direction changes by 200 ms. These data depictions are provided for illustrative purposes only. Statistical analyses were based on fits to individual data, with PSS shifts calculated as differences between PSS estimates from runs of trials with and without adaptation.
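The PSS estimation step can be sketched as follows. The text states only that Gaussian functions were fitted and their peaks taken as PSS estimates; the least-squares criterion, the zero baseline, the 1 ms grid search over the peak location and the grid bounds are assumptions made to keep the example dependency-free (a nonlinear optimiser such as `scipy.optimize.curve_fit` would be a more typical choice). For a fixed peak location μ and width σ, the best amplitude has a closed form, so only μ and σ need to be searched.

```python
import math


def fit_gaussian_pss(offsets_ms, p_sync,
                     mu_grid=range(-250, 251), sigma_grid=range(20, 401, 5)):
    """Least-squares fit of p = a * exp(-(x - mu)^2 / (2 sigma^2)).

    Returns (mu, sigma, a); mu, the peak location, is the PSS estimate.
    """
    best = None
    for mu in mu_grid:
        for sigma in sigma_grid:
            g = [math.exp(-((x - mu) ** 2) / (2.0 * sigma ** 2)) for x in offsets_ms]
            # Closed-form least-squares amplitude for this (mu, sigma).
            a = sum(y * gi for y, gi in zip(p_sync, g)) / sum(gi * gi for gi in g)
            sse = sum((y - a * gi) ** 2 for y, gi in zip(p_sync, g))
            if best is None or sse < best[0]:
                best = (sse, mu, sigma, a)
    _, mu, sigma, a = best
    return mu, sigma, a
```

Passed the proportion of "synchronous" responses at each tested offset from one run, the function returns the fitted peak in ms, which plays the role of that run's PSS.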

3. Results

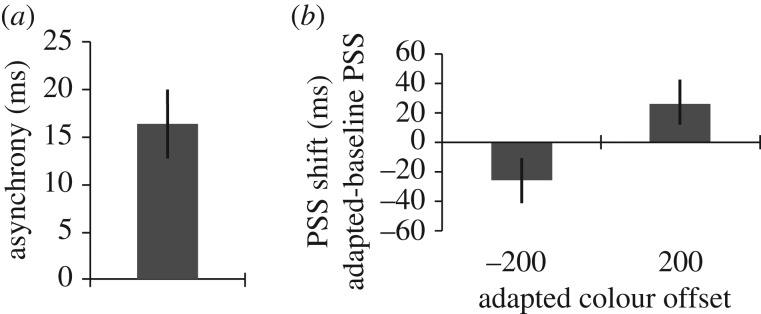

In figure 3a, we have depicted the average PSS for colour and direction changes during baseline runs of trials. These data show that, in the absence of adaptation, colour changes seemed to be coincident with direction changes when they lagged the direction changes by approximately 19 ms (±5, t(4) = 4.54, p = 0.01).

Figure 3.

Bar graphs depicting the results of this study. Error bars denote standard error. (a) Colour/motion perceptual asynchrony in baseline runs of trials. (b) Shift in the PSS for colour and direction of motion changes, from baseline, after adaptation to colour changes that led (−200 ms) and lagged (+200 ms) direction changes.
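The baseline test reported above compares the mean PSS across observers against zero. A minimal one-sample t computation is sketched below using only the Python standard library. The input values are hypothetical, not the study's data, and a p-value would additionally require the t distribution (for example `scipy.stats.t.sf`).

```python
import math
import statistics


def one_sample_t(values, mu0: float = 0.0):
    """Return (mean, standard error, t, degrees of freedom) for H0: mean == mu0."""
    n = len(values)
    mean = statistics.mean(values)
    sem = statistics.stdev(values) / math.sqrt(n)   # sample SD uses the n - 1 denominator
    return mean, sem, (mean - mu0) / sem, n - 1


# Hypothetical baseline PSS estimates (ms); positive = colour must lag direction to seem synchronous.
mean, sem, t, df = one_sample_t([10.0, 20.0, 30.0])
```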

In figure 3b, we have depicted the magnitudes of PSS shifts following adaptation to colour changes that preceded and lagged direction changes by 200 ms. Shifts were calculated relative to the immediately preceding baseline sessions. In both cases, adaptation shifted the PSS towards the adapted relationship, resulting in a significant difference between the PSS shifts following adaptation to leading (−26 ms ± 13) and lagging (26 ms ± 13) colour changes (paired t(4) = 3.49, p = 0.025).
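The shift-and-compare analysis can be sketched as follows: each adaptation PSS is referenced to the same observer's baseline PSS from that day, and the colour-lag and colour-lead shifts are then compared with a paired t-test (equivalently, a one-sample t-test on the per-observer differences). The function names and the numbers below are hypothetical, not the study's data.

```python
import math
import statistics


def pss_shift(adapted_pss, baseline_pss):
    """Per-observer PSS shift (ms): adapted PSS minus the same day's baseline PSS."""
    return [a - b for a, b in zip(adapted_pss, baseline_pss)]


def paired_t(x, y):
    """Paired t statistic and degrees of freedom for per-observer values x and y."""
    d = [xi - yi for xi, yi in zip(x, y)]
    n = len(d)
    sem_d = statistics.stdev(d) / math.sqrt(n)
    return statistics.mean(d) / sem_d, n - 1


# Hypothetical PSS values (ms) for three observers.
lag_shift = pss_shift([40.0, 60.0, 70.0], [10.0, 20.0, 20.0])
lead_shift = pss_shift([5.0, 20.0, 30.0], [5.0, 10.0, 20.0])
t, df = paired_t(lag_shift, lead_shift)
```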

4. Discussion

Our data show that the apparent relative timing of colour and direction changes is malleable. Prolonged exposure, i.e. adaptation, to temporally offset colour and direction changes shifted the PSS for these changes towards the adapted relationship. This suggests that our sense of timing for different visual events is shaped by the dynamics of the prevailing environment. If colour changes are made to lag direction changes, this relationship will begin to seem normal and therefore synchronous. The reverse situation can be induced by making colour changes lead direction changes.

Our data are consistent with recent studies concerning the apparent timing of changes encoded in different sensory modalities [1–5]. Here too the apparent timing of different stimulus changes can be drawn towards an adapted relationship. Presumably this type of relative shift is only possible because the apparent timing of the affected changes is encoded somewhat independently. Our data, therefore, demonstrate that timing codes for visual colour and direction changes are sufficiently independent to allow for relative shifts of perceived timing.

Our baseline trials reflect the apparent timing of colour and direction changes without adaptation, and are consistent with direction changes seeming to coincide with physically delayed (approx. 19 ms) colour changes. Larger colour advantages (approx. 100 ms) have been suggested by studies asking people to judge whether a specific colour is predominantly paired with one direction of motion or another [17,18]. However, the magnitude of this effect is variable [15] and task dependent. For instance, one study asked people to judge the order in which colour and direction changes occurred, and found no evidence of an advantage for colour [16]. Other studies have used tasks like ours (asking people whether colour and direction changes were synchronous) and have found robust advantages for colour [14,19]. Reaction times to changes in colour and direction also reflect a small advantage for colour [20]. Our baseline data are thus broadly consistent with the literature on perceived timing for colour and direction changes, which reflects a high degree of independence, with physically asynchronous inputs often judged as synchronous.

While our data are consistent with the impact of adapting to combinations of temporally offset cross-modal events, with adapted offsets beginning to look more synchronous than they did previously [1–3], an apparently opposite effect has been reported. When judging the temporal order of tactile events, participants seem to incorporate the statistics of prior exposures, a process referred to as 'Bayesian calibration' [21]. In this case, the experienced offset between the adapted pair of tactile events appears to be unchanged. Instead, other timing relationships that had seemed synchronous in the absence of adaptation are interpreted as being like the adapted pair, that is, asynchronous [21]. We are not sure why exposure to offset tactile events results in this reversed temporal distortion. One possibility is that Bayesian calibration takes place when making intra-modal judgments about identical features (e.g. changes in intensity at different spatial locations). However, our data, concerning adaptation to an asynchrony signalled via distinct visual changes, show that the discrepancy cannot be a necessary consequence of adapting and testing within a single sensory modality.

Given the functional architecture of sensory processing, determining whether perceptual events are synchronous or asynchronous is a non-trivial problem. A single physical event can be encoded within initially independent sensory systems, each marked by discrepant and variable processing speeds [22,23]. Taking vision as an example, it is apparent that this type of dilemma is not the special preserve of cross-modal perception, as different features of a single visual object can be encoded independently, and at different rates, by distinct visual mechanisms [24,25]. How then do we determine if different sensory events have occurred synchronously or asynchronously?

The existence of temporal recalibration suggests that one strategy used to cope with the uncertainty caused by the variable time courses of processing in independent sensory systems is to treat temporally proximate events that have reliably been presented at a specific timing relationship as being synchronous. Thus, perceived timing would not simply result from the physical timing of events, or from the time course of corresponding neural activity (although this factor is clearly important, e.g. [15,26,27]), but also from an experience-based appraisal of the timing relationship between presented events. Our data reveal that this experience-based influence on timing perception is not restricted to cross-modal perception. Rather, we have established the existence of an experience-based analysis that shapes our sense of timing for events encoded in a single sensory modality.
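The strategy described above can be illustrated with a toy model in which the offset treated as "synchronous" moves a fixed fraction of the way towards each experienced offset (an exponentially weighted running average). This is an illustrative assumption, not a model fitted or proposed in this study: the update rate, the synchrony window and the function names are arbitrary, and in the experiment the measured shifts (about ±26 ms) were far smaller than the ±200 ms adapted offsets.

```python
def recalibrate(pss_ms: float, experienced_offset_ms: float, rate: float = 0.05) -> float:
    """Move the internal 'synchronous' offset a fraction `rate` towards the experienced offset."""
    return pss_ms + rate * (experienced_offset_ms - pss_ms)


def judged_synchronous(physical_offset_ms: float, pss_ms: float, window_ms: float = 100.0) -> bool:
    """Hypothetical decision rule: 'synchronous' if the offset lies within window_ms of the PSS."""
    return abs(physical_offset_ms - pss_ms) <= window_ms


pss = 0.0
for _ in range(10):            # ten exposures to colour changes lagging by 200 ms
    pss = recalibrate(pss, 200.0)
# pss == 200 * (1 - 0.95 ** 10), roughly 80 ms
```

After these exposures, a 150 ms colour lag, which lies outside the window around the initial PSS of 0 ms, falls within the window around the shifted PSS and is judged synchronous, mirroring the direction of the recalibration reported here.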

References

- 1. Fujisaki W., Shimojo S., Kashino M., Nishida S. 2004. Recalibration of audiovisual simultaneity. Nat. Neurosci. 7, 773–778. (doi:10.1038/nn1268)

- 2. Vatakis A., Navarra J., Soto-Faraco S., Spence C. 2007. Temporal recalibration during asynchronous audiovisual speech perception. Exp. Brain Res. 181, 173–181. (doi:10.1007/s00221-007-0918-z)

- 3. Vroomen J., Keetels M., de Gelder B., Bertelson P. 2004. Recalibration of temporal order perception by exposure to audio–visual asynchrony. Cogn. Brain Res. 22, 32–35. (doi:10.1016/j.cogbrainres.2004.07.003)

- 4. Hanson J. V. M., Heron J., Whitaker D. 2008. Recalibration of perceived time across sensory modalities. Exp. Brain Res. 185, 347–352. (doi:10.1007/s00221-008-1282-3)

- 5. Keetels M., Vroomen J. 2008. Temporal recalibration to tactile–visual asynchronous stimuli. Neurosci. Lett. 430, 130–134. (doi:10.1016/j.neulet.2007.10.044)

- 6. Livingstone M. S., Hubel D. H. 1988. Segregation of form, color, movement, and depth: anatomy, physiology, and perception. Science 240, 740–749. (doi:10.1126/science.3283936)

- 7. Zeki S. 1978. Functional specialization in the visual cortex of the monkey. Nature 274, 423–428. (doi:10.1038/274423a0)

- 8. Cowey A., Heywood C. A. 1997. Cerebral achromatopsia: colour blindness despite wavelength processing. Trends Cogn. Sci. 1, 133–139. (doi:10.1016/S1364-6613(97)01043-7)

- 9. Zihl J., Von Cramon D., Mai N., Schmid C. H. 1983. Selective disturbance of movement vision after bilateral brain damage. Brain 106, 313–340. (doi:10.1093/brain/106.2.313)

- 10. Gegenfurtner K. A., Kiper D. C., Levitt J. B. 1997. Functional properties of neurons in macaque area V3. J. Neurophysiol. 77, 1906–1923.

- 11. Lennie P. 1998. Single units and visual cortical organization. Perception 27, 1–47.

- 12. Nishida S., Watanabe J., Kuriki I., Tokimoto T. 2007. Human visual system integrates color signals along a motion trajectory. Curr. Biol. 17, 366–372. (doi:10.1016/j.cub.2006.12.041)

- 13. Wu D. A., Kanai R., Shimojo S. 2004. Steady-state misbinding of colour and motion. Nature 429, 262. (doi:10.1038/429262a)

- 14. Arnold D. H. 2005. Perceptual pairing of colour and motion. Vision Res. 45, 3015–3026. (doi:10.1016/j.visres.2005.06.031)

- 15. Arnold D. H., Clifford C. W. G. 2002. Determinants of asynchronous processing in vision. Proc. R. Soc. Lond. B 269, 579–583. (doi:10.1098/rspb.2001.1913)

- 16. Bedell H. E., Chung S. T. L., Ogmen H., Patel S. S. 2003. Color and motion: which is the tortoise and which is the hare? Vision Res. 43, 2403–2412. (doi:10.1016/S0042-6989(03)00436-X)

- 17. Moutoussis K., Zeki S. 1997. A direct demonstration of perceptual asynchrony in vision. Proc. R. Soc. Lond. B 264, 393–399. (doi:10.1098/rspb.1997.0056)

- 18. Moutoussis K., Zeki S. 1997. Functional segregation and temporal hierarchy of the visual perceptive systems. Proc. R. Soc. Lond. B 264, 1407–1414. (doi:10.1098/rspb.1997.0196)

- 19. Nishida S., Johnston A. 2002. Marker correspondence, not processing latency, determines temporal binding of visual attributes. Curr. Biol. 12, 359–368. (doi:10.1016/S0960-9822(02)00698-X)

- 20. Paul L., Schyns P. G. 2003. Attention enhances feature integration. Vision Res. 43, 1793–1798. (doi:10.1016/S0042-6989(03)00270-0)

- 21. Miyazaki M., Yamamoto S., Uchida S., Kitazawa S. 2006. Bayesian calibration of simultaneity in tactile temporal order judgment. Nat. Neurosci. 9, 875–877. (doi:10.1038/nn1712)

- 22. Corey D. P., Hudspeth A. J. 1979. Response latency of vertebrate hair cells. Biophys. J. 26, 499–506. (doi:10.1016/S0006-3495(79)85267-4)

- 23. Lennie P. 1981. The physiological basis of variations in visual latency. Vision Res. 21, 815–824. (doi:10.1016/0042-6989(81)90180-2)

- 24. Bullier J. 2001. Integrated model of visual processing. Brain Res. Rev. 36, 96–107. (doi:10.1016/S0165-0173(01)00085-6)

- 25. Maunsell J. H. R., Ghose G. M., Assad J. A., McAdams C. J., Boudreau C. E., Noerager B. D. 1999. Visual response latencies of magnocellular and parvocellular LGN neurons in macaque monkey. Vis. Neurosci. 16, 1–14.

- 26. Fraisse P. 1980. Les synchronisations sensori-motrices aux rythmes (The sensorimotor synchronization of rhythms). In Anticipation et comportement (ed. Requin J.), pp. 233–257. Paris, France: Centre National.

- 27. Paillard J. 1949. Quelques données psychophysiologiques relatives au déclenchement de la commande motrice (Some psychophysiological data relating to the triggering of motor commands). L'Année Psychol. 48, 28–47.