Abstract

Objective

To investigate the impact of the HCAHPS report of patient experiences and word-of-mouth narratives on consumers' hospital choice.

Data Sources

Online consumer research panel of U.S. adults ages 18 and older.

Study Design/Data Collection/Extraction Methods

In an experiment, 309 consumers were randomly assigned to see positive or negative information about a hospital in two modalities: HCAHPS graphs and a relative's narrative e-mail. Then they indicated their intentions to choose the hospital for elective surgery.

Principal Findings

A simple, one-paragraph e-mail and 10 HCAHPS graphs had similar impacts on consumers' hospital choice. When information was inconsistent between the HCAHPS data and e-mail narrative, one modality attenuated the other's effect on hospital choice.

Conclusions

The findings illustrate the power of anecdotal narratives and suggest that policy makers should consider how word-of-mouth communication can alter the influence of HCAHPS data.

Keywords: Patient satisfaction, hospital choice, HCAHPS, word-of-mouth communication

Health policy advocates argue that supplying consumers with publicly available information about provider quality will induce better, more informed choices. Despite significant investments in collecting, analyzing, and reporting quality data, it remains unclear whether people will use it to choose health care providers. Assessments of quality data utilization by consumers have yielded mixed results. For example, HealthGrades reported that 40 percent of health care consumers considered a hospital's quality rating when choosing among alternative providers (Landro 2004). But other studies indicate that most consumers either remain unaware of publicly available health quality data or fail to fully utilize it (General Accounting Office 1999; Kaiser Family Foundation 2004; Jha and Epstein 2006; Sofaer 2008). Few consumers use published reports in their decision making (Hibbard et al. 2000; Marshall et al. 2000; RAND Health 2002; Hibbard, Stockard, and Tusler 2005; Dolan 2008).

Why have health care consumers been reluctant to embrace data prepared to assist them in choosing providers, especially since poor choices could pose life-and-death risks? Some researchers have suggested that consumers simply lack information because public health agencies have not made the data accessible and because resistance from providers has discouraged widespread dissemination of comparative quality ratings (Herzlinger 2002; Shaller et al. 2003). However, rival explanations suggest that consumers may find the information hard to understand and interpret, and the more difficulty people have understanding something, the less they will use it (Hibbard, Slovic, and Jewett 1997; Hibbard and Peters 2003). Studies of consumer decision making reveal that people often rely on informal, qualitative information from friends, relatives, and acquaintances when choosing among health care providers or health plans (Lupton, Donaldson, and Lloyd 1991; Peters et al. 2007a,b). Furthermore, recommendations from nonprofessionals often outweigh advice from authoritative sources (Gibbs, Sangl, and Burrus 1996; Crane and Lynch 1998; Fagerlin, Wong, and Ubel 2005).

Similar dynamics have long been observed in markets for goods and services that consumers buy every day. When the work of choosing among competing alternatives increases and the choices involve sorting out complicated data, consumers usually fall back on simple rules of thumb known as heuristics (Tversky and Kahneman 1974). One such heuristic is the “vividness” of how something is described: information presented vividly is more persuasive than information presented in a pallid manner (Rook 1986, 1987), which helps explain why consumers give more weight to anecdotal feedback from friends, relatives, and even casual acquaintances than to quantitative data systematically compiled and analyzed by experts (Schwartz 2004).

Vividness is enhanced by presenting information in narratives, which are intrinsically appealing because they represent events the way people experience them in real life (Satterfield, Slovic, and Gregory 2000). For example, in pilot focus groups we conducted before this study, consumers described their decisions among alternative hospitals by telling richly detailed stories of friends' and relatives' experiences at those hospitals, and explained how they had relied on such anecdotal evidence in making their own choices.

Even when quality ratings factor into consumers' hospital choices, people still ask acquaintances for advice, and this anecdotal information may complement or compete with the quantitative data. Consumers have become accustomed to seeing quality ratings for many services inside and outside health care, sometimes accompanied by customers' testimonials (Chevalier and Mayzlin 2006). To our knowledge, the relative impact of narrative stories and quantitative ratings of health care providers on hospital choice has not been systematically tested. In an experiment, we examined consumers' reactions to scenarios in which they viewed both quantitative ratings from the CAHPS Hospital Survey (HCAHPS) and anecdotal information about patient satisfaction at a fictional hospital.

STUDY

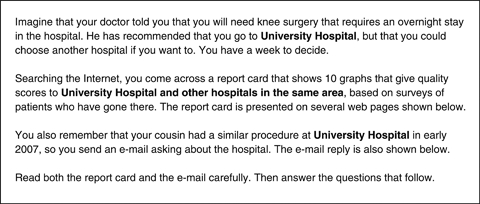

A scenario was prepared in which respondents were asked to imagine that they needed to undergo a knee operation (see Figure 1). Elective surgery was chosen because patients undergoing such a procedure would usually have a choice of surgeon and hospital (Wilson, Woloshin, and Schwartz 2007). Information about hospitals was then shown to the respondents in quantitative and anecdotal form.

Figure 1.

Scenario Presented to Respondents

An experiment was conducted using a 2 × 2 between-subjects design in which respondents were randomly assigned to one of four conditions, each built around descriptions of a fictional hospital with a generic yet plausible name, “University Hospital.”¹ Respondents viewed a simulated report card about University Hospital, modeled on the HCAHPS results posted on the HospitalCompare website; they also viewed comparable information about University Hospital in an e-mail from the respondent's “cousin.” For both the HCAHPS report card and the e-mail, respondents were randomly assigned to see either favorable or unfavorable information about University Hospital.

A sample of 309 adults was obtained from a marketing research firm's online consumer panel. Participants' average age was 45.1 years; 51.8 percent of the sample was male; 37.7 percent were college graduates; median annual household income was approximately U.S.$51,000. To control for order effects, half the respondents saw the HCAHPS ratings first and half saw the e-mail first; no significant order effects were found.
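The paper does not describe how random assignment was carried out. As a minimal sketch of one common approach, assuming a balanced (blocked) scheme that crosses the two valence factors with the presentation-order counterbalancing described above, assignment could look like the following. The function name `assign_conditions` and the condition labels are hypothetical, not taken from the study.

```python
import random
from collections import Counter
from itertools import product

# Hypothetical labels for the two manipulated factors and the order factor.
HCAHPS_VALENCE = ("positive", "negative")
EMAIL_VALENCE = ("positive", "negative")
ORDERS = ("HCAHPS first", "e-mail first")


def assign_conditions(n_participants, seed=None):
    """Blocked random assignment to 2 x 2 valence cells crossed with order.

    Returns one (hcahps_valence, email_valence, order) tuple per participant.
    """
    rng = random.Random(seed)
    cells = list(product(HCAHPS_VALENCE, EMAIL_VALENCE, ORDERS))  # 8 combinations
    full_blocks, remainder = divmod(n_participants, len(cells))
    # Every combination appears once per full block; the leftover slots go to
    # randomly chosen combinations so no cell is systematically favored.
    slots = cells * full_blocks + rng.sample(cells, remainder)
    rng.shuffle(slots)  # randomize which participant gets which slot
    return slots


if __name__ == "__main__":
    assignments = assign_conditions(309, seed=2009)
    # Participants per 2 x 2 valence cell, collapsing over presentation order.
    print(Counter((h, e) for h, e, _ in assignments))
```

Blocking over all eight valence-by-order combinations keeps cell sizes within one participant of each other, which independent coin-flip randomization would not guarantee.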

The HCAHPS report card was created by closely simulating the publicly reported results posted on the HospitalCompare (2008) website. We created mock results for three hospitals, “University Hospital” plus two fictitious competitors labeled “Hospital A” and “Hospital B,” as well as national and state benchmark averages. Depending on the condition, the focal hospital scored 6–11 percentage points better or worse than the statewide average of all hospitals on each measure, placing it markedly higher or lower than the competitors. Random spot checks of hospitals in the HospitalCompare (2008) dataset indicated that differences of this size were representative of data reported on the website.

Respondents viewed the HCAHPS data in bar charts; several studies report better understanding and use of report card data presented in bar charts than of numbers in tables (Feldman-Stewart et al. 2000; Hibbard et al. 2002; Hibbard and Peters 2003; Fagerlin, Wong, and Ubel 2005). Care was taken to ensure that all images were nearly identical to what consumers would see during an actual search of the website.

The cousin's e-mail message was carefully crafted to match the HCAHPS ratings, using the same attributes as HCAHPS and portraying the cousin's experience as either positive or negative on all attributes.² This matching was necessary to avoid the potential confounding reported in earlier research (Ubel, Jepson, and Baron 2001). The message concluded with either “I would definitely recommend this hospital” or “I wouldn't recommend this hospital,” which again closely resembled the wording of the HCAHPS survey question.

The key dependent variable was hospital choice, measured by a two-item scale on which respondents indicated whether they would choose University Hospital and whether they would recommend it to others (Cronbach's α=0.96). Other measures were adapted from earlier studies of health care report cards (Hibbard et al. 2002). Measures of respondents' knowledge of health care quality and prior health care experience were also collected.
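The reliability coefficient reported for the two-item choice scale is conventionally computed as Cronbach's alpha, α = k/(k−1) × (1 − Σσᵢ²/σ²_total), where k is the number of items, σᵢ² is the variance of item i, and σ²_total is the variance of respondents' summed scores. The sketch below implements that textbook formula with the standard library; it assumes complete data (no missing responses), and the function name `cronbach_alpha` is hypothetical. It is not a reconstruction of the study's data, which are not reported here.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for k items.

    `items` is a list of k sequences; items[i][r] is respondent r's score on
    item i. Assumes every respondent answered every item.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("every item needs a score from every respondent")
    # Each respondent's total score across the k items.
    totals = [sum(scores) for scores in zip(*items)]
    item_var_sum = sum(variance(item) for item in items)  # sample variances
    return (k / (k - 1)) * (1 - item_var_sum / variance(totals))
```

For two items this reduces to 4·cov/(σ₁² + σ₂² + 2·cov), so an alpha near 1 indicates that "would choose" and "would recommend" responses moved almost in lockstep.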

RESULTS

As a manipulation check, we conducted an online pilot study (N=40) testing whether favorable and unfavorable information about University Hospital produced different perceptions of its quality. Half of the respondents viewed the HCAHPS charts and half viewed the e-mail. The scenarios effectively differentiated between positive and negative descriptions in both the HCAHPS and e-mail versions, and no significant differences were found between HCAHPS and e-mail, suggesting that both communicated a similar impression of University Hospital's quality.

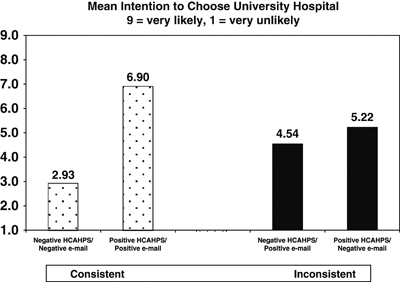

Results of the main experiment showed that respondents were more likely to choose University Hospital when both the HCAHPS ratings and the e-mail were positive than when both were negative (t=11.9, p<.001). However, when the HCAHPS and e-mail information contradicted each other (one positive, the other negative), respondents' intention to choose University Hospital was significantly attenuated relative to when both pieces of information were consistent (t's>4.5, p's<.001). We also found a significant difference between the means of the two contradictory conditions (e-mail-positive/HCAHPS-negative versus e-mail-negative/HCAHPS-positive; t=2.11, p<.04), suggesting that HCAHPS information exerted greater influence on consumer choice than the e-mail when the two were inconsistent (see Figure 2).
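The t statistics above compare pairs of cell means, but the text does not say whether they come from pooled-variance t tests, Welch tests, or planned contrasts using the ANOVA error term. As one hedged illustration, the classic Student's pooled-variance two-sample t statistic for comparing two cells can be sketched as follows; the function name `pooled_t` is hypothetical and the inputs would be the intention scores of respondents in each cell.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(group_a, group_b):
    """Student's independent-samples t statistic with pooled variance.

    Returns (t, degrees_of_freedom). Each group needs at least two scores.
    """
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two observations")
    df = n_a + n_b - 2
    # Weighted average of the two sample variances.
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    standard_error = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    t = (mean(group_a) - mean(group_b)) / standard_error
    return t, df
```

SciPy's `scipy.stats.ttest_ind(a, b, equal_var=True)` computes the same statistic along with a two-sided p-value; passing `equal_var=False` gives the Welch version instead.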

Figure 2.

Effects of HCAHPS and E-Mail Information on Hospital Choice

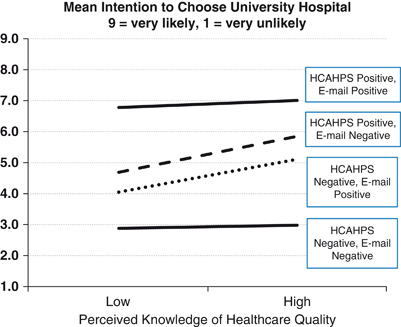

We expected that respondents' health status, perceived knowledge of health care quality, and prior experience with providers would influence their perceptions of HCAHPS and e-mail information, so we tested them as covariates in the model. Only knowledge of health care quality emerged as a significant covariate. A follow-up ANOVA showed that knowledge of health care quality moderated the effect of both HCAHPS and e-mail information on intention to use University Hospital. When HCAHPS and e-mail were inconsistent, consumers who perceived themselves as more knowledgeable about health care quality were significantly more likely to choose University Hospital; however, when the two sources were consistently positive or consistently negative, consumer knowledge had no effect (see Figure 3).

Figure 3.

Impact of Knowledge of Health Care Quality, HCAHPS Ratings, and E-Mail Message Favorability on Intention to Choose University Hospital

DISCUSSION

When HCAHPS and narrative information reinforced each other, they had powerful effects on consumers' decisions. When consumers saw positive HCAHPS data reinforced by a similar narrative, they strongly preferred the focal hospital; when an unfavorable narrative reinforced poor HCAHPS data, they avoided it. Importantly, when the HCAHPS data and e-mail were contradictory (one good, the other bad), each attenuated the effect of the other. It appears that consumers mentally averaged the conflicting information and migrated toward the middle of the intention scale.
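The mental-averaging interpretation can be made concrete with a simple weighted-averaging model. This is our illustrative assumption, not a model the study estimated. Let I be intention to choose the hospital, s_H and s_E the favorability of the HCAHPS data and the e-mail expressed on the intention scale (h for favorable, ℓ for unfavorable, with h > ℓ), and w_H, w_E nonnegative weights summing to 1:

```latex
\begin{align*}
I &= w_H\, s_H + w_E\, s_E, \qquad w_H + w_E = 1,\quad w_H, w_E \ge 0 \\
I &= s \qquad \text{when the sources agree } (s_H = s_E = s) \\
I_{H^{+}E^{-}} - I_{H^{-}E^{+}}
  &= (w_H h + w_E \ell) - (w_H \ell + w_E h)
   = (w_H - w_E)(h - \ell)
\end{align*}
```

Under this sketch, both contradictory cells fall between the two consistent cells, matching the attenuation reported in the Results, and the gap between the contradictory cells is positive exactly when w_H > w_E, which is one way to read the finding that HCAHPS information carried more weight when the sources conflicted. A pure averaging model ignores the negativity weighting discussed below, so it should be treated only as a first approximation.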

Interestingly, a simple, one-paragraph e-mail had nearly the same impact on consumers' decisions to use a hospital as the HCAHPS report of patient experiences. Although the two conveyed essentially the same information, the e-mail took less time to read yet produced a very similar effect.³ However, the HCAHPS information exerted greater influence on consumer choice than the e-mail when the two were inconsistent.

Our finding that a relative's anecdotal information affected consumer choice was unsurprising, since prior research has shown that word of mouth plays a pivotal role in consumers' decisions about health care providers (Hibbard and Peters 2003; Dolan 2008). For policy makers trying to encourage consumers to use quality data, the bad news is that anecdotal information blunts the impact of HCAHPS ratings; the good news is that HCAHPS ratings also blunt the persuasive power of anecdotes. When consumers see quantitative and anecdotal information concurrently, they weigh both.

There are several policy implications. First, policy makers should be encouraged that carefully prepared HCAHPS data can compete effectively with relatives' anecdotes, which no one can control. This suggests that efforts to inform consumers about the availability of provider quality information should continue with even greater urgency. Second, policy makers should make HCAHPS data easier to access, use, and understand. In our pilot research, viewing the HCAHPS pages took significantly more time than reading the e-mail; although this difference cannot be eliminated, making the HCAHPS information more consumer-friendly would reduce the effort required to use it. For instance, posting an overall HCAHPS rating for each hospital would let consumers judge hospitals' quality at a glance, much like the satisfaction scores published by J. D. Power or the University of Michigan's American Customer Satisfaction Index (ACSI). Although an overall rating lacks detail, it correlates with the individual survey items, which consumers could view with an additional click. Third, when making major health care decisions, people will consult friends or relatives and value their advice. Anticipating this, policy makers should consider ways to teach consumers how to integrate information from relatives' narratives with HCAHPS ratings. For example, a call-out on the HospitalCompare website could ask, “What do your friends and relatives say?” Suggestions could include asking a relative for specifics about a negative experience, or explaining why friends' experiences and HCAHPS ratings might differ. Site users could also be prompted to show acquaintances how to access the quality information and to discuss it with them. Further research is needed to identify optimal ways to educate people about contradictory health care information.

Because our scenario involved an e-mail from one's “cousin,” it raises the question of whether anecdotal information from other sources would have altered the results. For example, would the narrative be more effective if the cousin were a nurse, and would it matter whether that nurse worked at the hospital? Would a narrative from a casual acquaintance, or from a stranger whose remarks were overheard, carry the same weight? Although we chose to focus the anecdotal information rather narrowly in the present study, further research should examine this question through the lens of source credibility theory.

Participants' knowledge of health care quality affected hospital choice when the HCAHPS and e-mail information were inconsistent, in line with research documenting a tendency to give greater weight to negative than to positive information when both are present and one's knowledge is low (Ahluwalia 2002; Klein and Ahluwalia 2005). Thus, when the scenarios contained contradictory information, people with less knowledge about health care quality made choices that reflected greater weight on the negative information, making them reluctant to choose University Hospital.

This research contributes to understanding how consumers use HCAHPS in decision making. Since the data became public in 2008, researchers have focused on the relationship between HCAHPS and provider quality indicators (Isaac et al. 2008) and on the impact of public disclosure on hospitals' efforts to improve patient satisfaction (Press-Ganey 2008). To our knowledge, however, no one has studied how consumers use these ratings when word-of-mouth information is also present.

LIMITATIONS

This study required little effort from consumers to obtain HCAHPS data: they read the scenario, immediately followed by the experimental stimuli already prepared by the researchers. In real life, it takes work to visit the HospitalCompare website; specify the location, hospitals, and variables to examine; indicate preference for viewing data in chart or tabular form; download the information; and comprehend the data. To obtain anecdotal feedback, people merely have to contact relatives, or they may receive feedback without even asking for it. Future research should examine situations where respondents “naturally” obtain data for themselves.

In this study, we ensured that the stimuli, both the e-mails and the HCAHPS ratings, conveyed substantial differences between hospitals. Smaller differences among the hospitals could shift the relative impact of HCAHPS ratings and e-mail narratives. For instance, if the HCAHPS ratings of several hospitals are fairly close, the e-mail might have greater impact. Furthermore, we did not test scenarios in which the e-mail and HCAHPS ratings differed by degree; for example, the focal hospital could have high HCAHPS ratings but a tepid e-mail message. Additionally, we focused on patient satisfaction ratings rather than the more technical measures of quality in HospitalCompare, reasoning that ordinary people can easily relate to the questions in the HCAHPS survey and compare them to anecdotal experiences from relatives. Future investigations should explore the impact of technical quality measures alongside anecdotal information.

CONCLUSION

Though public awareness of the HCAHPS survey has been limited, this study shows that even when consumers have HCAHPS information right in front of them, its impact is attenuated by an anecdotal narrative that provides contrary information. This is problematic because a core principle behind the initiative is that consumers will use data to reward high-quality providers with their business. Our research is an initial attempt to understand how consumers use HCAHPS when weighing it against narrative information they receive from informal sources. Given the resources devoted to the HCAHPS program and requirements for providers to collect and publish their patient satisfaction data, further studies are clearly warranted.

Acknowledgments

Joint Acknowledgment/Disclosure Statement: The authors acknowledge support of Union Graduate College for this research.

Disclosures: None.

Disclaimers: None.

NOTES

1. Seventeen metropolitan statistical areas (MSAs) have hospitals named “University Hospital” or “University Medical Center.” Residents of these MSAs did not receive the survey.

2. We used a “cousin” because this relative can be either male or female, avoiding gender bias.

3. In the online pilot, the survey took less time to complete when respondents read the e-mail (mean=5.9 minutes) than when they reviewed the HCAHPS ratings (mean=12.7 minutes), t=3.46, p<.001.

SUPPORTING INFORMATION

Additional supporting information may be found in the online version of this article:

Appendix SA1: Author Matrix.

Appendix SA2: Detailed Description of Study Methods.

Appendix SA3: Sample Study Materials, Stimuli, and Questionnaires.

Appendix SA4: Additional Demographic Analyses.

Please note: Wiley-Blackwell is not responsible for the content or functionality of any supporting materials supplied by the authors. Any queries (other than missing material) should be directed to the corresponding author for the article.

REFERENCES

- Ahluwalia R. How Prevalent Is the Negativity Effect in Consumer Environments? Journal of Consumer Research. 2002;29(3):270–9.

- Chevalier JA, Mayzlin D. The Effect of Word-of-Mouth on Sales: Online Book Reviews. Journal of Marketing Research. 2006;43(3):345–54.

- Crane FG, Lynch JE. Consumer Selection of Physicians and Dentists: An Examination of Choice Criteria and Cue Usage. Journal of Health Care Marketing. 1998;8(3):16–9.

- Dolan PL. Patients Rarely Use Online Ratings to Pick Physicians. American Medical News. 2008;51:24.

- Fagerlin A, Wong C, Ubel PA. Reducing the Influence of Anecdotal Reasoning on People's Health Care Decisions: Is a Picture Worth a Thousand Statistics? Medical Decision Making. 2005;25(4):398–405. doi: 10.1177/0272989X05278931.

- Feldman-Stewart D, Kocovski N, McConnell BA, Brundage MD, Mackillop WJ. Perception of Quantitative Information for Treatment Decisions. Medical Decision Making. 2000;20(2):228–38. doi: 10.1177/0272989X0002000208.

- General Accounting Office. 1999. Physician Performance: Report Cards under Development but Challenges Remain. Washington, D.C.: Report GAO/HEHS 99–178.

- Gibbs DA, Sangl J, Burrus B. Consumer Perspectives on Information Needs for Health Plan Choice. Health Care Financing Review. 1996;18(1):55–74.

- Herzlinger R. Let's Put Consumers in Charge of Healthcare. Harvard Business Review. 2002;22(7):44–55.

- Hibbard JH, Harris-Kojetin L, Mullin P, Lubalin J, Garfinkel S. Increasing the Impact of Health Plan Report Cards by Addressing Consumers' Concerns. Health Affairs. 2000;19(5):138–43. doi: 10.1377/hlthaff.19.5.138.

- Hibbard JH, Peters E. Supporting Informed Consumer Health Care Decisions: Data Presentation Approaches that Facilitate the Use of Information in Choice. Annual Review of Public Health. 2003;24:413–33. doi: 10.1146/annurev.publhealth.24.100901.141005.

- Hibbard JH, Slovic P, Jewett JJ. Increasing Informed Consumer Decisions in Health Care. Milbank Quarterly. 1997;75(3):395–414. doi: 10.1111/1468-0009.00061.

- Hibbard JH, Slovic P, Peters E, Finucane ML. Strategies for Reporting Health Plan Performance Information to Consumers: Evidence from Controlled Studies. Health Services Research. 2002;37(2):291–313. doi: 10.1111/1475-6773.024.

- Hibbard JH, Stockard J, Tusler M. Hospital Performance Reports: Impact on Quality, Market Share, and Reputation. Health Affairs. 2005;24(4):1150–60. doi: 10.1377/hlthaff.24.4.1150.

- HospitalCompare. 2008. [accessed on June 30, 2008]. Available at http://www.hospitalcompare.hhs.gov/Hospital/Search/SearchOptions.asp.

- Isaac T, Zaslavsky AM, Cleary PD, Landon BE. 2008. The Relationship between Patients' Perception of Care and Hospital Quality and Safety. Presented at CAHPS User Group Meeting, December 2008 [accessed on March 28, 2009]. Available at https://www.cahps.ahrq.gov/content/community/Events/files/F-3-P_Isaac.pdf.

- Jha A, Epstein AM. The Predictive Accuracy of the New York State Coronary Artery Bypass Surgery Report-Card System. Health Affairs. 2006;25(3):844–55. doi: 10.1377/hlthaff.25.3.844.

- Kaiser Family Foundation. 2004. “National Survey on Consumers' Experiences with Patient Safety and Quality Information” [accessed on May 8, 2008]. Available at http://www.kff.org/kaiserpolls/upload/National-Survey-on-Consumers-Experiences-With-Patient-Safety-and-Quality-Information-Survey-Summary-and-Chartpack.pdf.

- Klein JG, Ahluwalia R. Negativity in the Evaluation of Political Candidates. Journal of Marketing. 2005;69(1):131–42.

- Landro L. 2004. “Consumers Need Health-Care Data.” Wall Street Journal, January 29, 2004.

- Lupton D, Donaldson C, Lloyd P. Caveat Emptor or Blissful Ignorance? Patients and the Consumerist Ethos. Social Science & Medicine. 1991;33(5):559–68. doi: 10.1016/0277-9536(91)90213-v.

- Marshall MN, Shekelle PG, Leatherman S, Brook RH. The Public Release of Performance Data: What Do We Expect to Gain? A Review of the Evidence. Journal of the American Medical Association. 2000;283(14):1866–74. doi: 10.1001/jama.283.14.1866.

- Peters E, Dieckmann N, Dixon A, Hibbard JH, Mertz CK. Less Is More in Presenting Quality Information to Consumers. Medical Care Research and Review. 2007a;64(2):169–90. doi: 10.1177/10775587070640020301.

- Peters E, Hibbard J, Slovic P, Dieckmann N. Numeracy Skill and the Communication, Comprehension, and Use of Risk-Benefit Information. Health Affairs. 2007b;26(3):741–8. doi: 10.1377/hlthaff.26.3.741.

- Press-Ganey. 2008. “Hospital Pulse Report, 2008” [accessed on March 12, 2009]. Available at http://www.pressganey.com/galleries/default-file/2008_Hospital_Pulse_Report.pdf.

- RAND Health. 2002. “Report Cards for Health Care: Is Anyone Checking Them?” [accessed on August 6, 2009]. Available at http://www.rand.org/pubs/research_briefs/RB4544/

- Rook KS. Encouraging Preventive Behavior for Distant and Proximal Health Threats: Effects of Vivid versus Abstract Information. Journal of Gerontology. 1986;41(4):526–34. doi: 10.1093/geronj/41.4.526.

- Rook KS. Effects of Case History versus Abstract Information on Health Attitudes and Behaviors. Journal of Applied Social Psychology. 1987;17(2):533–53.

- Satterfield T, Slovic P, Gregory R. Narrative Valuation in a Policy Judgment Context. Ecological Economics. 2000;34:315–31.

- Schwartz B. The Paradox of Choice: Why More Is Less. New York: Harper-Collins; 2004.

- Shaller D, Sofaer S, Findlay D, Hibbard JH, Lansky D, Delbanco S. Consumers and Quality-Driven Health Care: A Call to Action. Health Affairs. 2003;22(2):95–101. doi: 10.1377/hlthaff.22.2.95.

- Sofaer S. 2008. Engaging Consumers in Using Quality Reports: What It Takes to ‘Capture Eyeballs’. Presented at CAHPS User Group Meeting, December 2008 [accessed on March 28, 2009]. Available at https://www.cahps.ahrq.gov/content/community/Events/files/T-6-JC_Shoshanna-Sofaer.pdf.

- Tversky A, Kahneman D. Judgment under Uncertainty: Heuristics and Biases. Science. 1974;185(4157):1124–31. doi: 10.1126/science.185.4157.1124.

- Ubel PA, Jepson C, Baron J. The Inclusion of Patient Testimonials in Decision Aids: Effects on Treatment Choices. Medical Decision Making. 2001;21(1):60–8. doi: 10.1177/0272989X0102100108.

- Wilson CT, Woloshin S, Schwartz LM. Choosing Where to Have Major Surgery. Archives of Surgery. 2007;142:242–6. doi: 10.1001/archsurg.142.3.242.
