Abstract

Human attention is selective, focusing on some aspects of events at the expense of others. In particular, angry faces engage attention. Most studies have used pictures of young faces, even when comparing young and older age groups. Two experiments asked (1) whether task-irrelevant faces of young and older individuals with happy, angry, and neutral expressions disrupt performance on a face-unrelated task, (2) whether interference varies for faces of different ages and different facial expressions, and (3) whether young and older adults differ in this regard. Participants gave speeded responses on a number task while irrelevant faces appeared in the background. Both age groups were more distracted by own-age than other-age faces. In addition, young participants' responses were slower for angry than happy faces, whereas older participants' responses were slower for happy than angry faces. Factors underlying age-group differences in interference from emotional faces of different ages are discussed.

Keywords: interference, attention bias, own-age bias, emotion, facial expression

Human attention is necessarily selective. Our environment is highly complex and our cognitive system limited, so that not all stimuli can be fully analyzed. The ability to focus attention on critical elements of the environment while ignoring distracting information is essential for adaptive behavior and psychological well-being (Desimone & Duncan, 1995; Kastner & Ungerleider, 2001; Miller & Cohen, 2001). Some degree of stimulus processing seems to take place with only minimal attention and often without conscious awareness, guiding attention to salient events, even when they occur outside the current focus of attention (Merikle, Smilek, & Eastwood, 2001). In interaction with such bottom-up factors as stimulus salience, top-down factors, such as expectations and current goals, influence where, how, and what is attended (Compton, 2003; Corbetta & Shulman, 2002; Feinstein, Goldin, Stein, Brown, & Paulus, 2002; Yantis, 1998). Rapid detection of stimuli with emotional significance is crucial for survival and advantageous for our social interactions (Öhman & Mineka, 2001). Therefore, emotional information should be more likely to attract attention than other stimuli and be privileged for receiving ‘preattentive’ analysis.

Although the emotional value of stimuli differs between individuals, some stimuli, such as human faces, are emotionally significant to almost all individuals. Human faces constitute a unique category of biologically and socially important stimuli (Bruce & Young, 1998). Faces occur frequently in our lives, and they are associated with important outcomes (e.g., food, safety, social interactions). Even brief glances at a face are sufficient to extract various person-related information such as identity, familiarity, age, gender, attractiveness, trustworthiness, ethnic origins, direction of gaze, or emotional state (Engell, Haxby, & Todorov, 2007; Palermo & Rhodes, 2007; Vuilleumier, 2002). There is also evidence that faces are processed in a category-sensitive brain region (Farah, 1996; Haxby, Hoffman, & Gobbini, 2000; Kanwisher, McDermott, & Chun, 1997; Perrett, Hietanen, Oram, & Benson, 1992; Puce, Allison, Asgari, Gore, & McCarthy, 1996; but see Gauthier, Skudlarski, Gore, & Anderson, 2000). These factors make faces ideal candidates for studying selective attention and preattentive processing (Öhman, Flykt, & Lundqvist, 2000; Öhman & Mineka, 2001).

Preferential Processing of Faces

Multiple lines of research suggest that faces receive high priority in attention. Results from infant studies, for instance, show that faces preferentially engage attention compared to other stimuli (Goren, Sarty, & Wu, 1975; Johnson, Dziurawiec, Ellis, & Morton, 1991). Behavioral studies with healthy young adults have found that faces capture attention more readily than other stimuli such as pictures of musical instruments or appliances, and that people attend to faces even when they are not task relevant or when they distract from a task (Jenkins, Burton, & Ellis, 2002; Khurana, Smith, & Baker, 2000; Lavie, Ro, & Russell, 2003; Ro, Russell, & Lavie, 2001; Young, Ellis, Flude, McWeeny, & Hay, 1986; see Palermo & Rhodes, 2007, for a review; but see Brown, Huey, & Findlay, 1997). Even very briefly (pre-consciously) presented faces are associated with heightened activation of neural structures involved in emotion processing and attention (Cunningham et al., 2004; Morris, Öhman, & Dolan, 1998, 1999; Pourtois, Grandjean, Sander, & Vuilleumier, 2004; Schupp et al., 2004; Whalen et al., 2001). Studies with unilateral visual neglect patients show that contralesionally presented faces are detected more often than scrambled faces and other shapes (Vuilleumier, 2000), and prosopagnosic patients, even though unable to recognize familiar faces, show a normal pattern of interference from distracter faces (De Haan, Young, & Newcombe, 1987; Sergent & Signoret, 1992). Taken together, a broad range of studies suggests that faces preferentially capture or hold attention and may even be processed preattentively.

Selective Attention for, and Interference from, Emotional Faces

Studies have also investigated whether different facial expressions differentially capture or hold attention and differentially distract from tasks for which they are not relevant (see Palermo & Rhodes, 2007, for a review). Evidence to date points to preferential processing of, and interference from, faces with negative facial expressions, in particular those portending danger or threat (i.e., angry or fearful faces), compared to neutral or happy faces in healthy young adults (Fox, Russo, Bowles, & Dutton, 2001; Hansen & Hansen, 1988; Mogg & Bradley, 1999; Öhman, Lundqvist, & Esteves, 2001; van Honk, Tuiten, De Haan, van den Hout, & Stam, 2001; see Palermo & Rhodes, 2007, for a review; but see Mack & Rock, 1998; White, 1995). In their important early study using a visual search paradigm, Hansen and Hansen (1988) found that an angry face among happy faces was detected significantly faster and with fewer errors than a happy face among angry faces. Moreover, the time needed to detect the angry face among happy faces was not affected by the number of happy faces, suggesting highly efficient detection of faces with angry facial expressions. More recently, Öhman et al. (2001) replicated this ‘face-in-the-crowd’ effect across several experiments controlling for various potential confounds: They found faster and more accurate detection of angry than happy faces (among emotional as well as neutral faces), an effect that held across different search set sizes and for inverted as well as upright faces. Angry faces were also detected more quickly and accurately than other negative faces (i.e., sad or “scheming” faces), which suggests an ‘anger superiority effect’ that may be related to danger or threat rather than to negative valence generally.
Interestingly, participants were significantly slower to indicate that no target face was present on displays containing only angry distracter faces than on displays containing only neutral or only happy distracter faces. This suggests that participants ‘dwell’ longer on, or seem to have more difficulty disengaging attention from, angry compared to neutral or happy faces. In line with this finding, in an emotional Stroop task, van Honk et al. (2001) found that naming the color of an angry face took longer than naming the color of a neutral face. Furthermore, Fox et al. (2001), using a spatial cuing paradigm, found that when an angry rather than a happy or a neutral face was presented in one location on the computer screen, followed by a target in another location, individuals high in state anxiety took longer to detect the target, suggesting that the presence of an angry face had a strong impact on the disengagement of attention (see Milders, Sahraie, Logan, & Donnellon, 2006; Pourtois et al., 2004, for similar effects for fearful faces).

In sum, a wide range of studies across various experimental paradigms suggest that compared to neutral (or to happy) faces, angry and fearful faces more readily capture or hold attention, and distract from other stimuli. This ‘threat-advantage’ is often explained from an ecological or evolutionary standpoint that it is adaptive to preferentially attend and quickly respond to threat-related, potentially harmful stimuli (LeDoux, 1998; Öhman & Mineka, 2001).

Age-Group Differences in Selective Attention for, and Interference from, Emotional Faces

Does this preferential attention to, and interference from, angry faces persist throughout the lifespan? Results of the studies that compare young and older adults are mixed. On the one hand, there is some evidence of an ‘anger superiority effect’ for older adults similar to that of young adults (Hahn, Carlson, Singer, & Gronlund, 2006; Mather & Knight, 2006). Using a visual search paradigm, Hahn et al. (2006) asked participants to search for a discrepant facial expression in a matrix of otherwise homogeneous faces. Both young and older adults were faster to detect an angry face in an array of non-angry faces than a happy or a neutral face in arrays of non-happy or non-neutral faces. Similarly, Mather and Knight (2006) showed that both age groups were faster to detect a discrepant angry face than a discrepant happy or sad face.

On the other hand, Hahn et al. (2006) also found that young adults were slower to search arrays of angry faces for non-angry faces than to search happy or neutral arrays for non-happy or non-neutral faces, whereas older adults were faster to search through angry arrays than through happy or neutral arrays. These findings seem to suggest that detection and monitoring of negative information does not change with age but that older adults may become better able to disengage from negative as opposed to positive information, thus arguing for differences between young and older adults regarding distraction from angry versus happy faces.

Similarly, studies of age-group differences in attention preference suggest that older adults are more likely than young adults to favor positive and to avoid negative faces (see Carstensen & Mikels, 2005; Mather & Carstensen, 2005, for reviews). Mather and Carstensen (2003) used a dot-probe task in which participants saw pairs of faces, one emotional (happy, sad, or angry) and one neutral. Young adults did not exhibit an attention bias toward any category of faces, whereas older adults showed preferential attention to happy compared with neutral faces and to neutral compared with sad or angry faces. Recording eye movements during free viewing of emotional–neutral face pairs, Isaacowitz, Wadlinger, Goren, and Wilson (2006a) observed preferential gaze away from sad and toward happy faces in older adults, whereas young adults also looked away from sad faces but did not look toward happy faces. Further supporting these age-group differences, Isaacowitz, Wadlinger, Goren, and Wilson (2006b) reported an attention bias (in relation to neutral faces) toward happy faces and away from angry faces in older adults, whereas young adults showed only a bias toward fearful faces. Directly comparing the two age groups, older adults looked less at angry and fearful faces than young adults, but the age groups did not differ with respect to happy or sad faces (but see Sullivan, Ruffman, & Hutton, 2007). Furthermore, a recent eye tracking study by Allard and Isaacowitz (in press) found that regardless of whether positive, negative, and neutral images (not faces) were viewed under full or divided attention, older adults demonstrated a fixation preference for positive and neutral in comparison to negative images. 
These results suggest that older adults' preference toward positive over negative stimuli may not necessitate full cognitive control but also holds when individuals are distracted by competing information (see also Thomas & Hasher, 2006; but see Knight et al., 2007, for contrary findings).

Taken together, the literature suggests that both young and older adults are able to rapidly detect angry faces. At the same time, older adults seem to be better at ignoring or disengaging from angry than happy faces and preferentially attend to happy over angry faces. Older adults might therefore be more distracted by happy than angry faces, whereas young adults might be more distracted by angry than happy faces.

Why would older but not young adults be less able to ignore, and be more distracted by, happy than angry faces? The literature offers different explanations. There is, for example, evidence that older adults become more motivated to maximize positive affect and minimize negative affect as an adaptive emotion regulation strategy (Carstensen, Isaacowitz, & Charles, 1999). This motivation seems to be reflected in older adults' preferential attention to positive over negative information (or attention away from, and suppression of, negative relative to positive information; Carstensen & Mikels, 2005; Mather & Carstensen, 2005) and should result in stronger distraction by happy than angry faces in older adults. Another explanation stems from evidence that older adults are less able, and take longer, to correctly identify facial expressions of anger as compared to happiness in emotion identification tasks (Ebner & Johnson, 2009; see Ruffman, Henry, Livingstone, & Phillips, 2008, for a review); angry faces that are less readily identified as angry may also be less likely to capture or hold older adults' attention.

Attention toward Own Age Faces

It is important to note that all of the studies on selective attention for, and interference from, emotional faces reviewed so far have used only faces of young or middle-aged individuals. Previous research in face recognition and person identification, using mostly neutral faces, has shown, however, that adults of different ages are more accurate and faster in old–new recognition memory tests for faces and persons of their own compared to other age groups (referred to as the “own age bias”; Anastasi & Rhodes, 2006; Bäckman, 1991; Bartlett & Fulton, 1991; Lamont, Stewart-Williams, & Podd, 2005; Mason, 1986; Perfect & Harris, 2003; Wright & Stroud, 2002). This memory effect suggests that the age of the face is one important factor that influences whether and how faces are attended to and may influence the extent to which a face distracts from a face-unrelated task. The own age effect is typically explained by the amount of exposure an individual has to classes of faces, assuming that people more frequently encounter own age faces than other age faces (Ebner & Johnson, 2009) and are therefore relatively more familiar with faces of their own age group (Bartlett & Fulton, 1991). Evidence of an own age bias in memory and person identification complicates the interpretation of attention studies that have used only young faces, as young faces may be less relevant and salient for older than for young participants.

Overview of the Present Study

The central aim of the present study was to bring together the literature on selective attention to, and interference from, emotional faces in young and older adults and evidence of own age effects in face processing. To our knowledge, so far there has been no systematic investigation, involving both young and older participants, of interference from faces displaying young and older individuals with different facial expressions.

The present study used a Multi-Source Interference Task, in which participants responded to numbers by pressing a button indicating which of three numbers was different from the other two (Bush & Shin, 2006). In our version of the task, to-be-ignored faces of young or older individuals displaying happy, angry, and neutral facial expressions appeared in the background. Across two experiments, one with young participants (Experiment 1) and one with both young and older participants (Experiment 2), we addressed three research questions: (1) Do task-irrelevant faces slow down response times on face-unrelated number trials compared to trials during which no faces are presented? Based on findings that faces have a strong interference potential even when they are task-irrelevant or distract from a task (see Palermo & Rhodes, 2007, for a review), we predicted that young participants would give slower responses to number trials when task-distracting faces were simultaneously presented in the background than when no faces were presented. (2) Is there more interference from own as opposed to other age faces in young and older participants as they respond to the number task? Referring to the own age bias in face processing (Bäckman, 1991; Lamont et al., 2005), we expected that young participants would give slower number responses when young as compared to older faces were presented in the background. Correspondingly, for older participants we expected slower number responses when older as compared to young faces were presented in the background. (3) Are young participants more distracted by angry compared to happy or neutral faces, whereas older participants are more distracted by happy than angry or neutral faces? 
Considering the literature on preferential attention to, and increased distraction potential of, angry relative to happy or neutral faces in young adults (see Palermo & Rhodes, 2007, for a review), we expected that young participants would give slower number responses when angry as compared to happy or neutral faces appeared in the background. Based on some evidence that older adults, even though equally likely as young adults to detect angry faces, seem to be better at ignoring or disengaging from angry than happy faces (Hahn et al., 2006) and preferentially attend to happy over angry faces (Isaacowitz et al., 2006b; Mather & Carstensen, 2003), we hypothesized that older participants would give slower number responses when happy than angry faces were presented in the background.

We did not have specific expectations regarding the interaction between age and expression of the face in terms of face interference effects. To our knowledge, there is no literature on this point. On the one hand, it is possible that both factors have independent effects and do not interact. On the other hand, it is also possible that the impact of one factor would depend on the other; for example, that certain facial expressions (e.g., anger, happiness) might especially attract or hold attention when shown by own age as opposed to other age individuals because of the potentially greater significance of emotion expression in a more probable interaction partner.

Experiment 1

Experiment 1 tested young participants. The purpose of this experiment was threefold: to examine (1) whether task-irrelevant, to-be-ignored faces of young and older individuals with different facial expressions (i.e., happy, angry, neutral) distracted attention from a face-unrelated primary task; (2) whether own age faces (i.e., young faces) distracted attention more from a face-unrelated task than other age faces (i.e., older faces); and (3) whether angry faces distracted attention more from a face-unrelated task than happy or neutral faces.

Methods

Participants

Twenty-two young adult undergraduate students (age range 18–25 yrs., M = 20.2, SD = 1.68, 37 % female) were recruited through the university's undergraduate participant pool and via flyers and received course credit or monetary compensation for participating. Participants reported good general emotional well-being (M = 4.05, SD = 0.14) and good general physical health (M = 3.95, SD = 0.25; scales described below).

Procedure

Participants were told about the testing procedure and signed a consent form. Then, using a computer, they worked on a modified version of the Multi-Source Interference Task (MSIT; Bush & Shin, 2006; Bush, Shin, Holmes, Rosen, & Vogt, 2003). We decided on this specific task as the face-unrelated task for multiple reasons: The MSIT has been shown to reliably and robustly activate the cognitive attention network, it produces a robust and temporally stable interference effect within participants, and it is easy to learn (Bush & Shin, 2006).

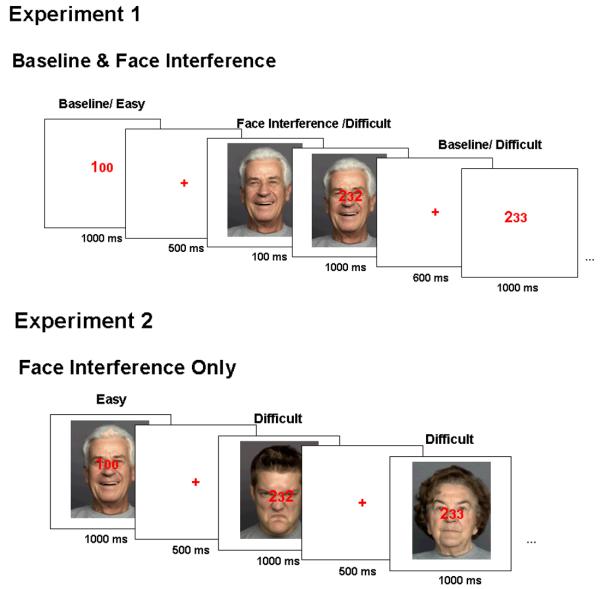

Figure 1 presents the version of the MSIT used in Experiment 1. Participants were told they would see, in the center of the screen, three digits, two matching and one different (e.g., 100; 232), and that the digit that was different from the other two was the target number. For example, given 100, the digit 1 would be the target, and given 232, the digit 3 would be the target. Their task was to report, via button-press, the identity of the target number, regardless of its position, as quickly and as accurately as possible. Participants used their right index, middle, and ring fingers on the buttons marked one, two, and three from left to right on their keyboard.

Figure 1.

Multi-Source Interference Tasks used in Experiment 1 and Experiment 2

Note. Sample number trials: ‘100’ correct response = 1, ‘232’ correct response = 3, ‘233’ correct response = 2.

Participants were informed that easy (congruent) and difficult (incongruent) trials would be randomly intermixed. On the easy trials the distracter numbers were always 0s, the target number always matched its position on the button-press (e.g., 100), and the font size of 0s was smaller than that of the target number. On the difficult trials the distracter numbers were 1s, 2s, or 3s, the target never matched its position on the button-press (e.g., 232, 233), and the target numbers were equally likely to be in large or small font size. In addition to the two difficulty levels, the task comprised two types of trials, baseline and face interference, randomly intermixed. Participants were told that on some trials, simultaneous with the numbers, faces would appear in the background (i.e., face interference trials) but that they should ignore the faces and focus on the number task.
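The trial rules above can be made concrete by enumerating the number stimuli they allow and scoring the correct response. The sketch below is an illustration only: it assumes each stimulus is a three-character string, it does not model the font-size manipulation, and the function names are hypothetical rather than part of the original E-Prime task. Whether the study used every difficult combination the rules permit is not stated; the enumeration simply lists that set.

```python
from collections import Counter
from itertools import product


def target_digit(stimulus: str) -> int:
    """Return the digit that differs from the other two, i.e., the correct button."""
    counts = Counter(stimulus)
    (odd,) = [digit for digit, n in counts.items() if n == 1]
    return int(odd)


def easy_trials() -> list:
    """Congruent trials: distracters are 0s and the target sits at its own button position."""
    trials = []
    for target in (1, 2, 3):
        digits = ["0", "0", "0"]
        digits[target - 1] = str(target)
        trials.append("".join(digits))
    return trials


def difficult_trials() -> list:
    """Incongruent trials: distracters are 1s, 2s, or 3s, and the target
    never occupies the position that matches its own button."""
    trials = []
    for target, position, distracter in product((1, 2, 3), repeat=3):
        if position == target or distracter == target:
            continue
        digits = [str(distracter)] * 3
        digits[position - 1] = str(target)
        trials.append("".join(digits))
    return trials


# Usage with the examples from Figure 1
for s in ("100", "232", "233"):
    print(s, "->", target_digit(s))
```

Under these rules there are three easy stimuli and twelve difficult ones; in every difficult stimulus the target's position conflicts with its button.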

In all trials, the three numbers remained on the screen for 1000 ms. On face interference trials, a fixation cross appeared during a 500-ms inter-stimulus interval, and the face appeared 100 ms before the onset of the numbers. To equate the inter-stimulus interval, the fixation cross on baseline trials was extended by 100 ms (for a total of 600 ms). Numbers and fixation crosses were printed in red; on face interference trials, the numbers appeared on the face, centered vertically on the bridge of the nose.
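A worked timeline shows how the 100-ms extension equates the two trial types. The sketch below assumes that the face stays visible until the numbers disappear and that nothing follows the 1000-ms number display within a trial; neither detail is stated above. Under those assumptions, the numbers appear 600 ms after trial onset on both trial types, and each trial lasts 1600 ms.

```python
# Durations in ms, taken from the description above. That the face stays on
# screen until the numbers disappear is an assumption.
NUMBERS_MS = 1000
FACE_TRIAL_FIXATION_MS = 500
FACE_LEAD_MS = 100
BASELINE_FIXATION_MS = FACE_TRIAL_FIXATION_MS + FACE_LEAD_MS  # 600 ms


def trial_timeline(face_trial: bool) -> list:
    """Return (event, onset_ms, offset_ms) tuples for a single trial."""
    if face_trial:
        face_onset = FACE_TRIAL_FIXATION_MS
        numbers_onset = face_onset + FACE_LEAD_MS
        return [
            ("fixation", 0, FACE_TRIAL_FIXATION_MS),
            ("face", face_onset, numbers_onset + NUMBERS_MS),
            ("numbers", numbers_onset, numbers_onset + NUMBERS_MS),
        ]
    return [
        ("fixation", 0, BASELINE_FIXATION_MS),
        ("numbers", BASELINE_FIXATION_MS, BASELINE_FIXATION_MS + NUMBERS_MS),
    ]


def numbers_onset(face_trial: bool) -> int:
    """Time from trial onset until the numbers appear."""
    return next(on for event, on, _ in trial_timeline(face_trial) if event == "numbers")


def trial_duration(face_trial: bool) -> int:
    """Offset of the last event in the trial."""
    return max(off for _, _, off in trial_timeline(face_trial))
```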

The task comprised 288 trials (144 baseline and 144 face interference trials), with equal numbers of easy and difficult trials in both conditions. Trial types were pseudo-randomly intermixed under three constraints: No trial was immediately followed by an identical trial, no more than two easy or two difficult trials appeared in a row, and no more than three baseline or three face interference trials appeared in a row. For the face interference trials, each of 72 faces was presented twice: once in combination with an easy trial and once in combination with a difficult trial. Presented faces belonged to one of two age groups (young faces: 18–31 years, older faces: 69–80 years) and had a happy, an angry, or a neutral facial expression. Within each age group, there were equal numbers of male and female faces and equal numbers of each expression. Twelve stimuli were presented for each age of face by facial expression combination. Presentation of a specific face with a specific expression was counterbalanced across participants. The presentation orders were pseudo-randomized with the constraints that each combination of the categories (age of face, gender of face, facial expression) was represented equally often throughout the presentation order, and that no more than two faces of the same category followed each other. The presentation orders were further controlled for hair color and attractiveness of faces as rated by six independent raters (four young and two older adults). The faces used in this experiment were taken from the FACES database (Ebner, Riediger, & Lindenberger, in press). We selected 72 faces that were equated across age groups for attractiveness and distinctiveness, and that displayed distinct versions of happy, angry, and neutral expressions. Stimulus presentation was controlled using E-Prime (Schneider, Eschman, & Zuccolotto, 2002). Responses and response times were recorded.
For both tasks, the experimenter gave verbal instructions and the program provided written instructions and practice runs.
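The sequencing constraints on trial order can be checked mechanically. The sketch below validates a candidate order rather than generating one; the `Trial` record and its fields are hypothetical, and "identical" is taken to mean the same difficulty, trial type, number stimulus, and face. It also spells out the design arithmetic: 2 ages × 3 expressions × 12 faces = 72 faces, each shown twice, giving 144 face interference trials alongside 144 baseline trials.

```python
from itertools import groupby
from typing import NamedTuple, Optional

# 2 ages of face x 3 expressions x 12 faces per cell; each face is shown once
# with an easy and once with a difficult number trial.
N_FACES = 2 * 3 * 12               # 72
N_FACE_TRIALS = 2 * N_FACES        # 144
N_TRIALS = 2 * N_FACE_TRIALS       # 288, including 144 baseline trials


class Trial(NamedTuple):
    difficulty: str           # "easy" or "difficult"
    trial_type: str           # "baseline" or "face"
    numbers: str              # e.g. "100" or "232"
    face_id: Optional[str]    # hypothetical face identifier; None on baseline trials


def longest_run(values) -> int:
    """Length of the longest stretch of identical consecutive values."""
    return max((sum(1 for _ in group) for _, group in groupby(values)), default=0)


def satisfies_constraints(sequence: list) -> bool:
    """Check a trial order against the three sequencing constraints."""
    no_identical_neighbors = all(a != b for a, b in zip(sequence, sequence[1:]))
    difficulty_ok = longest_run(t.difficulty for t in sequence) <= 2
    trial_type_ok = longest_run(t.trial_type for t in sequence) <= 3
    return no_identical_neighbors and difficulty_ok and trial_type_ok
```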

At the end of the session participants indicated their general emotional well-being (“In general, how would you rate your emotional well-being?”, response options: 1 = ‘distressed’ to 5 = ‘excellent’), their general physical health (“In general, how would you rate your health and physical well-being?”, response options: 1 = ‘poor’ to 5 = ‘excellent’), the extent to which they had felt distracted by the faces (“Overall, how much do you feel you were distracted by the faces?”, response options: 1 = ‘not distracted at all’ to 5 = ‘very distracted’), as well as what type of face (age of face, facial expression) had been more distracting (“Which type of face was more distracting?”; response options: young, old, no difference, and happy, angry, neutral, no difference, respectively). Test sessions typically took 30 minutes.

Results and Discussion

Data Preparation

As shown in Tables 1 and 2, error rates were low. Erroneous or null responses (5.3 % of all trials) and the single anticipatory (< 300 ms) response were discarded from analyses. Preliminary analyses revealed no effect of gender of participant, no effect of gender of face, and no interaction; we therefore collapsed across male and female participants as well as male and female faces in all subsequent analyses.
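The exclusion rules amount to a simple per-trial filter applied before response times are averaged. In the sketch below the dictionary keys are hypothetical; the 300-ms cutoff is the one stated above. Computing one mean per participant and condition from the retained trials is an assumption about the pipeline, not something the text specifies.

```python
from typing import Optional

ANTICIPATORY_CUTOFF_MS = 300  # responses faster than this count as anticipatory


def keep_trial(response: Optional[int], correct: int, rt_ms: Optional[float]) -> bool:
    """Keep a trial only if a response was made, it was correct,
    and it was not anticipatory."""
    if response is None or rt_ms is None:      # null response
        return False
    if response != correct:                    # erroneous response
        return False
    return rt_ms >= ANTICIPATORY_CUTOFF_MS


def mean_correct_rt(trials: list) -> float:
    """Mean RT over retained trials; each trial is a dict with the
    hypothetical keys 'response', 'correct', and 'rt'."""
    kept = [t["rt"] for t in trials if keep_trial(t["response"], t["correct"], t["rt"])]
    return sum(kept) / len(kept) if kept else float("nan")
```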

Table 1.

Mean Response Times (RTs, in Milliseconds), Standard Deviations (SDs), and Percent of Errors (%E) for Young Participants, Experiment 1, Baseline and Face Interference Trials

| Trial Type | Easy RT | Easy SD | Easy %E | Difficult RT | Difficult SD | Difficult %E |
|---|---|---|---|---|---|---|
| Baseline Trials | 538 | 65 | 0.8 | 710 | 75 | 9.8 |
| Face Interference Trials | 535 | 61 | 1.0 | 718 | 75 | 9.6 |

Table 2.

Mean Response Times (RTs, in Milliseconds), Standard Deviations (SDs), and Percent of Errors (%E) for Young Participants, Experiment 1, Face Interference Trials

| Stimulus | Easy RT | Easy SD | Easy %E | Difficult RT | Difficult SD | Difficult %E |
|---|---|---|---|---|---|---|
| Young Faces | 537 | 62 | 0.8 | 727 | 84 | 8.9 |
| Older Faces | 534 | 62 | 1.3 | 708 | 71 | 10.2 |
| Happy Faces | 534 | 65 | 0.5 | 708 | 78 | 8.8 |
| Angry Faces | 534 | 64 | 0.7 | 730 | 71 | 9.5 |
| Neutral Faces | 537 | 61 | 1.8 | 715 | 83 | 10.4 |

Alpha was set at .05 for all statistical tests.

Interference Independent of Type of Face

To examine whether to-be-ignored faces (independent of age and expression of the face) distracted young participants' attention from the face-unrelated number task, we compared overall performance on baseline trials, on which no faces appeared in the background, with overall performance on face interference trials. We conducted a 2 (trial difficulty: easy, difficult) × 2 (trial type: baseline, face interference) repeated-measures analysis of variance (ANOVA) on response time on the number task, with trial difficulty and trial type as within-subject factors. Results are presented in Table 1. The main effect of trial type was not significant (Wilks' λ = .93, F(1, 21) = 1.20, p = .29, ηp² = .07), but the main effect of trial difficulty (Wilks' λ = .06, F(1, 21) = 293.84, p < .0001, ηp² = .95) and the trial type × trial difficulty interaction (Wilks' λ = .78, F(1, 21) = 4.67, p = .05, ηp² = .22) were significant: Responses were faster on easy than on difficult trials. In addition, responses on difficult, but not easy, trials were slower when faces were presented in the background than when no faces were presented.
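Because both factors in this design have two levels, each effect has one numerator degree of freedom and can be tested as a one-sample t-test on a per-participant contrast score, with F(1, n − 1) = t², Wilks' λ = (n − 1)/(n − 1 + F), and ηp² = 1 − λ. The sketch below implements that textbook equivalence in plain Python. It is not the authors' analysis script, and the input layout (one dict of cell means per participant) is an assumption.

```python
from math import sqrt
from statistics import mean, stdev


def one_df_test(contrast_scores: list) -> dict:
    """Test per-participant contrast scores against zero.

    For a within-subject effect with one numerator df, F(1, n - 1) equals
    the squared one-sample t, Wilks' lambda equals (n - 1) / (n - 1 + F),
    and partial eta squared equals 1 - lambda.
    """
    n = len(contrast_scores)
    t = mean(contrast_scores) / (stdev(contrast_scores) / sqrt(n))
    f = t * t
    df_error = n - 1
    wilks = df_error / (df_error + f)
    return {"F": f, "df": (1, df_error), "wilks_lambda": wilks, "partial_eta_sq": 1 - wilks}


def rm_anova_2x2(participants: list) -> dict:
    """participants: one dict per person mapping (difficulty, trial_type)
    to that person's mean RT, e.g. ("difficult", "face") -> 718.0."""
    trial_type, difficulty, interaction = [], [], []
    for p in participants:
        easy_base, easy_face = p[("easy", "baseline")], p[("easy", "face")]
        diff_base, diff_face = p[("difficult", "baseline")], p[("difficult", "face")]
        # Main effect of trial type: face minus baseline, averaged over difficulty
        trial_type.append((easy_face + diff_face) / 2 - (easy_base + diff_base) / 2)
        # Main effect of difficulty: difficult minus easy, averaged over trial type
        difficulty.append((diff_base + diff_face) / 2 - (easy_base + easy_face) / 2)
        # Interaction: is the face cost larger on difficult than on easy trials?
        interaction.append((diff_face - diff_base) - (easy_face - easy_base))
    return {
        "trial type": one_df_test(trial_type),
        "trial difficulty": one_df_test(difficulty),
        "trial type x difficulty": one_df_test(interaction),
    }
```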

Interference as a Function of Type of Face

Next, we examined whether young faces distracted attention more from the number task than older faces and whether angry faces distracted attention more than happy or neutral faces. Even though we did not have specific expectations regarding the interaction between age and expression of the face, we conducted a 2 (age of face: young, older) × 3 (facial expression: happy, angry, neutral) repeated-measures ANOVA on response time with age of face and facial expression as within-subject factors; this allowed us to examine the interaction between both factors in addition to their main effects. Because of the substantial difference in response times for easy versus difficult trials, we ran this analysis separately for easy and difficult trials.

Easy Trials

None of the effects reached significance for easy trials: main effect for age of face (Wilks' λ = .96, F(1, 21) = 0.79, p = .39, ηp² = .05), main effect for facial expression (Wilks' λ = .98, F(2, 20) = 0.15, p = .87, ηp² = .02), and age of face by facial expression interaction (Wilks' λ = .93, F(2, 20) = 0.57, p = .57, ηp² = .07).
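The facial-expression factor has three levels, so its multivariate test rests on two difference scores per participant; with n = 22 this yields the F(2, 20) reported above. The sketch below shows the standard one-sample Hotelling's T² computation behind such a Wilks' λ, assuming the two contrasts are angry − happy and neutral − happy (any two linearly independent contrasts give the same result). Because the test has a single hypothesis degree of freedom, ηp² = 1 − λ. This illustrates the standard computation; it does not reproduce the authors' software output.

```python
from statistics import mean


def hotelling_two_contrasts(diffs: list) -> dict:
    """One-sample Hotelling's T^2 on two per-participant difference scores.

    diffs holds one (d1, d2) pair per participant, e.g. (angry - happy,
    neutral - happy) RTs. For p = 2 contrasts and n participants,
    F = (n - p) / (p * (n - 1)) * T^2 with df (p, n - p),
    and Wilks' lambda = 1 / (1 + T^2 / (n - 1)).
    """
    n, p = len(diffs), 2
    m1 = mean(d[0] for d in diffs)
    m2 = mean(d[1] for d in diffs)
    s11 = sum((d[0] - m1) ** 2 for d in diffs) / (n - 1)
    s22 = sum((d[1] - m2) ** 2 for d in diffs) / (n - 1)
    s12 = sum((d[0] - m1) * (d[1] - m2) for d in diffs) / (n - 1)
    det = s11 * s22 - s12 * s12
    # m' S^-1 m using the closed-form inverse of the 2 x 2 covariance matrix
    quad = (s22 * m1 * m1 - 2 * s12 * m1 * m2 + s11 * m2 * m2) / det
    t2 = n * quad
    wilks = 1 / (1 + t2 / (n - 1))
    return {
        "T2": t2,
        "F": (n - p) / (p * (n - 1)) * t2,
        "df": (p, n - p),
        "wilks_lambda": wilks,
        "partial_eta_sq": 1 - wilks,
    }
```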

Difficult Trials

As summarized in Table 2, for difficult trials, the main effects for age of face (Wilks' λ = .80, F(1, 21) = 4.18, p = .05, ηp² = .20) and facial expression (Wilks' λ = .66, F(2, 20) = 4.12, p = .04, ηp² = .34) were significant. The interaction did not reach significance (Wilks' λ = .95, F(2, 20) = 0.42, p = .67, ηp² = .05). As expected, participants gave slower responses on the number task when young compared to older faces were presented in the background. Also confirming our expectations, participants gave slower responses with angry than happy faces in the background (t(21) = 2.95, p = .01). However, responses on trials with neutral background faces were not significantly different from trials with happy (t(21) = 1.02, p = .32) or angry faces (t(21) = −1.81, p = .09).1
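The follow-up comparisons between expressions are paired-samples t-tests on each participant's mean response time in two conditions, hence df = n − 1 = 21. A minimal sketch, assuming one mean per participant and condition (difficult trials, averaged over age of face); a positive t means the first condition was slower. The two-tailed p value would then come from the t distribution with n − 1 df, for example via `scipy.stats.ttest_rel`, which is not needed for the statistic itself.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(x: list, y: list) -> tuple:
    """Paired-samples t statistic and df for per-participant means x and y."""
    if len(x) != len(y):
        raise ValueError("x and y must hold one value per participant")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1
```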

Summary

There were several significant effects with respect to difficult trials: In line with our expectations, young participants responded more slowly on the number task when faces were presented in the background than when no faces were presented. This suggests that the task-irrelevant faces distracted from the face-unrelated number task. Also as expected, participants were more distracted on the number task when faces of their own age group (i.e., young faces) rather than the other age group (i.e., older faces) appeared in the background. In addition, facial expression influenced task performance: Partly confirming our expectations, young participants were distracted more by angry than happy faces, but there were no differences between neutral and happy or angry faces. Note that none of these effects were significant for easy trials. An explanation for the differences in findings between easy and difficult trials is discussed below.

Experiment 2

Experiment 2 tested young and older participants. The purpose of Experiment 2 was to examine whether young and older participants differed in interference from faces of different ages and with different facial expressions. Regarding young participants, we expected to replicate results for difficult trials in Experiment 1 that young faces would distract more from a face-unrelated task than older faces and that angry faces would distract more than happy faces. Correspondingly, regarding older participants, we hypothesized that older faces would distract more from a face-unrelated task than young faces and that happy faces would distract more than angry faces. This prediction was based on previous evidence of an own age effect in face processing (Bäckman, 1991; Lamont et al., 2005) and observations that older adults are better at disengaging from angry than happy faces (Hahn et al., 2006) and preferentially attend to happy relative to angry faces (Isaacowitz et al., 2006b; Mather & Carstensen, 2003).

Methods

Participants

Thirty-two young adult undergraduate students (age range 18–22 yrs., M = 19.3, SD = 1.34, 56% female) were recruited through the university's undergraduate subject pool, and 20 older adults (age range 65–84 yrs., M = 74.1, SD = 4.81, 50% female) were recruited from the community; participants received course credit or monetary compensation. Older participants reported a mean of 17.8 years of education (SD = 2.4). Physical and cognitive characteristics of the sample were comparable to those in Experiment 1. The age groups did not differ in reported general health and physical well-being (MY = 4.25, SD = 0.76; MO = 4.00, SD = 0.82; F(1, 50) = 1.22, p = .28, ηp² = .02) or in reported general emotional well-being (MY = 3.91, SD = 0.96; MO = 4.00, SD = 0.47; F(1, 50) = 0.16, p = .69, ηp² = .00). Older participants reported higher current positive affect (MY = 2.63, SD = 0.62; MO = 3.53, SD = 0.79; F(1, 50) = 20.63, p < .0001, ηp² = .30) and lower negative affect (MY = 1.39, SD = 0.52; MO = 1.13, SD = 0.15; F(1, 50) = 4.65, p = .04, ηp² = .09) than young participants. Neither positive nor negative affect influenced distraction by happy faces (positive affect: βY = −.07, tY(31) = −0.40, p = .69; βO = .10, tO(19) = 0.40, p = .69; negative affect: βY = .18, tY(31) = 1.01, p = .32; βO = −.02, tO(19) = −0.06, p = .95), angry faces (positive affect: βY = −.09, tY(31) = −0.50, p = .62; βO = .05, tO(19) = 0.21, p = .83; negative affect: βY = .19, tY(31) = 1.07, p = .29; βO = −.10, tO(19) = −0.38, p = .71), or neutral faces (positive affect: βY = −.05, tY(31) = −0.28, p = .78; βO = .10, tO(19) = 0.38, p = .71; negative affect: βY = .23, tY(31) = 1.24, p = .22; βO = −.05, tO(19) = −0.21, p = .84) in young or older participants; these variables were therefore not considered further.
Young participants scored better than older participants in visual motor processing speed (MY = 68.6, SD = 9.31; MO = 45.5, SD = 10.39; F(1, 50) = 67.65, p < .0001, ηp² = .58), but the age groups did not differ in vocabulary (MY = 22.9, SD = 4.20; MO = 22.0, SD = 4.76; F(1, 50) = 0.50, p = .48, ηp² = .01; tests are described below). Older participants scored high on the Mini-Mental State Examination (MMSE; Folstein, Folstein, & McHugh, 1975; M = 28.9, SD = 1.22; max possible = 30), providing no indication of early dementia in this group.²

Procedure

Participants were first informed about the testing procedure and signed a consent form. They then filled out the short version of the Positive and Negative Affect Schedule (PANAS; Watson, Clark, & Tellegen, 1988). Next, participants worked on the MSIT. The MSIT was identical to the one administered in Experiment 1 (see Figure 1), except that we included only face interference trials (no baseline trials) to reduce distraction from frequent switching between baseline and face interference trials, especially for older adults. In addition, numbers and faces appeared simultaneously (stimulus presentation: 1000 ms, inter-stimulus interval: 500 ms). As before, stimulus presentation was controlled with E-Prime, which recorded responses and response times; the experimenter gave verbal instructions, and the program provided written instructions and practice runs. There were 144 face interference trials. Trial types were again pseudo-randomly intermixed with the same constraints as in Experiment 1. Again, each of the 72 faces was presented twice, once with an easy trial and once with a difficult trial, in the same order as in Experiment 1.
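The trial structure described above (72 faces, each paired once with an easy and once with a difficult MSIT trial, for 144 trials in a fixed order) can be sketched in Python as follows. This is a hypothetical illustration, not the E-Prime script used in the study: faces are represented by the integers 0–71, the seed is arbitrary, and a plain shuffle stands in for the pseudo-randomization constraints carried over from Experiment 1, which are not reproduced here. Fixing the seed is one way to give every participant the same order.

```python
import random
from collections import Counter

N_FACES = 72    # number of faces, from the text
STIM_MS = 1000  # stimulus presentation, from the text
ISI_MS = 500    # inter-stimulus interval, from the text


def build_trial_list(seed: int = 1) -> list:
    """Pair each face once with an easy and once with a difficult MSIT trial.

    Hypothetical sketch: faces are integer IDs, the seed is arbitrary, and a
    plain shuffle replaces the study's (unreproduced) pseudo-random constraints.
    """
    trials = [(face, difficulty)
              for face in range(N_FACES)
              for difficulty in ("easy", "difficult")]
    rng = random.Random(seed)  # a fixed seed gives every participant the same order
    rng.shuffle(trials)
    return trials


trials = build_trial_list()
counts = Counter(difficulty for _, difficulty in trials)
total_seconds = len(trials) * (STIM_MS + ISI_MS) // 1000

print(len(trials))                          # 144
print(counts["easy"], counts["difficult"])  # 72 72
print(total_seconds)                        # 216
```

The 216 s (3.6 min) figure is simple arithmetic from the stated timing (144 trials × 1500 ms) and excludes instructions and practice runs.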

After the MSIT, participants completed an abbreviated version of the Vocabulary subtest from the verbal scale of the Wechsler Adult Intelligence Scale (WAIS; Wechsler, 1981; max score = 30). In this task, participants were asked to give a simple definition for each of 15 words (e.g., obstruct, sanctuary). Responses were coded as 0 = ‘incorrect’, 1 = ‘partly correct’, or 2 = ‘fully correct’. Participants next indicated their general emotional well-being (“In general, how would you rate your emotional well-being?”, response options: 1 = ‘distressed’ to 5 = ‘excellent’) and their general physical health (“In general, how would you rate your health and physical well-being?”, response options: 1 = ‘poor’ to 5 = ‘excellent’). They also completed the Digit-Symbol-Substitution test (Wechsler, 1981; max score = 93) as a measure of visual motor processing speed. In this task, each digit from 1 to 9 is assigned a symbol, and participants are asked to enter the correct symbol under each of the digits as quickly as possible. The score is the number of correctly entered symbols. Finally, participants rated the extent to which they had felt distracted by the faces (“Overall, how much do you feel you were distracted by the faces?”, response options: 1 = ‘not distracted at all’ to 5 = ‘very distracted’), as well as which type of face (age of face, facial expression) had been more distracting (“Which type of face was more distracting?”; response options: young, old, no difference, and happy, angry, neutral, no difference, respectively). Test sessions typically took 40 minutes for young participants and 50 minutes for older participants.

Results and Discussion

Data Preparation

As shown in Table 3, error rates were low. Erroneous or null responses (5.7% of all trials) and anticipatory responses (< 300 ms; 0.2% of all trials) were discarded from analyses. One older male participant and two older female participants could use only their index finger to respond, which resulted in especially slow response times. One older participant declined to continue the task after the first few trials. All four participants were excluded from analyses. Preliminary analyses revealed no effect of participant gender, no effect of face gender, and no interaction; we therefore collapsed across male and female participants as well as male and female faces in all subsequent analyses.
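The exclusion rules above can be expressed as a small filtering step. The Python sketch below is illustrative only: the `Trial` record, its field names, and the example data are hypothetical, and it assumes that a response is first checked for being erroneous or null and only then against the 300 ms anticipation cutoff (the text does not say how a fast, incorrect response was classified).

```python
from dataclasses import dataclass
from typing import Iterable, Optional

ANTICIPATION_MS = 300  # responses faster than this are treated as anticipatory


@dataclass
class Trial:
    participant: str
    rt_ms: Optional[float]  # None means no response was recorded
    correct: bool


def clean_trials(trials: Iterable[Trial], excluded: frozenset = frozenset()):
    """Drop excluded participants, erroneous/null responses, and anticipations.

    Returns (kept_trials, n_error_or_null, n_anticipatory). Counts refer only
    to trials from participants who were not excluded.
    """
    kept, n_error_or_null, n_anticipatory = [], 0, 0
    for t in trials:
        if t.participant in excluded:
            continue
        if t.rt_ms is None or not t.correct:
            n_error_or_null += 1
        elif t.rt_ms < ANTICIPATION_MS:
            n_anticipatory += 1
        else:
            kept.append(t)
    return kept, n_error_or_null, n_anticipatory


# Hypothetical example data, not data from the study.
data = [
    Trial("y01", 612, True),    # kept
    Trial("y01", 250, True),    # anticipatory
    Trial("y01", None, False),  # null response
    Trial("y01", 701, False),   # error
    Trial("o01", 950, True),    # participant excluded
]
kept, n_err, n_antic = clean_trials(data, excluded=frozenset({"o01"}))
print(len(kept), n_err, n_antic)  # 1 2 1
```

Percentages such as those reported above would then be the two counts divided by the total number of trials from retained participants.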

Table 3.

Mean Response Times (RTs, in Milliseconds), Standard Deviations (SDs), and Percent of Errors (%E), for Young and Older Participants, Experiment 2, Face Interference Trials

Easy Trials

| Stimulus | Young Participants RT | SD | %E | Older Participants RT | SD | %E |
|---|---|---|---|---|---|---|
| Young Faces | 575 | 71 | 1.3 | 721 | 54 | 0.8 |
| Older Faces | 571 | 66 | 0.9 | 734 | 64 | 0.8 |
| Happy Faces | 576 | 70 | 1.4 | 738 | 57 | 1.4 |
| Angry Faces | 573 | 70 | 0.7 | 722 | 60 | 0.7 |
| Neutral Faces | 570 | 68 | 1.3 | 724 | 59 | 0.7 |

Difficult Trials

| Stimulus | Young Participants RT | SD | %E | Older Participants RT | SD | %E |
|---|---|---|---|---|---|---|
| Young Faces | 737 | 74 | 8.0 | 953 | 56 | 7.3 |
| Older Faces | 732 | 74 | 6.5 | 946 | 63 | 12 |
| Happy Faces | 731 | 79 | 7.8 | 952 | 60 | 11 |
| Angry Faces | 733 | 79 | 6.7 | 948 | 54 | 9.4 |
| Neutral Faces | 741 | 79 | 7.3 | 948 | 67 | 9.0 |

Again, alpha was set at .05 for all statistical tests.

Interference as a Function of Type of Face

To first explore whether young and older participants differed in their overall task performance independent of the type of face (age, facial expression) presented, we conducted a 2 (age group of participant: young, older) × 2 (trial difficulty: easy, difficult) repeated-measures ANOVA on response times on the number task, with trial difficulty as the within-subject factor and age group of participant as the between-subjects factor. The main effects for age group of participant (F(1, 50) = 104.31, p < .0001, ηp² = .68) and trial difficulty (Wilks' λ = .05, F(1, 50) = 906.95, p < .0001, ηp² = .95), as well as their interaction (Wilks' λ = .69, F(1, 50) = 22.03, p < .0001, ηp² = .31), were significant. Older participants (MO = 839 ms, SD = 58) responded more slowly on the MSIT than young participants (MY = 654 ms, SD = 71). Furthermore, both age groups, but especially older participants, responded more slowly to difficult (MY = 735 ms, SD = 73; MO = 949 ms, SD = 57) than to easy trials (MY = 573 ms, SD = 68; MO = 728 ms, SD = 58).
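The effect sizes reported here can be checked against the test statistics using standard identities. For a univariate F test, ηp² = F·df1 / (F·df1 + df2). For a multivariate test with s = 1 (a single hypothesis degree of freedom, which holds for the within-subject effects in these designs), Wilks' λ = 1 / (1 + F·df1/df2), so that ηp² = 1 − λ, which is algebraically the same expression. The Python sketch below applies these identities; the function names are our own, and because published values are rounded, recomputed values may differ in the last digit.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F ratio: F*df1 / (F*df1 + df2)."""
    return (f * df_effect) / (f * df_effect + df_error)


def wilks_lambda_s1(f: float, df_effect: int, df_error: int) -> float:
    """Wilks' lambda for a multivariate test with s = 1: 1 / (1 + F*df1/df2).

    In this case partial eta squared equals 1 - lambda.
    """
    return 1.0 / (1.0 + f * df_effect / df_error)


# Values reported for the age group x trial difficulty ANOVA above.
print(round(partial_eta_squared(104.31, 1, 50), 2))  # 0.68 (age group)
print(round(wilks_lambda_s1(906.95, 1, 50), 2))      # 0.05 (trial difficulty)
print(round(partial_eta_squared(906.95, 1, 50), 2))  # 0.95
print(round(wilks_lambda_s1(22.03, 1, 50), 2))       # 0.69 (interaction)
print(round(partial_eta_squared(22.03, 1, 50), 2))   # 0.31
```

The same functions apply to the three-level facial expression effects (e.g., F(2, 49)), since those multivariate tests also have s = 1.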

Next, we examined whether both young and older participants' attention to a face-unrelated task was more distracted by own-age than by other-age faces, and whether young and older participants were differentially distracted by happy, angry, and neutral faces. We conducted a 2 (age group of participant: young, older) × 2 (age of face: young, older) × 3 (facial expression: happy, angry, neutral) repeated-measures ANOVA on response times on the number task, with age of face and facial expression as within-subject variables and age group of participant as the between-subjects factor. Again, this analysis allowed us to explore the interaction between age and expression of the face in addition to the two main effects. Because of the substantial difference in response times for easy versus difficult trials and the interaction between age group of participant and difficulty level, and to be consistent with Experiment 1, we again analyzed easy and difficult trials separately.

Easy Trials

With respect to easy trials, the main effects for age group of participant (F(1, 50) = 68.73, p < .0001, ηp² = .58) and for facial expression (Wilks' λ = .81, F(2, 49) = 5.51, p = .007, ηp² = .19), and the age of face × age group of participant interaction (Wilks' λ = .86, F(1, 50) = 7.76, p = .008, ηp² = .14) were significant. None of the other effects were significant: main effect for age of face (Wilks' λ = .96, F(1, 50) = 2.01, p = .16, ηp² = .04), facial expression × age group of participant interaction (Wilks' λ = .93, F(2, 49) = 1.84, p = .17, ηp² = .07), age of face × facial expression interaction (Wilks' λ = .98, F(2, 49) = 0.39, p = .68, ηp² = .02), and age of face × facial expression × age group of participant interaction (Wilks' λ = 1.00, F(2, 49) = 0.03, p = .97, ηp² = .00).

To examine the effect of age of face in young and older participants for easy trials, we followed up on the significant age of face × age group of participant interaction with repeated-measures analyses of response times, with age of face as the within-subject variable, separately for young and older participants. The effect of age of face was significant only in older participants (t(19) = −2.25, p = .04), not in young participants (t(31) = 1.27, p = .21; see Table 3): In line with our predictions, older participants responded more slowly on the number task when older rather than young faces were presented in the background. Consistent with Experiment 1, there was no significant effect for young participants on easy trials.

We then investigated which facial expression was more distracting for easy trials: First, we conducted paired-samples t-tests that tested mean differences in response times in each of the pairs of facial expressions (happy–angry, happy–neutral, angry–neutral), collapsed across young and older faces and young and older participants. Participants overall gave slower responses when happy than when angry (t(51) = 2.61, p = .01) or neutral faces (t(51) = 2.64, p = .01) appeared in the background; response times were not different for angry and neutral faces (t(51) = 0.16, p = .87). To test our hypothesis that young participants were more distracted by angry faces than happy faces, whereas older participants were more distracted by happy faces than angry faces, we then examined separately for young and older participants which facial expression was more distracting (even though the facial expression × age group of participant interaction was not significant). We conducted paired-samples t-tests that tested mean differences in response times in each of the pairs of facial expressions, collapsed across young and older faces. As presented in Table 3, confirming our predictions, older participants gave slower responses when happy than when angry (t(19) = 3.45, p = .003) or neutral (t(19) = 2.60, p = .02) faces appeared in the background; older participants' response times were not different for angry and neutral faces (t(19) = −0.51, p = .61). In line with Experiment 1, there were no significant effects for young participants in easy trials: happy versus angry (t(31) = 0.90, p = .37), happy versus neutral (t(31) = 1.38, p = .18), and angry versus neutral (t(31) = 0.58, p = .57). This showed that the greater distraction from happy than angry and neutral faces was driven by older participants.
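The paired-samples t-tests above compare each participant's mean response time in two expression conditions. A minimal Python sketch using only the standard library is shown below: t is the mean of the per-participant differences divided by its standard error (the standard deviation of the differences over √n), with n − 1 degrees of freedom. The function name and the four participants' response times are hypothetical; obtaining a p-value additionally requires the t distribution (for example, `scipy.stats.ttest_rel` returns both the statistic and the p-value).

```python
import math
from statistics import mean, stdev


def paired_t(x, y):
    """Paired-samples t-test on per-participant condition means.

    Returns (t, df, se_diff): se_diff is the standard error of the mean
    difference, i.e., stdev(differences) / sqrt(n).
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    diffs = [a - b for a, b in zip(x, y)]
    se_diff = stdev(diffs) / math.sqrt(len(diffs))
    return mean(diffs) / se_diff, len(diffs) - 1, se_diff


# Hypothetical mean RTs (ms) of four participants; not data from the study.
happy = [740, 760, 735, 750]
angry = [725, 748, 730, 736]
t, df, se = paired_t(happy, angry)
print(f"t({df}) = {t:.2f}")  # t(3) = 5.10
```

The `se_diff` value is the kind of quantity described in the note to Figure 2, whose error bars represent standard errors of the condition mean differences.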

Difficult Trials

For difficult trials, there was a main effect for age group of participant (F(1, 50) = 119.22, p < .0001, ηp² = .71) and an age of face × facial expression × age group of participant three-way interaction (Wilks' λ = .87, F(2, 49) = 3.72, p = .03, ηp² = .13). None of the other effects reached significance: main effect for age of face (Wilks' λ = .96, F(1, 50) = 2.11, p = .15, ηp² = .04), main effect for facial expression (Wilks' λ = .99, F(2, 49) = 0.25, p = .78, ηp² = .01), age of face × age group of participant interaction (Wilks' λ = 1.00, F(1, 50) = 0.06, p = .80, ηp² = .00), facial expression × age group of participant interaction (Wilks' λ = .96, F(2, 49) = 1.07, p = .35, ηp² = .04), and age of face × facial expression interaction (Wilks' λ = .99, F(2, 49) = 0.32, p = .73, ηp² = .01).

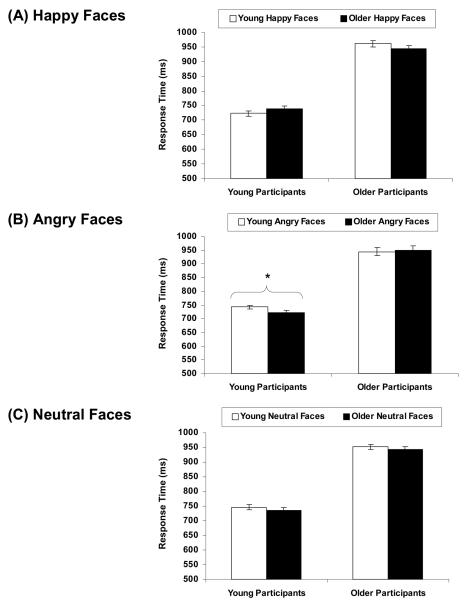

To better understand the significant three-way interaction, that is, how age of the face interacted with expression of the face in young and older participants, we conducted repeated-measures ANOVAs on response times with age of face and facial expression as within-subject variables separately for young and older participants. Results are presented in Table 3 and Figure 2. None of the main effects were significant in young or older participants: main effect for age of face (Wilks' λ = .96, F(1, 31) = 1.30, p = .26, ηp² = .04; Wilks' λ = .96, F(1, 19) = 0.80, p = .38, ηp² = .04, respectively) and main effect for facial expression (Wilks' λ = .93, F(2, 30) = 1.19, p = .32, ηp² = .07; Wilks' λ = .95, F(2, 18) = 0.43, p = .66, ηp² = .05, respectively). The age of face × facial expression interaction was significant in young participants (Wilks' λ = .79, F(2, 30) = 3.92, p = .03, ηp² = .21) but not in older participants (Wilks' λ = .91, F(2, 18) = 0.84, p = .45, ηp² = .09).

Figure 2.

Difficult Trials from Experiment 2

Note. Error bars represent standard errors of the condition mean differences.

* p < .05.

Following up on this significant interaction in young participants, we compared response times on difficult trials with young versus older background faces separately for happy, angry, and neutral expressions. Unlike in Experiment 1, young participants' responses on difficult trials were significantly slowed only by angry young faces (M = 742 ms, SD = 84) as compared to angry older faces (M = 723 ms, SD = 80; t(31) = 2.58, p = .02); there were no significant differences between young and older faces for happy (t(31) = −1.68, p = .10) or neutral expressions (t(31) = 1.23, p = .23).³

Summary

We found several differences between young and older participants: As predicted, older participants responded more slowly on easy number trials when older rather than young faces appeared in the background. In addition, although the facial expression × age group of participant interaction was not significant (which might be explained by the differential impact of task difficulty on young and older participants' face interference), subsequent planned comparisons indicated that, in line with our predictions, older participants gave slower responses on easy number trials with happy than with angry or neutral faces in the background. Young participants' responses on difficult number trials, in contrast, were slowed by young compared to older faces. It is important to note, however, that, unlike in Experiment 1, this own-age interference effect in young participants' responses on difficult number trials held only for angry, not for happy or neutral, faces. That is, there was a significant age of face × facial expression interaction for young participants but no significant main effects, suggesting that only the combination of an own-age face with an angry facial expression was effective in distracting young participants' attention from the face-unrelated primary task in Experiment 2.

Thus, Experiments 1 and 2 produced somewhat different findings for young participants. One possible explanation is that slightly different versions of the MSIT were administered in the two experiments. In Experiment 1, the face appeared 100 ms before the numbers, whereas in Experiment 2, face and numbers were presented simultaneously. Thus, in Experiment 1, face processing (including processing of age and facial expression) could begin before the number task initiated task-relevant processing. In Experiment 2, focus on the number task from the beginning of the trial may have reduced interference from the faces for all but the most salient combination of features for young adults, namely young, angry faces.

It is important to note that, consistently across Experiments 1 and 2, young participants showed response-time effects only on difficult trials, whereas older participants showed them only on easy trials. One explanation for this finding refers to the age-group differences in overall task performance (independent of the age and expression of the presented faces) found in Experiment 2⁴: Because easy trials were so easy for young participants, they may have been able to attend to the faces without being distracted by them (or to attend to them after completing the easy number task). Only difficult trials were demanding enough for the task to be sensitive to competition from distraction. For older participants, in contrast, the difficult trials may have been so difficult (note in Table 3 that older participants' mean response times on difficult trials were close to the 1000 ms stimulus presentation time) that the task was not sensitive to added competition. Our findings therefore suggest that the ability to detect differential distraction as a function of the type of face (age, facial expression) in young and older participants was influenced by the overall difficulty of the face-unrelated primary task. Lavie (1995, 2000) has suggested that, depending on the level of attentional load of a primary task, distracters are either excluded from attention or perceived. Furthermore, available evidence suggests that increases in task load differentially affect distracter interference in older compared to young adults (Maylor & Lavie, 1998). Thus, our findings suggest not only that young and older adults are differentially distracted by young and older faces, respectively, but also that this effect is influenced by the extent to which the primary task taxes attention in young and older adults.

General Discussion

In integrating evidence on age-group differences in selective attention to, and interference from, faces with different facial expressions and effects of an own age bias in face processing, the present study produced three novel findings: (1) Task-irrelevant, to-be-ignored faces of young and older individuals with happy, angry, and neutral expressions distracted attention from a face-unrelated primary task; (2) interference from faces varied for the different types of faces (age of face, expression of face); and (3) young and older adults showed different face interference effects. Next, we discuss these findings and their implications.

Faces Distract Attention

As expected, Experiment 1 found interference from faces in young adults. That is, relative to no faces, to-be-ignored faces that appeared in the background interfered with performance on difficult trials of a face-unrelated primary task. This finding is consistent with evidence that faces engage and hold attention even when task-irrelevant or task-distracting (see Palermo & Rhodes, 2007, for a review). Studies have shown that even very brief presentations of distracter faces are sufficient to attract attention (Koster, Crombez, Van Damme, Verschuere, & De Houwer, 2004) and that only a minimal degree of attention is required for processing faces (Holmes, Vuilleumier, & Eimer, 2003; Pessoa, McKenna, Guiterrez, & Ungerleider, 2002), resulting in delayed responses to non-face targets (Koster, Verschuere, Burssens, Custers, & Crombez, 2007). This interference effect is typically explained by the idea that faces contain and convey crucial social information, for example familiarity and emotional expression, and therefore constitute highly relevant biological and social stimuli that influence attentional processing.

Of course, further work that includes a non-face distracting background condition would be necessary to conclude that this overall interference effect from faces in young adults is greater for faces than for non-face distracters (e.g., geometrical shapes, musical instruments or appliances) in the present task. Furthermore, the present study does not disentangle whether face interference effects are due to faces capturing attention more readily or holding attention longer than distracting non-face stimuli (Bindemann, Burton, Hooge, Jenkins, & De Haan, 2005; Koster et al., 2004). It would be interesting to further separate these two processes in future studies and to also examine possible age-group differences therein. In addition, future developmental studies, linking changes in performance with neural development, may be particularly useful in further understanding how attention and face processing interact. Nevertheless, as discussed below, our findings clearly show differential attentional effects as a function of the age and the expression of the face as well as the age of the perceiver.

Greater Interference from Own Age Faces

The results regarding interference from faces of different ages were largely in line with our predictions and with the literature on the own-age effect in face processing (Anastasi & Rhodes, 2006; Bäckman, 1991; Lamont et al., 2005). Experiment 1 found an own-age face interference effect for young participants, in that they were more distracted by young than by older faces during difficult trials of a face-unrelated task. This own-age effect for young participants on difficult trials was also found in Experiment 2, but only for angry, not for happy or neutral, faces. A possible explanation for these differences between the two experiments is discussed above. Experiment 2 furthermore found an own-age face interference effect in older participants, in that they responded more slowly on easy trials of the number task when older rather than young faces were presented in the background.

Research on the own age bias in face processing typically explains better memory for faces from the own as opposed to other age groups in terms of frequency of contact, and thus familiarity people have with own compared to other age faces (Bartlett & Fulton, 1991). Different daily routines and different environmental settings of young and older adults likely lead to more frequent encounters with same age individuals. Indeed, both young and older adults report more frequent contact with persons of their own as opposed to the other age group, and this is related to how well participants are able to identify facial expressions of, and remember, young and older faces (Ebner & Johnson, 2009). This finding may reflect “expertise” maintained during daily contact or motivational factors (e.g., faces of one's own age group are more likely to represent potential interaction partners). As the present study did not address these potentially underlying factors, the influences of familiarity, frequency of contact, and motivational salience on interference effects of faces of different ages remain to be further determined in future research.

Differential Interference Depending on Facial Expression

In line with our predictions, young participants' performance on difficult trials in Experiment 1 suffered more from angry than from happy background faces. This finding is consistent with evidence that negative faces, particularly angry and fearful ones, distract attention more readily than neutral or happy faces in young adults (see Palermo & Rhodes, 2007, for a review). This effect is often explained from an evolutionary standpoint: It is adaptive to preferentially attend to threat-related, potentially harmful stimuli (LeDoux, 1998; Öhman & Mineka, 2001); processing of information related to threat or danger is highly prioritized and proceeds relatively automatically, even when the source of threat is unattended or presented outside of explicit awareness (Mogg & Bradley, 1999; Morris et al., 1998; Vuilleumier & Schwartz, 2001; Whalen et al., 1998); and processing of threat-related information may be mediated by specialized neural circuitry (Adolphs, Tranel, & Damasio, 1998; Esteves, Dimberg, & Öhman, 1994; Morris et al., 1999). However, it is important to note that Experiment 2 did not replicate this stronger interference from angry than from happy faces in young adults. As discussed above, differences between the task versions administered in Experiments 1 and 2 offer a possible explanation. Future studies will have to follow up on this explanation and examine conditions under which the age and the expression of a face interact, conjointly signal social and/or self-referential significance, and consequently distract attention.

Regarding differences between young and older adults, planned subsequent comparisons showed that, as predicted, older but not young participants were more distracted by happy than angry or neutral faces on easy trials. Differences in face interference between young and older participants clearly indicate that perceptual features of faces with different expressions such as, for example, open versus closed mouth or lines of eyebrows did not explain why the faces were differentially distracting. Rather, the results suggest that, in line with the literature, age-related shifts in interest, age-related differences in salience of different emotions, and increased motivational orientation toward positive over negative information with increasing age might have played a role (Allard & Isaacowitz, in press; Carstensen & Mikels, 2005; Isaacowitz et al., 2006b; Mather & Carstensen, 2005).

Given its obvious survival value, the direction of attention to imminent threat or danger as a phylogenetically old mechanism should be present and strong at all ages (Mathews & Mackintosh, 1998) and, indeed, some studies showed that, like young adults, older adults are able to rapidly detect angry faces (Hahn et al., 2006; Mather & Knight, 2006). Hahn et al., however, also found that older adults are better able to disengage from angry than from happy or neutral faces. Combined with evidence of preferential attention away from negative toward positive information in older adults (Carstensen & Mikels, 2005; Mather & Carstensen, 2005), older adults' stronger interference from happy compared to angry faces found in the present study seems to reflect improved disengagement from, or suppression of, negative information. (Note, however, that the present study does not allow disentangling of engagement of attention from disengagement of attention.) Further supporting this assumption, there is neuropsychological evidence suggesting that older compared to young adults engage more controlled attentional processing when responding to threat-related facial expressions, recruiting prefrontal and parietal pathways (Gunning-Dixon et al., 2003; Iidaka et al., 2002).

Another explanation for the age-group differences stems from evidence that anger is a more difficult emotion to categorize than happiness, especially for older adults. This difficulty might be reflected in reduced attention to anger, as angry faces are not detected as such quickly and accurately. Ruffman, Sullivan, and Edge (2006), for instance, found that older adults did not distinguish between high- and low-danger faces to the same extent as young adults did. There is also evidence that older adults have greater difficulty than young adults in recognizing almost all negative facial expressions (Calder et al., 2003; Ebner & Johnson, 2009; Moreno, Borod, Welkowitz, & Alpert, 1993; Phillips, MacLean, & Allen, 2002; see Ruffman et al., 2008, for a review).

Moreover, age-group differences in visual scan patterns of emotional faces may offer explanations for the age-group differences in face interference. For example, there is indication that successful identification of happiness is associated with viewing the lower half of a face, whereas successful identification of anger is associated with examining the upper half of a face (Calder, Young, Keane, & Dean, 2000). Furthermore, compared with young adults, older adults fixate less on top halves of faces (Wong, Cronin-Golomb, & Neargarder, 2005), which may not only adversely affect their ability to identify angry faces but may also explain less interference from angry than happy faces found in the present study. Age-group differences in visual scan patterns of faces of different ages and with different expressions need to be further explored in future research.

It is important to note that the present study focused on interference effects from young or older faces with happy, angry, or neutral expressions. Results of the present study therefore cannot be generalized to other positive or negative facial expressions, such as surprise, fear, or disgust. Also, the present study used only Caucasian faces and did not systematically vary the ethnicity of perceivers. Therefore, it remains for future studies to examine interference from young and older emotional faces of different races and in interaction with young and older perceivers of different ethnicities.

In conclusion, to our knowledge, the present study is the first to investigate interference from faces of different ages and with different facial expressions in young and older adults. It shows that task-irrelevant faces of young and older individuals with happy, angry, and neutral expressions disrupt performance on a face-unrelated task, that face interference differs as a function of the age and the expression of the face, and that young and older adults differ in this regard. The present findings have various potential implications. For example, stronger interference from own-age than from other-age faces makes individuals more likely to attend and respond to social signals from members of their own age group and renders those members more likely social interaction partners, whereas opportunities for social interaction with other age groups might be missed. Note that participants' self-reports suggest that individuals sometimes have limited insight into face interference effects and that these attention biases may be largely outside of conscious awareness. In addition, age-group differences in face interference might also help explain why young and older adults differ in the type of information they are most likely to remember (Grady, Hongwanishkul, Keightley, Lee, & Hasher, 2007; Leigland, Schulz, & Janowsky, 2004; Mather & Carstensen, 2003), as information that captures attention more readily or holds it longer is more likely to be stored in, and later retrieved from, memory. Finally, the findings pertaining to differences in interference effects as a function of the difficulty of the primary task highlight the possibility that detecting differences in the salience of distracting stimuli depends on the attentional demands of the primary task (Lavie, 2005), which seem to vary between young and older adults in ways that may mask systematic effects of the type of distracter.

Acknowledgements

This research was conducted at Yale University and supported by the National Institute on Aging Grant AG09253 awarded to MKJ and by a grant from the German Research Foundation (DFG EB 436/1-1) awarded to NCE. The authors wish to thank the Yale Cognition Project group for discussions of the studies reported in this paper, Kathleen Muller and William Hwang for assistance in data collection, and Carol L. Raye for helpful comments on earlier versions of this paper.

Footnotes

When asked to indicate the extent to which they had been distracted by the faces, participants reported having been somewhat distracted (M = 2.73, SD = 0.15), with the majority indicating that young faces were more distracting than older faces and that angry faces were more distracting than happy or neutral faces. This suggests some participant insight into face interference effects.

With the exception of one older female participant, who had contacted the lab independently to take part in the study, dementia screening for older participants had taken place one to two years earlier, when participants entered the lab's participant pool.

In Experiment 2, we found little participant insight into face interference effects: the majority of both age groups reported no difference in distraction by young and older faces and no difference in interference from happy, angry, or neutral faces. Some of the young participants reported more interference from angry than from happy or neutral faces.

To our knowledge, the present study is the first to examine age-group differences on the MSIT. It shows that young and especially older participants responded more slowly on difficult than on easy trials. Note, however, that we shortened the stimulus presentation time (1000 ms instead of the 1750 ms used in the original version of the task; Bush et al., 2003) and added faces as an additional potential source of interference.

References

- Adolphs R, Tranel D, Damasio AR. The human amygdala in social judgment. Nature. 1998;393:470–474. doi: 10.1038/30982.

- Allard ES, Isaacowitz DM. Are preferences in emotional processing affected by distraction? Examining the age-related positivity effect in visual fixation within a dual-task paradigm. Aging, Neuropsychology, & Cognition. In press. doi: 10.1080/13825580802348562.

- Anastasi JS, Rhodes MG. Evidence for an own-age bias in face recognition. North American Journal of Psychology. 2006;8:237–252.

- Bäckman L. Recognition memory across the adult life span: The role of prior knowledge. Memory & Cognition. 1991;19:63–71. doi: 10.3758/bf03198496.

- Bartlett JC, Fulton A. Familiarity and recognition of faces in old age. Memory & Cognition. 1991;19:229–238. doi: 10.3758/bf03211147.

- Bindemann M, Burton M, Hooge ITC, Jenkins R, De Haan EHF. Faces retain attention. Psychonomic Bulletin & Review. 2005;12:1048–1053. doi: 10.3758/bf03206442.

- Brown V, Huey D, Findlay JM. Face detection in peripheral vision: Do faces pop out? Perception. 1997;26:1555–1570. doi: 10.1068/p261555.

- Bruce V, Young A. In the eye of the beholder: The science of face perception. Oxford University Press; UK: 1998.

- Bush G, Shin LM. The Multi-Source Interference Task: an fMRI task that reliably activates the cingulo-frontal-parietal cognitive/attention network. Nature Protocols. 2006;1:308–313. doi: 10.1038/nprot.2006.48.

- Bush G, Shin LM, Holmes J, Rosen BR, Vogt BA. The Multi-Source Interference Task: validation study with fMRI in individual subjects. Molecular Psychiatry. 2003;8:60–70. doi: 10.1038/sj.mp.4001217.

- Calder AJ, Keane J, Manly T, Sprengelmeyer R, Scott S, Nimmo-Smith I, Young AW. Facial expression recognition across the adult life span. Neuropsychologia. 2003;41:195–202. doi: 10.1016/s0028-3932(02)00149-5.

- Calder AJ, Young AW, Keane J, Dean M. Configural information in facial expression perception. Journal of Experimental Psychology: Human Perception and Performance. 2000;26:527–551. doi: 10.1037//0096-1523.26.2.527.

- Carstensen LL, Isaacowitz DM, Charles ST. Taking time seriously: A theory of socioemotional selectivity. American Psychologist. 1999;54:165–181. doi: 10.1037//0003-066x.54.3.165.

- Carstensen LL, Mikels JA. At the intersection of emotion and cognition: Aging and the positivity effect. Current Directions in Psychological Science. 2005;14:117–121.

- Compton R. The interface between emotion and attention: A review of evidence from psychology and neuroscience. Behavioral and Cognitive Neuroscience Reviews. 2003;2:115–129. doi: 10.1177/1534582303255278.

- Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nature Reviews: Neuroscience. 2002;3:201–215. doi: 10.1038/nrn755.

- Cunningham WA, Johnson MK, Raye CL, Gatenby JC, Gore JC, Banaji MR. Separable neural components in the processing of black and white faces. Psychological Science. 2004;15:806–813. doi: 10.1111/j.0956-7976.2004.00760.x.

- De Haan EHF, Young AW, Newcombe F. Faces interfere with name classification in a prosopagnosic patient. Cortex. 1987;23:309–316. doi: 10.1016/s0010-9452(87)80041-2.

- Desimone R, Duncan J. Neural mechanisms of selective visual attention. Annual Review of Neuroscience. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205.

- Ebner NC, Johnson MK. Young and older emotional faces: Are there age-group differences in expression identification and memory? Emotion. 2009;9:329–339. doi: 10.1037/a0015179.

- Ebner NC, Riediger M, Lindenberger U. FACES—A database of facial expressions in young, middle-aged, and older women and men: Development and Validation. Behavior Research Methods. In press. doi: 10.3758/BRM.42.1.351.

- Engell AD, Haxby JV, Todorov A. Implicit trustworthiness decisions: Automatic coding of face properties in human amygdala. Journal of Cognitive Neuroscience. 2007;19:1508–1519. doi: 10.1162/jocn.2007.19.9.1508.

- Esteves F, Dimberg U, Öhman A. Automatically elicited fear: Conditioned skin conductance responses to masked facial expressions. Cognition & Emotion. 1994;8:393–413.

- Farah MJ. Is face recognition ‘special’? Evidence from neuropsychology. Behavioural Brain Research. 1996;76:181–189. doi: 10.1016/0166-4328(95)00198-0.

- Feinstein JS, Goldin PR, Stein MB, Brown GG, Paulus MP. Habituation of attentional networks during emotion processing. NeuroReport. 2002;13:1255–1258. doi: 10.1097/00001756-200207190-00007.

- Folstein MF, Folstein SE, McHugh PR. ‘Mini-mental State’: A practical method for grading the cognitive state of patients for the clinician. Journal of Psychiatric Research. 1975;12:189–198. doi: 10.1016/0022-3956(75)90026-6.

- Fox E, Russo R, Bowles R, Dutton K. Do threatening stimuli draw or hold visual attention in subclinical anxiety? Journal of Experimental Psychology: General. 2001;130:681–700.

- Gauthier I, Skudlarski P, Gore JC, Anderson AW. Expertise for cars and birds recruits brain areas involved in face recognition. Nature Neuroscience. 2000;3:191–197. doi: 10.1038/72140.

- Goren CC, Sarty M, Wu PY. Visual following and pattern discrimination of face-like stimuli by newborn infants. Pediatrics. 1975;56:544–549.

- Grady CL, Hongwanishkul D, Keightley M, Lee W, Hasher L. The effect of age on memory for emotional faces. Neuropsychology. 2007;21:371–380. doi: 10.1037/0894-4105.21.3.371.

- Gunning-Dixon FM, Gur RC, Perkins AC, Schroeder L, Turner T, Turetsky BI, Chan RM, Loughead JW, Alsop DC, Maldjian J, Gur RE. Age-related differences in brain activation during emotional face processing. Neurobiology of Aging. 2003;24:285–295. doi: 10.1016/s0197-4580(02)00099-4.

- Hahn S, Carlson C, Singer S, Gronlund SD. Aging and visual search: Automatic and controlled attentional bias to threat faces. Acta Psychologica. 2006;123:312–336. doi: 10.1016/j.actpsy.2006.01.008.

- Hansen CH, Hansen RD. Finding the face in the crowd: an anger superiority effect. Journal of Personality and Social Psychology. 1988;54:917–924. doi: 10.1037//0022-3514.54.6.917.

- Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends in Cognitive Sciences. 2000;4:223–233. doi: 10.1016/s1364-6613(00)01482-0.

- Holmes A, Vuilleumier P, Eimer M. The processing of emotional facial expression is gated by spatial attention: Evidence from event-related brain potentials. Cognitive Brain Research. 2003;16:174–184. doi: 10.1016/s0926-6410(02)00268-9.

- Iidaka T, Okada T, Murata T, Omori M, Kosaka H, Sadato N, Yonekura Y. Age-related differences in the medial temporal lobe responses to emotional faces as revealed by fMRI. Hippocampus. 2002;12:352–362. doi: 10.1002/hipo.1113.

- Isaacowitz DM, Wadlinger HA, Goren D, Wilson HR. Is there an age-related positivity effect in visual attention? A comparison of two methodologies. Emotion. 2006a;6:511–516. doi: 10.1037/1528-3542.6.3.511.

- Isaacowitz DM, Wadlinger HA, Goren D, Wilson HR. Selective preference in visual fixation away from negative images in old age? An eye-tracking study. Psychology and Aging. 2006b;21:40–48. doi: 10.1037/0882-7974.21.1.40.

- Jenkins R, Burton AM, Ellis AW. Long-term effects of covert face recognition. Cognition. 2002;86:43–52. doi: 10.1016/s0010-0277(02)00172-5.

- Johnson MH, Dziurawiec S, Ellis HD, Morton J. Newborns' preferential tracking of faces and its subsequent decline. Cognition. 1991;40:1–19. doi: 10.1016/0010-0277(91)90045-6.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: A module in human extrastriate cortex specialized for face perception. Journal of Neuroscience. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Kastner S, Ungerleider LG. The neural basis of biased competition in human visual cortex. Neuropsychologia. 2001;39:1263–1276. doi: 10.1016/s0028-3932(01)00116-6.

- Khurana B, Smith WC, Baker MT. Not to be and then to be: Visual representation of ignored unfamiliar faces. Journal of Experimental Psychology: Human Perception and Performance. 2000;26:246–263. doi: 10.1037//0096-1523.26.1.246.

- Knight M, Seymour TL, Gaunt JT, Baker C, Nesmith K, Mather M. Aging and goal-directed emotional attention: Distraction reverses emotional biases. Emotion. 2007;7:705–714. doi: 10.1037/1528-3542.7.4.705.

- Koster EHW, Crombez G, Van Damme S, Verschuere B, De Houwer J. Does imminent threat capture and hold attention? Emotion. 2004;4:312–317. doi: 10.1037/1528-3542.4.3.312.

- Koster EHW, Verschuere B, Burssens B, Custers R, Crombez G. Attention for emotional faces under restricted awareness revisited: do emotional faces automatically attract attention? Emotion. 2007;7:285–295. doi: 10.1037/1528-3542.7.2.285.

- Lamont AC, Stewart-Williams S, Podd J. Face recognition and aging: Effects of target age and memory load. Memory & Cognition. 2005;33:1017–1024. doi: 10.3758/bf03193209.

- Lavie N. Perceptual load as a necessary condition for selective attention. Journal of Experimental Psychology: Human Perception and Performance. 1995;21:451–468. doi: 10.1037//0096-1523.21.3.451.

- Lavie N. Selective attention and cognitive control: Dissociating attentional functions through different types of load. In: Monsell S, Driver J, editors. Control of cognitive processes. Attention and performance. XVIII. MIT Press; Cambridge, MA: 2000. pp. 175–194.

- Lavie N. Distracted and confused?: Selective attention under load. Trends in Cognitive Sciences. 2005;9:75–82. doi: 10.1016/j.tics.2004.12.004.

- Lavie N, Ro T, Russell C. The role of perceptual load in processing distractor faces. Psychological Science. 2003;14:510–515. doi: 10.1111/1467-9280.03453.

- LeDoux JE. Fear and the brain: Where have we been, and where are we going? Biological Psychiatry. 1998;44:1229–1238. doi: 10.1016/s0006-3223(98)00282-0.

- Leigland LA, Schulz LE, Janowsky JS. Age related changes in emotional memory. Neurobiology of Aging. 2004;25:1117–1124. doi: 10.1016/j.neurobiolaging.2003.10.015.

- Mack A, Rock I. Inattentional blindness. MIT Press; Cambridge, MA: 1998.

- Mason SE. Age and gender as factors in facial recognition and identification. Experimental Aging Research. 1986;12:151–154. doi: 10.1080/03610738608259453.

- Mather M, Carstensen LL. Aging and attentional biases for emotional faces. Psychological Science. 2003;14:409–415. doi: 10.1111/1467-9280.01455.

- Mather M, Carstensen LL. Aging and motivated cognition: The positivity effect in attention and memory. Trends in Cognitive Sciences. 2005;9:496–502. doi: 10.1016/j.tics.2005.08.005.

- Mather M, Knight MR. Angry faces get noticed quickly: Threat detection is not impaired among older adults. Journals of Gerontology Series B: Psychological Sciences and Social Sciences. 2006;61:P54–P57. doi: 10.1093/geronb/61.1.p54.

- Mathews A, Mackintosh B. A cognitive model of selective processing in anxiety. Cognitive Therapy and Research. 1998;22:539–560.

- Maylor EA, Lavie N. The influence of perceptual load on age differences in selective attention. Psychology and Aging. 1998;13:563–573. doi: 10.1037//0882-7974.13.4.563.

- Merikle P, Smilek D, Eastwood JD. Perception without awareness: Perspectives from cognitive psychology. Cognition. 2001;79:115–134. doi: 10.1016/s0010-0277(00)00126-8.