Abstract

Dopamine released in the nucleus accumbens is thought to contribute to the decision to exert effort to seek reward. This hypothesis is supported by findings that performance of tasks requiring higher levels of effort is more susceptible to disruption by manipulations that reduce accumbens dopamine function than tasks that require less effort. However, performance of some low-effort cue-responding tasks is highly dependent on accumbens dopamine. To reconcile these disparate results, we made detailed behavioral observations of rats performing various operant tasks and determined how injection of dopamine receptor antagonists into the accumbens influenced specific aspects of the animals' behavior. Strikingly, once animals began a chain of operant responses, the antagonists did not affect the ability to continue the chain until reward delivery. Instead, when rats left the operandum, the antagonists severely impaired the ability to return. We show that this impairment is specific to situations in which the animal must determine a new set of approach actions on each approach occasion; this behavior is called “flexible approach.” Both high-effort operant tasks and some low-effort cue-responding tasks require dopamine receptor activation in the accumbens because animals pause their responding and explore the chamber, and accumbens dopamine is required to terminate these pauses with flexible approach to the operandum. The flexible approach hypothesis provides a unified framework for understanding the contribution of the accumbens and its dopamine projection to reward-seeking behavior.

Introduction

Current models of the behavioral function of the dopamine projection from the ventral tegmental area (VTA) to the nucleus accumbens (NAc) propose that in addition to facilitating learning in some circumstances (Fields et al., 2007; Rangel et al., 2008), dopamine release in the NAc core invigorates behavioral responding (Berridge and Robinson, 1998; Ikemoto and Panksepp, 1999; Robbins and Everitt, 2007; Salamone et al., 2007). One prominent idea is that dopamine receptor activation in the NAc constitutes an element of the neural computation of the effort required to obtain reward (Phillips et al., 2007; Salamone et al., 2007; Floresco et al., 2008). This hypothesis is supported by numerous studies showing that reward-seeking tasks requiring high degrees of effort are disrupted by reduction of dopamine function in the NAc, whereas similar tasks that require less effort are much less affected (Cousins et al., 1996; Aberman and Salamone, 1999; Salamone et al., 2007).

However, recent work found that 6-hydroxydopamine (6-OHDA) lesions of the NAc, which selectively destroy catecholaminergic terminals, did not influence animals' decision to exert greater effort for greater reward in an operant choice task (Walton et al., 2009). Furthermore, both 6-OHDA lesions of the NAc and microinjection of dopamine receptor antagonists into the NAc can markedly reduce the ability of animals to exhibit reward seeking in response to reward-predictive cues (Nicola, 2007), even though the degree of effort required to earn each reward is similar to that of continuous reinforcement tasks, performance of which is not disrupted by NAc dopamine manipulations (Aberman and Salamone, 1999; Yun et al., 2004a). These findings support another class of hypotheses: that NAc dopamine facilitates the ability to respond to reward-predictive information (Berridge and Robinson, 1998; Robbins and Everitt, 2007).

One possibility is that dopamine facilitates separate, parallel computations within the NAc that underlie effortful and cue responding (Floresco, 2007; Nicola, 2007; Niv et al., 2007; Salamone et al., 2007). However, detailed behavioral observation is often absent from studies describing effort and cue-responding tasks, leaving open the possibility of a more parsimonious explanation: that NAc dopamine-dependent tasks are accomplished with a common, but previously unobserved, dopamine-dependent computational and behavioral strategy. To investigate this possibility, we began with the observation that in published behavioral studies, cue-responding tasks tend to be dependent on NAc dopamine if the intertrial intervals (ITIs) are long, whereas short-ITI tasks are less often affected by NAc dopamine manipulations (Nicola, 2007). We reasoned that animals would be more likely to leave the vicinity of task-related operanda during longer intervals, and that therefore NAc dopamine may be required for locomotor approach to reward-associated objects. Here, we use fine-grained analysis of behavior to demonstrate that animals often leave the operandum when performing both high-effort operant and low-effort, long-ITI cue-responding tasks. We show that dopamine receptor activation in the NAc core is required to return to the operandum when a novel set of actions to reach it must be determined on each approach occasion. Thus, NAc dopamine's contribution to action initiation under a limited set of circumstances explains its role in facilitating both high- and low-effort reward-seeking behavior.

Materials and Methods

Animals

Male Long–Evans rats weighing 275–300 g were obtained from Harlan and housed in a room with a 12 h on, 12 h off light cycle. Experiments were conducted during the light phase. One week after their arrival, rats were placed on a restricted diet, receiving 13 g of Bio-Serv formula F-173 rodent pellets and at least 30 ml of water every day until completion of experiments. Animals showing weight loss of >10% of free-feeding weight were given additional food until their weight stabilized. Animals were habituated to handling daily for at least 1 week before beginning experiments, which commenced 1–2 weeks after the start of food restriction. All animal procedures were consistent with the U.S. National Institutes of Health Guide for the Care and Use of Laboratory Animals, and were approved by the Institutional Animal Care and Use Committee of either the Ernest Gallo Clinic and Research Center or the Albert Einstein College of Medicine.

Behavioral tasks

Training procedures are described in detail in a separate section. Task paradigms used for experiments are described here.

Operant chambers

All behavioral experiments were run in standard Med Associates operant chambers (∼30 × 25 cm). The chambers were illuminated with two 28 V white house lights, and at all times during the experiment, white noise (65 dB) was played through a dedicated speaker. This, and the melamine cabinet enclosing each box, ensured that minimal outside noise distracted the animals. Operant chambers were equipped with two retractable levers on one wall, between which was a reward receptacle through which liquid 10% sucrose was delivered into a small well. For the conditional discrimination and switching (CDAS) experiment, chambers were configured differently (see Fig. 5B), with a nosepoke on one wall and a receptacle on each flanking wall. In all experiments, photobeams across the receptacles were used to detect the precise times of entry into and exit from the receptacle. Lever presses (and, in the CDAS task, nosepokes) were time stamped and recorded using the Med Associates system; the time resolution was 1 ms.

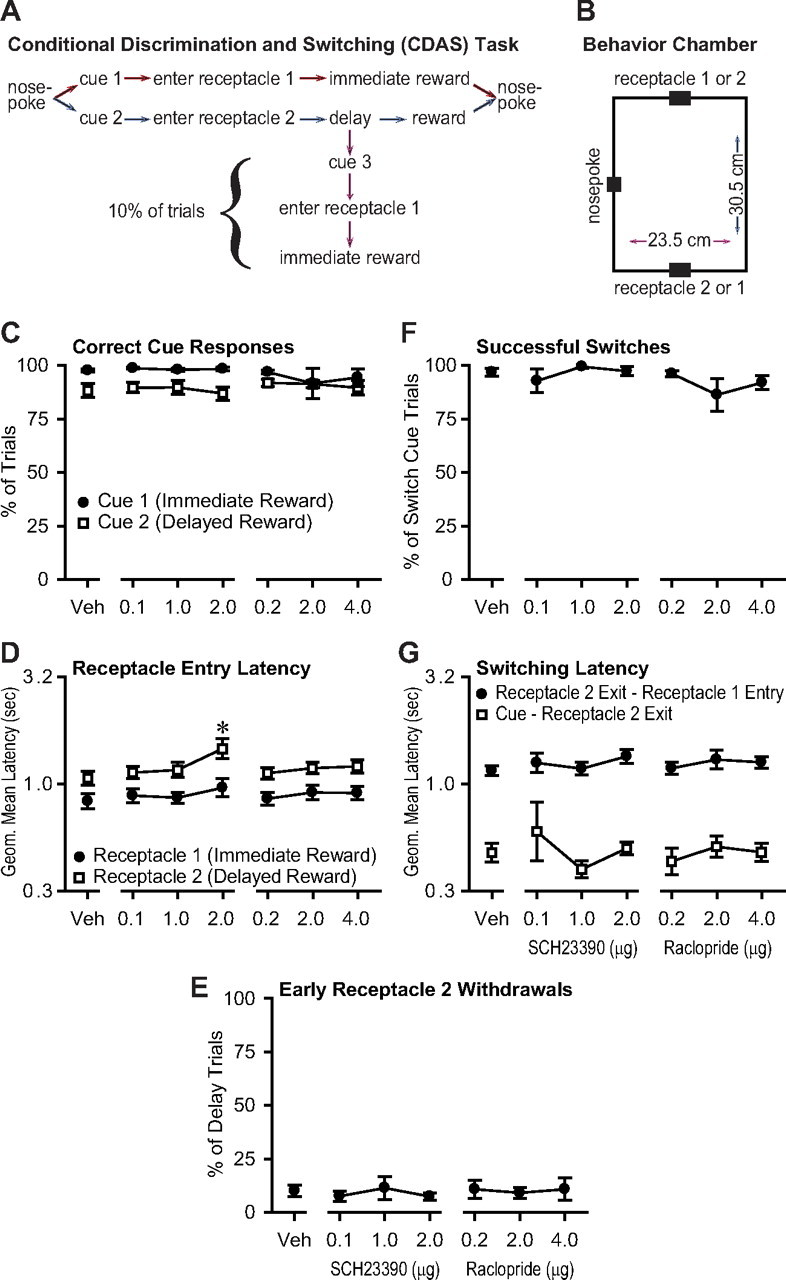

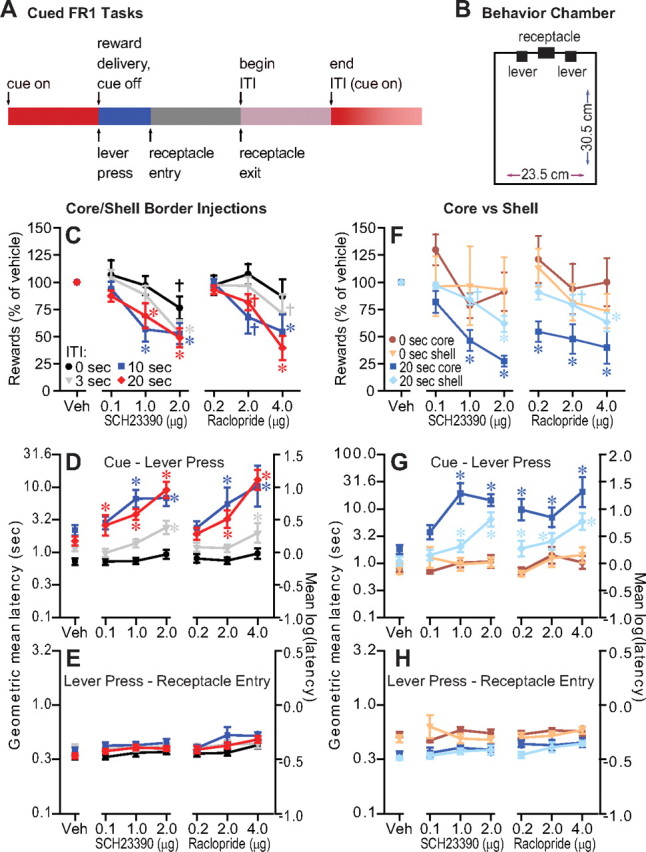

Figure 5.

Inflexible approach in the CDAS task is not substantially impaired by NAc core dopamine antagonist injection. A, CDAS task structure. B, Scale diagram of the behavior chamber. C, The proportion of correct responses to cue 1 and cue 2 was not affected by the antagonists. D, The antagonists had only minor effects on latency to enter the receptacles after cue 1 and cue 2 presentation. E, The antagonists did not affect animals' ability to wait for reward as indicated by the number of early withdrawals from receptacle 2. F, The antagonists also did not affect the ability to respond to cue 3 with a successful switch from waiting in receptacle 2 to entry into receptacle 1. G, The latencies to withdraw from receptacle 2 or to arrive at receptacle 1 after cue 3 presentation were unaffected.

Auditory cues

In all tasks, auditory cues were presented using a sound card dedicated to each operant chamber. The cues consisted of a siren cue (which cycled in frequency from 4 to 8 kHz over 400 ms), an intermittent cue (6 kHz tone that was on for 40 ms, off for 50 ms), and a constant tone cue (3 kHz tone).

Cued fixed ratio 1 task

The siren cue was presented at the beginning of the session and at the end of each ITI. A press on the lever caused the cue to be terminated and 60 μl of 10% sucrose reward to be delivered into the receptacle next to the lever. An ITI that was different for each group of rats (0, 3, 10, or 20 s) was then imposed, at the end of which the cue was presented again, and terminated by a lever press and reward delivery in the same way. There was no maximal cue presentation length.

A subset of rats trained on the cued fixed ratio 1 (FR1) task with 10 s ITI was used for video analysis (see Figs. 2C,D, 3). For these experiments, a light on the wall opposite the lever was illuminated whenever the auditory cue was on, to preserve a record of cue presentation and termination times for offline analysis.

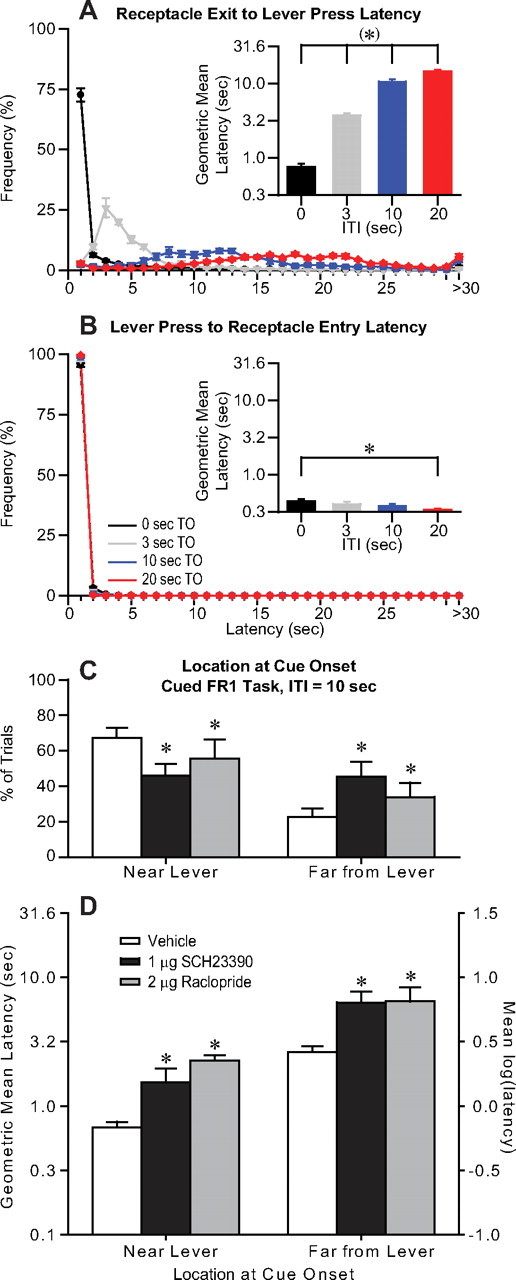

Figure 2.

Animals leave the lever and receptacle in long but not short-ITI tasks. A, Distributions of latencies to press the lever after reward consumption during vehicle injections (core, shell, and core/shell border injections combined) show that this latency is very short and narrowly distributed in the 0 s ITI task, but is longer and more variable in longer ITI tasks. Graphs show the mean (±SEM) across animals of the frequency of the latency given by the abscissa (bin width = 1 s). Because animals cannot move long distances in <1 s, this implies that animals moved directly from the receptacle to the lever in the 0 s ITI task, but not necessarily in the longer ITI tasks. A, Inset, Mean receptacle exit to lever press latency increases for longer ITIs. The differences between each value and each of the other three values are significant. B, The latency to enter the reward receptacle after a lever press was short and narrowly distributed in all ITI tasks, suggesting that animals rarely deviated from a direct path between the two even in long-ITI tasks. B, Inset, Mean lever press to receptacle entry latency is short and does not vary substantially among the tasks (although the difference between the 0 and 20 s ITI tasks is significant). C, Video analysis of the cued FR1 task with 10 s ITI shows that dopamine antagonist injection in the NAc core decreased the likelihood that animals were in the third of the chamber nearest the lever at cue onset, and increased the likelihood that the animals were in the third of the chamber farthest from the lever. D, The antagonists increased the latency to respond after cue onset by a similar log unit value (i.e., by a similar proportion) no matter the animal's location at cue onset.

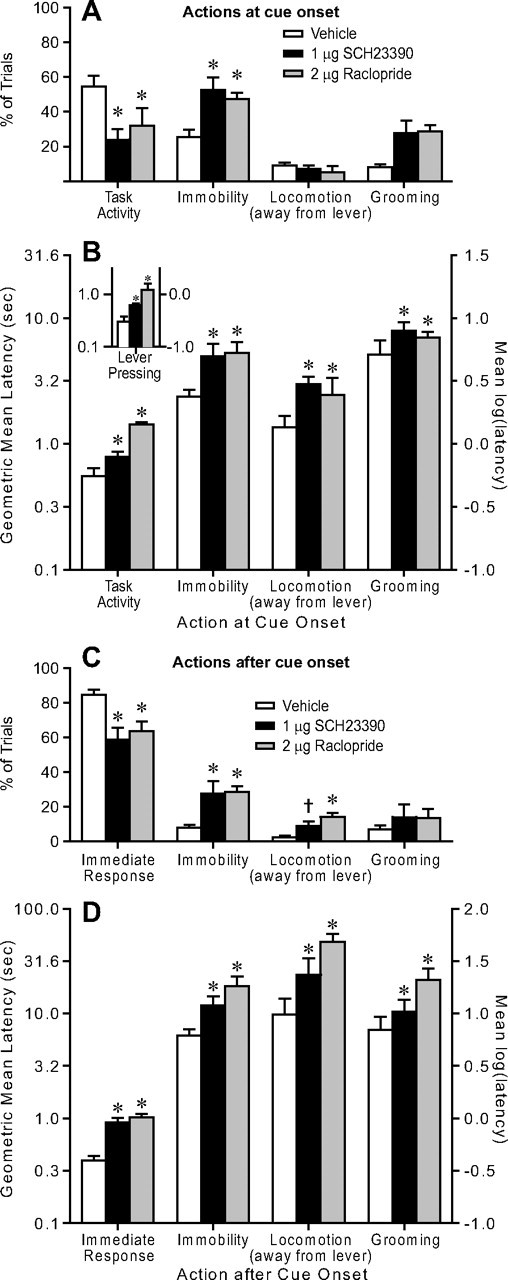

Figure 3.

In a cued FR1 task with a 10 s ITI, dopamine antagonist injection in the NAc core increases the latency to respond after cue presentation without regard to the animal's actions at cue presentation and between cue onset and response. A, The proportion of trials in which animals were already engaged in task-related activity was decreased by the antagonists, and the proportion of trials in which the animal was immobile at cue onset was increased. The incidences of locomotion away from the lever and grooming at cue onset were not affected. B, No matter the actions in which the animal was engaged at cue onset, the antagonists caused a similar proportional increase in cue to response latency (i.e., the log unit increase was similar regardless of action at cue onset). B, Inset, Even when animals were already pressing the lever at cue onset, the latency to the next press was increased by the antagonists. C, Dopamine antagonist injection in the NAc core decreased the fraction of trials with an immediate response, and at the same time increased the fractions of trials in which animals were immobile for at least 1 s between cue onset and lever press, and in which animals locomoted away from the lever during the cue to lever press interval. D, Each drug increased the cue to lever press latency regardless of the actions occurring within the cue to lever press interval. The increase in response latency was similar: no matter the actions within an interval, the antagonists caused an increase in latency of 0.35–0.65 log units.

Discriminative stimulus task

The discriminative stimulus (DS) task was similar to that used previously (Ambroggi et al., 2008; Ishikawa et al., 2008a,b). Two cues were presented, a DS and a neutral stimulus (NS). One of these was the siren cue, and the other was the intermittent cue; this relationship was different for different rats. Cues were presented on a variable interval schedule with average interval of 30 s, and randomly chosen at the time of presentation. The NS was always presented for 10 s, and responding during the NS was recorded but had no programmed consequences. A lever response during the DS terminated the DS and caused delivery of 10% sucrose reward into the receptacle next to the lever. DSs during which the animal did not respond were terminated after 10 s. Responses during the ITI between cue presentations were not rewarded.

For the DS task, light-emitting diodes (LEDs) were affixed to the animals' head caps (which also held the guide cannulae for microinjection), and an automated video tracking system (EthoVision) was used to track the animal's position throughout the experiment (30 frames/s). Video tracking data were synchronized with the Med Associates behavioral timestamp data through the use of timing TTL pulses exchanged between the systems every 5 s.

CDAS task

Animals initiated a trial with a nose poke into a hole located on one wall of the chamber. One of two cues (siren or intermittent) was presented immediately upon nosepoke. One of these instructed the animal to locomote to the receptacle on the left flanking wall, and the other instructed the animal to locomote to the receptacle on the right flanking wall. Correct entry into receptacle 1 in response to cue 1 caused immediate delivery of 60 μl of 10% sucrose. Correct entry into receptacle 2 in response to cue 2 caused reward delivery after a delay of up to 5 s. Early withdrawal, or responding in the incorrect receptacle, caused the imposition of a 10 s penalty period during which a trial could not be initiated. In some randomly chosen trials, cue 3 (constant tone) was presented while the animal was in receptacle 2. This cue instructed the animal to withdraw from receptacle 2 and enter receptacle 1 to receive immediate reward. Cue 3 was introduced late in training (after surgery) and was therefore experienced by the animal many fewer times than the other cues in this study. After all trials, there was a 3 s ITI (plus an additional 10 s if a penalty was imposed) before availability of trial initiation was signaled with a flashing light in the nose poke hole.

FR8 task

This task was identical to the cued FR1 task with 0 s ITI, except that 8 lever presses were required to earn each reward instead of 1. An auditory cue (siren) was presented upon receptacle exit, and was terminated with the eighth lever press. Automated video tracking of head-mounted LEDs was used, as described for the DS task.

Cannula implantation surgery

After reaching a performance plateau, rats were implanted with bilateral guide cannulae for microinjection. Stainless steel guide cannulae (27 ga) with plastic hubs were purchased from Plastics One and cut to a length such that when the base of the hub touched the top of the skull, the end of the guide was 2 mm above the target. Surgery proceeded as described previously (Wakabayashi et al., 2004; Yun et al., 2004b; Nicola et al., 2005; Ambroggi et al., 2008; Ishikawa et al., 2008b). Animals were anesthetized with isoflurane (0.5–2%) and placed in a stereotactic frame with the skull level. The scalp was retracted and holes drilled above the targets. Target coordinates for the tips of the injectors were as follows (in mm relative to bregma): core/shell border: AP 1.6, ML 1.1, DV 7.5; core: AP 1.2, ML 2.0, DV 7.8; shell: AP 1.2, ML 0.8, DV 7.3. Bilateral cannulae were implanted and fixed to the skull with bone screws and dental cement. For animals in the DS and FR8 groups, two threaded nylon spacers were embedded in the dental cement; these allowed a small headstage containing LEDs and a small battery to be attached to the head before video tracking experiments. All animals were treated with penicillin (i.m.), xylazine (i.p.), and Neopredef topical antibiotic powder before being allowed to recover. Stainless steel wires cut to be flush with the end of the guide cannulae were inserted into the guides and remained there at all times except during injections. Animals were allowed 4–7 d of ad libitum food and water before food restriction and behavioral experiments were restarted.

Microinjections

Baseline performance was reestablished over 3–7 d. After this, animals were microinjected with the D1 antagonist SCH23390 or D2 antagonist raclopride immediately before some sessions as described previously (Wakabayashi et al., 2004; Yun et al., 2004b; Nicola et al., 2005). Rats were gently restrained, and a 33 ga injector cannula extending 2 mm below the guide was inserted. One minute later, 0.5 μl of drug solution was infused into each hemisphere over 2 min using an electronic syringe pump, and after a 1 min diffusion period, the injectors were withdrawn. The animal was immediately placed into the operant chamber and the session began. Drugs were purchased from Sigma and dissolved in sterile 0.9% saline. Each animal received injections of both SCH23390 and raclopride; the order of drugs and doses was randomized. Generally, each animal received bilateral injections of vehicle (saline), 0.1, 1.0, and 2.0 μg of SCH23390, and 0.2, 2.0, and 4.0 μg of raclopride (doses per hemisphere). Injections were given every other day. If performance on the intervening days was not comparable to baseline performance, injections were withheld until performance stabilized.

Data analysis

Statistics and graphs

Repeated-measures ANOVAs with one factor (drug/dose) or two factors (drug/dose and either time interval, location, cue, distance traveled, or other factor) (see Table 1) were used to determine effects of dopamine antagonists on number of rewards earned per session and latencies between behavioral events. Latency values were transformed by taking the log10 because latency distributions tend to be skewed. The log latency distributions were more symmetrical, allowing the use of parametric statistics. Statistical tests using the log of the dependent variable determine whether there is a proportional, rather than additive, change (for instance, an increase of 1 log unit is a 10-fold increase, no matter the baseline value). This allows comparison of changes in latencies that are very different in the control condition. The mean ± SEM of the log latency values are displayed in figures, with the geometric mean latency (exponent of the mean of the log latencies) scale shown on the left-hand axis.
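The log transformation described above can be illustrated with a minimal Python sketch. The function names and the example latencies are hypothetical and are not taken from the original analysis, which was run in SigmaStat; the sketch only shows how the mean of the log10 latencies maps back to a geometric mean, and how a difference in log units maps to a fold change.

```python
import math
from statistics import mean, stdev


def log_latency_summary(latencies_s):
    """Summarize latencies (in seconds) on a log10 scale.

    Returns the mean and SEM of the log10 latencies, plus the geometric
    mean latency (10 raised to the mean of the log10 values).
    """
    logs = [math.log10(x) for x in latencies_s]
    mean_log = mean(logs)
    # SEM is undefined for a single observation; report 0.0 in that case.
    sem_log = stdev(logs) / math.sqrt(len(logs)) if len(logs) > 1 else 0.0
    return {
        "mean_log": mean_log,
        "sem_log": sem_log,
        "geometric_mean_s": 10 ** mean_log,
    }


def fold_change(log_unit_difference):
    """Convert a difference in log10 units into a proportional change."""
    return 10 ** log_unit_difference


# Hypothetical latencies spanning two orders of magnitude.
summary = log_latency_summary([1.0, 10.0, 100.0])
print(summary["geometric_mean_s"])  # geometric mean of the three latencies
print(fold_change(1.0))             # 1 log unit is a 10-fold increase
```

Because the comparison is proportional, an increase of 0.35 log units corresponds to roughly a 2.2-fold longer latency whether the control latency is 1 s or 10 s.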

Table 1.

Statistical results for all figures

| Figure(s) | Dependent variable | Factor(s) | Results |
|---|---|---|---|
| 1C | Rewards earned | Drug dose | 0 s ITI: F(6,7) = 2.4, p = 0.04, n = 8 rats |
| | | | 3 s ITI: F(6,6) = 4.2, p = 0.003, n = 7 |
| | | | 10 s ITI: F(6,7) = 5.5, p < 0.001, n = 8 |
| | | | 20 s ITI: F(6,6) = 11.5, p < 0.001, n = 7 |
| 1D,E, S2A | Log latency between events (cue-lever press, lever press-entry, consummatory) | Interval, drug dose | Interaction interval × drug dose: |
| | | | 0 s ITI: F(12,7) = 0.8, p = 0.7 |
| | | | 3 s ITI: F(12,6) = 2.5, p = 0.008 |
| | | | 10 s ITI: F(12,6) = 2.6, p = 0.006 |
| | | | 20 s ITI: F(12,6) = 11.3, p < 0.001 |
| 1F | Rewards earned | Drug dose | 0 s ITI core: F(6,7) = 1.6, p = 0.18, n = 8 |
| | | | 0 s ITI shell: F(6,7) = 1.5, p = 0.2, n = 8 |
| | | | 20 s ITI core: F(6,7) = 6.8, p < 0.001, n = 8 |
| | | | 20 s ITI shell: F(6,7) = 5.7, p < 0.001, n = 8 |
| 1G,H, S2B | Log latency between events (cue-lever press, lever press-entry, consummatory) | Interval, drug dose | Interaction interval × drug dose: |
| | | | 0 s ITI core: F(12,7) = 1.0, p = 0.5 |
| | | | 0 s ITI shell: F(12,6) = 1.6, p = 0.1 |
| | | | 20 s ITI core: F(12,4) = 3.3, p = 0.001 |
| | | | 20 s ITI shell: F(12,7) = 4.9, p < 0.001 |
| 2A, inset | Log latency between receptacle exit and lever press | ITI | Non-repeated-measures ANOVA: |
| | | | F(3,58) = 320, p < 0.001 |
| 2B, inset | Log latency between lever press and receptacle entry | ITI | Non-repeated-measures ANOVA: |
| | | | F(3,58) = 6.4, p < 0.001 |
| 2C | % of trials | Drug dose | Near lever: F(2,5) = 7.8, p = 0.016, n = 6 rats |
| | | | Far from lever: F(2,5) = 11.8, p = 0.006 |
| 2D | Log latency between cue onset and lever press | Location, drug dose | Location: F(1,4) = 47.9, p = 0.002 |
| | | | Drug dose: F(2,4) = 5.0, p = 0.04 |
| | | | Interaction location × drug dose: F(2,4) = 0.3, p = 0.7 |
| 3A | % of trials | Drug dose | Task activity: F(2,5) = 9.3, p = 0.011, n = 6 rats |
| | | | Immobility: F(2,5) = 13.8, p = 0.004 |
| | | | Locomotion: F(2,5) = 0.9, p = 0.45 |
| | | | Grooming: F(2,5) = 1.5, p = 0.29 |
| 3B | Log latency between cue onset and lever press | Action at cue onset, drug dose | Action at cue onset: F(3,4) = 71.6, p < 0.001 |
| | | | Drug dose: F(2,4) = 6.1, p = 0.02 |
| | | | Interaction action × drug dose: F(6,4) = 0.4, p = 0.9 |
| 3B, inset | Log latency between cue onset and lever press | Drug dose | Lever pressing: F(2,5) = 10.4, p = 0.006 |
| 3C | % of trials press | Drug dose | Immediate response: F(2,5) = 14.0, p = 0.004 |
| | | | Immobility: F(2,5) = 8.1, p = 0.015 |
| | | | Locomotion: F(2,5) = 6.0, p = 0.03 |
| | | | Grooming: F(2,5) = 1.5, p = 0.28 |
| 3D | Log latency between cue onset and lever press | Action after cue onset (comparison with Immediate Response), drug dose | Immobility vs immediate response: |
| | | | Action: F(1,4) = 208.6, p < 0.001 |
| | | | Drug dose: F(2,4) = 11.5, p = 0.003 |
| | | | Interaction action × drug dose: F(2,4) = 2.2, p = 0.2 |
| | | | Locomotion vs immediate response: |
| | | | Action: F(1,4) = 201.1, p < 0.001 |
| | | | Drug dose: F(2,4) = 16.5, p < 0.001 |
| | | | Interaction action × drug dose: F(2,4) = 3.4, p = 0.1 |
| | | | Grooming vs immediate response: |
| | | | Action: F(1,3) = 77.9, p = 0.003 |
| | | | Drug dose: F(2,3) = 11.3, p = 0.009 |
| | | | Interaction action × drug dose: F(2,3) = 3.7, p = 0.09 |
| 4C | Cue response ratio | Location, drug dose | DS: |
| | | | Location: F(2,4) = 16.8, p = 0.001 |
| | | | Drug dose: F(6,4) = 9.5, p < 0.001 |
| | | | Interaction location × drug dose: F(12,4) = 1.0, p = 0.4 |
| | | | NS: all main & interaction p > 0.2 |
| | | | n = 6 rats |
| 4D | Movement velocity | Movement type, drug dose | Movement type: F(3,4) = 12.2, p < 0.001 |
| | | | Drug dose: F(6,4) = 1.6, p = 0.2 |
| | | | Interaction movement type × drug dose: |
| | | | F(18,4) = 1.4, p = 0.2 |
| | | | (post hoc tests show post-DS movements resulting in lever press to be faster than each other type of movement, irrespective of drug dose) |
| 4E | First movement log latency | Cue, drug dose | Interaction cue × drug dose: |
| | | | F(6,4) = 4.2, p = 0.005 |
| 5C | Rewards earned | Drug dose | Cue 1: F(6,6) = 0.7, p = 0.7, n = 7 rats |
| | | | Cue 2: F(6,6) = 0.7, p = 0.7 |
| 5D | Log latency to reach receptacles 1 and 2 | Drug dose | Cue 1–receptacle 1 entry latency: F(6,6) = 2.3, p = 0.06 |
| | | | Cue 2–receptacle 2 entry latency: F(6,6) = 7.1, p < 0.001 |
| 5E | % of trials | Drug dose | F(6,6) = 0.2, p = 0.97 |
| 5F | % of trials | Drug dose | F(6,6) = 1.5, p = 0.2 |
| 5G | Log latency to respond to cue 3 | Drug dose | Cue 3–receptacle 2 exit latency: F(6,6) = 1.0, p = 0.5 |
| | | | Receptacle 2 exit–receptacle 1 entry latency: F(6,6) = 1.6, p = 0.2 |
| 7C | Log latency between events | Interval, drug | Interaction interval × drug: |
| | | | F(2,7) = 5.7, p = 0.02, n = 9 rats |
| 7E, inset | % of trials | Drug | F(2,8) = 0.7, p = 0.5 |
| 7F | Log latency between lever presses | Distance traveled, drug dose | Interaction distance traveled × drug: |
| | | | F(2,7) = 4.4, p = 0.03 |
| 7G | Velocity of last movement before lever press | Interval, drug | Interval: F(1,7) = 6.5, p = 0.04 |
| | | | Drug: F(1,7) = 3.5, p = 0.06 |
| | | | Interaction interval × drug: |
| | | | F(2,7) = 0.3, p = 0.7 |

Unless otherwise noted, all tests are repeated-measures ANOVA with one or two factors.

ANOVAs were followed by Holm–Sidak post hoc tests to determine significant differences from the vehicle control condition. A Holm–Sidak adjusted p < 0.05 was considered a significant difference, and, provided the overall ANOVA result was considered significant (p < 0.05), a Holm–Sidak post hoc unadjusted p < 0.1 was considered a trend toward significance. Tests were run in SigmaStat.
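The decision rule above can be sketched in Python as follows. This is an illustrative implementation of the standard step-down Holm–Sidak adjustment, not the SigmaStat routine used for the analysis; the function names, argument names, and example p values are hypothetical.

```python
def holm_sidak_adjust(p_values):
    """Step-down Holm-Sidak adjusted p values, returned in the input order.

    The i-th smallest raw p value (i = 1..m) is adjusted to
    1 - (1 - p)^(m - i + 1), then forced to be non-decreasing across ranks.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        adj = 1.0 - (1.0 - p_values[idx]) ** (m - rank)
        running_max = max(running_max, adj)
        adjusted[idx] = min(running_max, 1.0)
    return adjusted


def classify_comparisons(p_values, anova_p, alpha=0.05, trend_alpha=0.1):
    """Label each comparison with vehicle using the criteria stated in the text.

    "significant": adjusted p < alpha.
    "trend": overall ANOVA p < alpha and unadjusted p < trend_alpha.
    "ns": neither criterion is met.
    """
    labels = []
    for p_raw, p_adj in zip(p_values, holm_sidak_adjust(p_values)):
        if p_adj < alpha:
            labels.append("significant")
        elif anova_p < alpha and p_raw < trend_alpha:
            labels.append("trend")
        else:
            labels.append("ns")
    return labels


# Hypothetical unadjusted p values for three dose-versus-vehicle comparisons.
print(classify_comparisons([0.01, 0.04, 0.03], anova_p=0.01))
```

The adjustment is applied jointly to all comparisons within one ANOVA, so the label for a given dose depends on how many other doses were tested against vehicle.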

In some cued FR1 experiments, a hardware problem prevented detection of receptacle exits in a small number (<1%) of trials. This resulted in longer delays to cue presentation than otherwise would have occurred. These trials were detected and eliminated, along with the next trial, from the analysis. This reduced the total session time, and counts of rewards earned were normalized to 30 min.
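A minimal sketch of the normalization step, assuming a simple linear rescaling by the session time that remained after exclusion. The exact formula is not given in the text, so the function, its argument names, and the example numbers are hypothetical.

```python
def rewards_per_30_min(rewards_counted, session_s, excluded_s=0.0, target_s=30 * 60):
    """Rescale a reward count to a 30 min session.

    Assumes (hypothetically) that rewards scale linearly with the time
    remaining after excluded trials are removed from the session.
    """
    analyzed_s = session_s - excluded_s
    if analyzed_s <= 0:
        raise ValueError("no analyzable session time remains")
    return rewards_counted * target_s / analyzed_s


# Hypothetical session: 58 rewards counted, 60 s of trials excluded from 1800 s.
print(rewards_per_30_min(58, session_s=1800, excluded_s=60))
```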

Video analysis of cued FR1 task with 10 s ITI

To determine in more detail how the animals' behavior during a long-ITI task was affected by NAc core dopamine antagonist injection, we conducted a video analysis of rats performing a variant of the cued FR1 task with 10 s ITI. Injections of 1 μg of SCH23390 or 2 μg of raclopride that resulted in ∼50% reduction in rewards earned were selected for video analysis. From 6 rats, 6 vehicle, 6 SCH23390, and 3 raclopride sessions were obtained; the video from 1 rat's vehicle injection session was lost, and we therefore substituted data from a session in which the rat was not injected.

Videos were digitized by an assistant and analyzed with the experimenter blind to the animal's identity and drug received. The animal's actions at cue onset were noted (pressing lever, checking receptacle, immobile, grooming, or locomotion) as well as its location. The animal was scored as “near” the levers if its head was in the third of the chamber closest to the levers, and “far” if it was in the third farthest away. Animals were rarely in the middle third, and these trials were therefore not analyzed. The animal's actions between cue onset and lever press were scored (immediate response, immobility lasting > 1 s, grooming, or locomotion in a direction not toward the lever or receptacle). An immediate response means that none of the latter three actions occurred, and instead the animal approached the receptacle or the lever with <1 s delay before onset of locomotion. Animals often checked the receptacle before pressing the lever, or made failed attempts to press the lever before earning reward; these trials were classified as immediate response trials if grooming, immobility > 1 s, or locomotion away from the lever did not occur. In many trials, more than one of the latter three actions occurred, and all of the actions were noted for each trial.
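The scoring rules above were applied by a human observer, but their logic can be summarized in a short Python sketch. The coordinate convention (distance of the head from the lever wall along a 30 cm axis), the function names, and the action labels are assumptions made for illustration only.

```python
# Actions that, if present between cue onset and lever press, rule out
# an "immediate response" classification (labels are hypothetical).
INTERVENING_ACTIONS = {"immobility", "grooming", "locomotion_away"}


def location_zone(distance_from_lever_wall_cm, chamber_length_cm=30.0):
    """Assign the head position to the near, middle, or far third of the chamber."""
    third = chamber_length_cm / 3.0
    if distance_from_lever_wall_cm < third:
        return "near"
    if distance_from_lever_wall_cm >= 2.0 * third:
        return "far"
    return "middle"  # rare; these trials were not analyzed


def score_trial(actions_observed):
    """Return the set of scored categories for one cue-to-press interval.

    Receptacle checks and failed presses do not prevent an immediate
    response classification; only the three intervening actions do.
    """
    intervening = set(actions_observed) & INTERVENING_ACTIONS
    return intervening if intervening else {"immediate_response"}


print(location_zone(5.0))                            # head 5 cm from the lever wall
print(score_trial(["receptacle_check"]))             # still an immediate response
print(score_trial(["grooming", "locomotion_away"]))  # both actions are noted
```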

Analysis of automated video tracking data (DS and FR8 tasks)

The video tracking system determined the X and Y coordinates of the LEDs in real time for each video frame; these coordinates were time-stamped and saved to disk. (The entire video image was not saved.) Distances traveled during behavioral intervals (e.g., between lever presses) were computed by summing the distances between LED locations in consecutive frames (30 frames/s) in the interval. Gaps in the tracking data (resulting, for instance, from the animal bending its head at such an angle that the LED was not detectable) were filled by linear interpolation. To determine movement velocities, we first isolated movement epochs using previously published methods (Drai et al., 2000) (supplemental Fig. S3, available at www.jneurosci.org as supplemental material). For each time point t, the SD of distances between the LED location at t and its locations in a 200 ms window surrounding t was computed. This constitutes a movement index for t. The movement index was bimodally distributed, and larger values indicated that the animal was in locomotion. The threshold movement index for locomotion was individually chosen for each animal based on this distribution, and was generally ∼0.2. Consecutive epochs of at least 8 time points (267 ms) with a movement index greater than threshold were identified as locomotor events.
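The tracking pipeline described above can be sketched in Python as follows. This is a minimal illustration, not the original analysis code. Several details are assumptions: the 200 ms window is taken as ±3 frames at 30 frames/s, the population SD is used, the window includes frame t itself, positions are in centimeters, and only interior gaps are interpolated.

```python
import math
from statistics import pstdev


def interpolate_gaps(track):
    """Fill missing samples (None) by linear interpolation between valid frames.

    Gaps at the very start or end of the track are left as None (assumption).
    """
    filled = list(track)
    valid = [i for i, p in enumerate(track) if p is not None]
    for a, b in zip(valid, valid[1:]):
        (xa, ya), (xb, yb) = track[a], track[b]
        for i in range(a + 1, b):
            f = (i - a) / (b - a)
            filled[i] = (xa + f * (xb - xa), ya + f * (yb - ya))
    return filled


def path_length(track, start, end):
    """Distance traveled from frame start to frame end (sum of frame-to-frame steps)."""
    return sum(math.dist(track[i], track[i + 1]) for i in range(start, end))


def movement_index(track, half_window=3):
    """SD of distances between the position at t and positions in the window around t."""
    index = []
    for t, p in enumerate(track):
        lo = max(0, t - half_window)
        hi = min(len(track), t + half_window + 1)
        index.append(pstdev([math.dist(p, track[j]) for j in range(lo, hi)]))
    return index


def locomotor_epochs(index, threshold=0.2, min_frames=8):
    """Runs of at least min_frames consecutive frames with index above threshold.

    Returns (first_frame, last_frame) pairs, both inclusive.
    """
    epochs, start = [], None
    for t, value in enumerate(list(index) + [float("-inf")]):  # sentinel closes last run
        if value > threshold:
            if start is None:
                start = t
        else:
            if start is not None and t - start >= min_frames:
                epochs.append((start, t - 1))
            start = None
    return epochs


# Synthetic track: still for 10 frames, 1 cm/frame for 20 frames, still for 10 frames.
track = [(0.0, 0.0)] * 10 + [(float(x), 0.0) for x in range(1, 21)] + [(20.0, 0.0)] * 10
print(locomotor_epochs(movement_index(track)))
print(path_length(track, 9, 29))
```

Because the movement index is computed over a window, a detected epoch extends a few frames beyond the frames in which the position actually changes; the per-animal threshold controls how far.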

For analysis of movement velocity after cue onset (see Fig. 4D), the first valid movement before a lever press or cue termination was used, including movements that were already in progress when the cue was presented. Movements that were still in progress at the time of lever press, or that ended within 200 ms of the lever press, were classified as movements that resulted in a lever press. Movements during the ITI (see Fig. 4D) were identified by searching for pauses in motor activity in which the movement index was less than threshold for >0.5 s, followed by at least 8 consecutive points with movement index above threshold. The latency to move after DS onset (see Fig. 4E) was determined only for trials in which the movement index was below threshold at DS onset. The last movement before a lever press (see Fig. 7G) was identified as the last epoch of at least 8 consecutive points above threshold occurring before the lever press, but after the event that began the interval (previous lever press or receptacle exit). For all movements analyzed, the movement velocity was computed as the distance traveled during the locomotor event divided by the total duration of the event.
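The movement selection rules in this paragraph can be sketched in Python. The function names are hypothetical, frame indices are assumed to be sampled at 30 frames/s, and event duration is taken as the time between the first and last frame of the event, which is one reasonable reading of "total duration".

```python
import math
from itertools import groupby

FPS = 30  # video tracking frame rate


def event_velocity(track, start, end, fps=FPS):
    """Distance traveled during a locomotor event divided by its duration."""
    distance = sum(math.dist(track[i], track[i + 1]) for i in range(start, end))
    duration_s = (end - start) / fps
    return distance / duration_s


def resulted_in_press(event_start, event_end, press_frame, fps=FPS, tolerance_s=0.2):
    """True if the movement was in progress at the press or ended within 200 ms before it."""
    if event_start > press_frame:
        return False
    return event_end >= press_frame or (press_frame - event_end) / fps <= tolerance_s


def movements_after_pause(index, threshold, fps=FPS, min_pause_s=0.5, min_frames=8):
    """Start frames of ITI movements: a sub-threshold pause longer than
    min_pause_s followed by at least min_frames supra-threshold points."""
    starts, t, pause_frames = [], 0, None
    for above, group in groupby(index, key=lambda v: v > threshold):
        n = len(list(group))
        if above and n >= min_frames and pause_frames is not None:
            if pause_frames / fps > min_pause_s:
                starts.append(t)
        pause_frames = None if above else n
        t += n
    return starts


# Hypothetical movement index: long pause, movement, short pause, movement.
index = [0.0] * 20 + [1.0] * 10 + [0.0] * 5 + [1.0] * 10
print(movements_after_pause(index, threshold=0.2))

# Hypothetical straight-line movement of 1 cm per frame over 10 frame steps.
track = [(float(x), 0.0) for x in range(11)]
print(event_velocity(track, 0, 10))
```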

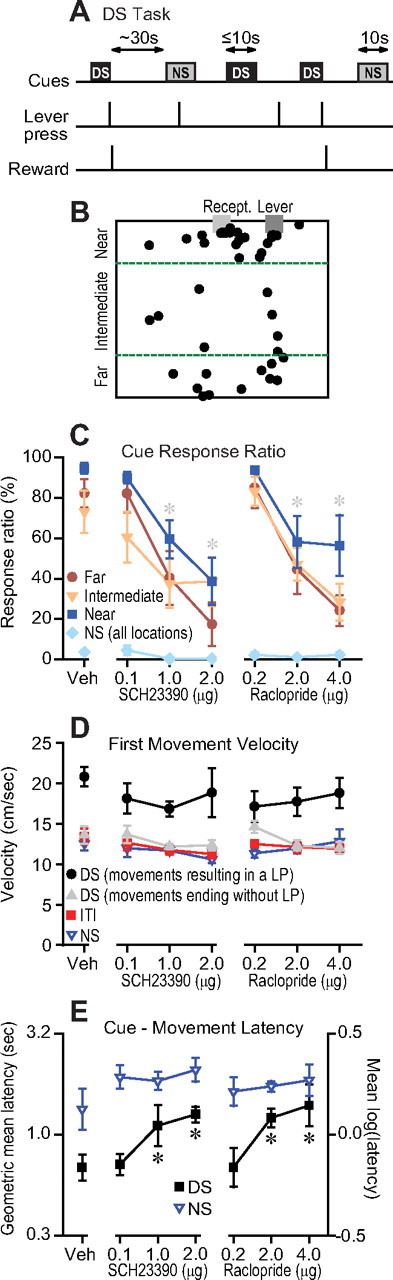

Figure 4.

Dopamine antagonist injection in the NAc core impairs flexible approach in response to a reward-predictive DS. A, DS task structure. A lever press during the DS (maximum 10 s) terminated the cue and delivered sucrose reward. Responses during the NS did not earn reward. B, Data from a representative vehicle injection session show that the animal's location at cue onset was widely variant. Diagram is to scale (30 × 25 cm); green dashed lines show borders demarking the regions “near,” “intermediate,” and “far” from the lever. C, The response ratio (proportion of DSs to which the animal responds with a lever press) was reduced by D1 or D2 antagonist injection into the NAc core, and this effect did not depend on the animals' location at DS onset. Animals rarely responded to the NS, and data for all locations were combined. Gray asterisks indicate significant difference from vehicle of the overall DS response ratio combined across locations. D, The velocity of the first movement after the DS was highest when the movement resulted in a lever press (LP) without an intervening pause. When the first post-DS movement did not result in a lever press, the velocity was lower, and similar to the first movement after the NS and to movements during the ITI. The antagonists had no significant effects on these velocities. E, The latency to the first movement after the DS was increased by the antagonists, whereas the latency to the first movement after the NS was longer and not significantly affected by the antagonists.

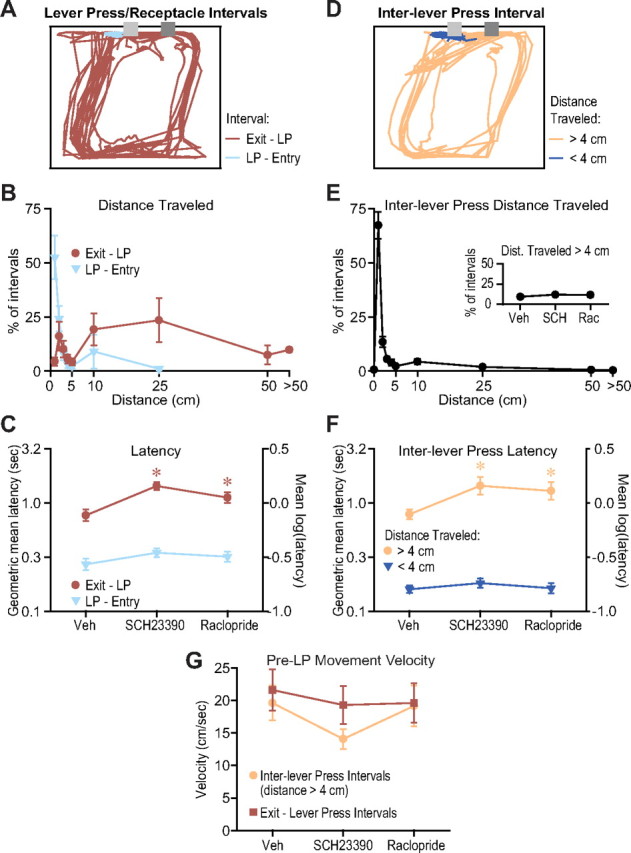

Figure 7.

Dopamine antagonist injection into the NAc core reduces performance of a FR8 task by impairing flexible approach. A, A scale drawing (25 × 30 cm) showing a representative set of motion tracks between the eighth lever press and receptacle entry, and between receptacle exit and the first lever press, during a 10 min period of one animal's vehicle injection FR8 session. The dark gray box shows the location of the lever, and the light gray box shows the reward receptacle. LEDs were mounted on the animal's head, and the animal pressed the lever with its head close to the receptacle. B, The mean (across rats) distribution of distances traveled in the intervals between the eighth lever press and receptacle entry, and receptacle exit and the first lever press. The movement to the receptacle was almost always very short and stereotyped, whereas animals moved widely variable distances between receptacle exit and the reinitiation of operant responding, indicating that animals reached varied locations in the exit to lever press interval but not in the lever press to entry interval. C, The latency to reach the receptacle after the eighth lever press was not affected by dopamine antagonist injection in the NAc core, whereas the latency to resume lever pressing after receptacle exit was prolonged. D, Motion tracks during the same 10 min interval as shown in A, but for inter-lever press intervals in which the distance traveled was >4 cm or <4 cm. E, The mean distribution of distances traveled during intervals between lever presses shows that usually this distance is exceedingly short (<1 cm), but there is a long and variable tail. E, Inset, The proportion of inter-lever press intervals in which the distance traveled was >4 cm was not affected by the antagonists. F, The latency between lever presses was not affected by the antagonists when the distance traveled was <4 cm, but was increased when the distance traveled was >4 cm. G, In latencies that were lengthened by the antagonists, the velocity of the last movement before the lever press was not significantly affected by the antagonists.

Data selection for FR8 analysis

Injection of dopamine antagonists into the NAc core resulted in profound deficits in performance as measured by rewards earned (see Fig. 6B). In many cases, the number of rewards earned after injection of 1 or 2 μg of SCH23390 or 2 or 4 μg of raclopride was very small and did not allow enough data to analyze the effects of the antagonists on latencies between events. Therefore, for analysis, we selected, from each rat, one SCH23390 and one raclopride injection session, at any dose, in which the number of rewards earned was approximately half that of the vehicle injection session. Out of 13 animals run on the FR8 task, 4 were eliminated because no injection sessions resulted in a ∼50% performance deficit (usually because the lowest doses had little effect and the intermediate and high doses reduced performance to near 0 rewards earned).
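The session selection rule described above can be sketched in code. This is an illustration only: the function name `pick_half_deficit_session`, the tolerance of ±0.2 around a 50% ratio, and the example reward counts are assumptions introduced here. The text specifies only that the selected session earned approximately half the rewards of the vehicle session.

```python
from __future__ import annotations


def pick_half_deficit_session(
    vehicle_rewards: int,
    drug_rewards_by_dose: dict[float, int],
    target: float = 0.5,
    tolerance: float = 0.2,  # assumed; the text says only "approximately half"
) -> float | None:
    """Return the dose whose rewards/vehicle ratio is closest to `target`.

    Returns None when no session falls within `tolerance` of the target,
    which corresponds to excluding the animal from the latency analysis.
    """
    if vehicle_rewards <= 0:
        return None
    best_dose = None
    best_error = None
    for dose, rewards in drug_rewards_by_dose.items():
        error = abs(rewards / vehicle_rewards - target)
        if error <= tolerance and (best_error is None or error < best_error):
            best_dose, best_error = dose, error
    return best_dose


# Hypothetical reward counts (dose in micrograms -> rewards earned).
selected = pick_half_deficit_session(120, {0.5: 110, 1.0: 66, 2.0: 4})
excluded = pick_half_deficit_session(120, {0.5: 115, 1.0: 6, 2.0: 0})
```

In the second hypothetical animal, the lowest dose has little effect and the higher doses nearly abolish responding, so no session qualifies. This mirrors the reason given above for eliminating 4 animals.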

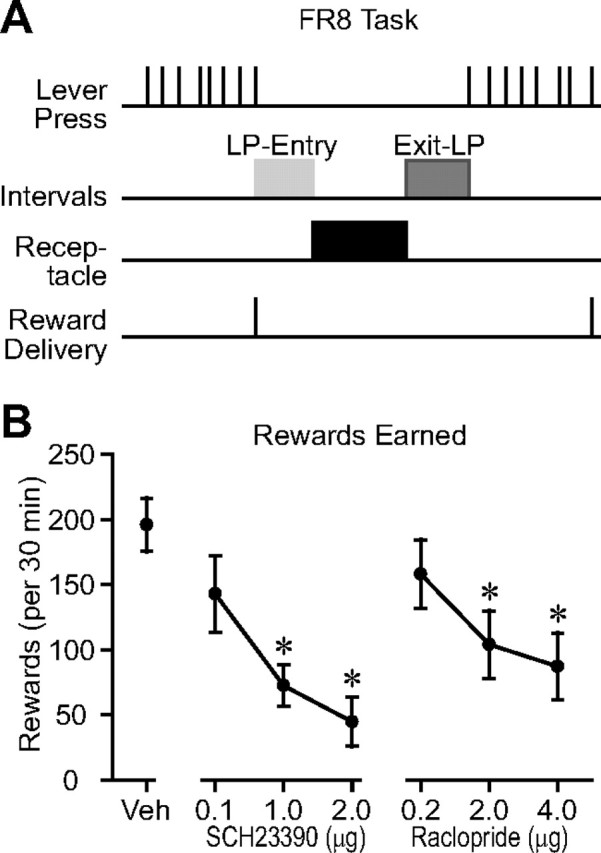

Figure 6.

NAc core dopamine antagonist injection reduces rewards earned on a high-effort operant task (FR8). A, FR8 task structure. After every eighth lever press, a 10% sucrose reward was delivered in a receptacle next to the lever. Bars on the “Intervals” line show the lever press to receptacle entry and receptacle exit to lever press latency intervals analyzed in Figure 7. B, The number of rewards earned on the FR8 task is reduced by injection of D1 or D2 receptor antagonists into the NAc core.

Histology

When behavioral experiments were complete, animals were deeply anesthetized with pentobarbital and perfused intracardially with saline and 4% formalin. Brains were removed, sectioned (40 μm), and stained for Nissl substance to locate injection sites. Cannula tip locations are shown in supplemental Figure S1 (available at www.jneurosci.org as supplemental material) and in all cases were located in the target structure.

Behavioral task training procedures

Cued FR1 tasks

The cued FR1 tasks provided different ITIs (periods during which reward was unavailable) for different groups of rats, allowing us to test the hypothesis that the NAc dopamine dependence of task performance depends on ITI. Training proceeded as follows. On the first training day, presses on levers or entry into the reward receptacle resulted in reward delivery (60 μl of 10% sucrose) in the receptacle. There was a 20 s ITI until reward could be earned again. No explicit cues were presented. Animals remained in the chamber until they earned 100 rewards or for at most 2 h. On the next training day, animals had to press either of the levers once to earn a reward. The siren cue was presented at all times except for a 3 s ITI after each lever press that earned reward. During the ITI, lever presses did not earn reward. Animals were run on this task until they earned 100 rewards in <2 h. Occasionally, we placed a pea-sized quantity of peanut butter on the levers to encourage responding.

In the next stage of training, we chose, for each rat, one lever to be the “active” lever and the other to be the “inactive” lever. Half the rats in each group were assigned the left lever as active, and the other half were assigned the right. Responses on the active lever only were rewarded from this point on; inactive lever responses were recorded but had no programmed consequence. The 3 s ITI was eliminated, and instead the siren cue was presented when the animal exited from the reward receptacle (but not <1 s after receptacle entry if the animal withdrew and did not reenter within 1 s of the original entry). The duration of cue presentation was indefinite; cues were always terminated by the animal's press on the active lever (or by the end of the session). Sessions were 30 min long and there was no experimenter-imposed cap on the number of rewards the animal could earn per session. This protocol comprised the cued FR1 task with 0 s ITI. For the cued FR1 tasks with 3, 10, and 20 s ITIs, we gradually increased the ITI over the next 1 to 2 weeks, such that the cue (and ability to earn reward by a lever press) was presented at longer intervals following receptacle exit. When animals had been run on the final ITI schedule for at least 30 sessions (on 30 separate days), they underwent cannula implantation surgery.

DS task

Animals were trained on a task similar to the DS task used previously (Ambroggi et al., 2008; Ishikawa et al., 2008a,b). After receptacle and initial FR1 training as described for the cued FR1 tasks, animals were placed on a task in which the DS cue (siren or intermittent tone, randomly chosen for each rat) was presented at the end of a 10 s ITI. Animals had 10 s to respond with a lever press to earn 60 μl of 10% sucrose to be delivered into the receptacle next to the lever; otherwise, the cue was terminated and presented again 10 s later. The ITI was increased to 20 and then 30 s across several days. In the final DS task (see Fig. 4A), the length of the ITI was chosen from a Gaussian distribution with mean of 30 s, and the cue presented was randomly chosen on each trial (either DS or NS). The NS was either the intermittent or the siren tone (whichever was not used for the DS), and was always presented for 10 s. Responding during the NS or during the ITI was recorded but had no programmed consequences. The DS cue was terminated either 10 s after cue onset or by the animal's lever press, whichever came first. Failure to press within the 10 s maximum DS presentation period counted as a failure to respond. Animals were trained on the DS task in 1 h sessions until performance reached a plateau at ∼90% DS response ratio (proportion of DSs to which the animal responded) and <20% NS response ratio.

FR8 task

After receptacle and FR1 training as described for the cued FR1 tasks, the FR was gradually increased over several days until it reached 8 (see Fig. 6A). An auditory cue (siren) was presented at the beginning of the session and again upon exit from the receptacle after reward consumption. The cue was on until the eighth lever press, at which point it was turned off and 100 μl of 10% sucrose was delivered into the receptacle next to the lever. Animals were run on this task until they reached a performance plateau, with no trend toward an increase in rewards earned over 7 consecutive sessions.

CDAS task

For the CDAS task (see Fig. 5A), Med Associates operant chambers were configured as shown in Figure 5B, with two reward receptacles on opposite walls and a nosepoke hole midway between them on the wall perpendicular to each. Training progressed in 7 stages. All training sessions were 2 h long, and animals underwent one per day (5 d per week).

Stage 1.

Training began with 1 d of habituation to the reward receptacles. Two cues (1 and 2) were presented. For half the animals, cue 1 was the siren and cue 2 was the intermittent cue, and the opposite relation was assigned to the other half. Cue 1 signaled reward availability in receptacle 1, and cue 2 signaled reward availability in receptacle 2. Receptacle 1 was on the left side, and receptacle 2 on the right side, for half the animals, and the opposite relation was assigned to the other half. Reward consisted of 60 μl of 10% sucrose delivered upon entry into the correct receptacle. Incorrect entries resulted in a 1 s period of darkness (house lights turned off) and no reward delivery. The next cue was presented 20 s after receptacle exit, regardless of whether reward was earned. Rewards were not delivered during the 20 s ITI.

Stage 2.

Animals had to perform a nosepoke to obtain presentation of cue 1 or cue 2. The correct response to cue 1 was to enter receptacle 1, upon which an immediate 60 μl reward was delivered; the correct response to cue 2 was to enter receptacle 2 for immediate 60 μl reward. A 3 s ITI, during which a trial could not be initiated, was imposed after receptacle exit. The ITI was the same whether or not the animal responded correctly to cue, but the house lights were turned off during the ITI (and no reward was delivered) if an incorrect response was made. A flashing light (50 ms on, 75 ms off) within the nosepoke hole was turned on to signal the end of the ITI and remained on until the next trial was initiated with a nosepoke. Animals remained at this stage until they initiated 300 trials in the 2 h session; however, correct responding to the cues at this point was usually not greater than chance.

Stage 3.

The task at this stage was identical to stage 2, with one exception: if the animal made an incorrect response, no reward was delivered and the cue remained on until the animal entered the correct receptacle, at which point reward was delivered. Several days on this protocol resulted in the animal making 5–10 times as many correct as incorrect responses to one of the cues, while correct responding to the other cue remained at chance levels. At this point, animals were advanced to the next stage.

Stage 4.

At this stage, as in stages 2 and 3, cue 1 and cue 2 were presented upon trial initiation with a nosepoke. Incorrect responses to the cues resulted in a 10 s penalty period during which the house lights were turned off and a trial could not be initiated. Correct responses resulted in immediate delivery of 60 μl of sucrose, and a 3 s ITI (house lights on) was imposed after receptacle exit, during which a trial could not be initiated. The end of the ITI or penalty period was signaled with the flashing light in the nosepoke hole. This stage continued for 2–3 weeks, until animals consistently made 5–10 times as many correct as incorrect cue responses.

Stage 5.

In this stage, a delay to reward in receptacle 2 was introduced. At the beginning of the session, reward was delivered immediately after the first 5 correct responses to cue 2. Then, the delay was increased by a step value after every 5 correct trials, with no maximum delay. The step value was initially 100 ms, and this was increased to 300 ms over several days. Animals were typically capable of waiting up to 11 s. Early withdrawal from receptacle 2 resulted in imposition of the same 10 s penalty that resulted from incorrect responses to cue 1 or cue 2.

Stage 6.

This stage was similar to stage 5. As before, the animal initiated a trial with a nosepoke, which resulted in presentation of cue 1 or cue 2, directing the animal into receptacle 1 or receptacle 2, respectively. The delay to reward was initially 0 s, but this increased by 1 s whenever a threshold number of rewards was earned in receptacle 2. The maximum delay to reward was capped at 5 s. The threshold number of rewards was initially 10, but over 3–4 weeks, we reduced this value to 3. Early withdrawals from receptacle 2 or incorrect responses to cue 1 or cue 2 resulted in a 10 s penalty period (lights out) during which a trial could not be initiated. Thus, by the end of this stage, animals were consistently waiting 5 s for reward after 15 correct trials in receptacle 2, as well as continuing to respond correctly in the vast majority of trials to cue 1 and cue 2. At this point, the animals underwent surgery for implantation of cannulae within the NAc core.
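The arithmetic behind the stage 6 delay schedule can be checked with a short sketch. It assumes that the delay steps up once for every `threshold` rewards accumulated in receptacle 2 within a session, which is one reading of “whenever a threshold number of rewards was earned”. The function names are illustrative and do not come from the task control software.

```python
def delay_after_rewards(rewards_in_receptacle_2: int,
                        threshold: int = 3,
                        step_s: float = 1.0,
                        max_delay_s: float = 5.0) -> float:
    """Delay to reward (s) in force after a given number of receptacle 2 rewards."""
    increments = rewards_in_receptacle_2 // threshold
    return min(increments * step_s, max_delay_s)


def rewards_to_reach_max(threshold: int = 3,
                         step_s: float = 1.0,
                         max_delay_s: float = 5.0) -> int:
    """Smallest number of receptacle 2 rewards at which the capped delay applies."""
    n = 0
    while delay_after_rewards(n, threshold, step_s, max_delay_s) < max_delay_s:
        n += 1
    return n


final_threshold_trials = rewards_to_reach_max(threshold=3)
initial_threshold_trials = rewards_to_reach_max(threshold=10)
```

With the final threshold of 3, the 5 s cap is reached after 15 rewarded trials, matching the figure given above. Under the same assumption, the initial threshold of 10 would require 50.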

Stage 7 (final CDAS task).

After recovery from surgery and reestablishment of baseline performance on the stage 6 protocol, the switching cue (cue 3) was introduced. This cue was presented in one randomly chosen trial out of each block of 10 successful cue 2 trials. These blocks began with the sixth successful cue 2 trial after the animal reached the maximum 5 s delay to reward in receptacle 2. Cue 3 consisted of a continuous 3 kHz tone, which was presented between 100 and 2000 ms after the animal entered receptacle 2 in response to cue 2. From the time of cue 3 onset, the animal had 5 s to withdraw from receptacle 2, and an unlimited time to reach receptacle 1, at which point 180 μl of 10% sucrose was delivered in receptacle 1, and the trial was scored as a successful switch. If the animal failed to withdraw from receptacle 2 within 5 s of onset of cue 3, or if the animal withdrew and reentered receptacle 2, cue 3 was terminated, the house lights were turned off for a 10 s penalty period, and the trial was scored as a failure to switch. Stage 7 sessions were repeated between 3 and 6 times, until the animal successfully switched in the majority of switching trials, at which point microinjection experiments commenced using the stage 7 protocol (see Fig. 5A).
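The cue 3 scheduling rule can be illustrated as follows. This is a sketch rather than the task control code: the function names are hypothetical, and drawing the cue 3 onset delay from a uniform distribution is an assumption, since the text gives only the 100 to 2000 ms range.

```python
import random
from typing import List, Optional


def pick_switch_trials(n_blocks: int, block_size: int = 10,
                       rng: Optional[random.Random] = None) -> List[int]:
    """Choose one switching trial per block of successful cue 2 trials.

    Returns 0-based indices counted from the first trial of the first block.
    """
    rng = rng or random.Random()
    return [block * block_size + rng.randrange(block_size)
            for block in range(n_blocks)]


def cue3_onset_delay_s(rng: random.Random) -> float:
    """Delay from receptacle 2 entry to cue 3 onset (uniform draw is assumed)."""
    return rng.uniform(0.1, 2.0)


rng = random.Random(0)  # fixed seed only to make the illustration repeatable
switch_trials = pick_switch_trials(n_blocks=3, rng=rng)
onset = cue3_onset_delay_s(rng)
```

Each block contributes exactly one switching trial, so cue 3 accompanies one in ten successful cue 2 trials once the blocks begin.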

Importantly, cue 3 was presented in only 3–6 sessions during training (after the animals were implanted with cannulae), and in <5% of all trials, in contrast to cues 1 and 2 (which were presented to the animals multiple times in every session, every day, 5 d per week for 4–5 months) and to the cues in the cued FR1 and DS tasks, which were presented in every trial across dozens of training sessions before microinjection experiments began.

Results

NAc core dopamine is required for tasks with long, but not short, intertrial intervals

Performance of cue-responding tasks with long ITIs is particularly susceptible to disruption by NAc dopamine manipulations (Nicola, 2007). To explain this susceptibility, we adapted the locomotor hypothesis proposed by Ikemoto and Panksepp (1999). Specifically, we propose that (1) the longer the period of reward nonavailability, the more likely the animal is to move away from reward-associated operanda; (2) to resume operant behavior after moving away, animals flexibly approach reward: i.e., across trials, animals necessarily use different actions to move toward the operandum, because their exact locations during pauses in operant activity will differ across trials; and (3) NAc dopamine is required for animals to perform flexible, but not inflexible, approach. To begin testing this hypothesis, which we term “the flexible approach hypothesis,” we first determined, under controlled conditions, whether increasing the ITI increases the dependence of task performance on NAc dopamine.

We trained rats to press a lever after onset of a reward-predictive cue (FR1 tasks) and varied the ITI across animals. An auditory cue at the end of a 0, 3, 10, or 20 s ITI signaled availability of liquid 10% sucrose reward in exchange for a single lever press (Fig. 1A); sucrose was delivered into a receptacle next to the levers (Fig. 1B). The ITI was the time between reward receptacle exit on one trial and cue onset on the next. We asked whether microinjection of dopamine receptor antagonists into the NAc (at the border between core and shell) before the session impacted task performance as measured by the number of rewards earned per session. Injection of a dopamine D1 receptor antagonist (SCH23390) or a D2 receptor antagonist (raclopride) caused the greatest reduction in number of rewards earned in animals on the longer (10 or 20 s) ITI tasks (Fig. 1C). (Detailed statistical results for all figures are provided in Table 1.)

Figure 1.

NAc core dopamine is required for cued FR1 tasks with long, but not short, ITIs. A, B, An auditory cue signaling reward availability was presented after ITIs that were different for different groups of rats. A lever press was required during cue presentation to obtain liquid sucrose reward delivered in a nearby reward receptacle. The number of rewards earned was reduced by injection of dopamine D1 (SCH23390) or D2 (raclopride) antagonists into the NAc at the border between core and shell. This effect was more pronounced for the longer (10 and 20 s) ITIs (C) and when the injections were within the NAc core (F). D, G, The latency to press the lever after cue onset was increased by the antagonists, but only for the longer ITIs. E, H, The latency to enter the receptacle after the lever press was not affected by the antagonists at any dose, at any of the three injection sites. Except where noted otherwise, significance symbols apply to this and all subsequent figures: *significant difference from vehicle with Holm–Sidak post hoc test adjusted p < 0.05; †trend toward a significant difference from vehicle with Holm–Sidak post hoc test unadjusted p < 0.1. Error bars in all figures indicate SEM. Detailed statistical results for all figures are shown in Table 1.

The reduced ability to earn reward in longer ITI tasks must be due to an increase in at least 1 of 3 latencies: between cue and lever press, between lever press and reward receptacle entry, and between receptacle entry and receptacle exit (the consummatory time). The cue to lever press latency was increased by the antagonists, and these effects were much larger in tasks with longer ITIs (10 and 20 s) (Fig. 1D). Strikingly, neither the latency to enter the receptacle after a lever press (Fig. 1E) nor the consummatory time was affected by the antagonists at any dose. (Dopamine antagonist effects on consummatory time in all tasks were negligible, as shown in supplemental Fig. S2, available at www.jneurosci.org as supplemental material.) These results indicate a specific deficit in the ability to respond after cue presentation, and not in the receptacle approach and consummatory actions that occur afterward.

The NAc core is proposed to be a critical site at which NAc dopamine can invigorate behavior (Robbins and Everitt, 2007). To determine the relative contribution of dopamine acting in the core and shell to long-ITI tasks, we implanted separate groups of animals trained on the 0 or 20 s ITI tasks with cannulae in the NAc core or shell. The number of rewards earned was reduced only in the 20 s ITI task, and core injections were much more effective in reducing rewards earned than shell injections (Fig. 1F). The cue to lever press latency was substantially increased by the antagonists in rats on the 20 s ITI task, and these effects were also more pronounced in the core than in the shell (Fig. 1G). In contrast, the lever press to receptacle entry latency was unaffected in either task, at either injection site (Fig. 1H).

Why is performance of longer ITI tasks more affected by the antagonists than short-ITI tasks? We reasoned that longer periods of reward unavailability would result in a greater likelihood that animals leave the vicinity of the lever and receptacle. If this were the case, then the greater effects of the antagonists on the cue to lever press latency in longer ITI tasks could be ascribed to a deficit in ability to return to the lever. To determine whether animals move away from the lever during longer intervals, we first examined, in vehicle injection sessions, the latency to press the lever after exit from the reward receptacle (regardless of when the cue was presented). This latency increased with increasing ITIs; at short ITIs (especially 0 s), the latency was narrowly distributed, whereas at longer ITIs, the latency distribution was quite broad (Fig. 2A). In contrast, the lever press to receptacle entry latency was exceedingly brief in all ITI tasks (Fig. 2B). Because it is not possible for animals to move substantial distances in <1 s, these results imply that animals moved directly between the lever and receptacle in all tasks, and directly between receptacle and lever only in the shorter ITI tasks (especially 0 s ITI). Notably, these short latencies were not affected by the antagonists: the lever press to receptacle entry latency was unaffected in any ITI task (Fig. 1E,H), and in the 0 s ITI task, the receptacle exit to lever press latency was also unaffected (Fig. 1D,G) (note that cue onset occurs at receptacle exit in the 0 s ITI task, so the cue to lever press latency is the same as the exit to lever press latency). In contrast, in longer ITI tasks, the long, broadly distributed latencies between receptacle exit and lever press suggest that animals could have moved considerable and varied distances from the lever and receptacle during this interval. These latencies were prolonged by the antagonists (not shown: the effects were similar to those on the cue to lever press latency shown in Fig. 1D). In sum, these results suggest that very short behavioral latencies, during which movement away from the lever and receptacle could not have occurred, are not prolonged by NAc dopamine antagonist injection, whereas the antagonists increase longer latencies during which extensive movement could have occurred.

To confirm that animals moved away from the lever during the ITI in longer ITI tasks, we made video recordings of animals on a cued FR1 task with a 10 s ITI and determined whether animals were near the lever at cue onset (within the third of the chamber closest to the lever) or far from it (in the third farthest away, along the opposite wall). In vehicle injection sessions, animals were in the “far” zone at cue onset in ∼23% of trials (Fig. 2C); in fact, this greatly underestimates the number of intervals in which the animals visited the far zone during the ITI, as animals often moved back to the near zone before cue presentation: animals reached the far zone in 80 ± 7% of ITIs in vehicle injection sessions. Therefore, in a long-ITI task, animals clearly leave the vicinity of the lever during the ITI. In contrast, in short-ITI tasks (especially 0 s ITI), the interval between receptacle exit and lever press was usually much too short for the animal to have moved far from the lever and receptacle (Fig. 2A). Thus, the substantially greater impairment of long-interval task performance by NAc dopamine antagonist injection, as compared to the antagonists' effects on short interval performance, supports the hypothesis that return to the lever from relatively long distances is specifically impaired by dopamine antagonist injection in the NAc.
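The zone assignment used here can be sketched as a simple classification of the animal's position at cue onset. The sketch assumes that position is expressed as distance from the lever wall along the axis perpendicular to it, and that the chamber is divided into equal thirds along that axis. The function names and the 30 cm depth used in the example are illustrative assumptions.

```python
from typing import Sequence


def classify_zone(distance_from_lever_wall_cm: float,
                  chamber_depth_cm: float) -> str:
    """Assign a position to the near, intermediate, or far third of the chamber."""
    if not 0.0 <= distance_from_lever_wall_cm <= chamber_depth_cm:
        raise ValueError("position lies outside the chamber")
    third = chamber_depth_cm / 3.0
    if distance_from_lever_wall_cm < third:
        return "near"
    if distance_from_lever_wall_cm < 2.0 * third:
        return "intermediate"
    return "far"


def far_zone_fraction(distances_at_cue_onset_cm: Sequence[float],
                      chamber_depth_cm: float) -> float:
    """Fraction of trials in which the animal was in the far zone at cue onset."""
    zones = [classify_zone(d, chamber_depth_cm) for d in distances_at_cue_onset_cm]
    return zones.count("far") / len(zones)


# Hypothetical positions (cm from the lever wall) in an assumed 30 cm deep chamber.
example_fraction = far_zone_fraction([2.0, 12.0, 22.0, 28.0], 30.0)
```

Applying the same classification to every video frame within an ITI, rather than only to the frame at cue onset, would give the proportion of ITIs in which the far zone was visited at any time.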

The hypothesis that long, but not short, distance approach depends on NAc dopamine is consistent with the hypothesis that NAc dopamine is necessary to perform actions requiring greater effort. However, the effort hypothesis would also predict that within a long-interval task, the latency to approach the lever should be increased to a greater extent by the antagonists when the animal is farther away than when the animal is near. Our findings are at odds with this prediction, since the latency to press the lever after cue onset was increased by a proportionally similar amount (i.e., a similar log unit increase) by antagonist injection in the NAc core regardless of distance from the lever (Fig. 2D). This result is consistent with the hypothesis that dopamine is required for switching among behaviors (van den Bos et al., 1991; Redgrave et al., 1999; Yun et al., 2004a): no matter where animals are when the cue is presented, they must switch from their ongoing behavior to lever approach.
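The distinction between a proportional (log unit) increase and an absolute increase in latency can be made concrete with a small calculation. The latencies below are hypothetical values chosen for illustration and are not data from this study. The function name is likewise an assumption.

```python
import math


def log_unit_change(vehicle_latency_s: float, drug_latency_s: float) -> float:
    """Change in latency expressed in log10 units (log of the drug/vehicle ratio)."""
    if vehicle_latency_s <= 0 or drug_latency_s <= 0:
        raise ValueError("latencies must be positive")
    return math.log10(drug_latency_s / vehicle_latency_s)


# Hypothetical latencies: the far-zone latency is longer at baseline,
# but both latencies triple after antagonist injection.
near_change = log_unit_change(vehicle_latency_s=1.0, drug_latency_s=3.0)
far_change = log_unit_change(vehicle_latency_s=2.0, drug_latency_s=6.0)
absolute_near = 3.0 - 1.0  # increase of 2 s
absolute_far = 6.0 - 2.0   # increase of 4 s
```

In this example the absolute increase is twice as large in the far zone, yet the log unit change is identical in both zones. An equal log unit change across distances is the pattern described above as a proportionally similar increase.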

If the switching hypothesis is correct, then, because some behaviors may be particularly difficult to switch from, the antagonists may have differential effects on response latency depending on the behavior at cue presentation. Furthermore, the antagonists should be least effective in increasing the latency to press the lever when the cue is presented while the animal is already engaged in lever pressing. To test these predictions, we first categorized the animals' actions at cue onset. Examination of the videos revealed four main action classes: task-related activity (pressing the lever, checking the receptacle, or approaching the lever or receptacle), immobility, locomotion in a direction other than toward the lever/receptacle, and grooming. The antagonists caused a decrease in the proportion of trials in which the animals were already engaged in task-related activity at cue onset, and a corresponding increase in trials in which animals were immobile (Fig. 3A). The incidences of locomotion and grooming were not affected. Notably, the latency to press the lever after cue onset was increased by a proportionally similar amount regardless of the action at cue onset (Fig. 3B). This was the case even for the subset of “task activity” trials in which the animal was already engaged in lever pressing at cue onset (Fig. 3B, inset). These results therefore argue against the predictions of the switching hypothesis.

The antagonists decreased the proportion of trials in which animals initiated an immediate approach response to the lever/receptacle, and increased the proportion of trials in which animals were immobile for >1 s, or engaged in locomotion away from the lever, during the interval between cue onset and lever press (Fig. 3C). However, no matter the intervening behavior, the latency between cue onset and operant response was increased by a proportionally similar amount (Fig. 3D), again arguing against the switching hypothesis. The results in Figures 2 and 3 are more consistent with the flexible approach hypothesis. If, for a given behavioral interval (e.g., receptacle exit to lever press), animals consistently move to varied locations away from the lever, they must determine anew how to return to it whenever reward becomes available. This flexible approach strategy differs from the inflexible strategy of simply performing the same sequence of actions during the interval, and is in fact essential for the ability to obtain reward whenever an inflexible strategy is insufficient. In intervals where animals use a flexible approach strategy, interference with this strategy by dopamine antagonists delays responding even when the animal is near the lever, regardless of the animal's actions at and after cue onset, as long as the animal's location when signaled that reward is available is variable.

NAc core dopamine facilitates flexible approach in response to reward-predictive cues

Because there was a fixed interval between receptacle exit and cue presentation in the cued FR1 tasks, behavior on the longer ITI tasks was strongly influenced by an interoceptive timing mechanism, as evidenced by the greater likelihood of anticipatory behavior at the end of the ITI than at the beginning (supplemental Fig. S3, available at www.jneurosci.org as supplemental material). It is therefore possible that NAc dopamine contributed to behavior in these tasks not strictly by facilitating flexible approach, but rather by influencing the interoceptive timing mechanism itself. Therefore, to further test the flexible approach hypothesis, we used a DS task in which the interval between cue presentations was variable, eliminating the animal's use of interoceptive timing to regulate operant behavior (Fig. 4A). A lever press during the DS caused the DS to be terminated and sucrose reward to be delivered, whereas responses during the NS had no consequence. We used an automated video tracking system to determine the position of LEDs mounted on the animal's head throughout the session. Animals' lever pressing and lever approach behavior in this task was under control of the DS and was not influenced by an interoceptive timing mechanism (supplemental Fig. S3, available at www.jneurosci.org as supplemental material).

The distribution of the animal's locations at DS onset was broad and widely variant, as shown by the example in Figure 4B. This indicates that animals likely used a flexible approach strategy to reach the lever, since responding to each DS would require a different set of actions. Both D1 and D2 receptor antagonists injected in the NAc core reduced the proportion of DSs to which the animal responded with a lever press. To determine whether these effects were dependent on the animal's distance from the lever at cue onset, we divided the chamber into three zones: near the lever, far from it, and an intermediate zone (Fig. 4B; supplemental Fig. S3, available at www.jneurosci.org as supplemental material). The effects of the dopamine antagonists on the ability to respond to the reward-predictive cue did not depend on location at cue onset (Fig. 4C). These observations confirm similar findings with the 10 s ITI task (Fig. 2D), but in a task without anticipatory responding controlled by an interoceptive timing signal. The results argue against the generality of the hypothesis that tasks that require greater effort (e.g., crossing the entire width of the behavior chamber vs approach to the lever from a distance of only a few centimeters) are more dependent on NAc dopamine.

Failure to respond with a lever press could be due to two factors: the velocity of locomotor approach to the lever could be lower, or the initiation of such approach could be delayed (sometimes past the 10 s maximal period of DS presentation). To distinguish between these possibilities, we identified locomotor events using a well established algorithm for determining when animals are stationary and when they are in locomotion (Drai et al., 2000) (supplemental Fig. S4, available at www.jneurosci.org as supplemental material). In the control condition, the first movement after DS onset usually (but not always) continued without pause until a lever press occurred. The velocity of these movements was significantly higher than first movements after DS onset that ended before a lever press occurred (including movements during DSs to which the animal did not respond at all with a lever press). Furthermore, first movements after DS onset that ended without a lever press were similar in velocity to first movements after NS onset and movements occurring during the ITI, both of which rarely resulted in a lever press. The velocities of none of these types of movements were significantly affected by the dopamine antagonists (Fig. 4D); however, note that the antagonists greatly reduced the number of post-DS movements that resulted in a lever press, since lever responding during the DS was greatly reduced (Fig. 4C).

In the control condition, the latency to the first movement after cue presentation was shorter for the DS than the NS (Fig. 4E). The latency to move after DS onset was increased by the antagonists, but the latency to move after NS onset was not significantly affected (Fig. 4E). Therefore, antagonist injection decreased the likelihood of a short-latency, high-velocity approach to the lever. These results are consistent with observations that, in the 10 s ITI cued FR1 task, the antagonists decreased the probability of an immediate response after cue presentation (Fig. 3C). Thus, our findings indicate that dopamine receptor activation in the NAc is required for animals to initiate flexible locomotor approach to the operandum in response to reward-predictive information. However, once locomotor approach is initiated, NAc dopamine is not required to specify the approach velocity.

NAc core dopamine is not required for inflexible approach in response to reward-predictive cues

The latency to reach the reward receptacle after a lever press was not affected by dopamine antagonist injection into the NAc (Fig. 1E,H). We propose that NAc dopamine is not required for this movement because it is inflexible: because the animal's start and end positions are identical on every trial, identical actions could be used to accomplish the movement. However, because the distance between lever and receptacle is short, little if any locomotion is required for this movement. Therefore, an alternative possibility is that NAc dopamine is simply required for locomotion, especially high-velocity locomotion (Fig. 4D,E), whether or not the locomotion constitutes flexible approach. To distinguish between these hypotheses, we designed a task in which animals made high-velocity movements from identical start and end positions in response to reward-predictive cues. We reasoned that if the flexible approach hypothesis were correct, such inflexible movements would be unaffected by NAc core dopamine antagonist injection; however, if NAc core dopamine is generally required for high-velocity locomotion, such movements would be slowed or eliminated.

In the CDAS task, the rat initiated a trial by performing a nosepoke into a nosepoke hole located on one wall of the chamber, between reward receptacles on the two flanking walls (Fig. 5A,B). Upon nosepoke, one of two auditory cues was presented, which directed the animal to enter one of the two receptacles. Liquid sucrose reward was delivered immediately in receptacle 1, but the animal had to wait for up to 5 s for the identical reward in receptacle 2. In some trials (“switching trials”), a third auditory cue was presented during the wait for reward in receptacle 2, which directed the animal to withdraw from receptacle 2, locomote across the chamber to receptacle 1, and receive an immediate reward. Because the animal's location whenever a particular cue was presented was identical across trials (in the nosepoke hole for cues 1 and 2, or in receptacle 2 for cue 3), and because the end location that constitutes a correct response to each cue was also identical across trials, each cue likely promoted the same actions to reach reward whenever it was presented.

Injection of D1 or D2 receptor antagonists into the NAc core did not affect the ability to respond correctly to cue 1 and cue 2 (Fig. 5C). Furthermore, the latency to reach receptacle 1 from the nosepoke hole when cue 1 was presented was not affected by the antagonists. The latency to reach receptacle 2 from the nosepoke hole when cue 2 was presented did differ across treatments, but only the highest dose of the D1 antagonist caused a significant increase (Fig. 5D). Animals withdrew from receptacle 2 early (without earning reward) on only 10% of trials, and this value was not significantly affected by the antagonists (Fig. 5E). Therefore, NAc core dopamine is not required to respond to reward-predictive cues or to move toward the reward receptacle in a task where the start location (at cue presentation) and end location (as a result of cue-evoked locomotion) are constant across trials. In contrast, NAc dopamine is required for animals to locomote toward the reward receptacle when cues are presented at relatively long intervals, with the animal presumably at a different location within the chamber on each trial (Wakabayashi et al., 2004). The present results also show that NAc core dopamine is not required to wait in the receptacle for reward, consistent with earlier findings (Wakabayashi et al., 2004).

In nearly all switching trials, animals responded to cue 3 by withdrawing from receptacle 2 and entering receptacle 1, and this high rate of successful switches was unaffected by the dopamine antagonists (Fig. 5F). Furthermore, the latency to exit receptacle 2 upon presentation of cue 3 was not affected by the antagonists, nor was the latency to reach receptacle 1 from receptacle 2 in these trials (Fig. 5G). Thus, because cue 3 was presented while the animal was waiting in receptacle 2, this result argues against an antagonist-induced inability to initiate locomotion from the waiting state. Moreover, cue 3 was presented many fewer times during training than any of the other cues in this study, arguing that differences in training cannot account for differences in the effects of NAc dopamine antagonists on the tasks described in this work. Similarly, the absence of effects on responding to cue 3 argues against the idea that NAc dopamine is generally required for switching from one behavior to another (such as from waiting in the receptacle to locomotion). Finally, the mean velocity to reach receptacle 1 from receptacle 2 (computed by dividing the fixed distance between the receptacles by the geometric mean latency to reach receptacle 1 from receptacle 2) was 26.5 cm/s, which was higher than the velocity of lever approach after DS presentation (Fig. 4D). Therefore, the lack of effect of the antagonists argues against the hypothesis that NAc core dopamine is simply required for high-velocity locomotion.
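The velocity estimate described in the preceding paragraph (a fixed distance divided by the geometric mean latency) can be sketched as follows. This is a minimal illustration, not the analysis code used in the study: the function name is hypothetical, and the distance and latency values are placeholders rather than measured data.

```python
from statistics import geometric_mean


def mean_velocity_cm_per_s(distance_cm, latencies_s):
    """Divide a fixed path distance by the geometric mean of the latencies."""
    if not latencies_s:
        raise ValueError("at least one latency is required")
    return distance_cm / geometric_mean(latencies_s)


# Hypothetical values for illustration only (not data from the study).
example_distance_cm = 10.0
example_latencies_s = [1.0, 4.0]
example_velocity = mean_velocity_cm_per_s(example_distance_cm, example_latencies_s)
```

The geometric mean is less influenced by occasional very long latencies than the arithmetic mean, so a velocity computed this way is less likely to be pulled down by a few slow trials.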

Despite the nearly complete lack of effect on measures of behavior after trial initiation, the antagonists reduced the number of trials completed per session, most likely because of an increased latency to initiate trials after the intertrial and penalty intervals (supplemental Fig. S5, available at www.jneurosci.org as supplemental material). This reduction suggests that the antagonists reached their targets and were effective. In summary, the results of the CDAS experiment rule out the hypotheses that NAc core dopamine is generally required for high-velocity locomotion, locomotor initiation, switching from a waiting state to locomotion, cue responding in general, or responding only when the response is relatively poorly trained. Instead, the antagonists had pronounced effects on the latency to respond when flexible approach was required (10 s ITI, 20 s ITI, and DS tasks), but not when an inflexible, invariant motor response was sufficient to earn reward on every trial (0 s ITI, 3 s ITI, and CDAS tasks). Our findings therefore suggest that NAc core dopamine receptor activation has a specific role in facilitating flexible approach behavior.

NAc core dopamine facilitates operant effort by promoting flexible approach

Performance of an FR1 task for food reward, a low-effort task as measured by the overall rate of lever pressing, is not affected by reduction of NAc dopamine function (Aberman and Salamone, 1999; Yun et al., 2004a). Increasing the ratio requirement increases the rate of lever pressing and renders task performance susceptible to 6-OHDA lesion of the NAc (Aberman and Salamone, 1999). In our version of FR1 (the cued FR1 task with 0 s ITI), animals do not leave the lever and reward receptacle and therefore do not use a flexible approach strategy. We propose that deficits in high-effort operant task performance caused by disruption of NAc dopamine are due not to an inability to press the lever at a high rate, but rather to an inability to flexibly approach the lever after pauses during which the animal moves away from it. To test this hypothesis, we trained animals on an FR8 task that was identical to the cued FR1 task with 0 s ITI, except that 8 lever presses were required to earn reward instead of 1 (Fig. 6A). Consistent with previous observations (Aberman and Salamone, 1999), performance of the FR8 task was severely impaired by injection of D1 or D2 receptor antagonists into the NAc core (Fig. 6B), in strong contrast to their minimal effects on the FR1 task with 0 s ITI (Fig. 1F).

According to the flexible approach hypothesis, the higher ratio should result in a significant degree of movement away from the lever such that flexible approach is required to return to it. Therefore, only those behavioral latencies in which such movement frequently occurs should be increased by injection of dopamine antagonists into the NAc core. To test this prediction, we used automated video tracking of head-mounted LEDs during task performance, and used the variability in distances traveled during intervals between operant and/or consummatory events as an indicator of the requirement for flexible approach. Because the number of rewards earned was reduced to near 0 after antagonist injection in many sessions (in which case there was insufficient latency data to analyze), for video analysis, we selected sessions across the entire dose ranges in which performance was reduced by ∼50% compared with the vehicle injection session.
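As a rough illustration of the measure described above, the distance traveled during one behavioral interval can be computed by summing the steps between consecutive tracked positions, and the spread of those distances across intervals can then be summarized. The sketch below rests on assumptions: positions are taken to be (x, y) coordinates already converted to centimeters, the function names are hypothetical, and the standard deviation stands in for "variability" because the text does not name the statistic that was used.

```python
import math
from statistics import pstdev


def path_distance_cm(positions_cm):
    """Sum the straight-line steps between consecutive tracked (x, y) positions."""
    return sum(math.dist(a, b) for a, b in zip(positions_cm, positions_cm[1:]))


def distance_spread_cm(interval_tracks):
    """Return per-interval path distances and their standard deviation.

    The standard deviation is an assumed stand-in for "variability";
    the source does not name the statistic it used.
    """
    distances = [path_distance_cm(track) for track in interval_tracks]
    return distances, pstdev(distances)


# Hypothetical tracks for illustration only (not data from the study).
example_tracks = [
    [(0.0, 0.0), (3.0, 4.0)],              # one 5 cm step
    [(0.0, 0.0), (3.0, 4.0), (3.0, 0.0)],  # a 5 cm step followed by a 4 cm step
]
example_distances, example_spread = distance_spread_cm(example_tracks)
```

Under this reading, intervals with short, tightly clustered path distances would be candidates for inflexible movement, and intervals with long, widely spread distances would be candidates for flexible approach.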

Whereas the distance traveled between the eighth lever press and receptacle entry was exceedingly short and invariant (in the vast majority of cases, the movement path distance was <3–4 cm), the distance traveled between receptacle exit and the next lever press tended to be much longer and more variable (usually well over 3–4 cm) (Fig. 7A,B). This indicates that approach to the receptacle after the eighth lever press was likely inflexible, whereas during the interval between receptacle exit and lever press, animals reached widely variant locations and therefore required flexible approach to return to the lever. Consistent with the flexible approach hypothesis, the latency to enter the receptacle after the eighth lever press was not affected by the antagonists, whereas the latency to resume lever pressing after receptacle exit was prolonged (Fig. 7C).

During the interval between lever presses, animals usually did not move more than ∼1 cm, but sometimes moved much longer distances (Fig. 7D,E). For ∼90% of inter-lever press intervals in the vehicle injection condition, the distance traveled was <4 cm; when it was >4 cm, the distance was highly variable (Fig. 7E). Therefore, we concluded that animals move away from the lever and require flexible approach to return to it in the ∼10% of intervals where the animal traveled >4 cm. In contrast, animals used an inflexible response strategy when the distance traveled was <4 cm. Dopamine antagonist injection had no effect on the proportion of inter-lever press intervals in which the distance traveled was >4 cm (Fig. 7E, inset), suggesting that once operant effort is initiated, NAc core dopamine does not participate in deciding whether to continue the effort or stop the effort with a movement away from the lever. Furthermore, the latency between lever presses with distance traveled <4 cm was not affected by the antagonists, whereas if the animals moved >4 cm, the antagonists increased the inter-lever press latency (Fig. 7F).
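The split of inter-lever press intervals at 4 cm can be sketched as a simple threshold classification. This is an illustrative sketch only: the names are hypothetical, the example values are placeholders rather than measured data, and treating an interval of exactly 4 cm as "short" is an assumption, since the text specifies only <4 cm and >4 cm.

```python
FLEXIBLE_THRESHOLD_CM = 4.0  # distance criterion stated in the text


def split_latencies_by_distance(intervals, threshold_cm=FLEXIBLE_THRESHOLD_CM):
    """Split (distance_cm, latency_s) pairs into short- and long-distance latencies.

    Intervals with distance > threshold are treated as requiring flexible
    approach; all others (including exactly the threshold, by assumption)
    are treated as inflexible.
    """
    short = [lat for dist, lat in intervals if dist <= threshold_cm]
    long_ = [lat for dist, lat in intervals if dist > threshold_cm]
    return short, long_


def proportion_long(intervals, threshold_cm=FLEXIBLE_THRESHOLD_CM):
    """Fraction of intervals in which the distance traveled exceeded the threshold."""
    if not intervals:
        raise ValueError("at least one interval is required")
    n_long = sum(1 for dist, _ in intervals if dist > threshold_cm)
    return n_long / len(intervals)


# Hypothetical (distance_cm, latency_s) pairs for illustration only.
example_intervals = [(1.0, 0.5), (0.5, 0.7), (10.0, 3.0), (2.0, 0.6)]
short_latencies, long_latencies = split_latencies_by_distance(example_intervals)
fraction_long = proportion_long(example_intervals)
```

Comparing the two latency lists between vehicle and antagonist sessions, alongside the fraction of long-distance intervals, mirrors the two questions addressed in the paragraph above: whether the drug changes how often animals leave the lever, and whether it changes how long they take to return.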

To determine whether the increased latency of behavioral intervals requiring flexible approach was due to reduced locomotor velocity, we examined the velocity of the last movement before the lever press in intervals between receptacle exit and lever press, as well as in inter-lever press intervals where the distance traveled was >4 cm. In neither case was the velocity of movement significantly affected by the antagonists (Fig. 7G). These results are similar to those observed for movements after DS presentation (Fig. 4D). Together, these findings suggest that NAc core dopamine facilitates the decision to approach the lever to resume operant behavior, but regulates neither the velocity of this approach once the decision is made nor the vigor of operant performance once the operandum is reached.

We conclude that during a high operant effort task, NAc core dopamine serves a very specific function. When animals pause their operant behavior and move away from the lever, NAc dopamine facilitates the return to the lever to resume effort. In contrast, NAc dopamine is not required to maintain operant effort once it is initiated. Because NAc core dopamine has an identical function in cue-responding tasks, our results suggest that both responding to reward-predictive cues and exertion of operant effort are dependent on NAc dopamine because a similar neural mechanism is required to accomplish flexible approach in both types of task.

Discussion

Contemporary theories ascribe a behavior-activational role to the dopamine projection from the midbrain to the NAc, especially the NAc core. Some hypotheses propose that NAc dopamine “invigorates” behavioral responding, perhaps by rendering more likely the choice of higher-effort reward-seeking options (Phillips et al., 2007; Salamone et al., 2007; Floresco et al., 2008). Others propose a more general role in increasing the likelihood of responding to reward-predictive information (Berridge and Robinson, 1998; Fields et al., 2007; Robbins and Everitt, 2007). Our results show that the discrepancies between these hypotheses can be accounted for by a specific role for NAc core dopamine in promoting flexible approach to reward-associated objects.

The flexible approach hypothesis states that NAc dopamine is required for reward-seeking behavior only when the specific actions required to obtain reward are variable across instances of reward availability. Therefore, when the animal's starting location varies across trials, different actions are required to reach a fixed goal (such as a lever), and NAc core dopamine is required for those actions to occur. When the start and end locations are fixed across trials (as in the CDAS task), identical actions can consistently bring the animal to the goal, and NAc core dopamine is not required. The selective contribution of NAc dopamine to flexible approach explains why NAc dopamine is required for performance of only high-effort operant tasks and low-effort tasks with significant periods of reward unavailability: in both situations, animals frequently pause their responding to move away from the operandum, and the wide variability in locations reached during these pauses necessitates flexible approach to resume responding.