Abstract

Perceptual phenomena that occur around the time of a saccade, such as peri-saccadic mislocalization or saccadic suppression of displacement, have often been linked to mechanisms of spatial stability. These phenomena are usually regarded as errors in processes of trans-saccadic spatial transformations and they provide important tools to study these processes. However, a true understanding of the underlying brain processes that participate in the preparation for a saccade and in the transfer of information across it requires a closer, more quantitative approach that links different perceptual phenomena with each other and with the functional requirements of ensuring spatial stability. We review a number of computational models of peri-saccadic spatial perception that provide steps in that direction. Although most models are concerned with only specific phenomena, some generalization and interconnection between them can be obtained from a comparison. Our analysis shows how different perceptual effects can coherently be brought together and linked back to neuronal mechanisms on the way to explaining vision across saccades.

Keywords: visual stability, computational model, peri-saccadic mislocalization, saccadic suppression of displacement

1. Introduction

Our visual system allows us to sample the environment with very high resolution by shifting gaze from one location to the next. For example, if we scan the surface of a particular object with rapid eye movements, called saccades, we can enjoy the richness of details in the structure of this object. While the object itself appears well localized in the outside world, our retina is faced with a sequence of snapshots interrupted by blur owing to retinal slip. A long history of research has dealt with the question of how such a sequence of retinal images is transformed into our subjective experience of visual stability. Most of the offered explanations are rather abstract and remain at the descriptive level. In recent years, however, neurocomputational models have increasingly been used to provide a deeper understanding of the phenomena involved, in particular if they link experimental data to neural mechanisms or include different levels of explanation, e.g. behavioural and neural. While the ultimate explanation of perceptual stability seems still out of reach, present research addresses several different phenomena that appear related to perceptual stability. A very prominent phenomenon is the mislocalization of briefly flashed stimuli that occurs during gaze shifts and even slightly before the actual onset of the movement. The idea behind flash localization studies is to reveal those mechanisms involved in the peri-saccadic transformation that remain invisible when probed with stationary objects. Another line of research introduces changes from the pre-saccadic to the post-saccadic image to test whether such changes violate the perception of stability or rather remain unnoticed. These experiments provide hints about particular aspects that are considered by the brain to maintain spatial stability.

Electrophysiological observations have provided insight into dynamic neural changes that occur prior to gaze shifts, supporting the idea that extraretinal signals modulate visual processing around the time of a saccade. The neural changes concern the strength and spatial selectivity of the response and already affect neurons with retinocentric receptive fields, suggesting that at least part of the transformation is performed in a retinocentric reference frame. However, some studies also revealed that eye position modulates the activity of cells with retinocentric receptive fields, suggesting a mechanism to encode a stimulus simultaneously in other reference frames such as head-centred or allocentric (world-centred).

We here review a number of theories and computational models that offer explanations for some of the perceptual phenomena that arise before and during saccades. Several of these computational models can be used as tools to gain deeper insight into the underlying mechanisms and the spatio-temporal transformations that occur around saccades. While present models typically address individual phenomena rather than offering a fundamental explanation of perceptual stability, we discuss present controversies and identify potentially promising pathways that will guide us towards a more solid understanding of the subjective experience of spatial stability. We begin by briefly introducing the main empirical findings from different peri-saccadic phenomena. Thereafter, we present and discuss current models to guide future research in this field.

2. Perceptual and physiological phenomena before and during saccades

(a). Peri-saccadic shift

Stimuli that are flashed during saccades appear displaced. This has been known at least since the time of Helmholtz [1], who suggested that an effort-of-will is associated with the initiation of the eye movement and used by the visual system to predict the spatial location of a retinal flash when the eye is in motion. However, Matin et al. [2] observed that such mislocalization occurred even when the flash was presented before the eyes began to move. This effect was found when the subject was in complete darkness and relied only on the retinal signal of the flash and any available information about the eye position or eye movement. This observation has sparked a long line of research that investigated the mechanisms of flash localization before and during eye movements in darkness [3–9]. The typical finding across those studies was that flashes that are presented for 10–50 ms within a time window of 100 ms before and up to 100 ms after a saccade are systematically mislocalized (figure 1a). Since the magnitude and time course of this mislocalization are independent of the position of the flashed stimulus, we call this mislocalization pattern a peri-saccadic shift. Prior to saccade onset, this shift is in the direction of the saccade. During the saccade, the shift reverses in direction, although the strength of the reversal varies in different datasets. For small saccades, the shift appears to be a constant fraction of the saccade amplitude, but for larger amplitudes the amount of shift saturates [10]. The shift is best observed for very brief flashes of a few milliseconds in duration because it decreases in magnitude with increasing flash duration [10].

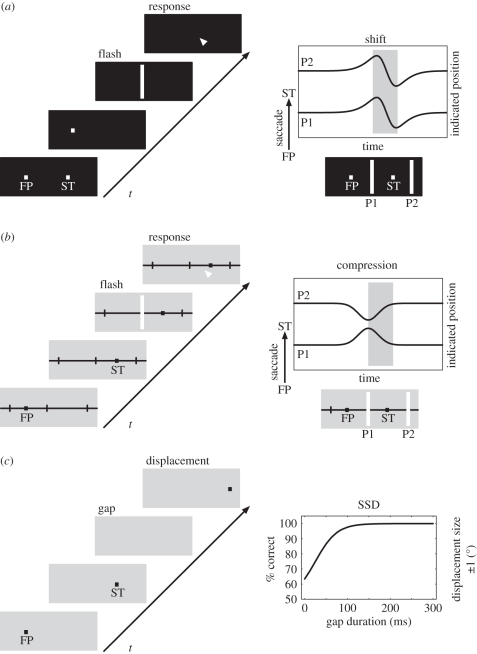

Figure 1.

Peri-saccadic shift, compression and suppression of displacement. (a) Illustration of a localization experiment performed in darkness. While the subject fixates the fixation point (FP), a saccade target (ST) is briefly presented. After the offset of the FP, the subject performs an eye movement to the memorized target location. Around the time of the eye movement, a brief flash is presented. After the saccade, the flash has to be localized with a pointing device or by a second eye movement. The result (shown on the right) is a peri-saccadic shift first in and later against saccade direction regardless of flash position (e.g. P1 and P2). (b) Illustration of a localization experiment performed with background illumination and clear visual references. The procedure is identical to (a), except that a ruler marking the saccade target position is present throughout the experiment. The result shows peri-saccadic compression since both flash positions P1 and P2 are mislocalized towards the saccade target. (c) Illustration of an experiment investigating saccadic suppression of displacement. A saccade has to be made from FP to ST. During the saccade, the target (ST) is briefly extinguished (gap period) and then reappears displaced in or against the direction of the eye movement. The subject reports the direction of the displacement. The results show that the displacement is veridically perceived only for gap durations of more than 100 ms. If there is no gap, subjects fail to notice the jump and cannot report its direction.

(b). Peri-saccadic compression

Other studies of peri-saccadic localization showed mislocalization that was spatially non-uniform [11–13]. Studies by Ross et al. [14] and Morrone et al. [15] showed an apparent compression of spatial positions so that they cluster around the saccade target. This peri-saccadic compression is different from peri-saccadic shift (figure 1b). One difference lies in its time course that begins about 50 ms before saccade onset, peaks close to saccade onset and ends at saccade offset. Thus, the time window of compression is typically shorter than the one of the shift. Other differences lie in the sensitivity to contrast and luminance of the flashed stimulus. While the peri-saccadic shift is typically observed under conditions of total darkness, peri-saccadic compression is best seen under illuminated conditions. The critical factors in illuminated conditions have been addressed by a number of studies. The presence of post-saccadic stimuli increases the amplitude of compression, which has been interpreted in favour of a post-saccadic reference theory [16]. Compression has also been observed when references are removed by a translucent shutter but diffuse light remains available [17]. Thus, post-saccadic visual references appear to influence compression indirectly by facilitating the localization of the saccade target [18] rather than being causally involved in generating compression. The dependence of peri-saccadic compression on contrast was observed in an experiment in which the contrast of the probe to the background was varied [19]. In this experiment, the screen background was dark grey (luminance 13.2 cd m−2) while the probes had luminance between 14.3 and 61.3 cd m−2. The strength of apparent compression varied with stimulus contrast such that strongest compression was observed at lowest contrast. Subsequent experiments aimed at exploring the exact difference between compression and shift using variations of stimulus luminance in darkness [20]. 
Peri-saccadic mislocalization was measured with near-threshold stimuli and above-threshold stimuli in dark-adapted and light-adapted subjects. The data could be interpreted as a superposition of shift and compression, or alternatively as a position-dependent shift. In both adaptation states, near-threshold stimuli gave stronger compression than above-threshold stimuli.

Compression is a two-dimensional phenomenon and occurs both along and orthogonal to the eye movement direction. Kaiser & Lappe [21] demonstrated this in an experiment with probes of small red dots on a green background arranged in a grid of 24 positions around the saccade target. The peri-saccadic mislocalization of these probes showed a clear two-dimensional pattern such that stimuli flashed far in the periphery were mislocalized in oblique directions towards the saccade target.

The strength of compression depends not only on visual but also on motor parameters. For example, compression correlates with eye speed such that higher speed results in stronger compression [22]. When visual target information and motor control are put into conflict, such as in anti-saccade [23] or saccadic adaptation [24,25] paradigms, compression occurs towards the motor endpoint.

(c). Saccadic suppression of displacement

The studies discussed above used briefly flashed stimuli to reveal peri-saccadic transformations that are invisible for the experimenter when using stable stimuli, i.e. stimuli that are present before, after and during the saccade. A more direct test to probe the subjective experience of stability uses displacements of the visual image during the saccade. During fixation, a small displacement of an object is easily detected since it triggers the response of motion detectors, indicating a change in the scene. Since the sensitivity to luminance changes is partly reduced during eye movements, commonly referred to as saccadic suppression [26–28], subjects cannot rely on the motion cue when the displacement is carried out during the saccade. Thus, the displacement must rather be detected by comparing the pre-saccadic with the post-saccadic stimulus location. Unlike during fixation, a small displacement of a target stimulus during a saccade is often not noticed after the eye movement [29,30]. In such experiments, a target stimulus that is initially fixated jumps to a new location, and subjects have to follow the jump with a saccade. Between saccade onset and saccade end, the target stimulus is then displaced to the left or right. If the intra-saccadic displacement is small, the displacement is not detected. This phenomenon is termed ‘saccadic suppression of displacement’. A poor trans-saccadic transfer of location information does not explain the failure to perceive the displacement, since the displacement is easily detected in the so-called post-saccadic blanking paradigm [30]. In this version of the task, the saccade target disappears after saccade onset and reappears at its displaced position 200 ms after saccade end. Subjects then easily detect that the target reappeared at a displaced location (figure 1c). 
Studies with natural scenes revealed that target object displacements can be better detected than displacements of the background, which indicates that subjects are more sensitive around the saccade target region [31]. Interestingly, the blanking of objects also affects perception without eye movements [32]. When two objects are blanked and one of them is displaced during the blank, the first object that reappears is perceived as stable, independent of whether it had been displaced or not.

(d). Peri-saccadic modulation of receptive fields

In the brain, the different views collected across eye movements are not simply processed in a feed-forward manner and then at one location merged into a global canvas of our environment. The brain rather appears to alter perception around eye movements based on extraretinal, anticipatory signals. The term extraretinal signal is a common denominator for a number of different non-visual signal sources that may inform the brain about the occurrence of an eye movement or of the current eye position such as the efference copy [33] and the corollary discharge [34]. Corollary discharge and efference copy are copies of the oculomotor command that are fed back to the perceptual pathways of the brain. The target location of saccadic eye movements is primarily controlled by the superior colliculus (SC) and the frontal eye field (FEF). They consist of cells that integrate the visual information (visuomovement or build-up) and others that respond just prior to saccade onset (movement or burst). The FEF has a feed-forward projection to the SC, but both project to the brainstem that controls the motor response. However, the saccadic eye-displacement command from the SC provides a corollary discharge to the FEF [35]. Thus, the SC informs the FEF about upcoming saccades by projecting the activity of related cells to the FEF where they converge on cells that are similar in type (e.g. burst, build-up). The SC uses a retinocentric coordinate system. Therefore, the saccadic eye displacement is both an encoding of the saccade amplitude and an encoding of the saccade target location.

Almost 20 years ago, it was observed that neurons in the lateral intraparietal (LIP) area start responding to a stimulus presented in their future receptive field just before the eye movement [36]. The future receptive field is the region in visual space where the receptive field of the neuron will be located after the eye movement. Typically, a probe is presented in the centre of the future receptive field of a given cell. If the cell gets activated by the probe either before the eye movement or with a latency (relative to saccade onset) that is shorter than the latency that would be expected for the current receptive field, this cell is considered a predictive remapping cell. The activation of the cell by a stimulus in the future receptive field has been interpreted as a remapping of the receptive field from the current to the future position shortly before the saccade. However, the term remapping of receptive fields already suggests a particular interpretation of the data since a receptive field implies the dynamic routing of bottom-up signals rather than a top-down activation. The term (classical) receptive field refers to the area in space where a stimulus has to be presented to drive the response of a given cell under the assumption that the chosen stimulus is optimal for activating the cell at all. The receptive field borders are typically mapped by presenting the stimulus on an imaginary grid of different locations and sometimes a threshold is applied to determine the exact border. If a cell responds to a stimulus that is now presented displaced by the vector of the eye movement, outside the classical receptive field, the above definition of a receptive field suggests a shift of the receptive field. However, detailed mappings of receptive fields using a grid of probes have not been done in those studies. 
While the term receptive field shift is correct in the framework of the given definition, it nevertheless implies a feed-forward processing from the sensor to the neuron. An alternative concept would be a feedback activation from other cells at the same hierarchy or from even later areas. If the receptive field is more strictly defined only as describing the feed-forward pathway, the receptive field would not remap, but an internal update by lateral or feedback projections would take place.
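The geometry behind the term future receptive field, and the latency criterion for a predictive remapping cell, can be sketched in a few lines. This is only an illustration of the definitions given above: the function names, the coordinate convention (screen coordinates in degrees) and the example values are assumptions made here and do not come from any of the cited studies.

```python
from typing import Tuple

Vec = Tuple[float, float]  # (horizontal, vertical) position in degrees


def receptive_field_centres(fixation: Vec, saccade_target: Vec,
                            rf_offset: Vec) -> Tuple[Vec, Vec]:
    """Return (current_rf, future_rf) centres in screen coordinates.

    rf_offset is the retinocentric position of the receptive field centre,
    i.e. its position relative to the fovea (hypothetical value).
    """
    current_rf = (fixation[0] + rf_offset[0], fixation[1] + rf_offset[1])
    # After the saccade the fovea sits on the target, so the same retinal
    # offset now covers a region displaced by the saccade vector.
    future_rf = (saccade_target[0] + rf_offset[0],
                 saccade_target[1] + rf_offset[1])
    return current_rf, future_rf


def is_predictive_response(response_onset_ms: float,
                           expected_visual_latency_ms: float) -> bool:
    """Classify a response to a probe in the future receptive field.

    response_onset_ms is measured relative to saccade onset. The response
    counts as predictive if it starts before the saccade, or earlier than
    the normal visual latency would allow after the saccade.
    """
    return (response_onset_ms < 0.0
            or response_onset_ms < expected_visual_latency_ms)


current_rf, future_rf = receptive_field_centres(
    fixation=(0.0, 0.0), saccade_target=(10.0, 0.0), rf_offset=(5.0, -3.0))
print(current_rf, future_rf)
```

Note that this sketch only describes where a probe has to be placed and when a response counts as predictive. It is neutral with respect to the question raised above, namely whether the response reflects a feed-forward shift of the receptive field or an internal update through lateral or feedback projections.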

Such predictive responses have been reported in the LIP area [36–38], the SC [39], the FEF [40,41] and even earlier areas like V3 and V3A [42]. Neurons in some of these areas show an increase in responsiveness at the future receptive field accompanied by a decrease in sensitivity at the present receptive field [38,42]. According to Sommer & Wurtz [41], neurons in the FEF mostly remain responsive at the present receptive field. The dynamic receptive field changes in the FEF have been linked to a corollary discharge signal from the SC carrying the information about the impending saccade [41].

Neurons in monkey V4 also show dynamic receptive field changes around the time of a saccade [43]. In this study, the receptive fields of V4 neurons were mapped with a grid of stationary stimuli during fixation and around eye movements. Like the receptive field dynamics described above, these receptive field changes begin prior to saccade onset, but on average, they do not shift parallel to the saccade, but towards the saccade target.

(e). Eye position gain fields and cranio-centric encoding

Neurons in many cortical areas within the dorsal stream of the macaque (areas V3A, V6, MT, MST, LIP and 7A) modulate their firing rate as a function of eye position [44–49]. This modulation has been described as an eye position gain field. Neurons with eye position gain fields carry information not only about the retinal location of a stimulus but, implicitly in the firing rate, also about the location with respect to the head. In addition to many parietal areas, eye position gain fields have also been found in the premotor cortex [50,51], the frontal [52] and supplementary eye fields [53] and the SC [54]. Neurons in some areas even have cranio-topic or head-centred receptive fields that follow head coordinates when eye position changes. These have been observed in V6 [55], VIP [56] and premotor cortex [57]. Little is known about the temporal dynamics and the source of eye position signals. Eye position information may be provided by efference copy signals from oculomotor nuclei or alternatively by proprioception of the eye muscles [58,59].
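How a gain field can carry head-centred information implicitly in the firing rate may be illustrated with a minimal sketch. It assumes a Gaussian retinal tuning curve multiplied by a gain that varies linearly with eye position, and a pair of otherwise identical neurons with opposite gain slopes. The functional form, the parameter values and the decoder are assumptions chosen for illustration and are not taken from the cited recordings.

```python
import math


def gain_field_response(retinal_pos, eye_pos, gain_slope,
                        rf_centre=0.0, rf_width=5.0):
    """Firing rate (arbitrary units) of a hypothetical gain-field neuron.

    The retinal tuning is Gaussian; eye position scales the response
    multiplicatively without moving the retinocentric receptive field.
    """
    tuning = math.exp(-0.5 * ((retinal_pos - rf_centre) / rf_width) ** 2)
    gain = max(0.0, 1.0 + gain_slope * eye_pos)  # rates cannot be negative
    return tuning * gain


def decode_head_centred(retinal_pos, r_plus, r_minus, gain_slope):
    """Recover the head-centred position from two opposite gain fields.

    The normalized rate difference cancels the retinal tuning and leaves
    gain_slope * eye_pos (valid while neither gain is clipped at zero and
    the stimulus drives both cells).
    """
    eye_estimate = (r_plus - r_minus) / (r_plus + r_minus) / gain_slope
    return retinal_pos + eye_estimate


retinal_pos, eye_pos, slope = 3.0, 10.0, 0.02  # degrees, degrees, 1/degree
r_plus = gain_field_response(retinal_pos, eye_pos, +slope)
r_minus = gain_field_response(retinal_pos, eye_pos, -slope)
print(decode_head_centred(retinal_pos, r_plus, r_minus, slope))  # ~13.0 deg
```

The decoder assumes that the retinal position is available from the retinotopic location of the active cells. The point of the sketch is only that a retinocentric population with eye position gain contains enough information to read out a head-centred position.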

3. Theories and computational models of peri- and trans-saccadic perception

The various localization errors and perceptual slips that are associated with saccades provide a valuable database to develop and test concepts of peri-saccadic visual processing. In the following, we discuss a number of theories and computational models of peri- and trans-saccadic perception. These approaches can be differentiated by the way they combine visual with extraretinal signals. Conceptions in which the extraretinal information arises from central mechanisms that generate a saccade, such as the efference copy or corollary discharge, are often referred to as outflow theories. Usage of proprioceptive information has been the claim of inflow theories of spatial stability [60,61].

Several theories, among them the optimal trans-saccadic integration [62], spatial localization by subtraction [63,64], coordinate transformation by gain fields [65,66] and some models of retinocentric spatial updating by gain fields [67], assume the existence of a continuous internal eye position signal. Often this signal is assumed not to directly encode the actual eye position but a rather sluggish version of it that starts already before the eye movement and reaches its final position after the eyes have already landed. While eye position signals have been identified in the brain as discussed earlier, an eye position signal with such spatio-temporal characteristics has not been directly observed so far.

Corollary discharge, which encodes eye displacement and not eye position, has been used in models to remap visual information by the amplitude of the saccade [68,69], and to enhance visual processing of the saccade target via attentional gain changes [70]. By back-projection from the SC to the FEF via the thalamus, corollary discharge merges with the more visual-related signals in the FEF and from there on, the merged signal spreads to various visual areas. Thus, corollary discharge can also affect spatial attention [70].

The object reference theory [30,71] suggests an approach that avoids the need for any extraretinal signal. It rather suggests a trans-saccadic memory of reference stimuli, which is used after the saccade to align the pre-saccadic view to the post-saccadic one.

The models can also be categorized based on their level of abstraction. Models relying on a continuous, sluggish eye position signal are rather abstract conceptualizations since they do not refer to particular brain areas and signals that have been measured. Some more recent models try to incorporate only those signals that have been observed [69,70]. Moreover, some models also consider anatomical details such as receptive field size and cortical magnification [70].

None of the models explains all the discussed phenomena at the same time. The following description is organized by their use of the different extraretinal signals. We will begin with the object reference theory, which operates even without any extraretinal signal.

(a). Object reference theory

The object reference theory [30] and the saccade target theory [31,72] rely on visual information for space constancy and ascribe a particular role to the saccade target. They suggest that most of the visual information before the saccade is discarded, and that a new representation of the visual world is generated from new incoming information after the saccade [73]. Only some information from the pre-saccadic image, particularly the position and properties of the saccade target, is encoded in trans-saccadic memory. After the saccade, the visual system searches for the target near the landing point of the eye. If the target is found, its location is matched with its stored position, and the world is considered stable. The basic assumption in this theory is that the perceptual world remains stable if nothing changes during the saccade. The presence of the saccade target near the fovea after the saccade is considered sufficient evidence that nothing has changed and that the world has remained stable. If the post-saccadic target location cannot be established, however, the assumption of visual stability is dropped. In this case, the visual system is forced to use extraretinal signals to re-calibrate the visual scene with respect to current gaze direction.

The reference object theory offers a direct explanation for the saccadic suppression of image displacement because it contains a spatio-temporal window around the saccade target in which displacements of the target are tolerated without noticing any instability [30]. The theory moreover explains the paradoxical target blanking effect in which displacements become apparent when the target is blanked after the saccade: because the target could not be found after the saccade, the assumption of stability is broken, and displacements become visible [30].

Perhaps somewhat surprisingly, the post-saccadic visual reference object need not be identical or visually very similar to the saccade target. Instead of the target, an extended background or an object near the saccade target may serve as reference, provided it is visually available immediately after the saccade [74]. Even visually dissimilar objects that are present after the saccade can serve as reference objects [75]. The current version of the reference object theory thus claims that visual stability is maintained as long as some object is found around the fovea immediately after the saccade [71,75]. However, the theory has difficulty explaining the observation that a pre-saccadic target gap also facilitates displacement detection [30].

Despite the elegance and simplicity of this theory, the reference object theory has so far not been described in algorithmic terms or linked to physiological phenomena. While the descriptive model appears very simple, a computational solution would have to cope with algorithms that allow for localizing the target object around the fovea and with a decision stage that determines whether a target object is present or not.
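To make the required decision stage concrete, the following toy sketch implements one possible reading of the spatio-temporal window. It is not an implementation proposed by the authors of the theory: the function name, the representation of post-saccadic objects as (eccentricity, onset delay) pairs and both threshold values are hypothetical, and the hard problem of localizing candidate objects in the image is assumed to be solved already.

```python
from typing import Iterable, Tuple


def post_saccadic_decision(objects: Iterable[Tuple[float, float]],
                           search_radius_deg: float = 2.0,
                           max_delay_ms: float = 50.0) -> str:
    """Decide how to treat the scene after a saccade.

    objects: (eccentricity_deg, onset_delay_ms) pairs, where eccentricity
    is the distance from the fovea after landing and onset_delay_ms is the
    time after saccade end at which the object becomes visible.
    Both thresholds are hypothetical placeholders.
    """
    for eccentricity_deg, onset_delay_ms in objects:
        inside_window = (abs(eccentricity_deg) <= search_radius_deg
                         and onset_delay_ms <= max_delay_ms)
        if inside_window:
            # A reference object was found: keep the stability assumption,
            # so a small displacement of that object goes unnoticed.
            return "assume_stable"
    # No reference object in the window: drop the stability assumption
    # and fall back on extraretinal signals to recalibrate.
    return "use_extraretinal_signals"


# Target displaced by 1 deg during the saccade, visible at landing.
print(post_saccadic_decision([(1.0, 0.0)]))
# Same displacement, but the target reappears 200 ms after saccade end.
print(post_saccadic_decision([(1.0, 200.0)]))
```

In this reading, suppression of displacement and the blanking effect follow from the same rule, and any object inside the window can act as the reference, regardless of its similarity to the saccade target.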

(b). Optimal trans-saccadic integration

Whereas the object reference theory proposes that extraretinal signals are needed only in limited conditions, optimal trans-saccadic integration suggests that peri-saccadic perception represents the optimal solution to the integration of noisy visual and extraretinal signals in a Bayesian formalism [62]. Thus, the suppression of saccadic displacement is not a flaw in the mechanism of visual stability but the optimal perceptual response to stimuli that are usually not displaced. Using a Bayesian approach, the prior probability of a target displacement is combined with visual and extraretinal signals about stimulus and eye position to determine the optimal estimate of the stimulus location. Given a stimulus displacement, the probability density function of the sensory estimate for the displacement is determined from the joint probability of the saccade scatter, a hypometric internal eye position signal and the retinal stimulus position. Since the hypometric eye position deviates peri-saccadically from the true eye position, the sensory estimate of the displacement is distorted. Under normal conditions of a stationary environment, in which objects do not jump during saccades, the prior probability distribution of a target jump is sharply tuned. In this case, the prior knowledge dominates the final estimate so that the displacement is not perceived. To explain the post-saccadic blanking effect, however, optimal trans-saccadic integration needs to assume a change in the prior probability density distribution from a sharply tuned to a more broadly tuned distribution. Thus, the model assumes that the blanking paradigm leads to more uncertainty about the object position.

The model predicts that the suppression of saccadic displacement is more prominent for displacements parallel to the saccade than orthogonal to it and that the magnitude of unnoticed displacements increases with increasing post-saccadic scatter of eye positions. Both predictions have been confirmed experimentally [62,76].

Optimal trans-saccadic integration critically depends on the prior probability density distribution. Since the mechanisms that determine the prior probability density distribution are not part of the model, the prior probability is a free parameter that allows the model to be flexibly fitted to data. Future studies are needed to find potential neural correlates of the prior.
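The influence of the prior can be illustrated with a strongly simplified sketch. It assumes a zero-mean Gaussian prior over target displacement and lumps all sensory uncertainty (saccade scatter, eye position signal and retinal position) into a single Gaussian likelihood, which is a simplification of the model in [62]. The function name and all numerical values are assumptions chosen for illustration.

```python
def posterior_displacement(sensed_displacement: float,
                           sensory_sd: float,
                           prior_sd: float) -> float:
    """Posterior mean of the target displacement (degrees).

    Gaussian likelihood centred on the sensed displacement, combined with
    a Gaussian prior centred on zero displacement. The estimate is the
    sensed value shrunk towards zero by a reliability weight.
    """
    weight = prior_sd ** 2 / (prior_sd ** 2 + sensory_sd ** 2)
    return weight * sensed_displacement


# Sharply tuned prior (stationary world): a 1 deg jump is almost ignored.
sharp = posterior_displacement(1.0, sensory_sd=1.0, prior_sd=0.2)
# Broad prior (assumed for the blanking paradigm): the jump is perceived.
broad = posterior_displacement(1.0, sensory_sd=1.0, prior_sd=5.0)
# Larger sensory scatter with the sharp prior: even stronger suppression.
noisy = posterior_displacement(1.0, sensory_sd=2.0, prior_sd=0.2)

print(round(sharp, 3), round(broad, 3), round(noisy, 3))
```

With these assumed values, the same sensed displacement yields an estimate near zero under the sharp prior and an estimate near the sensed value under the broad prior, which is why the width of the prior acts as the decisive free parameter.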

(c). Spatial localization by subtraction

A class of models describes how visual stimuli can be localized across a saccade by combining retinal input with extraretinal eye position signals. These models describe how retinocentric representations can be transformed into an eye-position-invariant reference frame when the head and body remain stable. In this case, a particular retinal stimulation site, for example, 10° left of the fovea, together with a particular eye position, for example, 4° leftward deviation from the rostro-caudal axis, characterizes a stimulus that is 14° to the left of the rostro-caudal axis in space. If the visual system keeps an accurate representation of eye position, such a simple calculation would allow inference of the location of objects at any time, given that the neural representation of the object is clearly located in retinocentric space and time.

However, the observation that briefly flashed stimuli are mislocalized even just before a saccade shows that such an accurate transformation does not take place during eye movements. The peri-saccadic shift has been taken as evidence that the internal representation of eye position is erroneous around the time of a saccade [6,7,9,13,77–80]. For example, to explain the observed mislocalization first in and later against the direction of the saccade, it has been proposed that in the first phase the extraretinal eye position signal precedes the actual eye movement, that is, indicates eye position change even before saccade onset, and in the second phase lags behind the actual eye movement (figure 2, left). This would be an extraretinal (exR) signal that is anticipatory but sluggish with respect to the actual saccade. In the first phase, a stimulus at a particular retinal location would appear shifted in saccade direction because the eye position signal already signals a change in eye position. In the second phase, the same stimulus would appear shifted against the saccade direction because the eye position signal underestimates the true change in eye position.
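The two phases described above can be reproduced with a minimal simulation. It assumes piecewise-linear time courses for both the eye and the internal eye position signal, a retinal signal that coincides with the flash, and a perceived location equal to retinal position plus internal eye position. The saccade amplitude, its duration, the lead of 80 ms and the settling time of 100 ms are hypothetical values chosen for illustration.

```python
def ramp(t_ms: float, start_ms: float, end_ms: float, amplitude: float) -> float:
    """Linear ramp from 0 at start_ms to amplitude at end_ms."""
    if t_ms <= start_ms:
        return 0.0
    if t_ms >= end_ms:
        return amplitude
    return amplitude * (t_ms - start_ms) / (end_ms - start_ms)


def actual_eye_position(t_ms, amplitude=10.0, duration_ms=50.0):
    """True eye position (deg); the saccade starts at t = 0."""
    return ramp(t_ms, 0.0, duration_ms, amplitude)


def internal_eye_position(t_ms, amplitude=10.0, lead_ms=80.0, settle_ms=100.0):
    """Anticipatory but sluggish extraretinal signal (hypothetical timing)."""
    return ramp(t_ms, -lead_ms, settle_ms, amplitude)


def localization_error(t_flash_ms, flash_pos=5.0, amplitude=10.0):
    """Perceived minus true flash position (deg); positive values are
    in the saccade direction."""
    retinal_pos = flash_pos - actual_eye_position(t_flash_ms, amplitude)
    perceived = retinal_pos + internal_eye_position(t_flash_ms, amplitude)
    return perceived - flash_pos


for t in (-200, -40, 0, 25, 50, 150):
    print(t, round(localization_error(t), 2))
```

In this sketch the error equals the difference between the internal and the actual eye position, so it does not depend on where the flash is presented, in line with a uniform peri-saccadic shift. It is positive before saccade onset, changes sign during the saccade and returns to zero once the internal signal has reached the final eye position.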

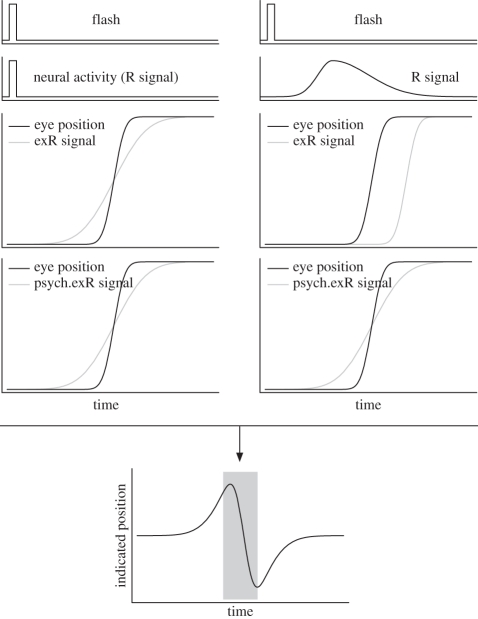

Figure 2.

Two different models of spatial localization by subtraction. They address how the interaction between the retinal signal of a flash and an extraretinal eye position signal can predict peri-saccadic shift following Honda [6] (left) and Pola [63] (right). In the model on the left, the retinal signal of the flash (R signal) is assumed to match the flash in timing and duration. The extraretinal eye position signal (exR signal) is assumed to be anticipatory but sluggish, i.e. it starts to develop before the true eye movement onset but does not arrive at the true final eye position until after the saccade is finished. Therefore, as shown in the bottom centre graph, the flash is mislocalized first in and later against the saccade direction. In the model on the right, the flash leads to retinal activation that has a certain onset latency and that lasts much longer than the flash itself. The extraretinal eye position signal is assumed to be a faithful representation of the actual eye movement but also to arrive in the system with a certain delay. The combination of the two signals leads to the same prediction as the model on the left, namely a mislocalization that is first in and then against saccade direction.

Most models of this type have assumed an eye position signal that varies continuously and provides essentially a rate code of eye position. It is alternatively possible to combine two competing gaze-related signals, one indicating gaze in the pre-saccadic direction and the other indicating gaze at the future or intended post-saccadic position [81]. Before the saccade, the activation of neurons at the current fixation decreases, and neurons representing the saccadic target begin to respond. A maximum-likelihood estimate of eye position from the two signal strengths then provides a gradual signal of eye position during the saccade.

Pola [63,82] presented a computational model and argued that the temporal properties of the retinal signal have to be taken into account. Important temporal properties are the time it takes from stimulus onset to activate a certain neural population, i.e. the stimulus latency, and the duration for which activity is maintained in neural populations after stimulus offset, i.e. the neural persistence. Pola's model from 2004 explicitly uses a time delay and dispersed visual activity to compute a realistic temporal behaviour of the retinal and extraretinal signals (figure 2, right). Both are combined with a so-called psychophysical extraretinal signal that is compared with the actual eye position to determine the degree of mislocalization. He discusses several model types that differ in the time delays and lags for these signals. When the retinal signal is modelled with a realistic delay and persistence and the extraretinal signal anticipates the eye movement, mislocalization in the model starts much too early. Thus, a fundamental prediction of his model is that the extraretinal signal starts only after saccade onset. This allows us to include inflow (proprioceptive) information in the extraretinal signal that earlier accounts had tried to disprove. However, this prediction must be evaluated in the face of the decision criterion used. Pola [63] takes the part of the stimulus trace that is shifted away from the veridical retinal position as evidence for mislocalization. The fact that perceptual decision-making is also a temporal process could play a substantial role. If perceptual decision-making is a competitive process that starts with stimulus onset, most of the stimulus trace would initially support the veridical retinal position, and evidence for the non-veridical position would only emerge later owing to the interaction of the stimulus response with the extraretinal eye position signal. 
Thus, the claim that more detail about the stimulus response in the form of delay and persistence predicts a rather late extraretinal signal might not hold if one also models perceptual decision-making as a detailed temporal process.

Binda et al. [64] developed a related model that also implements a realistic timing of visual and extraretinal signals. They model the spatial distortions of a stimulus response by a shifting eye position signal and emphasize the explanation of spatio-temporal distortions in topographic maps. In the input layer, the retinal signal is encoded by a linear operator that models temporal delay and lag and spatial distribution. A remapping encoding layer implements the shift according to an extraretinal signal. This is followed by a second encoding stage that introduces further temporal delay and lag. Finally, a decoding stage locates the stimulus in space and time by applying a hard threshold and convolving with templates similar to the linear operators of the encoding stages. In addition to spatial mislocalization, this model also allowed simulation of temporal distortions that occur around a saccade.

None of the above models predicts peri-saccadic compression. Since they rely on an extraretinal eye position signal, they predict that the magnitude of the mislocalization should depend on saccade parameters. In particular, larger saccades should result in larger errors. However, Van Wetter & Van Opstal [10] found that the magnitude of the mislocalization saturates for larger saccadic amplitudes, which is difficult to accommodate in this class of models. At present, the data of Van Wetter & Van Opstal [10] have not been accounted for by any model.

(d). Remapping of receptive fields for the subjective experience of visual stability

The theory of remapping has been developed from the physiological observations that neurons become responsive in anticipation of the upcoming saccade to stimuli presented in their future receptive field location [36,41,83,84]. It posits that the subjective experience of spatial stability is solved within retinocentric coordinates. In order to compensate for obvious displacements of the visual input in retinocentric reference frames owing to saccades, it has been suggested that the processing of stimuli at the future receptive field position can already start prior to saccade onset, i.e. before the arrival of the post-saccadic retinal information, and thus ensures visual stability and the continuity of visual perception [38,42]. Visual stability could then be explained on the basis of local detectors that compare the response at the future position before the eye movement with the one after the eye movement. Two successive increases of activity would argue for a stable environment since they simply reflect the response towards one and the same object before and after the saccade [84]. Just a single increase in activity would be the result of the occurrence of a new object [84].

Kusunoki & Goldberg [38] speculated that the vector encoded in the corollary discharge is (partially) added to the receptive field position. This concept of remapping requires an extraretinal signal that is distributed across the whole visual space and has the ability to change the effective connectivity of neurons in retinocentric maps. Such an explicit model of remapping has been proposed by Quaia et al. [68]. This model assumes communication between the FEF and the LIP to shift receptive fields along the saccade direction within area LIP. Each LIP cell is connected with other LIP cells at different locations throughout the retinotopic map. The strength of the connection between any two LIP cells is modulated by feedback connections of oculomotor neurons in the FEF. An FEF cell that encodes a certain saccade amplitude modulates the connection of the LIP cell that is excited by the current stimulus with the LIP cell that represents the future receptive field. Only the LIP–LIP connections that match the current saccade amplitude become activated because the activations of the LIP–LIP connection and the FEF–LIP connection interact multiplicatively. Thus, the activation of the current stimulus is routed to the LIP neurons at the future receptive field location by selective gating from the FEF. The model requires a massive interconnection within LIP and between LIP and FEF and also involves a number of different cell types and synaptic mechanisms to account for the temporal properties of the remapped signals. While this model provides a putative architecture consistent with the observation of the predictive response to stimuli in the future receptive field, it does not address perceptual stability.
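The multiplicative gating principle can be sketched in a few lines. This is a strongly simplified, one-dimensional illustration and not the network of Quaia et al. [68]: the function name `remap`, the dictionary representation of the maps and the omission of all temporal dynamics and cell types are assumptions made here for clarity. Activity at retinal position p is routed to position p − s, gated by the activity of the hypothetical FEF unit that encodes saccade amplitude s.

```python
def remap(lip_activity, fef_activity):
    """Route LIP activity through FEF-gated LIP-LIP connections (1D sketch).

    lip_activity: dict mapping retinal position (deg) -> activity
    fef_activity: dict mapping saccade amplitude (deg) -> activity

    A stimulus at retinal position p will lie at p - s after a saccade of
    amplitude s. The connection p -> p - s transmits the product of the
    LIP activity and the FEF activity for s (multiplicative gating).
    """
    remapped = {}
    for pos, act in lip_activity.items():
        for sacc, gate in fef_activity.items():
            future_pos = pos - sacc
            remapped[future_pos] = remapped.get(future_pos, 0.0) + act * gate
    return remapped


if __name__ == "__main__":
    lip = {10.0: 1.0}            # stimulus at 10 deg on the retina
    fef = {20.0: 1.0, 5.0: 0.0}  # only the 20 deg saccade is planned
    print(remap(lip, fef))
```

Only the connection that matches the planned saccade carries activity, whereas all other connections stay silent. The sketch also makes the cost of the scheme apparent: every map position needs a gated connection for every possible saccade vector.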

The exact function of the predictive responses in the future receptive field is still not fully clear. Aside from influencing perception, a putative role in motor control, sensorimotor adaptation and spatial memory has been discussed [85]. Although the idea is appealing, the theory of remapping for visual stability still requires an in-depth explanation of how the change in receptive fields allows a subject to perceive the world as stable. For example, how could the increase in sensitivity in the future receptive field allow a subject to perceive an object as stable? Computational models providing potential solutions to this question would certainly be of high interest.

(e). Anticipatory saccade target processing by spatial re-entry

The corollary discharge encoding the saccade target may not only be used for remapping but also for enhancing perception at the future foveal location. According to this anticipatory saccade-target-processing model, an oculomotor signal, e.g. in the FEF, that emerges during spatial selection and encodes the internal representation of the saccade target, feeds back to visual areas and changes the gain of neurons at the visual locations of the motor fields. This framework emphasizes the role of re-entrant processing in visual perception. It links eye movements naturally to spatial attention since attention is explained as an emergent result of interactions between brain areas [86]. In most models of attention, the interaction between attention and eye movements remains abstract, since attention is simply reduced to a selection in an arbitrary saliency map, which may or may not correspond to a particular brain area. Here, attention is explained by re-entrant processing loops. As far as spatial attention is concerned, the FEF is one particular brain area that is in the position to establish such loops owing to its connectivity with other brain areas [86].

The core mechanism of the spatial re-entry model, a local gain increase, has been demonstrated in V4 while stimulating the FEF using currents below the level that evokes a saccade [87]. Although the FEF pathways projecting deeper into the motor areas become separated from those that project back to visual areas [88], it is well established that prior to the eye movement, the cells processing the saccade target area become more enhanced than cells processing other parts of the visual scene [89]. The model is also consistent with the observation that immediately before a saccade, attention is locked at the saccade target and discriminability is increased in this region [30,90–94]. Since the FEF is tightly linked to the SC by a back-projection from the SC through the thalamus [41], this framework also acknowledges a significant role of the corollary discharge in peri-saccadic perception.

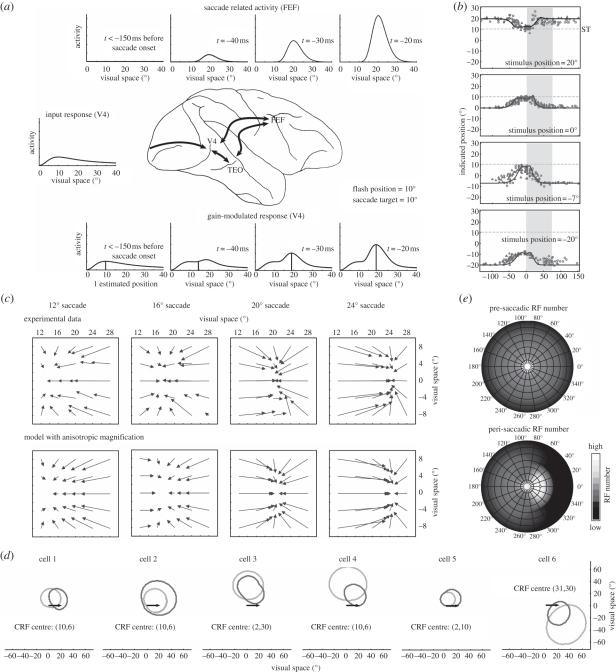

The framework has been implemented in a number of computational models [86,95,96] to explain attentive phenomena. Among others, it has been predicted that attention can be transiently split to two non-contiguous locations [96], which has been recently experimentally confirmed [97]. In Hamker et al. [70], we have formalized the earlier concepts into a detailed model of hierarchical processing. It was demonstrated that this model is able to explain the peri-saccadic compression of briefly presented stimuli towards the saccade target [15,21]. The explanation of compression by this model relies on well-accepted neural observations. First, a saccade plan leads to a build-up of neuronal activity in the SC and the FEF [89]. Second, FEF activity modulates the gain of V4 neurons [87]. As illustrated in figure 3a, the gain modulation by the FEF activity results in a distorted population response of the stimulus. For stimulus localization, the population response has simply been decoded for position relative to reference stimuli. Thus, this model uses reference stimuli only for coding of the flashed stimulus relative to another object such as the saccade target. If reference stimuli were not available, the localization of flashes during eye movements would require the use of extraretinal signals that would introduce additional errors. The mislocalization towards the saccade target, however, would be still included in the net effect. Third, the temporal period and strength of mislocalization fit well with the neural response of visuomovement and build-up neurons in SC and FEF (figure 3a). Fourth, the gain modulation in the model depends on stimulus energy [98–100] and thus is consistent with the observed dependency of mislocalization magnitude on stimulus contrast [19,20,101]. Finally, the model uses an explicit visuocortical mapping including cortical magnification [102]. 
By assuming a certain qualitative ratio of magnification along the rays and circles of constant eccentricity [103–105], the model is able to explain not only the mislocalization of spatially extended bars (figure 3b), but also the asymmetric two-dimensional spatial pattern of compression (figure 3c). The notion of cortical magnification as a crucial property to model the observed mislocalization has recently also been demonstrated by Richard et al. [101], who showed that the distance between stimulus and target in cortical space, as opposed to visual space, predicts the strength of the mislocalization.

Figure 3.

The spatial re-entry model for anticipatory saccade target processing [70]. The figure illustrates the impact of oculomotor feedback on neural populations, receptive fields and perceptual localization. (a) Population activity along the horizontal meridian of the input (middle) and the gain (bottom) stage are shown together with the feedback signal (top) in visual space. The saccade target is located at 20°. A stimulus is flashed at 10°. Long before saccade onset (t < −150 ms), the oculomotor feedback is inactive and thus, the population responses in the input and the gain stage are identical. The stimulus is seen at its true position. At t = −40 ms, the oculomotor feedback is sufficiently strong to distort the population response. The stimulus, as decoded from the population activity, is already shifted towards the saccade target. As the presentation time of the stimulus gets closer to saccade onset, the feedback signal, and thus the gain factor, increases further and the decoded stimulus position moves even closer to the saccade target. (b) Time course of compression obtained for four flash positions during a 20° saccade. Experimental data from Morrone et al. [15] is shown together with the model predictions (solid lines). The grey shaded area denotes the duration of the saccade. (c) Two-dimensional spatial pattern of compression as obtained for four saccade amplitudes. The top row shows the experimental data replotted from Kaiser & Lappe [21]. The bottom row shows the predictions of the model. (d) Examples of six model receptive fields for a fixation and a peri-saccadic epoch. In the fixation epoch, the current receptive field (CRF) was measured without the impact of oculomotor feedback. In the peri-saccadic epoch, receptive fields were measured immediately before saccade onset when the feedback signal is strongest. While cells 3, 4 and 6 show a peri-saccadic change reminiscent of V4 receptive field dynamics, i.e. 
shift and shrinkage towards the saccade target (arrows), cells 1, 2 and 5 show a pattern that is also consistent with the typical findings of predictive remapping, i.e. a shift or enlargement parallel to the saccade vector. (e) Effect of peri-saccadic receptive field changes on visual sampling density for a 20° saccade. At the top, the pre-saccadic number of receptive fields as measured in the fixation epoch are shown as a function of position in visual space. At the bottom, the peri-saccadic number of receptive fields as measured immediately before saccade onset is shown. The number of responsive cells increases in the saccade target region.

Because the visual responses of neurons in the spatial re-entry model are modulated by oculomotor feedback signals, the receptive fields in the model undergo dynamic changes before and during the saccade (figure 3d). These receptive field changes lead to an increase in the number of cells that effectively processes the saccade target region (figure 3e). Thus, the model explains the observed compression of visual space as the cost the visual system has to pay for a boosted pre-saccadic processing of the future fixation. The precise direction of the shift depends on the location of the receptive field in the space-variant retinotopic map and on the size of the saccade. Although the model was only fitted to psychophysical data, the receptive field shifts are similar to those observed in monkey area V4 [43] and surprisingly some receptive field dynamics seem to be consistent with the reported results in the remapping literature as described above [106]. The asymmetry of the two-dimensional compression arises from the visuocortical mapping. A model excluding cortical magnification predicts a simple radial pattern of compression towards the saccade target if the feedback signal is modelled as a radially symmetric Gaussian. The consideration of cortical magnification, in particular anisotropic cortical magnification, leads to the particular pattern observed. Anisotropic cortical magnification also predicts an asymmetrical shape of the feedback signal in visual space, which suggests that the spatial focus of attention is elongated rather than round, particularly for larger eccentricities.
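The core idea, a gain increase around the saccade target that distorts the population response, can be illustrated with a minimal sketch. It is not the model of Hamker et al. [70]: it works in one dimension of visual space, ignores cortical magnification, uses a simple centroid readout instead of decoding relative to reference stimuli, and all widths and the parameter `feedback_strength` are assumed values.

```python
import math


def gaussian(x, mu, sigma):
    """Unnormalized Gaussian tuning profile."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2)


def decoded_position(stim_deg, target_deg, feedback_strength,
                     sigma_stim=4.0, sigma_fb=8.0):
    """Centroid of a gain-modulated population response (1D sketch).

    Each unit at position x responds to the flash with a Gaussian profile.
    Oculomotor feedback multiplies this response by a gain of
    1 + feedback_strength * G(x; target), i.e. a gain increase centred
    on the saccade target.
    """
    positions = [0.5 * i for i in range(-40, 121)]  # -20 .. 60 deg
    weighted_sum = 0.0
    total = 0.0
    for x in positions:
        response = gaussian(x, stim_deg, sigma_stim)
        gain = 1.0 + feedback_strength * gaussian(x, target_deg, sigma_fb)
        weighted_sum += x * response * gain
        total += response * gain
    return weighted_sum / total


if __name__ == "__main__":
    for strength in (0.0, 1.0, 5.0):
        pos = decoded_position(10.0, 20.0, strength)
        print(f"feedback {strength:3.1f}: decoded position {pos:5.2f} deg")
```

Without feedback, the flash at 10° is decoded at its true position. As the assumed feedback strength grows, which in the model happens as flash time approaches saccade onset, the decoded position moves towards the saccade target at 20°.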

While it has been speculated that other phenomena, such as a translation in cortical coordinates [107] or a stretching [108] or jumping [84] of receptive fields, could also account for mislocalization of visual stimuli towards the saccade target, the spatial re-entry model seems so far the most comprehensive account for the data of peri-saccadic compression. As it stands, the model explains the above-mentioned effects in a retinocentric frame of reference. To account for effects for which transformations to other coordinate systems may play a role, like the observed compression towards the adapted saccade target position [24] or effects in total darkness [20], the model has to be extended in future studies.

As far as perceptual stability is concerned, this model rather emphasizes the role of feature and object continuity across eye movements. Compression as predicted by this model is not used for a correction of peri-saccadic artefacts to realize a stable, spatially correct representation of the external world. We suggest that the anticipatory processing of the object of interest at the saccade target position is essential for the perception of a stable world, since we already deal with the object of interest before we even look at it. Thus, oculomotor feedback enables anticipatory processing and links the pre-saccadic representation with the post-saccadic one. First, oculomotor feedback reactivates the pre-saccadic representation of a stable stimulus at the saccade goal, which otherwise would decay close to baseline [109,110]. Second, a strong increase in the visual capacity around the saccade target may reveal details of the object that will otherwise only be seen when the eyes land. While most other models discussed here emphasize solely the spatial aspect, this model suggests in addition that the brain deals already with the object of interest while the eyes move.

(f). Coordinate transformation by gain fields

The question of spatial stability and of trans-saccadic localization is also tied to the question of reference frames for visual localization. Although perceptual stability could be maintained entirely in retinocentric coordinates, a transformation into non-retinal reference frames leads by definition to invariance against eye movements. As discussed above, neurons in many areas of the brain have retinocentric receptive fields that modulate their responses to visual stimuli with the position of the eye in the orbit. The influence of eye position on the activity of neurons has been termed the spatial gain field.

A representation of sensory information in different reference frames would have the advantage of a better multi-modal integration, e.g. of vision and sound in a head-centred reference frame. Moreover, the transition from vision to action might operate on the best available reference frame and thus be faster, provided that a stimulus is simultaneously encoded in multiple reference frames. Several theoretical studies suggested that gain fields may serve to transform the coordinates of the incoming sensory signals to a non-retinocentric map. Zipser & Andersen [65] devised a network, called a multi-layer perceptron, that used an extraretinal eye position signal to transform retinocentric visual input into a head-centred representation. This class of network is well explored in the neural network literature. It consists of three layers. The input layer is composed of two parts, a retinal representation of the stimulus and a set of units representing eye position. The second layer, also known as the ‘hidden layer’, receives connections from the two input components and computes in each unit a response using a non-linear, S-shaped function. Similarly, the hidden layer units are connected to the output layer. Such networks can be trained by a supervised learning algorithm, e.g. back-propagation, to obtain any desired input–output mapping by adjusting all weights to minimize the error between the desired and actual output given a set of input–output samples. The emerging functionality of the units in the intermediate hidden layer was similar to the neurons in area 7A. When the operating point of the neurons is around the accelerating limb of the S-shaped function, the inputs tend to multiply, whereas in the linear, intermediate part of the S-shaped function, the inputs add to each other. 
Other studies have refined the general ideas of Zipser & Andersen [65], with more biologically plausible mechanisms [111], examining the consequences and function of head-centred coordinates in more detail. Xing & Andersen [66] improved on this approach by showing that network models of the posterior parietal cortex that encode several coordinate systems in their output (eye-centred, head-centred and body-centred) can be trained to better resemble the gain fields actually found in the PPC than single-output models.

A more theoretical approach considers eye position gain fields in the parietal cortex, as well as in other brain areas such as the SC, as basis functions for a distributed encoding of perceptual space [112,113]. In these models, the retinocentric representation of a stimulus and an eye position signal feeds into a basis-function layer where they interact multiplicatively. A diagonal readout of this layer yields a subtraction of the two values encoded in the input layers in a population code. If the input values are (i) a retinocentric stimulus position and (ii) the eye position, the output is an eye-position-independent stimulus position (figure 4). Networks of this type have been used as an attractor to read out (multi-modal) noisy population codes typically focused on static phenomena. Applications of these concepts to study the dynamic behaviour of parietal neurons have rarely been undertaken so far. Denève et al. [114] used a recurrent basis-function network to integrate a noisy moving input owing to body movement with an internal model about this movement. Since basis-function networks appear consistent with several observations, it would be interesting to explore the temporal dynamics of changes during eye movements and their relation to observations such as predictive remapping. A critical issue appears to be the conception of the eye position signal in such models when it comes to dynamic coordinate transformations during eye movements.

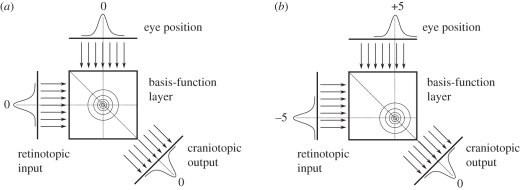

Figure 4.

Schematic of a basis-function network to achieve a coordinate transformation. For simplicity, stimulus representation and eye position are encoded in a single dimension each represented by a population code. In this case, a diagonal readout of this layer yields a subtraction of the two values encoded in the input layers—a head-centred representation. (a) The eye is looking straight ahead at 0°. The stimulus is at 0° in the world and therefore also at 0° on the retina. (b) The eye has moved to +5°. Since the stimulus is still at 0° in the world, it appears at −5° on the retina. The network computes the correct cranio-topic stimulus position in both cases.
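The two cases of figure 4 can be reproduced with a minimal sketch of a basis-function layer and a diagonal readout. It is an illustration only, not the networks of [112,113]: the tuning width, the range of preferred values and the centroid readout are assumptions, and noise and recurrent dynamics are omitted. With the sign convention of figure 4, pooling along a diagonal means pooling all basis units whose preferred retinal position and preferred eye position sum to the same head-centred value.

```python
import math

# Preferred values (deg) shared by both input populations (assumed range).
PREFS = list(range(-20, 21))


def bump(pref, value, sigma=2.0):
    """Gaussian tuning curve of a unit with preferred value `pref`."""
    return math.exp(-0.5 * ((pref - value) / sigma) ** 2)


def head_centred_estimate(retinal_deg, eye_deg):
    """Decode head-centred position from a basis-function layer (1D sketch)."""
    retinal_code = [bump(p, retinal_deg) for p in PREFS]
    eye_code = [bump(p, eye_deg) for p in PREFS]

    # Basis-function layer: each unit multiplies one retinal and one
    # eye-position input. Diagonal readout: pool all units that share the
    # same sum of preferred retinal and preferred eye position.
    pooled = {}
    for pref_r, r in zip(PREFS, retinal_code):
        for pref_e, e in zip(PREFS, eye_code):
            head = pref_r + pref_e
            pooled[head] = pooled.get(head, 0.0) + r * e

    # Centroid of the pooled (head-centred) population activity.
    total = sum(pooled.values())
    return sum(h * a for h, a in pooled.items()) / total


if __name__ == "__main__":
    print(round(head_centred_estimate(0.0, 0.0), 6))   # figure 4a
    print(round(head_centred_estimate(-5.0, 5.0), 6))  # figure 4b
```

In both cases the readout is centred on 0°, i.e. the estimate does not change although the retinal position of the stimulus does.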

(g). Retinocentric spatial updating by gain fields

A subset of gain field models are those that map a stimulus to its future position after a saccade while remaining within a retinocentric representation. Rather than implementing a coordinate transformation, they learn spatial updating. A typical experimental paradigm where such updating is beneficial is the double-saccade task, in which two targets are flashed. The saccade to the second target requires that this target is updated in memory after the first saccade since there is little evidence for memorizing the saccade target in eye-position-independent coordinates. Xing & Andersen [115] expanded the model of Zipser & Andersen [65] to allow recurrent processing in the hidden layer to address this question. They used visual and eye position signals as input, the desired motor error as output and two hidden layers. The first is for memorizing the second saccade target and the initial eye position. The second is for encoding the saccade based on the available information from the input and the memory. Thus, this model combines memory with the current eye position signal through gain fields to determine the next saccade. Although gain fields rely on an eye position signal, eye-velocity information can alternatively be used for this spatial updating [67].

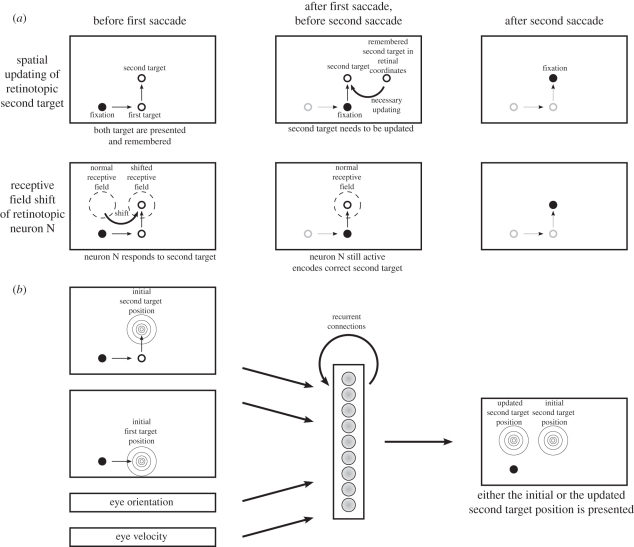

Keith et al. [69] recently investigated the dynamics of spatial updating for the double-saccade task in more detail. They also used a three-layer network with recurrent connections in the hidden layer. The network was trained to first generate an output to the second target in retinocentric coordinates and then remap the second-target position around the execution of the first saccade to feed the updated target position into the FEF or SC as the next saccade target. The updating was driven by one of three signals: a transient visual response to the first saccade target, a signal from motor burst neurons that start to discharge already before the saccade, or an eye-velocity signal. They did not use eye position as in the earlier studies. Training did not occur within the updating interval around eye movements, which allowed them to study the emergent dynamics of updating. The resulting dynamics depend on the updating signal used. At the output side, the population responses slowly move when eye velocity is used, stretch towards the new updated position when the burst signal is used and rather jump in case of the transient visual response. Neurons in the hidden layer show a large variety of receptive field shifts in the direction of the saccade but also in the opposite direction (figure 5).

Figure 5.

A model for the double-step paradigm. (a) The first row shows how the theory of spatial updating explains that the second saccade in the double-step task lands correctly. Before the first saccade, the second target is represented in retinotopic coordinates (left panel). After the first saccade, the second target is still remembered in retinotopic coordinates and has to be updated (middle panel) in order to yield a correct second saccade (right panel). In contrast, the second row shows the explanation in terms of receptive field shifts. There is a neuron N that has its normal receptive field at the retinotopic position where the second saccade target will be after the first saccade (middle panel). Right before the first saccade, this receptive field shifts, such that the second saccade target at this time falls into it and thus drives the neuron N (left panel). Therefore, this neuron's activity represents the second saccade target correctly after the first saccade (middle panel) and yields the correct second saccade (right panel). (b) A neural network model that can be trained to implement the spatial updating in the double-step task (adapted from [69]). It uses the initial first and second saccade target positions, the eye orientation and velocity to translate the second saccade target position by the inverse of the first target vector.
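The computation that these networks have to learn is, at its core, a vector subtraction in retinocentric coordinates. The following sketch states only this target computation. It is not the network of Keith et al. [69], and the function name and the tuple representation of positions are chosen here for illustration.

```python
def update_second_target(second_target, first_saccade):
    """Retinocentric position of the second target after the first saccade.

    Both arguments are (horizontal, vertical) vectors in degrees relative
    to the initial fixation. The remembered target is translated by the
    inverse of the first saccade vector.
    """
    return (second_target[0] - first_saccade[0],
            second_target[1] - first_saccade[1])


if __name__ == "__main__":
    first = (10.0, 0.0)     # first target: 10 deg to the right
    second = (10.0, 10.0)   # second target: 10 deg right, 10 deg up
    print(update_second_target(second, first))
```

For this configuration, the second target lies straight above the new fixation point after the first saccade, so a purely vertical second saccade of 10° is required, although the target was originally seen up and to the right.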

So far, these spatial updating models have been trained on the double-saccade task and their internal organization has been compared with physiological observations. Thus, it is not clear whether these mechanisms are potential explanations for the described behavioural peri-saccadic phenomena.

4. Computational mechanisms for visual stability

The discussion of different models in this review shows that each of the models addresses a particular phenomenon occurring during peri-saccadic perception. The different models of peri-saccadic perception are not necessarily alternatives to each other and sometimes rely on similar concepts at different levels of abstraction. However, the ability of a model to explain data beyond the experimental domain it has been designed for is rather limited. Visuomotor spatial localization models have been applied to explain the mislocalization in total darkness, but their success is now challenged by the observed saturation of mislocalization for long saccades [10]. The reference object theory and optimal trans-saccadic integration address saccadic suppression of displacement. The remapping of receptive fields is at present a framework to conceptualize a number of dynamic physiological observations around a saccade. The role of remapping in the subjective perception of a stable world must be backed up with more detailed modelling studies to provide a clear link from a neural implementation to function. The spatial re-entry model explains the phenomena of peri-saccadic compression—even quantitatively. Since the strength of the neural distortion depends on stimulus contrast and brightness, the effects diminish for bright stimuli in total darkness. This suggests that the mechanisms for compression and those for the uniform peri-saccadic shift are mediated by different networks.

In an attempt to explore the generalization of the reference object theory and optimal trans-saccadic integration with respect to peri-saccadic compression, we have conducted experiments with an additional location marker at the position of the upcoming flash to remove the uncertainty about the flash position [116]. If such prior knowledge about flash position is used by the brain to compute a sharp prior probability density about its location, one would expect no compression under this condition. The additional location marker could also serve as a landmark, since the flash was directly shown on the location marker. Since the subjects still mislocalized the stimulus towards the saccade target, compression does not appear to depend on a built-in assumption of a stable environment as put forward to explain saccadic suppression of displacement. Compression rather depends on the spatial re-entry of the signal encoding the saccade target [70]. However, the spatial re-entry model includes components of the object reference theory since the internal representation of the stimulus is decoded for position relative to a reference object, typically the saccade target.

The potential overlap of the mechanisms underlying the mislocalization in the direction of the saccade vector and the saccadic suppression of displacement is not well explored by existing models. Since optimal trans-saccadic integration uses an internal eye position signal, it suggests a relation between both phenomena. Because immediately after a saccade the internal eye position signal is typically considered hypometric, it predicts an asymmetric saccadic suppression of displacement pattern with respect to saccade and displacement direction. Although some indications for an asymmetric pattern have been observed [76], this property has not yet been systematically explored. From the other perspective, it is difficult to predict the prior probability distribution of optimal trans-saccadic integration for stimulus localization of flashes in total darkness, making the application of optimal trans-saccadic integration problematic for experiments using flashes in total darkness.

Despite these large conceptual differences between the models and their ranges of applicability, they also share common phenomena. Dynamic changes of receptive fields, as stressed by remapping, are also predicted by basis-function networks and by the spatial re-entry model. In particular, the spatial re-entry model predicts receptive field shifts very similar to remapping for a large part of the visual field [106]. Thus, recent data on trans-saccadic perception obtained with adaptation and masking [117,118], which have been linked to remapping, can also be explained on the basis of spatial re-entry. Model simulations predict that cells showing remapping ought to be confined to locations above and below the fovea, stretching into the visual field opposite to the saccade target. This is distinct from the global remapping of the whole retinocentric representation proposed by the theory of remapping. The primary difference lies in the area around the saccade target, where global remapping predicts a shift in the direction of the eye movement and the re-entry model predicts a shift towards the saccade target. However, both predict a cross-hemispheric transfer of activity as observed in several studies [119–121]. The two accounts also differ in the number of neuronal connections required and in the routing of visual signals. Whereas remapping (e.g. [68]) needs full global interconnectivity within the remapping layer and between the remapping and the oculomotor layer, and routes visual information within the remapping layer, the spatial re-entry model assumes only feed-forward visual connections and feedback oculomotor connections between layers, and merely modulates the routing in the feed-forward path. Gain field models that are trained to map a stimulus within a retinocentric representation to its future position after a saccade could also be understood as a potential neural substrate of remapping [69,115]. However, the receptive fields that emerge after training show large variability rather than a typical remapping prototype. Thus, dynamic receptive field changes around the saccade can originate from multiple mechanisms. The changes observed in different brain areas must be investigated in more detail, together with additional modelling studies, to better understand their function and neural implementation.
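The difference in predicted shift direction can be made concrete with a purely geometric sketch. It compares directions only, not shift magnitudes or time courses, and it does not reproduce the simulations in [106]. Fixation is assumed to lie at the origin of a two-dimensional retinocentric frame, and the function names are hypothetical.

```python
import math


def remapping_direction(rf, target):
    """Unit vector parallel to the saccade vector (global remapping).

    The receptive field position rf is unused because, under global
    remapping, every receptive field shifts in the same direction.
    """
    norm = math.hypot(target[0], target[1])
    if norm == 0.0:
        return (0.0, 0.0)
    return (target[0] / norm, target[1] / norm)


def reentry_direction(rf, target):
    """Unit vector pointing from the receptive field centre to the saccade target."""
    dx, dy = target[0] - rf[0], target[1] - rf[1]
    norm = math.hypot(dx, dy)
    if norm == 0.0:
        return (0.0, 0.0)  # receptive field already centred on the target
    return (dx / norm, dy / norm)


def agreement(rf, target):
    """Cosine of the angle between the two predicted shift directions."""
    a = remapping_direction(rf, target)
    b = reentry_direction(rf, target)
    return a[0] * b[0] + a[1] * b[1]


if __name__ == "__main__":
    target = (10.0, 0.0)  # assumed 10 deg rightward saccade
    for rf in [(-5.0, 0.0), (0.0, 5.0), (13.0, 0.0)]:
        print(rf, round(agreement(rf, target), 3))
```

In this sketch the two predictions coincide for receptive fields in the hemifield opposite to the saccade target, remain close for fields above or below the fovea, and point in opposite directions for fields lying just beyond the target, which is the region where the text locates the primary difference.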

Moreover, attentional phenomena, albeit sometimes hidden, can be identified in several models. In the object reference theory, for example, a reference stimulus must be encoded in trans-saccadic memory. Attention to certain objects, such as the saccade target, makes these objects more likely to be used as a reference, as also illustrated in a computational study [122]. Attention is also a critical component of the spatial re-entry model. The oculomotor feedback signal encoding the target location of the future eye movement feeds into visual areas and enhances the gain of neurons around the future target, which in turn facilitates the processing of objects around the target location, including their preferred storage in memory. Variants of the original remapping theory in which attention determines the spatial area of remapping [123,124] have recently been suggested to account for the observation that LIP encodes salient objects rather than a whole array of irrelevant objects [125]. However, the attention effects in area LIP [125] are somewhat different from those modelled in Hamker et al. [70]. LIP appears to encode salience, e.g. abrupt onsets or task relevance. Task relevance could be mediated by some form of feature-based attention, whereas Hamker et al. [70] model exclusively spatial attentional effects linked to eye movements.

One aspect that has rarely been addressed in models of peri-saccadic perception, but that appears to be critical for at least some predictions, is the process of perceptual decision-making. While static population decoding is a good first approximation, the temporal aspect of decision-making can be critical if the evidence for a hypothesis changes over time. The process of a perceptual decision takes about 50 ms in monkeys trained on a simple colour discrimination task [126]. Under these circumstances, typical models of perceptual decision-making (e.g. [127–130]) predict that early evidence has more impact on the final decision than late evidence. For example, Hamker [130] simulated a perceptual decision-making experiment known as feature inheritance [131]. In this experiment, a vernier or a tilted line is presented for a short time and followed immediately by a grating comprising a small number of straight elements. While the first stimulus remains consciously invisible to the subjects, the second stimulus inherits features from the first one, i.e. the grating is perceived as tilted. The decision-making process can be regarded as the accumulation of evidence (for tilt or no tilt) given the trace of activity in the visual areas [130]. Since feedback from the first stimulus affects processing of the second stimulus, the latter is perceived as distorted (e.g. tilted). Models that do not simulate decision-making as a temporal process would have difficulties in explaining such observations. Thus, models of peri-saccadic perception could benefit from incorporating more realistic models of perceptual decision-making.
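The stronger impact of early evidence can be illustrated with a minimal bounded accumulator. This is a generic stand-in and not the dynamic model of Hamker [130] or any other cited model; the function name, the bound and the evidence values are assumptions chosen for illustration.

```python
def decide(evidence, bound=1.0):
    """Accumulate signed evidence samples and commit once the bound is reached.

    Positive samples favour 'tilt', negative samples favour 'no tilt'.
    Returns +1, -1, or 0 if the accumulated evidence is exactly balanced.
    """
    total = 0.0
    for sample in evidence:
        total += sample
        if abs(total) >= bound:
            break  # decision is made; later samples are ignored
    if total > 0:
        return 1
    if total < 0:
        return -1
    return 0


if __name__ == "__main__":
    tilt_first = [0.25] * 5 + [-0.25] * 5   # evidence for tilt arrives early
    tilt_last = list(reversed(tilt_first))   # same samples, reversed order
    print(decide(tilt_first), decide(tilt_last))
    # Without a bound the same samples cancel out.
    print(decide(tilt_first, bound=float("inf")))
```

Both sequences contain the same samples, so an integrator without a bound cannot distinguish them. With a bound, the decision follows whichever evidence arrives first, which is the kind of order dependence that a static decoding scheme cannot capture.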

In conclusion, an increasing number of computational models have addressed peri-saccadic phenomena of visual perception in recent years, and an understanding of the underlying mechanisms is slowly unfolding. According to present evidence, we have to distinguish between situations in which visual references are available and those in which they are not. How exactly references are used to localize objects is less well understood. There seems to be a consensus that corollary discharge is preferably used to update spatial representations around saccade onset if no references are available. However, it would be too simple to draw the line just between conditions with and without available references. Corollary discharge can be understood as a special case of re-entrant processing. While late motor signals have been the focus in the past, present research is gradually shifting towards earlier, more visuomotor feedback signals that affect perception [70,86,132].

Acknowledgements

This work has been supported by the German Federal Ministry of Education and Research grant ‘Visuospatial Cognition’, the FP7-ICT program of the European Commission within the grant ‘Eyeshots: Heterogeneous 3-D Perception across Visual Fragments’ and DFG LA-952/3.

Footnotes

One contribution of 11 to a Theme Issue ‘Visual stability’.

References

- 1. Helmholtz H. V. 1867. Handbuch der physiologischen Optik. Hamburg, Germany: Voss

- 2. Matin L., Pearce E., Pola J. 1970. Visual perception of direction when voluntary saccades occur: II. Relation of visual direction of a fixation target extinguished before saccade to a subsequent test flash presented before the saccade. Percept. Psychophys. 8, 9–14

- 3. Matin E. 1972. Eye movements and perceived visual direction. In Eye movements and perceived visual direction (eds Jameson D., Hurvitch L.), pp. 331–380 Berlin, Germany: Springer

- 4. Matin L. 1976. Saccades and extraretinal signal for visual direction. In Eye movements and psychological processes (eds Monty R. A., Senders J. W.), pp. 205–219 Hillsdale, NJ: Erlbaum Assoc

- 5. Honda H. 1989. Perceptual localization of visual stimuli flashed during saccades. Percept. Psychophys. 45, 162–174

- 6. Honda H. 1991. The time courses of visual mislocalization and of extraretinal eye position signals at the time of vertical saccades. Vis. Res. 31, 1915–1921 (doi:10.1016/0042-6989(91)90186-9)

- 7. Dassonville P., Schlag J., Schlag-Rey M. 1992. Oculomotor localization relies on a damped representation of saccadic eye displacement in human and nonhuman primates. Vis. Neurosci. 9, 261–269 (doi:10.1017/S0952523800010671)

- 8. Schlag J., Schlag-Rey M. 1995. Illusory localization of stimuli flashed in the dark before saccades. Vis. Res. 35, 2347–2357 (doi:10.1016/0042-6989(95)00021-Q)

- 9. Schlag J., Schlag-Rey M. 2002. Through the eye, slowly: delays and localization errors in the visual system. Nat. Rev. Neurosci. 3, 191–215 (doi:10.1038/nrn750)

- 10. Van Wetter S. M. C. I., Van Opstal A. J. 2008. Experimental test of visuomotor updating models that explain perisaccadic mislocalization. J. Vis. 8, 8 (doi:10.1167/8.14.8)

- 11. Bischof N., Kramer E. 1968. Investigations and considerations of directional perception during voluntary saccadic eye movements. Psychol. Forsch. 32, 185–218 (doi:10.1007/BF00418660)

- 12. Honda H. 1993. Saccade-contingent displacement of the apparent position of visual stimuli flashed on a dimly illuminated structured background. Vis. Res. 33, 709–716 (doi:10.1016/0042-6989(93)90190-8)

- 13. Dassonville P., Schlag J., Schlag-Rey M. 1995. The use of egocentric and exocentric location cues in saccadic programming. Vis. Res. 35, 2191–2199 (doi:10.1016/0042-6989(94)00317-3)

- 14. Ross J., Morrone M. C., Burr D. C. 1997. Compression of visual space before saccades. Nature 386, 598–601 (doi:10.1038/386598a0)

- 15. Morrone M. C., Ross J., Burr D. C. 1997. Apparent position of visual targets during real and simulated saccadic eye movements. J. Neurosci. 17, 7941–7953

- 16. Lappe M., Awater H., Krekelberg B. 2000. Postsaccadic visual references generate presaccadic compression of space. Nature 403, 892–895 (doi:10.1038/35002588)

- 17. Morrone M. C., Ma-Wyatt A., Ross J. 2005. Seeing and ballistic pointing at perisaccadic targets. J. Vis. 5, 741–754 (doi:10.1167/5.9.7)

- 18. Awater H., Lappe M. 2006. Mislocalization of perceived saccade target position induced by perisaccadic visual stimulation. J. Neurosci. 26, 12–20 (doi:10.1523/JNEUROSCI.2407-05.2006)

- 19. Michels L., Lappe M. 2004. Contrast dependency of saccadic compression and suppression. Vis. Res. 44, 2327–2336 (doi:10.1016/j.visres.2004.05.008)

- 20. Georg K., Hamker F. H., Lappe M. 2008. Influence of adaptation state and stimulus luminance on peri-saccadic localization. J. Vis. 8, 15 (doi:10.1167/8.1.15)

- 21. Kaiser M., Lappe M. 2004. Perisaccadic mislocalization orthogonal to saccade direction. Neuron 41, 293–300 (doi:10.1016/S0896-6273(03)00849-3)

- 22. Ostendorf F., Fischer C., Finke C., Ploner C. J. 2007. Perisaccadic compression correlates with saccadic peak velocity: differential association of eye movement dynamics with perceptual mislocalization patterns. J. Neurosci. 27, 7559–7563 (doi:10.1523/JNEUROSCI.2074-07.2007)

- 23. Awater H., Lappe M. 2004. Perception of visual space at the time of pro- and anti-saccades. J. Neurophysiol. 91, 2457–2464 (doi:10.1152/jn.00821.2003)

- 24. Awater H., Burr D., Lappe M., Morrone M. C., Goldberg M. E. 2005. Effect of saccadic adaptation on localization of visual targets. J. Neurophysiol. 93, 3605–3614 (doi:10.1152/jn.01013.2004)

- 25. Georg K., Lappe M. 2009. Effects of saccadic adaptation on visual localization before and during saccades. Exp. Brain Res. 192, 9–23 (doi:10.1007/s00221-008-1546-y)

- 26. Volkmann F. C., Riggs L. A., White K. D., Moore R. K. 1978. Contrast sensitivity during saccadic eye movements. Vis. Res. 18, 1193–1199 (doi:10.1016/0042-6989(78)90104-9)

- 27. Campbell F. W., Wurtz R. H. 1978. Saccadic omission: why we do not see a grey-out during a saccadic eye movement. Vis. Res. 18, 1297–1303 (doi:10.1016/0042-6989(78)90219-5)

- 28. Burr D. C., Holt J., Johnstone J. R., Ross J. 1982. Selective depression of motion sensitivity during saccades. J. Physiol. 333, 1–15

- 29. Bridgeman B., Hendry D., Stark L. 1975. Failure to detect displacement of the visual world during saccadic eye movements. Vis. Res. 15, 719–722 (doi:10.1016/0042-6989(75)90290-4)

- 30. Deubel H., Schneider W. X., Bridgeman B. 1996. Postsaccadic target blanking prevents saccadic suppression of image displacement. Vis. Res. 36, 985–996 (doi:10.1016/0042-6989(95)00203-0)

- 31. Currie C. B., McConkie G. W., Carlson-Radvansky L. A., Irwin D. E. 2000. The role of the saccade target object in the perception of a visually stable world. Percept. Psychophys. 62, 673–683

- 32. Deubel H., Koch C., Bridgeman B. 2010. Landmarks facilitate visual space constancy across saccades and during fixation. Vis. Res. 50, 249–259 (doi:10.1016/j.visres.2009.09.020)

- 33. Von Holst E., Mittelstaedt H. 1950. Das Reafferenzprinzip. Naturwissenschaften 37, 464–476

- 34. Sperry R. W. 1950. Neural basis of the spontaneous optokinetic response produced by visual inversion. J. Comp. Physiol. Psychol. 43, 482–489 (doi:10.1037/h0055479)

- 35. Sommer M. A., Wurtz R. H. 2004. What the brain stem tells the frontal cortex. I. Oculomotor signals sent from superior colliculus to frontal eye field via mediodorsal thalamus. J. Neurophysiol. 91, 1381–1402 (doi:10.1152/jn.00738.2003)

- 36. Duhamel J. R., Colby C. L., Goldberg M. E. 1992. The updating of the representation of visual space in parietal cortex by intended eye movements. Science 255, 90–92 (doi:10.1126/science.1553535)

- 37. Colby C. L., Duhamel J. R., Goldberg M. E. 1996. Visual, presaccadic, and cognitive activation of single neurons in monkey lateral intraparietal area. J. Neurophysiol. 76, 2841–2852

- 38. Kusunoki M., Goldberg M. E. 2003. The time course of perisaccadic receptive field shifts in the lateral intraparietal area of the monkey. J. Neurophysiol. 89, 1519–1527 (doi:10.1152/jn.00519.2002)

- 39. Walker M. F., Fitzgibbon E. J., Goldberg M. E. 1995. Neurons in the monkey superior colliculus predict the visual result of impending saccadic eye movements. J. Neurophysiol. 73, 1988–2003

- 40. Umeno M. M., Goldberg M. E. 1997. Spatial processing in the monkey frontal eye field. I. Predictive visual responses. J. Neurophysiol. 78, 1373–1383