Abstract

The mechanisms and functional anatomy underlying the early stages of speech perception are still not well understood. One way to investigate the cognitive and neural underpinnings of speech perception is to study patients with speech perception deficits but with preserved ability in other domains of language. One such case is reported here: patient NL shows highly impaired speech perception despite normal hearing ability and preserved semantic knowledge, speaking, and reading ability, and is thus classified as a case of pure word deafness (PWD). NL has a left temporoparietal lesion without right hemisphere damage, and DTI suggests that he has preserved cross-hemispheric connectivity, arguing against an account of PWD as a disconnection of left lateralized language areas from auditory input. Two experiments investigated whether NL’s speech perception deficit could instead result from an underlying problem with rapid temporal processing. Experiment 1 showed that NL has particular difficulty discriminating sounds that differ in terms of rapid temporal changes, be they speech or non-speech sounds. Experiment 2 employed an intensive training program designed to improve rapid temporal processing in language impaired children (Fast ForWord; Scientific Learning Corporation, Oakland, CA) and found that NL was able to improve his ability to discriminate rapid temporal differences in non-speech sounds, but not in speech sounds. Overall, these data suggest that patients with unilateral PWD may, in fact, have a deficit in (left lateralized) temporal processing ability; however, they also show that a rapid temporal processing deficit is, by itself, unable to account for this patient’s speech perception deficit.

Keywords: speech perception, rapid temporal processing, pure word deafness, lateralization

Despite the considerable interest in, and effort put into, understanding how we perceive speech, a clear understanding of the mechanisms and the functional anatomy that underlies speech perception remains elusive. In a very simple model of speech perception, a speech sound is first analyzed in terms of its linguistic features (acoustic or articulatory), and this analysis converges onto a representation at a level of phonemes, from which activation feeds to a level of word representations. Thus a deficit in speech perception could occur either due to damage to any of these representational levels (features, phonemes, or words), or due to damage to connections between them. Although one might imagine that it would be reasonably straightforward to tease apart the neural underpinnings of these levels via studies of speech perception deficits and neuroimaging studies of normal speech processing, it has so far proven difficult to do so. It is not yet even clear if the robust finding that language processing is, as a whole, strongly left lateralized (e.g., Binder et al., 1997) applies to the early stages of speech perception.

Like any type of auditory input, speech is initially processed in the primary auditory cortices bilaterally. So, at what point in the translation of auditory signals into linguistic representations does language processing become left dominant? One means of addressing this issue is to look at cases of pure word deafness (PWD). PWD is a rare condition characterized by severely impaired speech perception despite good hearing ability and preserved functioning in other domains of language (e.g., reading, writing, and speaking; for reviews, see Badecker, 2005; Buchman, Garron, Trost-Cardamone, Wichter, & Schwartz, 1986; Goldstein, 1974; Poeppel, 2001; Stefanatos, Gershkoff, & Madigan, 2005). Early conceptions of PWD implicated a rather late locus for lateralization as the syndrome was thought to derive from the disconnection between auditory input and stored auditory “word forms” (what today might be termed lexical phonological representations) that were assumed to be localized in Wernicke’s area (Geschwind, 1965; Lichtheim, 1885). Wernicke’s area itself was assumed to be preserved in PWD to account for these patients’ preserved speech production, under the assumption that such lexical phonological representations are shared in production and comprehension.

This disconnection account is also able to reconcile two different anatomical etiologies of PWD: Although PWD typically results from bilateral superior temporal lobe damage, it has also been reported following unilateral damage to the left temporal lobe (Caramazza, Berndt, & Basili, 1983; Eustache, Lechevalier, Viader, & Lambert, 1990; Lichtheim, 1885; Metz-Lutz & Dahl, 1984; Saffran, Marin, & Yeni-Komshian, 1976; Stefanatos et al., 2005; Takahashi et al., 1992; Wang, Peach, Xu, Schneck, & Manry, 2000; and, in one case, following unilateral right temporal damage: Roberts, Sandercock, & Ghadiali, 1987). Under this account, bilateral damage prevents auditory input from either hemisphere from being transmitted to Wernicke’s area whereas left unilateral damage of auditory cortex can be positioned such that it not only prevents transmission of left hemisphere auditory input to Wernicke’s area but also damages subcortical projections from the opposite hemisphere (or even such that both auditory cortices are spared but with damage to ipsilateral as well as contralateral input to Wernicke’s area).

A more recent proposal is that speech-specificity (and thus lateralization) occurs earlier in the processing stream–prior to access to lexical phonological representations, perhaps at the level of phoneme identification–fitting with claims that the primitives of language perception are processed in a language-specific manner (Liberman & Mattingly, 1989; Liberman & Whalen, 2000). Indeed, some evidence suggests early lateralization of speech signal processing: left hemisphere lesions are associated with deficits in phoneme perception (e.g., Caplan, Gow, & Makris, 1995; Miceli, Gainotti, Caltagirone, & Masullo, 1980), neuroimaging work shows left temporal involvement in phoneme perception (Liebenthal, Binder, Spitzer, Possing, & Medler, 2005; Naatanen et al., 1997), and there is even evidence for left lateralization of speech perception at subcortical levels of processing (Hornickel, Skoe, & Kraus, 2009). Furthermore, a left-hemisphere (right-ear) advantage for consonant perception occurs only when those sounds have linguistic significance (Best & Avery, 1999), suggesting a language-specific, but experience-dependent, speech perception mechanism in the left hemisphere.

However, evidence for the lateralization of neural responses to early speech sound components is somewhat equivocal overall (Demonet, Thierry, & Cardebat, 2005), and other recent frameworks suggest that early stages of speech processing (perhaps even up until conceptual processing) recruit superior temporal regions bilaterally (Binder et al., 2000; Hickok & Poeppel, 2000, 2004, 2007). Additionally, although language-specific responses to speech stimuli have been motivated in terms of a (left-lateralized) “phonetic module” (Liberman & Whalen, 2000) and phonemes typically play an important role in cognitive models of speech perception (e.g., Hickok & Poeppel, 2007; McClelland & Elman, 1986), there is actually very little evidence for cognitive representations of phonemes in speech perception (see Scott & Wise, 2004, for discussion). Instead, speech perception may rely on groups of speech sounds or on other aspects of the speech signal.

This suggests that a left hemisphere bias for speech processing may not reflect a specialization for speech stimuli per se, but rather reflect a more specific type of hemispheric specialization that is disproportionately involved in speech perception. An influential proposal along these lines is that the left hemisphere’s advantage for speech processing reflects an underlying specialization for the processing of rapid temporal aspects of sound, whereas the right hemisphere is specialized for the processing of spectral aspects of sound. This makes sense because the identification of speech sounds (particularly consonants) relies heavily on rapid temporal cues on the order of tens of milliseconds (e.g., Shannon, Zeng, Kamath, Wygonski, & Ekelid, 1995). This contrasts with other types of complex auditory stimuli such as environmental sounds or music, which rely more heavily on spectral information (de Cheveigné, 2005) and on temporal cues over a relatively slower time window (i.e., hundreds of milliseconds).

Early evidence for a left hemispheric specialization for rapid temporal processing came from observations of temporal judgment deficits in stroke patients with left hemisphere lesions (Efron, 1963; Lackner & Teuber, 1973). For example, Robin, Tranel, and Damasio (1990) reported that patients with temporoparietal left hemisphere damage were particularly impaired at tasks requiring temporal acuity (e.g., click fusion), whereas patients with right hemisphere temporoparietal damage were particularly impaired at tasks requiring spectral processing (e.g., frequency discrimination). There is also evidence from neuroimaging studies of non-brain-damaged participants suggesting that the left hemisphere preferentially processes rapid temporal aspects of sound stimuli whereas the right hemisphere processes spectral aspects of sound (e.g., Belin et al., 1998; Schönwiesner, Rübsamen, & von Cramon, 2005; Warrier et al., 2009; Zatorre & Belin, 2001; Zatorre, Belin, & Penhune, 2002; but see Scott & Wise, 2004, for a critique). An alternative (but related) proposal is that temporal aspects of speech are processed in both hemispheres, but the left hemisphere is specialized for short time windows (of approximately 25–50 ms), whereas the right hemisphere is specialized for longer time windows (of 150–250 ms; Boemio, Fromm, Braun, & Poeppel, 2005; Hickok & Poeppel, 2007; Poeppel, 2001; Poeppel & Monahan, 2008; see also Ivry & Robertson, 1998; Robertson & Ivry, 2000).

Given the important role of rapid temporal changes in speech stimuli, deficits in the ability to process rapid temporal changes in sound presumably would lead to speech perception difficulties. Indeed, a deficit in rapid temporal processing has been proposed as the source of speech perception deficits in PWD (Albert & Bear, 1974; Phillips & Farmer, 1990; Poeppel, 2001; Stefanatos, 2008). This claim comes from the observation that individuals with PWD typically have more difficulty with perception of temporally dynamic stimuli like consonants (especially with the perception of place of articulation and voicing in stop consonants) than with perception of steady state stimuli like vowels (e.g., Auerbach, Allard, Naeser, Alexander, & Albert, 1982; Miceli, 1982; Saffran et al., 1976; Stefanatos et al., 2005; Wang et al., 2000; Yaqub, Gascon, Al-Nosha, & Whitaker, 1988). PWD has also been associated with temporal processing deficits in non-speech stimuli. For example, patients with PWD often show elevated click-fusion and gap-detection thresholds (i.e., require much longer separations between two clicks to judge them as separate rather than perceiving them as one; Albert & Bear, 1974; Auerbach et al., 1982; Otsuki, Soma, Sato, Homma, & Tsuji, 1998; Wang et al., 2000; Yaqub et al., 1988; for a review, see Phillips & Farmer, 1990).
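As an illustration of the click-fusion paradigm described above, the following minimal Python sketch builds a two-click stimulus with a silent gap of a chosen duration. The function name, sampling rate, and click duration are assumptions made for illustration; they are not taken from any of the cited studies.

```python
def click_pair(gap_ms, sample_rate=44100, click_ms=1.0):
    """Return a list of samples: click, silent gap, click.

    All parameter defaults are illustrative assumptions, not values
    reported in the click-fusion studies cited in the text.
    """
    click_len = max(1, round(sample_rate * click_ms / 1000))
    gap_len = round(sample_rate * gap_ms / 1000)
    click = [1.0] * click_len          # rectangular click at full amplitude
    return click + [0.0] * gap_len + click


# A 30 ms gap falls inside the 25-50 ms window discussed in the text.
stimulus = click_pair(gap_ms=30)
```

In a threshold procedure, the gap would be shortened or lengthened across trials until the listener can no longer reliably report hearing two clicks rather than one.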

It is important to acknowledge, however, that such deficits in non-speech auditory temporal resolution (at least as assessed by click-fusion thresholds) do not always accompany PWD (Stefanatos et al., 2005). Of course, the speech perception problems of different patients classified as PWD might be due to disruptions at different levels in the processing stream. One hypothesis along these lines is that PWD from a left unilateral lesion would reflect a deficit in the processing of rapid temporal changes in speech stimuli whereas PWD from bilateral damage would lead to more general auditory deficits (including deficits in the perception of spectral aspects of sound and/or slower temporal changes). By this account, the speech perception deficit in PWD patients with unilateral left hemisphere damage reflects a deficit in temporal acuity (in a 25–50 ms timeframe), and indeed some recent cases support this conclusion (Stefanatos et al., 2005; Wang et al., 2000).

Some other accounts of PWD subtypes, however, propose the opposite pattern: Auerbach et al. (1982) suggested that a prephonemic subtype of PWD results from a deficit in temporal acuity and is associated with bilateral lesions, whereas a phonemic subtype (which presumably compromises knowledge of phonemic representations) cannot be attributed to a temporal processing deficit and arises from unilateral left hemisphere damage (see also Poeppel, 2001; Praamstra, Hagoort, Maassen, & Crul, 1991; Yaqub et al., 1988). Similar divisions have been proposed between apperceptive and associative versions of PWD (Buchtel & Stewart, 1989) and between word sound deafness and word form deafness (Franklin, 1989). These proposals fit with the claim that the early aspects of speech perception are processed bilaterally (e.g., Hickok & Poeppel, 2007), as spared right hemisphere mechanisms could presumably handle the early prephonemic stages of speech perception.

So while there are many suggestions in the literature that at least some cases of PWD result from a rapid temporal processing deficit, there is relatively little evidence for this claim, and even less consensus on the type of neuroanatomical damage that would lead to word deafness from an underlying temporal processing deficit. The current study attempts to shed some light on this specific issue, as well as on the role of rapid temporal processing in speech perception more generally, by presenting a detailed case study of a patient with PWD resulting from a unilateral left hemisphere lesion. We report two experiments focusing on the role that a temporal acuity deficit might play in a unilateral case of PWD: Experiment 1 investigates the patient’s discrimination of temporal and spectral cues in both speech and non-speech stimuli, and Experiment 2 evaluates the effectiveness of a treatment designed to improve rapid temporal processing. In addition, to determine if unilateral PWD cases also involve damage to cross-hemispheric connectivity (Poeppel, 2001; Takahashi et al., 1992)–a claim that has, to our knowledge, never been tested–we report what we believe is the first connectivity analysis presented on a PWD patient, presenting data from structural MRI combined with diffusion tensor imaging (DTI).

Background Information

At the time of testing, NL (identified here by a subject code) was a 66-year-old right-handed native English-speaking male. NL suffered a cerebrovascular accident (CVA) in July of 2005. Before this time, NL worked as an electrician and had completed 1.5 years of post-high school education plus a 4-year apprenticeship program. Clinical assessment at the time of CVA resulted in an initial diagnosis of Wernicke’s aphasia. Over the next several months, many of his aphasic symptoms resolved with the exception of his ability to comprehend speech. When tested approximately three years post-stroke on the Boston Diagnostic Aphasia Examination (BDAE; Goodglass & Kaplan, 1983; see Table 1), NL performed poorly on single word perception tasks (word discrimination and body part identification) and on sentence comprehension tasks (commands and complex ideational material). NL did considerably better on the production sections of the BDAE, including word reading and visual confrontation naming tasks, though he did perform worse than controls on a verbal fluency (category naming) task. Performance on BDAE reading and writing tasks was above cutoff scores with the exception of the symbol and word discrimination task, on which his 90% accuracy contrasts with “the presumption that no failures should be anticipated [on this task] from nonaphasic adults” (Goodglass & Kaplan, 1983, p. 28). Thus this standard aphasia examination revealed marked difficulty in speech perception with minor to no deficits in production, reading, and writing, fitting the profile of PWD.

Table 1.

Accuracy rates (percentage correct) for patient NL on selected subtests of the Boston Diagnostic Aphasia Examination (BDAE).

| Task | Accuracy |
|---|---|
| Speech Perception | |
| Word Discrimination | 45.8% * |
| Body-part Identification | 20.0% * |
| Commands | 20.0% * |
| Complex Ideational Material | 8.3% * |
| Speech Production | |
| Word Reading | 90.0% |
| Visual Confrontation Naming | 96.2% * |
| Body-Part Naming | 80.0% |
| Animal-Naming (Fluency in Controlled Association) | 57.9% * |
| Oral Sentence-Reading | 80.0% |
| Written Comprehension | |
| Symbol and Word Discrimination | 90.0% * |
| Word Recognition | 75.0% |
| Word-picture Matching | 100.0% |
| Reading Sentences and Paragraphs | 80.0% |
| Written Production | |
| Mechanics of Writing | 100.0% |
| Recall of Written Symbols | 100.0% |
| Written Confrontation Naming | 90.0% |
| Narrative writing | 50.0% |

An asterisk (*) indicates accuracy rates significantly lower than control norms or cutoff scores.

A T1 weighted structural MRI scan (see below for details) shows that NL sustained left temporal and parietal lobe damage, including damage to the superior temporal gyrus, supramarginal gyrus, and angular gyrus. Pure-tone threshold audiometry revealed moderate sensorineural hearing loss at higher frequencies (3000 Hz and above), which is within the normal range for his age.

A variety of additional tests were conducted to evaluate NL’s linguistic abilities, as well as his non-linguistic auditory processing. NL’s ability to comprehend individual spoken words is severely impaired and it is impossible to communicate with him over the telephone. However, he functions reasonably well when able to rely on lip reading and contextual cues. Thus appointments were made over e-mail and all assessments were conducted with face-to-face instruction and/or with instructions in writing. For tests where normative data were available, NL’s performance was compared either to published cutoff scores or to the scores of a control group by using Crawford and Howell’s (1998) modified t-test. A summary of the assessments discussed below is presented in Table 2.
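For readers unfamiliar with the single-case comparison mentioned above, the following Python sketch shows the standard form of the Crawford and Howell (1998) modified t-test, in which the control standard deviation is inflated by sqrt((n + 1) / n) and the statistic is evaluated on n − 1 degrees of freedom. The function name, the case score, and the control scores are hypothetical and are not data from this study.

```python
import math
import statistics


def crawford_howell_t(case_score, control_scores):
    """Return (t, df) comparing one case with a small control sample."""
    n = len(control_scores)
    if n < 2:
        raise ValueError("need at least two control scores")
    mean = statistics.mean(control_scores)
    sd = statistics.stdev(control_scores)  # sample SD (n - 1 denominator)
    t = (case_score - mean) / (sd * math.sqrt((n + 1) / n))
    return t, n - 1


# Hypothetical accuracies (percent correct); illustrative values only.
controls = [94.0, 96.0, 98.0, 100.0, 96.0, 98.0, 94.0, 100.0]
t, df = crawford_howell_t(85.0, controls)
```

The resulting t value would then be referred to a t distribution with df degrees of freedom to obtain a p value.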

Table 2.

Accuracy rates (percentage correct) for patient NL for background assessments.

| Task | Accuracy |
|---|---|
| Speech Perception | |
| Consonant Discrimination | |
| • CV minimal pairs | 33.3% * |
| • VC minimal pairs | 70.4% * |
| Vowel Discrimination | |
| • CV minimal pairs | 92.9% * |
| • VC minimal pairs | 85.7% * |
| Minimal Pair Discrimination (PALPA 2) | |
| • overall (different trials) | 63.9% * |
| • voicing difference | 50.0% |
| • manner difference | 83.3% |
| • place difference | 58.3% |
| Philadelphia Word Repetition | 8.3% * |
| Spoken word/picture matching | 50.0% * |
| Non-speech Auditory Perception | |
| Environmental sound/picture matching | 79.2% |
| Musical Chords Consonance/Dissonance Judgments | 90.0% |
| Montreal Battery for Evaluation of Amusia (MBEA) | |
| • Scale | 58.1% * |
| • Different Contour | 58.1% * |
| • Same Contour | 51.6% * |
| • Rhythmic Contour | 51.6% * |
| • Metric Task | 66.7% * |
| • Incidental Memory Test | 50.0% * |
| Speech Production | |
| Philadelphia Naming Task | 94.0% |
| Semantic Knowledge | |
| Philadelphia Naming Task (PNT) | 94.0% |
| Pyramids and Palm Trees | |
| • 3 picture version | 98.1% |
| • 3 written words version | 96.2% |
| Written Comprehension | |
| Visual lexical decision (PALPA 25) | |
| • high imageability, high frequency | 100.0% |
| • high imageability, low frequency | 100.0% |
| • low imageability, high frequency | 80.0% * |
| • low imageability, low frequency | 93.3% |
| • nonwords | 78.3% * |
| Word reading (PALPA 35) | |
| • regularly spelled words | 96.7% * |
| • exception words | 90.0% * |

An asterisk (*) indicates accuracy rates significantly lower than control norms or cutoff scores.

Speech Perception

Speech perception tasks were administered either while the experimenter’s face was blocked from view or by using pre-recorded stimuli because NL benefits substantially from facial cues and lip reading (cf. Morris, Franklin, Ellis, Turner, & Bailey, 1996). NL’s ability to repeat words was very impaired: On the Philadelphia word repetition task (Saffran, Schwartz, Linebarger, & Bochetto, 1988), he was able to correctly repeat only 10 of the 120 words, produced incorrect words on 27 trials, and made no response on the remaining 83 trials.

NL was also tested on two minimal pair discrimination tasks, testing syllables and words respectively. The syllable minimal pair task required same/different discrimination on pairs of prerecorded syllables that were either the same or that differed on one or two phonetic features. Consonant and vowel minimal pairs were tested separately, each being tested in one block of 54 consonant-vowel (CV) syllable trials followed by a second block of 54 vowel-consonant (VC) syllable trials. Syllable pairs were presented in a random order with a 750 ms interval between each syllable. NL correctly responded “same” to 100% of the repeated-syllable trials, and was better able to detect differences in vowel minimal pairs (89.3% correct) than in consonant minimal pairs (51.9% correct; note that his performance was particularly impaired on consonant differences that occurred on the syllable onset, as can be seen in Table 2). NL’s accuracy on both types of stimuli was outside the range of 8 older adult control participants, whose accuracy ranged from 85–100% on consonant differences, and from 94–100% on vowel differences.

The word minimal pair task was taken from the Psycholinguistic Assessments of Language Processing in Aphasia (PALPA) battery (Kay, Lesser, & Coltheart, 1992), on which 72 word pairs were read aloud with flat intonation and with an approximately one-second interval between words. NL correctly identified 100% of the repeated words as “same”, but correctly detected differences on only 64% of the minimal pairs. His overall accuracy of 82% was significantly worse than that of the controls reported by Kay et al. (1992) (t = −4.68, p < .001). NL was especially poor at differentiating between words differing in voicing (50% correct) or in place of articulation (58% correct), and did somewhat better with words differing in manner of articulation (83% correct). These patterns fit with the generalization that NL’s ability to detect differences is especially impaired for sounds that contrast in terms of rapid temporal changes, as the identification of differences in place of articulation depends primarily on the perception of rapid formant transitions (Liberman, Delattre, Gerstman, & Cooper, 1956), whereas identification of manner of articulation in consonants and vowels depends to a greater degree on spectral cues (Syrdal & Gopal, 1986) and on temporal changes that occur over relatively longer durations (Lehiste & Peterson, 1961). It is clear, however, that NL’s performance was impaired even for discriminations that did not depend on perception of rapid temporal changes.
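The percentages reported for the word minimal pair task can be checked with a short calculation. The sketch below assumes that the 72 pairs were split evenly into 36 "same" and 36 "different" trials, with 12 different trials per feature; these trial counts are inferred from the reported percentages rather than stated in the text, and the variable names are illustrative.

```python
def percent(correct, total):
    """Percentage correct, as a float."""
    return 100.0 * correct / total


# Assumed trial counts (correct, total), inferred from the reported percentages.
same_correct, same_total = 36, 36
different = {"voicing": (6, 12), "manner": (10, 12), "place": (7, 12)}

diff_correct = sum(correct for correct, _ in different.values())
diff_total = sum(total for _, total in different.values())

diff_accuracy = percent(diff_correct, diff_total)
overall_accuracy = percent(same_correct + diff_correct, same_total + diff_total)
```

Under these assumptions, the three feature-level scores combine to the 64% accuracy on different trials, and adding the 36 correct same trials gives the overall figure of 82%.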

Speech Production and Semantic Knowledge

In contrast to NL’s impairment in speech comprehension, he did well on production tasks and on tasks tapping semantic knowledge. He scored within the normal range (94% correct) on the 175-item Philadelphia Picture Naming Task (Roach, Schwartz, Martin, Grewal, & Brecher, 1996); his errors were primarily formal paraphasias (e.g., “waggle” for wagon). He also performed within the normal range on both the picture and the written versions of the Pyramids and Palm Trees test (98% and 96% correct, respectively; Howard & Patterson, 1992), which involves selecting from two items the one most closely associated semantically with a target, suggesting preserved ability to access semantics from both pictorial and written input.

Reading

In contrast to NL’s difficulties with speech perception, his comprehension of written language is relatively spared. NL performed mostly within the normal range on a visual lexical decision task that manipulated imageability and frequency (from the PALPA battery; Kay et al., 1992) with the exception of the high-frequency/low-imageability words (of which he erroneously judged 3 of 15 to be nonwords; t = −7.26, p < .0001) and the nonword items (of which he erroneously accepted 13 of 60 as words; t = 29.2, p < .0001). On a word-reading task (also from the PALPA; Kay et al., 1992), NL made only one error (out of 30) on regularly spelled words (reading peril as “preal”) and three errors (out of 30) on exception words. Note, however, that these errors do make his performance significantly worse than Kay et al.’s (1992) controls for both regular (t = −4.73, p < .0001) and exception words (t = −7.59, p < .0001).

Is NL’s word deafness “pure”?

Although much of the interest in PWD has resulted from its eponymous claim to language specificity, many studies of PWD have not systematically investigated other types of non-speech auditory processing. Thus it may be that many reported cases of PWD actually reflect a more general form of auditory agnosia. Even informal testing of non-speech auditory perception (e.g., identifying the sound when a doctor jangles keys or the patient reporting an inability to recognize melodies) has yielded mixed results. Some patients appear to show impaired processing of music and/or environmental sounds (e.g., Auerbach et al., 1982; Eustache et al., 1990; Pinard, Chertkow, Black, & Peretz, 2002; Roberts et al., 1987; Wang et al., 2000), leading to the suggestion that there may be no “pure” forms of word deafness after all (Buchman et al., 1986). Other word-deaf patients appear to show preserved perception of environmental sounds and music (Coslett, Brashear, & Heilman, 1984; Metz-Lutz & Dahl, 1984; Takahashi et al., 1992; Yaqub et al., 1988), although it is unclear how common such cases are because non-speech domains have not often been carefully tested. Thus to evaluate the specificity of NL’s deficit, his ability to perceive complex non-speech auditory stimuli was evaluated with both environmental sounds and musical stimuli.

Environmental Sound Perception

Environmental sound perception was evaluated with a 10-alternative forced choice environmental-sounds to picture matching task (Bozeat, Lambon Ralph, Patterson, Garrard, & Hodges, 2000). The task consists of 48 sounds from six categories (domestic animals, foreign animals, human sounds, household items, musical instruments, and vehicles) and 60 pictures (10 pictures per category); these items are listed in Appendix A. Each sound was presented with ten within-category pictures, and NL was allowed to hear one repetition of each sound. NL was 79% accurate on this task, which is above the mean accuracy of seven older adult control participants (mean = 74%, range: 63–90%). A spoken word-picture matching version of this same task was conducted one month later (using pre-recorded words spoken slowly and clearly by a native English speaker). Again, NL was allowed to hear one repetition of each word. On this word-picture matching version, NL was only 50% accurate, which falls considerably below the controls’ mean accuracy of 96% (range = 94–98%).

Appendix A.

Environmental sound stimuli from Bozeat et al. (2000). The 48 sounds correspond to the first eight items of each type, and each sound was presented with ten within-category pictures that included the additional two items listed under “foils”.

| Domestic animals | Household objects | Wild animals | Vehicles | Human sounds | Musical instruments |
|---|---|---|---|---|---|
| Cat | Telephone | Elephant | Train | Sneeze | Triangle |
| Horse | Vacuum cleaner | Tiger | Car | Cry | Drum |
| Pig | Washing machine | Flamingo | Bicycle | Yawn | Violin |
| Dog | Clock | Parrot | Airplane | Drink | Tambourine |
| Duck | Lawnmower | Monkey | Rowboat | Snore | Trumpet |
| Cow | Doorbell | Hyena | Helicopter | Walk | Xylophone |
| Frog | Coffee maker | Sea Lion | Bus | Laugh | Recorder |
| Hen | Frying pan | Wolf | Motorcycle | Clap | Guitar |
| Foils | | | | | |
| Squirrel | Kettle | Giraffe | Tractor | Eat | Saxophone |
| Deer | Camera | Kangaroo | Skis | Blow Bubbles | Piano |

Music Perception

NL’s ability to perceive musical stimuli was assessed with both the Montreal Battery for Evaluation of Amusia (MBEA; Peretz, Champod, & Hyde, 2003) and with a consonance/dissonance judgment task (adapted from Patel, Iversen, Wassenaar, & Hagoort, 2008). The MBEA consists of six subtests, most of which use the same set of 30 melodic phrases. The first three subtests manipulate pitch: participants make same/different judgments of melody pairs where the different melodies contain a changed note that is in a different key (subtest 1), that is in the same key but that changes the contour (i.e., direction) of the melody (subtest 2), or that preserves both the key and contour (subtest 3). The fourth subtest manipulates the rhythm of the passage (still requiring same/different judgments), and the fifth subtest (which uses a different set of melodies) requires participants to classify individual phrases as either a waltz (i.e., in 3/4 time) or a march (i.e., in 4/4 time). The final subtest is an incidental memory test, in which participants categorize melodies (half of which were previously presented in the first four subtests) as old or new. As can be seen in Table 2, NL scored at or below the cutoff score for all six of these subtests.

In contrast, NL performed well on a consonance/dissonance judgment task that required him to identify chords as “tuned” (consonant) or “mistuned” (dissonant). These 30 chords, adapted from the instruction phase of a harmonic priming task (Patel et al., 2008), were 1-second-long major Shepard tone triads, one-half of which were mistuned by flattening the upper note by 0.35 semitones. Three examples were given of consonant and dissonant stimuli, and feedback was given on each trial. NL correctly identified 90% of the chords, which is above the mean score of 10 Rice University undergraduates (mean = 82.0%, SD = 11.2%).
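To give a sense of the size of this mistuning, the sketch below converts a shift in semitones into a frequency ratio, assuming twelve-tone equal temperament (a ratio of 2^(1/12) per semitone). The function name is illustrative, and the tuning system is an assumption; the source states only the size of the shift in semitones.

```python
def detune(frequency_hz, semitones):
    """Shift a frequency by a (possibly fractional) number of semitones.

    Assumes twelve-tone equal temperament: one semitone = 2 ** (1 / 12).
    """
    return frequency_hz * 2.0 ** (semitones / 12.0)


# Ratio between the flattened upper note and its in-tune frequency.
ratio = detune(1.0, -0.35)
percent_change = (ratio - 1.0) * 100.0
```

Under this assumption, flattening by 0.35 semitones (35 cents) lowers the frequency of the upper note by roughly 2%.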

In general, NL appears to have a preserved ability to process environmental sounds and musical pitch, but a deficit in some aspect of melodic perception. Because most subtests of the MBEA require same/different judgments of musical phrases that last several seconds (the mean length of these musical phrases is about 5 seconds), it is unclear whether NL’s poor performance on the MBEA reflects a deficit in melodic perception per se, or a deficit in the short-term maintenance of melodies. It is also not possible to tell if NL’s poor performance on the MBEA is related to his stroke or reflects his current and premorbid lack of interest or ability in music: he claims to have never liked music (his only reported musical experience was from 7th grade school choir, which he claims to have hated) and he showed marked reluctance to participate in any musical assessment tasks. His reported lifelong disinterest in music along with his poor performance on the MBEA raises the possibility that NL is a congenital amusic (i.e., was premorbidly “tone deaf”; see, e.g., Peretz, 2008). It may seem surprising that someone with amusia would perform so well on the consonance/dissonance detection task; however, this does fit with recent proposals that congenital amusia may not reflect a deficit in pitch processing so much as a deficit in the detection of pitch direction or pitch patterns (Griffiths, 2008; Liu, Patel, Fourcin, & Stewart, 2010; Patel, 2008; Stewart, 2008). Alternatively, his ability to detect dissonance might result from non-pitch psychoacoustic differences between consonant and dissonant chords such as roughness (see, e.g., Vassilakis & Kendall, 2010). In any case, NL’s deficit appears to be primarily confined to speech and to melodic aspects of musical stimuli, with preserved perception of environmental sounds.

Overall, these data (see Tables 1 and 2) show NL to have a significant impairment in his ability to comprehend speech, but with relatively well preserved speech production, written comprehension, semantic knowledge, and environmental sound perception (with somewhat equivocal findings on music perception). As NL’s deficit is relatively specific to speech stimuli, he provides a rare opportunity to investigate not only the underlying impairment involved in PWD, but also the processes underlying the early stages of speech perception in general. In the following sections, we report three studies. First, we report an investigation of the anatomical damage underlying NL’s deficit. Second, Experiment 1 investigates whether NL’s deficit is specific to speech or reflects an underlying deficit in rapid temporal processing. Finally, Experiment 2 reports a study of a treatment designed to improve NL’s ability to process rapid temporal transitions.

Lesion Localization and Fiber Tract Integrity

As mentioned above, most cases of PWD result from bilateral lesions involving the superior temporal cortices, with only a minority of cases (17 of the 59 cases reviewed in Poeppel, 2001) resulting from unilateral lesions. These unilateral cases of PWD have often been suggested to reflect damage both to the superior temporal cortex in the language-dominant hemisphere and to transcallosal projections from the opposite hemisphere STG (Geschwind, 1965; Lichtheim, 1885; Poeppel, 2001; Takahashi et al., 1992); however, this claim has (to our knowledge) never been tested. We thus used Diffusion Tensor Imaging (DTI; see, e.g., Mori & Zhang, 2006), a relatively recent technique that allows visualization of subcortical white matter tracts (as inferred by the directionality of water diffusivity), to investigate the integrity of NL’s cross-hemispheric white matter pathways.

Imaging Methods

A T1-weighted anatomical MRI scan was obtained approximately 3 years post-stroke using a 3 Tesla whole-body MR scanner (Philips Medical Systems, Bothell, WA) with an eight-channel SENSE head coil. Diffusion-weighted images were collected with a high angular resolution Philips 32-direction diffusion encoding scheme with the gradient overplus option, and one B0 (non-diffusion-weighted) image volume was acquired before acquisition of one repetition of diffusion-weighted scans (44 axial slices, matrix size = 256 × 256, slice thickness = 3 mm, maximum b-value = 800 s/mm², pixel size = 0.938 × 0.938 mm). Diffusion-weighted images were realigned to the anatomical MRI, diffusion tensors were computed using AFNI (Cox, 1996), and deterministic fiber tracking was performed with DTIQuery v1.1 using the streamline tracking algorithm (STT) with the recommended default parameters (Akers, Sherbondy, Mackenzie, Dougherty, & Wandell, 2004; Sherbondy, Akers, Mackenzie, Dougherty, & Wandell, 2005).

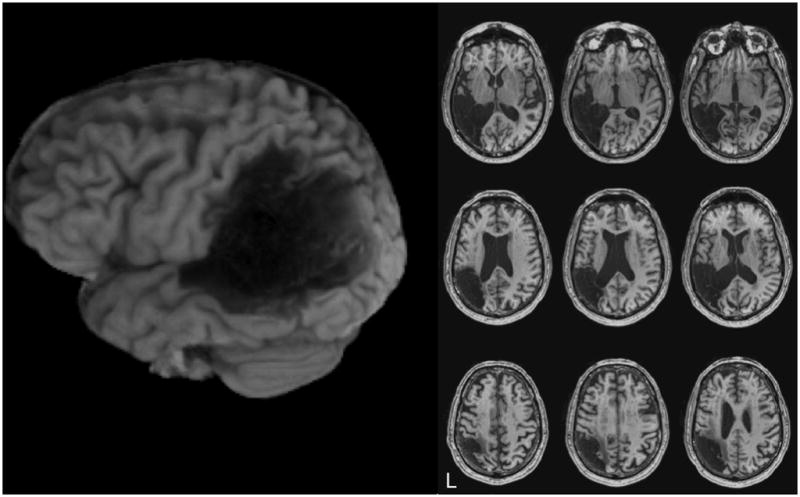

Imaging Results

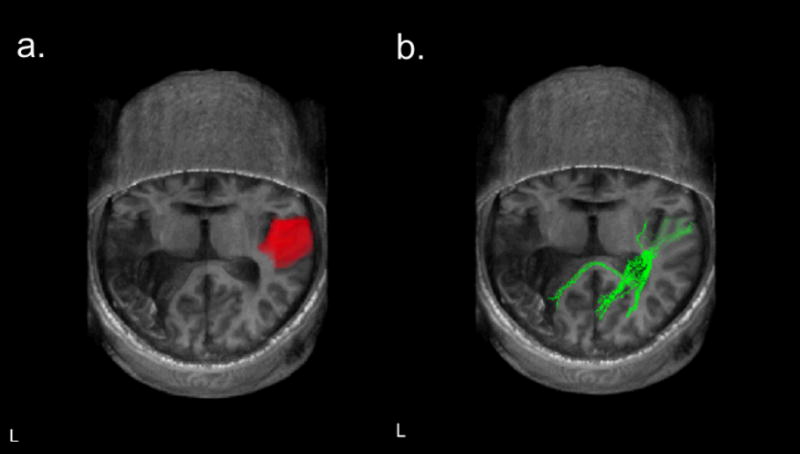

Figure 1 shows anatomical MRI images (axial slices) of NL’s brain. His lesion is unilateral, involving the left hemisphere temporal and parietal lobes, and includes damage to the superior temporal gyrus, supramarginal gyrus, and angular gyrus. Figure 2a shows the ROI used to define Heschl’s convolutions in the superior temporal gyrus and Figure 2b shows DTI pathways passing through this ROI, which were calculated using custom-written Matlab software based on the open-source DTIQuery .pdb file format (Matlab functions available at http://openwetware.org/wiki/Beauchamp:DetermineTract). As can be seen in Figure 2, NL’s cross-callosal projections are relatively robust, and pass through the posterior portion of the corpus callosum, which is likely to be the relevant pathway for speech perception (Damasio & Damasio, 1979). It is impossible to know if this pathway is impaired relative to NL’s pre-stroke condition, because of the wide variability in the size of these projections even in non-brain-damaged individuals (Westerhausen, Gruner, Specht, & Hugdahl, 2009). Nevertheless, these projections seem to be reasonably intact, suggesting that PWD can arise from a unilateral lesion even in the presence of intact cross-hemispheric connectivity.

Figure 1.

Anatomical MRI images of NL’s brain. The left side is a rendered image of NL’s left hemisphere, and the right side shows axial slices in neurological convention with the left hemisphere on the left side.

Figure 2.

a. Region of interest (ROI) used to define Heschl’s convolutions in NL’s right superior temporal gyrus. b. DTI pathways passing through the ROI shown in a. Images are shown in neurological convention with the left hemisphere on the left.

As is evident in Figure 2b, these cross-callosal fiber tracts terminate in perilesional regions. Thus, one might hypothesize that phonemic and/or lexical phonological representations specific to the left hemisphere have been damaged (Auerbach et al., 1982) and thus, even though auditory processing in the right hemisphere is carried out normally and the output transferred to the left, regions that would process this information linguistically no longer exist. A problem with this line of reasoning is that, under the assumption that phonological representations are shared between perception and production (Indefrey & Levelt, 2004), NL’s speech production should also be severely impaired. Given NL’s preserved production, one would have to surmise either that the brain regions supporting phonological aspects of production and perception are separate (e.g., Martin, Lesch, & Bartha, 1999) or that a single region exists, but that information from the right hemisphere does not reach this region because of damage on the left.2 In any event, it is clear that NL’s right hemisphere is spared and thus one can investigate the speech-relevant auditory processing that NL can carry out with this intact hemisphere.

Experiment 1

As discussed above, one claim regarding the neuroanatomy of speech perception is that prephonemic stages of processing are carried out bilaterally whereas phonemic processing is carried out in the left hemisphere (Auerbach et al., 1982; Hickok & Poeppel, 2000, 2004, 2007). If so, then PWD patients with bilateral temporal lobe damage are expected to show a deficit in temporal acuity, whereas those with unilateral left hemisphere lesions are expected to show a disruption of phonemic processing (or, given the lack of evidence for a phonemic level of processing (Scott & Wise, 2004), some other type of language-specific representational deficit) (Auerbach et al., 1982; Praamstra et al., 1991; Yaqub et al., 1988). The claim that bilateral lesions are involved in a rapid temporal processing deficit implies that both hemispheres can carry out this processing, and suggests that patient NL, with a unilateral left hemisphere lesion, should show normal rapid temporal processing ability and impaired phonemic analysis.

In contrast, it has also been claimed that rapid temporal processing is specialized to the left hemisphere (e.g., Belin et al., 1998) and thus a deficit in rapid temporal processing may be the source of PWD in patients with unilateral lesions (Stefanatos et al., 2005; Wang et al., 2000). In line with these studies, NL’s performance on background tests (see Table 2) suggested maximal difficulty with rapid temporal aspects of sound. In particular, he showed worse performance in detecting consonant differences than vowel differences on syllable minimal pairs, and worse performance detecting differences in minimal pair words differing in place of articulation (i.e., in terms of rapid formant transitions; Liberman et al., 1956) than in manner of articulation. This also fits with his performance on non-speech auditory stimuli: Environmental sounds do not typically contain the kinds of rapid temporal changes characteristic of speech, and so NL’s good ability to identify these stimuli could be based on preserved ability to process spectral information.

In general, these findings lend support to the idea that NL has a deficit in processing rapid temporal aspects of sounds, but do so only indirectly. The following experiment aimed to directly address NL’s ability to process rapid temporal changes in both speech and non-speech stimuli, and compare this to his ability to distinguish stimuli differing in non-temporal dimensions. Although some previous work has looked at this question using methods such as click fusion, there are concerns with using typical bandpass noise paradigms to isolate temporal processing ability (Phillips, Taylor, Hall, Carr, & Mossop, 1997) and rapidly presented clicks are not particularly well matched to the type of temporal changes encountered in speech. Thus we relied on speech and carefully matched non-speech stimuli that differed in either temporal or spectral aspects of the sound signal.

More specifically, we investigated NL’s ability to discriminate between speech and matched non-speech auditory stimuli that differed either in dynamic features (i.e., differed only in a rapid temporal aspect) or in steady-state spectral features. If his pure word deafness does, in fact, result primarily from a deficit in rapid temporal processing, then NL should have a significantly impaired ability to discriminate stimuli differing only in rapid dynamic features, but should show preserved discrimination of stimuli differing in steady-state spectral features. Crucially, this should be observed both in speech and in non-speech (tone) stimuli. In contrast, a language-specific source of his deficits would predict that NL would show impaired discrimination between speech stimuli – whether the speech tokens differ in temporal or spectral features – but preserved discrimination of non-speech tone stimuli.

Participants

Patient NL was tested four times over a three-week period. Additionally, seven control subjects without any history of neurological damage were recruited from a pool of older participants who regularly participate in research at Rice University, and each was tested once on the same task. These control subjects (four males) ranged in age from 52 to 81 (mean = 62, SD = 8.9) and were all native speakers of English. Although NL’s performance was collapsed over four administrations of the task whereas controls were only tested once, an analysis using only NL’s first session yielded the same pattern of results.

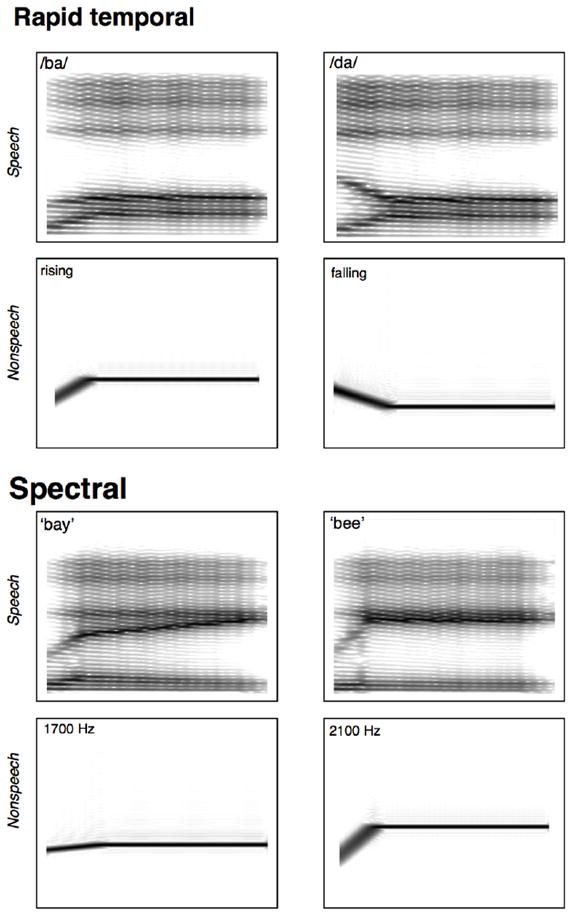

Materials

The stimuli were modeled after those used in Joanisse and Gati (2003) and consisted of four general types, corresponding to the crossing of two manipulations: linguistic status (speech or nonspeech) and acoustic cue type (temporal or spectral). See Figures 3a and 3b for sample spectrograms of these stimulus types.

Figure 3.

Spectrograms of stimuli used in Experiments 1 and 2. a. “easy” (maximally different 800 Hz vs. 1600 Hz onset frequency) /ba/ and /da/ stimuli (top) and their non-speech F2 analogues (bottom). b. “easy” (maximally different 1700 Hz vs. 2100 Hz steady state frequency) /be/ and /bi/ stimuli (top) and their non-speech F2 analogues (bottom).

Speech Stimuli

Speech stimuli were 220 ms synthesized CV syllables created with a digital implementation of the Klatt cascade/parallel formant synthesizer (Klatt, 1980). Manipulations of the consonant portions led to syllables that differed on a rapid temporal dimension and manipulations of the vowels led to syllables that differed spectrally. The consonant stimuli were four items along a continuum from /ba/ to /da/, in which the second formant (F2) transition changes the perceived place of articulation. Specifically, the stimuli differed only in F2 onset frequency (either 800 Hz, 1000 Hz, 1400 Hz, or 1600 Hz) with all other parameters of the onset and vowel held constant across items. This provided cross-category pairs that were “easy” prototypical exemplars of /ba/ and /da/ (i.e., the maximally different 800 Hz vs. 1600 Hz onset stimuli) or “hard” non-prototypical /ba/ and /da/ exemplars (i.e., the minimally different 1000 Hz vs. 1400 Hz onset stimuli). Additionally, three versions of each stimulus were created, each having different durations of the F2 transition: short (20 ms), medium (40 ms), or long (60 ms). (Note that typical formant transition durations in voiced stop consonants range from approximately 20 to 45 ms (Liberman et al., 1956), thus the ‘medium’ duration represents a canonical /ba/ − /da/ contrast). The following /a/ vowel was created by setting F1, F2 and F3 frequencies to 600, 990 and 2600 Hz, respectively for the remaining portion of the stimulus.

The vowel stimuli were four items along a continuum from /bi/ to /be/ (~ “bee” to “bay”), created by manipulating the steady-state F2 frequency of the vowel portion. Again, cross-category pairs were either “easy” (maximally different) or “hard” (minimally different). For the /be/ stimulus, the frequency of the steady state F2 component was set to 1700 (easy) or 1800 Hz (hard). For /bi/ the F2 was set to 2100 Hz (easy) or 2000 Hz (hard). These items had an initial 40 ms F2 sweep with an onset frequency of 900 Hz, which did not differ across pairs. The F1 and F3 portions also did not differ across stimuli, and were held constant at 200 and 2300 Hz respectively.

Non-speech Stimuli

Sinewave sweeps were created to match the F2 characteristics of the 12 consonant stimuli and the four vowel stimuli described above. Items consisted of a 220 ms sinewave tone that began with a brief time-varying sweep, followed by a steady-state portion. Just as with the speech stimuli, these sinewaves were paired to create maximally and minimally different contrasts. Stimuli differed either spectrally (i.e., differed with respect to the pitch of the steady-state tone as with the vowel stimuli) or temporally (i.e., differed with respect to the initial time-varying sweep as with the consonant stimuli).
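The structure of these non-speech analogues can be illustrated with a short sketch. This is not the authors' synthesis procedure: it assumes a linear frequency sweep, a 22,050 Hz sampling rate, and a steady-state frequency of 990 Hz (the F2 value given above for the /a/ vowel), and the function name `sine_sweep` is hypothetical.

```python
import math

def sine_sweep(onset_hz, steady_hz, sweep_ms, total_ms=220, rate=22050):
    """Return samples of a tone that sweeps from onset_hz to steady_hz
    over sweep_ms, then holds steady_hz until total_ms.

    The linear sweep and the sampling rate are illustrative assumptions.
    """
    n_total = int(rate * total_ms / 1000)
    n_sweep = int(rate * sweep_ms / 1000)
    samples = []
    phase = 0.0
    for i in range(n_total):
        if i < n_sweep:
            # Linear interpolation between onset and steady-state frequency.
            freq = onset_hz + (steady_hz - onset_hz) * i / n_sweep
        else:
            freq = steady_hz
        samples.append(math.sin(phase))
        # Accumulate phase so that frequency changes stay continuous.
        phase += 2 * math.pi * freq / rate
    return samples

# "Easy" temporal contrast with a medium (40 ms) transition:
# analogues of the 800 Hz and 1600 Hz F2 onsets.
low_onset = sine_sweep(800, 990, 40)
high_onset = sine_sweep(1600, 990, 40)
```

Under these assumptions, the two tones have the same duration and the same steady-state frequency and differ only in the initial sweep, which is the property that defines the temporal contrast.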

Procedure

Participants were presented with pairs of sounds over headphones at a comfortable volume and were asked to judge whether each pair sounded the same or different by pressing an appropriately labeled key. Different pairs were always of the same stimulus type (e.g., a speech/temporal stimulus would always be paired with another speech/temporal stimulus, never with a stimulus from another condition) and were always cross-category pairs (or their non-speech equivalents).

There were four blocks of 64 trials each. Each block contained one “same” trial for each of the individual sounds (making 32 “same” trials), and two “different” trials for each of the 16 experimental pairs (maximally and minimally different versions of the four speech and four non-speech pairs). Order of trials was randomized within each block. An experimental session took approximately 30 minutes.
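The block composition described above can be checked with a brief sketch. The condition labels and the `build_block` helper are hypothetical names introduced for illustration; only the counts are taken from the description.

```python
import random
from itertools import product

STATUSES = ["speech", "nonspeech"]
# One spectral condition plus three temporal transition durations.
CUES = ["spectral", "temporal-short", "temporal-medium", "temporal-long"]
DIFFICULTIES = ["easy", "hard"]

def build_block(seed=None):
    """Build one 64-trial block: 32 'same' and 32 'different' trials."""
    trials = []
    for status, cue, difficulty in product(STATUSES, CUES, DIFFICULTIES):
        pair = (status, cue, difficulty)
        # Each pair has two member sounds; each sound gets one "same" trial.
        for member in ("A", "B"):
            trials.append({"pair": pair, "type": "same", "sound": member})
        # Each pair is presented twice as a "different" trial.
        for _ in range(2):
            trials.append({"pair": pair, "type": "different"})
    # Randomize trial order within the block.
    random.Random(seed).shuffle(trials)
    return trials

block = build_block(seed=1)
print(len(block))  # 64
```

Crossing two linguistic statuses, four cue conditions, and two difficulty levels gives the 16 experimental pairs, and two sounds per pair gives the 32 individual sounds.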

Results

Control Participants

Table 3 lists control participants’ response accuracy by condition and by trial type (i.e., whether pairs were easy/maximally-different, hard/minimally-different, or the same). A 2 × 4 × 3 repeated measures ANOVA was conducted on accuracy rates to investigate the effects of linguistic status (speech or nonspeech), acoustic cue type (spectral, temporal-short, temporal-medium, or temporal-long) and trial type (different-easy, different-hard, or same). Because proportions are not normally distributed, these analyses were also conducted on arcsine-transformed proportions (Winer, Brown, & Michels, 1991). In all analyses, the results from analyses on arcsine-transformed data were similar to those on untransformed proportions. Thus for ease of interpretation, only the analyses on untransformed data are reported and proportions are reported as percentages.
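As a brief illustration of the transformation mentioned above, the sketch below applies one common form of the arcsine transform, 2·arcsin(√p), to a proportion. Whether this exact form (rather than arcsin(√p) without the factor of 2) was used here is an assumption, and the function name is hypothetical.

```python
import math

def arcsine_transform(p):
    """Variance-stabilizing transform for a proportion p in [0, 1]."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a proportion between 0 and 1")
    return 2.0 * math.asin(math.sqrt(p))

# Control means for the medium-transition consonant condition (Table 3),
# expressed as proportions rather than percentages.
for label, pct in [("different-hard", 39.3),
                   ("different-easy", 85.7),
                   ("same", 96.4)]:
    print(label, round(arcsine_transform(pct / 100), 3))
```

Group means are used here purely for illustration; in an actual analysis the transform would be applied to each participant's proportions before the ANOVA.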

Table 3.

Mean (SD) accuracy rates (percentage correct) for control subjects by condition.

| Task | Discrimination trial type | | |
|---|---|---|---|
| | Different-hard | Different-easy | Same |
| Speech | | | |
| Consonant: short | 28.6 (30.4) | 28.6 (22.5) | 93.8 (5.1) |
| Consonant: medium | 39.3 (23.3) | 85.7 (19.7) | 96.4 (4.9) |
| Consonant: long | 44.6 (26.9) | 82.1 (18.9) | 96.4 (3.3) |
| Vowel | 21.4 (27.7) | 46.4 (26.7) | 100.0 (0.0) |
| Nonspeech | | | |
| Tone Sweep: short | 58.9 (35.9) | 75.0 (29.8) | 94.6 (5.6) |
| Tone Sweep: medium | 83.9 (22.5) | 94.6 (6.7) | 95.5 (7.0) |
| Tone Sweep: long | 85.7 (18.3) | 98.2 (4.7) | 93.8 (8.8) |
| Tone Pitch | 100.0 (0.0) | 94.6 (14.2) | 97.3 (4.9) |

Note: Accuracy rates correspond to correct discriminations in the different-easy and different-hard conditions, and to correct rejections on the no-change trials.

A significant main effect of linguistic status shows that accuracy was worse for speech than nonspeech stimuli (61% vs. 92% correct, respectively; F(1,6) = 39.2, p < .0001). The main effect of acoustic cue type was also significant (F(3,18) = 8.7, p < .001), as was the main effect of trial type (F(2,12) = 57.5, p < .0001). Furthermore, there were significant interactions between linguistic status and trial type (F(2,12) = 19.3, p < .001), acoustic cue type and trial type (F(6,36) = 6.14, p < .001), and a significant 3-way interaction (F(6,36) = 3.63, p < .01). In large part, these interactions resulted from uniformly good accuracy on “same” trials, which was not influenced by linguistic condition or acoustic cue type (see the rightmost column of Table 3). In contrast, accuracy on the different trials was lower for speech than nonspeech stimuli, especially for the minimally different (different-hard) stimuli, and quite low for the spectrally-different speech stimuli (in contrast to very good performance on spectrally different nonspeech stimuli).

Patient NL

Table 4 lists patient NL’s response accuracies (and the accuracy ranges of control subjects) by condition and by trial type. The qualitative pattern of NL’s accuracies was similar to that of the control subjects: high accuracy on the “same” trials, lower accuracy on speech than nonspeech stimuli, and generally lower accuracy on the temporally than spectrally differing stimuli. NL’s performance was within the normal range on all of the same trials. On the different trials, NL performed worse than controls in nearly all speech and nonspeech temporal conditions. And while NL’s performance was not outside the range of control subjects in the most difficult temporal discrimination conditions (i.e., different-hard with short transitions), this does not so much reflect good performance by NL as floor performance of some control subjects in these conditions. On the spectrally different conditions, NL was within the range of controls, with the exception of the minimally different (different-hard) spectral nonspeech condition. It should be noted, however, that he scored near the bottom of the control range on the spectrally different vowel conditions and, again, at least one control in each of these conditions scored at floor.

Table 4.

Mean accuracy rates (percentage correct) for patient NL and accuracy ranges for control subjects (minimum and maximum percentage correct) by condition.

| Task | Discrimination trial type | | | | | |
|---|---|---|---|---|---|---|
| | Different-hard | | Different-easy | | Same | |
| | NL | Control Range | NL | Control Range | NL | Control Range |
| Speech | | | | | | |
| Consonant: short | 0.0 | (0 – 87.5) | 0.0 * | (12.5 – 75) | 100 | (87.5 – 100) |
| Consonant: medium | 0.0 * | (12.5 – 62.5) | 3.1 * | (50 – 100) | 98.4 | (87.5 – 100) |
| Consonant: long | 3.1 * | (12.5 – 87.5) | 6.3 * | (50 – 100) | 100 | (93.7 – 100) |
| Vowel | 3.1 | (0 – 75) | 21.9 | (0 – 75) | 100 | (100 – 100) |
| Nonspeech | | | | | | |
| Tone Sweep: short | 0.0 | (0 – 100) | 0.0 * | (12.5 – 100) | 98.4 | (87.5 – 100) |
| Tone Sweep: medium | 6.3 * | (37.5 – 100) | 9.4 * | (87.5 – 100) | 100 | (81.3 – 100) |
| Tone Sweep: long | 0.0 * | (50 – 100) | 25.0 * | (87.5 – 100) | 98.4 | (75.0 – 100) |
| Tone Pitch | 87.5 * | (100 – 100) | 93.8 | (62.5 – 100) | 96.9 | (87.5 – 100) |

Note: Accuracy rates correspond to correct discriminations in the different-hard and different-easy conditions, and to correct rejections on the no-change trials.

An asterisk (*) indicates NL’s scores that are outside the range of controls’ accuracy rates.
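The asterisk criterion in Table 4 amounts to a simple range check. The sketch below applies it to a few rows of the table; the function name `outside_control_range` is hypothetical.

```python
def outside_control_range(score, control_min, control_max):
    """True if a patient's score falls outside the controls' [min, max] range."""
    return score < control_min or score > control_max

# (condition, NL score, control minimum, control maximum), from Table 4.
rows = [
    ("speech consonant, short, different-hard", 0.0, 0.0, 87.5),
    ("speech consonant, medium, different-easy", 3.1, 50.0, 100.0),
    ("speech vowel, different-easy", 21.9, 0.0, 75.0),
    ("tone pitch, different-hard", 87.5, 100.0, 100.0),
]
for condition, nl, lo, hi in rows:
    flag = "*" if outside_control_range(nl, lo, hi) else ""
    print(f"{condition}: {nl} {flag}")
```

A range-based criterion of this kind is sensitive to floor performance by any single control, which is why a score of 0.0 is not flagged in conditions where at least one control also scored 0.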

Discussion

Although NL has a unilateral lesion, and thus, according to some researchers, might be predicted to show a phonemic form of PWD rather than a prephonemic impairment (e.g., Auerbach et al., 1982), he shows markedly worse performance than controls at the processing of both speech and nonspeech stimuli that differ in rapid temporal features. NL was better able to distinguish between spectrally differing than between temporally differing stimuli; however, he still showed some degree of impairment on pitch discrimination. These findings run contrary to the predictions of a model where the early stages of speech processing occur bilaterally (e.g., Hickok & Poeppel, 2007), in which NL’s undamaged right hemisphere should have been able to perform these discriminations without requiring access to the left hemisphere. Instead, these data support models where the left hemisphere is asymmetrically involved in the early aspects of speech perception.

These data complement two other cases of unilateral PWD showing a deficit in rapid temporal processing (Stefanatos et al., 2005; Wang et al., 2000) and suggest that the distinction between phonemic and prephonemic PWD does not, in fact, correspond with unilateral and bilateral lesions, respectively. Instead, these data fit with the idea that the left hemisphere is specialized for the processing of rapid temporal aspects of sound (Belin et al., 1998; Joanisse & Gati, 2003; Poeppel, 2001; Robin et al., 1990; Stefanatos et al., 2005; Zatorre & Belin, 2001), and that damage to left hemisphere regions alone can lead to deficits in the early stages of speech perception. It should be noted, however, that NL also did poorly on natural speech vowel stimuli relative to controls (see Table 2) and also performed near the bottom of the range for controls for the synthetic vowel stimuli in Experiment 1. His speech perception deficit is therefore not limited to stimuli where the perception of rapid temporal changes is crucial, and it seems evident that at least part of NL’s speech perception deficit is due to something other than a deficit in rapid temporal processing.

One somewhat surprising aspect of these data is that even the non-brain-damaged control participants performed poorly when discriminating temporal contrasts, especially in the more difficult conditions (i.e., those with short transitions and/or minimally different onset frequencies). Note, however, that the performance of these older adults, while relatively poor, is actually quite close to the performance of the college-aged participants reported in Joanisse and Gati (2003). It may also be surprising that control participants showed poor discrimination between the difficult speech conditions and were actually relatively better at discriminating between the difficult nonspeech conditions. One might have predicted the opposite (i.e., better performance in speech than nonspeech conditions) due to categorical perception and other top-down effects on speech sound processing (e.g., Liberman, Harris, Hoffman, & Griffith, 1957; Samuel, 2001). There are at least two explanations for participants’ worse performance on the speech than nonspeech stimuli. One idea is that, while perception of nonspeech stimuli is necessarily an auditory task, speech perception typically integrates stimuli from multiple modalities and so people rarely need rely only on small acoustic contrasts for speech categorization (see Rosenblum, 2008, for a brief review). Because of this, typical speech perception may come to rely somewhat less on pure auditory input than does nonspeech perception. A second factor is that the speech and nonspeech items differed not only with respect to phonetic content, but also in terms of acoustic richness. The speech tokens captured the broadband nature of speech, marked by peaks in acoustic energy across a large range of audible frequencies, whereas the sinewave stimuli were narrowband in nature, consisting of a single peak within a narrow frequency range at each time point. This difference in acoustic complexity of the speech and nonspeech stimuli likely played a role in participants’ relatively poorer performance on the speech sounds.

While data showing a deficit in discriminating speech and nonspeech signals on the basis of rapid temporal cues suggest that this deficit contributes to NL’s speech perception difficulties, stronger evidence would be obtained if we could show that recovery of his ability to process rapid temporal cues leads to an improvement in his speech perception. The next experiment examined the effects of a treatment designed to improve rapid temporal processing. There exist very few attempts to treat patients with PWD, perhaps under the assumption that chronic forms of aphasia are unlikely to improve with treatment, or perhaps just due to the rarity of this condition. Nevertheless, a growing body of work is finding some degree of success in the treatment of chronic aphasia (Moss & Nicholas, 2006; Robey, 1998), and there is at least one treatment program designed specifically to improve auditory temporal acuity (Merzenich et al., 1996), suggesting that a treatment for chronic PWD may have a beneficial effect.

Experiment 2

PWD is not the only deficit that has been claimed to result from problems in the processing of the temporal dynamics of speech. Considerable work has addressed the role of auditory processing speed in developmental language delays and deficits as well (Merzenich et al., 1996; Tallal et al., 1996; see Tallal, 2004, for a review). Furthermore, there is some evidence that these problems can be reduced by training programs that focus on rapid auditory processing skills (Gaab, Gabrieli, Deutsch, Tallal, & Temple, 2007; Stevens, Fanning, Coch, Sanders, & Neville, 2008), although there have been few well-controlled studies (McArthur, 2009). This work has typically used a treatment program marketed as Fast ForWord Language (hereafter FFW; Scientific Learning Corporation, Oakland, CA). FFW is a computerized training program that uses two types of stimuli: one consists of slowed speech stimuli in which the rapid transitional portions are emphasized (amplified), and the other consists of synthesized non-speech stimuli that mimic the types of spectrotemporal changes found in speech.

As indicated previously, a few studies have attempted to treat the speech perception deficit in PWD. Morris et al. (1996) found improved phoneme discrimination performance after training a PWD patient on auditory minimal pair discrimination accompanied by lip reading, but Maneta, Marshall, and Lyndsay (2001) found no improvement in their patient after a similar treatment program. Tessier and colleagues (Tessier, Weill-Chounlamountry, Michelot, & Pradat-Diehl, 2007) report a single-case treatment study of PWD using a phoneme discrimination and identification task paired with visual cues (which were gradually delayed and eliminated), and found that this treatment led to a significant improvement in auditory perception. Thus there is at least some evidence that PWD is amenable to treatment. Given that PWD has been argued to result (at least partially) from a deficit in rapid temporal processing, a treatment specifically targeting temporal acuity would seem to be ideal.

Materials, Design, and Procedure

Pre- and post-testing

NL’s pre- and post-treatment auditory processing ability was assessed with the materials and task reported in Experiment 1, above. Experiment 1 reports aggregate data from the four pre-treatment administrations, and this same task was administered four times after completion of the treatment. Improvement could thus be evaluated not only within the treatment program itself, but also on materials different from those used in the treatment exercises. Although it would also be interesting to evaluate changes in nonlinguistic perception, we did not re-administer the musical perception measures after treatment because of NL’s aversion to these tasks.

Treatment

The treatment used the Fast ForWord–Language system (FFW), a computerized training program that uses acoustically modified speech and non-speech sounds in a computer game format. This treatment program was administered one-on-one by certified FFW coaches (where certification was obtained by successfully completing the online FFW training program) in 90-minute sessions, approximately 4 days per week for 2.5 months, leading to a total of 43 sessions. There are two general types of tasks in the FFW treatment. One type uses non-speech stimuli, and trains participants to categorize increasingly rapidly changing acoustic stimuli (such as tones that rise and fall in pitch). The other type uses speech stimuli that have been temporally extended and amplified to emphasize individual components of the speech signal (especially rapid temporal components), and trains participants to categorize these stimuli in increasingly faster (i.e., less slowed/modified) speech. Examples of both types of tasks can be found in Tallal (2004).

Results

Fast ForWord Exercises

Because the FFW program advances to further levels of difficulty only after a specific level of performance has been reached, progress in FFW exercises was measured as percent complete for a given exercise. (Although measures of compliance with the training schedule and attendance/participation are also measured in the FFW framework, these were deemed less relevant here than in classroom settings.) NL’s performance advancement was quite slow compared to typical results from children, who complete the FFW-Literacy program in an average of 6 to 8 weeks (Borman, Benson, & Overman, 2009); for practical reasons, however, the treatment was considered complete at 10 weeks. At this point, NL had fully completed one of the six exercises (“Galaxy Goal”, which requires the detection of a changed phoneme in a string of speech syllables, e.g., ba, ba, ba, ba, da), and had made slow, but relatively consistent, advances in the other two phoneme discrimination exercises (“Spin Master” at 43% and “Lunar Tunes” at 35% complete), in a word discrimination exercise (“Star Pics” at 26% complete), and in a non-speech task requiring discrimination of rapid sinewave sweeps (“Space Racer” at 21% complete). In contrast, NL made little appreciable progress on the sentence comprehension exercise (“Stellar Stories”; reaching only 9% complete after 10 weeks).

Pre- vs. Post-test Results

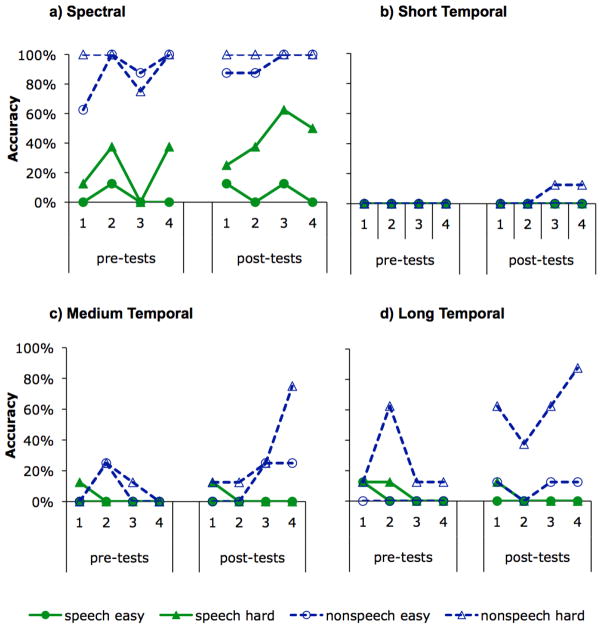

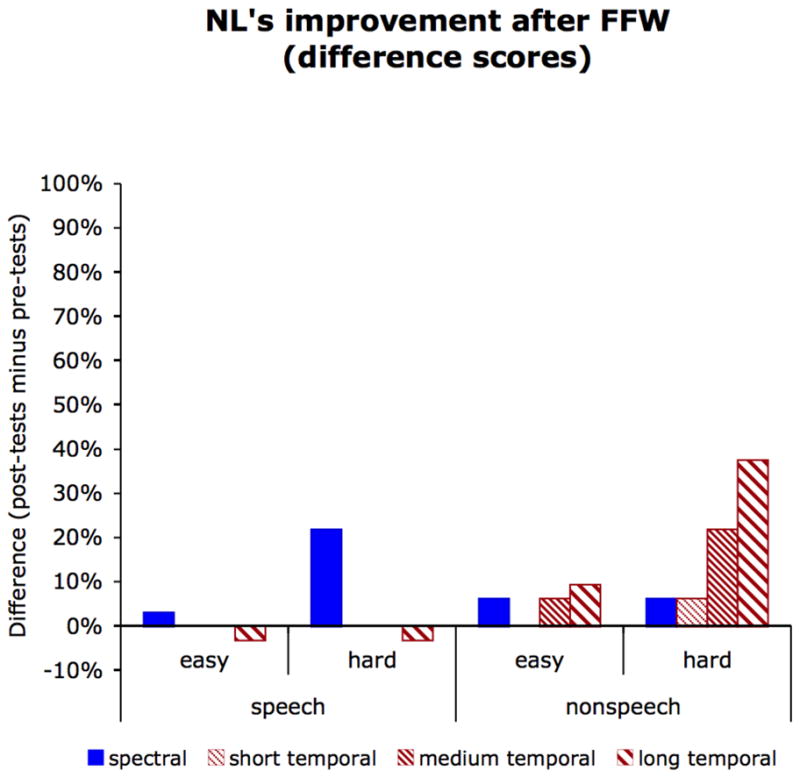

The more critical question with regard to the role of rapid temporal processing in PWD and to the efficacy of FFW in treating deficits in rapid temporal processing is whether NL showed improved ability to distinguish the temporally differing speech and/or nonspeech stimuli described in Experiment 1, above. Figures 4a-4d show NL’s performance across all 8 sessions (4 pre-treatment and 4 post-treatment) for each type of stimulus (spectral, and the three durations of temporally differing stimuli). Figure 5 shows degree of improvement collapsed across the individual pre- and post-treatment assessments.

Figure 4.

NL’s performance on the entire 8 sessions (4 pre-treatment and 4 post-treatment) for: a. spectrally different stimuli, b. temporally different stimuli with short (20 ms) transitions, c. temporally different stimuli with medium (40 ms) transitions, and d. temporally different stimuli with long (60 ms) transitions. Solid green lines represent performance on the synthetic speech stimuli and dashed blue lines represent performance on the nonspeech analogues. Performance on easy (maximally different) pairs is represented with circles and performance on hard (minimally different) pairs is represented with triangles.

Figure 5.

NL’s degree of improvement (post-test minus pre-test scores) collapsed across the individual pre- and post-treatment assessments for speech stimuli and their nonspeech analogues, and for easy (maximally different) and hard (minimally different) contrasts. Improvement in spectral discrimination is shown in solid blue, improvement in temporal discrimination is shown in hashed red, with fine hashing representing performance on stimuli with short (20 ms) transitions, medium hashing representing performance on stimuli with medium (40 ms) transitions, and wide hashing representing performance on stimuli with long (60 ms) transitions.

There is some controversy on how best to evaluate single-subject treatment data: Criticisms have been leveled at the traditional approach of simply visually analyzing graphical data (Matyas & Greenwood, 1990) as well as the use of inferential statistics that are often unjustified given the inherently autocorrelated data in a single-subject design (Robey, Schultz, Crawford, & Sinner, 1999). Therefore statistical significance of pre- to post-treatment changes (collapsed across testing sessions) was evaluated with McNemar’s test of correlated proportions (McNemar, 1947) and with exact (one-tailed) binomial probability calculations (Lowry, 2005).
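
As an illustration of the two tests named above, the following minimal Python sketch computes a McNemar chi-square and an exact one-tailed binomial probability from the counts of discordant item pairs. It is not the authors' analysis code. The function names, the trial-by-trial pairing of pre- and post-treatment responses, and the example counts are assumptions made for illustration only, and the source does not state whether a continuity correction was applied, so it is offered here as an optional flag.

```python
from math import comb, erfc, sqrt


def mcnemar_chi2(b: int, c: int, correction: bool = False) -> float:
    """McNemar chi-square (1 d.f.) from the two discordant-pair counts.

    b: items answered incorrectly before treatment and correctly after
    c: items answered correctly before treatment and incorrectly after
    (this pairing scheme is an assumption, not taken from the paper)
    """
    if b + c == 0:
        raise ValueError("no discordant pairs; the statistic is undefined")
    diff = abs(b - c)
    if correction:
        # Continuity correction; whether the paper used it is not stated.
        diff = max(diff - 1, 0)
    return diff ** 2 / (b + c)


def chi2_p_1df(chi2: float) -> float:
    """Upper-tail p-value of a chi-square statistic with 1 d.f."""
    return erfc(sqrt(chi2 / 2.0))


def binomial_one_tailed(b: int, c: int) -> float:
    """Exact P(X >= b) for X ~ Binomial(b + c, 0.5)."""
    n = b + c
    return sum(comb(n, k) for k in range(b, n + 1)) / 2 ** n


if __name__ == "__main__":
    b, c = 15, 4  # hypothetical counts, NOT NL's data
    chi2 = mcnemar_chi2(b, c)
    print(f"chi2 = {chi2:.2f}, p = {chi2_p_1df(chi2):.3f}")
    print(f"one-tailed binomial p = {binomial_one_tailed(b, c):.4f}")
```

Both tests use only the discordant pairs (items whose outcome changed between pre- and post-test), which is why they suit correlated proportions from a single participant. For a chi-square statistic of 3.77 with 1 d.f., `chi2_p_1df` returns approximately 0.052.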

Visual inspection of Figure 5 suggests that NL showed improvement in the discrimination of easy (i.e., maximally different) rapid temporal contrasts in non-speech stimuli; this effect was significant at the long (60 ms) transition duration (McNemar χ² = 6.55, 1 d.f., p < 0.05; binomial probability = 0.0085) and reached significance at the medium (40 ms) duration in the binomial test only (McNemar χ² = 3.77, 1 d.f., p = 0.052; binomial probability = 0.046). There were no changes for the detection of rapid temporal changes in speech stimuli. Although NL’s discrimination of the easy spectral contrasts in speech was numerically improved, this change reached only marginal statistical significance (McNemar χ² = 3.27, 1 d.f., p = 0.070; binomial probability = 0.059), and no other changes in spectral discrimination reached statistical significance.

Discussion

Although NL does show a deficit in discriminating sounds that differ in terms of rapid temporal transitions (as demonstrated in Experiment 1), his ability to discriminate linguistic stimuli that differ in rapid temporal features was not improved after 10 weeks of intensive FFW treatment. Interestingly, his ability to discriminate between non-speech rapid temporal transitions did improve, at least for relatively slower transitions, suggesting that the FFW exercises were effective in improving rapid temporal processing per se. This is somewhat surprising as only one of the six FFW exercises used here (“Space Racer”) involved tone sweeps analogous to the sounds on which NL improved, and his progress on that particular exercise was modest at best. In contrast, the FFW exercises on which NL made the most progress used synthesized CV stimuli, which are similar to the stimuli on which he showed no appreciable improvement after treatment.

Nevertheless, it is encouraging that NL’s ability to discriminate between some types of perceptually similar stimuli did improve significantly after treatment. This ability to recover (or perhaps re-learn) relatively low-level perceptual abilities supports the idea that some degree of plasticity exists even in adult chronic aphasics (e.g., Byng, Nickels, & Black, 1994; Mitchum, Haendiges, & Berndt, 1995) and is an encouraging finding for the possibility of effective treatments for PWD and other chronic aphasic conditions. However, these data suggest that while it does seem possible to improve rapid temporal discrimination in PWD with intensive training, the benefits do not extend to speech stimuli but rather are confined to the perception of very simple sounds. This further suggests that FFW is not an effective treatment for speech perception deficits, at least as far as PWD is concerned. Of course, it is important to note that FFW was developed as a treatment for language impairment in children, not for PWD, and there are likely to be many differences between developmental language delays and language processing deficits following brain damage.

General Discussion

Patient NL represents a rare prototypical case of PWD, as he shows a very impaired ability to comprehend spoken language despite preserved hearing, reading, speech production, and semantic knowledge. NL also shows a preserved ability to rely on visual speech cues, as evidenced by marked improvements in his comprehension when able to use visual lip-reading. Importantly, NL also shows dissociations between the perception of speech and some other types of complex auditory stimuli, including environmental sounds. As is typically the case with PWD, these speech/non-speech dissociations are not entirely straightforward: while NL was successfully able to analyze musical chords as tuned or mistuned, he performed poorly when discriminating melodic phrases (on the MBEA; Peretz et al., 2003). Whether this reflects problems with the processing of melody or harmonic structure, difficulty with musical memory, or simply is a function of his premorbid lack of musical interest and aptitude is, unfortunately, impossible to tell. Nevertheless, NL’s speech perception impairment does not simply reflect an inability to process all complex sounds, and he thus provides a useful source of information regarding the perceptual and neural mechanisms involved in speech perception.

Although PWD typically results from bilateral temporal damage, NL’s speech perception deficit followed from a unilateral left hemisphere lesion (as is true for approximately thirty percent of PWD cases; Poeppel, 2001), and he thus also offers a window into the role of the left hemisphere in speech perception. The most common explanation for the existence of PWD following a unilateral lesion is that PWD can result when preserved language areas of the left hemisphere are unable to receive auditory input from either hemisphere (Geschwind, 1965; Lichtheim, 1885; Poeppel, 2001; Takahashi et al., 1992). To our knowledge, this is the first study to evaluate this explanation by investigating subcortical white matter tracts from primary auditory areas in the right hemisphere (specifically, from right Heschl’s gyrus) using DTI. A disconnection account of PWD predicts that NL’s deficit reflects damage to cross-hemispheric pathways from right-hemisphere auditory analysis regions. However, NL has relatively robust projections from Heschl’s gyrus through the posterior corpus callosum, a region associated with projections relevant to speech perception in non-brain-damaged individuals (Damasio & Damasio, 1979; Westerhausen et al., 2009). Of course, it is still possible that these pathways are damaged relative to NL’s premorbid state (a problem exacerbated by the fact that cross-hemispheric connectivity appears to be quite variable in the general population; Westerhausen et al., 2009). Nevertheless, this does show that PWD can arise from a unilateral lesion even in the presence of intact cross-hemispheric connectivity, and lends little support to the idea of unilateral PWD as a disconnection syndrome.

Instead, this suggests that NL’s speech perception deficit reflects a problem with an earlier level of auditory analysis that is disproportionally processed in the left hemisphere. Given a number of proposals that the left hemisphere is specialized for rapid temporal processing (whereas the right hemisphere is specialized for the processing of spectral information (e.g., Zatorre & Belin, 2001) or temporal information at a coarser grain (e.g., Poeppel & Monahan, 2008)), one possibility is that NL’s deficit reflects an underlying problem with rapid temporal processing. In fact, these data do show that NL has a deficit in temporal acuity, as he was less able to discriminate between stimuli differing in terms of rapid temporal transitions than between stimuli differing spectrally. Similar to what has been reported in previous case studies of PWD (e.g., Auerbach et al., 1982; Miceli, 1982; Saffran et al., 1976; Wang et al., 2000; Yaqub et al., 1988), NL had more difficulty discriminating contrasts in place of articulation or voicing, in which the differences are largely temporal, than contrasts in manner of articulation or between vowels, in which the acoustic differences are primarily spectral in nature. Importantly, this was also true for carefully controlled synthesized speech and non-speech stimuli, where NL was worse at discriminating temporally differing than spectrally differing stimuli. This shows that his deficit is not specific to speech, but extends to the ability to perceive temporal changes in very simple sounds as well.

NL’s deficit fits with other recent reports of a rapid temporal processing deficit in unilateral PWD (Stefanatos et al., 2005; Wang et al., 2000), but is inconsistent with suggestions that a prephonemic deficit in temporal acuity results from bilateral temporal lesions (e.g., Auerbach et al., 1982). Instead, this and other recent data suggest that a temporal acuity (i.e., prephonemic) deficit may result from a unilateral lesion. Presumably a temporal acuity deficit would also exist in bilateral cases (as bilateral cases also involve left temporal lesions), which implies that both unilateral and bilateral subtypes of PWD may involve underlying perceptual deficits.

Thus, in one sense, these data lend support to the idea that left hemisphere temporal regions are specialized for the processing of rapid temporal aspects of sound (e.g., Boemio et al., 2005; Poeppel & Monahan, 2008; Zatorre & Belin, 2001). However, these data also show that NL’s deficit in speech perception does not reduce to a problem with rapid temporal analysis. Although he was markedly better at discriminating spectral than temporal contrasts (in both natural and synthesized speech), his ability to discriminate between spectrally differing stimuli could hardly be called unimpaired. Furthermore, he consistently showed worse performance on speech than on simple non-speech stimuli, and Experiment 2 showed that an intensive training program focusing on the development of temporal acuity did improve NL’s ability to make rapid temporal discriminations, but only for simple non-speech stimuli.

So what does underlie NL’s speech perception deficit? One possibility is that the left hemisphere is specialized for the processing of complex sounds (where both spectral and temporal complexity are relevant), whereas the right hemisphere preferentially processes stimuli with dynamic pitch information (Scott & Wise, 2004). This would predict that PWD patients with bilateral damage would, unlike NL, have additional impairments in non-complex sound perception, such as with the non-speech spectrally manipulated (tone pitch) stimuli in Experiments 1 and 2 (i.e., the vowel F2 analogues). Although it is unclear exactly what aspects of complex sounds lead to a left-hemisphere bias under this account, it does fit with the fact that NL showed good discrimination of simple stimuli with dynamic pitch changes but poor discrimination of more complex speech sounds. Similarly, that training led to improvements in his ability to discriminate rapid temporal changes in simple sounds, but not in more complex sounds, suggests that some form of auditory complexity plays an important role. This further implies that the left lateralization of speech perception does not simply reflect enhanced temporal acuity of the left hemisphere, but rather a more complex type of specialization.

Still, it is remarkable that the FFW treatment program did lead to at least some types of perceptual improvement in this PWD patient. This is certainly not the first demonstration of treatment efficacy in chronic aphasia (for reviews, see Moss & Nicholas, 2006; Robey, 1998) or even in PWD (Tessier et al., 2007), but is nonetheless notable given the common assumption that most recovery in aphasia occurs only within the first year after stroke onset (Moss & Nicholas, 2006). However, NL’s improvement was restricted to simple sinewave stimuli and did not extend to his ability to discriminate between speech sounds, again suggesting that rapid temporal discrimination, while impaired in PWD, does not fully account for these speech perception deficits. And while the FFW treatment program was not designed for PWD, the fact that improvements from FFW treatment did not lead to improvements in speech perception fits with the relative lack of well-controlled studies showing effectiveness of FFW as a treatment for auditory processing disorders in children (McArthur, 2009).

In sum, this study makes three general points. First, these data add to the evidence that unilateral left hemisphere damage is associated with a deficit in the perception of rapid temporal stimuli. Second, PWD is not well explained by a simple disconnection approach (Geschwind, 1965), as word deafness can emerge from left hemisphere damage even with intact cross-callosal projections from auditory cortex in the right hemisphere. Finally, these data show that temporal processing ability in PWD can be successfully treated with the FFW program, but these improvements in temporal discrimination are limited to non-speech stimuli without corresponding improvements in speech sound discrimination. This, along with the fact that NL also has difficulty with speech stimuli that do not critically rely on rapid temporal formant changes (as do other patients with PWD), suggests that a deficit in temporal acuity is only part of the underlying cause of speech perception problems in PWD. This further suggests that a specialization for rapid temporal analysis is unlikely to be the sole underlying cause of a left hemispheric specialization for speech perception more generally (see also Scott & Wise, 2004), but rather implicates a left hemisphere bias for spectrotemporally complex sounds.

Research Highlights.

A case of unilateral pure word deafness with preserved cross-callosal connectivity

His speech perception problems partially reflect a rapid temporal processing deficit

Treatment can improve temporal acuity, but improvements do not generalize to speech stimuli

Acknowledgments

This work was supported by NIH grants R01 DC-00218 and F32 DC-008723 and by the Maurin fund for Cognitive Psychology at Rice University. MFJ was supported by an operating grant from the Canadian Institute for Health Research.

We thank Phil Burton, Tim Ellmore, and Barbara Fullerton for helpful advice on various aspects of this project, Scientific Learning Corporation for providing the FFW materials, and Sarah Zelonis for administering the FFW treatment.

Footnotes

Other names for this syndrome, such as verbal auditory agnosia, may be preferable; however, PWD seems to be the most commonly used term and so will be used here for the sake of consistency.