Abstract

Speech sounds can be classified on the basis of their underlying articulators or on the basis of the acoustic characteristics resulting from particular articulatory positions. Research in speech perception suggests that distinctive features are based on both articulatory and acoustic information. In recent years, neuroelectric and neuromagnetic investigations have provided evidence for the brain’s early sensitivity to distinctive features and their acoustic consequences, particularly for place of articulation distinctions. Here, we compare English consonants in a Mismatch Field design across two broad and distinct places of articulation, LABIAL and CORONAL, and provide further evidence that early evoked auditory responses are sensitive to these features. We further add to the findings of asymmetric consonant processing, although we do not find support for coronal underspecification. Labial glides (Experiment 1) and fricatives (Experiment 2) elicited larger Mismatch responses than their coronal counterparts. Interestingly, the M100 dipoles of labial and coronal consonants differed along the anterior-posterior dimension of the auditory cortex, a dimension previously found to reflect place of articulation differences spatially. Our results are discussed with respect to acoustic and articulatory bases of featural speech sound classifications and with respect to a model that maps distinctive phonetic features onto long-term representations of speech sounds.

Keywords: Auditory Cortex, Magnetoencephalography, Auditory Evoked Fields, Mismatch Fields, Place of Articulation, Asymmetric Speech Processing, Underspecification

Introduction

Speech sounds can be classified on the basis of their active articulators, pertaining to the aspects of how and where they are produced in the vocal tract. In this respect, manner of articulation (MoA) describes the way by which speech sounds are produced, i.e. with or without constriction in the oral cavity, while place of articulation (PoA) indicates where such a constriction occurs (cf. Ladefoged, 2001; Reetz & Jongman, 2008; Stevens, 1998). For instance, speech sounds produced at or in the vicinity of the lips are referred to as LABIAL sounds (e.g. English [v], [w]), while sounds produced between the alveolar ridge and the hard palate are labeled palato-alveolar, palatal, or more generally, CORONAL (e.g. English [ʒ], [j]). The differences between [v] and [w], and [ʒ] and [j] relate to differences between fricatives with a narrow constriction ([v], [ʒ]) and glides with a vowel-like configuration ([w], [j]). Naturally, these articulator configurations have particular acoustic consequences and characteristics. Together with specific articulator configurations, acoustic cues (“landmarks”, cf. Stevens & Blumstein, 1978; Stevens, 2002) have been taken to describe and classify sets of phonetic and phonological features. Not surprisingly, theories of feature systems differ as to whether the corresponding features are predominantly determined by their underlying articulators (Chomsky & Halle, 1968; Halle, 1983) or by their concomitant acoustic properties (e.g. Stevens, 2002).

A model that takes a rather eclectic position on the form and content of feature definitions, and which makes specific predictions for the mapping of distinctive phonetic features onto long-term memory representations of speech sounds during perception, is the Featurally Underspecified Lexicon model (FUL; Lahiri & Reetz, 2002, 2010). It employs abstract features that may be related to either articulatory or acoustic bases, but not necessarily in an isomorphic way. For instance, the PoA features LABIAL, CORONAL and DORSAL stem from the articulators lips, tongue corona, and tongue dorsum. However, their usage for both vowels and consonants implies a more abstract classification than is found in other theories (e.g. Sagey, 1986). Further, a crucial difference between consonants and vowels according to FUL is that consonantal PoA cannot be simultaneously LABIAL and CORONAL, while LABIAL can be a secondary place of articulation for coronal vowels. LABIAL and DORSAL, on the other hand, can co-occur in both consonants and vowels.

Note that these assumptions regarding abstract phonological features bear on articulatory and acoustic bases: The three-way PoA distinction for consonants reflects their relative positioning in the oral tract, where labial is anterior to coronal, and coronal is anterior to dorsal. Due to the complex acoustic structure of labial consonants (Stevens, 1998), consonantal PoA cannot be related to a single acoustic cue (such as the second formant frequency, F2). The two-way PoA distinction for vowels, between coronal (front) and dorsal (back), reflects the positional involvement of the tongue; its primary acoustic cue is F2. Labiality as a secondary PoA indicates the involvement of the lips and, again, cannot be attributed to a single acoustic cue (such as F1 or F2). Thus, PoA distinctions in FUL are based on abstract features that are related to their articulatory and acoustic bases, but not in an isomorphic or linear way (cf. Stevens, 1989). Finally, FUL assumes coronal underspecification: the long-term memory representation of coronal consonants and vowels has no underlying PoA feature (Lahiri & Reetz, 2002, 2010). Among other phenomena, coronal underspecification accounts for asymmetric assimilation effects, whereby underspecified coronal [n] in lean bacon assimilates to [m] before the labial consonant [b], whereas specified labial [m] in cream dessert does not assimilate to [n]. Although coronals are underspecified in their underlying representation, their acoustic-phonetic surface forms naturally provide cues to their PoA.

In this article, we are interested in neuromagnetic responses to the perception of the consonantal PoA distinction between LABIAL and CORONAL, as defined in FUL. We take FUL as an exemplary framework for this study because the development of the theory has directly engaged with behavioral and neurophysiological investigations and has provided detailed proposals on how distinctive phonetic features map onto the long-term memory representations of speech sounds. The opposition of labial and coronal is especially interesting, since the two features cannot be distinguished by a single acoustic parameter, and because FUL predicts that labial and coronal should show similar perceptual processing across different manners of articulation. We therefore chose to examine the labial-coronal contrast in two different consonantal MoAs. Alternative models might predict that PoA cannot be generalized across MoA, since PoA differences are accompanied by acoustic effects that crucially depend on MoA. Such alternative approaches would focus on acoustic, rather than abstract, feature representations. Finally, FUL also claims that CORONAL is unspecified in the long-term memory representation of consonants and vowels. This should have specific effects on the opposition of these features in neurophysiological experiments, at least when combined with appropriate linking hypotheses (namely, that the MMN standard (see below) is equated with the FUL long-term memory representation).

The methodology employed in these experiments is the Mismatch Negativity (MMN), or its magnetic equivalent, the Mismatch Field (MMF; Näätänen, 2001; Näätänen & Alho, 1997; Näätänen, et al., 1997), a technique previously used to test the mapping of acoustic-phonetic surface features onto underlying language-specific long-term representations and to test specific processing predictions of the FUL model (Eulitz & Lahiri, 2004). A crucial advantage of magnetoencephalography (MEG) is that the recording also allows for source estimation of the underlying brain activity, a measure previously used to test the neuronal basis of PoA distinctions (Obleser, Lahiri, & Eulitz, 2003, 2004); this allows us to compare our results meaningfully with those obtained previously.

Testing features in the brain

Recent behavioral and neurophysiological studies support the proposal of abstract features as units of perception and representation in long-term memory (Lahiri & Reetz, 2002, 2010; Poeppel, Idsardi, & van Wassenhove, 2008; Reetz, 1998, 2000; Stevens, 2005). Coronal underspecification has been successfully tested in both consonants (Friedrich, Lahiri, & Eulitz, 2008; Friedrich, 2005; Ghini, 2001; Lahiri & Marslen-Wilson, 1991; Wheeldon & Waksler, 2004) and vowels (Eulitz & Lahiri, 2004; Lahiri & Reetz, 2002, 2010). MMN/MMF designs for the investigation of coronal underspecification rely on the creation of a central sound representation through the repetitive auditory presentation of the standard. This representation is assumed to reflect language-specific long-term memory representations of single segments (Näätänen, et al., 1997), affixes (Shtyrov & Pulvermüller, 2002a), and words (Pulvermüller, et al., 2001; Pulvermüller, Shtyrov, Kujala, & Näätänen, 2004; Shtyrov & Pulvermüller, 2002b). The infrequently occurring deviant, on the other hand, provides a more surface-faithful (input-driven) representation, involving an acoustic and feature-based mismatch with the standard representation (Eulitz & Lahiri, 2004; Winkler, et al., 1999). The MMN, as an index of automatic change detection in the brain, may additionally be modulated by the featural properties of standard and deviant. In Eulitz & Lahiri (2004), the coronal deviant vowel [ø] preceded by the dorsal standard vowel [o] elicited an earlier and larger MMN than the dorsal deviant [o] preceded by the coronal standard [ø]. Eulitz & Lahiri assumed that in the first case, the dorsal standard [o] activated its fully specified PoA representation. The coronal deviant supplied a mismatching feature ([coronal] vs. [dorsal]), resulting in a larger MMN than in the second case, where the coronal standard activated an underspecified representation for which the dorsal deviant did not supply a mismatching feature ([dorsal] vs. [-]). This principle should hold in general: Coronal deviants differing solely along the PoA dimension from their standards should always elicit larger MMN effects than their non-coronal counterparts.
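To make this linking hypothesis concrete, the following toy Python sketch encodes FUL's ternary comparison (match, mismatch, no-mismatch) between the surface features supplied by a deviant and the stored features activated by a standard, restricted to primary place of articulation. The feature sets, the conflict table, and all function names are illustrative assumptions, not an implementation published with FUL; in particular, the labial secondary articulation of [ø] is ignored here.

```python
# Toy sketch of FUL's ternary matching logic, primary place only.
# All feature inventories below are illustrative assumptions.

# Place features assumed to be mutually exclusive (primary place tier).
CONFLICTS = {
    frozenset({"CORONAL", "DORSAL"}),
    frozenset({"CORONAL", "LABIAL"}),
}

# Surface (acoustically recoverable) primary place features.
SURFACE = {
    "o": {"DORSAL"},
    "ø": {"CORONAL"},  # labial secondary articulation ignored
    "w": {"LABIAL"},
    "j": {"CORONAL"},
}


def stored(segment):
    """Long-term representation: CORONAL is underspecified, i.e. absent."""
    return SURFACE[segment] - {"CORONAL"}


def ful_match(surface, representation):
    """Compare the deviant's surface features with the standard's stored features."""
    if any(frozenset({s, r}) in CONFLICTS for s in surface for r in representation):
        return "mismatch"
    if surface & representation:
        return "match"
    return "no-mismatch"


def predicts_larger_mmn(standard, deviant):
    """Linking hypothesis: the standard activates the stored representation and
    the deviant supplies surface features; a mismatch predicts a larger MMN."""
    return ful_match(SURFACE[deviant], stored(standard)) == "mismatch"


if __name__ == "__main__":
    for std, dev in [("o", "ø"), ("ø", "o"), ("w", "j"), ("j", "w")]:
        print(f"standard [{std}], deviant [{dev}]:",
              ful_match(SURFACE[dev], stored(std)))
```

Applied to the glides, this logic predicts the larger mismatch response for a coronal deviant [j] presented among labial standards [w], which is the prediction tested in Experiment 1.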

While there is evidence that the MMN is also modulated by lexical effects (Menning, et al., 2005; Pulvermüller & Shtyrov, 2006), earlier (i.e. preceding) evoked auditory components can test acoustic-phonetic aspects of speech perception more directly. These components (i.e. the N100m/M100 and M50, cf. Gage, Poeppel, Roberts, & Hickok, 1998; Poeppel, et al., 1996; Roberts, Ferrari, & Poeppel, 1998; Roberts & Poeppel, 1996; Tavabi, Obleser, Dobel, & Pantev, 2007) have been used in neurophysiological investigations of acoustic-phonetic and phonological features (Obleser, Elbert, Lahiri, & Eulitz, 2003; Obleser, Lahiri, et al., 2003, 2004; Tavabi, et al., 2007). We focus on the M100, a component of the evoked magnetic field response with a latency of around 100 ms post stimulus onset. It is considered an indicator of basic speech processing in the auditory cortices (Diesch, Eulitz, Hampson, & Ross, 1996; Shestakova, Brattico, Soloviev, Klucharev, & Huotilainen, 2004) and is reliably elicited by tones, vowels, syllables, and word onsets. The latency and amplitude of the M100 have been shown to correlate with the fundamental frequency of tones (Roberts & Poeppel, 1996; Woods, Alain, Covarrubias, & Zaidel, 1995) and the first formant frequency of vowels (Poeppel & Marantz, 2000; Roberts, et al., 1998). Recent work suggests an additional sensitivity to vowel formant ratios in dense vowel spaces (Monahan & Idsardi, 2010): Larger ratios between the first and third vowel formants (F1/F3) elicited shorter M100 latencies. With respect to PoA, Obleser and colleagues investigated the equivalent current dipoles (ECDs) underlying the M100 response to vowels, consonants, and consonant-vowel syllables. Obleser, Lahiri, et al. (2004) tested seven naturally spoken German vowels and found that dorsal vowels elicited ECDs with a more posterior location in the auditory cortex than coronal vowels.
Intriguingly, the spatial differences held only for the coronal/dorsal comparison, not for the labial-coronal/coronal comparison. This suggests that ECD separation is particularly sensitive to mutually exclusive PoA features (such as coronal and dorsal), which in this case also map onto an acoustic difference in the second formant (F2). The ECD distinction along the anterior-posterior dimension for PoA differences was replicated with CV syllables containing either a dorsal ([o]) or a coronal vowel ([ø]), irrespective of the initial consonant, which was a labial, coronal, or dorsal stop ([b], [d], [g]; Obleser, Lahiri, et al., 2003). Consonantal differences were reflected in ECD source orientations, again distinguishing between coronal and dorsal. Because of potential mutual influences between consonant and vowel in this study, coronal ([t]) and dorsal ([k]) stop consonants were analyzed separately as single segments. To demonstrate that the previous ECD location pattern did not result solely from acoustic spectro-temporal characteristics, Obleser, Scott, & Eulitz (2006) presented dorsal and coronal stops in an intelligible and an unintelligible condition, the latter matched to the former for spectro-temporal complexity and diversity. Notably, dorsal stops elicited more posterior dipoles, in accordance with previous findings, but only in the intelligible condition. Further, the spatial dipole pattern was not affected by the co-occurring voicing feature, which distinguishes between [t,k] and [d,g]. In line with the observation that the M100 can be modulated by top-down processes (Näätänen & Picton, 1987; Näätänen & Winkler, 1999; Sanders, Newport, & Neville, 2002), Obleser, Elbert, & Eulitz (2004) showed that absolute ECD locations differed when attention was shifted away from the linguistic stimulus characteristics, while ECDs to dorsal vowels were still significantly more posterior than ECDs to coronal vowels.
Thus, there is evidence that the spatial alignment of the evoked auditory M100 follows featural PoA distinctions, especially if these are mutually exclusive, which is true for DORSAL and CORONAL for both vowels and consonants in German and in English. Further, the specific spatial arrangement of dorsal and coronal dipoles may actually parallel relative articulatory positions, in that speech sounds produced at more front locations in the vocal tract elicit dipoles that are anterior to those of sounds produced at more back locations. Independent evidence for such an assumption comes from studies showing an articulatory-based alignment of centers of activation in the somatosensory cortex (Picard & Olivier, 1983; Tanriverdi, Al-Jehani, Poulin, & Olivier, 2009). While our experiment is not set up to tease apart articulatory and acoustic accounts of dipole locations in the auditory cortex directly, the somatosensory assumption may be worth pursuing in future research with a better controlled stimulus set.

Place of articulation differences in English consonants

On the basis of previous findings regarding featural speech sound representations, we are interested in the PoA distinction between labial and coronal consonants in American English. This distinction is interesting because, according to FUL, it involves PoAs that are mutually exclusive for consonants, but not for vowels. Note, however, that consonantal PoA features are not always mutually exclusive: one of our stimuli, labial [w], may have DORSAL as a secondary PoA. With respect to the two auditory evoked components, MMF and M100, we expect coronal and labial consonants to yield asymmetries in the MMF and separable ECD locations underlying the M100. In particular, if the relative articulator hypothesis is correct, we expect labial consonant dipoles to be more anterior than coronal dipoles, since labial consonants are produced at a more “front” position in the vocal tract than coronals.

We tested both labial and coronal glides ([w], [j]; Experiment 1) and fricatives ([v], [ʒ]; Experiment 2) for three reasons. First, comparing PoA differences across different MoAs enables us to test whether PoA effects are in fact independent of MoA within the consonants. Second, our selection lets us compare more vowel-like consonants (glides) with less vowel-like consonants (fricatives). Finally, LABIAL and CORONAL oppositions in fricatives have not been systematically tested with neuromagnetic methods before. Table 1 illustrates the experimental material for Experiments 1 and 2.

Table 1.

Design of Experiments 1 and 2. Palatal and palato-alveolar consonants are subsumed under the macro-category CORONAL, while labio-dental and labio-velar consonants are subsumed under the category LABIAL.

| MoA | PoA: LABIAL | PoA: CORONAL | Experiment |
|---|---|---|---|
| glide | [w] | [j] | 1 |
| fricative | [v] | [ʒ] | 2 |

The respective consonants were embedded in V_V contexts, using the vowel [a] in all cases. We opted for naturally spoken /VCV/ stimuli to ensure that the stimuli resembled natural speech as closely as possible. Further, since phonetic research has shown that the initial vowel-consonant transition in VCV sequences is shaped by the final vowel (Oehman, 1966), we made sure that the same vowel preceded and followed the consonant of interest in our stimuli. A constant vowel environment thus ensures that potential co-articulatory effects on the initial vowel can be attributed to the medial consonant of interest, and that differences between responses to initial vowels result from the subsequent consonant rather than from the categorical identity of either vowel.

Experiment 1

Material

For Experiment 1, we chose the labio-velar and palatal glides [w] and [j], and matched their PoA to that of the labio-dental and palato-alveolar fricatives [v] and [ʒ] in Experiment 2. We assign the labio-velars and labio-dentals to the category LABIAL, and likewise the palatals and palato-alveolars to CORONAL.

The consonants of interest were embedded in VCV sequences spoken by a phonetically trained native speaker of English, recorded on a computer with a sampling rate of 44.1 kHz and an amplitude resolution of 16 bits, and further processed in PRAAT (Boersma & Weenink, 2009). Care was taken that the speaker avoided a velar constriction for [w], thus producing a pure labial glide. Stimulus sequences in Experiment 1 consisted of a 190-ms initial vowel, followed by the respective consonant with an average duration of 112 ms. With a final vowel duration of 212 ms on average, the stimuli had an overall duration of 514 ms.

We collected 20 different exemplars of each sequence in order to obtain stimulus material with substantial natural variation and to ensure that standards activated more abstract representations, such that a purely acoustic explanation of the resulting MMF can be excluded (cf. Phillips, et al., 2000). From these 20 exemplars, we chose 10 for each stimulus sequence, with pitch, loudness, and intensity characteristics in a relatively narrow, but nevertheless variable range.

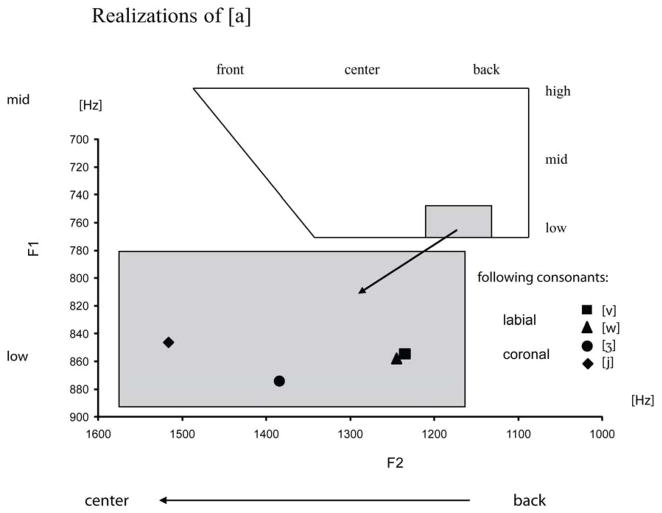

In order to assess the co-articulatory influence of the respective medial consonants on the first vowel [a] of each stimulus, we measured formant frequencies (F1, F2) in the middle of the initial vowels (at 100 ms). Figure 1 illustrates the location of the initial vowels in the F2/F1 vowel space.

Figure 1.

First and second formant values at 100 ms of the initial vowel [a] of each stimulus, depending on the following consonant context. Note that the F1 axis shows only a small portion of the full F1 range, which is indicated in the upper half of the figure.

Statistically, there were no effects on the first formant, F1 (all Fs < 3, n.s., based on Linear Mixed Model analyses; cf. Baayen, 2008; Baayen, Davidson, & Bates, 2008). However, the second formant, F2, differed with the place (F(1,36) = 121.17, p < .001) and manner of articulation (F(1,36) = 13.87, p < .001) of the following consonant. Coronality of the consonant led to a fronting of the initial vowel, i.e. to an increase in its F2 value. Furthermore, there was a significant interaction of place and manner (F(1,36) = 10.21, p < .01), reflecting stronger fronting by coronal glides than by coronal fricatives.

Behavioral task

The stimulus material was tested in a same-different AX discrimination task in which subjects heard every possible opposition of the categorical contrasts (3 × 4) for each exemplar (10) in two repetitions, yielding a total of 240 pairs. Twelve native speakers of American English (6 female; mean age 23) participated in this task; none of them took part in Experiment 1 or Experiment 2.
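The stated trial count can be checked with a short sketch. It assumes that the "3 × 4" oppositions are the 12 ordered pairs obtained by combining each of the four VCV categories of Table 1 with each of the other three; how "same" trials entered the design is not specified here, so the sketch only reproduces the stated arithmetic.

```python
from itertools import permutations

# Four VCV categories from Table 1 (Experiments 1 and 2).
CATEGORIES = ["awa", "aja", "ava", "aʒa"]
N_EXEMPLARS = 10
N_REPETITIONS = 2

# Assumption: "3 x 4" = each of the four categories paired, in order,
# with each of the three other categories (12 ordered pairs).
ordered_pairs = list(permutations(CATEGORIES, 2))

# One trial per ordered pair, exemplar, and repetition.
trials = [
    (first, second, exemplar, rep)
    for first, second in ordered_pairs
    for exemplar in range(N_EXEMPLARS)
    for rep in range(N_REPETITIONS)
]

print(len(ordered_pairs), len(trials))  # 12 240
```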

Subjects’ accuracy, as measured in d′ (Macmillan & Creelman, 2005), reached acceptable values in all conditions (d′ > 1), suggesting that there were no a priori psycho-acoustic differences in discriminability between the stimulus categories.
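For reference, a minimal sketch of a d′ computation using only the Python standard library. It applies the simple yes/no formula d′ = z(H) − z(F), treating "different" responses on different trials as hits and "different" responses on same trials as false alarms, with a log-linear correction for extreme rates. Macmillan & Creelman (2005) also describe models specific to same-different designs (e.g. the differencing model); which model was used here is not stated, so this is an approximation and not the analysis actually performed.

```python
from statistics import NormalDist

_z = NormalDist().inv_cdf  # inverse of the standard normal CDF


def d_prime(hit_rate, fa_rate):
    """Yes/no sensitivity index d' = z(H) - z(F)."""
    return _z(hit_rate) - _z(fa_rate)


def d_prime_from_counts(hits, n_different, false_alarms, n_same):
    """d' with a log-linear correction (add 0.5 to each count and 1 to each
    total), which keeps perfect or zero rates from producing infinite z-scores."""
    hit_rate = (hits + 0.5) / (n_different + 1)
    fa_rate = (false_alarms + 0.5) / (n_same + 1)
    return d_prime(hit_rate, fa_rate)


print(round(d_prime(0.8, 0.2), 3))  # 1.683
```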

Stimulus organization and presentation

Stimuli were organized in a classic many-to-one oddball paradigm. In Experiment 1, the VCV sequences [aja] and [awa] were distributed over two blocks in which they occurred either in standard (p = 0.875, N = 700) or in deviant position (p = 0.125, N = 100). The number of standards between two deviants varied randomly in each block and for each subject. The total number of stimulus presentations per block was 800. The inter-stimulus interval varied randomly between 1200 and 1400 ms. Each block lasted about 20 minutes. Stimulus presentation was controlled with the software package PRESENTATION (Neurobehavioral Systems).
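The randomized ordering can be sketched as follows: a hypothetical generator produces exactly 700 standards and 100 deviants per block, with a random number of standards between deviants, and draws each inter-stimulus interval uniformly from 1200 to 1400 ms. The minimum of two standards before each deviant (`min_gap`) is our assumption; the text only states that the number of intervening standards varied randomly.

```python
import random


def oddball_sequence(n_standard=700, n_deviant=100, min_gap=2, seed=None):
    """Return 'S'/'D' labels with exactly n_standard standards and n_deviant
    deviants, and at least min_gap standards before every deviant.

    min_gap is an illustrative assumption, not a parameter from the study."""
    rng = random.Random(seed)
    free = n_standard - n_deviant * min_gap  # standards left after reserving gaps
    if free < 0:
        raise ValueError("too few standards for the requested min_gap")
    # Stars and bars: choosing n_deviant bar positions among free + n_deviant
    # slots splits the free standards uniformly into n_deviant + 1 runs.
    slots = free + n_deviant
    bars = sorted(rng.sample(range(slots), n_deviant))
    runs, prev = [], -1
    for bar in bars:
        runs.append(bar - prev - 1)
        prev = bar
    runs.append(slots - prev - 1)  # trailing standards after the last deviant

    seq = []
    for extra in runs[:-1]:
        seq.extend(["S"] * (min_gap + extra))
        seq.append("D")
    seq.extend(["S"] * runs[-1])
    return seq


def jittered_isis(n, low_ms=1200.0, high_ms=1400.0, seed=None):
    """Inter-stimulus intervals drawn uniformly from [low_ms, high_ms]."""
    rng = random.Random(seed)
    return [rng.uniform(low_ms, high_ms) for _ in range(n)]


seq = oddball_sequence(seed=42)
isis = jittered_isis(len(seq), seed=42)
print(len(seq), seq.count("D") / len(seq))  # 800 0.125
```

Running the generator with a different seed per block and per subject reproduces the property that the spacing of deviants differs across blocks and participants.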

Subjects and procedure

Fourteen participants (5 female, mean age 23.6, SD 6.4), graduate and undergraduate students of the University of Maryland, College Park, without hearing or neurological impairments, participated for class credit or monetary compensation. They were all native speakers of American English and had not participated in the behavioral same-different task. Participants gave their informed consent and were tested for handedness using the Edinburgh Handedness Inventory (Oldfield, 1971). The exclusion cut-off was 80%; no participant was excluded on this criterion, as all were sufficiently right-handed. Prior to the main experiment, participants’ head shapes were digitized using a POLHEMUS 3 Space Fast Track system. Together with localization data measured from two pre-auricular and three pre-frontal electrodes, these data allowed us to perform the dipole localization analyses described in the Dipole fitting section.
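The handedness criterion can be illustrated with the Edinburgh laterality quotient, LQ = 100 × (R − L)/(R + L), where R and L are the summed right- and left-hand preference scores (Oldfield, 1971). Reading the 80% cut-off as an LQ threshold, and counting a score exactly at the cut-off as passing, are our assumptions.

```python
def laterality_quotient(right, left):
    """Edinburgh laterality quotient in percent: 100 * (R - L) / (R + L)."""
    if right + left == 0:
        raise ValueError("no hand-preference responses")
    return 100.0 * (right - left) / (right + left)


def passes_handedness(right, left, cutoff=80.0):
    # Assumption: the reported 80% cut-off is an LQ threshold, inclusive.
    return laterality_quotient(right, left) >= cutoff


print(laterality_quotient(18, 2))  # 80.0
```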

MEG recording

For the MEG recording, participants lay supine in a magnetically shielded chamber with their heads in a whole-head device of 157 axial gradiometers (Kanazawa Institute of Technology, Kanazawa, Japan). Magnetic fields were recorded with a sampling rate of 500 Hz, a low-pass filter of 200 Hz, and a notch filter of 60 Hz. Before the main experiment, participants were screened with a 2-tone perception task in which they were instructed to silently count a total of 300 high (1000 Hz) and low (250 Hz) sinusoidal tones, occurring in pseudo-random succession. The scalp distribution of the resulting averaged evoked M100 field was consistent with the typical M100 source in the supra-temporal auditory cortex (Diesch et al., 1996). Only participants with a reliable bilateral M100 response were included in further analyses; one participant was excluded on this criterion. Based on the topography of the M100, we selected 10 channels per participant in each hemisphere (5 source, 5 sink), namely those with the strongest average field at the M100 peak.
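A minimal sketch of the channel selection for one hemisphere, assuming the averaged field value of each channel at the M100 peak is available as a dictionary. The function name, the fT unit, and the convention that positive values mark "source" and negative values mark "sink" channels are assumptions for illustration.

```python
def select_channels(peak_field, n_per_polarity=5):
    """Pick the n strongest positive ('source') and n strongest negative
    ('sink') channels at the M100 peak for one hemisphere.

    peak_field: dict mapping channel name -> averaged field (fT) at the peak.
    Returns (sources, sinks), each ordered from strongest to weakest."""
    ranked = sorted(peak_field, key=peak_field.get)  # most negative first
    sinks = [ch for ch in ranked[:n_per_polarity] if peak_field[ch] < 0]
    sources = [ch for ch in reversed(ranked[-n_per_polarity:]) if peak_field[ch] > 0]
    if len(sinks) < n_per_polarity or len(sources) < n_per_polarity:
        raise ValueError("not enough channels of each polarity")
    return sources, sinks
```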

The main experiment consisted of a passive oddball paradigm as described above. Participants were tested in two blocks that differed as to whether [aja] or [awa] occurred in standard or deviant position. If [aja] was standard in block 1, it was deviant in block 2. The block order was counter-balanced across subjects.

To reduce eye movements and keep participants awake, we presented a silent movie during the passive listening task, projected onto a screen approximately 25 cm in front of them. Furthermore, the experimenter provided a short break every 10 minutes. The whole experiment lasted about an hour.

Data Analysis

MEG raw data were filtered with respect to environmental and scanner noise (de Cheveigné & Simon, 2007, 2008). Trial epochs with a length of 800 ms (including a 100-ms pre-stimulus interval) were averaged for each condition using the MegLaboratory software (Kanazawa Institute of Technology, Japan). Artifacts were rejected by visual inspection; the cut-off criteria were amplitudes higher than 3 picotesla (pT) or more than 3 consecutive eye blinks within one epoch. Due to excessive noise and artifacts in the raw data, one participant was excluded from further analyses, since more than 15% of standard and deviant epochs had to be rejected. Averaged data were baseline-corrected and band-pass filtered (0.03–30 Hz). Amplitudes and peak latencies were calculated within pre-defined time windows, using the selected left- and right-hemispheric channels from the 2-tone screening. The time windows were selected based on visual inspection of the grand-averaged data across all subjects and were in accordance with the usual time course of MEG components (Ackermann, Hertrich, Mathiak, & Lutzenberger, 2001). The first window covered the prominent M100 peaks of the initial vowel (75–175 ms). The second window (225–325 ms) included the M100 of the consonant, while the third window covered the mismatch field (350–450 ms, ca. 160–260 ms after consonant onset).
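The epoch-level steps above can be sketched in plain Python. The sketch assumes epochs stored as nested lists (channels × samples, in pT) sampled at 500 Hz from −100 to 698 ms; it implements the 3-pT amplitude criterion, averaging, and baseline correction, but omits the eye-blink criterion, the denoising, and the band-pass filter. It also expresses the analysis windows relative to deviance onset (190 ms). All names are hypothetical.

```python
SFREQ_HZ = 500.0           # sampling rate (MEG recording section)
TMIN_MS = -100.0           # epoch start relative to stimulus onset
REJECT_PT = 3.0            # amplitude rejection threshold in picotesla
DEVIANCE_ONSET_MS = 190.0  # offset of the initial vowel [a]

# Analysis windows in ms relative to stimulus onset.
WINDOWS_MS = {
    "vowel M100": (75.0, 175.0),
    "consonant M100": (225.0, 325.0),
    "MMF": (350.0, 450.0),
}


def sample_index(t_ms):
    """Convert a latency (ms re stimulus onset) to a sample index in the epoch."""
    return round((t_ms - TMIN_MS) * SFREQ_HZ / 1000.0)


def keep_epoch(epoch):
    """Keep an epoch (channels x samples, pT) only if no sample exceeds the threshold."""
    return all(abs(v) <= REJECT_PT for channel in epoch for v in channel)


def average(epochs):
    """Average the accepted epochs sample by sample for each channel."""
    kept = [ep for ep in epochs if keep_epoch(ep)]
    if not kept:
        raise ValueError("all epochs rejected")
    n_ch, n_t = len(kept[0]), len(kept[0][0])
    return [[sum(ep[c][t] for ep in kept) / len(kept) for t in range(n_t)]
            for c in range(n_ch)]


def baseline_correct(evoked):
    """Subtract each channel's mean over the pre-stimulus interval (-100 to 0 ms)."""
    n_base = sample_index(0.0)  # 50 samples at 500 Hz
    corrected = []
    for channel in evoked:
        base = sum(channel[:n_base]) / n_base
        corrected.append([v - base for v in channel])
    return corrected


# Windows expressed relative to deviance (consonant) onset.
RELATIVE_TO_DEVIANCE = {name: (lo - DEVIANCE_ONSET_MS, hi - DEVIANCE_ONSET_MS)
                        for name, (lo, hi) in WINDOWS_MS.items()}
print(RELATIVE_TO_DEVIANCE["MMF"])  # (160.0, 260.0)
```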

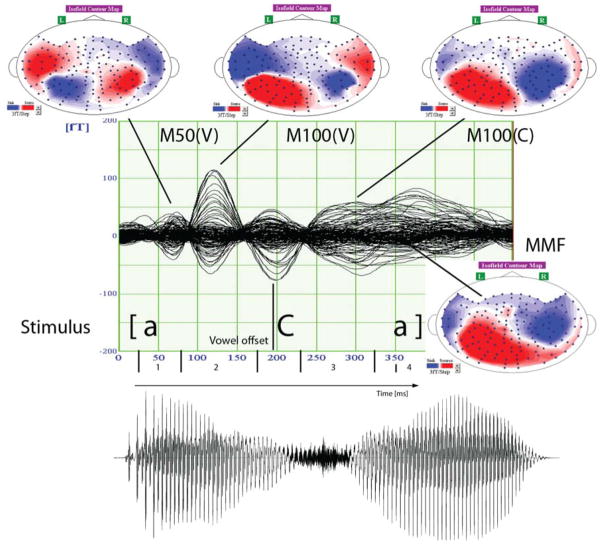

The time course of these windows is illustrated in Figure 2.

Figure 2.

Time course of the MEG responses to the VCV stimuli. The onset of deviance in each stimulus is at the offset of the initial vowel (190 ms). The mismatch field is expected between 160 and 260 ms post onset of deviance, i.e. between 350 and 450 ms post stimulus onset.

Mixed Effect Model analyses with subject as random factor (MEM; Baayen, 2008; Baayen et al., 2008) were calculated with mean magnetic field strength in each of the three time windows as the dependent variable, based on the RMS values for the selected left- and right-hemispheric channels. The fixed effects comprised the within-subject factors position (standard/deviant), word (aja/awa), and hemisphere (left/right). Because of the many-to-one design of an oddball paradigm, we randomly selected the same number of standard epochs as deviant epochs for this analysis, to ensure that the RMS values did not involve unequal variance within the factor position. In the RMS analysis across the three time windows, any difference in mismatch responses between the two stimulus sequences should produce significant word x position interactions. We used the same factors for the analyses with RMS peak latency and peak amplitude as dependent variables.
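The RMS measures and the equal-count subsampling of standards can be sketched as follows. This is an illustration under stated assumptions (nested lists of channels × samples, 500 Hz sampling, 100-ms pre-stimulus interval, hypothetical function names), not the MegLaboratory procedure itself; the mixed-effects models are not reproduced.

```python
import math
import random

SFREQ_HZ = 500.0  # sampling rate
TMIN_MS = -100.0  # epoch start relative to stimulus onset


def sample_index(t_ms):
    """Latency in ms (re stimulus onset) -> sample index."""
    return round((t_ms - TMIN_MS) * SFREQ_HZ / 1000.0)


def rms_over_channels(evoked):
    """Root-mean-square across the selected channels at each sample.

    evoked: list of channels, each a list of samples (e.g. the 10 channels
    chosen for one hemisphere in the 2-tone screening)."""
    n_ch = len(evoked)
    return [math.sqrt(sum(ch[t] ** 2 for ch in evoked) / n_ch)
            for t in range(len(evoked[0]))]


def window_mean(rms, lo_ms, hi_ms):
    """Mean RMS field strength within [lo_ms, hi_ms]."""
    segment = rms[sample_index(lo_ms):sample_index(hi_ms) + 1]
    return sum(segment) / len(segment)


def window_peak(rms, lo_ms, hi_ms):
    """Peak RMS amplitude within the window and its latency in ms."""
    idx = max(range(sample_index(lo_ms), sample_index(hi_ms) + 1),
              key=lambda i: rms[i])
    return rms[idx], TMIN_MS + idx * 1000.0 / SFREQ_HZ


def match_standard_count(standard_epochs, n_deviant, seed=None):
    """Randomly draw as many standard epochs as there are accepted deviants,
    so both levels of 'position' are averaged over equal numbers of trials."""
    return random.Random(seed).sample(standard_epochs, n_deviant)
```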

Dipole fitting

Equivalent current dipole (ECD) fitting was based on 32 channels surrounding the 10 channels selected in the 2-tone screening and followed the procedure of Obleser et al. (2004). ECD modeling used a sphere model fitted to the head shape of each participant. Left- and right-hemispheric dipoles were modeled separately (Sarvas, 1987). Source parameters of the vowel and consonant M100, as well as of the deviants’ mismatch field, were determined as the median of the five best ECD solutions on the rising slope of each component, covering a time range of 10 ms. No solution after the respective peaks was included (cf. Scherg, Vajsar, & Picton, 1990). Only solutions with a goodness of fit better than 90% were used.
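The selection rule for dipole parameters can be sketched as below. Each candidate fit is assumed to be a dictionary with hypothetical keys (`latency_ms`, `gof` as a proportion, plus location or moment parameters such as `x`, `y`, `z`); fits within the 10 ms up to and including the peak and with a goodness of fit above 90% are ranked, and the median of the five best is returned. The dipole fitting itself (sphere model, inverse solution) is not shown.

```python
from statistics import median


def summarize_dipoles(solutions, peak_ms, span_ms=10.0, min_gof=0.90, n_best=5):
    """Median ECD parameters from the best fits on a component's rising slope.

    solutions: list of dicts with 'latency_ms', 'gof' (0-1) and parameter keys
    (hypothetical field names). Fits after peak_ms are never used."""
    rising = [s for s in solutions
              if peak_ms - span_ms <= s["latency_ms"] <= peak_ms
              and s["gof"] > min_gof]
    best = sorted(rising, key=lambda s: s["gof"], reverse=True)[:n_best]
    if not best:
        raise ValueError("no acceptable dipole solutions")
    params = [k for k in best[0] if k not in ("latency_ms", "gof")]
    return {k: median(s[k] for s in best) for k in params}
```

Taking the median rather than the mean keeps a single poorly constrained fit within the window from shifting the summarized location.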

For the statistical analyses, we used the dependent measures dipole intensity, orientation (in the sagittal and axial dimensions), and location in the medial-lateral, anterior-posterior, and inferior-superior dimensions. Within-subject independent factors comprised word and position.

Results

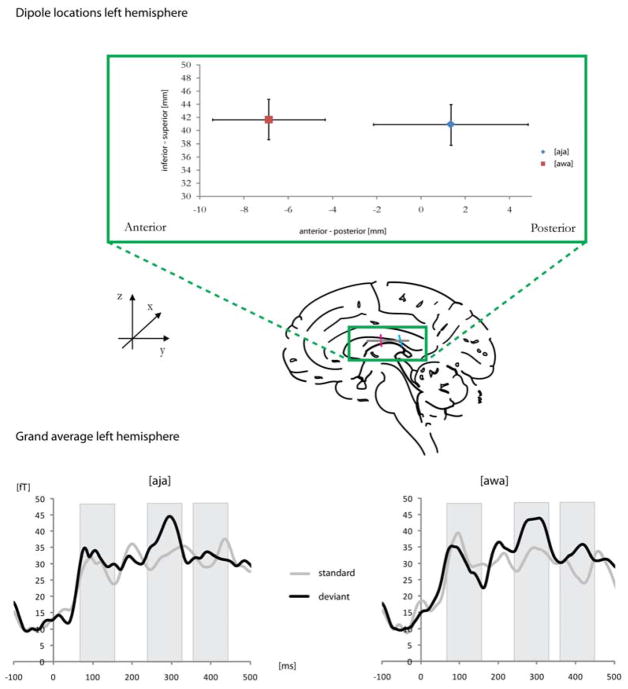

Generally, effects were larger in the left than in the right hemisphere. Standard and deviant responses differed significantly between 350 and 450 ms, i.e. in the MMF window ([aja]: t = 3.69, p < 0.01; [awa]: t = 12.98, p < 0.001; Figure 3, lower panel). In the following, we report significant main effects and interactions involving the factors position and word for all three time windows.

Figure 3.

Dipole locations in the anterior-posterior and inferior-superior plane in the auditory cortex, based on the consonant M100. Grand averages for standards and deviants [aja] and [awa] are plotted in the lower panel.

75–175 ms

No amplitude effects were found in this time frame. The latency analysis revealed an interaction of word x position (F(1,932) = 13.58, p < .001), differing across hemispheres, as seen in the three-way interaction hemisphere x word x position (F(1,932) = 4.03, p < .05). Based on RMS peaks, the standards [aja] and [awa] peaked at the same time, while the deviant [awa] elicited a significantly earlier M100 (t = 3.65, p < .01) in the left hemisphere.

225–325 ms

RMS amplitudes differed between standards and deviants, as seen in a main effect of position (F(1,77) = 16.21, p < .001). This effect was replicated in the peak amplitude analysis (F(1,74) = 8.14, p < .01). RMS peak latencies showed an interaction of position and hemisphere (F(1,74) = 6.13, p < .05), reflecting earlier deviant latencies in the left hemisphere and earlier standard latencies in the right hemisphere.

350–450 ms

The RMS analysis revealed a significant interaction of word x position (F(1,77) = 3.90, p < .05). The difference between standard and deviant was greater for [awa] than for [aja]. Latencies did not differ.

Dipoles

Consonant M100, vowel M100, and MMF dipole parameters were analyzed in MEMs, separately for each hemisphere. In the left hemisphere, the MMF dipole intensity analysis showed a trend toward a word effect in the same direction as in the amplitude analyses: the deviant [awa] produced a stronger mismatch response than the deviant [aja] (F(1,7) = 3.82, p = .11). For the M100 consonant dipole, intensities differed between standard and deviant in the left hemisphere (F(1,24) = 11.28, p < .01); for the M100 vowel dipole, intensities differed in the left (F(1,29) = 23.40, p < .001) as well as in the right hemisphere (F(1,10) = 9.10, p < .05). The dipole latency analysis showed a position effect for the consonant M100 only (F(1,24) = 7.26, p < .01): deviant responses peaked earlier than standard responses.

M100 consonant dipole orientations in the left-hemispheric sagittal plane differed depending on position (standard, deviant) and word ([aja], [awa]), as seen in a significant position x word interaction (F(1,24) = 7.02, p < .05). This was driven by the standard [awa], which showed a more vertical orientation than the other stimuli. Further, the M100 vowel dipole orientation differed across position (F(1,29) = 11.52, p < .01): standards were oriented more horizontally, pointing anteriorly. Finally, dipole locations differed along the anterior-posterior dimension in the left hemisphere. There was a trend toward a position x word interaction for the M100 consonant dipole (F(1,24) = 2.67, p = .11), driven by a more anterior location of the deviant [awa] compared to the deviant [aja] (t = 3.98, p < .05). This interaction was significant for the M100 vowel dipole (F(1,29) = 6.84, p < .05), again reflecting a more anterior location of the deviant [awa] (Figure 3, upper panel).

Discussion

The MMF pattern of Experiment 1 with the English glides [w] and [j] showed an interesting amplitude asymmetry. This asymmetry, however, went in the direction opposite to that predicted by the FUL model. Crucially, while FUL would predict that coronal, underspecified [j] should elicit a larger MMF, we found that the labial, specified [w] in fact elicited larger MMF amplitudes, while latencies did not differ. Hence, our data provide evidence against the predictions of FUL for directionality effects in mismatch experiments: coronals do not always elicit larger MMF amplitudes.

What could have caused the observed asymmetry, if coronal underspecification according to FUL is ruled out? Perhaps the labial deviant recruited more neuronal resources because its articulators (the lips) are further forward in the vocal tract and thus provide additional visual cues (cf. among others Boysson-Bardies & Vihman, 1991; Dorman & Loizou, 1996; MacNeilage, Davis, & Matyear, 1997; Vihman, 1986; Winters, 1999; see General Discussion). Our behavioral data, however, do not support this hypothesis, since discrimination accuracy did not differ between labials and coronals.

With respect to the M100 dipoles, the results of Experiment 1 are in line with the findings of Obleser and colleagues (Obleser, Elbert, et al., 2004; Obleser, Lahiri, et al., 2003, 2004; Obleser, et al., 2006). As in their studies, dipole localizations in Experiment 1 differed along the anterior-posterior axis: deviants with labial [w] produced cortical activation with a more anterior dipole than deviants with coronal [j]. In addition, the consonant dipole orientation for [awa] was more vertical. Note that the particular location of the centers of activation might be interpreted as paralleling somatosensory representations. Places of articulation involving the lips (hence, further “front” in the vocal tract) resulted in more anterior ECDs than places of articulation involving the coronal area of the tongue, which had more posterior ECDs. The relative positions of the dipoles, in combination with the data of Obleser and colleagues, may allow for the interpretation that broad articulatory locations (undoubtedly with very characteristic acoustic consequences) are paralleled in cortical areas. Again, this can only be speculation at this point, since our experiment does not allow for a precise separation of acoustic and articulatory effects, and since a third place of articulation, viz. dorsals, would be needed to support our relative position hypothesis.

The reason we found significant dipole location differences only for deviants may have to do with habituation to the standards (cf. Woods & Elmasian, 1986). Repeated presentation of standard exemplars leads to a reduction in M100 amplitude, paralleling the suppression of activity in response to the predictable somatosensory consequences of one's own speech (Dhanjal, Handunnetthi, Patel, & Wise, 2008). This may introduce more variance in the dipole fitting process, since the surface signal for the inverse solution is less robust.

Taken together, the results of Experiment 1 provide evidence for a featural PoA sensitivity of early auditory evoked responses on the basis of acoustic information, while they fail to support FUL’s coronal underspecification view. If the distinction between labial and coronal consonants rests on robust acoustic and articulatory cues and is in fact salient during early stages of speech perception, we expect to find a similar pattern of responses for consonants with a different MoA. For that reason, we used the fricatives [v] and [ʒ] in Experiment 2, with the same design and setup as in Experiment 1. Note that if the source- and sensor-space patterns of Experiment 1 were driven solely by acoustic or articulatory properties of our stimuli, we would expect a different outcome in Experiment 2, as the place distinctions within fricatives and glides are not tied to the same set of acoustic values. PoA distinctions in fricatives depend less on formant values within the fricatives than on spectral peak locations and spectral moments of the frication noise (Jongman, Wayland, & Wong, 2000). This difference is reflected in the coarticulatory influence of the consonants on the preceding vowels of our stimuli, which was more pronounced for the glides [w] and [j], with their vowel-like formant structure, than for the fricatives [v] and [ʒ], which lack such structure. Note also that under a narrower articulatory classification, [w] and [v], as well as [j] and [ʒ], differ slightly in PoA (e.g. [w] is bilabial, while [v] is labio-dental), while their relative positions within the oral tract (front vs. less front) are preserved. Given the lower acoustic amplitude of fricatives, localization results may show greater variance in Experiment 2.

Experiment 2

Material, subjects, and procedure

In Experiment 2, the VCV sequences [ava] and [aʒa] were distributed over standard and deviant positions as described in Experiment 1. Stimulus durations were similar to those in Experiment 1: a 190-ms initial vowel portion was followed by the respective consonant, with an average duration of 115 ms. Together with an average final vowel duration of 250 ms, the acoustic stimuli had an overall duration of 555 ms.

Fifteen participants (7 female, mean age 21.4, SD 2.6), graduate and undergraduate students at the University of Maryland, College Park, without hearing or neurological impairments, participated for class credit or monetary compensation. All were native speakers of American English and had participated neither in Experiment 1 nor in the behavioral same-different task. Participants gave their informed consent and were tested for handedness using the Edinburgh Handedness Inventory (Oldfield, 1971). The exclusion cut-off was 80%, and all participants scored above this threshold. Two subjects were excluded from further analyses because their two-tone screening test did not allow for a reliable M100 localization; they also had excessive proportions of artifacts in the denoised data.

The experimental procedure, MEG recordings, data analyses, and dipole fittings were identical to Experiment 1.

Results

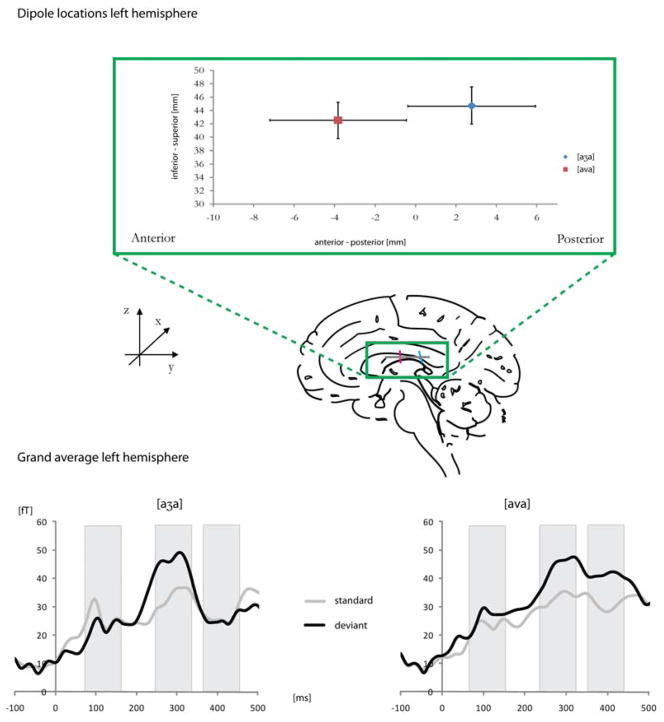

The analysis windows for the amplitude and latency data corresponded to those of Experiment 1 (cf. Figure 2). Again, effects were stronger in the left than in the right hemisphere. Dipole analyses were based on the M100 of the vowel and of the consonant. MMFs, defined as significant differences between deviants and standards of the same VCV sequence, were reliable between 350 and 450 ms after stimulus onset ([ava]: t = 32.26, p < .001; [aʒa]: t = 10.61, p < .01; Figure 4, lower panel). This window was used for the MMF analysis.
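One way to read the identity-based MMF definition above is sketched below in Python: the field is collapsed across sensors into an RMS time course for each condition, and the deviant RMS minus the standard RMS of the same VCV sequence is averaged over the 350–450 ms window. The sampling rate, the epoch start `t0_ms`, and all function names are hypothetical placeholders rather than parameters reported here, and the mixed-effects statistics are not reproduced.

```python
import math

def rms_over_sensors(data):
    """RMS across sensors at each time sample.

    data: list of channels, each a list of field values (one per sample).
    """
    n_channels = len(data)
    return [
        math.sqrt(sum(channel[t] ** 2 for channel in data) / n_channels)
        for t in range(len(data[0]))
    ]

def window_mean(signal, start_ms, end_ms, sfreq=1000.0, t0_ms=0.0):
    """Mean of a sampled time series between start_ms and end_ms (inclusive).

    sfreq: sampling rate in Hz; t0_ms: latency of the first sample relative
    to stimulus onset (both hypothetical defaults).
    """
    step_ms = 1000.0 / sfreq
    i0 = round((start_ms - t0_ms) / step_ms)
    i1 = round((end_ms - t0_ms) / step_ms)
    segment = signal[i0:i1 + 1]
    return sum(segment) / len(segment)

def mmf_mean_amplitude(deviant, standard, start_ms=350, end_ms=450, **kwargs):
    """Identity MMF: RMS of the deviant minus RMS of the standard of the
    same VCV sequence, averaged over the analysis window."""
    dev_rms = rms_over_sensors(deviant)
    std_rms = rms_over_sensors(standard)
    difference = [d - s for d, s in zip(dev_rms, std_rms)]
    return window_mean(difference, start_ms, end_ms, **kwargs)
```

Comparing a sequence with itself in deviant and standard position keeps the acoustic input constant, so a positive window mean reflects the position (deviance) effect rather than stimulus differences.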

Figure 4.

Dipole locations in the anterior-posterior and inferior-superior plane in the auditory cortex, based on the consonant M100. Grand averages for standards and deviants [aʒa] and [ava] are plotted in the lower panel.

75–175 ms

There were no RMS amplitude or latency effects in this time window.

225–325 ms

The RMS amplitude analysis indicated higher deviant than standard amplitudes (F(1,84) = 16.35, p < .001), while the RMS latencies did not differ. The RMS amplitude effect was replicated in the peak amplitude analysis (position: F(1,78) = 16.00, p < .001).

350–450 ms

The RMS amplitude analysis replicated the mean amplitude findings. Crucially, [ava] produced higher amplitudes (F(1,84) = 5.40, p < .05), an effect restricted to the left hemisphere (F(1,84) = 5.52, p < .05). Further, [ava] produced a greater mismatch than [aʒa] (word x position: F(1,84) = 4.58, p < .05), more so in the left than in the right hemisphere. Peak amplitudes showed a similar pattern, except that the word x position interaction was only marginally significant (F(1,83) = 3.09, p = .08). There were no RMS latency effects.
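Peak amplitudes and latencies, as reported for each analysis window, can be obtained by locating the maximum of an RMS time course within the window. The Python sketch below assumes a uniformly sampled RMS waveform; `peak_in_window`, the 1000 Hz default sampling rate, and `t0_ms` are illustrative assumptions, not values taken from this study.

```python
def peak_in_window(signal, start_ms, end_ms, sfreq=1000.0, t0_ms=0.0):
    """Return (peak amplitude, peak latency in ms) of signal within a window.

    signal: uniformly sampled RMS time course; t0_ms: latency of its first
    sample relative to stimulus onset (hypothetical default).
    """
    step_ms = 1000.0 / sfreq
    i0 = round((start_ms - t0_ms) / step_ms)
    i1 = round((end_ms - t0_ms) / step_ms)
    # Index of the maximum inside the window; ties resolve to the earliest sample.
    i_peak = max(range(i0, i1 + 1), key=lambda i: signal[i])
    return signal[i_peak], t0_ms + i_peak * step_ms
```

Applied to the same RMS waveform with the windows 75–175, 225–325, and 350–450 ms, this yields one peak amplitude and one peak latency per window and condition; samples outside the window are ignored even if they are larger.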

Dipoles

Dipole parameters were investigated in MEMs separately for each time frame and hemisphere. For the consonant M100, intensities differed between standards and deviants in the left (F(1,31) = 12.84, p < .01) and right hemisphere (F(1,18) = 23.59, p < .01); for the vowel M100, they differed in the left hemisphere only (F(1,25) = 13.51, p < .01).

Word differences appeared as trends that corresponded to the analyses in sensor space, but they were not significant. Deviants led to earlier consonantal dipole latencies in the left (F(1,31) = 12.50, p < .01) and right hemisphere (F(1,18) = 4.97, p < .05). In the anterior-posterior dimension, dipole locations in the left hemisphere showed trends for [ava] to be more anterior than [aʒa], both for the consonantal M100 dipole (F(1,26) = 1.86, p = .18) and for the vowel M100 dipole (F(1,21) = 3.38, p = .07). For the consonantal dipole, the trend toward a more anterior [ava] dipole held only for the deviant position (t = 3.68, p = .08; Figure 4, upper panel).

Consonantal dipole orientations in the sagittal plane differed between standard and deviant, particularly in the left hemisphere (F(1,26) = 13.08, p < .01). Compared to standard dipoles, deviant dipoles were oriented less vertically, pointing more anteriorly.

Combined analyses

In order to assess the place of articulation asymmetry across Experiment 1 and 2, we performed a combined RMS amplitude and dipole location analysis. The factor place in this analysis comprised the two values coronal (for the stimuli [aja] and [aʒa]) and labial (for [awa] and [ava]). Additionally, we used the between-experiment factor manner (glide, fricative).

The amplitude analysis showed an interaction of place x position for the MMF (F(1,160) = 8.34, p < .01), yielding higher amplitudes for labial deviants. Importantly, there were no significant effects or interactions with manner (all Fs < 1, n.s.).
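The factor coding of the combined analysis can be written out explicitly. The Python sketch below maps each stimulus to place and manner and computes a descriptive place x position contrast from cell means, i.e. the labial MMF (deviant minus standard) minus the coronal MMF, pooled over manner. It illustrates the contrast only, not the mixed-effects F-test reported above; the function name is hypothetical and the cell means in the usage note are invented.

```python
# Coding of the four VCV stimuli into the factors of the combined analysis:
# place (labial/coronal) and the between-experiment factor manner (glide/fricative).
STIMULI = {
    "aja": ("coronal", "glide"),
    "awa": ("labial", "glide"),
    "aʒa": ("coronal", "fricative"),
    "ava": ("labial", "fricative"),
}

def place_by_position_contrast(cell_means):
    """Descriptive place x position contrast from cell means.

    cell_means maps (stimulus, position) -> mean amplitude, with position
    in {"standard", "deviant"}. Returns the MMF (deviant - standard) per
    place, averaged over manner, and labial MMF minus coronal MMF.
    """
    mmf_by_place = {"labial": [], "coronal": []}
    for stimulus, (place, _manner) in STIMULI.items():
        mmf = cell_means[(stimulus, "deviant")] - cell_means[(stimulus, "standard")]
        mmf_by_place[place].append(mmf)
    pooled = {place: sum(v) / len(v) for place, v in mmf_by_place.items()}
    return pooled, pooled["labial"] - pooled["coronal"]
```

With invented means of 1.0 for every standard, 3.0 for both labial deviants, and 2.0 for both coronal deviants, the pooled MMFs are 2.0 (labial) and 1.0 (coronal), and the contrast is 1.0; a positive contrast corresponds to the direction reported here (higher amplitudes for labial deviants).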

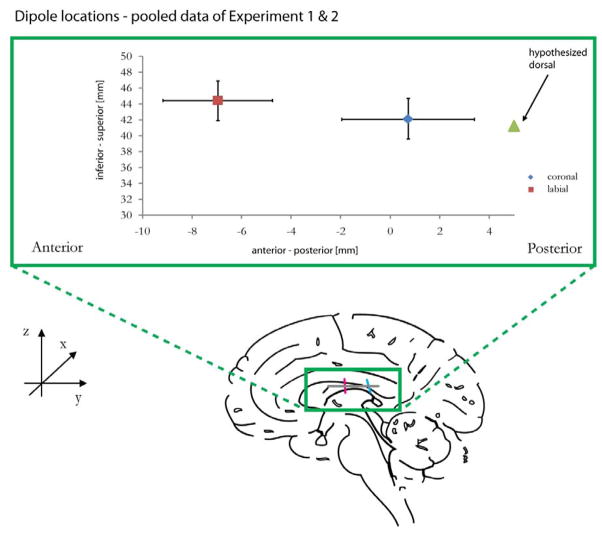

The left-hemispheric dipole location analysis showed a significant interaction of position x place for the consonantal dipole (F(1,49) = 4.24, p < .05). Crucially, labial deviants were located at more anterior locations compared to all other stimuli (cf. Figure 5). These results were independent of MoA, as revealed by a lack of significant main effects or interactions involving the factor manner (all Fs < 1, n.s.).

Figure 5.

Dipole locations in the anterior-posterior dimension for the consonant M100, pooled data from Experiment 1 & 2. Labial deviants were located more anteriorly than coronal deviants. Based on Obleser et al. (2004), we hypothesize that dorsal (i.e. velar, uvular, etc.) consonants would be located about 2.5 mm posterior to coronal consonants.

Interestingly, the vowel dipole showed a main effect for place (F(1,48) = 8.20, p < .01, cf. Figure 5). Again, the labial-coronal relation was preserved: If the vowel was followed by a labial consonant, its ECD was at more anterior locations than if it was followed by a coronal consonant. There was a trend for an interaction with position (F(1,48) = 2.76, p = 0.09), showing that the location difference between labials and coronals in the anterior-posterior dimension was greater for deviants than for standards. Again, this pattern of results was independent of MoA.

We also tried to account for the dipole localizations with a regression of the anterior-posterior dipole positions on the F2/F1 ratio. This ratio correlated positively with the anterior-posterior location of the corresponding dipole (r = 0.38, p < .05). However, a comparison between this acoustic model (with F2/F1 as predictor) and a model that additionally included the factor place (labial/coronal) yielded a significantly better fit for the latter (L-ratio = 7.48, p < .01; cf. Pinheiro & Bates, 2000).
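The two analytic steps in the paragraph above, a correlation of the F2/F1 ratio with dipole location and a likelihood-ratio comparison of nested models, are sketched below in plain Python. The sketch assumes that the two models differ by exactly one parameter (the two-level factor place), so the L-ratio is referred to a chi-square distribution with 1 df; following Pinheiro & Bates (2000), models that differ in their fixed effects should be compared using maximum-likelihood rather than REML fits. All function names are hypothetical, and the log-likelihoods in the usage note are invented so as to reproduce an L-ratio of 7.48.

```python
import math

def f2_f1_ratio(f1_hz, f2_hz):
    """Ratio of the second to the first formant frequency (both in Hz)."""
    return f2_hz / f1_hz

def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def likelihood_ratio_test_1df(loglik_reduced, loglik_full):
    """Likelihood-ratio test for two nested models differing by one parameter.

    Both log-likelihoods should come from maximum-likelihood (not REML) fits
    when the models differ in their fixed effects.
    Returns (L-ratio, p), with p from a chi-square distribution with 1 df.
    """
    l_ratio = max(0.0, 2.0 * (loglik_full - loglik_reduced))
    # For 1 df, P(chi2 > L) = erfc(sqrt(L / 2)).
    p_value = math.erfc(math.sqrt(l_ratio / 2.0))
    return l_ratio, p_value
```

For example, `likelihood_ratio_test_1df(-100.0, -96.26)` returns an L-ratio of about 7.48 with p < .01. The correlation step alone cannot decide between the acoustic and the categorical account; the nested-model comparison asks whether place explains location variance beyond what F2/F1 already captures.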

Discussion

The most important result of Experiment 2 is that it replicated the sensor- and source-space pattern of Experiment 1: the labial deviant [ava] elicited larger MMF responses than the deviant [aʒa]. This outcome is again not in accordance with FUL and again disconfirms its directionality prediction for the MMF response.

In source space, ECDs for coronal [ʒ] and labial [v] differed on the anterior-posterior axis in a similar way as in Experiment 1, although there was only a trend for [v] to be located more anteriorly than [ʒ]. We attribute this weaker effect to the lower acoustic amplitude of fricatives compared to glides.

Combined ECD analyses of Experiment 1 and 2 showed that the difference in dipole location along the anterior/posterior axis was robust: Labials elicited dipoles with more anterior locations than coronals. This spatial location was independent of MoA: Anterior-posterior locations did not differ between [w] and [v] or [j] and [ʒ]. A statistical model comparison showed that although the ratio of F2 and F1 is the best predictor for an acoustic model regarding the ECD location along the anterior/posterior axis, a model with the additional fixed effect place (labial/coronal) provided a better fit to the location data. This can be interpreted as evidence for top-down categorical influence on the acoustically driven dipole location in auditory cortex. Intriguingly, the combined analysis showed the same effect for the vowel ECD location. This suggests coarticulatory effects, whereby the acoustic cues for labiality or coronality spread onto the initial vowel (cf. Figure 1). Note that this vowel was consistently [a], such that any response differences must be attributed to the following consonants.

The repercussions of these findings and potential subsequent investigations are considered in the General Discussion.

General Discussion

The main result of our experiments is that the classification of speech sounds according to their place of articulation is reflected in early pre-attentive brain magnetic measures as well as in the spatial alignment of the corresponding centers of activity in the auditory cortex. The fact that we found the same pattern of results for different manners of articulation suggests that PoA information is in fact abstract, in the sense that it does not refer to a single acoustic difference (e.g. within the glides), but rather to several acoustic differences underlying the respective PoA. For this reason, a featural approach that defines features entirely on the basis of their acoustics cannot satisfactorily account for our data. At the same time, we do not deny the importance of acoustic information in our experiments. On the basis of our M100 dipole findings, we propose that the brain’s sensitivity to featural long-term representations is initially based on acoustic information. This acoustic classification is then modulated or warped towards cognitive categories that may be expressed as abstract phonological features or by reference to broad articulatory positions, such as labial and coronal. These PoAs can be considered “preferred” articulator positions within which acoustic correlates provide robust cues for feature-based speech sound distinctions. If we take previous ECD findings into consideration (Obleser, Elbert, et al., 2004; Obleser, Lahiri, et al., 2003, 2004; Obleser, et al., 2006), the relative positioning of dipoles elicited by labials, coronals, and dorsals is such that, from anterior to posterior, labials precede coronals, and coronals precede dorsals. Again, we are aware that this linear hypothesis must be tested in an experiment with all three PoAs, but we consider it a sensible conjecture, since the sequence labial-coronal-dorsal describes a relative order of articulatory positions commonly used for phonetic descriptions cross-linguistically (cf. Ladefoged, 2001). Further, the alignment of lip and tongue positions on the sensory strip in the somatosensory cortex (Picard & Olivier, 1983; Tanriverdi, et al., 2009) is reminiscent of the proposed alignment of articulatory positions in the auditory cortex. We hypothesize that the somatosensory sequence is paralleled in the auditory cortex, an assumption that is also plausible given the relatively robust communication between primary somatosensory cortex and superior temporal areas of the two cortices.

We are aware that ECD locations in the auditory cortex primarily reflect the acoustic properties of the experimental stimuli. In this respect, previous research has established tonotopy in the auditory cortex (cf. Diesch & Luce, 1997; Huotilainen, et al., 1995; Langner, Sams, Heil, & Schulze, 1997; Pantev, et al., 1988; Pantev, Hoke, Lutkenhoner, & Lehnertz, 1989; Tiitinen, et al., 1993). Pantev et al. (1989) showed that M100 dipoles elicited by tones with a high (subjective) pitch were located more medially than those elicited by low-pitch tones, which were located more laterally in the axial plane. Similar results were reported by Huotilainen et al. (1995) for magnetic responses (M100 and sustained fields) from the supratemporal plane of the auditory cortex. Further research showed that more complex (and more speech-like) stimuli yielded dipole arrangements that differed along dimensions other than the tonotopic axial plane observed for pure tones (Diesch & Luce, 2000; Mäkelä, Alku, & Tiitinen, 2003; Obleser, Elbert, et al., 2003; Obleser, Lahiri, et al., 2003, 2004; Shestakova, et al., 2002; Shestakova, et al., 2004). Diesch & Luce (2000) demonstrated that vowels with high-frequency formants elicited magnetic responses with dipoles located more anteriorly than dipoles of vowels with low-frequency formants. This is reminiscent of the findings by Obleser and colleagues (Obleser, Lahiri, et al., 2003, 2004), where coronals, which were found to elicit more anterior ECDs, correspond to high F2 values. Interestingly, Mäkelä et al. (2003) showed that labial vowels led to more anterior dipole localizations than non-labial vowels. Acoustically, their vowels differed primarily in the first formant, which suggests that the acoustic distinction between labial and coronal might be based on the ratio of F2 and F1.
Note that the lower frequency bands may provide cues for PoA distinctions even in fricatives, where the most salient cues are spectral peak locations and spectral moments in higher frequency bands (Jongman, et al., 2000). Formant structures of glides, on the other hand, resemble those of their closest vowels (cf. Stevens, 1998), so that we were able to use the F2/F1 ratio as an estimator of the consonant dipole location in the auditory cortex; this ratio correlated with the anterior-posterior location of the corresponding dipole. This does not contradict our positional hypothesis, according to which relations between articulatory locations are reflected in the auditory cortex; rather, the F2/F1 ratio appears to be the most robust acoustic cue for the articulator positions LABIAL and CORONAL. Importantly, this cue crucially depends on linguistic intelligibility, as suggested by previous work (Obleser, et al., 2006), which strengthens the view that this acoustic-to-articulator mapping (i.e. F2/F1 to PoA) is a solid basis for distinctive feature theory. We do not consider the positional hypothesis, albeit not directly tested in our experiments, a superfluous assumption, since the converging evidence for an articulator-based ECD ordering and our model comparison suggest that a purely acoustic account of the dipole locations is not as good as an account comprising categorical, articulatory information. We agree with Obleser et al. (2004) that relations between speech sounds may be warped towards a representation of perceptually salient contrasts, rather than towards a representation of linear acoustic distances. We further speculate, against a simplistic reading of our behavioral results, that the labial glides and fricatives in our experiment were perceptually more salient due to their visual cues, which in turn may have caused their stronger MMF responses.

Our view that the featural basis comprises both acoustic and articulatory information is supported by our coarticulatory finding: the vowel M100 dipole locations followed the same pattern as the consonants. As seen in Figure 1, fricatives and especially glides exerted a measurable coarticulatory influence on the initial vowel of our stimuli. This influence is expressed in slight changes of F2 and F1 but did not change the vowel category (i.e. the [a] did not change to [e]). Apparently, this slight acoustic change conveyed information about the articulator movements responsible for producing the upcoming consonants.

Regarding lexical feature specifications, our experiments provided no new evidence for featural underspecification as proposed by Lahiri & Reetz (2002). In sensor space, the MMF in our MEG study was consistently stronger if the deviants had a labial place of articulation; in other words, the contrast between a coronal standard and a labial deviant led to a stronger brain magnetic response than vice versa. Since coronals are assumed to be underspecified while labials are specified, our outcome is inconsistent with the findings and proposed explanation of Eulitz & Lahiri (2004). It is worth noting, however, that we used different segment types (consonants instead of vowels) and a different language (English instead of German), which limits the strict comparability of the results. We leave it open for future research whether the theoretical assumptions regarding the PoA of English glides and fricatives have to be revised, or whether the Mismatch Negativity is not the best or most direct way to assess the assumption of coronal underspecification for consonants. Note that previous work on vowels showed Mismatch Negativity results that were compatible with FUL’s predictions (Eulitz & Lahiri, 2004; Scharinger, Eulitz, & Lahiri, 2010). At this point we cannot completely reconcile these findings; a synthesis subsuming both sets of results will almost certainly require additional MMN studies.

Regarding latencies, our data are less consistent and showed no effects for PoA. We attribute this to the selection of naturally spoken VCV stimuli, where acoustic and co-articulatory cues might have become available at variable times so that we cannot provide a concise account of the time course of feature processing and integration. Interestingly, the coronal fricative showed earlier latencies in the consonantal M100 and mismatch field time windows, which could have resulted from an earlier availability of spectral energy at the consonant onset.

Taken together, our data indicate that the brain uses feature-based categories to structure the phoneme inventory of a given language (here: American English). The responses in source and sensor space appeared to be driven primarily by acoustic information and modulated by categorical top-down influences. We suggest that the features labial and coronal circumscribe particular articulatory configurations that result in robust acoustic cues across a range of manners of articulation. Our MMF results support the assumption that LABIAL and CORONAL are featurally distinct PoAs for consonants. The corresponding ECDs of their evoked auditory M100 responses differed predominantly along the anterior/posterior axis in the auditory cortex. Thus, during early stages of speech perception, the brain keeps robust acoustic information arising from distinct articulator configurations spatially separate.

Acknowledgments

We would like to thank David Poeppel, Diogo Almeida, and Philip Monahan for helpful comments on earlier versions of this manuscript.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Ackermann H, Hertrich I, Mathiak K, Lutzenberger W. Contralaterality of cortical auditory processing at the level of the M50/M100 complex and the mismatch field: A whole-head magnetoencephalography study. Neuroreport. 2001;12(8):1683–1687. doi: 10.1097/00001756-200106130-00033. [DOI] [PubMed] [Google Scholar]

- Baayen H. Analyzing Linguistic Data: A Practical Introduction to Statistics using R. Cambridge: Cambridge University Press; 2008. [Google Scholar]

- Baayen H, Davidson DJ, Bates DM. Mixed-effects modeling with crossed random effects for subjects and items. Journal of Memory & Language. 2008;59(4):390–412. [Google Scholar]

- Baayen H, Piepenbrock R, Gulikers L. The CELEX Lexical Database [CD-Rom] Philadelphia, PA: Linguistic Data Consortium, University of Pennsylvania; 1995. [Google Scholar]

- Boersma P, Weenink D. PRAAT: Doing Phonetics by Computer (ver. 5.1.0) Amsterdam: Institut for Phonetic Sciences; 2009. [Google Scholar]

- Bonte ML, Mitterer H, Zellagui N, Poelmans H, Blomert L. Auditory cortical tuning to statistical regularities in phonology. Clinical Neurophysiology. 2005;116:2765–2774. doi: 10.1016/j.clinph.2005.08.012. [DOI] [PubMed] [Google Scholar]

- Bonte ML, Poelmans H, Blomert L. Deviant neurophysiological responses to phonological regularities in speech in dyslexic children. Neuropsychologia. 2007;45(7):1427–1437. doi: 10.1016/j.neuropsychologia.2006.11.009. [DOI] [PubMed] [Google Scholar]

- Boysson-Bardies B, Vihman MM. Adaptation to language: Evidence from babbling and first words in four languages. Language. 1991:297–319. [Google Scholar]

- Chomsky N, Halle M. The Sound Pattern of English. New York: Harper and Row; 1968. [Google Scholar]

- de Cheveigné Ad, Simon JZ. Denoising based on time-shift PCA. Journal of Neuroscience Methods. 2007;165(2):297–305. doi: 10.1016/j.jneumeth.2007.06.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- de Cheveigné Ad, Simon JZ. Sensor noise suppression. Journal of Neuroscience Methods. 2008;168(1):195–202. doi: 10.1016/j.jneumeth.2007.09.012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dhanjal NS, Handunnetthi L, Patel MC, Wise RJS. Perceptual systems controlling speech production. Journal of Neuroscience. 2008;28(40):9969–9969. doi: 10.1523/JNEUROSCI.2607-08.2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Diesch E, Eulitz C, Hampson S, Ross B. The neurotopography of vowels as mirrored by evoked magnetic field measurements. Brain and Language. 1996;53(2):143–168. doi: 10.1006/brln.1996.0042. [DOI] [PubMed] [Google Scholar]

- Diesch E, Luce T. Magnetic fields elicited by tones and vowel formants reveal tonotopy and nonlinear summation of cortical activation. Psychophysiology. 1997;34(5):501–510. doi: 10.1111/j.1469-8986.1997.tb01736.x. [DOI] [PubMed] [Google Scholar]

- Diesch E, Luce T. Topographic and temporal indices of vowel spectral envelope extraction in the human auditory cortex. Journal of Cognitive Neuroscience. 2000;12(5):878–893. doi: 10.1162/089892900562480. [DOI] [PubMed] [Google Scholar]

- Dorman MF, Loizou PC. Relative spectral change and formant transitions as cues to labial and alveolar place of articulation. The Journal of the Acoustical Society of America. 1996;100:3825–3825. doi: 10.1121/1.417238. [DOI] [PubMed] [Google Scholar]

- Eulitz C, Lahiri A. Neurobiological evidence for abstract phonological representations in the mental lexicon during speech recognition. Journal of Cognitive Neuroscience. 2004;16(4):577–583. doi: 10.1162/089892904323057308. [DOI] [PubMed] [Google Scholar]

- Friedrich C, Lahiri A, Eulitz C. Neurophysiological evidence for underspecified lexical representations: Asymmetries with word initial variations. Journal of Experimental Psychology: Human Perception & Performance. 2008;34(6):1545–1559. doi: 10.1037/a0012481. [DOI] [PubMed] [Google Scholar]

- Friedrich CK. Neurophysiological correlates of mismatch in lexical access. BMC Neuroscience. 2005;6:64. doi: 10.1186/1471-2202-6-64. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gage N, Poeppel D, Roberts TP, Hickok G. Auditory evoked M100 reflects onset acoustics of speech sounds. Brain Research. 1998;814(1–2):236–239. doi: 10.1016/s0006-8993(98)01058-0. [DOI] [PubMed] [Google Scholar]

- Ghini M. Asymmetries in the Phonology of Miogliola. Berlin: Mouton de Gruyter; 2001. [Google Scholar]

- Halle M. On the origin of the distinctive features. International Journal of Slavic Linguistics and Poetics. 1983;27(Supplement):77–86. [Google Scholar]

- Hume E, Johnson K, Seo M, Tserdanelis G, Winters S. A cross-linguistic study of stop place perception. Paper presented at the Proceedings of the XIVth International Congress of Phonetic Sciences.1999. [Google Scholar]

- Huotilainen M, Tiitinen H, Lavikainen J, Ilmoniemi RJ, Pekkonen E, Sinkkonen J, et al. Sustained fields of tones and glides reflect tonotopy of the auditory cortex. NeuroReport. 1995;6(6):841–841. doi: 10.1097/00001756-199504190-00004. [DOI] [PubMed] [Google Scholar]

- Jongman A, Wayland R, Wong S. Acoustic characteristics of English fricatives. The Journal of the Acoustical Society of America. 2000;108:1252–1252. doi: 10.1121/1.1288413. [DOI] [PubMed] [Google Scholar]

- Ladefoged P. A Course in Phonetics. 4. Fort Worth: Harcourt College Publishers; 2001. [Google Scholar]

- Lahiri A, Marslen-Wilson WD. The mental representation of lexical form: A phonological approach to the recognition lexicon. Cognition. 1991;38:245–294. doi: 10.1016/0010-0277(91)90008-r. [DOI] [PubMed] [Google Scholar]

- Lahiri A, Reetz H. Underspecified recognition. In: Gussenhoven C, Warner N, editors. Laboratory Phonology VII. Berlin: Mouton de Gruyter; 2002. pp. 637–677. [Google Scholar]

- Lahiri A, Reetz H. Distinctive features: Phonological underspecification in representation and processing. Journal of Phonetics. 2010;38:44–59. [Google Scholar]

- Langner G, Sams M, Heil P, Schulze H. Frequency and periodicity are represented in orthogonal maps in the human auditory cortex: Evidence from magnetoencephalography. Journal of Comparative Physiology A: Neuroethology, Sensory, Neural, and Behavioral Physiology. 1997;181(6):665–676. doi: 10.1007/s003590050148. [DOI] [PubMed] [Google Scholar]

- Macmillan NA, Creelman CD. Detection Theory: A User’s Guide. Mahwah, NJ: Erlbaum; 2005. [Google Scholar]

- MacNeilage PF, Davis BL, Matyear CL. Babbling and first words: Phonetic similarities and differences. Speech Communication. 1997;22(2–3):269–277. [Google Scholar]

- Mäkelä AM, Alku P, Tiitinen H. The auditory N1m reveals the left-hemispheric representation of vowel identity in humans. Neuroscience Letters. 2003;353(2):111–114. doi: 10.1016/j.neulet.2003.09.021. [DOI] [PubMed] [Google Scholar]

- Menning H, Zwitserlood P, Schöning S, Hihn H, Bölte J, Dobel C, et al. Pre-attentive detection of syntactic and semantic errors. Neuroreport. 2005;16(1):77–80. doi: 10.1097/00001756-200501190-00018. [DOI] [PubMed] [Google Scholar]

- Monahan P, Idsardi WJ. Auditory sensitivity to formant ratios: Toward an account of vowel normalization. Language and Cognitive Process. 2010;25(6):808–839. doi: 10.1080/01690965.2010.490047. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Näätänen R. The perception of speech sounds by the human brain as reflected by the mismatch negativity (MMN) and its magnetic equivalent (MMNm) Psychophysiology. 2001;38:1–21. doi: 10.1017/s0048577201000208. [DOI] [PubMed] [Google Scholar]

- Näätänen R, Alho K. Mismatch negativity (MMN) - the measure for central sound representation accuracy. Audiology and Neurotology. 1997;2:341–353. doi: 10.1159/000259255. [DOI] [PubMed] [Google Scholar]

- Näätänen R, Lehtokoski A, Lennes M, Cheour M, Huotilainen M, Ilvonen A, et al. Language-specific phoneme representations revealed by electric and magnetic brain responses. Nature. 1997;385:432–434. doi: 10.1038/385432a0. [DOI] [PubMed] [Google Scholar]

- Näätänen R, Paavilainen P, Rinne T, Alho K. The mismatch negativity (MMN) in basic research of central auditory processing: A review. Clinical Neurophysiology. 2007;118(12):2544–2590. doi: 10.1016/j.clinph.2007.04.026. [DOI] [PubMed] [Google Scholar]

- Näätänen R, Picton T. The N1 wave of the human electric and magnetic response to sound: A review and an analysis of the component structure. Psychophysiology. 1987;24(4):375–425. doi: 10.1111/j.1469-8986.1987.tb00311.x. [DOI] [PubMed] [Google Scholar]

- Näätänen R, Winkler I. The concept of auditory stimulus representation in cognitive neuroscience. Psychological Bulletin. 1999;125:826–859. doi: 10.1037/0033-2909.125.6.826.

- Obleser J, Elbert T, Eulitz C. Attentional influences on functional mapping of speech sounds in human auditory cortex. BMC Neuroscience. 2004;5:24. doi: 10.1186/1471-2202-5-24.

- Obleser J, Elbert T, Lahiri A, Eulitz C. Cortical representation of vowels reflects acoustic dissimilarity determined by formant frequencies. Cognitive Brain Research. 2003;15(3):207–213. doi: 10.1016/s0926-6410(02)00193-3.

- Obleser J, Lahiri A, Eulitz C. Auditory-evoked magnetic field codes place of articulation in timing and topography around 100 milliseconds post syllable onset. Neuroimage. 2003;20:1839–1847. doi: 10.1016/j.neuroimage.2003.07.019.

- Obleser J, Lahiri A, Eulitz C. Magnetic brain response mirrors extraction of phonological features from spoken vowels. Journal of Cognitive Neuroscience. 2004;16(1):31–39. doi: 10.1162/089892904322755539.

- Obleser J, Scott SK, Eulitz C. Now you hear it, now you don’t: Transient traces of consonants and their unintelligible analogues in the human brain. Cerebral Cortex. 2006;16(8):1069–1076. doi: 10.1093/cercor/bhj047.

- Oehman SEG. Coarticulation in VCV utterances: Spectrographic measurements. Journal of the Acoustical Society of America. 1966;39(1):151–168. doi: 10.1121/1.1909864.

- Oldfield RC. The assessment and analysis of handedness: The Edinburgh Inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- Pantev C, Hoke M, Lehnertz K, Lütkenhöner B, Anogianakis G, Wittkowski W. Tonotopic organization of the human auditory cortex revealed by transient auditory evoked magnetic fields. Electroencephalography & Clinical Neurophysiology. 1988;69:160–170. doi: 10.1016/0013-4694(88)90211-8.

- Pantev C, Hoke M, Lütkenhöner B, Lehnertz K. Tonotopic organization of the auditory cortex: Pitch versus frequency representation. Science. 1989;246(4929):486–488. doi: 10.1126/science.2814476.

- Phillips C, Pellathy T, Marantz A, Yellin E, Wexler K, Poeppel D, et al. Auditory cortex accesses phonological categories: An MEG mismatch study. Journal of Cognitive Neuroscience. 2000;12:1038–1055. doi: 10.1162/08989290051137567.

- Picard C, Olivier A. Sensory cortical tongue representation in man. Journal of Neurosurgery. 1983;59:781–789. doi: 10.3171/jns.1983.59.5.0781.

- Pinheiro JC, Bates DM. Mixed-effects models in S and S-PLUS. Springer Verlag; 2000.

- Poeppel D, Idsardi WJ, van Wassenhove V. Speech perception at the interface of neurobiology and linguistics. Philosophical Transactions of the Royal Society B: Biological Sciences. 2008;363(1493):1071–1086. doi: 10.1098/rstb.2007.2160.

- Poeppel D, Marantz A. Cognitive neuroscience of speech processing. In: Marantz A, Miyashita Y, O’Neil W, editors. Image, Language, Brain. Cambridge, MA: MIT Press; 2000. pp. 29–29.

- Poeppel D, Yellin E, Phillips C, Roberts TP, Rowley HA, Wexler K, et al. Task-induced asymmetry of the auditory evoked M100 neuromagnetic field elicited by speech sounds. Cognitive Brain Research. 1996;4(4):231–242. doi: 10.1016/s0926-6410(96)00643-x.

- Pulvermüller F, Kujala T, Shtyrov Y, Simola J, Tiitinen H, Alku P, et al. Memory traces for words as revealed by the mismatch negativity. Neuroimage. 2001;14:607–616. doi: 10.1006/nimg.2001.0864.

- Pulvermüller F, Shtyrov Y. Language outside the focus of attention: The mismatch negativity as a tool for studying higher cognitive processes. Progress in Neurobiology. 2006;79:49–71. doi: 10.1016/j.pneurobio.2006.04.004.

- Pulvermüller F, Shtyrov Y, Kujala T, Näätänen R. Word-specific cortical activity as revealed by the mismatch negativity. Psychophysiology. 2004;41:106–112. doi: 10.1111/j.1469-8986.2003.00135.x.

- Reetz H. Automatic speech recognition with features. Unpublished Habilitationsschrift. Saarbrücken: Universität des Saarlandes; 1998.

- Reetz H. Underspecified phonological features for lexical access. Phonus: Reports in Phonetics, Universität des Saarlandes. 2000;5:161–173.

- Reetz H, Jongman A. Phonetics: Transcription, Production, Acoustics and Perception. Oxford: Wiley-Blackwell; 2008.

- Roberts TP, Ferrari P, Poeppel D. Latency of evoked neuromagnetic M100 reflects perceptual and acoustic stimulus attributes. Neuroreport. 1998;9(14):3265–3269. doi: 10.1097/00001756-199810050-00024.

- Roberts TP, Poeppel D. Latency of auditory evoked M100 as a function of tone frequency. Neuroreport. 1996;7(6):1138–1140. doi: 10.1097/00001756-199604260-00007.

- Sagey E. The representation of features and relations in non-linear phonology. Doctoral dissertation. Cambridge, MA: MIT; 1986.

- Sanders LD, Newport EL, Neville HJ. Segmenting nonsense: An event-related potential index of perceived onsets in continuous speech. Nature Neuroscience. 2002;5(7):700–703. doi: 10.1038/nn873.

- Sarvas J. Basic mathematical and electromagnetic concepts of the biomagnetic inverse problem. Physics in Medicine & Biology. 1987;32:11–22. doi: 10.1088/0031-9155/32/1/004.

- Scharinger M, Eulitz C, Lahiri A. Mismatch negativity effects of alternating vowels in morphologically complex word forms. Journal of Neurolinguistics. 2010;23:383–399.

- Scherg M, Vajsar J, Picton TW. A source analysis of the late human auditory evoked potentials. Journal of Cognitive Neuroscience. 1990;1:336–355. doi: 10.1162/jocn.1989.1.4.336.

- Shestakova A, Brattico E, Huotilainen M, Galunov V, Soloviev A, Sams M, et al. Abstract phoneme representations in the left temporal cortex: Magnetic mismatch negativity study. Neuroreport. 2002;13(14):1813–1816. doi: 10.1097/00001756-200210070-00025.

- Shestakova A, Brattico E, Soloviev A, Klucharev V, Huotilainen M. Orderly cortical representation of vowel categories presented by multiple exemplars. Cognitive Brain Research. 2004;21:342–350. doi: 10.1016/j.cogbrainres.2004.06.011.

- Shtyrov Y, Pulvermüller F. Memory traces for inflectional affixes as shown by mismatch negativity. European Journal of Neuroscience. 2002a;15:1085–1091. doi: 10.1046/j.1460-9568.2002.01941.x.

- Shtyrov Y, Pulvermüller F. Neurophysiological evidence of memory traces for words in the human brain. Neuroreport. 2002b;13(4):521–526. doi: 10.1097/00001756-200203250-00033.

- Stevens KN. On the quantal nature of speech. Journal of Phonetics. 1989;17:3–45.

- Stevens KN, Blumstein SE. Invariant cues for place of articulation in stop consonants. Journal of the Acoustical Society of America. 1978;64:1358–1368. doi: 10.1121/1.382102.

- Stevens KN. The quantal nature of speech: Evidence from articulatory-acoustic data. In: David EE, Denes PB, editors. Human communication: A unified view. New York: McGraw-Hill; 1972. pp. 51–66.

- Stevens KN. Constraints imposed by the auditory system on the properties used to classify speech sounds: Data from phonology, acoustics, and psychoacoustics. In: Myers T, Anderson J, editors. The cognitive representation of speech. New York: North-Holland Publishing Co; 1981. pp. 61–74.

- Stevens KN. Acoustic phonetics. Vol. 30. Cambridge, MA; London, England: The MIT Press; 1998.

- Stevens KN. Toward a model for lexical access based on acoustic landmarks and distinctive features. Journal of the Acoustical Society of America. 2002;111:1872–1891. doi: 10.1121/1.1458026.

- Stevens KN. Features in speech perception and lexical access. In: Pisoni DB, Remez RE, editors. The Handbook of Speech Perception. Oxford: Blackwell; 2005. pp. 125–156.

- Tanriverdi T, Al-Jehani H, Poulin N, Olivier A. Functional results of electrical cortical stimulation of the lower sensory strip. Journal of Clinical Neuroscience. 2009;16:1188–1194. doi: 10.1016/j.jocn.2008.11.010.

- Tavabi K, Obleser J, Dobel C, Pantev C. Auditory evoked fields differentially encode speech features: An MEG investigation of the P50m and N100m time courses during syllable processing. European Journal of Neuroscience. 2007;25:3155–3162. doi: 10.1111/j.1460-9568.2007.05572.x.

- Tiitinen H, Alho K, Huotilainen M, Ilmoniemi RJ, Simola J, Näätänen R. Tonotopic auditory cortex and the magnetoencephalographic (MEG) equivalent of the mismatch negativity. Psychophysiology. 1993;30(5):537–540. doi: 10.1111/j.1469-8986.1993.tb02078.x.

- Vihman MM. Phonological development from babbling to speech: Common tendencies and individual differences. Applied Psycholinguistics. 1986;7(1):3–40.

- Wheeldon L, Waksler R. Phonological underspecification and mapping mechanisms in the speech recognition lexicon. Brain and Language. 2004;90:401–412. doi: 10.1016/S0093-934X(03)00451-6.

- Winkler I, Lehtokoski A, Alku P, Vainio M, Czigler I, Csepe V, et al. Pre-attentive detection of vowel contrasts utilizes both phonetic and auditory memory representations. Cognitive Brain Research. 1999;7(3):357–369. doi: 10.1016/s0926-6410(98)00039-1.

- Winters SJ. Testing the relative salience of audio and visual cues for stop place of articulation. Journal of the Acoustical Society of America. 1999;106:2271.

- Woods DL, Alain C, Covarrubias D, Zaidel O. Middle latency auditory evoked potentials to tones of different frequencies. Hearing Research. 1995;85:69–75. doi: 10.1016/0378-5955(95)00035-3.

- Woods DL, Elmasian R. The habituation of event-related potentials to speech sounds and tones. Electroencephalography and Clinical Neurophysiology. 1986;65(6):447–459. doi: 10.1016/0168-5597(86)90024-9.