Abstract

Purpose

The goal of this study was to determine if older blind participants recognize time-compressed speech better than older sighted participants.

Method

Three groups of adults with normal hearing participated (n = 10/group): older sighted, older blind, and younger sighted listeners. Low-predictability sentences that were uncompressed (0% time compression ratio, TCR) and compressed at three rates (40%, 50%, and 60% TCR) were presented to listeners in quiet and noise.

Results

Older blind listeners recognized all time-compressed speech stimuli significantly better than older sighted listeners in quiet. In noise, the older blind adults recognized the uncompressed and 40% TCR speech stimuli better than the older sighted adults. Performance differences between the younger sighted adults and older blind adults were not observed.

Conclusions

The findings support the notion that older blind adults recognize time-compressed speech considerably better than older sighted adults in quiet, and that they also hold an advantage in noise when speech is uncompressed or only mildly compressed. Their performance levels are similar to those of younger adults, suggesting that age-related difficulty in understanding time-compressed speech is not an inevitable consequence of aging. Rather, frequent listening to speech at rapid rates, which was highly correlated with performance of the older blind adults, may be a useful technique to minimize age-related slowing in speech understanding.

Older adults, even those with hearing sensitivity within the normal range, often complain of difficulty understanding rapid speech or speech in a background of noise. Numerous researchers have identified a significant age effect, independent of the effects of hearing loss, on recognition tasks using simulations of rapid speech via time compression, conducted either in quiet or background noise (Gordon-Salant & Fitzgibbons, 1993, 1995; Sommers, 1997; Tun, 1998). Evidence from many studies also points to a significant age effect, again independent of hearing loss, for speech recognition performance in a background of noise, even when the speech has not been altered temporally (Dubno, Dirks, & Morgan, 1984; Dubno, Horwitz, & Ahlstrom, 2002; Pichora-Fuller, Schneider, & Daneman, 1995; Tun, 1998; Tun, O’Kane, & Wingfield, 2002). Exploration of the sources of older listeners’ difficulty in understanding time-compressed speech and speech in the presence of background noise is ongoing.

There is general agreement that the origins of the observed deterioration are not solely peripheral in nature. Studies in which researchers have carefully controlled for the effects of peripheral hearing loss have clearly shown an independent effect of age on auditory temporal processing measures, as assessed with psychophysical tasks (e.g., Fitzgibbons & Gordon-Salant, 2001) and speech recognition tasks using temporally altered speech (e.g., Gordon-Salant & Fitzgibbons, 1993). Experiments in which the speech recognition tasks have been made more challenging, by increasing task complexity, degrading the stimuli, or both, have been particularly effective in demonstrating age-related changes in performance (Dubno et al., 1984; Gordon-Salant & Fitzgibbons, 1995). Electrophysiologic studies with rapid acoustic signals confirm some of the age-related effects observed on behavioral tasks using rapidly presented stimuli. For example, electrophysiologic studies using speech stimuli with rapid inter-stimulus intervals have shown longer late-potential (N1-P2 complex) latencies for older adults than for younger listeners (Tremblay, Billings, & Rohila, 2004). Various auditory and cognitive mechanisms have been cited as potential sources of age-related deterioration in speech recognition performance, but the specific loci of degeneration are still under investigation. Based on this research, it seems likely that a combination of factors, including central auditory processing decline, working memory changes, overall cognitive slowing, and reduced inhibitory mechanisms, in addition to age-related hearing loss when present, plays a role in the observed deterioration in performance (Salthouse, 1985; Spehar, Tye-Murray, & Sommers, 2004).

Recent studies have explored the possible benefits of auditory or other types of compensatory training for older adults, including protocols related to working memory function, speed of processing, and practice-driven adaptation to time-compressed speech (e.g., Carretti, Borella, & DeBeni, 2007; Henderson-Sabes & Sweetow, 2007; Peelle & Wingfield, 2005; Vance et al., 2007). While some promising results have been reported, further investigation is required to identify effective methods for reducing or remediating the detrimental effects of age-related deterioration in speech perception.

Blind adults, who cannot use visual cues to aid them in speech processing, rely more heavily on the auditory modality to interact with their environment. In addition, some blind adults listen to recorded materials (e.g., books or lectures on tape, computerized text-to-speech synthesizers, or even telephone answering machines) at an accelerated playback rate to improve their efficiency in receiving and processing these materials. These observations suggest that blind adults may be better able than sighted adults to use the auditory modality for speech processing tasks. If so, blind adults may perform better than sighted adults with equivalent hearing status on perception tasks involving degraded or distorted speech, and it is possible that this exceptional speech perception ability is maintained throughout the lifespan. Performance differences between blind and sighted adults, if observed, may be related to training, neurophysiologic changes, or other factors. Evidence from a variety of studies supports the existence of superior auditory temporal processing, cross-modal reorganization at the cortical level, or some combination of the two in selected samples of blind adults.

In the absence of visual sensory input, blind individuals rely greatly on the haptic and auditory modalities in order to function effectively in the world around them. Over the last few decades, a number of researchers have investigated the ways in which total deprivation of the visual modality affects functioning in unimpaired sensory modalities. Evidence for cross-modal plasticity (also referred to as neural reorganization), enhancement of functioning in the auditory modality, or both has emerged from many studies (Kujala et al., 1995; Kujala et al., 2005; Niemeyer & Starlinger, 1981; Roder, Rosler, & Neville, 2000). Neural reorganization occurs when brain structures perform functions not typically associated with them, such as visual cortex becoming active during auditory processing tasks. Alternatively, an enhancement in functioning in an intact modality might be demonstrated by the recording of shorter auditory evoked potential (AEP) latencies, implying more rapid processing of auditory inputs. The results of studies in these areas support the idea that neural structures may have the capacity to adapt and compensate for sensory deprivation. However, participants in most studies have been in their first through fourth decades of life, with few investigations of older blind adults. The majority of studies in humans have capitalized on technological advances, using electrophysiology and imaging techniques, to investigate possible functional cerebral reorganization or adaptation in blind adults.

In one study using fMRI to evaluate the functional neuroanatomy of speech comprehension in congenitally blind adults (ages 21–33), blind participants showed greater bilateral and posterior activation (including areas of visual cortex) during language processing than sighted controls (Roder, Stock, Bien, Neville, & Rosler, 2002). In another study, Amedi, Raz, Pianka, Malach, and Zohary (2003) observed task-specific areas of visual cortex activation in blind participants, absent in the sighted controls, during experimental tasks involving auditory, tactile, and linguistic modalities. Other studies, though fewer in number, have used behavioral and psychophysical methods to explore auditory and somatosensory function in blind adults. Many of the studies assessing auditory processing in blind adults have involved localization tasks (e.g., Weeks et al., 2000). In one study using speech stimuli (Muchnik, Efrati, Nemeth, Malin, & Hildesheimer, 1991), the investigators evaluated four monaural speech measures in three groups of young adult participants: congenitally blind adults, adults with acquired blindness, and sighted controls. Speech recognition thresholds (SRT) and suprathreshold speech recognition scores were measured in quiet and noise. Results indicated a significant group × condition interaction, with the blind participants performing significantly better than the sighted controls on the speech-in-noise task, but not on the measure of speech recognition in quiet. These results appear to support a performance advantage in blind adults when task parameters are sufficiently challenging.

Additional evidence of performance advantages in blind adults on attention-demanding tasks was presented by Hugdahl and colleagues (2004). Three different attention conditions were employed on tasks of dichotic listening to consonant-vowel (CV) syllables. While all participants showed a marked right-ear advantage, blind participants performed significantly better than sighted controls in the free-recall and directed-left conditions. The authors suggested that these results reflected a superior ability of blind adults to attend to auditory stimuli and to inhibit responses to competing auditory stimuli, and they proposed a prefrontal cortical locus for this advantage. Several potential confounding factors, however, limit the validity of the findings. The samples of blind and sighted participants were poorly matched in both group size and age. The presentation order of conditions was not reported as randomized, and presentation levels were subjectively determined on an individual basis. The scoring method could also have been affected by experimenter bias, although the results appear consistent overall with findings from previous studies. Results such as these may indicate advantages for blind adults in attending to desired auditory stimuli or inhibiting undesired ones. However, the complex cause-and-effect relationships between structural changes and functional activities are not clear.

The evidence supporting compensation across and within sensory modalities in blindness is compelling. Whether the source of compensation lies in increased processing efficiency in intact modalities, neural reorganization across sensory modalities, attention or memory advantages, or a combination of these, it appears that the brain has the capacity to adjust and maximize performance. However, the vast majority of studies in this area have been conducted with young or middle-aged adults. Very few have included older adults as participants or compared the performance of older blind and sighted adults. None of these studies specifically examined the perception of rapid, complex, sentence-length speech stimuli, in quiet or noise, by older blind adults. In addition, most studies of blind adults and children have included participants with congenital or early-onset blindness, typically within the first few years of life, and very little is known about later-onset sensory deprivation. Whether the performance advantages identified in young blind adults are also present in older blind adults, and in older adults with a later onset of blindness, remain questions for further investigation.

The overall objective of this investigation was to determine if older blind adults, who rely on the auditory modality, perform better than older sighted adults on speech recognition tasks that are known to be difficult for older listeners. Several inter-related experimental questions were examined: 1) Is there an effect of blindness on the ability of older listeners with normal hearing to recognize spoken sentences presented at normal and fast speech rates? 2) Is this effect observed in both quiet and noise? 3) Does the performance of older blind participants approximate that of a control group of young sighted participants on these speech measures? 4) For the blind participants, is performance on the rapid speech task related to frequency of listening to recorded speech materials at accelerated rates? To answer these questions, the performance of older sighted adults, older blind adults, and younger sighted adults, all with normal hearing, was compared on listening tasks using low-context sentence materials presented at a variety of time-compression rates. Speech stimuli were alternately presented in quiet or noisy backgrounds in an effort to sufficiently tax the auditory system in order to identify possible differences in performance between the blind and sighted participants.

Method

Participants

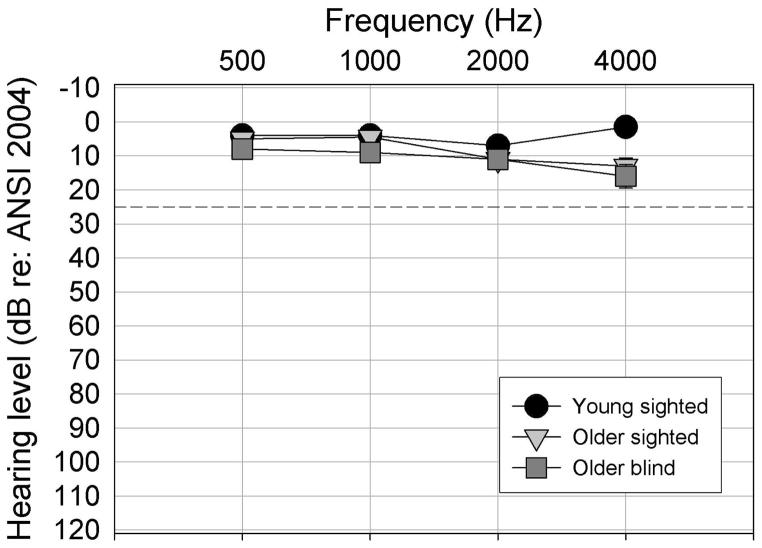

Three listener groups participated in these experiments, with ten listeners in each group. All participants had normal hearing sensitivity, defined as pure-tone air-conduction thresholds ≤ 25 dB HL (ANSI, 2004) from 500 to 4000 Hz. The first and second groups were sighted young and older listeners (ages 18–30 and 60–80 years, respectively). The third group included older listeners (ages 60–80 years) who had been totally blind, defined as light-dark sensitivity at best, for a minimum of 20 years. The mean ages of the older blind and older sighted groups were 67.0 and 66.7 years, respectively. Mean audiograms for the three listener groups in the test ear are presented in Figure 1. All participants were required to demonstrate good to excellent monosyllabic word recognition on Northwestern University Test No. 6 (Tillman & Carhart, 1966), to have normal tympanograms according to published criteria (Roup, Wiley, Safady, & Stoppenbach, 1998), and to pass a brief screening test for cognitive awareness (the Short Portable Mental Status Questionnaire; Pfeiffer, 1975). All listeners selected for the experiment were native speakers of English and lived independently in the community.

Figure 1.

Test-ear pure-tone hearing levels in dB HL (re: ANSI, 2004) for the three listener groups.

General case history information, including history of otologic disease, noise exposure, and education, was collected from all participants. Nearly all of the participants were college graduates. The mean years of education for the younger sighted, older sighted, and older blind listeners were 16.8 (s.d. = 1.47), 16.83 (s.d. = 2.8), and 16.33 (s.d. = 3.31) years, respectively, and did not differ significantly among the three groups (F(2,29) = .105, p > .05). Additional case history information was gathered from the blind participants, including years and cause of blindness and degree of light-dark sensitivity. All participants were also asked about their experience with recorded speech materials or computerized text-to-speech synthesizers played at a faster-than-normal rate: specifically, the number of hours per week they listened to recorded or digitized speech at such rates and, if known, the speech rate. All participants were also tested on two working/short-term memory subtests of the WAIS-III (Wechsler, 1997): Letter-Number Sequencing and Digit Span. These measures were used to examine whether differences in working memory and short-term (primary) memory contributed to the older listeners' performance on the experimental measures. Both subtests were appropriate for the blind participants because they do not involve visual information. Scores on the Letter-Number Sequencing subtest for the three groups (young: M = 13.1, SD = 2.3; older sighted: M = 10.7, SD = 2.4; older blind: M = 12.7, SD = 3.0) did not differ significantly [F(2,29) = 2.44, p > .05].
However, the mean scores on the Digit Span subtest for the three groups (young: M = 20.7, SD = 4.1; older sighted: M = 16.6, SD = 3.4; older blind: M = 22.9, SD = 4.2) differed significantly [F(2,29) = 6.71, p<.01], with older blind participants performing significantly better than older sighted participants. The Letter-Number Sequencing subtest is considered a test of working memory, whereas the Digit Span subtest represents short-term (primary) memory (Bopp & Verhaeghen, 2005). Thus, the three groups were reasonably equated for general cognitive abilities on the basis of years of education and working memory.

Stimuli

Stimuli for these experiments were the low-predictability (LP) sentences of the Revised Speech Perception in Noise Test (R-SPIN) (Bilger, Nuetzel, Rabinowitz, & Rzeczkowski, 1984). A total of 200 sentences (8 lists × 25 unique LP sentences per list) were used to minimize repetition of individual sentences. These sentences contain minimal semantic context for predicting the final word (e.g., “Miss Black was considering the net.”). The baseline speech rate was the original natural rate of approximately 205 words per minute (WPM). All sentences in each of the eight lists were then modified to create three additional presentation rates: 40%, 50%, and 60% time compression ratio (TCR). Using WEDW waveform-editing software on the digitized sentences (for details on the digitization process, see Gordon-Salant & Fitzgibbons, 1993), each sentence was first edited to delete any silence at the beginning and/or end. The duration of each sentence was then measured with the WEDW software, and its post-compression duration was calculated for each of the three TCRs. These compression rates were based on findings by Gordon-Salant and Fitzgibbons (1993), which revealed main effects of age and distortion condition for TCRs of 40, 50, and 60% when the materials were presented in quiet. Every sentence was compressed at each of the three TCRs, for a total of 600 time-compressed sentences and 200 original-length sentences. The rms levels of all 800 sentences were sampled and equated using Cool Edit software (version 2.0, Syntrillium Software).
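The relation between TCR, sentence duration, and effective speaking rate can be made concrete with a short Python sketch. It assumes, as is conventional in this literature, that a TCR of X% removes X% of the original duration while leaving the speech content intact; the function names are hypothetical and are not part of WEDW or any other software named above. The sketch also checks the stimulus counts given in the paragraph.

```python
# Sketch: durations and speaking rates implied by a time compression ratio (TCR).
# Assumption: a TCR of X% removes X% of the original sentence duration.

TCRS = (40, 50, 60)        # compression ratios used in the study (%)
NATURAL_RATE_WPM = 205     # approximate natural rate of the R-SPIN LP sentences


def compressed_duration(original_s: float, tcr_percent: float) -> float:
    """Return the post-compression duration in seconds."""
    if not 0 <= tcr_percent < 100:
        raise ValueError("TCR must be in the range [0, 100)")
    return original_s * (1 - tcr_percent / 100)


def effective_rate_wpm(natural_wpm: float, tcr_percent: float) -> float:
    """Return the speaking rate after compression (same words, shorter duration)."""
    return natural_wpm / (1 - tcr_percent / 100)


# Stimulus inventory: 8 lists x 25 sentences, each also compressed at 3 TCRs.
N_LISTS, SENTENCES_PER_LIST = 8, 25
n_original = N_LISTS * SENTENCES_PER_LIST    # 200 original-length sentences
n_compressed = n_original * len(TCRS)        # 600 time-compressed sentences
n_total = n_original + n_compressed          # 800 sentences in all

if __name__ == "__main__":
    for tcr in TCRS:
        print(f"{tcr}% TCR: 2.0 s -> {compressed_duration(2.0, tcr):.2f} s, "
              f"about {effective_rate_wpm(NATURAL_RATE_WPM, tcr):.1f} WPM")
    print(n_original, n_compressed, n_total)
```

Under this convention, the 40, 50, and 60% TCR conditions correspond to effective rates of about 342, 410, and 512.5 WPM for a 205-WPM original.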

Preparation of the 32 sentence lists for recording (eight lists at each of the three TCRs, plus eight original-length lists) was carried out using speech paradigm software (UAB software, University of Alabama at Birmingham). Each stimulus was preceded by a carrier phrase (“Number 1,” “Number 2,” and so on) taken from the original R-SPIN recordings; the carrier phrase preceded stimulus onset by one second. The inter-stimulus interval (ISI) was eight seconds. Based on a sampling of individuals in both of the study’s age groups, this ISI was judged sufficient for a participant to repeat the sentence he or she had just heard, in accordance with the experimental procedures described below. Sixteen of the completed stimulus lists (four unique lists for each of the four presentation rates) were output from the computer to a pre-amplifier (ARTcessories MicroMix) and recorded onto digital audio tapes (Sony Pro-DAT Plus) using a two-channel DAT recorder (TASCAM DA-40). The remaining 16 unique sentence lists were recorded onto a second set of tapes, on which the second channel contained the 12-talker babble of the original SPIN tapes. A 1000-Hz calibration tone, equivalent in rms level to the sentences, was recorded on the same channel of each tape before the speech stimuli. A second calibration tone, equivalent in rms level to the 12-talker babble, was recorded on the second channel before the onset of the babble.

Procedures

During the experiment, the speech stimuli and background babble were played through separate channels of the digital audio tape player (TASCAM DA-40), amplified (Crown 40), attenuated (HP350D), mixed through a Coulbourn audio-mixer amplifier (S24), and routed to a single insert earphone (Etymotics ER3A). The test ear was the ear with better hearing thresholds or the listener's preferred ear. Calibration was conducted before each experimental session by separately calibrating Channels 1 and 2 of the DAT with a sound-level meter (Larson-Davis 800b) coupled to the insert earphone via a 2-cm³ coupler. Channel 1 (speech) was calibrated to 85 dB SPL and Channel 2 (noise) to 78 dB SPL, yielding a signal-to-noise ratio (SNR) of +7 dB. After each channel had been calibrated individually, the channel outputs were mixed and calibration was repeated to ensure that the total output did not exceed 85 dB SPL. In the quiet conditions, the Channel 2 output was disconnected. The speech level of 85 dB SPL was chosen to ensure good audibility for all participants and to facilitate comparison with previous studies. The +7 dB SNR was selected on the basis of pilot testing with young adults, which yielded scores between 50% and 85% correct for the rapid-rate speech conditions. This range was expected to tax the auditory processing abilities of all three participant groups while avoiding floor and ceiling effects, even for the older listeners.
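In the study, the +7 dB SNR was set acoustically, by calibrating each DAT channel with the sound-level meter. The same relation can be sketched digitally: the noise is rescaled so that its rms level sits 7 dB below that of the speech. This Python sketch is only an illustration under that assumption; the function names are hypothetical and it is not the procedure the authors used.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def level_db(samples, ref=1.0):
    """Level in dB relative to an arbitrary reference amplitude."""
    return 20 * math.log10(rms(samples) / ref)


def scale_noise_for_snr(speech, noise, snr_db):
    """Return a copy of noise rescaled so that level(speech) - level(noise) == snr_db."""
    target_rms = rms(speech) / 10 ** (snr_db / 20)
    gain = target_rms / rms(noise)
    return [gain * x for x in noise]


# Calibrated channel levels reported in the study (dB SPL).
SPEECH_DB_SPL, NOISE_DB_SPL = 85, 78
SNR_DB = SPEECH_DB_SPL - NOISE_DB_SPL  # +7 dB

if __name__ == "__main__":
    speech = [1.0, -1.0] * 50            # toy signals, not study stimuli
    noise = [0.3, -0.2, 0.1] * 30
    scaled = scale_noise_for_snr(speech, noise, SNR_DB)
    print(round(level_db(speech) - level_db(scaled), 6))
```

Because SNR is a level difference in dB, only the ratio of rms amplitudes matters; the reference amplitude cancels out.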

The experiment comprised eight different listening conditions administered to each participant: the sentences were presented in an unaltered format (natural-rate speech, designated as 0% TCR), and with uniform time-compression of 40, 50, and 60%. Each of the four TCR conditions (0, 40, 50, & 60% TCR) was presented once in quiet, and once in a background of multi-talker babble. A different LP-SPIN list was used for each condition.

Presentation order for the eight listening conditions was randomized across listeners using the Web-based Research Randomizer tool (version 3.0, http://www.randomizer.org). In addition, the sentence list used for each condition was randomly determined for each listener.
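The per-listener randomization can be sketched with Python's standard `random` module standing in for the Research Randomizer tool. As a simplifying assumption, the sketch treats the eight LP lists as freely assignable to any condition, which glosses over the tape layout described under Stimuli; `plan_session` is a hypothetical helper, not the authors' procedure.

```python
import random
from itertools import product

TCRS = (0, 40, 50, 60)
BACKGROUNDS = ("quiet", "noise")
CONDITIONS = list(product(BACKGROUNDS, TCRS))  # the 8 listening conditions
N_LISTS = 8  # assumption: any LP list may be paired with any condition


def plan_session(seed):
    """Return a randomized [(condition, list_number), ...] schedule for one listener."""
    rng = random.Random(seed)  # a per-listener seed makes the plan reproducible
    order = rng.sample(CONDITIONS, k=len(CONDITIONS))           # random condition order
    lists = rng.sample(range(1, N_LISTS + 1), k=len(order))     # each list used once
    return list(zip(order, lists))


if __name__ == "__main__":
    for listener_id in range(1, 4):
        print(listener_id, plan_session(listener_id))
```

Sampling without replacement guarantees that a different list is used for each condition, matching the constraint stated in the procedure.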

Participants were seated in a double-walled, sound-attenuating booth in the Hearing Science Laboratory of the Department of Hearing and Speech Sciences at the University of Maryland throughout the experimental procedure. Before the eight test conditions, each participant was familiarized with the task by completing two 10-sentence practice lists, one in quiet and the other in multi-talker babble. Both practice lists contained a random sampling of the four TCR conditions. The participant’s task was to listen to each sentence and repeat it aloud, as clearly and completely as possible. Participants were also told that in some conditions the sentences would be presented in a background of many people talking; in these instances, they were instructed to ignore the noise as best they could and to repeat each sentence in its entirety. Participant responses were recorded on an Olympus DM-1 digital voice recorder for later scoring. A percent-correct score was calculated for each condition based on the number of correctly identified content words in each sentence list. Content words included all nouns, verbs, adverbs, and adjectives.

Informed consent was obtained from all participants prior to commencing the preliminary tests. The entire procedure, including preliminary and experimental measures, was completed in approximately 2 hours. Listeners were given frequent breaks during the experiment and were reimbursed for their listening time at the conclusion of the experiment. The experimental protocol was approved by the University of Maryland Institutional Review Board for Human Subjects Research.

Results

Scoring was conducted after each participant completed the experiment, using the digital recordings of the participant’s verbal responses. A computer-based scoring tool was developed in MATLAB (version 7.2 R2006a, MathWorks) for this purpose. The total number of correctly identified content words per sentence list was calculated for each listener. The maximum number of correct words per list was tabulated in advance using all words except articles and prepositions; the resulting number of content words per list ranged from 99 to 112 (mean: 106 words). Eight scores were obtained for each participant, representing percent-correct speech recognition performance in quiet and noise for the four TCR conditions (0, 40, 50, and 60%).
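The scoring rule (correctly repeated content words divided by the number of scorable words in the list, times 100) can be sketched in Python. The authors' MATLAB tool is not described in detail, so this is an assumed, simplified version: responses are treated as transcribed text, word order is ignored, and the excluded-word set is hypothetical (the exact list of articles and prepositions used is not given).

```python
import re
from collections import Counter

# Hypothetical stop list: articles and common prepositions (illustrative only).
EXCLUDED = {"a", "an", "the", "in", "on", "at", "of", "to", "for", "with",
            "from", "by", "about", "into", "over", "under"}


def tokens(text):
    """Lower-case word tokens with punctuation stripped."""
    return re.findall(r"[a-z']+", text.lower())


def content_words(sentence):
    """Scorable words: every token except articles and prepositions."""
    return [w for w in tokens(sentence) if w not in EXCLUDED]


def count_correct(target_sentence, response):
    """Count target content words present in the response (order ignored)."""
    available = Counter(tokens(response))
    hits = 0
    for word in content_words(target_sentence):
        if available[word] > 0:
            hits += 1
            available[word] -= 1  # each response word can match only once
    return hits


def percent_correct(targets, responses):
    """Percent of all scorable words in a list that were repeated correctly."""
    total = sum(len(content_words(t)) for t in targets)
    correct = sum(count_correct(t, r) for t, r in zip(targets, responses))
    return 100 * correct / total


if __name__ == "__main__":
    target = "Miss Black was considering the net."
    print(content_words(target), count_correct(target, "miss black was considering the nut"))
```

With the example sentence from the Stimuli section, a response ending in "nut" instead of "net" scores 4 of 5 words (80%).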

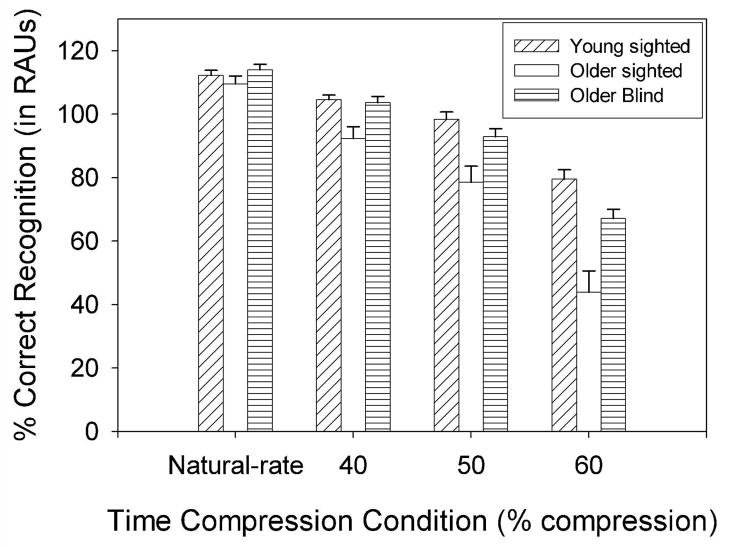

All percent-correct recognition scores were transformed to arcsine units prior to statistical analysis; such a transformation is useful when sample means and standard deviations are proportionally related and the distribution is binomial in form. Specifically, the data were converted to rationalized arcsine units (RAUs), a transformation often applied to speech recognition scores because its scale remains visually close to the 0–100% scale of the original percent-correct scores (Studebaker, 1985). Mean scores for each of the three listener groups at each rate of time compression in quiet and noise are presented in Figures 2 and 3, respectively. An omnibus analysis of variance (ANOVA) was conducted using a mixed-model repeated-measures design with one between-subjects variable (Group) and two within-subjects variables (TCR and Background). The ANOVA revealed significant main effects of Group [F(2, 27)=14.651, p<.001], TCR [F(2.729, 73.683)=414.475, p<.001], and Background [F(1, 27)=1249.060, p<.001], as well as a significant Group × TCR interaction [F(5.458, 73.683)=436.052, p<.01]. Because the main effect of Background was not involved in any interaction, these results indicate (as expected) that all listeners performed more poorly in noise than in quiet.
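Studebaker's (1985) rationalized arcsine transform maps X correct out of N items to θ = arcsin√(X/(N+1)) + arcsin√((X+1)/(N+1)) (in radians), then RAU = (146/π)·θ − 23, giving a range of roughly −23 to +123 RAU. A minimal Python sketch follows; taking N as the number of scorable content words in a list (99–112 here) is an assumption, since the text does not state how N was defined, and `rau` is a hypothetical helper name.

```python
import math


def rau(correct, n_items):
    """Rationalized arcsine units (Studebaker, 1985) for `correct` out of `n_items`."""
    if not 0 <= correct <= n_items:
        raise ValueError("correct must lie between 0 and n_items")
    theta = (math.asin(math.sqrt(correct / (n_items + 1)))
             + math.asin(math.sqrt((correct + 1) / (n_items + 1))))  # radians
    return (146 / math.pi) * theta - 23


if __name__ == "__main__":
    # e.g., scores from a list with 106 scorable words (the mean list size)
    for x in (0, 53, 90, 106):
        print(x, round(100 * x / 106, 1), round(rau(x, 106), 1))
```

At the midpoint (X = N/2) the two arcsine terms sum to exactly π/2, so the transform returns 50 RAU; toward 0% and 100% the RAU scale stretches, which is what stabilizes the variance of extreme scores.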

Figure 2.

Mean percent-correct recognition performance and standard errors for natural-rate speech and speech presented at TCRs of 40, 50, and 60% in quiet, for the three listener groups.

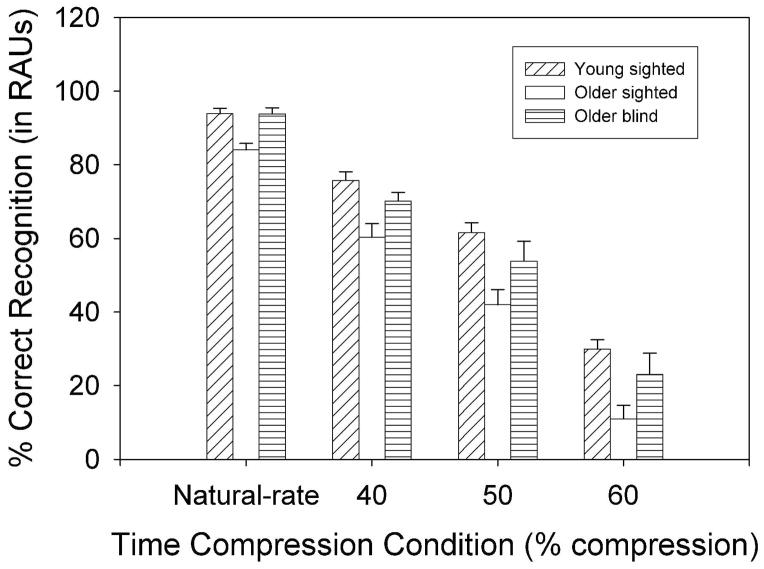

Figure 3.

Mean percent-correct recognition performance and standard errors for natural-rate speech and speech presented at TCRs of 40, 50, and 60% in noise (SNR = +7 dB) for the three listener groups.

Simple main effects analyses and post-hoc multiple comparison tests (Fisher’s Least Significant Difference, or LSD) were subsequently conducted to examine the interaction between Group and TCR condition. Fisher’s LSD test protects the Type I error rate across the family of pairwise comparisons as long as a significant omnibus F-test is required before any pairwise comparisons are made (Hancock & Klockars, 1996). In the present study, all post-hoc analyses using Fisher’s LSD procedure were preceded by a significant omnibus F-test, thereby meeting this requirement. Table 1 presents the results of the one-way ANOVAs examining the significance of group effects in each condition; significant group differences were found in all conditions except natural-rate speech in quiet. The multiple comparison tests revealed that older blind listeners performed significantly better than older sighted listeners at 40, 50, and 60% TCR in quiet [p<.01]. The older blind listeners also performed significantly better than the older sighted listeners in the natural-rate and 40% TCR conditions in noise [p<.05]. There were no significant performance differences between young sighted listeners and older blind listeners in any experimental condition. However, the young sighted listeners performed significantly better than the older sighted listeners in all conditions in quiet and noise except natural-rate speech in quiet.
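The two-step logic of Fisher's protected LSD (omnibus one-way ANOVA first, pairwise comparisons only if it is significant) can be sketched in Python. Because the standard library has no t or F quantile functions, the caller supplies the critical values for the relevant degrees of freedom; the function names are hypothetical, and this is an illustration of the technique, not the authors' analysis code.

```python
import math
from itertools import combinations
from statistics import mean


def one_way_anova(groups):
    """Return (F, df_between, df_within, MSE) for a dict of name -> scores."""
    all_scores = [x for g in groups.values() for x in g]
    grand = mean(all_scores)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups.values())
    ss_within = sum(sum((x - mean(g)) ** 2 for x in g) for g in groups.values())
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    mse = ss_within / df_within
    return (ss_between / df_between) / mse, df_between, df_within, mse


def protected_lsd(groups, f_crit, t_crit):
    """Fisher's protected LSD: pairwise tests only if the omnibus F exceeds f_crit.

    f_crit and t_crit (two-tailed) are looked up by the caller for the
    ANOVA's degrees of freedom at the chosen alpha.
    """
    f, _, _, mse = one_way_anova(groups)
    if f <= f_crit:
        return {}  # omnibus test not significant: no pairwise comparisons
    results = {}
    for a, b in combinations(groups, 2):
        lsd = t_crit * math.sqrt(mse * (1 / len(groups[a]) + 1 / len(groups[b])))
        results[(a, b)] = abs(mean(groups[a]) - mean(groups[b])) > lsd
    return results


if __name__ == "__main__":
    toy = {"A": [1, 2, 3], "B": [4, 5, 6], "C": [1, 2, 3]}  # toy data, not study data
    # alpha = .05 critical values for df = (2, 6): F = 5.14, two-tailed t = 2.447
    print(one_way_anova(toy), protected_lsd(toy, f_crit=5.14, t_crit=2.447))
```

Every pair is compared against the same pooled error term (MSE) from the omnibus ANOVA, which is what distinguishes the LSD from a series of independent t-tests.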

Table 1.

Results of one-way ANOVAs examining group effects in each listening condition

| Condition | df | F | p |
|---|---|---|---|
| Natural rate, quiet | 2, 29 | 1.30 | .29 |
| TC40, quiet | 2, 29 | 7.16 | .003** |
| TC50, quiet | 2, 29 | 8.49 | .001** |
| TC60, quiet | 2, 29 | 15.81 | < .001** |
| Natural rate, noise | 2, 29 | 11.95 | < .001** |
| TC40, noise | 2, 29 | 7.59 | .002** |
| TC50, noise | 2, 29 | 5.46 | .01* |
| TC60, noise | 2, 29 | 5.17 | .01* |

\* Significant at p < .05

\*\* Significant at p < .01

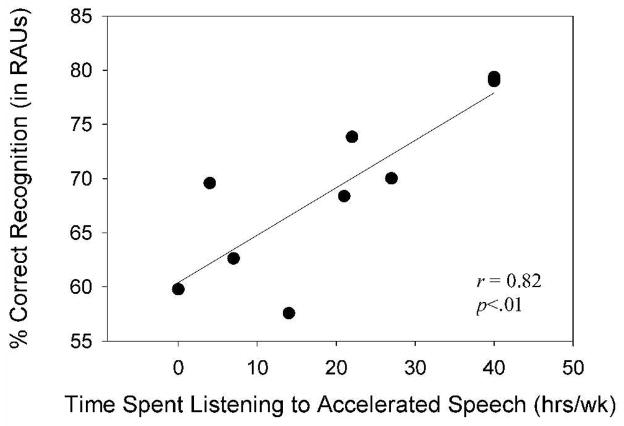

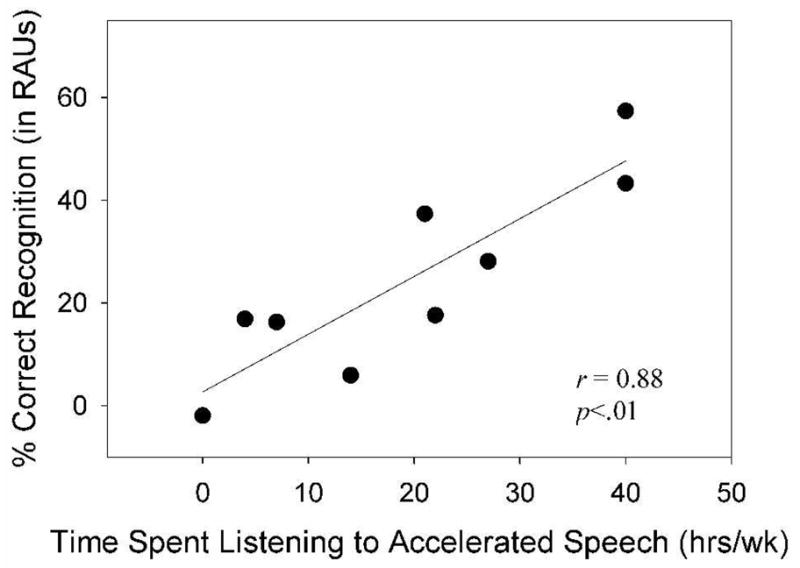

As noted previously, all participants reported the average number of hours per week during the past year in which they had listened to recorded and/or computerized text-to-speech materials at an accelerated playback rate. None of the young or older sighted participants had any experience with these types of materials. Among the older blind listeners, eight of the ten had some experience with these materials, ranging from an estimated 4 to 40 hours per week. Correlation analyses were conducted on the data from this group to assess possible relationships between average weekly use of rapid-rate speech materials and performance on the experimental tasks. These analyses revealed strong, significant correlations between hours per week of listening to rapid speech and speech recognition scores for the most difficult TCR condition (60%), both in quiet (r = .82, p<.01) and in noise (r = .88, p<.01). These data are depicted in Figures 4 and 5. None of the other correlations between hours per week of listening to rapid speech and speech recognition scores (TCRs of 0, 40, or 50%) were significant (p>.05). Additionally, hours of listening to rapid speech were not significantly correlated with age or education (p>.05).
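A Pearson correlation and its t-test can be sketched in a few lines of Python to show why coefficients of this size reach significance in a small group. Assuming n = 10 (all older blind participants, including the two with no exposure), the test statistic is t = r·√((n − 2)/(1 − r²)) with 8 df; r = .82 gives t ≈ 4.05 and r = .88 gives t ≈ 5.24, both above the two-tailed .01 critical value of about 3.355. The helper names are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_for_r(r, n):
    """t statistic (df = n - 2) for testing H0: rho = 0."""
    return r * math.sqrt((n - 2) / (1 - r ** 2))


if __name__ == "__main__":
    # Reported coefficients, assuming n = 10 older blind participants.
    for r in (0.82, 0.88):
        print(r, round(t_for_r(r, 10), 2))
```

With only ten participants, a single listener with extreme listening hours can strongly influence r, so scatterplots such as those in Figures 4 and 5 are a useful complement to the coefficient.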

Figure 4.

Correlation results for older blind participants between average hours/week listening to rapid-rate speech materials and speech perception performance at a TCR of 60% in quiet.

Figure 5.

Correlation results for older blind participants between average hours/week listening to rapid-rate speech materials and speech perception performance at a TCR of 60% in noise.

Multiple regression analyses were also performed on the data of the three listener groups to examine the contributions of age, test-ear hearing thresholds, and scores on the working and short-term memory subtests of the Wechsler Adult Intelligence Scale – Third Edition (WAIS-III). In separate analyses, the dependent variable was the score in each of the eight speech recognition conditions. Significant models were obtained for all of the time-compression conditions in quiet and noise, but not for the natural-rate speech conditions. Of the six significant models, age was the only variable that contributed consistently, reaching significance in five of them (all except TC40 in quiet). Digit span also contributed significantly to three of the six models (TC40 in quiet, TC60 in quiet, and TC60 in noise). As noted previously, there was a significant group effect on the digit span measure [F(2,27) = 6.71, p<.01], in which older blind listeners performed significantly better than the older sighted listeners.

Discussion

The overarching goal of this study was to determine if a history of long-term blindness among older adults with normal hearing has an effect on recognition of temporally degraded speech. The findings clearly show that the effect of blindness is significant in most speech conditions that are degraded by time compression and/or noise, among older listeners with normal hearing.

Effect of Blindness

The analyses focused on comparing the performance of the two older listener groups with normal hearing to determine whether there is an effect of blindness on recognition of spoken sentences presented at normal and fast speech rates, in both quiet and noise. In quiet, the performance of the older blind group did not differ significantly from that of the older sighted group in the natural-rate speech condition, suggesting that the two groups were well equated for recognition of undistorted speech. However, the older blind listeners had significantly higher speech recognition scores than the older sighted listeners in every time-compression condition. Thus, at fast presentation rates, the older blind participants recognized the stimuli with considerably higher accuracy than the older sighted listeners. Although investigations of auditory temporal processing and aging among sighted adults are numerous, very few studies reported in the literature have specifically examined the auditory processing abilities of older, totally blind adults, and none to date has focused on their recognition of rapid-rate, sentence-length speech. The findings of the present study appear consistent with results from earlier studies of young blind adults that revealed enhanced auditory processing in people who are totally blind (e.g., Muchnik et al., 1991; Weeks et al., 2000). In the present study, these performance advantages were observed in individuals with congenital or early-onset blindness (five blind participants had an onset of blindness between 0 and 2 years of age) as well as in individuals with a later onset (the remaining five had an onset between 7 and 29 years of age), suggesting that the superior performance of blind versus sighted older adults is not tied exclusively to age at onset of blindness.

The older blind participants also outperformed the older sighted participants in two of the four conditions in noise. The effect of blindness was observed in the natural-rate and 40% TCR conditions, but not in the 50% and 60% TCR conditions. Figure 3 suggests a similar group difference across all four conditions. However, the scores of the older blind participants were more variable in the 50% and 60% TCR conditions than in the natural-rate and 40% TCR conditions. This greater variability in the most severely degraded conditions may have prevented detection of an effect of blindness, and a study with more participants per group could clarify this point. Nevertheless, older blind adults appear to have some advantage in processing speech presented in noisy backgrounds. They appear better able than older sighted adults either to attend to the target stimuli or to inhibit interference from noise, at least when temporal degradation of the speech signal is not too severe.

Effect of Age Among Sighted and Blind Participants

The third experimental question concerned the effect of age on performance. An initial analysis compared the performance of the younger and older sighted participants. The older sighted participants consistently performed more poorly than the younger sighted participants in every experimental condition involving noise, time compression, or both, although the two groups performed comparably for natural-rate speech presented in quiet. The age effect in noise is consistent with numerous prior investigations (e.g., Dubno et al., 1984). The age effect in the time compression conditions agrees with prior studies that compared younger and older listeners on time-compressed speech in quiet (e.g., Wingfield et al., 1985) and in noise (e.g., Gordon-Salant & Fitzgibbons, 1995). Thus, the present findings confirm those of previous researchers regarding a significant age effect in the sighted population for tasks involving noise and/or time-compressed speech.

In the present investigation, the performance of the older blind participants was also compared with that of the younger sighted participants. The goal was to determine whether the older blind participants performed at levels approximating those of young sighted participants on these speech measures. This comparison revealed no significant differences between the two groups in any of the eight experimental conditions. These findings suggest that the commonly observed age-related decline in recognition of rapid speech, especially in noise, may not be simply a function of the aging process. Rather, these results suggest that greater attention to auditory information, which is the primary means by which blind adults receive spoken information, may reduce the expected age-related decline in auditory temporal processing.

It is possible that auditory temporal processing also declines with age among blind adults. That is, younger blind adults may be exceptionally accurate in recognizing speech that is time-compressed at unusually high rates (60%, 70%, or 80% time compression), even in noise. This exceptional ability could exceed that of the older blind participants tested in the current investigation. However, the current study did not evaluate young, totally blind adults, so it could not determine whether there is a significant age effect for auditory temporal processing among blind adults. The absence of significant performance differences between older blind and young sighted adults reported here therefore has two possible explanations. It may reflect the absence of a significant age effect among blind adults. Alternatively, it may reflect a significant age-related decline in this population, with younger blind adults achieving exceptional performance levels. Data comparing young and older blind adults on tasks such as those used in this study could help determine whether the older blind adults’ superior performance (relative to older sighted adults) is linked to intra- or cross-modal plasticity, training, or a combination of these factors.
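To make these compression levels concrete, the sketch below converts a time compression ratio into the remaining duration and an equivalent speed-up factor. It assumes that TCR is the percentage by which a sentence's duration is reduced, which is consistent with the convention that 0% TCR denotes uncompressed speech. The function names and the 2.0 s example sentence are hypothetical illustrations, and this is not the software used in the study.

```python
def _validate(tcr_percent: float) -> None:
    # A TCR of 100% would remove the entire signal, so restrict to [0, 100).
    if not 0 <= tcr_percent < 100:
        raise ValueError("TCR must be in the range [0, 100)")


def compressed_duration(original_s: float, tcr_percent: float) -> float:
    """Seconds of speech left after removing tcr_percent of the original duration (assumed TCR definition)."""
    _validate(tcr_percent)
    return original_s * (1 - tcr_percent / 100)


def rate_multiplier(tcr_percent: float) -> float:
    """How many times faster than the natural rate the compressed speech is."""
    _validate(tcr_percent)
    return 1 / (1 - tcr_percent / 100)


if __name__ == "__main__":
    # Levels used in the study (0% to 60%) plus the higher rates discussed above.
    for tcr in (0, 40, 50, 60, 70, 80):
        print(f"{tcr}% TCR: {rate_multiplier(tcr):.2f}x natural rate; "
              f"a 2.0 s sentence lasts {compressed_duration(2.0, tcr):.2f} s")
```

Under this assumed definition, 50% TCR halves the duration (2× the natural rate) and 60% TCR corresponds to 2.5× the natural rate. The 80% level mentioned above would correspond to 5× the natural rate.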

Factors Contributing to Performance

The final question addressed was whether performance on the rapid speech task is related to how frequently participants listen to recorded speech materials at accelerated rates. None of the sighted participants reported listening to such rapid speech materials. However, most of the blind participants reported listening at rapid playback rates to prerecorded materials (such as audio books) or digitized text-to-voice materials (such as computerized playback of daily newspapers). Some of the older blind participants reported listening to rapid digitized or recorded speech for an average of 40 hours/week.

The correlation analyses revealed a strong, significant correlation between the number of hours per week spent listening to rapid speech and performance in the two conditions with the fastest presentation rate (60% TCR in quiet and in noise). In particular, the correlation observed in the 60% TCR condition in noise (r = .88) indicates that about 77% of the variance in speech recognition scores in this condition (r² = .77) could be accounted for by weekly experience in listening to rapid speech. These intriguing findings lend some support to the notion that training older adults with listening exercises involving rapid speech and noise might improve their ability to process temporally degraded speech and speech in a background of noise. The blind adults who listened more often to accelerated speech had an advantage over the other blind adults in the most difficult and taxing of the experimental tasks. Whether this skill would generalize to other listening situations, populations, or speech rates is unclear.
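The variance figure follows from squaring the reported correlation coefficient. For a bivariate Pearson correlation, the proportion of shared variance (the coefficient of determination) is:

```latex
r^2 = (0.88)^2 = 0.7744 \approx 77\%
```

This value describes shared variance between weekly listening hours and recognition scores. On its own, it does not establish that listening experience caused the higher scores.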

Summary and Conclusions

In summary, the results of this study suggest that difficulties in processing rapid speech and speech in noise may not be inevitable byproducts of the aging process. Older blind adults recognized time-compressed speech better than older sighted adults in most time compression conditions. More importantly, the older blind adults performed at levels comparable to those of younger sighted adults in all time-compressed conditions in quiet and noise, whereas the older sighted adults performed more poorly than their younger counterparts in all of these conditions. The findings demonstrate superior auditory abilities among older blind adults, compared with their sighted counterparts, on a taxing speech recognition task that is encountered in daily life. They also have important implications for promoting training in listening to rapid speech to minimize the auditory slowing frequently observed with aging.

Acknowledgments


This research was supported in part by MERIT award R37AG09191 from the National Institute on Aging of the National Institutes of Health (NIH), training grant DC 00046 from the National Institute on Deafness and Other Communication Disorders (NIDCD) of the NIH, and core center grant P30DC004664 from the NIDCD, NIH. The authors gratefully acknowledge Dr. Edward Smith for developing the MATLAB scoring tool used in this experiment and Dr. Gregory R. Hancock for his assistance with the statistical analyses. Finally, the authors extend their sincere appreciation to the many individuals who participated in the experiment, especially the older blind adults who sometimes traveled considerable distances to participate.

References

- Amedi A, Raz N, Pianka P, Malach R, Zohary E. Early ‘visual’ cortex activation correlates with superior verbal memory performance in the blind. Nature Neuroscience. 2003;6(7):758–766. doi: 10.1038/nn1072.

- American National Standards Institute. ANSI S3.6-2004, American National Standard Specification for Audiometers (Revision of ANSI S3.6-1996). New York: American National Standards Institute; 2004.

- Bilger RC, Nuetzel JM, Rabinowitz WM, Rzeczkowski C. Standardization of a test of speech perception in noise. Journal of Speech and Hearing Research. 1984;27:32–48. doi: 10.1044/jshr.2701.32.

- Bopp KL, Verhaeghen P. Aging and verbal memory span: A meta-analysis. Journal of Gerontology: Psychological Sciences. 2005;60B(5):P223–P233. doi: 10.1093/geronb/60.5.p223.

- Carretti B, Borella E, De Beni R. Does strategic memory training improve the working memory performance of younger and older adults? Experimental Psychology. 2007;54(4):311–320. doi: 10.1027/1618-3169.54.4.311.

- Dubno JR, Dirks DD, Morgan DE. Effects of age and mild hearing loss on speech recognition. Journal of the Acoustical Society of America. 1984;76:87–96. doi: 10.1121/1.391011.

- Dubno JR, Horwitz AR, Ahlstrom JB. Benefit of modulated maskers for speech recognition by younger and older adults with normal hearing. Journal of the Acoustical Society of America. 2002;111:2897–2907. doi: 10.1121/1.1480421.

- Fitzgibbons PJ, Gordon-Salant S. Aging and temporal discrimination in auditory sequences. Journal of the Acoustical Society of America. 2001;109:2955–2963. doi: 10.1121/1.1371760.

- Gordon-Salant S, Fitzgibbons PJ. Temporal factors and speech recognition performance in young and elderly listeners. Journal of Speech and Hearing Research. 1993;36:1276–1285. doi: 10.1044/jshr.3606.1276.

- Gordon-Salant S, Fitzgibbons PJ. Recognition of multiply degraded speech by young and elderly listeners. Journal of Speech and Hearing Research. 1995;38:1150–1156. doi: 10.1044/jshr.3805.1150.

- Hancock GR, Klockars AJ. The quest for alpha: Developments in multiple comparison procedures in the quarter century since Games (1971). Review of Educational Research. 1996;66:269–306.

- Henderson-Sabes J, Sweetow RW. Variables predicting outcomes on listening and communication enhancement (LACE) training. International Journal of Audiology. 2007;46:374–383. doi: 10.1080/14992020701297565.

- Hugdahl K, Ek M, Takio F, Rintee T, Tuomainen J, Haarala C, Hämäläinen H. Blind individuals show enhanced perceptual and attentional sensitivity for identification of speech sounds. Cognitive Brain Research. 2004;19:28–32. doi: 10.1016/j.cogbrainres.2003.10.015.

- Kujala T, Alho K, Kekoni J, Hämäläinen H, Reinikainen K, Salonen O, et al. Auditory and somatosensory event-related brain potentials in early blind humans. Experimental Brain Research. 1995;104(3):519–526. doi: 10.1007/BF00231986.

- Kujala T, Palva MJ, Salonen O, Alku P, Huotilainen M, Jarvinen A, et al. The role of blind humans’ visual cortex in auditory change detection. Neuroscience Letters. 2005;379(2):127–131. doi: 10.1016/j.neulet.2004.12.070.

- Muchnik C, Efrati M, Nemeth E, Malin M, Hildesheimer M. Central auditory skills in blind and sighted subjects. Scandinavian Audiology. 1991;20:19–23. doi: 10.3109/01050399109070785.

- Niemeyer W, Starlinger I. Do the blind hear better? Investigations on auditory processing in congenital or early acquired blindness II. Central functions. Audiology. 1981;20:310–315. doi: 10.3109/00206098109072719.

- Peelle JE, Wingfield A. Dissociations in perceptual learning revealed by adult age differences in adaptation to time-compressed speech. Journal of Experimental Psychology: Human Perception and Performance. 2005;31(6):1315–1330. doi: 10.1037/0096-1523.31.6.1315.

- Pfeiffer E. A short portable mental status questionnaire for the assessment of organic brain deficit in elderly patients. Journal of the American Geriatrics Society. 1975;23:433–441. doi: 10.1111/j.1532-5415.1975.tb00927.x.

- Pichora-Fuller MK, Schneider BA, Daneman M. How young and old adults listen to and remember speech in noise. Journal of the Acoustical Society of America. 1995;97:593–608. doi: 10.1121/1.412282.

- Roder B, Rosler F, Neville HJ. Event-related potentials during auditory language processing in congenitally blind and sighted people. Neuropsychologia. 2000;38:1482–1502. doi: 10.1016/s0028-3932(00)00057-9.

- Roder B, Stock O, Bien S, Neville HJ, Rosler F. Speech processing activates visual cortex in congenitally blind humans. European Journal of Neuroscience. 2002;16:930–936. doi: 10.1046/j.1460-9568.2002.02147.x.

- Roup CM, Wiley TL, Safady SH, Stoppenbach DT. Tympanometric screening norms for adults. American Journal of Audiology. 1998;7:55–60. doi: 10.1044/1059-0889(1998/014).

- Salthouse TA. A Theory of Cognitive Aging. Amsterdam: North-Holland; 1985.

- Sommers MS. Factors contributing to the speech perception difficulties of older adults: The importance of speech-specific cognitive abilities. Journal of the American Geriatrics Society. 1997;45:633–637. doi: 10.1111/j.1532-5415.1997.tb03101.x.

- Spehar B, Tye-Murray N, Sommers M. Time-compressed visual speech and age: a first report. Ear and Hearing. 2004;25:565–572. doi: 10.1097/00003446-200412000-00005.

- Studebaker GA. A “rationalized” arcsine transform. Journal of Speech and Hearing Research. 1985;28:455–462. doi: 10.1044/jshr.2803.455.

- Tillman TW, Carhart RC. An expanded test for speech discrimination utilizing CNC monosyllabic words: N.U. Auditory Test No. 6. USAF School of Aerospace Medicine, Report No. SAM-TR-66-55; 1966. doi: 10.21236/ad0639638.

- Tremblay KL, Billings C, Rohila N. Speech evoked cortical potentials: effects of age and stimulus presentation rate. Journal of the American Academy of Audiology. 2004;15:226–237. doi: 10.3766/jaaa.15.3.5.

- Tun PA. Fast noisy speech: Age differences in processing rapid speech with background noise. Psychology and Aging. 1998;13:424–434. doi: 10.1037//0882-7974.13.3.424.

- Tun PA, O’Kane G, Wingfield A. Distraction by competing speech in younger and older listeners. Psychology and Aging. 2002;17:453–467. doi: 10.1037//0882-7974.17.3.453.

- Vance D, Wadley V, Roenker D, Dawson J, Edwards J, Rizzo M. The Accelerate Study: the longitudinal effect of speed of processing training on cognitive performance of older adults. Rehabilitation Psychology. 2007;52(1):89–96.

- Wechsler D. Wechsler Adult Intelligence Scale. 3rd ed. (WAIS-III). San Antonio: The Psychological Corporation; 1997.

- WEDW (Waveform Editing Software). Last updated 2005; last accessed 2/13/10. Accessed from: http://www.asel.udel.edu/speech/Spch_proc/software.html.

- Weeks R, Horwitz B, Aziz-Sultan A, Tian B, Wessinger CM, Cohen LG, et al. A positron emission tomographic study of auditory localization in the congenitally blind. The Journal of Neuroscience. 2000;20(7):2664–2672. doi: 10.1523/JNEUROSCI.20-07-02664.2000.

- Wingfield A, Poon LW, Lombardi L, Lowe D. Speed of processing in normal aging: Effects of speech rate, linguistic structure, and processing time. Journal of Gerontology. 1985;40:579–585. doi: 10.1093/geronj/40.5.579.