Abstract

Objective

To study the effects of payment timing, form of payment, and requiring a social security number (SSN) on survey response rates.

Data Source

Third-wave mailing of a U.S. physician survey.

Study Design

Nonrespondents were randomized to receive immediate U.S.$25 cash, immediate U.S.$25 check, promised U.S.$25 check, or promised U.S.$25 check requiring an SSN.

Data Collection Methods

Paper survey responses were double-entered into statistical software.

Principal Findings

Response rates differed significantly among remuneration groups (χ²(3) = 80.1, p < .0001), with the highest rate in the immediate cash group (34 percent), followed by immediate check (20 percent), promised check without SSN (10 percent), and promised check with SSN (8 percent).

Conclusions

Immediate monetary incentives yield higher response rates than promised incentives in this population of nonresponding physicians. Promised incentives yield similarly low response rates regardless of whether an SSN is requested.

Keywords: Physicians, surveys, response rate, incentives

Survey research is a critical tool in health services research, needed to assess the clinical practice patterns that influence the implementation of evidence-based care in an era of comparative effectiveness research. However, physician surveys suffer from lackluster participation: on average, response rates to surveys of physicians are about 10 percentage points lower than those to surveys of nonphysicians (Asch, Jedrziewski, and Christakis 1997).

Although emerging evidence indicates that response rates are poorly correlated with response bias (Groves 2006; Groves and Peytcheva 2008), in our experience few general medical journals are willing to publish physician surveys with response rates below 50 percent. In a recent survey of scientific journal editors, approximately 90 percent considered response rate at least somewhat important in publication decisions (Carley-Baxter et al. 2009). In addition, virtually none of the surveyed editors reported having changed their response rate standards in the past 10 years, despite widespread evidence over that timeframe of declining response rates to both general population surveys (Steeh et al. 2001; de Leeuw and de Heer 2002; Curtin, Presser, and Singer 2005; Berk, Schur, and Feldman 2007) and surveys of physicians (Cummings, Savitz, and Konrad 2001; Cull et al. 2005).

Survey researchers commonly face the difficult decision of whether to send an additional wave of surveys in hopes of surpassing a response rate threshold that matters for publication in the general medical literature, such as 50 or 60 percent. Deciding whether and how to do so judiciously remains an important challenge. Optimizing physician response rates without introducing response bias also remains a key concern among health services researchers.

Incentives have been shown to increase physician participation in surveys, especially when the incentive is monetary and offered in advance of completing a survey (prepaid) rather than contingent on completion (promised) (Asch, Christakis, and Ubel 1998; Kellerman and Herold 2001; VanGeest, Johnson, and Welch 2007; Flanigan, McFarlane, and Cook 2008). However, the evidence favoring prepaid over promised monetary incentives is undermined by the lack of controlled experiments comparing the two approaches among physicians, so the literature can only be construed as suggestive on this point. Moreover, the extant literature offers little guidance on whether the effectiveness of prepaid monetary incentives varies by method of delivery (i.e., cash versus check).

Finally, from a research subject protection perspective, immediate incentives (check or cash) that do not require participants to disclose a tax identification number such as an SSN allow them to better protect their privacy and confidentiality, breaches of which are the primary risks associated with survey research. Nevertheless, many institutions require tax identification numbers as a condition of dispensing funds to research participants. This requirement can severely constrain the mode and timing of remuneration, each of which has significant implications for response rates. In an era of identity theft, it is important to determine how best to use small, one-time survey incentives to optimize response rates while protecting participants' privacy.

To better understand the effects of payment timing (prepaid versus promised), form of payment (cash versus check), and having to provide an SSN on response rate, response bias, and item nonresponse, we embedded a randomized incentive experiment crossing several of these factors within the third-wave mailing to a nationally representative sample of U.S. physicians. We hypothesized that immediate incentives offered without requiring an SSN would yield higher response rates than promised incentives, that cash would be more effective than a check as an immediate incentive, and that promised incentives not requiring an SSN would yield higher response rates than those requiring one.

METHODS

Sample and Procedures

This study was approved by the Mayo Clinic Institutional Review Board. In May 2009, we mailed a confidential, self-administered, eight-page questionnaire on ethical and moral beliefs to 2,000 practicing U.S. physicians aged 65 or younger, representing all specialties. This random sample was drawn from the AMA Physician Masterfile, a database intended to include virtually all U.S. physicians.

The initial mailing included a cover letter and a book (The Quotable Osler) as a gift, and promised an additional U.S.$25 check to all respondents. Because Mayo Clinic's current institutional policy for research participant remuneration requires collecting participants' SSNs for tax purposes, prospective respondents were instructed to provide their SSN on a form enclosed with their completed survey in order to receive the promised U.S.$25 check.

Physicians who did not respond to the first mailing were sent a second mailing 6 weeks later, which included a cover letter, the survey, and the same promise of a U.S.$25 check to all respondents, contingent on their providing an SSN. Both the first- and second-wave mailings were administered by the Mayo Clinic Survey Research Center. Physicians who did not respond to the second mailing were included in the third and final mailing after another 6 weeks.

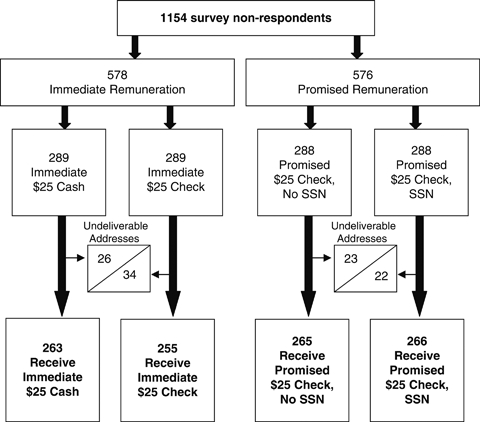

These remaining physicians (n = 1,154) were randomized to receive one of four types of remuneration for completing the survey: immediate U.S.$25 cash (n = 289), immediate U.S.$25 check (n = 289), promised U.S.$25 check not requiring an SSN (n = 288), or promised U.S.$25 check requiring an SSN (n = 288) (Figure 1). To work around the institutional SSN requirement, we contracted with an external vendor to provide the cash, the immediate checks, and the promised checks that did not require an SSN. The promised checks requiring an SSN were processed and disbursed by the Mayo Clinic accounting department.

Figure 1.

Randomization Scheme for Incentive Experiment
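The allocation described above splits 1,154 nonrespondents into arms of 289, 289, 288, and 288. The randomization procedure itself is not reported, so the following is only a hypothetical sketch of one scheme that produces such near-equal arms: shuffle the physician IDs with a seeded generator, then deal them to the four arms in turn. The arm labels, the `assign_arms` function, and the seed value are our own assumptions, not part of the study protocol.

```python
import random
from collections import Counter

# Hypothetical shorthand labels for the four incentive conditions in Figure 1.
ARMS = [
    "immediate_cash",
    "immediate_check",
    "promised_check_no_ssn",
    "promised_check_ssn",
]


def assign_arms(physician_ids, seed=12345):
    """Hypothetical allocation: shuffle the IDs, then deal them round-robin to the arms."""
    rng = random.Random(seed)  # arbitrary seed so the allocation can be reproduced
    shuffled = list(physician_ids)
    rng.shuffle(shuffled)
    # Dealing in turn keeps arm sizes within one of each other.
    return {pid: ARMS[i % len(ARMS)] for i, pid in enumerate(shuffled)}


allocation = assign_arms(range(1154))  # 1,154 third-wave nonrespondents
sizes = Counter(allocation.values())
print([sizes[arm] for arm in ARMS])  # [289, 289, 288, 288]
```

Because 1,154 is not divisible by four, the first two arms in the dealing order receive one extra physician each; which arms those are is fixed by list order here, while who lands in each arm depends on the shuffle.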

Our external vendor coordinated and carried out the mailing of all third-wave survey materials. Physicians in all four groups received a cover letter on Mayo Clinic letterhead signed by the principal investigator; the survey; and a stamped, preaddressed return envelope that routed completed surveys to the Mayo Clinic Survey Research Center. For the two immediate incentive groups, the cash or check was paper-clipped to the cover letter. Physicians in the promised check/no SSN group also received a form containing their address and were asked to return it, with any needed corrections, to ensure they would receive the promised check. Physicians in the promised check/SSN group were asked to complete and return a form on which to record their SSN. Surveys were sent by priority mail.

All materials used by our external vendor in the third-wave mailing (e.g., envelopes and return envelopes) were the same as those used in the previous two mailings. However, the group receiving a U.S.$25 check also received a flyer clarifying that, although the check was generated by the external vendor, the study was being conducted by Mayo Clinic and all completed surveys would be sent directly to Mayo for processing.

Analysis

Mailed paper survey responses were double-entered and imported into SAS version 9.1 (SAS Institute, Cary, NC). The main outcome variable was the response rate in each group, defined as the number of completed surveys divided by the number of physicians who received a survey (American Association for Public Opinion Research 2009). Physicians with undeliverable addresses were therefore excluded from the denominator of response rate calculations.
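As a worked illustration of this definition, the sketch below recomputes the third-wave response rates from the counts in Table 1. It is our own arithmetic, not the authors' SAS code. Delivered counts are the physicians randomized to each arm minus those with undeliverable addresses (for example, 289 minus 263 leaves 26 undeliverable in the immediate cash arm; the four arms together account for the 105 undeliverable surveys).

```python
# (completed surveys, surveys delivered) per third-wave arm, from Table 1.
# Delivered = physicians randomized minus undeliverable addresses.
THIRD_WAVE = {
    "Immediate cash": (90, 263),
    "Immediate check": (50, 255),
    "Promised check, no SSN": (26, 265),
    "Promised check, SSN": (20, 266),
}


def response_rate(completed, delivered):
    """Percentage of physicians who received a survey and returned a completed one."""
    return 100 * completed / delivered


rates = {arm: response_rate(c, d) for arm, (c, d) in THIRD_WAVE.items()}
for arm, rate in rates.items():
    print(f"{arm}: {rate:.0f}%")  # 34%, 20%, 10%, 8%

total_completed = sum(c for c, _ in THIRD_WAVE.values())
total_delivered = sum(d for _, d in THIRD_WAVE.values())
print(f"Third wave overall: {total_completed}/{total_delivered} "
      f"= {response_rate(total_completed, total_delivered):.0f}%")  # 186/1049 = 18%
```

The same formula applied cumulatively after all three waves, 1,032 completes over 1,895 delivered surveys, gives about 54 percent.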

Pearson χ² tests (or Fisher's exact tests when cell counts were below 5) were used to assess differences in overall response rates by incentive group; differences in response rates after stratifying on physician sex, age group, region, and specialty; the characteristics of surveyed physicians, both overall and by incentive group; the characteristics of respondents and nonrespondents within each incentive group; and item nonresponse in a section of the survey assessing participants' moral beliefs.
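The χ² results can be checked directly from the reported counts. The sketch below is a minimal, standard-library implementation of the Pearson χ² test of independence for an r × c table, with a closed-form upper-tail probability that is valid only for integer degrees of freedom. It is not the authors' SAS procedure and omits the Fisher's exact test used for sparse cells. Applied to the overall third-wave counts in Table 1 it gives χ² of about 80.1 on 3 df, and applied to the counts of respondents with one or more missing moral beliefs items it gives p of about .53, in line with the values reported in the Results.

```python
import math


def pearson_chi2(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df


def chi2_sf(x, df):
    """Upper-tail probability P(X >= x) for a chi-square variate with integer df >= 1."""
    half = x / 2
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum of (x/2)**k / k! for k = 0 .. df/2 - 1.
        term = total = 1.0
        for k in range(1, df // 2):
            term *= half / k
            total += term
        return math.exp(-half) * total
    # Odd df: the df = 1 tail is erfc(sqrt(x/2)); each extra 2 df adds one term.
    total = math.erfc(math.sqrt(half))
    term = math.sqrt(2 * x / math.pi) * math.exp(-half)
    for k in range(1, (df - 1) // 2 + 1):
        total += term
        term *= half / (k + 0.5)
    return total


def format_p(p):
    """Report p the way the paper does, with a floor of .0001."""
    return "p < .0001" if p < 1e-4 else f"p = {p:.2f}"


# Rows: incentive arms; columns: (responded, did not respond) among delivered surveys.
response_table = [
    [90, 263 - 90],  # immediate cash
    [50, 255 - 50],  # immediate check
    [26, 265 - 26],  # promised check, no SSN
    [20, 266 - 20],  # promised check, SSN
]
stat, df = pearson_chi2(response_table)
print(f"Response: chi2({df}) = {stat:.1f}, {format_p(chi2_sf(stat, df))}")
# Response: chi2(3) = 80.1, p < .0001

# Rows: arms; columns: respondents with >= 1 vs. 0 missing moral beliefs items.
missing_table = [[10, 80], [9, 41], [2, 24], [2, 18]]
stat2, df2 = pearson_chi2(missing_table)
print(f"Item nonresponse: chi2({df2}) = {stat2:.2f}, {format_p(chi2_sf(stat2, df2))}")
# Item nonresponse: chi2(3) = 2.22, p = 0.53
```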

RESULTS

The overall survey response rate increased from 44 percent to 54 percent (1,032/1,895) after the third-wave mailing. Of the 1,154 nonresponding physicians mailed a survey in the third wave, 105 (9 percent) could not be reached because of undeliverable addresses. Of the remaining 1,049 physicians who received a third-wave survey, 186 (18 percent) returned a completed survey.

Using unadjusted χ² tests, we observed a significant difference in response rates across the four incentive groups (χ²(3) = 80.1, p < .0001). The highest response rate was in the immediate cash group (34 percent, 90/263), followed by immediate check (20 percent, 50/255), promised check without SSN (10 percent, 26/265), and promised check with SSN (8 percent, 20/266) (Table 1). All pairwise differences between groups were statistically significant (p < .01) with one exception: the two groups receiving promised incentives (one requiring an SSN and the other not) did not differ significantly (p = .47). The same pattern of differences across the four incentive groups was observed after stratifying on sex, age, region, and specialty (Table 1).

Table 1.

Physician Response Rates in the Four Incentive Groups, Both Overall and after Stratifying on Physician Characteristics

Values are number/total number (%).

| | Immediate U.S.$25 Cash | Immediate U.S.$25 Check | Promised U.S.$25 Check, No SSN | Promised U.S.$25 Check, SSN | p-Value* |
|---|---|---|---|---|---|
| Overall | 90/263 (34) | 50/255 (20) | 26/265 (10) | 20/266 (8) | <.0001 |
| Characteristic | | | | | |
| Female sex | 22/81 (27) | 11/69 (16) | 6/69 (9) | 6/78 (8) | .002 |
| Age (years) | | | | | |
| <50 | 45/143 (31) | 25/131 (19) | 13/136 (10) | 6/137 (4) | <.0001 |
| 50 or older | 43/115 (37) | 24/116 (21) | 11/114 (10) | 14/118 (12) | <.0001 |
| Region | | | | | |
| South | 30/98 (31) | 21/100 (21) | 6/88 (7) | 3/80 (4) | <.0001 |
| Northeast | 20/46 (43) | 8/52 (15) | 8/63 (13) | 6/79 (8) | <.0001 |
| West | 20/60 (33) | 10/51 (20) | 8/61 (13) | 5/59 (8) | .003 |
| Midwest | 17/51 (26) | 11/47 (23) | 4/49 (8) | 6/47 (13) | .007 |
| Primary specialty | | | | | |
| Primary care | 42/129 (33) | 13/97 (13) | 12/115 (10) | 11/102 (11) | <.0001 |
| Surgery | 10/40 (25) | 10/60 (17) | 5/54 (9) | 3/54 (6) | .03 |
| Procedural specialty | 18/48 (38) | 13/42 (31) | 4/40 (10) | 3/51 (6) | .0001 |
| Nonprocedural specialty | 16/38 (42) | 12/49 (24) | 5/52 (10) | 3/47 (6) | <.0001 |
| Nonclinical | 3/5 (60) | 2/4 (50) | — | 0/6 (0) | .10 |
| Other | 1/3 (33) | 0/3 (0) | 0/4 (0) | 0/4 (0) | .43 |

*Pearson χ² test, or Fisher's exact test when cell counts were <5.

To assess the possibility of response bias, we analyzed the demographic characteristics of physicians who received a survey in the third-wave mailing. Physician sex, age group, region of residence, and specialty did not differ by incentive group. Nor did respondents and nonrespondents differ significantly in sex, age, region, or specialty within any of the four incentive groups, with one exception: in the promised check/SSN group, respondents were more likely than nonrespondents to be aged 50 or older (p = .03).

As a proxy for response quality, we also examined whether the number of missing items in one key content section of the survey (moral beliefs) differed across the four incentive groups. For the 32 moral beliefs questions, neither the mean number of missing items (p = .82) nor the percentage of respondents missing one or more items (p = .53) differed significantly between incentive groups. One or more of the 32 moral beliefs items were missing for 11 percent (10/90) of respondents in the U.S.$25 cash group, 18 percent (9/50) in the U.S.$25 check group, 8 percent (2/26) in the promised U.S.$25 check/no SSN group, and 10 percent (2/20) in the promised U.S.$25 check/SSN group.

DISCUSSION

This study supports the efficacy of prepaid cash incentives for optimizing response rates in physician surveys. Specifically, delivering immediate incentives to nonresponding physicians in a national survey yielded dramatically higher response rates than promised incentives, and immediate cash yielded significantly higher response rates than immediate checks. These incentive strategies allowed us to exceed our goal of a 50 percent overall response rate, which would not have been attained with the standard incentive approach required by our institution.

Our findings are also consistent with recent literature reviews suggesting that prepaid cash incentives are the most effective in encouraging physicians to respond to a survey (VanGeest, Johnson, and Welch 2007; Flanigan, McFarlane, and Cook 2008). However, our study is among the few to test these factors simultaneously, and in a nationally representative physician survey. Most previous studies have demonstrated the effects of monetary incentives in physician surveys within a single paradigm, such as prepaid checks (Kasprzyk et al. 2001; Keating et al. 2008) or promised cash (Gunn and Rhodes 1981); few have compared these factors contemporaneously in a way that allows their relative merits to be assessed.

Contrary to our expectations, requiring an SSN did not directly reduce response rates compared with the promised incentive that did not require one. We had hypothesized that requiring an SSN would heighten physicians' privacy concerns, which would in turn diminish enthusiasm for the survey and reduce participation in this group. It may be that physicians, unlike members of the general population, understand why this information might need to be collected and trust that it will be protected, especially when the request comes from a well-known institution. Nonetheless, institutional policies requiring an SSN for survey research remuneration effectively require a delayed mode of remuneration, which we found significantly impedes response rates. Notably, our data suggest that raising response rates with an alternative mode of remuneration had little if any effect on who responded or on the quality of their responses.

Our study had several limitations. Because we focused on incentives provided in the third mailing of a survey, these findings can be generalized only to the most difficult-to-reach physicians: those who did not respond despite the promise of a U.S.$25 check in two previous mailings. However, because it is precisely this group toward whom efforts to improve response rates should be targeted, our findings nonetheless have important practical implications. Although we did not identify significant differences between respondent and nonrespondent demographics that would suggest response bias, the sensitive content of our survey (physicians' moral and ethical beliefs) may have led some physicians who would otherwise have responded to remain nonrespondents.

The scope of our conclusions is also limited because our experiment was not fully crossed. However, our decision to forgo creating groups for every combination of our three factors (immediate/promised, cash/check, no SSN/SSN) was intentional: we could not envision a scenario in which physicians could reasonably be offered a gift of immediate cash or a check along with a request to provide an SSN. Similarly, we could not envision promising cash while requesting an SSN. We therefore omitted these conditions, even though including them would have allowed a more fully balanced design.

Future work is needed to determine whether the same response patterns hold when these incentives are offered in earlier mailings, which could eliminate the need for a third contact and thus reduce respondent burden. It is also important to note that prepaid incentives, particularly prepaid cash, are the most expensive because nonrespondents and respondents alike receive remuneration. A full-cost analysis is beyond the scope of this paper, but future work should determine whether the higher response rate achieved with prepaid incentives justifies the additional cost of this strategy.
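To make the cost trade-off concrete, here is a rough sketch of incentive dollars spent per completed survey in each third-wave arm. It is not a full-cost analysis and rests on simplifying assumptions of our own: only the U.S.$25 face value counts; every delivered prepaid incentive is treated as spent (an upper bound for checks, which cost nothing if never cashed); promised incentives are paid only to respondents; and postage, vendor fees, and staff time are ignored. The function name `incentive_cost_per_complete` is hypothetical.

```python
INCENTIVE_USD = 25

# (completed surveys, surveys delivered, incentive prepaid?) per third-wave arm, from Table 1.
ARMS = {
    "Immediate cash": (90, 263, True),
    "Immediate check": (50, 255, True),
    "Promised check, no SSN": (26, 265, False),
    "Promised check, SSN": (20, 266, False),
}


def incentive_cost_per_complete(completed, delivered, prepaid):
    """Face-value incentive outlay per completed survey under a simplified, assumed cost model.

    Prepaid arms are charged for every delivered incentive (respondents and
    nonrespondents alike); promised arms are charged only for respondents.
    """
    recipients = delivered if prepaid else completed
    return INCENTIVE_USD * recipients / completed


costs = {arm: incentive_cost_per_complete(*counts) for arm, counts in ARMS.items()}
for arm, cost in costs.items():
    print(f"{arm}: ${cost:.2f} per completed survey")
```

Under these assumptions, immediate cash costs about three times as much per completed survey as either promised check ($73.06 versus $25.00); a full-cost analysis would need to weigh that outlay against the higher yield.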

The importance of surveying physicians will not diminish in the foreseeable future, even as evidence suggests that doing so is becoming increasingly difficult. We must build on the work of others (Kellerman and Herold 2001; McMahon et al. 2003; Cull et al. 2005; VanGeest, Johnson, and Welch 2007) by continuing this line of inquiry in search of the optimal approach to surveying physicians. Otherwise, the physician perspective may not be adequately represented in debates and issues germane to the practice of medicine or to health policy.

Acknowledgments

Joint Acknowledgment/Disclosure Statement: This publication was made possible by Mayo Clinic Department of Medicine funding to Dr. Tilburt and from grant 1 KL2 RR024151 from the National Center for Research Resources (NCRR), a component of the National Institutes of Health (NIH), and the NIH Roadmap for Medical Research. Its contents are solely the responsibility of the authors and do not necessarily represent the official view of NCRR or NIH. Information on NCRR is available at http://www.ncrr.nih.gov/. Information on Reengineering the Clinical Research Enterprise can be obtained from http://nihroadmap.nih.gov. The investigators received valuable support in the design and implementation of the study from the Mayo Clinic Survey Research Center as well as the University of Chicago Survey Lab.

Disclosures: None.

Disclaimers: None.

SUPPORTING INFORMATION

Additional supporting information may be found in the online version of this article:

Appendix SA1: Author Matrix.

Please note: Wiley-Blackwell is not responsible for the content or functionality of any supporting materials supplied by the authors. Any queries (other than missing material) should be directed to the corresponding author for the article.

REFERENCES

- American Association for Public Opinion Research. Standard Definitions: Final Dispositions of Case Codes and Outcome Rates for Surveys. 6th Edition. Deerfield, IL: AAPOR; 2009.

- Asch DA, Christakis NA, Ubel PA. Conducting Physician Mail Surveys on a Limited Budget. A Randomized Trial Comparing $2 Bill Versus $5 Bill Incentives. Medical Care. 1998;36(1):95–9. doi: 10.1097/00005650-199801000-00011.

- Asch DA, Jedrziewski MK, Christakis NA. Response Rates to Mail Surveys Published in Medical Journals. Journal of Clinical Epidemiology. 1997;50(10):1129–36. doi: 10.1016/s0895-4356(97)00126-1.

- Berk ML, Schur CL, Feldman J. Twenty-Five Years of Health Surveys: Does More Data Mean Better Data? Health Affairs (Millwood). 2007;26(6):1599–611. doi: 10.1377/hlthaff.26.6.1599.

- Carley-Baxter LR, Hill CA, Roe DJ, Twiddy SE, Baxter RK, Ruppenkamp J. Does Response Rate Matter? Journal Editors Use of Survey Quality Measures in Manuscript Publication Decisions. Survey Practice. 2009 November. Available at: http://surveypractice.org/2009/10/17/editors-decisions/

- Cull WL, O'Connor KG, Sharp S, Tang SF. Response Rates and Response Bias for 50 Surveys of Pediatricians. Health Services Research. 2005;40(1):213–26. doi: 10.1111/j.1475-6773.2005.00350.x.

- Cummings SM, Savitz LA, Konrad TR. Reported Response Rates to Mailed Physician Questionnaires. Health Services Research. 2001;35(6):1347–55.

- Curtin R, Presser S, Singer E. Changes in Telephone Survey Nonresponse over the Past Quarter Century. Public Opinion Quarterly. 2005;69(1):87–98.

- de Leeuw E, de Heer W. Trends in Household Survey Nonresponse: A Longitudinal and International Comparison. In: Groves RM, Dillman DA, Eltinge JL, Little RJA, editors. Survey Nonresponse. New York: John Wiley & Sons; 2002. pp. 41–54.

- Flanigan T, McFarlane E, Cook S. Conducting Survey Research among Physicians and Other Medical Professionals: A Review of Current Literature. Proceedings of the Survey Research Methods Section, American Statistical Association. 2008. pp. 4136–47.

- Groves R. Nonresponse Rates and Nonresponse Bias in Household Surveys. Public Opinion Quarterly. 2006;70(5):646–75.

- Groves R, Peytcheva E. The Impact of Nonresponse Rates on Nonresponse Bias. Public Opinion Quarterly. 2008;72(2):167–89.

- Gunn WJ, Rhodes IN. Physician Response Rates to a Telephone Survey: Effects of Monetary Incentive on Response Level. Public Opinion Quarterly. 1981;45(1):109–15. doi: 10.1086/268638.

- Kasprzyk D, Montano DE, St Lawrence JS, Phillips WR. The Effects of Variations in Mode of Delivery and Monetary Incentive on Physicians' Responses to a Mailed Survey Assessing STD Practice Patterns. Evaluation and the Health Professions. 2001;24(1):3–17. doi: 10.1177/01632780122034740.

- Keating NL, Zaslavsky AM, Goldstein J, West DW, Ayanian JZ. Randomized Trial of $20 Versus $50 Incentives to Increase Physician Survey Response Rates. Medical Care. 2008;46(8):878–81. doi: 10.1097/MLR.0b013e318178eb1d.

- Kellerman SE, Herold J. Physician Response to Surveys. A Review of the Literature. American Journal of Preventive Medicine. 2001;20(1):61–7. doi: 10.1016/s0749-3797(00)00258-0.

- McMahon SR, Iwamoto M, Massoudi MS, Yusuf HR, Stevenson JM, David F, Chu SY, Pickering LK. Comparison of E-Mail, Fax, and Postal Surveys of Pediatricians. Pediatrics. 2003;111(4, Part 1):e299–303. doi: 10.1542/peds.111.4.e299.

- Steeh C, Kirgis N, Cannon B, DeWitt J. Are They Really as Bad as They Seem? Nonresponse Rates at the End of the Twentieth Century. Journal of Official Statistics. 2001;17(2):227–47.

- VanGeest JB, Johnson TP, Welch VL. Methodologies for Improving Response Rates in Surveys of Physicians: A Systematic Review. Evaluation and the Health Professions. 2007;30(4):303–21. doi: 10.1177/0163278707307899.
