Abstract

We describe the feasibility of audio-enhanced personal digital assistants (APDAs) for data collection with 60 Latino migrant farmworkers. All participants chose to complete APDA surveys rather than using paper-and-pencil. No one left the study prematurely; two (3%) data cases were lost due to technical difficulties. Across all data, .27% missing data were observed: nine missing responses on eight items. Participants took 19 minutes on average to complete the 58-question survey. The factor most influential for completion time was education level. APDA methodology enabled both English- and Spanish-speaking Latino migrant farmworkers to become active research participants with minimal loss of data.

Keywords: migrant farmworker, data collection, data quality

The Latino population in the US is the most rapidly growing ethnic minority with 28 million American residents with Mexican nativity or parents with Mexican birth (U.S. Census Bureau, 2006). An estimated 3–5 million migrant farmworkers are in the US; 90% are of Latino ethnicity, with the majority originating from Mexico (U.S. Department of Labor [USDoL], 2005). As a group, migrant farmworkers display worse health status indicators than the majority population (Kandula, Kersey, & Lurie, 2004; Rubalcave, Teruel, Thomas, & Goldman, 2008; United States Department of Health and Human Services [USDHHS], 2002a). Levels of education influence reading ability, and although the highest grade completed varies by place of birth, foreign-born migrant farmworkers on average complete the sixth grade in contrast to completion of the 11th grade by U.S.-born migrant farmworkers (USDoL). In addition, 81% of the migrant farmworkers speak predominantly Spanish (National Center for Farmworker Health, 2009).

Latino children are under-represented in clinical trials and therapeutic research (Walsh & Ross, 2003). Health disparity research with Latino children and the children of itinerant Latino migrant farmworkers is limited, but necessary to understand group demographics, identify needs, and guide development of health promotion interventions (Holmes, 2006). The challenge is to reach poor, non-English-speaking participants, like migrant farmworkers, for inclusion in research studies.

Health literacy, or the level at which individuals have the capacity to obtain, process, and understand information needed to make appropriate health decisions (USDHHS, 2005, 2008) can have an effect on participation in research studies. For health behavior research, low health literacy may affect a person’s ability to complete complex forms, share personal information, and understand the expectations of all parties during participation in research studies. An individual’s cultural and linguistic competency may affect health literacy. Vulnerable (e.g., minority populations, low-income, immigrant) populations have limited health literacy (USDHHS, 2005; White, Chen, & Atchison, 2008; Zagaria, 2008). Non-English-speaking minorities are more marginalized because of their linguistic differences (Leyva, Sharif, & Ozuah, 2005). To address the barriers of literacy, alternative approaches to collecting research data from vulnerable populations are being created and experimentally tested.

When collecting data from low-literacy or non-English speaking populations, interviewers are often needed to read or translate questions (Aday & Cornelius, 2006). Face-to-face interviews provide the researcher with opportunities to clarify study questions for participants, answer questions, and improve data quality through knowledgeable navigation of the survey (Czaja & Blair, 1996). These benefits should be weighed against the intense time and staffing resources required for face-to-face interviews, as well as the potential introduction of social desirability bias (Couper et al., 1998; Tourangeau & Smith, 1996). Potential sources of error can also be introduced when an instrument that is designed to be self-administered is read to subjects with low levels of literacy (Al-Tayyib, Rogers, Gribble, Villarroel, & Turner, 2002; Lange, 2002).

To address the high cost of face-to-face interviews, as well as the difficulties of reading and navigating paper-based questionnaires, researchers have developed audio computer-assisted self-interviewing (ACASI), which has been used successfully in various populations and shown to improve data quality and standardize survey administration through faster data entry and fewer non-responses (Ramos, Sedivi, & Sweet, 1998). The use of audio recording has been shown to reduce issues of literacy and comprehension among adolescent populations (Al-Tayyib et al., 2002; Romer et al., 1998; Turner et al., 1998). Portability of traditional ACASI systems is difficult, however, due to the fact that desktop or laptop computers are used to implement the surveys (Beebe, Harrison, McRae, Anderson, & Fulkerson, 1998), making this impractical for in-the-field community health and outreach research projects.

For many, personal digital assistants (PDAs) have transformed the management of daily lives and have become research tools. The successful use of electronic devices such as PDAs in research data collection has been reported in studies examining data equivalency of paper-and-pencil versus electronic devices for dietary intake and nutrition surveys (Dale & Hagen, 2007; Green & Kreuter, 2006; Piasecki, Hufford, Solhan, & Trull, 2007), as well as survey administration (Bobula et al., 2005; Saleh et al., 2002; van Griensven et al., 2006) with most studies showing an improvement in the quality of data and acceptability of the technology by study participants. Additionally, PDAs are portable, and they offer flexibility for selection of research venues (Gravlee, 2002).

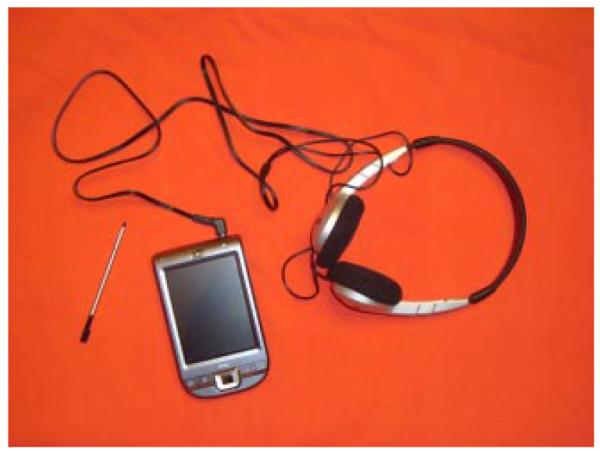

Further modifying the PDA’s use, Trapl et al. (2005) used audio-enhanced PDAs (APDAs) to collect data from a large sample (N=645) of seventh-grade students in an urban school district with poor reading scores and a significant proportion of non-native English speakers. APDAs are PDAs programmed to execute surveys that have corresponding voice files that can be heard by the respondent via personal headphones (see Figure 1). Similar to findings using ACASI, the newly developed APDA system was well received by all students, resulted in improvements in data administration, and showed decreased missing data. In a follow-up study, Trapl (2007) compared the use of the APDA with non-audio PDA and paper-based surveys among seventh-grade students with a range of language abilities. That study found that audio enhancement and the technology component significantly reduced missing data and improved data quality. Although this technology had not been studied in adult populations, it would seem to have the same potential benefits among adult populations with limited literacy skills.

FIGURE 1.

HP iPAQ 111 Classic Handheld PDA that has been audio-enhanced shown with Koss portable headphones.

The purpose of this article is twofold: to describe the feasibility of the implementation of APDAs for data collection with migrant farmworker parents, and to assess data quality as measured by mode preference, survey time to completion, and missing items. Other data quality issues, such as response rates, were not addressed. This pilot study was a component of a larger self-management Phase 1 study of “Dietary Intake and Nutritional Education (DINE) of Migrant Farmworker Children.” The goal of DINE was to design a culturally appropriate health-promotion intervention to reduce the high rates of overweight and obesity in these children and improve healthy eating (Kilanowski & Ryan-Wenger, 2007). Phase 1 is a descriptive study of the demographic characteristics of the migrant farmworker family and children’s dietary intake.

METHODS

Participants

Research participants were recruited from six midwest migrant camps. The target audience was migrant farmworker parent–child dyads. Inclusion criteria were: (a) parent 18 years or older with children 2–13 years who resided in a migrant camp; (b) able to sign consent in Spanish or English; and (c) able to complete short questionnaires. The University Institutional Review Board (IRB) approved this study, and the human subjects’ protection application material was submitted for an internal cultural review. This is a valuable additional step in the IRB process as many of the materials developed for limited English proficiency do not undergo a cultural assessment; direct translation of research surveys into the Spanish language is not sufficient to ensure cultural accuracy (Hendrickson, 2003).

Survey Development and PDA Use

For this study, six HP iPAQ 111 Classic Handheld PDAs (China) with a cost of $280 each were purchased to decrease potential waiting time among respondents and to offset any mechanical failure. A protection plan against damage was purchased at $60 each. Additionally, six 1-gigabyte secure digital cards ($6) were purchased to hold the survey voice files and survey results; portable headphones ($2.50) were purchased for each participant.

The 58-question APDA survey used in this study included a demographic questionnaire, the General Efficacy Scale (Mailbach & Murphy, 1995), Short Acculturation Scale for Hispanics (Marin, Sabogal, Marvin, Otero-Sabogal, & Perez-Stable, 1987), and the U.S. Household Food Security Survey (Nord, Andrews, & Carlson, 2004). Sound-Enhanced Data Collection Application (Stork, 2006) was used to design and execute the PDA-based survey and an institution license ($50) was secured. The administrative design module SEDCAadmin—an iteration of the Surveyor software package—was used to design an English and a Spanish version of the survey on a desktop computer. The resulting surveys were then transferred to the PDA through the device’s syncing software program and executed on the PDA using the SEDCApda module. General instructions for use of the APDA, survey questions, and transitions between surveys were seen and heard on 45 screens. The item text changed from black to red to black-colored font as the text was read to the participant. The number of questions per screen was automatically determined by the SEDCA program. Only survey questions were read to the participants; participants had the option to repeat the voice if needed. Although the response options themselves were not read after each question, many of the response options were included in the brief instructions provided prior to each section of the survey (i.e., Demographic Questionnaire, General Efficacy Scale, Short Acculturation Scale for Hispanics, and the U.S. Household Food Security Survey). The survey questions and response options had reading levels measured with the use of the Flesch–Kincaid readability scale, which is automated in Microsoft Word and has been demonstrated to be reliable and valid (Kincaid, Fishburne, Rogers, & Chisson, 1975). 
Based on simple response options, it was the best judgment of the researchers to lower survey time burden for participants by not having the response options read to the participants after each individual survey question. For the audio component of the survey, the researcher and an assistant used the university library-recording studio to create voice files in a *.wav format. An individual voice file was created for the APDA introduction, explanation of use, and each survey question in both English and Spanish.
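As a rough illustration of the readability measure mentioned above, the following Python sketch applies the published Flesch–Kincaid grade-level formula to word, sentence, and syllable counts. The function name and the example counts are hypothetical and are not taken from the study; the counts themselves would have to come from a word processor or a separate text-analysis tool.

```python
def flesch_kincaid_grade(total_words, total_sentences, total_syllables):
    """Return the Flesch-Kincaid grade level for the given text counts.

    Formula: 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59
    """
    if total_words <= 0 or total_sentences <= 0:
        raise ValueError("word and sentence counts must be positive")
    words_per_sentence = total_words / total_sentences
    syllables_per_word = total_syllables / total_words
    return 0.39 * words_per_sentence + 11.8 * syllables_per_word - 15.59


# Hypothetical counts for one survey section (not study data).
grade = flesch_kincaid_grade(total_words=100, total_sentences=10, total_syllables=140)
print(f"Estimated grade level: {grade:.2f}")
```

Shorter sentences and fewer syllables per word both lower the estimated grade level, which is why simple survey items and brief response options tend to score at lower reading levels.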

Data Collection

Following signed consent, participants were offered either APDA or paper-based versions of the self-administered surveys described above. Although project staff remained neutral when explaining both survey modes, participants who chose the APDA had the additional incentive of being able to keep the portable headphones. APDAs were handed to participants, headphones adjusted, and introductory instructions given until the first sample question was answered (“What is your favorite season?”), at which point participants were able to continue the survey at their own pace. Participants were instructed to raise their hands upon completion of the APDA survey, and the APDAs were then collected by the research team, who directed participants about how to complete the paper-based food frequency survey. Each respondent’s survey start and end times were also noted in the PDA data logs. Results files saved in text format were removed from the PDAs after each data collection session. The resulting *.txt files were manually condensed into a single *.txt file and imported into SPSS 15.0 (via Import Wizard) for data analysis.
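The condensing step above was done by hand. A scripted equivalent might look like the following Python sketch, which is only an illustration: the layout of the SEDCA result files is not described here, so the sketch assumes each *.txt file holds one or more plain-text result lines with no header row, and the function and path names are hypothetical.

```python
from pathlib import Path


def merge_result_files(session_dir, merged_path):
    """Concatenate every *.txt result file in session_dir into merged_path.

    Assumes (hypothetically) that each file contains plain result lines and
    no header row. Returns the number of non-empty lines written.
    """
    session_dir = Path(session_dir)
    merged_path = Path(merged_path)
    # List the sources first so the merged file is never read as an input.
    sources = sorted(
        p for p in session_dir.glob("*.txt")
        if p.resolve() != merged_path.resolve()
    )
    lines_written = 0
    with merged_path.open("w", encoding="utf-8") as out:
        for source in sources:
            for line in source.read_text(encoding="utf-8").splitlines():
                if line.strip():  # skip blank lines
                    out.write(line + "\n")
                    lines_written += 1
    return lines_written
```

The merged file could then be imported into a statistics package in the same way as the manually condensed file.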

Research data were collected in the migrant farmworker residential camp at the conclusion of the workday so no participant had to forfeit work hours to participate in the study. Recruitment advertisements included a notice that information would be collected by paper-and-pencil or APDAs. The participants were compensated $10.00 for their time. The research team included two research assistants who were fluent in Spanish and of Latina heritage.

Measures and Analysis

Evaluation of the feasibility and data quality of the APDA were measured by mode preference, survey time to completion, and missing items. Mode preference was measured by participants’ self-selection of the APDA or paper-and-pencil surveys, participant survey completion rates, anecdotal participants’ comments, and observed behaviors of participants. Survey time to completion was measured in minutes by the internal time clock on the PDA recording start and end times, and mean, median, and range were determined. Missing items were determined by examination of the number of survey questions and the number of answers to those questions. Patterns of omitted questions were reviewed.
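The time-to-completion measure can be sketched in Python as follows. This is a minimal illustration, assuming the start and end times are available as `HH:MM:SS` strings recorded on the same day; the actual PDA log format is not described here, and the function names and sample values are hypothetical.

```python
from datetime import datetime
from statistics import mean, median


def completion_minutes(start, end, fmt="%H:%M:%S"):
    """Return elapsed minutes between two same-day clock times."""
    delta = datetime.strptime(end, fmt) - datetime.strptime(start, fmt)
    return delta.total_seconds() / 60


def summarize(durations):
    """Return the mean, median, and (min, max) range of the durations."""
    return {
        "mean": mean(durations),
        "median": median(durations),
        "range": (min(durations), max(durations)),
    }


# Hypothetical log entries (not study data).
log = [("17:05:00", "17:12:00"), ("17:06:00", "17:25:00"), ("17:10:00", "17:47:00")]
durations = [completion_minutes(start, end) for start, end in log]
summary = summarize(durations)
```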

RESULTS

Sixty migrant farmworker parents agreed to participate in the study. Two participant surveys were not able to be retrieved and saved, thus reducing the final sample to 58. Demographics of the sample are shown in Table 1. Ninety-eight percent of the participants reported ownership of a cell phone, 28% said they had previously used a computer, 83% a calculator, and 12% a PDA.

Table 1.

Demographics of Study Participants (N=58)

| Variable | n | % |
|---|---|---|
| Gender | | |
| Men | 3 | 5 |
| Women | 55 | 95 |
| Language at home | | |
| English | 3 | 5 |
| Spanish | 44 | 76 |
| Both | 8 | 14 |
| Other | 1 | 2 |
| Missing | 2 | 3 |
| Monthly family income | | |
| Less than $500 | 29 | 50 |
| $500–$1000 | 19 | 33 |
| $1000–$2000 | 6 | 10 |
| Above $2000 | 3 | 5 |
| Prefer not to answer | 1 | 2 |
| Marital status | | |
| Married | 35 | 60 |
| Living with partner | 2 | 4 |
| Widowed | 12 | 21 |
| Divorced | 3 | 5 |
| Single parent | 6 | 10 |
| Ethnicity | | |
| Hispanic | 43 | 74 |
| Non-Hispanic | 12 | 21 |
| Other | 3 | 5 |
| Work status | | |
| Full time | 37 | 64 |
| Part time | 20 | 34 |
| Not employed | 1 | 2 |
| Adult self-selected race | | |
| American Indian | 6 | 10 |
| Asian | 1 | 2 |
| Black | 1 | 2 |
| Native Hawaiian | 2 | 3 |
| White | 9 | 16 |
| Multi-group | 7 | 12 |
| Other | 32 | 55 |
| Highest parental education | | |
| Less than 9th grade | 40 | 69 |
| Grades 9–11 | 9 | 15 |
| High school graduate | 5 | 9 |
| Some college | 4 | 7 |

Mode Preference

All participants chose to have the survey administered via APDA; no paper-and-pencil format was chosen. Of the original 60 subjects using the APDA, 13% (8) requested the English version and 87% (52) requested the Spanish version. Everyone who entered the study finished the survey on the APDA.

Survey Time to Completion

Time spent completing the 58-question survey with the APDA averaged 19.47 minutes (SD=7.19), with a range of 7–37 minutes. Those taking the survey in English (M=13.25, SD=5.52) finished more quickly (t-test, p=.007) than those taking the Spanish version (M=20.45, SD=6.96). Time to completion for the surveys with the APDAs was significantly related to personal characteristics: those who had not previously used a computer, chose Spanish to complete the survey, were less acculturated as measured on the Short Acculturation Scale for Hispanics, and had less than a ninth-grade education took longer to complete the APDA survey than their counterparts. When a multivariate model was run (using a general linear model) to assess the overall contribution of the participants’ characteristics to time to completion, only education level remained significant. For respondents who had less than a ninth-grade education, the adjusted mean survey completion time was longer compared to those with a ninth-grade education or higher (21.32 minutes vs. 15.96 minutes, p=.016).

Missing Data

Of the 3,364 survey questions asked of all the subjects (58 participants × 58 questions), only 14 questions randomly dispersed among the 58 participants were unanswered. Six participants (10.3%) had missing data. On any given item, 3.4% was the largest percent of missing data, as only two people missed the same question. Only one person who was having difficulty with the PDA asked for individual attention. She answered the PDA in Spanish, reported other ethnicity, and spoke the Mixteco dialect, which is neither Spanish nor English; thus, none of the acculturation survey response options were appropriate. This participant missed five of six questions on the acculturation survey that asked about the use of language (Spanish or English). Removing this particular respondent as an outlier, the number of study survey questions was reduced to 3,306 (57 participants × 58 questions), and then across all data only .27% missing data was observed. This left nine missing responses on eight items.
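The missing-data percentages reported above follow from simple arithmetic on the counts given in the text, as this short Python check illustrates. The function name is hypothetical; the counts (58 questions, 58 and 57 participants, 14 and 9 missing responses) come from the paragraph above.

```python
def missing_rate(n_missing, n_participants, n_items):
    """Return (total questions asked, percent of responses missing)."""
    total = n_participants * n_items
    return total, 100 * n_missing / total


# All 58 retained participants, 14 unanswered questions.
total_all, pct_all = missing_rate(n_missing=14, n_participants=58, n_items=58)

# After removing the one outlier (5 of the 14 missing responses were hers).
total_trimmed, pct_trimmed = missing_rate(n_missing=9, n_participants=57, n_items=58)

print(total_all, round(pct_all, 2))          # 3364 0.42
print(total_trimmed, round(pct_trimmed, 2))  # 3306 0.27
```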

All respondents completed a paper-and-pencil dietary survey during the same data collection session: 20% of the paper-and-pencil dietary surveys administered at the same time were unable to be scored due to missing, incomplete, or duplicate data. Order of survey mode showed no difference in completion rate for the dietary surveys.

In the Field

This study was conducted at migrant camps located in rural agricultural areas. Electrical supply availability was unknown. PDA batteries were charged to 100% capacity the night before use in the field, and no problems were encountered with low batteries. A portable canopy was in place to shield the participants from the sun and bottled water was offered. The research team brought all that was needed for the conduct of the study. The APDAs allowed participants to answer survey questions privately. Parents, mostly mothers, were comfortably seated in scattered lawn chairs with headphones in place, and some had a child on their lap or sitting nearby (See Figure 2). After the PDA introduction the research team did not interact with the participants except to answer occasional questions or to make sure the PDA screens did not darken due to increased lapsed time between answering questions. The team volunteered to hold crying or fussy babies. We noticed parents were quiet and appeared focused when compared to the researchers’ other studies that only used paper-and-pencil surveys. Parents appeared minimally distracted by surrounding noises or their nearby children, and had fewer conversations with fellow participants. One PDA stylus was lost in the grass of the migrant camp after use; no PDAs were lost, stolen, or damaged.

FIGURE 2.

Migrant farmworker mother using APDA at data collection site.

DISCUSSION

The complete and accurate capture of data is important in research, and the use of handheld devices could improve this endeavor (Hardwick, Pulido, & Adelson, 2007). As has been found in other studies, PDAs are easily transported, relatively inexpensive on a limited grant budget, and easy to use (Gravlee, 2002; Trapl et al., 2005).

Although given a choice for the initial study surveys, all participants chose to use the APDA and no paper-and-pencil surveys were completed. Participant choice of mode may have been influenced by the additional incentive of the headphones; those participating later in the day may have been encouraged to try the new technology by observing other participants using this method. No one left the study prematurely. The APDA methodology worked both for those who preferred English and those who preferred Spanish; participants’ low educational attainment was not a barrier to obtaining complete data. Almost all participants equated a hand-held electronic game to a computer or PDA: their experience with both was limited. Familiarity with their children’s electronic games seemed to give them confidence in using the APDA. Because it was not part of the original study design, we could not compare data quality to that of the paper-and-pencil dietary intake survey administered at the same session. The survey contents were not identical, and although we could not say mode was a determining factor, only 80% of the dietary intake surveys were sufficiently completed to be used in data analysis. Increased survey time to completion was related to having no previous experience using a computer, low acculturation, speaking Spanish, and lower levels of education. The greatest influence on length of time to complete the surveys was education level.

Similar to other computerized survey administration methods, the PDA software prohibited subjects from entering multiple responses to the same questions and entering invalid responses, and it eliminated indistinguishable responses due to handwriting. Personnel budget costs were reduced as there was no need to employ research assistants to enter data; there were no errors due to data entry and less data handling. There was decreased paper and printing, promoting a green environment and reducing budget costs. The PDA time clock allowed us to calculate the number of participants we could accommodate at a given research site, and that information will assist in the scheduling of future data collections. Furthermore, the increased portability and ease of use in the public health research environment was a valuable attribute. Finally, protection of subject privacy in data collection was maintained with the use of security access passwords to enter the PDA database. As a positive sidebar, these Latina women, who live in a traditional machismo society, appeared proud to tell their children and nearby husbands that they had used a mini-computer.

Limitations of this study include a sample restricted to Midwest residents who chose to participate and the absence of information from those who chose not to participate. Anyone who had apprehensions regarding participation would have already self-selected out of the study. Potential technical malfunction can be the chief disadvantage of the adoption of any electronic data collector. The disadvantages of using APDAs for data collection in this study were few. Only 2 of 60 (3%) data cases were lost. The two lost entries occurred in the first two farms visited and might have been a result of the research team’s inexperience. We cannot determine whether this loss was due to a malfunction of the software, an error by the research team, or participants failing to understand the directions for moving through the survey and being too shy to ask for assistance from the research team. Evidence of data quality is limited and based on previous data collection experiences with migrant families.

CONCLUSION

The U.S. Government’s Healthy People 2010 (USDHHS, 2002a,b) initiative makes the reduction of health disparities an overarching goal of our national health agenda. To accomplish this, more research with racial and ethnic minority groups must be conducted. Challenges in conducting research with diverse racial and ethnic groups include varying levels of health literacy; Healthy People 2010 included health communication as a national health agenda priority. Proposed new objectives in Healthy People 2020 (USDHHS, 2009) include increasing the proportion of persons who use electronic personal health management tools. Culturally sensitive health communication is important to provide ethnic and racially diverse research participants with information about the research process (Kreps & Sparks, 2008). In addition to information about the process, methods to improve the quality of gathered research data are needed. To meet the needs of culturally diverse research participants (Andrulis & Brach, 2007) with limited native language literacy or English proficiency, new technologies to assist with reading or understanding the written word must be developed. The use of APDAs as described here with migrant farmworkers addresses this need.

Acknowledgments

Contract grant sponsor: National Center for Research Resources (NCRR); Contract grant number: UL1 RR024989.

Contract grant sponsor: National Institutes of Health, National Institute for Nursing Research Grant; Contract grant number: P30NRO10676.

Footnotes

This publication was made possible by the Case Western Reserve University/Cleveland Clinic CTSA Grant Number UL1 RR024989 from the National Center for Research Resources (NCRR), a component of the National Institutes of Health (NIH) and NIH roadmap for Medical Research. Its contents are solely the responsibility of the authors and do not necessarily represent the official view of NCRR or NIH. Additional support was from the National Institutes of Health, National Institute for Nursing Research grant (P30NRO10676), Center of Excellence to Build the Science of Self-Management: A Systems Approach; the Dietary Intake and Nutrition Education (DINE) Project. The authors would like to thank the research team from Frances Payne Bolton School of Nursing, Kimberly Garcia, RN, and Emily Horacek, Bachelor of Science Nursing student; and the staff of the Center for Health Promotion at Case Western Reserve University, Nathan Gardner and Sarah Boyle.

REFERENCES

- Aday LA, Cornelius LJ. Designing and conducting health surveys: A comprehensive guide. 3rd ed John Wiley & Sons; San Francisco: 2006.

- Al-Tayyib AA, Rogers SM, Gribble JN, Villarroel M, Turner CF. Effect of low medical literacy on health survey measurements. American Journal of Public Health. 2002;92:1478–1480. doi: 10.2105/ajph.92.9.1478.

- Andrulis DP, Brach C. Integrating literacy, culture, and language to improve health care quality for diverse populations. American Journal of Health Behavior. 2007;31:S122–S133. doi: 10.5555/ajhb.2007.31.supp.S122.

- Beebe TJ, Harrison PA, McRae JA, Anderson RE, Fulkerson JA. An evaluation of computer-assisted self-interviews in a school setting. Public Opinion Quarterly. 1998;62:623–632.

- Bobula JA, Anderson LS, Riesch SK, Canty-Mitchell J, Duncan A, Kaiser-Krueger HA, et al. Enhancing survey data collection among youth and adults: Use of handheld and laptop computers. CIN: Computer, Informatics, Nursing. 2005;22:255–265. doi: 10.1097/00024665-200409000-00004.

- Couper MP, Baker RP, Bethlehem J, Clark CZF, Martin J, Nicholls WL, et al., editors. Computer assisted survey information collection. John Wiley & Sons; Hoboken, NJ: 1998.

- Czaja R, Blair J. Designing surveys: A guide to decisions and procedures. Pine Forge Press; Thousand Oaks, CA: 1996.

- Dale O, Hagen KB. Despite technical problems personal digital assistants outperform pen and paper when collecting patient diary data. Journal of Clinical Epidemiology. 2007;60:8–17. doi: 10.1016/j.jclinepi.2006.04.005.

- Gravlee CC. Mobile computer-assisted personal interviewing with handheld computers: The Entryware System 3.0. Field Methods. 2002;14:322–336.

- Green AS, Kreuter MW. Paper or plastic? Data equivalence in paper and electronic diaries. Psychological Methods. 2006;11:87–105. doi: 10.1037/1082-989X.11.1.87.

- Hardwick ME, Pulido P, Adelson W. The use of handheld technology in nursing research and practice. Orthopaedic Nursing. 2007;26:251–256. doi: 10.1097/01.NOR.0000284655.62377.d9.

- Hendrickson SG. Beyond translation. Cultural fit. Western Journal of Nursing Research. 2003;25:593–608. doi: 10.1177/0193945903253001.

- Holmes SM. An ethnographic study of the social context of migrant health in the United States. PLoS Medicine. 2006;3:1776–1793. doi: 10.1371/journal.pmed.0030448.

- Kandula NR, Kersey M, Lurie N. Assuring the health of immigrants: What the leading health indicators tell us. Annual Review of Public Health. 2004;25:357–376. doi: 10.1146/annurev.publhealth.25.101802.123107.

- Kilanowski JF, Ryan-Wenger N. Health status in an invisible population: Carnival and migrant worker children. Western Journal of Nursing Research. 2007;29:100–120. doi: 10.1177/0193945906295484.

- Kincaid JP, Fishburne RP, Rogers RL, Chisson BS. U.S. Department of the Navy; Washington, DC: Derivation of new readability formulas (Automated Readability Index, Fog Count, and Flesch Reading Ease Formula) for Navy enlisted personnel. 1975

- Kreps GL, Sparks L. Meeting the health literacy needs of immigrant populations. Patient Education and Counseling. 2008;71:328–332. doi: 10.1016/j.pec.2008.03.001.

- Lange JW. Methodological concerns for non-Hispanic investigators conducting research with Hispanic Americans. Research in Nursing & Health. 2002;25:411–419. doi: 10.1002/nur.10049.

- Leyva M, Sharif I, Ozuah P. Health literacy among Spanish-speaking Latino parents with limited English proficiency. Ambulatory Pediatrics. 2005;5:56–59. doi: 10.1367/A04-093R.1.

- Mailbach E, Murphy D. Self-efficacy in health promotion research and practice: Conceptualization and measurement. Health Education Research. 1995;10:37–50.

- Marin G, Sabogal F, Marvin BV, Otero-Sabogal R, Perez-Stable E. Development of a Short Acculturation Scale for Hispanics. Hispanic Journal of Behavioral Sciences. 1987;9:183–205.

- National Center for Farmworker Health [Retrieved November 23, 2009];Demographics. 2009 from www.ncfh.org/docs/fs-Migrant%20Demographics.pdf.

- Nord M, Andrews M, Carlson S. United States Department of Agriculture; Washington, DC: Household food security in the United States (No. 11) 2004

- Piasecki TM, Hufford MR, Solhan M, Trull TJ. Assessing clients in their natural environments with electronic diaries: Rationale, benefits, limitations, and barriers. Psychological Assessment. 2007;19:25–43. doi: 10.1037/1040-3590.19.1.25.

- Ramos M, Sedivi BM, Sweet EM. Computerized self-administered questionnaires. In: Couper MP, Baker RP, Bethlehem J, Clark CZF, Martin J, Nicholls WL, et al., editors. Computer assisted survey information collection. John Wiley & Sons; Hoboken, NJ: 1998. pp. 389–408.

- Romer D, Hornik R, Stanton B, Black M, Xiannian L, Ricardo I, et al. “Talking” computers: A reliable and private method to conduct interviews on sensitive topics with children. Journal of Sex Research. 1998;34:3–9.

- Rubalcave LN, Teruel GM, Thomas D, Goldman N. The healthy migrant effect: New findings from the Mexican Family Life Survey. American Journal of Public Health. 2008;98:78–84. doi: 10.2105/AJPH.2006.098418.

- Saleh KJ, Kassim RA, Moussa J, Dykes D, Bottolfson H, Gioe TJ, et al. Comparison of commonly used orthopaedic outcome measures using palm-top computers and paper surveys. Journal of Orthopaedic Research. 2002;20:1146–1151. doi: 10.1016/S0736-0266(02)00059-1.

- Stork P. SEDCA_Sound Enhanced Data Collection Application [Computer software] Don’t Pa..panic Software; Cleveland OH: 2006.

- Tourangeau R, Smith TW. Asking sensitive questions: The impact of data collection, mode, question format, and question context. Public Opinion Quarterly. 1996;60:275–304.

- Trapl ES. Understanding adolescent survey responses: Impact of mode and other characteristics on data outcomes and quality (Doctoral dissertation, Case Western Reserve University, 2007) Dissertation Abstracts International. 2007;68:2303.

- Trapl ES, Borawski EA, Stork PP, Lovegreen LD, Colabianchi N, Cole ML, et al. Use of audio-enhanced handheld computers for school-based data collection. Journal of Adolescent Health. 2005;37:296–305. doi: 10.1016/j.jadohealth.2005.03.025.

- Turner CF, Ku L, Rogers SM, Lindberg LD, Pleck JH, Sonenstein FL. Adolescent sexual behavior, drug use, and violence: Increased reporting with computer survey technology. Science. 1998;280:867–873. doi: 10.1126/science.280.5365.867.

- United States Census Bureau [Retrieved December 9, 2009];Table 7.2 Nativity by Sex and Hispanic Origin. 2006 from http://www.census.gov/population/socdemo/hispanic/cps2006/2006_tab7.2.xls.

- United States Department of Health and Human Services [Retrieved December 8, 2009];A systematic approach to health improvement. Healthy people 2010. 2002a from http://www.healthypeople.gov/DOCUMENT/html/uih/uih_2.htm.

- United States Department of Health and Human Services [Retrieved December 8, 2009];Healthy people 2010. 2002b from http://www.healthypeople.gov.

- United States Department of Health and Human Services [Retrieved December 8, 2009];Healthy people 2020: The road ahead. 2009 from http://www.healthypeople.gov/HP2020.

- United States Department of Health and Human Services. National Network of Libraries of Medicine [Retrieved December 8, 2009];Health literacy. 2008 from http://nnlm.gov/outreach/consumer/hlthlit.html.

- United States Department of Health and Human Services. Office of Disease Prevention and Health [Retrieved December 8, 2009];Health literacy basics. 2005 from http://www.health.gov/communication/literacy/quickguide/factsbasic.htm.

- United States Department of Labor. Office of the Assistant Secretary for Policy Office [Retrieved December 8, 2009];Findings from the National Agricultural Workers Survey (NAWS), 2001–2002: A demographic and employment profile of the United States farmworker (Report No. 9) 2005 from http://www.doleta.gov/agworker/report9/naws_rpt9.pdf.

- van Griensven F, Naorat S, Kilmarx PH, Jeeyapant S, Manopaiboon C, Chaikummao S, et al. Palmtop-assisted self-interviewing for the collection of sensitive behavioral data: Randomized trial with drug use urine testing. American Journal of Epidemiology. 2006;163:271–278. doi: 10.1093/aje/kwj038.

- Walsh C, Ross L. Are minority children under- or overrepresented in pediatric research? Pediatrics. 2003;112:890–895. doi: 10.1542/peds.112.4.890.

- White S, Chen J, Atchison R. Relationship of preventive health practices and health literacy: A national study. American Journal of Health Behavior. 2008;32:227–242. doi: 10.5555/ajhb.2008.32.3.227.

- Zagaria M. Issues in pharmacotherapy. Health literacy: Striving for effective communication. American Journal for Nurse Practitioners. 2008;12:23–26.