Abstract

Deaf bilinguals for whom American Sign Language (ASL) is the first language and English is the second language judged the semantic relatedness of word pairs in English. Critically, a subset of both the semantically related and the semantically unrelated word pairs was selected such that the ASL translations of the two English words were also related in form. Word pairs that were semantically related were judged more quickly when the forms of their ASL translations were also similar, whereas word pairs that were semantically unrelated were judged more slowly when the forms of their ASL translations were similar. A control group of hearing bilinguals without any knowledge of ASL produced an entirely different pattern of results. Taken together, these results constitute the first demonstration that deaf readers activate the ASL translations of written words under conditions in which the translation is neither present perceptually nor required to perform the task.

After decades of interest in the topic of how bilinguals keep their two languages separate, an increasing number of studies show that both languages are active when bilinguals read (Dijkstra, 2005), listen (Marian & Spivey, 2003), and speak (Kroll, Bobb, & Wodniecka, 2006) each language. The growing consensus is that bilinguals do not “switch off” the language not in use, even when it might be beneficial to do so. Cross-language activation has been observed for many different bilingual language pairings (e.g., Dijkstra, 2005; Emmorey, Borinstein, Thompson, & Gollan, 2008), but has not yet been documented in deaf bilinguals.

The present study investigated cross-language activation in deaf individuals whose first language (L1) is American Sign Language (ASL) and whose second language (L2) is English. For hearing unimodal bilinguals, spoken or written words are assumed to activate lexical competitors that are phonologically or orthographically similar. Cross-language activation sometimes disrupts processing, as in English coin and French coin, which share orthography but not phonology or semantics; at other times simultaneous activation speeds processing, as in Dutch appel and English apple, which share phonology, orthography and semantics. But ASL and English have very little phonological or orthographic overlap, because the languages rely on different articulators and ASL lacks a widely used written system. Thus, if cross-language activation is observed in deaf ASL-English bilinguals, it would indicate either that English orthography can map directly onto ASL phonology despite the lack of form relatedness, or that cross-language activation does not require form-based mediation. The goal of this study is to determine whether deaf bilinguals activate signs when reading words in the absence of explicit ASL input.

Thierry and Wu (2007) investigated L1 activation during bilingual written-word processing in the L2 by asking Chinese-English bilinguals to decide whether two English words, such as novel and violin, were semantically related. Although the task was performed in English (L2) only, half of the word pairs had form-related translations in Chinese. For example, novel and violin share a character when translated into Chinese. The amplitude of the N400 response in the ERP record was reduced when the Chinese translations of the English words shared a character. Monolingual English speakers did not show this effect, suggesting bilinguals implicitly activate L1 translations while reading L2 words even across languages with different orthographic systems.

We adapted the semantic relatedness paradigm from Thierry and Wu (2007) to ask whether deaf readers activate the ASL translations of English words. Thierry and Wu's results indicate that the bilingual's two languages need not share the same specific orthographic or phonological forms for parallel activation to occur. However, English and Chinese represent their phonology and orthography in the same modality. In the current study, we ask whether activation can spread across languages in the absence of phonological and orthographic representations in the same modality. Instead of sharing characters, the ASL translations of the English words in our experiment had commonalities in their manual form. ASL signs vary along four formational parameters: handshape, location, movement and orientation (Battison, 1978; Stokoe, Casterline, & Croneberg, 1965). Studies have shown that these parameters influence psycholinguistic processing (e.g., Bellugi, Klima, & Siple, 1975). We selected English word pairs whose translations shared several formational parameters, and compared responses to these pairs with responses to English word pairs whose translations were unrelated in form. If signers perform the English judgment without activating ASL, the translations should have no effect. However, if ASL is activated when processing English print, signers should find it difficult to reply NO to two words such as movie and paper, which differ in meaning, because the ASL signs they activate are similar in form (see Figure 1). Likewise, they should be faster to reply YES to two words that are similar in meaning, such as duck and bird, when the ASL signs they activate are also similar in form. Because the task is performed in English only, there is no reason to expect ASL to be activated, unless signers routinely activate signs while reading English words.

Figure 1.

ASL signs for MOVIE (left) and PAPER (right).

Experiment 1: Deaf ASL-English Bilinguals

Participants

Nineteen deaf adults (11 female) were selected from a pool of 52 deaf participants. Criteria for inclusion were prelingual hearing loss of 90 dB or greater in the better ear, age 18 to 55 years, fluency in ASL, and an English reading grade equivalent of 8.9 or higher. The 19 ASL-English bilinguals were highly proficient in ASL (ASL Sentence Reproduction Test, ASL-SRT: M = 26, range [19, 32], see Footnote 1; Hauser, Paludneviciene, Supalla, & Bavelier, 2008) and in English (Passage Comprehension subtest of the Woodcock-Johnson III: M = 38, range [35, 45]). Two had completed high school; all others had attended college, and four had completed a degree (3 BA/BS, 1 MA).
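The four inclusion criteria amount to a conjunctive filter over the candidate pool. The sketch below is illustrative only: the `Candidate` record and its field names are hypothetical, the age bounds are assumed to be inclusive, and ASL fluency is modeled as a yes/no flag because no numeric cutoff is reported.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """Hypothetical screening record; field names are illustrative assumptions."""
    prelingual_loss: bool        # hearing loss occurred before language acquisition
    better_ear_loss_db: float    # hearing loss in the better ear, in dB
    age_years: int
    fluent_in_asl: bool          # no numeric ASL cutoff is reported, so a flag is used
    reading_grade_equiv: float   # English reading grade equivalent


def is_eligible(c: Candidate) -> bool:
    """Return True only if all stated inclusion criteria hold."""
    return (
        c.prelingual_loss
        and c.better_ear_loss_db >= 90       # 90 dB or greater in the better ear
        and 18 <= c.age_years <= 55          # age range, assumed inclusive
        and c.fluent_in_asl
        and c.reading_grade_equiv >= 8.9     # Grade 8.9 or higher
    )


pool = [
    Candidate(True, 95, 30, True, 10.2),    # meets every criterion
    Candidate(True, 95, 30, True, 7.5),     # reading level too low
    Candidate(False, 100, 25, True, 12.0),  # hearing loss not prelingual
]
selected = [c for c in pool if is_eligible(c)]
print(len(selected))  # 1
```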

Materials

One hundred and twenty English word pairs were divided between two response conditions. Sixty pairs were semantically related (e.g., heart-brain), and 60 were semantically unrelated (e.g., baby-lion). The experimental items were a subset of these: 32 semantically related and 34 semantically unrelated pairs. Fourteen of the semantically related pairs had phonologically related translations in ASL (e.g., bird-duck), and 16 of the semantically unrelated pairs had phonologically related translations (e.g., movie-paper). Phonological similarity was defined as sharing a minimum of two formational parameters. The remaining 54 word pairs were fillers.
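The similarity criterion (at least two shared formational parameters) can be expressed as a count over the four parameters named in the introduction. This is a minimal sketch assuming each parameter can be coded as a single categorical label, which simplifies real sign phonology; the glosses and parameter codes below are hypothetical placeholders, not transcriptions of actual ASL signs.

```python
from typing import NamedTuple

# The four formational parameters of a sign.
PARAMETERS = ("handshape", "location", "movement", "orientation")


class Sign(NamedTuple):
    gloss: str
    handshape: str
    location: str
    movement: str
    orientation: str


def shared_parameters(a: Sign, b: Sign) -> int:
    """Count how many of the four parameters have identical codes."""
    return sum(getattr(a, p) == getattr(b, p) for p in PARAMETERS)


def phonologically_related(a: Sign, b: Sign, minimum: int = 2) -> bool:
    """Apply the 'share at least two parameters' criterion."""
    return shared_parameters(a, b) >= minimum


# Hypothetical signs with placeholder parameter codes.
sign_x = Sign("SIGN-X", "hs1", "loc1", "mov1", "ori1")
sign_y = Sign("SIGN-Y", "hs2", "loc1", "mov2", "ori1")  # shares location, orientation
sign_z = Sign("SIGN-Z", "hs3", "loc2", "mov1", "ori2")  # shares movement only

print(shared_parameters(sign_x, sign_y))       # 2
print(phonologically_related(sign_x, sign_y))  # True
print(phonologically_related(sign_x, sign_z))  # False
```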

Semantic similarity ratings on a 7-point scale were collected from 27 hearing English monolinguals. Items rated between 2.75 and 4.0 were eliminated. Stimulus pairs were also rated by five deaf ASL-English bilinguals, and any pair that the majority of these informants did not assign to the intended semantic condition was excluded. Because it was impossible to use objective measures to identify all lexical features that might vary across the critical pairs, the set of 120 word pairs was first presented in the experimental task to 13 hearing English monolinguals with no knowledge of ASL. Item analyses were then performed on the monolingual data to identify a subset of the materials for which the monolinguals showed no effect of ASL phonology (see Appendix 1). A subject analysis of the monolingual data likewise showed no effect of ASL phonology on the selected subset (see Figure 2). Stimulus pairs without phonologically related ASL translations were then chosen to match the pairs with phonologically related ASL translations on word length, number of syllables and frequency, using statistics from the English Lexicon Project database (http://elexicon.wustl.edu/). There were no differences in these characteristics across conditions (see Table 1).
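The two exclusion rules for the semantic conditions can be sketched as a per-pair filter. This is an illustration under assumptions not stated in the text: the monolingual rating is treated as a single mean value per pair, the 2.75 to 4.0 band is taken to be inclusive, and each deaf informant's judgment is coded as a True/False "related" decision. The function name `keep_pair` is hypothetical.

```python
from typing import Sequence, Tuple


def keep_pair(mean_rating: float,
              intended_related: bool,
              informant_judgments: Sequence[bool],
              band: Tuple[float, float] = (2.75, 4.0)) -> bool:
    """Apply the two stated exclusion rules to one word pair.

    mean_rating: monolingual similarity rating on the 1-7 scale.
    intended_related: True if the pair is meant for the related condition.
    informant_judgments: one True/False 'related' judgment per deaf informant.
    """
    low, high = band
    # Rule 1: ratings in the ambiguous middle band are eliminated
    # (inclusive bounds are an assumption).
    if low <= mean_rating <= high:
        return False
    # Rule 2: a majority of informants must assign the pair to its intended condition.
    agreeing = sum(judgment == intended_related for judgment in informant_judgments)
    return agreeing > len(informant_judgments) / 2


print(keep_pair(5.3, True, [True, True, True, True, False]))    # True
print(keep_pair(3.2, True, [True] * 5))                         # False: ambiguous rating
print(keep_pair(1.6, False, [True, True, True, False, False]))  # False: majority disagrees
```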

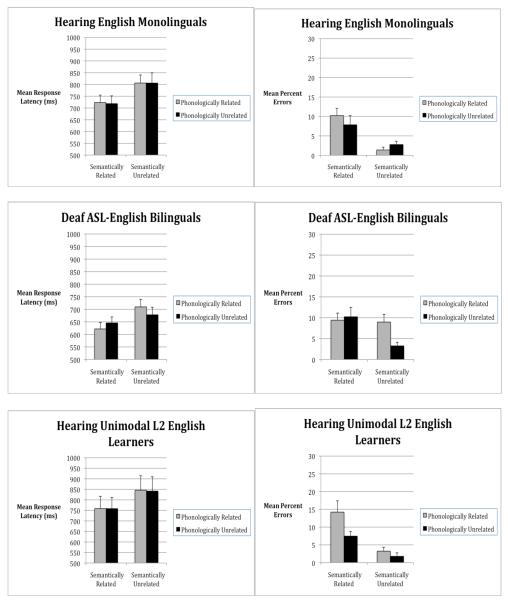

Figure 2.

Mean latencies (in milliseconds; left) and percent errors (right), with standard error bars, in the semantic judgment task as a function of the semantic relationship and the phonological form of the translation in ASL, for hearing monolinguals (top), deaf ASL-English bilinguals (middle) and hearing L2 English learners (bottom).

Table 1.

Lexical characteristics of the English stimuli by condition

| Measure | Semantically unrelated, phonologically unrelated | Semantically unrelated, phonologically related | t-test | Semantically related, phonologically unrelated | Semantically related, phonologically related | t-test |
|---|---|---|---|---|---|---|
| Semantic similarity rating (1–7) | 1.61 | 1.61 | n.s. | 5.32 | 5.36 | n.s. |
| Word length (# letters) | 5.72 | 5.50 | n.s. | 5.47 | 6.11 | n.s. |
| # Syllables | 1.78 | 1.67 | n.s. | 1.75 | 1.82 | n.s. |
| HAL log frequency | 10.14 | 9.78 | n.s. | 9.69 | 9.49 | n.s. |

Procedure

Participants first completed a background questionnaire and the language proficiency tasks. Experimental trials began with a 500 ms fixation cross. Two lower-case English words were then presented sequentially, centered on the computer screen. The first word appeared for 500 ms. Following a 500 ms interval, the second word was presented until the participant responded, for up to 2500 ms. Participants were asked to respond with their dominant hand when the words were "related in meaning" and with their non-dominant hand when the words were "not related in meaning". RT was measured to the nearest millisecond from the onset of the second word. Participants received feedback on their accuracy during 10 practice trials.
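The trial timing above implies a stimulus onset asynchrony (SOA) of 1000 ms between the two words (500 ms exposure plus a 500 ms interval). The sketch below lays out the event onsets; it assumes the first word appears immediately after the fixation cross, which the text does not state explicitly. The constant and function names are illustrative.

```python
# Durations from the procedure description (ms).
FIXATION_MS = 500
WORD1_MS = 500
BLANK_MS = 500             # interval between first-word offset and second-word onset
RESPONSE_WINDOW_MS = 2500  # second word stays up until a response, at most this long


def trial_timeline() -> dict:
    """Onset times in ms from trial start, assuming no gap after the fixation cross."""
    word1_onset = FIXATION_MS
    word2_onset = word1_onset + WORD1_MS + BLANK_MS
    return {
        "fixation": 0,
        "word1": word1_onset,
        "word2": word2_onset,
        "deadline": word2_onset + RESPONSE_WINDOW_MS,
        "soa": word2_onset - word1_onset,  # stimulus onset asynchrony
    }


def reaction_time(response_ms: int) -> int:
    """RT is measured from the onset of the second word."""
    return response_ms - trial_timeline()["word2"]


t = trial_timeline()
print(t["soa"])             # 1000
print(reaction_time(2133))  # 633
```

The SOA does not depend on the fixation assumption, since it is fixed by the first word's duration and the interval that follows it.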

After completing the experiment, participants translated each English word into ASL. If they did not produce the expected sign, the trial was eliminated unless the response still fit the condition criteria (1.2% of responses were eliminated for this reason). RTs under 300 ms or more than 2.5 s.d. from the mean (2.9% of the responses) were identified as outliers and also removed. Participants whose overall accuracy was below 85% were excluded from the analysis. One participant was excluded for this reason. For the remaining participants inaccurate responses were removed from the RT analysis and replaced with condition means.
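The data-cleaning steps (RT floor and SD cutoff, the 85% accuracy criterion, and replacement of error RTs with condition means) can be sketched as three small functions. Several details are assumptions because the text does not specify them: the mean and SD are computed per participant after the 300 ms floor is applied, the condition mean is taken over that participant's correct trials, and trials use a hypothetical `(condition, rt, correct)` tuple format with placeholder condition labels.

```python
from statistics import mean, stdev


def trim_rts(rts, floor_ms=300, sd_cutoff=2.5):
    """Drop RTs below floor_ms, then RTs more than sd_cutoff SDs from the mean.

    Assumption: mean and SD are computed per participant after the floor is applied.
    """
    above_floor = [rt for rt in rts if rt >= floor_ms]
    m, s = mean(above_floor), stdev(above_floor)
    return [rt for rt in above_floor if abs(rt - m) <= sd_cutoff * s]


def keep_participant(n_correct, n_trials, threshold=0.85):
    """Participants whose overall accuracy is below 85% are excluded."""
    return n_correct / n_trials >= threshold


def fill_errors(trials):
    """Replace RTs of inaccurate trials with the mean RT of correct trials
    in the same condition (assumed to be computed within one participant).

    trials: list of (condition, rt, correct) tuples (hypothetical format).
    """
    correct_by_condition = {}
    for condition, rt, correct in trials:
        if correct:
            correct_by_condition.setdefault(condition, []).append(rt)
    condition_means = {c: mean(v) for c, v in correct_by_condition.items()}
    return [rt if correct else condition_means[condition]
            for condition, rt, correct in trials]


raw = [250, 580, 600, 620, 610, 590, 605, 595, 615, 585, 2000]
print(trim_rts(raw))               # 250 (floor) and 2000 (beyond 2.5 SD) are removed
print(keep_participant(100, 120))  # False: 83.3% is below 85%
print(fill_errors([("cond-A", 600, True), ("cond-A", 640, True), ("cond-A", 900, False)]))
```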

Results

A 2 (Semantics) × 2 (Phonology) repeated measures ANOVA across subjects (F1) and items (F2) revealed effects of ASL similarity on semantic relatedness RTs. Participants were significantly faster to respond to semantically related (633 ms) than to unrelated (694 ms) English pairs, F1(1, 17) = 17.67, p < .001, ηp² = .510, F2(1, 62) = 21.29, p < .001, ηp² = .256. Critically, the interaction of Semantics and Phonology was also significant, F1(1, 17) = 11.14, p < .01, ηp² = .396, F2(1, 62) = 4.49, p < .05, ηp² = .068. Pairwise comparisons indicated that participants were faster to accept semantically related words with phonologically related ASL translations (622 ms) than with phonologically unrelated ASL translations (645 ms), p < .02, but slower to reject semantically unrelated words with phonologically related translations (709 ms) than with phonologically unrelated translations (678 ms), p < .05. (See Figure 2.)
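The reported cell means and test statistics can be cross-checked with a few lines of arithmetic. The sketch below derives the marginal means and the two simple effects of ASL phonology from the four cell means, the error degrees of freedom from the sample sizes (18 analyzed participants; 66 experimental items, where 18 = 32 − 14 and 18 = 34 − 16 are the phonologically unrelated cells), and partial eta squared from each F ratio via ηp² = F·df1 / (F·df1 + df2). Two assumptions: the marginals are unweighted averages of the cells, and the F2 error term treats the four cells as independent item groups. Because the cell means are rounded, the marginals come out half a millisecond from the reported 633 and 694 ms.

```python
# Reported cell means (ms) for the deaf group, keyed by (semantics, ASL phonology).
cells = {
    ("related", "phon_related"): 622,
    ("related", "phon_unrelated"): 645,
    ("unrelated", "phon_related"): 709,
    ("unrelated", "phon_unrelated"): 678,
}


def semantic_marginal(semantics):
    """Unweighted mean of the two phonology cells at one level of semantics."""
    return (cells[(semantics, "phon_related")] + cells[(semantics, "phon_unrelated")]) / 2


# Simple effects of ASL phonology at each level of semantics.
facilitation = cells[("related", "phon_unrelated")] - cells[("related", "phon_related")]
interference = cells[("unrelated", "phon_related")] - cells[("unrelated", "phon_unrelated")]


def partial_eta_squared(f, df_effect, df_error):
    """Recover partial eta squared from an F ratio: F*df1 / (F*df1 + df2)."""
    return f * df_effect / (f * df_effect + df_error)


# Error degrees of freedom implied by the design.
n_subjects = 19 - 1             # one participant excluded for accuracy
n_items = 14 + 18 + 16 + 18     # experimental pairs per cell, 66 in total
df1_error = n_subjects - 1      # 17 for the by-subjects (F1) analysis
df2_error = n_items - 4         # 62 for the by-items (F2) analysis, four cells

print(semantic_marginal("related"), semantic_marginal("unrelated"))  # 633.5 693.5
print(facilitation, interference)                                    # 23 31
print(round(partial_eta_squared(17.67, 1, df1_error), 3))            # 0.51
print(round(partial_eta_squared(4.49, 1, df2_error), 3))             # 0.068
```

The opposite signs of the two simple effects (23 ms faster for related pairs, 31 ms slower for unrelated pairs) are what produce the crossover interaction.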

There were no main effects or interactions for accuracy. However, the pattern of errors rules out a speed-accuracy trade-off for this participant group. Participants made more errors in the conditions in which responses were slower (semantically related, but phonologically unrelated; and semantically unrelated but phonologically related). The evidence indicates that proficient ASL-English bilinguals access ASL translations when reading English words for meaning.

Experiment 2: Hearing L2 English Controls

Participants

Fifteen hearing adult L2 learners of English (13 female) were recruited. Participants with a range of first languages were selected to avoid consistent effects of L1 co-activation on the L2 English task. L1s included Chinese, German, Ghomala, Hungarian, Luo, Russian and Spanish. All participants were graduate or undergraduate students at the University of New Mexico and were 18 to 55 years old. Participants' English proficiency was evaluated with the Passage Comprehension subtest of the Woodcock-Johnson III Tests of Achievement (M = 38, range [35, 45]).

Materials and Procedure

The materials and procedure were identical to those used in Experiment 1, except that there was no assessment of ASL proficiency and participants were not asked to translate the English words into ASL. Of the correct responses, 1.2% were identified as outliers and removed. Two participants were not included in the analysis because their overall accuracy was below 85%. For the remaining 13 participants, inaccurate responses were removed from the RT analysis and replaced with condition means.

Results

Like the deaf bilinguals, the hearing bilinguals were faster to respond to semantically related (761 ms) than to semantically unrelated (844 ms) English word pairs, F1(1, 12) = 6.88, p < .05, ηp² = .364, F2(1, 62) = 9.90, p < .001, ηp² = .324 (see Footnote 2). However, there was no interaction of semantics and phonology in the RTs, F1(1, 12) = .218, p = .649, ηp² = .018, F2(1, 62) = .017, p = .90, ηp² = .001. The hearing bilinguals made significantly more errors on the semantically related word pairs (11%) than on the semantically unrelated word pairs (2.5%), F1(1, 12) = 13.73, p < .01, ηp² = .534, F2(1, 62) = 5.43, p < .01, ηp² = .208. There was also a main effect of phonology on accuracy in the subject analysis, F1(1, 12) = 6.54, p < .05, ηp² = .353, that approached significance in the item analysis, F2(1, 62) = 3.51, p = .07, ηp² = .042. Hearing bilinguals made significantly more errors on word pairs with phonologically related ASL translations (9%) than on word pairs with phonologically unrelated ASL translations (5%; see Figure 2). Crucially, this pattern differs from that observed for the deaf bilinguals, who made more errors on word pairs with phonologically related ASL translations if and only if the phonological relationship conflicted with the semantic relationship (i.e., phonologically related but semantically unrelated). A likely explanation of this result for the hearing bilinguals is that item selection for the phonologically related conditions was more constrained by the need to match items on ASL phonology, resulting in item pairs whose relationship is not obvious (e.g., complain-disgust) without knowledge of their phonological similarity in ASL. English monolinguals may have been more likely to consider these words semantically related because they are familiar with a broader range of meanings than non-native speakers of English are. Nor was there an interaction of semantics and phonology in the accuracy data for the hearing bilinguals, F1(1, 12) = 2.09, p = .17, ηp² = .148, F2(1, 62) = 1.34, p = .25, ηp² = .021.

Discussion

These results provide compelling evidence that deaf bilinguals activate signs while processing written words of a spoken language. In a previous attempt to detect cross-language activation in ASL-English bilinguals, Hanson and Feldman (1989) presented deaf adults with an English lexical decision task in which English primes and targets had varying morphological relationships. They found facilitation when the English words shared a morpheme, but not when the ASL translations of the prime and the target shared a morpheme. Priming effects across languages may be too fleeting to be detected in a task that includes multiple intervening items between prime and target. However, another recent study (Ormel, Hermans, Knoors, & Verhoeven, under review) reported results that converge with those presented here. They found that the time deaf children took to decide whether a written word in Dutch matched a picture was influenced by the iconicity and phonology of the sign translation of the word and picture. While it is clear from these results that signs are active during written word processing, the study design does not allow us to conclude with certainty whether English orthography maps directly to ASL phonology, or whether semantic mediation plays an intervening role. Several factors that were not controlled in the current study could provide further insight into this question in future investigations, including the phonological parameters shared by the translation equivalents, the morphological relationship between those items, and the prevalence of initialization or mouthing as a standard component of the translation equivalents.
One sensible prediction would be that different phonological parameters of signs influence cross-language activation differently, as has been found in sign recognition and priming studies (Carreiras, Gutierrez-Sigut, Baquero, & Corina, 2008; Dye & Shih, 2006; Mayberry, 2007; Morford & Carlson, in press; Orfanidou, Adam, McQueen, & Morgan, 2009).

A counterintuitive finding in both our study and that of Thierry and Wu (2007) is that activation of the L1 translation occurred in relatively proficient bilinguals. Models of L2 lexical development (e.g., Kroll & Stewart, 1994) suggest that the translation equivalent may be important during early stages of L2 learning, but that skilled L2 users can access the meaning of L2 words without L1 mediation (Kroll, Michael, Tokowicz, & Dufour, 2002; Sunderman & Kroll, 2006). Past evidence for cross-language activation in proficient bilinguals has been restricted to lexical form relatives, i.e., orthographic or phonological neighbors of the target word (e.g., Dijkstra, 2005). The new findings raise questions about how proficiency is related to cross-language activation. Although the deaf participants in the present study were highly skilled in English, the results indicate that ASL was active during this English-only task. Possibly, a learning history in which signs are presented together with English print creates co-activation across languages (see Footnote 3) that persists even after individuals have attained full proficiency in their L2.

How does activation spread between words in two languages that differ in modality? Deaf bilinguals may be able to activate English and ASL phonological representations simultaneously without competition. Casey and Emmorey (2009) documented the tendency of hearing ASL-English bilinguals to produce ASL signs while speaking English. Subsequently, Emmorey, Petrich, and Gollan (2009) demonstrated that while there is a cognitive cost to generating a message in two modalities, there are comprehension benefits for the interlocutor. An implication of these results is that inhibition levels may be reduced in bilinguals whose languages are produced in different modalities. Recently, Emmorey, Luk, Pyers, and Bialystok (2008) showed that hearing bimodal bilinguals do not appear to exhibit the same cognitive advantages in executive function as unimodal bilinguals, suggesting that although both languages may be active, they may not compete for selection in the same way as two spoken languages. These findings for hearing bimodal bilinguals have yet to be investigated in deaf bimodal bilinguals. The tendency of deaf bilinguals to produce ASL independently of spoken words, and to learn English print before or in tandem with spoken English word forms, may have ramifications for the internal structure of their bilingual lexicon. The interaction between semantics and ASL phonology in our study may reflect the way in which the stronger of the two languages modulates feedback to the semantics.

Our results cannot, however, be attributed entirely to the unique properties of bimodal bilingualism because they are similar to those reported by Thierry and Wu (2007) for unimodal Chinese-English bilinguals. The similar pattern suggests that another critical factor may be the cross-language form difference. With no cross-language overlap in orthography or phonology, there may be increased reliance on semantics. However, the level of English-reading fluency in both ASL-English and Chinese-English bilinguals would make it more likely that the activation of the translation is a consequence of semantic access rather than a mediator to meaning. For less proficient L2 readers, the translation may function to mediate access and provide a critical link to meaning, as proposed by the Revised Hierarchical Model (Kroll & Stewart, 1994). For more proficient L2 readers, that link may be unnecessary, but under conditions that permit or encourage access to the translation, it may enhance the nuances of meaning available to the L2.

A recent study by Guo, Misra, Tam, and Kroll (under review) provides some support for this claim. Proficient Chinese-English bilinguals indicated whether a Chinese word was the correct translation of an English word. On trials on which the Chinese word was not the correct translation, it was similar to the correct translation in form or in meaning. When the stimulus onset asynchrony (SOA) between the two words was long (750 ms), providing sufficient time to generate the translation, bilinguals were sensitive to both the form and the meaning of the translation. However, when the SOA was short (300 ms), they were sensitive to the meaning but not the form of the translation, suggesting that access to the translation followed rather than preceded access to the meaning of the L2 English word. Because both the present study (SOA = 1000 ms) and Thierry and Wu (2007) used relatively long SOAs, it is possible that the proficient bilinguals in each study used the additional time to retrieve the L1 translation after they understood the meaning of the L2 English word. If this is correct, reducing the SOA should reduce or eliminate the effect of the translation equivalent.

In sum, the results of the present study show that deaf ASL-English bilinguals activate the ASL translations of written words in English even when the task does not explicitly require the use of ASL. Like other recent bilingual studies, these data suggest a high degree of activity among alternatives in the language not in use. Unlike prior studies, our results demonstrate that cross-language interactions occur across modality, suggesting that parallel activation does not depend on ambiguity in lexical form. Instead, they demonstrate a universal feature of the architecture of the bilingual lexicon that appears to function at a relatively abstract level of representation and processing.

Acknowledgments

We thank our participants and Richard Bailey, Brian Burns, Mark Minnick and Gabriel Waters for help in data collection and analysis. This research was supported by the National Science Foundation Science of Learning Center Program, under cooperative agreement number SBE-0541953. The writing of this article was also supported by NSF Grant BCS-0418071 and NIH Grant R01-HD053146 to Judith Kroll.

Appendix 1

English word pairs with phonologically related ASL translations

| Semantically unrelated: Word 1 | Semantically unrelated: Word 2 | Semantically related: Word 1 | Semantically related: Word 2 |
|---|---|---|---|
| blood | bread | alligator | crocodile |
| butter | soap | bird | duck |
| cleaning | counting | complain | disgust |
| earth | melon | congress | senate |
| finish | gesture | detective | policeman |
| horse | uncle | excited | emotion |
| make | lock | girl | aunt |
| nice | week | king | queen |
| paper | movie | know | think |
| religion | tendency | morning | evening |
| stars | socks | mother | father |
| summer | ugly | mouse | rat |
| tree | noon | swallow | thirsty |
| water | cigar | three | eight |
| work | warn | | |
| yesterday | dormitory | | |

Footnotes

1. Standardization of the ASL-SRT is currently underway. The average score for native deaf signers (n = 23) in that study is 25.9 (s.d. = 4.0).

2. The slower reaction times of the control group may be related in part to the fact that these are hearing readers. The monolingual native speakers were also slower on this task than the deaf participants.

3. If this is indeed the case, it raises the possibility that the effects seen here are not strictly cross-language effects, to the extent that a direct mapping of English orthography to ASL phonology could be construed as a within-language mapping of complex visual (i.e., not phonetic, and not English-specific) orthographic patterns to ASL phonology. Subsequent associations of spoken English word forms with English orthography could nevertheless be acquired and also influence performance on this task.

Portions of this study were presented at the 7th International Symposium on Bilingualism, Utrecht, The Netherlands.


References

- Battison R. Lexical borrowing in American Sign Language. Linstok Press; Silver Spring, MD: 1978.

- Bellugi U, Klima ES, Siple P. Remembering in signs. Cognition. 1975;3:93–125.

- Carreiras M, Gutierrez-Sigut E, Baquero S, Corina D. Lexical processing in Spanish Sign Language (LSE). Journal of Memory and Language. 2008;58:100–122.

- Casey S, Emmorey K. Co-speech gesture in bimodal bilinguals. Language and Cognitive Processes. 2009;24(2):290–312. doi: 10.1080/01690960801916188.

- Dijkstra T. Bilingual visual word recognition and lexical access. In: Kroll JF, De Groot AMB, editors. Handbook of Bilingualism: Psycholinguistic Approaches. Oxford University Press; New York: 2005. pp. 179–201.

- Dye MWG, Shih S. Phonological priming in British Sign Language. In: Goldstein LM, Whalen DH, Best CT, editors. Laboratory Phonology. Vol. 8. Mouton de Gruyter; Berlin: 2006. pp. 241–263.

- Emmorey K, Borinstein HB, Thompson RL, Gollan TH. Bimodal bilingualism. Bilingualism: Language and Cognition. 2008;11:43–61. doi: 10.1017/S1366728907003203.

- Emmorey K, Luk G, Pyers JE, Bialystok E. The source of enhanced cognitive control in bilinguals. Psychological Science. 2008;19:1201–1206. doi: 10.1111/j.1467-9280.2008.02224.x.

- Emmorey K, Petrich J, Gollan T. Simultaneous production of American Sign Language and English costs the speaker but benefits the perceiver. Paper presented at the 7th International Symposium on Bilingualism; Utrecht, The Netherlands; July 2009.

- Guo T, Misra M, Tam JW, Kroll JF. On the time course of accessing meaning in a second language: An electrophysiological investigation of translation recognition. (under review). doi: 10.1037/a0028076.

- Hanson V, Feldman L. Language specificity in lexical organization: Evidence from deaf signers' lexical organization of American Sign Language and English. Memory & Cognition. 1989;17:292–301. doi: 10.3758/bf03198467.

- Hauser P, Paludneviciene R, Supalla T, Bavelier D. American Sign Language-Sentence Reproduction Test: Development and implications. In: de Quadros RM, editor. Sign Language: Spinning and unraveling the past, present and future. Arara Azul; Petrópolis, Brazil: 2008. pp. 160–172.

- Kroll JF, Bobb SC, Wodniecka Z. Language selectivity is the exception, not the rule: Arguments against a fixed locus of language selection in bilingual speech. Bilingualism: Language and Cognition. 2006;9:119–135.

- Kroll JF, Michael E, Tokowicz N, Dufour R. The development of lexical fluency in a second language. Second Language Research. 2002;18:137–171.

- Kroll JF, Stewart E. Category interference in translation and picture naming: Evidence for asymmetric connections between bilingual memory representations. Journal of Memory and Language. 1994;33:149–174.

- Marian V, Spivey MJ. Competing activation in bilingual language processing: Within- and between-language competition. Bilingualism: Language and Cognition. 2003;6:97–115.

- Mayberry RI. When timing is everything: Age of first-language acquisition effects on second-language learning. Applied Psycholinguistics. 2007;28:537–549.

- Morford JP, Carlson ML. Sign perception and recognition in non-native signers of ASL. Language Learning and Development. (in press). doi: 10.1080/15475441.2011.543393.

- Orfanidou E, Adam R, McQueen JM, Morgan G. Making sense of nonsense in British Sign Language (BSL): The contribution of different phonological parameters to sign recognition. Memory & Cognition. 2009;37:302–315. doi: 10.3758/MC.37.3.302.

- Ormel E, Hermans D, Knoors H, Verhoeven L. Cross-language effects in visual word recognition: The case of bilingual deaf children. (under review).

- Stokoe W, Casterline D, Croneberg C. A dictionary of American Sign Language on linguistic principles. Gallaudet College Press; Washington, DC: 1965.

- Sunderman G, Kroll JF. First language activation during second language lexical processing: An investigation of lexical form, meaning, and grammatical class. Studies in Second Language Acquisition. 2006;28:387–422.

- Thierry G, Wu YJ. Brain potentials reveal unconscious translation during foreign language comprehension. Proceedings of the National Academy of Sciences. 2007;104:12530–12535. doi: 10.1073/pnas.0609927104.