Abstract

We present a deconvolution estimator for the density function of a random variable from a set of independent replicate measurements. We assume that measurements are made with normally distributed errors having unknown and possibly heterogeneous variances. The estimator generalizes the deconvoluting kernel density estimator of Stefanski and Carroll (1990), with error variances estimated from the replicate observations. We derive expressions for the mean integrated squared error and examine its rate of convergence as n → ∞ with the number of replicates fixed. We investigate the finite-sample performance of the estimator through a simulation study and an application to real data.

Keywords: Bandwidth, Bootstrap, Deconvolution, Hypergeometric Series, Measurement Error

1 Introduction

We consider estimating the density function of an unobservable random variable from a set of replicate measurements. Let X have unknown density function fx. Suppose that X1, …, Xn are measured independently and repeatedly as Wr,1, …, Wr,mr, where Wr,j = Xr + Ur,j and mr ≥ 2. Then the density of each observed Wr,j is related to fx by the convolution fWr,j = fx * fUr,j. We present an estimator for fx under the assumption that Ur,j ~ N(0, σr²), independent of Xr, for r = 1, …, n and j = 1, …, mr. We do not model the error variances σ1², …, σn², and in this sense our methods are general. We note that in applications where reliable models for the variances (say, as functions of the means) can be specified, more efficient estimators can likely be obtained.

Several authors have studied estimation of fx when measurement errors are identically distributed with a known density function. The approach we take is closely related to that of Stefanski and Carroll (1990), who presented deconvoluting kernel density estimators appropriate for a wide class of error distributions. The properties of deconvoluting estimators have been studied in detail (see, for example, Carroll and Hall, 1988; Devroye, 1989; Stefanski, 1990; Fan, 1991a, 1991b, 1992; Wand, 1998). Recently, authors have studied deconvolution under less restrictive assumptions. Estimating fx when errors are identically distributed with an unknown density function has been addressed by Diggle and Hall (1993), Patil (1996), Li and Vuong (1998), Meister (2006), Neumann (2007) and Delaigle et al. (2007, 2008), among others.

Fewer authors have addressed deconvolution in the presence of heteroscedastic measurement error. Staudenmayer et al. (2007) assume the availability of replicate measurements and model measurement error variances as functions of the unobserved data. The density function fx is modeled as a convex mixture of B-spline densities. Delaigle and Meister (2007, 2008) present a generalization of the deconvoluting kernel estimator for the case where measurement errors are heteroscedastic and have known distributions. The characteristic function for the measurement error density is described by a weighted average of characteristic functions. Additionally, they discuss estimation when error densities are imperfectly known but estimable, for example, from replicate measurements. Under certain conditions, the known error densities may be replaced with their estimates without affecting convergence properties.

We assume a fixed number of replicate measurements and approach the problem via the conditional distributions of the sample means and variances of these measurements. A natural estimator arises from the results of Stefanski et al. (2005), and it generalizes the deconvoluting estimator of Stefanski and Carroll (1990). Measurement errors are assumed normal, but not identically distributed. The estimator accommodates both heteroscedastic and homoscedastic measurement errors. However, as the main focus of this paper is estimation in the presence of heteroscedastic error, a detailed examination of our estimator’s properties is restricted to this case.

We conclude this section with a brief review of the results of Stefanski et al. (2005). In Section 2, we define our estimator and show its connection to the Stefanski and Carroll (1990) estimator. We derive and examine the asymptotic mean integrated squared error in Section 3, and in Section 4 we investigate finite-sample properties via simulation. We address bandwidth estimation in Section 5, describing a bootstrap bandwidth selection procedure. In Section 6 we present an application to real data.

1.1 Background

Our estimator of fx uses results of Stefanski et al. (2005), who presented a method for constructing unbiased estimators of g(μ) where μ is the mean of a normally distributed random variable and g() is an entire function over the complex plane. For reference, we present the following theorem, without proof, from Stefanski et al. (2005).

Theorem 1.1.1

Suppose μ̂ and σ̂² are independent random variables such that μ̂ ~ N(μ, τσ²), where τ > 0 is a known constant, and (dσ̂²/σ²) ~ Chi-Squared(d). Define T = Z1/(Z1² + ⋯ + Zd²)^{1/2}, where Z1, …, Zd are independent and identically distributed N(0, 1) random variables, independent of μ̂ and σ̂², and let i = √−1. Let g() be an entire function, so that g() has a series expansion defined at each point in the complex plane, and suppose that the interchange of expectation and summation in this expansion is justified. Then the estimator,

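$$\hat\theta = E\left\{ g\left(\hat\mu + i(\tau d)^{1/2}\hat\sigma T\right) \,\middle|\, \hat\mu, \hat\sigma^2 \right\} \tag{1}$$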

is uniformly minimum variance unbiased for g(μ) provided Var(θ̂)< ∞.

A proof and discussion may be found in Stefanski et al. (2005) and Stefanski (1989). A few comments about the estimator θ̂ are in order. Although g(μ̂ + i(τd)^{1/2}σ̂T) is complex-valued, θ̂ is real-valued when g() is real on the real line, because the imaginary part of g(μ̂ + i(τd)^{1/2}σ̂T) has conditional expectation 0 (T is symmetric about 0). For most functions g(), however, finding a closed-form expression for the estimator in (1) is difficult. A Monte Carlo approximation to θ̂ is given by

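$$\hat\theta_B = \frac{1}{B}\sum_{b=1}^{B}\mathrm{Re}\left\{ g\left(\hat\mu + i(\tau d)^{1/2}\hat\sigma T_b\right)\right\}, \tag{2}$$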

where T1, …, TB are independent replicates of the variable T. The variances of (1) and (2) generally do not have closed-form expressions, but Monte Carlo methods can be used to estimate both, as described in Stefanski et al. (2005).
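To make (1) and (2) concrete, here is a minimal Python sketch of the Monte Carlo approximation (2). It assumes g is entire and real on the real line, so that the imaginary parts average out; the function name `mc_unbiased` and all numerical values are illustrative. For g(x) = x² the estimator (1) equals μ̂² − τσ̂² exactly, because E(T²) = 1/d, which provides a simple check.

```python
import numpy as np


def mc_unbiased(g, mu_hat, sigma2_hat, tau, d, B=200_000, seed=None):
    """Monte Carlo approximation (2) to the unbiased estimator (1) of g(mu).

    Assumes mu_hat ~ N(mu, tau * sigma^2) with tau known, d * sigma2_hat / sigma^2
    ~ Chi-squared(d) independently of mu_hat, and g entire and real on the real line.
    """
    rng = np.random.default_rng(seed)
    Z = rng.standard_normal((B, d))
    T = Z[:, 0] / np.sqrt(np.sum(Z**2, axis=1))      # T = Z_1 / (Z_1^2 + ... + Z_d^2)^(1/2)
    args = mu_hat + 1j * np.sqrt(tau * d * sigma2_hat) * T
    # Imaginary parts average to zero, so only the real part is kept.
    return float(np.mean(np.real(g(args))))


# For g(x) = x^2 the exact value of (1) is mu_hat^2 - tau * sigma2_hat = 1.29.
approx = mc_unbiased(lambda z: z**2, mu_hat=1.3, sigma2_hat=0.8, tau=0.5, d=4, seed=1)
exact = 1.3**2 - 0.5 * 0.8
```

With d = 1 the variable T is ±1, so the Monte Carlo average for g(x) = x² is exact for any B.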

2 The Deconvolution Estimator

2.1 Heteroscedastic Measurement Errors

The estimators in (1) and (2) can be used to estimate the density function of a random variable X that is measured with normally distributed error having unknown, nonconstant variance, provided each value of X is measured at least twice and the measurement error variance is constant across the replicate measurements of a given Xr, although it may differ between different values of r. Suppose that the variables X1, …, Xn are measured independently and repeatedly as Wr,1, …, Wr,mr, mr ≥ 2, where for each r = 1, …, n and j = 1, …, mr,

$$W_{r,j} = X_r + U_{r,j}, \qquad U_{r,j} \sim N(0, \sigma_r^2), \tag{3}$$

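Ur,j is independent of Xr, and σr² is unknown. Define the sample moments W̄r = mr^{-1} Σj Wr,j and σ̂r² = (mr − 1)^{-1} Σj (Wr,j − W̄r)², where the sums run over j = 1, …, mr. Then conditioned on Xr, the following are true: 1) W̄r and σ̂r² are independent random variables, 2) W̄r ~ N(Xr, σr²/mr), and 3) (mr − 1)σ̂r²/σr² ~ Chi-Squared(mr − 1). Let Q(x) be a probability density function whose extension to the complex plane is entire, and for each r = 1, …, n define Tr,mr−1 = Zr,1/(Zr,1² + ⋯ + Zr,mr−1²)^{1/2}, where the Zr,j are independent N(0, 1) random variables. It follows from Theorem 1.1.1 (with μ = Xr, τ = 1/mr and d = mr − 1) that the estimator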

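$$\hat f_{het}(x) = \frac{1}{n\lambda}\sum_{r=1}^{n} E\left[ Q\left\{\frac{x - \bar W_r - i\{(m_r-1)/m_r\}^{1/2}\hat\sigma_r T_{r,m_r-1}}{\lambda}\right\} \,\middle|\, \bar W_r, \hat\sigma_r^2 \right] \tag{4}$$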

is such that

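$$E\{\hat f_{het}(x) \mid X_1, \ldots, X_n\} = \frac{1}{n\lambda}\sum_{r=1}^{n} Q\left(\frac{x - X_r}{\lambda}\right). \tag{5}$$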

The right-hand side of (5) is a kernel density estimator of fx(x), with bandwidth λ > 0. Thus it follows that, unconditionally, f̂het(x) has the same expectation and the same bias as the kernel density estimator constructed from the true, unobserved data.
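The conditional unbiasedness in (5) can be checked by simulation. The sketch below is ours, not the authors' code: it holds X1, …, Xn fixed and uses mr = 2, so that T = ±1 and, because Q is real on the real line, the conditional expectation in (4) reduces to the real part of Q at a single complex point. It assumes the kernel Q(x) = C{sin(Ax)/(Ax)}^4 with A = √3/2 and C = 3√3/(4π), which is {sin(x)/x}^4 rescaled to a unit-variance density; the names `Q` and `f_het_two` are hypothetical. Averaging f̂het(x) over many independent error draws should reproduce the true-data kernel estimator on the right-hand side of (5), up to Monte Carlo error.

```python
import numpy as np

A = np.sqrt(3.0) / 2.0                    # rescales {sin(x)/x}^4 to unit variance
C = 3.0 * np.sqrt(3.0) / (4.0 * np.pi)    # normalizing constant


def Q(z):
    """Kernel C {sin(Az)/(Az)}^4; entire, so it may be evaluated at complex z."""
    u = A * np.asarray(z, dtype=complex)
    tiny = np.abs(u) < 1e-8
    u_safe = np.where(tiny, 1.0, u)
    return C * np.where(tiny, 1.0, np.sin(u_safe) / u_safe) ** 4


def f_het_two(x, W1, W2, lam):
    """Estimator (4) with m_r = 2; W1, W2 have shape (..., n), result has shape (..., len(x))."""
    wbar = (W1 + W2) / 2.0
    shift = np.abs(W1 - W2) / 2.0             # {(m_r - 1)/m_r}^(1/2) * sigma_hat_r for m_r = 2
    z = (x[:, None] - wbar[..., None, :] - 1j * shift[..., None, :]) / lam
    return np.real(Q(z)).mean(axis=-1) / lam


rng = np.random.default_rng(0)
n, R, lam = 50, 2000, 0.6
X = rng.standard_normal(n)                    # true values, held fixed
sigma = rng.uniform(0.2, 0.5, size=n)         # heteroscedastic error standard deviations
x = np.array([-1.5, -0.5, 0.0, 0.7, 1.6])

W1 = X + sigma * rng.standard_normal((R, n))  # R independent replicate data sets
W2 = X + sigma * rng.standard_normal((R, n))
avg = f_het_two(x, W1, W2, lam).mean(axis=0)  # Monte Carlo estimate of E{f_het(x) | X}
kde_true = np.real(Q((x[:, None] - X[None, :]) / lam)).mean(axis=1) / lam  # right side of (5)
```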

Note that Theorem 1.1.1 also applies to the case where the measurement error variance in (3) is constant, σr² = σ² for all r, and data are pooled to estimate the common variance σ². Let σ̂² = d^{-1} Σr (mr − 1)σ̂r² be the pooled estimator of the measurement error variance based on d = Σr (mr − 1) degrees of freedom, and let Tr,d = Zr,1/(Zr,1² + ⋯ + Zr,d²)^{1/2}, where the Zr,j are independent N(0, 1) random variables. It follows from Theorem 1.1.1 (with τ = 1/mr) that the estimator

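$$\hat f_{hom}(x) = \frac{1}{n\lambda}\sum_{r=1}^{n} E\left[ Q\left\{\frac{x - \bar W_r - i(d/m_r)^{1/2}\hat\sigma T_{r,d}}{\lambda}\right\} \,\middle|\, \bar W_r, \hat\sigma^2 \right] \tag{6}$$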

possesses the key property in (5), i.e., that conditioned on the true data, it is an unbiased estimator of a true-data, kernel density estimator.

2.1.1 Connection to the Deconvoluting Kernel Density Estimator

The estimators in (4) and (6) generalize the deconvoluting kernel density estimator of Stefanski and Carroll (1990). They considered the case with data W1, …, Wn such that Wr = Xr + Ur, where U1, …, Un are independent and identically distributed with known characteristic function Φu(t) = E(e^{itU}) and are independent of X1, …, Xn. Their estimator is based on Fourier inversion of the empirical characteristic function of the kernel density estimator of the observed-data density, fw(w), divided by Φu. Let Q(x) be a probability density function whose extension to the complex plane is entire, and denote the characteristic function of Q(x) by ΦQ(t). For the case of N(0, σ²) measurement errors, the deconvoluting estimator is given by

$$\hat f_{sc}(x) = \frac{1}{n\lambda}\sum_{r=1}^{n} Q_d\left(\frac{x - W_r}{\lambda}\right), \tag{7}$$

where Qd() is the deconvoluting kernel defined as

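$$Q_d(z) = \frac{1}{2\pi}\int_{-\infty}^{\infty} e^{-itz}\,\frac{\Phi_Q(t)}{\Phi_u(t/\lambda)}\,dt = \frac{1}{2\pi}\int_{-\infty}^{\infty} e^{-itz}\,\Phi_Q(t)\exp\left(\frac{\sigma^2 t^2}{2\lambda^2}\right)dt. \tag{8}$$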
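For normal errors the deconvoluting kernel (8) can be evaluated by numerical Fourier inversion. The sketch below assumes the unit-variance kernel used in Section 4, Q(x) ∝ {sin(x)/x}^4, rescaled by A = √3/2; its characteristic function is a piecewise cubic supported on |t| ≤ 2√3 (our own derivation, written out in `phi_Q` and worth double-checking). The names `phi_Q`, `deconv_kernel` and `f_sc` are hypothetical, and the trapezoidal rule is only one reasonable quadrature choice. With σ = 0, Qd reduces to Q itself, which is a convenient check.

```python
import numpy as np

A = np.sqrt(3.0) / 2.0   # Q(x) = C {sin(Ax)/(Ax)}^4 has unit variance with this A


def phi_Q(t):
    """Characteristic function of the unit-variance kernel; zero for |t| > 4A = 2*sqrt(3)."""
    s = np.abs(np.asarray(t, dtype=float)) / A
    inner = 1.0 - 3.0 * s**2 / 8.0 + 3.0 * s**3 / 32.0
    outer = np.clip(4.0 - s, 0.0, None) ** 3 / 32.0
    return np.where(s <= 2.0, inner, outer)


def deconv_kernel(z, sigma, lam, num=801):
    """Deconvoluting kernel Q_d(z) of (8) for N(0, sigma^2) errors.

    Because Phi_Q is real, even and compactly supported,
    Q_d(z) = (1/pi) int_0^{4A} cos(tz) Phi_Q(t) exp{sigma^2 t^2 / (2 lam^2)} dt,
    approximated here by the trapezoidal rule.
    """
    t = np.linspace(0.0, 4.0 * A, num)
    dt = t[1] - t[0]
    z = np.atleast_1d(np.asarray(z, dtype=float))
    vals = np.cos(np.outer(z, t)) * phi_Q(t) * np.exp(sigma**2 * t**2 / (2.0 * lam**2))
    integral = dt * (vals.sum(axis=1) - 0.5 * (vals[:, 0] + vals[:, -1]))
    return integral / np.pi


def f_sc(x, W, sigma, lam):
    """Deconvoluting kernel density estimator (7) at the points x (memory ~ len(x) * n * num)."""
    x = np.atleast_1d(np.asarray(x, dtype=float))
    z = (x[:, None] - np.asarray(W, dtype=float)[None, :]) / lam
    return deconv_kernel(z.ravel(), sigma, lam).reshape(z.shape).mean(axis=1) / lam
```

The factor exp{σ²t²/(2λ²)} grows quickly, so for a fixed σ the bandwidth λ cannot be taken too small without Qd(z) becoming very large and oscillatory.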

We now establish the connection between f̂sc(x) and our estimator under heteroscedastic errors, f̂het(x). Arguments similar to those presented below also apply to the homoscedastic-error estimator f̂hom(x). Consider again f̂het(x) in (4). Define

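$$Q^*(z, \lambda, m_r, \hat\sigma_r) = E\left[ Q\left\{ z - i\{(m_r-1)/m_r\}^{1/2}\hat\sigma_r T_{r,m_r-1}/\lambda \right\} \,\middle|\, \hat\sigma_r \right], \tag{9}$$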

and note that Q*(z, λ, mr, σ̂r) is real-valued. With this definition we write

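$$\hat f_{het}(x) = \frac{1}{n\lambda}\sum_{r=1}^{n} Q^*\left(\frac{x - \bar W_r}{\lambda}, \lambda, m_r, \hat\sigma_r\right). \tag{10}$$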

The connection between the estimators f̂het(x) in (10) and f̂sc(x) in (7) is made clear through examination of the functions Q*() and Qd(). For each r = 1, …, n, the sample mean W̄r measures Xr with a normally distributed measurement error that has variance σr²/mr. The deconvoluting kernel in equation (8) depends on the assumed known inverse of the measurement-error characteristic function, 1/Φu(t/λ) = exp{σ²t²/(2λ²)}. However, when σr² is unknown, so too is the corresponding inverse, exp{σr²t²/(2mrλ²)}. As we show next, Q*(z, λ, mr, σ̂r) unbiasedly estimates Qd(z) through unbiased estimation of exp{σr²t²/(2mrλ²)}.

First, applying the Fourier inversion formula and interchanging the operations of integration and expectation in (9) yields

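$$Q^*(z, \lambda, m_r, \hat\sigma_r) = \frac{1}{2\pi}\int_{-\infty}^{\infty} e^{-itz}\,\Phi_Q(t)\,E\left[\exp\{i\theta(t)T_{r,m_r-1}\} \,\middle|\, \hat\sigma_r\right]dt. \tag{11}$$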

Because Tr,mr−1 is independent of the data, the conditional expectation in (11) is the characteristic function of Tr,mr−1 evaluated at the argument θ(t) = it{(mr − 1)/mr}^{1/2}σ̂r/λ. By construction, Tr,mr−1 has the same distribution as 𝐓ᵀe1, where 𝐓 is a random vector uniformly distributed on the unit sphere in ℝ^{mr−1}, and e1 is the (mr − 1) × 1 unit vector having a one in the first position. The characteristic function of 𝐓ᵀe1 is given by (Watson, 1983)

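$$E\{\exp(i\theta T_{r,m_r-1})\} = \Gamma\left(\frac{m_r-1}{2}\right)\left(\frac{2}{\theta}\right)^{(m_r-3)/2} J_{(m_r-3)/2}(\theta). \tag{12}$$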

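Here Jν(z) denotes a Bessel function of the first kind,

$$J_\nu(z) = \sum_{k=0}^{\infty}\frac{(-1)^k}{k!\,\Gamma(k+\nu+1)}\left(\frac{z}{2}\right)^{2k+\nu}.$$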

Substituting equation (12) into equation (11) yields

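$$Q^*(z, \lambda, m_r, \hat\sigma_r) = \frac{1}{2\pi}\int_{-\infty}^{\infty} e^{-itz}\,\Phi_Q(t)\,\hat\psi_r(t)\,dt, \tag{13}$$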

where

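$$\hat\psi_r(t) = \sum_{k=0}^{\infty}\frac{\Gamma\{(m_r-1)/2\}}{k!\,\Gamma\{k+(m_r-1)/2\}}\left\{\frac{(m_r-1)\hat\sigma_r^2 t^2}{4m_r\lambda^2}\right\}^k. \tag{14}$$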
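The series (14) comes from rewriting (12) as a hypergeometric series: with d = mr − 1, Γ(d/2)(2/θ)^{d/2−1}J_{d/2−1}(θ) = 0F1(; d/2; −θ²/4). The sketch below is illustrative only; `hyp0f1` is a hypothetical truncated-series helper, not a library call. It compares the series with a Monte Carlo estimate of E{cos(θT)} and with the closed forms cos θ (d = 1, where T = ±1) and sin θ/θ (d = 3, where T is uniform on [−1, 1]).

```python
import numpy as np


def hyp0f1(b, x, terms=80):
    """Truncated series 0F1(; b; x) = sum_k x^k / {(b)_k k!} for b > 0 (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    total = np.zeros_like(x)
    term = np.ones_like(x)
    for k in range(terms):
        total = total + term
        term = term * x / ((b + k) * (k + 1))
    return total


def cf_T_mc(theta, d, B=400_000, seed=0):
    """Monte Carlo E{exp(i theta T)} = E{cos(theta T)}, with T = Z_1 / ||Z||, Z ~ N_d(0, I)."""
    rng = np.random.default_rng(seed)
    Z = rng.standard_normal((B, d))
    T = Z[:, 0] / np.linalg.norm(Z, axis=1)
    return float(np.mean(np.cos(theta * T)))


theta = 2.3
series = {d: float(hyp0f1(d / 2.0, -theta**2 / 4.0)) for d in (1, 3, 4)}
```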

The only random quantity in ψ̂r(t) is the sample variance, σ̂r², which enters every term of the series in (14). From the relationship

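$$E\{(\hat\sigma_r^2)^k\} = \left(\frac{2\sigma_r^2}{m_r-1}\right)^k\frac{\Gamma\{k+(m_r-1)/2\}}{\Gamma\{(m_r-1)/2\}}, \qquad k = 0, 1, 2, \ldots, \tag{15}$$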

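it follows that E{ψ̂r(t)} = Σk (1/k!){σr²t²/(2mrλ²)}^k = exp{σr²t²/(2mrλ²)}, the inverse of the characteristic function of a N(0, σr²/mr) random variable evaluated at t/λ. Substitution into (13) yields

$$E\{Q^*(z, \lambda, m_r, \hat\sigma_r)\} = \frac{1}{2\pi}\int_{-\infty}^{\infty} e^{-itz}\,\Phi_Q(t)\exp\left(\frac{\sigma_r^2 t^2}{2m_r\lambda^2}\right)dt.$$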

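Comparison with (8), with σ² replaced by σr²/mr, reveals that Q*(z, λ, mr, σ̂r) is unbiased for the deconvoluting kernel Qd(z) appropriate for the N(0, σr²/mr) measurement error in W̄r.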
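The unbiasedness argument can also be checked numerically. The following sketch (ours, with hypothetical names) draws σ̂r² from its scaled chi-squared distribution, evaluates the series (14) as a truncated 0F1 series, and compares the Monte Carlo mean of ψ̂r(t) with exp{σr²t²/(2mrλ²)}; the variable `u` stands for t/λ. For mr = 2 the series reduces to cosh(σ̂r u/√2), which gives a second check.

```python
import numpy as np


def hyp0f1(b, x, terms=80):
    """Truncated series 0F1(; b; x) = sum_k x^k / {(b)_k k!} (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    total = np.zeros_like(x)
    term = np.ones_like(x)
    for k in range(terms):
        total = total + term
        term = term * x / ((b + k) * (k + 1))
    return total


def psi_hat(sigma2_hat, u, m):
    """Series (14) written as 0F1(; (m-1)/2; (m-1) sigma2_hat u^2 / (4m)), with u = t / lambda."""
    return hyp0f1((m - 1) / 2.0, (m - 1) * np.asarray(sigma2_hat) * u**2 / (4.0 * m))


rng = np.random.default_rng(3)
sigma2, m, u = 1.5, 3, 1.2
s2 = sigma2 * rng.chisquare(m - 1, size=200_000) / (m - 1)   # draws of sigma_hat_r^2
mc_mean = float(psi_hat(s2, u, m).mean())
target = float(np.exp(sigma2 * u**2 / (2.0 * m)))            # exp{sigma_r^2 t^2 / (2 m_r lambda^2)}
```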

2.2 Monte Carlo Estimation

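In most cases, the estimators in (4) and (6) do not have simple closed-form expressions. However, Monte Carlo approximations to the conditional expectations in equations (4) and (6) follow directly from (2). Consider the expression for f̂het(x) in (4). For each r = 1, …, n, generating Tr,1, …, Tr,B as independent replicates of Tr,mr−1 yields an approximation to f̂het(x),

$$\hat f_{het,B}(x) = \frac{1}{n\lambda B}\sum_{r=1}^{n}\sum_{b=1}^{B}\mathrm{Re}\,Q\left\{\frac{x - \bar W_r - i\{(m_r-1)/m_r\}^{1/2}\hat\sigma_r T_{r,b}}{\lambda}\right\}.$$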

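Note that when mr = 2, Tr,mr−1 in (4) is equal to either 1 or −1, each with probability 1/2. Thus in the special case of two replicate measurements, the conditional expectation in (4) can be evaluated without Monte Carlo approximation and, because Q is real on the real line, the estimator is

$$\hat f_{het}(x) = \frac{1}{n\lambda}\sum_{r=1}^{n}\mathrm{Re}\,Q\left(\frac{x - \bar W_r - i\hat\sigma_r/\sqrt{2}}{\lambda}\right).$$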

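In the case of homoscedastic measurement error, the Monte Carlo version of (6) is

$$\hat f_{hom,B}(x) = \frac{1}{n\lambda B}\sum_{r=1}^{n}\sum_{b=1}^{B}\mathrm{Re}\,Q\left\{\frac{x - \bar W_r - i(d/m_r)^{1/2}\hat\sigma T_{r,b}}{\lambda}\right\},$$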

where Tr,1, …, Tr,B are independent copies of Tr,d for each r.
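The closed form for mr = 2 and the two Monte Carlo approximations translate directly into code. In this sketch (hypothetical function names; kernel as in the simulation study, {sin(x)/x}^4 rescaled to unit variance), T is drawn as Z1/(Z1² + χ²_{d−1})^{1/2}, which has the same distribution as Z1/(Z1² + ⋯ + Zd²)^{1/2} but avoids generating d normals per draw. Because Q has real Taylor coefficients, Q evaluated at a complex conjugate is the conjugate of Q, so for mr = 2 the Monte Carlo version reproduces the closed form exactly. Each Monte Carlo term also integrates to 1 over x (shift the contour of integration back to the real axis), which gives a second check.

```python
import numpy as np

A = np.sqrt(3.0) / 2.0
C = 3.0 * np.sqrt(3.0) / (4.0 * np.pi)


def Q(z):
    """Unit-variance kernel C {sin(Az)/(Az)}^4, evaluated at real or complex z."""
    u = A * np.asarray(z, dtype=complex)
    tiny = np.abs(u) < 1e-8
    u_safe = np.where(tiny, 1.0, u)
    return C * np.where(tiny, 1.0, np.sin(u_safe) / u_safe) ** 4


def draw_T(d, B, rng):
    """B draws of T = Z_1 / (Z_1^2 + ... + Z_d^2)^(1/2)."""
    z1 = rng.standard_normal(B)
    if d == 1:
        return np.sign(z1)                    # +1 or -1, each with probability 1/2
    return z1 / np.sqrt(z1**2 + rng.chisquare(d - 1, size=B))


def f_het_two(x, W1, W2, lam):
    """Closed form of (4) when every m_r = 2."""
    wbar, shift = (W1 + W2) / 2.0, np.abs(W1 - W2) / 2.0   # shift = sigma_hat_r / sqrt(2)
    z = (x[:, None] - wbar[None, :] - 1j * shift[None, :]) / lam
    return np.real(Q(z)).mean(axis=1) / lam


def _mc_sum(x, means, shifts, lam):
    # Average of Re Q{(x - mean - i * shift) / lam} over the B draws, averaged over subjects.
    total = np.zeros(x.size)
    for mean, shift in zip(means, shifts):
        z = (x[:, None] - mean - 1j * shift[None, :]) / lam
        total += np.real(Q(z)).mean(axis=1)
    return total / (len(means) * lam)


def f_het_mc(x, W, lam, B=100, seed=None):
    """Monte Carlo version of (4); W is a list of 1-D replicate arrays with m_r >= 2."""
    rng = np.random.default_rng(seed)
    shifts = [np.sqrt((w.size - 1) / w.size) * w.std(ddof=1) * draw_T(w.size - 1, B, rng) for w in W]
    return _mc_sum(x, [w.mean() for w in W], shifts, lam)


def f_hom_mc(x, W, lam, B=100, seed=None):
    """Monte Carlo version of (6), using the pooled variance on d = sum(m_r - 1) df."""
    rng = np.random.default_rng(seed)
    d = sum(w.size - 1 for w in W)
    s_pooled = np.sqrt(sum((w.size - 1) * w.var(ddof=1) for w in W) / d)
    shifts = [np.sqrt(d / w.size) * s_pooled * draw_T(d, B, rng) for w in W]
    return _mc_sum(x, [w.mean() for w in W], shifts, lam)


rng = np.random.default_rng(8)
n, lam = 40, 0.5
X = rng.standard_normal(n)
m = rng.integers(2, 4, size=n)                # two or three replicates per subject
sd = rng.uniform(0.2, 0.6, size=n)
W = [X[r] + sd[r] * rng.standard_normal(m[r]) for r in range(n)]
x = np.linspace(-30.0, 30.0, 3001)
dx = x[1] - x[0]
mass_het = f_het_mc(x, W, lam, seed=9).sum() * dx
mass_hom = f_hom_mc(x, W, lam, seed=9).sum() * dx
```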

3 Mean Integrated Squared Error

We now derive the mean integrated squared error (MISE) of the heteroscedastic-error estimator in (4). An objective is to compare the asymptotic properties of (4) to those of the known-variance deconvolution estimator. It was shown by Carroll and Hall (1988) that when measurement errors are normally distributed with constant variance, the optimal rate at which any deconvolution estimator can converge to fx(x) is {log(n)}^{-2}. The deconvoluting kernel density estimator achieves this rate of convergence (Stefanski and Carroll, 1990), and does so with the optimal bandwidth of λ = σ{log(n)}^{-1/2} (Stefanski, 1989).

For each Xr, assume a fixed number mr ≥ 2 of replicate measurements are observed and used to estimate σr². The independence of the summands in (4) simplifies the derivation of the MISE and results in closed-form expressions for certain special cases of mr. In practice the number of replicates is often small, so we examine in detail the case of two replicate measurements, mr = 2 for all r. In addition, we present a weighted estimator, a natural extension in the case of nonconstant variances, where weights are selected to minimize the asymptotic integrated variance. We derive the MISE of the weighted estimator under the assumption that the weights are known. We note that, although we limit our asymptotic analysis to n → ∞, it is also reasonable to consider asymptotic behavior as the number of replicates increases to infinity. Delaigle (2007) provides convincing arguments for this approach.

3.1 Heteroscedastic Measurement Errors

We obtain expressions for the MISE of f̂het(x) using results on hypergeometric series. We start with notation and definitions. For all real x define the rising factorial

$$(x)_0 = 1, \qquad (x)_k = x(x+1)\cdots(x+k-1), \quad k = 1, 2, \ldots,$$

and note that for real x ≠ 0 and y ≠ 0,

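$$(x)_k = x\,(x+1)_{k-1}, \qquad \frac{(x)_k}{(y)_k} = \frac{x}{y}\,\frac{(x+1)_{k-1}}{(y+1)_{k-1}}, \qquad k = 1, 2, \ldots. \tag{16}$$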

Denote the generalized hypergeometric series (Erdelyi, 1953) by

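$${}_pF_q(a_1, \ldots, a_p; b_1, \ldots, b_q; x) = \sum_{k=0}^{\infty}\frac{(a_1)_k\cdots(a_p)_k}{(b_1)_k\cdots(b_q)_k}\,\frac{x^k}{k!}, \tag{17}$$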

where it is assumed that the aj and bj are such that division by 0 does not arise. We use the relationships (for b ≠ 1/2)

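$$\left\{{}_0F_1(;b;x)\right\}^2 = {}_2F_3\left(b, b-\tfrac{1}{2}; b, b, 2b-1; 4x\right) = \sum_{k=0}^{\infty}\frac{(b-\frac{1}{2})_k}{(b)_k\,(2b-1)_k}\,\frac{(4x)^k}{k!}. \tag{18}$$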

A proof of the first equality appears in Bailey (1928); the second follows upon simplification after substitution into (17). Because 0F1(; b; x) is well-defined and continuous in b at b = 1/2, the identity in (18) can be extended via

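$$\left\{{}_0F_1(;\tfrac{1}{2};x)\right\}^2 = \lim_{b\to 1/2}\sum_{k=0}^{\infty}\frac{(b-\frac{1}{2})_k}{(b)_k\,(2b-1)_k}\,\frac{(4x)^k}{k!} = 1 + \frac{1}{2}\sum_{k=1}^{\infty}\frac{(4x)^k}{(\frac{1}{2})_k\,k!}. \tag{19}$$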
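A quick numerical check of (18) and (19) is sketched below using truncated series (helper names hypothetical). For b = 1/2 the right-hand side of (19) equals 1/2 + 0F1(; 1/2; 4x)/2, which is the identity cosh²(2√x) = {1 + cosh(4√x)}/2 in disguise, since 0F1(; 1/2; x) = cosh(2√x) for x ≥ 0.

```python
import math


def hyp0f1(b, x, terms=100):
    """Truncated series for 0F1(; b; x) (hypothetical helper)."""
    total, term = 0.0, 1.0
    for k in range(terms):
        total += term
        term *= x / ((b + k) * (k + 1))
    return total


def square_series(b, x, terms=100):
    """Right-hand side of (18): sum_k (b - 1/2)_k (4x)^k / {(b)_k (2b - 1)_k k!}, b != 1/2."""
    total, term = 0.0, 1.0
    for k in range(terms):
        total += term
        term *= (b - 0.5 + k) * 4.0 * x / ((b + k) * (2.0 * b - 1.0 + k) * (k + 1))
    return total


def square_series_half(x, terms=100):
    """Right-hand side of (19): 1 + (1/2) sum_{k >= 1} (4x)^k / {(1/2)_k k!}."""
    return 0.5 + 0.5 * hyp0f1(0.5, 4.0 * x, terms)


for b, x in [(1.7, 0.9), (2.5, -1.3), (1.0, 0.4)]:
    print(b, x, hyp0f1(b, x) ** 2, square_series(b, x))
print(hyp0f1(0.5, 0.9) ** 2, square_series_half(0.9), math.cosh(2 * math.sqrt(0.9)) ** 2)
```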

The limit in (19) is readily verified using the properties in (16). We now state and prove our main result.

Theorem 3.1.1

Consider data following model (3) and the estimator f̂het(x) in (4). As n → ∞ and λ → 0, with ,

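$$\mathrm{MISE}\{\hat f_{het}(x)\} = \frac{\lambda^4}{4}\left\{\int z^2Q(z)\,dz\right\}^2\int\{f_x''(x)\}^2dx + \frac{1}{n^2\lambda}\sum_{r=1}^{n}G(m_r, \sigma_r^2, \lambda) + o(\lambda^4) + o\{(n\lambda)^{-1}\}, \tag{20}$$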

where

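$$G(m, \sigma^2, \lambda) = \frac{1}{2\pi}\int_{-\infty}^{\infty}|\Phi_Q(t)|^2\sum_{k=0}^{\infty}\frac{(\frac{m-2}{2})_k}{(m-2)_k\,k!}\left(\frac{2\sigma^2 t^2}{m\lambda^2}\right)^k dt, \tag{21}$$

and, for m = 2, the ratio ((m − 2)/2)_k/(m − 2)_k is interpreted as the limit of (b − 1/2)_k/(2b − 1)_k as b → 1/2, namely 1 for k = 0 and 1/2 for k ≥ 1 (cf. (19)).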

Proof

First, from (14) we write ψ̂r(t) = 0F1(; (mr − 1)/2; θ̂r(t)), where θ̂r(t) = (mr − 1)σ̂r²t²/(4mrλ²).

Now consider the decomposition of MISE{f̂het(x)},

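$$\mathrm{MISE}\{\hat f_{het}(x)\} = \int\left[E\{\hat f_{het}(x)\} - f_x(x)\right]^2dx + \int\mathrm{Var}\{\hat f_{het}(x)\}\,dx. \tag{22}$$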

It follows from (5) that as λ → 0,

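$$\int\left[E\{\hat f_{het}(x)\} - f_x(x)\right]^2dx = \frac{\lambda^4}{4}\left\{\int z^2Q(z)\,dz\right\}^2\int\{f_x''(x)\}^2dx + o(\lambda^4). \tag{23}$$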

The integrated variance can be further decomposed and from (10), ∫Var{f̂het(x)}dx = V1 − V2 where

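$$V_1 = \frac{1}{n^2}\sum_{r=1}^{n}\int E\left\{\lambda^{-2}Q^{*2}\left(\frac{x-\bar W_r}{\lambda}, \lambda, m_r, \hat\sigma_r\right)\right\}dx = \frac{1}{2\pi n^2\lambda}\sum_{r=1}^{n}\int_{-\infty}^{\infty}|\Phi_Q(t)|^2\,E\left[\left\{{}_0F_1\left(;\tfrac{m_r-1}{2};\hat\theta_r(t)\right)\right\}^2\right]dt, \tag{24}$$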

using a change of variables and Parseval’s Identity, and

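$$V_2 = \frac{1}{n^2}\sum_{r=1}^{n}\int\left[E\left\{\lambda^{-1}Q^*\left(\frac{x-\bar W_r}{\lambda}, \lambda, m_r, \hat\sigma_r\right)\right\}\right]^2dx = \frac{1}{n}\int\left[E\left\{\lambda^{-1}Q\left(\frac{x-X}{\lambda}\right)\right\}\right]^2dx = \frac{1}{n}\int\left\{\int Q(z)f_x(x-\lambda z)\,dz\right\}^2dx = \frac{1}{2\pi n}\int_{-\infty}^{\infty}|\Phi_Q(\lambda t)|^2|\Phi_x(t)|^2dt, \tag{25}$$

where Φx(t) denotes the characteristic function of X.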

The second equality is a consequence of Theorem 1.1.1. The last two follow from a change of variables and Parseval’s Identity. It remains to evaluate the expectation of {0F1(; (mr − 1)/2; θ̂r(t))}² in (24). Using equations (15), (17), and (18) (for mr ≥ 3) or (19) (for mr = 2), it is easily verified that

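$$E\left[\left\{{}_0F_1\left(;\tfrac{m_r-1}{2};\hat\theta_r(t)\right)\right\}^2\right] = \sum_{k=0}^{\infty}\frac{(\frac{m_r-2}{2})_k}{(m_r-2)_k\,k!}\left(\frac{2\sigma_r^2 t^2}{m_r\lambda^2}\right)^k, \tag{26}$$

where, for mr = 2, the ratio ((mr − 2)/2)_k/(mr − 2)_k is interpreted as its limiting value from (19), namely 1 for k = 0 and 1/2 for k ≥ 1,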

so that

$$V_1 = \frac{1}{n^2\lambda}\sum_{r=1}^{n}G(m_r, \sigma_r^2, \lambda). \tag{27}$$

The result follows from (22), (23) and (27), together with the fact that V2 = O(n^{-1}) = o{(nλ)^{-1}} by (25).
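Equation (26) can be checked by simulation in the same way. The sketch below (hypothetical names; `u` stands for t/λ) compares the series on the right-hand side of (26), or its closed form {1 + exp(σr²u²)}/2 when mr = 2, with a Monte Carlo average of {0F1(; (mr − 1)/2; θ̂r(t))}² over draws of σ̂r². For mr = 4 the series sums to (e^x − 1)/x with x = 2σr²u²/mr, since ((mr − 2)/2)_k/(mr − 2)_k = 1/(k + 1) in that case.

```python
import math
import numpy as np


def hyp0f1(b, x, terms=80):
    """Truncated series 0F1(; b; x), vectorized over x (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    total, term = np.zeros_like(x), np.ones_like(x)
    for k in range(terms):
        total = total + term
        term = term * x / ((b + k) * (k + 1))
    return total


def expected_square(m, sigma2, u, terms=200):
    """Right-hand side of (26) with u = t / lambda; closed form {1 + exp(sigma2 u^2)}/2 when m = 2."""
    x = 2.0 * sigma2 * u**2 / m
    if m == 2:
        return 0.5 * (1.0 + math.exp(x))        # here x = sigma2 * u^2
    a, c = (m - 2) / 2.0, float(m - 2)
    total, term = 0.0, 1.0
    for k in range(terms):
        total += term
        term *= (a + k) * x / ((c + k) * (k + 1))
    return total


def mc_expected_square(m, sigma2, u, size=400_000, seed=4):
    """Monte Carlo mean of {0F1(; (m-1)/2; theta_hat)}^2 over draws of sigma_hat^2."""
    rng = np.random.default_rng(seed)
    s2 = sigma2 * rng.chisquare(m - 1, size=size) / (m - 1)
    theta_hat = (m - 1) * s2 * u**2 / (4.0 * m)
    return float(np.mean(hyp0f1((m - 1) / 2.0, theta_hat) ** 2))
```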

The infinite sum makes the asymptotic behavior of the MISE difficult to examine for general sequences mr, r = 1, 2, …. This sum converges for mr ≥ 2, and closed-form expressions can be obtained for special cases of mr. We focus the remainder of our analysis on the important case of two replicate measurements.

3.1.1 Heteroscedastic Measurement Errors: mr = 2

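When mr = 2 for all r, the expectation in equation (26) simplifies to

$$E\left[\left\{{}_0F_1\left(;\tfrac{1}{2};\hat\theta_r(t)\right)\right\}^2\right] = \frac{1}{2}\left\{1 + \exp\left(\frac{\sigma_r^2 t^2}{\lambda^2}\right)\right\},$$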

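and V1 in equation (27) becomes

$$V_1 = \frac{1}{4\pi n^2\lambda}\sum_{r=1}^{n}\int_{-\infty}^{\infty}|\Phi_Q(t)|^2\left\{1 + \exp\left(\frac{\sigma_r^2 t^2}{\lambda^2}\right)\right\}dt,$$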

so that as n → ∞ and λ → 0,

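$$\mathrm{MISE}\{\hat f_{het}(x)\} = \frac{\lambda^4}{4}\left\{\int z^2Q(z)\,dz\right\}^2\int\{f_x''(x)\}^2dx + \frac{1}{4\pi n^2\lambda}\sum_{r=1}^{n}\int_{-\infty}^{\infty}|\Phi_Q(t)|^2\left\{1 + \exp\left(\frac{\sigma_r^2 t^2}{\lambda^2}\right)\right\}dt + o(\lambda^4) + o\{(n\lambda)^{-1}\}. \tag{28}$$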

Asymptotic analysis of the MISE (n → ∞, λ → 0) in equation (28) is difficult because the MISE depends on the particular sequence of variances σ1², σ2², …. However, useful insights can be gained under the assumption that the empirical distribution function of σ1², …, σn² converges to an absolutely continuous distribution. McIntyre (2003) shows that when measurement error variances are distributed uniformly over a finite interval, the rate of convergence of f̂het is proportional to {log(n)}^{-2}, the same rate found by Stefanski and Carroll (1990) for the deconvoluting estimator when measurement errors are normally distributed with known, constant variance. Furthermore, both the optimal bandwidth and the constant in the optimal rate of convergence depend on the upper support boundary of the variance distribution. These results indicate that estimating heteroscedastic error variances from just two replicates has no effect on the asymptotic rate of convergence, but that the constant multiplying this rate, and the bandwidth needed to achieve it, depend on the larger error variances.
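To see how the larger variances drive the bandwidth, the leading terms of (28) can be evaluated on a grid of λ. This sketch is an illustration under stated assumptions, not the analysis of McIntyre (2003): it uses the unit-variance kernel of Section 4 (so ∫z²Q(z)dz = 1, with the piecewise-cubic ΦQ we derived for it), takes fx to be N(0, 1) (for which ∫{fx''(x)}²dx = 3/(8√π)), and spreads n = 1000 variances evenly over (0, 2]. The function name `amise_two_reps` is hypothetical.

```python
import numpy as np

A = np.sqrt(3.0) / 2.0


def phi_Q(t):
    """Characteristic function of the unit-variance kernel from Section 4 (zero for |t| > 4A)."""
    s = np.abs(np.asarray(t, dtype=float)) / A
    inner = 1.0 - 3.0 * s**2 / 8.0 + 3.0 * s**3 / 32.0
    outer = np.clip(4.0 - s, 0.0, None) ** 3 / 32.0
    return np.where(s <= 2.0, inner, outer)


def amise_two_reps(lam, sigma2, rough_f2, num=2001):
    """Leading terms of (28): squared-bias term plus integrated-variance term for m_r = 2."""
    sigma2 = np.asarray(sigma2, dtype=float)
    n = sigma2.size
    t = np.linspace(-4.0 * A, 4.0 * A, num)
    dt = t[1] - t[0]
    phi2 = phi_Q(t) ** 2                     # zero at both endpoints, so a plain sum is the trapezoid rule
    growth = 1.0 + np.exp(np.outer(sigma2, t**2) / lam**2)
    variance = (growth * phi2).sum() * dt / (4.0 * np.pi * n**2 * lam)
    return lam**4 * rough_f2 / 4.0 + variance


sigma2 = np.linspace(0.01, 2.0, 1000)      # n = 1000 variances spread over (0, 2]
rough = 3.0 / (8.0 * np.sqrt(np.pi))       # int {f''(x)}^2 dx when f is the N(0, 1) density
grid = np.linspace(0.5, 3.0, 51)
values = np.array([amise_two_reps(lam, sigma2, rough) for lam in grid])
lam_opt = grid[np.argmin(values)]
```

Rerunning with the upper end of the variance range reduced moves the minimizer toward smaller λ, in line with the dependence on the larger error variances described above.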

3.2 Heteroscedastic Measurement Errors: Weighting

We next investigate the use of weights to reduce the variability of f̂het(x) in (4). As f̂het(x) is the sum of independent components, having a common mean but different variances, it is reasonable to expect that weighting will reduce variability.

Optimal weights for the weighted estimator are derived under the assumption that the measurement error variances are known, and weights are selected to minimize the asymptotic integrated variance. We examine the asymptotic properties of the estimator calculated with these optimal weights, and our results provide intuition for the properties of f̂wt(x), which uses the set of estimated weights calculated with the unknown measurement error variances replaced by their sample estimates.

The optimal weighted estimator has the general form

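$$\tilde f_{wt}(x) = \sum_{r=1}^{n}\frac{w_r}{\lambda}\,Q^*\left(\frac{x-\bar W_r}{\lambda}, \lambda, m_r, \hat\sigma_r\right), \tag{29}$$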

where w1, …, wn are known constants, wr ≥ 0 for all r and Σr wr = 1. The integrated variance of f̃wt(x) is ∫Var{f̃wt(x)}dx = Ṽ1 − Ṽ2 where

$$\tilde V_1 = \frac{1}{\lambda}\sum_{r=1}^{n}w_r^2\,G(m_r, \sigma_r^2, \lambda), \tag{30}$$

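and

$$\tilde V_2 = \sum_{r=1}^{n}w_r^2\int\left[E\left\{\lambda^{-1}Q^*\left(\frac{x-\bar W_r}{\lambda}, \lambda, m_r, \hat\sigma_r\right)\right\}\right]^2dx,$$

where Q*(z, λ, mr, σ̂r) is defined as in equation (9).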

It follows from equation (25) that, provided wr ≤ B/n for r = 1, …, n and some 0 < B < ∞, Ṽ2 = o{(nλ)^{-1}}. Thus asymptotically, Ṽ1 is the dominant term in the integrated variance, and we select weights to minimize this quantity. Using (21), let vr = G(mr, σr², λ), r = 1, …, n,

and note from equation (27) that vr is proportional to the contribution to the asymptotic integrated variance from the rth component of f̂het(x). Define

$$w_r = \frac{v_r^{-1}}{\sum_{s=1}^{n}v_s^{-1}} \tag{31}$$

for r = 1, …, n. A straightforward application of the Cauchy-Schwarz inequality shows that these weights minimize (30) subject to w1 + ⋯ + wn = 1. Substitution into (30) yields

$$\tilde V_1 = \frac{1}{\lambda}\left(\sum_{r=1}^{n}v_r^{-1}\right)^{-1}. \tag{32}$$

Finally, substituting the sample variances, σ̂1², …, σ̂n², for the true variances in equation (31) forms the set of estimated weights, ŵ1, …, ŵn, and the estimator

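$$\hat f_{wt}(x) = \sum_{r=1}^{n}\frac{\hat w_r}{\lambda}\,Q^*\left(\frac{x-\bar W_r}{\lambda}, \lambda, m_r, \hat\sigma_r\right). \tag{33}$$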
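The weights (31) and the variance comparison (32) are easy to compute when mr = 2, where vr = (4π)^{-1}∫|ΦQ(t)|²{1 + exp(σr²t²/λ²)}dt. The sketch below (hypothetical names, unit-variance kernel from Section 4, simulated variances) computes the true-variance weights, checks that the minimized Ṽ1 does not exceed the equal-weight value V1 from (27), and forms the plug-in weights ŵr of (33) from two replicates per subject.

```python
import numpy as np

A = np.sqrt(3.0) / 2.0


def phi_Q(t):
    """Characteristic function of the unit-variance kernel from Section 4 (zero for |t| > 4A)."""
    s = np.abs(np.asarray(t, dtype=float)) / A
    inner = 1.0 - 3.0 * s**2 / 8.0 + 3.0 * s**3 / 32.0
    outer = np.clip(4.0 - s, 0.0, None) ** 3 / 32.0
    return np.where(s <= 2.0, inner, outer)


def component_variances(sigma2, lam, num=2001):
    """v_r = G(2, sigma_r^2, lam) = (4 pi)^(-1) int |Phi_Q(t)|^2 {1 + exp(sigma_r^2 t^2 / lam^2)} dt."""
    t = np.linspace(-4.0 * A, 4.0 * A, num)
    dt = t[1] - t[0]
    growth = 1.0 + np.exp(np.outer(np.asarray(sigma2, dtype=float), t**2) / lam**2)
    return (growth * phi_Q(t) ** 2).sum(axis=1) * dt / (4.0 * np.pi)


def optimal_weights(v):
    """Weights (31): w_r proportional to 1 / v_r, normalized to sum to 1."""
    inv = 1.0 / np.asarray(v, dtype=float)
    return inv / inv.sum()


rng = np.random.default_rng(5)
n, lam = 200, 1.0
sigma2 = rng.uniform(0.0, 2.0, size=n)
v = component_variances(sigma2, lam)
w = optimal_weights(v)
V1_equal = v.sum() / (n**2 * lam)            # (27): equal weights 1/n
V1_opt = 1.0 / (lam * np.sum(1.0 / v))       # (32)

# Plug-in weights for (33): sample variances from two replicates per subject.
X = rng.standard_normal(n)
W = X[:, None] + np.sqrt(sigma2)[:, None] * rng.standard_normal((n, 2))
w_hat = optimal_weights(component_variances(W.var(axis=1, ddof=1), lam))
```

Because vr grows roughly like exp(σr² × const/λ²), the true-variance weights are very uneven, and the plug-in weights inherit the noise of two-replicate variance estimates, which is why ŵr is not consistent for wr.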

3.2.1 Mean Integrated Squared Error of f̃wt(x)

We now consider the asymptotic properties of f̃wt(x), calculated with the optimal weights in equation (31). We derive an expression for the MISE of f̃wt(x) under the assumption that the sequence of measurement error variances, σ1², …, σn², is known. This analysis provides guidance on the asymptotic behavior of f̂wt(x) in equation (33). Because the estimated weights are not consistent for the true weights, the resulting estimator is not guaranteed to perform as well as the true-weight estimator asymptotically. Nevertheless, the simulation results reported in Section 4 indicate that the approximate weighting has a significant beneficial effect in finite samples.

The estimator f̃wt(x) has the same key property in equation (5) as f̂het(x), and so has the same integrated squared bias as the kernel density estimator of fx(x) based on the true data. Combining (23) and (32), we have that as n → ∞ and λ → 0,

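$$\mathrm{MISE}\{\tilde f_{wt}(x)\} = \frac{\lambda^4}{4}\left\{\int z^2Q(z)\,dz\right\}^2\int\{f_x''(x)\}^2dx + \frac{1}{\lambda}\left(\sum_{r=1}^{n}v_r^{-1}\right)^{-1} + o(\lambda^4) + o\{(n\lambda)^{-1}\}. \tag{34}$$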

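The expression simplifies in the case of two replicate measurements. From equations (32) and (21), when mr = 2 for all r, as n → ∞ and λ → 0,

$$\int\mathrm{Var}\{\tilde f_{wt}(x)\}\,dx = \frac{1}{\lambda}\left[\sum_{r=1}^{n}\frac{4\pi}{\int|\Phi_Q(t)|^2\{1 + \exp(\sigma_r^2t^2/\lambda^2)\}\,dt}\right]^{-1} + o\{(n\lambda)^{-1}\},$$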

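and the MISE becomes

$$\mathrm{MISE}\{\tilde f_{wt}(x)\} = \frac{\lambda^4}{4}\left\{\int z^2Q(z)\,dz\right\}^2\int\{f_x''(x)\}^2dx + \frac{1}{\lambda}\left[\sum_{r=1}^{n}\frac{4\pi}{\int|\Phi_Q(t)|^2\{1 + \exp(\sigma_r^2t^2/\lambda^2)\}\,dt}\right]^{-1} + o(\lambda^4) + o\{(n\lambda)^{-1}\}.$$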

The asymptotic behavior of the MISE again depends on the sequence of variances σ1², …, σn². McIntyre (2003) shows that when the variances are uniformly distributed over a finite interval, the MISE of f̃wt(x) converges to 0 at a rate proportional to {log(n)}^{-2}, the same rate achieved by the unweighted estimator under that assumption. The result implies that when measurement error variances are known and uniformly distributed, there is no advantage to weighting in terms of the rate of convergence of the MISE. However, the constant multiplying the rate is reduced. Furthermore, the primary advantage of weighting is in finite samples, as the simulations in Section 4 illustrate.

4 Simulation Study

We performed a simulation study to investigate the finite-sample properties of the proposed estimators in equations (4), (29), and (6). These were calculated using the kernel Q(x) ∝ {sin(x)/x}^4, where Q(x) was scaled to have mean 0 and variance 1. We also included in the study the so-called naive estimator, which ignores measurement error. Specifically, we took the naive estimator to be the kernel density estimator calculated with the sample means of the replicate measurements,

$$\hat f_{naive}(x) = \frac{1}{n\tau}\sum_{r=1}^{n}\phi\left(\frac{x - \bar W_r}{\tau}\right),$$

where φ(x) is the standard normal density and τ > 0 is the bandwidth.

Estimators were compared on the basis of average integrated squared error (ISE). For each simulated data set, estimators were computed at their optimal bandwidths, found by minimizing the integrated squared error. Because this requires knowledge of the unknown density fx(x), the estimators in this study cannot be computed in practice. However, our results provide insight into their relative optimal performances, independent of the problem of estimating a bandwidth. We defer a discussion of bandwidth estimation to Section 5. For additional comparison, we also calculated the naive estimator using the popular normal reference bandwidth, τ̂ = 1.06σ̂W̄ n^{-1/5}, where σ̂W̄² is the sample variance of the means W̄1, …, W̄n (Silverman, 1986).
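The naive estimator and the normal reference bandwidth are straightforward to compute; a minimal sketch follows (function names hypothetical). It uses the sample standard deviation (ddof=1) of the replicate means, matching the description above.

```python
import numpy as np


def normal_reference_bandwidth(wbar):
    """tau_hat = 1.06 * sd(Wbar_1, ..., Wbar_n) * n^(-1/5)."""
    wbar = np.asarray(wbar, dtype=float)
    return 1.06 * wbar.std(ddof=1) * wbar.size ** (-0.2)


def f_naive(x, wbar, tau):
    """Normal-kernel density estimate from the replicate means; ignores measurement error."""
    z = (np.asarray(x, dtype=float)[:, None] - np.asarray(wbar, dtype=float)[None, :]) / tau
    return np.exp(-0.5 * z**2).mean(axis=1) / (tau * np.sqrt(2.0 * np.pi))


wbar = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
tau = normal_reference_bandwidth(wbar)          # 1.06 * sqrt(2.5) * 5^(-1/5)
x = np.linspace(-10.0, 14.0, 2401)
mass = f_naive(x, wbar, tau).sum() * (x[1] - x[0])
```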

We examined three factors in this study. First, six different sample sizes were considered, including n = 100, 500, 1000, 2000 and 2500. Second, we examined the effect of the true-data density, generating data, X1, …, Xn, from the N(0, 1) density and the Chi-squared(4) density, standardized to have mean 0 and variance 1. Finally, we examined the effect of the homogeneity of measurement error variances on the performance of each estimator. Observed data were generated with normal measurement errors having variances σr² = 1 for the case of constant variances, and σ1², …, σn² chosen uniformly over the interval (0, 2) for the case of nonconstant variances. All estimators were computed on each set of observed data.

In practice, often only a small number of replicate measurements is observed, with mr = 2 common. Thus in our simulations, we considered only the case of mr = 2 replicate measurements. All results are based on fifty simulated data sets.

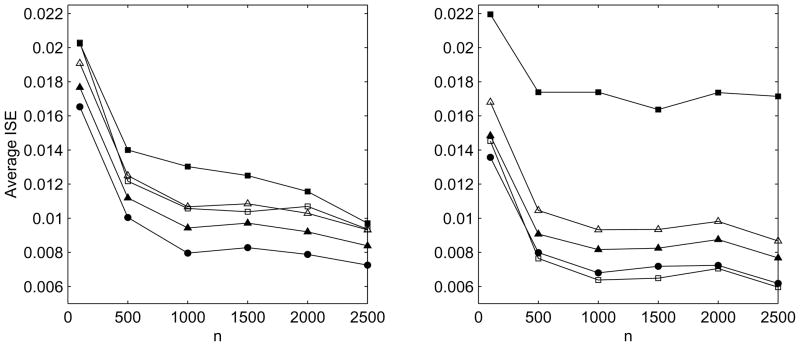

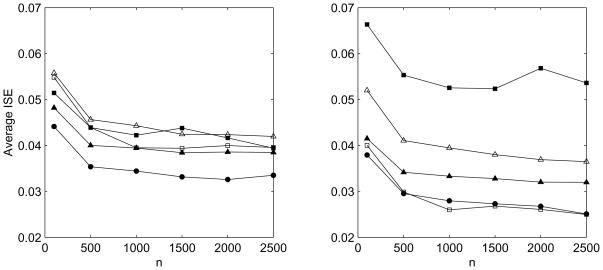

Average ISEs are plotted by sample size in Figure 1 for Xr ~ N(0, 1) and Figure 2 for Xr ~ Chi-squared(4). For simulations that considered homoscedastic measurement error, the average ISE for f̂hom(x) was significantly smaller (α=0.05) than that of the other estimators for each sample size except n=100, where all estimators were most variable. For simulations that considered heteroscedastic measurement errors, f̂het(x) had a significantly higher average ISE than all other estimators for sample sizes of n ≥ 500, and was also much more variable. The estimators f̂hom(x) and f̂wt(x) had significantly lower average integrated squared errors than all other estimators for n ≥ 500, but differences between the two were generally not significant.

Figure 1.

Average ISE by sample size for X ~ N(0, 1). Left: homoscedastic measurement errors, N(0, 1). Right: heteroscedastic measurement errors, with σr² uniform on (0, 2). Open triangle: f̂naive with plug-in bandwidth; Closed triangle: f̂naive with optimal bandwidth; Closed square: f̂het; Open square: f̂wt; Closed circle: f̂hom.

Figure 2.

Average ISE by sample size for X ~ Chi-squared(4), standardized to have mean 0 and variance 1. Left: homoscedastic measurement errors, N(0, 1). Right: heteroscedastic measurement errors, with σr² uniform on (0, 2). Open triangle: f̂naive with plug-in bandwidth; Closed triangle: f̂naive with optimal bandwidth; Closed square: f̂het; Open square: f̂wt; Closed circle: f̂hom.

It is evident from the simulations that weighting is effective in reducing the ISE of f̂het(x), and f̂wt(x) is preferred. In almost every case, the average ISE of f̂wt(x) was significantly less than that of f̂het(x). However, even the weighted estimator performed relatively poorly when measurement error variances were constant, which is not surprising because here f̂wt(x) relies on n estimates of the constant variance, each obtained from only two replicate measurements. The estimator for homoscedastic errors performed well for both types of measurement errors, suggesting it is a good choice even when there is doubt about the homogeneity of the error variances, provided the heterogeneity is not too great. It is encouraging that, for the sample sizes considered here, the measurement error-corrected estimators perform as well as or better than the naive estimator. This suggests that, given a reliable rule for selecting a bandwidth, correcting for measurement errors is worthwhile in many situations.

5 A Bootstrap Method for Bandwidth Selection

We now describe a bootstrap bandwidth selection procedure and illustrate it with data in Section 6. The bootstrap has been used for bandwidth selection in traditional kernel density estimation (Marron, 1992), and Delaigle and Gijbels (2004) extended it to the deconvolution estimator (7). An attractive feature of this bootstrap method is that the bandwidth estimate can be obtained without resampling, because the bootstrap MISE can be computed analytically. We note that the bandwidth-selection method proposed by Delaigle and Hall (2008) based on SIMEX (Cook and Stefanski, 1994; Stefanski and Cook, 1995) could also be applied to our estimators.

We obtain an initial estimate of bandwidth, τ̂, used to construct an initial density estimate for generating bootstrap samples. For our deconvolution problem, we use the naive estimator,

$$\hat f_{naive}(x; \hat\tau) = \frac{1}{n\hat\tau}\sum_{r=1}^{n}\phi\left(\frac{x - \bar W_r}{\hat\tau}\right),$$

where τ̂ is the normal reference bandwidth (Silverman, 1986). This provides a reasonable, though overly smoothed, estimate of fx(x).

For heteroscedastic measurement errors, bootstrap data are generated for r = 1, …, n and j = 1, …, mr as W*r,j = X*r + σ̂r Zr,j, where X*r is generated from the density f̂naive(x; τ̂), σ̂r is the estimate of σr from the original data and Zr,j ~ N(0, 1). In the case of homoscedastic measurement errors, σ̂r is replaced with the pooled estimate of the error standard deviation, σ̂.

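Let f̂*(x; h) denote any of the three deconvolution estimators from (4), (6) or (29), computed with the bootstrap sample and bandwidth h. The bootstrap estimate of the optimal bandwidth for a measurement error-corrected estimator is the value of h that minimizes

$$\mathrm{MISE}^*(h) = E_{BS}\int\left\{\hat f^*(x; h) - \hat f_{naive}(x; \hat\tau)\right\}^2dx,$$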

where EBS denotes expectation with respect to the bootstrap distribution. The optimal bandwidth can be determined empirically by computing a large number of bootstrap estimates over a dense grid of bandwidths and selecting the value of h that achieves the smallest average ISE. However, because f̂naive(x; τ̂) replaces the unknown fx(x), the optimal bandwidth can also be determined by direct calculation of MISE*(h) over the grid of bandwidths, using analytical expressions for the MISE, e.g., equations (20) and (34). For more details see McIntyre (2003).
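An empirical version of the bootstrap procedure can be sketched as follows for mr = 2 and the unweighted estimator (4). This is an illustration only: the function names are hypothetical, the data are simulated, the bandwidth grid is coarse, only 20 bootstrap samples are used, and the ISE is approximated by a Riemann sum over a finite grid rather than the whole real line. X*r is drawn from f̂naive(x; τ̂) by the usual smoothed-bootstrap device of resampling a mean W̄r and adding N(0, τ̂²) noise, and the kernel is the unit-variance {sin(x)/x}^4 kernel of Section 4.

```python
import numpy as np

A = np.sqrt(3.0) / 2.0
C = 3.0 * np.sqrt(3.0) / (4.0 * np.pi)


def Q(z):
    """Unit-variance kernel C {sin(Az)/(Az)}^4, evaluated at real or complex z."""
    u = A * np.asarray(z, dtype=complex)
    tiny = np.abs(u) < 1e-8
    u_safe = np.where(tiny, 1.0, u)
    return C * np.where(tiny, 1.0, np.sin(u_safe) / u_safe) ** 4


def f_het_two(x, W1, W2, lam):
    """Estimator (4) with m_r = 2 (closed form)."""
    wbar, shift = (W1 + W2) / 2.0, np.abs(W1 - W2) / 2.0
    z = (x[:, None] - wbar[None, :] - 1j * shift[None, :]) / lam
    return np.real(Q(z)).mean(axis=1) / lam


def f_naive(x, wbar, tau):
    """Normal-kernel density estimate of the replicate means."""
    z = (x[:, None] - wbar[None, :]) / tau
    return np.exp(-0.5 * z**2).mean(axis=1) / (tau * np.sqrt(2.0 * np.pi))


def bootstrap_bandwidth(W1, W2, lam_grid, n_boot=20, seed=None):
    """Empirical bootstrap choice of lambda for (4) with two replicates per subject."""
    rng = np.random.default_rng(seed)
    wbar = (W1 + W2) / 2.0
    sig_hat = np.abs(W1 - W2) / np.sqrt(2.0)          # sigma_hat_r from the original data
    n = wbar.size
    tau = 1.06 * wbar.std(ddof=1) * n ** (-0.2)       # normal reference bandwidth
    x = np.linspace(wbar.min() - 4.0, wbar.max() + 4.0, 400)
    dx = x[1] - x[0]
    target = f_naive(x, wbar, tau)                    # stands in for the unknown f_x
    ise = np.zeros(len(lam_grid))
    for _ in range(n_boot):
        # X*_r ~ f_naive(.; tau): resample a mean and add N(0, tau^2) noise.
        x_star = rng.choice(wbar, size=n) + tau * rng.standard_normal(n)
        W1s = x_star + sig_hat * rng.standard_normal(n)
        W2s = x_star + sig_hat * rng.standard_normal(n)
        for k, lam in enumerate(lam_grid):
            ise[k] += np.sum((f_het_two(x, W1s, W2s, lam) - target) ** 2) * dx
    ise /= n_boot
    return lam_grid[int(np.argmin(ise))], ise


rng = np.random.default_rng(6)
n = 150
X = rng.standard_normal(n)
sd = np.sqrt(rng.uniform(0.0, 0.5, size=n))
W1 = X + sd * rng.standard_normal(n)
W2 = X + sd * rng.standard_normal(n)
grid = np.linspace(0.4, 1.6, 7)
h_star, boot_ise = bootstrap_bandwidth(W1, W2, grid, seed=7)
```

Replacing the average of the ISE values by their median gives the median-ISE variant used for the empirical bandwidths in Section 6.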

6 An Application

We illustrate the bootstrap bandwidth procedure using data from a U.S. EPA study of automobile emissions. Measurements of carbon monoxide (CO) in automobile emissions were collected with a remote sensing device stationed at a highway entrance ramp in North Carolina. The device measured CO in the exhaust of passing automobiles, and also photographed their license plates. Measurements were taken on several different dates resulting in replicate measurements from cars that passed the location multiple times. Of a total of 3002 automobiles observed, 1233 had multiple measurements (946 with mr = 2 and 287 with mr = 3).

An objective of the study was to characterize average CO emissions among the population of automobiles. Measurements of CO taken from a stationary point on the roadway are subject to error from several sources including meteorological conditions, variations in engine temperature, speed and acceleration, and instrument error. The multiple sources of variation are expected to result in multiplicative errors. Thus we log-transformed the CO measurements.

The log-transformed data showed evidence of heteroscedastic measurement errors. A loess curve fit to the scatter plot of σ̂r versus W̄r exhibited substantial nonlinearities over the range of sample means, although globally there was a tendency for larger sample standard deviations to be associated with larger sample means. Testing the latter via a simple linear regression of the sample standard deviations on the sample means resulted in a significant slope of 0.0936 (p < .0001).

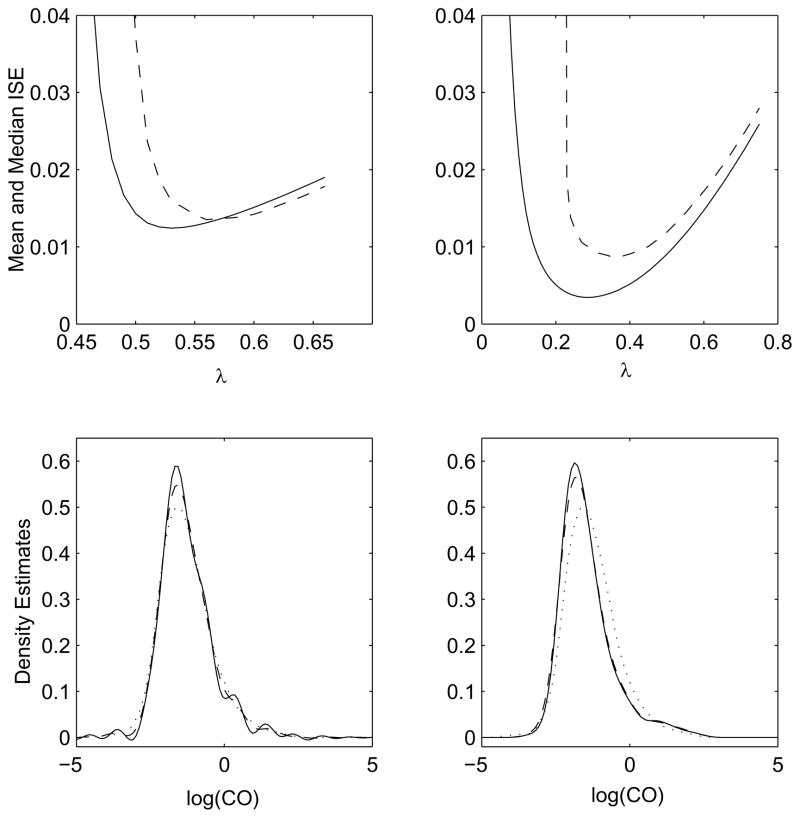

We estimated the density function of the log-transformed CO measurements using the 1233 automobiles with multiple measurements. For comparison, we fit the weighted estimator for heteroscedastic errors and the estimator for homoscedastic errors. Bandwidths were selected using both analytical and empirical calculation of the bootstrap bandwidth estimate as described in Section 5. Each empirical estimate was based on 100 bootstrap data sets and the bandwidth was selected to minimize the median integrated squared error. Results are shown in Figure 3. Also shown is the naive estimate, calculated using the sample means of replicate observations and the normal reference bandwidth. All measurement error-corrected estimators show a higher peak and slightly thinner tails than the naive estimator. This is expected when the effects of measurement error are removed from the observed-data estimate.

Figure 3.

Top row: Analytical mean (Solid line) and empirical median (Dashed line) ISE of bootstrap density estimates as a function of bandwidth. Left: Homoscedastic estimator; Right: Weighted estimator; Bottom row: Density estimates with bandwidth minimizing bootstrap MISE (solid line) and median ISE (dashed line). Left: Homoscedastic estimator; Right: Weighted estimator. Dotted line in both panels is the naive estimator with plug-in bandwidth.

Acknowledgments

We are grateful to the anonymous reviewers whose comments led to substantial improvements in content and clarity of the paper. We also acknowledge financial support provided by the US National Science Foundation VIGRE Program and NSF grants DMS-0304900 and DMS-0504283.

Contributor Information

Julie McIntyre, Department of Mathematics and Statistics, University of Alaska Fairbanks, Fairbanks, AK 99775, USA.

Leonard A. Stefanski, Department of Statistics, North Carolina State University, Raleigh, NC 27695-8203, USA

References

- Andrews G, Askey R, Roy R. Special Functions. Cambridge University Press; 1999.

- Bailey WN. Products of generalized hypergeometric series. Proceedings of the London Mathematical Society. 1928;2:242–254.

- Carroll RJ, Hall P. Optimal rates of convergence for deconvolving a density. Journal of the American Statistical Association. 1988;83:1184–1186.

- Cook JR, Stefanski LA. Simulation-extrapolation estimation in parametric measurement error models. Journal of the American Statistical Association. 1994;89:1314–1328.

- Delaigle A. An alternative view of the deconvolution problem. 2007. To appear in Statistica Sinica.

- Delaigle A, Gijbels I. Bootstrap bandwidth selection in kernel density estimation from a contaminated sample. Annals of the Institute of Statistical Mathematics. 2004;56:19–47.

- Delaigle A, Hall P. Using SIMEX for smoothing-parameter choice in errors-in-variables problems. Journal of the American Statistical Association. 2008;103:280–287.

- Delaigle A, Hall P, Meister A. On Deconvolution with repeated measurements. Annals of Statistics. 2008;36:665–685.

- Delaigle A, Hall P, Müller H. Accelerated convergence for nonparametric regression with coarsened predictors. Annals of Statistics. 2007;35:2639–2653.

- Delaigle A, Meister A. Nonparametric regression estimation in the heteroscedastic errors-in-variables problem. Journal of the American Statistical Association. 2007;102:1416–1426.

- Delaigle A, Meister A. Density estimation with heteroscedastic error. Bernoulli. 2008;14:562–569.

- Devroye L. Consistent deconvolution in density estimation. The Canadian Journal of Statistics. 1989;17:235–239.

- Diggle PJ, Hall P. A Fourier approach to nonparametric deconvolution of a density estimate. Journal of the Royal Statistical Society, Series B. 1993;55:523–531.

- Erdelyi AE. Higher Transcendental Functions. Vol. 2. McGraw-Hill; 1953.

- Fan J. Asymptotic normality for deconvolution kernel density estimators. Sankhya, Series A, Indian Journal of Statistics. 1991a;53:97–110.

- Fan J. On the optimal rates of convergence for nonparametric deconvolution problems. Annals of Statistics. 1991b;19:1257–1272.

- Fan J. Deconvolution with supersmooth distributions. The Canadian Journal of Statistics. 1992;20:155–169.

- Li T, Vuong Q. Nonparametric estimation of the measurement error model using multiple indicators. Journal of Multivariate Analysis. 1998;65:139–165.

- Marron JS. Bootstrap bandwidth selection. In: LePage R, Billard L, editors. Exploring the Limits of Bootstrap. John Wiley & Sons; 1992.

- McIntyre J. Density deconvolution with replicate measurements and auxiliary data. PhD Thesis. North Carolina State University; 2003.

- Meister A. Density estimation with normal measurement error with unknown variance. Statistica Sinica. 2006;16:195–211.

- Neumann MH. Deconvolution from panel data with unknown error distribution. Journal of Multivariate Analysis. 2007;98:1955–1968.

- Patil P. A note on deconvolution density estimation. Statistics & Probability Letters. 1996;29:79–84.

- Silverman BW. Density estimation for statistics and data analysis. Chapman & Hall Ltd; 1986.

- Staudenmayer J, Ruppert D, Buonaccorsi JP. Density estimation in the presence of heteroskedastic measurement error. Journal of the American Statistical Association. 2008. To appear.

- Stefanski LA. Unbiased estimation of a nonlinear function of a normal mean with application to measurement error models. Communications in Statistics, Series A. 1989;18:4335–4558.

- Stefanski LA. Rates of convergence of some estimators in a class of deconvolution problems. Statistics & Probability Letters. 1990;9:229–235.

- Stefanski LA, Carroll RJ. Deconvoluting kernel density estimators. Statistics. 1990;21:169–184.

- Stefanski LA, Cook JR. Simulation-extrapolation: The measurement error jackknife. Journal of the American Statistical Association. 1995;90:1247–1256.

- Stefanski LA, Novick SJ, Devanarayan V. Estimating a nonlinear function of a normal mean. Biometrika. 2005;92:732–736.

- Wand MP. Finite sample performance of deconvolving density estimators. Statistics & Probability Letters. 1998;37:131–139.

- Watson GS. Statistics on Spheres. John Wiley & Sons; 1983.