Abstract

The relationship between activity in the primary motor cortex and movement kinematics has been established in nonhuman primate studies of hand reaching and drawing tasks. These studies demonstrated that neural activity accompanying or immediately preceding movement encodes its direction, speed and other kinematic information. Here we investigated the relationship between the kinematics of imagined and actual hand movement, i.e. the clenching speed, and EEG activity in ten human subjects. Participants were asked to perform and to imagine clenching of the left hand and right hand at various speeds. EEG activity in the alpha (8 Hz – 12 Hz) and beta (18 Hz – 28 Hz) frequency bands was found to be linearly correlated with the speed of imagined clenching. Similar parametric modulation was found during the execution of hand movements. A single equation relating EEG activity to the speed and the hand (left vs. right) was developed. This equation, a linear combination of the two independent parameters, described the time-varying neural activity during the tasks. Based on this model, a regression approach was developed to decode the two parameters from multichannel EEG signals. We demonstrated continuous decoding of the dynamic hand and speed information of imagined clenching; in particular, the time-varying clenching speed was reconstructed as a bell-shaped profile. Our findings suggest that this approach could provide continuous and complex control of noninvasive brain-computer interfaces for paralyzed patients with movement impairments.

Keywords: electroencephalography, event-related desynchronization, motor imagery, hand movement decoding, brain-computer interface

Introduction

How kinematic parameters are encoded in the motor cortex is an important question both for understanding the physiology of motor control and for developing neuromotor prostheses. The seminal discovery on this topic, made in the early 1980s, was the cosine-tuning property of neurons recorded in the primary motor cortex (Georgopoulos et al 1982). Subsequent work revealed that the position, velocity (including direction and speed) and other kinematics of hand movement are represented in single-unit spiking, multi-unit activity and local field potentials recorded from electrodes implanted in nonhuman primates (Kettner et al 1988, Fu et al 1995, Schwartz 1994, Moran and Schwartz 1999, Paninski et al 2004). These findings led to the development of neural prostheses that decode kinematics from neural recordings and thus restore motor function by bypassing the neuromuscular pathways, i.e. the so-called brain-machine or brain-computer interface (BMI/BCI, Schwartz et al 2006, Wolpaw et al 2002). Researchers have demonstrated the ability to decode hand kinematics to drive a computer cursor or a robotic arm (Wessberg et al 2000, Serruya et al 2002, Taylor et al 2002, Santhanam et al 2006, Velliste et al 2008). Recently, such invasive recording technology was tested in patients with tetraplegia, and the results showed that spiking activities of single neurons in the human primary motor cortex were tuned to intended/imagined movements (Hochberg et al 2006, Truccolo et al 2008).

In the meantime, considerable effort has been devoted to using noninvasive recording technologies, e.g. electroencephalography (EEG) or magnetoencephalography (MEG), to decode movement- or imagery-related information. The planning and execution of movement lead to predictable changes in the alpha (8 Hz – 12 Hz) and beta (13 Hz – 28 Hz) frequency bands, commonly known as event-related synchronization/desynchronization (ERS/ERD, Pfurtscheller and Lopes da Silva 1999, Wang et al 2004). Such characteristic changes in EEG rhythms can be used to classify brain states related to the planning or imagery of different types of limb movement. Studies have demonstrated that human subjects can use motor imagery as a mental strategy to achieve multi-dimensional control of a computer cursor based on EEG recordings (Wolpaw and McFarland 1994, Wolpaw and McFarland 2004, Yuan et al 2008, Royer and He 2009). So far, most studies of motor imagery have focused on classifying the type of imagery based on spatial-temporal patterns derived from EEG or MEG recordings. However, no kinematic information beyond the type of imagery has been extracted from these recordings.

In the present study, we tested whether a kinematic parameter, namely the speed of actual or imagined hand clenching, is represented in scalp EEG recordings. Subjects performed and imagined clenching of their left or right hands at various speeds while their EEG was recorded. The spatial-temporal dynamics of EEG in the alpha and beta frequency bands were compared across speeds. We further developed a single linear-model equation relating band-limited EEG power to the clenching speed and the hand (left vs. right). Finally, we tested whether the two parameters could be decoded from multichannel recordings and demonstrated the feasibility of continuously and independently decoding the speed and hand information associated with motor imagery.

Materials and Methods

Subjects

Ten healthy subjects (six males and four females) were recruited to participate in the study (age range, 20–26 years; mean ± SD, 22±2.0 years). All subjects were right-handed according to the Edinburgh Handedness Inventory (Oldfield 1971) and were previously naïve to BCI usage. The study protocol was approved by the Institutional Review Board of the University of Minnesota. Informed consent was obtained from all subjects prior to the study.

Experimental Design and Data Acquisition

Subjects were instructed to clench, or to imagine clenching, the left or right hand at seven different speeds (0.5 Hz, 1 Hz, 1.5 Hz, 2 Hz, 2.5 Hz, 3 Hz and 3.5 Hz), paced by a metronome. Task blocks (30 s each) were interleaved with rest blocks (10 s each). Each task block contained nine events, during which subjects performed the instructed task for 2 s, separated by inter-trial intervals varying from 1 s to 2 s. The number of clenches in each task interval was identical within a run and ranged from one to seven across runs. Visual cues indicated the condition of each event. Each run consisted of two repeats of movement and imagery of the left and right hands, resulting in eight task blocks per run. Each speed was repeated twice during data acquisition. The sequences of block conditions and of speeds were randomized and balanced across runs and subjects.
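As a quick consistency check of the design arithmetic above, the sketch below derives the number of clenches per 2-s task interval from each metronome speed and counts blocks and events per run. It assumes, as implied by the identical clench count within a run, that one speed is used per run; all names are illustrative only.

```python
# Illustrative design arithmetic; names are hypothetical, values come from the text.
SPEEDS_HZ = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5]
TASK_DURATION_S = 2.0   # duration of each task event
EVENTS_PER_BLOCK = 9
REPEATS_PER_SPEED = 2

# Clenches per task interval = speed x duration (identical within a run).
clenches_per_interval = {v: round(v * TASK_DURATION_S) for v in SPEEDS_HZ}

# Each run: 2 tasks (movement, imagery) x 2 hands x 2 repeats.
blocks_per_run = 2 * 2 * 2
events_per_run = blocks_per_run * EVENTS_PER_BLOCK

# Assumption: one speed per run, so each repeat of a speed is one run.
total_runs = len(SPEEDS_HZ) * REPEATS_PER_SPEED

print(clenches_per_interval)
print(blocks_per_run, events_per_run, total_runs)
```

The clench counts run from one (0.5 Hz) to seven (3.5 Hz), matching the range stated in the text.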

Subjects were trained on the task for less than one hour before the data were collected. They were instructed to perform kinesthetic imagery of the hand movement: imagining themselves clenching the hand, rather than mentally watching themselves or another person executing the task. During training, subjects practiced the movement and imagery at each speed while their EMG activity was monitored online and visually inspected by the researchers. Training continued until the researchers verified that subjects performed the movement appropriately and subjects reported vivid imagery of the task. On a separate day prior to data collection, all subjects also practiced with an imagery-based 1D BCI (Yuan et al 2008) for ten runs (5 min each). Data from these BCI sessions were used only for instructional purposes and were not included in the present analysis.

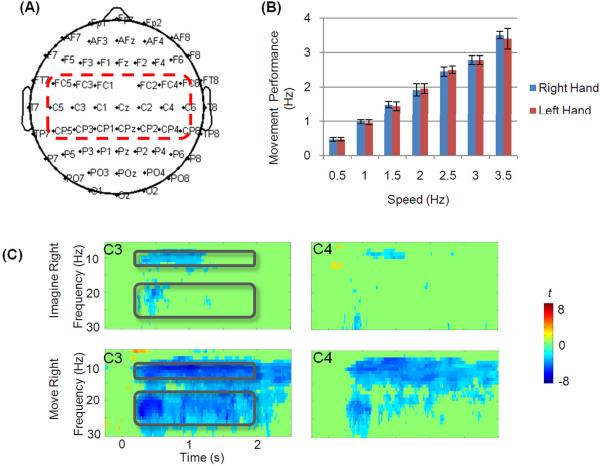

EEG signals were acquired with a SynAmps2 amplifier (Neuroscan Compumedics) at a sampling frequency of 1000 Hz. The electrode layout is shown in Fig. 1(A). Electromyogram (EMG) was also recorded from both the left and right forearms to monitor muscle activity, with bipolar EMG electrodes placed on the ventral side of each forearm approximately two inches from the wrist. EMG activity was monitored by the researchers during recording and reviewed again during data analysis. Clear EMG bursts accompanied each movement, whereas no EMG bursts were present during imagery tasks.

Figure 1.

(A) The electrode layout for the EEG recording; electrodes in the central region are highlighted with a red rectangle. (B) Subjects' performance (mean and standard deviation) of actual clenching of the right and left hands at each speed. (C) Group time-frequency representation of EEG changes at electrodes C3 and C4 during imagery and movement of the right hand. Power changes relative to baseline are depicted as t statistics thresholded at p<0.001 (uncorrected for multiple comparisons). Black rectangles in each plot indicate the alpha and beta frequency bands of interest.

EEG and EMG Analysis

EEG recordings were band-pass filtered from 1 Hz to 30 Hz using a zero-phase FIR filter and segmented into epochs from −1 s to 2.75 s relative to the onset of the task cues. Each epoch was then baseline corrected and detrended. Epochs containing eye movements were identified visually and excluded from further analysis. The artifact-free signals were down-sampled to 200 Hz. EMG recordings were high-pass filtered at 20 Hz, rectified, and segmented along with the EEG signals. Actual clenches in movement trials were identified from the EMG bursts, and the clenching speed was defined as the number of clenches per unit time. For imagery trials, the EMG during the task period (from 0.3 s to 2 s) was compared with the EMG baseline activity (from −1 s to −0.1 s).
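A minimal SciPy sketch of the preprocessing chain described above, assuming continuous data arrays of shape (channels, samples) and cue onsets given as sample indices. The FIR length (1001 taps), the use of the pre-cue second as the baseline, the 4th-order Butterworth EMG filter and the function names are assumptions rather than details from the study; visual rejection of eye-movement epochs is not modeled.

```python
import numpy as np
from scipy import signal

FS_RAW = 1000  # Hz, acquisition rate given in the text
FS_OUT = 200   # Hz, rate after down-sampling

def preprocess_eeg(raw, onsets, fs=FS_RAW):
    """raw: (n_channels, n_samples) EEG; onsets: cue-onset sample indices.
    Returns epochs of shape (n_epochs, n_channels, n_times) sampled at FS_OUT."""
    # Zero-phase 1-30 Hz band-pass: linear-phase FIR applied forward and backward.
    taps = signal.firwin(1001, [1.0, 30.0], pass_zero=False, fs=fs)
    filtered = signal.filtfilt(taps, [1.0], raw, axis=-1)
    # Epochs from -1 s to 2.75 s around each cue onset.
    n_pre, n_post = int(1.0 * fs), int(2.75 * fs)
    epochs = np.stack([filtered[:, o - n_pre:o + n_post] for o in onsets])
    # Baseline correction with the pre-cue second (assumption), then linear detrend.
    epochs = epochs - epochs[:, :, :n_pre].mean(axis=-1, keepdims=True)
    epochs = signal.detrend(epochs, axis=-1)
    # Down-sample to 200 Hz; resample_poly applies its own anti-aliasing filter.
    return signal.resample_poly(epochs, up=1, down=int(fs // FS_OUT), axis=-1)

def preprocess_emg(raw_emg, fs=FS_RAW, order=4):
    """High-pass at 20 Hz (Butterworth, an assumption) and full-wave rectify."""
    sos = signal.butter(order, 20.0, btype="highpass", fs=fs, output="sos")
    return np.abs(signal.sosfiltfilt(sos, raw_emg, axis=-1))
```

Applying the FIR filter forward and backward with `filtfilt` is one standard way to obtain the zero-phase response the text describes.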

The time-frequency (TF) representations of single-trial EEG signals were calculated using complex Morlet wavelets (Tallon-Baudry et al 1997; Qin et al 2004). The EEG signal, x(t), was first convolved with the wavelets

$$\omega(t, f) = A \exp\left(-\frac{t^2}{2\sigma_t^2}\right) \exp(2i\pi f t),$$

with $A = (\sigma_t \pi^{1/2})^{-1/2}$, $\sigma_t = 1/(2\pi\sigma_f)$ and $\sigma_f = f/7$; that is, the trade-off ratio $f/\sigma_f$ was set to 7 to create the wavelet family. For each time t and frequency f, the power of the EEG signal, P(t, f), was the squared modulus of the convolution: $P(t, f) = |\omega(t, f) * x(t)|^2$. The normalized time-varying power of the EEG was computed for visualization. For each subject, it was expressed as a t statistic over trials by contrasting the power at each time-frequency pair during the task with the power at the same frequency before the task (from −150 ms to −50 ms), using an unpaired Student's t test. Group-average changes were calculated using a random-effects model across all subjects (one-sample Student's t test) and thresholded at p<0.001. The characteristic alpha and beta frequency bands and the time window of changes were identified from the group TF statistic plots.
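The wavelet transform above can be sketched in NumPy as follows. It implements the stated Morlet definition directly; truncating each wavelet at ±3.5 σt and using `np.convolve` with `mode="same"` are assumptions chosen for brevity, and `morlet_power` is a hypothetical helper name.

```python
import numpy as np

def morlet_power(x, fs, freqs, ratio=7.0, n_sigma=3.5):
    """Time-frequency power P(t, f) = |w(t, f) * x(t)|^2 of a 1-D signal.

    Complex Morlet wavelet w(t, f) = A exp(-t^2 / (2 sigma_t^2)) exp(2i pi f t),
    with sigma_f = f / ratio, sigma_t = 1 / (2 pi sigma_f), A = (sigma_t sqrt(pi))^(-1/2).
    Each wavelet is truncated at +/- n_sigma * sigma_t (assumption); x must be longer
    than the longest wavelet so that mode="same" returns len(x) samples.
    """
    x = np.asarray(x, dtype=float)
    power = np.empty((len(freqs), len(x)))
    for i, f in enumerate(freqs):
        sigma_f = f / ratio
        sigma_t = 1.0 / (2.0 * np.pi * sigma_f)
        t = np.arange(-n_sigma * sigma_t, n_sigma * sigma_t, 1.0 / fs)
        A = (sigma_t * np.sqrt(np.pi)) ** -0.5
        w = A * np.exp(-t**2 / (2.0 * sigma_t**2)) * np.exp(2j * np.pi * f * t)
        power[i] = np.abs(np.convolve(x, w, mode="same")) ** 2
    return power
```

With a 200 Hz signal and `freqs = np.arange(6, 31)`, the rows for 8 Hz – 12 Hz can be averaged to give an alpha-band power time course.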

The spectral change induced by movement and motor imagery was quantified by comparing the average wavelet power during task intervals with that of the pooled baseline intervals. The relative change was defined as the difference between task and baseline power divided by the baseline power. The topographies and magnitudes of the relative changes during movement and imagery were compared across clenching speeds. The time-varying power in the alpha and beta frequency bands was also extracted from the TF matrices and used for the encoding and decoding analyses described below.
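A short sketch of the relative-change measure and of extracting a band-limited power time course from a TF matrix of shape (frequencies, times). The helper names are hypothetical; the band limits passed in would be the alpha (8 Hz – 12 Hz) or beta (18 Hz – 28 Hz) windows identified from the group analysis.

```python
import numpy as np

def band_time_course(power, freqs, band):
    """Average a (n_freqs, n_times) TF power matrix over the rows inside band = (lo, hi) Hz."""
    freqs = np.asarray(freqs)
    rows = (freqs >= band[0]) & (freqs <= band[1])
    return power[rows].mean(axis=0)

def relative_change(task_power, baseline_power):
    """(task - baseline) / baseline; negative values indicate a power decrease (ERD)."""
    task_power = np.asarray(task_power, dtype=float)
    return (task_power - baseline_power) / baseline_power
```

For example, a task power of 0.6 against a baseline of 1.0 gives a relative change of −0.4, i.e. a 40% ERD.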

Encoding Model

For each channel over the scalp, different levels of EEG activity in the alpha and beta frequency bands were observed during left and right hand tasks and across different clenching speeds. A linear model was used to find the relationship between the EEG power and the variables of the speed and hand (left vs. right) for each channel using the following equation:

$$y_i = b_0 + b_1 v + b_2 \lambda \tag{1}$$

where y is the EEG observation in a frequency band at the ith channel, v is the speed variable, λ is either 1 or −1 (indicating the left or right hand, respectively), b0 is the grand mean of EEG activity across both hands and all speeds, and b1 and b2 are the weighting coefficients for the speed and hand, respectively. Multiple linear regression was used to estimate the constants b0, b1 and b2, with each trial treated as an independent observation. Epochs of both hands across all seven speeds were used to estimate the model coefficients. To characterize how the model fit varied over time, the EEG power was averaged separately within the intervals 200 ms – 500 ms, 500 ms – 1000 ms, 1000 ms – 1500 ms and 1500 ms – 2000 ms. Separate regression coefficients were estimated for imagery and movement.
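A minimal per-channel sketch of fitting Eq. (1) by ordinary least squares, returning the coefficients, R² and a p value. Using the overall F test of the regression for the p value is an assumption about how model significance was assessed, and `fit_encoding_model` is a hypothetical name.

```python
import numpy as np
from scipy import stats

def fit_encoding_model(y, speed, hand):
    """OLS fit of y = b0 + b1*v + b2*lambda for one channel and one frequency band.

    y: per-trial EEG power; speed: v (Hz); hand: lambda (+1 left, -1 right).
    Returns (b0, b1, b2), R^2 and the p value of the overall F test.
    """
    y = np.asarray(y, dtype=float)
    X = np.column_stack([np.ones_like(y), speed, hand])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    residuals = y - X @ coef
    r2 = 1.0 - residuals @ residuals / np.sum((y - y.mean()) ** 2)
    n, k = len(y), 2  # two regressors besides the intercept
    f_stat = (r2 / k) / ((1.0 - r2) / (n - k - 1))
    p_value = stats.f.sf(f_stat, k, n - k - 1)
    return coef, r2, p_value
```

Fitting each channel and each time interval separately, then mapping R² over the scalp, would give topographies of the kind shown in Fig. 4.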

Decoding Model

In the above encoding model, hand and clenching speed were used as variables to explain the EEG activity observed at each channel. In the subsequent analysis, we aimed at decoding the hand and speed from EEG observations in multiple frequency bands at multiple channels. In order to continuously decode the variables of hand (d[t]) and speed (u[t]), a linear decoding model was used:

$$d[t] = \sum_{n=1}^{N} k_n^{\alpha} P_n^{\alpha}[t] + \sum_{n=1}^{M} k_n^{\beta} P_n^{\beta}[t] \tag{2}$$

$$u[t] = \sum_{n=1}^{N} l_n^{\alpha} P_n^{\alpha}[t] + \sum_{n=1}^{M} l_n^{\beta} P_n^{\beta}[t] \tag{3}$$

where $P_n^{\alpha}[t]$ and $P_n^{\beta}[t]$ are the EEG power measured at the nth electrode at time sample t in the alpha and beta frequency bands, respectively, N and M are the total numbers of channels used for the alpha and beta bands, respectively, and the k and l variables are constant coefficients derived from multiple linear regression on the training data. Laplacian spatial filtering was applied to the artifact-free EEG signals to reduce the volume-conduction effect and thus enhance decoding performance (Wang et al 2004). The Laplacian-filtered EEG signals were then subjected to the wavelet calculation. The instantaneous EEG power extracted from the time-frequency representations was used as input to the decoding model; this power could also be estimated with a short-time windowed Fourier transform. Channels that were significantly fitted by the linear encoding model (p<0.01) were selected for decoding. Note that the hand and speed information was estimated from the same EEG observations using different sets of weighting coefficients. The final output of the decoding was:

$$o[t] = \operatorname{sign}(d[t])\, u[t] \tag{4}$$

The output signals were smoothed using a low-pass filter with the stop band at 1 Hz.
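A minimal sketch of the decoder: one least-squares weight vector per output (one set for the hand, one for the speed) applied to the same band-power features, the combination of the hand sign with the speed magnitude, and low-pass smoothing. The intercept column, the 4th-order Butterworth design with a 1 Hz cutoff and the offline zero-phase filtering are assumptions; an online BCI would need a causal filter instead. All function names are hypothetical.

```python
import numpy as np
from scipy import signal

def _design(features):
    # Append a constant column (assumption; the decoding equations list only channel terms).
    return np.column_stack([features, np.ones(len(features))])

def fit_weights(features, target):
    """Least-squares weights mapping band power to one decoded variable.
    features: (n_samples, N + M) alpha- then beta-band power of the selected channels."""
    weights, *_ = np.linalg.lstsq(_design(features), target, rcond=None)
    return weights

def decode(features, k_hand, l_speed):
    """Return d[t] (hand) and u[t] (speed) from the same features with different weights."""
    X = _design(features)
    return X @ k_hand, X @ l_speed

def final_output(d, u, fs=200.0, cutoff_hz=1.0, order=4):
    """sign(d[t]) * u[t], smoothed by a zero-phase Butterworth low-pass filter."""
    sos = signal.butter(order, cutoff_hz, btype="lowpass", fs=fs, output="sos")
    return signal.sosfiltfilt(sos, np.sign(d) * u)
```

The sign of d[t] carries the 1D direction (left vs. right) and u[t] the continuous speed, so the two outputs can be fitted and used independently.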

Each trial was assigned a single speed value, because subjects maintained the same clenching speed throughout each task period. However, to reconstruct a continuous time course of the speed variable that can be transformed directly into instantaneous BCI control, we used a bell-shaped speed profile (shown in Fig. 5A) as the reference time course for training. The simulated speed profile spans the duration of the task period, and its height was scaled by the speed value. Ten-fold cross-validation was applied to assess decoding performance. All EEG trials recorded during imagination of left- and right-hand clenches were randomly divided into ten groups. Nine groups were pooled to train the decoding model, which was then applied to the remaining group for testing. This procedure was repeated ten times so that each group served once as the test group. The temporal properties of the decoding results were assessed by calculating the correlation coefficient (CC) between the reference time courses and the outputs for the test data sets across all folds. In addition, classification of the hand (left vs. right) was evaluated in terms of accuracy: the hand output d[t] was integrated from 0.5 s to 1.9 s, and its sign was compared with the expected hand value (1 or −1). The percentage of correct hand values was calculated across all folds.
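The cross-validation procedure above can be sketched as follows. The bell shape is modeled here as a Hann window scaled by the trial's speed, which is an assumption since the exact profile is only shown graphically; the intercept column, the time axis starting at task onset and all names are likewise assumptions, and output smoothing is omitted for brevity.

```python
import numpy as np

FS = 200.0  # Hz, sampling rate after down-sampling

def bell_profile(n_times, speed):
    # Hypothetical bell shape: a Hann window spanning the task period, scaled by speed.
    return speed * np.hanning(n_times)

def _fit(X, y):
    X1 = np.column_stack([X, np.ones(len(X))])  # intercept column (assumption)
    return np.linalg.lstsq(X1, y, rcond=None)[0]

def _apply(X, w):
    return np.column_stack([X, np.ones(len(X))]) @ w

def cross_validate(features, hands, speeds, n_folds=10, seed=0):
    """Ten-fold CV of a linear hand/speed decoder.

    features: (n_trials, n_times, n_features) band power over the task period,
              with sample 0 at task onset (assumption).
    hands: +1 (left) or -1 (right) per trial; speeds: clenching speed per trial.
    Returns (CC between decoded hand*speed and reference, hand accuracy).
    """
    n_trials, n_times, n_feat = features.shape
    t = np.arange(n_times) / FS
    window = (t >= 0.5) & (t <= 1.9)  # integration window for the hand decision
    ref_u = np.stack([bell_profile(n_times, s) for s in speeds])
    ref_d = np.repeat(np.asarray(hands, dtype=float)[:, None], n_times, axis=1)
    folds = np.array_split(np.random.default_rng(seed).permutation(n_trials), n_folds)
    outputs, references, correct = [], [], []
    for test in folds:
        train = np.setdiff1d(np.arange(n_trials), test)
        X_train = features[train].reshape(-1, n_feat)  # trial-major, matches ravel below
        w_d = _fit(X_train, ref_d[train].ravel())  # hand weights
        w_u = _fit(X_train, ref_u[train].ravel())  # speed weights
        for i in test:
            d = _apply(features[i], w_d)
            u = _apply(features[i], w_u)
            outputs.append(np.sign(d) * u)
            references.append(hands[i] * ref_u[i])
            correct.append(np.sign(d[window].sum()) == hands[i])
    cc = np.corrcoef(np.concatenate(outputs), np.concatenate(references))[0, 1]
    return cc, float(np.mean(correct))
```

Summing d[t] over the 0.5 s – 1.9 s window is a discrete stand-in for the integration described in the text; only its sign matters for the hand decision.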

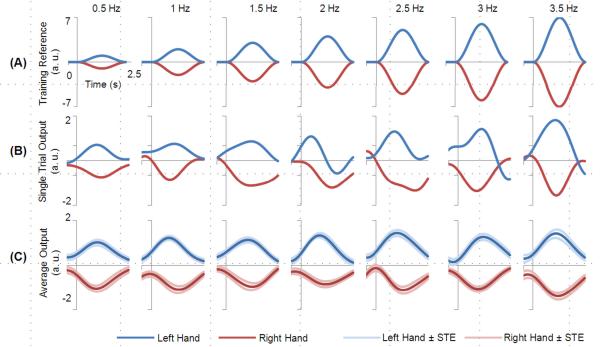

Figure 5.

Decoding of the hand and speed from a typical subject. Blue and red curves indicate imagination of the left and right hand, respectively. (A) The computer-generated reference time course used for training. (B) Single-trial traces of decoded outputs for all speeds. (C) Averaged outputs for the test data set, grouped by speed and hand. Lighter red and blue curves indicate the standard errors (STE).

Results

EEG Changes in Alpha and Beta Bands at Different Speeds

The subjects' performance in the movement task is shown in Fig. 1(B). The executed clenching speeds corresponded well to the designated speeds. For all subjects, no significant EMG activity was detected during motor imagery (all p>0.05, paired Student's t test).

The group time-frequency representations of task-induced changes are presented in Fig. 1(C). A characteristic decrease in power (ERD) accompanying both imagery and movement was found in the alpha band (8 Hz – 12 Hz) and beta band (18 Hz – 28 Hz) from 0.2 s to 2 s.

Fig. 2(A) and Fig. 3(A) show topographies of the average EEG changes across subjects in the alpha and beta frequency bands, respectively, using the time and frequency windows obtained from the group analysis. The relative changes were focused at the electrodes in the central region over the motor areas, highlighted in Fig. 1(A). As speed increased, a larger decrease in EEG activity was observed. A contralaterally dominant decrease was present at all speeds for both imagery and movement conditions, while ipsilateral ERD was more prominent in the movement conditions. Some increase was also found over the posterior area, but not at all speeds.

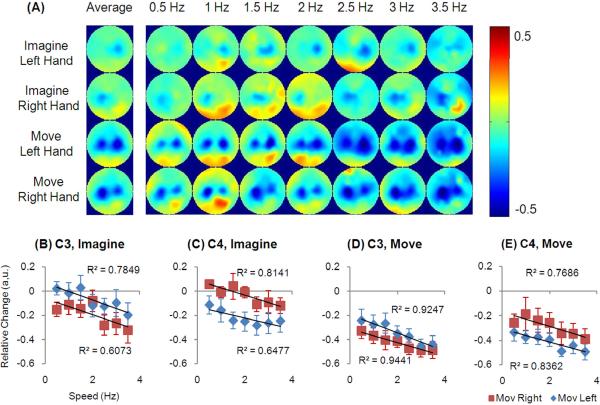

Figure 2.

Relative EEG change in the alpha frequency band. (A) Topographies for each speed and their average. (B–E) Changes at electrodes C3 and C4 during imagery and movement.

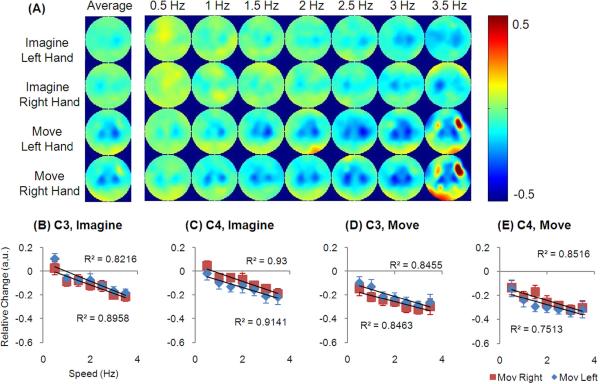

Figure 3.

Relative EEG change in the beta frequency band. (A) Topographies for each speed and their average. (B–E) Changes at electrodes C3 and C4 during imagery and movement.

Fig. 2(B–E) and Fig. 3(B–E) show the EEG changes in the alpha and beta frequency bands at the C3 and C4 channels, where the strongest decrease was found. The decrease averaged across subjects is plotted for all speeds. For both imagery and movement, there was a negative linear relationship between speed and EEG change (all p<0.05). This negative linearity held at both C3 and C4, whether the channel was contralateral or ipsilateral to the side of the movement. Combining the contralateral and ipsilateral conditions (i.e. the right- and left-hand tasks) at each electrode yielded a component representing non-hand-related activity, which was also linearly correlated with speed (data not plotted, all p<0.05). When this non-hand-related component was subtracted from the EEG activity, the remaining component showed no correlation with speed (all p>0.05) but retained a constant offset at each electrode.

Contralateral electrodes displayed a larger decrease than ipsilateral electrodes (p<1e-16 and p<1e-7 for alpha and beta activity, respectively, paired t test). In particular, the hemispheric difference was larger in the alpha band than in the beta band (p<1e-6, paired t test). However, the difference between the C3 and C4 channels was not linearly correlated with speed during either imagery or movement (all p>0.05).

Encoding of Hand and Speed in EEG Alpha and Beta Activities

A linear model was developed to explain the EEG activity observed at each channel, with hand and speed as two variable factors. For each channel, the EEG changes across speeds approximated a straight line (Fig. 2 and Fig. 3). Furthermore, the speed trends for the left and right hands were nearly parallel for the same channel, suggesting that the effects of hand and speed were independent of one another. Therefore, hand and speed were included in the model as two additive factors.

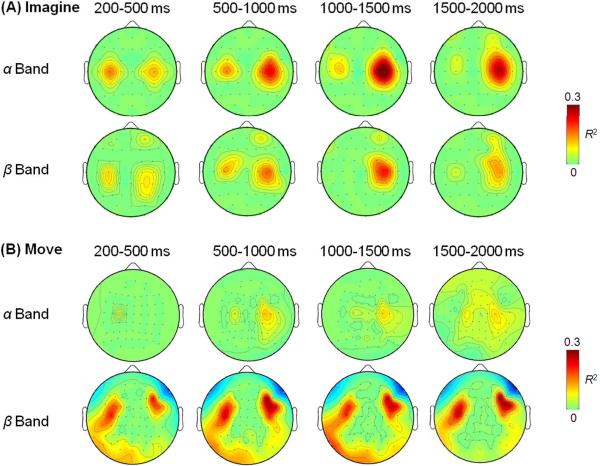

Dynamic EEG activity from the intervals 200 ms – 500 ms, 500 ms – 1000 ms, 1000 ms – 1500 ms and 1500 ms – 2000 ms was fitted to the linear model in Eq. (1). R² was estimated for each channel, and the topographies from representative subjects are plotted in Fig. 4 (thresholded at p<0.001). EEG activity centered at the C3 and C4 electrodes was best fitted by the model. The observed pattern was similar between the imagery (Fig. 4A) and movement (Fig. 4B) conditions. Across the four intervals, R² values peaked in the middle of the task period, while the topography maintained its spatial pattern.

Figure 4.

Time-varying topographies of R² in the linear model (Eq. 1) relating the hand and speed to EEG activity in the alpha and beta frequency bands during imagery (A) and movement (B). All maps were thresholded at p<0.001 (uncorrected for multiple comparisons).

The maximum R² value of each subject and the corresponding channel are listed in Table 1 and Table 2 for imagery and movement, respectively. A paired t test was applied to the R² values from alpha and beta activity to determine whether they were drawn from populations with different means. EEG activity in the alpha and beta bands fitted the linear model equally well (p>0.05 for both imagery and movement). However, the channels yielding the maximum R² could differ between the two bands, as shown in Tables 1 and 2.

Table 1.

Maximum R² of model fitting using Eq. (1), with the associated p value and channel, for EEG activity in the alpha and beta bands during motor imagery.

| Subject No. | α Max R² | α p value | α Channel | β Max R² | β p value | β Channel |
|---|---|---|---|---|---|---|
| 1 | 0.14 | 0 | C3 | 0.23 | 0 | FC4 |
| 2 | 0.02 | 0.005 | CP3 | 0.03 | 0.0002 | C5 |
| 3 | 0.38 | 0 | C4 | 0.28 | 0 | C4 |
| 4 | 0.12 | 0 | C2 | 0.06 | 0 | C6 |
| 5 | 0.05 | 0.0004 | C3 | 0.09 | 0 | Cz |
| 6 | 0.02 | 0.003 | CP6 | 0.13 | 0 | C3 |
| 7 | 0.11 | 0 | CP6 | 0.24 | 0 | CP6 |
| 8 | 0.15 | 0 | C4 | 0.04 | 0.0002 | CP4 |
| 9 | 0.08 | 0 | C4 | 0.16 | 0 | CP3 |
| 10 | 0.02 | 0.003 | CP2 | 0.03 | 0.0001 | FCz |
| Average | 0.11±0.11 | -- | -- | 0.13±0.09 | -- | -- |

Table 2.

Maximum R² of model fitting using Eq. (1), with the associated p value and channel, for EEG activity in the alpha and beta bands during movement.

| Subject No. | α Max R² | α p value | α Channel | β Max R² | β p value | β Channel |
|---|---|---|---|---|---|---|
| 1 | 0.15 | 0 | CP4 | 0.29 | 0 | C3 |
| 2 | 0.12 | 0 | C4 | 0.26 | 0 | FC6 |
| 3 | 0.15 | 0 | C4 | 0.17 | 0 | CP3 |
| 4 | 0.08 | 1e-13 | Cz | 0.08 | 1e-13 | C2 |
| 5 | 0.13 | 0 | C4 | 0.08 | 0 | C3 |
| 6 | 0.09 | 1e-12 | CP4 | 0.11 | 0 | C4 |
| 7 | 0.09 | 0 | CP6 | 0.26 | 0 | CP6 |
| 8 | 0.11 | 0 | CP6 | 0.05 | 0.0001 | C6 |
| 9 | 0.12 | 0 | C3 | 0.06 | 1e-8 | C3 |
| 10 | 0.04 | 1e-5 | C3 | 0.06 | 0 | C1 |
| Average | 0.11±0.03 | -- | -- | 0.14±0.10 | -- | -- |
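The paired comparison of alpha- and beta-band R² can be re-run on the per-subject maxima in Tables 1 and 2 with SciPy's paired t test. This is only an approximate check: the tabulated values are rounded to two decimals, so the resulting statistics will not exactly match the analysis reported in the text.

```python
from scipy import stats

# Maximum R^2 per subject, transcribed from Tables 1 and 2 (rounded to two decimals).
TABLES = {
    "imagery": {
        "alpha": [0.14, 0.02, 0.38, 0.12, 0.05, 0.02, 0.11, 0.15, 0.08, 0.02],
        "beta": [0.23, 0.03, 0.28, 0.06, 0.09, 0.13, 0.24, 0.04, 0.16, 0.03],
    },
    "movement": {
        "alpha": [0.15, 0.12, 0.15, 0.08, 0.13, 0.09, 0.09, 0.11, 0.12, 0.04],
        "beta": [0.29, 0.26, 0.17, 0.08, 0.08, 0.11, 0.26, 0.05, 0.06, 0.06],
    },
}

p_values = {}
for condition, bands in TABLES.items():
    result = stats.ttest_rel(bands["alpha"], bands["beta"])  # paired across the ten subjects
    p_values[condition] = result.pvalue
    print(f"{condition}: t = {result.statistic:.2f}, p = {result.pvalue:.2f}")
```

Both conditions give p well above 0.05, consistent with the statement that alpha and beta activity fitted the model equally well.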

Decoding of Hand and Speed from EEG Alpha and Beta Activities

The hand and speed variables were estimated instantaneously at each sample time t using Eqs. (2) and (3). The final outputs were combined in Eq. (4), which can be applied directly to prosthetic effectors with one-dimensional (1D) direction control, given by sign(d[t]), and independent continuous control of speed, given by u[t].

The decoded traces from a representative subject (#4) are shown in Fig. 5. The rise and fall of the speed profile were evident in the single-trial traces in Fig. 5(B) and agreed well with the training reference time courses shown in Fig. 5(A). The single-trial decoded results from the test data sets were averaged separately according to their true hand and speed values and plotted in Fig. 5(C). In the averaged results, the hand value was well maintained during most of the task interval, and the absolute peak value increased gradually with speed.

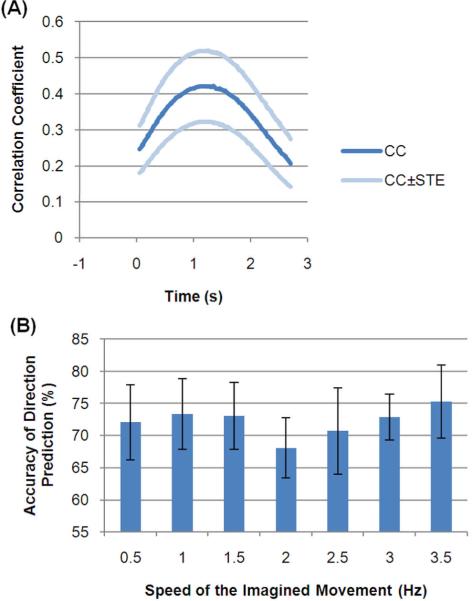

The CCs between the decoded outputs (hand × speed) and the reference time courses are listed in Table 3 (for all CC, p=0). The CC was also calculated at each time sample and plotted in Fig. 6(A); it peaked in the middle of the task interval (at about 1 s). The accuracy of hand prediction is also presented in Table 3. The CC and accuracy averaged across subjects were 0.32±0.21 and 74±15%, respectively. The accuracy of hand prediction was further calculated separately for each speed (Fig. 6B): the highest accuracy was associated with the highest speed (3.5 Hz), and the lowest accuracy with the middle speed (2 Hz).

Table 3.

Decoding results for all subjects. CC of Speed Prediction: correlation coefficient between the desired output and the decoded results. Accuracy of Hand Prediction: percentage of correct decoding of the hand (left vs. right) by integrating the hand output from 0.5 s to 1.9 s. See Methods for details.

| No. | CC of Speed Prediction | Accuracy of Hand Prediction (%) |
|---|---|---|
| 1 | 0.55 | 92 |
| 2 | 0.11 | 57 |
| 3 | 0.57 | 93 |
| 4 | 0.58 | 91 |
| 5 | 0.31 | 77 |
| 6 | 0.06 | 55 |
| 7 | 0.17 | 60 |
| 8 | 0.46 | 80 |
| 9 | 0.30 | 73 |
| 10 | 0.07 | 57 |
| Average | 0.32±0.21 | 74±15 |
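A quick arithmetic check of the Average row in Table 3, assuming that ± denotes the sample standard deviation across the ten subjects (the text does not state this explicitly):

```python
import numpy as np

# Per-subject values transcribed from Table 3.
cc = np.array([0.55, 0.11, 0.57, 0.58, 0.31, 0.06, 0.17, 0.46, 0.30, 0.07])
accuracy = np.array([92, 57, 93, 91, 77, 55, 60, 80, 73, 57])

# ddof=1 gives the sample standard deviation across subjects.
summary = {name: (v.mean(), v.std(ddof=1)) for name, v in (("CC", cc), ("accuracy", accuracy))}
for name, (mean, sd) in summary.items():
    print(f"{name}: {mean:.2f} ± {sd:.2f}")
```

Rounded, these reproduce the tabulated 0.32±0.21 and 74±15%, which supports the sample-SD reading of the ± values.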

Figure 6.

(A) The time-varying correlation coefficient between the expected output and the decoded results. (B) The percentage of correct predictions of the hand at all speeds. STE: standard error.

Discussion

In the present study, we investigated the EEG activity associated with imagined and executed hand clenching at various speeds. Our results demonstrated, for the first time, parametric modulation of alpha- and beta-band EEG activity by clenching speed for both imagery and movement. Furthermore, a single linear equation was developed to model the relationship between EEG activity and the factors of hand and speed. Based on this linear model, we demonstrated the feasibility of continuously decoding the hand and speed parameters of motor imagery from multichannel EEG activity using linear regression.

Encoding of the Hand and Speed

Previous studies have revealed how the primary motor cortex controls hand movement during reaching or drawing tasks. Kinematic parameters of hand position and velocity (including direction and speed) were found to be represented in neural recordings obtained from electrodes invasively implanted in nonhuman primates (Fu et al 1995, Georgopoulos et al 1982, Kettner et al 1988, Moran and Schwartz 1999, Schwartz 1994, Heldman et al 2006). Recently, directional tuning of single-neuron discharges was also demonstrated in a tetraplegic patient who imagined reaching movements of the hand (Hochberg et al 2006, Truccolo et al 2008). Meanwhile, noninvasive EEG signals recorded from the scalp have been used to recover the body part involved in imagined movements in human subjects, but without any kinematic information (Wang et al 2004, Pfurtscheller et al 2006). Our study investigated whether a kinematic parameter, the clenching speed, can be represented in human EEG recordings during actual movement and motor imagery. Here, the clenching speed was defined as the number of clenches per unit time. It is worth noting that clenching speed is a different kinematic description from displacement speed, i.e. the distance traveled per unit time, such as hand reaching speed. Our results demonstrate that the clenching speed and the hand are simultaneously embedded in the multichannel EEG modulations associated with imagery and movement.

Regarding speed encoding, our results showed that clenching speed was linearly correlated with EEG activity in the alpha and beta frequency bands, suggesting that speed acts as a gain factor. The combined EEG activity of the left-hand and right-hand conditions was also linearly correlated with speed, yielding a non-hand-related speed factor. Meanwhile, the linear trends for the two hands were parallel to each other. When we subtracted the non-hand-related speed factor from the EEG activity, the remaining activity for the left and right hands was not correlated with speed and differed by a constant offset, the non-speed-related hand factor. Therefore, hand and speed were two independent factors of the EEG activity for imagined and executed clenching. Accordingly, we developed a single equation (Eq. 1) relating the EEG to the two factors, in which the hand acts as an offset and the speed as a gain factor.
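Under the additive model of Eq. (1), this decomposition follows directly. A short worked derivation for one channel, writing the model as a function of speed v and hand λ and taking the average of the left- and right-hand conditions as the non-hand-related component (a sum differs only by a factor of 2):

$$
\begin{aligned}
y(v, \lambda) &= b_0 + b_1 v + b_2 \lambda, \qquad \lambda \in \{+1, -1\} \\
\tfrac{1}{2}\left[\, y(v, +1) + y(v, -1) \,\right] &= b_0 + b_1 v \\
y(v, \lambda) - \tfrac{1}{2}\left[\, y(v, +1) + y(v, -1) \,\right] &= b_2 \lambda
\end{aligned}
$$

The second line is linear in v and independent of the hand; the third is independent of v and differs between the hands only by the constant offset ±b2, matching the speed-uncorrelated, offset-only residual described in the paragraph above.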

Our findings on speed encoding are consistent with previous studies of the relation between velocity and cortical recordings during hand reaching. Moran and Schwartz (1999) found that reaching speed in a center-out task acted as a continuous gain factor on the firing rate of single cells. Their results showed that reaching speed was tightly correlated with the part of the neural discharge that was not related to any specific direction. Additionally, an interaction component of speed and direction was also represented in the neuronal discharge rate, in which the speed effect was multiplicative on the directional tuning. Although the type of hand movement differed in our study, our results suggest that speed can be a common gain factor in cortical recordings at both the microscale and macroscale levels across various hand tasks, including motor imagery.

The speed effect of hand clenching also relates to a large body of functional imaging studies that investigated the relationship between hemodynamic responses and movement speed using functional magnetic resonance imaging (fMRI). These studies have shown a positive correlation between the change in hemodynamic signal and the task demand associated with different movement rates (Rao et al 1996). Our EEG results are consistent with these findings, given that EEG activity in the alpha and/or beta frequency bands has been reported to be inversely coupled to hemodynamic signals (Goldman et al 2002, Moosmann et al 2003, Mukamel et al 2005). However, the speed effect on the hemodynamic signal was usually observed over intervals of more than ten seconds, whereas our results showed a sustained speed effect on the EEG within two seconds.

Spatial Organization of Encoding

Among all sensors over the scalp, contralateral and ipsilateral electrodes over the sensorimotor cortex gave the best fits to the encoding model of hand and speed. Previous studies have mainly focused on the contralateral hemisphere, largely for the practical reason that recording electrodes were implanted only in that hemisphere. A particularly interesting result of our study was that the encoding of speed information was well maintained in both the contralateral and ipsilateral hemispheres for either left-hand or right-hand movements, suggesting bilateral involvement in the control of speed for imagined and executed movement.

While the speed and hand information was represented simultaneously in the two hemispheres, it also showed lateral dominance. Although both hemispheres showed a similar linear trend with speed, the contralateral side showed a stronger decrease than the ipsilateral side. Interestingly, the difference between the two hemispheres was not correlated with speed. In EEG-based BCI studies, the hemispheric difference is commonly used as a control signal to achieve one-dimensional control by classifying between imagery of the left and right hand (Wolpaw and McFarland 1994, Wolpaw and McFarland 2004, Yuan et al 2008). However, our results indicate that such subtraction can eliminate the speed information. Thus, with the traditional approach to 1D control, hand and speed information may not be simultaneously available.
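A brief worked example shows why a hemispheric difference can discard speed. It assumes that each of C3 and C4 follows Eq. (1) with its own coefficients; the channel superscripts are illustrative notation:

$$
y_{\mathrm{C3}} - y_{\mathrm{C4}} = \left(b_0^{\mathrm{C3}} - b_0^{\mathrm{C4}}\right) + \left(b_1^{\mathrm{C3}} - b_1^{\mathrm{C4}}\right) v + \left(b_2^{\mathrm{C3}} - b_2^{\mathrm{C4}}\right) \lambda
$$

When the two hemispheres share a similar speed slope, $b_1^{\mathrm{C3}} \approx b_1^{\mathrm{C4}}$, the middle term vanishes and the difference carries mainly the hand term, consistent with the finding that the C3 − C4 difference was not correlated with speed.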

Both the maps of EEG modulation (particularly for actual movement) and those of the regression coefficients showed two segregated areas on the contralateral and ipsilateral sides. Such regionally focused responses in both hemispheres are unlikely to be due to volume conduction from one side. Furthermore, source analysis studies (Qin and He 2004; Kamousi et al 2005, 2007; Yuan et al 2008) and a combined EEG and fMRI study (Yuan et al 2009) have shown that cortical activity in the corresponding contralateral and ipsilateral areas of the sensorimotor cortex underlies these EEG modulations in the two hemispheres. Although the EEG modulations are dominated by sources in the corresponding hemisphere, Laplacian filtering can accentuate localized activity and reduce diffusion in multichannel EEG (He 1999; Wang et al 2004). We therefore applied Laplacian filtering in the decoding analysis to enhance decoding performance.
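A nearest-neighbour ("small") Laplacian is one common approximation of the surface Laplacian: each channel minus the mean of its immediate neighbours, which cancels activity shared across the neighbourhood. The sketch below assumes that variant and a hypothetical neighbour map for C3 and C4 (standard 10-10 neighbours); it is not necessarily the exact Laplacian montage used in the study.

```python
import numpy as np

# Hypothetical nearest-neighbour map using standard 10-10 electrode neighbours.
NEIGHBOURS = {
    "C3": ["FC3", "C5", "C1", "CP3"],
    "C4": ["FC4", "C2", "C6", "CP4"],
}

def small_laplacian(eeg, channels, neighbours=NEIGHBOURS):
    """eeg: (n_channels, n_samples); channels: names in row order.
    Returns {centre: centre signal minus the mean of its neighbours}."""
    index = {name: i for i, name in enumerate(channels)}
    out = {}
    for centre, nbrs in neighbours.items():
        nbr_mean = eeg[[index[n] for n in nbrs]].mean(axis=0)
        out[centre] = eeg[index[centre]] - nbr_mean
    return out
```

A signal common to a centre electrode and all its neighbours, such as widely spread volume-conducted activity, cancels in the output, while activity local to the centre electrode is kept.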

Application to Brain-Computer Interfaces

Motor imagery is widely used in noninvasive BCI studies as a mental strategy to provide one-dimensional or multi-dimensional control. Previous studies have focused on classifying the types of imagery associated with different body parts (using linear or nonlinear approaches) and converting the classification output into control of a prosthetic effector, e.g. a computer cursor (Pfurtscheller et al 2006, Wolpaw and McFarland 2004). Until recently, however, few studies attempted to recover kinematic parameters from noninvasive EEG or MEG recordings. Some groups have decoded the direction of actual reaching movements in center-out hand tasks (Bradberry et al 2009, Waldert et al 2008). Our results demonstrate, for the first time, that the speed of imagined hand movement can be decoded continuously together with hand information from EEG signals. Although a single sensor encoded both hand and speed information simultaneously, the two parameters were derived independently from multiple channels using linear regression. The hand and speed traces reconstructed from single-trial data can be applied directly to instantaneous control of the 1D direction and the continuous speed of a prosthetic device. Speed decoding adds a further degree of control to direction: it can allow much finer control of point-to-point movement or serve as an independent degree of control. Implementing such an online BCI system is a future direction of our study. The participants in the current study had very little BCI training experience (less than two hours) before the data were collected, yet the intended hand and speed of clenching imagery were reliably reconstructed. More training in a closed-loop setup is expected to reduce performance variance and improve control of the effectors (Wolpaw et al 2004).
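A minimal sketch of decoding both parameters at once with ordinary least squares, assuming a linear map from multichannel band-power features to the two targets [hand, speed]. The function names, the three-channel encoding matrix `A` and the noise level are hypothetical; the point is only that one regression can separate two parameters even though every channel mixes them.

```python
import numpy as np


def fit_linear_decoder(features, targets):
    """Least-squares weights mapping features (n, n_features) to targets (n, 2)."""
    X = np.column_stack([np.ones(len(features)), features])
    W, *_ = np.linalg.lstsq(X, targets, rcond=None)
    return W  # shape (n_features + 1, 2); first row is the intercept


def decode(features, W):
    X = np.column_stack([np.ones(len(features)), features])
    return X @ W  # column 0: hand estimate, column 1: speed estimate


rng = np.random.default_rng(1)
n = 200
hand = rng.choice([-1.0, 1.0], n)          # assumed coding: -1 left, +1 right
speed = rng.uniform(0.5, 2.0, n)
targets = np.column_stack([hand, speed])

# Each hypothetical channel mixes both parameters: power = 10 + a * speed + b * hand.
A = np.array([[-2.0, -0.8],   # e.g. left-hemisphere site
              [-2.0,  0.8],   # e.g. right-hemisphere site
              [-1.0,  0.0]])  # e.g. a midline site
features = 10.0 + np.column_stack([speed, hand]) @ A.T
features += rng.normal(0.0, 0.01, features.shape)

W = fit_linear_decoder(features[:150], targets[:150])  # train on the first 150 samples
pred = decode(features[150:], W)                        # decode held-out samples
hand_accuracy = np.mean(np.sign(pred[:, 0]) == hand[150:])
speed_r = np.corrcoef(pred[:, 1], speed[150:])[0, 1]
```

Taking the sign of the hand estimate yields a left/right decision, while the speed estimate stays continuous; with real EEG the noise is far larger than in this toy example.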

Furthermore, we were able to recover a bell-shaped temporal speed profile for imagined hand movement, approximating the speed profile of a natural hand-reaching movement. Behavioral studies have shown that the speed profile is bell-shaped across a wide range of hand tasks (Soechting and Lacquaniti 1981). A bell-shaped profile is well suited to smooth control and was shown to be efficient in restoring natural feeding movements in nonhuman primates (Velliste et al 2008). Although each imagined clenching trial in our study was assigned a single speed value, we used a bell-shaped speed profile as the training signal in order to reconstruct a continuous time course of the speed variable that can be transformed directly into instantaneous BCI control. The decoded traces approximated the bell-shaped profile and correlated well with the training profiles while maintaining the accuracy of hand prediction. This suggests the feasibility of linking our decoding results to prosthetic effectors, e.g. a robotic arm, for restoring bimanual function in movement-impaired paralytics.
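The training signal can be sketched as follows. The text states only that the profile is bell-shaped; the Gaussian form, its width and the helper name `bell_profile` are assumptions (a minimum-jerk velocity profile would be an equally plausible choice). Each trial's designated speed scales the peak, and the Pearson correlation is one way to quantify how well a decoded trace matches its training profile. The "decoded" trace below is synthetic.

```python
import numpy as np


def bell_profile(peak_speed, n_samples, width=0.15):
    """Gaussian-shaped speed profile centred in the trial, peaking at peak_speed.

    width: standard deviation as a fraction of trial length (assumed value).
    """
    s = np.linspace(0.0, 1.0, n_samples)
    return peak_speed * np.exp(-0.5 * ((s - 0.5) / width) ** 2)


# Training target for one hypothetical trial whose designated speed is 1.5
target = bell_profile(1.5, 101)

# Stand-in for a decoded trace: the target plus noise (synthetic, for illustration)
rng = np.random.default_rng(2)
decoded = target + rng.normal(0.0, 0.05, target.shape)

# Agreement between decoded trace and training profile (Pearson correlation)
r = np.corrcoef(decoded, target)[0, 1]
```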

In the current study, the time-varying power of the EEG signals was derived by wavelet analysis and used for regression analysis. Because imagery-induced modulation usually occurs in narrow frequency bands and the spectral modulation also shows temporal dynamics (Pfurtscheller et al 2006), we used wavelet analysis to extract the time-varying power; it is thought to provide a better compromise between time and frequency resolution than the short-time Fourier transform (Tallon-Baudry et al 1997). Fourier-based time-frequency analysis should nonetheless also be suitable for decoding and may be more appropriate for a practical, real-time BCI implementation.
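For illustration, time-varying power can be obtained by convolving the signal with complex Morlet wavelets and taking the squared magnitude. The ratio f/σf = 7 follows the convention of Tallon-Baudry et al (1997) and is an assumption here, as are the sampling rate, the helper name `morlet_power` and the test signal; the signal must be longer than the longest wavelet. Averaging rows gives band-limited power in the alpha (8–12 Hz) and beta (18–28 Hz) ranges.

```python
import numpy as np


def morlet_power(x, fs, freqs, ratio=7.0):
    """Time-frequency power of a 1-D signal via complex Morlet wavelets.

    ratio = f / sigma_f sets the trade-off between time and frequency resolution.
    Returns an array of shape (len(freqs), len(x)).
    """
    power = np.empty((len(freqs), len(x)))
    for i, f in enumerate(freqs):
        sigma_f = f / ratio
        sigma_t = 1.0 / (2.0 * np.pi * sigma_f)
        t = np.arange(-3.5 * sigma_t, 3.5 * sigma_t, 1.0 / fs)
        amp = (sigma_t * np.sqrt(np.pi)) ** -0.5  # unit energy in continuous time
        wavelet = amp * np.exp(-t ** 2 / (2.0 * sigma_t ** 2)) * np.exp(2j * np.pi * f * t)
        power[i] = np.abs(np.convolve(x, wavelet, mode="same")) ** 2
    return power


fs = 250.0
t = np.arange(0.0, 2.0, 1.0 / fs)
x = np.sin(2 * np.pi * 10 * t)   # synthetic 10 Hz (alpha-band) oscillation
freqs = np.arange(8, 29)         # 8 to 28 Hz in 1 Hz steps
power = morlet_power(x, fs, freqs)

alpha = power[(freqs >= 8) & (freqs <= 12)].mean(axis=0)   # alpha-band power over time
beta = power[(freqs >= 18) & (freqs <= 28)].mean(axis=0)   # beta-band power over time
```

A short-time Fourier transform with a fixed window would replace the per-frequency wavelets with a single window length, trading the frequency-dependent time resolution for simpler, cheaper computation.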

Acknowledgement

The authors are grateful to Drs. A. David Redish, Zhongming Liu, and Audrey Royer for helpful discussions. The authors also thank Dan Rinker and Rahel Ghenbot for assistance in collecting the experimental data from the subjects. This work was supported in part by NSF CBET-0933067, NIH R01EB007920, and NIH R01EB006433. H.Y. was supported in part by an NIH Neuro-Physical-Computational Sciences Fellowship R90 DK070106.

References

- Bradberry TJ, Rong F, Contreras-Vidal JL. Decoding center-out hand velocity from MEG signals during visuomotor adaptation. NeuroImage. 2009;47:1691–1700. doi: 10.1016/j.neuroimage.2009.06.023.

- Fu QG, Flament D, Coltz JD, Ebner TJ. Temporal encoding of movement kinematics in the discharge of primate primary motor and premotor neurons. Journal of Neurophysiology. 1995;73:836–854. doi: 10.1152/jn.1995.73.2.836.

- Georgopoulos AP, Kalaska JF, Caminiti R, Massey JT. On the relations between the direction of two-dimensional arm movements and cell discharge in primate motor cortex. The Journal of Neuroscience. 1982;2:1527–1537. doi: 10.1523/JNEUROSCI.02-11-01527.1982.

- Goldman RI, Stern JM, Engel J, Jr, Cohen MS. Simultaneous EEG and fMRI of the alpha rhythm. Neuroreport. 2002;13:2487–2492. doi: 10.1097/01.wnr.0000047685.08940.d0.

- He B. Brain Electric Source Imaging: Scalp Laplacian Mapping and Cortical Imaging. Critical Reviews in Biomedical Engineering. 1999;27:149–188.

- Heldman DA, Wang W, Chan SS, Moran DW. Local field potential spectral tuning in motor cortex during reaching. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2006;14:180–183. doi: 10.1109/TNSRE.2006.875549.

- Hochberg LR, Serruya MD, Friehs GM, Mukand JA, Saleh M, Caplan AH, Branner A, Chen D, Penn RD, Donoghue JP. Neuronal ensemble control of prosthetic devices by a human with tetraplegia. Nature. 2006;442:164–171. doi: 10.1038/nature04970.

- Kamousi B, Liu Z, He B. Classification of Motor Imagery Tasks for Brain-Computer Interface Applications by means of Two Equivalent Dipoles Analysis. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2005;13:166–171. doi: 10.1109/TNSRE.2005.847386.

- Kamousi B, Amini AN, He B. Classification of Motor Imagery by Means of Cortical Current Density Estimation and Von Neumann Entropy for Brain-Computer Interface Applications. Journal of Neural Engineering. 2007;4:17–25. doi: 10.1088/1741-2560/4/2/002.

- Kettner RE, Schwartz AB, Georgopoulos AP. Primate motor cortex and free arm movements to visual targets in three-dimensional space. III. Positional gradients and population coding of movement direction from various movement origins. The Journal of Neuroscience. 1988;8:2938–2947. doi: 10.1523/JNEUROSCI.08-08-02938.1988.

- Moosmann M, Ritter P, Krastel I, Brink A, Thees S, Blankenburg F, Taskin B, Obrig H, Villringer A. Correlates of alpha rhythm in functional magnetic resonance imaging and near infrared spectroscopy. NeuroImage. 2003;20:145–158. doi: 10.1016/s1053-8119(03)00344-6.

- Moran DW, Schwartz AB. Motor cortical representation of speed and direction during reaching. Journal of Neurophysiology. 1999;82:2676–2692. doi: 10.1152/jn.1999.82.5.2676.

- Mukamel R, Gelbard H, Arieli A, Hasson U, Fried I, Malach R. Coupling between neuronal firing, field potentials, and FMRI in human auditory cortex. Science. 2005;309:951–954. doi: 10.1126/science.1110913.

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- Paninski L, Fellows MR, Hatsopoulos NG, Donoghue JP. Spatiotemporal tuning of motor cortical neurons for hand position and velocity. Journal of Neurophysiology. 2004;91:515–532. doi: 10.1152/jn.00587.2002.

- Pfurtscheller G, Lopes da Silva FH. Event-related EEG/MEG synchronization and desynchronization: Basic principles. Clinical Neurophysiology. 1999;110:1842–1857. doi: 10.1016/s1388-2457(99)00141-8.

- Pfurtscheller G, Brunner C, Schlogl A, Lopes da Silva FH. Mu rhythm (de)synchronization and EEG single-trial classification of different motor imagery tasks. NeuroImage. 2006;31:153–159. doi: 10.1016/j.neuroimage.2005.12.003.

- Rao SM, Bandettini PA, Binder JR, Bobholz JA, Hammeke TA, Stein EA, Hyde JS. Relationship between finger movement rate and functional magnetic resonance signal change in human primary motor cortex. Journal of Cerebral Blood Flow and Metabolism. 1996;16:1250–1254. doi: 10.1097/00004647-199611000-00020.

- Royer AS, He B. Goal selection versus process control in a brain-computer interface based on sensorimotor rhythms. Journal of Neural Engineering. 2009;6:016005. doi: 10.1088/1741-2560/6/1/016005.

- Santhanam G, Ryu SI, Yu BM, Afshar A, Shenoy KV. A high-performance brain-computer interface. Nature. 2006;442:195–198. doi: 10.1038/nature04968.

- Schwartz AB. Direct cortical representation of drawing. Science. 1994;265:540–542. doi: 10.1126/science.8036499.

- Schwartz AB, Cui XT, Weber DJ, Moran DW. Brain-controlled interfaces: Movement restoration with neural prosthetics. Neuron. 2006;52:205–220. doi: 10.1016/j.neuron.2006.09.019.

- Serruya MD, Hatsopoulos NG, Paninski L, Fellows MR, Donoghue JP. Instant neural control of a movement signal. Nature. 2002;416:141–142. doi: 10.1038/416141a.

- Soechting JF, Lacquaniti F. Invariant characteristics of a pointing movement in man. The Journal of Neuroscience. 1981;1:710–720. doi: 10.1523/JNEUROSCI.01-07-00710.1981.

- Taylor DM, Tillery SI, Schwartz AB. Direct cortical control of 3D neuroprosthetic devices. Science. 2002;296:1829–1832. doi: 10.1126/science.1070291.

- Tallon-Baudry C, Bertrand O, Delpuech C, Pernier J. Oscillatory gamma-band (30–70 Hz) activity induced by a visual search task in humans. The Journal of Neuroscience. 1997;17:722–734. doi: 10.1523/JNEUROSCI.17-02-00722.1997.

- Truccolo W, Friehs GM, Donoghue JP, Hochberg LR. Primary motor cortex tuning to intended movement kinematics in humans with tetraplegia. The Journal of Neuroscience. 2008;28:1163–1178. doi: 10.1523/JNEUROSCI.4415-07.2008.

- Velliste M, Perel S, Spalding MC, Whitford AS, Schwartz AB. Cortical control of a prosthetic arm for self-feeding. Nature. 2008;453:1098–1101. doi: 10.1038/nature06996.

- Waldert S, Preissl H, Demandt E, Braun C, Birbaumer N, Aertsen A, Mehring C. Hand movement direction decoded from MEG and EEG. The Journal of Neuroscience. 2008;28:1000–1008. doi: 10.1523/JNEUROSCI.5171-07.2008.

- Wang T, Deng J, He B. Classifying EEG-based motor imagery tasks by means of time-frequency synthesized spatial patterns. Clinical Neurophysiology. 2004;115:2744–2753. doi: 10.1016/j.clinph.2004.06.022.

- Wessberg J, Stambaugh CR, Kralik JD, Beck PD, Laubach M, Chapin JK, Kim J, Biggs SJ, Srinivasan MA, Nicolelis MA. Real-time prediction of hand trajectory by ensembles of cortical neurons in primates. Nature. 2000;408:361–365. doi: 10.1038/35042582.

- Wolpaw JR, McFarland DJ. Control of a two-dimensional movement signal by a noninvasive brain-computer interface in humans. Proceedings of the National Academy of Sciences of the United States of America. 2004;101:17849–17854. doi: 10.1073/pnas.0403504101.

- Wolpaw JR, McFarland DJ. Multichannel EEG-based brain-computer communication. Electroencephalography and Clinical Neurophysiology. 1994;90:444–449. doi: 10.1016/0013-4694(94)90135-x.

- Wolpaw JR, Birbaumer N, McFarland DJ, Pfurtscheller G, Vaughan TM. Brain-computer interfaces for communication and control. Clinical Neurophysiology. 2002;113:767–791. doi: 10.1016/s1388-2457(02)00057-3.

- Yuan H, Doud A, Gururajan A, He B. Cortical imaging of event-related (de)synchronization during online control of brain-computer interface using minimum-norm estimates in frequency domain. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2008;16:425–431. doi: 10.1109/TNSRE.2008.2003384.

- Yuan H, Liu T, Szarkowski R, Rios C, Ashe J, He B. Negative covariation between task-related responses in alpha/beta-band activity and BOLD in human sensorimotor cortex: An EEG and fMRI study of motor imagery and movements. NeuroImage. 2009 (in press). doi: 10.1016/j.neuroimage.2009.10.028.