Abstract

Context: This article presents the main results from a large-scale analytical systematic review on knowledge exchange interventions at the organizational and policymaking levels. The review integrated two broad traditions, one roughly focused on the use of social science research results and the other focused on policymaking and lobbying processes.

Methods: Data collection was done using systematic snowball sampling. First, we used prospective snowballing to identify all documents citing any of a set of thirty-three seminal papers. This process identified 4,102 documents, 102 of which were retained for in-depth analysis. The bibliographies of these 102 documents were merged and used to identify retrospectively all articles cited five times or more and all books cited seven times or more. All together, 205 documents were analyzed. To develop an integrated model, the data were synthesized using an analytical approach.

Findings: This article developed integrated conceptualizations of the forms of collective knowledge exchange systems, the nature of the knowledge exchanged, and the definition of collective-level use. This literature synthesis is organized around three dimensions of context: level of polarization (politics), cost-sharing equilibrium (economics), and institutionalized structures of communication (social structuring).

Conclusions: The model developed here suggests that research is unlikely to provide context-independent evidence for the intrinsic efficacy of knowledge exchange strategies. To design a knowledge exchange intervention to maximize knowledge use, a detailed analysis of the context could use the kind of framework developed here.

Keywords: Knowledge exchange, knowledge use, systematic review, organizations, policymaking, framework

Understanding the relations between knowledge and action has been an academic preoccupation since the beginning of philosophy (Majone 1989; Rich 1979; Van de Ven and Schomaker 2002). In the past thirty years or so, however, this question has gravitated increasingly toward center stage as a result of diverse but convergent disciplinary approaches to the debate. For example, the ideological questioning of social science public funding in the late 1970s in the United States prompted a heated debate on science's social usefulness. In the field of evaluation, a parallel debate on the use of evaluation results greatly influenced the frameworks used to conceptualize the relation between knowledge and action. During the same period, new data on the discrepancy between scientific knowledge and the average medical practice gave birth to the evidence-based medicine (EBM) perspective, whose influence both inside and outside the realm of clinical practices was tremendous. Organizational theories on decision making, as well as theoretical and empirical developments in political science concerning the relation between information and policies, also contributed to the debate. Some of these perspectives were eventually merged in new frameworks, while others continued to evolve autonomously. Taken together, these different streams of scholarly work modified quite radically the way that knowledge producers, institutions, and decision makers are currently expected to interact. In this article, we systematically survey and review some of those streams to provide insight into what is known about collective-level interventions aimed at influencing policymaking or organizational behavior through knowledge exchange, and especially about the contextual conditions affecting their efficacy. We use the term collective level to describe interventions in organizational or policymaking settings, as distinct from interventions for influencing individual behavior. 
We offer a more detailed definition in the following section. Several scholars (Dobrow, Goel, and Upshur 2004; Eccles et al. 2005; Grol et al. 2007; Grol and Grimshaw 2003) have highlighted the individual and collective levels as being both complementary and necessary to the success of clinical-level changes and quality improvement.

We conducted a large-scale analytical literature review focused on two relatively autonomous bodies of literature on knowledge exchange (Sabatier 1978). The first was derived from mostly three sources: debates about the role of social sciences in society; studies of the use of evaluation results; and, to a lesser extent, rationalist management perspectives influenced by the success of EBM at the clinical level. The second body of literature was developed in the field of political science. It comprises theoretical and empirical work on the deliberate use of information by interest groups in policymaking processes (lobbying) and on the influence of networks’ shape, nature, and composition on information circulation in policymaking.

We begin with a short discussion of the boundaries of this phenomenon. Then we describe our data collection and synthesis method. Finally, we explore the definitions of collective-level knowledge exchange and use that emerge from the literature we reviewed and discuss three characteristics of context that have a core influence on those processes.

Definition of the Phenomenon

Defining “Knowledge”

Because of its integrative focus, we based our review on a broad and encompassing definition of the notion of “knowledge.” Actually, some traditions seldom use the terms knowledge or evidence. For example, a significant part of the literature on exchange processes was developed in the field of evaluation, in which the content of the exchange process itself is “evaluation results.” More fundamentally, other fields refrain from normative distinctions regarding the nature of the information transmitted. In political science or organizational analysis, decision-making processes are conceptualized as being informed by various types of information, including, in some cases, scientific evidence. Consequently, in order to integrate various traditions, we have not distinguished the nature of the information transmitted through the interventions.

Collective-Level Processes of Knowledge Exchange

Our review assumes that knowledge exchange processes can occur at two complementary levels that should be analytically distinguished. On one hand, some knowledge exchange processes are aimed at autonomous individuals. Here autonomy refers to the fact that the potential users of knowledge targeted by the exchange processes are usually sovereign in their capacity to mobilize knowledge and, consequently, to modify their practices. The individuals targeted will respond to the knowledge exchange process to varying degrees, and both the context and the individuals’ characteristics will have an impact. The outcome of the knowledge exchange process, however, remains an individual reaction at the confluence of individual, contextual, and process factors. Interventions aimed at modifying the clinical behavior of professionals mostly fall within this definition of individual-level interventions (Eccles et al. 2005; Freemantle et al. 2002; Grimshaw et al. 2002, 2006; Grol and Grimshaw 2003; King's Fund 2006).

On the other hand, knowledge exchange processes can occur in systems characterized by high levels of interdependency and interconnectedness among participants. Interdependency here refers to the fact that none of the participants has enough autonomy or power to translate the information into practices on his or her own (Havelock 1969; Jordan and Maloney 1997; Kickert, Klijn, and Koppenjan 1999; Leviton 2003; Lynn 1978; March 1988; March and Olsen 1976; Pressman and Wildavsky 1973; Rhodes 1990). In such contexts, individuals are embedded in systemic relations in which knowledge use depends on processes such as sense making (Nonaka 1994; Russell et al. 2008; Weick 1995), coalition building (Heaney 2006; Lemieux 1998; Salisbury et al. 1987), and rhetoric and persuasion (Majone 1989; Milbrath 1960; Perelman and Olbrechts-Tyteca 1969; Russell et al. 2008; Van de Ven and Schomaker 2002).

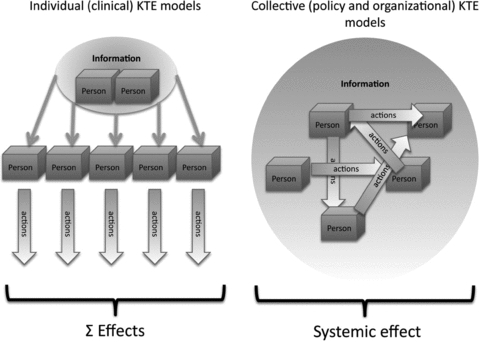

We suggest that causal relations between knowledge exchange processes and their outcomes are sufficiently different at the individual and collective levels to warrant different approaches, in both knowledge exchange interventions and their analysis and evaluation (Bowen and Zwi 2005). Given that the intended effects of individual-level interventions are usually repeated behaviors by numerous autonomous individuals, this allows us to use robust quantitative methods to study behavioral or practice changes. Conversely, a collective knowledge exchange intervention that involves numerous individuals usually produces systemic outcomes that cannot be easily specified (Langley et al. 1995) and, as such, that considerably complicate (or preclude) a valid measurement of the effects (Alkin and Taut 2003; Beyer and Trice 1982; Booth 1990; Carden 2004; Cousins and Leithwood 1986; Dunn 1983; Florio and Demartini 1993; Henry and Mark 2003; Huberman 1987; Larsen 1980; Lester and Wilds 1990; Leviton and Hughes 1981; Logan and Graham 1998; Mitton et al. 2007; Pelz 1978; Pelz and Horsley 1981; Rich 1977; Rich and Oh 1993; Shulha and Cousins 1997; Snell 1983; Weiss 1981). Figure 1 illustrates the difference in the two levels’ effects.

Figure 1. Individual versus Collective Knowledge Transfer and Exchange (KTE) Processes.

This difference explains the gap between the strength of available evidence regarding the effectiveness of clinical-level interventions (e.g., Freemantle et al. 2002; Grimshaw et al. 2002, 2006; King's Fund 2006; Oxman et al. 1995) and the relative weakness of the evidence on collective-level interventions (e.g., the reviews from Beyer and Trice 1982; Cousins and Leithwood 1986; Johnson 1998; Leviton and Hughes 1981; McNie 2007; Mitton et al. 2007; Ward, House, and Hamer 2009). From the outset, we focused exclusively on the collective level. Individual-level interventions are fundamental to improve quality, effectiveness, and efficiency in the delivery of care. But as many scholars have stated (Dobrow, Goel, and Upshur 2004; Eccles et al. 2005; Gabbay and le May 2004; Grol et al. 2007; Grol and Grimshaw 2003; Mitton et al. 2007), individual-level interventions alone cannot achieve those objectives; policymaking and organizational-level interventions play a major role. Our aim in this review is to strengthen our understanding of the processes in such interventions.

Knowledge Exchange Interventions

In conducting our review, we further limited our definition of knowledge exchange interventions. First, although information flows are both natural and ubiquitous in organizational and policy systems, we narrowed our review to include only active, deliberate communication efforts (Knott and Wildavsky 1980), similar to the distinction between naturally occurring “diffusion” and active “dissemination” proposed by some scholars (Greenhalgh, Robert, Bate, et al. 2004; Lomas 1993a, 1993b). We describe active knowledge exchange efforts as deliberate or instrumental, in the sense that some people use them as instruments to influence the opinions or actions of others (Brunsson 1982; Henry and Mark 2003).

Second, opinions and actions encompass what are usually described as “decisions,” as well as the production of discourse (Edelman 1977; Majone 1989; Nonaka 1994; Van de Ven and Schomaker 2002; Weick 1995). The study of knowledge transfer processes is greatly influenced by the concepts of “decision” and “decision making,” yet the operation of those concepts in collective systems (March and Olsen 1976; Sabatier and Jenkins-Smith 1999) is highly problematic (Langley et al. 1995; Weiss 1977c; Weiss and Bucuvalas 1980a). We therefore refrained from relying on the concept of decision and instead used the notion of “action”—as discussed later in more detail—to describe the target of the knowledge exchange intervention.

Third, the heuristically useful distinction between instrumental and symbolic use (Beyer and Trice 1982; Knorr 1977; Weiss and Bucuvalas 1980a) is challenging on a practical level. In the words of Beyer and Trice, “instrumental use involves acting on research results in specific, direct ways,” while “symbolic use involves using research results to legitimate and sustain predetermined positions” (1982, 598). Empirically, making the distinction would require perfect access to users’ cognitive processes, and it remains debatable whether even users would be able to make the distinction (Gilovich, Griffin, and Kahneman 2002; Kahneman, Slovic, and Tversky 1982; Kahneman and Tversky 2000). Similarly, because we are concentrating here on deliberate dissemination interventions, we intentionally left out conceptual use (Beyer and Trice 1982; Knorr 1977; Weiss and Bucuvalas 1980a), even though it can be an important externality of deliberate intervention.

To summarize, our review is focused on the collective level of analysis in order to understand deliberate interventions aimed at influencing behaviors or opinions through the communication of information.

Methods

Data Collection

From the outset, our aim in this review was to develop an integrated interdisciplinary framework for understanding collective-level knowledge exchange interventions. This made it challenging to identify a coherent and precise set of keywords for the search process. In a field similar to the one reviewed here, but with less interdisciplinary ambition, the review published by Mitton and colleagues (2007) relied on a keyword approach that enabled the identification of 169 relevant documents out of 4,250 hits (before triaging on the basis of strength of evidence). We anticipated that in our case, a similar strategy would yield even more chaff and less wheat because the disciplinary traditions targeted are broader and each relies on distinct vocabulary and conceptualizations. Inspired in large part by the work of Greenhalgh and colleagues (Greenhalgh et al. 2005; Greenhalgh and Russell 2006), we relied instead on a non-keyword-based reviewing process that we dubbed the double-sided systematic snowball.

Our goal was to identify documents that made a core contribution, either conceptually or empirically, to the understanding of the phenomenon. Our starting point was to identify, through team consensus, some seminal papers (n= 33) that were considered to have shaped the evolution of the field. We started by identifying a heuristic list of seven “traditions”: (1) political science literature on lobbying and group politics; (2) works on agenda-setting processes in policymaking; (3) literature on policy networks (and related phenomena, e.g., iron triangles and subgovernments); (4) “mainstream” literature on knowledge transfer and exchange; (5) works in the evaluation field about the use of evaluation results; (6) organizational-level literature on decisional processes and learning; and (7) social network analysis works on information circulation. Each tradition was exemplified by one or more publications. The definition of “traditions” and the identification of specific publications were interdependent processes conducted on a consensus basis. At the end of the process, we had produced a list of thirty-three “seminal” sources (see the appendix).

Although any such starting point has limitations, we contend that the double-sided systematic snowball process we used can compensate for omissions in the initial list. We then used the ISI Web of Science Citation Index to identify all documents (n= 4,201) that cited those seminal papers. The snowball process here was prospective, since it exclusively targeted documents published after the selected seminal paper. We then triaged the results using the titles and (if present) the abstracts, using a decision grid based on the definition of the phenomenon under review, as discussed in the previous section. This process identified 189 documents that we then retrieved and read for further selection according to the same criteria. At the end of this prospective snowballing, we selected 102 documents for detailed analysis. Next we used the bibliographies of those 102 documents as a basis for retrospective systematic snowball sampling. We entered each document's complete bibliography in a database (n= 5,622) and used algorithms to identify all articles cited five times or more and all books cited seven times or more. The thresholds for articles and books were set individually by trial and testing to achieve an acceptable precision rate. The principle, however, was the same and was aimed at identifying what Greenhalgh, Robert, Bate and colleagues (2004) called landmark papers. From this, we identified twenty-three new books (twenty-seven references cited seven times or more, belonging to twenty-three books, many of which were edited) and fifty-seven articles. Among the articles, we excluded fourteen based on relevance criteria and twelve that were already among the 102 identified in the first step. Finally, we included forty-nine other documents either through deliberate selection during the first step of analysis because of their empirical or conceptual contribution, or through nonsystematic sampling of the field. 
In the end, we analyzed 205 documents in detail (for the complete bibliography, see http://www.medsp.umontreal.ca/scinf/transfertConnaissances/indexEN.html). We will return later to our decision not to include triaging on the basis of strength of evidence in our reviewing strategy, but first we will discuss the exhaustiveness of the data collected.
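The retrospective selection step and the resulting document tally can be illustrated with a short sketch. This is a minimal illustration, not the algorithm actually used in the review: the function name `select_landmarks`, the `(reference_id, kind)` record layout, and the toy bibliography are assumptions introduced here. Only the two thresholds (five citations for articles, seven for books) and the document counts in the final tally come from the text.

```python
from collections import Counter

# Thresholds reported in the text; the data layout below is hypothetical.
THRESHOLDS = {"article": 5, "book": 7}


def select_landmarks(cited_refs, thresholds=THRESHOLDS):
    """Return references cited at least `threshold` times for their kind.

    cited_refs: iterable of (reference_id, kind) pairs, one pair per
    appearance of a reference in the merged bibliographies.
    """
    counts = Counter(cited_refs)
    return {
        ref_id: n
        for (ref_id, kind), n in counts.items()
        if n >= thresholds[kind]
    }


# Toy merged bibliography (illustrative identifiers only).
merged = (
    [("article_A", "article")] * 5
    + [("article_B", "article")] * 4
    + [("book_C", "book")] * 7
    + [("book_D", "book")] * 6
)
landmarks = select_landmarks(merged)
print(landmarks)  # article_A and book_C meet their thresholds

# Tally of analyzed documents, using the counts reported in the text.
first_step = 102
new_books = 23
new_articles = 57 - 14 - 12  # minus irrelevant and already-identified articles
other_documents = 49
total = first_step + new_books + new_articles + other_documents
print(total)  # 205
```

Keeping separate thresholds per document type mirrors the text's remark that the cutoffs for articles and books were set individually.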

The low level of data saturation obtained after the two iterations of snowball sampling surprised us: only twelve of the 217 documents were identified in both rounds. This prompted some questioning about the actual size of the total population of relevant documents. To estimate the total population size, we used the Lincoln-Petersen capture-mark-recapture formula commonly used in ecology. Depending on how the book chapters are treated, population estimates vary between 561 and 595. The accuracy of this formula rests on the assumption that individual items have the same probability of being captured in each of the two rounds. This assumption was clearly not upheld in our sampling strategy, and our estimate of population size thus is probably significantly inaccurate. Nevertheless, this estimate can provide an order of magnitude, which in turn highlights the fact that this review was systematic only to the extent that it relied on an “explicit, rigorous and transparent methodology” (Greenhalgh, Robert, Macfarlane, et al. 2004, 582). Given the considerable proportion of eligible documents that were likely neither identified nor reviewed (around 70 percent if the population size were not too far from our estimate), we should acknowledge that systematic here does not mean exhaustive. It is important to emphasize, however, that we have no reason to believe that keyword-based approaches are more accurate or exhaustive.
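For readers unfamiliar with the Lincoln-Petersen estimator, the calculation can be sketched as follows. The text does not state which sample sizes were entered into the formula, so the inputs below are a reconstruction and should be read as assumptions: a first sample of 102 documents, twelve documents found in both rounds, and a second sample of either 66 (57 articles minus 14 excluded, plus 23 books) or 70 (counting the 27 book references separately). These inputs happen to reproduce the reported range of 561 to 595.

```python
def lincoln_petersen(first_sample, second_sample, recaptured):
    """Classic Lincoln-Petersen estimate: N = (n1 * n2) / m."""
    if recaptured <= 0:
        raise ValueError("at least one recaptured item is required")
    return first_sample * second_sample / recaptured


# Assumed inputs (reconstructed from counts given in the text).
n1 = 102                         # documents retained after prospective snowballing
m = 12                           # documents identified in both rounds
n2_books_as_books = 57 - 14 + 23     # 66: each book counted once
n2_books_as_chapters = 57 - 14 + 27  # 70: each cited book reference counted

low = lincoln_petersen(n1, n2_books_as_books, m)
high = lincoln_petersen(n1, n2_books_as_chapters, m)
print(low, high)  # 561.0 595.0
```

The estimator assumes equal capture probability in both rounds, which is the assumption the text identifies as not upheld here.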

Another important issue in systematic reviews concerns the exclusion criteria used for triage. As mentioned earlier, we applied the same relevance criteria at every step of the process and abstained from triaging on strength-of-evidence criteria. We made this methodological decision during the review because of practical problems we encountered while using strength-of-evidence grading tools (Greenhalgh, Robert, Macfarlane, et al. 2004; Lohr 2004; Mitton et al. 2007; Øvretveit et al. 2002). Many documents that we considered insightful, informed, and central would have been excluded by such grading tools because they were not based on explicitly empirical data (actually, 44 percent of the documents reviewed were classified as mostly or exclusively theoretical). Illustrations of such situations are works from Carol Weiss or Jonathan Lomas, who have considerable knowledge of both theoretical and practical issues related to our phenomenon, gathered from active empirical experience, and who wrote conceptual papers based on this tacit knowledge. For example, Weiss's (1979) paper was cited in thirty-nine of the 102 documents identified in the first snowballing step but would have been triaged out for not being based on explicit empirical data.

Synthesis Approach

The greatest challenge in conducting a review in a broad and complex field like the one defined by our phenomenon of interest is not data collection but data synthesis. How could we make sense of several thousand pages of data in a usable and publishable format? The data in our field are almost exclusively narrative (Mays, Roberts, and Popay 2001) in the sense that, whether the primary data sources were quantitative or qualitative, the data we actually collected and analyzed are documents whose meaning cannot be accessed in any other way than through their narrative structure. Many factors explain this situation, in particular, the conceptual diversity of the field, the complexity of the interventions themselves, and their systemic—as opposed to summative—outcomes (see figure 1). The last two characteristics are core features of the phenomenon studied that make it ontologically more suited to case studies than to any other method, as confirmed by the data collected in this review.

Two main synthesis techniques are available for dealing with narrative material. One uses stringent triage criteria to summarize the main findings of the selected sources, in either tables or new narratives. Stringent triaging is mandatory to keep the output to a usable size. For example, a recent synthesis on knowledge transfer by Ward, House, and Hamer (2009) went from 9,522 keyword-generated hits to twenty-eight paper-based models, whereas Mitton and colleagues’ review (2007) went from 4,250 keyword-generated hits to eighty-one papers. The second technique is what Forbes and Griffiths (2002) call an analytical or theoretical synthesis, quite close to what Pawson and colleagues (2005) described as a realist review approach, whose aim is to synthesize the data as a new integrated theoretical model. The well-known metanarrative-mapping approach of Greenhalgh and colleagues (Greenhalgh, Robert, Bate, et al. 2004; Greenhalgh et al. 2005) is at the junction of the two. It pushes summarization to its limits by treating whole research traditions, rather than individual documents, as the unit of analysis and, by doing this, offers a new integrated theoretical model of its phenomenon of interest.

The main strength of the summary approach is the transparency of the summarization process. Its principal weaknesses are the loss of detail and the understanding of contextual influences provided by primary sources, the loss of overview, and the disputable usability of results too often presented as long and lifeless summary tables (Mays, Roberts, and Popay 2001). Analytical approaches have the opposite qualities. Whereas the analytical summarization process itself may be opaque to readers, it is intended to help them make sense of the primary sources by iteratively building a new model that will serve as a heuristic tool to comprehend convergences and divergences in the causal relations proposed by those primary sources (Forbes and Griffiths 2002; Pawson et al. 2005). We also contend that such approaches are more likely to provide usable results in the context of complex interventions. We should note that using analytical approaches makes formal triaging on the basis of strength of evidence much less crucial to the validity of results. The documents’ scientific strength is assessed during the in-depth analysis, at which point empirically weak papers, as well as those providing only a marginal conceptual contribution, are given a secondary place in the analysis.

Our review's summarization process had three phases. Each of the first 102 documents was randomly assigned to be read by a primary reviewer (one of three), who prepared a brief synopsis of its contribution to the understanding of the phenomenon. The synopses were then cross-validated by a second reviewer, from among the same three. The 102 synopses were the basis of the first-draft synthesis document produced by team discussions. Then the second step of the data collection started, and each new document's marginal contribution was directly integrated into the draft synthesis without an earlier synopsis. During this step, we also discussed the draft on four occasions with three senior decision makers in Quebec's health care system to assess the usefulness of the model developed. The draft synthesis was edited and developed iteratively until marginal modifications were considered minor enough to stop the process.
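The first phase, random assignment of a primary reviewer followed by cross-validation by a different reviewer, could be organized along the following lines. This is only a sketch under stated assumptions: the reviewer labels, the function name `assign_reviewers`, and the choice to draw the validating reviewer at random are hypothetical, since the text specifies only that the primary reviewer was assigned at random and that the validator came from the same group of three.

```python
import random


def assign_reviewers(doc_ids, reviewers, seed=None):
    """Give each document a random primary reviewer and a distinct validator."""
    rng = random.Random(seed)  # a seed makes the assignment reproducible
    assignments = {}
    for doc_id in doc_ids:
        # Sampling two reviewers without replacement guarantees that the
        # validator differs from the primary reviewer.
        primary, validator = rng.sample(reviewers, 2)
        assignments[doc_id] = {"primary": primary, "validator": validator}
    return assignments


reviewers = ["R1", "R2", "R3"]  # hypothetical labels for the three reviewers
assignments = assign_reviewers(range(1, 103), reviewers, seed=0)
print(len(assignments))  # 102
```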

Results

We concentrated our integration of the reviewed literature on two main dimensions. First, we analytically defined and discussed three basic components of knowledge exchange systems: the roles of individual actors working in collective systems, the nature of the knowledge exchanged, and the process of knowledge use. Second, we conceptualized knowledge exchange interventions as being a part of larger collective action systems with three core dimensions: polarization, cost sharing, and social structuring. We should stress that the material we reviewed extends beyond those dimensions and that other levels of analysis—for example, individual-level strategies of communication—are possible as well.

Individuals in Collective Knowledge Exchange Networks

The various disciplinary traditions reviewed here have comparable typologies categorizing individuals into three groups—producers, intermediaries, and users—according to their relative position on the knowledge circulation continuum. At one end are those individuals who work in socially legitimate knowledge production institutions and systems without the capacity to put the knowledge developed to use (Arendt 1972; Caplan 1979; Rich 1979). At the other end are individuals who hold institutionally and socially sanctioned positions (Weber 1971) that allow them to intervene in the practices, rules, and functioning of organizational, political, or social systems. In between are various kinds of intermediaries, called conveyors (Havelock 1969), brokers (Weiss 1977a), intermediaries (Huberman 1994), or lobbyists (Milbrath 1960, 1963), all of whom contribute to the information flow. Many models or actual knowledge exchange interventions concern only two of the three. For example, political science models of lobbying often neglect the production side, and some knowledge-based models of evidence transfer tend to disregard actual utilization processes.

Those three groups should, however, be used with caution in empirical analysis. Actual knowledge exchange systems are composed of numerous individuals, and the intragroup diversity of positions, opinions, preferences, and interests should never be discounted. Although collective, the processes analyzed here are, in the end, actuated by individuals. All these individuals are exposed to institutional incentives and broader social norms and values. The definition of the interdependence among the social structures, individual perceptions, and action used in the analysis rests mainly on the work of the French sociologist Pierre Bourdieu (Bourdieu 1980, 1981, 1984a, 1984b, 1994, 2001). According to his perspective, humans internalize the results of their daily interactions with the social world into a subconscious habitus. Every habitus is unique to the extent that it is the product of the individual's history, past practices, and interactions with social structures; yet it also reflects the objective cultural, social, and institutional structures within which the individual lives. This explains the overall convergence of perceptions among individuals exposed to similar experiences and conditions. At a rather prosaic level, the institutional and social positions of actors in knowledge exchange systems shape their views of their role in these systems, which in turn interact with their cognitive processes, which do not resemble simple rational models (Gilovich, Griffin, and Kahneman 2002; Kahneman, Slovic, and Tversky 1982; Kahneman and Tversky 2000; Patton 1977). Actual knowledge exchange systems thus are complex because they are made up of complex human actors. 
This may seem obvious, but the literature is rife with oversimplifications, the three most common of which are discussing the hypothetical relations between one user and one producer, reifying users or producers as homogeneous groups (a slightly more sophisticated version of the first error), and disregarding the complexity of human motivations by attributing intrinsic group-based preferences or interests to users, producers, or intermediaries.

Knowledge from Information to Evidence

The first building block in any framework for analyzing knowledge exchange interventions is a definition of “knowledge.” The scientific literature reviewed does not offer a clear, dominant definition. The smallest common denominator is the generic notion of “information” set by our heuristic definition of the field. Various scholars and disciplinary traditions offer competing typologies established on the basis of the source or the nature of information. The concept of “knowledge exchange,” however, especially in health care, rests on an implicit commonsense notion that this “knowledge” must be evidence based. Theoretically, the distinction between information and evidence rests on the strong internal validity of the latter. Internal validity refers here to the scientific plausibility of the causal links implied in the message. Although the literature offers much normative advice to encourage users to favor scientifically valid evidence and to disregard other sources of information, much of the converging evidence suggests that internal validity per se does not influence information use (although perceived legitimacy does positively influence use, as we explain later) (Beyer and Trice 1982; Burstein and Hirsh 2007; Gabbay et al. 2003; Huberman 1987; Van de Vall and Bolas 1982; Weiss and Bucuvalas 1980a).

It is unclear whether the lack of relation between internal validity and use is due to the users’ lack of training in assessing or understanding internal validity criteria or because the concept of internal validity is irrelevant to the actual use. This second hypothesis might be explained by three different arguments. The first is that at the collective-action level, relevance to users has less to do with internal validity than with external validity, that is, whether the causal link would hold in the users’ specific context (Dobrow, Goel, and Upshur 2004; Elliott and Popay 2000). The second, somewhat related, argument is specific to social sciences results and suggests that evidence derived from social sciences always, in fact, offers “shallow” insights (Bardach 1984) not very different from common sense or what users can infer from their own experience (Albaek 1995; Caplan 1977; Caplan, Morisson, and Stambaugh 1975; Knott and Wildavsky 1980; Pelz 1978; Weiss and Bucuvalas 1980a, 1980b). We do not share this view of the ontological shallowness of social science results, but we will return to this argument in our discussion of the concept of knowledge use. Finally, the third, and probably the most important, argument has to do with competition. From this standpoint, users’ responsibility is to balance various kinds of information (e.g., see the typologies of information from Hall and Deardorff 2006; Peterson 1995; Phillips and Phillips 1984; Sabatier 1978). Although developed mostly in political science, this third explanation is quite economical in its implication that knowledge exchange systems are first and foremost systems of competition in which various kinds and sources of information compete for scarce resources, namely, the users’ attention (Lindblom 1959; Simon 1971).

If we accept that users are exposed to diverse kinds of relevant and legitimate information, only some of which is produced through scientific methods, then it follows logically that they cannot sort through and prioritize the information based on internal validity per se (Albaek 1995; Booth 1990; Bowen and Zwi 2005; Bryant 2003; Caplan, Morisson, and Stambaugh 1975; Elliott and Popay 2000; Florio and Demartini 1993; Freeman 2007; Shulha and Cousins 1997; Weiss 1983; Weiss and Bucuvalas 1980a; Whiteman 1985; Willison and MacLeod 1999). In their assessment of its relevance and credibility, users can either take internal validity into account or not (Arendt 1972), but then validity will be treated as only one criterion of credibility among others (we will return to this subject in the next section). There also is evidence that tacit knowledge and experience are often accorded significant weight in this process (Cousins and Leithwood 1986; Dobrow, Goel, and Upshur 2004; Gabbay et al. 2003; Peterson 1995; Weiss and Bucuvalas 1980a; Whiteman 1985).

The literature reviewed offers compelling support for the idea that in the exchange and utilization processes, scientific evidence is treated no differently than other types of information. Depending on the users’ views (Bowen and Zwi 2005; Hutchinson 1995; Lester and Wilds 1990; Weiss and Bucuvalas 1980a) and the institutional culture or rules (Barnsley, Lemieux-Charles, and McKinney 1998; Dunn 1980; Glaser, Abelson, and Garrison 1983; Havelock 1969; Lovell and Turner 1988; March and Olsen 1976; Steed 1988; Webber 1986, 1987; Zucker 1988), the internal validity and consistency with scientific procedures of any given piece of information contribute to its perceived credibility to an unknown extent—with no extent being a credible hypothesis in many contexts. We thus suggest that knowledge exchange interventions should be conceptualized as generic processes unrelated to the internal validity of the information exchanged. Many normative recommendations for knowledge exchange emphasize techniques aimed at ensuring that the message is scientifically sound. Suggesting, as we do, that knowledge exchange processes are not related to the scientific strength of the message in no way implies that validity does not matter, for it obviously does. What it implies is that developing scientifically sound advice and then designing knowledge exchange interventions to translate that advice into practices at the collective level are two different processes. The challenge of deliberate knowledge exchange interventions is working on a plausible linking of these two processes.

Defining Knowledge Use at the Collective Level

The data we collected showed that there are tens, if not hundreds, of definitions of knowledge use, but unfortunately none seems to dominate. The literature we reviewed, however, quite consistently suggests that scientific evidence seldom, if ever, directly solves organizational or policy-level problems (Dobrow, Goel, and Upshur 2004; Elliott and Popay 2000; Lomas 1990; Sabatier 1978). To be relevant, usable, and meaningful, evidence needs to be embedded in what political science calls policy options and could generically be called action proposals. Action proposals are assertions that employ rhetoric to embed information in arguments to support a causal link between a given course of action and its anticipated consequences (Bardach 1984; Brunsson 1982; Haas 1992; Knott and Wildavsky 1980; Majone 1989; Smith 1984; Van de Ven and Schomaker 2002). In this article, we define collective-level knowledge use as the process by which users incorporate specific information into action proposals to influence others’ thought and practices.

This definition calls for some elaboration. First, because the practical capacity of any given user to influence the collective system within which he or she intervenes (whether a service, an organization, a sector, or a policy) is contingent on context-specific factors, this definition dissociates knowledge use from actual practices or outcomes (Henry and Mark 2003; Weiss 1978). Second, we mentioned earlier that social science results were often described as offering “shallow” insights. This definition of use suggests that “shallowness” is not a characteristic of the evidence itself (whether developed in the social or the natural sciences) but a characteristic of collective-level contexts, in which the internal validity of the evidence dissolves during the elaboration of action proposals (Kothari, Birch, and Charles 2005). Third, although our review is not focused on the individual level, the actual capacity of a given actor to influence thought, practices, or rules is highly dependent on his or her rhetorical abilities (Majone 1989; Russell et al. 2008; Van de Ven and Schomaker 2002) and symbolic capital (Bourdieu 1980, 1981, 1984a, 2001). Finally, in interpreting the definition, we should keep in mind that at the collective level, knowledge plays a central role in the processes by which issues are problematized, conceptualized, and prioritized, something described in political science as agenda setting (Austen-Smith 1993; Baumgartner and Jones 1993; Considine 1998; Haas 1992; Kingdon 1984; Larocca 2004; Lewis and Considine 1999; Oh and Rich 1996; Smith 1995).

Ideology and Polarization

The literature we reviewed generally agrees, although mostly implicitly in political science, that the use of knowledge is influenced by its relevance, legitimacy, and accessibility. Relevance refers to timeliness, salience, and actionability, all heavily context-dependent characteristics (Beyer and Trice 1982; Black 2001; Burstein and Hirsh 2007; Landry, Amara, and Lamari 2001a; Larsen 1985; Lavis et al. 2003; Leviton and Hughes 1981; Lindblom and Cohen 1979; McNie 2007; Mitton et al. 2007; Owens, Petts, and Bulkeley 2006). Legitimacy refers to the credibility of the information (Bowen and Zwi 2005; Caplan, Morisson, and Stambaugh 1975; Cousins and Leithwood 1986; Florio and Demartini 1993; Gano, Crowley, and Guston 2007; Johnson 1980; Leviton and Hughes 1981; McNie 2007; Rein and White 1977; Sabatier 1978; Weiss and Bucuvalas 1980a). Accessibility refers to dimensions such as formatting and availability (Amara, Ouimet, and Landry 2004; Campbell et al. 2009; Denis, Lehoux, and Tré 2009; Frenk 1992; Leviton and Hughes 1981). The causal link between knowledge characteristics and use, however, is mediated by users’ perceptions (Feldman and March 1981; Gabbay et al. 2003; Greenberg and Mandell 1991; Havelock 1969; Hennink and Stephenson 2005; Huberman 1987, 1994; Hutchinson 1995; McNie 2007; Milbrath 1960; Weiss and Bucuvalas 1980b). Linking utilization to users’ perceptions, rather than to the characteristics of knowledge per se, in turn allows us to understand how politics and ideology influence knowledge exchange.

Every individual involved in collective-level networks of knowledge exchange has opinions, preferences, and interests (Bourdieu 1980; Dunn 1980; Knott and Wildavsky 1980; Larsen 1980; Oh and Rich 1996; Patton 1977, 1978; Weiss and Bucuvalas 1980a). Those opinions, preferences, and interests are central to each user's individual assessment of knowledge characteristics. If a user's understanding of the implications of a given piece of information is contrary to either his or her opinions or preferences (which can be assumed to include, but not be limited to, the expression of his or her interests), the user will ignore, contradict, or, at least, subject this piece of information to strong skepticism and low use (Florio and Demartini 1993; Gabbay et al. 2003; Knott and Wildavsky 1980; Larsen 1985; Lomas 1990; Potters and Van Winden 1992; Sabatier 1978; Weiss 1977c; Weiss and Bucuvalas 1980a, 1980b). Moreover, not all individual users and groups in a collective-action setting should be presumed to have similar perceptions about any given piece of information, which introduces the notion of issue polarization (Bourgeois and Nizet 1993; Lynn 1978; Rich and Oh 2000; Sabatier 1978; Weiss 1977c).

Contexts are said to be characterized by low issue polarization when potential users share similar opinions and preferences regarding (1) the problematization of the issue (consensus on the perception that a given situation is a problem and not the normal or desirable state of affairs), (2) the prioritization and salience of the issue (compared with other potential issues), and (3) the criteria against which potential solutions should be assessed. Conversely, as the level of consensus on those aspects diminishes, issue polarization grows.

In the literature we reviewed, there is consensus that issue polarization is a core feature of the knowledge exchange context. Low issue polarization is a sine qua non condition for technically focused debates, in which participants try to resolve differences through dialogue and “rational” arguments based on shared worldviews (Stone 2002). Conversely, high issue polarization leads to political debates and strategic-type processes in which dialogue is unlikely to bring consensus and participants try to impose their views on others. The literature we reviewed is sharply divided on how knowledge exchange interventions should adapt to variations in issue polarization. There is a clearly perceptible normative bias in much of the literature reviewed in favor of instrumental knowledge use, as opposed to symbolic use (Knorr 1977). We should note, parenthetically, that interesting arguments are provided at least on the theoretical level, suggesting that symbolic use can indeed lead to desirable outcomes (Albaek 1995; Brunsson 1982; Feldman and March 1981; Greenberg and Mandell 1991; Henry and Mark 2003; Huberman 1987; Whiteman 1985). But since high issue polarization is negatively associated with instrumental use (Beyer and Trice 1982), much of the literature suggests that a polarized context is intrinsically incompatible with success in knowledge exchange interventions (Knott and Wildavsky 1980; Lynn 1978; Weiss and Bucuvalas 1980a).

Nevertheless, this view is not shared by the political science literature, for which a polarized context is the normal state of affairs. In the lobbying tradition, the way in which divergences in opinions, preferences, and interests are organized explains (1) the extent of involvement in knowledge exchange activities (Ainsworth 1993; Baumgartner and Leech 1996; Coglianese, Zeckhauser, and Parson 2004; Epstein and Ohalloran 1995; Larocca 2004; Sloof and Van Winden 2000), (2) the structure and shape of knowledge exchange networks (Browne 1990; Carpenter, Esterling, and Lazer 2004; Hall and Deardorff 2006; Heclo 1978; Heinz et al. 1993), and (3) the content of the information exchanged (Austen-Smith and Wright 1992; Burstein and Hirsh 2007; Phillips and Phillips 1984). In the policy network tradition, polarization is also the core variable explaining the network's shape (Jordan and Maloney 1997; Kickert, Klijn, and Koppenjan 1999; Klijn 1996; Knoepfel and Kissling-Naf 1998; Konig and Brauninger 1998; Rhodes 1990). This tallies with observations from other traditions regarding the influence of ideological proximity on knowledge exchange processes (Caplan 1979; Havelock 1969; Rein and White 1977; Sabatier 1978; Webber 1983; Weiss 1977b, 1983; Weiss and Bucuvalas 1980a, 1980b). What the political science tradition added, beginning with the seminal works of Bauer, Pool, and Dexter (1963) and Milbrath (1963), is the explicit consideration that because information is a prized commodity in political struggles, with both a price and a value (Hall and Deardorff 2006), it should be offered to allies and strategically used against opponents. This observation is supported by strong empirical data (Heaney 2006; Heinz et al. 1993; Lowery et al. 2005; Smith 1995; Wright 1990). According to this view, the crucial element in understanding or designing knowledge exchange interventions is not so much the level of polarization as the way in which the system is divided and polarized.

The Cost-Sharing Equilibrium in the Knowledge Exchange System

The idea that knowledge has both a cost and a value was used by Bardach to suggest that knowledge will reach those “for whom the utility of having it exceeds the disutility of obtaining it” (1984, 126). Although this statement is highly rationalist in essence, a widely shared, broader assumption in the literature is that producers (Amara, Ouimet, and Landry 2004; Landry, Amara, and Lamari 2001a; Landry, Lamari, and Amara 2003), intermediaries (Austen-Smith and Wright 1992; Carpenter, Esterling, and Lazer 2003; Coglianese, Zeckhauser, and Parson 2004; Larocca 2004; Olson 1965), and users (Black 2001; Campbell et al. 2009; Harries, Elliott, and Higgins 1999; Jacobson, Butterill, and Goering 2005; Knott and Wildavsky 1980) all invest their energy and resources in knowledge exchange processes to the extent that they perceive this investment to be profitable. This, in turn, translates into encouragement for the implementation of institutional incentive schemes to increase use (e.g., for producers, tagged grant money or institutional recognition, and for users, either allocated time or favorable organizational rules, procedures, norms, and culture) (Campbell et al. 2009; Lomas 1990, 1993a, 2000; Mitton et al. 2007; Weiss 1978).

If we accept the idea that knowledge exchange activities imply some forms of cost (e.g., time, money, attention), it follows logically that some people in the knowledge exchange system must incur those costs. The challenge, then, is to understand the cost-sharing equilibrium between individuals and groups (if, in fact, one does exist, which we suggest is not always the case). Given the agreement in the literature on the typology of producers, intermediaries, and users, this typology can be used heuristically to discuss cost sharing. The user-pull (users invest resources to obtain knowledge perceived as useful) and producer-push (producers invest efforts to disseminate their findings) models already imply that the effort is assumed primarily by individuals from one or the other end of the process (Landry, Amara, and Lamari 2001b; Lavis, Ross, and Hurley 2002; Yin and Moore 1988). Likewise, a concentration of either benefits or losses (Baumgartner and Leech 1996; Olson 1965) or a high level of polarization turns many actors into de facto lobbyists (intermediaries) advocating for specific action proposals, that is, deliberately transmitting knowledge to defend their preferences or advance their interests.

Social Structuring

The last important contextual factor raised by our review is the influence of social structures. Both empirical analyses of knowledge exchange interventions (Belkhodja et al. 2007; Beyer and Trice 1982; Carpenter, Esterling, and Lazer 2004; Huberman 1987; Kramer and Wells 2005; Landry, Amara, and Lamari 2001a; Shulha and Cousins 1997) and individual-level theories of human behavior (Albaek 1995; Bourdieu 1980, 1994) show that interpersonal trust facilitates and encourages communication and that repeated communications create trust. In the long run, this feedback process helps open natural and enduring communication channels and is at the core of the numerous recommendations in favor of developing a close collaboration between producers and users (Barnsley, Lemieux-Charles, and McKinney 1998; Beyer and Trice 1982; Havelock 1969; Lavis et al. 2008; Lester and Wilds 1990; Lomas 1990, 2000; Nagel 2006; Rich 1991; Shulha and Cousins 1997; Yin and Gwaltney 1981). Empirical analysis of lobbying techniques (Carpenter, Esterling, and Lazer 2004; Hansen 1991; Heaney 2006; Heinz et al. 1993; Milbrath 1963) also supports the idea that developing and maintaining communication channels is a credible way to enhance use because doing this both facilitates access and raises the perceived value of the information transmitted.

From an instrumental standpoint, the question is the extent to which it is possible to actually intervene in the shape and nature of communication networks. Modifying the organization's formal structure is one way to act on those factors, a well-known example of which is the creation of “knowledge broker” positions. Knowledge brokers are hired by users’ organizations and are expected to work as boundary spanners, identifying, selecting, and obtaining information from the environment and efficiently transmitting it within the organization according to needs. Although conceptually appealing, presentations of this model often fail to discuss the practical difficulties of such a role in communication networks in which numerous sources of information are competing, polarization and politics matter, and information is unlikely to be neutral, objective data but, rather, bundled action proposals. The brokers’ structural position inside organizations is likely to limit their actual interventional capacity to contexts with low polarization and significant user investment.

We should also note that, by nature, knowledge exchange systems are not restricted to formal communication channels but extend well beyond organizational boundaries. Deliberately modifying network characteristics of such systems is thus very difficult. The framework developed here suggests that close collaboration between users and producers or intermediaries can exist only when a viable cost-sharing equilibrium is found. Such an equilibrium can be found, for example, when users are willing to invest enough resources to hire producers as consultants (Jacobson, Butterill, and Goering 2005; Patton 1978, 1988). Another viable equilibrium highlighted in the material reviewed is seen when producers or, much more often, intermediaries perceive knowledge exchange activities as a legitimate and viable means to defend their own opinions, preferences, or interests and decide to invest in lobby-like activities and bear most of the costs. In contexts in which the boundaries of polarization are relatively stable, this last equilibrium is likely to give rise to self-reinforcing institutionalized communication networks among allies with a significant amount of mutual trust (Carpenter, Esterling, and Lazer 2003, 2004; Heaney 2006; Heinz et al. 1993; Jordan and Maloney 1997; Knoepfel and Kissling-Naf 1998; Konig and Brauninger 1998; Rhodes 1990; Sabatier and Jenkins-Smith 1993).

Discussion

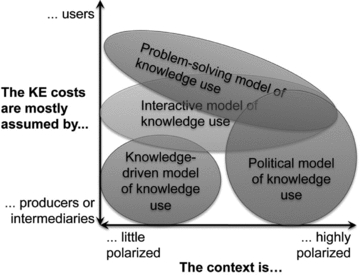

Carol Weiss developed a typology of the different meanings of “knowledge use” that had a considerable impact on the field (Weiss 1977a, 1977b, 1978, 1979). Weiss's typology is based on seven “meanings,” four of which are of interest here because their conditions of efficacy are, in our opinion, closely related to the core contextual dimensions we discuss. Those four are the knowledge-driven, problem-solving, interactive, and political models. To summarize—quite drastically—Weiss's exhaustive argument, the knowledge-driven model rests on the ideas that basic research discloses opportunities that may be relevant to public policy and that procedures should be implemented to ensure that promising knowledge is tested and, when pertinent, implemented. The problem-solving model reverses that logic, positing that research should try to provide empirical evidence that helps solve pre-identified policy problems. The interactive model posits that those engaged in policymaking seek information from a variety of sources through a disorderly set of interconnections that contribute, in the end, to making sense of the problem and developing solutions. Finally, in the political model, users have already taken a stand that research is not likely to change, and so information becomes ammunition for the side that finds its conclusions congenial and supportive.

In figure 2, we extend Weiss's typology by suggesting that these models can be interpreted not only as “meanings” but also as actual types of use. We contend, too, that two of the core dimensions of context discussed here can be helpful in understanding the conditions of efficacy for the models of use.

Figure 2. Models of Use in Relation with Cost-Sharing and Polarization Dimensions of the Context.

This contention tallies with the main conclusion of this review, that context dictates the realm of the possible for knowledge exchange strategies aimed at influencing policymaking or organizational behavior. If a given issue's salience and prioritization are high enough for users to initiate knowledge exchange efforts and invest resources in them, then the probability of its use and impact can, from the outset, be presumed to be high. In minimally polarized contexts, use will likely resemble Weiss's problem-solving model (1979), and in highly polarized ones it will probably look like a political model of use (Weiss 1979).

When users are unwilling to bear the costs of the knowledge exchange intervention, a viable cost-sharing equilibrium exists only if others, whether producers or intermediaries, are motivated enough to disseminate information actively through lobby-like techniques. Again, this is much more likely in significantly polarized contexts, which are amenable to the political use of knowledge (Weiss 1979). In producer-driven, minimally polarized contexts, a viable cost-sharing equilibrium is much less probable, but if it is found, use is likely to resemble Weiss's (1979) knowledge-driven model. We acknowledge, however, the conspicuous absence in the material we reviewed of any empirical data or convincing theoretical frameworks supporting the hypothesis that significant use is likely in this last situation. In contrast, models that are mostly derived from political science offer quite interesting theoretical justifications for the fact that political use can lead to positive outcomes (Albaek 1995; Brunsson 1982; Feldman and March 1981; Greenberg and Mandell 1991; Henry and Mark 2003; Huberman 1987). Such models are based on the idea that knowledge—conceptualized here as convincing and politically viable action proposals—is considered as a commodity and is shared with allies. As long as the information is scientifically sound, its strategic dissemination and exchange in a collective system can only increase its marginal impact, compared with that of other information sources, something that would be construed by many people as desirable. Questions remain about the degree to which this transmission process distorts knowledge through a form of lying by omission.

From a use perspective, the ideal situation is one in which both users and producers are willing to invest in knowledge exchange. This allows for collaborative models of knowledge exchange (Kothari, Birch, and Charles 2005; Lavis et al. 2008; Weiss 1979), in which knowledge is jointly produced (Jacob 2006) through the pooling of users’ expertise (i.e., in-depth understanding of the context and of implementation-relevant dimensions) (Glaser, Abelson, and Garrison 1983; Julnes and Holzer 2001; Lomas 1993a; Pressman and Wildavsky 1973) and producers’ expertise (i.e., capacity to identify and access available knowledge, assess its internal validity, and contribute to knowledge production). At the macrolevel, many scholars have pointed out that both the institutional recognition of formal scientific knowledge use and individual-level incentives affect the likelihood of achieving viable cost-sharing equilibria (Barnsley, Lemieux-Charles, and McKinney 1998; Campbell et al. 2009; Dunn 1980; Havelock 1969; Kothari, Birch, and Charles 2005; Lomas 1990, 1993b, 2000; Lovell and Turner 1988; Sauerborn, Nitayarumphong, and Gerhardus 1999; Steed 1988; Webber 1986, 1987). Potential avenues of intervention, for instance, would be to have institutional rules that value scientific validity and independence in the commissioning and use of policy-relevant studies and that structure participants’ norms and values into the relative weighting of such information (Fox 2010; Gano, Crowley, and Guston 2007). That said, however, very few levers are available at the micro level to act on the perceptions of users or producers in order to influence their willingness to invest resources or efforts in knowledge transfer. Our review failed to find any knowledge transfer techniques likely to bring about significant nonpolitical use in situations in which there is no viable cost-sharing equilibrium or the equilibrium places most of the effort on producers or intermediaries.
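As an illustration only, the relations between context and models of use discussed in this section can be sketched as a small lookup. This is a minimal sketch under stated assumptions and not an instrument drawn from the material reviewed: it treats polarization as binary although the text describes it as a matter of degree, it assumes that the collaborative case corresponds to Weiss's interactive model regardless of polarization, and every name in it (`Polarization`, `CostBearer`, `likely_model_of_use`) is hypothetical.

```python
from enum import Enum


class Polarization(Enum):
    # Assumption: polarization is reduced to two levels for illustration.
    LOW = "low"
    HIGH = "high"


class CostBearer(Enum):
    # Who bears most of the costs of the knowledge exchange effort.
    USERS = "users"
    PRODUCERS_OR_INTERMEDIARIES = "producers or intermediaries"
    SHARED = "users and producers jointly"
    NOBODY = "nobody"


def likely_model_of_use(cost_bearer, polarization):
    """Return the model of use (after Weiss 1979) that the discussion
    associates with a given context. Illustrative only."""
    if cost_bearer is CostBearer.NOBODY:
        # No one is willing to invest: no viable cost-sharing equilibrium.
        return "no viable cost-sharing equilibrium"
    if cost_bearer is CostBearer.SHARED:
        # Assumption: the collaborative case is mapped to the interactive
        # model whatever the level of polarization.
        return "interactive"
    if polarization is Polarization.HIGH:
        # Highly polarized contexts are amenable to political use,
        # whether users or producers/intermediaries bear the costs.
        return "political"
    if cost_bearer is CostBearer.USERS:
        return "problem-solving"
    # Producer-driven, minimally polarized context: an equilibrium is much
    # less probable, and the review found no support for significant use.
    return "knowledge-driven"


if __name__ == "__main__":
    for bearer in CostBearer:
        for level in Polarization:
            print(bearer.value, "|", level.value, "->",
                  likely_model_of_use(bearer, level))
```

Such a lookup is deliberately coarse. Its only purpose is to make explicit that, in this framework, the expected type of use follows from who bears the costs and how polarized the issue is, rather than from the knowledge exchange technique chosen.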

Conclusion: Advice for Practice and Research

From this analytical review of the literature on collective-level knowledge transfer in diverse disciplinary fields, we can offer an integrative model to understand and analyze this phenomenon's main dimensions. The model we developed is based on three core dimensions that emerged inductively from the analysis: level of polarization (politics), cost-sharing equilibrium (economics), and institutionalized channels of communication (social structuring).

To conclude, we offer some reflections on the future of research and practice in the field. On the research side, as previous results have suggested (Mitton et al. 2007), our analysis of the literature shows that the quest for context-independent evidence (e.g., Dobbins et al. 2009) on the efficacy of knowledge exchange strategies is probably doomed. Collective knowledge exchange and use are phenomena so deeply embedded in organizational, policy, and institutional contexts that externally valid evidence pertaining to the efficacy of specific knowledge exchange strategies is unlikely to be forthcoming. On the practice side, our results suggest that the best available source of advice for someone designing or implementing a knowledge exchange intervention will probably be found in empirically informed and sound conceptual frameworks that can be used as field guides to decode the context and understand its impact on knowledge use and the design of exchange interventions. Much of the available practice-oriented advice on knowledge transfer promotes either one particular technique as a solution to the challenges of knowledge exchange and utilization or else very linear, knowledge-driven processes. The evidence reviewed here does not support most of that kind of advice. To design a knowledge exchange intervention to maximize knowledge use, we suggest starting with a detailed analysis of the context using the kind of framework developed here.

Acknowledgments

A Canadian Institutes for Health Research (CIHR) open grant (MOP 84259) made this research possible. The authors also want to thank Pierre Bergeron, Denis Roy, and Jean Rochon from the Institut National de Santé Publique du Québec (INSPQ), as well as John Lavis from McMaster University and Astrid Brousselle from Université de Sherbrooke, for their insightful comments on this material.

Appendix

List of “Seminal” References

| 1 | Ainsworth, S., and I. Sened. 1993. The Role of Lobbyists: Entrepreneurs with Two Audiences. American Journal of Political Science 37(3):834–66. |

| 2 | Allison, Graham. 1971. Essence of Decision: Explaining the Cuban Missile Crisis, 1st ed. Boston: Little Brown. The snowball search included the 2nd edition as well: Allison, G., and P. Zelikow. 1999. Essence of Decision: Explaining the Cuban Missile Crisis. New York: Addison-Wesley. |

| 3 | Austen-Smith, D. 1993. Information and Influence: Lobbying for Agendas and Votes. American Journal of Political Science 37(3):799–833. |

| 4 | Bennedsen, M., and S.E. Feldmann. 2006. Informational Lobbying and Political Contributions. Journal of Public Economics 90(4/5):631–56. |

| 5 | Carpenter, D.P., K.M. Esterling, and D.M.J. Lazer. 1998. The Strength of Weak Ties in Lobbying Networks: Evidence from Health-Care. Journal of Theoretical Politics 10(4):417–44. |

| 6 | Carpenter, D.P., K.M. Esterling, and D.M.J. Lazer. 2003. The Strength of Strong Ties: A Model of Contact-Making in Policy Networks with Evidence from U.S. Health Politics. Rationality and Society 15(4):411–40. |

| 7 | Choo, C.W. 1991. Towards an Information Model of Organizations. Canadian Journal of Information Science—Revue canadienne des sciences de l’information 16(3):32–62. |

| 8 | Choo, C.W. 2007. The Social Use of Information in Organizational Groups. In Information Management: Setting the Scene, vol. 1, ed. A. Huizing and E.J. de Vries, 111–126. Oxford: Elsevier Science. |

| 9 | Cohen, M.D., J.G. March, and J.P. Olsen. 1972. A Garbage Can Model of Organizational Choice. Administrative Science Quarterly 17(1):1–25. |

| 10 | Considine, M. 1998. Making up the Government's Mind: Agenda Setting in a Parliamentary System. Governance 11(3):297–317. |

| 11 | Forester, J. 1984. Bounded Rationality and the Politics of Muddling Through. Public Administration Review 44(1):23–31. |

| 12 | Huberman, M. 1987. Steps toward an Integrated Model of Research Utilization. Knowledge: Creation, Diffusion, Utilization 8(4):586–611. |

| 13 | Huberman, M. 1994. Research Utilization: The State of the Art. Knowledge, Technology & Policy 7(4):13–33. |

| 14 | Jordan, G. 1990. Sub-governments, Policy Communities and Networks: Refilling the Old Bottles? Journal of Theoretical Politics 2(3):319–38. |

| 15 | Knott, J., and A. Wildavsky. 1980. If Dissemination Is the Solution, What Is the Problem? Knowledge: Creation, Diffusion, Utilization 1(4):537–78. |

| 16 | Lavis, J.N., S.E. Ross, and J.E. Hurley. 2002. Examining the Role of Health Services Research in Public Policymaking. Milbank Quarterly 80(1):125–54. |

| 17 | Lindblom, C.E. 1959. The Science of “Muddling Through.” Public Administration Review 19(2):79–88. |

| 18 | Lomas, J. 1993a. Diffusion, Dissemination, and Implementation: Who Should Do What? Annals of the New York Academy of Sciences 703(1):226–35. |

| 19 | Lomas, J. 1993b. Retailing Research: Increasing the Role of Evidence in Clinical Services for Childbirth. The Milbank Quarterly 71(3):439–75. During the snowball process we noted a significant number of articles citing only the short introductory essay for the group of articles that included the Lomas article, and thus we also included this source in the snowball search: Lomas, J., J.E. Sisk, and B. Stocking. 1993. From Evidence to Practice in the United States, the United Kingdom, and Canada. The Milbank Quarterly 71(3):405–10. |

| 20 | March, J.G. 1988. Decisions and Organizations. New York: Blackwell. |

| 21 | March, J.G. 1994. A Primer on Decision Making: How Decisions Happen. New York: Free Press. |

| 22 | Milbrath, L.W. 1960. Lobbying as a Communication Process. Public Opinion Quarterly 24(1):32–53. |

| 23 | Pettigrew, A.M. 1972. Information Control as a Power Resource. Sociology 6(2):187–204. |

| 24 | Pfeffer, J., and G.R. Salancik. 1974. Organizational Decision Making as a Political Process: The Case of a University Budget. Administrative Science Quarterly 19(2):135–51. |

| 25 | Potters, J., and F. Van Winden. 1990. Modelling Political Pressure as Transmission of Information. European Journal of Political Economy 6(1):61–88. |

| 26 | Rhodes, R. 1990. Policy Networks: A British Perspective. Journal of Theoretical Politics 2(3):293–317. |

| 27 | Rich, R.F. 1977. Use of Social Information by Federal Bureaucrats: Knowledge for Action versus Knowledge for Understanding. In Using Social Research in Public Policy Making, ed. C.H. Weiss, 199–233. Lexington, MA: Lexington Books. |

| 28 | Rich, R.F. 1979. The Pursuit of Knowledge. Knowledge: Creation, Diffusion, Utilization 1(1):6–30. |

| 29 | Sabatier, P.A., and H.C. Jenkins-Smith. 1993. Policy Change and Learning: An Advocacy Coalition Approach. Boulder, CO: Westview Press. |

| 30 | Sabatier, P.A., and H.C. Jenkins-Smith. 1999. Theories of the Policy Process. Boulder, CO: Westview Press. |

| 31 | Salisbury, R.H. 1969. An Exchange Theory of Interest Groups. Midwest Journal of Political Science 13(1):1–32. |

| 32 | Weiss, C.H. 1979. The Many Meanings of Research Utilization. Public Administration Review 39(5):426–31. |

| 33 | Yin, R.K., and M.K. Gwaltney. 1981. Knowledge Utilization as a Networking Process. Knowledge: Creation, Diffusion, Utilization 2(4):555–80. |

References

- Ainsworth S. Regulating Lobbyists and Interest Group Influence. Journal of Politics. 1993;55:41–56.

- Albaek E. Between Knowledge and Power: Utilization of Social Science in Public Policy Making. Policy Sciences. 1995;28:79–100.

- Alkin MC, Taut SM. Unbundling Evaluation Use. Studies in Educational Evaluation. 2003;29:1–12.

- Amara N, Ouimet M, Landry R. New Evidence on Instrumental, Conceptual, and Symbolic Utilization of University Research in Government Agencies. Science Communication. 2004;26:75–106.

- Arendt H. Vérité et politique. In: Arendt H, editor. La crise de la culture. Paris: Folio; 1972. pp. 289–336.

- Austen-Smith D. Information and Influence: Lobbying for Agendas and Votes. American Journal of Political Science. 1993;37:799.

- Austen-Smith D, Wright JR. Competitive Lobbying for a Legislator's Vote. Social Choice and Welfare. 1992;9:229–57.

- Bardach E. The Dissemination of Policy Research to Policymakers. Knowledge: Creation, Diffusion, Utilization. 1984;6:125–44.

- Barnsley J, Lemieux-Charles L, McKinney MM. Integrating Learning into Integrated Delivery Systems. Health Care Management Review. 1998;23:18–28. doi: 10.1097/00004010-199801000-00003.

- Bauer RA, Pool Id, Dexter LA. American Business & Public Policy: The Politics of Foreign Trade. New York: Atherton Press; 1963.

- Baumgartner F, Jones BD. Agendas and Instability in American Politics. Chicago: University of Chicago Press; 1993.

- Baumgartner F, Leech BL. The Multiple Ambiguities of “Counteractive Lobbying.” American Journal of Political Science. 1996;40:521–42.

- Belkhodja O, Amara N, Landry R, Ouimet M. The Extent and Organizational Determinants of Research Utilization in Canadian Health Services Organizations. Science Communication. 2007;28:377–417.

- Beyer JM, Trice HM. The Utilization Process: A Conceptual Framework and Synthesis of Empirical Findings. Administrative Science Quarterly. 1982;27:591–622.

- Black N. Evidence Based Policy: Proceed with Care. British Medical Journal. 2001;323:275. doi: 10.1136/bmj.323.7307.275.

- Booth T. Researching Policy Research: Issues of Utilization in Decision Making. Knowledge: Creation, Diffusion, Utilization. 1990;12:80–100.

- Bourdieu P. Le sens pratique. Paris: Éditions de Minuit; 1980.

- Bourdieu P. La représentation politique: Éléments pour une théorie du champ politique. Actes de la recherche en sciences sociales. 1981;36–37:3–24.

- Bourdieu P. La délégation et le fétichisme politique. Actes de la recherche en sciences sociales. 1984a;52–53:49–55.

- Bourdieu P. Homo academicus. Paris: Éditions de Minuit; 1984b.

- Bourdieu P. Un acte désintéressé est-il possible? In: Bourdieu P, editor. Raisons pratiques sur la théorie de l’action. Paris: Éditions du Seuil; 1994. pp. 149–67.

- Bourdieu P. Le mystère du ministère: Des volontés particulières à la “volonté générale.” Actes de la recherche en sciences sociales. 2001;140:7–11.

- Bourgeois E, Nizet J. Influence in Academic Decision-Making: Towards a Typology of Strategies. Higher Education. 1993;26:387–409.

- Bowen S, Zwi AB. Pathways to “Evidence-Informed” Policy and Practice: A Framework for Action. PLoS Medicine. 2005;2:600–605. doi: 10.1371/journal.pmed.0020166.

- Browne WP. Organized Interests and Their Issue Niches: A Search for Pluralism in a Policy Domain. Journal of Politics. 1990;52:477–509.

- Brunsson N. The Irrationality of Action and Action Rationality: Decisions, Ideologies and Organizational Actions. Journal of Management Studies. 1982;19:29–44.

- Bryant T. A Critical Examination of the Hospital Restructuring Process in Ontario, Canada. Health Policy. 2003;64:193–205. doi: 10.1016/s0168-8510(02)00157-4.

- Burstein P, Hirsh CE. Interest Organizations, Information, and Policy Innovation in the US Congress. Sociological Forum. 2007;22:174–99.

- Campbell D, Redman S, Jorm L, Cooke M, Zwi AB, Rychetnik L. Increasing the Use of Evidence in Health Policy: Practice and Views of Policy Makers and Researchers. Australia and New Zealand Health Policy. 2009;6:21. doi: 10.1186/1743-8462-6-21.

- Caplan N. A Minimal Set of Conditions Necessary for the Utilization of Social Science Knowledge in Policy Formulation at the National Level. In: Weiss CH, editor. Using Social Research in Public Policy Making. Lexington, MA: Lexington Books; 1977. pp. 183–98.

- Caplan N. The Two-Communities Theory and Knowledge Utilization. American Behavioral Scientist. 1979;22:459.

- Caplan N, Morrison A, Stambaugh RJ. The Use of Social Science Knowledge in Policy Decisions at the National Level: A Report to Respondents. Ann Arbor: The University of Michigan, Center for Research on Utilization of Scientific Knowledge; 1975.

- Carden F. Issues in Assessing the Policy Influence of Research. International Social Science Journal. 2004;56:135–51.

- Carpenter DP, Esterling KM, Lazer DMJ. The Strength of Strong Ties: A Model of Contact-Making in Policy Networks with Evidence from U.S. Health Politics. Rationality and Society. 2003;15:411.

- Carpenter DP, Esterling KM, Lazer DMJ. Friends, Brokers, and Transitivity: Who Informs Whom in Washington Politics? Journal of Politics. 2004;66:224–46.

- Coglianese C, Zeckhauser R, Parson E. Seeking Truth for Power: Informational Strategy and Regulatory Policymaking. Minnesota Law Review. 2004;89:277–341.

- Considine M. Making up the Government's Mind: Agenda Setting in a Parliamentary System. Governance. 1998;11:297–317.

- Cousins JB, Leithwood KA. Current Empirical Research on Evaluation Utilization. Review of Educational Research. 1986;56:331–64.

- Denis J-L, Lehoux P, Tré G. L’utilisation des connaissances produites. In: Ridde V, Dagenais C, editors. Approches et pratiques en évaluation de programme. Montréal: Presses de l’Université de Montréal; 2009. pp. 177–91.

- Dobbins M, Hanna SE, Ciliska D, Manske S, Cameron R, Mercer SL, O’Mara L, DeCorby K, Robeson P. A Randomized Controlled Trial Evaluating the Impact of Knowledge Translation and Exchange Strategies. Implementation Science. 2009;4:61. doi: 10.1186/1748-5908-4-61.

- Dobrow MJ, Goel V, Upshur REG. Evidence-Based Health Policy: Context and Utilisation. Social Science & Medicine. 2004;58:207–17. doi: 10.1016/s0277-9536(03)00166-7.

- Dunn WN. The Two-Communities Metaphor and Models of Knowledge Use: An Exploratory Case Survey. Knowledge: Creation, Diffusion, Utilization (Science Communication). 1980;1:515–36.

- Dunn WN. Measuring Knowledge Use. Knowledge: Creation, Diffusion, Utilization (Science Communication). 1983;5:120.

- Eccles M, Grimshaw J, Walker A, Johnston M, Pitts N. Changing the Behavior of Healthcare Professionals: The Use of Theory in Promoting the Uptake of Research Findings. Journal of Clinical Epidemiology. 2005;58(2):107–12. doi: 10.1016/j.jclinepi.2004.09.002.

- Edelman M. Political Language: Words that Succeed and Policies that Fail. New York: Academic Press; 1977.

- Elliott H, Popay J. How Are Policy Makers Using Evidence? Models of Research Utilisation and Local NHS Policy Making. Journal of Epidemiology and Community Health. 2000;54:461–68. doi: 10.1136/jech.54.6.461.

- Epstein D, O’Halloran S. A Theory of Strategic Oversight: Congress, Lobbyists, and the Bureaucracy. Journal of Law, Economics, & Organization. 1995;11:227–55.

- Feldman M, March JG. Information in Organizations as Signal and Symbol. Administrative Science Quarterly. 1981;26:171–86.

- Florio E, Demartini JR. The Use of Information by Policy-Makers at the Local Community Level. Knowledge: Creation, Diffusion, Utilization. 1993;15:106–23.

- Forbes A, Griffiths P. Methodological Strategies for the Identification and Synthesis of “Evidence” to Support Decision-Making in Relation to Complex Healthcare Systems and Practices. Nursing Inquiry. 2002;9:141–55. doi: 10.1046/j.1440-1800.2002.00146.x.

- Fox DM. The Convergence of Science and Governance: Research, Health Policy, and American States. Berkeley: University of California Press; 2010.

- Freeman R. Epistemological Bricolage—How Practitioners Make Sense of Learning. Administration & Society. 2007;39:476–96.

- Freemantle N, Nazareth I, Eccles M, Wood J, Haines A. A Randomised Controlled Trial of the Effect of Educational Outreach by Community Pharmacists on Prescribing in UK General Practice. British Journal of General Practice. 2002;52(477):290–95.

- Frenk J. Balancing Relevance and Excellence: Organizational Responses to Link Research with Decision Making. Social Science & Medicine. 1992;35:1397–1404. doi: 10.1016/0277-9536(92)90043-p.

- Gabbay J, le May A. Evidence Based Guidelines or Collectively Constructed “Mindlines?” Ethnographic Study of Knowledge Management in Primary Care. British Medical Journal. 2004;329(7473):1013–18. doi: 10.1136/bmj.329.7473.1013.

- Gabbay J, le May A, Jefferson H, Webb D, Lovelock R, Powell J, Lathlean J. A Case Study of Knowledge Management in Multi-agency Consumer-Informed “Communities of Practice”: Implications for Evidence-Based Policy Development in Health and Social Services. Health: An Interdisciplinary Journal for the Social Study of Health, Illness and Medicine. 2003;7:283–310.

- Gano GL, Crowley JE, Guston D. “Shielding” the Knowledge Transfer Process in Human Service Research. Journal of Public Administration Research and Theory. 2007;17:39–60.

- Gilovich T, Griffin D, Kahneman D. Heuristics and Biases: The Psychology of Intuitive Judgment. New York: Cambridge University Press; 2002.

- Glaser EM, Abelson HH, Garrison KN. Putting Knowledge to Use: Facilitating the Diffusion of Knowledge and the Implementation of Planned Change. San Francisco: Jossey-Bass; 1983.

- Greenberg DH, Mandell MB. Research Utilization in Policymaking: A Tale of Two Series (of Social Experiments). Journal of Policy Analysis and Management. 1991;10:633–56.

- Greenhalgh T, Robert G, Bate P, Kyriakidou O, Macfarlane F, Peacock R. How to Spread Good Ideas: A Systematic Review of the Literature on Diffusion, Dissemination and Sustainability of Innovations in Health Service Delivery and Organisation. London: Report for the National Co-ordinating Centre for NHS Service Delivery and Organisation (NCCSDO); 2004.

- Greenhalgh T, Robert G, Macfarlane F, Bate P, Kyriakidou O. Diffusion of Innovations in Service Organizations: Systematic Review and Recommendations. The Milbank Quarterly. 2004;82(4):581–629. doi: 10.1111/j.0887-378X.2004.00325.x.

- Greenhalgh T, Robert G, Macfarlane F, Bate P, Kyriakidou O, Peacock R. Storylines of Research in Diffusion of Innovation: A Meta-narrative Approach to Systematic Review. Social Science & Medicine. 2005;61(2):417–30. doi: 10.1016/j.socscimed.2004.12.001.

- Greenhalgh T, Russell J. Reframing Evidence Synthesis as Rhetorical Action in the Policy Making Drama. Healthcare Policy. 2006;1:34–42.

- Grimshaw J, Eccles M, Thomas R, MacLennan G, Ramsay C, Fraser C, Vale L. Toward Evidence-Based Quality Improvement: Evidence (and Its Limitations) of the Effectiveness of Guideline Dissemination and Implementation Strategies 1966–1998. Journal of General Internal Medicine. 2006;21:S14–20. doi: 10.1111/j.1525-1497.2006.00357.x.

- Grimshaw JM, Eccles MP, Walker AE, Thomas RE. Changing Physicians’ Behavior: What Works and Thoughts on Getting More Things to Work. Journal of Continuing Education in the Health Professions. 2002;22:237–43. doi: 10.1002/chp.1340220408.

- Grol R, Bosch MC, Hulscher M, Eccles MP, Wensing M. Planning and Studying Improvement in Patient Care: The Use of Theoretical Perspectives. The Milbank Quarterly. 2007;85(1):93–138. doi: 10.1111/j.1468-0009.2007.00478.x.

- Grol R, Grimshaw J. From Best Evidence to Best Practice: Effective Implementation of Change in Patients’ Care. The Lancet. 2003;362:1225–30. doi: 10.1016/S0140-6736(03)14546-1.

- Haas P. Epistemic Communities and International Policy Coordination: Introduction. International Organization. 1992;46:1–35.

- Hall RL, Deardorff AV. Lobbying as Legislative Subsidy. American Political Science Review. 2006;100:69–84.

- Hansen JM. Gaining Access: Congress and the Farm Lobby, 1919–1981. Chicago: University of Chicago Press; 1991.

- Harries U, Elliott H, Higgins A. Evidence-Based Policy-Making in the NHS: Exploring the Interface between Research and the Commissioning Process. Journal of Public Health Medicine. 1999;21:29–36. doi: 10.1093/pubmed/21.1.29.

- Havelock RG. Planning for Innovation through Dissemination and Utilization of Knowledge. Ann Arbor: The University of Michigan Institute for Social Research; 1969.

- Heaney MT. Brokering Health Policy: Coalitions, Parties, and Interest Group Influence. Journal of Health Politics, Policy and Law. 2006;31:887–944. doi: 10.1215/03616878-2006-012.

- Heclo H. Issue Networks and the Executive Establishment: Government Growth in an Age of Improvement. In: Beer SH, King AS, et al., editors. The New American Political System. Washington, DC: American Enterprise Institute for Public Policy Research; 1978. pp. 87–124.

- Heinz JP, Laumann EO, Nelson RL, Salisbury RH. The Hollow Core: Private Interests in National Policy Making. Cambridge: Harvard University Press; 1993.