Abstract

In this paper, we propose a novel symmetrical EEG/fMRI fusion method which combines EEG and fMRI by means of a common generative model. We use a total variation (TV) prior to model the spatial distribution of the cortical current responses and hemodynamic response functions, and utilize spatially adaptive temporal priors to model their temporal shapes. The spatial adaptivity of the prior model allows for adaptation to the local characteristics of the estimated responses and leads to high estimation performance for the cortical current distribution and the hemodynamic response functions. We utilize a Bayesian formulation with a variational Bayesian framework and obtain a fully automatic fusion algorithm. Simulations with synthetic data and experiments with real data from a multimodal study on face perception demonstrate the performance of the proposed method.

Keywords: Multimodal fusion, M/EEG source localization, Spatial adaptivity, Total variation, Variational Bayes

Introduction

Electroencephalography (EEG) is one of the most widely used functional brain mapping methods. A main advantage of EEG is that it provides a direct measure of electrical activity in the brain via voltage sensors on the scalp and can therefore achieve a high temporal resolution. However, locating the sources of activity in the brain from EEG measurements is difficult, as an infinite number of source configurations give rise to the same measurements. The same problem is encountered in magnetoencephalography (MEG), where the electrical activity in the brain is measured using magnetic field sensors. Because of this non-uniqueness, EEG and MEG source localization are referred to as ill-posed inverse problems (Hämäläinen et al., 1993).

In the last two decades, a large number of EEG and MEG source localization methods have been proposed in the literature. Due to the similarity of the inverse problems, most methods are applicable to either modality and can be divided into two groups. The first group assumes that there is a small number (typically 1–5) of sources, each modeled by an equivalent current dipole (ECD) (Scherg and Von Cramon, 1986). The dipole locations are found by a nonlinear optimization which minimizes the discrepancy between the model and the data with respect to the dipole locations. While ECD methods are popular in practice, they have two major limitations: first, the number of dipoles has to be specified by the user, and second, the optimization algorithm can get trapped in a local minimum and thus might not find the optimal dipole locations. In fact, ECD methods are known to be unreliable when more than one dipole is used (Yao and Dewald, 2005). The second, more recently proposed group of methods is referred to as distributed methods (Hämäläinen et al., 1993). Methods in this group assume a large number, typically several thousand, of dipoles with fixed locations distributed over the cortical surface. Source localization then amounts to finding the current amplitudes of all dipoles simultaneously, which is still an ill-posed problem since the number of dipoles is much larger than the number of sensors. However, since the dipole locations are fixed, the forward problem is linear and source localization can be regarded as solving an underdetermined linear system of equations, similar to problems encountered in signal and image processing.

In order to find a unique solution, it is necessary to make assumptions about the solution. Such assumptions can be formulated as deterministic regularization terms, as in the minimum norm method (Hämäläinen and Ilmoniemi, 1994), which finds the source configuration with minimal energy, or in the low resolution electromagnetic tomography (LORETA) method (Pascual-Marqui et al., 1994), where a regularization term based on a spatial Laplacian is used to enforce a smooth solution.
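The minimum norm idea can be illustrated with a short sketch. It assumes the common Tikhonov-regularized form Ŝ = Lᵀ(LLᵀ + λI)⁻¹M, where `lam` is a hypothetical regularization weight. The lead field and the currents are random placeholders rather than quantities computed from a head model. As λ → 0, the estimate approaches the smallest-energy source configuration that reproduces the data exactly. LORETA-type methods would instead penalize a spatial Laplacian of the solution.

```python
import numpy as np

def minimum_norm_estimate(L, M, lam):
    """Tikhonov-regularized minimum norm estimate S_hat = L^T (L L^T + lam I)^{-1} M.

    L: (m, n) lead-field matrix, M: (m, t) sensor data, lam: regularization weight (> 0).
    """
    m = L.shape[0]
    gram = L @ L.T + lam * np.eye(m)           # small m x m system instead of an n x n one
    return L.T @ np.linalg.solve(gram, M)      # (n, t) current estimate

rng = np.random.default_rng(0)
m, n, t = 8, 50, 5                             # far more dipoles than sensors
L = rng.standard_normal((m, n))
S_true = rng.standard_normal((n, t))
M = L @ S_true                                 # noiseless measurements

S_hat = minimum_norm_estimate(L, M, lam=1e-8)
print(np.allclose(L @ S_hat, M, atol=1e-5))                 # the estimate reproduces the data
print(np.linalg.norm(S_hat) <= np.linalg.norm(S_true))     # ... with no more energy than S_true
```

Both solutions explain the data equally well. The minimum norm estimate simply selects the one with the smallest Frobenius norm, which is exactly the kind of assumption needed to make the solution unique.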

The source localization problem can also be formulated as a Bayesian inference problem (Baillet and Garnero, 1997), which provides an elegant way to include a priori information about the solution in the form of priors, such as spatial and temporal smoothness priors (Baillet and Garnero, 1997). The priors can either be fixed or be automatically selected from a set of candidate priors by means of Bayesian model selection. Examples of methods using fixed priors are ℓ2-norm methods (Baillet et al., 2001), ℓ1-norm methods (Uutela et al., 1999; Huang et al., 2006), and the Bayesian formulation of the LORETA method (Pascual-Marqui et al., 1994). As noted by Wipf and Nagarajan (2009), a number of methods attempt to perform Bayesian model selection. Examples include methods that use a Gaussian prior whose covariance matrix is a linear combination of covariance components (Phillips et al., 2005; Mattout et al., 2006; Friston et al., 2006, 2008). These methods employ an empirical Bayesian scheme to estimate the hyperparameters controlling the contribution of each component. This formulation is very flexible and allows for the combination of priors such as spatial Laplacian, minimum norm, and depth constraints. Methods which use automatic relevance determination (ARD) (MacKay, 1992; Tipping, 2001; Ramírez, 2005; Wipf, 2006; Wipf et al., 2010) are based on similar ideas, i.e., the estimation of covariance components, but are more effective when the number of components is large. Typically, a separate hyperparameter is used for every diagonal element of the covariance matrix, which leads to a sparse solution, i.e., a solution with a small number of active dipoles, similar to ℓ1-norm regularization.
Many existing M/EEG source localization methods can be formulated in a unified Bayesian framework; for a more thorough review of Bayesian M/EEG source localization methods, we refer to Wipf and Nagarajan (2009), where this framework is introduced.

The Bayesian treatment of M/EEG source localization offers advantages other than the automatic determination of relevant priors. The Bayesian formulation offers a formal way to include information from other functional neuroimaging modalities, such as functional magnetic resonance imaging (fMRI), into the source localization problem.

In recent years, fMRI has become a prominent neuroimaging method as it offers a very high spatial resolution. Its temporal resolution, on the other hand, is limited by technical and physical constraints, which restrict the repetition time (TR) to the order of seconds, as well as by the indirect mechanism through which fMRI measures neuronal activity, i.e., the so-called blood oxygen level dependent (BOLD) contrast (Ogawa et al., 1990; Frahm et al., 1992), which depends on slow hemodynamic processes. The complementary advantages of EEG and fMRI, together with the fact that they can be acquired simultaneously (Laufs et al., 2008), make the two modalities attractive candidates to be combined, or “fused”, with the goal of obtaining functional neuroimaging data with high spatial and temporal resolution.

A number of methods have been proposed for combining M/EEG and fMRI for source localization. They are all based on the assumption that a subset of the neuronal activity is detectable by both modalities (Pflieger and Greenblatt, 2001); thus, fMRI data can be used to inform the source localization method about the location of the sources. In terms of ECD methods, it is possible to constrain the locations of the dipoles to lie within fMRI active areas (George et al., 1995) or to use these areas as starting points for the optimization algorithm (dipole seeding) (Hillyard et al., 1997). More recently, an ECD method using a Bayesian formulation with an fMRI location prior and Markov Chain Monte Carlo sampling has been proposed (Jun et al., 2008). In the distributed formulation, fMRI active areas can be assigned different weights when using a weighted minimum norm method (Liu et al., 1998), or principal component analysis (PCA) and independent component analysis (ICA) can be used to obtain basis signals which can explain both the EEG and fMRI observations (Brookings et al., 2009). Another method is based on an adaptive Wiener filter, where it is assumed that the energy of the electrical activity at every location on the cortex is proportional to the magnitude of the BOLD response at the same location (Liu and He, 2008). It can also be assumed that the cortical activity is sparse, i.e., there is a small but unknown number of active dipoles, which are often located in fMRI active areas. This assumption can be formulated in a Bayesian framework using an ARD prior with different hyperparameters for fMRI active areas (Sato et al., 2004). Another approach is to employ a Bayesian EEG source localization method which can automatically select priors from a set of candidate priors (Phillips et al., 2005; Mattout et al., 2006). When using such a method for EEG/fMRI fusion, location priors can be derived from fMRI activation maps (Mattout et al., 2006).
An advantage of this formulation is the possibility to include every fMRI active cluster as a separate location prior (Henson et al., 2010). Doing so enables the method to automatically adjust the relative prior weights by means of model evidence maximization, which is very powerful since it allows the method to emphasize valid fMRI priors (Henson et al., 2010).

All these methods are considered asymmetric since the fMRI data set is analyzed separately and location priors for source localization are derived from the obtained fMRI activation maps. Since some neuronal activity may only be visible in one modality, the introduction of a fixed fMRI based prior can cause an estimation bias which strongly depends on the way the fMRI prior is introduced (Mattout et al., 2006).

Symmetrical EEG/fMRI fusion methods, which analyze the EEG and fMRI data jointly and do not use an explicit fMRI prior, are believed to be more robust against possible discrepancies between EEG and fMRI. Recently, a method which combines EEG and fMRI symmetrically by means of a common generative model has been proposed (Daunizeau et al., 2007). The method links the modalities by means of a time invariant spatial profile and uses temporal smoothness priors for the cortical currents and the hemodynamic response functions, as well as a spatial smoothness prior based on a spatial Laplacian, which is also used in the LORETA method (Pascual-Marqui et al., 1994). By using a fully Bayesian formulation and variational Bayesian (VB) inference (Jordan et al., 1999; Attias, 2000), the method can estimate all parameters from the data and does not depend on any user defined parameters. More recently, a method with a similar generative model structure has been proposed (Ou et al., 2010). A key difference is that its generative model is not fully symmetric, since the hemodynamic response function for each voxel is treated as an input to the algorithm. Together with a gradient descent based optimization method, this leads to advantages in terms of computational efficiency. Another difference lies in the prior model: the method of Ou et al. (2010) uses a spatially adaptive Laplacian spatial smoothness prior and does not use temporal smoothness priors.

In this paper, we propose a symmetrical EEG/fMRI fusion method which uses a common generative model and spatially adaptive priors. We extend the method by Daunizeau et al. (2007) in several directions and achieve a higher source localization performance. Specifically, we assume that the spatial profile can contain sharp boundaries between active and inactive regions. We model this by means of a total variation (TV) prior (Rudin et al., 1992) for the spatial profile of cortical activity. In contrast to LORETA-type, i.e., spatial Laplacian, priors (Pascual-Marqui et al., 1994), which are commonly employed in existing methods, the TV prior is spatially adaptive, that is, the degree of spatial smoothness imposed by the prior varies depending on the location. Our generative model can therefore explain abrupt changes in cortical activity, which typically occur at the boundaries of brain regions involved in event related processing, while simultaneously enforcing smoothness in the solution (we refer to Strong and Chan (2003) for a thorough analysis of the properties of the TV prior). A fundamental difference between the spatially adaptive Laplacian prior used in Ou et al. (2010) and the TV prior is that the former can only adapt the degree of spatial smoothness on a per-region basis while the TV prior can do so on a per-vertex basis. The spatially adaptive Laplacian prior therefore depends on an a priori segmentation of the cortex and changes in the degree of spatial smoothness can only occur at region boundaries. The TV prior on the other hand does not depend on such a segmentation and can explain changes in the degree of smoothness at arbitrary locations on the cortex. The TV prior was used in Adde et al. (2005) as a deterministic regularization term for the spatial current distribution at a single time instant. The use of the TV prior in this paper in the context of Bayesian inference is fundamentally different and also requires a different discretization. 
The proposed method also utilizes spatially adaptive temporal priors, allowing for adaptation of the amount of temporal smoothness according to the estimated activity in different brain regions. We use a fully Bayesian formulation and estimate all parameters from the data. Due to the form of the TV prior, it is not possible to directly apply standard variational Bayesian methods to estimate the posterior distribution. Therefore, in order to draw inference, we resort to a majorization method recently proposed in Babacan et al. (2008). The method employs a Gaussian approximation to the TV prior, which renders variational distribution approximation possible, but retains the spatial adaptivity of the TV prior.

We demonstrate the effectiveness of the proposed method using both simulation experiments with synthetic EEG and fMRI data and real data from a multimodal study on face perception. We also include comparisons with existing source localization algorithms and show that the proposed method provides higher performance than existing methods in terms of estimation of the spatio-temporal cortical current distribution. Due to the novel prior model, the proposed method also estimates the hemodynamic response functions more accurately than previous symmetrical fusion methods.

Organization of this paper

This paper consists of 5 sections. In the first section we model the EEG/fMRI fusion problem using the Bayesian paradigm and introduce new realistic prior distributions for the spatio-temporal cortical current distribution and the hemodynamic response functions. The Bayesian inference scheme is introduced in the second section. In the third section we report on experiments with simulated data and in the fourth section we apply the proposed method to real data from a multimodal study on face perception. The paper is discussed and conclusions are drawn in the last section. Appendices with a description of the anatomical parceling, a definition of the signal to noise ratio, an explanation of the quality metrics used, and a detailed derivation of the calculated posterior distributions using the variational framework complete the paper.

Notation

We use the following notation throughout this paper: Aij and Ai,j denote the element in the i-th row and j-th column of matrix A, while the i-th element of a vector a is denoted as ai. Ai· denotes a row vector containing the elements of the i-th row of A, while A·i is a column vector containing the elements of the i-th column of A. The operator diag(A) extracts the main diagonal of A as a column vector, whereas Diag(a) is a diagonal matrix with a as its diagonal. The operator vec(A) vectorizes A by stacking its columns, tr(A) denotes the trace of matrix A, and ⊗ denotes the Kronecker product.

Hierarchical Bayesian modeling

In this section we define the hierarchical generative model which forms the basis of the proposed method. In the first part we model the process which gives rise to the observed EEG and fMRI data when the current distribution on the cortex and the hemodynamic response function at every location are known. This constitutes the observation model which corresponds to the lowest level of the hierarchical model. In the second part we describe the spatio-temporal decomposition, which divides the cortex into a number of temporally coherent regions and establishes a connection between EEG and fMRI by means of an unknown time invariant spatial profile. We proceed by describing the spatio-temporal prior model, where we introduce the TV spatial prior, as well as, temporal priors which model varying degrees of temporal smoothness across the surface of the cortex. Following a fully Bayesian formulation, prior distributions for all hyperparameters of the model are defined next. At the end of this section, we combine the introduced probability density functions (pdf) to obtain a joint pdf over the observed data and all parameters of the model, which will enable us to obtain the Bayesian inference procedure defined in the next section.

Observation model

In the following we assume that the data is only related to a single event type. For EEG this means that the raw data is averaged over trials for the same event type in order to obtain event related potentials (ERPs) and for fMRI the event onset times for a single event type are used.

Using the distributed source framework (Hämäläinen et al., 1993) the EEG data is modeled as

$$M = LS + \eta_1 \tag{1}$$

where M is an m×t1 matrix containing the EEG recordings with duration t1 obtained from m electrodes placed on the scalp, S is an unknown n×t1 matrix representing the responses of n normal-oriented current dipoles distributed on the cortical surface, i.e., a spatio-temporal cortical current distribution, L is a known m×n forward operator, also known as lead-field matrix, which can be calculated from the head geometry and tissue conductivities, and η1 is an m×t1 matrix representing noise.

We model the noise η1 for EEG as zero-mean, independent and identically distributed (i.i.d.) Gaussian, resulting in

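$$p(M \mid S, \alpha_1) \propto \alpha_1^{m t_1/2} \exp\left(-\frac{\alpha_1}{2}\, \|M - LS\|_F^2\right) \tag{2}$$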

where α1 is the hyperparameter corresponding to the EEG noise precision.
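The observation model of Eqs. (1) and (2) can be made concrete with a short simulation. The sketch below draws noisy EEG data and evaluates the Gaussian log-likelihood for a few candidate noise precisions. It uses a random placeholder lead field instead of one computed from a head model, and the grid of candidate precisions is arbitrary.

```python
import numpy as np

def eeg_log_likelihood(M, L, S, alpha1):
    """log p(M | S, alpha1) for i.i.d. zero-mean Gaussian noise with precision alpha1 (Eqs. (1)-(2))."""
    resid = M - L @ S
    N = M.size                                   # m * t1 noise entries
    return 0.5 * N * np.log(alpha1 / (2 * np.pi)) - 0.5 * alpha1 * np.sum(resid ** 2)

rng = np.random.default_rng(1)
m, n, t1 = 32, 200, 100
L = rng.standard_normal((m, n))                  # random placeholder lead field
S = rng.standard_normal((n, t1))                 # random placeholder current distribution
alpha1 = 4.0                                     # true noise precision (std 0.5)
M = L @ S + rng.standard_normal((m, t1)) / np.sqrt(alpha1)   # Eq. (1)

grid = [1.0, 2.0, 4.0, 8.0, 16.0]
best = max(grid, key=lambda a: eeg_log_likelihood(M, L, S, a))
print(best)                                      # candidate precision with the highest likelihood
```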

In order to model the fMRI observations it is assumed that there is a linear relationship between the stimulus and the BOLD response, which leads to the following observation model (Marrelec et al., 2002)

$$Y = BH + \eta_2 \tag{3}$$

where Y is the t2 × n matrix containing the fMRI measurements at n voxels on the cortical surface (we assume here that the locations of the voxels coincide with the locations of the EEG current dipoles), H is an unknown k × n matrix representing the hemodynamic response function (HRF) of length k for each voxel, and η2 is the t2 × n matrix with additive noise. The t2 × k matrix B is different from the design matrix in classical fMRI analysis (Friston et al., 1995). The matrix used here implements a convolution and is given by

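$$B_{ij} = \begin{cases} x_{i-j+1}, & 1 \le i-j+1 \le t_2-k+1,\\ 0, & \text{otherwise}, \end{cases} \qquad 1 \le i \le t_2,\ 1 \le j \le k \tag{4}$$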

where the experimental time course $(x_i)_{1 \le i \le t_2-k+1}$ is a discrete time series in which the i-th element encodes an event onset during the i-th fMRI acquisition, i.e., the time series is zero everywhere except at indices corresponding to event onsets, where $x_i = 1$. From Eq. (3) and the structure of B in Eq. (4), it can be seen that the acquired fMRI time series of the j-th voxel is modeled as the convolution of the HRF with the experimental time course x plus additive noise, i.e.,

$$Y_{\cdot j} = x * H_{\cdot j} + (\eta_2)_{\cdot j} \tag{5}$$

where * denotes the (discrete) convolution operator.
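The convolution matrix B can be assembled and checked against a direct convolution, as in the minimal sketch below. The onset indices and the toy HRF values are hypothetical (they are not a canonical HRF), and the helper name `convolution_matrix` is ours. Applying the same B to a k × n matrix H convolves every voxel's HRF at once, as in Eq. (3).

```python
import numpy as np

def convolution_matrix(x, k):
    """Build the t2 x k matrix B of Eq. (4) so that B @ h equals the full convolution x * h.

    x: experimental time course of length t2 - k + 1 (ones at event onsets, zeros elsewhere).
    """
    t2 = len(x) + k - 1
    B = np.zeros((t2, k))
    for j in range(k):
        B[j:j + len(x), j] = x                 # column j is x shifted down by j samples
    return B

k, t2 = 6, 20
x = np.zeros(t2 - k + 1)
x[[0, 7, 12]] = 1.0                            # hypothetical event onsets
h = np.array([0.0, 0.4, 1.0, 0.7, 0.2, -0.1])  # toy HRF of length k

B = convolution_matrix(x, k)
print(B.shape)                                 # (20, 6)
print(np.allclose(B @ h, np.convolve(x, h)))   # True
```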

For the fMRI noise we also assume that the noise is zero-mean, i.i.d. Gaussian, resulting in

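$$p(Y \mid H, \alpha_2) \propto \alpha_2^{t_2 n/2} \exp\left(-\frac{\alpha_2}{2}\, \|Y - BH\|_F^2\right) \tag{6}$$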

where α2 is the hyperparameter corresponding to the fMRI noise precision.

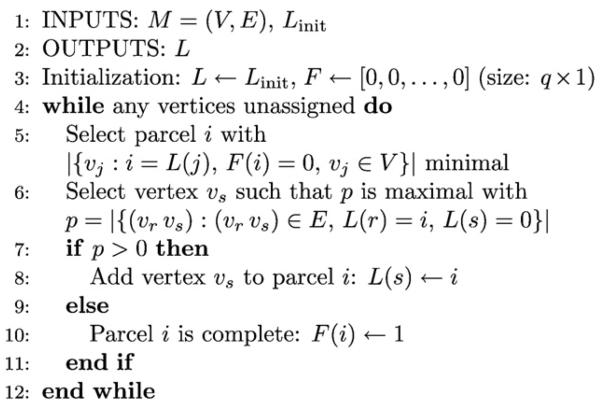

Spatio-temporal decomposition model

In this section we introduce the spatio-temporal decomposition model, which allows us to link EEG and fMRI by means of a common time invariant spatial profile. We adopt the model proposed in Daunizeau et al. (2007) as it provides an elegant way to combine EEG and fMRI. The model utilizes a hierarchical description of the cortical current distribution and the hemodynamic response functions. In order to obtain the hierarchical description, it is assumed that the cortical activity can be described by a set of regions where the responses within a region have similar temporal characteristics, i.e., the responses within a region are temporally coherent. In order to introduce the spatio-temporal decomposition, let us first define a fixed segmentation of the cortex into q regions, or parcels, which we encode using a fixed n × q segmentation matrix C defined as

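$$C_{ij} = \begin{cases} 1, & \text{if the } i\text{-th vertex belongs to the } j\text{-th parcel},\\ 0, & \text{otherwise} \end{cases} \tag{7}$$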

In this work the matrix C is obtained by a segmentation procedure which uses a region growing algorithm; the procedure is described in Appendix A. However, we note that the segmentation procedure itself is not an integral part of the proposed EEG/fMRI fusion method. By assuming that the electrical responses within each region have the same shape with different scales, the coherency assumption for EEG is formalized by

$$S = \mathrm{Diag}(w_{\mathrm{EEG}})\, C X + \rho_1 \tag{8}$$

where wEEG is an n × 1 vector representing the unknown spatial profile of the cortical currents, X is a q × t1 matrix with the unknown temporal shape of the currents for each region, and ρ1 is an n × t1 matrix representing residual activity which cannot be explained by the model. From Eqs. (7) and (8) it can be seen that if the i-th dipole lies within the j-th parcel, the current waveform of the dipole is modeled as the waveform Xj· of the j-th parcel, scaled by the scaling variable $(w_{\mathrm{EEG}})_i$ of the i-th dipole, i.e.,

$$S_{i\cdot} = (w_{\mathrm{EEG}})_i\, X_{j\cdot} + (\rho_1)_{i\cdot} \tag{9}$$
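The following sketch builds the segmentation matrix C of Eq. (7) from a vector of parcel labels. It then checks that Eq. (8), with the residual omitted, reproduces the per-dipole relation of Eq. (9). The parcel labels, profile values and waveforms are hypothetical placeholders.

```python
import numpy as np

def segmentation_matrix(labels, q):
    """n x q indicator matrix of Eq. (7): entry (i, j) is 1 iff vertex i lies in parcel j."""
    C = np.zeros((len(labels), q))
    C[np.arange(len(labels)), labels] = 1.0
    return C

labels = np.array([0, 0, 1, 1, 1, 2])              # hypothetical parcel label of each of n = 6 vertices
q, t1 = 3, 50
C = segmentation_matrix(labels, q)

rng = np.random.default_rng(2)
X = rng.standard_normal((q, t1))                   # one temporal shape per parcel
w_eeg = np.array([1.0, 0.5, 2.0, 0.0, -1.0, 0.3])  # spatial profile (one scale per vertex)

S = np.diag(w_eeg) @ C @ X                         # Eq. (8) with the residual rho_1 omitted
# Eq. (9): row i of S equals the waveform of its parcel scaled by w_i.
print(all(np.allclose(S[i], w_eeg[i] * X[labels[i]]) for i in range(len(labels))))
```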

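We assume that all the residuals in ρ1 are zero-mean, i.i.d. Gaussian distributed with precision ε1 and obtain the following hierarchical prior for the cortical currents

$$p(S \mid w_{\mathrm{EEG}}, X, \varepsilon_1) \propto \varepsilon_1^{n t_1/2} \exp\left(-\frac{\varepsilon_1}{2}\, \left\|S - \mathrm{Diag}(w_{\mathrm{EEG}})\, C X\right\|_F^2\right) \tag{10}$$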

Utilizing the same coherency assumption for the HRFs leads to

$$H^{T} = \mathrm{Diag}(w_{\mathrm{fMRI}})\, C Z + \rho_2 \tag{11}$$

where Z is a q × k matrix containing the unknown HRFs of the parcels, wfMRI is an n × 1 vector describing the spatial profile, and ρ2 is an n × k matrix representing the modeling residual. Note that we use Hᵀ instead of H in Eq. (11) since Hᵀ and S have the same spatio-temporal structure, i.e., their rows correspond to waveforms at different locations on the cortex. By using Hᵀ, Eq. (11) therefore has the same form as Eq. (8).

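As for EEG, we assume that ρ2 is zero-mean, i.i.d. Gaussian with precision ε2 and obtain the following hierarchical prior for the HRFs

$$p(H \mid w_{\mathrm{fMRI}}, Z, \varepsilon_2) \propto \varepsilon_2^{n k/2} \exp\left(-\frac{\varepsilon_2}{2}\, \left\|H^{T} - \mathrm{Diag}(w_{\mathrm{fMRI}})\, C Z\right\|_F^2\right) \tag{12}$$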

In order to establish a connection between the imaging modalities, a common spatial profile is assumed, i.e.,

$$w_{\mathrm{EEG}} = w_{\mathrm{fMRI}} = w \tag{13}$$

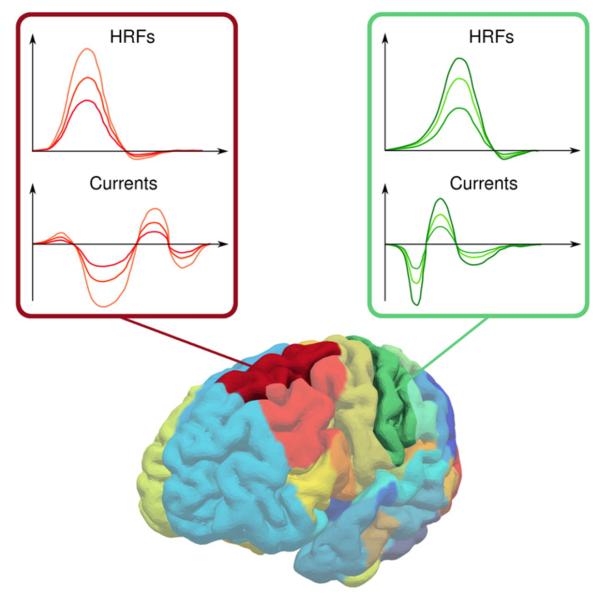

Note how the temporal characteristics of EEG and fMRI are modeled by X and Z, respectively, while the time invariant spatial profile w is responsible for the scale. Therefore, the hierarchical generative model represents a spatio-temporal decomposition and no assumptions are made about the relationship between the temporal shapes of the HRFs and cortical currents. The spatio-temporal decomposition is illustrated in Fig. 1 where the cortical currents and HRFs are shown for two parcels.

Fig. 1.

Illustration of the spatio-temporal decomposition model. The cortical currents and the HRFs within a parcel are assumed to be temporally coherent, i.e., the temporal shape is the same but with different scales, which are modeled by the time invariant spatial profile w. The spatial profile links the EEG and fMRI modalities since wi controls the scale of the current response as well as the scale of the HRF at the i-th vertex. This is illustrated here for two parcels and three waveforms per parcel; the waveforms belonging to the same vertex are drawn with the same color.
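To illustrate how the common spatial profile couples the modalities, the sketch below generates noise-free currents and HRFs for two parcels from the same w (Eqs. (8), (11) and (13), residuals omitted). All shapes, sizes and values are arbitrary placeholders.

```python
import numpy as np

rng = np.random.default_rng(3)
labels = np.array([0, 0, 0, 1, 1, 1])           # two parcels with three vertices each, as in Fig. 1
n, q, t1, k = len(labels), 2, 40, 12
C = np.zeros((n, q))
C[np.arange(n), labels] = 1.0                   # segmentation matrix of Eq. (7)

w = rng.uniform(0.2, 2.0, size=n)               # common spatial profile, Eq. (13)
X = rng.standard_normal((q, t1))                # parcel current shapes (EEG side)
Z = rng.standard_normal((q, k))                 # parcel HRF shapes (fMRI side)

S = np.diag(w) @ C @ X                          # Eq. (8), residual omitted
Ht = np.diag(w) @ C @ Z                         # Eq. (11), residual omitted; Ht stands for H^T

# Two vertices of the same parcel: their relative scale is identical in both modalities.
i, j = 0, 2
eeg_ratio = np.linalg.norm(S[i]) / np.linalg.norm(S[j])
fmri_ratio = np.linalg.norm(Ht[i]) / np.linalg.norm(Ht[j])
print(eeg_ratio, fmri_ratio, w[i] / w[j])
```

The temporal shapes X and Z are unrelated, yet the amplitude ratio between two vertices is w_i / w_j in both modalities. This shared ratio is the only coupling the decomposition imposes.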

Spatial prior model

It is widely known that event related processing in the brain occurs in a number of specialized brain regions. Based on this, we assume that the spatial profile w contains sharp boundaries between active and inactive regions. In this work, this a priori knowledge is incorporated by utilizing a total variation (TV) prior, given by

$$p(w \mid \gamma) = \frac{1}{Z(\gamma)} \exp\left(-\gamma\, \mathrm{TV}(w)\right) \tag{14}$$

where Z(γ) is the partition function and TV(·) is a discrete version of the total variation integral, which is given by

$$\mathrm{TV}(f) = \int_{\Omega} \|\nabla f(u)\|\, du \tag{15}$$

where Ω denotes the domain over which f(·) is defined and ∥∇f(·)∥ denotes the magnitude of the gradient of f(·). The hyperparameter γ is similar to the precision (inverse variance) parameter of a Gaussian prior, i.e., it controls the strength of the prior. Following a fully Bayesian approach, γ is treated as unknown and estimated from the data, as will be shown later. Total variation priors have been used with great success in a number of inverse problems, such as image denoising and restoration (Rudin et al., 1992; Babacan et al., 2008). A property of the TV prior is that it promotes piecewise smooth solutions, which matches our assumption that the spatial profile contains sharp boundaries between smooth regions. An intuitive explanation for this behavior is obtained by viewing TV regularization as ℓ1-norm regularization of the gradient magnitude. Ordinary ℓ1-norm regularization leads to a sparse solution, i.e., a solution with few non-zero entries. TV regularization, by analogy, leads to a solution with only few locations of non-zero gradient magnitude, which corresponds to a piecewise smooth solution.
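The edge-preserving behavior can be seen in one dimension. The sketch below compares a sharp step with a gradual ramp of the same height. It uses a regular 1-D grid rather than the cortical mesh, and a sum of squared differences serves as a stand-in for Laplacian-type quadratic penalties.

```python
import numpy as np

def tv_1d(f):
    """Discrete 1-D total variation: the l1 norm of the first differences."""
    return np.sum(np.abs(np.diff(f)))

def quadratic_1d(f):
    """Quadratic smoothness energy: the sum of squared first differences."""
    return np.sum(np.diff(f) ** 2)

n = 100
step = np.where(np.arange(n) < n // 2, 0.0, 1.0)   # one sharp jump of height 1
ramp = np.linspace(0.0, 1.0, n)                   # same total rise, spread over n - 1 steps

print(tv_1d(step), tv_1d(ramp))                    # both 1 (up to rounding)
print(quadratic_1d(step), quadratic_1d(ramp))      # 1 versus 1 / (n - 1)
```

Under TV both signals cost the same, so a sharp boundary is not penalized more than a gradual transition. The quadratic penalty, by contrast, strongly favors spreading the change out.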

There are two main difficulties in utilizing a TV prior on the spatial profile w. First, the spatial profile w is defined on the folded surface of the cortex, so the calculation of the gradient is not as straightforward as in image processing applications, where the image is defined on a rectangular 2-D lattice. Second, the partition function Z(γ) in Eq. (14) is intractable. Both difficulties are addressed below.

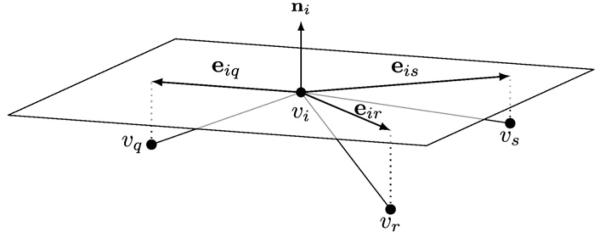

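We address the first problem by defining the gradient of the spatial profile on a differentiable 2-manifold representing the cortical surface embedded in ℝ3. In practice, the geometry of the manifold is approximated by a triangular mesh denoted by M = (V, E), where V = {v1, v2, …, vn} is the set of n vertices and E denotes the set of edges, each connecting a pair of vertices. Let us denote by $\nabla_M w_i$ the gradient of w at vertex vi. This gradient is obtained by discretizing the gradient on a 2-manifold, i.e., it lies in the tangent space of M at vi, which is a two-dimensional Euclidean space orthogonal to the surface normal vector at vi. As the surface normal vector at a vertex, we use the angle-weighted average of the surface normal vectors of the adjacent triangles (Thürmer and Wuthrich, 1998). In order to calculate the gradient, we project the neighboring vertices vj, j ∈ 𝒩i, onto the tangent space at vi, where $\mathcal{N}_i = (j \mid (v_i, v_j) \in E)$ denotes the ordered set of neighborhood vertex indices. By doing so, we obtain for every neighbor a vector eij in ℝ2 which points from vertex vi to the projected location of vj, as depicted in Fig. 2. The gradient can then be used to obtain a first order approximation of w at every neighbor, i.e.,

$$w_j = w_i + e_{ij}^{T}\, \nabla_M w_i + r_j, \qquad j \in \mathcal{N}_i \tag{16}$$

where rj denotes the residual error. By using all neighbors and rewriting Eq. (16) in matrix form, we obtain

$$\begin{pmatrix} w_{\mathcal{N}_i(1)} - w_i \\ \vdots \\ w_{\mathcal{N}_i(|\mathcal{N}_i|)} - w_i \end{pmatrix} = E_i^{T}\, \nabla_M w_i + r, \qquad E_i = \left[e_{i\mathcal{N}_i(1)}, \ldots, e_{i\mathcal{N}_i(|\mathcal{N}_i|)}\right] \tag{17}$$

where Ei is the 2 × |𝒩i| matrix whose columns are the vectors eij and r collects the residuals rj. This enables us to estimate the gradient by minimizing the residual ∥r∥2, resulting in

$$\nabla_M w_i = \left(E_i E_i^{T}\right)^{-1} E_i \begin{pmatrix} w_{\mathcal{N}_i(1)} - w_i \\ \vdots \\ w_{\mathcal{N}_i(|\mathcal{N}_i|)} - w_i \end{pmatrix} = G_i \begin{pmatrix} w_{\mathcal{N}_i(1)} - w_i \\ \vdots \\ w_{\mathcal{N}_i(|\mathcal{N}_i|)} - w_i \end{pmatrix}, \qquad G_i = \left(E_i E_i^{T}\right)^{-1} E_i \tag{18}$$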

Fig. 2.

Illustration of the tangent plane at vertex vi, which is assumed to have three neighbors 𝒩i = (q, r, s). The tangent plane is a Euclidean space in ℝ2 oriented orthogonal to the vertex normal ni. By projecting the neighboring vertices {vq, vr, vs} onto the tangent plane the vectors eiq, eir, and eis in ℝ2 are obtained. The vectors are utilized for calculating the gradient operator matrix at vertex vi.

Note that since the 2×|𝒩i| gradient matrix Gi for vertex vi solely depends on the geometry of the mesh, the gradient matrices for all vertices of the mesh have to be computed only once.
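The least-squares gradient of Eqs. (16) to (18) can be sketched as follows. The sketch assumes the vertex normal is already available (the angle-weighted normal computation is not shown) and builds an arbitrary orthonormal basis of the tangent plane. The function names and the toy geometry are hypothetical. Because the test profile varies linearly in the tangent plane, its gradient should be recovered exactly.

```python
import numpy as np

def tangent_basis(normal):
    """Two orthonormal vectors spanning the plane orthogonal to `normal`."""
    nrm = normal / np.linalg.norm(normal)
    helper = np.array([1.0, 0.0, 0.0]) if abs(nrm[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
    u = np.cross(nrm, helper)
    u /= np.linalg.norm(u)
    v = np.cross(nrm, u)                                 # unit length since nrm is orthogonal to u
    return u, v

def gradient_matrix(vi, neighbor_pos, normal):
    """2 x |N_i| matrix G_i = (E_i E_i^T)^{-1} E_i from the least-squares fit of Eqs. (16)-(18).

    Column j of E_i holds e_ij: the offset v_j - v_i projected onto the tangent plane at v_i,
    written in the 2-D coordinates of the tangent basis (the normal component is dropped).
    """
    u, v = tangent_basis(normal)
    d = neighbor_pos - vi                                # |N_i| x 3 edge vectors
    E = np.vstack([d @ u, d @ v])                        # 2 x |N_i|
    return np.linalg.solve(E @ E.T, E)

# Toy check: neighbors lying in a tilted tangent plane and a profile w that is linear
# in the tangent coordinates, so the least-squares gradient should be exact.
normal = np.array([0.0, 0.3, 1.0])
u, v = tangent_basis(normal)
basis = np.vstack([u, v])                                # 2 x 3
vi = np.array([0.2, -0.1, 0.5])
offsets2d = np.array([[1.0, 0.0], [0.0, 1.0], [-0.7, -0.6], [0.4, -0.9]])
neighbor_pos = vi + offsets2d @ basis                    # 4 neighbors in 3-D
true_grad = np.array([2.0, -3.0])                        # gradient in (u, v) coordinates
w_i = 1.5
w_nb = w_i + offsets2d @ true_grad                       # values of w at the neighbors

G = gradient_matrix(vi, neighbor_pos, normal)
print(np.allclose(G @ (w_nb - w_i), true_grad))         # True
```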

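We also note that

$$\begin{pmatrix} w_{\mathcal{N}_i(1)} - w_i \\ \vdots \\ w_{\mathcal{N}_i(|\mathcal{N}_i|)} - w_i \end{pmatrix} = \Delta_i w, \qquad \text{and hence} \qquad \nabla_M w_i = G_i \Delta_i w \tag{19}$$

where Δi is a |𝒩i| × n matrix whose j-th row consists of zeros except at columns i and 𝒩i(j), where it has the values −1 and 1, respectively.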

Finally, the discrete version of the total variation integral in Eq. (15) can be expressed as

$$\mathrm{TV}(w) = \sum_{i=1}^{n} \|\nabla_M w_i\| = \sum_{i=1}^{n} \|G_i \Delta_i w\| \tag{20}$$
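Combining Δi, Gi and Eq. (20), a discrete TV value can be computed as in the sketch below. To keep it self-contained, it uses a hypothetical flat grid with 4-neighborhoods in place of a triangulated cortical mesh. As a result, the tangent coordinates are simply the (x, y) positions and no projection is needed.

```python
import numpy as np

def delta_matrix(i, nbrs, n):
    """|N_i| x n matrix Delta_i of Eq. (19): row j is -1 at column i and +1 at column N_i(j)."""
    D = np.zeros((len(nbrs), n))
    rows = np.arange(len(nbrs))
    D[rows, i] = -1.0
    D[rows, nbrs] = 1.0
    return D

def discrete_tv(w, coords2d, neighbors):
    """TV(w) = sum_i ||G_i Delta_i w|| (Eq. (20)) for vertices with known 2-D tangent coordinates."""
    n = len(w)
    total = 0.0
    for i, nbrs in enumerate(neighbors):
        E = (coords2d[nbrs] - coords2d[i]).T        # 2 x |N_i| matrix with columns e_ij
        G = np.linalg.solve(E @ E.T, E)             # least-squares gradient operator G_i
        total += np.linalg.norm(G @ delta_matrix(i, nbrs, n) @ w)
    return total

def grid_mesh(side):
    """Hypothetical flat side x side grid with 4-neighborhoods; tangent coordinates are (x, y)."""
    coords = np.array([[x, y] for y in range(side) for x in range(side)], dtype=float)
    neighbors = []
    for y in range(side):
        for x in range(side):
            neighbors.append([(y + dy) * side + (x + dx)
                              for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1))
                              if 0 <= x + dx < side and 0 <= y + dy < side])
    return coords, neighbors

coords2d, neighbors = grid_mesh(4)
w_linear = 3.0 * coords2d[:, 0] + 4.0 * coords2d[:, 1]   # constant gradient (3, 4), magnitude 5
print(discrete_tv(w_linear, coords2d, neighbors))        # about 16 * 5 = 80
```

For the linear profile, every vertex has gradient (3, 4), so each of the 16 terms contributes 5. A constant profile gives TV(w) = 0.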

A second difficulty arising from the use of a TV prior is that the partition function Z(γ) in Eq. (14) has to be calculated as

$$Z(\gamma) = \int \exp\left(-\gamma\, \mathrm{TV}(w)\right) dw \tag{21}$$

which is intractable since the integral cannot be calculated analytically. Note that we cannot resort to numerical methods, such as Monte Carlo integration, to calculate the partition function, as this would require drawing samples from p(w|γ) and there is no known method for this task. To address this difficulty, we approximate the partition function as follows. We can express the gradient at the i-th vertex as $g = [g_1\ g_2]^T = G_i \Delta_i w$, and thus $\|\nabla_M w_i\| = \sqrt{g_1^2 + g_2^2}$. Using this, the partition function for a single vertex can be calculated in polar coordinates, with s denoting the gradient magnitude, as

$$Z_i(\gamma) = \int_{\mathbb{R}^2} \exp\left(-\gamma \sqrt{g_1^2 + g_2^2}\right) dg_1\, dg_2 = 2\pi \int_0^{\infty} s\, e^{-\gamma s}\, ds = \frac{2\pi}{\gamma^2} \tag{22}$$

By combining the partition functions of all n vertices of the mesh, we approximate p(w|γ) in Eq. (14) as

$$p(w \mid \gamma) \approx c\, \gamma^{\varphi n} \exp\left(-\gamma\, \mathrm{TV}(w)\right) \tag{23}$$

where c is a constant and φ is a parameter. The value φ = 2.0 corresponds to assuming that the gradient at every vertex is independent of the gradients at all other vertices. Because the gradient values are in fact dependent, we empirically found that φ = 1.0 improves the performance of the algorithm, and we therefore use this value throughout the rest of this paper.
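The single-vertex integral in Eq. (22) can be checked numerically in polar coordinates. The role of φ in Eq. (23) can also be seen by maximizing the γ-dependent part, φ n log γ − γ TV(w), which peaks at γ = φn/TV(w). The sketch below only illustrates the prior term. It is not the variational update for γ, and the values of n and TV(w) are arbitrary.

```python
import numpy as np

def vertex_partition_function(gamma, s_max=200.0, num=200001):
    """Evaluate Eq. (22) numerically in polar coordinates: 2*pi * int_0^inf s exp(-gamma s) ds."""
    s = np.linspace(0.0, s_max / gamma, num)       # exp(-s_max) makes the truncated tail negligible
    f = s * np.exp(-gamma * s)
    return 2 * np.pi * np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(s))   # trapezoidal rule

for gamma in (0.5, 1.0, 3.0):
    print(gamma, vertex_partition_function(gamma), 2 * np.pi / gamma ** 2)

def log_tv_prior(tv_value, gamma, n, phi=1.0):
    """gamma-dependent part of log Eq. (23): phi * n * log(gamma) - gamma * TV(w) (log c dropped)."""
    return phi * n * np.log(gamma) - gamma * tv_value

n, tv = 1000, 250.0                                 # hypothetical mesh size and TV(w) value
gammas = np.linspace(0.1, 20.0, 2000)
for phi in (1.0, 2.0):
    best = gammas[np.argmax(log_tv_prior(tv, gammas, n, phi))]
    print(phi, best, phi * n / tv)                  # numerical maximizer vs. phi * n / TV(w)
```

Halving φ halves the preferred γ for a given TV(w). This is one way to see why φ = 1.0 yields a weaker implied regularization than the independence value φ = 2.0.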

Temporal prior model

We also make the assumption that the HRFs and the cortical currents are smooth in the temporal dimension. This assumption can be expressed by a Gaussian prior which penalizes the second order temporal derivative; a prior of this form was also used in Marrelec et al. (2002) and Daunizeau et al. (2007). In contrast to previous work, we assume that the degree of temporal smoothness varies across the surface of the cortex. We model this by utilizing a separate Gaussian prior for every parcel, i.e., for the temporal shapes of the cortical currents we use

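$$p(X \mid \beta_1) \propto \prod_{i=1}^{q} (\beta_1)_i^{t_1/2} \exp\left(-\frac{(\beta_1)_i}{2}\, \left\|T_1 X_{i\cdot}^{T}\right\|^2\right) \tag{24}$$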

where T1 is a t1 × t1 matrix given by

| (25) |

and β1 is a q×1 vector with per-parcel precision hyperparameters, each controlling the smoothness and scale of the cortical current waveform of a parcel. The use of separate hyperparameters allows for spatially adaptive temporal smoothness of the cortical currents, i.e., the model can reduce the degree of temporal smoothness in active regions while enforcing a higher degree of smoothness in inactive regions.
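The effect of the temporal prior in Eq. (24) can be sketched as follows. The exact boundary rows of T1 in Eq. (25) are not reproduced here, so the sketch assumes a hypothetical lower-triangular second-difference matrix with taps (1, −2, 1). The waveforms are synthetic. Maximizing the log-prior over each (β1)i alone gives (β1)i = t1/∥T1 Xi·ᵀ∥², so a rough parcel receives a smaller precision (weaker smoothing) than a smooth one.

```python
import numpy as np

def second_difference_matrix(t):
    """Hypothetical t x t lower-triangular second-difference operator with taps (1, -2, 1)."""
    return np.eye(t) - 2.0 * np.eye(t, k=-1) + np.eye(t, k=-2)

def temporal_log_prior(X, beta, T):
    """Log of Eq. (24) up to a constant: sum_i [t/2 log beta_i - beta_i/2 ||T X_i.^T||^2]."""
    t = X.shape[1]
    energy = np.sum((X @ T.T) ** 2, axis=1)     # ||T x_i||^2 for every parcel waveform x_i
    return np.sum(0.5 * t * np.log(beta) - 0.5 * beta * energy)

t1 = 200
time = np.linspace(0.0, 1.0, t1)
smooth = np.sin(2 * np.pi * 3 * time)            # slowly varying synthetic waveform
rng = np.random.default_rng(4)
rough = smooth + 0.3 * rng.standard_normal(t1)   # same waveform plus white noise
X = np.vstack([smooth, rough])                   # two parcels, one waveform each

T1 = second_difference_matrix(t1)
energies = np.sum((X @ T1.T) ** 2, axis=1)
beta_hat = t1 / energies                         # per-parcel maximizer of the log-prior
print(energies, beta_hat)
```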

For the temporal shape of the hemodynamic response functions we use

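$$p(Z \mid \beta_2) \propto \prod_{i=1}^{q} (\beta_2)_i^{k/2} \exp\left(-\frac{(\beta_2)_i}{2}\, \left\|T_2 Z_{i\cdot}^{T}\right\|^2\right) \tag{26}$$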

where T2 is a k × k matrix that is defined analogously to T1 and β2 is a q × 1 vector with per-parcel precision hyperparameters. As with the cortical currents, the use of separate hyperparameters allows for spatially adaptive temporal smoothness of the HRF.

Hyperparameter prior model

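Following the Bayesian approach, we proceed by defining priors for all hyperparameters of the model. In order to obtain priors for the EEG and fMRI noise precisions, we extract pre-stimulus data segments M0 for EEG and Y0 for fMRI, which contain only noise, of sizes $m \times t_1^0$ and $t_2^0 \times n$, respectively. From the Gaussian noise assumption it follows that p(α1|M0) and p(α2|Y0) are gamma distributed (Daunizeau et al., 2007), which motivates the use of the following prior distribution for the EEG noise precision hyperparameter

$$p(\alpha_1) = \Gamma\left(\alpha_1 \,\middle|\, \frac{m\, t_1^0}{2}, \frac{\|M_0\|_F^2}{2}\right) \tag{27}$$

The gamma distribution is defined as

$$\Gamma(x \mid a, b) = \frac{b^{a}}{\Gamma(a)}\, x^{a-1} \exp(-b x) \tag{28}$$

where a > 0 and b > 0 are the shape and inverse scale parameters, respectively, and Γ(a) in the normalizing constant denotes the gamma function. Similarly, we use the following prior distribution for the fMRI noise precision hyperparameter

$$p(\alpha_2) = \Gamma\left(\alpha_2 \,\middle|\, \frac{t_2^0\, n}{2}, \frac{\|Y_0\|_F^2}{2}\right) \tag{29}$$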

Note that the prior distributions become more sharply peaked as the lengths of the pre-stimulus segments increase. Longer pre-stimulus segments cause the fusion algorithm to rely more on the initial noise estimates, i.e., the noise estimated by the algorithm becomes almost entirely decided by the initial estimates. On the other hand, as the length of the pre-stimulus segments goes towards zero, the prior distributions become flat and the noise precision is estimated solely by the fusion algorithm.
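The sharpening of the noise-precision priors with longer pre-stimulus segments can be sketched numerically. The sketch assumes the prior Gamma(α | N/2, ∥M0∥F²/2), with N the number of entries of M0. This is the posterior of a Gaussian noise precision under a Jeffreys prior and our reading of Eq. (27). The noise level, electrode count and segment lengths are hypothetical.

```python
import numpy as np

def noise_precision_prior(M0):
    """Shape and rate (inverse scale) of the assumed gamma prior Gamma(alpha | N/2, ||M0||_F^2 / 2)."""
    a = 0.5 * M0.size                   # N / 2, with N the number of noise samples in M0
    b = 0.5 * np.sum(M0 ** 2)           # ||M0||_F^2 / 2
    return a, b

rng = np.random.default_rng(5)
sigma, m = 0.5, 32                      # hypothetical noise std (precision 4) and electrode count
means, stds = [], []
for t0 in (10, 100, 1000):              # hypothetical pre-stimulus lengths
    M0 = sigma * rng.standard_normal((m, t0))
    a, b = noise_precision_prior(M0)
    means.append(a / b)                 # gamma mean a / b, close to 1 / sigma^2
    stds.append(np.sqrt(a) / b)         # gamma standard deviation sqrt(a) / b
    print(t0, round(means[-1], 2), round(stds[-1], 3))
```

The standard deviation shrinks roughly like 1/√N. This matches the observation that long segments let the initial noise estimate dominate, while very short segments give an almost flat prior.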

For the precision parameter vectors β1 and β2, which control the per-parcel temporal smoothness and scale of the cortical currents and hemodynamic response functions, respectively, we use a hyperparameter prior model which allows us to control the degree of spatial adaptivity. In order to do so, we use gamma priors as follows

| (30) |

| (31) |

where and are fixed shape parameters and the unknown inverse scale parameters are denoted by δ1 and δ2. The fixed shape parameters allow us to control the degree of spatial adaptivity. As will become clear after the derivation of the approximate posterior distribution in the next section, by using a value close to zero for , the posterior distributions of (β1)1, …, (β1)q can be drastically different, i.e., the model is fully spatially adaptive. On the other hand, when is very large, all posterior distributions will be almost identical and the prior model is not spatially adaptive, which is similar to the temporal prior model in Daunizeau et al. (2007). We empirically find that the proposed method performs best when the degree of spatial adaptivity for the EEG side is limited by using while a higher degree of spatial adaptivity is used for the fMRI side with . These values are used throughout the rest of this paper. We note here that the proposed method is not very sensitive to the exact values of the shape parameters, i.e., a value in the range 10, …, 200 works well for while any value close to 0 works well for .
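
How the fixed shape parameter controls spatial adaptivity can be sketched with a generic gamma–Gaussian update. The Python example below assumes that each parcel's precision has prior Gamma(a0, δ) and a conjugate posterior with shape a0 + d/2 and inverse scale δ + e_i/2, where e_i is the expected quadratic energy of the parcel waveform of length d. To isolate the effect of a0, δ is set to a0 / prior_mean so that the prior mean stays fixed; in the actual method δ is estimated, and the exact updates are those in Table 1. The energies are hypothetical.

```python
import numpy as np


def posterior_precision_means(energies, a0, prior_mean, dim):
    """Posterior means of per-parcel precisions under an assumed conjugate update.

    Prior: beta_i ~ Gamma(a0, delta) with delta = a0 / prior_mean, so the prior
    mean is fixed while a0 controls how concentrated the prior is.
    Update (assumed): shape = a0 + dim / 2, inverse scale = delta + energy / 2.
    """
    delta = a0 / prior_mean
    shape = a0 + dim / 2.0
    inv_scale = delta + np.asarray(energies, dtype=float) / 2.0
    return shape / inv_scale


# Hypothetical expected energies: two active parcels (large), three inactive (small).
energies = np.array([50.0, 40.0, 0.5, 0.4, 0.6])
for a0 in (1e-3, 10.0, 1e4):
    means = posterior_precision_means(energies, a0, prior_mean=1.0, dim=30)
    print(f"a0={a0:g}: max/min ratio of posterior precision means = {means.max() / means.min():.3f}")
```

With a0 close to zero, active parcels receive much lower precisions (less temporal smoothing) than inactive ones; with a very large a0, all parcels end up with nearly the same precision, i.e., no spatial adaptivity.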

We make no assumptions about the remaining hyperparameters and consequently use noninformative Jeffreys priors given by

$$p(\theta_i) \propto \frac{1}{\theta_i} \tag{32}$$

where θ ={δ1, δ2, ε1, ε2, γ}, to define

| (33) |

We note here that an important reason for selecting gamma distributions as priors for the hyperparameters is that the gamma distribution is the conjugate prior both for the precision of a Gaussian distribution and for the inverse scale parameter of a gamma distribution with known shape. This simplifies Bayesian inference, since the posterior distributions of the hyperparameters are then also gamma distributions. As will be shown in the next section, to perform inference we employ a quadratic approximation to the energy of the TV prior in the form of a Gaussian distribution; consequently, the conjugate prior for γ is also a gamma distribution.
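
The conjugacy argument can be checked numerically. The Python sketch below uses toy data, not data from this paper: for zero-mean Gaussian samples with unknown precision λ and a Gamma(a0, b0) prior, the posterior is Gamma(a0 + N/2, b0 + Σx²/2). The sketch compares this closed form with a brute-force posterior (prior times likelihood, normalized on a grid).

```python
import math

import numpy as np


def gamma_logpdf(x, a, b):
    """Log density of Gamma(x | a, b) with shape a and inverse scale b, as in Eq. (28)."""
    return a * math.log(b) - math.lgamma(a) + (a - 1.0) * np.log(x) - b * x


rng = np.random.default_rng(1)
x = rng.normal(0.0, 0.5, size=40)   # zero-mean Gaussian samples, true precision 4
a0, b0 = 2.0, 1.0                   # gamma prior on the precision lam

# Closed-form conjugate posterior.
a_post = a0 + x.size / 2.0
b_post = b0 + np.sum(x ** 2) / 2.0

# Brute-force posterior on a uniform grid: log prior + log likelihood, then normalize.
lam = np.linspace(1e-3, 20.0, 20001)
dlam = lam[1] - lam[0]
log_unnorm = (gamma_logpdf(lam, a0, b0)
              + 0.5 * x.size * np.log(lam) - 0.5 * lam * np.sum(x ** 2))
unnorm = np.exp(log_unnorm - log_unnorm.max())
grid_post = unnorm / (unnorm.sum() * dlam)

closed_form = np.exp(gamma_logpdf(lam, a_post, b_post))
print("max abs difference:", np.max(np.abs(grid_post - closed_form)))
```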

Global modeling

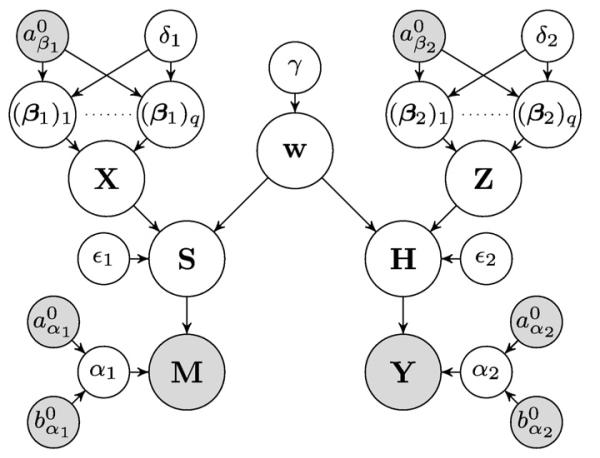

By combining all distributions introduced above, we obtain the joint probability density function as follows

| (34) |

where Θ = {S, H, w, X, Z, α1, α2, β1, β2} ∪ θ is the set of all unknowns. The dependencies between the variables in the joint pdf are illustrated as a directed acyclic graphical model in Fig. 3.

Fig. 3.

Directed acyclic graphical model describing the joint pdf (gray: known, white: unknown).

The joint pdf allows us to derive a fusion algorithm using Bayesian inference, which is described in the next section.

Bayesian inference

Inference is based on the posterior distribution

$$p(\Theta \mid M, Y) = \frac{p(\Theta, M, Y)}{p(M, Y)} \tag{35}$$

However, the posterior p(Θ|M, Y) is intractable since

$$p(M, Y) = \int p(\Theta, M, Y)\, d\Theta \tag{36}$$

cannot be calculated analytically. Therefore, we utilize an approximation to the posterior. In this work, we employ the Variational Bayesian (VB) method using the mean field approximation (Jordan et al., 1999; Attias, 2000), i.e., we approximate the true posterior by a distribution which factorizes over the nodes of the graphical model

$$q(\Theta) = \prod_{\Theta_i \in \Theta} q(\Theta_i) \tag{37}$$

As stated in Jaakkola and Jordan (1998), mean field theory (Parisi, 1998) provides an intuitive explanation of the mean field approximation. That is, in a dense graph each node is influenced by many other nodes such that the influence from each other node is weak and the total influence is approximately additive. Hence, each node can be characterized by its mean value, which is unknown and related to the mean values of all other nodes. The task then becomes finding the relation between the mean values and designing an algorithm which can find a consistent assignment of mean values. This is exactly what we will do in the following. First, we will find a distribution for each node in the graphical model shown in Fig. 3. The distributions describe the relation to all other nodes in the model and allow us to obtain an inference algorithm in which we iteratively update the distribution of each node leading to a consistent assignment of distributions.

The posterior approximation q(Θ) is found by performing a variational minimization of the Kullback–Leibler (KL) divergence, which is given by

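$$\mathcal{K}(q(\Theta)) = C_{KL}\big(q(\Theta)\,\|\,p(\Theta \mid M, Y)\big) = \int q(\Theta) \log \frac{q(\Theta)}{p(\Theta \mid M, Y)}\, d\Theta \tag{38}$$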

and is non-negative and equal to zero if and only if q(Θ) = p(Θ|M, Y). In variational Bayesian analysis, the optimal q(Θ) is found by

$$\hat{q}(\Theta) = \arg\min_{q(\Theta)} C_{KL}\big(q(\Theta)\,\|\,p(\Theta \mid M, Y)\big) \tag{39}$$

Using a standard result from variational Bayesian analysis (Bishop, 2006), for each variable the distribution which minimizes Eq. (38) is given by

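$$q(\Theta_i) \propto \exp\Big( \mathbb{E}_{\Theta \setminus \Theta_i}\big[ \log p(\Theta, M, Y) \big] \Big) \tag{40}$$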

where 𝔼Θ\Θi[·] denotes the expectation with respect to all variables except the variable of interest.
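
The iterative use of Eq. (40) can be illustrated on the textbook example of a univariate Gaussian with unknown mean μ and precision τ (Bishop, 2006, Sec. 10.1.3). This is a toy model, not the fusion model of this paper. With the factorization q(μ, τ) = q(μ) q(τ), each factor is updated in turn from the expectations under the other, which is the same coordinate-wise scheme used in this work. The prior values below are illustrative.

```python
import numpy as np


def vb_gaussian(x, mu0=0.0, lam0=1e-3, a0=1e-3, b0=1e-3, n_iter=50):
    """Mean-field VB for x_n ~ N(mu, 1/tau) with q(mu, tau) = q(mu) q(tau).

    Prior: mu | tau ~ N(mu0, 1/(lam0 * tau)), tau ~ Gamma(a0, b0).
    Each factor is set proportional to exp(E_other[log p(x, mu, tau)]).
    """
    x = np.asarray(x, dtype=float)
    n, xbar = x.size, x.mean()
    e_tau = 1.0                                   # initial guess for E[tau]
    a_n = a0 + (n + 1) / 2.0                      # shape of q(tau) is fixed
    mu_n = (lam0 * mu0 + n * xbar) / (lam0 + n)   # mean of q(mu) is fixed
    for _ in range(n_iter):
        # q(mu) = N(mu_n, 1/lam_n) given the current E[tau].
        lam_n = (lam0 + n) * e_tau
        # q(tau) = Gamma(a_n, b_n), using E[(x_n - mu)^2] = (x_n - mu_n)^2 + 1/lam_n.
        e_sq = np.sum((x - mu_n) ** 2) + n / lam_n
        e_prior = lam0 * ((mu_n - mu0) ** 2 + 1.0 / lam_n)
        b_n = b0 + 0.5 * (e_sq + e_prior)
        e_tau = a_n / b_n
    return mu_n, lam_n, a_n, b_n


rng = np.random.default_rng(2)
x = rng.normal(3.0, 0.5, size=500)
mu_n, lam_n, a_n, b_n = vb_gaussian(x)
print(f"E[mu] = {mu_n:.3f}, E[tau] = {a_n / b_n:.3f} (true precision 4.0)")
```

As in the fusion algorithm, the updates are interdependent, and iterating them yields a consistent assignment of the factor distributions.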

Unfortunately, the form of the TV prior prevents us from calculating the expectation in Eq. (40) and thus from finding an analytical form of q(Θ). Therefore, we resort to a majorization method which approximates 𝒦(q(Θ)) by upper-bounding functionals that render the calculation of the expectation tractable (Babacan et al., 2008). First, consider the geometric–arithmetic mean inequality (Hardy et al., 1988), which states that for a ≥ 0 and b > 0

$$\sqrt{a} \le \frac{a + b}{2\sqrt{b}} \tag{41}$$

For w, γ, and an n × 1 vector u ∈ (ℝ+)ⁿ, we define the following functional:

| (42) |

Using inequality Eq. (41) in Eq. (23) with and b = ui, and comparing with Eq. (42), we obtain

| (43) |

The auxiliary variable u is related to the spatial smoothness in w and is updated by the inference algorithm, as will be shown later. Using Eq. (43) in Eq. (34), we obtain a lower bound on the joint probability density function, i.e.,

| (44) |

which allows us to derive an inference procedure, as will be shown below. Note that the proposed method therefore does not employ the TV prior directly, since doing so would not lead to tractable inference. Instead, it uses the lower bound F(w, u, γ) to the TV prior, which retains many of the prior's desirable characteristics, e.g., the ability to model sharp boundaries, while allowing for tractable inference.

To derive the inference procedure, let us now define

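$$\tilde{\mathcal{K}}(q(\Theta), u) = \int q(\Theta) \log \frac{q(\Theta)}{F(\Theta, u, M, Y)}\, d\Theta \tag{45}$$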

which is the KL divergence between q(Θ) and F(Θ, u, M, Y). By using Eqs. (38) and (44), we obtain

| (46) |

Therefore, we can obtain a sequence of distributions {q(Θ)} that monotonically decreases 𝒦̃(q(Θ), u) for a fixed u. From Eq. (46) it can be seen that this leads to a monotonically decreasing upper bound on CKL(q(Θ)∥p(Θ|M, Y)) and therefore to an approximation of the true posterior distribution. Moreover, we can minimize 𝒦̃(q(Θ), u) with respect to u for each distribution q(Θ), which tightens the upper bound on the KL divergence and thus leads to a more accurate distribution approximation. The two interleaved minimization steps naturally lead to an iterative distribution estimation algorithm. During each iteration, the algorithm first minimizes the functional 𝒦̃(q(Θ), u) with respect to q(Θ); the distribution approximation that minimizes this functional has the same form as in standard VB analysis (see Eq. (40)), and the distribution approximation of the node Θi ∈ Θ is given by

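$$q(\Theta_i) \propto \exp\Big( \mathbb{E}_{\Theta \setminus \Theta_i}\big[ \log F(\Theta, u, M, Y) \big] \Big) \tag{47}$$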

Using Eq. (47), we obtain a distribution for every node of the graphical model. The distributions of the nodes S, H, X, Z, and w are found to be Gaussian, while the hyperparameter distributions are found to be gamma distributions (since conjugate priors were used). The form of the distributions obtained by applying Eq. (47) is given in Table 1, and the corresponding derivations are shown in Appendix D. To update the distributions, and therefore to minimize 𝒦̃(q(Θ), u) in the first step of the algorithm, the algorithm updates the parameters of the distributions in Table 1 using the most recently updated parameters, i.e., either from the previous or from the current iteration. The distributions are updated in the following order: q(S), q(α1), q(X), q(ε1), q((β1)1), …, q((β1)q), q(δ1), q(H), q(α2), q(Z), q(ε2), q((β2)1), …, q((β2)q), q(δ2), and q(w).

Table 1.

Distributions for the nodes of the graphical model obtained using Eq. (47). Derivations are shown in Appendix D, where the matrices Q, P1, P2, and W(u) and the cov(·) operator are defined. The matrix R(k, q) is a kq × kq permutation matrix with the property R(k, q) vec(Zᵀ) = vec(Z) (the matrix R(t1, q) is defined analogously).

| Functional form | Parameters |
|---|---|
| q(S) = 𝒩(vec(S) ∣ vec(〈S〉), I_t1 ⊗ ΣS) | 〈S〉 = ΣS(〈α1〉LᵀM + 〈∊1〉Diag(〈w〉)C〈X〉), ΣS = (〈α1〉LᵀL + 〈∊1〉I_n)⁻¹ |
| q(H) = 𝒩(vec(H) ∣ vec(〈H〉), I_n ⊗ ΣH) | 〈H〉 = ΣH(〈α2〉BᵀY + 〈∊2〉〈Z〉ᵀCᵀDiag(〈w〉)), ΣH = (〈α2〉BᵀB + 〈∊2〉I_k)⁻¹ |
| q(X) = 𝒩(vec(X) ∣ vec(〈X〉), ΣX) | vec(〈X〉) = 〈∊1〉ΣX(I_t1 ⊗ CᵀDiag(〈w〉))vec(〈S〉) |
| q(Z) = 𝒩(vec(Z) ∣ vec(〈Z〉), ΣZ) | vec(〈Z〉) = 〈∊2〉ΣZ(I_k ⊗ CᵀDiag(〈w〉))vec(〈H〉ᵀ) |
| q(w) = 𝒩(w ∣ 〈w〉, Σw) | 〈w〉 = Σw diag(〈∊1〉〈S〉〈X〉ᵀCᵀ + 〈∊2〉〈H〉ᵀ〈Z〉ᵀCᵀ), Σw = (〈∊1〉P1 + 〈∊2〉P2 + 〈γ〉W(u))⁻¹ |
| q(α1) = Γ(α1 ∣ aα1, bα1) | |
| q(α2) = Γ(α2 ∣ aα2, bα2) | |
| q(∊1) = Γ(∊1 ∣ a∊1, b∊1) | |
| q(∊2) = Γ(∊2 ∣ a∊2, b∊2) | |
| q((β1)i) = Γ((β1)i ∣ (aβ1)i, (bβ1)i) | |
| q((β2)i) = Γ((β2)i ∣ (aβ2)i, (bβ2)i) | |
| q(δ1) = Γ(δ1 ∣ aδ1, bδ1) | aδ1 = aβ1⁰q |
| q(δ2) = Γ(δ2 ∣ aδ2, bδ2) | aδ2 = aβ2⁰q |
| q(γ) = Γ(γ ∣ aγ, bγ) | aγ = φn |
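
The permutation matrix R(k, q) mentioned in the caption of Table 1 is the commutation matrix that maps vec(Zᵀ) to vec(Z). The Python sketch below builds it explicitly for a q × k matrix Z (the shape implied by the product 〈Z〉ᵀCᵀ in Table 1) with column-stacking vec(·); the helper names `vec` and `commutation_matrix` are illustrative. In practice, the permutation would be applied by index reordering rather than by forming the kq × kq matrix.

```python
import numpy as np


def vec(A):
    """Column-stacking vectorization vec(A)."""
    return A.reshape(-1, order="F")


def commutation_matrix(k, q):
    """kq x kq permutation matrix R with R @ vec(Z.T) == vec(Z) for a q x k matrix Z."""
    R = np.zeros((k * q, k * q))
    for i in range(q):        # row index of Z
        for j in range(k):    # column index of Z
            # Z[i, j] is entry i + j*q of vec(Z) and entry j + i*k of vec(Z.T).
            R[i + j * q, j + i * k] = 1.0
    return R


Z = np.arange(6.0).reshape(3, 2)   # q = 3 parcels, k = 2 HRF samples (toy sizes)
R = commutation_matrix(k=2, q=3)
print(np.allclose(R @ vec(Z.T), vec(Z)))  # True
```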

After updating q(Θ) in the first step of an iteration, the algorithm minimizes the functional 𝒦̃(q(Θ), u) with respect to u in the second step, which is equivalent to

| (48) |

Since Eq. (48) is a sum of n terms, the i-th of which depends only on ui and is convex in ui, the minimization decouples across the elements of u. Each minimizer is therefore found by setting the derivative with respect to ui to zero, which results in the following update

| (49) |

for i = 1, …, n. It is clear from Eq. (49) that the auxiliary vector u is related to the gradient of the estimated spatial profile w. Moreover, as can be seen from q(w) (shown in Table 1), the vector u introduces spatially adaptive smoothing through the matrix W(u) into the estimation process (see Appendix D). This matrix controls the amount of smoothing at each vertex depending on the local variation of the spatial profile.
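
The role of u and W(u) can be illustrated with a deterministic, one-dimensional analogue. The Python sketch below is not the variational algorithm of this paper. It applies the same majorization, √a ≤ (a + b)/(2√b), to 1D total-variation denoising, minimizing ½‖y − w‖² + λ Σ|(Dw)i| by alternating a quadratic solve with the update ui = (Dw)i². Here D is a first-difference matrix, and λ, the small ε guarding against division by zero, and the test signal are illustrative choices. Differences with large ui receive small weights 1/√ui, so edges are smoothed less than flat regions.

```python
import numpy as np


def tv_denoise_mm(y, lam=1.0, n_iter=100, eps=1e-8):
    """1D TV denoising by majorization-minimization (illustrative analogue).

    Minimizes 0.5*||y - w||^2 + lam * sum_i |(D w)_i| using
    |d| = sqrt(d^2) <= (d^2 + u) / (2 sqrt(u)), which is tight at u = d^2.
    """
    n = y.size
    D = np.diff(np.eye(n), axis=0)               # (n-1) x n first-difference matrix
    w = y.copy()
    for _ in range(n_iter):
        u = (D @ w) ** 2 + eps                   # tighten the bound at the current estimate
        W = D.T @ np.diag(1.0 / np.sqrt(u)) @ D  # adaptive smoothing matrix, analogue of W(u)
        w = np.linalg.solve(np.eye(n) + lam * W, y)  # minimize the quadratic majorizer
    return w


rng = np.random.default_rng(3)
truth = np.concatenate([np.zeros(50), np.ones(50)])   # piecewise-constant profile
y = truth + 0.2 * rng.standard_normal(truth.size)
w_hat = tv_denoise_mm(y, lam=1.0)
print("RMSE noisy:", np.sqrt(np.mean((y - truth) ** 2)))
print("RMSE TV   :", np.sqrt(np.mean((w_hat - truth) ** 2)))
```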

Computational complexity

To conclude this section, we discuss the per-iteration computational complexity of the proposed method. This discussion does not take into account the cost of obtaining the parcellation of the cortex or of computing the gradient projection matrices, since these operations only have to be performed once for a given cortical mesh. Excluding them is also justified by the fact that they typically take less time than a single iteration of the proposed method. The per-iteration computational complexity of the proposed method is governed by the matrix inversions needed to compute the covariance matrices in Table 1. In many applications, explicit matrix inversion can be avoided by using efficient linear system solvers, such as the conjugate gradient method. Unfortunately, this is not possible in fully Bayesian methods such as the one proposed in this work, since the covariance matrices themselves are required to compute the hyperparameters. Assuming that the inversion of an N × N matrix has complexity O(N³) and taking into account the sizes of the covariance matrices in Table 1, the per-iteration complexity of the proposed method is found to be . From this, one can see how the number of parcels q, which lies in the range [1, n], affects the computational complexity. Ideally, one would like to use a large number of parcels, such that parcels are small and the probability of having multiple sources in the same parcel is low. However, doing so can lead to prohibitively high computational demands, and one has to choose q ≪ n to stay within the available computational resources.
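
To make the dominant cost concrete, the Python sketch below computes the q(S) parameters from Table 1 for toy sizes. It forms ΣS explicitly because the covariance itself is needed for the hyperparameter updates; the n × n inversion is the O(n³) step. The function name, the argument layout, and the random inputs are illustrative assumptions, and C is assumed to be the n × q parcel indicator matrix.

```python
import numpy as np


def update_q_S(L, M, X, C, w_mean, alpha1, eps1):
    """Mean and covariance of q(S) following the Table 1 expressions.

    L: m x n lead field, M: m x t1 EEG data, X: q x t1 expected parcel waveforms,
    C: n x q parcel indicator matrix (assumed), w_mean: length-n expected profile.
    alpha1 and eps1 stand for the expectations <alpha1> and <eps1>.
    """
    n = L.shape[1]
    # Explicit n x n inverse: the O(n^3) step; the covariance is required later.
    Sigma_S = np.linalg.inv(alpha1 * L.T @ L + eps1 * np.eye(n))
    S_mean = Sigma_S @ (alpha1 * L.T @ M + eps1 * np.diag(w_mean) @ C @ X)
    return S_mean, Sigma_S


rng = np.random.default_rng(6)
m, n, t1, q = 8, 30, 5, 3          # toy sizes (illustrative)
L = rng.standard_normal((m, n))
M = rng.standard_normal((m, t1))
X = rng.standard_normal((q, t1))
C = np.zeros((n, q))
C[np.arange(n), np.arange(n) % q] = 1.0   # each vertex belongs to exactly one parcel
w_mean = rng.random(n)
S_mean, Sigma_S = update_q_S(L, M, X, C, w_mean, alpha1=2.0, eps1=0.5)
print(S_mean.shape, Sigma_S.shape)
```

Under the O(N³) assumption, doubling the mesh size n multiplies the cost of this inversion by roughly a factor of 8.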

Simulations

In this section we evaluate the proposed method using simulations with synthetic EEG and fMRI data. The use of synthetic data enables us to compare the proposed method and existing methods by means of objective quality metrics.

At the end of this section, we evaluate the results and compare the proposed method to several existing methods. Two EEG/fMRI fusion methods are used for the comparison. The first is the symmetrical BASTERF method (Daunizeau et al., 2007), which is similar to the proposed method but uses a different prior model. The second is the fMRI-weighted minimum norm method (fWMN) (Liu et al., 1998), which can be considered one of the simplest methods for asymmetrical EEG/fMRI fusion. As an additional reference, we include several EEG-only source localization methods in the comparison. The MSP method (Friston et al., 2008) is a recently proposed method that uses multiple sparse priors (256 per hemisphere are used here) with empirical Bayesian modeling and can be considered a state-of-the-art EEG source localization method. We also include two classic EEG source localization methods, namely the LORETA method (Pascual-Marqui et al., 1994) and the minimum norm method (MNE) with Tikhonov noise regularization (Dale and Sereno, 1993).

EEG forward model

The lead field matrix L used for the simulations was calculated as follows. First, the template cortical mesh included in SPM8 (http://www.fil.ion.ucl.ac.uk/spm), which has a total of 8196 vertices, was downsampled to n = 1000 vertices. While the coarser mesh provides a less accurate geometrical description of the cortex, it significantly reduces the computational requirements. The lead field matrix was then computed using the BEM method from FieldTrip (http://fieldtrip.fcdonders.nl) with standard sensor locations for a 64-channel montage and the canonical scalp, outer skull, and inner skull meshes included in SPM8.

Simulated EEG and fMRI data

To simulate a range of source configurations and various degrees of agreement between EEG and fMRI, we use a total of 5 different simulation scenarios in our evaluation. The first scenario, denoted CPX, uses a complex source configuration with more widespread sources; such sources are known, for example, to occur in children (Friedrich and Friederici, 2004; Sanders et al., 2006). It contains a total of 4 sources, 2 of which are more widespread, and all sources are both electrically and hemodynamically active. Due to the complexity of the source configuration, EEG/fMRI fusion methods can be expected to have a significant advantage over EEG-only methods in this scenario. The remaining scenarios use simpler source configurations with only 2 sources and depict situations in which some sources are detectable by only one modality while others are detectable by both (a similar experiment was presented in Daunizeau et al. (2007)). In practice, such situations can occur, for example, when a source is active for a short time and can be detected by EEG but does not generate a BOLD response strong enough to be detected by fMRI. Conversely, a source may be far from the surface of the scalp and thus generate a weak EEG signal while having a strong BOLD response. The scenarios are denoted MM for the scenario with 2 multimodal, i.e., electrically and hemodynamically active, sources; ME for the scenario with one multimodal source and another source that only exhibits electrical activity; MH for one multimodal source and another source that is only hemodynamically active; and EH for one source that is only electrically active and another that is only hemodynamically active. The EH scenario is included for completeness; it fundamentally violates the assumption that motivates the fusion of EEG and fMRI, namely that a subset of the neuronal activity is detectable by both modalities.

An overview of the simulation scenarios is given in Table 2. For each scenario, the sources, each with a spatial extent of either 8 or 16 vertices, are placed at random, non-overlapping locations on the cortical surface. Note that we assume no knowledge of the parcellation used by our algorithm when placing the sources on the cortex. It is therefore possible that sources cross parcel boundaries or that multiple sources lie within the same parcel.

Table 2.

Simulation scenarios used in the empirical evaluation. A multimodal source is denoted as “M” while sources which are only electrically or hemodynamically active are denoted as “E” and “H”, respectively. The numbers indicate the spatial extent in vertices of the source, e.g., M(16) denotes a multimodal source with a spatial extent of 16 vertices. The source waveforms of the various sources are depicted in Fig. 4.

| Scenario | Source 1 | Source 2 | Source 3 | Source 4 |
|---|---|---|---|---|
| CPX | M(8) | M(8) | M(16) | M(16) |
| MM | M(8) | M(8) | | |
| ME | M(8) | E(8) | | |
| MH | M(8) | H(8) | | |
| EH | E(8) | H(8) | | |

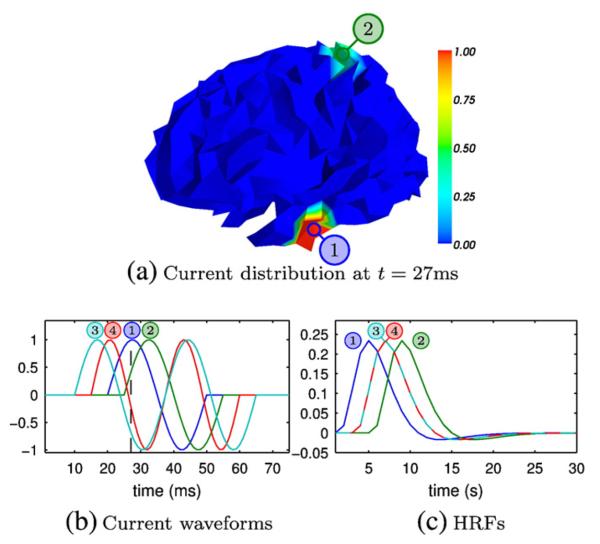

To simulate source waveforms, we use sinusoids with different starting points and frequencies as the current waveforms of electrically active sources, and a shifted canonical HRF from SPM8 with a positive peak at 5 s and a smaller negative peak at 12 s for hemodynamically active sources. The source waveforms, together with an example of the source distribution on the cortex for the MM scenario, are illustrated in Fig. 4. The remaining simulation parameters are as follows. For EEG, we use m = 64 sensors, t1 = 75 (assuming a sampling rate of 1 kHz), and signal-to-noise ratios (SNRs) of 15 dB, 20 dB, and 25 dB (refer to Appendix B for a definition of the SNR). For fMRI, we use t2 = 1000, k = 30 with 30 random occurrences of the event of interest, and an SNR of 5 dB. We use q = 32 anatomical parcels, which are obtained using the procedure described in Appendix A. Note that we use the same parcellation for the proposed method and for the BASTERF method. A summary of all parameters used for the simulations is shown in Table 3.
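
The fMRI side of such a simulation can be sketched with a finite impulse response (FIR) model in which each column of a t2 × k design matrix B is the event train delayed by one more sample, so that Bh equals the convolution of the event train with an HRF h of length k. This construction of B is an assumption about how the event onsets enter the model (Table 1 only shows that B has k columns). The random onsets and the toy HRF below are illustrative and do not reproduce the canonical SPM8 HRF.

```python
import numpy as np


def fir_design_matrix(onsets, t2, k):
    """t2 x k FIR design matrix: column j is the event train delayed by j samples."""
    stim = np.zeros(t2)
    stim[np.asarray(onsets)] = 1.0
    B = np.zeros((t2, k))
    for j in range(k):
        B[j:, j] = stim[: t2 - j]
    return B, stim


rng = np.random.default_rng(4)
t2, k = 1000, 30                                   # as in Table 3 (1 Hz sampling)
onsets = np.sort(rng.choice(t2 - k, size=30, replace=False))   # 30 random events
B, stim = fir_design_matrix(onsets, t2, k)

h = np.sin(np.linspace(0.0, np.pi, k))             # toy single-peaked HRF (illustrative)
y = B @ h                                          # noise-free BOLD time course
print(np.allclose(y, np.convolve(stim, h)[:t2]))
```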

Fig. 4.

Source configurations used for simulations. The upper panel illustrates an example current distribution of a simulation with the MM scenario (two multimodal sources); the lower panels show the current waveforms and HRFs used for the simulations. The numbers refer to the source numbers in Table 2.

Table 3.

Summary of simulation parameters.

| Common | | | |
|---|---|---|---|
| Size of cortical mesh | n = 1000 | | |
| Number of parcels | q = 32 | | |
| EEG | | fMRI | |
| Number of sensors | m = 64 | Length of HRF | k = 30 |
| Time points | t1 = 75 | Time points | t2 = 1000 |
| Sampling rate | 1 kHz | Sampling rate | 1 Hz |
| SNR | 15 dB, 20 dB, 25 dB | SNR | 5 dB |

We perform 25 simulations per scenario and SNR configuration for each algorithm. For all algorithms the same random source configurations and noise manifestations are used in order to provide a fair comparison.

Initialization

To start the iterative inference procedure, we initialize the parameters of the proposed method as follows. For the EEG noise precision, we assume that the noise-only data window M0 is one third of the length of M, i.e., 25 columns, and use , , where is the EEG noise variance. Similarly, for the fMRI noise precision hyperparameters we use , . The expectations of the remaining hyperparameters and the vector u are initialized with a small value of 10⁻³. The variables 〈Z〉, 〈X〉, and 〈w〉 and their covariance matrices are initialized to zero, while minimum norm estimates are used for 〈S〉 and 〈H〉 together with all-zero covariance matrices. After initialization, the variables are updated in the order given in the previous section. While we do not provide a detailed analysis of the convergence properties of the proposed method, we empirically find that it is insensitive to parameter initialization, which agrees with earlier work using the same inference scheme (Babacan et al., 2008). For example, the proposed method typically converges to the same solution whether it is initialized as described above or with the solution found by the BASTERF method.
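
The regularization used for the minimum norm initialization of 〈S〉 is not specified here. The Python sketch below therefore assumes the standard Tikhonov form 〈S〉 = Lᵀ(LLᵀ + λI)⁻¹M with a hypothetical parameter `lam`. Solving the m × m system is cheap because m ≪ n. The random lead field and data are stand-ins.

```python
import numpy as np


def minimum_norm(L, M, lam):
    """Tikhonov-regularized minimum norm estimate S = L^T (L L^T + lam I)^{-1} M."""
    m = L.shape[0]
    return L.T @ np.linalg.solve(L @ L.T + lam * np.eye(m), M)


rng = np.random.default_rng(7)
L = rng.standard_normal((64, 1000))   # m x n lead field (random stand-in)
M = rng.standard_normal((64, 75))     # m x t1 EEG data (random stand-in)
S0 = minimum_norm(L, M, lam=1.0)      # lam is a hypothetical regularization value
print(S0.shape)  # (1000, 75)
```

The m × m form is equivalent to (LᵀL + λIₙ)⁻¹LᵀM, which would require an n × n solve.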

Results

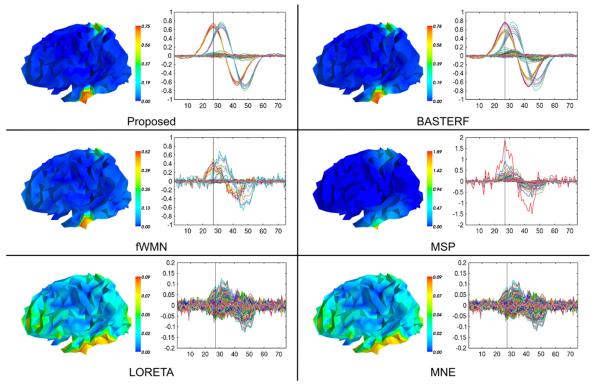

Fig. 5 shows the estimated cortical current waveforms and their spatial distribution on the cortex for one simulation of scenario MM, in which both sources are electrically and hemodynamically active. The currents estimated by the proposed method are closer to the ground truth than those estimated by the existing methods: the spatial distribution of the currents contains sharper transitions between active and inactive regions, and the temporal waveforms have an appropriate degree of temporal smoothness. While the currents estimated by the BASTERF method are both spatially and temporally smooth, the method fails to recover the sharp transitions at the boundaries of the sources and therefore provides a lower localization performance than the proposed method. This behavior can be explained by the fact that the BASTERF method uses a LORETA-type spatial prior, which is not spatially adaptive. Due to the lack of spatial smoothness priors, the current distribution obtained by the fWMN method is more widespread than the distributions obtained by the proposed and the BASTERF methods. Considering the simplicity of the fWMN method, its results are surprisingly good. It should be noted, however, that in our evaluation the fWMN method has an unfair advantage over the symmetrical fusion methods (proposed and BASTERF), since the true locations of the hemodynamically active sources are used to obtain the weights for the fWMN method. Among the EEG-only methods, the MSP method clearly outperforms the other methods (LORETA and MNE) but, due to the lack of fMRI information, does not recover the spatio-temporal source distribution as well as the evaluated EEG/fMRI fusion methods. The advantage of spatially adaptive priors can also be seen when comparing the HRFs estimated by the proposed method and the BASTERF method, as shown in Fig. 6. As with the cortical currents, spatial adaptivity enables the proposed method to obtain estimates that are closer to the ground truth, with sharper transitions between active and inactive regions and a more accurate degree of temporal smoothness.

Fig. 5.

Butterfly plots of the estimated currents (Ŝ) and their projection onto the cortical mesh at t=27 ms for one simulation of the scenario MM (SNR EEG=20 dB). The ground truth for this simulation is depicted in Fig. 4. Note that the color scales are adjusted for each method to show the full range of the source distribution and that the y-axis of the butterfly plots for the MSP, LORETA, and MNE methods has been adjusted to allow for a clear depiction of the estimated current waveforms.

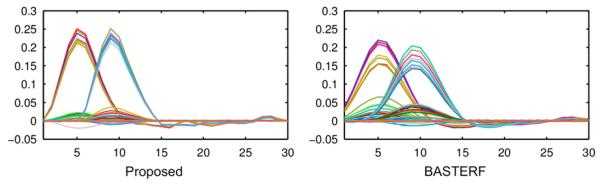

Fig. 6.

Estimated HRFs (Ĥ) by the proposed method and the BASTERF method for one simulation of the scenario MM (SNR EEG=20 dB). The ground truth for this simulation is depicted in Fig. 4 (hemodynamic sources 1 and 2 are active).

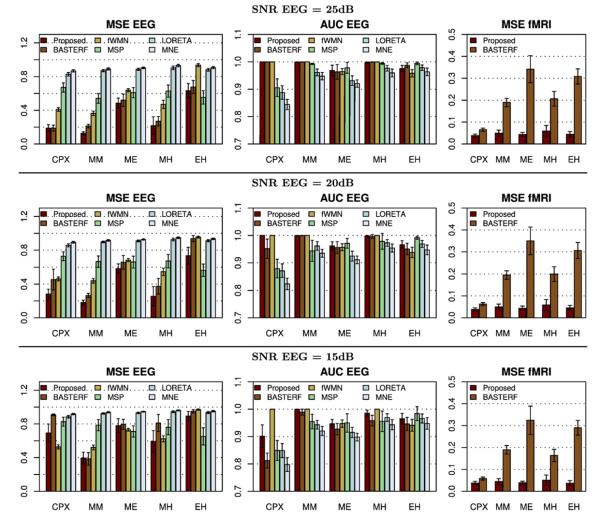

Objective quality metric scores from all simulations are shown in Fig. 7. To evaluate the reconstruction of the current distribution, we use the mean squared error (MSE), denoted MSE EEG, as well as the area under the ROC curve (AUC EEG). For fMRI, we evaluate the reconstruction of the HRFs using the MSE, which we denote MSE fMRI. Refer to Appendix C for the definitions of the quality metrics used.
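
Appendix C is not reproduced here, so the following is only a hedged illustration of one common way to compute an AUC for source localization. Each vertex is scored by the energy of its estimated current waveform, and the scores are compared with a binary ground-truth mask through the rank-sum (Mann–Whitney) formulation, with ties counted as one half. The energy score, the tie handling, and the helper names are assumptions and may differ from the definition used in this paper.

```python
import numpy as np


def auc_rank(scores, labels):
    """Area under the ROC curve via the Mann-Whitney U statistic (ties count one half)."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pos, neg = scores[labels], scores[~labels]
    # Fraction of (active, inactive) vertex pairs in which the active vertex scores higher.
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (pos.size * neg.size)


def current_energy(S_hat):
    """Per-vertex score: energy of the estimated current waveform (rows are vertices)."""
    return np.sum(S_hat ** 2, axis=1)


rng = np.random.default_rng(8)
n, t1 = 100, 75
active = np.zeros(n, dtype=bool)
active[:8] = True                          # hypothetical 8-vertex source
S_hat = 0.1 * rng.standard_normal((n, t1))
S_hat[active] += 1.0                       # estimated currents concentrate on the source
print(auc_rank(current_energy(S_hat), active))
```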

Fig. 7.

Objective quality metric scores for different simulation scenarios. The mean squared error scores for the estimated currents and hemodynamic response functions are denoted as MSE EEG and MSE fMRI, respectively. The area under the ROC curve for EEG is denoted as AUC EEG. For mean squared error scores lower values are better while a value of 1.0 indicates the best performance in terms of AUC EEG. The error bars indicate the 95% confidence intervals.

We observe that the proposed method clearly outperforms the other evaluated methods for medium and high EEG SNRs (20 dB and 25 dB), except for the EH scenario, where the MSP method performs better. Such a result is not unexpected, however, since the EH scenario, which uses one source that is only electrically active and another source that is only hemodynamically active, fundamentally violates the assumption that motivates EEG/fMRI fusion, i.e., that a subset of activity is detectable by both modalities. A method that does not use fMRI information has an advantage in this case since it is not biased towards fMRI-active locations. The results for scenario EH also show that the proposed method is more robust against disagreements between EEG and fMRI than the other EEG/fMRI fusion methods (BASTERF and fWMN). Also note that whenever there is strong agreement between EEG and fMRI (scenarios CPX and MM), the fusion methods (proposed, BASTERF, and fWMN) clearly outperform the EEG-only methods (MSP, LORETA, and MNE). It is also interesting to note that all fusion algorithms perform worse when there are current sources that are hemodynamically inactive (scenario ME) than when there are spurious hemodynamic sources (scenario MH), which is in agreement with previously reported results (Liu et al., 1998; Ahlfors and Simpson, 2004; Daunizeau et al., 2005; Daunizeau et al., 2007). As expected, the performance of all evaluated methods degrades when the EEG SNR is lowered to 15 dB. The performance of some methods degrades more than that of others; e.g., the advantage of the proposed method over the BASTERF method typically becomes clearer at lower SNR. A surprising result is that the fWMN method performs better than the other fusion methods for the CPX scenario at a low SNR, although the same is not true for the other simulation scenarios. This is possibly again due to the unfair advantage of the fWMN method, whose weights are obtained from the true source locations. Fig. 7 also shows that the proposed method clearly outperforms the BASTERF method in terms of the MSE of the hemodynamic response functions, which can mainly be attributed to the spatially adaptive temporal smoothness priors used in the proposed method. Another observation is that the reconstruction of the HRFs is largely unaffected by the EEG SNR and by the agreement between EEG and fMRI, and mainly depends on the number of hemodynamically active sources (CPX: 4 sources; MM, MH: 2 sources; ME, EH: 1 source). This result is not unexpected since, unlike the estimation of S, the estimation of H is not a localization problem, i.e., it is not possible to use a source configuration with different source locations and obtain the same observation (assuming no noise). Hence, our results indicate that for realistic fMRI SNRs the estimation of the HRFs does not benefit from the EEG information.

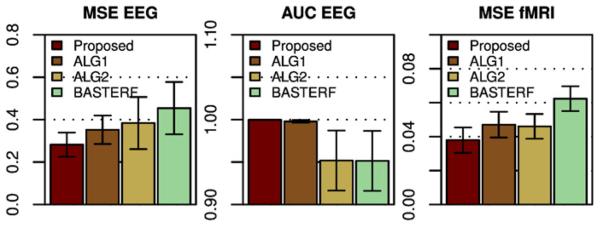

The advantage of the proposed method comes from the improved prior model, which consists of a spatially adaptive TV prior for the spatial profile and spatially adaptive temporal priors for the estimated currents and HRFs. An interesting question is how each of these priors affects the estimation performance. We address this question by repeating the simulations of the CPX scenario with two modified versions of the proposed method, in each of which one prior is replaced with the corresponding prior used in the BASTERF method. More specifically, the first method (denoted ALG1) adopts the spatial Laplacian prior from BASTERF to model w and employs spatially adaptive temporal priors to model X and Z, while the second method (denoted ALG2) uses a TV prior together with the temporal priors from BASTERF, which are not spatially adaptive. As can be seen from the results in Fig. 8, both improved priors contribute to the better performance in terms of MSE EEG and MSE fMRI. Interestingly, for the area under the ROC curve (AUC EEG), the methods that use spatially adaptive temporal priors (proposed and ALG1) achieve higher scores than the methods that use temporal priors without spatial adaptivity (ALG2 and BASTERF). While we only show results for the CPX scenario, these results are typical and agree with our observation that both parts (spatial and temporal) of the improved prior model contribute to the higher performance of the proposed method.

Fig. 8.

Results for the CPX scenario (SNR EEG=20 dB) for the proposed method, the BASTERF method, and intermediate methods, denoted by ALG1 and ALG2. The method ALG1 uses a Laplacian spatial prior (as in BASTERF) together with spatially adaptive temporal priors (as in the proposed method) and ALG2 uses a TV prior (as in the proposed method) together with temporal priors that are not spatially adaptive (as in BASTERF). It can be seen that the improved spatial prior as well as the improved temporal priors contribute to the higher performance of the proposed method. The error bars indicate the 95% confidence intervals.

To conclude this evaluation, we also mention the run times and convergence properties of the evaluated algorithms. While the more complex symmetrical models used by the proposed and the BASTERF methods allow for higher performance, they come at the cost of higher computational complexity. For the simulations used in this evaluation, all methods except the proposed and the BASTERF methods require less than 1 s per simulation. The symmetrical fusion methods (proposed and BASTERF) are significantly more complex, and both require about 10 s per iteration (on a standard 2.6 GHz PC). The time per iteration is about the same for both methods because the computationally most expensive operations are matrix inversions, and both methods perform matrix inversions of the same order in each iteration, i.e., they have the same per-iteration time complexity. Since both methods typically require several hundred iterations to converge, one simulation takes on the order of 1 h.

Application to real data

In this section, we demonstrate the performance of the proposed method on a real data set. The EEG and fMRI data was acquired for a multimodal study on face perception; details of the experimental paradigm can be found in Henson et al. (2003), and the data is available at http://www.fil.ion.ucl.ac.uk/spm/data/mmfaces/. The experiment involved subjects making symmetry judgments on pictures of familiar faces, unfamiliar faces, and scrambled faces. In the following, familiar and unfamiliar faces are combined to create the face condition (F), whereas scrambled faces form the scrambled face condition (S). The available data set contains the data of one subject (male, 33 years old, neurologically healthy).

EEG data

The EEG data was collected using a 128-channel BioSemi ActiveTwo system with two additional electrodes, one on each earlobe, and a sampling rate of 2048 Hz. Faces and scrambled faces were presented in random order for 600 ms, every 3600 ms. Data was collected in two (identical) sessions; 86 faces and 86 scrambled faces were presented in each session. The EEG data was downsampled to 200 Hz, referenced to the average across all channels, and epoched from −100 ms to 600 ms. Trials for which the voltage exceeded 120 μV at any channel were rejected, leaving a total of 136 trials for faces and 134 trials for scrambled faces. The remaining trials were baseline corrected from −100 ms to 0 ms and averaged to create one ERP for the face condition and one ERP for the scrambled face condition.
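
The preprocessing steps above can be sketched as follows. The Python example assumes the data is already downsampled to 200 Hz (the 2048 Hz to 200 Hz conversion is a rational factor of 25/256 and could, for example, be done with `scipy.signal.resample_poly`). It also assumes that the rejection criterion is an absolute-voltage threshold and that onsets are given in samples. The function name and the synthetic data are illustrative, and the epochs span [−100, 600) ms.

```python
import numpy as np


def erp_from_continuous(eeg, onsets, fs=200, tmin=-0.1, tmax=0.6, reject_uv=120.0):
    """Average-reference, epoch, reject, baseline-correct, and average EEG.

    eeg: channels x samples array in microvolts, assumed already at sampling rate fs.
    onsets: stimulus onsets in samples. Returns (erp, number of kept trials).
    """
    eeg = eeg - eeg.mean(axis=0, keepdims=True)          # average reference
    pre, post = int(round(-tmin * fs)), int(round(tmax * fs))
    epochs = []
    for onset in onsets:
        if onset - pre < 0 or onset + post > eeg.shape[1]:
            continue                                      # epoch runs off the recording
        ep = eeg[:, onset - pre: onset + post]
        if np.abs(ep).max() > reject_uv:
            continue                                      # reject trial (assumed |V| threshold)
        ep = ep - ep[:, :pre].mean(axis=1, keepdims=True) # baseline correction, -100 to 0 ms
        epochs.append(ep)
    return np.mean(epochs, axis=0), len(epochs)


rng = np.random.default_rng(9)
fs = 200
eeg = 10.0 * rng.standard_normal((128, 60 * fs))         # 60 s of synthetic EEG (microvolts)
onsets = np.arange(fs, 55 * fs, int(3.6 * fs))           # one stimulus every 3600 ms
eeg[5, onsets[2] + 50] = 500.0                           # artifact in the third trial
erp, n_kept = erp_from_continuous(eeg, onsets, fs=fs)
print(erp.shape, n_kept)
```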

EEG forward model

The EEG forward operator, i.e., the lead field matrix L, was calculated using a BEM method implemented in FieldTrip (http://fieldtrip.fcdonders.nl). Subject-specific meshes were used for the calculation; the cortex mesh was obtained from a high-resolution T1-weighted structural MRI (1 mm³ resolution) of the subject using BrainVisa 3.2 (http://brainvisa.info). The high-resolution cortex mesh obtained by BrainVisa was downsampled to 5998 vertices. The remaining meshes needed for the BEM calculation, namely the scalp, outer skull, and inner skull meshes, were obtained as follows. A nonlinear inverse normalization transform was calculated from the T1-weighted structural MRI of the subject using SPM8 (http://www.fil.ion.ucl.ac.uk/spm/). This transform was used to warp template scalp, outer skull, inner skull, and cortex meshes (included in SPM8) from standard space into subject-specific space. The meshes were then used, together with electrode locations obtained using a Polhemus Isotrak digitizer, as inputs to the BEM method.

fMRI data

The fMRI data was collected in two sessions; 64 faces and 86 scrambled faces were presented in each session. The experimental paradigm differed slightly from that used for EEG: the stimuli were again presented for 600 ms, but the time between trials was randomly distributed between 3 s and 18 s to allow for estimation of the HRF. The data was acquired using a gradient-echo EPI sequence on a 3 T Siemens TIM Trio scanner with 32 slices, a voxel size of 3×3×3 mm (0.75 mm gap between slices), and a TR of 2 s. For each session, 390 volumes were obtained. The fMRI data was preprocessed using SPM8, which involved the following steps: slice timing correction to account for the descending slice order, realignment for motion correction using 4th-degree B-spline interpolation, co-registration with the T1-weighted structural MRI of the subject, and spatial smoothing using a symmetric Gaussian kernel with a full width at half maximum (FWHM) of 8 mm. To use the fMRI data as input to the fusion algorithm, the volumetric data has to be interpolated onto the cortical surface, i.e., onto the cortical mesh of 5998 vertices that was also used for the EEG BEM model. We use the method proposed in Grova et al. (2006) to perform the interpolation. The method uses a binary gray matter mask to construct a 3D geodesic Voronoi diagram with one Voronoi cell for each vertex of the mesh. The interpolated value at a given vertex is then obtained by averaging the voxels belonging to the Voronoi cell associated with that vertex. Compared to simpler interpolation methods, such as integrating over a sphere around each vertex, this method has the advantage that each gray matter voxel is associated with exactly one vertex. Therefore, no signal mixing occurs between neighboring vertices, and no signal is lost because gray matter voxels lie too far from the closest vertex. Here, the gray matter mask was obtained from the T1-weighted structural MRI using BrainVisa 3.2.
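The key property of the Voronoi interpolation, namely that every gray matter voxel is assigned to exactly one vertex and each vertex receives the mean of its own voxels, can be sketched as below. This is a simplified illustration, not the method of Grova et al. (2006): it substitutes a Euclidean nearest-vertex assignment (via `scipy.spatial.cKDTree`) for the geodesic distances computed inside the gray matter mask, and the function name `project_to_vertices` is hypothetical.

```python
import numpy as np
from scipy.spatial import cKDTree


def project_to_vertices(vol, gm_mask, voxel_xyz, vertices):
    """Average gray-matter voxel time series within the cell of each vertex.

    vol       : (n_time, n_voxels) fMRI time series
    gm_mask   : (n_voxels,) boolean gray-matter mask
    voxel_xyz : (n_voxels, 3) voxel centres, same space/units as vertices
    vertices  : (n_vertices, 3) cortical mesh vertex coordinates
    Returns an (n_time, n_vertices) array; vertices with empty cells are NaN.
    """
    gm = np.flatnonzero(gm_mask)
    # Each gray-matter voxel is assigned to exactly one vertex (its nearest),
    # so no signal is shared between cells and no gray-matter voxel is dropped.
    # SIMPLIFICATION: Euclidean nearest vertex, whereas Grova et al. (2006)
    # build the cells with geodesic distances inside the gray-matter mask.
    _, owner = cKDTree(vertices).query(voxel_xyz[gm])
    n_vert = len(vertices)
    sums = np.zeros((n_vert, vol.shape[0]))
    np.add.at(sums, owner, vol[:, gm].T)       # accumulate per-cell sums
    counts = np.bincount(owner, minlength=n_vert)
    with np.errstate(invalid="ignore", divide="ignore"):
        means = sums / counts[:, None]         # 0/0 -> NaN for empty cells
    return means.T
```

Non-gray-matter voxels are ignored entirely, mirroring the role of the binary mask in the original method.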
After interpolation of the fMRI data for each session onto the cortical mesh, low-frequency drifts were removed by fitting a third-order polynomial to the fMRI time course of each vertex and subtracting it. The interpolated data from the two sessions were then concatenated and upsampled by a factor of 2 to obtain a pseudo TR of 1 s, resulting in an fMRI data matrix Y of size 1560×5998 (2 sessions × 390 volumes × 2).
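The drift removal, concatenation, and upsampling steps can be sketched as follows. The text does not state how the upsampling was done; linear interpolation with `np.interp` is our assumption, and the helper names `detrend_poly` and `build_fmri_matrix` are hypothetical.

```python
import numpy as np


def detrend_poly(y, order=3):
    """Subtract a least-squares polynomial trend from every column of y."""
    t = np.linspace(-1.0, 1.0, y.shape[0])       # rescaled time, better conditioned
    X = np.vander(t, order + 1)                  # columns t^3, t^2, t, 1
    coef, *_ = np.linalg.lstsq(X, y, rcond=None) # fit all vertices at once
    return y - X @ coef


def build_fmri_matrix(sessions, tr=2.0, factor=2):
    """Detrend each session, concatenate, and upsample in time by `factor`.

    sessions : list of (n_scans, n_vertices) arrays, e.g. two 390 x 5998 arrays
    """
    y = np.vstack([detrend_poly(s) for s in sessions])
    t_old = np.arange(y.shape[0]) * tr
    t_new = np.arange(y.shape[0] * factor) * (tr / factor)
    # Linear interpolation per vertex (assumption); np.interp holds the last
    # value for the final half-TR sample, giving exactly factor * n rows.
    return np.column_stack([np.interp(t_new, t_old, y[:, v])
                            for v in range(y.shape[1])])
```

With two sessions of 390 scans this yields 1560 rows; the even rows coincide with the original (detrended) samples.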

Noise estimates

The proposed method uses two noise-only data segments, M0 and Y0 for EEG and fMRI, respectively, to obtain the noise precision hyperparameters via Eqs. (27) and (29). The pre-stimulus time window from −100 ms to −5 ms was used to obtain an EEG noise matrix M0 of size 128×20. For fMRI, the data segment Y0 would ideally be taken from a sufficiently long time window without event onsets, so that it can be assumed to contain only noise (measurement noise from the MRI scanner and noise from other sources such as spontaneous brain activity). Unfortunately, the fMRI data provided in the data set does not contain such a period. To obtain an initial noise estimate nonetheless, note that the SNR for fMRI is very low and only a small number of brain regions exhibit significant task-induced hemodynamic activity. Therefore, calculated across the whole brain and over a long time window, the power of the event-related signal is negligible compared to the noise power. Hence, we simply used the data from the first 30 s of the experiment, i.e., the first 30 rows of Y, as Y0. By the above argument, the resulting noise estimate should be reasonably accurate, although the estimated noise power may be slightly larger than the true noise power because of event onsets during the first 30 s of the experiment. We expect this inaccuracy to have little effect on the result, since the noise precision is mostly estimated by the fusion algorithm itself.
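The extraction of the noise segments, and the argument that residual event-related signal in Y0 slightly inflates the noise power (i.e., lowers the precision), can be illustrated as below. Eqs. (27) and (29) are not reproduced in this section, so `noise_precision` is a hypothetical stand-in: the maximum-likelihood precision 1/mean(x²) of zero-mean i.i.d. Gaussian noise pooled over all entries. The other function names are also illustrative.

```python
import numpy as np


def eeg_noise_segment(erp, epoch_start=-0.100, t_start=-0.100, t_stop=-0.005,
                      fs=200):
    """Columns of an (n_channels, n_times) ERP falling in [t_start, t_stop] s."""
    i0 = int(round((t_start - epoch_start) * fs))
    i1 = int(round((t_stop - epoch_start) * fs)) + 1   # inclusive end point
    return erp[:, i0:i1]                                # 128 x 20 for this data


def fmri_noise_segment(Y, seconds=30, pseudo_tr=1.0):
    """First `seconds` of the (n_scans, n_vertices) fMRI matrix Y."""
    return Y[:int(round(seconds / pseudo_tr))]          # first 30 rows of Y


def noise_precision(segment):
    """HYPOTHETICAL stand-in for Eqs. (27)/(29): ML precision 1 / mean(x^2)
    of zero-mean i.i.d. Gaussian noise, pooled over all entries."""
    return 1.0 / np.mean(np.asarray(segment, dtype=float) ** 2)
```

Adding a small signal component to pure noise increases mean(x²), so the estimated precision can only decrease, which is the direction of the bias described above.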

Application of the fusion algorithm

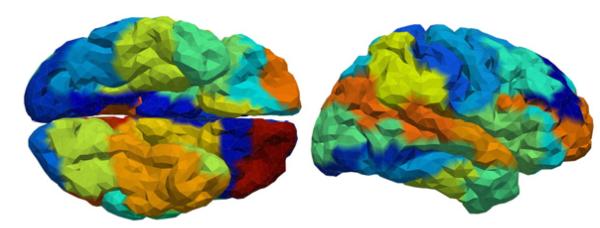

The preprocessed EEG and fMRI data were used as inputs to the proposed EEG/fMRI fusion method as well as to the BASTERF method (Daunizeau et al., 2007), which was included for comparison. The fusion methods were applied to each condition (face and scrambled face) separately. Prior to applying the algorithms, the cortical mesh was parcellated into 48 regions using the procedure described in Appendix A; the parcellation is illustrated in Fig. 9. The size of the EEG data matrix M was 128×61, corresponding to a time window from 0 ms to 300 ms after event onset. The length of the HRF was set to 20 s, resulting in a design matrix B of size 1560×20. The design matrix was obtained using Eq. (4) with an experimental time course that was zero everywhere except at the onset times of the condition of interest, where it was set to 1.
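Eq. (4) is not reproduced in this section; assuming it is the usual convolution form, in which column k of B is the experimental time course delayed by k scans so that B h equals the time course convolved with the HRF h, the design matrix can be sketched as below. The function name and the row indexing of onsets in the concatenated, upsampled data (session-2 onsets shifted by 780 rows, i.e., 390 scans × 2 s at a pseudo TR of 1 s) are our assumptions.

```python
import numpy as np


def design_matrix(onset_rows, n_scans=1560, hrf_len=20):
    """Convolution (Toeplitz) design matrix B such that B @ h = (u * h)[:n_scans].

    onset_rows : row indices (pseudo TR = 1 s) of the onsets of the condition
                 of interest in the concatenated fMRI matrix Y
    hrf_len    : number of HRF samples (20 s at a pseudo TR of 1 s)
    """
    u = np.zeros(n_scans)
    u[np.asarray(onset_rows, dtype=int)] = 1.0   # 1 at onsets, 0 elsewhere
    B = np.zeros((n_scans, hrf_len))
    for k in range(hrf_len):
        B[k:, k] = u[:n_scans - k]               # column k: u delayed by k scans
    return B
```

Each row of B then collects the onsets that occurred 0 to 19 s earlier, so overlapping responses from closely spaced events add linearly.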

Fig. 9.

Ventral (left) and right lateral (right) views of the cortical mesh showing the parcellation of 5998 vertices into 48 regions.

Results

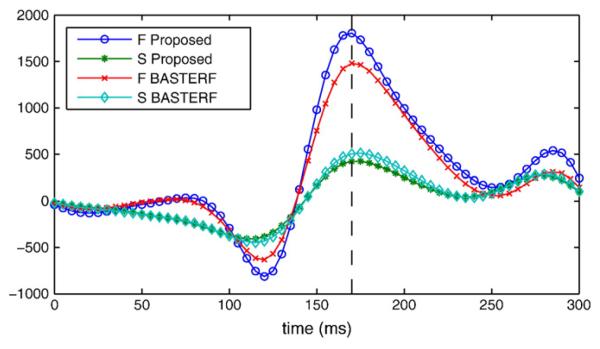

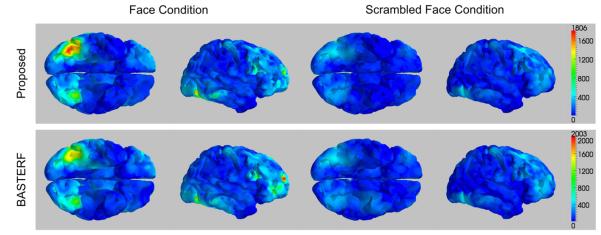

Previous EEG studies (Henson et al., 2003) have shown that the difference between the face (F) and scrambled face (S) conditions is apparent in a negative component over the right occipito-temporal channels at about 170 ms after event onset, known as the N170. This effect is clearly visible in the estimated current waveforms of the dipoles in the right fusiform region, as illustrated in Fig. 10. Notice that the difference between the F and the S condition is larger for the proposed method than for the BASTERF method. This difference between the methods can likely be attributed to the spatial adaptivity of the proposed method, which allows for more focal sources with adaptive temporal smoothness.

Fig. 10.

Estimated current waveforms for a dipole in the right fusiform region. The dipole was selected as the dipole with the maximum current magnitude over all time instants for the face condition and the proposed method. The difference between the face (F) and the scrambled face (S) condition at t=170 ms is clearly visible. Note that the difference is larger for the proposed method than for the BASTERF method.
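The dipole selection rule in the caption of Fig. 10 and the F minus S comparison at 170 ms can be written as a small sketch. `J_face` and `J_scr` are hypothetical (n_dipoles × 61) matrices of estimated currents for the 0 to 300 ms window at 200 Hz, in which 170 ms corresponds to sample index 34; the function names are illustrative.

```python
import numpy as np


def peak_dipole(J_face):
    """Row index of the dipole with the largest |current| over all time instants."""
    return int(np.unravel_index(np.argmax(np.abs(J_face)), J_face.shape)[0])


def face_minus_scrambled(J_face, J_scr, t_ms=170.0, window_start_ms=0.0, fs=200):
    """F - S current at t_ms for the dipole selected from the face condition.

    J_face, J_scr : (n_dipoles, n_times) estimated currents, window starting
                    at window_start_ms and sampled at fs Hz
    """
    d = peak_dipole(J_face)
    i = int(round((t_ms - window_start_ms) * fs / 1000.0))   # 170 ms -> 34
    return d, J_face[d, i] - J_scr[d, i]
```

Note that the dipole is chosen from the face condition only and then reused for the scrambled condition, so both waveforms in Fig. 10 refer to the same cortical location.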

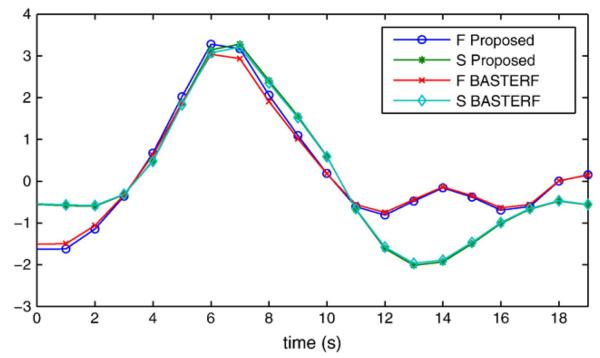

The hemodynamic response functions estimated by the two methods are mostly similar, as shown in Fig. 11. This similarity indicates that, for this particular example, the improved prior model has little influence on the estimates. A likely explanation is that a large amount of fMRI data (86 event onsets for each condition) is available for estimating the HRFs. Hence, in the Bayesian methods the data dominate the priors, and the particular type of prior used has less influence on the estimate. The distributions of the current magnitudes for the F and S conditions at 170 ms are shown in Fig. 12. The results for both the proposed and the BASTERF methods are generally consistent with previously reported EEG source localization results for the same data (Trujillo-Barreto et al., 2008; Friston et al., 2008). There is bilateral activity in the fusiform region with emphasis on the right side, as well as activity in the right superior temporal sulcus and the right middle frontal gyrus. Compared to previously reported results, the current sources, especially those in the bilateral fusiform regions, are more clearly separated from inactive regions, as is evident from the sharp boundaries between active and inactive regions in Fig. 12. This effect can be explained by the fact that the evaluated EEG/fMRI fusion methods use fMRI information, which allows for more accurate source localization and estimation of the spatial extent of the sources. While the current distributions estimated by the proposed method and the BASTERF method are quite similar, the proposed method obtains sharper boundaries and therefore a better localization of the brain activity. Both methods also find some activity in the medial superior frontal region, which is inconsistent with previous EEG source localization results (Trujillo-Barreto et al., 2008; Friston et al., 2008).
Notice that for the BASTERF method, the dipole with the largest magnitude at 170 ms is located in the medial superior frontal region and not in the right fusiform region. More recent MEG results (Henson et al., 2007) show some activity in the medial superior frontal region for some subjects, which suggests that previous EEG source localization studies may not have reported this activity simply because the employed methods failed to detect it. On the other hand, activity in the medial superior frontal region for fMRI and a positivity in the frontocentral electrodes for EEG at 550 ms have been reported to be related to the familiarity of faces (Henson et al., 2003). While not shown here, both fusion methods find some hemodynamic activity in the medial superior frontal region. This activity is much weaker than the activity in the fusiform region, but it may in fact be related to electrical activity occurring at 550 ms, i.e., outside the EEG time window used in our analysis. The currents in the medial superior frontal region found by the fusion algorithms may therefore be spurious estimates caused by hemodynamic activity that is related to electrical activity outside the time window of interest. This behavior illustrates a possible shortcoming of EEG/fMRI fusion methods: since the estimated hemodynamic response function is much longer than the EEG time window of interest, information about cortical activity occurring after 300 ms enters the fusion process, which leads to invalid fMRI location priors in the time-invariant spatial profile w. While both the proposed method and the BASTERF method have some robustness against spurious hemodynamic sources, the current estimates are still biased towards regions with hemodynamic activity, so the currents in the medial superior frontal region at 170 ms may be artifacts of invalid fMRI location priors.
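The explanation given above for the similar HRF estimates, namely that with many event onsets the data dominate the prior, can be made concrete in the simplest conjugate setting. This is a toy scalar Gaussian model chosen for illustration, not the hierarchical model of the paper: with a prior h ~ N(mu0, 1/alpha) and n observations y_i ~ N(h, 1/beta), the posterior mean is a weighted average whose prior weight alpha / (alpha + n·beta) shrinks as n grows.

```python
import numpy as np


def prior_weight(alpha, beta, n):
    """Weight of the prior mean in the posterior mean of a scalar Gaussian model.

    Prior h ~ N(mu0, 1/alpha); n observations y_i ~ N(h, 1/beta).
    Posterior mean = w * mu0 + (1 - w) * mean(y), w = alpha / (alpha + n * beta).
    """
    return alpha / (alpha + n * beta)


def posterior_mean(y, mu0, alpha, beta):
    """Posterior mean of h given observations y (conjugate Gaussian update)."""
    y = np.asarray(y, dtype=float)
    w = prior_weight(alpha, beta, y.size)
    return w * mu0 + (1.0 - w) * y.mean()


# With alpha = beta = 1, a single observation gives the prior half the weight,
# whereas 86 observations (the onset count quoted above) give it only 1/87.
for n in (1, 10, 86):
    print(n, prior_weight(1.0, 1.0, n))
```

In this toy model, any two reasonable priors therefore lead to nearly the same estimate once n is large, which is consistent with the similar HRFs in Fig. 11.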

Fig. 11.

Estimated hemodynamic response functions for a vertex in the right fusiform region corresponding to the location of the dipole used in Fig. 10.

Fig. 12.

Distributions of the current magnitudes at t=170 ms for the multimodal face data. Results obtained by the proposed method are shown in the top panel while the bottom panel shows the results obtained by the BASTERF method. The color maps are scaled to the range of the current magnitudes for the face condition for each algorithm.

Conclusions

In this paper, we proposed a novel symmetrical EEG/fMRI fusion method. The method utilizes a hierarchical generative model with a symmetrical structure that explains both the EEG and the fMRI observations. In contrast to previous symmetrical fusion methods, the proposed method uses spatially adaptive signal priors, leading to improved performance. Specifically, the use of a total variation (TV) prior allows for sharp boundaries between active and inactive brain regions. Unlike LORETA-type (Pascual-Marqui et al., 1994) spatial priors, the TV prior is spatially adaptive: it not only imposes spatial smoothness but also allows for abrupt changes in brain activity at the boundaries of active regions. We also assume that each response is temporally smooth but that the degree of smoothness varies from one spatial location to another; this is modeled with a spatially adaptive temporal smoothness prior. We use a fully Bayesian formulation with variational Bayesian inference. The method utilizes a spatially adaptive bound on the TV prior, which makes the calculation of the variational posterior approximation possible.
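Why a TV prior tolerates sharp boundaries while a quadratic smoothness prior does not, and how a quadratic upper bound makes the TV term tractable, can be seen on a toy chain of 8 vertices. The sketch uses simplified stand-ins: an edge-wise absolute-difference TV and a quadratic first-difference penalty in place of the paper's mesh TV and the LORETA Laplacian; the bound |d| ≤ (d² + u) / (2√u), tight at u = d², is the standard majorizer for such terms, and giving each term its own u mimics, but is not necessarily identical to, the paper's spatially adaptive bound.

```python
import numpy as np


def edge_diffs(x, edges):
    """Differences x_i - x_j over a list of (i, j) mesh edges."""
    i, j = np.asarray(edges).T
    return x[i] - x[j]


def tv_penalty(x, edges):
    """Simplified TV: sum of absolute differences across edges."""
    return np.abs(edge_diffs(x, edges)).sum()


def quadratic_penalty(x, edges):
    """Quadratic smoothness (stand-in for a LORETA-type prior)."""
    return (edge_diffs(x, edges) ** 2).sum()


def tv_upper_bound(x, edges, u):
    """Quadratic majorizer of TV: |d| <= (d^2 + u) / (2 sqrt(u)) for each u > 0,
    with equality when u = d^2; u may differ per edge (spatially adaptive)."""
    d2 = edge_diffs(x, edges) ** 2
    return ((d2 + u) / (2.0 * np.sqrt(u))).sum()


chain = [(k, k + 1) for k in range(7)]                      # 8 vertices on a line
step = np.array([0, 0, 0, 0, 1, 1, 1, 1], dtype=float)      # sharp boundary
ramp = np.array([0, 0, 0.25, 0.5, 0.75, 1, 1, 1])           # blurred boundary

# TV charges both profiles the same; the quadratic penalty makes the sharp
# step four times more expensive than the blurred ramp.
print(tv_penalty(step, chain), tv_penalty(ramp, chain))
print(quadratic_penalty(step, chain), quadratic_penalty(ramp, chain))
```

Because the bound is quadratic in x for fixed u, it keeps the variational updates Gaussian, and re-estimating u from the current differences tightens it where edges are active.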

We used simulations with synthetic EEG and fMRI data and objective quality metrics to evaluate the proposed method and to compare it with existing methods. In terms of estimating the spatio-temporal cortical current distribution, our results show that the proposed method outperforms existing methods in simulation scenarios with high agreement between EEG and fMRI, i.e., scenarios where the sources of cortical activity are detectable by both modalities. In situations with strong disagreement between EEG and fMRI, the performance of the proposed method was slightly lower than that of the EEG-only MSP method but higher than that of the other fusion methods, suggesting that the proposed method is more robust against disagreement between EEG and fMRI than the other fusion methods. In terms of estimating the hemodynamic response function, the proposed method consistently outperformed the BASTERF method (Daunizeau et al., 2007), which can be attributed to the improved prior model.