Abstract

Perception entails interactions between activated brain visual areas and the records of previous sensations, allowing for processes like figure–ground segregation and object recognition. The aim of this study was to characterize top-down effects that originate in the visual cortex and that are involved in the generation and perception of form. We performed a functional magnetic resonance imaging experiment in which subjects viewed 3 groups of stimuli comprising oriented lines with different levels of recognizable high-order structure (none, collinearity, and meaning). Our results showed that recognizable stimuli caused larger activations in anterior visual and frontal areas. In contrast, when stimuli were random or unrecognizable, activations were greater in posterior visual areas, following a hierarchical organization in which areas V1/V2 were less active with “collinearity” and the middle occipital cortex was less active with “meaning.” An effective connectivity analysis using dynamic causal modeling showed that high-order visual form engages higher visual areas, at multiple levels of the visual hierarchy, that generate top-down signals. These results are consistent with a model in which, if a stimulus has recognizable attributes such as collinearity and meaning, the areas specialized for processing these attributes send top-down messages to lower levels to facilitate more efficient encoding of visual form.

Keywords: DCM, fMRI, form, visual

Introduction

To learn about the environment and interact adaptively with it, humans need to extract regularities from the incoming sensory signals and make sense of them. This perceptual inference takes place continuously, even without awareness (Reber 1967; Saffran et al. 1996; Chun and Jiang 1998; Perruchet and Pacton 2006), with contributions from all levels of sensory and cognitive processing. In vision, the grouping of elements is based on the extraction of regularities such as collinearity, orientation, or proximity (Wertheimer 1923; Koffka 1935), properties to which neurons in low-level stages, such as V1–V3, are selectively responsive (e.g., orientation: Hubel and Wiesel 1968; Zeki 1978; collinearity: Kapadia et al. 1995). Higher stages appear to be involved in the recognition of arrangements of features that constitute different objects and in their association with particular semantic concepts (Desimone et al. 1984; Tanaka et al. 1991; Price et al. 1996; Ishai et al. 1999, 2000b; Gerlach et al. 2002; Sigala and Logothetis 2002; Vuilleumier et al. 2002; Bar et al. 2006; Gerlach 2009). However, it is not clear how the information extracted at each processing stage influences activity at successive or antecedent levels.

Stages in the visual processing of scenes, involved in processes such as figure–ground segregation and object recognition, are hypothesized to interact with each other through recursive loops of top-down and bottom-up signals (Grossberg 1994; Hupe et al. 1998; Lee et al. 1998; Lamme and Roelfsema 2000; Gerlach et al. 2002), mediated in the brain by forward and backward connections between different visual areas (Rockland and Pandya 1979, 1981; Felleman and Van Essen 1991). Top-down signals can help to disambiguate percepts and make the processing in lower areas more efficient, either by reducing the activity that is inconsistent with the high-level interpretation or by enhancing the activity of populations encoding percepts efficiently or more sparsely (Mumford 1992; Hupe et al. 1998; Grossberg 1999; Rao and Ballard 1999; Friston 2003). In a hierarchical system in which top-down signals originate in several levels, the signal from each functionally specialized level may represent different aspects of the visual input. In this scenario, all top-down influences may complement each other to optimize processing in lower areas.

Single-unit recordings in macaque V1 have shown that the response rate of a neuron increases when an oriented stimulus presented within its receptive field is accompanied by a second, collinear stimulus in the surround of its classical receptive field, whereas the same oriented stimulus presented orthogonal to the main axis produces inhibition or, at least, less facilitation (Kapadia et al. 1995). Responses in V1 are also stronger when a texture is part of a figure than when the same texture is part of the background (Lamme 1995). Given the latencies of these stronger evoked responses, this process is thought to be mediated by excitatory top-down influences.

Evidence obtained using functional magnetic resonance imaging (fMRI) has shown that objects activate intermediate areas (such as V3A, V4, and the lateral occipital complex [LOC]) more strongly than do scrambled images (Malach et al. 1995; Grill-Spector et al. 1998b) and that real objects cause stronger activations than nonreal objects in high-level areas such as the middle occipital, inferior temporal, middle temporal, fusiform, and inferior frontal cortex (Price et al. 1996; Gerlach et al. 2002; Vuilleumier et al. 2002). Activations are also higher in the precuneus, medial parietal cortex, and fusiform gyrus when the same visual pattern is recognized as a meaningful image than when it is not (Dolan et al. 1997; Kanwisher et al. 1998; Andrews and Schluppeck 2004; McKeeff and Tong 2007). This suggests that higher visual areas are recruited if high-order regularities can be extracted from stimuli (i.e., objects vs. scrambled objects; meaningless objects vs. meaningful objects). In areas V1, V2, and V3, it has been demonstrated that collinear lines cause higher activations than randomly oriented lines (an effect that was even larger in the LOC) (Altmann et al. 2003; Kourtzi et al. 2003; Cardin and Zeki 2005) and that responses are enhanced when forms have a global coherence (i.e., responses are higher when a group of visual features is organized into a coherent global shape than when the features lose this global arrangement because of reorientation or sequential, instead of simultaneous, presentation; Ban et al. 2006; McMains and Kastner 2009). Again, these effects are probably mediated by top-down signals, given that the local information in the stimuli is the same.

In contrast, higher activations have also been shown in V1 with a random arrangement of lines than with closed shapes (Murray et al. 2002; Dumoulin and Hess 2007) and with incoherent than with coherent motion (McKeefry et al. 1997; Harrison et al. 2007), effects also thought to be mediated by top-down mechanisms. Furthermore, Fang et al. (2008) have shown a reciprocal pattern of increased activity in higher level areas and decreased activity in V1 as a function of the perceived coherence of identical moving patterns. These results, showing higher activations in lower visual areas with less coherent patterns, are in agreement with a predictive coding theory of cortical processing, in which higher level areas send top-down signals to lower ones and the system aims to reduce the mismatch between top-down signals and bottom-up inputs (Mumford 1992; Rao and Ballard 1999; Friston 2003).

The evidence from lower areas presented above, which seems at first contradictory, could be reconciled if it is considered that top-down signals aim to reduce the mismatch between the sensory input and the high-level interpretation, and also mediate the enhancement of the activity of populations that are coding the percepts more efficiently.

In summary, images that contain recognizable patterns, such as global structure or previously observed objects, cause greater activations in higher visual areas specialized in processing the recognizable feature. The ensuing signals can then be sent to yet higher areas for processing of higher order attributes of the scene, but they can also be sent back to lower levels to help “disambiguate” the sensory input. These top-down signals are often thought of as constraints or empirical priors that optimize inference at lower levels. If top-down and bottom-up signals influence activity in different visual areas, one might expect coherence in the images to increase the coupling (i.e., the influence that activity in one region exerts over activity in another; Friston 1994) between the brain areas that are critical for perceptual inference. Previous neuroimaging studies have demonstrated top-down effects with connectivity analyses and have proposed that these effects are critical for perceptual inference and could contribute to the disambiguation of sensory inputs (Bar et al. 2006; Summerfield et al. 2006; Kveraga et al. 2007; Eger et al. 2007). Effective connectivity analyses, such as dynamic causal modeling (DCM), aim to estimate and make inferences about the coupling between brain areas and about how this coupling is influenced by experimental manipulations (Friston et al. 2003). Models of effective connectivity are appropriate for situations in which there is a priori knowledge of, and experimental control over, the system under study (Friston 1994; Friston et al. 2003). The approach can therefore be applied to the brain by modeling interactions among neural populations using neuroimaging time series, such as those obtained with fMRI or magnetoencephalography.
The advantage of DCM is that it makes use of the temporal information contained in fMRI data, allowing us to make inferences about the causal relationships of activity patterns in different brain areas (Friston et al. 2003).

Knowing where signals originate and which areas they target should help us understand the role of top-down and bottom-up mechanisms involved in the perception of visual forms. With this in mind, we wanted to investigate whether top-down signals come from more than one higher area and whether they are functionally inhibitory or excitatory.

To answer these questions, we performed an fMRI experiment where subjects were presented with 3 different sorts of images and were asked to perform a symmetry judgment task (to ensure attention to the global configuration and figure–ground segregation without recognition-related judgments; see Methods; Henson et al. 2003; Eger et al. 2005). The stimuli consisted of oriented lines grouped together to produce different levels of recognizable patterns. In the first condition (noncollinear), some lines were oriented differently from the background, forming a nonsense figure that could be segregated from the background lines (Fig. 1). In the second (meaningless), the differently oriented lines were collinear, lending the images a recognizable regularity (i.e., collinearity) even though they were still nonsense objects (Fig. 1). In the third group (meaningful), the lines were collinear but also represented objects such as a pram or a Christmas tree (Fig. 1), allowing subjects to associate the figures in them with semantic concepts. We expected that collinear and meaningful images would engage higher visual areas more than noncollinear, meaningless images, resulting in a differential increase in blood oxygen level–dependent (BOLD) signal. These areas could send outputs to even higher visual areas for further processing and to lower ones to optimize low-level processing. We anticipated an increase in the connectivity between higher and lower areas when collinearity or meaning is present in the stimuli. An analysis of coupling between different areas, using DCM, was performed to test this hypothesis.

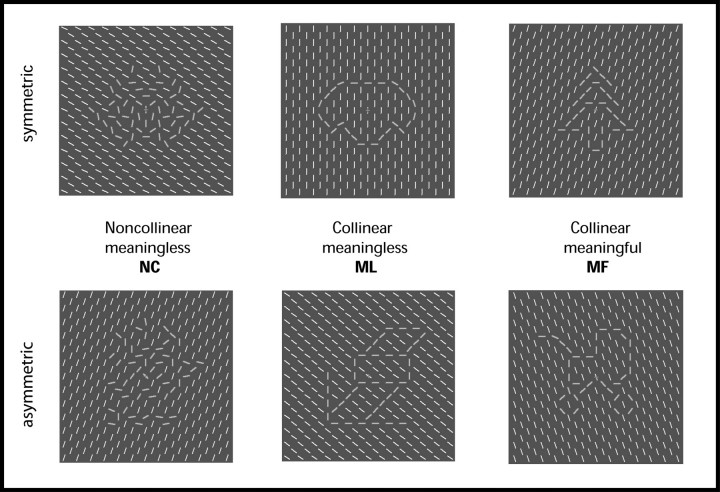

Figure 1.

Examples of the stimuli used in the experiment. There were 3 sorts of stimuli: MF, ML, and NC. Stimuli in each condition were composed of 313 oriented white lines presented against a gray background. Each stimulus was presented for 500 ms, and subjects had to judge if the figures were symmetrical or asymmetrical (see Methods for details). Oriented lines that constituted the figures are shown as thick gray lines for schematic purposes; in the experiment all the lines were white.

Methods

Stimuli and Experimental Design

Seventeen subjects participated in the study. All had normal or corrected-to-normal vision, were at least 18 years old, and had no history of neurological or psychiatric disorders. They all gave informed written consent to participate in the study, in accordance with the Declaration of Helsinki and with the approval of the Ethics Committee of the National Hospital for Neurology and Neurosurgery, London, United Kingdom. One subject was excluded due to problems with recording the button presses, and 2 others were excluded because they fell asleep during the experiment. The results from 14 subjects (10 male, mean age = 28.5 years [range 23–40], 2 left-handed) were analyzed and are reported here.

The stimuli were visual images consisting of a rectangular area (5° vertical and horizontal) filled with 313 oriented white lines against a gray background. They were projected using an LCD projector onto a screen located at a distance of 60 cm, which subjects viewed through an angled mirror. There were 3 stimulus conditions: collinear-meaningful (MF), collinear-meaningless (ML), and noncollinear-meaningless (NC), each of which comprised 70 individual images. For the MF condition, some of the lines were reoriented and made collinear to form abstract representations of meaningful objects (Fig. 1). In the ML condition, lines were collinear but represented figures with no meaning (Fig. 1). Stimuli in the ML and MF groups were matched to have the same number of straight and round components and the same symmetry about the vertical, horizontal, and diagonal axes. An analysis of the spatial frequency components showed no significant differences in the power spectrum between the 2 groups of stimuli (P < 0.05, corrected). Stimuli for the NC condition were generated by recombining the lines (keeping their orientation constant) of all the MF and ML stimuli into new NC figures, maintaining 1) the mean change in orientation for each position, 2) the mean number of lines oriented differently from the background, and 3) the symmetry of the figures. On average (mean ± standard error of the mean), there were 33.31 ± 1.33 lines per image oriented differently from the background in the MF group, 32.13 ± 1.26 in the ML group, and 32.74 ± 2.36 in the NC group.
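As a worked illustration of the display geometry, the physical side length of a stimulus subtending θ = 5° at distance d = 60 cm is s = 2d·tan(θ/2) ≈ 5.24 cm. This assumes that the 60 cm is the full eye-to-screen path via the mirror and that the stimulus is centred on the line of sight; the physical size is not reported in the text, and the helper names below are hypothetical.

```python
import math


def visual_angle_to_size(angle_deg: float, distance_cm: float) -> float:
    """Extent (cm) of a centred object that subtends `angle_deg` at `distance_cm`."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def size_to_visual_angle(size_cm: float, distance_cm: float) -> float:
    """Inverse conversion: visual angle (deg) subtended by `size_cm` at `distance_cm`."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


if __name__ == "__main__":
    side_cm = visual_angle_to_size(5.0, 60.0)  # 5 deg stimulus viewed at 60 cm
    print(f"stimulus side: {side_cm:.2f} cm")   # stimulus side: 5.24 cm
```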

Due to the abstract appearance of the stimuli and to differences between individual subjects in interpreting them, stimuli were classified prior to scanning by a different group of subjects, and only those stimuli that were recognized as meaningful more than 70% of the time, and meaningless less than 30%, were included in the MF condition. The opposite criteria were adopted to include stimuli in conditions ML and NC. After the scanning, subjects were asked to judge the figures as “meaningful” or “meaningless” in a 2-alternative forced-choice (2-AFC) task (see Results), the whole set of stimuli being presented over 2 sessions, with the same presentation and interstimulus time used during the scanning period.
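The stimulus-inclusion rule can be sketched as a simple filter over the norming data. This is a hypothetical helper, assuming each stimulus has a single proportion of “meaningful” responses from binary ratings (so the “meaningless” proportion is its complement); the stimulus IDs in the usage are invented.

```python
from typing import Dict, List


def split_by_rating(p_meaningful: Dict[str, float],
                    upper: float = 0.70,
                    lower: float = 0.30) -> Dict[str, List[str]]:
    """Assign stimuli to candidate pools from the proportion of 'meaningful' ratings.

    Assumes binary ratings, so the 'meaningless' proportion is 1 - p_meaningful.
    """
    pools: Dict[str, List[str]] = {"MF": [], "ML_or_NC": [], "excluded": []}
    for stim_id, p in sorted(p_meaningful.items()):
        if p > upper and (1.0 - p) < lower:
            pools["MF"].append(stim_id)        # reliably judged meaningful
        elif p < lower and (1.0 - p) > upper:
            pools["ML_or_NC"].append(stim_id)  # reliably judged meaningless
        else:
            pools["excluded"].append(stim_id)  # ambiguous: not used
    return pools


if __name__ == "__main__":
    print(split_by_rating({"pram": 0.9, "blob": 0.1, "odd": 0.5}))
```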

Subjects participated in 4 scanning runs of 8–10 min each. The whole set of 210 stimuli (70 per condition) was presented twice: once in the first 2 runs and again in the following 2 runs. The order of stimuli was counterbalanced across runs and subjects. The flicker of a red central fixation cross indicated the beginning of each trial. After a fixation period of 2–2.7 s, a stimulus was presented for 500 ms. Subjects’ task was to determine, in a 2-AFC task, whether the stimulus was symmetric or asymmetric across the vertical axis. The task was chosen to ensure that subjects paid attention to the whole image and separated the figures from the background, without making any semantic evaluation. Similar symmetry judgment tasks have been used before to ensure configural processing without explicit semantic elaboration (Henson et al. 2003; Eger et al. 2005). Subjects were instructed to answer as fast as possible, without compromising accuracy, by pressing a button with their right or left hand. The hand used to respond “symmetric” and “asymmetric” switched between runs, with the order counterbalanced across subjects. As soon as subjects made a response, the fixation cross flickered, indicating the beginning of a new trial. If no response was made after 7 s, the next trial started automatically. Each condition had the same number of symmetric and asymmetric figures. In each session, there were 20–40 null events. Because the main purpose of the symmetry judgment task was to ensure attention to the stimuli and efficient figure–ground segregation, rather than to study symmetry itself, the symmetric and asymmetric images within each form group are not equated for low-level features (the form groups are equated with one another, but within a given form group, symmetric and asymmetric images are not). In particular, the orientation of the given lines with respect to the background, and the number of lines oriented differently from the background orientation, differ between symmetric and asymmetric images. Therefore, we did not analyze the effect of symmetry on brain activity, since any result obtained from this analysis could not be uniquely interpreted in terms of this variable.
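Because each trial ends at the response, stimulus onsets depend on RT. The sketch below lays out one self-paced run under stated assumptions: fixation jitter drawn uniformly from 2–2.7 s, the 7-s timeout counted from stimulus onset (the text does not state the reference point), and null events omitted. All names are hypothetical; this is not the Cogent script used in the study.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Sequence

FIXATION_RANGE_S = (2.0, 2.7)
STIMULUS_S = 0.5
TIMEOUT_S = 7.0  # assumption: counted from stimulus onset


@dataclass
class Trial:
    condition: str
    stim_onset_s: float      # figure appears (event onset for the GLM)
    stim_offset_s: float     # figure disappears after 500 ms
    rt_s: Optional[float]    # None if no response before the timeout
    end_s: float             # response (or timeout); the next trial starts here


def schedule_run(conditions: Sequence[str],
                 rts_s: Sequence[Optional[float]],
                 seed: int = 0) -> List[Trial]:
    """Lay out a self-paced run: jittered fixation, 500-ms stimulus, response-terminated trial."""
    rng = random.Random(seed)
    t = 0.0
    trials: List[Trial] = []
    for cond, rt in zip(conditions, rts_s):
        onset = t + rng.uniform(*FIXATION_RANGE_S)
        if rt is None or rt > TIMEOUT_S:
            rt, end = None, onset + TIMEOUT_S   # no response: trial times out
        else:
            end = onset + rt
        trials.append(Trial(cond, onset, onset + STIMULUS_S, rt, end))
        t = end
    return trials


if __name__ == "__main__":
    for trial in schedule_run(["MF", "ML", "NC"], [1.1, None, 0.9]):
        print(trial)
```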

Before the experimental sessions, subjects performed a practice session inside the scanner with a set of 30 stimuli (different from those used in the experiment), to get used to the scanning environment and the task.

All stimuli were designed and displayed using Matlab v6.5 (Mathworks Inc.) and Cogent (www.vislab.ucl.ac.uk).

Eye Movements

To verify that eye movements did not differ between conditions, we conducted a separate experiment outside the scanner. Six different subjects took part in 2 experimental sessions, in which the whole set of 210 stimuli (70 per condition) was presented once. The experiment had exactly the same design as the scanning experiment: subjects had to decide, in a 2-AFC task, whether the figures were symmetric or asymmetric. Eye movements were measured with an ASL 5000 eyetracker system (Applied Science Laboratories) with an infrared pan-tilt camera. The percentage of eye movements larger than 0.5° from fixation was 15.5 ± 6.1% for MF, 18.8 ± 8.7% for ML, and 14.2 ± 5.8% for NC, with no significant differences between conditions (F2,10 = 1.79, P = 0.21).
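The 0.5° criterion can be made concrete by converting on-screen gaze offsets to visual angle at the 60-cm viewing distance. This sketch assumes that gaze samples are given in centimetres relative to the fixation cross and that a trial counts as an eye movement if any sample exceeds 0.5°; the text does not specify whether percentages were computed per trial or per sample, and the eyetracker's output format is not modelled. Function names are hypothetical.

```python
import math
from typing import Iterable, Sequence, Tuple

Sample = Tuple[float, float]  # gaze offset from the fixation cross, in cm on the screen


def eccentricity_deg(dx_cm: float, dy_cm: float, distance_cm: float = 60.0) -> float:
    """Angular distance (deg) of a gaze sample from a fixation point straight ahead."""
    return math.degrees(math.atan2(math.hypot(dx_cm, dy_cm), distance_cm))


def percent_trials_leaving_fixation(trials: Sequence[Iterable[Sample]],
                                    threshold_deg: float = 0.5,
                                    distance_cm: float = 60.0) -> float:
    """Percentage of trials in which any gaze sample exceeds `threshold_deg`."""
    n_out = sum(
        any(eccentricity_deg(x, y, distance_cm) > threshold_deg for x, y in samples)
        for samples in trials
    )
    return 100.0 * n_out / len(trials)
```

At 60 cm, 0.5° corresponds to roughly 0.52 cm on the screen, so a 0.6-cm excursion would count as leaving fixation while a 0.1-cm jitter would not.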

Imaging

BOLD contrast–weighted echoplanar images were acquired with a 3T Siemens ALLEGRA scanner fitted with a head coil. Each volume comprised 38 axial slices of 2-mm thickness with 1-mm gaps, with an in-plane resolution of 3 × 3 mm², covering the whole brain with a repetition time (TR) of 2.47 s. The first 5 volumes of each scanning run were discarded to allow for T1 equilibration effects. Images were preprocessed and analyzed using SPM5 (http://www.fil.ion.ucl.ac.uk/spm). They were realigned to the first volume of the first experimental session, resliced to a final voxel resolution of 3 × 3 × 3 mm³, normalized to the Montreal Neurological Institute (MNI) reference brain in Talairach space, and smoothed spatially with a Gaussian kernel of 8-mm full width at half maximum. All coordinates are in MNI space. In all figures, the right hemisphere is shown on the right-hand side of the image.

Main Analysis

The experiment was designed in an event-related manner with a total of 3 different conditions: MF, ML, and NC. For every condition, each stimulus presentation was modeled as a stick function (a boxcar of duration 1/16th of the TR), convolved with SPM5's canonical hemodynamic response function, and entered into a multiple regression analysis to generate parameter estimates for each regressor at every voxel. The natural logarithm of the reaction time (RT) for each stimulus presentation was included as a first-order parametric effect of no interest for each experimental condition (see Results). Null events were modeled in a separate regressor, with the onset specified at the time the fixation period finished. Head movement parameters obtained during the realignment step were included as regressors of no interest, and the subjects’ button presses were modeled as events of no interest. Data were high-pass filtered with a cutoff of 1/128 Hz to remove low-frequency signal drifts. Contrast images were created for each subject and entered separately into voxel-wise 1-sample t-tests (individual contrasts: [MF–ML], [ML–NC], [ML–MF], [NC–ML]; Figs 3 and 4) or one-way analyses of variance (ANOVAs) (conjunction analysis; Fig. 2), in a random effects analysis (Penny et al. 2003), for inference at the between-subject level. Conjunction analyses were performed testing the “conjunction null” hypothesis.
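The construction of one condition's regressors can be sketched with NumPy and SciPy. This is an approximation, not SPM5's code: the HRF uses double-gamma parameters that mimic SPM's canonical defaults, events are placed on a microtime grid of TR/16, and the log-RT modulator is only mean-centred (SPM5 additionally orthogonalizes parametric modulators with respect to the main regressor and samples at a reference slice). Function names are hypothetical.

```python
import numpy as np
from scipy.stats import gamma


def canonical_hrf(dt: float, length_s: float = 32.0) -> np.ndarray:
    """Double-gamma HRF (response ~5 s, undershoot ~15 s, ratio 1:6), normalised to unit sum."""
    t = np.arange(0.0, length_s + dt, dt)
    h = gamma.pdf(t, 6) - gamma.pdf(t, 16) / 6.0
    return h / h.sum()


def event_regressors(onsets_s, rts_s, n_scans, tr, micro=16):
    """Return (main effect, log-RT parametric modulator), one value per scan."""
    dt = tr / micro                                  # microtime resolution: TR/16
    n_fine = n_scans * micro
    sticks = np.zeros(n_fine)
    pmod = np.zeros(n_fine)
    log_rt = np.log(np.asarray(rts_s, dtype=float))
    log_rt -= log_rt.mean()                          # mean-centre the modulator
    for onset, value in zip(onsets_s, log_rt):
        i = int(round(onset / dt))                   # stick at the nearest microtime bin
        if 0 <= i < n_fine:
            sticks[i] += 1.0
            pmod[i] += value
    h = canonical_hrf(dt)
    main = np.convolve(sticks, h)[:n_fine][::micro]  # convolve, then sample once per TR
    mod = np.convolve(pmod, h)[:n_fine][::micro]
    return main, mod


if __name__ == "__main__":
    main, mod = event_regressors([10.0, 30.0], [1.0, 2.0], n_scans=40, tr=2.47)
    print(main.round(3))
```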

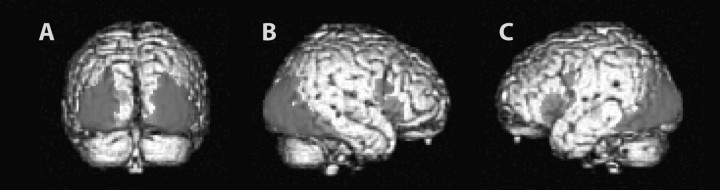

Figure 2.

Areas commonly activated by all visual stimuli. The figure shows SPMs obtained with a conjunction analysis (conjunction null) for (MF∧ML∧NC). All activations are shown at a threshold of P < 0.001, uncorrected, for display purposes, but only those significant at P < 0.05, corrected, are discussed in the text. See Supplementary Figure 1 for a color version of this figure.

If not indicated otherwise, statistical parametric maps (SPMs) are shown and summarized in tables at a threshold of P < 0.001, uncorrected for multiple comparisons, for display purposes, but activations are discussed only if they survive whole-brain correction for multiple comparisons at P < 0.05, or small volume correction (SVC) when we had a strong anatomical hypothesis (i.e., regions previously known to be involved in the processing of visual objects). The SVC procedure, as implemented in SPM5, corrects results for multiple nonindependent comparisons within a defined region of interest (ROI). In all cases, the SVC was applied to a spherical volume of at least 8-mm radius centered on the contrast maximum.
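To make the small-volume definition concrete, the sketch below enumerates the voxels whose centres lie within a given radius of a peak on the 3-mm grid used here. Under this centre-inclusion rule an 8-mm sphere contains 81 voxels; SPM's own ROI tools may treat boundary voxels slightly differently, and the function names are illustrative.

```python
import numpy as np


def sphere_offsets(radius_mm: float, voxel_mm: float = 3.0) -> np.ndarray:
    """Integer voxel offsets whose centres lie within `radius_mm` of the peak voxel."""
    r = int(np.floor(radius_mm / voxel_mm))
    g = np.arange(-r, r + 1)
    ii, jj, kk = np.meshgrid(g, g, g, indexing="ij")
    offsets = np.stack([ii.ravel(), jj.ravel(), kk.ravel()], axis=1)
    dist_mm = voxel_mm * np.linalg.norm(offsets, axis=1)
    return offsets[dist_mm <= radius_mm + 1e-9]  # small tolerance for boundary voxels


def sphere_mask(shape, peak_ijk, radius_mm, voxel_mm=3.0) -> np.ndarray:
    """Boolean volume marking the sphere around `peak_ijk`, clipped at the volume edges."""
    mask = np.zeros(shape, dtype=bool)
    idx = sphere_offsets(radius_mm, voxel_mm) + np.asarray(peak_ijk)
    inside = np.all((idx >= 0) & (idx < np.asarray(shape)), axis=1)
    mask[tuple(idx[inside].T)] = True
    return mask


if __name__ == "__main__":
    print(len(sphere_offsets(8.0)))  # 81
```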

Dynamic Causal Modeling

To test for changes in connectivity between brain areas under the different experimental conditions, we performed an effective connectivity analysis using DCM (Friston et al. 2003), as implemented in SPM5. DCM makes use of the temporal information contained in fMRI data, allowing one to make inferences about the causal relationships of activity patterns between different brain areas (Friston et al. 2003). Given a set of regional responses and connections, DCM models the activity at the neuronal level and transforms it into area-specific BOLD signals using a hemodynamic model of fMRI measurements. Hemodynamic and coupling parameters are estimated using a Bayesian estimation scheme, such that the BOLD signals obtained with the joint forward model are as similar as possible to the observed BOLD responses (Friston et al. 2003; Mechelli et al. 2003).

Three kinds of parameters are estimated in DCM: 1) direct, extrinsic inputs to the system (here, the effect of all visual stimuli); 2) “intrinsic” or “fixed” connections that couple neuronal states between regions (i.e., the connectivity strength between areas); and 3) modulatory parameters that model the changes in fixed connectivity induced by the experimental manipulations (i.e., the additive change that a manipulation, such as collinearity, produces in the strength of a connection). Because the modeled neuronal responses decay exponentially, the parameters describe how fast population activity changes (Penny et al. 2004b; Stephan et al. 2005; Stephan 2007); they are therefore rate constants of the modeled neurophysiological processes, with units of hertz.
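The neuronal part of DCM (Friston et al. 2003) is the bilinear state equation dz/dt = (A + Σ_j u_j B^(j)) z + C u, in which A holds the fixed connections, each B^(j) the change in coupling induced by modulatory input j, and C the weights of the driving inputs. In the present models the driving input would be all visual presentations entering mMO and lMO, and the modulatory inputs “collinearity” and “meaning.” The sketch below integrates only this neuronal equation with forward Euler steps; it omits the hemodynamic model and Bayesian estimation, and the toy parameter values are hypothetical.

```python
import numpy as np


def simulate_bilinear(A, B, C, u, dt=0.01):
    """Forward-Euler integration of dz/dt = (A + sum_j u_j B_j) z + C u.

    A: (n, n) fixed connections (A[i, k] is the influence of region k on region i, in Hz);
    B: (m, n, n) modulatory changes, one slice per input; C: (n, m) driving weights;
    u: (T, m) inputs sampled every `dt` seconds. Returns states z with shape (T, n).
    """
    A, B, C, u = (np.asarray(x, dtype=float) for x in (A, B, C, u))
    n_t, n = u.shape[0], A.shape[0]
    z = np.zeros((n_t, n))
    for t in range(1, n_t):
        J = A + np.tensordot(u[t - 1], B, axes=1)   # connectivity under current inputs
        z[t] = z[t - 1] + dt * (J @ z[t - 1] + C @ u[t - 1])
    return z


if __name__ == "__main__":
    # Hypothetical 2-region toy: region 0 drives region 1; input 1 modulates that connection.
    A = np.array([[-1.0, 0.0], [0.5, -1.0]])         # self-decay -1 Hz, forward 0.5 Hz
    B = np.zeros((2, 2, 2))
    B[1, 1, 0] = 0.5                                 # input 1 strengthens region 0 -> region 1
    C = np.array([[1.0, 0.0], [0.0, 0.0]])           # input 0 drives region 0 only
    steps = 1000                                     # 10 s at dt = 0.01 s
    plain = simulate_bilinear(A, B, C, np.tile([1.0, 0.0], (steps, 1)))
    modulated = simulate_bilinear(A, B, C, np.tile([1.0, 1.0], (steps, 1)))
    print(plain[-1].round(2), modulated[-1].round(2))
```

The toy run shows how a modulatory input changes the response of a downstream region without driving any region directly, which is the sense in which “collinearity” and “meaning” act on connections rather than on areas.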

Time series were extracted from 4 ROIs in the left hemisphere (see Results): medial middle occipital (mMO), lateral middle occipital (lMO), posterior inferior temporal (pIT), and inferior temporal/fusiform gyrus (IT/F). Subject-specific time series for each ROI were extracted as the principal eigenvariate of the responses across all sessions. For each subject, each ROI was defined using anatomical and functional criteria. First, the center of each ROI was set at the maximum of the subject-specific SPM testing for the appropriate effect, within 25 mm of the group maximum for that contrast and ROI. The maxima for mMO were identified using the contrast [ML–MF], for lMO and pIT using the contrast [ML–NC], and for IT/F using the contrast [MF–ML]. The summary time series (first eigenvariate) was then computed across all voxels that exceeded the indicated peak threshold (Supplementary Table 1) for an F-test of all the conditions of interest, within a 6-mm-radius sphere centered on each subject's maximum (except for S6, for whom a 3-mm-radius sphere was used for areas mMO and lMO to avoid overlap between the voxels of the 2 areas). For each subject and each ROI, the most stringent peak threshold possible was used (P < 0.05 Bonferroni corrected, or P < 0.001, 0.002, 0.005, 0.01, or 0.05 uncorrected), so that the eigenvariate was extracted from the most significantly active voxels. The average number of voxels per ROI was 38.2 ± 10.4 for mMO, 55.6 ± 10.4 for lMO, 86.1 ± 9.9 for pIT, and 65.0 ± 11.8 for IT/F. Supplementary Table 1 also lists the coordinates of each ROI for each participant.
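The ROI summary time course can be sketched as the first principal component of the mean-centred scans × voxels matrix, obtained by singular value decomposition. This is a simplified stand-in for SPM's eigenvariate routine: the sign is aligned with the ROI mean, and the scaling convention is a choice that may differ from SPM's.

```python
import numpy as np


def first_eigenvariate(Y: np.ndarray) -> np.ndarray:
    """First principal time course of a (scans x voxels) ROI matrix."""
    Y = np.asarray(Y, dtype=float)
    Yc = Y - Y.mean(axis=0)                        # remove each voxel's mean
    U, s, _ = np.linalg.svd(Yc, full_matrices=False)
    y = U[:, 0] * s[0] / np.sqrt(Y.shape[1])       # scale to per-voxel units
    if np.dot(y, Yc.mean(axis=1)) < 0:             # SVD sign is arbitrary: match ROI mean
        y = -y
    return y


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    signal = np.sin(2 * np.pi * np.arange(200) / 40)
    Y = np.outer(signal, rng.uniform(0.5, 1.5, size=40)) + 0.2 * rng.standard_normal((200, 40))
    y = first_eigenvariate(Y)
    print(round(float(np.corrcoef(y, signal)[0, 1]), 3))
```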

We modeled as inputs all the visual presentations (a single variable covering all trials from conditions NC, ML, and MF), entering the DCM through areas mMO and lMO. There were 2 modulatory influences: “collinearity,” which included conditions ML and MF, and “meaning,” which included only condition MF. These modulatory parameters were allowed to affect every connection.

For each subject, 4 models were specified (Fig. 5B) and estimated separately. To choose the optimal model given our data, we used Bayesian model selection, which takes into account the relative fit and complexity (number of free parameters) of competing models (Penny et al. 2004a). This entails a comparison of the model evidence, which can be considered as the normalization constant for the product of the likelihood of the data and the prior probability of the parameters. Bayesian model comparison was performed by calculating, separately for each subject, the (negative) free energy, which approximates the log evidence, for each of the 4 models. The log evidences were then pooled across subjects for each model, and the model with the larger log evidence is preferred. A difference in log evidence of 3 between 2 models represents strong evidence in favor of the first model (odds of about 20 to 1, since e³ ≈ 20; Penny et al. 2004a). The 4 models differed in the presence or absence of a lateral connection between regions at the first level (i.e., between the middle occipital areas; see Results) and of a connection between the first and third levels (i.e., between the middle occipital areas and IT/F; see Results). These 2 connections were treated as factors in a 2 × 2 repeated measures ANOVA of the log evidences over subjects, to evaluate their contribution to the models and to inform the selection of the best model.
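The arithmetic behind the model comparison can be sketched as follows, assuming a fixed-effects pooling in which subject log evidences are summed per model and all models have equal prior probability. A log-evidence difference of 3 corresponds to a Bayes factor of e³ ≈ 20. The function names are hypothetical and do not correspond to SPM routines.

```python
import numpy as np


def fixed_effects_bms(log_ev):
    """Group comparison from a (subjects x models) array of log evidences.

    Returns the summed log evidence per model and the posterior model
    probabilities under a flat prior over models.
    """
    total = np.asarray(log_ev, dtype=float).sum(axis=0)
    w = np.exp(total - total.max())          # subtract the max for numerical stability
    return total, w / w.sum()


def bayes_factor(log_ev_1: float, log_ev_2: float) -> float:
    """Bayes factor of model 1 over model 2 from their log evidences."""
    return float(np.exp(log_ev_1 - log_ev_2))


if __name__ == "__main__":
    print(round(bayes_factor(3.0, 0.0), 1))   # 20.1
```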

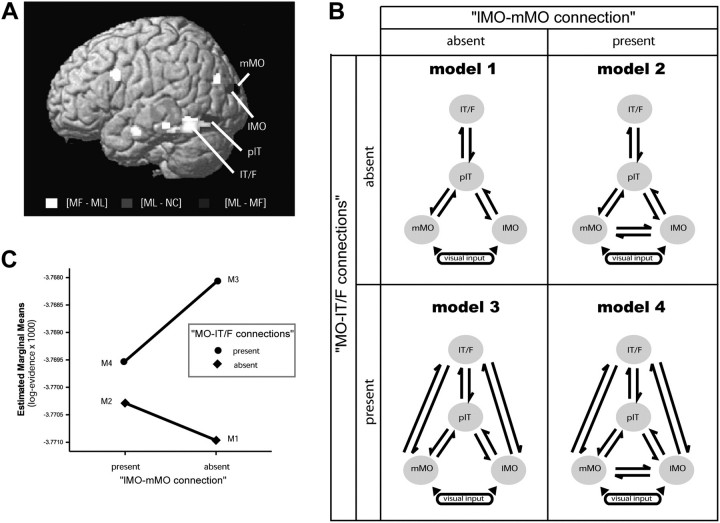

Figure 5.

DCM model comparison. (A) Location of areas included in the DCM analysis and the contrasts used to isolate them. See Supplementary Figure 7 for a color version of this figure. (B) Schematic representation of the models tested. The best model was selected using Bayesian model comparison (see Methods). The difference between the models is that they either have or do not have 1) connections between lMO and mMO (models 1 and 2) and 2) connections between MO areas and IT/F (models 3 and 4). (C) Mean log evidence averaged across subjects for each DCM model. The log evidence units are dimensionless.

Based on the Bayesian model comparison results, a model was chosen, and parameters for the inputs, fixed connections, and modulations were obtained for each subject and were used to make statistical inferences at the group level with 1-sample t-tests. DCM was performed using SPM5 software. Analysis of the coupling parameters at the group level was performed using MATLAB 6.1 and SPSS 14.0.
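The group-level test on DCM parameters amounts to a two-sided one-sample t-test of each subject-level estimate against zero. A minimal SciPy sketch, assuming one row per subject and one column per parameter; the parameter names in the usage are hypothetical.

```python
import numpy as np
from scipy import stats


def group_ttests(params, names):
    """Two-sided one-sample t-tests (against 0) of subject-level DCM parameter estimates.

    params: (subjects x parameters) array; returns {name: (t, p)}.
    """
    res = stats.ttest_1samp(np.asarray(params, dtype=float), popmean=0.0, axis=0)
    return {name: (float(t), float(p)) for name, t, p in zip(names, res.statistic, res.pvalue)}


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    demo = np.column_stack([rng.normal(0.3, 0.2, 14), rng.normal(0.0, 0.2, 14)])
    for name, (t, p) in group_ttests(demo, ["coupling_a", "coupling_b"]).items():
        print(f"{name}: t(13) = {t:.2f}, p = {p:.3g}")
```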

Results

Behavioral Data

Subjects participated in 4 scanning sessions during which NC, ML, and MF stimuli were shown for 500 ms. After each presentation, subjects had to determine, in a 2-AFC task, whether the figures in the image were symmetric or asymmetric across the vertical axis. This task was selected to ensure that subjects attended to the images, evaluated them as a whole, and grouped the lines perceptually. Mean accuracy in the task was 79.49 ± 1.39% for MF, 77.04 ± 1.55% for ML, and 78.52 ± 1.93% for NC, with no significant difference between conditions (F2,26 = 1.85, P = 0.176). Average RTs were 1105 ± 69 ms for MF, 1083 ± 61 ms for ML, and 1168 ± 72 ms for NC. A 1-way repeated measures ANOVA revealed a significant effect of condition (MF, ML, and NC) on RT (F2,26 = 15.83, P < 0.001). Individual contrasts indicated that RTs were significantly longer for condition NC than for MF and ML ([MF–NC]: F1,13 = 19.83, P < 0.001; [ML–NC]: F1,13 = 18.47, P < 0.001), but there was no significant difference between MF and ML ([MF–ML]: F1,13 = 3.24, P = 0.095). This suggests that NC stimuli were more difficult to judge. Because of these differences in RT between conditions, we included log RT as a confounding covariate in our statistical models.
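The condition effects above come from a one-way repeated-measures ANOVA with df = (k − 1, (n − 1)(k − 1)), which gives (2, 26) for 14 subjects and 3 conditions, and (2, 10) for the 6 subjects of the eye-movement experiment. A minimal sketch without sphericity correction (whether one was applied is not stated); the function name is hypothetical.

```python
import numpy as np
from scipy import stats


def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA on a (subjects x conditions) array.

    Returns (F, (df_effect, df_error), p). No sphericity correction is applied.
    """
    x = np.asarray(data, dtype=float)
    n, k = x.shape
    grand = x.mean()
    ss_cond = n * np.sum((x.mean(axis=0) - grand) ** 2)    # between conditions
    ss_subj = k * np.sum((x.mean(axis=1) - grand) ** 2)    # between subjects
    ss_err = np.sum((x - grand) ** 2) - ss_cond - ss_subj  # condition x subject residual
    df_cond, df_err = k - 1, (n - 1) * (k - 1)
    F = (ss_cond / df_cond) / (ss_err / df_err)
    return float(F), (df_cond, df_err), float(stats.f.sf(F, df_cond, df_err))
```

For 2 conditions, F equals the squared paired t statistic, which offers one way to cross-check single-df contrasts such as the F1,13 values reported above.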

Imaging Results

Conjunction Analysis

We first identified brain regions significantly active under all 3 experimental conditions. All conditions consist of oriented lines with matched low-level properties (see Methods). In each image, the embedded figure needs to be segregated from the background, and the sensory input will presumably be matched to some stored representation (even though this was not the task). All images will therefore share, up to a certain level, a common neural representation. To identify this, a conjunction analysis (conjunction null hypothesis) was performed at the second (between-subject) level (Friston et al. 2005). Contrast images were generated for each condition and each subject and entered into a one-way ANOVA with 3 levels (MF, ML, and NC). We found a significant conjunction (i.e., the minimum t-value was significant at P < 0.05, corrected) in the inferior and medial occipital cortex dorsally, extending to the ventral occipitotemporal cortex and the anterior fusiform gyrus (Fig. 2 and Supplementary Fig. 1; Table 1). This is consistent with previous studies of object and form processing (Malach et al. 1995; Kanwisher et al. 1996; Price et al. 1996; Grill-Spector et al. 1998a, 1998b; Ishai et al. 1999; Gerlach et al. 2002). We also observed significant conjunctions (P < 0.05, whole-brain corrected; Fig. 2 and Supplementary Fig. 1; Table 1) in the anterior insula (bilaterally), left cerebellum, right thalamus, and right inferior frontal gyrus (all of these activations are, however, bilaterally significant at P < 0.001; see Fig. 2 and Supplementary Fig. 1). These results are to be expected when subjects are engaged in a demanding visual task (Gerlach et al. 1999; Pernet et al. 2004; Lehmann et al. 2006). A conjunction analysis (conjunction null hypothesis; Supplementary Fig. 2) of all the parametric modulators of the form regressors shows activations in areas such as the anterior insular cortex, the supramarginal gyrus, and regions in the frontal lobe known to be involved in cognitive control tasks (Holcomb et al. 1998; Bokde et al. 2005; Cole and Schneider 2007), indicating that this covariate captures difficulty-related activity. In addition, there was no significant difference (P < 0.001, uncorrected) between any of the parametric modulators, suggesting that the strategy used to solve the task did not differ between conditions.
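The conjunction-null test used here is a minimum-statistic test: a voxel counts as commonly activated only if its smallest t-value across the condition contrasts exceeds the significance threshold. A sketch, assuming the t-maps and a corrected threshold are already available; computing the threshold itself (which SPM does with random field theory) is outside its scope, and the function name is hypothetical.

```python
import numpy as np


def conjunction_null(t_maps, threshold: float):
    """Minimum-statistic conjunction across contrasts (conjunction-null test).

    A voxel is declared significant only if its smallest t-value across all
    contrasts exceeds `threshold`, i.e. every contrast is individually significant.
    """
    t = np.stack([np.asarray(m, dtype=float) for m in t_maps])
    t_min = t.min(axis=0)
    return t_min, t_min > threshold


if __name__ == "__main__":
    t_min, sig = conjunction_null([[1, 5, 6], [4, 2, 7], [5, 6, 8]], threshold=3.0)
    print(t_min, sig)
```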

Table 1.

Significant conjunction (MF∧ML∧NC)

| Side | Brain area | Z-score | x (mm) | y (mm) | z (mm) |
| --- | --- | --- | --- | --- | --- |
| R | Posterior fusiform gyrus | 7.93 | 34 | −68 | −8 |
| L | Anterior fusiform gyrus | 7.16 | −38 | −46 | −18 |
| R | Middle occipital cortex | 7.55 | 32 | −90 | 0 |
| L | Middle occipital cortex | 7.16 | −30 | −98 | 4 |
| L | Cerebellum | 5.96 | −6 | −72 | −18 |
| R | Inferior frontal gyrus (pars opercularis) | 5.00 | 50 | 10 | 24 |
| R | Thalamus | 4.99 | 10 | −14 | −6 |
| L | Insula | 4.96 | −40 | 10 | 4 |
| R | Insula | 4.90 | 30 | 24 | −4 |

Note: All peaks at P < 0.05, whole-brain corrected. Coordinates are in MNI space.

Individual Contrasts

When evaluating the effect of meaning with the contrast [MF–ML], we observed significant activations (P < 0.05, SVC) in the anterior fusiform gyrus and inferior temporal gyrus bilaterally (Fig. 3 and Supplementary Fig. 3B–D; Table 2). This contrast showed significant activations (P < 0.05, SVC) not only in visual areas but also in the bilateral inferior frontal gyrus (pars opercularis) and hippocampus, left anterior inferior temporal gyrus, right inferior frontal gyrus (pars triangularis), and left inferior parietal lobule (the last 2 regions were bilateral at P < 0.005, uncorrected; data not shown). These results replicate previous studies that compared real and nonreal objects (Price et al. 1996; Gerlach et al. 2002; Vuilleumier et al. 2002). The [ML–NC] contrast, testing for the effect of collinearity, revealed significant activations (P < 0.05, SVC) in the bilateral pIT gyrus, fusiform gyrus, and parietal lobule (Fig. 3 and Supplementary Fig. 3A–D; Table 2). Collinearity also had an effect in lower visual areas located in the middle occipital cortex (Fig. 3 and Supplementary Fig. 3A,B, blue clusters; Table 2), where we did not observe any significant effect of meaning.

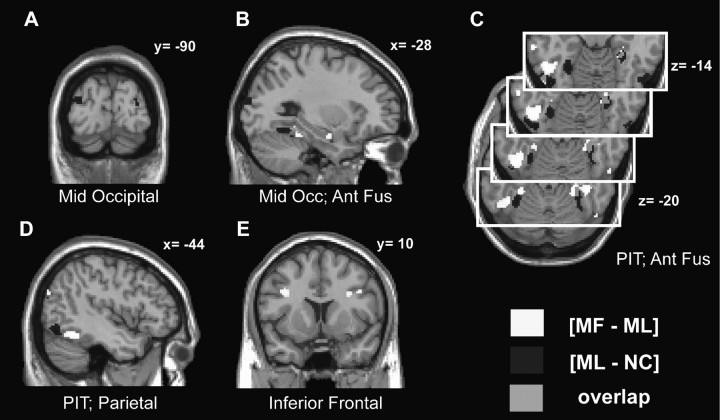

Figure 3.

Clusters activated by “collinearity” and “meaning.” Suprathreshold regions obtained with the contrast [MF–ML] are shown in white, and those identified by the contrast [ML–NC] in dark gray. Regions activated by both contrasts are shown in light gray. SPMs are displayed on coronal (A and E), sagittal (B and D), and transverse (C) slices. They show activations in the middle occipital cortex (A and B), anterior fusiform gyrus (B and C), pIT gyrus (PIT; C and D), parietal lobule (D), and inferior frontal gyrus (E). All activations are shown at a threshold of P < 0.001, uncorrected, for display purposes. Only those significant at P < 0.05 (Bonferroni corrected, or SVC where there was a strong prior hypothesis) are discussed in the text. See Supplementary Figure 3 for a color version of this figure.

Table 2.

Activations and deactivations in response to “collinearity” and “meaning”

| Side | Brain area | Z-score | x | y | z |
|---|---|---|---|---|---|
| **Activation effect of meaning: MF > ML** | | | | | |
| R | Anterior fusiform gyrus | 4.69* | 42 | −38 | −22 |
| L | Anterior fusiform gyrus | 3.74 | −28 | −40 | −20 |
| L | Posterior IT/F | 4.13* | −48 | −56 | −14 |
| R | Posterior inferior temporal gyrus | 3.40 | 52 | −66 | −20 |
| L | Anterior inferior temporal gyrus | 4.08 | −64 | −34 | −16 |
| R | Middle temporal gyrus | 3.38 | 56 | −50 | −4 |
| R | Hippocampus | 3.76 | 20 | −2 | −26 |
| L | Hippocampus | 3.44 | −24 | −8 | −22 |
| L | Inferior frontal gyrus (pars opercularis) | 3.61 | −36 | 12 | 32 |
| R | Inferior frontal gyrus (pars opercularis) | 3.49 | 32 | 10 | 28 |
| R | Inferior frontal gyrus (pars triangularis) | 3.60 | 50 | 30 | 16 |
| L | Inferior parietal lobule | 3.38 | −44 | −80 | 26 |
| **Activation effect of collinearity: ML > NC** | | | | | |
| L | Posterior inferior temporal gyrus | 4.79 | −46 | −72 | −14 |
| R | Posterior inferior temporal gyrus | 3.63 | 52 | −70 | −14 |
| R | Anterior fusiform gyrus | 4.40 | 30 | −40 | −16 |
| L | Fusiform gyrus | 4.14 | −32 | −50 | −20 |
| L | Middle occipital cortex | 4.09 | −32 | −92 | 18 |
| R | Middle occipital cortex | 4.02 | 30 | −88 | 16 |
| L | Middle occipital cortex | 3.54 | −36 | −94 | 0 |
| R | Superior parietal lobule | 4.02 | 32 | −60 | 64 |
| R | Precuneus | 3.58 | 4 | −68 | 54 |
| L | Inferior parietal lobule | 3.37 | −46 | −80 | 22 |
| **Deactivation effect of meaning: ML > MF** | | | | | |
| L | Middle/superior occipital gyrus | 4.88 | −22 | −98 | 18 |
| **Deactivation effect of collinearity: NC > ML** | | | | | |
| R | Superior occipital cortex | 4.15 | 10 | −102 | 18 |
| L | Superior occipital cortex | 3.71 | −10 | −104 | 12 |
| L | V1/V2 | 4.06 | −8 | −94 | 10 |
| L | V1/V2 | 4.03 | −8 | −82 | −12 |

Note: All listed voxels were significant at P < 0.001 (uncorrected) and at P < 0.05 (SVC for the activation peak within a sphere of at least 8-mm radius). Only voxels in regions for which we had a prior hypothesis justifying an SVC are included.

*Significant at the cluster level (P < 0.05, whole-brain corrected).
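The spherical search volumes used for the SVCs can be sketched as follows. This is an illustrative example only. It assumes coordinates in millimeters and a hypothetical 2-mm voxel grid, and it uses the left posterior inferior temporal peak for collinearity from Table 2 purely as an example center. The sketch only selects the voxels; the corrected P values would then be computed within this volume by the analysis software.

```python
import math
from typing import Iterable, List, Tuple

Coord = Tuple[float, float, float]  # (x, y, z) in mm


def in_sphere(voxel: Coord, centre: Coord, radius_mm: float = 8.0) -> bool:
    """True if the voxel lies within radius_mm (Euclidean distance) of the centre."""
    return math.dist(voxel, centre) <= radius_mm


def svc_search_volume(voxels: Iterable[Coord], centre: Coord,
                      radius_mm: float = 8.0) -> List[Coord]:
    """Keep only the voxels inside the spherical search volume used for an SVC."""
    return [v for v in voxels if in_sphere(v, centre, radius_mm)]


if __name__ == "__main__":
    centre = (-46.0, -72.0, -14.0)  # example centre taken from Table 2
    step = 2  # hypothetical 2-mm isotropic voxel grid
    offsets = range(-10, 11, step)
    grid = [(centre[0] + dx, centre[1] + dy, centre[2] + dz)
            for dx in offsets for dy in offsets for dz in offsets]
    volume = svc_search_volume(grid, centre)
    print(f"{len(volume)} of {len(grid)} grid voxels fall within 8 mm of the centre")
```

Restricting the search to such a sphere reduces the number of comparisons, which is why an SVC is less conservative than whole-brain correction and is reserved for regions with a prior hypothesis.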

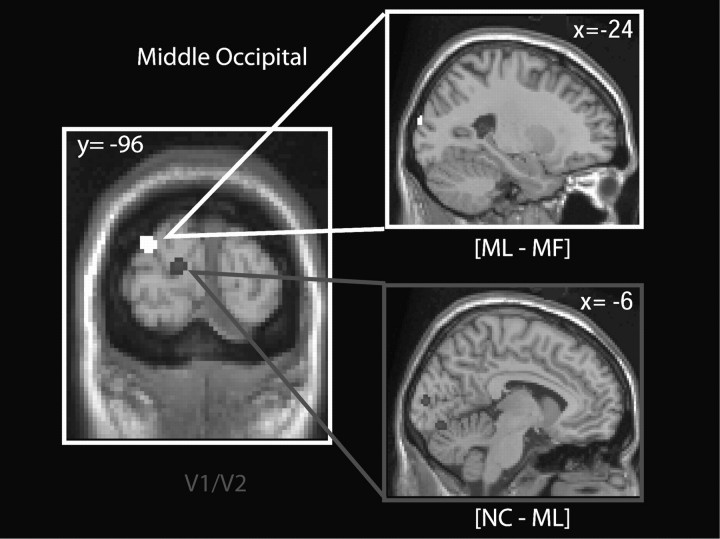

In summary, 2 clusters of activation were observed in the ventral occipitotemporal cortex for collinearity and meaning. Those elicited by collinearity were located more posteriorly than those elicited by meaning (Fig. 3 and Supplementary Fig. 3B–D), and the 2 effects overlap in the right anterior fusiform gyrus. These results show that more recognizable stimuli evoke stronger activations in more anterior areas than less recognizable ones do. If each activated area sends an inhibitory signal to lower areas, we would expect posterior visual areas to be more active for NC than for ML, and for ML than for MF. This is what we observed (Fig. 4 and Supplementary Fig. 4). Areas V1/V2, in both their dorsal and ventral portions, are more active for NC than for ML (Fig. 4 and Supplementary Fig. 4, [NC–ML], visual areas 17/18; P < 0.05, SVC; Supplementary Fig. 5). We also tested the contrast [ML–MF] to identify areas relatively deactivated by meaning. It showed significant effects in the left middle occipital cortex (Fig. 4 and Supplementary Fig. 4; P < 0.05, corrected), which were bilateral at a lower threshold (P < 0.005; Supplementary Fig. 6). It is important to point out that these results show relative deactivation (i.e., areas less active for MF than for ML stimuli), not necessarily BOLD signal levels below baseline. As shown in Figure 4 and Supplementary Figure 4, significant clusters are located more posteriorly for the contrast [NC–ML] than for [ML–MF]. The coordinates of the peaks identified with the contrasts [NC–ML] and [ML–MF] are shown in Table 2.

Figure 4.

Deactivations by “collinearity” and “meaning” in early visual areas. Suprathreshold regions obtained with the contrast [ML–MF] are shown in white, and those obtained with the contrast [NC–ML] in gray. SPMs are displayed on coronal and sagittal slices, showing clusters in the middle occipital cortex (orange) and V1/V2 (blue). V1/V2 were labeled using the calcarine sulcus as a landmark and the Anatomy toolbox in SPM (Eickhoff et al. 2005). All activations are shown at a threshold of P < 0.001, uncorrected. See Supplementary Figure 4 for a color version of this figure.
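The activation and deactivation contrasts can be written as weight vectors over the 3 condition estimates. The sketch below is a hypothetical illustration; the function names and beta values are not from the study. It shows that a deactivation contrast such as [NC–ML] is simply the sign-reversed activation contrast [ML–NC]. It also shows that a positive deactivation contrast is compatible with every condition being above baseline, which is why these effects are described as relative deactivations.

```python
from typing import Dict

CONDITIONS = ("MF", "ML", "NC")


def contrast_weights(positive: str, negative: str) -> Dict[str, int]:
    """Weights for a pairwise contrast [positive - negative] over the 3 conditions."""
    if positive not in CONDITIONS or negative not in CONDITIONS or positive == negative:
        raise ValueError("need 2 different conditions from MF, ML, NC")
    return {c: 1 if c == positive else -1 if c == negative else 0 for c in CONDITIONS}


def contrast_value(betas: Dict[str, float], positive: str, negative: str) -> float:
    """Weighted sum of condition estimates for the contrast [positive - negative]."""
    weights = contrast_weights(positive, negative)
    return sum(weights[c] * betas[c] for c in CONDITIONS)


if __name__ == "__main__":
    # Hypothetical estimates for a posterior voxel: every condition is above baseline.
    betas = {"MF": 0.9, "ML": 1.0, "NC": 1.2}
    print(contrast_weights("NC", "ML"))                  # {'MF': 0, 'ML': -1, 'NC': 1}
    print(round(contrast_value(betas, "NC", "ML"), 3))   # 0.2: relatively deactivated by collinearity
    print(round(contrast_value(betas, "ML", "MF"), 3))   # 0.1: relatively deactivated by meaning
```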

In summary, conventional SPM analyses have shown that stimuli with recognizable high-order patterns cause greater activations in higher, more anterior visual areas. In contrast, when the images contain more random, unrecognizable arrangements, activations are greater in posterior visual areas. This occurs at different levels of visual processing: ML stimuli elicit more anterior activations than NC stimuli, and MF stimuli yet more anterior ones than ML stimuli. Furthermore, NC stimuli activate posterior regions more strongly than ML stimuli, and ML stimuli activate posterior regions more strongly than MF stimuli. There is thus a posterior-to-anterior axis of increased activation with more coherent patterns, and an anterior-to-posterior axis of stronger deactivation. These results are consistent with the hypothesis that coherent stimuli recruit higher (anterior) visual areas that then send messages to lower (posterior) areas. If these signals match the input, the result will be more efficient coding in lower sensory cortices and a concomitant decrease in activation. Under this hypothesis, the decrease in posterior responses is due to top-down signals. An effective connectivity analysis, which studies the influence that one neural system exerts over another (Friston 1994), should therefore reveal negative coupling with coherent stimuli. When high-level predictions match the sensory input, higher visual areas would reduce activity in lower areas through top-down effects, and this would appear as stronger negative coupling from higher to lower regions.

In contrast, if the top-down signals target neuronal populations encoding relevant stimulus features, the coupling between higher and lower visual areas should be positive, because the top-down effect will increase the overall activity of the lower visual area (or of the relevant units).
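The first of these hypotheses can be illustrated with a toy linear predictive coding scheme in the spirit of Rao and Ballard (1999). This is a minimal sketch under strong assumptions, not a model of our data. A higher level holds 2 hypothetical learned patterns and settles on the combination that best explains the input. The residual prediction error at the lower level is then taken as a crude proxy for lower-level activity. An input that matches a learned pattern is explained away, leaving a low residual. An input the higher level cannot represent (here, a deliberately extreme case orthogonal to both patterns) leaves the residual unchanged.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]

# Hypothetical higher-level "learned patterns" (orthonormal, 4 lower-level units each).
PATTERNS: List[Vector] = [
    [0.5, 0.5, 0.5, 0.5],
    [0.5, -0.5, 0.5, -0.5],
]


def predict(patterns: Sequence[Sequence[float]], r: Sequence[float]) -> Vector:
    """Top-down prediction of the lower level: weighted sum of the learned patterns."""
    n = len(patterns[0])
    return [sum(p[i] * rk for p, rk in zip(patterns, r)) for i in range(n)]


def settle(x: Sequence[float], patterns: Sequence[Sequence[float]] = PATTERNS,
           lr: float = 0.5, n_iter: int = 100) -> Tuple[Vector, float]:
    """Update higher-level causes r by gradient steps that reduce the prediction error.

    Returns the final causes and the norm of the residual lower-level error.
    """
    r = [0.0] * len(patterns)
    for _ in range(n_iter):
        err = [xi - pi for xi, pi in zip(x, predict(patterns, r))]
        # Each higher-level unit moves by the part of the error it can explain.
        r = [rk + lr * sum(pi * ei for pi, ei in zip(p, err)) for p, rk in zip(patterns, r)]
    err = [xi - pi for xi, pi in zip(x, predict(patterns, r))]
    return r, math.sqrt(sum(e * e for e in err))


if __name__ == "__main__":
    matched = [1.0, 1.0, 1.0, 1.0]      # equal to 2 x the first learned pattern
    unmatched = [1.0, -1.0, -1.0, 1.0]  # orthogonal to both learned patterns
    for label, x in (("matched", matched), ("unmatched", unmatched)):
        r, residual = settle(x)
        print(f"{label}: causes={[round(v, 3) for v in r]}, residual error={residual:.3f}")
```

The alternative hypothesis would correspond instead to top-down signals that add to, rather than subtract from, activity in the relevant lower-level units. This toy scheme does not model that case.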

Effective Connectivity Analysis—DCM

To distinguish between these alternatives, we carried out an effective connectivity analysis using DCM. The aim of this kind of analysis is to estimate, and make inferences about, the coupling between brain areas and how this coupling is influenced by experimental manipulations (Friston et al. 2003). The advantage of DCM over other methods of connectivity analysis is that it uses an explicit generative model of the temporal dynamics in fMRI data. This allows us to make inferences about directed (causal) influences between activity in different brain areas (Friston et al. 2003). Suppose we see an increase in connectivity from region A to region B. Within the assumptions of the model, we can then infer not only that activity in the 2 regions is correlated but also that activity in A causes the increase in B, and not the other way around. The goal of this analysis was to determine whether there is top-down modulation when stimuli have high-order attributes compared with when they do not. Our specific aim was to investigate whether top-down signals could arise from one or more “higher” areas, and whether they are predominantly inhibitory or excitatory. We used the simplest model sufficient to answer these questions, to reduce the number of free parameters and make estimation more accurate. For this reason, we constrained the DCMs to areas in the left hemisphere and restricted the models to an input level and 2 further levels: one activated by collinearity and one activated by meaning. We modeled the left hemisphere because behavioral evidence suggests that it preferentially encodes more abstract, viewpoint-independent categorical information, whereas the right hemisphere preferentially processes specific exemplars and viewpoint-specific images (Marsolek 1995; Burgund and Marsolek 2000). Furthermore, neuroimaging data have shown preferential processing of objects in left-hemisphere ventral visual areas (Sergent et al. 1992; Dolan et al. 1997; Shen et al. 1999), in particular object-specific learning effects (Dolan et al. 1997) and viewpoint-independent priming effects (Vuilleumier et al. 2002). We chose the middle occipital cortex as the input stage for several reasons. It is a relatively low-level visual area, and it showed a significant main effect for any visual presentation (Fig. 2 and Supplementary Fig. 1). It also showed greater activation with collinear stimuli than with random lines (Fig. 5A and Supplementary Fig. 7, [ML–NC], green) and a decrease in activity with MF stimuli compared with ML ones (Fig. 5A and Supplementary Fig. 7, [ML–MF], blue). Because this cortical zone was more strongly activated by the first level of coherence (collinear stimuli) but less active in response to meaningful stimuli, we could potentially discern 2 kinds of top-down effect: those that increase activity (with collinearity as a modulatory effect) and those that decrease activity (with meaning as a modulatory effect). Voxels more active for the contrast [ML–NC] were located more laterally than those identified by the contrast [ML–MF]. We therefore defined 2 areas in the middle occipital cortex, one medial and one lateral (mMO: −22, −98, 18; lMO: −32, −92, 18). The first was centered on the maximum for the contrast [ML–MF] and the second on the maximum for the contrast [ML–NC]. These 2 regions constitute the first level of our hierarchy. The second level of the model was a region in the pIT cortex centered on the maximum of the contrast [ML–NC], at −46, −72, −14 (Fig. 5A and Supplementary Fig. 7, green). Finally, for the third level, we chose the more anterior cluster in the posterior IT/F (−48, −56, −14), centered on the maximum for the contrast [MF–ML] (Fig. 5A and Supplementary Fig. 7, red).
The pIT and IT/F were the left-hemisphere regions that showed the most significant activations to collinear and meaningful stimuli, respectively, so we could expect top-down effects to originate there. Also, IT/F is more anterior than pIT, which respects the hierarchical structure of our models. For these reasons, we chose these regions for the DCM analysis over other regions that were also significantly active in the presence of meaning and collinearity. To see how recognition of high-order form changed the connectivity between brain areas, we included collinearity (present in both ML and MF) and meaning (present only in MF) as modulatory effects and allowed them to modulate any connection in the model.
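For reference, the neuronal model underlying DCM (Friston et al. 2003) is bilinear: dz/dt = (A + Σ_j u_j B_j) z + C u. Here A holds the fixed connections, B_j the changes in coupling induced by modulatory input u_j (here collinearity and meaning), and C the driving inputs. The sketch below simulates only this neuronal equation for the 4 regions. It uses hypothetical parameter values in Hz and an assumed self-connection of −1 Hz. It omits the hemodynamic forward model and the Bayesian parameter estimation that an actual DCM analysis performs. As in the text, collinearity is set to 1 for ML and MF, and meaning to 1 for MF only.

```python
from typing import Dict, List

Matrix = List[List[float]]
REGIONS = ["mMO", "lMO", "pIT", "IT/F"]  # index order used in all matrices
# Convention: entry [i][k] is the influence of region k (source) on region i (target), in Hz.

# Hypothetical fixed connections A, with an assumed self-connection of -1 Hz on the diagonal.
A: Matrix = [
    [-1.0, 0.0, 0.0, -0.1],  # -> mMO (negative input from IT/F)
    [0.0, -1.0, 0.1, 0.0],   # -> lMO (from pIT)
    [0.0, 0.3, -1.0, 0.1],   # -> pIT (from lMO and IT/F)
    [0.0, 0.1, 0.2, -1.0],   # -> IT/F (from lMO and pIT)
]

# Hypothetical modulatory effects B_j: changes in coupling when input j is present.
B: Dict[str, Matrix] = {
    "collinearity": [[0.0] * 4 for _ in range(4)],
    "meaning": [[0.0] * 4 for _ in range(4)],
}
B["collinearity"][1][2] = 0.2  # strengthens pIT -> lMO
B["collinearity"][2][1] = 0.2  # strengthens lMO -> pIT
B["meaning"][0][2] = -0.1      # makes pIT -> mMO more negative
B["meaning"][0][3] = -0.2      # makes IT/F -> mMO more negative

C = [1.0, 1.0, 0.0, 0.0]  # visual input drives both middle occipital regions


def effective_coupling(u: Dict[str, float]) -> Matrix:
    """A + sum_j u_j * B_j for the modulatory inputs u that are switched on."""
    J = [row[:] for row in A]
    for name, u_j in u.items():
        for i in range(4):
            for k in range(4):
                J[i][k] += u_j * B[name][i][k]
    return J


def simulate(u: Dict[str, float], dt: float = 0.01, t_end: float = 20.0) -> List[float]:
    """Euler-integrate dz/dt = (A + sum_j u_j B_j) z + C from z = 0 under constant input."""
    J = effective_coupling(u)
    z = [0.0] * 4
    for _ in range(int(round(t_end / dt))):
        dz = [sum(J[i][k] * z[k] for k in range(4)) + C[i] for i in range(4)]
        z = [zi + dt * dzi for zi, dzi in zip(z, dz)]
    return z


if __name__ == "__main__":
    conditions = {
        "NC": {},
        "ML": {"collinearity": 1.0},
        "MF": {"collinearity": 1.0, "meaning": 1.0},
    }
    for label, u in conditions.items():
        z = simulate(u)
        print(label, {r: round(v, 3) for r, v in zip(REGIONS, z)})
```

With these illustrative values, strengthening the lMO–pIT loop raises lMO activity, and making the inputs to mMO more negative lowers mMO activity. This qualitative pattern is the kind of explanation the DCM analysis was designed to test.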

In summary, 4 ROIs were defined for each subject (mMO, lMO, pIT, and IT/F; Fig. 5A,B and Supplementary Fig. 7; the response of these areas to each experimental condition is shown in Supplementary Fig. 8). These were used to construct hierarchical models with 3 levels: the first activated by collinearity and deactivated by meaning, the second activated more by collinearity, and the third activated more by meaning. These DCMs allowed us to ask 3 questions: whether the activations and deactivations in the first level were due to top-down influences from one or both of the higher levels, whether these influences depended on stimulus coherence, and whether they had a positive or negative effect on their target populations.

We do not know whether mMO and lMO are different parts of the same area or 2 adjacent areas. We therefore made no assumption about connectivity between these regions and used Bayesian model comparison to evaluate models with and without these connections (Fig. 5B). Anatomical studies in monkeys have revealed connections between areas in the middle occipital lobe and the ventral occipitotemporal cortex (Seltzer and Pandya 1978; Distler et al. 1993; Felleman et al. 1997; Beck and Kaas 1998), and these connections are assumed to be reciprocal (Felleman and Van Essen 1991; Distler et al. 1993). We therefore constructed models with reciprocal connections between the middle occipital regions (lMO and mMO) and the inferior temporal regions. We also used Bayesian model comparison to adjudicate between models in which the middle occipital areas are connected to both areas in the inferior temporal gyrus (models 3 and 4; Fig. 5B) or only to the level above (pIT; models 1 and 2; Fig. 5B). Reciprocal connections between the anterior and posterior parts of the inferior temporal cortex have been described in the macaque (Desimone et al. 1980; Shiwa 1987; Webster et al. 1991; Distler et al. 1993). We therefore included a reciprocal connection between IT/F and pIT in all our models.
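The model space can be made explicit as a 2 × 2 factorial design in which each model is a set of directed connections. In this sketch, the lateral lMO–mMO connections are assigned to M2 and M4 but not to M1 and M3. That assignment is an assumption based on M4 being described as the more complete model; Fig. 5B remains the authoritative definition. The helper names are hypothetical. One check the sketch passes is that M4 contains 12 directed connections, matching the rows of Table 3.

```python
from typing import Dict, FrozenSet, Set, Tuple

Connection = Tuple[str, str]  # (source, target)


def reciprocal(a: str, b: str) -> Set[Connection]:
    """Both directed connections between regions a and b."""
    return {(a, b), (b, a)}


def build_model(mo_to_itf: bool, lateral: bool) -> FrozenSet[Connection]:
    """Connections of one model in the (assumed) 2 x 2 model space."""
    conns: Set[Connection] = set()
    # Present in all models: middle occipital <-> pIT, and pIT <-> IT/F.
    conns |= reciprocal("lMO", "pIT") | reciprocal("mMO", "pIT") | reciprocal("pIT", "IT/F")
    if mo_to_itf:  # factor 1: direct middle occipital <-> IT/F connections
        conns |= reciprocal("lMO", "IT/F") | reciprocal("mMO", "IT/F")
    if lateral:  # factor 2: lateral lMO <-> mMO connections (assignment assumed)
        conns |= reciprocal("lMO", "mMO")
    return frozenset(conns)


MODELS: Dict[str, FrozenSet[Connection]] = {
    "M1": build_model(mo_to_itf=False, lateral=False),
    "M2": build_model(mo_to_itf=False, lateral=True),
    "M3": build_model(mo_to_itf=True, lateral=False),
    "M4": build_model(mo_to_itf=True, lateral=True),
}

if __name__ == "__main__":
    for name, conns in MODELS.items():
        print(name, len(conns), "directed connections")
```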

Bayesian model comparison was performed by calculating the log evidence (free energy) of each of the 4 models separately for each subject (see Methods, Fig. 5C, and Supplementary Table 2) and then taking these values to a between-subject analysis. The pooled (summed) log evidences across subjects for each model, relative to the first, were as follows: M1 = 0, M2 = 9.5, M3 = 40.5, and M4 = 20.3 (Fig. 5C). Note that a difference in log evidence of 3 between 2 models represents strong evidence in favor of the model with the higher evidence (i.e., odds of about 20 to 1; Penny et al. 2004a). These results (Fig. 5C) indicate that the evidence is ordered M3 > M4 > M2 > M1. We also ran a 2 × 2 repeated-measures ANOVA of the log evidences with factors “MO–IT/F connections” (present, absent) and “lMO–mMO connections” (present, absent). It showed a significant main effect of MO–IT/F connections (F1,13 = 9.51, P = 0.009), reflecting the fact that including connections between the middle occipital regions and IT/F consistently increased the log evidence of a model across subjects. There was also a significant interaction between the factors (F1,13 = 10.81, P = 0.006). However, there was no significant main effect of the lateral lMO–mMO connections (F1,13 < 1, P = 0.44). Moreover, the difference between M3 and M4, although in favor of M3, was not significant across subjects (Fig. 5C; t13 = −2.04, P = 0.062). Based on these results, there is strong evidence for models with direct connections between the middle occipital areas (first level) and IT/F (third level), but no evidence for lateral connections between lMO and mMO (first level). Nevertheless, we used the more complete model M4 for further analysis because it allowed us to compare top-down and lateral connections quantitatively. The results of this model comparison resolve our first question and allow us to conclude that top-down effects are not limited to neighboring levels of the visual hierarchy but can span multiple levels.
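The arithmetic behind this comparison can be sketched using the summed log evidences reported above. Assuming a flat prior over models, a difference in log evidence ΔF corresponds to a Bayes factor of exp(ΔF), and posterior model probabilities follow from normalizing the exponentiated evidences. Summing log evidences across subjects is a fixed-effects pooling; the repeated-measures ANOVA reported above addresses consistency across subjects separately. The function names are illustrative.

```python
import math
from typing import Dict

# Summed log evidences across subjects, relative to M1 (values reported in the text).
LOG_EVIDENCE: Dict[str, float] = {"M1": 0.0, "M2": 9.5, "M3": 40.5, "M4": 20.3}


def bayes_factor(log_ev_a: float, log_ev_b: float) -> float:
    """Bayes factor of model a over model b from their log evidences."""
    return math.exp(log_ev_a - log_ev_b)


def posterior_model_probs(log_ev: Dict[str, float]) -> Dict[str, float]:
    """Posterior model probabilities under a flat prior over models.

    The maximum is subtracted before exponentiating to avoid overflow.
    """
    top = max(log_ev.values())
    weights = {m: math.exp(f - top) for m, f in log_ev.items()}
    total = sum(weights.values())
    return {m: w / total for m, w in weights.items()}


if __name__ == "__main__":
    print(f"BF for a log-evidence difference of 3: {bayes_factor(3.0, 0.0):.1f}")  # 20.1
    print(f"BF M3 vs M4: {bayes_factor(LOG_EVIDENCE['M3'], LOG_EVIDENCE['M4']):.3g}")
    for model, p in sorted(posterior_model_probs(LOG_EVIDENCE).items()):
        print(model, f"{p:.3g}")
```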

To assess the effect of the stimuli on each connection, the posterior means of the fixed connections and modulatory effects were harvested for each subject and taken to a second-level (between-subject) analysis using 1-sample t-tests. Figure 6 and Table 3 show the results. Panel A of Figure 6 shows the results for the fixed connections. These represent the connectivity between areas in the absence of the modulatory (bilinear) effects of collinearity or meaning. There were significant reciprocal connections between lMO and pIT and between pIT and IT/F. There was also a significant positive coupling from lMO to IT/F and a negative coupling from IT/F to mMO. These results suggest a default circuit between areas lMO, pIT, and IT/F in the processing of any visual image. This agrees with the conjunction analysis (Fig. 2 and Supplementary Fig. 1), which shows that all these areas are significantly active in all experimental conditions. Interestingly, the only negative (i.e., functionally inhibitory) fixed connection was the top-down connection from IT/F to mMO.
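The second-level test on each connection amounts to a 1-sample t-test of the subject-specific posterior means against zero. A minimal sketch follows. The parameter values are hypothetical. The critical value 2.160 (two-tailed, α = 0.05, 13 degrees of freedom, i.e., the 14 subjects implied by the reported F1,13 and t13 statistics) is taken from standard t tables rather than computed.

```python
import math
import statistics
from typing import Sequence, Tuple

# Two-tailed critical t for alpha = 0.05 with 13 degrees of freedom (standard table value).
T_CRIT_DF13 = 2.160


def one_sample_t(values: Sequence[float]) -> Tuple[float, int]:
    """t-statistic and degrees of freedom for testing mean(values) against zero."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least 2 observations")
    sem = statistics.stdev(values) / math.sqrt(n)  # standard error of the mean
    return statistics.mean(values) / sem, n - 1


if __name__ == "__main__":
    # Hypothetical posterior means (Hz) for one connection in 14 subjects.
    params = [0.31, 0.22, 0.18, 0.27, 0.35, 0.12, 0.29,
              0.24, 0.19, 0.33, 0.26, 0.21, 0.30, 0.23]
    t, df = one_sample_t(params)
    assert df == 13, "T_CRIT_DF13 only applies to 13 degrees of freedom"
    verdict = "significant" if abs(t) > T_CRIT_DF13 else "not significant"
    print(f"t({df}) = {t:.2f}: {verdict} at P < 0.05 (two-tailed)")
```

Dividing a Table 3 mean by its SEM gives the same kind of t-statistic for that connection.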

Figure 6.

DCM results. Schematic representations of the group results obtained with a DCM analysis: (A) fixed connections; (B) modulation by collinearity; (C) modulation by meaning. Each DCM comprised 4 areas: mMO, lMO, pIT, and IT/F. Positive parameters are represented by black lines and negative ones by gray lines. Parameters can be understood as rate constants with units in Hertz (see Methods). Thick lines represent parameters significant at P < 0.05 (*P < 0.01; **P < 0.001). Dotted lines show connections that were not statistically significant at the between-subject level.

Table 3.

Group results for model 4

| Connection | Fixed: mean ± SEM | P | “Collinearity”: mean ± SEM | P | “Meaning”: mean ± SEM | P |
|---|---|---|---|---|---|---|
| mMO → lMO | −0.012 ± 0.033 | 0.73 | **0.070 ± 0.022** | **0.03** | 0.005 ± 0.016 | 0.807 |
| mMO → pIT | 0.091 ± 0.057 | 0.16 | 0.070 ± 0.027 | 0.07 | 0.023 ± 0.013 | 0.191 |
| mMO → IT/F | −0.088 ± 0.050 | 0.13 | 0.034 ± 0.022 | 0.26 | **0.073 ± 0.025** | **0.045** |
| lMO → mMO | 0.020 ± 0.022 | 0.42 | 0.016 ± 0.023 | 0.61 | **−0.134 ± 0.013** | **<0.001** |
| lMO → pIT | **0.250 ± 0.039** | **<0.001** | **0.172 ± 0.019** | **<0.001** | 0.041 ± 0.015 | 0.057 |
| lMO → IT/F | **0.116 ± 0.033** | **0.006** | 0.073 ± 0.029 | 0.07 | **0.152 ± 0.024** | **<0.001** |
| pIT → mMO | −0.004 ± 0.053 | 0.94 | 0.004 ± 0.008 | 0.70 | **−0.032 ± 0.004** | **<0.001** |
| pIT → lMO | **0.062 ± 0.022** | **0.018** | **0.025 ± 0.005** | **0.003** | 0.007 ± 0.009 | 0.568 |
| pIT → IT/F | **0.097 ± 0.036** | **0.027** | 0.012 ± 0.009 | 0.34 | **0.027 ± 0.008** | **0.022** |
| IT/F → mMO | **−0.097 ± 0.034** | **0.020** | −0.012 ± 0.005 | 0.096 | **−0.022 ± 0.007** | **0.025** |
| IT/F → lMO | 0.038 ± 0.022 | 0.13 | 0.015 ± 0.006 | 0.08 | 0.012 ± 0.008 | 0.290 |
| IT/F → pIT | **0.124 ± 0.044** | **0.022** | 0.014 ± 0.007 | 0.15 | 0.003 ± 0.005 | 0.627 |

Note: The table shows the strength of the connection between the indicated areas for the fixed connections and for the modulatory effects of “collinearity” and “meaning.” Parameters can be understood as rate constants with units in Hertz (see Methods). Values with P < 0.05 are shown in bold.

SEM, standard error of the mean.

With collinearity in the visual stimuli, the connectivity between lMO and pIT increases in both the forward and backward directions (Fig. 6B). Collinearity also has a positive modulatory effect on the connection from mMO to lMO, which was not significant for the fixed connectivity. It should be noted that there was no significant negative coupling with collinearity. This was expected, because none of the areas included in the DCM showed a decrease in activity in condition ML compared with condition NC. Finally, when meaning is also present in the stimuli, there is a significant positive modulation of all the forward connections to IT/F but not of those to pIT or lMO (Fig. 6C). In contrast, there was a significant increase in the negative coupling of the backward connections from pIT and IT/F to mMO, and also a very strong negative change in the lateral coupling from lMO to mMO. Therefore, top-down effects account, at least partly, for the increase in activity observed with collinearity in lMO and the decrease observed with meaning in mMO.

In summary, the results of the DCM analysis reveal a circuit involved in the processing of all forms. It includes lMO, pIT, and IT/F, all positively coupled, and a negative connection from IT/F to mMO. With collinearity, the connectivity between lMO and pIT increases in both directions. With meaning, there is a positive modulation of all the forward connections to IT/F and a negative modulation of all the connections to mMO, but not of those to any other region. These results suggest that top-down signals are generated at each level of the visual hierarchy, that a single area can receive top-down influences from multiple levels, and that inhibitory effects are seen only in top-down and lateral coupling. This is consistent with a role for top-down signals in disambiguating activity in lower areas. Such signals would enable more efficient processing of low-level visual attributes that conform to the interpretation established by higher level areas.

Discussion

Using a conventional general linear model analysis, we have shown that stimuli with recognizable high-order form cause stronger activations in anterior (higher) visual areas and deactivations in posterior (lower) areas. With an effective connectivity analysis (DCM), we demonstrated that high-order form induces top-down signals from more than one level of the visual brain. Our analysis shows that all our stimuli caused activations in visual areas, from the inferior occipital cortex to the middle occipital cortex dorsally and the anterior fusiform gyrus ventrally. This suggests that form information is transmitted continuously and in parallel between levels of the visual brain (Zeki and Shipp 1988; Humphreys et al. 1999; Cardin and Zeki 2005). It does not appear to be transmitted in a discrete fashion, in which processing must be completed at one level before information is sent to the next. Our results agree with previous studies showing stronger anterior activations in the selective processing of objects' structural and semantic properties (Gerlach et al. 1999, 2002; Vuilleumier et al. 2002; Simons et al. 2003). They also show that coherence in the stimuli causes deactivations in lower visual areas following a hierarchical organization: V1/V2 were less active with collinearity, whereas the middle occipital cortex, a more anterior region, was less active with meaning. Stronger responses to meaningful objects were observed in higher visual areas (bilateral anterior fusiform gyrus and inferior temporal gyrus). They were also observed in areas associated with memory, semantics, and object-based decisions, such as the hippocampus and inferior frontal gyrus (Gabrieli et al. 1997; Gerlach et al. 1999; Stern et al. 2001; Adams and Janata 2002; Vuilleumier et al. 2002; Simons et al. 2003). Areas responding more strongly to collinear stimuli were purely visual, with clusters in the fusiform gyrus, pIT gyrus, and middle occipital cortex.
In general, areas more active for collinear stimuli were located more posteriorly than those activated by meaningful ones. Furthermore, collinearity and meaning caused deactivations in lower visual areas, also with a posterior-to-anterior progression: the deactivations caused by collinearity were located in V1/V2 and those caused by meaning in the middle occipital cortex. This suggests that the presence of learnt regularities in the visual input causes activations in more anterior (higher) areas, accompanied by deactivations in posterior (lower) ones. These results agree with previous studies showing deactivations in lower visual areas with increases in coherence (McKeefry et al. 1997; Murray et al. 2002; Dumoulin and Hess 2007; Fang et al. 2008). They also show that these deactivations occur in reverse hierarchical order: deactivations caused by an abstract property, such as meaning, are found in more anterior areas than those caused by a lower level property, such as collinearity. Deactivations in lower visual areas with more coherent stimuli have been attributed to statistical properties of the image rather than to global form (Dumoulin and Hess 2007), although those authors acknowledge that these effects could be mediated by top-down signals. Overall, these results are in agreement with a predictive coding theory of cortical processing, in which higher areas send top-down signals to lower ones. The goal of this recurrent message passing among different levels is to reduce the mismatch between top-down predictions and bottom-up sensory information. Once the mismatch has been accounted for in neural terms, the predictions are a sufficient representation of the sensory input (Mumford 1992; Rao and Ballard 1999; Friston 2003). In the context of our study, areas activated by collinearity and meaning will send top-down signals that account for these regularities in the input, resulting in a refined representation of the sensory stimulation.
This process will continue in further stages, in which top-down influences from areas in the parietal and frontal cortices could contribute signals related to prior beliefs, attention, or imagery. All these combined signals will contribute to a more efficient overall coding of the form, including its segregation from the background and its recognition. The refined information will then be used for efficient decision making and the corresponding deployment of motor responses. The same task was performed for all stimulus categories, and we found no differences in brain activity between the regressors coding for task difficulty. Any differences in the results are therefore likely to be due to the processing of stimulus properties, and not to differences in the strategy used to judge the symmetry of meaningful or meaningless images. That is, they reflect a network and dynamics of successful form perception (in particular, figure–ground segregation) independently of task. Further support for our conclusions comes from extensive behavioral and computational evidence showing top-down influences that assist recognition and figure–ground segregation (see Gilbert and Sigman 2007 for a recent review). For example, when 2 regions of space are separated by an edge, the one that has the shape of a known object is more likely to be assigned as the figure, showing the influence that previous knowledge has on the segmentation of the scene (see Peterson and Skow-Grant 2003 for a review). Evidence from computer science also supports this view: successful computer models of figure–ground segregation have been achieved when top-down knowledge is included to aid the segmentation process (Ullman 2006; Sharon et al. 2006).

To identify the nature and origin of potential top-down and bottom-up modulations, we performed a DCM analysis using a simple model with 3 stages: 1) an input level (mMO and lMO), parts of which were either activated or deactivated by high-order structure; 2) an intermediate level (pIT) activated more by simple high-order form (collinearity); and 3) a final level (IT/F) activated more by forms with semantic associations (meaning). The DCM analysis showed a strong fixed coupling (i.e., when the system was processing any condition) between lMO, pIT, and IT/F. This agrees with the fact that we saw activations in all these areas with any kind of visual stimulus (Fig. 2 and Supplementary Fig. 1). Again, all these areas seem to be involved in the processing of form, but more anterior ones are more active if the stimulus is recognizable. This is reflected in the positive modulation observed in the forward connections to pIT with collinearity and to IT/F with meaning. Collinearity also caused a positive modulation of the backward connection from pIT to lMO, which results in an overall increase in the connectivity loop between these 2 areas. In contrast, meaning caused a negative modulation of the backward connections to mMO. A simple account of these findings is that mMO represents the lowest level of the visual processing hierarchy and that top-down signals are aimed at reducing the mismatch between the sensory input and the high-level interpretation. However, there are alternative explanations. For example, in the human visual brain, the foveal-to-peripheral representation runs from lateral to medial parts of retinotopically mapped cortex (Wandell et al. 2005), and in our stimuli the lines forming recognizable “figures” were always central.
Consequently, lMO, being more lateral, may represent a more central part of the visual field, where the figure was located, and mMO a more peripheral part, occupied mostly by background lines. This suggests that positive top-down signals may be sent to populations coding relevant information (in this case, the figure) and inhibitory signals to those responsive to potentially irrelevant information (the background). It should be noted that in the DCM analysis, inhibitory signals came from both higher and lateral areas, suggesting that any activated area can contribute inhibitory signals. Top-down signals with different effects within the same visual area have been shown in humans and macaques (Hupe et al. 1998; Williams et al. 2008). In the macaque, top-down signals have been shown to amplify responses to objects within the classical receptive field and to enhance the suppression evoked by background stimuli in the surrounding region (Hupe et al. 1998). In humans, Williams et al. (2008) have shown, using fMRI, differential top-down signals to cortical regions that are part of the same visual area but represent different portions of the visual field. In their experiment, they observed a foveal response to object categories presented in the periphery of the visual field. The authors did not speculate about the positive or negative nature of the top-down signal. However, they showed that the response was position invariant and task relevant, and therefore probably caused by top-down signals from areas such as LOC. In brief, our DCM analysis shows not only negative top-down signals that suppress activity in lower visual areas with recognizable stimuli but also a positive top-down signal to areas already activated by the visual stimulus. This positive signal could enhance the activity of those neurons coding relevant information, as shown in figure–ground segregation experiments (Lamme 1995; Hupe et al. 1998). Our conclusions are based on top-down effects elicited by collinearity and meaning involving visual areas in the occipital and temporal lobes.
However, the organizational principles emerging from our study may well apply to any stage in the processing of sensory information. Examples include top-down modulations in object processing areas elicited by attention (O'Craven et al. 1999; Hopfinger et al. 2000; Pessoa et al. 2003; Vuilleumier et al. 2008), working memory (Rose et al. 2005), mental imagery (Ishai et al. 2000a; O'Craven and Kanwisher 2000; Mechelli et al. 2004), semantics (Humphreys et al. 1997; Chao et al. 1999; Noppeney et al. 2006), prior beliefs (Summerfield et al. 2006; Summerfield and Koechlin 2008), emotion (Vuilleumier et al. 2001), and task demands (Righart et al. 2009), many of which originate in prefrontal and parietal regions.

To conclude, using a conventional analysis we have shown that stimuli with high-order forms cause larger activations in more anterior visual areas. In contrast, when the images contain random or unfamiliar arrangements, activations are greater in posterior visual areas. In addition, our DCM analysis demonstrated that form regularities engage higher visual areas, which generate top-down signals simultaneously at several stages of the visual system. These results are consistent with the hypothesis that each activated visual area sends to lower areas a top-down signal accounting for one attribute of the stimulus. If a stimulus has many recognizable patterns, the specialized areas processing these patterns will send parallel top-down influences to the lower area concerned, helping to explain different hierarchical attributes of the visual scene. These top-down signals could optimize the transmission of relevant information, resulting in a more efficient encoding of the sensory stimulation.

Supplementary Material

Supplementary material can be found at: http://www.cercor.oxfordjournals.org/

Funding

Wellcome Trust (personal grant to S.Z.; PhD studentship to V.C.).


Acknowledgments

Conflict of Interest: None declared.

References

- Adams RB, Janata P. A comparison of neural circuits underlying auditory and visual object categorization. NeuroImage. 2002;16:361–377. doi: 10.1006/nimg.2002.1088.

- Altmann CF, Bulthoff HH, Kourtzi Z. Perceptual organization of local elements into global shapes in the human visual cortex. Curr Biol. 2003;13:342–349. doi: 10.1016/s0960-9822(03)00052-6.

- Andrews TJ, Schluppeck D. Neural responses to Mooney images reveal a modular representation of faces in human visual cortex. NeuroImage. 2004;21:91–98. doi: 10.1016/j.neuroimage.2003.08.023.

- Ban H, Yamamoto H, Fukunaga M, Nakagoshi A, Umeda M, Tanaka C, Ejima A. Toward a common circle: interhemispheric contextual modulation in human early visual areas. J Neurosci. 2006;26:8804–8809. doi: 10.1523/JNEUROSCI.1765-06.2006.

- Bar M, Kassam KS, Ghuman AS, Boshyan J, Schmid AM, Schmidt AM, Dale AM, Hämäläinen MS, Marinkovic K, Schacter DL, et al. Top-down facilitation of visual recognition. Proc Natl Acad Sci U S A. 2006;103:449–454. doi: 10.1073/pnas.0507062103.

- Beck P, Kaas J. Cortical connections of the dorsomedial visual area in new world owl monkeys (Aotus trivirgatus) and squirrel monkeys (Saimiri sciureus). J Comp Neurol. 1998;400:18–34. doi: 10.1002/(sici)1096-9861(19981012)400:1<18::aid-cne2>3.0.co;2-w.

- Bokde ALW, Dong W, Born C, Leinsinger G, Meindl T, Teipel SJ, Reiser M, Hampel H. Task difficulty in a simultaneous face matching task modulates activity in face fusiform area. Cogn Brain Res. 2005;25:701–710. doi: 10.1016/j.cogbrainres.2005.09.016.

- Burgund ED, Marsolek CJ. Viewpoint-invariant and viewpoint-dependent object recognition in dissociable neural subsystems. Psychon Bull Rev. 2000;7:480–489. doi: 10.3758/bf03214360.

- Cardin V, Zeki S. Form construction in the human visual brain. Program No. 46.20. Neuroscience 2005 Abstracts. Washington, DC: Society for Neuroscience Online; 2005.

- Chao LL, Haxby JV, Martin A. Attribute-based neural substrates in temporal cortex for perceiving and knowing about objects. Nat Neurosci. 1999;2:913–919. doi: 10.1038/13217.

- Chun MM, Jiang Y. Contextual cueing: implicit learning and memory of visual context guides spatial attention. Cogn Psychol. 1998;36:28–71. doi: 10.1006/cogp.1998.0681.

- Cole MW, Schneider W. The cognitive control network: integrated cortical regions with dissociable functions. NeuroImage. 2007;37:343–360. doi: 10.1016/j.neuroimage.2007.03.071.

- Desimone R, Albright TD, Gross CG, Bruce C. Stimulus-selective properties of inferior temporal neurons in the macaque. J Neurosci. 1984;4:2051–2062. doi: 10.1523/JNEUROSCI.04-08-02051.1984.

- Desimone R, Fleming JFR, Gross CG. Prestriate afferents to inferior temporal cortex: an HRP study. Brain Res. 1980;184:41–55. doi: 10.1016/0006-8993(80)90586-7.

- Distler C, Boussaoud D, Desimone R, Ungerleider LG. Cortical connections of inferior temporal area TEO in macaque monkeys. J Comp Neurol. 1993;334:125–150. doi: 10.1002/cne.903340111.

- Dolan RJ, Fink GR, Rolls E, Booth M, Holmes A, Frackowiak RSJ, Friston KJ. How the brain learns to see objects and faces in an impoverished context. Nature. 1997;389:596–599. doi: 10.1038/39309.

- Dumoulin SO, Hess RF. Cortical specialization for concentric shape processing. Vis Res. 2007;47:1608–1613. doi: 10.1016/j.visres.2007.01.031.

- Eger E, Henson RN, Driver J, Dolan RJ. Mechanisms of top-down facilitation in perception of visual objects studied by fMRI. Cereb Cortex. 2007;17:2123–2133. doi: 10.1093/cercor/bhl119.

- Eger E, Schweinberger SR, Dolan RJ, Henson RN. Familiarity enhances invariance of face representations in human ventral visual cortex: fMRI evidence. NeuroImage. 2005;26:1128–1139. doi: 10.1016/j.neuroimage.2005.03.010.

- Eickhoff SB, Stephan KE, Mohlberg H, Grefkes C, Fink GR, Amunts K, Zilles K. A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. NeuroImage. 2005;25:1325–1335. doi: 10.1016/j.neuroimage.2004.12.034.

- Fang F, Kersten D, Murray SO. Perceptual grouping and inverse fMRI activity patterns in human visual cortex. J Vis. 2008;8:1–9. doi: 10.1167/8.7.2.

- Felleman D, Burkhalter A, Van Essen D. Cortical connections of areas V3 and VP of macaque monkey extrastriate visual cortex. J Comp Neurol. 1997;379:21–47. doi: 10.1002/(sici)1096-9861(19970303)379:1<21::aid-cne3>3.0.co;2-k.

- Felleman D, Van Essen D. Distributed hierarchical processing in the primate cerebral cortex. Cereb Cortex. 1991;1:1–47. doi: 10.1093/cercor/1.1.1-a.

- Friston KJ. Functional and effective connectivity in neuroimaging: a synthesis. Hum Brain Mapp. 1994;2:56–78.

- Friston KJ. Learning and inference in the brain. Neural Netw. 2003;16:1325–1352. doi: 10.1016/j.neunet.2003.06.005.

- Friston KJ, Harrison L, Penny W. Dynamic causal modelling. NeuroImage. 2003;19:1273–1302. doi: 10.1016/s1053-8119(03)00202-7.

- Friston KJ, Penny W, Glaser D. Conjunction revisited. NeuroImage. 2005;25:661–667. doi: 10.1016/j.neuroimage.2005.01.013.

- Gabrieli JD, Brewer JB, Desmond JE, Glover GH. Separate neural bases of two fundamental memory processes in the human medial temporal lobe. Science. 1997;276:264–266. doi: 10.1126/science.276.5310.264.

- Gerlach C. Category-specificity in visual object recognition. Cognition. 2009;111:281–301. doi: 10.1016/j.cognition.2009.02.005.

- Gerlach C, Aaside CT, Humphreys GW, Gade A, Paulson OB, Law I. Brain activity related to integrative processes in visual object recognition: bottom-up integration and the modulatory influence of stored knowledge. Neuropsychologia. 2002;40:1254–1267. doi: 10.1016/s0028-3932(01)00222-6.

- Gerlach C, Law I, Gade A, Paulson OB. Perceptual differentiation and category effects in normal object recognition: a PET study. Brain. 1999;122:2159–2170. doi: 10.1093/brain/122.11.2159.

- Gilbert CD, Sigman M. Brain states: top-down influences in sensory processing. Neuron. 2007;54:677–696. doi: 10.1016/j.neuron.2007.05.019.

- Grill-Spector K, Kushnir T, Edelman S, Itzchak Y, Malach R. Cue-invariant activation in object-related areas of the human occipital lobe. Neuron. 1998a;21:191–202. doi: 10.1016/s0896-6273(00)80526-7.

- Grill-Spector K, Kushnir T, Hendler T, Edelman S, Itzchak Y, Malach R. A sequence of object-processing stages revealed by fMRI in the human occipital lobe. Hum Brain Mapp. 1998b;6:316–328. doi: 10.1002/(SICI)1097-0193(1998)6:4<316::AID-HBM9>3.0.CO;2-6.

- Grossberg S. 3-D vision and figure-ground separation by visual cortex. Percept Psychophys. 1994;55:48–121. doi: 10.3758/bf03206880.

- Grossberg S. The link between brain learning, attention, and consciousness. Conscious Cogn. 1999;8:1–44. doi: 10.1006/ccog.1998.0372.

- Harrison LM, Stephan KE, Rees G, Friston KJ. Extra-classical receptive field effects measured in striate cortex with fMRI. NeuroImage. 2007;34:1199–1208. doi: 10.1016/j.neuroimage.2006.10.017.

- Henson RNA, Goshen-Gottstein Y, Ganel T, Otten LJ, Quayle A, Rugg MD. Electrophysiological and haemodynamic correlates of face perception, recognition and priming. Cereb Cortex. 2003;13:793–805. doi: 10.1093/cercor/13.7.793.

- Holcomb HH, Medoff DR, Caudill PJ, Zhao Z, Lahti AC, Dannals RF, Tamminga CA. Cerebral blood flow relationships associated with a difficult tone recognition task in trained normal volunteers. Cereb Cortex. 1998;8:534–542. doi: 10.1093/cercor/8.6.534.

- Hopfinger JB, Buonocore MH, Mangun GR. The neural mechanisms of top-down attentional control. Nat Neurosci. 2000;3:284–291. doi: 10.1038/72999.

- Hubel DH, Wiesel TN. Receptive fields and functional architecture of monkey striate cortex. J Physiol. 1968;195:215–243. doi: 10.1113/jphysiol.1968.sp008455.

- Humphreys GW, Price CJ, Riddoch MJ. From objects to names: a cognitive neuroscience approach. Psychol Res. 1999;62:118–130. doi: 10.1007/s004260050046.

- Humphreys GW, Riddoch MJ, Price CJ. Top-down processes in object identification: evidence from experimental psychology, neuropsychology and functional anatomy. Philos Trans R Soc Lond B Biol Sci. 1997;352:1275–1282. doi: 10.1098/rstb.1997.0110.

- Hupe JM, James AC, Payne BR, Lomber SG, Girard P, Bullier J. Cortical feedback improves discrimination between figure and background by V1, V2 and V3 neurons. Nature. 1998;394:784–787. doi: 10.1038/29537.

- Ishai A, Ungerleider LG, Haxby JV. Distributed neural systems for the generation of visual images. Neuron. 2000a;28:979–990. doi: 10.1016/s0896-6273(00)00168-9.

- Ishai A, Ungerleider LG, Martin A, Haxby JV. The representation of objects in the human occipital and temporal cortex. J Cogn Neurosci. 2000b;12:35–51. doi: 10.1162/089892900564055.

- Ishai A, Ungerleider LG, Martin A, Schouten JL, Haxby JV. Distributed representation of objects in the human ventral visual pathway. Proc Natl Acad Sci U S A. 1999;96:9379–9384. doi: 10.1073/pnas.96.16.9379.

- Kanwisher N, Tong F, Nakayama K. The effect of face inversion on the human fusiform face area. Cognition. 1998;68:B1–B11. doi: 10.1016/s0010-0277(98)00035-3.

- Kanwisher N, Woods R, Iacoboni M, Mazziotta J. A locus in human extrastriate cortex for visual shape analysis. J Cogn Neurosci. 1996;9:133–142. doi: 10.1162/jocn.1997.9.1.133.

- Kapadia MK, Ito M, Gilbert CD, Westheimer G. Improvement in visual sensitivity by changes in local context: parallel studies in human observers and in V1 of alert monkeys. Neuron. 1995;15:843–856. doi: 10.1016/0896-6273(95)90175-2.

- Koffka K. Principles of Gestalt psychology. New York: Harcourt & Brace; 1935.

- Kourtzi Z, Tolias AS, Altmann CF, Augath M, Logothetis NK. Integration of local features into global shapes: monkey and human FMRI studies. Neuron. 2003;37:333–346. doi: 10.1016/s0896-6273(02)01174-1.

- Kveraga K, Boshyan J, Bar M. Magnocellular projections as the trigger of top-down facilitation in recognition. J Neurosci. 2007;27:13232–13240. doi: 10.1523/JNEUROSCI.3481-07.2007.

- Lamme VA. The neurophysiology of figure-ground segregation in primary visual cortex. J Neurosci. 1995;15:1605–1615. doi: 10.1523/JNEUROSCI.15-02-01605.1995.

- Lamme VA, Roelfsema PR. The distinct modes of vision offered by feedforward and recurrent processing. Trends Neurosci. 2000;23:571–579. doi: 10.1016/s0166-2236(00)01657-x.

- Lee T, Mumford D, Romero R, Lamme V. The role of the primary visual cortex in higher level vision. Vis Res. 1998;38:2429–2454. doi: 10.1016/s0042-6989(97)00464-1.

- Lehmann C, Vannini P, Wahlund L-O, Almkvist O, Dierks T. Increased sensitivity in mapping task demand in visuospatial processing using reaction-time-dependent hemodynamic response predictors in rapid event-related fMRI. NeuroImage. 2006;31:505–512. doi: 10.1016/j.neuroimage.2005.12.064.

- Malach R, Reppas JB, Benson RR, Kwong KK, Jiang H, Kennedy WA, Ledden PJ, Brady TJ, Rosen BR, Tootell RB. Object-related activity revealed by functional magnetic resonance imaging in human occipital cortex. Proc Natl Acad Sci U S A. 1995;92:8135–8139. doi: 10.1073/pnas.92.18.8135.

- Marsolek CJ. Abstract visual-form representations in the left cerebral hemisphere. J Exp Psychol Hum Percept Perform. 1995;21:375–386. doi: 10.1037//0096-1523.21.2.375.

- McKeeff TJ, Tong F. The timing of perceptual decisions for ambiguous face stimuli in the human ventral visual cortex. Cereb Cortex. 2007;17:669–678. doi: 10.1093/cercor/bhk015.

- McKeefry DJ, Watson JD, Frackowiak RS, Fong K, Zeki S. The activity in human areas V1/V2, V3, and V5 during the perception of coherent and incoherent motion. NeuroImage. 1997;5:1–12. doi: 10.1006/nimg.1996.0246.