Abstract

Enabling collection of clinical data directly from patients has the potential to increase data accuracy and augment patient engagement in the care process. Most patient data entry systems have been created independently of electronic health records, and few studies have explored how patient-entered data can be integrated into the documentation of a clinical encounter. In this paper we describe a formative evaluation study that used three different methodologies to identify requirements for direct data entry by patients and for the subsequent incorporation of these data into the documentation process. The greatest challenges included ensuring confidentiality of records between patients, capturing medication histories from patients, displaying and distinguishing new and previously entered data for provider review, and supporting patient educational needs. The resulting tablet computer-based data collection tool has been deployed to 30 primary care optometry practices, where it is successfully used to document care for patients with glaucoma.

Introduction

Enabling direct entry of clinical information by patients into electronic record systems has the potential to streamline the data collection process, to collect more comprehensive data, and to increase patients’ engagement in their own care.1 The initial use of electronic systems for capturing data directly from patients dates back to the mid-1960s,2 and the advantages and disadvantages of this data collection modality across diverse venues have been extensively reviewed.3 In general, computers have been shown to collect a larger quantity of historical information, and more sensitive information, than clinician-patient interviews or paper forms.3–5 Computers can also capture critical information that would otherwise go undocumented by routine processes.6 The reliability of computer-collected information relative to interviewer-collected information has been measured at over 95%.4,7–10 While patients have generally found these systems easy to use,11 until recently most data collection tools have been either stand-alone systems external to providers’ clinical documentation tools3 or paper-based forms that are scanned to extract information.12 Very few studies have explored how patient-entered data can be integrated into the care documentation process, especially for eye care.13

In this paper we describe a multifaceted formative evaluation study that we conducted in order to create a human-computer interface that would enable patients with glaucoma to enter information directly into an electronic health record, where it was subsequently incorporated into the provider’s documentation of the encounter. The result is a tablet computer-based tool that has been deployed to 30 eye care practices as part of a randomized controlled trial assessing the impact of computer-assisted patient/provider data collection and decision making on the quality of eye care for open angle glaucoma.

Methods

Definition of User Tasks

At the initiation of this interface development project, we identified the tasks that would need to be supported to enable patients to enter historical and observational data directly using a tablet computer. A list of tasks was elicited through interviews with eye care professionals and patients. The specific data items to be collected were identified from these interviews and observations, from data elements abstracted from paper-based data collection forms, and from published recommendations of eye care professional societies regarding optimal data collection.14

Methodological Approaches

We employed three methodologies to assess various approaches to supporting direct electronic data entry by patients. The study methodologies we used to inform our system development process included focus groups with patients and providers, cognitive response interviews (“think-aloud” sessions) during observed user sessions, and monitored pilot implementations of a system prototype at two clinic sites.

Focus Groups: We conducted two focus groups with optometrists and two focus groups with patients with glaucoma. After a brief overview of the data entry interface for the tablet computer, participants responded either to simulated data entry screens or to direct hands-on use of the device. After this exposure, participants discussed the strengths and weaknesses of the system with a group facilitator. Responses were recorded and later grouped by theme.

Cognitive Response Sessions: Cognitive response interviews were conducted with patients with glaucoma and optometrists who had not previously been exposed to the system. Each participant was paired with one or more observers, who documented the user’s comments and the screen to which he or she was responding while attempting to enter data. Patients entered data based on their personal experience; optometrists entered data for patients they had seen in their practices. Observations from these sessions were organized around themes and later converted into development specifications. These proposed specifications were reviewed by the project advisory group and moved into production when deemed appropriate.

Observed Sessions at Pilot Sites: After modifications had been made based on the focus group findings and cognitive response interviews, two optometrists were observed using the system while caring for a patient, in order to assess the tablet computer data entry interface in a practice setting. Two trained observers documented each user’s interaction with the tablet computer, focusing specifically on points of disruption in workflow and on the user’s comments during the data entry process.

This study was approved by the Institutional Review Board for the Duke University School of Medicine.

Results

Focus Group Participant Characteristics

Patient focus groups included 2 women and 4 men with glaucoma with an average age of 67 years and a racial make-up of 4 Caucasians and 2 African Americans. Sessions lasted 90 minutes and included lunch. Provider focus groups included 4 female and 6 male optometrists with an average age of 45 years and an observed racial make-up of 8 Caucasians and 2 Asians. Sessions lasted 2 hours and included dinner.

Design Specifications and System Characteristics

The three approaches to gathering and refining the system specifications identified 14 critical features. These features, which represent the lessons we learned and the challenges we faced, are summarized in Table 1 along with the method we selected to implement each feature.

Table 1.

Design Specifications for Data Collection and How Requirement Was Addressed.

| Requirement Specification | Approach to Fulfill Requirement |
|---|---|
| Patient | |
| Provider | |

The requirements definition process also identified the data elements that were to be collected directly from patients. These data elements included the following:

- Reason for Visit, Referring Provider
- Eye Symptoms and Ocular History
- Impairment of Activities of Daily Living
- Selected Medical and Surgical History
- Selected Family and Social History
- Allergies
- Eye Medications (Drops and Oral)
- Medication Side Effects & Compliance Level

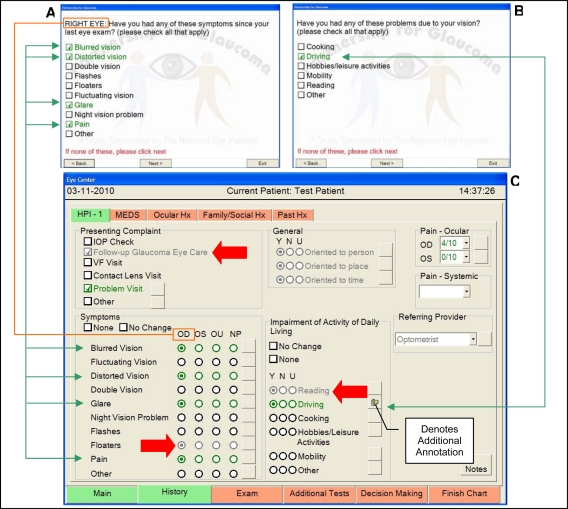

Sample screenshots from the resulting data collection tool are shown in Figure 1. Data elements that are pulled forward from a prior encounter are shown in a gray font (large arrows), whereas data elements that are newly entered during the current encounter are shown in a green font (thin arrows). Additionally, a one-to-one correspondence exists between patient-entered data elements and the data elements displayed for the provider’s review.
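As an illustration only, the distinction between pulled-forward and newly entered data can be modeled by tagging each data element with its provenance and deriving the display color from that tag. This is a minimal Python sketch under stated assumptions: the names `Source`, `DataElement`, `pull_forward`, `record_entry`, and `provider_view` are hypothetical and are not taken from the deployed system, and the sketch assumes that any value entered during the current visit, even one identical to the prior value, counts as new.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Source(Enum):
    """Where the current value of a data element came from (hypothetical model)."""
    PRIOR_ENCOUNTER = "prior"    # pulled forward from an earlier visit
    CURRENT_ENCOUNTER = "new"    # entered during this visit


# Display colors as described in the text: gray for pulled-forward, green for new.
DISPLAY_COLOR = {
    Source.PRIOR_ENCOUNTER: "gray",
    Source.CURRENT_ENCOUNTER: "green",
}


@dataclass
class DataElement:
    code: str      # hypothetical element identifier, e.g. "allergy"
    value: str
    source: Source


def pull_forward(prior: dict[str, str]) -> dict[str, DataElement]:
    """Seed the current encounter with the values recorded at the prior encounter."""
    return {code: DataElement(code, value, Source.PRIOR_ENCOUNTER)
            for code, value in prior.items()}


def record_entry(elements: dict[str, DataElement], code: str, value: str) -> None:
    """Store a value entered during this encounter; it is always marked as new."""
    elements[code] = DataElement(code, value, Source.CURRENT_ENCOUNTER)


def provider_view(elements: dict[str, DataElement]) -> list[tuple[str, str, str]]:
    """Return one (code, value, color) row per data element, sorted by code."""
    return [(e.code, e.value, DISPLAY_COLOR[e.source])
            for e in sorted(elements.values(), key=lambda e: e.code)]


if __name__ == "__main__":
    elements = pull_forward({"allergy": "sulfa", "reason_for_visit": "follow-up"})
    record_entry(elements, "reason_for_visit", "blurred vision")
    print(provider_view(elements))
    # [('allergy', 'sulfa', 'gray'), ('reason_for_visit', 'blurred vision', 'green')]
```

Because the provider view in this sketch is built from the same dictionary that the patient screens write to, each patient-entered element yields exactly one row for provider review, mirroring the one-to-one correspondence described in the text.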

Figure 1.

Screen Shots from the Patient Data Entry Module (A & B) and the Provider Data Entry Module (C). See Text for Details.

Findings from Formative Evaluation Studies

The formative sessions with patients and providers helped identify major pitfalls that resulted in data being entered incorrectly. Because only one question was shown per screen, data for each eye had to be collected on separate screens. Initially, patients often failed to recognize which eye a question referred to. To address this issue, we rephrased the eye-related questions to state the “right” or “left” eye first, and highlighted this eye designation in capital letters (Figure 1, thin line connecting boxes). A second major challenge for patients was entering their medications correctly. We discovered that patients often recognized a medication as the “drops in the bottle with a green cap that I use twice a day” and frequently did not know its name, let alone its dose or strength. To address this issue, we opted to collect only eye-related medications from patients, to collect medication information through pick-lists rather than free text, and to have the provider verify the data. Capturing even this limited set of eye-related medication data still required six screens.
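The two interface changes described above, one screen per eye with the eye named first in capitals, and medication entry through a pick-list whose entries await provider verification, could be sketched as follows. This is a hypothetical Python illustration: the function names, the question format, and the drug names in `EYE_DROP_PICK_LIST` are assumptions chosen for demonstration, not the study's actual screens or medication list.

```python
from __future__ import annotations

EYES = ("right", "left")

# Illustrative pick-list only; not the medication list used in the study.
EYE_DROP_PICK_LIST = ("latanoprost", "timolol", "brimonidine", "dorzolamide")


def per_eye_questions(stem: str) -> list[str]:
    """Turn one eye-related question into one screen per eye.

    The eye designation comes first and is written in capital letters,
    so the patient sees which eye is meant before reading the question.
    """
    return [f"{eye.upper()} eye: {stem}" for eye in EYES]


def select_medication(choice_index: int) -> dict[str, object]:
    """Record the pick-list item the patient tapped, pending provider review."""
    return {
        "medication": EYE_DROP_PICK_LIST[choice_index],
        "provider_verified": False,  # the provider confirms or corrects it later
    }


if __name__ == "__main__":
    for screen in per_eye_questions("Do you have pain in this eye?"):
        print(screen)
    # RIGHT eye: Do you have pain in this eye?
    # LEFT eye: Do you have pain in this eye?
    print(select_medication(1))
    # {'medication': 'timolol', 'provider_verified': False}
```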

Another theme that emerged from the observed patient sessions was the need for a consistent, uniform approach to navigation. To save “clicks” in the initial design, we automatically advanced to the next screen after any question for which only a single answer could be selected (i.e., a radio button). Patient users became confused about when the screen would auto-advance and when they needed to advance it manually, as after a pick-list question with several possible answers. As a resulting design specification, we required manual advancing between all screens for patient users.
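A minimal sketch of the revised navigation rule, assuming a simple in-memory list of screens: selecting an answer never moves the user forward, whether the question accepts one answer (radio button) or several (pick-list), and only an explicit Next action advances. `Screen` and `Navigator` are hypothetical names chosen for this Python illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Screen:
    prompt: str
    multi_select: bool  # False: radio button (one answer); True: pick-list (several)
    answer: list[str] = field(default_factory=list)


class Navigator:
    """Steps through screens; selecting an answer never advances automatically."""

    def __init__(self, screens: list[Screen]) -> None:
        self.screens = screens
        self.index = 0

    @property
    def current(self) -> Screen:
        return self.screens[self.index]

    def select(self, option: str) -> None:
        """Record an answer on the current screen without changing screens."""
        screen = self.current
        if screen.multi_select:
            if option not in screen.answer:
                screen.answer.append(option)
        else:
            screen.answer = [option]  # a radio button keeps only the latest choice

    def next(self) -> bool:
        """Advance on an explicit Next press; return False if on the last screen."""
        if self.index + 1 < len(self.screens):
            self.index += 1
            return True
        return False


if __name__ == "__main__":
    nav = Navigator([
        Screen("Do you use eye drops?", multi_select=False),
        Screen("Which symptoms do you have?", multi_select=True),
    ])
    nav.select("Yes")
    print(nav.index)  # 0: still on the first screen after a radio-button answer
    nav.next()
    print(nav.index)  # 1
```

Keeping `select` and `next` as separate actions is what makes the behavior uniform: the question type affects only how answers are stored, never when the screen changes.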

For providers, a major challenge identified during the formative sessions related to collecting details beyond structured data entries. Accordingly, we enabled annotation of almost every structured data element using “electronic paper,” so that providers could include all necessary details (Figure 1).15 Formative sessions with providers also revealed that, for workflow reasons, some clinics would perform only a visual field or an optic nerve examination at a single visit. This limitation led us to identify an approach to decision support for staging the severity of a patient’s glaucoma that was based solely on visual field results and was considered acceptable by optometrists.16 Finally, formative sessions served to identify the core patient information needs recognized by both patients and providers. These needs included anticipatory guidance for future testing and care, which we fulfilled through a tailored patient handout, and education about what open angle glaucoma is, what the risk factors for glaucoma are, and how to use eye drops, which we fulfilled through brief educational videos at the conclusion of the patient data entry session.

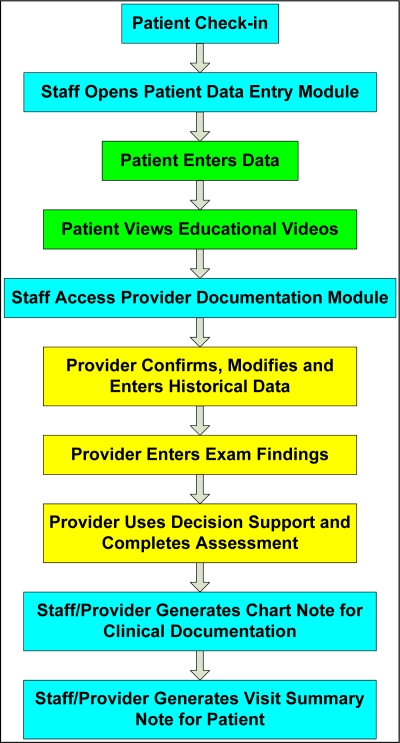

Integrating Patient Data Collection into Clinic Workflow

To operationalize data collection from patients, we recognized that this process needed to be integrated seamlessly into the clinic workflow. We used our formative sessions with providers to define and subsequently refine a workflow process that supported patient data entry (Figure 2). This process ensured confidentiality by allowing the staff to restrict each patient’s access to his/her own record, and it limited the provider’s role to tasks that only he/she could perform.
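One way to express this confidentiality rule in code is to have staff bind the tablet session to a single patient before handing the device over, so that the patient-facing call takes no patient identifier and cannot reach any other record. The `TabletSession` class below is a hypothetical Python sketch of that idea, not the deployed system's access-control mechanism; a real deployment would presumably also need staff authentication and audit logging, which are omitted here.

```python
from typing import Optional


class TabletSession:
    """Tablet session that staff bind to exactly one patient's record (hypothetical)."""

    def __init__(self, records: dict) -> None:
        self._records = records                  # patient_id -> record
        self._patient_id: Optional[str] = None   # None means the tablet is locked

    def staff_unlock(self, patient_id: str) -> None:
        """Staff task: open the tablet for one checked-in patient."""
        if patient_id not in self._records:
            raise KeyError(f"unknown patient {patient_id!r}")
        self._patient_id = patient_id

    def staff_lock(self) -> None:
        """Staff task: lock the tablet when the patient returns it."""
        self._patient_id = None

    def patient_record(self) -> dict:
        """Patient task: the only record reachable from the patient-facing screens."""
        if self._patient_id is None:
            raise PermissionError("tablet is locked; staff must unlock it for a patient")
        return self._records[self._patient_id]


if __name__ == "__main__":
    session = TabletSession({"p1": {"allergies": ["sulfa"]}, "p2": {"allergies": []}})
    session.staff_unlock("p1")
    print(session.patient_record())  # {'allergies': ['sulfa']}
    session.staff_lock()
```

The design choice worth noting is that `patient_record` takes no arguments: there is no parameter through which a patient could request someone else's record, so the staff unlock step is the only place a record is selected.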

Figure 2.

Workflow for Computer Tablet Use. Aqua boxes = staff tasks, green boxes = patient tasks, yellow boxes = provider tasks.

System Utilization

The data collection tool for glaucoma for patients and providers has been deployed in 30 private practice sites across three states since November 2009. This system is being evaluated in a two-year randomized controlled trial to assess the impact of computer-aided data collection and decision-making on both care quality and the progression of glaucoma relative to usual care.

Discussion

Using a multifaceted formative methodology, we have created a tablet computer-based system that enables patients with glaucoma to enter relevant information directly into an electronic health record. This system also allows providers to use data collected directly from patients in their documentation of clinical encounters. This data collection tool is in successful use at 30 private optometry practices as part of a clinical trial to assess the impact of computer-assisted data collection and decision-making on care quality and clinical outcomes for glaucoma.

This system collects data in a structured, coded format from both patients and providers whenever possible, so that the data can be used for analysis or decision support. In this instance, the data are being used to stage glaucoma and to provide guideline-based management plans for both providers and patients. Our system is designed to accommodate patients with low literacy, limited computer skills, and impaired vision. It also distinguishes between newly entered data and historical data elements that are pulled forward, and it allows the provider to modify these elements. With regard to confidentiality, we have derived a workflow that permits each patient to access only his/her own record. Finally, the system further integrates the patient into the care process by providing educational videos on pertinent topics and by generating a patient-tailored visit summary handout that includes anticipatory guidance for future studies and encounters.

The findings of this study are limited in that our user group involved only a small number of eye care professionals and patients. However, usability research has reported diminishing returns in new insights after about five subjects.17 An additional limitation is that this study focused on data collection for only a single eye care condition. Nonetheless, the lessons learned and approaches used to enable direct collection of data from patients in this project could be tested for multiple conditions and in other clinical domains.

Integrating patients into the care delivery process could become an increasingly important intervention for improving healthcare quality and lowering costs. Involving patients in the direct collection of their own data increases their awareness of their conditions and the related symptoms and treatments. This process also can expose patients to contextually relevant educational resources such as the videos used in this project.

Future research is needed to explore the role of direct data collection from patients across the spectrum of medical specialties and in multiple languages. Additionally, as patients increasingly record their health information in tools such as personal health records, the need to integrate patient-entered data with provider documentation and commercial electronic health records is likely to increase. Further research will also be needed to explore how such integration can be effectively achieved.18

Conclusion

Through formative studies involving patients and providers, we have successfully developed and deployed an application that integrates patients into the care process by enabling them to directly enter their data. This tool overcomes issues related to patient literacy, computer experience, visual impairment, and maintaining patient confidentiality to integrate seamlessly into the workflow and data collection processes of optometrists in diverse private practice settings.

Acknowledgments

This study was funded in part by R01 EY018405 from the National Eye Institute, P30 EY0054722 NEI Core Grant for Vision Research, and the Duke Department of Ophthalmology.

References

1. Bachman J. Improving care with an automated patient history. Fam Pract Manag. 2007;14:39–43.

2. Slack WV, Hicks GP, Reed CE, Van Cura LJ. A computer-based medical-history system. N Engl J Med. 1966;274:194–198. doi: 10.1056/NEJM196601272740406.

3. Bachman JW. The patient-computer interview: a neglected tool that can aid the clinician. Mayo Clin Proc. 2003;78:67–78. doi: 10.4065/78.1.67.

4. Grossman JH, Barnett GO, McGuire MT, Swedlow DB. Evaluation of computer-acquired patient histories. JAMA. 1971;215:1286–1291.

5. Quaak MJ, Westerman RF, van Bemmel JH. Comparisons between written and computerised patient histories. BMJ Clinical Research Ed. 1987;295:184–190. doi: 10.1136/bmj.295.6591.184.

6. Porter SC, Cai Z, Gribbons W, Goldman DA, Kohane IS. The asthma kiosk: a patient-centered technology for collaborative decision support in the emergency department. J Am Med Inform Assoc. 2004;11:458–467. doi: 10.1197/jamia.M1569.

7. Pearlman MH, Hammond WE, Thompson HK Jr. An automated “well-baby” questionnaire. Pediatrics. 1973;51:972–979.

8. Chun RW, Van Cura LJ, Spencer M, Slack WV. Computer interviewing of patients with epilepsy. Epilepsia. 1976;17(4):371–375. doi: 10.1111/j.1528-1157.1976.tb04448.x.

9. Logie AR, Madirazza JA, Webster IW. Patient evaluation of a computerised questionnaire. Computers and Biomedical Research. 1976;9(2):169–176. doi: 10.1016/0010-4809(76)90040-9.

10. Millstein SG, Irwin CE Jr. Acceptability of computer-acquired sexual histories in adolescent girls. J Pediatrics. 1983;103(5):815–819. doi: 10.1016/s0022-3476(83)80493-4.

11. Hess R, Santucci A, McTigue K, Fischer G, Kapoor W. Patient Difficulty Using Tablet Computers to Screen in Primary Care. J Gen Int Med. 2008;23:476–480. doi: 10.1007/s11606-007-0500-1.

12. Biondich PG, Anand V, Downs SM, McDonald CJ. Using Adaptive Turnaround Documents to Electronically Acquire Structured Data in Clinical Settings. AMIA Annu Symp Proc. 2003:86–90.

13. Porter SC, Silvia MT, Fleisher, et al. Parents as direct contributors to the medical record: validation of their electronic input. Ann Emerg Med. 2000;35:346–52. doi: 10.1016/s0196-0644(00)70052-7.

14. American Optometric Association Consensus Panel. Care of the Patient with Open Angle Glaucoma. American Optometric Association; 2002. Available at: http://www.aoa.org/documents/CPG-9.pdf (accessed 2010.03.14)

15. Lobach DF, Silvey GM, Macri JM, Hunt M, Kacmaz RO, Lee PP. Identifying and overcoming obstacles to point-of-care data collection for eye care professionals. AMIA Annu Symp Proc. 2005:465–469.

16. Brusini P, Filacorda S. Enhanced Glaucoma Staging System (GSS 2) for classifying functional damage in glaucoma. J Glaucoma. 2006;15:40–46. doi: 10.1097/01.ijg.0000195932.48288.97.

17. Nielsen J. Usability engineering. Boston, MA: Academic Press Inc.; 1993.

18. Kaelber DC, Jha AK, Johnston D, Middleton B, Bates DW. A Research Agenda for Personal Health Records (PHRs). J Am Med Inform Assoc. 2008;15:729–736. doi: 10.1197/jamia.M2547.