Abstract

Electronic health records contain an abundance of valuable information that can be used to guide patient care. However, the large volume of information embodied in these records also renders access to relevant information a time-consuming and inefficient process. Our ultimate objective is to develop an automated summarizer that succinctly captures all relevant information in the patient record. In this paper, we present a cognitive study of 8 clinicians who were asked to create summaries based on data contained in the patients’ electronic health record. The study characterized the primary sources of information that were prioritized by clinicians, the temporal strategies used to develop a summary and the cognitive operations used to guide the summarization process. Although we would not expect the automated summarizer to emulate human performance, we anticipate that this study will inform its development in instrumental ways.

Introduction

Electronic health records (EHRs) provide an abundance of data. Clinicians can read previous notes, reports of different tests and radiology studies, as well as access laboratory data. While EHRs are very effective at amassing such information, they often lack the functionality to aggregate or synthesize the available data for a given patient, especially when it comes to the information conveyed in the notes themselves. When accessing the “raw” record (that is, the collection of labs, notes and reports amassed over time), physicians have to sift through so much information that it becomes difficult to differentiate relevant information from irrelevant or duplicated information. In an outpatient setting, for instance, it is not uncommon to see patients with several hundred notes ranging over several years, especially for patients with chronic disease. Information overload represents a significant obstacle to their care.1

A document that summarizes a longitudinal patient record can provide physicians with all the pertinent information they need without the corresponding overload. Previous studies have shown that patient record summaries that present a structured overview of patient health information can have a positive impact on overall patient care.2 The goal of our work is to understand how physicians synthesize information about patients when reviewing their records and to develop a cognitive model of summarization of longitudinal patient information. This, in turn, will inform our work on the automated summarization of the patient record. This study focuses on the process of summarization by physicians, rather than the resulting summary itself. The particular research questions we focused on are (i) what are the sources of information physicians rely on when summarizing information about a patient? (ii) what strategies do physicians follow to prioritize the selection of information? and (iii) how can we characterize the cognitive operations that guide the summarization process?

Background

The EHR promises efficient retrieval of medical information.3 Unfortunately, EHRs have still not been widely embraced by the medical community.4 One of the reasons is that the lack of structure in the narrative text embodied in most longitudinal records is not optimally conducive to patient care. The emergence of EHR dashboards is a promising development in that they provide a means for easy access to relevant data. However, they tend to emphasize findings most central for quality reporting and population management.5 Summaries, on the other hand, can be fine-tuned to the needs of clinicians for individualized patient care.

The central purpose of summarization is to take a body of information and reduce its size and content to its important points. The study of the summarization process has been a primary method of study in cognitive science for many years.6 It is an effective means to gain insight into the process of understanding. One of our objectives in the development of an automated summarizer is to achieve a measure of congruence between the summary representation and the physician’s mental model of the patient. Towards that objective, we endeavor to characterize how physicians prioritize and represent relevant information when reviewing a patient chart. The study of the human summarization process has also proven to be useful in the development of heuristics for generating technical documents.7 This research is informed by cognitive methods and theories of human-computer interaction in the context of health information technologies.8 To the best of our knowledge, there has been no previous cognitive research on EHR summarization.

Methods

Experimental Setup

Eight physicians (two attendings and six fellows) were recruited and four patients were selected for this study. Each physician was asked to create summaries for two of the four patients, all of whom had a diagnosis of chronic kidney disease, based on data contained in the patients’ electronic health record. The physicians, all nephrologists, were given full access to the two patient records on our institution’s EHR (WebCIS) and were able to view all patient information (notes, labs, reports, demographics, visits, etc.) with no constraints on the historical nature of the data. Patients had mostly clinic visit notes but also notes and data pertaining to admissions. We developed a scenario in which the physicians were assigned the task of developing a summary document that would best communicate all the necessary information that an internist outside the institution would need if care for the patient was being transferred to that provider. The physicians were given up to 30 minutes per patient to create a document. They had access only to the information in WebCIS to create the summary and were to assume that the internist to whom care was being transferred had no access to the electronic record. In total, 16 summaries were created. The study was approved by our institution’s Institutional Review Board.

EHR Environment

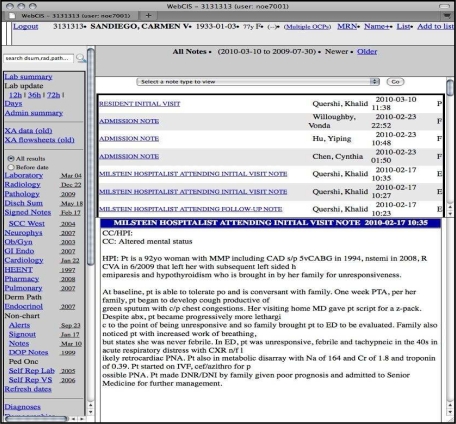

WebCIS is the in-house EHR at the Columbia University Medical Center. Patient information can be accessed by clicking on the various hyperlinks along the left-hand side of the interface. We will refer to the hyperlinks in this paper as the “Sections” of the EHR. Sections include “Notes”, “Laboratory”, “Radiology” and others. Clicking on “Cardiology,” for example, will direct a physician to cardiological test results such as an “Exercise Thallium Test.” Figure 1 shows a screen shot of the WebCIS interface.

Figure 1. WebCIS interface illustrating dummy patient data.

Data Collection

Sixteen 30-minute sessions were captured in Morae™, a multifaceted usability, video capture and analysis tool. Morae™ provides a video of all screen activity and logs a wide range of events and system interactions. This enabled us to study in depth how each physician created the summary. We also asked each physician to think aloud as he or she created each summary.

We used a method of goal-action coding with a ‘think-aloud’ protocol in order to analyze the strategies physicians used to create each summary.8 This approach draws on the concept of a cognitive walkthrough, a task-based expert system evaluation based on Norman’s Theory of Action that simulates users’ experience with a system by identifying the goal–action sequences required for completion of a specific task.9 During a think-aloud protocol, individuals with domain knowledge are asked to verbalize or think out loud as they carry out tasks.

Analysis

We first focused on a quantitative analysis of the time the physicians spent in the different sections of the EHR and the strategies they employed to navigate the patient record. This analysis was aimed at answering our first two research questions regarding summarization, namely the sources of information physicians rely on and the strategies they follow to prioritize the selection of information. We calculated the portion of time a physician spent in each section of WebCIS as a percentage of the total time he or she spent in WebCIS during that session. For this calculation, we excluded any time during the session that the physician spent writing or revising the summary document and focused on just the time the physicians were viewing the patient record.
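The percentage calculation described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the visit log, the "Writing" label for time spent in the summary document, and the function name `section_time_percentages` are hypothetical and do not come from the study data or from Morae™ output.

```python
from collections import defaultdict

# Hypothetical visit log for one session: (section, seconds spent).
# "Writing" is an assumed label for time spent writing or revising the
# summary document rather than viewing the patient record.
visits = [
    ("Notes", 210), ("Writing", 120), ("Laboratory", 150),
    ("Notes", 180), ("Radiology", 60), ("Writing", 90),
]

def section_time_percentages(visits, excluded=("Writing",)):
    """Return each section's share of record-viewing time, in percent."""
    totals = defaultdict(float)
    for section, seconds in visits:
        if section not in excluded:
            totals[section] += seconds
    viewing_time = sum(totals.values())
    if viewing_time == 0:
        return {}
    return {s: 100.0 * t / viewing_time for s, t in totals.items()}

percentages = section_time_percentages(visits)
for section, pct in sorted(percentages.items(), key=lambda item: -item[1]):
    print(f"{section}: {pct:.1f}%")
```

Because writing time is removed before normalizing, the percentages for the remaining sections sum to 100% of the time spent viewing the record.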

We also analyzed the order in which each section was visited and how frequently the physicians went back to sections already visited earlier in that session. Using Morae™, we delineated the start of a section visit when the physician clicked on that section’s link and the end of a section visit when he or she clicked on a different section’s link.
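The delineation rule for section visits can be illustrated with a short Python sketch. The click events, the timestamps and the function names below are assumptions made for illustration; they are not the format of the Morae™ logs.

```python
from collections import Counter

def visits_from_clicks(clicks, session_end):
    """Turn (timestamp_seconds, section) click events into (section, start, end) visits.

    A visit starts at a click on a section link and ends at the next click
    on a different section's link (or at the end of the session).
    """
    visits = []
    for timestamp, section in sorted(clicks):
        if visits and visits[-1][0] == section:
            continue  # repeated click within the same section: visit continues
        if visits:
            previous_section, start, _ = visits[-1]
            visits[-1] = (previous_section, start, timestamp)  # close previous visit
        visits.append((section, timestamp, session_end))
    return visits

def revisit_counts(visits):
    """Count how often each section was returned to after its first visit."""
    totals = Counter(section for section, _, _ in visits)
    return {section: n - 1 for section, n in totals.items() if n > 1}

# Hypothetical click log for one session lasting 600 seconds.
clicks = [(0, "Notes"), (210, "Laboratory"), (360, "Notes"),
          (400, "Notes"), (540, "Radiology")]
visits = visits_from_clicks(clicks, session_end=600)
order = [section for section, _, _ in visits]
revisits = revisit_counts(visits)
```

In this made-up log the visit order is "Notes", "Laboratory", "Notes", "Radiology", and "Notes" is the only section that is revisited.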

Our third area of analysis was more qualitative in nature and addressed our last research question: how to characterize the cognitive operations that guide the summarization process. It was based primarily on what the physicians said while creating the summaries in the think-aloud protocol. We coded task-based goals that the physicians set as they moved through WebCIS and, using Morae™, we marked the instances in the recordings where these goals were executed.

Results

Average Time in Each Section of the EHR

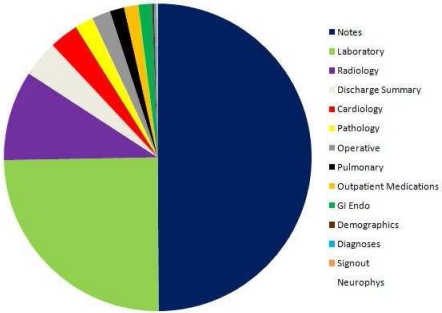

Figure 2 represents the average percent of time spent by the physicians in each section of WebCIS over all 16 recorded sessions. All but two of the sessions lasted longer than 18 minutes with most of them lasting over 25 minutes.

Figure 2. The average percent of time that physicians spent in each section of the EHR.

Physicians, on average, spent the majority of their session time in the “Notes” section, followed by the “Laboratory” and then the “Radiology” sections. In fact, these three sections alone accounted for more than 80% of the time the physicians spent searching for relevant information in WebCIS. The percentage of time spent in the remaining sections ranged from 4% for the “Discharge Summary” section to less than half of one percent for the “Neurophys” section.

Order of and Duration of Time in Accessed Sections

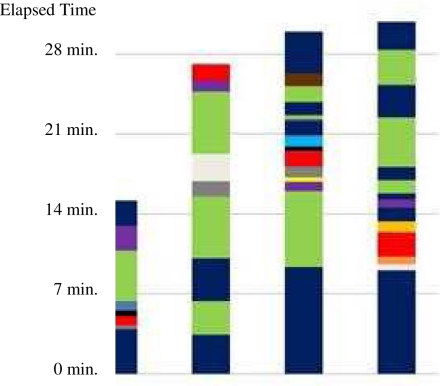

For each of the sessions, we analyzed when the physician transitioned between tasks and moved on to other sections. Figure 3 shows four separate sessions, all for the same patient, each session represented by a colored bar with the elapsed time of the session along the left-hand side.

Figure 3. The chart above represents four individual sessions consisting of four different physicians creating a summary for the same patient. Each bar shows how the physician moved from one section of the EHR to another as he or she created the patient summary. For example, the second physician spent approximately 3.5 minutes in “Notes”, moved to “Laboratory” for about 2.5 minutes and then navigated back to “Notes”. (See Figure 2 for section legend.)

Every one of these four physicians started their session by visiting “Notes” but then varied both the order and the frequency of the section visits. For example, one physician (the third bar from the left) frequently went back and forth to areas already visited while another (the first) only returned to “Notes” to complete the summary. Also, note in Figure 3 how three of the four physicians ended their session in the “Notes” section (the first, third and fourth bars from the left) as they reviewed the notes again before declaring the summary completed.

Identification of Goals

Based on our analysis of the think-aloud protocols, we discovered three primary goals guiding physicians in reviewing the patient record. These goals were: Identify, Validate, and Ascertain Status.

Identify

Since the physicians had no knowledge of the patients before reviewing their records, their first goal was to “identify” problems that needed to be communicated in the summary. This task tended to occur primarily in the most recent notes as the physicians reviewed what other doctors had discovered and documented. The “Identify” goal continued throughout the session as the physicians reviewed the patient records looking for findings they may have missed.

Validate

After the “Identify” phase, the physicians attempted to validate their initial findings by looking in other areas of the record to confirm a problem or a diagnosis that was noted earlier. For example, some physicians used the “Outpatient Medications” section to ensure that the identified problems were confirmed by the medications taken by the patient and to check for the existence of any prescribed medications being taken to treat problems not previously identified. Other physicians used sections such as “Laboratory”, “Pathology” and “Cardiology” to confirm that the tests verified the earlier findings.

Furthermore, physicians also tended to look in older “Notes” to validate what they had discovered in the more recent ones, particularly when the older notes were of a different type or written by another clinician.

Ascertain Status

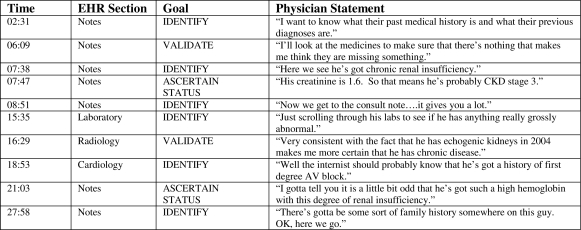

Once a disease or problem was identified and the diagnosis was confirmed, the physicians often looked to “ascertain the status” of the problem by scanning the patient record to discover if the condition was stable, getting better or getting worse. This step tended to occur mostly in the “Notes” and the “Laboratory” sections. Figure 4 presents an excerpt of a think-aloud protocol. We coded the physician’s comments as “Identify”, “Validate” or “Ascertain Status”, and noted the time in the session when each comment was made and the section of the EHR he was navigating at the time.

Figure 4. Excerpts from a session in which the physician verbalized his thoughts aloud while performing the summary-creation tasks. We categorized his comments into one of three phases: Identify, Validate, Ascertain Status. The table also presents elapsed time (the physician had 30 minutes to complete the summary) as well as the section of the EHR the physician was reviewing when he made the corresponding statement.
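A coded protocol of this kind (goal, elapsed time, EHR section, comment) lends itself to a simple record structure. The Python sketch below is one possible representation, not the coding scheme implementation used in the study; the class names, field names and placeholder comments are all hypothetical, and only the three goal labels are taken from the text.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum

class Goal(Enum):
    # The three goals reported in this study.
    IDENTIFY = "Identify"
    VALIDATE = "Validate"
    ASCERTAIN_STATUS = "Ascertain Status"

@dataclass(frozen=True)
class CodedComment:
    elapsed_seconds: int  # time into the session when the comment was made
    section: str          # EHR section being viewed at that time
    goal: Goal            # goal assigned by the coder
    text: str             # verbalized comment

# Placeholder entries for illustration only; these are not study data.
comments = [
    CodedComment(45, "Notes", Goal.IDENTIFY, "placeholder comment 1"),
    CodedComment(200, "Notes", Goal.IDENTIFY, "placeholder comment 2"),
    CodedComment(410, "Outpatient Medications", Goal.VALIDATE, "placeholder comment 3"),
    CodedComment(655, "Laboratory", Goal.ASCERTAIN_STATUS, "placeholder comment 4"),
]

def goals_by_section(comments):
    """Tally how often each goal was coded in each EHR section."""
    return Counter((c.section, c.goal) for c in comments)

tally = goals_by_section(comments)
```

A tally of this form would make it straightforward to check, for example, whether “Ascertain Status” comments cluster in the “Notes” and “Laboratory” sections.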

Discussion

Our first research question was to identify the sources of information that physicians employed when summarizing the patient record. We can use the elapsed time spent in each section of the EHR to infer the overall importance physicians placed on that section to create their summaries (Figure 2). On average, physicians spent more than half their time reviewing clinical notes to glean important information. In addition, they spent considerable amounts of time reviewing and extracting laboratory and radiology findings. This provides a basis for inferring the prioritization scheme.

While the physicians, on average, tended to focus less on the other sections like “Pathology” and “Pulmonary”, these areas could, of course, depending on the patient, contain relevant patient information necessary for a satisfactory summary document. However, the sessions indicate that much of the information in these lesser-visited areas is represented elsewhere in the patient record, particularly in the “Notes” section. This is consistent with other studies that have identified the large amount of narrative redundancy found in many electronic health records.10

The second research question was to characterize physicians’ summarization strategies. Based on our analysis of the recorded sessions, we can infer several potential strategies as to how these physicians decided on what sections to visit and in what order.

One strategy was for the physician to follow the structure and sequential layout of the sections of the EHR. In Figure 3, one physician (third from the left) first clicked on “Notes” and then visited other sections of the EHR in the order that they are presented down the left-hand side of the WebCIS display (e.g., “Laboratory”, followed by “Radiology”, and then by “Pathology”).

A second strategy was to select information based on the temporal structure of the data. In other words, the most recent information was given the highest priority. A third strategy was to adhere to a mental template of the categories of information that should be included in a summary document (e.g., surgical history) and to search through the record to instantiate these categories.

The fourth strategy involved a diagnostic approach in which the physicians discovered findings of importance in the clinical notes and then pursued findings in different sections in order to expand or confirm a diagnostic hypothesis. For example, when clinicians discovered that a patient had chronic renal insufficiency, they subsequently sought out the patient’s creatinine value in the “Laboratory” section. This was a strategy used by the physician represented by the right-most bar in Figure 3. In that figure, we can see how, after spending almost 7 minutes in the “Notes” section, he successively alternated between “Notes” and other sections in order to confirm various hypotheses that were derived from findings in the notes.

Every clinician we observed evidenced at least one of these strategies. Most physicians employed a combination of strategies. For example, one physician started by following the WebCIS interface, and then discovered a finding which compelled her to navigate sections in order to expand on a hypothesis based on this finding. The physician also focused on the temporal order of documents in the patient record.

The third research question was to characterize the cognitive operations that guide the summarization process. In this study, we documented three operations that permeated the think-aloud protocols. Previous studies have recognized that reasoning strategies are a key component in many medical tasks including decision making, clinical problem solving and understanding of medical texts. The identification of these reasoning strategies has proved instrumental to the design of tools that help physicians to perform their work efficiently.11

In our view, clinicians’ summaries provide a window into their mental models of patient problems. The objective of a summary is to provide a succinct representation of the patient’s medical history that informs patient care in substantive ways. The study elucidated the sources of information that clinicians value most in constructing a summary. In addition, we identified clinicians’ summarization strategies and operations that are used to develop elements of a summary. The objective of our research program is to develop an automated summarizer for patient records. Although we would not expect this tool to emulate human performance, we anticipate that this study will inform the development of an automated summarizer in instrumental ways. In addition to providing insight into clinicians’ mental construction of summaries, the study could seed heuristics for source selection, prioritization and sequential strategies.

It is important to note that the physicians in our study were all nephrologists. This limits the generalizability of our results. In addition, the sample size was restricted to 8 clinicians and 4 patient cases. We cannot conclude that the sample of subjects or cases was representative of the larger population of clinicians. This is a formative study and we are continuing to investigate the cognitive processes involved in summarization. Specifically, we continue to pursue questions regarding the expert or “optimal” prioritization of summary information for a given task or problem. It is particularly important to better understand the temporal structure in summary construction and the expected value of information type (e.g., specific lab values) for a given task.

Conclusion

The goal of this study is to provide informative findings, which can aid in the programmatic generation of an efficient clinical summary document. By observing physicians manually create summaries, by analyzing what areas of the EHR they focused on and how they navigated through the longitudinal patient record, and by recognizing that they tended to follow the same basic goal-oriented approach of “Identify”, “Validate” and “Ascertain Status”, we have provided developers of automated summarization tools with a possible framework to consider for the design of their applications.

Acknowledgments

This work is supported by the grant R01 LM010027 (NE) from the National Library of Medicine.

References

- 1. Weir CR, Nebeker JR. Critical issues in an electronic documentation system. AMIA Annu Symp Proc. 2007:786–790.

- 2. Wilcox AB, Jones SS, Dorr DA, Cannon W, Burns L, Radican K, et al. Use and impact of a computer-generated patient summary worksheet for primary care. AMIA Annu Symp Proc. 2005:824–828.

- 3. Bates DW, Gawande AA. Improving safety with information technology. N Engl J Med. 2003;348(25):2526–2534. doi: 10.1056/NEJMsa020847.

- 4. Berner ES, Detmer DE, Simborg D. Will the wave finally break? A brief view of the adoption of electronic medical records in the United States. J Am Med Inform Assoc. 2005;12:3–7. doi: 10.1197/jamia.M1664.

- 5. Linder JA, Schnipper JL, Palchuk MB, Einbinder JS, Li Q, Middleton B. Improving care for acute and chronic problems with smart forms and quality dashboards. AMIA Annu Symp Proc. 2006:1193.

- 6. Alterman R. Understanding and summarisation. Artificial Intelligence Review. 1991;5(4):239–254.

- 7. Wolf CG, Alpert SR, Vergo JG, Kozakov L, Doganata Y. Summarizing technical support documents for search: expert and user studies. IBM Systems Journal. 2004;43(3)

- 8. Patel VL, Kaufman DR. Cognitive science and biomedical informatics. In: Shortliffe EH, Cimino JJ, editors. Biomedical Informatics: Computer Applications in Health Care and Biomedicine. New York: Springer-Verlag; 2006. pp. 133–185.

- 9. Rizzo A, Marchigiani E, Andreadis A. The AVANTI project: prototyping and evaluation with a cognitive walkthrough based on the Norman’s model of action. Proc. Symposium on Designing Interactive Systems. 1997.

- 10. Wrenn JO, Stein DM, Bakken S, Stetson PD. Quantifying clinical narrative redundancy in an electronic health record. J Am Med Inform Assoc. 2010;17(1):49–53. doi: 10.1197/jamia.M3390.

- 11. Arocha JF, Wang D, Patel VL. Identifying reasoning strategies in medical decision making: a methodological guide. J Biomed Inform. 2005;38(2):154–171. doi: 10.1016/j.jbi.2005.02.001.