Abstract

Unless patient assessment instruments are included in accepted vocabulary standards, their completed results cannot be easily shared in health information exchanges. To address this important barrier, we have developed a robust model to represent assessments in LOINC through iterative refinement and collaborative development. To capture the essential aspects of the assessment, the LOINC model represents the hierarchical panel structure, global item attributes, panel-specific item attributes, and structured answer lists. All assessments are available in a uniform format within the freely available LOINC distribution. We have successfully added many assessments to LOINC in this model, including several federally required assessments that contain functioning and disability content. We continue adding to this “master question file” to further enable interoperable exchange, storage, and processing of assessment data.

INTRODUCTION

Despite progress on many fronts, interoperable health information exchange continues to be hampered by the plethora of idiosyncratic conventions for representing clinical concepts in different electronic systems. Many times, the lack of interoperable connections between systems means that valuable results are unavailable to clinicians when they need them.1 LOINC® (Logical Observation Identifiers Names and Codes) is a universal code system for identifying laboratory and other clinical observations with the purpose of facilitating exchange and pooling of results for clinical care, outcomes management, and research.2 LOINC is developed and made available at no cost by the Regenstrief Institute. Some domains of LOINC like laboratory testing2,3 and radiology reports4 are very mature and have demonstrated good coverage of content in live systems.

Patient assessment instruments like survey instruments and questionnaires represent an important and widely used method to measure a broad range of health attributes and aspects of care delivery, from functional status to depression, quality of life, and many other domains. Because of its focus on all clinical observations, LOINC has embraced the representation of assessments since its early development when it included codes for standardized scales such as the Glasgow Coma Score and the Apgar Score. Prior work5,6 has demonstrated the capability of LOINC’s semantic model to represent many assessments with only modest extensions.

Over time, we have both significantly refined LOINC’s model for patient assessments and added much new content. Here we present a summary of this progress. Specifically, the purpose of this paper is to describe LOINC’s model for assessments, the methods and rationale by which this model was developed, the current assessment content, and some of the lessons learned in the process.

BACKGROUND

Fully specified LOINC names are constructed on six main axes (Component, Property, Timing, System, Scale, and Method) containing sufficient information to distinguish among similar observations.2 Different LOINC codes are assigned to observations that measure the same attribute but have different clinical meanings. The LOINC codes, names, and other attributes are distributed in the main LOINC database made available at no cost in regular releases on the LOINC website (http://loinc.org). In addition to the LOINC database, Regenstrief develops and distributes at no cost a software program called RELMA that provides tools for searching the LOINC database, viewing detailed accessory content, and for mapping local terminology to LOINC terms.
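As a minimal sketch of the six-axis naming scheme, the following Python fragment models a name as a record with one field per axis. The class name `LoincName`, the colon-joined rendering, and the axis values are illustrative assumptions rather than an extract from the LOINC database; the point is only that two observations differing in a single axis (here, Method) are distinct terms.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoincName:
    """The six main axes of a fully specified LOINC name."""
    component: str
    prop: str          # Property axis ("property" would shadow a Python builtin)
    timing: str
    system: str
    scale: str
    method: str = ""   # left empty when no Method is specified

    def fully_specified(self) -> str:
        # Join the axes with colons; an empty Method is simply omitted.
        axes = [self.component, self.prop, self.timing, self.system, self.scale]
        if self.method:
            axes.append(self.method)
        return ":".join(axes)


# Illustrative axis values only; consult the LOINC database for actual terms.
measured = LoincName("Body weight", "Mass", "Pt", "^Patient", "Qn", "Measured")
reported = LoincName("Body weight", "Mass", "Pt", "^Patient", "Qn", "Reported")

print(measured.fully_specified())
print(measured == reported)  # the differing Method makes these distinct
```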

LOINC’s aim in including assessment instrument content is to provide a “master question file” and uniform representation of the entire instrument’s essential aspects to support interoperable exchange, storage, and processing of the results. In 2000, Bakken et al5 evaluated the LOINC semantic structure for representing 1,096 items from 35 different assessment instruments. Overall, their analysis supported the adequacy of LOINC’s semantic model for this content with a few minor extensions to the LOINC axes. Example extensions included allowing aggregate units like “family” in the System axis and distinguishing among reported and observed findings in the Method. Through discussion with the Clinical LOINC Committee, items from several of the modeled assessment instruments were included in LOINC version 2.00 (January 2001). That LOINC release also included new fields to store the exact question text and the survey question’s source.

Choi et al7 demonstrated the capability of the LOINC model in pilot work to represent items from the Outcomes and Assessment Information Set (OASIS)-B1, a comprehensive assessment completed for all clients of home health agencies certified to participate in the Medicare or Medicaid programs. White and Hauan6 later proposed an additional set of extensions to the LOINC schema that were derived from the Dialogix tool that implements many types of assessment instruments.

METHODS

The principal methods we used to develop LOINC’s model for representing patient assessments were iterative refinement and collaborative development.

Iterative Refinement

LOINC’s general approach in this domain recognizes that standardized assessments have psychometric properties that are essential to their interpretation. Thus, we include elements such as the actual question text and the allowable answer options as attributes of the LOINC observation code. Consistent with LOINC’s overall development philosophy8, new content is added based on requests from the end-user community and other stakeholders, with modeling guided by Regenstrief and the LOINC Committee. The extensions proposed by White and Hauan6 began a conversation within the Clinical LOINC Committee about the existing model. As we added new assessments to LOINC, we continued uncovering new wrinkles needing debate and discussion.

Collaborative Development

Our early extensions grew out of efforts to fully represent in LOINC the Minimum Data Set (MDS) version 2, which is required by Centers for Medicare and Medicaid Services (CMS) in skilled nursing facility assessments. This project was championed by the Office of the Assistant Secretary for Planning and Evaluation (ASPE) in the U.S. Department of Health and Human Services9,10 and also included collaboration with the Consolidated Health Informatics Functioning and Disability Workgroup as they reviewed interoperability standards for this domain. Simultaneously, we built the full complement of items for OASIS-B1. In 2007, we began working with an extended team of collaborators through RTI International on a LOINC representation of the Continuity Assessment Record and Evaluation (CARE)11 instrument that was being developed by CMS for use in post acute care settings. We welcomed this first opportunity to dialogue and work alongside the instrument developers at such an early stage. With support from ASPE, we later joined many other collaborators in developing HL7’s Draft Standard for Trial Use (DSTU) “CDA Framework for Questionnaire Assessments and CDA Representation of the Minimum Data Set Questionnaire Assessment”12 and built the full representation of MDS version 313 and subsequently OASIS-C14.

RESULTS

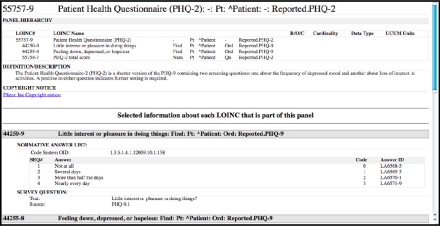

Presently, LOINC includes nearly 3,000 terms from more than 20 assessment instruments, including current and future versions of MDS and OASIS, the developing CARE tool, and many others. Figure 1 is an example of a short assessment from the detailed display of RELMA that illustrates some of the rich assessment content. In the following sections we present this structure and the additional attributes in detail, highlighting the complexity of the information contained in these instruments.

Figure 1.

RELMA details view (partial screenshot) of the PHQ-2 panel term and associated details.

Hierarchical Panel Structure

The LOINC model for patient assessments builds on the basic panel model first used for laboratory batteries. A LOINC panel term is linked to an enumerated set of child elements in a hierarchical structure. Child elements can themselves be panel terms, which enables nesting. Fully specified LOINC names for panel terms typically have the assessment name in the Component and a “-” for the Property and Scale (because the child elements vary in these axes). Many assessment instruments exist in several variants that contain different subsets of the assessment’s items. For example, the OASIS-C instrument has five unique forms that represent subsets for Start of Care, Resumption of Care, Follow-up, Transfer to Facility, and Discharge from Agency. We assign a separate LOINC panel code and build its complete structure for each variant because they are used independently.
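The nesting described above can be sketched as a small tree. In this hypothetical Python fragment, `Term`, `walk`, and `leaf_items` are names introduced for illustration, the codes are placeholders rather than real LOINC codes, and a term is treated as a panel simply when it has children. Two form variants get separate panel terms while reusing the same nested section.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Tuple


@dataclass
class Term:
    code: str   # placeholder identifiers, not real LOINC codes
    name: str
    children: List["Term"] = field(default_factory=list)

    @property
    def is_panel(self) -> bool:
        # Simplification: any term with child elements counts as a panel.
        return bool(self.children)


def walk(term: Term, depth: int = 0) -> Iterator[Tuple[int, Term]]:
    """Yield (depth, term) pairs depth-first, preserving child order."""
    yield depth, term
    for child in term.children:
        yield from walk(child, depth + 1)


def leaf_items(panel: Term) -> List[str]:
    """Codes of the non-panel items reachable from a panel."""
    return [t.code for _, t in walk(panel) if not t.is_panel]


# Items shared by two hypothetical variants of one instrument.
q1 = Term("ITEM-1", "Question 1")
q2 = Term("ITEM-2", "Question 2")
q3 = Term("ITEM-3", "Question 3")
section = Term("PANEL-S", "Nested section", [q1, q2])
start_of_care = Term("PANEL-A", "Start of Care form", [section, q3])
discharge = Term("PANEL-B", "Discharge form", [section])

for depth, term in walk(start_of_care):
    print("  " * depth + f"{term.code} {term.name}")
```

Because each variant is its own panel term, `leaf_items(start_of_care)` and `leaf_items(discharge)` return different subsets even though the underlying item terms are shared.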

Attributes of Individual Assessment Items

In addition to the codes and term names for individual assessment items, the main LOINC table contains other fields for additional attributes. Some of these additional attributes were needed for and are used almost exclusively by assessment LOINCs (e.g. Question Text). Others are used widely by other LOINC concepts. Table 1 lists a subset of attributes that are relevant for assessment items.

Table 1.

LOINC Attributes Relevant for Assessment Items.

| LOINC Attribute | Description |
|---|---|
| Question Text | Exact text of survey question |
| Question Source | Assessment name and question number |
| External Copyright | External copyright notice |
| Definition/Description | Narrative describing this item |
| Example Units | Example units of measure |
| HL7 Field Sub ID | HL7 field where content should be delivered (if Null, presume OBX) |
| HL7 v2 Data Type | HL7 v2 data type |
| HL7 v3 Data Type | HL7 v3 data type |

Many assessment instruments are copyrighted and made available under specific terms-of-use. To capture this information, we added to the LOINC table an External Copyright field that stores the copyright notice (up to 250 characters). When using the RELMA program, codes with terms-of-use beyond LOINC’s license are visually highlighted and have links to view the full conditions. Consistent with LOINC’s overall distribution aims, we have included content that allows free use and distribution for clinical, administrative, and research purposes either with permission or under applicable terms-of-use.

To accommodate the complete set of items in some assessments, we created LOINC codes for concepts like “patient first name” that are an exception to our usual rule about not creating terms for information that has a designated field in an HL7 message (e.g. PID-5.2).8 To clarify that these data have a dedicated place in the message and make it easy to create an appropriate transform, we added an HL7 Field Sub ID attribute to the LOINC table for identifying this content and specifying its designated HL7 field.
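One way such a transform might use the HL7 Field Sub ID attribute is sketched below. The function names `destination` and `route`, the item codes, and the sample values are hypothetical; the only behavior taken from the text is that a populated attribute names the dedicated field (PID-5.2 in the example) and a null attribute means the value travels as an OBX observation.

```python
from typing import Dict, List, Optional, Tuple


def destination(hl7_field_sub_id: Optional[str]) -> str:
    """Return the HL7 field for an item; a null attribute means OBX."""
    return hl7_field_sub_id or "OBX"


def route(
    results: Dict[str, str],
    sub_ids: Dict[str, Optional[str]],
) -> Tuple[Dict[str, str], List[Tuple[str, str]]]:
    """Split results into dedicated-field values and OBX observations."""
    dedicated: Dict[str, str] = {}
    observations: List[Tuple[str, str]] = []
    for code, value in results.items():
        target = destination(sub_ids.get(code))
        if target == "OBX":
            observations.append((code, value))
        else:
            dedicated[target] = value
    return dedicated, observations


# Placeholder item codes; PID-5.2 is the example field named in the text.
sub_ids = {"ITEM-FIRSTNAME": "PID-5.2", "ITEM-WEIGHT": None}
results = {"ITEM-FIRSTNAME": "Ada", "ITEM-WEIGHT": "70"}
dedicated, observations = route(results, sub_ids)
print(dedicated)
print(observations)
```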

Structured Answer Lists

Because the clinical meaning of assessment questions is tightly coupled with the allowable answers, we built a data structure to represent answer lists. At the level of the individual answers, we store a LOINC-generated answer ID, the exact answer string, answer sequence in the list, and the local code (if it exists). We can also store the score value if the answer is used in a scoring scheme and an alternate global identifier (code, name, and code system), e.g. from SNOMED-CT or UMLS, if appropriate.

At the answer list level, we store a flag that identifies the list as “normative” (true for most assessments) or an “example” list. This flag cues users about whether a particular LOINC code has a precisely defined answer list (e.g. from a validated instrument or authoritative source) or an answer list that is meant as a “starter set” or example. For answer lists with enumerated options stored in LOINC and not defined elsewhere, Regenstrief generates an OID to identify that collection of answers. For items whose answers can be drawn from a large terminology such as ICD or CPT, we do not enumerate those lists but rather identify them with a flag and indicate the codesystem and its OID. We have also added a field to store a URL for an external system (e.g. PHIN VADS) where users can find additional information.
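The answer-level and list-level attributes described in this section could be held in a structure like the following. This is a sketch under stated assumptions: the class and field names are invented for illustration, the answer IDs are placeholders, and the four-option frequency list with scores 0 to 3 is a hypothetical example rather than content copied from LOINC.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Answer:
    answer_id: str                    # stands in for the LOINC-generated answer ID
    text: str                         # exact answer string
    sequence: int                     # position of the answer in the list
    local_code: Optional[str] = None  # the instrument's own code, if it exists
    score: Optional[int] = None       # value used by a scoring scheme, if any


@dataclass
class AnswerList:
    normative: bool                   # False would mark an "example" list
    answers: List[Answer]
    oid: Optional[str] = None         # identifier for the collection (unset here)

    def ordered(self) -> List[Answer]:
        return sorted(self.answers, key=lambda a: a.sequence)

    def score_for(self, local_code: str) -> int:
        for answer in self.answers:
            if answer.local_code == local_code and answer.score is not None:
                return answer.score
        raise KeyError(f"no scored answer with local code {local_code!r}")


# Hypothetical frequency list, deliberately stored out of order.
frequency = AnswerList(normative=True, answers=[
    Answer("ANS-2", "Several days", 2, "1", 1),
    Answer("ANS-1", "Not at all", 1, "0", 0),
    Answer("ANS-4", "Nearly every day", 4, "3", 3),
    Answer("ANS-3", "More than half the days", 3, "2", 2),
])

responses: Dict[str, str] = {"ITEM-1": "1", "ITEM-2": "3"}  # item -> local code
total = sum(frequency.score_for(code) for code in responses.values())
print([a.text for a in frequency.ordered()], total)
```

Keeping the score on the answer rather than on the question lets a receiving system compute a total without re-deriving the instrument's scoring rules.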

Panel-specific Attributes

As we added new assessment content, it became clear that we needed to represent some attributes at the level of the instance of the item within the panel. These non-defining attributes could vary for the same LOINC concept used in different assessments or on different forms of the same assessment. For example, “measured body weight” appears as a component of many different assessments. In the context of each instrument, that item could have different local codes, help text, validation rules, or associated branching logic. Thus, all of these attributes must be tied to the instance of the item in a particular panel. Table 2 lists some of these panel-specific attributes.

Table 2.

Panel-specific Attributes for Assessment items.

| LOINC Attribute | Description |
|---|---|
| Display name override | Display name for item on form |
| Cardinality | Allowable repetitions for item |
| Observation ID in form | Local code for item |
| Skip logic | Branching logic |
| Data type in form | Form-specific data type for item |
| Answer sequence override | Override of default answer sequence |
| Consistency checks | Validation rules for item on form |
| Relevance equation | Determines relevance of item on form |
| Coding Instructions | Directions to answer item on form |

Item display names also vary across instruments. Often, the Component of a LOINC term name works as the name to capture the item text. As previously described, for items that are asked as questions we store the exact question text and use that field to capture the item text. However, there are also circumstances where the same clinical observation has different labels across instruments (e.g. “BMI” versus “Body Mass Index”). To capture this variability, we added a “display name override” field associated with the instance of an item in a panel.
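The distinction between a concept and its instance within a panel can be sketched as two record types. In this hypothetical Python fragment the class names, the cardinality notation, the codes, and the fallback order (override, then question text, then Component) are assumptions made for illustration; the text above states only that these three sources of a label exist.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Concept:
    code: str                            # placeholder, not a real LOINC code
    component: str
    question_text: Optional[str] = None  # set for items asked as questions


@dataclass(frozen=True)
class PanelItem:
    """One instance of a concept inside a specific panel."""
    panel: str
    concept: Concept
    display_name_override: Optional[str] = None
    cardinality: str = "0..1"            # hypothetical notation
    observation_id_in_form: Optional[str] = None

    def display_name(self) -> str:
        # Assumed precedence: override, then question text, then Component.
        return (self.display_name_override
                or self.concept.question_text
                or self.concept.component)


bmi = Concept("ITEM-BMI", "Body mass index")
on_form_a = PanelItem("PANEL-A", bmi, display_name_override="BMI",
                      observation_id_in_form="A12")
on_form_b = PanelItem("PANEL-B", bmi)

print(on_form_a.display_name(), "|", on_form_b.display_name())
```

Both instances point at the same `Concept`, so results collected on either form remain comparable even though the labels and local codes differ.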

Assessment Content in the LOINC Distribution

In addition to their inclusion within RELMA, beginning with LOINC version 2.26 (January 2009), an export of the panels and forms content has been available as a separate download in the LOINC release. This spreadsheet contains separate worksheets for the three files defining the full assessment content: one for the hierarchical structure and panel-specific attributes, another for the LOINC concepts and associated attributes, and another defining the answer lists associated with each concept. Table 3 gives the various assessments available in this format in the current LOINC release.

Table 3.

Assessments available in structured export format in LOINC version 2.30.

| Assessment Name |
|---|
| Brief Interview for Mental Status (BIMS) |
| Continuity Assessment Record and Evaluation (CARE) |
| Clinical Care Classification (CCC) Classification |
| Confusion Assessment Method (CAM) |
| Geriatric Depression Scale (GDS) |
| Geriatric Depression Scale (GDS) – short version |
| HIV Signs and Symptoms (SSC) Checklist |
| howRU |
| Living with HIV (LIV-HIV) |
| Mental Residual Functional Capacity (RFC) Assessment Form |
| Minimum Data Set (MDS) version 2 |
| Minimum Data Set (MDS) version 3 |
| Nursing Management Minimum Data Set (NMMDS) |
| Omaha System |
| Outcome and Assessment Information Set (OASIS) – B1 |
| Outcome and Assessment Information Set (OASIS) – C |
| Patient Health Questionnaire (PHQ) – 9 |
| Patient Health Questionnaire (PHQ) – 2 |
| Physical Residual Functional Capacity (RFC) Assessment Form |
| Quality Audit Marker (QAM) |
| US Surgeon General Family Health Portrait |
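To illustrate how the three worksheets of the export fit together, the sketch below joins hypothetical rows from each on the item code and renders a plain-text form. The column names (`parent`, `code`, `sequence`, `display_override`, `question_text`, `text`), the codes, and the row contents are all assumptions for illustration and do not reproduce the layout of the actual LOINC export.

```python
from typing import List

# Hypothetical rows standing in for the three worksheets.
structure = [   # hierarchical structure and panel-specific attributes
    {"parent": "PANEL-X", "code": "ITEM-1", "sequence": 1, "display_override": None},
    {"parent": "PANEL-X", "code": "ITEM-2", "sequence": 2, "display_override": "Short label"},
]
concepts = {    # LOINC concepts and their attributes, keyed by code
    "ITEM-1": {"question_text": "First question?"},
    "ITEM-2": {"question_text": "Second question?"},
}
answers = [     # answer lists associated with each concept
    {"code": "ITEM-1", "sequence": 2, "text": "No"},
    {"code": "ITEM-1", "sequence": 1, "text": "Yes"},
]


def render_form(panel: str) -> List[str]:
    """Build plain-text form lines by joining the three tables on item code."""
    lines: List[str] = []
    rows = sorted((r for r in structure if r["parent"] == panel),
                  key=lambda r: r["sequence"])
    for row in rows:
        # Prefer the panel-specific label, else the concept's question text.
        label = row["display_override"] or concepts[row["code"]]["question_text"]
        lines.append(f'{row["sequence"]}. {label}')
        options = sorted((a for a in answers if a["code"] == row["code"]),
                         key=lambda a: a["sequence"])
        lines.extend(f'   [ ] {a["text"]}' for a in options)
    return lines


print("\n".join(render_form("PANEL-X")))
```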

DISCUSSION

We have developed a robust model in LOINC for representing a wide variety of patient assessments. By refining this model and continuing to expand the assessment content included in LOINC we are building a freely available “master question file” and uniform representation of the essential aspects of assessment instruments. Such standardization is an enabling step towards interoperable exchange, storage, and processing of assessment data.

LOINC’s model for representing assessment content has been endorsed by the National Committee on Vital and Health Statistics based on the recommendations from the Consolidated Health Informatics workgroup on Functioning and Disability.15 These recommendations adopt as a standard the LOINC representation of federally-required assessment (1) questions and answers, and (2) assessment forms that include functioning and disability content. The LOINC model has been incorporated into the HL7 DSTU “CDA Framework for Questionnaire Assessments and CDA Representation of the Minimum Data Set Questionnaire Assessment”. Informed by this prior work, the Health Information Technology Standards Panel incorporated the HL7 DSTU approach into the C83 CDA Content Modules Component.16

We believe that LOINC’s representation of patient assessments has several advantages. Collecting the details about individual observations as well as panels and forms into a single database makes it easy for system implementers to access the content in a common format. The LOINC representation contains enough information to automatically generate a data collection form. Indeed, the Personal Health Record being developed at the National Library of Medicine17 has capabilities to read the LOINC assessments definition and dynamically generate data collection forms. Many promising opportunities also exist for adding new assessment content, and Regenstrief is already engaging in conversations about including widely used mental health instruments, public health case report forms, the PhenX protocols for clinical research, and PROMIS item bank for use in computerized adaptive testing.

Lessons and Recommendations

Variation abounds

Despite the growth of content in LOINC, reuse of items across instruments (and even between new versions of the same instrument) is less than we had expected. For example, although many of the items in MDS version 3 were similar to those in CARE, the look-back reference period is different (seven days versus two days). Although there may be valid reasons for the change in reference period, it will interfere with the comparability of the data collected with these instruments.

Furthermore, we noticed differences that might have been avoided. For example, both CARE and MDS version 3 include questions from the PHQ18, which is a validated and copyrighted instrument, but both differ from the original PHQ by breaking each question into two responses and differ from each other in their answer options. Similarly, the MDS version 2, MDS version 3, OASIS-B1, and CARE instruments all ask providers to record the number of pressure ulcers at a given stage, but each does it differently. OASIS-B1 limits the scale to “4” which means “4 or more”; “9” on MDS version 2 means “9 or more” whereas “9” on CARE means “unknown”.
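The practical consequence of the pressure ulcer example is that a receiving system cannot interpret a raw code without knowing its source instrument. The following sketch encodes only the three conventions stated above; the names `Count`, `TOP_CODED`, `UNKNOWN`, and `interpret` are hypothetical, and treating every other code as an exact count is an assumption rather than a documented rule of any instrument.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Count:
    value: int
    at_least: bool   # True when the code means "value or more"


# Only the conventions stated in the text are encoded here.
TOP_CODED = {"OASIS-B1": 4, "MDS version 2": 9}   # code meaning "N or more"
UNKNOWN = {"CARE": 9}                              # code meaning "unknown"


def interpret(instrument: str, code: int) -> Optional[Count]:
    """Translate a raw pressure ulcer count code; None means unknown."""
    if instrument not in TOP_CODED and instrument not in UNKNOWN:
        raise ValueError(f"no coding rule recorded for {instrument!r}")
    if UNKNOWN.get(instrument) == code:
        return None
    cap = TOP_CODED.get(instrument)
    if cap is not None and code >= cap:
        return Count(cap, at_least=True)
    # Assumption: any other code is an exact count.
    return Count(code, at_least=False)


for source in ("MDS version 2", "CARE"):
    print(source, interpret(source, 9))
```

The same raw value of 9 yields a lower bound for one instrument and a missing value for the other, which is why pooled analyses need the instrument-specific answer list alongside the code.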

We urge clinical researchers and other potential assessment instrument developers to look closely at existing instruments. In some cases there may be good justification for making new instruments or tailoring existing ones. But before inventing yet another variant, the potential benefits should be weighed against the loss of data comparability. The greater the amount of existing data and its generalizability, the more carefully we should approach a modification.

Starting from a uniform data model may bring clarity

Our starting point with most assessment instruments was typically a paper form, though some had their own unique software and data structures. In our journey to represent these various assessments in a uniform data model, we were forced to reconcile many potential discrepancies: how were “unknown” or “undetermined” answers stored, for items with an answer of “other specified ___” how is the “other” value stored, which text was really the item and which was supplementary, are units of measure implied, etc. We also noted big differences in question styles. Some instruments required answers of yes, no, or unknown to a large list of potential diseases whereas others would store only the active diseases. We encourage researchers to consider starting with the LOINC model as a template that may help elucidate these hidden challenges.

Intellectual property issues present large challenges

In a resource-consuming step, Regenstrief must negotiate separate agreements with each copyright holder prior to making the content available in LOINC (even if it is licensed for other purposes). Most owners want attribution and protection against changing the items, which are reasonable. But some owners restrict use in difficult ways and/or require royalties for each use. These strong demands present large barriers to widespread standards-based adoption. We implore the agencies that fund development of assessments to require that the developers avoid such restrictive licenses.

CONCLUSION

Through iterative refinement and collaborative development we have built a successful model for representing assessment content in LOINC. We will continue adding to this freely available “master question file” to support interoperable exchange, storage, and processing of assessment data.

Acknowledgments

The authors thank the Clinical LOINC committee, Kathy Mercer, Jo Anna Hernandez, Michelle Dougherty, Barbara Gage, Jennie Harvell, and Tom White for their valuable contributions. This work was performed at the Regenstrief Institute, Indianapolis, IN, and was supported in part by contracts HHSN2762008000006C from the National Library of Medicine, FORE-ASPE-2007-5 from the AHIMA Foundation, and 0-312-0209853 from RTI International.

REFERENCES

- 1. Smith PC, Araya-Guerra R, Bublitz C, et al. Missing clinical information during primary care visits. JAMA. 2005;293(5):565–571. doi: 10.1001/jama.293.5.565.

- 2. McDonald CJ, Huff SM, Suico JG, et al. LOINC, a universal standard for identifying laboratory observations: a 5-year update. Clin Chem. 2003;49(4):624–633. doi: 10.1373/49.4.624.

- 3. Vreeman DJ, Finnell JT, Overhage JM. A rationale for parsimonious laboratory term mapping by frequency. AMIA Annu Symp Proc. 2007;11:771–5.

- 4. Vreeman DJ, McDonald CJ. Automated mapping of local radiology terms to LOINC. AMIA Annu Symp Proc. 2005:769–773.

- 5. Bakken S, Cimino JJ, Haskell R, et al. Evaluation of the clinical LOINC semantic structure as a terminology model for standardized assessment measures. J Am Med Inform Assoc. 2000;7(6):529–38. doi: 10.1136/jamia.2000.0070529.

- 6. White TM, Hauan MJ. Extending the LOINC conceptual schema to support standardized assessment instruments. J Am Med Inform Assoc. 2002;9(6):586–99. doi: 10.1197/jamia.M1033.

- 7. Choi J, Jenkins ML, Cimino JJ, et al. Toward semantic interoperability in home health care: formally representing OASIS items for integration into a concept-oriented terminology. J Am Med Inform Assoc. 2005;12(4):410–7. doi: 10.1197/jamia.M1786.

- 8. Huff SM, Rocha RA, McDonald CJ, et al. Development of the Logical Observation Identifier Names and Codes vocabulary. J Am Med Inform Assoc. 1998;5(3):276–92. doi: 10.1136/jamia.1998.0050276.

- 9. Carter J, Evans J, Tuttle M, et al. Making the Minimum Data Set compliant with health information technology standards. Available: http://aspe.hhs.gov/daltcp/reports/2006/MDS-HIT.htm.

- 10. Tuttle MS, Weida T, White T, Harvell J. Standardizing the MDS with LOINC® and vocabulary matches. Available: http://aspe.hhs.gov/daltcp/reports/2007/MDS-LOINC.htm.

- 11. RTI International. Post Acute Care Payment Reform Demonstration. Available: http://www.pacdemo.rti.org.

- 12. HL7 Implementation Guide for CDA Release 2: CDA Framework for Questionnaire Assessments Release 1. Available: http://tinyurl.com/HL7QuestionnaireAssessmentsR1.

- 13. Centers for Medicare & Medicaid Services. MDS 3.0 for Nursing Homes and Swing Bed Providers. Available: https://www.cms.gov/NursingHomeQualityInits.

- 14. Centers for Medicare & Medicaid Services. OASIS Overview. Available: https://www.cms.gov/OASIS.

- 15. National Committee on Vital and Health Statistics. Consolidated Health Informatics standards adoption recommendation: Functioning and Disability. Available: http://www.ncvhs.hhs.gov/061128lt.pdf.

- 16. Health Information Technology Standards Panel. C83 – CDA Content Modules Component v2.0.1. Available: http://www.hitsp.org/ConstructSet_Details.aspx?&PrefixAlpha=4&PrefixNumeric=83.

- 17. National Library of Medicine. The NLM Personal Health Record. Available: http://tinyurl.com/NLMPHROverview.

- 18. Kroenke K, Spitzer RL, Williams JB. The PHQ-9: validity of a brief depression severity measure. J Gen Intern Med. 2001 Sep;16(9):606–13. doi: 10.1046/j.1525-1497.2001.016009606.x.