Abstract

As providers and systems move towards meaningful use of electronic health records, the once distant vision of data reuse for automated quality reporting may soon become a reality. To facilitate consistent and reliable reporting and benchmarking beyond the local level, standardization of both electronic health record content and quality measures is needed at the concept level. This degree of standardization requires local and national advancement and coordination. The purpose of this paper is to review national efforts that can be leveraged to guide local information modeling and terminology work to support automated quality reporting. We also report on efforts at Partners HealthCare (PHS) to map electronic health record content to inpatient quality metrics and terminology standards, and to align local efforts with national initiatives. We found that forty-one percent (41%) of the elements needed to populate the inpatient quality measures are represented within the draft documentation content, and an additional 29.5% are represented within other PHS electronic applications. Recommendations are made to support data reuse based on established national standards and identified gaps. Our work indicates that value exists in individual healthcare systems engaging in local standardization work by adopting established methods and standards where they exist. A process is needed, however, to ensure that local work is shared and available to inform national standards.

Keywords: quality, electronic health record, meaningful use, terminology standards

Background and Significance

The electronic health record (EHR) has been recognized as a key factor in achieving quality care since the Institute of Medicine (IOM) published its early reports on patient safety and quality.1–2 In preparation for implementing electronic clinical documentation across Brigham and Women’s Hospital (BWH) and Massachusetts General Hospital (MGH), a draft set of interdisciplinary clinical content was identified, modeled (with reference to terminology and quality standards), standardized and vetted over six months in 2009. More than 200 interdisciplinary stakeholders from departments at both hospitals took part in this effort. Although the sessions focused on vetting the content needed to capture primary data for clinical care, stakeholders from quality departments and PHS Information Systems were included to provide feedback on secondary-use requirements such as quality reporting and decision support. However, clinical stakeholders had the final say on whether to add content that served only quality reporting and had no perceived value for clinical care. The standardized content was then mapped to inpatient quality metrics and terminology standards, and the gap between the content required by existing inpatient quality measures and the draft documentation content was analyzed. In addition, we identified national efforts that might be leveraged to guide local information modeling and terminology work in support of future automated quality reporting at PHS. The purpose of this paper is to report on these efforts and on the strategies used to align local PHS efforts with national initiatives and to resolve existing gaps.

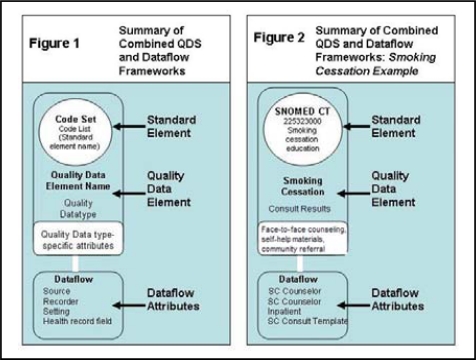

On the national level, several efforts are under way to support accurate, consistent and automated quality reporting. In 2007, the National Quality Forum (NQF) appointed a Health Information Technology Expert Panel (HITEP) to establish the building blocks needed to move the United States healthcare system away from manual data abstraction and performance measurement approaches and towards automation. HITEP articulated early requirements for an automated process, including the need to standardize quality measures down to the concept level so that performance can be measured and compared beyond local benchmarks. HITEP established a framework for standardization3 by identifying a set of 11 common data categories and 38 data types that relate to the highest priority quality measures (based on the Institute of Medicine’s priority conditions). In addition, HITEP proposed a Quality Data Set (QDS) measure development framework, defined as “a minimum set of data elements or types of data elements that can be used as the basis for developing harmonized and machine-computable quality measures”.4 The QDS specifies two levels of information (see Figures 1–2):

- Standard element: consists of the data element name and the recommended code set (e.g., Smoking cessation education/SNOMED CT).

- Quality data element: consists of the standard element (above) plus a quality data type (derived from the set of 38 defined by HITEP).
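The two QDS levels can be pictured as nested records. The Python sketch below is illustrative only: the class names, the small subset of HITEP data types it accepts, and the pairing of smoking cessation education with the "Communication: provider-patient" data type are assumptions made for the example, not part of the QDS specification.

```python
from dataclasses import dataclass

# Hypothetical subset of the 38 HITEP data types, written as "Category: type".
HITEP_DATA_TYPES = {
    "Communication: provider-patient",
    "History: behavioral (smoking)",
    "Laboratory: result",
    "Medication: inpatient administered",
}


@dataclass(frozen=True)
class StandardElement:
    """QDS level 1: a data element name plus its recommended code set."""
    name: str
    code_set: str


@dataclass(frozen=True)
class QualityDataElement:
    """QDS level 2: a standard element qualified by a HITEP quality data type."""
    element: StandardElement
    data_type: str

    def __post_init__(self):
        # Reject data types outside the (partial) HITEP list above.
        if self.data_type not in HITEP_DATA_TYPES:
            raise ValueError(f"unknown HITEP data type: {self.data_type}")

    def label(self) -> str:
        return f"{self.element.name} [{self.element.code_set}] as {self.data_type}"


smoking_ed = StandardElement("Smoking cessation education", "SNOMED CT")
qde = QualityDataElement(smoking_ed, "Communication: provider-patient")
print(qde.label())
# Smoking cessation education [SNOMED CT] as Communication: provider-patient
```

In this sketch, keeping the standard element separate from the data type means one coded concept can be reused under several data types without duplicating its name or code set.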

Figures 1–2:

Summary of Combined QDS and Dataflow Frameworks. Adapted from NQF Issue Brief No. 17, October 2009, pg. 3.

The lower portion of Figures 1–2 shows the QDS-Data Flow Model Framework (including attributes), which was included to ensure that the correct data are extracted from the EHR for quality measurement.4 In Figure 2, the framework is applied to capture the data elements and attributes of the Smoking Cessation Counseling quality measure. The combined QDS-Data Flow Framework was designed to represent the data and information (both clinical and administrative) needed to calculate performance on quality measures. Incorporating this framework into the development of future measures may be a prerequisite for NQF endorsement.

In 2008, the Healthcare Information Technology Standards Panel (HITSP) produced a provisional data dictionary5 based on the requirements of the HITEP QDS-Data Flow Model Framework and the list of high priority quality measure concepts. The data dictionary constrains the vocabularies used to represent quality concepts to those that are harmonized with HITSP interoperability specifications. HITSP recommendations for terminology standards have now been released for implementation. As part of a quality measure retooling effort, mapping to specific concepts within the recommended terminologies has begun for 16 Centers for Medicare & Medicaid Services (CMS) and Joint Commission inpatient measures in the Stroke, Venous Thromboembolism and Emergency Department domains. The resulting HITSP Quality Interoperability Specification report6 provides a detailed use case of how existing standards are used to specify measures electronically, and may serve as an exemplar for retooling quality measures at the local level in ways that are consistent with national efforts.

Table 1 lists, by HITEP data category and type, the vocabulary standards7 adopted to support “meaningful use” (where such standards exist). Full automation of quality reporting requires that complete data elements (e.g., the entire question-answer pair) be represented by standardized terminologies and codes within an EHR system, and that the same standards be used both locally and nationally. Published national work to date relates largely to data values and value sets (e.g., the set of allowable answers in a question-answer pair).
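The difference between coding only the answers (the value set) and coding the whole question-answer pair can be made concrete with a small check. This Python sketch is an illustration, not a HITEP or HITSP artifact: the class and function names and all codes (`Q-001`, `A1`, and so on) are hypothetical placeholders. It lists what still blocks automated use of a locally captured data element.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class LocalDataElement:
    """A question-answer pair as captured in a local EHR template."""
    question: str
    question_code: Optional[str]   # standard code for the question, if any
    answer_codes: FrozenSet[str]   # codes the local template allows as answers


def automation_gaps(element: LocalDataElement,
                    national_value_set: FrozenSet[str]) -> List[str]:
    """List what prevents this element from being reported automatically."""
    gaps = []
    if element.question_code is None:
        gaps.append("question not coded")
    if not element.answer_codes:
        gaps.append("answers not coded")
    # Local answers must come from the same value set used nationally.
    extra = element.answer_codes - national_value_set
    if extra:
        gaps.append(f"answers outside national value set: {sorted(extra)}")
    return gaps


national = frozenset({"A1", "A2"})
partial = LocalDataElement("Smoking status", None, frozenset({"A1", "A3"}))
complete = LocalDataElement("Smoking status", "Q-001", frozenset({"A1", "A2"}))
print(automation_gaps(partial, national))
# ['question not coded', "answers outside national value set: ['A3']"]
print(automation_gaps(complete, national))
# []
```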

Table 1:

Categories and Types of Data Elements Common to High Priority Measures and Adopted Terminology Standards

| Data Category | Data Types | Adopted Vocabulary Standards to Support Meaningful Use (Stage 1/Stage 2) |
|---|---|---|
| Adverse Drug Event | Allergy; Intolerance | NA/Unique Ingredient Identifier (UNII) |
| Communication | Provider-provider; Provider-patient | |
| Diagnostic Study | Order; Result | ICD-9 CM, CPT4/ICD-10 CM/CPT4 |
| Diagnosis | Outpatient (billing); Outpatient (problem list); Inpatient | ICD-9 CM, SNOMED CT/ICD-10 CM; SNOMED CT |
| History | Behavioral (smoking); Birth; Care classification; Death; Enrollment trial; Ethnicity/race; Language; Payment source; Primary care provider; Sex; Symptoms | |
| Laboratory | Order; Result | LOINC |
| Location | Source/current/target; Transfer type | |
| Medication | Discontinue order; Inpatient administered; Inpatient ordered; Outpatient duration; Outpatient order; Outpatient filled | RxNorm |
| Opt out | Other reason | |
| Physical Exam | Vitals | |
| Procedure | Inpatient end; Inpatient start; Order; Outpatient; Past history; Consult results | ICD-9 CM, CPT4/ICD-10 CM/CPT4 |

Source: Corrigan, J. (2009) HIT Standards Committee Quality Workgroup (http://healthit.hhs.gov/portal/server.pt/gateway/PTARGS_0_10741_873897_0_0_18/HIT_Standards_QualityWG_Summary_508.ppt#261,3,HITEP 2008 -- Quality Data Set); Federal Register, Vol 75 (8), January 13, 2010, p. 2033; HITSP: http://hitsp.org/ConstructSet_Details.aspx?&PrefixAlpha=1&PrefixNumeric=6

HITEP also developed a set of criteria to assess the caliber of each data type as it currently exists in EHRs, and calculated an overall score for each priority measure to determine its readiness for EHR implementation.7 The ultimate goal of these efforts is to create a national repository of quality data requirements (such as values of coded/enumerated data types, data elements and coding systems) and data definitions7 to support automated computation of quality measures (likely a focus of Meaningful Use Stage 2). In the short term, the framework can guide local efforts so that the methods used to integrate quality measures into local systems are consistent with national goals and with the likely direction of future national policy.

Project Sites

Designing and implementing systems capable of reusing data for quality reporting and decision support is a high priority for Partners HealthCare System (PHS). Although provider order entry has been in place at the PHS academic medical centers since the 1990s, and barcode-based electronic medication administration records (eMAR) since 2005, inpatient clinical documentation is still recorded on paper.

Research Methods

This paper addresses the following questions:

1. What percentage of the data required to populate inpatient quality measures exists within the draft documentation content?

2. What percentage of the data required to populate inpatient quality measures exists in a structured format within other PHS systems?

3. What percentage of data elements’ core concepts (i.e., the main topic of the question) required to populate inpatient quality measures can be represented by standardized terminologies (SNOMED CT, LOINC, International Classification of Nursing Practice (ICNP) and Clinical Care Classification System (CCC))?

4. What percentage of the data elements represented by standardized terminologies have defined data values in the HITSP Quality Interoperability Specification report?

5. What strategies can be used to ensure accurate and consistent capture of clinical information in PHS systems to support future automation of quality reporting?

Methods for questions 1–2

A list of inpatient quality metrics measured at both sites was obtained from quality leaders at BWH and MGH. Each quality metric was cross-referenced with published measure requirements to ensure that all required data elements were included, and the data elements were then entered into a spreadsheet. A lexical matching algorithm was used to identify potential matches between the draft documentation content and each quality data element.8 For each data element, the algorithm produced a list of candidate matches, each with a similarity rating ranging from 0 to 1.
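Reference 8 describes matching based on digrams (adjacent character pairs). The Python sketch below shows one common way to turn digram overlap into a 0-to-1 similarity rating, the Dice coefficient, and to rank candidate matches. The normalization step, the choice of Dice, the 0.5 threshold and the `top_k` cutoff are assumptions for illustration; the scoring in the cited method may differ.

```python
import re
from collections import Counter
from typing import List, Tuple


def digrams(text: str) -> Counter:
    """Multiset of adjacent character pairs in a normalized string."""
    # Lowercase and collapse runs of punctuation/whitespace to a single space.
    norm = re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()
    return Counter(norm[i:i + 2] for i in range(len(norm) - 1))


def similarity(a: str, b: str) -> float:
    """Dice coefficient over digram multisets: 1.0 identical, 0.0 nothing shared."""
    da, db = digrams(a), digrams(b)
    total = sum(da.values()) + sum(db.values())
    if total == 0:
        return 0.0
    shared = sum((da & db).values())  # & keeps the minimum count of each digram
    return 2 * shared / total


def candidate_matches(element: str, content_terms: List[str],
                      threshold: float = 0.5, top_k: int = 5) -> List[Tuple[str, float]]:
    """Documentation terms ranked by similarity to one quality data element."""
    scored = [(term, round(similarity(element, term), 3)) for term in content_terms]
    kept = [pair for pair in scored if pair[1] >= threshold]
    return sorted(kept, key=lambda pair: pair[1], reverse=True)[:top_k]


content = ["Smoking status", "Smoking cessation counseling given", "Pain score"]
print(candidate_matches("Smoking cessation counseling", content))
# [('Smoking cessation counseling given', 0.9)]
```

As in the study, a score like this only shortlists candidates; each one still needs manual review, since lexically similar terms (e.g., "Smoking status", which scores 0.4 here) can differ in meaning.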

Each candidate match was then evaluated manually, and complete matches were entered into the spreadsheet. All data elements were classified according to the Categories and Types of Data Elements identified by the NQF’s HITEP. Quality data elements with no match in the documentation content were manually evaluated for potential matches with content in other PHS systems.

Methods for questions 3–4

The content of four reference terminologies (SNOMED CT, LOINC, ICNP and CCC) was obtained from the respective standards organizations and combined into a single file. The same lexical matching algorithm described above was used to identify potential matches from the standard terminologies for each quality data element. An important caveat is that, with the exception of LOINC, which defines coded/enumerated data elements (i.e., the question), the other three reference terminologies include only concepts. Therefore, when matched, SNOMED CT, ICNP and CCC correspond to the main ‘topic’ (or concept) of the data element and not to the quality data element itself. As before, a list of candidate matches with similarity ratings from 0 to 1 was generated for each data element, each match was evaluated manually, and complete matches were entered into a spreadsheet. In addition, HITEP-defined data values were mapped manually to the quality data elements.
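Because a SNOMED CT, ICNP or CCC match identifies only the topic of a data element, it helps to keep each term’s source terminology attached after the four files are combined. The Python sketch below illustrates this bookkeeping under stated assumptions: it swaps in the standard library’s `difflib.SequenceMatcher.ratio()` (also a 0-to-1 score) as a stand-in for the digram-based matcher, and all codes, terms and the 0.6 threshold are hypothetical.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class TermEntry:
    terminology: str  # "SNOMED CT", "LOINC", "ICNP" or "CCC"
    code: str         # the codes below are hypothetical placeholders
    term: str


# Only LOINC codes whole data elements (the question); the others code concepts.
ELEMENT_LEVEL = {"LOINC"}


def combine(*sources: List[TermEntry]) -> List[TermEntry]:
    """Merge per-terminology lists into one list, keeping each entry's origin."""
    return [entry for source in sources for entry in source]


def best_match(element: str, combined: List[TermEntry],
               threshold: float = 0.6) -> Optional[Tuple[TermEntry, float, str]]:
    """Closest entry, its 0-1 score and what the match represents, or None."""
    def score(entry: TermEntry) -> float:
        return SequenceMatcher(None, element.lower(), entry.term.lower()).ratio()

    best = max(combined, key=score)
    s = score(best)
    if s < threshold:
        return None
    level = "data element" if best.terminology in ELEMENT_LEVEL else "concept only"
    return best, round(s, 3), level


combined = combine(
    [TermEntry("SNOMED CT", "S-1", "Pressure ulcer")],
    [TermEntry("LOINC", "L-1", "Pressure ulcer risk assessment")],
    [TermEntry("ICNP", "I-1", "Risk for falls")],
    [TermEntry("CCC", "C-1", "Fall risk")],
)
print(best_match("Pressure ulcer risk assessment", combined))
print(best_match("Pressure ulcer", combined))
```

The `level` field records the caveat above: a "concept only" match tells the reviewer that a coded question still has to be found or defined elsewhere.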

Results

A total of 19 inpatient quality measures were submitted by the BWH and MGH Quality departments. Based on these measures, 278 discrete data elements are required to populate the outcome, inclusion and exclusion criteria of the measure numerators and denominators. Forty-one percent (41%) of the elements needed to populate the inpatient quality measures are represented within the draft documentation content (assessments, structured notes and problem lists), and an additional 29.5% are represented within other PHS electronic applications (e.g., Electronic Medication Administration applications (eMAR/eMAPPS), Partners Enterprise Allergy Repository (PEAR), Provider Order Entry (OE/POE), Clinical Data Repository (CDR), Discharge Modules and the Incident Reporting System). About 60% of the data elements required to populate quality measures were successfully mapped to one or more standardized terminologies. Of the data elements mapped to standardized terminologies, 28.7% have value sets defined in the HITSP Quality Interoperability Specification report.6 Data elements were categorized using the HITEP classification system (see Table 2). Four items (1.4%) could not be classified using these categories, and six items (2.2%) were classified at the data category level but could not be classified using the HITEP data types.
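Converting the reported percentages back into approximate element counts makes the size of each gap easier to see. The Python arithmetic below is a back-calculation, not data from the study: counts are rounded to whole elements, and because “about 60%” is itself approximate, the last two figures are rough.

```python
TOTAL = 278  # discrete data elements required by the 19 measures


def approx_count(percent: float, base: int) -> int:
    """Back-calculate an approximate element count from a reported percentage."""
    return round(base * percent / 100)


in_documentation = approx_count(41, TOTAL)       # draft documentation content
in_other_systems = approx_count(29.5, TOTAL)     # eMAR, PEAR, POE, CDR, etc.
not_structured = TOTAL - in_documentation - in_other_systems
mapped_to_terminology = approx_count(60, TOTAL)  # "about 60%"
with_hitsp_value_sets = approx_count(28.7, mapped_to_terminology)

print(in_documentation, in_other_systems, not_structured)             # 114 82 82
print(mapped_to_terminology, with_hitsp_value_sets)                   # 167 48
print(f"{100 * (in_documentation + in_other_systems) / TOTAL:.1f}%")  # 70.5%
```

Under these assumptions, roughly 82 of the 278 elements were not found in any PHS electronic source reviewed, and only about 48 elements have both a terminology mapping and a HITSP-defined value set.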

Table 2:

Percentages of PHS quality data elements classified by HITEP Data Category and HITEP Data Type.

| HITEP Data Category | % of Elements | HITEP Data Type | % of Elements |
|---|---|---|---|
| Adverse Drug Event | 0.7% | Allergy | 0.7% |
| | | Intolerance | 0% |
| Communication | 22% | Provider-provider | 5.8% |
| | | Provider-patient | 16.2% |
| Diagnostic Study | 4.0% | Order | 0% |
| | | Result | 4.0% |
| Diagnosis | 2.5% | Outpatient (billing) | 0% |
| | | Outpatient (problem list) | 0% |
| | | Inpatient | 2.5% |
| History | 4.3% | Behavioral (smoking) | 1.1% |
| | | Birth | 0.4% |
| | | Care classification | 0.4% |
| | | Death | 0.4% |
| | | Enrollment trial | 0.4% |
| | | Ethnicity/race | 0.72% |
| | | Language | 0.4% |
| | | Payment source | 0% |
| | | Primary care provider | 0% |
| | | Sex | 0.4% |
| | | Symptoms | 0% |
| | | Unable to classify | 0.4% |
| Laboratory | 6.5% | Order | 0% |
| | | Result | 5.4% |
| | | Unable to classify | 1.1% |
| Location | 4.0% | Source/current/target | 0.7% |
| | | Transfer type | 3.2% |
| Medication | 18.7% | Discontinue order | 0.4% |
| | | Inpatient administered | 9.4% |
| | | Inpatient ordered | 3.2% |
| | | Outpatient duration | 0.7% |
| | | Outpatient order | 4.3% |
| | | Outpatient filled | 0% |
| | | Will not fit in above categories | 0.7% |
| Opt out | 0% | Other reason | 0% |
| Physical Exam | 3.0% | Vitals | 3.0% |
| Procedure | 32.8% | Inpatient end | 2.5% |
| | | Inpatient start | 2.2% |
| | | Order | 0.4% |
| | | Outpatient | 0% |
| | | Past history | 0% |
| | | Consult results | 27.7% |
| Unable to classify | 1.4% | | 1.4% |

The majority of data elements (80%) fell under the following HITEP categories and subcategories:

- Procedure: 32.8%
  - Consult results: 27.7%
- Communication: 22%
  - Provider-provider: 16.2%
  - Provider-patient: 5.8%
- Medication: 18.7%
  - Inpatient administered: 9.4%
- Laboratory: 6.5%
  - Results: 5.4%

Discussion

A major goal of health information technology is data reuse for performance measurement and quality reporting. The standardization work completed at the national level by the NQF and other groups forms the groundwork for automated quality performance measurement and reporting. The QDS-Data Flow Model Framework lays the foundation for future measures that are machine computable. However, this work is still relatively untested and needs to be adopted and trialed in real systems. PHS and other healthcare systems can leverage this foundational work by adopting the HITSP-recommended vocabulary standards, including LOINC for results and assessments and SNOMED CT to represent detailed clinical content.

There is also value in healthcare systems providing recommendations to standards organizations regarding detailed quality concepts that either cannot be represented with existing standards or are represented by terminology standards that are not fully harmonized with SNOMED CT (e.g., ICNP). In addition, the QDS-Data Flow Model Framework and its attributes can be leveraged to inform the design of the data and information models for clinical documentation and other electronic systems.

While 41% of the elements needed to populate the inpatient quality measures were successfully mapped to draft documentation content, the actual percentage that can be used for automated reporting will likely be lower, particularly before the documentation system is fully rolled out. However, understanding the subset of documentation content that readily links to organizational quality reporting efforts is an essential first step to building documentation templates consistent with these efforts and for closing gaps in the draft content. Inclusion of the quality parameters in the documentation content cross-walk will ensure that the parameters needed to populate quality measures are given full consideration when building documentation templates.

The documentation system at PHS will be rolled out in phases over several years. Initially, flowsheets and a limited number of progress notes will go live on acceptance testing units. We found that approximately 20% of the data elements needed to populate the quality measures are contained on the flowsheets. About one-half (52%) of these data elements relate to nursing-sensitive measures (i.e., fall prevention, pressure ulcer risk assessment and restraint care). The other half relate to basic vital signs; line, catheter and ventilator days; and venous thromboembolism prophylaxis.
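In absolute terms, and assuming the 278-element total from the Results applies here, the first go-live phase would cover only a few dozen elements. The back-of-the-envelope Python arithmetic below rounds to whole elements; the counts are approximations derived from the reported percentages, not figures from the study.

```python
TOTAL_ELEMENTS = 278  # from the Results section

on_flowsheets = round(0.20 * TOTAL_ELEMENTS)         # "approximately 20%"
nursing_sensitive = round(0.52 * on_flowsheets)      # "about one-half (52%)"
other_flowsheet = on_flowsheets - nursing_sensitive  # vitals, device days, VTE prophylaxis

print(on_flowsheets, nursing_sensitive, other_flowsheet)  # 56 29 27
print(f"{100 * nursing_sensitive / TOTAL_ELEMENTS:.1f}% of all elements")  # 10.4% of all elements
```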

Progress notes hold potential for populating a large percentage of data elements (many of which are also included on flowsheets). Including a structured physician history and physical in the early phases of the documentation project would support routine capture of these data elements. Adopting key recommendations from the HITSP Quality Interoperability Specification may be useful in this regard. For example, HITSP recommends using a standard specialty evaluation note or document (e.g., a Clinical Document Architecture (CDA) template) to enable consistent determination of completed consults.6 Progress notes are a potential source of quality data elements related to consults, safety pause, procedures and sedation. However, the phased rollout plan for documentation may limit the usefulness of electronic forms for quality reporting.

Including quality-related data elements on documentation templates is not, by itself, sufficient to ensure data reuse. Reliable data for reporting also depend on clinicians using the structured templates and completing all structured fields at the point of care. Therefore, it is essential that quality leaders, clinicians and informaticians collaborate on designing templates that support both clinical workflow and quality reporting.
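One way to monitor whether clinicians actually complete the structured fields that quality measures depend on is to track completion rates per field. The Python sketch below is a hypothetical monitoring aid, not a PHS tool: the field names, sample values and the 95% target are illustrative assumptions, and an empty string or a missing key is treated as “not documented”.

```python
from typing import Dict, List, Mapping, Optional


def field_completion(records: List[Mapping[str, Optional[str]]],
                     required: List[str]) -> Dict[str, float]:
    """Share of records in which each required structured field is filled in."""
    if not records:
        return {name: 0.0 for name in required}
    return {
        name: sum(1 for r in records if r.get(name) not in (None, "")) / len(records)
        for name in required
    }


def below_target(completion: Dict[str, float], target: float = 0.95) -> List[str]:
    """Fields whose completion rate falls short of a reporting target."""
    return sorted(name for name, rate in completion.items() if rate < target)


# Hypothetical field names and values for three documented encounters.
records = [
    {"fall_risk_score": "3", "pressure_ulcer_risk": "18"},
    {"fall_risk_score": "", "pressure_ulcer_risk": "15"},
    {"pressure_ulcer_risk": "20"},
]
rates = field_completion(records, ["fall_risk_score", "pressure_ulcer_risk"])
print(rates)
print(below_target(rates))  # ['fall_risk_score']
```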

There are some limitations to this work. This paper describes ongoing efforts at a single healthcare system. However, because the approaches we have adopted are aligned with national standards, we believe that the work described and the associated processes are generalizable to other healthcare systems, and that they will become more meaningful as more systems engage in similar work and share their results.

Conclusion

While adoption of national standards holds clear benefits for automated reporting, the local process of vetting standards and reaching consensus is slow. It may be unrealistic for healthcare systems to wait until a national standard is fully defined for all inpatient quality measures before beginning to specify pertinent measures locally. Ideally, work completed at the local level using methods described in national exemplars such as the HITSP Quality Interoperability Specification report6 could in turn inform national standards. Therefore, value exists in individual healthcare systems engaging in local standardization work by adopting established methods and standards where they exist. A process is needed, however, to ensure that local work is shared and available to inform national standards. Such a process would move health systems away from “siloed” approaches to quality measurement and towards automated data capture and national benchmarking.

References

- 1. Kohn LT, Corrigan JM, Donaldson MS, editors. To Err Is Human: Building a Safer Health System. Washington, DC: National Academy Press; 2000.

- 2. Committee on Quality of Health Care in America, Institute of Medicine. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: National Academy Press; 2001.

- 3. Corrigan J. HIT Standards Committee Quality Workgroup. 2009. http://healthit.hhs.gov/portal/server.pt/gateway/PTARGS_0_10741_873897_0_0_18/HIT_Standards_QualityWG_Summary_508.ppt#261,3,HITEP 2008 --Quality Data Set. Last accessed March 10, 2010.

- 4. National Quality Forum. Issue Brief No. 17. October 2009, p. 1. www.qualityforum.org. Last accessed March 11, 2010.

- 5. HITSP. Quality Data Elements of HITSP Quality Interoperability Specification, Version 2.0. http://hitsp.org/ConstructSet_Details.aspx?&PrefixAlpha=1&PrefixNumeric=6. Last accessed March 11, 2010.

- 6. HITSP. Quality Interoperability Specification report. http://hitsp.org/constructset_details.aspx?prefixalpha=5&prefixnumeric=906. Last accessed March 11, 2010.

- 7. Department of Health and Human Services. Health Information Technology: Initial Set of Standards, Implementation Specifications and Certification Criteria for EHR Technology; Interim Final Rule. Federal Register. 2010 Jan 13;75(8):2033.

- 8. Rocha RA, Huff SM. Using Digrams to Map Controlled Medical Vocabularies. Proc Ann Symp Comput Appl Med Care. 1994;18:172–176.