Abstract

Online health knowledge resources can be integrated into electronic health record systems using decision support tools known as “infobuttons.” In this study we describe a knowledge management method based on the analysis of knowledge resource use via infobuttons in multiple institutions.

Methods:

We conducted a two-phase analysis of laboratory test infobutton sessions at three healthcare institutions accessing two knowledge resources. The primary study measure was session coverage, i.e. the rate of infobutton sessions in which resources retrieved relevant content.

Results:

In Phase One, resources covered 78.5% of the study sessions. In addition, a subset of 38 noncovered tests that most frequently raised questions was identified. In Phase Two, content development guided by the outcomes of Phase One resulted in a 4% average coverage increase.

Conclusion:

The described method is a valuable approach to large-scale knowledge management in rapidly changing domains.

Introduction

Online health knowledge resources are decision support tools that help clinicians fulfill their information needs, improving patient care decisions.1 Like other knowledge-based systems, knowledge resources need to be comprehensive and current to address clinicians’ needs adequately.2 Yet, the increasing complexity and fast-paced growth of medical knowledge challenge the development and maintenance of large knowledge content collections, underscoring the importance of effective and efficient knowledge management methods.3

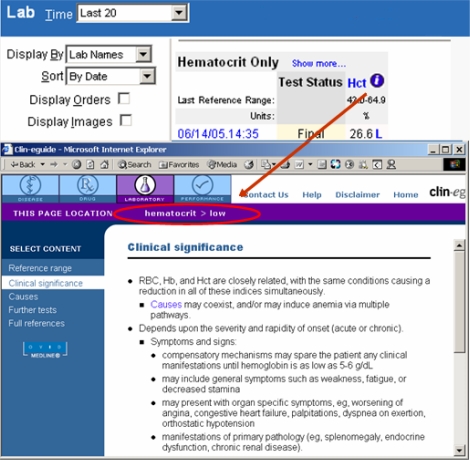

Knowledge resources have been integrated with electronic health record (EHR) systems in ways that take into account clinicians’ workflow and the context in which knowledge needs arise. Applications that provide this type of integration have been generally named “infobuttons.”4 Infobuttons offer context-specific links to a variety of resources, addressing questions in multiple domains, such as medications, diseases, and laboratory tests. For example, in a laboratory test results module, infobuttons may direct clinicians to resources that assist with the interpretation of the specific tests under review (Figure 1).

Figure 1.

A laboratory test results screen with an infobutton next to a low hematocrit (Hct) result.

One of the key success factors of infobuttons is the availability of resources that address the most common questions that arise during an EHR session. Therefore, it is essential for knowledge resource providers to identify frequent knowledge needs to prioritize knowledge development. In addition, resources with different target audiences or subdomains of knowledge may coordinate their activities to avoid unnecessary knowledge overlap.

Infobutton sessions are recorded in log files that can be analyzed for various purposes, such as usage trends, knowledge needs research, and debugging. A promising application of infobutton log files is to support knowledge management processes; more specifically, helping those charged with maintaining knowledge resources to identify areas that warrant closer attention.

In this study, we investigated the use of log files to identify knowledge areas for resource expansion using laboratory tests as a case study. The study used infobutton log files from Intermountain Healthcare, Columbia University, and Partners Healthcare that recorded access to two knowledge resources: Clin-eguide™ and ARUP Consult®.

Background

Inappropriate or unnecessary ordering has been estimated to affect 5% to 50% of all inpatient laboratory test orders, increasing healthcare costs and potentially leading to patient harm.5 Knowledge gaps due to rapid advances in diagnostic technology are among the causes of inappropriate laboratory test use and interpretation, suggesting that context-specific access to knowledge resources at the point of decision-making may be part of the solution.6 However, the rapid progress in diagnostic technology and the complexity behind the use and interpretation of tests constitute a significant barrier to the development of comprehensive and current knowledge resources in this domain.

Intermountain Healthcare:

Intermountain Healthcare is an integrated delivery system of 22 hospitals and over 120 outpatient clinics in Utah and southeastern Idaho. Clinicians at Intermountain have access to infobuttons in the medication order entry, laboratory test results, and problem list modules of a Web-based EHR system.7 The laboratory results infobuttons are the second most popular, having accounted for 30% of the sessions in 2007.

Columbia University:

The Columbia University Department of Biomedical Informatics provides infobuttons for multiple institutions.4 For this study, we included infobutton use at New York Presbyterian Hospital from systems including WebCIS and Sunrise Clinical Manager (Eclipsys Corporation, Boca Raton, Florida). Infobuttons are available in several functions, such as order entry, laboratory test results display, and discharge diagnosis list. The laboratory results infobuttons are the most popular, having accounted for 52% of the sessions in 2007 and 2008.

Partners Healthcare:

Partners Healthcare is an integrated health system of multiple community and specialty hospitals and a network of outpatient health centers in Boston. Infobuttons are available in a variety of clinical applications, such as the outpatient EHR, lab results viewer, inpatient order entry, and electronic medication administration records.8 In 2007, infobuttons were used 643,627 times by 15,218 distinct users.

Clin-eguide:

The Clin-eguide lab module includes reference knowledge for over 100 commonly ordered lab tests. The lab content is coded with LOINC codes for seamless integration with electronic health records using the HL7 Infobutton standard.9

ARUP Consult:

ARUP Consult is a free online laboratory test knowledge resource. It is maintained by ARUP Laboratories, an enterprise of the University of Utah and its Department of Pathology. Content is reviewed by University of Utah faculty.

Methods

We analyzed laboratory test infobutton sessions that originated from the three study sites. At these sites, requests for laboratory test knowledge are expressed in terms of LOINC® (Logical Observation Identifiers Names and Codes) codes. The main study measure was “session coverage,” i.e. the percentage of infobutton sessions in which resources retrieved relevant content. To determine session coverage, the frequency of infobutton requests in terms of LOINC codes was compared with the set of codes in the knowledge resource coverage lists. Sessions in which the laboratory test was not expressed as a LOINC code were excluded from the analysis.
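
The session coverage computation described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used in the study: the function name `session_coverage`, the representation of a log as a list with one code per session (`None` marking a session without a LOINC code), and the single-letter placeholder codes are all hypothetical.

```python
from collections import Counter


def session_coverage(session_codes, covered_codes):
    """Return (covered_sessions, total_sessions, coverage_rate).

    session_codes: one entry per infobutton session; None marks a session
                   whose test was not expressed as a LOINC code (excluded).
    covered_codes: set of codes in a knowledge resource's coverage list.
    """
    # Count sessions per code, dropping sessions without a LOINC code.
    frequency = Counter(code for code in session_codes if code is not None)
    total = sum(frequency.values())
    covered = sum(n for code, n in frequency.items() if code in covered_codes)
    rate = covered / total if total else 0.0
    return covered, total, rate


# Hypothetical log with placeholder codes (not real LOINC codes).
log = ["A", "A", "B", None, "C", "A", "B", "D"]
covered, total, rate = session_coverage(log, {"A", "C"})
print(f"{covered}/{total} sessions covered ({rate:.1%})")  # 4/7 sessions covered (57.1%)
```

Counting by code first, rather than by session, mirrors the comparison of request frequencies against coverage lists and makes the same frequency table reusable for the other measures.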

The analysis was carried out in two phases: Phases One and Two analyzed sessions conducted in 2007 and 2008, respectively. Phase One provided a baseline and identified the most frequent coverage gaps. In Phase Two, we assessed the impact on session coverage after one of the resources, Clin-eguide, used the Phase One results to improve content coverage. The Chi-square test was used to compare session coverage between the two phases for each knowledge resource.
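
As an illustration of the phase comparison, the sketch below applies a Pearson Chi-square test to a 2x2 table of covered versus noncovered sessions in each phase, using the Clin-eguide session counts reported in Table 1. It assumes no continuity correction, since the text does not state whether one was applied, and the function name `chi_square_2x2` is hypothetical.

```python
import math


def chi_square_2x2(covered_1, total_1, covered_2, total_2):
    """Pearson Chi-square (1 df, no continuity correction) for two proportions."""
    a, b = covered_1, total_1 - covered_1
    c, d = covered_2, total_2 - covered_2
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # With 1 degree of freedom, the upper-tail probability is erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Clin-eguide: covered sessions / sessions with LOINC, 2007 vs. 2008 (Table 1).
chi2, p_value = chi_square_2x2(23920, 34111, 28165, 38221)
print(f"chi2 = {chi2:.1f}; p < 0.0001: {p_value < 0.0001}")
```

Under these assumptions the difference in coverage is significant at the p<0.0001 level, which agrees with the significance level reported in Table 1.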

In addition to session coverage, the following measures were obtained: 1) test code coverage; 2) number of noncovered test codes that would have increased a resource’s coverage to 80% and 90%; and 3) overlap between the two knowledge resources.
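
The second measure can be obtained by adding noncovered codes in descending order of request frequency until the target is met. The sketch below is one way to do this; the function name `codes_needed_for_target` and the placeholder codes and counts are hypothetical, chosen only to make the arithmetic easy to follow.

```python
from collections import Counter


def codes_needed_for_target(frequency, covered_codes, target):
    """Smallest number of noncovered codes whose addition reaches `target` coverage.

    frequency: mapping of test code -> number of sessions requesting it.
    Returns None if the target cannot be reached (e.g., target > 1).
    """
    total = sum(frequency.values())
    covered = sum(n for code, n in frequency.items() if code in covered_codes)
    # Consider noncovered codes from most to least frequently requested.
    gaps = sorted(
        (n for code, n in frequency.items() if code not in covered_codes),
        reverse=True,
    )
    needed = 0
    for sessions in gaps:
        if covered >= target * total:
            break
        covered += sessions
        needed += 1
    return needed if covered >= target * total else None


# Hypothetical frequencies: 100 sessions, of which 50 are already covered.
frequency = Counter({"A": 50, "B": 20, "C": 15, "D": 10, "E": 5})
print(codes_needed_for_target(frequency, {"A"}, 0.80))  # 2 (adding B and C gives 85%)
print(codes_needed_for_target(frequency, {"A"}, 0.90))  # 3 (adding D gives 95%)
```

Taking the most frequent gaps first is what yields the smallest set, so the sorted list also serves as a priority order for content development.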

The reports generated in Phase One were shared with the knowledge resources for further analysis and feedback. Clin-eguide classified the most frequently searched laboratory test codes according to “noncoverage reason” and “planned action” as follows: 1) “content not available / create new content”; 2) “content not available / do not create new content” (e.g., the test falls outside the scope of a laboratory test knowledge resource); and 3) “incomplete indexing / add test code to index.”

ARUP is in the process of applying a similar classification scheme to its noncovered content.

Results

Phase One:

In 2007, 34,111 instances in which a user clicked on an infobutton while reviewing laboratory results (1,376 unique LOINC codes) at the three sites were analyzed. Although on average this represents 24.8 infobutton sessions per laboratory test, a minority of 207 (15%) test codes accounted for 27,289 (80%) of the sessions.
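
The concentration of sessions in a small number of test codes can be measured by ranking codes by request frequency and counting how many are needed to reach a given share of sessions. The following sketch assumes a frequency table keyed by test code; the function name `top_codes_for_share` and the placeholder data are hypothetical.

```python
from collections import Counter


def top_codes_for_share(frequency, share):
    """Smallest number of most-requested codes that account for `share` of sessions."""
    total = sum(frequency.values())
    running = 0
    for rank, (_, sessions) in enumerate(frequency.most_common(), start=1):
        running += sessions
        if running >= share * total:
            return rank
    return len(frequency)


# Hypothetical frequencies with placeholder codes.
frequency = Counter({"A": 50, "B": 20, "C": 15, "D": 10, "E": 5})
average = sum(frequency.values()) / len(frequency)
print(f"average sessions per code: {average:.1f}")  # 20.0
print(f"codes needed for 80% of sessions: {top_codes_for_share(frequency, 0.80)}")  # 3
```

Comparing the average number of sessions per code with the size of the top-ranked subset shows how misleading the average is when the distribution is this skewed.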

The two knowledge resources combined covered 530 (38.5%) unique test codes, accounting for 26,779 (78.5%) of the sessions at the three sites (Table 1). Expanded coverage for a subset of the 38 most frequently searched noncovered test codes would have increased Clin-eguide’s overall session coverage from 70.1% to 80%. Guided by this analysis, Clin-eguide developed four new content topics and added 31 LOINC codes to the index of existing topics. The cost of this work was approximately 100 man-hours, two thirds of which were dedicated to new content development and one third to indexing enhancement. ARUP Consult is planning to follow a similar process, focusing on a subset of 63 codes that would have increased its coverage from 59% to 80%.

Table 1.

Overall knowledge resource coverage at the three study sites combined in 2007 and 2008.

| | 2007 | 2008 |
|---|---|---|
| Sessions | 39,067 | 46,956 |
| Sessions with LOINC | 34,111 (87.3%) | 38,221 (81.4%) |
| Unique LOINC codes | 1,376 | 1,523 |
| Clin-eguide | | |
| Sessions covered | 23,920 (70.1%) | 28,165* (73.7%) |
| LOINC codes covered | 306 (22.2%) | 342 (22.5%) |
| Additional codes for 80% / 90% coverage | 38 / 154 | 21 / 138 |
| ARUP Consult | | |
| Sessions covered | 20,150 (59.1%) | 21,901* (57.3%) |
| LOINC codes covered | 344 (25.0%) | 364 (23.9%) |
| Additional codes for 80% / 90% coverage | 63 / 174 | 68 / 186 |
| Both resources | | |
| Sessions covered | 26,779 (78.5%) | 31,248 (81.8%) |
| LOINC codes covered | 530 (38.5%) | 577 (37.9%) |

* p<0.0001

Overlap analysis showed that only 8.7% of the test codes and 50.7% of the sessions were covered by both knowledge resources in 2007 (Table 3).

Table 2.

Knowledge resource coverage at each study site in 2007 and 2008.

| | Intermountain 2007 | Intermountain 2008 | Columbia 2007 | Columbia 2008 | Partners 2007 | Partners 2008 |
|---|---|---|---|---|---|---|
| Sessions | 12,632 | 14,018 | 10,553 | 11,158 | 15,882 | 21,780 |
| Sessions with LOINC | 11,647 (92.2%) | 13,426 (95.8%) | 7,305 (69.2%) | 7,515 (67.4%) | 15,159 (95.4%) | 17,280 (79.3%) |
| Sessions covered by Clin-eguide | 8,081 (69.4%) | 9,640* (71.8%) | 5,314 (72.76%) | 5,962* (79.3%) | 10,525 (69.4%) | 12,563* (72.7%) |
| Sessions covered by ARUP Consult | 6,989 (60%) | 7,774† (57.9%) | 4,282 (58.6%) | 4,347‡ (57.8%) | 8,879 (58.6%) | 9,780† (56.6%) |

* p<0.0001; † p<0.001; ‡ not significant

Phase Two:

In 2008, 38,221 laboratory test infobutton sessions expressed in terms of LOINC codes were conducted at the three sites (Table 1). Clin-eguide’s session coverage increased at all three sites, with gains ranging from 2.4% at Intermountain to 6.6% at Columbia (Table 2). Session coverage for ARUP Consult decreased at Intermountain (2.1%) and Partners (2.0%). In addition, if Clin-eguide had been left unchanged after the 2007 analysis, it would have experienced a decrease of 2% in session coverage in 2008. To identify areas for future content development, Phase Two also identified an updated set of noncovered tests that most frequently raised questions (Table 1).

Table 3.

Test code and session overlap between knowledge resources (cell upper and lower lines respectively) in 2007. C = covered; NC = not covered.

| ARUP Consult | Clin-eguide: C | Clin-eguide: NC |
|---|---|---|
| C (test codes) | 120 (8.7%) | 224 (16.3%) |
| C (sessions) | 17,291 (50.7%) | 2,859 (8.4%) |
| NC (test codes) | 186 (13.5%) | 846 (61.5%) |
| NC (sessions) | 6,629 (19.4%) | 7,332 (21.5%) |
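
An overlap table of this kind can be derived from a frequency table and the two coverage lists by cross-tabulating each requested code by its coverage status in both resources. The sketch below is illustrative only; the function name `overlap_table` and the placeholder codes are hypothetical.

```python
from collections import Counter


def overlap_table(frequency, covered_a, covered_b):
    """Cross-tabulate codes and sessions by coverage status in two resources.

    Returns a dict keyed by (in_a, in_b) booleans; each value is
    [number_of_codes, number_of_sessions].
    """
    cells = {(a, b): [0, 0] for a in (True, False) for b in (True, False)}
    for code, sessions in frequency.items():
        key = (code in covered_a, code in covered_b)
        cells[key][0] += 1
        cells[key][1] += sessions
    return cells


# Hypothetical frequencies and coverage lists with placeholder codes.
frequency = Counter({"A": 50, "B": 20, "C": 15, "D": 10, "E": 5})
cells = overlap_table(frequency, covered_a={"A", "B"}, covered_b={"A", "C"})
total_codes = len(frequency)
total_sessions = sum(frequency.values())
for (in_a, in_b), (codes, sessions) in cells.items():
    print(f"A={in_a!s:5} B={in_b!s:5} "
          f"codes: {codes / total_codes:.1%}  sessions: {sessions / total_sessions:.1%}")
```

Reporting both the code share and the session share for each cell is what exposes the pattern seen in the study, where a small fraction of shared codes accounts for a large fraction of shared sessions.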

Discussion

This study describes a method to support large-scale knowledge management through the analysis of laboratory test knowledge sessions from multiple institutions. The study brings up five important points. First, the participation of three healthcare institutions with different information technology solutions strengthens the external validity of the results and the replicability of the method. Second, the proposed process and observed results offer an additional argument to compel health care institutions and knowledge providers to share data on the use of knowledge as well as knowledge itself. Third, point-of-care knowledge consumption, at least in the domain of laboratory tests, closely follows an 80/20 distribution, indicating that knowledge management prioritization is not only feasible but desirable. Fourth, the analysis suggests the need for a continuous, iterative, and targeted knowledge management effort to enable resources to keep abreast of changes in rapidly evolving domains such as diagnostic tests and medications.5 Fifth, institutions can use this type of analysis as a tool to assist with the selection of resources for their clinicians.

Phase One (2007):

This phase revealed knowledge areas that raised questions more frequently and therefore deserved expansion, more frequent updates, and more comprehensive indexing. For example, Clin-eguide used the reports provided in Phase One to prioritize new content development and indexing improvement. ARUP Consult plans to follow a similar approach, especially for tests that are performed at the ARUP Laboratories.

The two knowledge resources combined covered 78.5% of the infobutton sessions conducted at the study sites in 2007, leaving clinicians with no relevant knowledge in 21.5% of the sessions. The method proposed in this study helped knowledge resources to narrow this gap. Although a fairly large number of test codes triggered infobutton sessions, a relatively small subset accounted for most of the sessions. Therefore, knowledge resources that focus on this core subset will likely attain a broader level of coverage. For example, Clin-eguide’s overall coverage in 2007 would have increased from 70.1% to 80% if the 38 most frequent noncovered tests were accounted for. Moreover, 8 out of those 38 tests would be covered simply by adding the missing test code to the content index in Clin-eguide.

Infobuttons typically restrict the resources offered in a given context to the ones that are known to cover a given laboratory test.7 As a result, resources cannot rely on their own usage logs to identify unfulfilled requests. Therefore, the sharing of knowledge use data from multiple institutions with knowledge providers is a crucial element in the prioritization of continuous content development.

Phase Two (2008):

The analysis revealed a 4.1% increase in Clin-eguide’s coverage for the sessions conducted in Phase Two in relation to Phase One. On the other hand, coverage decreased by 1.6% for ARUP Consult, which remained unchanged after Phase One, demonstrating the value of the focused knowledge management effort.

Although Clin-eguide’s coverage gains may not necessarily sound impressive, it is noteworthy that the observed improvement was achieved with a fairly modest content development effort. Additional gains are expected once Clin-eguide addresses the updated set of core noncovered tests identified in Phase Two. In addition, Clin-eguide would have experienced a decrease in session coverage had it been left unchanged. Finally, the 4.1% increase translates into over 1,100 additional sessions in which clinicians have been offered potentially relevant knowledge as a result of the expanded coverage.

Overall Findings:

The results of this study also suggest that a single knowledge resource is unlikely to offer complete coverage for laboratory tests, especially with the accelerated advances in diagnostic technology.5 This observation is in accordance with a previous report, in which no single knowledge resource was able to answer more than 10% of physicians’ questions.10 In a more realistic scenario, knowledge resources will specialize in subdomains that match their area of expertise or the primary needs of their customer base.

The log analysis showed little test code overlap between Clin-eguide and ARUP Consult (8.7% of the codes searched in the three sites during Phase One). In addition, ARUP Consult showed higher coverage of test codes whereas Clin-eguide showed higher session coverage. This finding is consistent with the stated goals of these resources. While Clin-eguide focuses on a core subset of most frequently ordered tests, ARUP Consult focuses on esoteric tests.

Although some level of specialization seems desirable and is probably inevitable, a wider range of choices is likely to compromise the clinicians’ ability to select and effectively use the most relevant knowledge resource for a particular question.11 To overcome this problem, infobuttons are designed to select the most relevant resources in a given context for the clinician (e.g., the resource(s) that are known to cover a particular LOINC code).7

This study also demonstrates some of the benefits of the common adoption of a standard terminology. The analysis would not have been practical had the participating institutions not used LOINC to code test results and had the knowledge resources not used LOINC to index content. In fact, the emerging HL7 Infobutton standard recommends the adoption of standard terminologies, such as LOINC for laboratory tests, as part of EHR context-specific knowledge retrieval requests.9 Nevertheless, this study also illustrates one of the limitations of LOINC when supporting content indexing. At the desired level of granularity for laboratory test content, multiple LOINC codes describe the same test content, potentially leading to incomplete indexing.

Other similar studies:

Similar approaches to the present study have been proposed to facilitate complex and laborious knowledge management processes.12,13 While previous reports focused on institution-specific knowledge management tasks, the current study proposes a replicable framework that leverages data from multiple stakeholders to support large-scale knowledge management practices.

Limitations:

The main limitation of the described method is that a successful content retrieval does not necessarily lead to the fulfillment of a knowledge need. Occasional prompts for clinician feedback on the outcome of knowledge sessions could help identify resource areas that commonly fail to address clinicians’ knowledge needs, potentially overcoming this limitation.

Conclusion

The combined analysis of point-of-care knowledge usage logs from multiple institutions is a valuable method to support large-scale, iterative knowledge management processes. This method could be applied to other knowledge domains, such as medications, diagnosis, and patient education material. Other types of knowledge-based decision support systems, such as drug-interaction alerts and order sets, may also benefit from a similar approach and warrant further investigation.

Acknowledgments

This project was supported in part by National Library of Medicine Training Grant 1T15LM007124-10 and National Library of Medicine grant R01-LM07593. The authors also thank Beth Holloway for her assistance with the Clin-eguide analysis.

References

1. Magrabi F, Coiera EW, Westbrook JI, et al. General practitioners’ use of online evidence during consultations. Int J Med Inform. 2005;74(1):1–12. doi: 10.1016/j.ijmedinf.2004.10.003.

2. Wyatt JC. Management of explicit and tacit knowledge. J R Soc Med. 2001;94(1):6–9. doi: 10.1177/014107680109400102.

3. Matheson N. Things to come: postmodern digital knowledge management and medical informatics. J Am Med Inform Assoc. 1995;2(2):73–8. doi: 10.1136/jamia.1995.95261908.

4. Cimino JJ. Use, Usability, Usefulness, and Impact of an Infobutton Manager. Proc AMIA Annu Fall Symp. 2006:151–155.

5. Van Walraven C, Naylor CD. Do we know what inappropriate laboratory utilization is? A systematic review of laboratory clinical audits. JAMA. 1998;280:550–8. doi: 10.1001/jama.280.6.550.

6. Solomon DH, Hashimoto H, Daltroy L, Liang MH. Techniques to improve physicians’ use of diagnostic tests: a new conceptual framework. JAMA. 1998;280:2020–7. doi: 10.1001/jama.280.23.2020.

7. Del Fiol G, Rocha R, Clayton PD. Infobuttons at Intermountain Healthcare: utilization and infrastructure. Proc AMIA Annu Fall Symp. 2006:180–4.

8. Maviglia SM, Yoon CS, Bates DW, Kuperman G. KnowledgeLink: impact of context-sensitive information retrieval on clinicians’ information needs. J Am Med Inform Assoc. 2006;13:67–73. doi: 10.1197/jamia.M1861.

9. Del Fiol G, Cimino JJ, Jenders R, et al. Context-Aware Information Retrieval (Infobutton) HL7 Version 3 Standard. 2007. Retrieved Mar 10th, 2009, from http://tinyurl.com/ywkf9r.

10. Ely JW, Osheroff JA, Chambliss ML, Ebell MH, Rosenbaum ME. Answering physicians’ clinical questions: obstacles and potential solutions. J Am Med Inform Assoc. 2005;12(2):217–24. doi: 10.1197/jamia.M1608.

11. McKibbon KA, Fridsma DB. Effectiveness of clinician-selected electronic information resources for answering primary care physicians’ information needs. J Am Med Inform Assoc. 2006;13(6):653–9. doi: 10.1197/jamia.M2087.

12. Hulse NC, Del Fiol G, Bradshaw RL, Roemer LK, Rocha RA. Towards an on-demand peer feedback system for a clinical knowledge base: A case study with order sets. J Biomed Inform. 2008;4:152–64. doi: 10.1016/j.jbi.2007.05.006.

13. Wright A, Sittig DF. Automated development of order sets and corollary orders by data mining in an ambulatory computerized physician order entry system. Proc AMIA Annu Fall Symp. 2006:819–23.