Abstract

Quantitative models for response time and accuracy are increasingly used as tools to draw conclusions about psychological processes. Here we investigate the extent to which these substantive conclusions depend on whether researchers use the Ratcliff diffusion model or the Linear Ballistic Accumulator model. Simulations show that the models agree on the effects of changes in the rate of information accumulation and changes in non-decision time, but that they disagree on the effects of changes in response caution. In fits to empirical data, however, the models tend to agree closely on the effects of an experimental manipulation of response caution. We discuss the implications of these conflicting results, concluding that real manipulations of caution map closely, but not perfectly, onto response caution in either model. Importantly, we conclude that inferences about psychological processes made from real data are unlikely to depend on the model that is used.

Electronic supplementary material

The online version of this article (doi:10.3758/s13423-010-0022-4) contains supplementary material, which is available to authorized users.

Keywords: Response time, Reaction time, Mathematical models, Model comparison

Introduction

A growing number of researchers use cognitive models of choice response time (RT) to draw conclusions about the psychological processes that drive decision making. By far the most successful framework for modeling choice RT is evidence accumulation. Many different types of models exist within this framework, but almost all have three common latent variables: rate of evidence accumulation, response caution, and the time taken for non-decision processes. These variables can be used to make inferences about the causes of overt behavior in rapid choice tasks in terms of latent psychological processes that are more meaningful than raw data summaries.

As an example of this approach, consider the finding that older participants tend to respond more slowly in choice tasks than younger participants. This pattern was initially attributed to a general age-related slowing in information processing. However, when Ratcliff and colleagues applied their diffusion model, they found that the rate of evidence accumulation did not change with age (Ratcliff, Spieler, & McKoon, 2000; Ratcliff, Thapar, & McKoon, 2001, 2003; Ratcliff, Thapar, Gomez, & McKoon, 2004; Ratcliff, Thapar, & McKoon, 2004, 2006b, 2006a, 2007). For example, in one study involving a recognition memory task Ratcliff, Thapar, & McKoon (2004) found that older participants responded more slowly than younger participants, but a diffusion model analysis indicated that this was not due to a reduced rate of access to evidence from memory. Rather, older participants were more careful in their responding (i.e., higher response caution), and also slower to execute the overt motor response (i.e., longer non-decision times). This kind of conclusion would have been very difficult to draw without a cognitive model of choice RT.

Similar analyses have been applied to a range of topics, including: lexical decision (Ratcliff, Gomez, & McKoon, 2004; Wagenmakers, Ratcliff, Gomez, & McKoon, 2008); recognition memory (Ratcliff, 1978; Ratcliff, Thapar, & McKoon 2004); the effect of practice (Dutilh, Vandekerckhove, Tuerlinckx, & Wagenmakers, 2009); reading impairment (Ratcliff, Perea, Colangelo, & Buchanan, 2004; Ratcliff, Philiastides, & Sajda, 2009); the links between depression and anxiety (White, Ratcliff, Vasey, & McKoon, 2009, in press); the neural aspects of decision making (Ratcliff, Hasegawa, Hasegawa, Smith, & Segraves, 2007); as well as a range of simple perceptual tasks (Ratcliff & Rouder, 1998; Ratcliff, 2002; Brown & Heathcote, 2005; Usher & McClelland, 2001). Such analyses have most often made use of Ratcliff and colleagues’ diffusion model (Ratcliff, 1978). The defining characteristic of a diffusion process is that evidence accumulation is stochastic, that is, it is corrupted by the addition of noise that varies from moment to moment during accumulation.

More recently, Brown and Heathcote (2008) proposed an alternative evidence accumulation theory, the Linear Ballistic Accumulator (LBA) model, which has a simpler characterization of noise than Ratcliff’s diffusion model, in that within-trial evidence accumulation is assumed to be ballistic (i.e., a noise-free linear function of the input signal). This simplification makes it possible to derive closed form expressions for the likelihood of an observed choice and RT, which greatly facilitates computation. Even though it is relatively new, and perhaps because of its computational simplicity, the LBA has already seen a range of applications as a model of rapid choice (e.g., Forstmann, Dutilh, Brown, Neumann, von Cramon, Ridderinkhof et al., 2008; Forstmann, Brown, Dutilh, Neumann, & Wagenmakers, 2010; Ho, Brown, & Serences, 2009; Ludwig, Farell, Ellis, & Gilchrist, 2009; Donkin, Brown, & Heathcote, 2009).

Given there is more than one decision process model, with no general agreement on which one is correct, the question arises whether and to what extent the conclusions about psychological processes depend on the models that are used to analyze the data. For example, if Ratcliff et al. (2004d) had used the LBA rather than the diffusion model, would they have drawn the same conclusions about the effects of aging on recognition memory? Van Ravenzwaaij and Oberauer (2009) investigated the relationship between the LBA and diffusion models, but could not find a simple one-to-one correspondence between their parameters. This suggests that Ratcliff, Thapar, & McKoon (2004) may have indeed drawn different conclusions if they had used the LBA. Such model dependence is clearly an undesirable state of affairs for making consistent inferences about latent psychological processes.

Below, we present the results of a study that investigates the relationship between the LBA and diffusion models. In contrast to the results of Van Ravenzwaaij and Oberauer (2009), we find mostly straightforward correspondence between the parameters of the two models. In particular, by carefully parameterizing the models in corresponding ways, we find a one-to-one correspondence between the parameters for non-decision time and for the mean rate of evidence accumulation. However, there is a less straightforward mapping between the response caution parameters of the two models.

Overview of the models

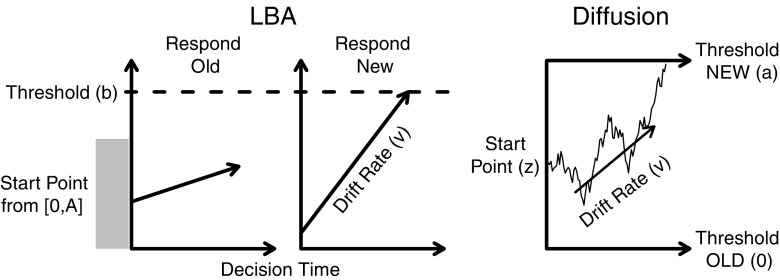

Both the diffusion model and the LBA model are based on the idea of “evidence accumulation.” They assume that decisions are based on the accumulation of evidence for each possible response, and that a response is made once the evidence for one particular response reaches a threshold (or criterion). The time taken for accumulation determines the decision time. In the LBA, the response chosen depends on which accumulator’s evidence threshold is satisfied first. In the diffusion model there are two response criteria, one corresponding to each response, with the choice response determined by which is crossed first.

To illustrate the models, imagine that a participant in a recognition memory task is presented with an item that had been previously studied (“old”) and asked whether the stimulus is “old” or “new” (not previously studied). The diffusion model assumes that participants sample evidence from the match between a test stimulus and memory continuously, and this information stream updates an evidence total, say x, illustrated as a function of accumulation time by the jagged line in the right panel of Fig. 1. The accumulator begins the decision process in some intermediate state, say x = z. Evidence that favors the response “new” increases the value of x, and evidence that favors the response “old” decreases the value of x. Depending on the stimulus, evidence tends to consistently favor one response over the other, so the process drifts either up or down; the average speed of this movement is called the drift rate, usually labeled v.1 Accumulation continues until sufficient evidence favors one response over the other, causing the total (x) to reach either the upper or lower boundary (the horizontal lines at x = a and x = 0 in the right-hand panel of Fig. 1). The choice made by the model depends on which boundary is reached (a for a “new” response or 0 for an “old” response), and response time equals the accumulation time plus a constant. This constant value, T er, represents the time taken by non-decision processes, such as the encoding of the stimulus and the production of the selected response.
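The accumulation process just described can be sketched as a short simulation. This is a minimal illustration rather than the procedure used in the study: it approximates the continuous process with small discrete time steps, omits all between-trial variability, and the function name, the step size `dt`, and the response labels are assumptions introduced here. The within-trial noise `s = 0.1` follows the convention stated in the footnotes.

```python
import math
import random

def simulate_diffusion_trial(v, a, z, t_er, s=0.1, dt=0.001, rng=random):
    """Simulate one trial of a basic diffusion process with discrete time steps.

    v: drift rate, a: upper boundary (lower boundary is 0), z: start point,
    t_er: non-decision time, s: within-trial noise, dt: step size (assumed).
    Returns (response, rt), where response is "upper" or "lower".
    """
    x = z
    t = 0.0
    sd_step = s * math.sqrt(dt)  # noise added per step scales with sqrt(dt)
    while 0.0 < x < a:
        x += v * dt + rng.gauss(0.0, sd_step)  # drift plus moment-to-moment noise
        t += dt
    response = "upper" if x >= a else "lower"
    return response, t + t_er  # RT = accumulation time + non-decision time
```

For an unbiased process the start point would be set to `z = a / 2`, so that both boundaries are equally far from the starting evidence total.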

Fig. 1.

Simplified representation of a typical decision process for the LBA and diffusion models. Both models also assume an independent time taken for non-decision processes (T er)

The LBA is a multiple accumulator model, meaning that it assigns a separate evidence accumulator to each possible response. For example, in a recognition memory task, one accumulator gathers evidence in favor of the “old” response and another gathers evidence in favor of the “new” response, as illustrated in the left panel of Fig. 1. The activity level in each accumulator begins at a value randomly sampled from the interval [0,A]. Evidence accumulation is noiseless (ballistic) and linear, with an average slope associated with each accumulator that we again call drift rates, v 1 and v 2. When the evidence accumulated for either response reaches its respective threshold, b 1 or b 2, that response is made. Like the diffusion model, the LBA assumes that non-decision processing takes some time, T er, which is assumed to be the same for both accumulators. Our first step in parameterizing the LBA in a way that is compatible with the diffusion model is to assume that the mean drift rate for each accumulator sums to one (i.e., v 1 + v 2 = 1).2 This choice is conventional (see Usher & McClelland, 2001), but not essential, and in some cases it might not be the best choice (see Donkin et al., 2009).
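A single LBA trial can be sketched in the same way. This is an illustrative sketch under stated assumptions, not the authors' code: drift rates are drawn from a normal distribution across trials with standard deviation `s`, start points are uniform on [0, A], and an accumulator with a non-positive drift is treated as never finishing. The function name and the handling of trials on which no accumulator finishes are choices made here for illustration.

```python
import random

def simulate_lba_trial(v_means, b, A, s, t_er, rng=random):
    """Simulate one LBA trial with a common threshold b for all accumulators.

    v_means: mean drift rate for each accumulator (e.g., (v, 1 - v)).
    Returns (winner_index, rt); (None, inf) if no accumulator can finish.
    """
    finish_times = []
    for v_mean in v_means:
        start = rng.uniform(0.0, A)    # start point sampled from [0, A]
        drift = rng.gauss(v_mean, s)   # between-trial drift variability
        if drift > 0:
            # Ballistic accumulation: linear, noise-free rise to threshold.
            finish_times.append((b - start) / drift)
        else:
            finish_times.append(float("inf"))  # assumed: never reaches b
    winner = min(range(len(finish_times)), key=finish_times.__getitem__)
    if finish_times[winner] == float("inf"):
        return None, float("inf")
    return winner, finish_times[winner] + t_er
```

Passing `v_means=(v, 1 - v)` implements the constraint that the mean drift rates sum to one, while the drift rates sampled on any single trial need not.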

Both models have more parameters than those mentioned so far. These parameters describe the magnitude of trial-to-trial variability in some elements of the model, like the A parameter in the LBA. These secondary parameters give the models enough flexibility to accommodate some regularly observed empirical phenomena (e.g., the relative speed of correct and error responses). However, those parameters are not often of great interest in applications as they are assumed to be the same across experimental conditions, so we do not discuss them further here (but see Appendix A, or Brown & Heathcote, 2008; Ratcliff & Rouder, 1998; Ratcliff & Tuerlinckx, 2002, for a list of these parameters and their details). Our simulation study also focuses on the case where the decision process is, on average, unbiased. For the LBA model this implies that the average distance from the start point to the response criterion is equal for each accumulator (i.e., b 1 = b 2). For the diffusion model this means the average starting point, z, is in the middle of the [0, a] interval.

Primary parameters

Despite marked differences in their architecture, the primary parameters of the LBA and the diffusion model share common interpretations. We will focus on the three parameters just discussed as they index psychological constructs that are typically of greatest interest: the rate of evidence accumulation, response caution, and non-decision time. Both models have a drift rate parameter, v, which corresponds to the rate at which evidence is extracted from the environment. This parameter is typically influenced by manipulations of stimulus quality, or of decision difficulty. Both models also have a non-decision time parameter, T er. However, the models differ slightly in their usual parameterization of response caution. The diffusion model uses the response threshold parameter, a, to describe caution: larger values of a mean that the response boundaries are farther from the start point, and so more evidence must be accrued before a response is made. The most directly comparable quantity in the LBA is b − A/2. Like the parameter a from the diffusion model, this quantity measures the average amount of evidence that must be accumulated in order to reach the response threshold (the average is over stimuli and responses).3
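The average evidence required to reach threshold can be written out explicitly. The following is a reading of the definitions in the model overview rather than an equation taken from the original text; the symbol k for the LBA start point is introduced here for illustration. It assumes a start point drawn uniformly from [0, A] and a threshold b in the LBA, and an unbiased diffusion process that starts at z = a/2:

```latex
\mathbb{E}[\,b - k\,] = b - \mathbb{E}[k] = b - \frac{A}{2},
\qquad k \sim \mathrm{Uniform}(0, A),
\qquad\text{and}\qquad
a - z = z - 0 = \frac{a}{2} \quad \text{when } z = \frac{a}{2}.
```

Under these assumptions the LBA quantity b − A/2 plays the role of the distance a/2 from the start point to either boundary in the diffusion model, so the two caution measures differ only by a constant scale factor.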

Cross-fit simulation study

We investigated whether there is a one-to-one correspondence between the three analogous primary parameters by first generating RT and accuracy data from one model, while systematically changing just one of those parameters, and then fitting those data with the other model. Ideally, changing a parameter in the data generating models will produce changes in only the analogous parameter of the fitted model. These simulations are therefore a test of selective influence between analogous parameters in each model.

Method

We simulated data from a single synthetic participant in a two-choice experiment where decision difficulty was manipulated within-subjects over three levels. We used a large sample size, 20,000 trials per difficulty level, so as to virtually eliminate sample variance in the parameter estimates. We used three simulated difficulty conditions for the same reason, as fitting the models to data from a single condition can under-constrain parameter estimates.

The diffusion model has six parameters, three primary parameters (a, T er, v) and three secondary parameters (s z, s t, η), and the LBA five, three primary parameters (b − A/2, T er, v) and two secondary parameters (A, s). Note that each model has some parameters in addition to the three of greatest applied interest; results pertaining to those parameters are given in Appendix A. For each simulation, we first chose a data-generating model (either the diffusion or the LBA). All model parameters were initially fixed at a default value (see Table 1). Next, we chose one parameter at a time, and identified 250 equally spaced values for that parameter that spanned the range commonly observed in applied settings. For each of these 250 parameter values, we generated a set of 60,000 observations (20,000 in each of the three difficulty conditions). To select the appropriate parameter settings for the diffusion model, we took the average parameter values and parameter ranges identified in a literature review reported by Matzke and Wagenmakers (2009). For the LBA we used the range of parameters recovered from an initial simulation study that identified the ranges of LBA parameters that correspond to the diffusion parameter ranges in Matzke and Wagenmakers (2009). Table 1 reports both sets of parameter ranges.
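The one-parameter-at-a-time design can be sketched as follows. This is a hedged illustration, not the authors' code: the dictionary keys and function names are invented here, the numeric values are the diffusion model defaults and ranges listed in Table 1, and the way drift rate differs across the three difficulty conditions is not modeled because the text does not specify it.

```python
def linspace(lo, hi, n):
    """Return n equally spaced values from lo to hi inclusive."""
    step = (hi - lo) / (n - 1)
    return [lo + i * step for i in range(n)]

# Default values and ranges for the diffusion model, taken from Table 1.
DIFFUSION_DEFAULTS = {"a": 0.125, "t_er": 0.435, "eta": 0.133,
                      "sz_over_a": 0.044, "st_over_ter": 0.225, "v": 0.23}
DIFFUSION_RANGES = {"a": (0.07, 0.2), "t_er": (0.3, 0.6), "v": (0.01, 0.45)}

def parameter_sets(defaults, ranges, varied, n_values=250):
    """Yield one parameter set per grid value of `varied`.

    Every other parameter stays at its default, so any change in the fitted
    model's estimates can be attributed to the single varied parameter.
    """
    lo, hi = ranges[varied]
    for value in linspace(lo, hi, n_values):
        params = dict(defaults)   # copy so the defaults are never mutated
        params[varied] = value
        yield params
```

Each yielded parameter set would then be used to generate 20,000 trials in each of the three difficulty conditions before the other model is fit to the resulting data.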

Table 1.

Range of parameter values used to generate data sets that were fit in the cross-fit simulation study. Note that when a and T er in the diffusion model were varied, s z and s t were fixed at 0.044 and 0.225, respectively. “Default” indicates the value each parameter takes when fixed across simulations. See Appendix A for definitions of parameters not covered in the main body of the text

| Model | | b-A | A | T er | s | v | |
|---|---|---|---|---|---|---|---|
| LBA | Min | 0 | .15 | .1 | .15 | .5 | |
| | Max | .5 | .45 | .4 | .35 | 1 | |
| | Default | .12 | .25 | .25 | .27 | .74 | |
| **Model** | | **a** | **T er** | **η** | **s z/a** | **s t/T er** | **v** |
| Diffusion | Min | .07 | .3 | .01 | .01 | - | .01 |
| | Max | .2 | .6 | .25 | .7 | - | .45 |
| | Default | .125 | .435 | .133 | .044 | .225 | .23 |

For each simulated data set, we estimated the best-fitting parameters for the other model, always fixing all parameters except v across difficulty conditions. We fit using Quantile Maximum Probability Estimation (QMPE; Heathcote, Brown, & Mewhort, 2002) with a SIMPLEX minimization algorithm (Nelder & Mead, 1965).4 We did not use the optimal estimation procedure, maximum likelihood, because of the absence of a computationally efficient approximation to the diffusion model’s likelihood function. In general, QMPE produces similar results to maximum likelihood estimation when it is based on a large number of quantile bins. In the simulation study we used 99 equally spaced quantiles between .01 and .99. To confirm asymptotic equivalence, we repeated our fits of the LBA to diffusion data using maximum likelihood; results for the two estimation methods agreed very closely.
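The quantile-based objective can be sketched as below. This is a simplified illustration of the general idea, in which the observed quantiles define bins and the model is scored by the multinomial likelihood of the bin counts. It is not the fitting code used in the study: it handles a single RT distribution rather than joint choice and RT data, the shifted exponential is only a stand-in for a model CDF, and all function names are assumptions made here. The minimization step (SIMPLEX in the text) is not shown.

```python
import math

def shifted_exp_cdf(t, shift, scale):
    """Stand-in model CDF (shifted exponential); not the LBA or diffusion CDF."""
    return 0.0 if t <= shift else 1.0 - math.exp(-(t - shift) / scale)

def shifted_exp_quantile(p, shift, scale):
    """Inverse of shifted_exp_cdf, used here to create example quantiles."""
    return shift - scale * math.log(1.0 - p)

def qmpe_neg_log_likelihood(cdf, quantile_values, n_obs, probs):
    """Negative multinomial log-likelihood of quantile-bin counts.

    quantile_values[j] is the observed RT quantile at cumulative probability
    probs[j]; each bin between adjacent quantiles holds a known share of the
    n_obs observations, and the model assigns that bin a probability mass.
    """
    edge_probs = [0.0] + list(probs) + [1.0]
    model_cdf = [0.0] + [cdf(q) for q in quantile_values] + [1.0]
    total = 0.0
    for j in range(1, len(edge_probs)):
        count = n_obs * (edge_probs[j] - edge_probs[j - 1])  # observed count in bin
        mass = max(model_cdf[j] - model_cdf[j - 1], 1e-10)   # model mass, floored
        total += count * math.log(mass)
    return -total
```

With the 99 quantiles described above, `probs` would be `[i / 100 for i in range(1, 100)]`, and the returned value is the quantity a minimizer would reduce by adjusting the model parameters.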

LBA fits to diffusion data

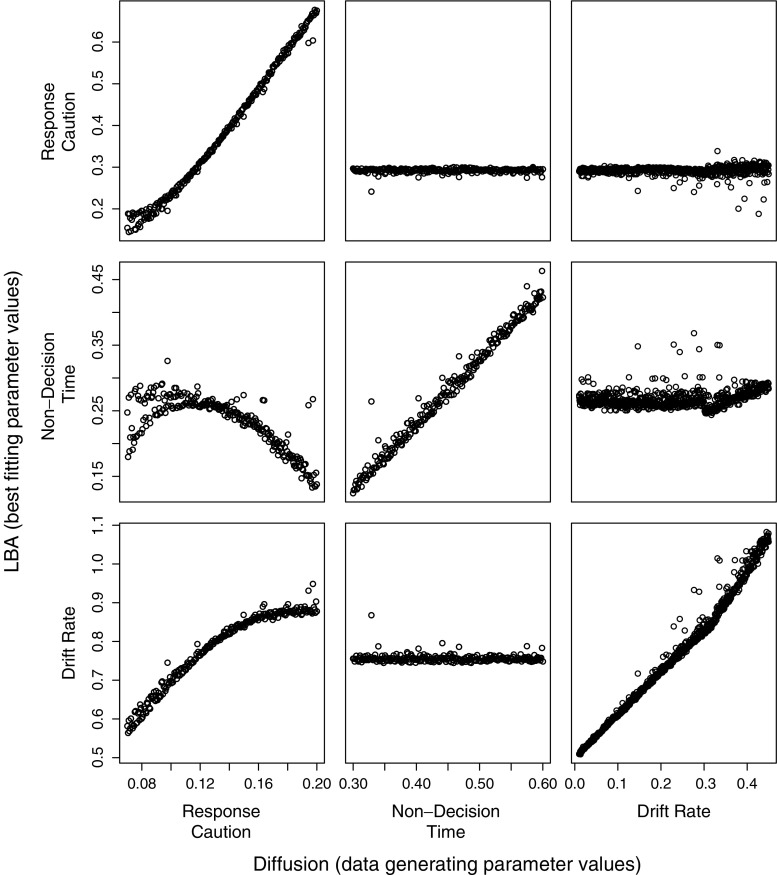

We first report the results of fits of the LBA to data generated from the diffusion model. Figure 2 shows the effect of changing the three primary parameters of the diffusion model (rate of information accumulation, response caution and non-decision time) on the three corresponding parameters of the LBA. If the corresponding parameters of the two models map closely to each other then we expect to see monotonically increasing functions on the main diagonal of the plots shown in Fig. 2. Further, if the parameters of our models map selectively to each other then we also expect to see lines of zero slope in all plots not on the main diagonal of the figure.

Fig. 2.

Changes in parameters corresponding to response caution, non-decision time, and drift rate in the LBA model (b − A/2, T er, v: rows) caused by systematic changing of diffusion model parameters (a, T er, v: columns)

The center and right columns of Fig. 2 show that changes in drift rate and non-decision time for the diffusion model correspond almost exclusively to changes in the corresponding parameter of the LBA. For example, when drift rate in the diffusion model increased (from 0 to 0.5, right-hand column of Fig. 2), the estimated drift rate parameter for the LBA increased (from 0.55 to about 0.9), while the non-decision time and the response caution parameters for the LBA were unaffected. Effects on T er were even simpler (see the middle column of Fig. 2): a unit change in the T er parameter of the diffusion model resulted in a unit change in the corresponding T er parameter of the LBA model.

The left column of Fig. 2 shows that the effect of changing the response caution parameter in the diffusion model was not as simple. Increasing the diffusion model’s response caution parameter (a) caused a corresponding increase in the LBA model’s response caution parameter (b − A/2), as seen in the top-left panel. However, other LBA parameters were also affected. Drift rate in the LBA increased with the diffusion model’s a parameter, but with an effect size only about half as large as the change in LBA drift rate caused by changing diffusion model drift rate. The non-decision time parameter for the LBA first increased, then decreased with increasing response caution in the diffusion model, with an effect size about one-third the magnitude of that caused by direct changes in the diffusion model non-decision time.

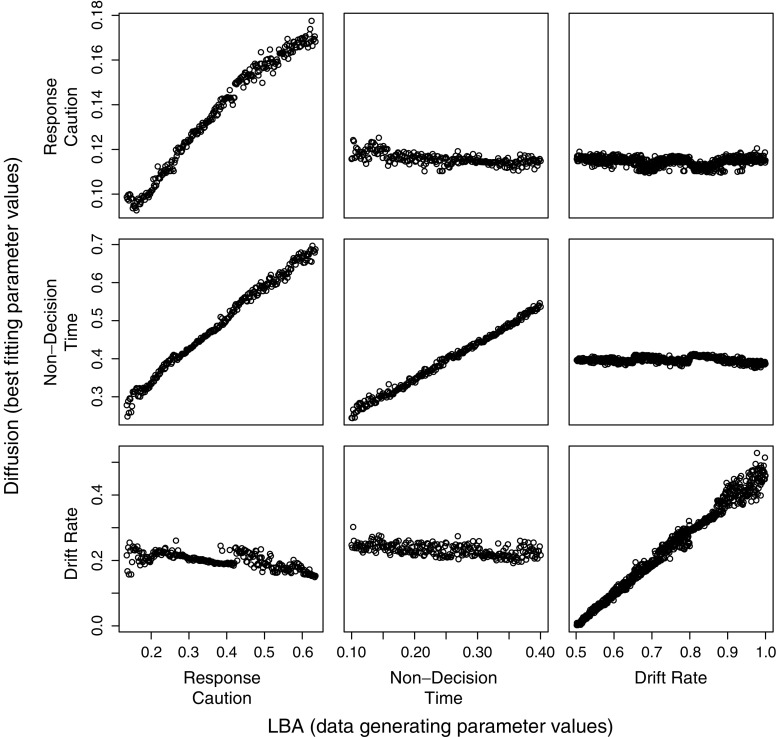

Diffusion fits to LBA data

Figure 3 shows the changes in diffusion model parameter estimates caused by changes in parameters of the data-generating LBA model. Once again, a unit change in the non-decision time parameter of the data-generating model (the LBA) was associated with a unit change in the corresponding parameter of the estimated model (the diffusion model). Changes in both the LBA non-decision time and drift rate parameters also caused very selective effects, resulting in changes only in the corresponding parameter of the diffusion model. However, once again, the response caution parameters did not map neatly between the models. Changing the LBA response caution parameter caused a corresponding change in the diffusion model response caution parameter (a), but also caused a change in the estimated non-decision time. The latter change was also very large, nearly twice as large as the range over which we varied non-decision time in the data-generating models.

Fig. 3.

Changes in parameters corresponding to response caution, non-decision time, and drift rate in the diffusion model (a, T er, v: rows) caused by systematic changing of LBA model parameters (b − A/2, T er, v: columns)

Discussion of simulation results

Changes in the non-decision time parameter (T er) from either model were reflected almost exclusively in equal-sized changes of the non-decision time parameter of the other model. This correspondence is to be expected, since these parameters have simple and identical influences on predictions from both models (a shift in RT distribution, with no effect on distribution shape or response accuracy). Note, however, that non-decision time estimates were generally smaller for the LBA model than for the diffusion model. The parameters that index the quality of information (drift rate) were also mapped appropriately between the models. Since drift rate is the major determinant of accuracy in response time models, it is perhaps unsurprising that these two parameters are so simply related. In a more general sense, our results suggest that the rate of evidence accumulation is an appropriate metric for the quality of performance, irrespective of the assumed decision model architecture.

The response caution parameters did not produce such a neat correspondence. Increasing the response caution parameter in one model was associated with an increase in the corresponding parameter from the other model, as expected. However, other model parameters were also affected by underlying changes in response caution. This problem was slightly different for the two models. When data were generated from the diffusion model, changes in response caution affected drift rate and non-decision time in the LBA, while response caution changes in data generated from the LBA model caused changes only in non-decision time for the diffusion model.

For typical parameter settings, changes in response caution lead to larger shifts in the leading edge of the RT distribution for the LBA model than for the diffusion model. This occurs because, except at the fastest speed-accuracy settings, the LBA model predicts a slow rise in the first part of the RT distribution, that is, a period of time just after T er during which responses are unlikely. In contrast, the diffusion model predicts a sharp leading edge, with many responses predicted soon after the non-decision period. The technical reason for these divergent predictions concerns the speed with which evidence accumulates in the models. In the LBA model, evidence accumulates at a well-defined speed, governed by the normal distribution of drift rates across trials, and this makes it unlikely for a response to be triggered immediately after T er. However, in the diffusion model, moment-by-moment fluctuations mean that very fast evidence accumulation is more probable, so responses are more likely to be triggered soon after T er.5

The different leading edge predictions of the models appear quite simple, but caused complex effects in our simulations. Increasing response caution in the LBA increases the predicted length of the slow period after T er, and the diffusion model matches this by increasing T er. Conversely, the LBA model must increase drift rate so that the predicted leading edge remains stationary in order to mimic the effect of increasing response caution in the diffusion model.

Analyses of extant data sets

Our simulation study revealed that the LBA and diffusion model disagree on the influence of response caution. This disagreement is of potential concern since it suggests that the two models would differ in their explanation of an empirical manipulation of response caution, with no way of knowing which model’s account is correct. Because of this potential for confusion, we investigated the effect of changes in response caution in empirical data to see where and how the models diverge. Perhaps surprisingly, the potentially problematic mis-mapping does not arise in real data sets, a result also reported by Forstmann et al. (2008). That is, when both models are fit to empirical data and the primary parameters are allowed to vary across conditions, the models agree closely about the direction and relative magnitudes of those effects. In an accompanying Online Appendix we report detailed analyses of two data sets that both included experimental manipulations of response caution and were selected on this basis to investigate the expected mis-mapping between the models’ response caution parameters.

In the analysis reported in the Appendix we dropped all a priori assumptions and allowed all primary parameters to vary between experimental conditions. This meant the two models could have accounted for the same data by attributing observed effects to quite different underlying cognitive processes. Despite this freedom, both models agreed on which parameters were influenced by empirical manipulations, even those manipulations thought to uniquely influence response caution.

In addition to the data included in our Online Appendix, we have also repeated the comparison of LBA and diffusion model fits using data from many other experiments and paradigms, with both within-subject manipulations (Donkin et al., 2009; Forstmann et al., 2008; Ratcliff & Rouder, 1998; Ratcliff et al., 2004a) and between-subject manipulations (Donkin, Heathcote, Brown, & Andrews, 2009; Ratcliff et al., 2004c; Ratcliff et al., 2004d; Ratcliff et al., 2001, 2003). In all cases, even for experiments that included a manipulation of response caution, the models overwhelmingly agreed on which parameters should vary across conditions.

The contrast between the results of the empirical and simulation studies suggests that an experimental manipulation of response caution does not selectively influence response caution parameters in either model. For example, if the diffusion account of speed- vs. accuracy-emphasis were true, we would have expected to see large changes in both response caution and drift rate across speed-accuracy conditions when the data were analyzed with the LBA. Similarly, if the LBA account were true, we would have observed large differences in both non-decision time and response caution in the diffusion model. We did not observe either of these patterns, suggesting that changes in either model’s response caution parameter alone do not provide a perfect description of the effects of real manipulations of response caution.

Our analysis of real data suggests that neither model’s account of response caution is “true.” Of course, no cognitive model is true in the strict sense; for example, both models ignore a plethora of systematic effects such as response and stimulus repetition effects, post-error slowing, practice, and fatigue. The additional error associated with these, and other, un-modeled phenomena may reduce our ability to detect the mis-mapping we observe in our simulations. In addition, there are some obvious differences between real data and our simulation study that could, in practice, serve to reduce the mis-mapping between response caution in our two models. For example, to investigate selective influence we varied only one parameter at a time when creating our simulated data sets, thus ignoring the correlation between parameters typically observed in real data. This correlation may mean that response caution manipulations observed in real data are smaller than those in our simulations, and so we may not have enough power to identify the expected mis-mapping. On the other hand, we were able to rule out other differences between real data and our simulation study as potential causes for the disappearance of the mis-mapping we observed. In particular, we found that the mis-mapping still occurred when we repeated our simulations with small sample sizes—representative of real data—and despite the inclusion of contaminant processes (cf. Ratcliff & Tuerlinckx, 2002).

Concluding comments

Van Ravenzwaaij and Oberauer (2009) reported that in their simulation study the parameters of the diffusion and LBA models did not correspond in any clear way. In contrast, we identified clear and simple mappings between two of the three parameters of most substantive interest, drift rate and non-decision time. However, we did find one parameter – response caution – which did not have a simple mapping. The difference in findings between the two studies could be due to the different model parameterizations employed (as discussed earlier), but could also be caused by the different methods used to investigate the mapping of parameters. For each set of simulated data, Van Ravenzwaaij and Oberauer (2009) randomly sampled each parameter, while we were focused on the selective influence of individual parameters, and thus manipulated just one parameter at a time.

The diffusion model and the LBA assume very different frameworks for how rapid decisions are made. We have shown that because of the different frameworks, corresponding parameters do not always map simply between the two models. The mis-mapping is quite specific to how the models describe response caution. Surprisingly, however, in fits to real data, when response caution is experimentally manipulated, the two models lead to the same substantive conclusions (see our Online Appendix for examples). In other words, we find no reason to doubt any previously drawn conclusions using either model. However, since it is possible that an experimental manipulation we have not investigated could lead to the mis-mapping we observed in simulations, we suggest that if one observes the selective influence of response caution in either the LBA or diffusion model, it would be prudent to confirm the effects in the alternate model.


Acknowledgments

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Footnotes

In all fits reported herein we followed the standard convention that within-trial variability in the diffusion model, s, was fixed at 0.1.

In other words, the means of the between-trial drift rate distributions for correct and error accumulators sum to one. This does not imply that the drift rates in the two accumulators on any one trial also sum to one.

We use […] because it is the expected value of the uniform start point distribution, and is therefore equivalent to z in the diffusion model, thus making […] and a equivalent whenever the process is unbiased, except for a scale factor of […].

To ensure that we identified best-fitting parameters, we used multiple long SIMPLEX runs with different starting points, together with heuristics for generating good starting points.

This property is also likely to hold for other models that assume finite rates of evidence accumulation. For example, the diffusion model can be motivated as a continuous approximation to a discrete random walk (Estes, 1959). However, the simplest version of a discrete random walk, where each sample takes a fixed time and adds a fixed unit of evidence favoring one response or the other, cannot make a response in fewer time steps than the distance between the starting value and the closest boundary. This point may have important implications as the discrete random walk is used as a decision process in some modern psychological theories (Logan, 2002; Nosofsky & Palmeri, 1997).
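
The lower bound on decision time in the simplest discrete random walk can be checked with a small simulation. This is a minimal sketch under assumed values: the integer start point, the boundaries, and the step probability are hypothetical and chosen only to illustrate the point made in the preceding footnote.

```python
import random


def random_walk_trial(start, lower, upper, p_up, rng):
    """Simulate one trial of a simple discrete random walk.

    Each step takes one time unit and moves the evidence total by exactly
    +1 (with probability p_up) or -1. Returns (steps, boundary_reached).
    """
    position, steps = start, 0
    while lower < position < upper:
        position += 1 if rng.random() < p_up else -1
        steps += 1
    return steps, ("upper" if position >= upper else "lower")


rng = random.Random(0)
start, lower, upper = 3, 0, 10  # hypothetical start point and boundaries
min_distance = min(start - lower, upper - start)

trials = [random_walk_trial(start, lower, upper, 0.6, rng) for _ in range(2000)]
fastest = min(steps for steps, _ in trials)

# No trial can finish in fewer steps than the distance to the closest boundary.
assert fastest >= min_distance
```

Because each sample adds exactly one unit of evidence, the fastest possible response requires as many steps as there are units between the start point and the nearer boundary, regardless of the step probability.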

References

- Brown S, Heathcote A. A ballistic model of choice response time. Psychological Review. 2005;112:117–128. doi: 10.1037/0033-295X.112.1.117.

- Brown S, Heathcote AJ. The simplest complete model of choice reaction time: Linear ballistic accumulation. Cognitive Psychology. 2008;57:153–178. doi: 10.1016/j.cogpsych.2007.12.002.

- Donkin C, Brown S, Heathcote A. The over-constraint of response time models: Rethinking the scaling problem. Psychonomic Bulletin & Review. 2009;16:1129–1135. doi: 10.3758/PBR.16.6.1129.

- Donkin C, Heathcote A, Brown S, Andrews A. Non-decision time effects in the lexical decision task. In: Taatgen NA, van Rijn H, editors. Proceedings of the 31st annual conference of the cognitive science society. Austin: Cognitive Science Society; 2009.

- Dutilh G, Vandekerckhove J, Tuerlinckx F, Wagenmakers E-J. A diffusion model decomposition of the practice effect. Psychonomic Bulletin & Review. 2009;16:1026–1036. doi: 10.3758/16.6.1026.

- Estes WK. A random walk model for choice behavior. In: Arrow KJ, Karlin S, Suppes P, editors. Mathematical models in the social sciences. California: Stanford University Press; 1959. pp. 53–64.

- Forstmann BU, Brown SD, Dutilh G, Neumann J, Wagenmakers E-J. The neural substrate of prior information in perceptual decision making: A model based analysis. Frontiers in Neuroscience. 2010;4:Article 40.

- Forstmann BU, Dutilh G, Brown S, Neumann J, von Cramon DY, Ridderinkhof KR, et al. The striatum facilitates decision-making under time pressure. Proceedings of the National Academy of Sciences. 2008;105:17538–17542. doi: 10.1073/pnas.0805903105.

- Heathcote A, Brown SD, Mewhort DJK. Quantile maximum likelihood estimation of response time distributions. Psychonomic Bulletin & Review. 2002;9:394–401. doi: 10.3758/BF03196299.

- Ho T, Brown S, Serences J. Domain general mechanisms of perceptual decision making in human cortex. The Journal of Neuroscience. 2009;29:8675–8687. doi: 10.1523/JNEUROSCI.5984-08.2009.

- Logan GD. An instance theory of attention and memory. Psychological Review. 2002;109:376–400. doi: 10.1037/0033-295X.109.2.376.

- Ludwig CJ, Farrell S, Ellis LA, Gilchrist ID. The mechanism underlying inhibition of saccadic return. Cognitive Psychology. 2009;59:180–202. doi: 10.1016/j.cogpsych.2009.04.002.

- Matzke D, Wagenmakers E-J. Psychological interpretation of ex-Gaussian and shifted Wald parameters: A diffusion model analysis. Psychonomic Bulletin & Review. 2009;16:798–817. doi: 10.3758/PBR.16.5.798.

- Myung IJ, Pitt MA. Applying Occam’s razor in modeling cognition: A Bayesian approach. Psychonomic Bulletin & Review. 1997;4:79–95. doi: 10.3758/BF03210778.

- Nelder JA, Mead R. A simplex algorithm for function minimization. Computer Journal. 1965;7:308–313.

- Nosofsky RM, Palmeri TJ. An exemplar–based random walk model of speeded classification. Psychological Review. 1997;104:266–300. doi: 10.1037/0033-295X.104.2.266.

- Ratcliff R. A theory of memory retrieval. Psychological Review. 1978;85:59–108. doi: 10.1037/0033-295X.85.2.59.

- Ratcliff R. A diffusion model account of response time and accuracy in a brightness discrimination task: Fitting real data and failing to fit fake but plausible data. Psychonomic Bulletin & Review. 2002;9:278–291. doi: 10.3758/BF03196283.

- Ratcliff R, Gomez P, McKoon G. Diffusion model account of lexical decision. Psychological Review. 2004;111:159–182. doi: 10.1037/0033-295X.111.1.159.

- Ratcliff R, Hasegawa YT, Hasegawa YP, Smith PL, Segraves MA. Dual diffusion model for single-cell recording data from the superior colliculus in a brightness-discrimination task. Journal of Neurophysiology. 2007;97:1756–1774. doi: 10.1152/jn.00393.2006.

- Ratcliff R, Perea M, Colangelo A, Buchanan L. A diffusion model account of normal and impaired readers. Brain and Cognition. 2004;55:374–382. doi: 10.1016/j.bandc.2004.02.051.

- Ratcliff R, Philiastides MG, Sajda P. Quality of evidence for perceptual decision making is indexed by trial-to-trial variability of the EEG. Proceedings of the National Academy of Sciences. 2009;106:6539–6544. doi: 10.1073/pnas.0812589106.

- Ratcliff R, Rouder JN. Modeling response times for two-choice decisions. Psychological Science. 1998;9:347–356. doi: 10.1111/1467-9280.00067.

- Ratcliff R, Spieler D, McKoon G. Explicitly modeling the effects of aging on response time. Psychonomic Bulletin & Review. 2000;7:1–25. doi: 10.3758/BF03210723.

- Ratcliff R, Thapar A, Gomez P, McKoon G. A diffusion model analysis of the effects of aging in the lexical–decision task. Psychology and Aging. 2004;19:278–289. doi: 10.1037/0882-7974.19.2.278.

- Ratcliff R, Thapar A, McKoon G. The effects of aging on reaction time in a signal detection task. Psychology and Aging. 2001;16:323–341. doi: 10.1037/0882-7974.16.2.323.

- Ratcliff R, Thapar A, McKoon G. A diffusion model analysis of the effects of aging on brightness discrimination. Perception & Psychophysics. 2003;65:523–535. doi: 10.3758/BF03194580.

- Ratcliff R, Thapar A, McKoon G. A diffusion model analysis of the effects of aging on recognition memory. Journal of Memory and Language. 2004;50:408–424. doi: 10.1016/j.jml.2003.11.002.

- Ratcliff R, Thapar A, McKoon G. Aging and individual differences in rapid two–choice decisions. Psychonomic Bulletin & Review. 2006;13:626–635. doi: 10.3758/BF03193973.

- Ratcliff R, Thapar A, McKoon G. Aging, practice, and perceptual tasks: A diffusion model analysis. Psychology and Aging. 2006;21:353–371. doi: 10.1037/0882-7974.21.2.353.

- Ratcliff R, Thapar A, McKoon G. Application of the diffusion model to two-choice task for adults 75-90 years old. Psychology and Aging. 2007;22:56–66. doi: 10.1037/0882-7974.22.1.56.

- Ratcliff R, Tuerlinckx F. Estimating parameters of the diffusion model: Approaches to dealing with contaminant reaction times and parameter variability. Psychonomic Bulletin & Review. 2002;9:438–481. doi: 10.3758/BF03196302.

- Usher M, McClelland JL. On the time course of perceptual choice: The leaky competing accumulator model. Psychological Review. 2001;108:550–592. doi: 10.1037/0033-295X.108.3.550.

- Van Ravenzwaaij D, Oberauer K. How to use the diffusion model: Parameter recovery of three methods: EZ, fast-dm, and DMAT. Journal of Mathematical Psychology. 2009;53:463–473. doi: 10.1016/j.jmp.2009.09.004.

- Wagenmakers E-J, Ratcliff R, Gomez P, Iverson GJ. Assessing model mimicry using the parametric bootstrap. Journal of Mathematical Psychology. 2004;48:28–50. doi: 10.1016/j.jmp.2003.11.004.

- Wagenmakers E-J, Ratcliff R, Gomez P, McKoon G. A diffusion model account of criterion shifts in the lexical decision task. Journal of Memory and Language. 2008;58:140–159. doi: 10.1016/j.jml.2007.04.006.

- White C, Ratcliff R, Vasey M, McKoon G. Dysphoria and memory for emotional material: A diffusion model analysis. Cognition and Emotion. 2009;23:181–205. doi: 10.1080/02699930801976770.

- White C, Ratcliff R, Vasey M, McKoon G. Sequential sampling models and psychopathology: Anxiety and reaction to errors. Journal of Mathematical Psychology. In press.
