Abstract

Summary

In functional data classification, functional observations are often contaminated by various systematic effects, such as random batch effects caused by device artifacts, or fixed effects caused by sample-related factors. These effects may lead to classification bias and thus should not be neglected. Another issue of concern is the selection of functions when predictors consist of multiple functions, some of which may be redundant. The above issues arise in a real data application where we use fluorescence spectroscopy to detect cervical pre-cancer. In this paper, we propose a Bayesian hierarchical model which takes into account random batch effects and selects effective functions among multiple functional predictors. Fixed effects or predictors in non-functional form are also included in the model. The dimension of the functional data is reduced through orthonormal basis expansion or functional principal components. For posterior sampling, we use a hybrid Metropolis-Hastings/Gibbs sampler, which suffers slow mixing. An Evolutionary Monte Carlo algorithm is applied to improve the mixing. Simulation and real data application show that the proposed model provides accurate selection of functional predictors as well as good classification.

Keywords: Bayesian hierarchical model, Evolutionary Monte Carlo, Functional data classification, Functional predictor selection, Fluorescence spectroscopy

1. Introduction

Classification with functional data is a challenging problem due to the high dimensionality of the observational space and the high correlation between adjacent points of the functional observations. One solution is to reduce the dimension and use the reduced features for classification, as done in Hall, Poskitt and Presnell (2001), Zhao, Marron and Wells (2004), and Ferré and Villa (2006). Another way is to use generalized linear regression, which was proposed by James (2002) and Müller and Stadtmüller (2005) and was applied to practical problems by Ratcliffe, Heller and Leader (2002) and Leng and Müller (2005).

In real data analysis, there are often practical issues that are not handled by the approaches mentioned above. One is the presence of systematic effects that are significant enough to bias classification, such as the artificial differences caused by measuring with different devices. In the Web Appendix A, an example is constructed to show how the device difference can mislead the classification in an unbalanced design. A similar issue is addressed in Baggerly et al. (2004). Another practical concern arises with multiple functional predictors. In this case, some functions are usually redundant or contain no information, therefore selecting a subset of the functions can reduce the cost of data collection for future observations, and may improve classification accuracy.

Our work is motivated by the investigation of fluorescence spectroscopy for cervical pre-cancer diagnosis (Ramanujam et al., 1996). In our clinical study, several different fluorescence spectra have been collected and used simultaneously for a single diagnosis. It is known that some spectral curves contain more disease related information hence are more “important” than others (Chang et al., 2002). Therefore it is beneficial to find those spectral curves that are best for diagnosis and to remove the unnecessary ones.

The fluorescence spectroscopy data analyzed here are collected in the following way. An excitation light at a fixed wavelength illuminates the cervical tissue. During illumination, the endogenous fluorescent molecules in tissue absorb the excitation light and emit fluorescent light. The emitted light is then captured by an optical detector which produces the spectrum as a smooth curve. In each measurement, the excitation light is varied at 16 different excitation wavelengths, ranging from 330 nm to 480 nm with increments of 10 nm. This produces 16 spectral curves for each measurement. In each curve, the spectral intensities are recorded at emission wavelengths ranging between 385 nm and 700 nm. During data preprocessing, the curves are truncated so that some intensity points at the smallest and largest emission wavelengths are removed.

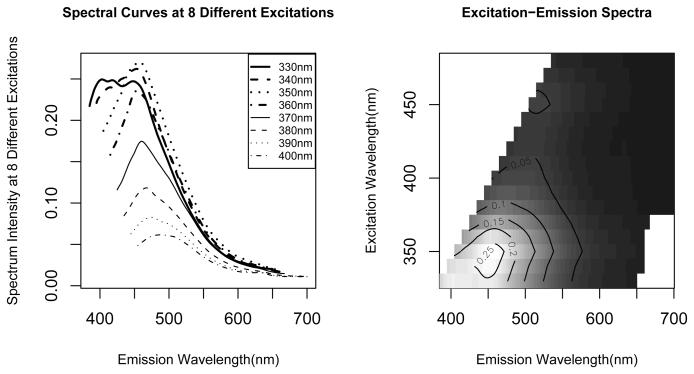

Figure 1 illustrates one observation. The left panel shows the first 8 of the total 16 spectral curves from this observation. The right panel shows a heat plot of the intensities, by stacking up all the 16 spectra in the order of their excitation wavelength. We call such a set of fluorescence spectroscopy curves an excitation-emission matrix (EEM).

Figure 1.

Left panel: spectral curves at 8 different excitation wavelengths ranging from 330nm to 400nm. Right panel: heat plot of an excitation-emission matrix (EEM).

One purpose of this study is to select a few excitation curves out of a total of 16 for diagnosis. The selected curves can then be measured in the future to reduce the device cost and measurement time. Several factors that are brought in by the experimental design need to be considered in this study. First, the data are obtained using two instruments with four optical probes located at three clinics. A preliminary study shows that there exist significant differences among the data from different device-probe-clinic combinations, which will put the classification at risk since the diseased cases are rare and distributed inhomogeneously across these combinations, like the example shown in the Web Appendix A. Second, in addition to device-clinic differences, it is believed that other factors, such as tissue type of the sample and menopausal status of the patient, will be confounded with the disease information. These factor effects are shown through box-plots in Web Figure 1.

This paper proposes a Bayesian hierarchical model with selection of functional predictors for complex functional data classification problems, where multiple functional predictors are influenced by random batch effects and fixed effects. We extend the idea of Bayesian variable selection to generalized functional linear regression with binary responses. Details on Bayesian variable selection can be found in George and McCulloch (1993, 1997) and Brown, Vannucci and Fearn (1998, 2002). We use a Bayesian hierarchical model to take into account random batch effects. Fixed effects or predictors in non-functional form are also included in the model. The dimension of the functional data are reduced through orthonormal basis expansion or functional principal component analysis. A Hybrid Gibbs/Metropolis-Hasting sampler is used for posterior sampling, which we find mix slowly. An Evolutionary Monte Carlo (EMC) algorithm (Liang and Wong, 2000) is then applied for better mixing. Similarly to most variable selection methods, our proposed model can serve for both predictor selection and prediction(with model averaging). In our current application, we are mainly interested in selecting functional predictors to be measured in the future at a reduced cost. However, the model may also be applied to classification problems with redundant functional predictors simply to improve prediction.

The rest of the paper is organized as follows. We introduce the Bayesian hierarchical model with functional predictor selection in Section 2, discuss the selection of parameters in Section 3, and describe the proposed MCMC algorithms in Section 4. Simulation results are shown in Section 5. The application of the proposed model to the fluorescence spectroscopy data is given in Section 6, followed by discussion in Section 7. More discussion on setting priors and parameters in MCMC algorithms can be found in Web Appendix C.

2. The Bayesian hierarchical model with selection of functional predictors

2.1 The proposed model

Suppose that we obtain functional observations from L exchangeable batches, in which the lth batch contains nl observations and each observation contains J functions. For l = 1, …, L, i = 1, …, nl and j = 1, …, J, let be the jth function observed from the ith observation in batch l, which takes values in L2[Tj], with Tj the compact domain of . In addition to the functional observations, there are also non-functional observations , which is assumed to be a vector of length q. We treat the observations as predictors and assume the binary responses to be conditionally independent given the predictors. We introduce univariate latent variables which link the responses to the predictors as follows:

| (1) |

Here we take the first component of to be 1 to include the intercept term. For all i and l, we assume to be i.i.d. with distribution N(0, 1), and assume that for all j. See Albert and Chib (1993) for the use of latent variables to analyze binary response data.

In many cases, some functional predictors do not contribute to the the classification, and selecting a subset of them may actually improve the classification accuracy. In our application introduced in Section 1, there are also economic reasons for using a subset of the J functional predictors. To this end, we introduce a hyper-parameter τ to the priors of , where τ = (τ1, …, τJ)T and each component takes values either 1 or 0, indicating whether or not the corresponding functional predictor is selected. The proposed priors for α and are as follows:

| (2) |

where , d1, d2, ωj are pre-specified prior parameters. GP(μ, γ) denotes a Gaussian process with mean μ(t) and covariance function γ(s, t). We let γτj depend on τj by

| (3) |

where is a complete orthonormal basis of L2[Tj]. Note that the infinite sum in equation (3) is a perfectly general form for a covariance function; it is simply the spectral representation of a covariance function (Ash and Gardner, 1975). We will treat and as prior parameters and make specific choices of them. In equation (3), we let ν1 >> ν0 > 0 and set ν0 to be close to 0. Under this setting, both and will have covariances function close to 0 when τj = 0 (i.e., the jth functional predictor is not selected), and have relatively large variances when τj = 1(i.e., the jth functional predictor is selected). This type of prior is motivated by George and McCulloch (1993, 1997) where they used mixture-normal priors for variable selection. The 's in equation (3) are pre-specified positive weight parameters subject to for all j. For simplicity, we assume that the Gaussian process priors for are independent for all j and l, and that priors for τj are independent for all j. In order to do practical posterior inference, it will be necessary to construct finite dimensional approximations to the functional predictors and coefficients. This will be described in detail in Section 2.2 below.

2.2 The posterior inference

From equation (1) and the standard normal assumption of , it is easy to see that the conditional distribution of given , α and is a truncated normal

| (4) |

where and I{·} is the indicator function. Since is a complete orthonormal basis of L2[Tj], we can expand and by

| (5) |

and use the truncated versions of (5) to approximate and . Note that the orthonormal basis can be chosen to be a known basis such as Fourier or wavelet basis. If assuming that has zero mean and , Mercer's theorem and Karhunen-Loève theorem (Ash and Gardner, 1975) suggest taking the eigenfunctions of the covariance operator Kj as the orthonormal basis, where Kj is defined by

In this case, the coefficients are called functional principal component (FPC) scores of . Note that using the FPC method is different from using fixed basis expansions in that the eigenfunctions need to be estimated. Various estimating methods have been proposed in Ramsay and Silverman (1997) and Hall, Müller and Wang (2006).

Once the orthonormal basis coefficients or the FPC scores have been estimated, we can reduce (1) by applying the truncated approximations in (5), which gives

| (6) |

where pj is the truncation parameter for the jth functional predictor. The notation of the above equation can be simplified by concatenating coefficients of the J functions to make one vector bl. The simplified form of equation (6) is

| (7) |

where and . Sl is a matrix of size nl × q with the ith row equals , and Cl is a matrix of size with the ith row equals . Similarly, . Based on (7), the conditional distribution of the latent variables in (4) becomes

| (8) |

where μl = Slα + Clbl and . The truncated orthonormal basis expansion or FPC analysis also reduce the Gaussian process priors for and to multivariate normal priors

| (9) |

where Στ = Dτ W1/2RW1/2Dτ. Here R is the prior correlation matrix of bl and b0. In our setup, R = Ip, an identity matrix since in Section 2.1 we assumed that 's are independent for all j's. W is also a diagonal matrix of size p, with positive diagonal components . In other words, the diagonal of W concatenates the first pj components of the weight sequence , for j = 1, …, J. Dτ is another diagonal matrix with diagonal components

where , for k = 1, …, pj, j = 1, …, J. Note that does not depend on k.

With the conditional distribution (8), the priors for α, τ and in (2), and the reduced multivariate priors for bl and b0 in (9), we get the joint conditional posterior distribution of α, bl's, b0, , τ given Zl's and Yl's by

| (10) |

The parameters α, bl's and b0 can all be integrated out sequentially from (10), which gives the marginal conditional posterior distribution

| (11) |

See the Web Appendix B for details of the integration. Based on (8), (10) and (11), MCMC algorithms can be designed to obtain posterior samples of the parameters. Using the posterior samples of bl's, we can estimate 's. For new observations, we can use the estimated 's and posterior estimate of α for prediction.

3. Setting parameters

It is important to determine the truncation parameters pj used in basis expansion (see equation (6)). One could set up priors for each pj and adopt reversible jump MCMC (Green, 1995) for posterior sampling. This strategy is reasonable but will introduce extra complications for MCMC. Another strategy of determining the pj's is through cross-validation. A test set can be used and the pj's can be determined through maximizing the prediction performance on test set. This method is straightforward but prohibitive unless one assumes pj ≡ p. It is also computationally expensive since it requires the model to be trained on all possible choices of p. For this paper, we propose a simple practical method for determining pj's. Since the truncated basis expansion is used to approximate the original functional predictors, we set an approximation criterion to determine pj. For example, if using FPC analysis, we set the criterion to be , for 0 < c1 ≤ 1, 1 ≤ pj ≤ K. Here λ̂k's are the estimated eigenvalues, and K is the maximum number of eigenvalues that are non-zero. Here f̂(pj) represents the proportion of variability accounted using the first pj FPC's. We usually set c1 above 0.99 in this paper. If using a known orthonormal basis, we suggest to let f̂(pj) = 1 − Σi∥xij(t) − x̂ij(t)∥2/∥xij(t)∥2 ≥ c2, where x̂ij(t) is the estimated function of xij(t) after truncating at pj, and ∥·∥ is the L2 norm. We also suggest setting c2 above 0.99.

The weight sequences in equation (3) will determine the weight matrix W in (9). We discuss the choices of here. We know that and . The main effect of is to weight higher orders of the orthonormal basis toward zero so that the series in (3) converges. In this paper, we always set so that all the weights are between 0 and 1. We determine by another parameter m such that for all k = 1, …, ∞ and all j. Smaller value of m will make decay to zero faster. The values of are truncated at pj to form the weight matrix W. We usually take m between 0.5 and 0.9. The setting of other MCMC parameters and priors is discussed in Web Appendix C.

4. Markov Chain Monte Carlo

Based on the model constructed in Section 2, we propose two MCMC algorithms for posterior sampling. The first one is a hybrid Metropolis-Hastings/Gibbs sampler, and the second one is a modified version of Algorithm 1 which uses the EMC algorithm to improve the mixing when the number of functional predictors is relatively large.

4.1 MCMC Algorithm 1 (Hybrid Metropolis-Hastings/Gibbs Sampler)

Step 0. Set initial values for bl's, α, τ and .

Step 1. For l = 1, …, L, conditional on Yl and current values of bl and α, update Zl from the truncated normal distribution described in equation (8) of Section 2.2.

- Step 2. Update based on . Sample a proposal by , with ε ~ N(0, δ2). δ is an adjustable step size. Compute the ratio

and update with probability min(1, Rσ). - Step 3. Update τ based on . First generate a proposal τ̃ using “switch/swap”, i.e., provided that τ does not contain all 1's or all 0's, with probability ξ, randomly swap one 1 term with one 0 term; and with probability 1 − ξ, randomly pick one position and switch it. Then let

and update τ = τ̃ with probability min(1, Rτ). Step 4. Update α conditional on current values of , τ and Zl through the conditional distribution , where μα and Vα are defined as in Web Appendix B.

Step 5. Conditional on current values of α, , τ and Zl, update b0 by b0|α, , τ, Zl ~ N(μ0, V0), where μ0 and V0 are defined as in Web Appendix B.

Step 6. Conditional on current values of b0, α, , τ and Zl, update bl by bl|b0, α, , τ, Zl ~ N(μl, Vl) for all l, where μl and Vl are defined as in Web Appendix B.

Repeat step 1 – 6 until the maximum number of iterations is reached.

The “switch/swap” proposal used in step 6 is similar to the methods used in Brown et al. (1998, 2002). Our simulation shows that if the number of functional predictors is small, this type of proposal can locate the correct value of τ within a few iterations. However, when the number of functional predictors become large, the number of possible values of τ increases at an exponential rate. The “switch/swap” proposal can hardly find successful proposals because of the discrete nature of the large state space, which results in extremely low acceptance rate (e.g., acceptance rate less than 0.1%).

In order to obtain better mixing for τ, we construct a more effective EMC algorithm based on Algorithm 1. The EMC algorithm is a MCMC scheme that inherits the attractive features of both simulated annealing and genetic algorithms. It simulates a population of I Markov chains in parallel, each chain with a different “temperature”. The temperatures are ordered decreasingly to form a “ladder”. If π(θ) denotes the target posterior distribution and ti denotes the temperature for the ith chain, then the transformed posterior for the ith chain is πi(θ) ∝ π(θ)1>/ti. Such transformations have the effect of making the unnormalized target posterior density more flat or more spiky. The EMC algorithm improves on Metropolis-Hastings updates by introducing three operations: mutation, crossover and exchange. These operations allow both independent updates for each chain and interactions between neighboring chains. More details on EMC algorithm can be found in Liang and Wong (2000), Liu (2001), Goswami and Liu (2007) and Bottolo and Richardson (2008).

In the EMC algorithm, the choice of temperatures for the temperature ladder is important. We adopt a simple method suggested in Bottolo and Richardson (2008), which uses a geometric sequence and adjusts the common ratio in a burn-in period so that the acceptance rate for the exchange operation is between 10% and 60%. The number of chains I and the maximum temperature are pre-specified. Based on our experiences, we suggest choosing I to be around J/2, and the maximum temperature between 10 and 103. The Algorithm 2 stated below gives details of the EMC algorithm.

4.2 MCMC Algorithm 2 (EMC)

Step 0. Set initial values for bl's, α, τ and . And set up an initial temperature ladder: t1 > t2 > … > tI > 0, where ti+1/ti = a (i = 1, …, I) denotes the initial ratio of the geometric sequence. We re-adjust the temperature ladder so that t1 is bounded by the maximum temperature and one chain has temperature exactly 1. We also set the step-size for adjusting temperature to be δa = log2(a)/(3ñ), where and ñ is the ratio of the burn-in period a block size(usually 100). We also set the parameter ζ, the probability of mutation and crossover, and ξ, the probability of switch and swap within the mutation step.

Step 1. Run step 1 – 2 in Algorithm 1 independently for each chain, obtaining samples of Zl's and .

- Step 2. Conditional on current values of Zl's and in each chain, update τ according to step 2.1 and 2.2. For convenience, here we denote , and denote πi(τ|·) the similar expression when plugging in the samples of and Zl's from the ith chain.

- Step 2.1. (mutation/crossover) With probability ζ, perform a mutation step independently for each chain, i.e., “switch” or “swap” with probability ξ, as in step 3 of Algorithm 1. In particular, denote the mutated value for the ith chain to be τ̃ and compute the log ratio log . Update τ = τ̃ with probability .

- With probability 1−ζ, perform the crossover step [I/2] times, where [I/2] denotes the integer part of I/2. The crossover is conducted as follows: randomly select a pair of chains (i, j) and exchange the right segment of τ's from a random point. Denote the old values to be (τi, τj), and the crossed values to be (τ̃i, τ̃j), we then compute the log ratio: log rc = [log πi(τ̃i|·) − log πi(τi|·)]/ti + [log π̃j|·) − log πj(τj|·)]/tj. The (τ̃i, τ̃j) are accepted with probability min(1, rc).

- Step 2.2. (exchange) Exchange the τ samples from two adjacent chains I times, i.e., randomly choose τi and τj from neighboring chains, and compute the log ratio: log re = [log πj(τj|·) − log πi(τi|·)]/ti + [log πj(τi|·) − log πj(τj|·)]/tj, and exchange τi with τj with probability min(1, re).

Step 3. Conditional on current values of Zl's, , and the current samples of τ, run step 4 – 6 in Algorithm 1 independently for each chain, obtaining samples of α, b0 and bl's.

Step 4. For every block of iterations within the burn-in period, we adjust the temperature ladder according to the acceptance rate of exchange operations within this block. A new geometric ratio ã is computed by log2 ã = log2 a ± δa, where the “+” sign is used when we would like to reduce the acceptance rate of exchange. The new temperature ladder is applied to the next block of iterations.

Repeat step 1 – 4 until the maximum number of iterations is reached.

5. Simulation results

Two simulation studies were conducted to evaluate the performance of the proposed model for functional data classification. In both simulations, we generate data that contain both random and fixed effects. Simulation 1 uses 4 functional predictors, and thus τ is a binary vector of length 4. Since the number of functional predictors is small, the MCMC Algorithm 1 works well. Simulation 2 increases the number of functional predictors to 20, in which case the Algorithm 1 suffers slow mixing. Algorithm 2 is used, which improves the mixing for posterior samples of τ.

5.1 Simulation 1

We generate n = 1000 i.i.d. observations, using 2 non-functional predictors and 4 functional predictors. For the non-functional predictors, one of them is generated from a uniform distribution on [0, 1], the other is a binary variable. The 4 functional predictors are generated using the first 10 orthonormal cosine bases on the interval [0, 1], i.e., using bases , k = 1, …, 9 (see Eubank (1999) for details of cosine series). The random effect has two levels, i.e., L = 2. We set the true value of τ to be (0, 1, 0, 1), indicating that the first and the third function do not contribute to the model, i.e., . Other parameters that are used to generate the data are . The weights used for the prior covariance are determined by parameter m = 0.51. The binary responses are generated based on (1) using numerical integration. To evaluate classification results, the data are randomly split into a training set(with 800 observations) and a test set(with 200 observations).

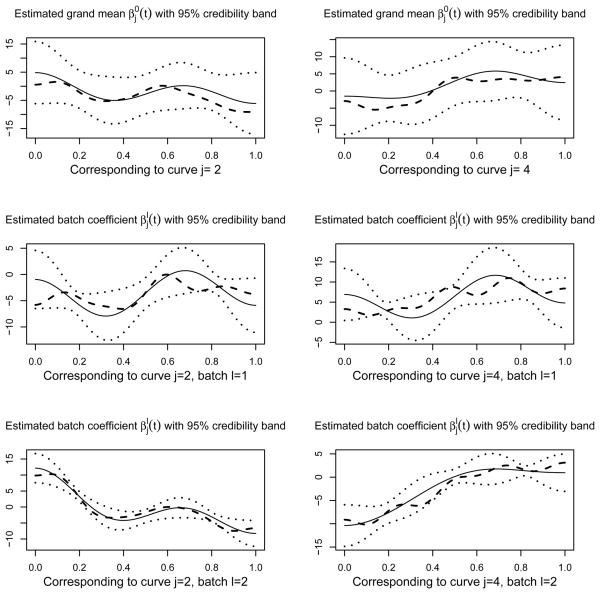

The proposed model in Section 2 is applied to the training data. We use FPC to construct the orthonormal basis and set the approximation criterion described in Section 3 to be c1 = 0.99, which results in pj = 4 for all j. Note that the computation of FPC scores for the test set is based on the eigenfunctions estimated from the training set in order to avoid possible bias. Based on the FPC scores, the model is trained using the MCMC Algorithm 1 with the following priors: , d1 = 4.3, d2 = 16, ωj ≡ 0.5, . The prior parameters for the weight matrix W is set to be such that m = 0.98. We set ζ = ξ = 0.5 and δ = 1.4. We performed 30,000 iterations with a burn in period of 15,000. It turns out that the posterior samples of τ converge to the true τ within 10 iterations. The estimated marginal posterior probability (Pr{τj = 1}, j = 1, …, 4) = (0, 1, 0, 1), indicating that our algorithm has correctly selected the second and the fourth functional predictor with high accuracy. Web Figure 2 shows the autocorrelation plot of the posterior samples of and the corresponding histogram plot. The Geweke convergence diagnostic test (Geweke, 1992) for using the first 10% and last 50% of the samples gives Z-score −0.57, indicating the convergence of the posterior sample means. The posterior median for is 5.7, and the 95% credible interval for is (3.0, 12.4). Note that since we are using a different orthonormal basis (FPC) than that used to generate data, the posterior estimates of bl's and b0 will not be comparable with the true values. However, we can estimate coefficient function 's from bl and compare them with the true coefficient functions. Figure 2 shows the posterior b means of the coefficient functions and the corresponding simultaneous 95% credibility bands for the non-zero cofficient functions, along with the true functions. The simultaneous credibility band is obtained by finding a constant M, such that 95% of the simulated posterior functions fall in the interval , where and denotes the posterior mean and standard deviation of the cofficient functions. From Figure 2, we see that the true coefficient functions lie in the 95% credibility bands.

Figure 2.

The posterior estimation of the non-zero coefficient functions and their 95% simultaneous credibility band, compared with the true coefficient functions used to generate the data. Here j is the the index for mutiple functional predictors, and l is the index for batch. 's are the grand means of all batch coefficients. The solid lines denote the posterior mean; the dotted lines denote the 95% credibility bands; the dashed lines denote the true coefficient functions. We only listed the estimations for j = 2, 4 since the functional predictors at j = 1 and 3 are unselected and thus the associated coefficient estimations are close to zero.

After the training step, the estimated coefficient functions are applied to the test set to get the posterior predictive probability. Treating yi = 1 as diseased and yi = 0 as normal, the predictions on the test set gives sensitivity 92% and specificity 99%, with the total misclassification rate 4.5%. Note that the sensitivity and specificity we reported here are obtained by finding a point on the empirical ROC curve that maximizes the sum of sensitivity and specificity (see Zweig (1993) for introduction of ROC Curves).

As mentioned in Section 4, in Algorithm 1, we use a Metropolis-Hastings step with a “switch/swap” proposal to update the parameter τ. In this simulation, the space for τ only has 24 possible values. The trace of posterior samples of τ shows that when Algorithm 1 starts from a random value, it only updates 3 times before reaching the correct value, which never changes afterward. However, as the length of τ increases, the size of the state space of τ increases exponentially, and the “switch/swap” proposal takes a long time to find a ”good” proposal. Our experiments show that when the length of τ goes beyond 8, Algorithm 1 suffers extremely low acceptance rate for τ and mixes very slowly. Therefore we suggest using Algorithm 2 when the number of functional predictors is large (e.g., greater than 8).

5.2 Simulation 2

To evaluate the performance of Algorithm 2 when there are a relatively large number of functional predictors, we generate n = 1000 i.i.d. observations similarly as in Simulation 1 using the first 10 cosine bases functions, but increase the number of functional predictors per observation to 20. We set the true τ to be such that 8 out of the 20 components are 1's. Other parameters are set to be the same as in Simulation 1. We also split the data to training and test set as in Simulation 1.

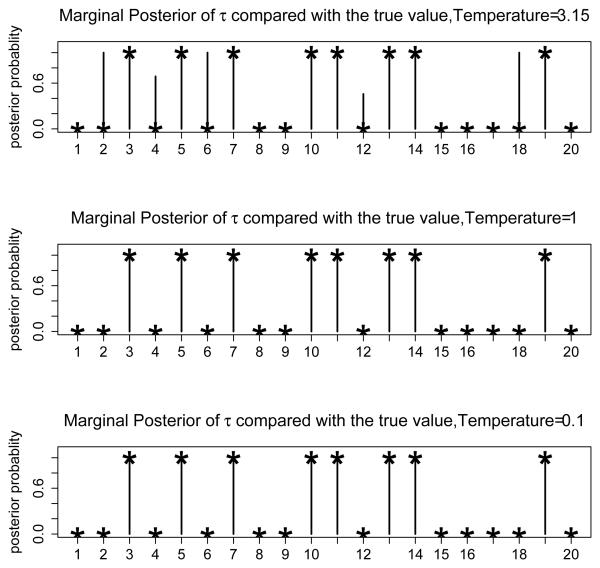

Similarly to Simulation 1, we set approximation criterion c1 = 0.99 as we approximate the functional predictors using FPC, which results in pj = 4 for all j. Seven parallel chains are used in Algorithm 2 with a maximum temperature of 18 for the temperature ladder and the geometric ratio for the ladder starting at 3. Other prior parameters are set similarly as in Simulation 1. We perform 20, 000 MCMC iterations with a burn-in period of 5, 000 in which the temperature ladder is adjusted. In addition to this burn-in period, an extra 5, 000 iterations are used as a second-stage burn-in period(with the fixed temperature ladder obtained from the first period). Therefore the posterior inference is based on the last 10, 000 iterations. It takes around 3 hours to complete the job using a computer server with dual 3.4 GHz Intel Xeon processors and 4 GB of memory. The MCMC algorithm is coded using R. The final temperature ladder after the burn-in period adjustment is (18, 7.53, 3.15, 1, 0.55, 0.23, 0.1). The acceptance rates of for different chains are (56, 41, 33, 23, 17, 12, 9) × 10−2, and the acceptance rates of τ in the mutation operation step are (23, 13, 4, 0, 0.5, 1, 0) × 10−4, in the same order of the temperature ladder. The acceptance rates for crossover and exchange operations are 15.3% and 45.8%, respectively. We plot the estimated marginal posterior probability Pr{τj = 1}, j = 1, …, 20, under three selected temperatures in Figure 3, compared with the true value of τ. Figure 3 shows that at temperature 3.15, more components in τ than the true are selected. The chains with temperature 1 and 0.1 show similar marginal posterior probabilities. They both pick out the correct functional predictors. The estimated regression coefficient functions are obtained from the chain with temperature 1 and applied to the test set for prediction, with a resulting sensitivity of 94.3%, specificity of 95.8% and misclassification rate of 5%.

Figure 3.

The marginal posterior probabilities Pr{τj = 1, j = 1}, …, J, at 3 selected temperatures. The symbol ★ indicates the true value of each component of τ. The vertical lines are the marginal posterior probabilities.

6. Application to fluorescence spectroscopy data

The proposed model is applied to the fluorescence spectroscopy data introduced in Section 1. In this data set, an EEM measurement corresponds to an observation with 16 functional predictors. Using our approach, we aim to select a subset of the 16 curves in the EEM to reduce the cost of data collection.

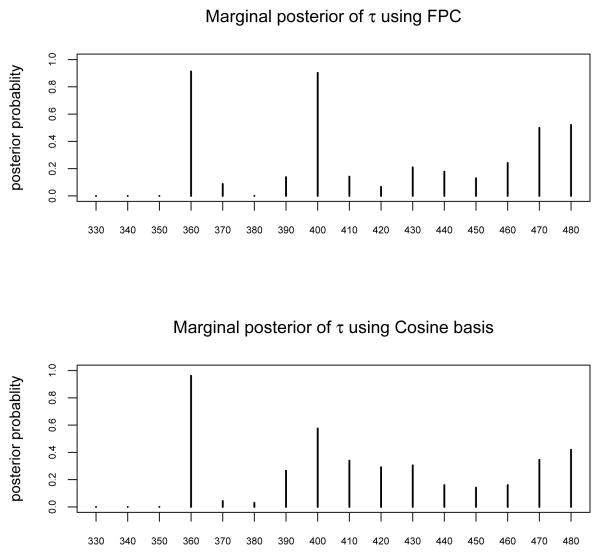

There are a total of 2414 measurements taken from 1006 patients. Each patient has 1 or more (up to 6) sites that were measured and there exists repeated measurements (although not for every patient). The data were pre-processed by procedures such as background correction and smoothing. All measurements come from 6 device-clinic combinations, which we treat as the sources of random effects. Two fixed effects are considered and treated as non-functional predictors in the proposed model: tissue-type, coded as 1, 2, and menopausal status, coded as 1, 2, 3. The 2414 measurements are randomly split into training and test set, with 1353 observations in the training set and 1061 in the test set. The split is conducted at the patient level, i.e., measurements from each patient either all fall in training set or all fall in test set. The proportion of diseased observations in the training and test sets are 10% and 9%, respectively. Both cosine basis expansion and FPC are used to approximate the functional predictors. We determine the number of basis functions used for each curve by setting the approximation criterion c1 = 0.998 for FPC, and c2 = 0.992 for cosine basis expansion. The resulting pj's for the functional predictors range from 2 to 4. The priors are set as: , d1 = 3.6, d2 = 22.9, ωj ≡ 0.5, ν1 = 1, ν0 = 10−3. The weight matrix W is determined by setting the parameter m = 0.81, using the way described in Section 3. In both cases, we perform 20,000 MCMC iterations with 5, 000 burn-in iterations for temperature ladder adjustment. Similary as in Simulation 2, an extra 5, 000 iterations are used as a second-stage burn-in period. Nine parallel chains are used in Algorithm 2 for both sets of basis functions. The maximum temperature used in Algorithm 2 is 10 in both the FPC case and the cosine expansion case. Both cases use an initial geometric ratio a = 3. Other parameters used in Algorithm 2 are: δ = 1.2, ζ = ξ = 0.5. The acceptance rates in both cases are listed in Web Table 1. The posterior mean estimate for is 3.10 using FPC, and is 3.52 using cosine basis expansion. In Figure 4, we plot the estimated marginal posterior probabilities Pr{τj = 1}, j = 1, …, 16, for both cases. Figure 4 shows that the two basis expansion methods provide similar marginal posterior probabilities for τ. Both methods show high probabilities of selection for functions at excitation 360 and 400nm, followed by functions at excitation 480nm and others. The marginal posterior probabilities suggest an order of selection for the functional predictors, with higher quantities having higher priority of being selected. The decision of selection can be made by setting the total number of functions to select, and choose the functions by the marginal posterior probabilities. For example, if we would like to select 3 functional predictors, both methods of basis expansion suggest to select functions at excitation 360, 400 and 480nm. One can also make decisions based on the joint posterior probabilities of τ(e.g., selecting the most frequently visited model.)

Figure 4.

The marginal posterior probabilities Pr{τj = 1}, j = 1, …, 16, for both cases of basis expansions. The top panel is based on FPC, and the bottom panel is based on Cosine basis expansion.

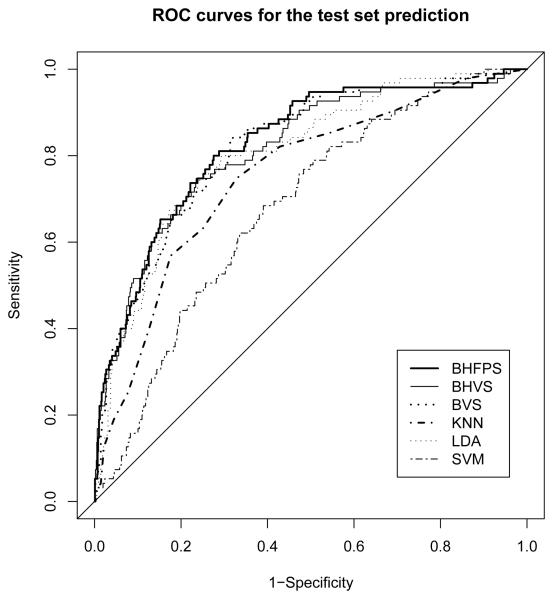

The estimated regression coefficients are applied to the test set for prediction. The prediction results are listed in Table 1 and are compared with 5 other classifiers. Here we denote our proposed model “BHFPS”, an abbreviation of Bayesian Hierarchical Functional Predictor Selection. Note that all the classifiers in Table 1 use both the non-functional and all 16 functional predictors. In particular, the BVS model is a regular Bayesian variable selection method which does not consider the random effects and functional predictor selection. It selects variables among the pooled scores obtained from orthonormal basis expansion of the 16 curves (Zhu, Vannucci and Cox, 2007). The Bayesian hierarchical variable selection (BHVS) is an extension of the BVS model which includes the random effects with a hierarchical setup. From Table 1, we see that the proposed method (BHFPS) obtains slightly higher area under the ROC curve(AUC) than BHVS and BVS. Table 1 also shows that the two orthonormal basis expansion methods are comparable in their prediction ability, although the cosine basis expansion method shows slightly lower AUC's than the FPC method. In Figure 5, we compare the empirical ROC curves for models listed in Table 1 using the results of the FPC method.

Table 1.

Results of test set prediction using the proposed model (BHFPS) compared with 5 other methods. Two methods of basis expansions are used: cosine basis expansion and functional principal components.

| Using Cosine basis expansion | Using FPC | |||||||

|---|---|---|---|---|---|---|---|---|

| Method | AUC | MisR | Sens | Spec | AUC | MisR | Sens | Spec |

| BHFPS | 0.824 | 26.7% | 81.1% | 72.6% | 0.826 | 26.9% | 80.0% | 72.5% |

| BHVS | 0.808 | 25.4% | 74.7% | 74.6% | 0.814 | 23.7% | 74.7% | 76.5% |

| BVS | 0.802 | 28.1% | 76.8% | 71.4% | 0.819 | 30.5% | 84.2% | 68.0% |

| KNN | 0.697 | 27.7% | 62.1% | 73.3% | 0.718 | 32.1% | 71.8% | 74.7% |

| LDA | 0.796 | 27.3% | 74.7% | 72.5% | 0.804 | 25.0% | 75.8% | 74.9% |

| SVM | 0.657 | 56.6% | 85.3% | 39.2% | 0.679 | 38.4% | 68.4% | 61.0% |

AUC: Area under ROC curve; MisR: misclassification rate; Sens: sensitivity; Speci: specificity; BHFPS: the proposed Bayesian hierarchical functional predictor selection model; BHVS: Bayesian hierarchical variable selection model; BVS: regular Bayesian variable selection model; KNN: K-nearest neighbor; LDA: linear discriminant analysis; SVM: linear support vector machine. See text for explanation of BVS and BHVS models

Figure 5.

ROC curves obtained by test set prediction using the proposed model compared with 5 other classifiers, where BHFPS, BHVS, BVS, KNN, LDA and SVM are defined in Table 1.

Based on the functional predictors selected from the proposed model, classification algorithms can be trained independently using only the selected curves. For example, trained on the first 3 functional predictors selected by the proposed model, the BHVS model gives sensitivity 73.7% and specificity 70%, with corresponding AUC 0.80 and misclassification rate 29%. Compared with Table 1, these prediction results are comparable with those using all 16 curves(which are based on model averaging over the different posterior selections of τ). Hence it is possible to use a smaller number of curves and retain a high prediction power. Using the selected curves, a new device can be constructed which reduces cost and saves measurement time.

7. Discussion

Motivated by a practical problem in functional data classification, we have proposed a Bayesian hierarchical model to deal with situations when functional predictors are contaminated by random batch effects. Inferences based on this model help to select a subset of functional predictors for classification. This model is applied to a real application of using fluorescence spectroscopy for cervical pre-cancer diagnosis. The results suggest that it is possible to build more cost-effective device with fewer spectral curves.

When setting the priors for the coefficient functions in (2), we have assumed that are independent for all j and l, which leads to the prior correlation matrix R = Ip in (9) after approximation with basis expansion. This is just a simple and convenient choice of prior. It is possible to allow the priors for to be correlated, such as assuming that is a multivariate Gaussian process, as done in Morris and Carroll (2006). However, determining prior correlations can be difficult and the resulting posterior computation can be complex.

Another concern arises over the necessity of using a hierarchical structure to adjust for batch effects. As we have pointed out in Section 1 and the Web Appendix A, for data obtained from an unbalanced experimental design, classification can be easily biased by batch effects. Algorithms that do not adjust for batch effects may result in classification based on batch difference, rather than disease information. Using a hierarchical model is a natural way to model the batch structure. In our real data application, although the hierarchical models (BHFPS and BHVS) did not improve prediction significantly over models like BVS (see Table 1 and Figure 5), they are more suitable as they adjust for the batch effects. In fact, we should not always expect that adjusting for batch effect can improve the prediction, since with a bad experimental design, a classification algorithm can get prediction as good as 100% sensitivity and specificity, by simply using batch information (see, e.g., discussions of Baggerly et al. (2004)).

In our simulation and real data applications, the proposed model was trained using data from all batches, and predictions were made on observations from the same batches. Like many other hierarchical models, our proposed model can also predict observations from new batches. However, it is natural to expect that the prediction will be worse when predicting on new batches, since the random effect of the new batch is unknown when training the model.

Finally, like many other regression problems, when there exists collinearity between the functional predictors, a unique solution for the “best” subset may not be guaranteed. In this case, our proposed model may provide nearly equal posterior probabilities of selecting one or the other functional predictors.

Supplementary Material

Acknowledgements

Hongxiao Zhu and Dennis D. Cox were supported by NCI grant PO1-CA82710 and by NSF grant DMS0505584. Marina Vannucci was partially supported by NIH grant R01-HG00331901 and NSF grant DMS0605001. The authors thank Dr. Gopi Goswami and Dr. Faming Liang for email discussions of EMC algorithm, and thank Dr. Peter Müller for suggestions on the modeling.

Footnotes

8. Supplementary Materials

The Web Appendices referenced in Section 1, 2.2, 3, 4 and 7 are available under the Paper Information link at the Biometrics website http://www.biometrics.tibs.org.

References

- Albert JH, Chib S. Bayesian analysis of binary and polychotomous response data. Journal of the American Statistical Society. 1993;88:669–679. [Google Scholar]

- Ash RB, Gardner MF. Topics in Stochastic Processes. Academic Press; New York: 1975. [Google Scholar]

- Baggerly KA, Edmonson SR, Morris JS, Coombes KR. High-resolution serum proteomic patterns for ovarian cancer detection. Endocrine-Related Cancer. 2004;11:583–584. doi: 10.1677/erc.1.00868. [DOI] [PubMed] [Google Scholar]

- Bottolo L, Richardson S. Evolutionary stochastic search. 2008 Available on website: http://www.bgx.org.uk/publications/Bottolo_Richardson_ESS.pdf.

- Brown PJ, Vannucci M, Fearn T. Multivariate Bayesian variable selection and prediction. Journal of the Royal Statistical Society, Series B. 1998;60:627–641. [Google Scholar]

- Brown PJ, Vannucci M, Fearn T. Bayes model averaging with selection of regressors. Journal of the Royal Statistical Society, Series B. 2002;64:519–536. [Google Scholar]

- Chang SK, Follen M, Malpica A, Utzinger U, Staerkel G, Cox DD, Atkinson EN, MacAulay C, Richards-Kortum R. Optimal excitation wavelengths for discrimination of cervical neoplasia. IEEE Transactions on Biomedical Engineering. 2002;49:1102–1110. doi: 10.1109/TBME.2002.803597. [DOI] [PubMed] [Google Scholar]

- Eubank R. Nonparametric Regression and Spline Smoothing. Marcel Dekker; New York: 1999. [Google Scholar]

- Ferré L, Villa N. Multilayer perceptron with functional inputs: an inverse regression approach. Scandinavian Journal of Statistics. 2006;33:807–823. [Google Scholar]

- George EI, McCulloch RE. Variable selection via Gibbs sampling. Journal of the American Statistical Society. 1993;89:881–889. [Google Scholar]

- George EI, McCulloch RE. Approaches for Bayesian Variable selection. Statistica Sinica. 1997;7:339–373. [Google Scholar]

- Geweke J, Bernardo JM, Berger JO, Dawid AP, Smith AFM. Evaluating the accuracy of sampling-based approaches to calculating posterior moments. Bayesian Statistics 4. 1992:169–194. [Google Scholar]

- Green P. Reversible jump Markov Chain Monte Carlo computation and Bayesian model determination. Biometrika. 1995;82:711–732. [Google Scholar]

- Goswami G, Liu JS. On learning strategies for Evolutionary Monte Carlo. Statistics and Computing. 2007;17:23–38. [Google Scholar]

- Hall P, Poskitt DS, Presnell B. A functional data-analytic approach to signal discrimination. Technometrics. 2001;43:1–9. [Google Scholar]

- Hall P, Müller H, Wang J. Properties of principal component methods for functional and longitudinal data analysis. The Annals of Statistics. 2006;34:1493–1517. [Google Scholar]

- James GM. Generalized linear models with functional predictors. Journal of the Royal Statistical Society, Series B. 2002;64:411–432. [Google Scholar]

- Leng X, Müller H. Classification using functional data analysis for temporal gene expression data. Bioinformatics. 2005;22:68–76. doi: 10.1093/bioinformatics/bti742. [DOI] [PubMed] [Google Scholar]

- Liang F, Wong WH. Evolutionary Monte Carlo: applications to Cp model sampling and change point problem. Statistica Sinica. 2000;10:317–342. [Google Scholar]

- Liu JS. Monte Carlo Strategies in Scientific Computing. Springer; New York: 2001. [Google Scholar]

- Morris JS, Carroll RJ. Wavelet-based functional mixed models. Journal of the Royal Statistical Society, Series B. 2006;68:179–199. doi: 10.1111/j.1467-9868.2006.00539.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Müller H, Stadtmüller U. Generalized functional linear models. The Annals of Statistics. 2005;33:774–805. [Google Scholar]

- Ramanujam N, Mitchell MF, Mahadevan A, Thomsen S, Malpica A, Wright T, Atkinson N, Richards-Kortum R. Spectroscopic diagnosis of cervical intraepithelial neoplasia (CIN) in vivo using laser-induced fluorescence spectra at multiple excitation wavelengths. Lasers in Surgery and Medicine. 1996;19:63–74. doi: 10.1002/(SICI)1096-9101(1996)19:1<63::AID-LSM8>3.0.CO;2-O. [DOI] [PubMed] [Google Scholar]

- Ramsay J, Silverman B. Functional Data Analysis. Springer; New York: 1997. [Google Scholar]

- Ratcliffe SJ, Heller GZ, Leader LR. Functional data analysis with application to periodically stimulated foetal heart rate data. ii: Functional logistic regression. Statistics in Medicine. 2002;21:1115–1127. doi: 10.1002/sim.1068. [DOI] [PubMed] [Google Scholar]

- Zhao X, Marron JS, Wells MT. The functional data analysis view of longitudinal data. Statistica Sinica. 2004;14:789–808. [Google Scholar]

- Zhu H, Vannucci M, Cox DD. Functional data classification in cervical pre-cancer diagnosis – a Bayesian variable selection model. 2007 Joint Statistical Meetings Proceedings. 2007:1339–1346. [Google Scholar]

- Zweig MH, Campbell G. Receiver-operating characteristic ROC plots: a fundamental evaluation tool in clinical medicine. Clinical Chemistry. 1993;39:561–577. [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.