Abstract

Auditory responses to speech sounds that are self-initiated are suppressed compared to responses to the same speech sounds during passive listening. This phenomenon is referred to as speech-induced suppression, a potentially important feedback-mediated speech-motor control process. In an earlier study, we found that both adults who do and do not stutter demonstrated reduced amplitude of the auditory M50 and M100 responses to speech during active production relative to passive listening. It is unknown if auditory responses to self-initiated speech-motor acts are suppressed in children or if the phenomenon differs between children who do and do not stutter. As stuttering is a developmental speech disorder, examining speech-induced suppression in children may identify possible neural differences underlying stuttering close to its time of onset. We used magnetoencephalography to determine the presence of speech-induced suppression in children and to characterize the properties of speech-induced suppression in children who stutter. We examined the auditory M50 as this was the earliest robust response reproducible across our child participants and the most likely to reflect a motor-to-auditory relation. Both children who do and do not stutter demonstrated speech-induced suppression of the auditory M50. However, children who stutter had a delayed auditory M50 peak latency to vowel sounds compared to children who do not stutter indicating a possible deficiency in their ability to efficiently integrate auditory speech information for the purpose of establishing neural representations of speech sounds.

Keywords: stuttering, child development, speech-motor control, auditory processing, M50, magnetic source imaging

Introduction

Stuttering is a developmental disorder defined by frequent and involuntary repetitions and/or prolongations of sounds as well as silent blocks that disrupt speech fluency and is prevalent in approximately 5% of preschool children (Yairi and Ambrose 1999). The onset of the disorder typically occurs between 2 and 5 years of age (Bloodstein and Ratner 2008). There is evidence for a genetic aetiology of developmental stuttering (Ambrose, Cox, Yairi 1997; Howie 1981; Kang et al., 2010; Kidd, Heimbuch, Records 1981; Lan et al., 2009; Riaz et al., 2005; Suresh et al., 2006; Wittke-Thompson et al., 2007). There are also various neuroanatomical (Beal et al., 2007; Foundas et al., 2001; Foundas et al., 2004; Jäncke, Hänggi, Steinmetz 2004; Kell et al., 2009; Sommer et al., 2002; Song et al., 2007; Watkins et al., 2008) and neurophysiological (Blomgren et al., 2003; Braun et al., 1997; Chang et al., 2009; De Nil et al., 2000; De Nil, Kroll, Houle 2001; De Nil et al., 2008; Fox et al., 1996; Fox et al., 2000; Giraud et al., 2008; Kell et al., 2009; Lu et al., 2009; Neumann et al., 2003; Neumann et al., 2005; Preibisch et al., 2003; Watkins et al., 2008) differences that have been observed in adults who stutter relative to fluent speakers. To our knowledge, only two studies have examined the neural correlates of stuttering in children (Chang et al., 2008; Weber-Fox et al., 2008). Given that stuttering typically has its onset in the preschool years there is a great deal to be gained from increasing our understanding of the neural signatures of this disorder early in its presentation and development.

Chang et al. (2008) investigated neuroanatomical differences in children who stutter relative to non-stuttering and recovered-from-stuttering peers. Similar to adults who stutter, children who stutter were found to have deficient white matter connectivity, as measured by fractional anisotropy, underlying areas near the left ventral premotor and motor cortices. However, children who stutter also differed from their age-matched fluently speaking peers in a unique way relative to previous reports of differences between adults who stutter and their fluently speaking peers. Chang et al. (2008) reported that children who stutter had reduced grey matter volume compared to children who do not stutter in the left inferior frontal gyrus and bilateral middle temporal regions. Conversely, adults who stutter have been found to have increased grey matter in the left inferior frontal gyrus and bilateral superior temporal regions, including primary auditory cortex (Beal et al., 2007; Song et al., 2007). However, Kell et al. (2009) found reduced grey matter in the left inferior frontal gyrus in adults who stutter as well as in former stutterers who had recovered from stuttering.

Weber-Fox, Spruill, Spencer, & Smith (2008) measured event-related potentials (ERPs) of children who stutter and fluent children in a visual rhyming task. Children who stutter demonstrated lower accuracy on rhyming judgments relative to fluent children. However, the children who stutter did not differ from fluent children in the ERP component associated with the rhyming effect in this task. Instead, children who stutter demonstrated differences from fluent children in the contingent negative variation and N400. These components reflect anticipation and semantic incongruity. Weber-Fox et al. (2008) concluded that the neural profile of children who stutter suggested inefficient phonological rehearsal and target anticipation for rhyming judgment, and that children who stutter may have difficulty forming the phonological neural representations needed for accurate and efficient rhyming judgments. Further exploration is required to understand if differences in neural functioning between children who stutter and fluent children impact the early auditory processing for integrating feedback into upcoming speech-motor commands.

A central finding of previous functional neuroimaging studies of speech production in adults who stutter is a reduction in auditory cortex activation, in the presence of increased speech-motor cortex activation, relative to that of fluently speaking adults (De Nil et al., 2008; Fox et al., 1996; Fox et al., 2000; Watkins et al., 2008; but see Kell et al., 2009). Consequently, several researchers have posited that the interaction between motor and auditory cortices may be abnormal in adults who stutter (Brown et al., 2005; Ludlow and Loucks 2003; Max et al., 2004; Neilson and Neilson 1987). Specifically, some studies have proposed that stuttering may arise from difficulties controlling speech acts due to faulty neural representations of speech processes in the brain (Corbera et al., 2005; Max et al., 2004; Neilson and Neilson 1987). A crucial aspect of normal speech acquisition is the gradual transition of control of speech-motor movement from a feedback-biased to a feedforward-biased mechanism during development (Bailly 1997; Guenther and Bohland 2002; Guenther 2006). Difficulty developing the neural processes for speech in childhood may interfere with the transition of speech-motor control from a predominantly feedback mode to a more feedforward mode and contribute to the onset of stuttering (Civier, Tasko, Guenther 2010; Max et al., 2004; Neilson and Neilson 1987).

Further insight into the relation between motor and auditory cortical regions may be gained from the study of speech-induced auditory suppression, a mechanism related to this interaction. Speech-induced auditory suppression is a normal neurophysiological process thought to be related to the monitoring, and subsequent modification of, the auditory targets associated with speech-motor acts (Beal et al., 2010; Heinks-Maldonado, Nagarajan, Houde 2006; Houde et al., 2002; Numminen, Salmelin, Hari 1999; Tourville, Reilly, Guenther 2008). Various models of speech-motor control posit that projections from motor-related areas to auditory cortex relay information concerning the auditory target region for the speech sound under production (Guenther 2006; Houde et al., 2002; Kröger, Kannampuzha, Neuschaefer-Rube 2009; Ventura, Nagarajan, Houde 2009). The auditory target is compared to the actual auditory feedback and if there is correspondence then the incoming auditory signal is suppressed. If the auditory feedback is outside the range of the predicted auditory target then an error is detected and corrective motor commands are issued to the motor cortex (Heinks-Maldonado, Nagarajan, Houde 2006; Tourville, Reilly, Guenther 2008).

Speech production, from conceptual formulation to articulation, is completed in approximately 600 milliseconds (Levelt 2004; Sahin et al., 2009). On average, adults are able to produce 5 syllables per second when asked to speak at a fast rate (Tsao and Weismer 1997). Auditory feedback can be used to modify speech production within a time period ranging from 81 to 186 milliseconds (Tourville, Reilly, Guenther 2008). Millisecond-level information about the sequence of cortical events comprising speech production is crucial for understanding the interaction between motor execution and auditory feedback of self-generated speech. The aforementioned investigations of speech production in adults who stutter used either positron emission tomography (PET) or functional magnetic resonance imaging (fMRI), which are limited in their ability to resolve temporal events occurring over periods shorter than several seconds. In contrast, magnetoencephalography (MEG) is able to measure neural events with millisecond temporal resolution combined with good spatial resolution. MEG has been used to demonstrate that speech-induced suppression of auditory activation can be detected as early as 50 to 100 ms after vocalization in adults (Beal et al., 2010; Curio et al., 2000; Houde et al., 2002; Numminen, Salmelin, Hari 1999).

We have reported that adults who stutter had shorter auditory M50 and M100 latencies in response to the self-generated vowel /i/ and vowel-initial words in the right hemisphere relative to the left hemisphere whereas adults who do not stutter showed similar latencies across hemispheres (Beal et al., 2010). These timing differences were observed in adults who stutter despite similar levels of auditory M50 and M100 peak amplitude reduction during active generation relative to controls. In other words, speech-induced auditory suppression resulted in peak latency differences in the adults who stutter relative to fluently speaking adults rather than peak amplitude differences. The neural timing differences may reflect inefficient access to the neural representations of speech processes, or compensation for such a deficit, in adults who stutter.

In adults, the M100 (N1 in EEG/ERP studies) is the most robust and reproducible auditory component across participants (Bruneau and Gomot 1998). Therefore, the main emphasis of MEG studies of auditory evoked responses has been the M100 (Mäkelä 2007). However, in children the morphology of the waveforms is often different, such that the M50 occurs at a prolonged latency and is more robust and reproducible across child participants relative to adults (Oram Cardy et al., 2004). From early childhood through to adulthood the evoked response morphology in MEG and EEG gradually changes, such that the M50 becomes less robust and reproducible and the M100 becomes more so (Bruneau and Gomot 1998; Gage, Siegel, Roberts 2003; Kotecha et al., 2009; Oram Cardy et al., 2004; Paetau et al., 1995; Picton and Taylor 2007; Rojas et al., 1998). Furthermore, the M50 and M100 have been shown to have a common source in primary auditory cortex (Hari, Pelizzone, Mäkelä 1987; Kanno et al., 2000; Mäkelä and Hari 1987). Functionally, both the M50 and M100 are known to change in amplitude and/or latency in response to manipulations of auditory stimulus characteristics such as amplitude, pitch or interstimulus interval (Roberts et al., 2000). Given that speech is a rapid and dynamic motor process, it follows that the underlying neural system supporting it must respond in a timely, precise and sequential manner to ensure its correct production (Guenther 2006; Ludlow and Loucks 2003; Tourville, Reilly, Guenther 2008; Tsao and Weismer 1997). Therefore, it is reasonable to predict that the neural correlates of auditory feedback processing of self-generated speech will be reflected in the first measurable and reproducible auditory response component across children. The main goal of the current study was to understand the differential effects of speech-induced auditory suppression in children who stutter and in age-matched fluently speaking peers. The first observable and reproducible auditory component, namely the M50, is the focus of investigation as it is most likely to reflect early motor-auditory interaction in children aged 6 to 12 years.

Despite auditory feedback of self-generated speech signals being crucial to the normal development of speech-motor control (Callan et al., 2000; Perkell et al., 2000), no published studies have reported on the effects of speech-induced suppression on auditory feedback in children who do and do not stutter. The current study investigated if speech-induced suppression differed in children who stutter relative to a group of age-matched fluently speaking peers. We also explored the nature of speech-induced suppression in children who stutter relative to that reported in our previous study of adults who stutter (Beal et al., 2010). Based on our data in adults, we anticipated that children who stutter would present with similar speech-induced suppression amplitude change as fluently speaking children, but show differences in the latency of the auditory response during speech, as did the adults who stutter.

Materials and Methods

Participants

Eleven children who stutter and 11 fluently speaking children participated in this study. The children who stutter were recruited from the treatment waiting lists at the Speech and Stuttering Institute as well as the Department of Speech-Language Pathology at the Hospital for Sick Children, both in Toronto, Canada. The fluently speaking children were recruited from the university and hospital communities in Toronto, Canada. The participants were boys who ranged in age from 6 to 12 years old and who spoke English as their primary language.1 All participants met the inclusion criterion that no speech or language deficits be revealed upon standardized testing with the Goldman Fristoe Test of Articulation – Second Edition (Goldman and Fristoe 2000) and the Peabody Picture Vocabulary Test – Third Edition (Dunn and Dunn 1997). A total of 25 children were screened to determine their appropriateness for participation in the current study. Three children (2 control participants, 1 child who stutters) who scored more than 1 standard deviation below the mean of these standardized measures were excluded from participation. Participants were all right-handed as tested with the Edinburgh handedness inventory (Oldfield 1971) and had a negative history of developmental or neural impairment via parent report. The children who stutter ranged in severity from very mild (7) to severe (34) on the Stuttering Severity Instrument – Third Edition (Riley 1994). Stuttering severity measurements were found to have high inter-rater reliability (ICC = .964, p = .01). The two groups did not differ in age, articulation or language ability (p>.05) (Table 1) as tested via multiple independent t-tests. The children gave informed assent and their parents gave informed written consent. The testing involved a pre-neuroimaging 1.5-hour session for articulation, language and hearing screening as well as training and stimuli recording (see Stimuli and Procedures below). 
The initial session was followed by a 1.5-hour scanning session at the MEG and MRI facilities at the Hospital for Sick Children in Toronto. The protocol was approved by the Hospital for Sick Children’s Research Ethics Board.

Table 1.

Means, standard deviations and ranges for participant characteristics.

| | Control Participants (n = 11) | | | Children Who Stutter (n = 11) | | |
|---|---|---|---|---|---|---|
| Measure | Mean | SD | Range | Mean | SD | Range |
| Age (months) | 119.18 | 22.46 | 74 – 144 | 114.18 | 18.07 | 92 – 148 |
| PPVT-III | 121.45 | 11.94 | 105 – 138 | 118.72 | 17.26 | 88 – 139 |
| GFTA-II | 104.36 | 2.98 | 97 – 109 | 102.45 | 6.15 | 87 – 108 |
| SSI-III | - | - | - | 22.09 | 8.40 | 7 – 34 |

Stimuli and Procedures

The stimuli and procedures used in the current study were similar to those used in an earlier study of adults who stutter (Beal et al., 2010) but modified to accommodate the testing of children. Prior to the neuroimaging session participants completed a training and stimulus collection session. Participants were trained to consistently produce the vowel /a/2 at a constant volume of 70 dB SPL. Participants were seated in front of a computer monitor inside a sound insulated room while wearing a headset microphone (Shure 512; Shure Incorporated, Niles, Illinois) that maintained a constant 5 cm mouth to microphone distance. Participants were required to speak aloud the vowel /a/ in response to four white asterisks presented on a black background for 500 ms interspersed with the same white cross used in the listening tasks.

Muscle activity from articulator movement may interfere with the magnetic fields of interest (Beal et al., 2010). Therefore, to facilitate production of the vowel with minimal magnetic interference from speech muscle activity, participants produced the open back unrounded vowel /a/ in blocks of five visual prompts, with each prompt spaced 2.5 to 3 seconds apart. Each block of five vowel /a/ prompts was followed by a seven-second rest period and a three-second prompt that signalled the beginning of the next block. In this way, participants maintained an open jaw posture to facilitate the production of the vowel /a/ with minimal speech muscle movement during the active period and then closed their jaw during the rest period to facilitate swallowing and mouth moistening for comfort. After successful training, productions of the vowel /a/ were recorded from each participant so that their self-produced stimuli could be played back during the MEG passive listening task (listen vowel). The children’s productions were recorded using a Tascam US-122L (TEAC Corporation, Tokyo, Japan) external sound card and Audacity software (version 1.2.6) on a laptop computer. Stimuli were then normalized to 70 dB SPL, based on normalization of the root mean square intensity, using PRAAT sound editing software (version 5.1).
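The root-mean-square normalization of the recorded stimuli can be illustrated with a minimal sketch. This is not the PRAAT procedure used in the study: the function names, the test tone and the target RMS value are hypothetical, and relating a digital RMS value to 70 dB SPL would additionally require calibration of the playback chain, which is not modelled here.

```python
import math

def rms(samples):
    """Root mean square of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def normalize_rms(samples, target_rms):
    """Scale the samples so that their RMS equals target_rms."""
    current = rms(samples)
    if current == 0:
        raise ValueError("cannot normalize a silent signal")
    gain = target_rms / current
    return [s * gain for s in samples]

# Hypothetical stand-in for a recorded vowel: a 220 Hz tone,
# 0.1 s long, sampled at 8 kHz, with peak amplitude 0.3.
fs = 8000
tone = [0.3 * math.sin(2 * math.pi * 220 * n / fs) for n in range(800)]

# The target RMS is an assumed digital level, not a calibrated SPL value.
normalized = normalize_rms(tone, target_rms=0.1)
print(round(rms(normalized), 6))
```

Applying the same target level to every participant's recording equates the stimuli in RMS intensity while leaving their spectral content unchanged.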

Participants performed three independent tasks during the MEG recording session: listen tone, listen vowel and speak vowel. The two listen tasks, namely listen tone and listen vowel, required the participants to listen to acoustic stimuli while fixating on a static white cross on a black background. The stimuli for the listen tone and listen vowel tasks were presented binaurally via ear-insert phones at 70 decibels sound pressure level (dB SPL). In the listen tone task, participants listened to trials of a 1 kHz tone pip that was 50 ms in duration. In the listen vowel task, participants listened passively to trials of their recorded self-produced vowel /a/, previously prepared during the training and stimulus collection session described above. The third task, speak vowel, required the participants to speak aloud the vowel /a/ in response to a visual stimulus as they had been previously trained to do during the training session. Prior to the start of the speak vowel task, participants practiced producing the vowel /a/ with a constant volume of 70 dB SPL as they had been previously trained to do.

The order in which the tasks were completed during the MEG scanning session was counterbalanced across participants. All tasks contained 80 trials with an interstimulus interval ranging from 2.5 to 3 seconds. All stimuli were presented on a rear-projection screen in front of the participant using the presentation software SuperLab Pro version 2.0.4 (http://www.superlab.com).

Data acquisition

A photographic storybook was used to introduce parents and children to the MRI and MEG scanning environments prior to the data acquisition appointment. Auditory evoked magnetic fields were recorded continuously (2500 Hz sample rate, DC to 200 Hz band pass, third-order spatial gradient noise cancellation) for all tasks using a CTF Omega 151-channel whole-head first-order gradiometer MEG system in a magnetically shielded room at the Hospital for Sick Children in Toronto. The auditory stimuli presented to the participants during the listen tone and listen vowel tasks and the participants’ self-generated speech produced during the speak vowel task were recorded simultaneously with the MEG via an accessory channel on the MEG system. Concurrent acquisition of the auditory and speech signals together with the magnetic field activity facilitated accurate stimulus onset marker placement for data analysis. Fiducial coils were placed at the nasion and each auricular point. Head movement was monitored online via fiducial movement and video surveillance. Fiducial locations were also used to facilitate coregistration of the MEG data to an anatomical MRI obtained for each participant in order to specify the neural sources of the magnetic fields. A 1.5-T Signa Excite III HD 12.0 MRI system (GE Medical Systems, Milwaukee, WI) and an eight-channel head coil were used to obtain neuroanatomical images. A T1-weighted 3D fast spoiled gradient echo (FSPGR) sequence (flip angle = 15°, TE = 4.2 ms, TR = 9 ms) was used to generate 110 1.5-mm-thick axial slices (256 × 192 matrix, 24 cm field of view).

Data analyses

The primary investigator evaluated audio-visual recordings of spontaneous speech and reading samples of the children who stutter using the SSI-3 (Riley 1994). A trained speech-language pathology student evaluated a random sample of 4 of the 11 (36%) children who stutter and a 2-way random-effects intra-class correlation coefficient was calculated to determine inter-rater reliability.

The primary investigator monitored participant performance online to ensure that the tasks were performed correctly and that no vowel repetitions or prolongations were included in the data collection. The onsets of the auditory stimuli presented during the passive listening tasks, and the vocalizations generated by the participants during the active generation tasks, were identified offline via an automated routine implemented in Matlab 7.1 (Mathworks Inc) and manually checked for accuracy. Preparation of the acoustic signal for the onset identification routine consisted of normalization, application of a participant-specific band pass filter, re-normalization and envelope extraction. An onset was identified when the acoustic signal exceeded the specified thresholds for noise, amplification and acceleration. These methods of onset identification have previously been demonstrated to reduce the influence of sound specific biases and yield accurate time marking results (Kessler, Treiman, Mullennix 2002; Tyler, Tyler, Burnham 2005).
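The general idea behind envelope-based onset marking can be sketched as follows. This is a deliberately simplified illustration and not the Matlab routine used in the study: it keeps only the normalization, envelope extraction and a single amplitude threshold, and it omits the participant-specific band pass filter, the re-normalization step and the acceleration criterion. The function names, the threshold of 0.1 and the 5 ms smoothing window are assumptions chosen for the example.

```python
import math

def peak_normalize(samples):
    """Scale the signal so that its largest absolute value is 1."""
    peak = max(abs(s) for s in samples)
    if peak == 0:
        raise ValueError("signal is silent")
    return [s / peak for s in samples]

def envelope(samples, window):
    """Moving average of the rectified signal over a trailing window."""
    env = []
    running = 0.0
    for i, s in enumerate(samples):
        running += abs(s)
        if i >= window:
            running -= abs(samples[i - window])
        env.append(running / min(i + 1, window))
    return env

def find_onset(samples, fs, threshold=0.1, window_ms=5.0):
    """Return the time (s) at which the envelope first exceeds the threshold,
    or None if it never does."""
    window = max(1, int(round(fs * window_ms / 1000.0)))
    env = envelope(peak_normalize(samples), window)
    for i, value in enumerate(env):
        if value > threshold:
            return i / fs
    return None

# Hypothetical test signal: 200 ms of silence followed by a 100 Hz tone,
# sampled at 1 kHz, so the true onset is at 0.2 s.
fs = 1000
signal = [0.0] * 200 + [math.sin(2 * math.pi * 100 * n / fs) for n in range(300)]
onset = find_onset(signal, fs)
print(onset)
```

As in the study, any automated estimate of this kind would still need to be checked manually, since a fixed threshold can be biased by the rise time of the particular sound.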

The identified onsets were used to epoch the MEG data from 500 ms prior to the auditory stimulus onset to 1000 ms post onset. Source analysis was performed on the averaged individual trials using an event-related vector beamformer (Quraan and Cheyne 2010; Sekihara et al., 2001) to create volumetric images (2.5 mm resolution) of source activity throughout the brain at selected time intervals (Cheyne, Bakhtazad, Gaetz 2006; Herdman et al., 2003). We used the beamformer analysis because it has been shown to be able to suppress anticipated large subject-generated noise artefacts in the MEG recordings of auditory responses during the overt speaking task (Beal et al., 2010; Cheyne et al., 2007). Binaurally elicited auditory evoked fields produce highly correlated sources that can result in suppression of beamformer output and concomitant errors in localization and amplitude (Dalal, Sekihara, Nagarajan 2006; Quraan and Cheyne 2010). In order to circumvent these effects, we used an event-related vector beamformer with coherent source suppression capability as described by Dalal et al. (2006) to image correlated sources in bilateral auditory areas. We generated source activity waveforms associated with the voxels of peak activity identified in the volumetric images using the single dominant current direction from the vector output of the beamformer, within ±10 ms of the M50 peak. Further details of this approach can be found in Quraan and Cheyne (2010).
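The epoching step described at the start of this paragraph amounts to simple index arithmetic. The sketch below assumes the 2500 Hz sample rate reported under Data acquisition and the 500 ms pre-onset and 1000 ms post-onset limits given above; the function names, the single-channel list representation and the rule of discarding epochs that run past the recording are hypothetical choices made for illustration, and the beamformer analysis itself is not shown.

```python
def epoch_bounds(onset_sample, fs=2500, pre_s=0.5, post_s=1.0):
    """Return (start, stop) sample indices for one epoch, stop exclusive."""
    pre = int(round(pre_s * fs))
    post = int(round(post_s * fs))
    return onset_sample - pre, onset_sample + post

def extract_epochs(channel, onset_samples, fs=2500, pre_s=0.5, post_s=1.0):
    """Cut one channel into epochs around each onset.

    Epochs that would extend beyond the recording are skipped (an assumption
    of this sketch, not a rule stated in the text).
    """
    epochs = []
    for onset in onset_samples:
        start, stop = epoch_bounds(onset, fs, pre_s, post_s)
        if start >= 0 and stop <= len(channel):
            epochs.append(channel[start:stop])
    return epochs

# Hypothetical 4 s single-channel recording whose values equal their index.
channel = list(range(10000))
epochs = extract_epochs(channel, onset_samples=[1000, 5000, 9000])
print(epoch_bounds(5000), len(epochs), len(epochs[0]))
```

At 2500 Hz each epoch spans 3750 samples, with the stimulus or vocalization onset at sample 1250 of the epoch.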

Source plots were created for each participant via the co-registered anatomical MRI. To combine source localization results across subjects, pseudo-t source images co-registered to each subject’s MRI were spatially normalized to the MNI (T1) template brain using SPM2 (Wellcome Institute of Cognitive Neurology, London, UK). Linear and non-linear warping parameters were obtained from each individual’s T1-weighted structural image and used to warp source images to standardized stereotactic (MNI) space prior to averaging across subjects. Several studies have verified that MRIs of children from 6 years of age and older can be successfully warped to an adult template (Burgund et al., 2002; Kang et al., 2003; Muzik et al., 2000). Significant peaks of activity in the group images were identified after thresholding the images using a non-parametric permutation test (Nichols and Holmes 2002) adapted for beamformer source imaging (Singh, Barnes, Hillebrand 2003). Peak locations were reported in MNI coordinates. To obtain the group average time course for each peak activation we averaged the source waveforms computed at the peak response location in each subject’s original source image. This was achieved by first unwarping the group mean peak-voxel location (in MNI coordinates) back to MEG coordinate space for each individual participant and searching for a peak within a 10 mm radius of that location. A search radius of 10 mm ensured that we found the true peak location for each participant that corresponded to the group response. Within each participant’s source waveform, the M50 peak was identified as the largest positive peak occurring within a time window of 50 to 110 ms following stimulus onset.3 Across participants for each task and group, the peak amplitudes and latencies of the moment signal of this time course were measured and extracted for averaging.
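The peak-picking rule stated above (largest positive peak between 50 and 110 ms after onset) can be sketched as a search over local maxima of a source waveform. The function name, the synthetic waveform and the strict local-maximum criterion are assumptions made for illustration; the study's own implementation is not described at this level of detail.

```python
import math

def find_peak_in_window(times_ms, waveform, t_min=50.0, t_max=110.0):
    """Return (latency_ms, amplitude) of the largest positive local maximum
    whose latency lies in [t_min, t_max], or None if there is none."""
    best = None
    for i in range(1, len(waveform) - 1):
        t = times_ms[i]
        if not (t_min <= t <= t_max):
            continue
        value = waveform[i]
        is_local_max = waveform[i - 1] < value >= waveform[i + 1]
        if value > 0 and is_local_max:
            if best is None or value > best[1]:
                best = (t, value)
    return best

# Hypothetical source waveform sampled every 2 ms: a 20 nAm deflection at
# 84 ms and a larger 40 nAm deflection at 150 ms, outside the search window.
times_ms = list(range(0, 200, 2))
waveform = [
    20.0 * math.exp(-((t - 84) / 10.0) ** 2)
    + 40.0 * math.exp(-((t - 150) / 10.0) ** 2)
    for t in times_ms
]
peak = find_peak_in_window(times_ms, waveform)
print(peak)
```

Restricting the search to the window keeps the later, larger deflection from being mistaken for the M50, which is the purpose of defining the window in the first place.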

Statistical analyses of the amplitude and latency data were completed separately for each condition. Analyses of the tone amplitude and latency data were completed using a 2-way mixed analysis of variance to test for differences in either peak amplitude or latency of the M50 response for the within-group variable of hemisphere (left vs. right), the between-group variable of group (controls vs. children who stutter) and their interaction. Analyses of the vowel data were completed using a 3-way mixed analysis of variance to test for differences in the within-group variables of hemisphere (left, right) and task (listen, speak), the between-group variable of group (children who do and do not stutter) and any interactions. We also calculated the speaking-induced suppression percent difference of the group mean amplitude values, $100 \times (1 - \text{amplitude}_{\text{speak}} / \text{amplitude}_{\text{listen}})$ (Ventura, Nagarajan, Houde 2009). Lastly, exploratory bivariate correlation analyses were conducted between the behavioural measurements (participants’ age, Peabody Picture Vocabulary Test – 3A scores, Goldman Fristoe Test of Articulation – 2 scores or Stuttering Severity Instrument scores) and the neurophysiological data (source amplitude and latency).
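The percent difference formula above can be written as a short function. The function name and the amplitude values in the example are hypothetical and are not taken from the study's data; they only show how the expression behaves.

```python
def suppression_percent(amplitude_speak, amplitude_listen):
    """Speaking-induced suppression as a percentage of the listen amplitude:
    100 * (1 - amplitude_speak / amplitude_listen)."""
    if amplitude_listen == 0:
        raise ValueError("listen amplitude must be non-zero")
    return 100.0 * (1.0 - amplitude_speak / amplitude_listen)

# Hypothetical amplitudes in nAm, chosen only to illustrate the arithmetic.
print(suppression_percent(10.0, 20.0))   # speak response is half the listen response
print(suppression_percent(20.0, 20.0))   # no suppression
print(suppression_percent(30.0, 20.0))   # negative value: enhancement, not suppression
```

Because the listen amplitude is the denominator, a value of 0 indicates no suppression and a negative value indicates a larger response during speaking than during listening.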

Results

Listen Tone task

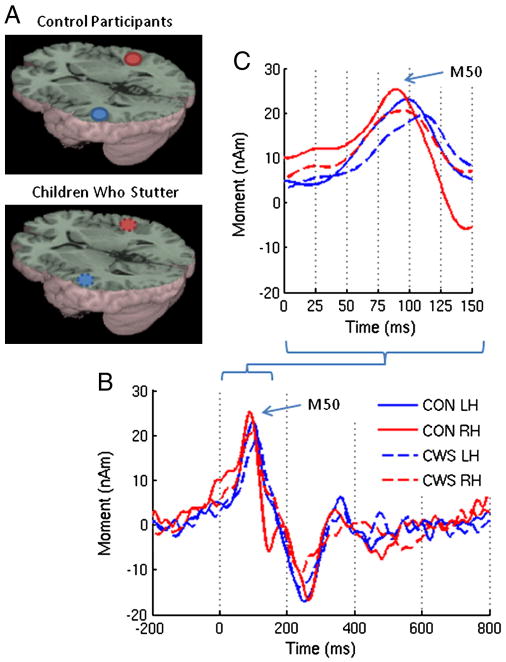

The source of the M50 localized to the auditory cortex in both hemispheres in children who do and do not stutter. These sources are shown in Figure 1 as well as the group averaged source magnitude variation across time for the evoked auditory fields to the tone. The corresponding MNI coordinates are provided in Table 2. No amplitude or latency differences were found (Figure 2). Consistent with previous studies of development of magnetic auditory responses to tones, the M50 occurred at a prolonged latency for both fluently speaking children (84.95 ± 7.86 ms) and children who stutter (84.29 ± 8.92 ms) relative to the latency expected for adults in the literature (62.0 ± 1.9 ms; Oram Cardy et al., 2004). No correlations between age, receptive vocabulary, articulation ability or stuttering severity and either amplitude or latency were found.

Figure 1.

(A) Group averaged source images of the auditory evoked magnetic fields in response to a 1 kHz tone overlaid on the MNI canonical brain. The associated MNI coordinates are listed in Table 2. (B) Group averaged source magnitude variations from 200 ms prestimulus to 800 ms post stimulus corresponding to those sources. (C) A detailed view of the early components. The solid and dotted lines represent the control participants and children who stutter respectively. nAm = nanoAmpere*meters; ms = milliseconds; Blue = left hemisphere (LH); Red = right hemisphere (RH).

Table 2.

MNI coordinates for the M50 source locations and their respective pseudo-z threshold (Thresh.) values at p < 0.001 derived via non-parametric permutation testing adapted for beamformer source imaging (Nichols and Holmes 2002; Singh, Barnes, Hillebrand 2003).

| | | Left Hemisphere | | | | Right Hemisphere | | | |
|---|---|---|---|---|---|---|---|---|---|
| Group | Task | X | Y | Z | Thresh. | X | Y | Z | Thresh. |
| Control Participants | Tone | −53 | −20 | 5 | 2.14 | 48 | −20 | −5 | 2.43 |
| Control Participants | Listen /a/ | −53 | −32 | 5 | 1.64 | 48 | −25 | −5 | 1.99 |
| Control Participants | Speak /a/ | −53 | −22 | 10 | 1.50 | 51 | −23 | 0 | 1.63 |
| Children who Stutter | Tone | −55 | −20 | 8 | 2.02 | 58 | −18 | 2 | 1.76 |
| Children who Stutter | Listen /a/ | −55 | −20 | 5 | 1.37 | 53 | −20 | 5 | 1.54 |
| Children who Stutter | Speak /a/ | −55 | −22 | 13 | 1.11 | 58 | −15 | 8 | 1.15 |

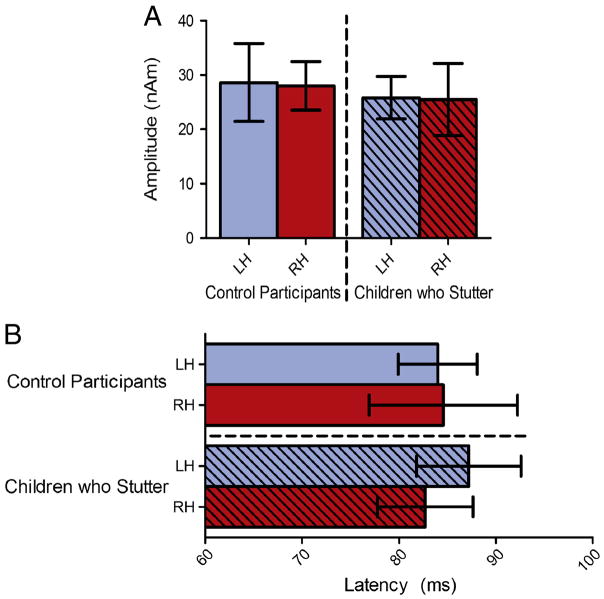

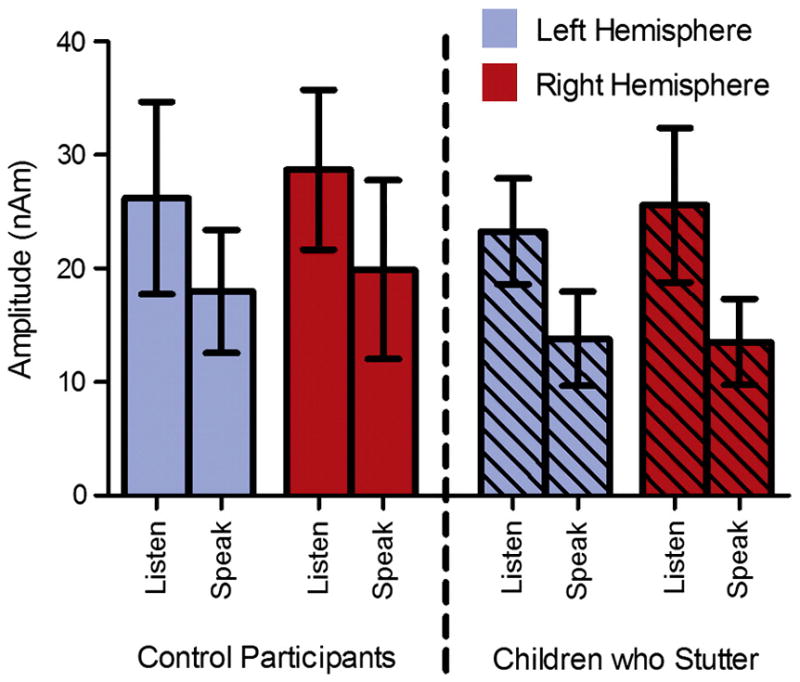

Figure 2.

The results of the listen tone task (A) amplitude and (B) latency analyses. No differences were found in the amplitude or latency of the M50 in response to a 1 kHz tone in children who stutter relative to the control participants. Error bars represent the 95% confidence interval.

Listen Vowel and Speak Vowel tasks

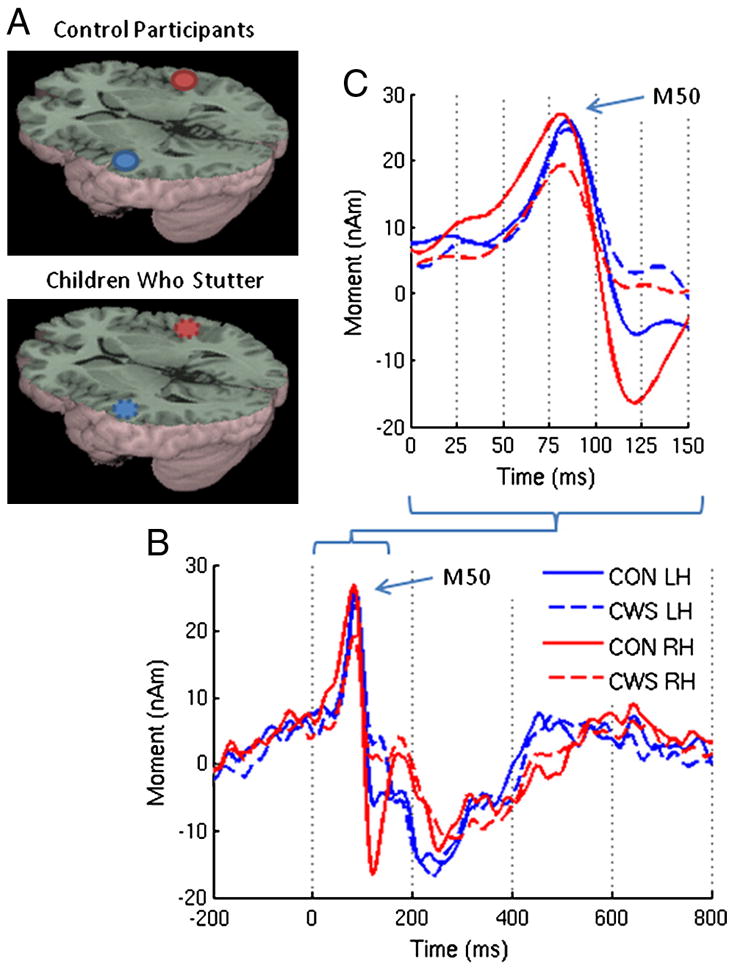

The source of the M50 localized to the auditory cortex in both hemispheres for the vowel tasks in children who stutter as well as fluently speaking children (Figures 3 and 4; see Table 2 for the MNI coordinates). The group averaged source magnitude variations across time for the evoked auditory fields during the listen vowel and speak vowel tasks are shown in Figures 3 and 4. As shown in Figure 5, and consistent with our hypothesis, both children who stutter and fluently speaking children demonstrated a reduction in M50 amplitude for the speak vowel condition (16.30 ± 8.42 nAm) relative to the listen vowel condition (25.94 ± 10.07 nAm) (F(1, 20) = 16.21, p = .001). No other significant amplitude differences were found. Accordingly, the average speech-induced suppression percent change was calculated collapsed across hemispheres and groups. The auditory evoked field amplitude was reduced by 59% for the speak vowel task relative to the listen vowel task. As shown in Figure 6, the M50 peak amplitude, measured in the left hemisphere, was negatively correlated with stuttering severity in the group of children who stutter (r = −.65, p = .03). However, the correlation result is reported for exploratory purposes only as its statistical significance did not survive Bonferroni correction for multiple correlations.

Figure 3.

(A) Group averaged source images of the auditory evoked magnetic fields for the listen vowel task overlaid on the MNI canonical brain. The associated MNI coordinates are listed in Table 2. (B) Group averaged source magnitude variations from 200 ms prestimulus to 800 ms post stimulus corresponding to those sources and (C) a detailed view of the early components.

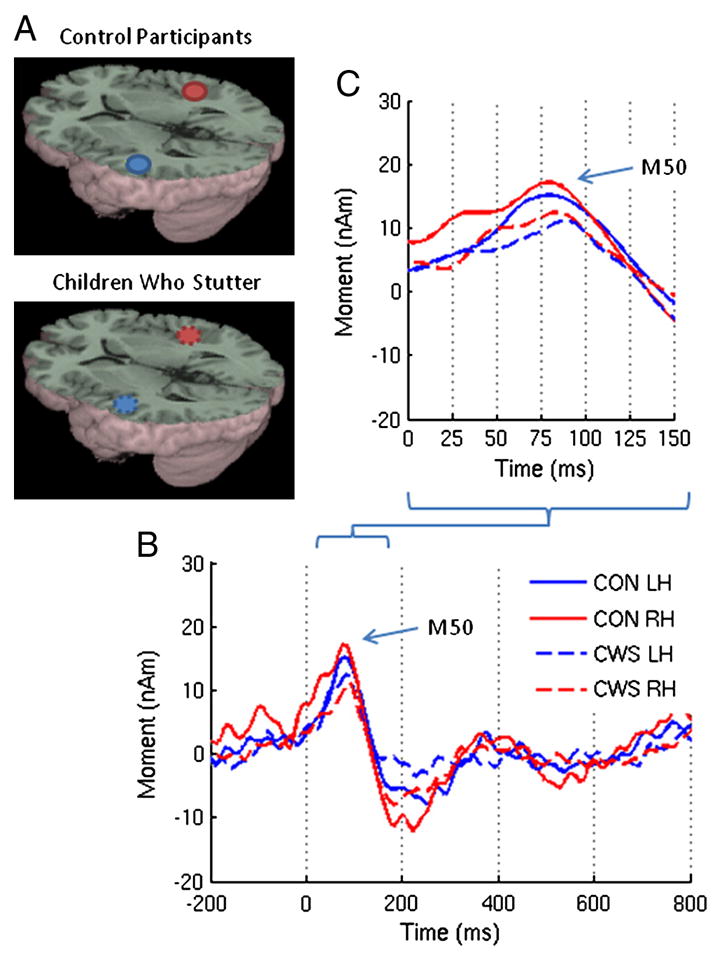

Figure 4.

(A) Group-averaged source images of the auditory evoked magnetic fields for the speak vowel task overlaid on the MNI canonical brain. The associated MNI coordinates are listed in Table 2. (B) Group-averaged source magnitude variations from 200 ms prestimulus to 800 ms poststimulus corresponding to those sources and (C) a detailed view of the early components.

Figure 5.

The results of the listen vowel and speak vowel amplitude analysis. On average, the control participants and children who stutter demonstrated a 59% reduction in M50 amplitude for the speak vowel condition relative to the listen vowel condition. Error bars represent the 95% confidence interval.

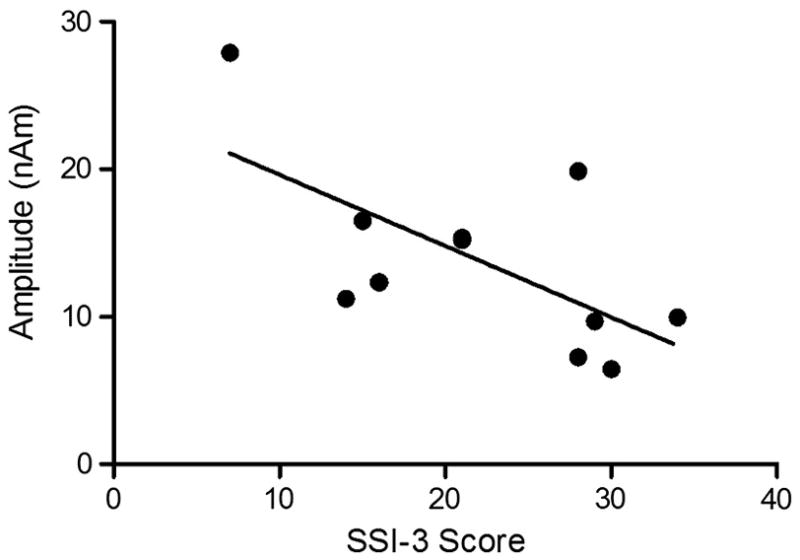

Figure 6.

For children who stutter, left hemisphere amplitude for the speak task had a negative correlation with stuttering severity as measured with the SSI-3.

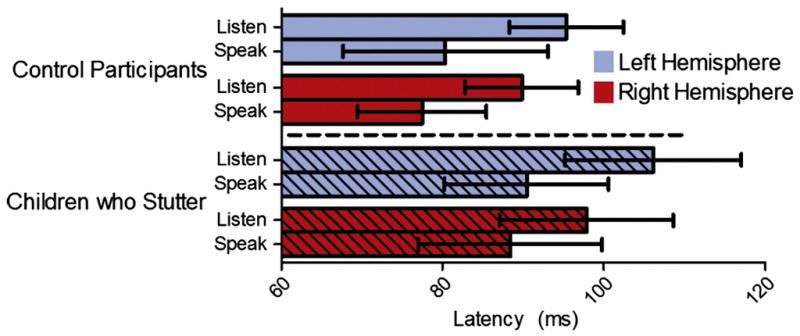

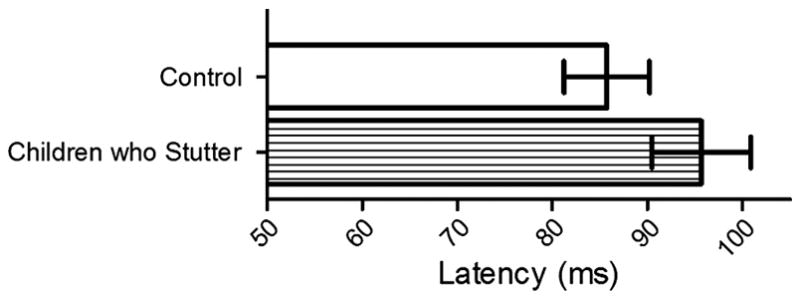

The latency of the auditory M50 was shorter for the speak vowel task (84.15 ± 16.31 ms) than the listen vowel task (97.27 ± 14.43 ms) in both children who stutter and fluently speaking children (F(1, 20) = 21.68, p < .001; Figure 7). On average, the M50 peak latency was 13.12 ms shorter for the speak vowel task relative to the listen vowel task. Children who stutter had delayed auditory M50 peak latencies (95.67 ± 17.07 ms) to vowel sounds, across tasks, compared to children who do not stutter (85.75 ± 14.84 ms) (F(1, 20) = 5.92, p = .02; Figure 8). As compared to the fluently speaking children, the children who stutter demonstrated a 9.92 ms delay in M50 peak latency.
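The two latency effects quoted above follow directly from the reported group means. The short sketch below reproduces that arithmetic; the variable and function names are illustrative, and only the four mean latencies are taken from the text.

```python
# Mean M50 peak latencies in milliseconds, as reported in the text.
LISTEN_VOWEL_MS = 97.27
SPEAK_VOWEL_MS = 84.15
CHILDREN_WHO_STUTTER_MS = 95.67
CONTROL_CHILDREN_MS = 85.75


def mean_difference_ms(a_ms: float, b_ms: float) -> float:
    """Difference between two mean latencies, rounded to the reported precision."""
    return round(a_ms - b_ms, 2)


# Task effect: how much earlier the M50 peaks when speaking than when listening.
task_effect_ms = mean_difference_ms(LISTEN_VOWEL_MS, SPEAK_VOWEL_MS)

# Group effect: delay in children who stutter relative to controls, across tasks.
group_effect_ms = mean_difference_ms(CHILDREN_WHO_STUTTER_MS, CONTROL_CHILDREN_MS)

print(f"task effect: {task_effect_ms} ms, group effect: {group_effect_ms} ms")
```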

Figure 7.

The results of the listen and speak vowel task latency analysis. The children who stutter had delayed M50 peak latencies for the listen vowel and speak vowel tasks relative to the control participants. Error bars represent the 95% confidence interval.

Figure 8.

The children who stutter had a 9.92 ms delay in M50 peak latency for vowel tasks relative to the control participants. Error bars represent the 95% confidence interval.

Discussion

Our study is the first to provide evidence that speech-induced suppression of auditory fields is a process present in school-age children as it is in adults. Confirmation of this phenomenon in younger speakers allowed us to investigate whether differences existed in its manifestation in children who stutter relative to children who do not stutter. Consistent with findings in adults who stutter, children who stutter differed from fluent speakers in the peak latencies of evoked auditory fields to their own speech but showed no differences from fluent speakers in the amplitude of auditory fields for listening to or speaking a vowel. The findings therefore advance our understanding of stuttering in children by demonstrating the importance of neural timing differences, rather than amplitude differences, in auditory cortex for the processing of speech stimuli at a stage of development closer to the onset of this disorder than in adults. Task-specific results are discussed below in relation to theories of the neural correlates of stuttering.

Amplitude

We examined evoked auditory fields to vowels during passive listening and active speech production. In the current study, children demonstrated a 59% reduction in the peak amplitude of the auditory M50 to vowel production versus vowel perception (Figure 5). The magnitude of speech-induced auditory suppression of the M50 in the current child cohort is substantially larger than our previously reported trend of a 6% reduction of the M50 to vowel production versus vowel perception in adults (Beal et al., 2010). We measured a statistically significant 22% reduction of the M50 for vowel-initial word production relative to vowel-initial word perception in the same adult cohort. We previously speculated that the increased magnitude of the suppression effect for word stimuli may be the result of the increased motor plan complexity of words over vowels. The 59% reduction of the M50 observed in the current child cohort, relative to the previously reported 6% reduction in adults, may reflect vowel production being a more complex, or at least a less established, motor task for a developing speech-motor system. Alternatively, the magnitude of the M50 reduction in our child cohort is consistent with previous studies of speech-induced auditory suppression of the M100 in fluent adult speakers (Beal et al., 2010; Heinks-Maldonado, Nagarajan, Houde 2006; Hirano et al., 1997; Houde et al., 2002; Ventura, Nagarajan, Houde 2009) and may reflect our ability to resolve a more consistent and robust measurement of this early component in children relative to adults.
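When comparing suppression magnitudes across studies, it helps to be explicit about how a percent change is referenced. The sketch below is an illustration under stated assumptions, not the computation used in the study: it works only from the reported group mean amplitudes (25.94 nAm for listen vowel, 16.30 nAm for speak vowel), whereas the text does not give the exact formula, and an average of per-participant percent changes would generally yield a different value. From the group means, the reported 59% is recovered when the amplitude difference is expressed relative to the speak vowel mean; expressed relative to the listen vowel mean, the same difference is about 37%.

```python
def percent_of_baseline(difference: float, baseline: float) -> float:
    """Express an amplitude difference as a percentage of a chosen baseline."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return 100.0 * difference / baseline


# Group mean M50 amplitudes in nAm, as reported in the Results.
LISTEN_VOWEL_NAM = 25.94
SPEAK_VOWEL_NAM = 16.30

difference_nam = LISTEN_VOWEL_NAM - SPEAK_VOWEL_NAM

# Two possible reference conditions for the same difference (an assumption:
# the source does not state which baseline was used).
relative_to_speak = percent_of_baseline(difference_nam, SPEAK_VOWEL_NAM)
relative_to_listen = percent_of_baseline(difference_nam, LISTEN_VOWEL_NAM)

print(f"relative to speak vowel mean:  {relative_to_speak:.1f}%")
print(f"relative to listen vowel mean: {relative_to_listen:.1f}%")
```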

Children who stutter did not differ from their fluent peers in the amplitude of the M50 during speaking. This finding is consistent with our previous observation that adults who stutter had similar M50 and M100 amplitudes for speaking relative to fluent adults (Beal et al., 2010). Therefore, neither adults nor children who stutter differed from their fluently speaking peers in the amplitude of their auditory evoked fields in response to actively produced self-generated vowel stimuli. Taken together, our findings suggest that for vowel production the mechanism of speech-induced suppression of evoked auditory field amplitude is typical in people who stutter across the development of the disorder.

As shown in Figure 6, the children who stutter showed a negative correlation between stuttering severity and left hemisphere M50 amplitude during the speak vowel task but not during the listen vowel task. In other words, the children with the most severe stuttering had the smallest left hemisphere M50 amplitude during the speak vowel task. This result must be considered preliminary because it was not statistically significant following correction for multiple correlations. However, the correlation is interesting in light of the efference copy hypothesis of stuttering (Brown et al., 2005). The efference copy hypothesis predicts that auditory signals are further suppressed in people who stutter as a result of the well-documented presence of increased speech-motor activity and reduced auditory signal in this population as measured by PET and fMRI (De Nil et al., 2008; Fox et al., 1996; Fox et al., 2000; Watkins et al., 2008). Although the children who stutter did not differ as a group from the fluently speaking children in our study, the children who stutter had a relationship between the left hemisphere M50 amplitude and stuttering severity in the presence of speech-motor activity. This finding suggests that children with more severe stuttering may engage speech-motor cortex to a greater degree, thereby further suppressing auditory activity, than their peers with less severe stuttering. As the current study did not examine cortical speech-motor activity directly, further investigation is required to determine if increased cortical speech-motor activity is a hallmark of the disorder in childhood.

Latency

The children who stutter had delayed M50 peak latencies in both hemispheres for the listen vowel and speak vowel tasks relative to the fluently speaking children (Figure 8). However, the children who stutter had similar M50 peak latencies to the fluently speaking children for the listen tone task. The finding of similar M50 latencies observed between children who do and do not stutter for the listen tone task is consistent with the previous literature investigating auditory responses to non-linguistic stimuli in adults who stutter (Beal et al., 2010; Biermann-Ruben, Salmelin, Schnitzler 2005; Hampton and Weber-Fox 2008). However, the M50 latency patterns observed in the current study of children who stutter differed from those observed in our previous study of adults who stutter for vowel stimuli (Beal et al., 2010). Relative to fluently speaking children, the children who stutter presented with prolonged M50 latencies, bilaterally, for vowel perception and production. Adults who stutter did not differ from fluently speaking adults for the M50 latencies in response to vowel stimuli but adults who stutter did have prolonged M50 latencies in both hemispheres in response to vowel-initial word stimuli relative to fluently speaking adults.

These findings suggest that a cortical timing deficit for the auditory processing of linguistic stimuli may play a role in the development and maintenance of stuttering in school-aged children and adults who stutter. Theories of speech development posit that auditory input of speech signals during listening and speaking contributes to the ongoing modification of internal representations of speech sounds (Guenther and Vladusich In press; Hickok and Poeppel 2004; Hickok and Poeppel 2007; Lotto, Hickok, Holt 2009; Shiller et al., 2009). As the auditory inputs in the two vowel tasks come from exogenous and endogenous sources, one interpretation of our findings is that the longer peak latencies during vowel perception and production in children who stutter are reflective of a deficiency in integrating auditory information for the purpose of improving internal representations of speech sounds. Other authors have suggested that children who stutter may have an inability to maintain internal representations of speech sounds and that this results in unstable motor planning and execution that can ultimately trigger stuttering moments during speech production (Civier, Tasko, Guenther 2010; Corbera et al., 2005; Max et al., 2004).

The M50 in the current child dataset and the M50 and M100 in the adult dataset, reported in Beal et al. (2010), were all localized to the auditory cortices. As such, it is reasonable to speculate about the general timing of cortical auditory events in children and adults who stutter. Whereas children and adults who stutter had similarly prolonged bilateral latencies for vowel perception relative to their fluently speaking peers, they differed substantially from one another for vowel production. Children who stutter had prolonged bilateral latencies relative to their fluently speaking peers for vowel production. Although adults who stutter did not differ from their fluently speaking peers for vowel production, they did have shorter latencies in the right hemisphere relative to the left hemisphere for vowel production, a finding not observed in children who stutter. Kell et al. (2009) proposed that a primary structural deficit in the area of the left inferior frontal gyrus results in an inability of people who stutter to integrate auditory feedback information with internal representations of speech-motor programs, thereby resulting in compensatory adaptive changes in the right hemisphere. Our current findings of prolonged cortical auditory processing in children who stutter during vowel production, taken together with our previous findings that mild stutterers engaged the right auditory cortex faster than more severe stutterers during vowel production, further support the idea that the right-faster-than-left pattern of cortical auditory processing in adults who stutter during vowel production is compensatory in nature.

Source Localization

The auditory M50 was localized to the auditory cortices for all tasks and participants. Small differences in the mean location for the listen vowel and speak vowel tasks were observed. As can be seen in Table 2, the largest systematic difference in mean location occurred in the control group in the y-plane of the left hemisphere, which was 10 mm posterior for the listen vowel task relative to the speak vowel task. Given the known cytoarchitectural differences that span this distance of auditory cortex, it may be tempting to ascribe functional meaning to this change in position. However, given the limitations of source localization accuracy using MEG for such sources (which can be on the order of more than 10 mm) and the lower signal-to-noise ratio for responses in the speak vowel task, further confirmation with a larger number of subjects may be needed to determine whether this posterior shift in location reflects activation of different neuronal populations within auditory cortex for the two types of stimuli. Nevertheless, the amplitude and latency values measured at the source are consistent with those expected of the auditory M50 response in children, and the anatomical locations were consistently within primary auditory cortex (Oram Cardy et al., 2004; Picton and Taylor 2007). Thus we are confident that the comparison of listen vowel and speak vowel source amplitudes for the purposes of determining the presence of speech-induced auditory suppression of the M50 response was valid across tasks and groups.

Conclusion

We demonstrated speech-induced suppression of the neuromagnetic auditory M50 response in children. We found that both children who stutter and their fluently speaking peers show evidence of speech-induced suppression for vowel stimuli. Children who stutter differed from children who do not stutter in that the auditory M50 was prolonged during vowel perception and production. This delayed M50 latency in children who stutter may reflect a deficiency in their ability to integrate auditory speech information for the purpose of establishing and improving internal representations of speech sound processes. By examining the neural correlates of auditory processing during passive listening and active generation in children who stutter, we have shown that faulty neural processes for speech may underlie stuttering early after the onset of the disorder. We have also differentiated the neurophysiology of early auditory processing in children who stutter from that of their same-aged peers and of adults who stutter. These results contribute to our understanding of this complex speech disorder closer to its onset and confirm the presence of speech-induced suppression in normal children.

Acknowledgments

We thank T. Mills and M. Lalancette for technical support, and R. V. Harrison for discussion and comments. This work is supported by the Canadian Institutes of Health Research (Grant number MOP-68969) to LFD and by the National Institute on Deafness and Other Communication Disorders Grant R01-DC-007603 to VG. DSB has received funding from the Canadian Institutes of Health Research Clinical Fellowship and the Clinician Scientist Training Program (CSTP). The CSTP is funded, fully or in part, by the Ontario Student Opportunity Trust Fund - Hospital for Sick Children Foundation Student Scholarship Program.

Abbreviations

- fMRI: functional magnetic resonance imaging

- MEG: magnetoencephalography

- PET: positron emission tomography

Footnotes

This age group was selected relative to the usual length of recovery, which ranges from 2 to 3 years (Yairi & Ambrose, 1999), and the age of onset, 2 to 5 years old (Bloodstein, 2006), with the rationale that children identified as stuttering at age 7 are likely to show predictive signs of recovering or persisting in the future. It is more difficult to predict recovery in children who are of preschool age, as at least 70% of these children are likely to recover. Only children who did not show signs of recovery were included in the study.

The open back unrounded vowel /a/ was used because it requires relatively minimal muscular movement to articulate. Minimization of such movement was important as muscular activity introduces unwanted magnetic noise to the brain signals of interest for recording via MEG.

Previous developmental studies have demonstrated a prolonged M50 in typically developing children (79.6 ± 7.8 ms) relative to adults (62.0 ± 1.9 ms) and an even further prolonged M50 in children with developmental disorders (85.9 ± 8.1 ms) (Oram Cardy et al., 2004).

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Ambrose NG, Cox NJ, Yairi E. The genetic basis of persistence and recovery in stuttering. Journal of Speech, Language, and Hearing Research. 1997;40(3):567–580. doi: 10.1044/jslhr.4003.567.

- Bailly G. Learning to speak. sensori-motor control of speech movements. Speech Communication. 1997;22(2–3):251–267.

- Beal DS, Gracco VL, Lafaille SJ, DeNil LF. Voxel-based morphometry of auditory and speech-related cortex in stutterers. NeuroReport. 2007;18(12):1257–1260. doi: 10.1097/WNR.0b013e3282202c4d.

- Beal DS, Cheyne DO, Gracco VL, Quraan MA, Taylor MJ, De Nil LF. Auditory evoked fields to vocalization during passive listening and active generation in adults who stutter. NeuroImage. 2010;52(4):1645–1653. doi: 10.1016/j.neuroimage.2010.04.277.

- Biermann-Ruben K, Salmelin R, Schnitzler A. Right rolandic activation during speech perception in stutterers: A MEG study. NeuroImage. 2005;25(3):793–801. doi: 10.1016/j.neuroimage.2004.11.024.

- Blomgren M, Nagarajan SS, Lee JN, Li T, Alvord L. Preliminary results of a functional MRI study of brain activation patterns in stuttering and nonstuttering speakers during a lexical access task. Journal of Fluency Disorders. 2003;28(4):337–356. doi: 10.1016/j.jfludis.2003.08.002.

- Bloodstein O, Ratner NB. A handbook on stuttering. 6. Thomson Delmar Learning; Clifton Park, N.Y: 2008.

- Braun AR, Varga M, Stager S, Schulz G, Selbie S, Maisog JM, Carson RE, Ludlow CL. Altered patterns of cerebral activity during speech and language production in developmental stuttering. an H₂¹⁵O positron emission tomography study. Brain. 1997;120(5):761–784. doi: 10.1093/brain/120.5.761.

- Brown S, Ingham RJ, Ingham JC, Laird AR, Fox PT. Stuttered and fluent speech production: An ALE meta-analysis of functional neuroimaging studies. Human Brain Mapping. 2005;25(1):105–117. doi: 10.1002/hbm.20140.

- Bruneau N, Gomot M. Auditory evoked potentials (N1 wave) as indices of cortical development. In: Garreau B, editor. Neuroimaging in Child Neuropsychiatric Disorders. Springer-Verlag; 1998. pp. 113–123.

- Burgund ED, Kang HC, Kelly JE, Buckner RL, Snyder AZ, Petersen SE, Schlaggar BL. The feasibility of a common stereotactic space for children and adults in fMRI studies of development. NeuroImage. 2002;17(1):184–200. doi: 10.1006/nimg.2002.1174.

- Callan DE, Kent RD, Guenther FH, Vorperian HK. An auditory-feedback-based neural network model of speech production that is robust to developmental changes in the size and shape of the articulatory system. Journal of Speech, Language, and Hearing Research. 2000;43(3):721–736. doi: 10.1044/jslhr.4303.721.

- Chang S, Kenney MK, Loucks TMJ, Ludlow CL. Brain activation abnormalities during speech and non-speech in stuttering speakers. NeuroImage. 2009;46(1):201–212. doi: 10.1016/j.neuroimage.2009.01.066.

- Chang S, Erickson KI, Ambrose NG, Hasegawa-Johnson MA, Ludlow CL. Brain anatomy differences in childhood stuttering. NeuroImage. 2008;39(3):1333–1344. doi: 10.1016/j.neuroimage.2007.09.067.

- Cheyne D, Bakhtazad L, Gaetz W. Spatiotemporal mapping of cortical activity accompanying voluntary movements using an event-related beamforming approach. Human Brain Mapping. 2006;27(3):213–229. doi: 10.1002/hbm.20178.

- Cheyne D, Bostan A, Gaetz W, Pang E. Event-related beamforming: A robust method for presurgical functional mapping using MEG. Clinical Neurophysiology. 2007;118(8):1691–1704. doi: 10.1016/j.clinph.2007.05.064.

- Civier O, Tasko SM, Guenther FH. Overreliance on auditory feedback may lead to sound/syllable repetitions: Simulations of stuttering and fluency-inducing conditions with a neural model of speech production. Journal of Fluency Disorders. 2010;35(3):246–279. doi: 10.1016/j.jfludis.2010.05.002.

- Corbera S, Corral M, Escera C, Idiazábal MA. Abnormal speech sound representation in persistent developmental stuttering. Neurology. 2005;65(8):1246–1252. doi: 10.1212/01.wnl.0000180969.03719.81.

- Curio G, Neuloh G, Numminen J, Jousmaki V, Hari R. Speaking modifies voice-evoked activity in the human auditory cortex. Human Brain Mapping. 2000;9(4):183–191. doi: 10.1002/(SICI)1097-0193(200004)9:4<183::AID-HBM1>3.0.CO;2-Z.

- Dalal SS, Sekihara K, Nagarajan SS. Modified beamformers for coherent source region suppression. IEEE Transactions on Biomedical Engineering. 2006;53(7):1357–1363. doi: 10.1109/TBME.2006.873752.

- De Nil LF, Kroll RM, Houle S. Functional neuroimaging of cerebellar activation during single word reading and verb generation in stuttering and nonstuttering adults. Neuroscience Letters. 2001;302(2–3):77–80. doi: 10.1016/s0304-3940(01)01671-8.

- De Nil LF, Kroll RM, Kapur S, Houle S. A positron emission tomography study of silent and oral single word reading in stuttering and nonstuttering adults. Journal of Speech, Language, and Hearing Research. 2000;43(4):1038–1053. doi: 10.1044/jslhr.4304.1038.

- De Nil LF, Beal DS, Lafaille SJ, Kroll RM, Crawley AP, Gracco VL. The effects of simulated stuttering and prolonged speech on the neural activation patterns of stuttering and nonstuttering adults. Brain and Language. 2008;107(2):114–123. doi: 10.1016/j.bandl.2008.07.003.

- Dunn LM, Dunn LM. Examiner’s manual for the peabody picture vocabulary test. 3. American Guidance Services; Circle Pines, MN: 1997.

- Foundas AL, Bollich AM, Corey DM, Hurley M, Heilman KM. Anomalous anatomy of speech-language areas in adults with persistent developmental stuttering. Neurology. 2001;57(2):207–215. doi: 10.1212/wnl.57.2.207.

- Foundas AL, Bollich AM, Feldman J, Corey DM, Hurley M, Lemen LC, Heilman KM. Aberrant auditory processing and atypical planum temporale in developmental stuttering. Neurology. 2004;63(9):1640–1646. doi: 10.1212/01.wnl.0000142993.33158.2a.

- Fox PT, Ingham RJ, Ingham JC, Zamarripa F, Xiong J, Lancaster JL. Brain correlates of stuttering and syllable production: A PET performance-correlation analysis. Brain. 2000;123(10):1985–2004. doi: 10.1093/brain/123.10.1985.

- Fox PT, Ingham RJ, Ingham JC, Hirsch TB, Downs JH, Martin C, Jerabek P, Glass T, Lancaster JL. A PET study of the neural systems of stuttering. Nature. 1996;382(6587):158–162. doi: 10.1038/382158a0.

- Gage NM, Siegel B, Roberts TPL. Cortical auditory system maturational abnormalities in children with autism disorder: An MEG investigation. Developmental Brain Research. 2003;144(2):201–209. doi: 10.1016/s0165-3806(03)00172-x.

- Giraud A, Neumann K, Bachoud-Levi A, von Gudenberg AW, Euler HA, Lanfermann H, Preibisch C. Severity of dysfluency correlates with basal ganglia activity in persistent developmental stuttering. Brain and Language. 2008;104(2):190–199. doi: 10.1016/j.bandl.2007.04.005.

- Goldman R, Fristoe M. Examiner’s manual for the goldman fristoe test of articulation. 2. NCS Pearson, Inc; Minneapolis, MN: 2000.

- Guenther F, Vladusich T. A neural theory of speech acquisition and production. Journal of Neurolinguistics. doi: 10.1016/j.jneuroling.2009.08.006. In press.

- Guenther FH. Cortical interactions underlying the production of speech sounds. Journal of Communication Disorders. 2006;39(5):350–365. doi: 10.1016/j.jcomdis.2006.06.013.

- Guenther FH, Bohland JW. Learning sound categories: A neural model and supporting experiments. Acoustical Science and Technology. 2002;23(4):213–220.

- Hampton A, Weber-Fox C. Non-linguistic auditory processing in stuttering: Evidence from behavior and event-related brain potentials. Journal of Fluency Disorders. 2008;33(4):253–273. doi: 10.1016/j.jfludis.2008.08.001.

- Hari R, Pelizzone M, Mäkelä JP. Neuromagnetic responses of the human auditory cortex to on- and offsets of noise bursts. Audiology. 1987;26(1):31–43. doi: 10.3109/00206098709078405.

- Heinks-Maldonado TH, Nagarajan SS, Houde JF. Magnetoencephalographic evidence for a precise forward model in speech production. NeuroReport. 2006;17(13):1375–1379. doi: 10.1097/01.wnr.0000233102.43526.e9.

- Herdman AT, Wollbrink A, Chau W, Ishii R, Ross B, Pantev C. Determination of activation areas in the human auditory cortex by means of synthetic aperture magnetometry. NeuroImage. 2003;20(2):995–1005. doi: 10.1016/S1053-8119(03)00403-8.

- Hickok G, Poeppel D. The cortical organization of speech processing. Nature Reviews Neuroscience. 2007;8(5):393–402. doi: 10.1038/nrn2113.

- Hickok G, Poeppel D. Dorsal and ventral streams: A framework for understanding aspects of the functional anatomy of language. Cognition. 2004;92(1–2):67–99. doi: 10.1016/j.cognition.2003.10.011.

- Hirano S, Kojima H, Naito Y, Honjo I, Kamoto Y, Okazawa H, Ishizu K, Yonekura Y, Nagahama Y, Fukuyama H, et al. Cortical processing mechanism for vocalization with auditory verbal feedback. Neuroreport. 1997;8(9–10):2379–2382. doi: 10.1097/00001756-199707070-00055.

- Houde JF, Nagarajan SS, Sekihara K, Merzenich MM. Modulation of the auditory cortex during speech: An MEG study. Journal of Cognitive Neuroscience. 2002;14(8):1125–1138. doi: 10.1162/089892902760807140.

- Howie PM. Concordance for stuttering in monozygotic and dizygotic twin pairs. Journal of Speech and Hearing Research. 1981;24(3):317–321. doi: 10.1044/jshr.2403.317.

- Jäncke L, Hänggi J, Steinmetz H. Morphological brain differences between adult stutterers and non-stutterers. BMC Neurology. 2004;4(1):23. doi: 10.1186/1471-2377-4-23.

- Kang C, Riazuddin S, Mundorff J, Krasnewich D, Friedman P, Mullikin JC, Drayna D. Mutations in the lysosomal enzyme-targeting pathway and persistent stuttering. New England Journal of Medicine. 2010;362(8):677–685. doi: 10.1056/NEJMoa0902630.

- Kang HC, Burgund ED, Lugar HM, Petersen SE, Schlaggar BL. Comparison of functional activation foci in children and adults using a common stereotactic space. NeuroImage. 2003;19(1):16–28. doi: 10.1016/s1053-8119(03)00038-7.

- Kanno A, Nakasato N, Murayama N, Yoshimoto T. Middle and long latency peak sources in auditory evoked magnetic fields for tone bursts in humans. Neuroscience Letters. 2000;293(3):187–190. doi: 10.1016/s0304-3940(00)01525-1.

- Kell CA, Neumann K, Von Kriegstein K, Posenenske C, Von Gudenberg AW, Euler H, Giraud A. How the brain repairs stuttering. Brain. 2009;132(10):2747–2760. doi: 10.1093/brain/awp185.

- Kessler B, Treiman R, Mullennix J. Phonetic biases in voice key response time measurements. Journal of Memory and Language. 2002;47(1):145–171.

- Kidd KK, Heimbuch RC, Records MA. Vertical transmission of susceptibility to stuttering with sex-modified expression. Proceedings of the National Academy of Sciences of the United States of America. 1981;78(1 II):606–610. doi: 10.1073/pnas.78.1.606.

- Kotecha R, Pardos M, Wang Y, Wu T, Horn P, Brown D, Rose D, deGrauw T, Xiang J. Modeling the developmental patterns of auditory evoked magnetic fields in children. PLoS ONE. 2009;4(3) doi: 10.1371/journal.pone.0004811.

- Kröger BJ, Kannampuzha J, Neuschaefer-Rube C. Towards a neurocomputational model of speech production and perception. Speech Communication. 2009;51(9):793–809.

- Lan J, Song M, Pan C, Zhuang G, Wang Y, Ma W, Chu Q, Lai Q, Xu F, Li Y, et al. Association between dopaminergic genes (SLC6A3 and DRD2) and stuttering among han chinese. Journal of Human Genetics. 2009;54(8):457–460. doi: 10.1038/jhg.2009.60.

- Levelt WJM. Speech, gesture and the origins of language. European Review. 2004;12(4):543–549.

- Lotto AJ, Hickok GS, Holt LL. Reflections on mirror neurons and speech perception. Trends in Cognitive Sciences. 2009;13(3):110–114. doi: 10.1016/j.tics.2008.11.008.

- Lu C, Ning N, Peng D, Ding G, Li K, Yang Y, Lin C. The role of large-scale neural interactions for developmental stuttering. Neuroscience. 2009;161(4):1008–1026. doi: 10.1016/j.neuroscience.2009.04.020.

- Ludlow CL, Loucks T. Stuttering: A dynamic motor control disorder. Journal of Fluency Disorders. 2003;28(4):273–295. doi: 10.1016/j.jfludis.2003.07.001. [DOI] [PubMed] [Google Scholar]

- Mäkelä JP. Magnetoencephalography: Auditory evoked fields. In: Burkard Robert F, Eggermont Jos J, Don Manuel., editors. Auditory evoked potentials : basic principles and clinical application. Lippincott Williams & Wilkins; Philadelphia: 2007. p. 525. [Google Scholar]

- Mäkelä JP, Hari R. Evidence for cortical origin of the 40 hz auditory evoked response in man. Electroencephalography and Clinical Neurophysiology. 1987;66(6):539–546. doi: 10.1016/0013-4694(87)90101-5. [DOI] [PubMed] [Google Scholar]

- Max L, Guenther FH, Gracco VL, Ghosh SS, Wallace ME. Unstable or insufficiently activated internal models and feedback-biased motor control as sources of dysfluency: A theoretical model of stuttering. Contemporary Issues in Communication Science and Disorders. 2004 Spring;31:105–122. [Google Scholar]

- Muzik O, Chugani DC, Juhász C, Shen C, Chugani HT. Statistical parametric mapping: Assessment of application in children. NeuroImage. 2000;12(5):538–549. doi: 10.1006/nimg.2000.0651. [DOI] [PubMed] [Google Scholar]

- Neilson MD, Neilson PD. Speech motor control and stuttering: A computational model of adaptive sensory-motor processing. Speech Communication. 1987;6(4):325–333. [Google Scholar]

- Neumann K, Preibisch C, Euler HA, Gudenberg AWV, Lanfermann H, Gall V, Giraud A. Cortical plasticity associated with stuttering therapy. Journal of Fluency Disorders. 2005;30(1):23–39. doi: 10.1016/j.jfludis.2004.12.002. [DOI] [PubMed] [Google Scholar]

- Neumann K, Euler HA, Von Gudenberg AW, Giraud A, Lanfermann H, Gall V, Preibisch C. The nature and treatment of stuttering as revealed by fMRI: A within- and between-group comparison. Journal of Fluency Disorders. 2003;28(4):381–410. doi: 10.1016/j.jfludis.2003.07.003. [DOI] [PubMed] [Google Scholar]

- Nichols TE, Holmes AP. Nonparametric permutation tests for functional neuroimaging: A primer with examples. Human Brain Mapping. 2002;15(1):1–25. doi: 10.1002/hbm.1058. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Numminen J, Salmelin R, Hari R. Subject’s own speech reduces reactivity of the human auditory cortex. Neuroscience Letters. 1999;265(2):119–122. doi: 10.1016/s0304-3940(99)00218-9.

- Oldfield RC. The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- Oram Cardy JE, Ferrari P, Flagg EJ, Roberts W, Roberts TPL. Prominence of M50 auditory evoked response over M100 in childhood and autism. Neuroreport. 2004;15(12):1867–1870. doi: 10.1097/00001756-200408260-00006.

- Paetau R, Ahonen A, Salonen O, Sams M. Auditory evoked magnetic fields to tones and pseudowords in healthy children and adults. Journal of Clinical Neurophysiology. 1995;12(2):177–185. doi: 10.1097/00004691-199503000-00008.

- Perkell JS, Guenther FH, Lane H, Matthies ML, Perrier P, Vick J, Wilhelms-Tricarico R, Zandipour M. A theory of speech motor control and supporting data from speakers with normal hearing and with profound hearing loss. Journal of Phonetics. 2000;28(3):233–272.

- Picton TW, Taylor MJ. Electrophysiological evaluation of human brain development. Developmental Neuropsychology. 2007;31(3):249–278. doi: 10.1080/87565640701228732.

- Preibisch C, Neumann K, Raab P, Euler HA, Von Gudenberg AW, Lanfermann H, Giraud A. Evidence for compensation for stuttering by the right frontal operculum. NeuroImage. 2003;20(2):1356–1364. doi: 10.1016/S1053-8119(03)00376-8.

- Quraan MA, Cheyne D. Reconstruction of correlated brain activity with adaptive spatial filters in MEG. NeuroImage. 2010;49(3):2387–2400. doi: 10.1016/j.neuroimage.2009.10.012.

- Riaz N, Steinberg S, Ahmad J, Pluzhnikov A, Riazuddin S, Cox NJ, Drayna D. Genomewide significant linkage to stuttering on chromosome 12. American Journal of Human Genetics. 2005;76(4):647–651. doi: 10.1086/429226.

- Riley GD. Stuttering severity instrument for children and adults. Pro-Ed; Austin, TX: 1994.

- Roberts TPL, Ferrari P, Stufflebeam SM, Poeppel D. Latency of the auditory evoked neuromagnetic field components: Stimulus dependence and insights toward perception. Journal of Clinical Neurophysiology. 2000;17(2):114–129. doi: 10.1097/00004691-200003000-00002.

- Rojas DC, Walker JR, Sheeder JL, Teale PD, Reite ML. Developmental changes in refractoriness of the neuromagnetic M100 in children. Neuroreport. 1998;9(7):1543–1547. doi: 10.1097/00001756-199805110-00055.

- Sahin NT, Pinker S, Cash SS, Schomer D, Halgren E. Sequential processing of lexical, grammatical, and phonological information within Broca’s area. Science. 2009;326(5951):445–449. doi: 10.1126/science.1174481.

- Sekihara K, Nagarajan SS, Poeppel D, Marantz A, Miyashita Y. Reconstructing spatio-temporal activities of neural sources using an MEG vector beamformer technique. IEEE Transactions on Biomedical Engineering. 2001;48(7):760–771. doi: 10.1109/10.930901.

- Shiller DM, Sato M, Gracco VL, Baum SR. Perceptual recalibration of speech sounds following speech motor learning. Journal of the Acoustical Society of America. 2009;125(2):1103–1113. doi: 10.1121/1.3058638.

- Singh KD, Barnes GR, Hillebrand A. Group imaging of task-related changes in cortical synchronisation using nonparametric permutation testing. NeuroImage. 2003;19(4):1589–1601. doi: 10.1016/s1053-8119(03)00249-0.

- Sommer M, Koch MA, Paulus W, Weiller C, Büchel C. Disconnection of speech-relevant brain areas in persistent developmental stuttering. Lancet. 2002;360(9330):380–383. doi: 10.1016/S0140-6736(02)09610-1.

- Song L, Peng D, Jin Z, Yao L, Ning N, Guo X, Zhang T. Gray matter abnormalities in developmental stuttering determined with voxel-based morphometry. National Medical Journal of China. 2007;87(41):2884–2888.

- Suresh R, Ambrose N, Roe C, Pluzhnikov A, Wittke-Thompson JK, Ng MC, Wu X, Cook EH, Lundstrom C, Garsten M, et al. New complexities in the genetics of stuttering: Significant sex-specific linkage signals. American Journal of Human Genetics. 2006;78(4):554–563. doi: 10.1086/501370.

- Tourville JA, Reilly KJ, Guenther FH. Neural mechanisms underlying auditory feedback control of speech. NeuroImage. 2008;39(3):1429–1443. doi: 10.1016/j.neuroimage.2007.09.054.

- Tsao Y, Weismer G. Interspeaker variation in habitual speaking rate: Evidence for a neuromuscular component. Journal of Speech, Language, and Hearing Research. 1997;40(4):858–866. doi: 10.1044/jslhr.4004.858.

- Tyler MD, Tyler L, Burnham DK. The delayed trigger voice key: An improved analogue voice key for psycholinguistic research. Behavior Research Methods. 2005;37(1):139–147. doi: 10.3758/bf03206408.

- Ventura MI, Nagarajan SS, Houde JF. Speech target modulates speaking induced suppression in auditory cortex. BMC Neuroscience. 2009;10:58. doi: 10.1186/1471-2202-10-58.

- Watkins KE, Smith SM, Davis S, Howell P. Structural and functional abnormalities of the motor system in developmental stuttering. Brain. 2008;131(1):50–59. doi: 10.1093/brain/awm241.

- Weber-Fox C, Spruill JE, Spencer R, Smith A. Atypical neural functions underlying phonological processing and silent rehearsal in children who stutter. Developmental Science. 2008;11(2):321–337. doi: 10.1111/j.1467-7687.2008.00678.x.

- Wittke-Thompson JK, Ambrose N, Yairi E, Roe C, Cook EH, Ober C, Cox NJ. Genetic studies of stuttering in a founder population. Journal of Fluency Disorders. 2007;32(1):33–50. doi: 10.1016/j.jfludis.2006.12.002.

- Yairi E, Ambrose NG. Early childhood stuttering I: Persistency and recovery rates. Journal of Speech, Language, and Hearing Research. 1999;42(5):1097–1112. doi: 10.1044/jslhr.4205.1097.