Abstract

Teleradiology allows contemporaneous interpretation of imaging exams performed at some distance from the interpreting radiologist. The transmitted images are usually static. However, there is benefit to real-time review of full-motion ultrasound (US) exams as they are performed. Telesonography is the transmission of full-motion sonographic data to a remote site. We hypothesize that US exams that are compressed with the Moving Picture Experts Group version 4 (MPEG-4) compression scheme, transmitted over the Internet as streaming multimedia, decompressed, and displayed are equivalent in diagnostic accuracy to examinations read locally. MPEG-4 applies variable compression to each image frame to achieve a constant output bit rate. When less compression is applied, the bit rate rises, and the only way the encoder can then keep the bit rate within the set bandwidth is by lowering the frame rate or reducing image quality. We review the relevant technologies and industry standard components that will enable low-cost telesonography.

Teleradiology is required when a radiologist is not immediately present for a sonographic examination. It also allows contemporaneous interpretation of imaging exams performed at some distance from the interpreting radiologist, which provides expert radiologic consultation in areas underserved by radiologists. Ordinarily, the transmitted images are static. However, there is benefit to real-time review of full-motion sonographic exams as they are performed. Telesonography is the acquisition and transmission of full-motion sonographic data to a site remote from where the examination is performed. Recent articles on telesonography have discussed the transmission of static data only.1, 2 The Internet is an obvious choice for interconnecting digital imaging modalities and the Picture Archiving and Communications System (PACS) image database to the radiologist’s viewing station, and it is already commonplace to use the World Wide Web (WWW) to distribute text, audio, pictures, and movies. However, although technological advances will allow web-based teleradiology programs to become commercially available,3, 4 ultrasound (US) presents a unique challenge: the imaging is full-motion data, and static images may not always convey the true scope of the pathology. This need to review full-motion sonographic images is the basis for the common practice of a radiologist performing a focused scan after the detailed screening exam performed by the sonographer. Static images are often supplemented by closed-circuit TV monitors connected to the ultrasound machines so that a radiologist in a nearby reading room can observe the full-motion imaging in real time.

Streaming multimedia has made significant advances in the last three years; it is now routine to find multimedia databases on high-end servers and sophisticated multimedia players embedded in personal computer operating systems and web browsers. When the radiologist cannot be present for a sonogram, streaming multimedia used within the teleradiology or telesonography model will give the interpreting radiologist information that is not available from static sonographic images. With the continued digitization of radiologic imaging modalities such as US, full-motion ultrasound video clips can now be saved in the DICOM format. DICOM (Digital Imaging and Communications in Medicine) is the standard file format for medical images such as digital radiographic images. The current standard, DICOM version 3.0, allows full-motion sonographic video clips to be captured as DICOM “movies.” These movies can then be converted into streaming multimedia and distributed over the Internet for interpretation at some distance from where the examination is performed.

Our broad, long-term objective is to determine whether the hardware environment of the Internet, coupled with the streaming multimedia software support provided by modern servers and browsers and image compression algorithms, is suitable for the storage, retrieval, compression, transmission, decompression, and display of video medical images without loss of diagnostic accuracy. In this article, we review the relevant technologies that will make telesonography a viable component of the teleradiology paradigm.

Making telesonography work depends upon many factors, including transmission bandwidth, image compression scheme, degree of image compression, spatial resolution and contrast, characteristics of the display device, and cost. Many of these variables can be held constant while the transmission bandwidth is varied, so that its effect on the image quality and diagnostic accuracy of grayscale full-motion ultrasound imaging data can be observed. If our conjecture is correct, this opens a vast new opportunity to use low-cost and industry standard components (eg, multimedia databases, multimedia players embedded in web browsers, commercial compression techniques) as an enabler for telesonography. Further research would be required to determine the range of acceptable values for each parameter that characterizes a reliable system with acceptable diagnostic accuracy. No patient data were used for this proposal, and no Institutional Review Board (IRB) approval was needed.

BACKGROUND OF APPLICATION

The Internet has become a commonplace feature in hospitals and doctors’ offices. It is used routinely for electronic messaging (e-mail) and for access to medical information. In some hospitals, the Internet is the communications backbone for patient information, medical records, and PACS. Web-based teleradiology programs are commercially available from many of the major PACS vendors,5 including the enterprise-wide PACS in service at our institution.6 However, most commercial PACS solutions that support teleradiology still lack telesonography functionality. Even in their novel approach to “real-time” radiology, Kinosada et al7 do not consider telesonography applications. The authors recognize the value of real-time review of full-motion sonographic images, but they accomplished their brand of “telesonography” by transmitting the analog video signal rather than by capturing the native DICOM video, and the physician interacts by telephone with the sonographer, who performs the scan remotely.

The Internet and WWW provide vital support for radiologic education through the Radiological Society of North America (RSNA) at http://www.rsna.org. On its “Practice Resources” web site, RSNA provides an extensive archive of practice cases from a variety of modalities such as computed radiography (CR), computed tomography (CT), digital radiography (DR), magnetic resonance (MR), nuclear medicine (NM), and US, and presents each case as a patient history with one or more images from an appropriate diagnostic examination. The RSNA site delivers the text and images in common formats suitable for display by modern web browsers. In addition, the American College of Radiology publishes a multimedia database of static and dynamic cine loop images to be used by radiology residents as examples of pathology that might be encountered during the American Board of Radiology (ABR) certification examination.

The use of web resources for practice readings raises the question of whether a carefully selected set of Internet resources (eg, multimedia databases, new image compression algorithms, web browsers with embedded players for multimedia images, steadily increasing network bandwidth, high-resolution and high-contrast flat panel displays) could be utilized to realize reliable yet low-cost, real-time full-motion telesonography.

Sonography has become indispensable in the daily evaluation of many common diagnostic challenges. It is the first-line test for indications such as right upper quadrant (RUQ) pain, acute renal failure, or a suspected adnexal mass in a female patient with or without pelvic pain.8 Sonography is a relatively inexpensive diagnostic test with high accuracy for diagnosing gallstones,9 hydronephrosis,10 and ovarian pathology.11 However, a radiologist may not always be present at the point of care for a number of reasons (eg, underserved area, staffing limitations, limited experience, war zone). When the radiologist is not physically present for the exam, the added information provided by review of full-motion imaging, or by the radiologist performing directed scanning, is forfeited.

Chan et al12 make a compelling argument for the involvement of physicians in ultrasound scanning. They reviewed 1,510 consecutive exams performed in their ultrasound laboratory and compared the findings of their sonographers with those of the radiologists who performed a directed sonographic exam after the initial screening exam. In cases in which a major or minor new diagnosis was made from the US scan, concordance rates between the sonographers and the final physician interpretation were low (36% and 32%, respectively). The physicians found that the sonographers committed several types of error: (1) nonvisualization of a finding that was later discovered by the physician performing a repeat directed scan, (2) interpretation of a recognized abnormality differently from the physician, (3) failure to integrate the clinical information with the sonographic findings, and (4) any combination of the above three errors. Seven percent of these discordant cases were considered of major clinical importance in the patient’s management.12 Thus, in the teleradiology model, real-time telesonography, in which the radiologist observes the full-motion video as the exam is performed, is highly desirable compared with simple review of static images and could avoid some of the potential pitfalls of interpretation elucidated by Chan et al.12 In our paradigm, the radiologist could review the streaming media full-motion exam as the sonographer performs it. If clarifications are needed, the radiologist could direct the sonographer over the telephone during the scan. The separation of the radiologist’s eyes from the sonographer’s hand would still limit the study, but to a lesser extent than static images do.

Clearly, some parameters could conspire to make telesonography impossible: the images could be too large, the network bandwidth too small, the images could lose diagnostic quality after compression, or the display could have inadequate resolution or contrast for reliable interpretation. There are many examples in the literature of institutions that have tried to transmit real-time full-motion sonographic imaging data over a network (ISDN–Integrated Services Digital Network, ATM–Asynchronous Transfer Mode, etc.),13, 14, 15 but none has exploited the WWW, with its advanced web browsers, integrated streaming multimedia capabilities, and ever-increasing bandwidth. But what if the parameters were controlled so that it was known in advance that streaming multimedia, using a network of known capabilities, an image compression algorithm with known characteristics, and a display device with proven resolution and contrast, could reliably deliver images without loss of diagnostic accuracy? If this can be done, it would be the enabler for low-cost telesonography with worldwide applications.

This, then, is our goal. We hypothesize that there exists a parametric combination of network bandwidth, compression algorithm, image rendering software, and image display characteristics that will allow us to achieve the same diagnostic accuracy using streaming multimedia over the Internet that we would otherwise achieve from reading the images locally from a PACS.

EXISTING KNOWLEDGE

Video encoding is practical for common use because of the standards currently available. The International Telecommunication Union (ITU) is an agency of the United Nations.16 Its Telecommunication Standardization Sector (ITU-T) was known as CCITT until 1993. The ITU publishes about 5,000 pages of recommendations a year, and its series of videoconferencing standards is named “H.xxx” (H-dot-number). The International Organization for Standardization (ISO) was founded in 1946 and is a member of ITU-T; the U.S. representative in ISO is the American National Standards Institute (ANSI). Other standards bodies involved in video encoding are the National Institute of Standards and Technology (NIST) and the Institute of Electrical and Electronics Engineers (IEEE). Two important medical standards are Digital Imaging and Communications in Medicine (DICOM)17, 18 for images and Health Level Seven (HL7) for text data. DICOM was originally created as a collaboration between the American College of Radiology (ACR) and the National Electrical Manufacturers Association (NEMA) to facilitate the exchange of imaging data between multiple imaging modalities (CR, CT, MRI, NM, US, etc) and between image storage devices (eg, PACS archives) and image display stations.

The history of encoding video is extensive19 and begins with the first attempts in the 1960s. An analog videophone was implemented, but even with a large communications bandwidth it delivered only postage-stamp-sized black-and-white images. A closed-circuit TV system was evaluated at UCLA connecting an outpatient area and the radiology department, and a closed-circuit TV system connected Massachusetts General Hospital and Logan International Airport; in both instances these video systems were judged acceptable.

In the 1980s, the development of digital video began. The COST211 video codec (ie, coder–decoder), based on differential pulse code modulation (DPCM), was standardized by CCITT as H.120. It required a bit rate of 1.544 Mbps and offered very good spatial resolution because DPCM operates pixel by pixel, but its temporal quality was very poor. H.120 showed that improving image quality without exceeding the target bit rate required spending less than one bit per pixel, which is possible only when groups (blocks) of pixels are coded together; this realization initiated the design of block-based codecs. ITU-T then solicited new designs for block-based codecs and received 15 proposals [14 based on the discrete cosine transform (DCT) and one based on vector quantization]. ITU-T selected the DCT because, during 1984–1988, the Joint Photographic Experts Group (JPEG) had chosen the DCT20 as the main unit of compression for still images. By the late 1980s, the recommended ITU-T videoconference codec combined interframe DPCM, for minimum coding delay, with the DCT. The standard was completed in 1989 and named H.261. Its coding method is called P × 64 (P-times-64) Kbps, where P takes values from 1 to 30, so video rates range from 64 Kbps to 1,920 Kbps.
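The P × 64 rule is simple enough to tabulate directly. The short Python sketch below (the function name `px64_rate_kbps` is ours, for illustration only) lists the H.261 rates; P = 2, for example, corresponds to 128 Kbps, the combined capacity of the two 64-Kbps B channels of a basic-rate ISDN line.

```python
def px64_rate_kbps(p: int) -> int:
    """H.261 'P x 64' video bit rate in Kbps for a multiplier p between 1 and 30."""
    if not 1 <= p <= 30:
        raise ValueError("p must be between 1 and 30")
    return p * 64


rates = [px64_rate_kbps(p) for p in range(1, 31)]
print(rates[0], rates[1], rates[-1])  # 64 128 1920
```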

In the early 1990s, the Moving Picture Experts Group (MPEG) began investigating coding techniques for the storage of video, using the basic framework of the H.261 standard as a starting point for the codec design. The resulting standard, MPEG-1,21 compressed the video stream to between 1.2 and 1.5 Mbps. MPEG-1 uses three types of frame: I-frames (intracoded, independent frames) provide periodic anchors in the stream; P-frames are predicted from the preceding anchor frame, so that only the motion-compensated interframe differences need to be encoded rather than absolute values; and B-frames are bidirectionally predicted from both the preceding and the following anchor frames, which further improves compression. MPEG-1 was proposed as a standard for video coding on digital storage media (CD, DVD, DAT–Digital Audio Tape, optical media) and is thus a technological competitor to videotape.
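Because a B-frame depends on an anchor that is displayed after it, the encoder must transmit that later anchor first. The Python sketch below is a simplified illustration, not the MPEG-1 bitstream syntax: it reorders a hypothetical group of pictures from display order into decoding order.

```python
def decode_order(display: str) -> list[tuple[int, str]]:
    """Reorder frame types from display order into transmission/decoding order.

    Each B-frame is predicted from the anchor (I or P) before it and the
    anchor after it, so the later anchor must be sent before the B-frames.
    Returns (display_index, frame_type) pairs in decoding order.
    """
    out: list[tuple[int, str]] = []
    pending_b: list[tuple[int, str]] = []
    for idx, ftype in enumerate(display):
        if ftype in "IP":
            out.append((idx, ftype))   # send the anchor first...
            out.extend(pending_b)      # ...then the B-frames that needed it
            pending_b.clear()
        elif ftype == "B":
            pending_b.append((idx, ftype))
        else:
            raise ValueError(f"unknown frame type {ftype!r}")
    out.extend(pending_b)  # trailing B-frames would really wait for the next GOP's I-frame
    return out


order = decode_order("IBBPBBP")
print("".join(t for _, t in order))  # IPBBPBB
print([i for i, _ in order])         # [0, 3, 1, 2, 6, 4, 5]
```

The decoder buffers the anchors and puts the frames back into display order, which is one reason B-frames add a small delay.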

MPEG-2,22 developed for coding interlaced video at bit rates of 4–9 Mbps, was adopted by ITU-T under the generic name H.262. H.262/MPEG-2 can code a wide range of resolutions and bit rates, from SIF (352 horizontal pixels × 288 vertical pixels × 25 frames/second) to HDTV (1920 × 1250 × 60). MPEG-2 allows a receiver to decode a subset of the full bit stream in order to display an image sequence with reduced quality and reduced spatial and temporal resolution. MPEG-2 was so successful at the high end that it subsumed the role of MPEG-3, which was originally intended for HDTV.
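To put the SIF figures in perspective, the sketch below estimates the uncompressed bit rate of SIF video. It assumes 8-bit samples with 4:2:0 chroma subsampling (12 bits per pixel on average); that sampling assumption is ours, not stated above.

```python
def raw_bitrate_bps(width: int, height: int, fps: float, bits_per_pixel: int = 12) -> float:
    """Uncompressed video bit rate in bits per second.

    bits_per_pixel=12 assumes 8-bit samples with 4:2:0 chroma subsampling:
    8 bits of luma per pixel plus two chroma planes at quarter resolution.
    """
    return width * height * bits_per_pixel * fps


sif = raw_bitrate_bps(352, 288, 25)
print(sif)                    # 30412800
print(round(sif / 1.5e6, 1))  # 20.3  (compression ratio needed for 1.5 Mbps)
print(round(sif / 1.2e6, 1))  # 25.3  (compression ratio needed for 1.2 Mbps)
```

At roughly 30 Mbps uncompressed, reaching the 1.2–1.5 Mbps range of MPEG-1 implies a compression ratio of roughly 20:1 to 25:1 under these assumptions.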

The goal of MPEG-4,23 whose video coding builds on the ITU-T H.263 standard, was to specify a coded representation that can be used for compressing the moving picture components of audiovisual services at low bit rates. Applications include mobile networks, the public switched telephone network (PSTN), and narrowband ISDN. MPEG-4 video also aims to provide tools and algorithms for efficient storage, transmission, and manipulation of video data in multimedia environments. These are to be applied to three fields of multimedia, including digital television and interactive multimedia (WWW, distribution of and access to image content). Coding of video for multimedia applications relies on a content-based visual data representation of scenes.

MPEG-7 was started in October 1996. MPEG-7 seeks to standardize the description of multimedia content so that multimedia databases can be searched. An example might be searching for a video clip of a “lady with a red hat waiting for a taxi.” The name MPEG-7 is rumored to be derived from “MPEG-1 + MPEG-2 + MPEG-4 = MPEG-7.”

The developed principles of video compression are based on three redundancy reduction principles:

Spatial redundancy reduction: reduce spatial redundancy among the pixels within an image by employing data compression techniques such as transform coding.

Temporal redundancy reduction: remove similarities between successive images (frames) by coding their differences rather than their values.

Entropy coding: reduce the redundancy between the compressed data symbols themselves by using variable length coding techniques.
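The temporal and entropy principles can be illustrated with a toy example. The sketch below uses two hypothetical one-dimensional "frames" in place of real images, codes the second frame as its difference from the first, and compares the Shannon entropy (the lower bound, in bits per symbol, that a variable-length code can approach) before and after.

```python
import math
from collections import Counter


def entropy_bits(symbols):
    """Shannon entropy in bits per symbol: the bound a variable-length code can approach."""
    counts = Counter(symbols)
    n = len(symbols)
    return -sum(c / n * math.log2(c / n) for c in counts.values())


# Two hypothetical one-dimensional "frames": 64 distinct pixel values, then
# the same row with a small local change (as if a structure moved slightly).
frame1 = [(10 * x) % 256 for x in range(64)]
frame2 = list(frame1)
frame2[20:24] = [v + 5 for v in frame2[20:24]]

# Temporal redundancy reduction: send frame2 as its difference from frame1.
residual = [b - a for a, b in zip(frame1, frame2)]

print(round(entropy_bits(frame2), 2))    # 6.0  bits/symbol if coded directly
print(round(entropy_bits(residual), 2))  # 0.34 bits/symbol for the difference
```

The difference signal is mostly zeros, so an entropy coder can represent it with far fewer bits than the frame itself.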

In the coding of still images, the JPEG standard24 specifies two classes of encoding and decoding: lossless compression and lossy compression. Lossless compression is based on a simple predictive DPCM method using neighboring pixel values, while the DCT is employed for the lossy mode. The DPCM encoder achieves redundancy reduction by predicting the value of each pixel from previously coded values and then coding the prediction error (the difference between the predicted and actual values). The lossy compression used in the JPEG standard defines three compression modes: (1) baseline sequential, (2) progressive mode, and (3) hierarchical mode.
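A minimal sketch of lossless DPCM on a single row of 8-bit pixels follows. It assumes the left-neighbour predictor (one of the predictors the JPEG lossless mode offers) and predicts the first sample as 128, the midpoint of the 8-bit range; a full codec would also entropy-code the errors and handle two-dimensional prediction.

```python
def dpcm_encode(row, first_prediction=128):
    """Lossless DPCM: predict each pixel from its left neighbour and keep only
    the prediction error. The first pixel is predicted as first_prediction."""
    errors, prediction = [], first_prediction
    for px in row:
        errors.append(px - prediction)  # code the error, not the prediction
        prediction = px                 # next prediction = this (left) pixel
    return errors


def dpcm_decode(errors, first_prediction=128):
    """Invert dpcm_encode by adding each error back to its prediction."""
    row, prediction = [], first_prediction
    for e in errors:
        px = prediction + e
        row.append(px)
        prediction = px
    return row


row = [100, 102, 103, 103, 104, 110, 111, 111]
err = dpcm_encode(row)
print(err)                      # [-28, 2, 1, 0, 1, 6, 1, 0]
assert dpcm_decode(err) == row  # exact reconstruction: no information lost
```

The errors cluster near zero for smooth image content, which is what makes the subsequent entropy coding effective.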

In the baseline sequential mode, the image is partitioned into 8 × 8-pixel blocks, which are processed from left to right and top to bottom. Each block is DCT coded, and all 64 transform coefficients are quantized to the desired quality. In the progressive mode, the components are encoded in multiple scans: compressed data for each component are placed in a minimum of 2 and as many as 896 scans. The initial scans create a rough version of the image, while subsequent scans refine it (progressive scanning). The hierarchical mode is a super-progressive mode in which the image is broken down into a number of subimages called frames (a frame is a collection of one or more scans).
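The baseline pipeline (8 × 8 DCT, then quantization) can be sketched in plain Python. This is an illustrative, unoptimized implementation assuming orthonormal DCT scaling and a single uniform quantizer step; real JPEG encoders also level-shift samples by 128 and use a per-coefficient quantization table. For a smooth test block, almost all of the 64 coefficients quantize to zero, which is where the compression comes from.

```python
import math

N = 8  # JPEG block size


def _c(k):
    # Orthonormal scaling factor for the DCT-II
    return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)


def dct2(block):
    """2-D DCT-II of an 8x8 block given as block[x][y]; returns coeffs[u][v]."""
    return [[_c(u) * _c(v) * sum(
                block[x][y]
                * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                * math.cos((2 * y + 1) * v * math.pi / (2 * N))
                for x in range(N) for y in range(N))
             for v in range(N)] for u in range(N)]


def idct2(coeffs):
    """Inverse of dct2 (DCT-III with the same orthonormal scaling)."""
    return [[sum(
                _c(u) * _c(v) * coeffs[u][v]
                * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                * math.cos((2 * y + 1) * v * math.pi / (2 * N))
                for u in range(N) for v in range(N))
             for y in range(N)] for x in range(N)]


def quantize(coeffs, step):
    """Uniform quantization: this rounding is where information is lost."""
    return [[round(c / step) for c in row] for row in coeffs]


def dequantize(q, step):
    return [[c * step for c in row] for row in q]


# A smooth, hypothetical test block (a gentle intensity ramp).
smooth = [[128 + 4 * x + 2 * y for y in range(N)] for x in range(N)]
q = quantize(dct2(smooth), step=16)
nonzero = sum(1 for r in q for c in r if c != 0)
print(nonzero)  # 3 of 64 coefficients survive quantization

approx = idct2(dequantize(q, step=16))
max_err = max(abs(a - b) for ra, rb in zip(approx, smooth) for a, b in zip(ra, rb))
print(max_err)  # small but nonzero: the loss introduced by quantization
```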

The emergence of streaming media offers a unique opportunity to transmit ultrasound and other medical imaging video, audio, and still frame images across the Internet over cable modems and digital subscriber lines (DSL). A stream originates on a media server connected to the Internet or an intranet and is displayed by a media player on the end user’s computer. To achieve this transfer, the data must be in a form that can be handled by routers, modems, browsers, and other components of the network being used. The media server streams the video, audio, or still frames in packets; when the media player receives the stream, it disassembles the packets and renders the data as images and sound. The rules that govern how the packets are assembled and disassembled are contained in the various Internet protocols. Multimedia streams are often packetized using the industry standard User Datagram Protocol (UDP).
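The packet mechanism can be sketched with Python's standard `struct` module. The 6-byte header (a sequence number and a payload length) and the 1,200-byte payload size are hypothetical choices for illustration, not the ASF or UDP packet layout. UDP itself does not guarantee ordering, so protocols layered on it, such as RTP, carry sequence numbers that let the receiver restore order, as this sketch does.

```python
import random
import struct

HEADER = struct.Struct("!IH")  # hypothetical header: 32-bit sequence number, 16-bit payload length
MAX_PAYLOAD = 1200             # assumed payload size, small enough for a typical 1,500-byte Ethernet frame


def packetize(data: bytes) -> list[bytes]:
    """Split encoded media data into numbered packets."""
    packets = []
    for seq, start in enumerate(range(0, len(data), MAX_PAYLOAD)):
        chunk = data[start:start + MAX_PAYLOAD]
        packets.append(HEADER.pack(seq, len(chunk)) + chunk)
    return packets


def reassemble(packets: list[bytes]) -> bytes:
    """Reorder packets by sequence number and concatenate their payloads.
    This sketch handles reordering only; lost packets would leave a gap."""
    parts = {}
    for pkt in packets:
        seq, length = HEADER.unpack_from(pkt)
        parts[seq] = pkt[HEADER.size:HEADER.size + length]
    return b"".join(parts[seq] for seq in sorted(parts))


frame = bytes(range(256)) * 20  # 5,120 bytes of stand-in "encoded video"
pkts = packetize(frame)
random.shuffle(pkts)            # simulate out-of-order arrival
assert reassemble(pkts) == frame
print(len(pkts))                # 5
```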

IDENTIFICATION OF GAPS

The reliable distribution of MPEG-4 compressed streaming video over the Internet requires resolving the following knowledge gaps:

MPEG-4 utilizes lossy compression. Does the amount of loss reduce diagnostic accuracy?

What security methods must be implemented to meet Health Insurance Portability and Accountability Act (HIPAA) privacy requirements?

Can the bit rate needed to avoid loss of diagnostic accuracy be sustained on the commercial Internet?

Figure 1 illustrates the elements of streaming media production from beginning to end. During the content creation phase, audio and/or video content is created and edited. To prepare for streaming, the content must be encoded on the media encoder PC. The media server is designed specifically for streaming media content; however, a standard web server can also be used to host ASF files (ASF stands for Advanced Streaming Format; see the Appendix). This file type organizes data into small packets that can be streamed and/or stored to disk.

Figure 1.

Streaming media.

The media server meters the delivery of packets according to the feedback it receives while sending a stream to a client, and it uses this feedback to control bandwidth by monitoring the streams. The media player then renders the streaming data for display; it can play content in a standalone configuration or embedded in a web page. Advanced presentations can synchronize text, images, audio, and video with other items on the web page. When creating multiple-bit-rate content, the media encoder produces ASF content containing several video streams encoded at different bit rates together with a separately encoded audio stream. For low-bandwidth clients, the video streams can range from 18 to 300 Kbps; for high-bandwidth clients, they can range from 81 Kbps to 10 Mbps. The video codec currently employed is MPEG-4; the newest version of the Media Player25 in Microsoft® Windows will decompress and play MPEG-4 encoded digital media content.
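How a server or player might choose among multiple-bit-rate streams can be sketched as follows. The selection rule (take the highest-rate stream that fits within 80% of the measured client bandwidth) and the stream lists are hypothetical; they illustrate the idea and are not the actual Windows Media algorithm.

```python
def select_stream(available_kbps, measured_kbps, headroom=0.8):
    """Pick the highest-bit-rate stream that fits within a fraction (headroom)
    of the measured client bandwidth; fall back to the lowest stream otherwise."""
    budget = measured_kbps * headroom
    fitting = [r for r in available_kbps if r <= budget]
    return max(fitting) if fitting else min(available_kbps)


low_band = [18, 56, 100, 300]        # hypothetical set within the 18-300 Kbps range
high_band = [81, 300, 1200, 10000]   # hypothetical set within the 81 Kbps-10 Mbps range

print(select_stream(low_band, 128))    # 100
print(select_stream(low_band, 20))     # 18 (nothing fits; use the lowest stream)
print(select_stream(high_band, 2000))  # 1200
```

Leaving headroom below the measured bandwidth is one simple way to absorb fluctuations on a shared network without stalling playback.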

In summary, MPEG-4 compresses video to fit a fixed bandwidth, and the streaming media process enables the creation and management of streaming content that combines video, audio, and still images.
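Holding the bandwidth fixed forces the trade-off between image quality and frame rate described in the Abstract. The toy constant-bit-rate controller below makes it concrete. It assumes, purely for illustration, that a frame's coded size is a hypothetical "complexity" value divided by the quantizer step, and the quantizer limits are illustrative; actual MPEG-4 rate control is more elaborate. The controller coarsens quantization (lowering image quality) until a frame fits its share of the bit rate and drops the frame (lowering the frame rate) when even the coarsest step is not enough.

```python
def encode_cbr(complexities, bitrate_bps, fps, q_min=2, q_max=31):
    """Toy constant-bit-rate controller.

    Hypothetical model: coded size in bits = complexity / q, where q is the
    quantizer step (larger q = coarser quantization = lower quality).
    Returns (q, bits) per frame, or None for a dropped frame.
    """
    budget = bitrate_bps / fps  # bits available per frame
    decisions = []
    for c in complexities:
        q = q_min
        while c / q > budget and q < q_max:
            q += 1  # trade image quality for bits
        if c / q > budget:
            decisions.append(None)  # still too large at the coarsest step: drop the frame
        else:
            decisions.append((q, c / q))
    return decisions


# 1.2 Mbps at 15 frames/s leaves 80,000 bits per frame.
print(encode_cbr([400_000, 1_600_000, 4_000_000], 1_200_000, 15))
# [(5, 80000.0), (20, 80000.0), None]
```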

IMPORTANCE AND HEALTH RELEVANCE

Why enable worldwide teleradiology of assured quality? The benefits of review of full-motion sonography by a qualified radiologist are obvious. Because many structures have low inherent sonographic spatial and contrast resolution, the best way to distinguish one structure from another is often by motion: things that move together go together. However, a radiologist may not always be present in underserved areas. In addition, review of static images can be limited by the quality of the images and the experience of the sonographer obtaining them. Full-motion image data may in fact improve patient care by increasing the radiologist’s confidence in his or her diagnosis.

PREVIOUS WORK

In our previous exploratory work during 1995–1997,26, 27, 28 we investigated whether JPEG and motion JPEG (frame-by-frame JPEG compression) were suitable for full-motion sonography of uterine fibroids. Sixty-seven clinical ultrasound examinations (including both screen-capture still images and movie clips selected by the technologist) were subjected to motion JPEG compression at three levels (none, 9:1, and 15:1), then randomized and submitted to seven radiologists for reading over a multiweek period. Receiver Operating Characteristic (ROC) analysis showed no significant difference in accuracy of detection of fibroid tumors at any of the three levels of compression. The significance of the study was that JPEG image compression techniques were clinically usable (in this particular setting) and that the 15:1 bandwidth reduction achieved with motion JPEG made the difference between achievable and unachievable data rates on the hospital’s local area network used for data distribution.

In 1996–1997, we29, 30 conducted a similar experiment using MPEG-2 compression in place of motion JPEG, with the MPEG-2 (variable) compression set to achieve a constant output rate of 5 Mbps. Results were demonstrated at RSNA 1996.31 About 50 attendees observed a collection of ten sonographic examinations and 90% of them opined that the quality of the resulting video clips was sufficiently high to achieve reliable diagnosis (no formal ROC study was attempted).

Bubash–Faust and Bassignani30 digitized and scanned 20 normal chest X-rays and 20 chest X-rays showing interstitial lung disease. The films were digitized with a Lumisys diagnostic-quality digitizer and scanned with an Epson flatbed scanner with a transparency adapter. All three media (the original chest X-ray and the digitized and scanned versions) were presented to three reviewers for interpretation. The reviewers were asked, “Is there evidence for interstitial lung disease on this image?” They also rated their confidence in their diagnosis and indicated which image they preferred. The authors found that reviewers preferred the original chest X-ray for interpretation but that there was no statistically significant difference in the reviewers’ diagnoses among the three media types. The goal of this research was to show that low-cost flatbed scanners with transparency adapters could serve as a means to get analog images into resident teaching files without loss of diagnostic quality. The results also suggest that digital conversion, and by extension digital compression techniques, may be applied to other radiologic images (eg, full-motion ultrasound) without loss of diagnostic accuracy.

CURRENT EXPLORATIONS

In 2001, Weaver et al32 conducted a proof-of-concept experiment for the University of Virginia’s Office of Telemedicine. They performed a digital capture of an ultrasound examination in pediatric cardiology, compressed that examination using MPEG-4 to achieve a constant output rate of 1.2 Mbps, and then produced a CD-ROM containing the compressed exam and the multimedia player for it. This compressed movie clip was then used informally to assess whether the resulting images were diagnostically useful; observers indicated that they were.

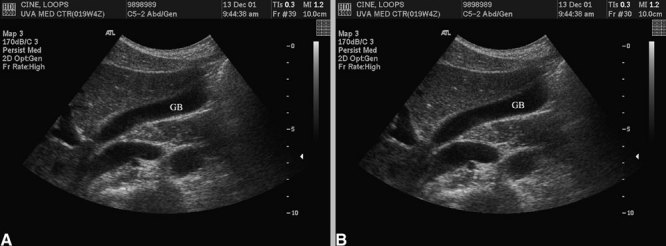

As an example of the potential of this concept, we captured and de-identified one 4-s (60 US frames) examination of the gallbladder and one 4-s (60 US frames) examination of the kidney. Both examinations were recorded in their standard DICOM format and then compressed using MPEG-4.

Figure 2a is a screenshot of frame 39 from the file gallbladder.dcm and represents the true DICOM image as originally captured by the US machine. Figure 2b is a screenshot of frame 30 from the file gallbladder.wmv and represents the image seen by the radiologist after the DICOM input has been compressed and decompressed with MPEG-4 using standard Microsoft Windows Media Server software. For this figure, the MPEG-4 compression software was set to achieve a constant video output rate of 1.2 Mbps. The view of the gallbladder in Figure 2b (the MPEG-4 version) is slightly less sharp than that in Figure 2a (the DICOM version) because of the quantization of discrete cosine transform coefficients inherent to MPEG-4. It is our hypothesis that this small loss of image sharpness will not degrade diagnostic accuracy.

Figure 2.

(a) Frame 39 from DICOM examination of gallbladder (GB). (b) Frame 30 from MPEG-4 compressed/decompressed examination of gallbladder.

ENCODING METHOD FOR MPEG-4

Using the MPEG-4 encoder software provided with the Microsoft Windows Media Server, the time required for encoding is typically five times the run-time of the raw DICOM image file, so a 4-s DICOM file can be MPEG-4 encoded in about 20 s. The advantage of this approach is that we save the expense of MPEG-4 encoder hardware, thus increasing the ubiquity and lowering the cost of the resulting clinical system. In this article, we are discussing real-time review of full-motion sonography. In fact, “near-real-time” might be a more accurate characterization since there is a 20-s delay introduced as the data are compressed with our MPEG-4 software compressor.

INTERNET TRANSMISSION

In our preliminary study, a 4-s DICOM examination created an 18-MB file; the higher-bandwidth (1.2 Mbps) MPEG-4 file was 800 kB. The University of Virginia’s network consists of a 622-Mbps ATM backbone with 100-Mbps Ethernet local area network segments. Under normal workloads on our shared 100-Mbps Ethernet segment, the multimedia server can deliver the DICOM file in about 5 s and the compressed MPEG-4 file in about 1 s. Exams could be stored on the receiving PC for review at the radiologist’s convenience; however, as we propose, near-real-time review of the full-motion data would be optimal, allowing the radiologist to direct the scan by telephone conversation with the sonographer while the exam is performed.
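The figures reported above can be checked with a few lines of arithmetic. The sketch assumes 1 MB = 10^6 bytes and computes ideal transfer times for an idle, fully used 100-Mbps link; the observed 5 s and 1 s are longer, presumably reflecting shared traffic and protocol overhead. It also folds in the roughly 20-s software encoding delay described in the encoding section.

```python
# Values reported for the 4-s test clips (1 MB taken as 10**6 bytes).
duration_s = 4.0
frames = 60
dicom_bytes = 18e6     # 18-MB DICOM file
mpeg4_bytes = 800e3    # 800-kB MPEG-4 file encoded at 1.2 Mbps
mpeg4_bps = 1.2e6
lan_bps = 100e6        # shared 100-Mbps Ethernet segment
encode_factor = 5      # software encoding takes ~5x the clip's run time

fps = frames / duration_s                    # 15 frames/s
bits_per_frame = mpeg4_bps / fps             # 80,000 bits (10 kB) per frame
ratio = dicom_bytes / mpeg4_bytes            # 22.5:1 overall size reduction
ideal_dicom_s = dicom_bytes * 8 / lan_bps    # ~1.44 s on an idle, fully used link
ideal_mpeg4_s = mpeg4_bytes * 8 / lan_bps    # ~0.064 s
encode_delay_s = encode_factor * duration_s  # ~20 s before the clip can be streamed

print(f"{fps:.0f} fps, {bits_per_frame:.0f} bits/frame, {ratio:.1f}:1")
print(f"ideal transfer: DICOM {ideal_dicom_s:.2f} s, MPEG-4 {ideal_mpeg4_s:.3f} s")
print(f"encoding delay: {encode_delay_s:.0f} s")
```

Under these figures the encoding step, not network transfer, dominates the delay, which is why the result is better described as near-real-time.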

CONCLUSION

Using the teleradiology paradigm, telesonography is feasible with today’s Internet, which can stream near-real-time, full-motion sonographic image data to radiologists at a distance from where the original US examination was performed. This opens a vast new opportunity to use low-cost and industry standard components (eg, multimedia databases, multimedia players embedded in web browsers, commercial compression techniques) as an enabler for telesonography. Further research is required to establish with scientific certainty that these techniques do not degrade the diagnostic accuracy of the sonographic image data.

APPENDIX: GLOSSARY OF TERMS

Audio Interchange File Format (AIFF)

AIFF files generally end with a .aif extension. One of the older formats, AIFF stores and transmits sound as digitized waveform samples. AIFF was originally developed to store audio files on Macintosh computers.

Advanced Streaming Format (ASF)

The structure of the advanced streaming format is particularly suited for streaming audio, video, images, and text over a network. The ASF specification calls for data to be organized into small packets which can be streamed or saved as a file.

Bandwidth

The data transfer capacity, or bit rate capacity, of a digital communication system such as the Internet. Bandwidth is expressed as the number of bits transferred per second (bps).

Bit Rate

The speed at which binary content is streamed on a network. Bit rate is usually measured in kilobits per second (Kbps). The bit rate of an .asf file or live stream is determined during the encoding process, when the streaming content is created.

Client

On a local area network or the Internet, a computer that accesses shared network resources provided by another computer (called a server).

Codec

Short for compressor/decompressor. Hardware or software that can compress and decompress audio or video data. Codecs are used to decrease the bit rate of media so that it can be streamed over a network.

Compression

The coding of data to reduce file size or the bit rate of a stream. A codec contains the algorithms for compressing and decompressing audio, video, and still frame images. With a lossless compression scheme, no data are lost in the compression/decompression process. With a lossy compression scheme, data may be lost or in some way changed. Most codecs used for streaming media use lossy compression.

File Transfer Service (FTS)

A feature that multicasts files over a network to an ActiveX control on a client computer.

Intelligent Streaming

A set of features that automatically detect network conditions and adjust the properties of a video stream to maximize quality.

Interframe Compression

A video compression technique that reduces redundancy in frame-to-frame content. Interframe compression can be used to produce a very low bit rate stream that is suitable for streaming on the Internet. Video frames are compressed into either key frames, which contain a complete image, or delta frames, which follow key frames and contain only the parts of the key frame that have changed. The MPEG-4 video codec uses interframe compression.

Multimedia

A type of content, including audio, video, and images, that can be played or displayed on a computer.

Packet

A unit of information transmitted as a whole from one device to another on a network. A packet consists of binary digits representing both data and a header. When an ASF stream is created, audio, video, script commands, markers, and control and header information are encoded into a sequence of packets according to the ASF specification.
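
The sketch below splits a byte stream into packets, each consisting of a small header followed by a data payload, and reassembles them in order even if they arrive out of sequence. The header layout (a 4-byte sequence number and a 2-byte payload length, big-endian) and the 1024-byte payload size are hypothetical and do not follow the ASF packet format; only the standard `struct` module is used.

```python
import struct
from typing import List

# Hypothetical header: 4-byte sequence number + 2-byte payload length (big-endian).
HEADER = struct.Struct(">IH")


def packetize(data: bytes, payload_size: int = 1024) -> List[bytes]:
    """Split `data` into packets of header + up to `payload_size` bytes of payload."""
    packets = []
    for seq, start in enumerate(range(0, len(data), payload_size)):
        chunk = data[start:start + payload_size]
        packets.append(HEADER.pack(seq, len(chunk)) + chunk)
    return packets


def reassemble(packets: List[bytes]) -> bytes:
    """Parse each header, order packets by sequence number, and join the payloads."""
    parsed = []
    for pkt in packets:
        seq, length = HEADER.unpack_from(pkt, 0)
        parsed.append((seq, pkt[HEADER.size:HEADER.size + length]))
    parsed.sort(key=lambda item: item[0])
    return b"".join(payload for _, payload in parsed)
```

For example, a 2,560-byte buffer becomes three packets of 1,030, 1,030, and 518 bytes (6 header bytes plus payload each), and `reassemble` restores the original bytes even when the packets are supplied in reverse order.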

Player

A program that renders multimedia content, typically images, video, and audio. ASF content is rendered using a media player.

Port

A number, carried in the TCP or UDP header of an IP packet, that directs the packet to a particular process on a computer connected to a network. Well-known port numbers are associated with particular services; for example, port 80 is used for HTTP.
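
The following sketch uses Python's standard `socket` module to show a port directing a datagram to a specific process endpoint on one computer. A receiving UDP socket binds to the loopback address with port 0, which asks the operating system to assign a free port; a second socket then addresses a datagram to that port number. The function name and message are hypothetical.

```python
import socket


def send_to_port(message: bytes) -> bytes:
    """Send one UDP datagram over loopback to an OS-assigned port and read it back."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver, \
         socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
        receiver.bind(("127.0.0.1", 0))       # port 0: let the OS choose a free port
        receiver.settimeout(2.0)              # avoid blocking forever if nothing arrives
        port = receiver.getsockname()[1]      # the port number actually assigned
        sender.sendto(message, ("127.0.0.1", port))
        data, _sender_address = receiver.recvfrom(4096)
        return data


if __name__ == "__main__":
    print(send_to_port(b"frame 0001"))
```

The IP address identifies the computer, while the port number identifies which socket, and therefore which process, on that computer receives the datagram.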

Render

To produce audio, video, or an image from a data file or stream on an output device such as a video display, a sound system, or a printer.

Script Command

Text that is inserted in an ASF stream at a specific time. When the stream is played and the time associated with a script command is reached, the media player triggers an event and the script command can initiate some action.
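
The timing behavior of script commands can be sketched as a sorted list of (time, type, parameter) entries that fire once playback reaches their time. The class and method names, the "TEXT" command type, and the anatomical labels are hypothetical illustrations loosely modeled on the type/parameter pairs described above; they are not the Windows Media Player API. The sketch assumes forward playback only and does not handle seeking backward.

```python
from typing import List, Tuple


class ScriptTimeline:
    """Fire each script command once, when playback time reaches its timestamp."""

    def __init__(self, commands: List[Tuple[float, str, str]]):
        # Each command is (time in seconds, command type, parameter); sort by time.
        self.commands = sorted(commands, key=lambda c: c[0])
        self.next_index = 0

    def advance(self, playback_time: float) -> List[Tuple[str, str]]:
        """Return every command whose time has been reached since the last call."""
        fired = []
        while (self.next_index < len(self.commands)
               and self.commands[self.next_index][0] <= playback_time):
            _, cmd_type, param = self.commands[self.next_index]
            fired.append((cmd_type, param))
            self.next_index += 1
        return fired
```

A player would call `advance` with the current playback position on each rendering tick and dispatch whatever commands it returns, for instance to display a caption identifying the structure currently being scanned.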

Stream

Data transmitted across a network and any properties associated with the data. An ASF stream contains data that are rendered by the media player into audio, video, images, and text.

Streaming

A method of delivering content to an end user in which media stored on a server is played as it arrives across the network. The alternative is downloading, in which the media is first copied in full to a client computer and then played locally.
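
The practical difference between the two delivery methods is the delay before playback can begin. The worked comparison below is a minimal sketch that ignores protocol overhead and assumes a constant link bandwidth; the 10-minute clip, 300 Kbps encoding, 1,500 Kbps link, and 5-second buffer are hypothetical values.

```python
def download_start_delay_s(duration_s: float, bit_rate_kbps: float,
                           bandwidth_kbps: float) -> float:
    """Downloading: the whole file must arrive before playback starts."""
    return duration_s * bit_rate_kbps / bandwidth_kbps


def streaming_start_delay_s(buffer_s: float, bit_rate_kbps: float,
                            bandwidth_kbps: float) -> float:
    """Streaming: only a short buffer must arrive before playback starts.

    Sustained playback requires the link to carry at least the stream's bit rate.
    """
    if bandwidth_kbps < bit_rate_kbps:
        raise ValueError("bandwidth is below the stream bit rate; playback would stall")
    return buffer_s * bit_rate_kbps / bandwidth_kbps


if __name__ == "__main__":
    print("download:", download_start_delay_s(600, 300, 1500), "s")
    print("stream:  ", streaming_start_delay_s(5, 300, 1500), "s")
```

Under these assumptions, downloading the clip delays playback by 120 seconds, whereas streaming begins after about 1 second. For a live examination there is no complete file to download at all, which is why streaming is the relevant model for real-time telesonography.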

References

- 1. Landwehr JB Jr, Zador IE, Wolfe HM, et al. Telemedicine and fetal ultrasonography: assessment of technical performance and clinical feasibility. Am J Obstet Gynecol. 1997;177:846–848. doi: 10.1016/s0002-9378(97)70280-1.

- 2. Macedonia CR. Three-dimensional ultrasonographic telepresence. J Telemed Telecare. 1998;4:224–230. doi: 10.1258/1357633981932280.

- 3. Ratib O, Ligier Y, Brandon D, et al. Update on digital image management and PACS. Abdom Imaging. 2000;25:333–340. doi: 10.1007/s002610000011.

- 4. Hwang SC, Lee MH. A WEB-based telePACS using an asymmetric satellite system. IEEE Trans Inf Technol Biomed. 2000;4:212–215. doi: 10.1109/4233.870031.

- 5. Mathiesen FK. WEB technology—the future of teleradiology? Comput Meth Programs Biomed. 2001;66:87–90. doi: 10.1016/S0169-2607(01)00140-7.

- 6. http://www.algotec.com/products/medisurf.htm

- 7. Kinosada Y, Takada A, Hosoba M. Real-time radiology—new concepts for teleradiology. Comput Meth Programs Biomed. 2001;66:47–54. doi: 10.1016/S0169-2607(01)00134-1.

- 8. American College of Radiology. ACR Appropriateness Criteria 2000. Radiology. 2000;215(Suppl):1–1511.

- 9. Shea JA, Berlin JA, Escarce JJ, et al. Revised estimates of diagnostic test sensitivity and specificity in suspected biliary tract disease. Arch Intern Med. 1994;154:2573–2581. doi: 10.1001/archinte.154.22.2573.

- 10. O’Neill WC. Sonographic evaluation of renal failure. Am J Kidney Dis. 2000;35:1021–1038. doi: 10.1016/s0272-6386(00)70036-9.

- 11. Laing FC, Brown DL, DiSalvo DN. Gynecologic ultrasound. Radiol Clin North Am. 2001;39:523–540. doi: 10.1016/s0033-8389(05)70295-5.

- 12. Chan V, Hanbidge A, Wilson S, et al. Case for active physician involvement in US practice. Radiology. 1996;199:555–560. doi: 10.1148/radiology.199.2.8668811.

- 13. Beard DV, Hemminger BM, Keefe B, et al. Real-time radiologist review of remote ultrasound using low-cost video and voice. Invest Radiol. 1993;28:732–734. doi: 10.1097/00004424-199308000-00015.

- 14. Falconer J, Giles W, Villanueva H. Realtime ultrasound diagnosis over a wide-area network (WAN) using off-the-shelf components. J Telemed Telecare. 1997;3(Suppl 1):28–30. doi: 10.1258/1357633971930265.

- 15. Duerinckx AJ, Hayrapetian A, Melany M, et al. Real-time sonographic video transfer using asynchronous transfer mode technology. AJR Am J Roentgenol. 1997;168:1353–1355. doi: 10.2214/ajr.168.5.9129443.

- 16. Tanenbaum AS. Computer Networks. 3rd ed. Englewood Cliffs, NJ: Prentice Hall; 1996. pp. 67–72.

- 17. Fritz SL. DICOM standardization. In: Siegel EL, Kolodner RM, editors. Filmless Radiology. New York: Springer; 1999. pp. 311–321.

- 18. Oosterwijk H. DICOM Basics. Crossroads, TX: O’Tech Inc.; 2000.

- 19. Ghanbari M. Video coding. Exeter: Short Run Press Ltd; 2000.

- 20. Wallace GK. The JPEG still picture compression standard. Commun ACM. 1991;34:30–44. doi: 10.1145/103085.103089.

- 21. MPEG-1 (Coding of moving pictures and associated audio for digital storage media at up to 1.5 Mbit/s), ISO/IEC 11172-2 (video), November 1991.

- 22. Haskell BG, Puri A, Netravali AN. Digital video: an introduction to MPEG-2. New York: Chapman and Hall; 1997.

- 23. Koenen R, Pereira F, Chiariglione L. MPEG-4: context and objectives. Image Commun J. 1997;9:4.

- 24. Pennebaker WB, Mitchell JL. JPEG: still image data compression standard. New York: Van Nostrand Reinhold; 1993.

- 25. Inside Windows Media. Que, Microsoft Corporation, 1999, www.quecorp.com

- 26. DeAngelis GA, Dempsey BJ, Berr S, et al. Diagnostic efficacy of compressed digitized real-time sonography of uterine fibroids. Acad Radiol. 1997;4:83–89. doi: 10.1016/s1076-6332(97)80002-5.

- 27. DeAngelis GA, Dempsey BJ, Berr S, et al. Digitized real-time ultrasound: signal compression experiment. Radiology. 1995;197:336.

- 28. Sublett JW, Dempsey BJ, Weaver AC. Design and implementation of a digital teleultrasound system for real-time remote diagnosis, computer-based medical systems. Los Alamitos, CA: IEEE Computer Society Press; 1995. pp. 292–228.

- 29. Sublett J: Design of a Teleultrasound System, Master’s thesis, University of Virginia, May 1996

- 30. Bubash–Faust LB, Bassignani MJ: A Comparison between Diagnostic Quality Digitizer and Flat Bed Scanner with Transparency Adapter for the Creation of Digital Teaching Files. Society for Health Services Research in Radiology Annual Meeting, San Diego, CA, October 18-20, 2001

- 31. Weaver AC, Viswanathan A, Gay S, et al: Teleultrasound. RSNA, December 1996

- 32. Weaver AC, Dwyer SJ, Rheuban K: An exploration of streaming multimedia for pediatric cardiology. Fund for Exploration of Science and Technology, University of Virginia, March 2001

- 33. Swets JA, Pickett RM. Evaluation of diagnostic systems: methods from signal detection theory. New York: Academic Press; 1982. pp. 80–93.

- 34. Hanley JA, McNeil BJ. The meaning and use of the area under a receiver operating characteristic (ROC) curve. Radiology. 1982;143:29–36. doi: 10.1148/radiology.143.1.7063747.

- 35. Hanley JA, McNeil BJ. A method of comparing the areas under receiver operating characteristic curves derived from the same cases. Radiology. 1983;148:839–843. doi: 10.1148/radiology.148.3.6878708.

- 36. Barnett SB, Rott HD, ter Haar GR, et al. The sensitivity of biological tissue to ultrasound. Ultrasound Med Biol. 1997;23:805–812. doi: 10.1016/S0301-5629(97)00027-6.

- 37. American Institute of Ultrasound in Medicine, Bioeffects Committee. Bioeffects considerations for the safety of diagnostic ultrasound. J Ultrasound Med. 1988;7(9 Suppl):S1–S38.