Abstract

We propose a method using Gabor filters and phase portraits to automatically locate the optic nerve head (ONH) in fundus images of the retina. Because the center of the ONH is at or near the focal point of convergence of the retinal vessels, the method includes detection of the vessels using Gabor filters, detection of peaks in the node map obtained via phase portrait analysis, and an intensity-based condition. The method was tested on 40 images from the Digital Retinal Images for Vessel Extraction (DRIVE) database and 81 images from the Structured Analysis of the Retina (STARE) database. An ophthalmologist independently marked the center of the ONH for evaluation of the results. The evaluation of the results includes free-response receiver operating characteristics (FROC) and a measure of distance between the manually marked and detected centers. With the DRIVE database, the centers of the ONH were detected with an average distance of 0.46 mm (23 pixels) to the corresponding centers marked by the ophthalmologist. FROC analysis indicated a sensitivity of 100% at 2.7 false positives per image. With the STARE database, FROC analysis indicated a sensitivity of 88.9% at 4.6 false positives per image.

Key words: Retinal images, segmentation, optic nerve head, optic disk, Gabor filters, phase portraits, FROC analysis

Introduction

The optic nerve head (ONH) or optic disk is one of the important anatomical features that are usually visible in a fundus image of the retina.1–3 The ONH represents the location of entrance of the blood vessels and the optic nerve into the retina. In fundus images, the ONH usually appears as a bright region, white or yellow in color.3–5 In the commonly used macula-centered format for fundus images, the ONH is located toward the left-hand or right-hand side of the image and is an approximately circular area that is about one sixth the width of the image in diameter, is brighter than the surrounding area, and appears as the convergent area of the blood vessel network.6 In an image of the retina, all of the properties mentioned above (shape, color, size, and convergence) contribute to the identification of the ONH. Identification of the ONH is an important step in the detection and analysis of the anatomical structures and pathological features in the retina.

A crucial preliminary step in computer-aided analysis of retinal images is the detection and localization of important anatomical structures, such as the ONH, the macula, the fovea, and the major vascular arcades; several researchers have proposed methods for these purposes.7–9 The ONH and the major vascular arcades may be used to localize the macula and fovea.7–9 The anatomical structures mentioned above may be used as landmarks to establish a coordinate system of the retinal fundus.7–10 Such a coordinate system may be used to determine the spatial relationship of lesions, edema, and hemorrhages with reference to the ONH and the macula;8 it may also be used to exclude artifacts in some areas and give more importance to potentially pathological features in other areas.9 For example, when searching for microaneurysms and nonvascular lesions, the ONH area should be omitted because it contains dot-like patterns which could mimic the appearance of pathological features and confound the analysis.9 In addition, the position and average diameter of the ONH are used to grade new vessels and fibrous proliferation in the retina.10 On the other hand, the presence of pathology in the macular region is associated with worse prognosis than elsewhere;9 more attention could be paid by lowering the threshold of detection of pathological features in the macular region.
Abundant presence of drusen (a characteristic of age-related macular degeneration or AMD) near the fovea has been found to be roughly correlated with the risk of wet AMD and the degree of vision loss.11 One of the features useful in discriminating between drusen and hard exudates is that drusen are usually scattered diffusely or clustered near the center of the macula; on the other hand, hard exudates are usually located near prominent microaneurysms or at the edges of zones of retinal edema.10 Criteria for the definition of clinically significant macular edema include retinal thickening with an extent of at least the average area of the ONH with a part of it being within a distance equal to the average diameter of the ONH from the center of the macula.10

In clinical diagnosis of AMD, the potentially blinding lesion known as choroidal neovascular membrane (CNVM) is typically observed as subfoveal, juxtafoveal, or extrafoveal within the temporal vascular arcades. The CNVM lesion is usually circular in geometry and white in color. In a computer-aided procedure, the differentiation between the ONH and a potential CNVM lesion would be critical for accurate diagnosis: whereas both of these regions could be of similar shape and color, the ONH has converging vessels that dominate its landscape; hence, the prior identification of the ONH is important. Based on the same property noted above, the methods proposed in the present paper for the detection of the ONH rely on the converging pattern of the vessels within the ONH.

Computer-aided analysis of retinal images has the potential to facilitate quantitative and objective analysis of retinal lesions and abnormalities. Different types of retinal pathology, such as retinopathy of prematurity,12 diabetic retinopathy,13 and AMD,4 may be detected and analyzed via the application of appropriately designed techniques of digital image processing and pattern recognition. Accurate identification and localization of retinal features and lesions could contribute to improved diagnosis, treatment, and management of retinopathy. With this motivation, we propose a method for the detection of the ONH.

Methods for the Detection of the Optic Nerve Head

A review of selected recent works on methods and algorithms to locate the ONH in images of the retina is provided in the following paragraphs.

Osareh et al.14 proposed a method to detect the ONH based on a template image created by averaging the ONH region of 25 color-normalized images. Gray-scale morphological filtering and active contour modeling were used to locate the ONH region, after locating the center of the ONH by using the template. The method was tested with 75 images of the retina and an average accuracy of 90.32% in locating the boundary of the ONH was reported.

Relying on matching the expected directional pattern of the retinal blood vessels in the vicinity of the ONH, Youssif et al.1 proposed a method to detect the ONH. A two-dimensional Gaussian matched filter was used to obtain a direction map of the segmented retinal vessels. The Gaussian matched filter was resized in four different sizes, and the difference between the output of the matched filter and the vessels’ directions was measured. The minimum difference was used to estimate the coordinates of the center of the ONH. The center of the ONH was correctly detected in 80 out of 81 images (98.77%) from a subset of the Structured Analysis of the Retina (STARE) database15–17 and all of the 40 images (100%) of the Digital Retinal Images for Vessel Extraction (DRIVE) database.18,19 Similar methods have been implemented by ter Haar.20

Lalonde et al.21 implemented a template matching approach to locate the ONH. The design is based on a Hausdorff-based template matching technique using edge maps, guided by pyramidal decomposition for large-scale object tracking. The methods were tested with a database of 40 fundus images of the retina with variable visual quality and retinal pigmentation and also of normal and small pupils. An average error of 7% in positioning the center of the ONH was reported.

Based on the brightness and circularity of the ONH, Park et al.6 presented a method using algorithms which include thresholding, detection of roundness, and detection of circles. The method achieved a success rate of 90.25% with the 40 images in the DRIVE database. Similar methods have been described by Barrett et al.,22 ter Haar,20 and Chrástek et al.23,24 Zhu et al.3,25 proposed a method based on edge detection and the Hough transform for the detection of circles. A detection sensitivity of 92.5% was obtained with 40 images from the DRIVE database; however, the performance of the method with the STARE database was poor (44.4%). Sekhar et al.26 presented a method for the detection of the ONH based upon morphological image processing and the Hough transform; the method detected the ONH in 36 out of the 38 images tested.

Sinthanayothin et al.27 proposed a method to locate the ONH by identifying the area with the highest variation in intensity using a window size equal to that of the ONH. The images were preprocessed using an adaptive local contrast enhancement method applied to the intensity component. One hundred twelve images obtained from a diabetic screening service were tested with the method; a sensitivity of 99.1% was achieved.

An automatic method to obtain an ellipse approximating the ONH using a genetic algorithm was proposed by Carmona et al.28 The algorithm can also provide the parameters characterizing the shape of the ellipse obtained. A set of hypothesis points were initially obtained that exhibited geometric properties and intensity levels similar to the ONH contour pixels. Then, a genetic algorithm was used to find an ellipse containing the maximum number of hypothesis points in an offset of its perimeter, considering some constraints. One hundred ten images of the retina were tested with the method. The results were compared with a gold standard generated by averaging two contours traced by an expert for each image; the results for 96% of the images had <5 pixels of discrepancy. Hussain29 proposed a method combining a genetic algorithm and active contour models; no quantitative result was reported.

The method proposed by Foracchia et al.30 is based on a preliminary detection of the major retinal vessels. A geometrical parametric model where two of the model parameters are the coordinates of the ONH center was proposed to describe the general direction of retinal vessels at any given position. Model parameters were identified by means of a simulated annealing optimization technique. The estimated values provided the coordinates of the center of the ONH. An evaluation of the proposed procedure was performed using a set of 81 images from the STARE database, containing both normal and pathological images. The position of the ONH was correctly identified in 79 out of the 81 images (97.53%).

A method was developed by Kim et al.31 to analyze images obtained by retinal nerve fiber layer photography. The center of the ONH was selected as the brightest point and an imaginary circle was defined. Applying the warping and random sample consensus technique, the imaginary circle was first warped into a rectangle and then inversely warped into a circle to find the boundary of the ONH. The images used to test the method included 43 normal images and 30 images with glaucomatous changes. A sensitivity of 91% and a positive predictability of 78% were reported.

A method to differentiate the ONH from other bright regions such as hard exudates and artifacts, based on the fractal dimension related to the converging pattern of the blood vessels, was proposed by Ying et al.32 The ONH was segmented by local histogram analysis. The method identified the ONH in 39 out of the 40 images in the DRIVE database.

Hoover and Goldbaum16 applied fuzzy convergence to detect the origin of the blood vessel network, which can be considered as the center of the ONH in a fundus image of the retina. Thirty images of normal retinas and 51 images of retinas with pathology from the STARE database were tested, containing such diverse symptoms as tortuous vessels, choroidal neovascularization, and hemorrhages that obscure the ONH. A rate of successful detection of 89% was achieved. Fleming et al.9 implemented an algorithm using the elliptical form of the major retinal blood vessels to obtain an approximate region of the ONH, which was then refined based on the circular edge of the ONH. The methods were tested on 1,056 sequential images from a retinal screening program. In 98.4% of the cases tested, the error in the ONH location was <50% of the diameter of the ONH.

Based on tensor voting to analyze vessel structures, Park et al.33 proposed a method in which the position of the ONH was identified by mean-shift-based mode detection. Park et al. utilized three preprocessing stages through illumination equalization to enhance local contrast and extract vessel patterns by tensor voting in the equalized images. The position of the ONH was determined by mode detection based on the mean shift procedure. The method was evaluated with 90 images from the STARE database, achieving a success rate of 100% on 40 images of normal retinas and 84% on 50 images of retinas with pathology.

We propose a method for the detection of the ONH via the detection of the blood vessels of the retina using Gabor filters34 and the application of phase portrait modeling35 to detect node points that could potentially indicate points of convergence of vessels.36 The method is specifically based on the characteristic of the ONH as the point of convergence of retinal vessels. The procedure of the method and detailed evaluation of the results are given in the following sections.

Methods

Databases of Images of the Retina and Experimental Setup

A few important details of the two publicly available databases used in the present work, the DRIVE18,19 and the STARE15–17 databases, are given in the following paragraphs.

The DRIVE Database

The images in the DRIVE database were acquired using a field of view (FOV) of 45°. The database consists of a total of 40 color fundus photographs. Considering the size (584 × 565 pixels) and the FOV of the images, they are low-resolution fundus images of the retina, having an approximate spatial resolution of 20 µm/pixel.23 Out of the 40 images provided in the DRIVE database, 33 are normal and seven contain signs of diabetic retinopathy, namely, exudates, hemorrhages, and pigment epithelium changes.18,19 The results of manual segmentation of the blood vessels are provided for all 40 images in the database; however, no information on the ONH is available.

The STARE Database

The STARE images were captured using an FOV of 35°. Each image is of size 700 × 605 pixels with 24 bits/pixel. The spatial resolution of the STARE images is approximately 15 µm/pixel.20 A subset of the STARE database, which contains 81 images,16 was used in the present work. This subset has been used by several other researchers; we have used the same subset in order to facilitate comparison of the results obtained. Out of the 81 images, 30 have normal architecture and 51 have various types of pathology, containing diverse symptoms such as tortuous vessels, choroidal neovascularization, and hemorrhages.16,17 This database is considered to be more difficult to analyze than the DRIVE database because of the inclusion of a larger number of images with pathology of several types. More importantly, because the images were scanned from film rather than acquired directly using a digital fundus camera, the quality of the images is poorer than that of the images in the DRIVE database. The results of manual segmentation of the blood vessels for a subset of the 81 STARE images are available; however, no expert annotation of the ONH is available.

Annotation of Images of the Retina

The center and the contour of the ONH were drawn on each image, independently by an ophthalmologist and retina specialist (A.L.E.), by magnifying the original image by 300% using the software ImageJ.37 The performance of the proposed method was evaluated by comparing the detected center of the ONH with the center marked by the ophthalmologist. Several images in the STARE database do not contain the full region of the ONH. In such cases, the contour of the ONH was drawn only for the portion lying within the effective region of the image. In one of the STARE images, the center of the ONH is located outside the FOV (but within the frame of the image); it was, nevertheless, marked at the expected location. When drawing the contour of the ONH, attention was paid so as to avoid the rim of the sclera (scleral crescent or peripapillary atrophy) and the rim of the optic cup, which, in some images, may be difficult to differentiate from the ONH; for related illustrations, see Zhu et al.3 When labeling the center of the ONH, care was taken not to mark the center of the optic cup or the focal point of convergence of the central retinal vein and artery. However, the point of convergence of the retinal vessels serves as a primary feature that is useful in the detection of the ONH.16 The contours of the ONH are not used in the present work, but have been used in a recent related work.3

Preprocessing of Images

Each component of each color image was normalized to the range [0, 1] by dividing by 255, the maximum possible value in the original 8-bit representation. Each image was converted to the luminance component Y, given as

$$
Y = 0.299R + 0.587G + 0.114B
$$

where R, G, and B are the red, green, and blue components, respectively, of the color image. The effective region of the image was thresholded using the normalized threshold of 0.1. The artifacts present in the images at the edges were removed by applying morphological erosion38 with a disk-shaped structuring element of diameter 10 pixels.
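The normalization, luminance conversion, and thresholding steps could be sketched as follows. This is a minimal illustration, not the implementation used in the study: the function name `luminance_and_mask` is hypothetical, the luminance weights are the commonly used Rec. 601 values (an assumption), the threshold is assumed to be applied to Y, and the morphological erosion step is omitted.

```python
import numpy as np

# Assumed luminance weights (the common Rec. 601 definition of Y).
LUMA_WEIGHTS = np.array([0.299, 0.587, 0.114])

def luminance_and_mask(rgb8, threshold=0.1):
    """Return normalized luminance Y and a boolean mask of the effective region."""
    rgb = rgb8.astype(np.float64) / 255.0   # each component scaled to [0, 1]
    y = rgb @ LUMA_WEIGHTS                  # weighted sum over the color axis
    mask = y > threshold                    # assumption: threshold applied to Y
    return y, mask

# Synthetic 4 x 4 image: black surround, 2 x 2 colored center.
demo = np.zeros((4, 4, 3), dtype=np.uint8)
demo[1:3, 1:3] = (200, 120, 60)
y_demo, mask_demo = luminance_and_mask(demo)
```

In this toy example only the four central pixels exceed the threshold and form the effective region.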

In order to prevent the detection of the edges of the effective region in subsequent steps, each image was extended beyond the limits of its effective region.34,39 First, a 4-pixel neighborhood was used to identify the pixels at the outer edge of the effective region. For each of the pixels identified, the mean gray level was computed over all pixels in a 21 × 21 neighborhood that were also within the effective region and assigned to the corresponding pixel location. The effective region was merged with the outer edge pixels, forming an extended effective region. The procedure was repeated 50 times, extending the image by a ribbon of width 50 pixels.
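The iterative extension described above may be illustrated with the following sketch. It is one possible reading of the procedure under stated assumptions: the function name `extend_region` and its parameters are hypothetical, the 21 × 21 neighborhood is truncated at the image border, and all new pixel values in one iteration are computed before any of them is written.

```python
import numpy as np

def extend_region(image, mask, iterations=50, half=10):
    """Grow the effective region outward by one pixel per iteration."""
    img = image.astype(np.float64).copy()
    m = mask.copy()
    h, w = m.shape
    for _ in range(iterations):
        # Outer edge: pixels outside the region with a 4-connected neighbor inside.
        nb = np.zeros_like(m)
        nb[1:, :] |= m[:-1, :]
        nb[:-1, :] |= m[1:, :]
        nb[:, 1:] |= m[:, :-1]
        nb[:, :-1] |= m[:, 1:]
        edge = nb & ~m
        if not edge.any():
            break
        new_vals = {}
        for r, c in zip(*np.nonzero(edge)):
            r0, r1 = max(r - half, 0), min(r + half + 1, h)
            c0, c1 = max(c - half, 0), min(c + half + 1, w)
            inside = m[r0:r1, c0:c1]
            # Mean over the (2*half+1)^2 window, restricted to the current region.
            new_vals[(r, c)] = img[r0:r1, c0:c1][inside].mean()
        for (r, c), v in new_vals.items():
            img[r, c] = v
        m |= edge  # merge the edge pixels into the extended region
    return img, m

# Toy example: a 3 x 3 region of constant gray level 0.5 in a 9 x 9 frame.
demo_img = np.zeros((9, 9))
demo_mask = np.zeros((9, 9), dtype=bool)
demo_mask[3:6, 3:6] = True
demo_img[demo_mask] = 0.5
ext_img, ext_mask = extend_region(demo_img, demo_mask, iterations=1)
```

After one iteration the 9-pixel region has grown by its 12 edge pixels, each filled with the local mean of 0.5.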

After preprocessing, a 5 × 5 median filter was applied to the resulting image to remove outliers. Then, the maximum intensity in the image was calculated to serve as a reference intensity to assist in the selection of the ONH from candidates detected in the subsequent steps.

Detection of Blood Vessels Using Gabor Filters

The methods proposed in the present work for the detection of the ONH rely on the initial detection of blood vessels. We have previously proposed image processing techniques to detect blood vessels in images of the retina based upon Gabor filters,34 which are used in the proposed method.

Gabor functions are sinusoidally modulated Gaussian functions that provide optimal localization in both the frequency and space domains; a significant amount of research has been conducted on the use of Gabor functions or filters for segmentation, analysis, and discrimination of various types of texture and curvilinear structures.39–43

The basic, real, Gabor filter kernel oriented at the angle θ = −π/2 may be formulated as:40,42

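$$
g(x, y) = \frac{1}{2\pi\sigma_x\sigma_y}\exp\left[-\frac{1}{2}\left(\frac{x^2}{\sigma_x^2}+\frac{y^2}{\sigma_y^2}\right)\right]\cos(2\pi f_o x) \tag{1}
$$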

where σx and σy are the standard deviation values in the x and y directions and fo is the frequency of the modulating sinusoid. Kernels at other angles are obtained by rotating the basic kernel. In the proposed method, a set of 180 kernels was used with angles spaced evenly over the range [−π/2, π/2].

Gabor filters may be used as line detectors.42 The parameters in Eq. 1, namely, σx, σy, and fo, need to be specified by taking into account the size of the lines or curvilinear structures to be detected. Let τ be the thickness of the line detector. This parameter is related to σx and fo as follows: The amplitude of the exponential (Gaussian) term in Eq. 1 is reduced to one half of its maximum at x = τ/2 and y = 0; therefore, $\sigma_x = \tau / (2\sqrt{2\ln 2})$. The cosine term has a period of τ; hence, fo = 1/τ. The value of σy could be defined as σy = lσx where l determines the elongation of the Gabor filter along its orientation with respect to its thickness. The value of τ could be varied to prepare a bank of filters at different scales for multiresolution filtering and analysis;34 however, in the present work, a single scale is used with τ = 8 pixels and l = 2.9.
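As a worked illustration of these relations, the sketch below derives σx, σy, and fo from τ and l and samples one real Gabor kernel. The function names, the kernel support of about ±3σy, and the meaning of `angle` (a rotation relative to the basic kernel) are assumptions made for this example and are not taken from the original implementation.

```python
import math
import numpy as np

def gabor_params(tau, l):
    """Derive (sigma_x, sigma_y, f_o) from line thickness tau and elongation l."""
    sigma_x = tau / (2.0 * math.sqrt(2.0 * math.log(2.0)))  # half amplitude at x = tau/2
    return sigma_x, l * sigma_x, 1.0 / tau

def gabor_kernel(tau=8.0, l=2.9, angle=0.0, half_size=None):
    """Sample the real Gabor kernel; angle rotates it away from the basic kernel."""
    sigma_x, sigma_y, f_o = gabor_params(tau, l)
    if half_size is None:
        half_size = int(math.ceil(3.0 * sigma_y))  # assumed support, about 3 sigma_y
    ax = np.arange(-half_size, half_size + 1, dtype=np.float64)
    x, y = np.meshgrid(ax, ax)
    xr = x * math.cos(angle) + y * math.sin(angle)   # rotated coordinates
    yr = -x * math.sin(angle) + y * math.cos(angle)
    envelope = np.exp(-0.5 * (xr ** 2 / sigma_x ** 2 + yr ** 2 / sigma_y ** 2))
    carrier = np.cos(2.0 * math.pi * f_o * xr)
    return envelope * carrier / (2.0 * math.pi * sigma_x * sigma_y)

sigma_x, sigma_y, f_o = gabor_params(8.0, 2.9)
kernel = gabor_kernel()
```

With τ = 8 pixels and l = 2.9, this gives σx of about 3.40 pixels, σy of about 9.85 pixels, and fo = 0.125 cycles per pixel.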

The Gabor filter designed as above can detect piecewise linear features of positive contrast, i.e., linear elements that are brighter than their immediate background. In the present work, the Gabor filter was applied to the inverted version of the preprocessed Y component.

Blood vessels in the retina vary in thickness in the range 50–200 µm with a median of 60 µm.13,44 Taking into account the size (565 × 584 pixels) and the spatial resolution (20 µm/pixel) of the images in the DRIVE database, the parameters for the Gabor filters were specified as τ = 8 pixels and l = 2.9 in the present work. For each image, a magnitude response image was composed by selecting the maximum response over all of the 180 Gabor filters for each pixel. An angle image was prepared by using the angle of the filter with the largest magnitude response. The magnitude response and angle images were filtered with a Gaussian filter having a standard deviation of 7 pixels (a description of the procedure for filtering of orientation fields is given by Ayres and Rangayyan45) and downsampled by a factor of 4 for efficient analysis using phase portraits in the subsequent step.
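The composition of the magnitude response and angle images from the bank of filters can be sketched as below. The function name `combine_responses` is hypothetical, the stack of filter outputs is replaced by random numbers purely for demonstration, and excluding the endpoint π/2 from the 180 angles is an assumption (the orientations −π/2 and π/2 coincide). The convolution, Gaussian smoothing, and downsampling steps are not shown.

```python
import numpy as np

def combine_responses(responses, angles):
    """Per pixel, keep the largest response and the angle of the filter that gave it.

    responses: array of shape (K, H, W), one filtered image per kernel angle.
    angles:    array of shape (K,), the kernel angles in radians.
    """
    responses = np.asarray(responses, dtype=np.float64)
    best = responses.argmax(axis=0)        # index of the strongest filter per pixel
    magnitude = responses.max(axis=0)
    angle = np.asarray(angles)[best]
    return magnitude, angle

# 180 angles over [-pi/2, pi/2); endpoint handling is an assumption.
angles = np.linspace(-np.pi / 2, np.pi / 2, 180, endpoint=False)
rng = np.random.default_rng(0)
stack = rng.random((180, 5, 6))            # stand-in for real filter outputs
stack[45, 2, 3] = 2.0                      # force a known winner at one pixel
mag, ang = combine_responses(stack, angles)
```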

Phase Portrait Analysis

The filtered and downsampled orientation field obtained as above, denoted as θ(x,y), was analyzed using phase portraits.35,45 The phase portraits of systems of two linear first-order differential equations are well understood,46 and the geometrical patterns in such phase portraits have been used to characterize oriented texture.35 Consider the following system of differential equations:

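$$
\begin{bmatrix}\dot{x}(t)\\ \dot{y}(t)\end{bmatrix} = \mathbf{A}\begin{bmatrix}x(t)\\ y(t)\end{bmatrix} + \mathbf{b} \tag{2}
$$

$$
\mathbf{A} = \begin{bmatrix}a & b\\ c & d\end{bmatrix},\quad \mathbf{b} = \begin{bmatrix}e\\ f\end{bmatrix} \tag{3}
$$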

The functions x(t) and y(t) can be associated with the x and y coordinates of the Cartesian plane.40,47 The orientation field generated by the phase portrait model is defined as:

$$
\phi(x, y \mid \mathbf{A}, \mathbf{b}) = \arctan\left[\frac{\dot{y}(t)}{\dot{x}(t)}\right] \tag{4}
$$

which is the angle of the velocity vector $[\dot{x}(t), \dot{y}(t)]^T$ with the x axis at (x, y) = [x(t), y(t)]. According to the eigenvalues of A (the characteristic matrix), the phase portrait is classified as a node, saddle, or spiral.46 The fixed point of the phase portrait is the point where $\dot{x}(t) = \dot{y}(t) = 0$ and denotes the center of the phase portrait pattern being observed. Let x0 = [x0, y0]T be the coordinates of the fixed point. From Eq. 2, we have:

$$
\mathbf{x}_0 = \begin{bmatrix}x_0\\ y_0\end{bmatrix} = -\mathbf{A}^{-1}\mathbf{b} \tag{5}
$$
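The classification by eigenvalues and the computation of the fixed point can be sketched as follows, assuming the affine model in which the velocity is A x + b, so that the fixed point satisfies A x0 + b = 0. The function name `classify_phase_portrait` and the tolerance are hypothetical; a singular matrix A is not handled.

```python
import numpy as np

def classify_phase_portrait(A, b, tol=1e-12):
    """Return the pattern type and the fixed point of the model v = A x + b."""
    A = np.asarray(A, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    eig = np.linalg.eigvals(A)
    if np.any(np.abs(eig.imag) > tol):
        kind = "spiral"                     # complex eigenvalues
    elif eig.real[0] * eig.real[1] > 0:
        kind = "node"                       # real eigenvalues of the same sign
    else:
        kind = "saddle"                     # real eigenvalues of opposite signs
    fixed_point = -np.linalg.solve(A, b)    # solves A x0 + b = 0
    return kind, fixed_point

kind_node, x0_node = classify_phase_portrait([[1.0, 0.0], [0.0, 2.0]], [-3.0, -4.0])
kind_saddle, _ = classify_phase_portrait([[1.0, 0.0], [0.0, -1.0]], [0.0, 0.0])
kind_spiral, _ = classify_phase_portrait([[1.0, -2.0], [2.0, 1.0]], [0.0, 0.0])
```

A symmetric matrix has real eigenvalues, so a model constrained to symmetric A can only produce the node and saddle cases.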

Given an image presenting oriented texture, the orientation field θ(x,y) of the image is defined as the angle of the texture at each pixel location (x,y) (obtained as described in the “Detection of Blood Vessels Using Gabor Filters” section). The orientation field of an image can be qualitatively described by the type of the phase portrait that is most similar to the orientation field, along with the center of the phase portrait. Such a description can be achieved by estimating the parameters of the phase portrait that minimize the difference between the orientation field of the corresponding phase portrait and the orientation field of the image. Let us define xi and yi as the x and y coordinates of the ith pixel, 1 ≤ i ≤ N. Let us also define θi = θ(xi,yi), and $\phi_i = \phi(x_i, y_i \mid \mathbf{A}, \mathbf{b})$. The sum of the squared error is given by:

$$
R(\mathbf{A}, \mathbf{b}) = \sum_{i=1}^{N} \sin^2(\theta_i - \phi_i) \tag{6}
$$
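To make the error measure concrete, the sketch below evaluates a sum of squared sines of the angular difference between an observed orientation field and the field generated by the model. The use of sin² (which is insensitive to the 180° ambiguity of orientations) and the function names are assumptions for this illustration; the optimization itself (simulated annealing followed by nonlinear least squares) is not shown.

```python
import numpy as np

def model_orientation(A, b, x, y):
    """Angle of the velocity vector A [x, y]^T + b with the x axis."""
    vx = A[0][0] * x + A[0][1] * y + b[0]
    vy = A[1][0] * x + A[1][1] * y + b[1]
    return np.arctan2(vy, vx)

def orientation_error(A, b, x, y, theta):
    """Sum over all pixels of sin^2(theta_i - phi_i)."""
    phi = model_orientation(A, b, x, y)
    return float(np.sum(np.sin(theta - phi) ** 2))

# Synthetic orientation field generated by a node centered at (5, 5).
xs, ys = np.meshgrid(np.arange(11.0), np.arange(11.0))
A_node, b_node = [[1.0, 0.0], [0.0, 1.0]], [-5.0, -5.0]
phi_true = model_orientation(A_node, b_node, xs, ys)
theta = (phi_true + np.pi / 2) % np.pi - np.pi / 2   # fold directions into orientations

err_true = orientation_error(A_node, b_node, xs, ys, theta)
err_wrong = orientation_error([[1.0, 0.0], [0.0, -1.0]], [-5.0, 5.0], xs, ys, theta)
```

The error is essentially zero for the generating node model and clearly larger for a saddle placed at the same center.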

Minimization of R(A, b) leads to the optimal phase portrait parameters that describe the orientation field of the image under analysis. In the present work, an initial global optimization procedure (simulated annealing) was employed, followed by a local optimization procedure (nonlinear least squares). To perform this step, an analysis window of size 40 × 40 pixels (3.2 × 3.2 mm for the DRIVE images) was slid pixel by pixel through the orientation field, and the optimal parameters A and b were determined for each position of the window. Constraints were placed so that the matrix in the phase portrait model is symmetric and has a condition number less than 3.0.45 The constrained method yields only two phase portrait maps: node and saddle. Based on the result of the analysis at each pixel, a vote was cast in the node or saddle map, as indicated by the eigenvalues of the matrix in the phase portrait model, at the location given by the corresponding fixed point. A condition was placed on the distance between the fixed point and the center of the corresponding analysis window, so that it did not exceed 200 pixels. The node map was filtered with a Gaussian filter of standard deviation 6 pixels. All peaks in the filtered node map were detected and rank ordered by magnitude. The result was used to label positions related to the sites of convergence of the blood vessels.45

Detection of the ONH

Each peak in the node map, in decreasing order of its magnitude, was checked to verify if it could represent the center of the ONH. A circular area was extracted from the preprocessed Y component with the peak location as the center and radius equal to 20 pixels or 0.40 mm, corresponding to about half of the average radius of the ONH.21 Pixels within the selected area were rank ordered by their brightness values, and the top 1% was selected. The average of the selected pixels was computed. If the average brightness was >68% (for the DRIVE images) or 50% (for the STARE images) of the reference intensity obtained as described in the “Preprocessing of Images” section, the peak location was accepted as the center of the ONH; otherwise, the next peak was checked. The thresholds were determined by experimentation for the DRIVE and STARE databases; suitable thresholds may need to be determined for each database and imaging protocol.
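The intensity-based selection described above could be sketched as follows. The function name `select_onh` is hypothetical, the peaks are assumed to be given as (row, column) positions in the coordinates of the preprocessed Y image and sorted by decreasing node-map magnitude, and rounding the number of selected pixels is an assumption.

```python
import numpy as np

def select_onh(y, peaks, reference, radius=20, top_fraction=0.01, threshold=0.68):
    """Return the first peak whose neighborhood is bright enough, or None."""
    rows, cols = np.indices(y.shape)
    for r, c in peaks:                                   # strongest peak first
        inside = (rows - r) ** 2 + (cols - c) ** 2 <= radius ** 2
        values = np.sort(y[inside])[::-1]                # brightest first
        n_top = max(1, int(round(top_fraction * values.size)))
        if values[:n_top].mean() > threshold * reference:
            return (r, c)
    return None

# Toy image: uniform background with one bright patch standing in for the ONH.
y_img = np.full((100, 100), 0.2)
y_img[65:76, 65:76] = 0.9
reference = y_img.max()
chosen = select_onh(y_img, [(30, 30), (70, 70)], reference)
rejected = select_onh(y_img, [(30, 30)], reference)
```

Here the first peak lies in a dark area and is skipped, the second peak is accepted, and a candidate list with no bright neighborhood yields no detection.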

Analysis of the Free-Response Receiver Operating Characteristics

Free-response receiver operating characteristics (FROC) are displayed on a plot with the sensitivity of detection on the ordinate and the mean number of false-positive responses per image on the abscissa.48,49 FROC analysis is applicable when there is no specific number of true negatives, which is the case in the present study.

The peaks in the node map were rank ordered according to the node value in order to test for the detection of the ONH. For the images in the DRIVE database, a result was considered to be successful if the detected ONH center was positioned within the average ONH radius of 0.8 mm (40 pixels) around the manually identified center; otherwise, it was labeled as a false positive. Thus, a successfully detected ONH center would be placed within a circle centered at the true ONH position and having a radius equal to the average radius of the ONH; in our opinion, this is a clinically acceptable level of accuracy in the localization of the ONH. Similar criteria have been used by other researchers in this area.9 Using the scale factor provided by ter Haar,20 for the images in the STARE database, the corresponding distance between the detected ONH and the manually identified center for successful detection is 53 pixels. The distance value of 60 pixels, which has been used in testing other methods with the STARE database,1,16,20 when converted using the same scale factor, yields a limit of 46 pixels for the images in the DRIVE database. In order to facilitate comparison with other published works with the same databases but different criteria for successful detection, FROC curves were derived using all of the limits mentioned above.
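One way to derive the points of an FROC curve from rank-ordered peaks is sketched below. It is an illustration under stated assumptions rather than the procedure used in the study: the function name `froc_points` is hypothetical, the operating point is varied by the number of top-ranked peaks retained per image, and every retained peak farther than the distance limit from the marked center is counted as a false positive.

```python
import math

def froc_points(detections, truths, limit, max_rank=10):
    """Return (false positives per image, sensitivity) for each rank cut-off.

    detections: per image, a list of (x, y) peaks sorted by decreasing strength.
    truths:     per image, the manually marked (x, y) center.
    limit:      maximum distance, in pixels, for a successful detection.
    """
    n_images = len(truths)
    points = []
    for k in range(1, max_rank + 1):
        hits = 0
        false_pos = 0
        for peaks, (tx, ty) in zip(detections, truths):
            close = [math.hypot(px - tx, py - ty) <= limit for px, py in peaks[:k]]
            hits += any(close)                 # image counted once if any peak is close
            false_pos += close.count(False)
        points.append((false_pos / n_images, hits / n_images))
    return points

# Two toy images with two candidate peaks each and a 40-pixel limit.
demo_detections = [[(10, 10), (100, 100)], [(200, 200), (50, 50)]]
demo_truths = [(12, 11), (52, 50)]
demo_points = froc_points(demo_detections, demo_truths, limit=40, max_rank=2)
```

In the toy data, keeping one peak per image gives a sensitivity of 0.5 at 0.5 false positives per image, and keeping two gives 1.0 at 1.0.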

Results

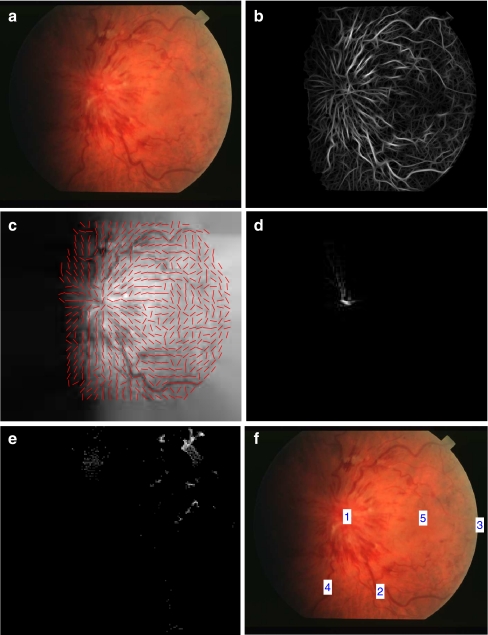

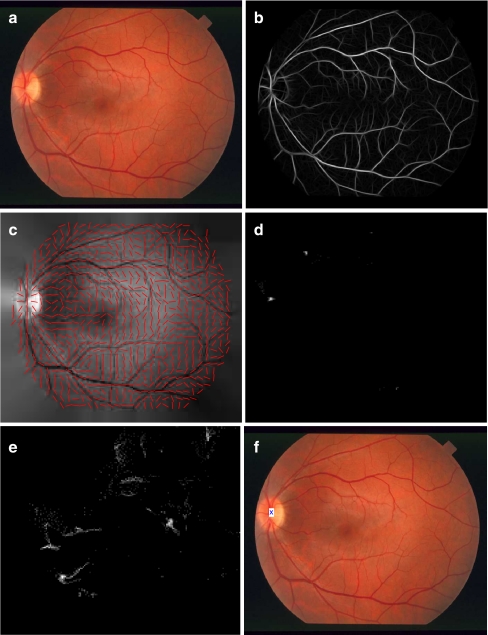

In Figure 1a, STARE image im0021 is shown; the magnitude response image and the orientation field obtained using Gabor filters are shown in parts b and c. Parts d and e show the node and saddle map derived from the orientation field using phase portrait analysis. We can observe a strong peak in the node map at the center of the ONH; peaks are seen in the saddle map around vessel branches. It is evident that the node map can be used to locate the center of the ONH. In Figure 1f, the locations of the top five peaks are shown superimposed on the original image. The first peak is located at the center of the ONH, as expected; the second peak is located at a retinal vessel bifurcation. The peak on the edge of the FOV is due to noise. The methods have worked successfully in spite of the blurry nature of the original image with no clear ONH being visible. Figure 2 shows a similar set of results for STARE image im0255; part f shows the ONH detected by the first peak in the corresponding node map.

Fig 1.

a Image im0021 from the STARE database. b Magnitude response of the Gabor filters. c Filtered and downsampled orientation field. Needles indicating the local orientation have been drawn for every fifth pixel in the row and column directions. d Node map; due to large differences between the values of the peaks in the node map, only the region related to the first peak is visible, in spite of the log transformation used. e Saddle map. The node and saddle maps are displayed with a logarithmic transformation. f Peaks in the node map. The number marked is the rank order of the peak in the node map.

Fig 2.

a Image im0255 from the STARE database. b Magnitude response of the Gabor filters. c Filtered and downsampled orientation field. Needles indicating the local orientation have been drawn for every fifth pixel in the row and column directions. d Node map. e Saddle map. The node and saddle maps are displayed with a logarithmic transformation. f ONH detected by the first peak in the node map.

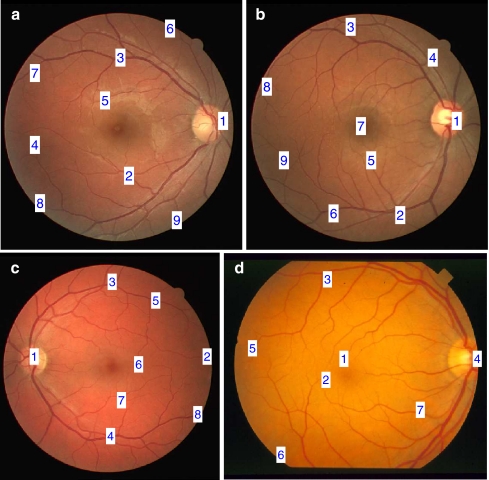

In Figure 3, the positions of the peaks of the node maps are shown for four images from the DRIVE and STARE databases. The number marked is the rank order of the corresponding point in the node map with no condition imposed regarding the corresponding intensity in the original image. We can observe that bifurcations of blood vessels lead to high responses in the node map. In the cases of the DRIVE images 34, 35, and 36, the first peak in the node map indicates the center of the ONH; however, in general, this is not always the case, as shown in the case of im0082 from the STARE database. To address this problem, an additional step of intensity-based selection of the center of the ONH was used, as described in the “Detection of the ONH” section.

Fig 3.

a Training image 34 from the DRIVE database. The number marked is the rank order of the corresponding point in the node map; the positions of all peaks detected in the corresponding node map are shown. b Training image 35. c Training image 36. d Image im0082 from the STARE database.

The result of the method proposed in the present work gives an approximation to the center of the ONH; therefore, the Euclidean distance between the manually marked and the detected center of the ONH was used to evaluate the results. The statistics of the distance between the manually marked and the detected ONH for the DRIVE images are shown in Table 1. The mean distance of the detected center of the ONH with the intensity-based condition is 0.46 mm (23.2 pixels). The statistics of the distance for the STARE images are shown in Table 2; the statistics are the same with or without the intensity-based condition with a mean distance of 1.78 mm or 119 pixels.

Table 1.

Statistics of the Euclidean Distance between the Manually Marked and the Detected Center of the ONH for the 40 Images in the DRIVE Database

| Method | Mean, mm (pixels) | Min, mm (pixels) | Max, mm (pixels) | SD, mm (pixels) |
|---|---|---|---|---|
| First peak in node map | 1.61 (80.7) | 0.03 (1.4) | 8.78 (439) | 2.40 (120) |
| Peak selected using intensity condition | 0.46 (23.2) | 0.03 (1.4) | 0.91 (45.5) | 0.21 (10.4) |

Min minimum, Max maximum, SD standard deviation

Table 2.

Statistics of the Euclidean Distance between the Manually Marked and the Detected Center of the ONH for the 81 Images in the STARE Database

| Method | Mean, mm (pixels) | Min, mm (pixels) | Max, mm (pixels) | SD, mm (pixels) |
|---|---|---|---|---|
| First peak in node map | 1.78 (119) | 0.02 (1.4) | 8.16 (544) | 2.34 (156) |
| Peak selected using intensity condition | 1.78 (119) | 0.02 (1.4) | 8.16 (544) | 2.34 (156) |

Min minimum, Max maximum, SD standard deviation
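The distance statistics reported in Tables 1 and 2 can be illustrated with a minimal sketch. The pixel sizes used here (0.020 mm for DRIVE and 0.015 mm for STARE) are assumptions inferred from the ratio of millimeters to pixels in the two tables, and the function names and the use of the sample standard deviation are illustrative choices rather than details taken from the original implementation.

```python
import math
import statistics

# Assumed pixel sizes in mm, inferred from the mm/pixel ratios in Tables 1 and 2.
PIXEL_SIZE_MM = {"DRIVE": 0.020, "STARE": 0.015}


def center_distance(marked, detected):
    """Euclidean distance, in pixels, between two (x, y) points."""
    return math.hypot(marked[0] - detected[0], marked[1] - detected[1])


def distance_stats(marked_centers, detected_centers, database):
    """Summarize distances between marked and detected ONH centers.

    Returns a dict mapping each statistic name to a (mm, pixels) pair.
    The sample standard deviation is used here as an assumption; at least
    two images are required for it to be defined.
    """
    distances = [
        center_distance(m, d) for m, d in zip(marked_centers, detected_centers)
    ]
    scale = PIXEL_SIZE_MM[database]
    in_pixels = {
        "mean": statistics.mean(distances),
        "min": min(distances),
        "max": max(distances),
        "sd": statistics.stdev(distances),
    }
    return {name: (value * scale, value) for name, value in in_pixels.items()}


# Hypothetical coordinates for two images, for illustration only.
stats = distance_stats([(0, 0), (10, 10)], [(3, 4), (10, 10)], "DRIVE")
```

Each entry of `stats` holds the value in millimeters followed by the value in pixels, mirroring the layout of the tables.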

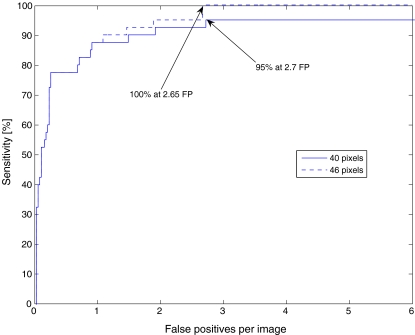

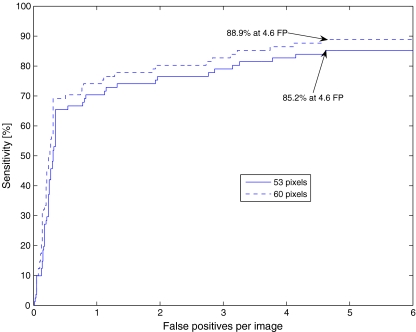

FROC analysis was also used to evaluate the detection of the center of the ONH. If more than ten peaks were detected in the node map, only the top ten peaks were selected; otherwise, all of the peaks in the node map were used. The FROC curves for both the DRIVE and STARE databases were prepared with two definitions of successful detection (as described in the “Analysis of the Free-Response Receiver Operating Characteristics” section). The intensity-based condition for the selection of centers is not applicable in FROC analysis. The FROC curves for the DRIVE images are shown in Figure 4, which indicates a sensitivity of 100% at 2.65 false positives per image with the distance limit of 46 pixels. The FROC curves for the STARE images are shown in Figure 5; a sensitivity of 88.9% was obtained at 4.6 false positives per image with the distance limit of 60 pixels.

Fig 4.

FROC curves for the evaluation of detection of the ONH using phase portraits with the DRIVE database (40 images). The dashed line corresponds to the FROC curve with a threshold for successful detection of 46 pixels from the manually marked center to the detected center. The solid line is the FROC curve obtained when the threshold is set to be 40 pixels. FP false positive.

Fig 5.

FROC curves for the evaluation of detection of the ONH using phase portraits with the STARE database (81 images). The dashed line corresponds to the FROC curve with a threshold for successful detection of 60 pixels from the manually marked center to the detected center. The solid line is the FROC curve obtained when the threshold is set to be 53 pixels. FP false positive.
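The construction of an FROC curve from ranked node-map peaks can be sketched as follows. This is a minimal illustration under stated assumptions, not the procedure used to generate Figures 4 and 5: the curve is traced by thresholding a peak score, an image counts as detected if at least one retained peak lies within the distance limit of the marked center, and additional peaks within the limit are not counted as false positives. The data layout and the function name `froc_points` are hypothetical.

```python
import math


def froc_points(images, distance_limit, max_peaks=10):
    """Compute (false positives per image, sensitivity) pairs.

    images: list of dicts with keys
        "center": (x, y) of the manually marked ONH center
        "peaks":  list of (score, x, y) node-map peaks
    Only the top `max_peaks` peaks by score are kept for each image.
    """
    kept = []
    for img in images:
        top = sorted(img["peaks"], key=lambda p: p[0], reverse=True)[:max_peaks]
        cx, cy = img["center"]
        # Store each peak's score and whether it falls within the limit.
        kept.append(
            [(s, math.hypot(x - cx, y - cy) <= distance_limit) for s, x, y in top]
        )

    # Use every observed score as an operating threshold, strictest first.
    thresholds = sorted({s for peaks in kept for s, _ in peaks}, reverse=True)
    n_images = len(images)
    points = []
    for t in thresholds:
        detected = 0
        false_positives = 0
        for peaks in kept:
            selected = [is_hit for s, is_hit in peaks if s >= t]
            detected += any(selected)
            false_positives += sum(1 for is_hit in selected if not is_hit)
        points.append((false_positives / n_images, detected / n_images))
    return points


# Hypothetical peaks for two images, for illustration only.
example = [
    {"center": (100, 100), "peaks": [(0.9, 102, 101), (0.5, 300, 300)]},
    {"center": (50, 50), "peaks": [(0.8, 200, 200), (0.6, 55, 52)]},
]
curve = froc_points(example, distance_limit=46)
```

Calling the function with the second distance limit for the same database (40 pixels for DRIVE or 53 pixels for STARE) would give the second curve of the corresponding figure.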

Discussion

In Table 3, the success rates of locating the ONH reported by several methods published in the literature and reviewed in the “Methods for the Detection of the Optic Nerve Head” section are listed. The center of the ONH was successfully detected in all of the 40 images in the DRIVE database by the proposed method based on phase portraits. The distance for successful detection used in the present work is 46 pixels, whereas the other methods listed used 60 pixels.1,16 The method using phase portraits has performed better than some of the recently published methods with images from the DRIVE database.

Table 3.

Comparison of the Rate of Success in Locating the ONH in Images of the Retina Obtained by Several Methods

| Method of detection | DRIVE (%) | STARE (%) | Other database (%) |
|---|---|---|---|
| Lalonde et al.21 | – | 71.6a | 100 |
| Sinthanayothin et al.27 | – | 42a | 99.1 |
| Osareh et al.14 | – | 58a | 90.3 |
| Hoover and Goldbaum16 | – | 89 | – |
| Foracchia et al.30 | – | 97.5 | – |
| ter Haar20 | – | 93.8 | – |
| Park et al.6 | 90.3 | – | – |
| Ying et al.32 | 97.5 | – | – |
| Kim et al.31 | – | – | 91 |
| Fleming et al.9 | – | – | 98.4 |
| Park et al.33 | – | 91.1 | – |
| Youssif et al.1 | 100 | 98.8 | – |
| Zhu et al.3,25 | 90 | 44.4 | – |
| Present work | 100 | 69.1 | – |

In the present work, for the images of the DRIVE database, a result is considered to be successful if the detected ONH center is positioned within 46 pixels of the manually identified center. For the STARE database, the threshold is 60 pixels.

a Values are from ter Haar20

The appearance of the ONH in the images in the STARE database varies significantly due to poor image quality, inconsistent format, and the presence of various types of retinal pathology. Misleading features that affected the performance of the method using phase portraits with the STARE database were grouped by the ophthalmologist (A.L.E.) as alternate retinal vessel bifurcation (12 out of 81 images), convergence of small retinal vessels at the macula (five out of 81 images), and noise (two out of 81 images). In the case of Figure 3d, the peak in or near the macula ranked first among the peaks in the node map and could lead to failure in the detection of the ONH if no intensity-based condition is applied. On the other hand, it could also be possible to utilize this feature of the algorithm to locate the macula.

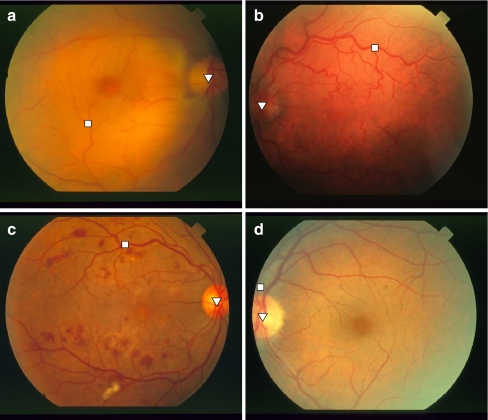

In Figure 6, four examples from the STARE database are shown where the ONH was not detected by the proposed method. In parts a, b, and c of the figure, the reason for failure is vessel bifurcation leading to a high peak in the node map. In the case of the image in part d of the same figure, noise at the edge of the FOV caused failure.

Fig 6.

a Image im0004 from the STARE database. The square represents the first peak detected in the node map that also met the condition based on 50% of the reference intensity. The triangle indicates the center of the ONH marked by the ophthalmologist. Distance = 375 pixels. b Image im0027. Distance = 368 pixels. c Image im0139. Distance = 312 pixels. d Image im0239. Distance = 84 pixels. Each image is of size 700 × 605 pixels.

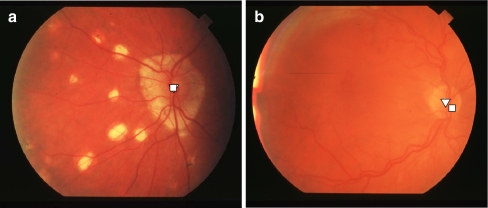

Figure 7a shows an image (im0010 from the STARE database) where the ONH is different from the commonly encountered circular or oval pattern. Using the method based on phase portraits, the ONH has been successfully detected by the single peak that passed the brightness condition. The method based on the Hough transform that we have recently proposed3,25 failed to detect the ONH because the circular property is not present in this case. Some of the images in the STARE database are out of focus. Figure 7b shows the result of detection using phase portraits for im0035, which is a case of successful detection with distance = 26 pixels. The advantage of the proposed method based on phase portraits is that it can detect the vascular pattern existing in an image even when the image is blurred.

Fig 7.

a Result for STARE image im0010; distance = 2.2 pixels. The square represents the first peak detected in the node map that also meets the condition based on 50% of the reference intensity. The triangle indicates the center of the ONH marked by the ophthalmologist. b The result for STARE image im0035. Distance = 26 pixels. This is the only case from the STARE subset for which the method of Youssif et al.1 failed (as shown in Fig. 5a of their paper). This example illustrates the strengths and weaknesses of different approaches. Each image is of size 700 × 605 pixels.

From Table 1, we can observe that, with the inclusion of selection based on the reference intensity, the average distance between the detected and manually marked ONH has been reduced, leading to better performance of the proposed method with the DRIVE images. However, from Table 2, we find that the performance in the case of the STARE images with intensity-based selection is the same as that without the condition. This is because the threshold of 50% of the reference intensity is relatively low and most of the peaks in the node maps passed this threshold. The use of a higher threshold could lead to no peak being retained after application of the intensity-based condition in several STARE images where the node map has only one or two peaks.
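The intensity-based selection discussed above can be sketched as a scan of the ranked peaks. This is a minimal illustration only: the reference intensity is taken as a given input (its definition belongs to the “Detection of the ONH” section), the intensity at a peak is read from a single pixel, and the function name and data layout are hypothetical.

```python
def select_onh_peak(ranked_peaks, image, reference_intensity, fraction=0.5):
    """Return the first peak, in rank order, that passes the intensity condition.

    ranked_peaks: list of (x, y) positions, highest node-map peak first
    image: 2-D sequence of intensities indexed as image[y][x]
    reference_intensity: reference value, assumed to be computed elsewhere
    fraction: fraction of the reference intensity a peak must reach

    Returns None when no peak passes, which can happen with a high fraction
    when the node map has only one or two peaks.
    """
    threshold = fraction * reference_intensity
    for x, y in ranked_peaks:
        if image[y][x] >= threshold:
            return (x, y)
    return None


# Hypothetical 2 x 2 image and ranked peaks, for illustration only.
toy_image = [[10, 200], [90, 120]]
toy_peaks = [(0, 0), (0, 1), (1, 0)]
chosen = select_onh_peak(toy_peaks, toy_image, reference_intensity=200)
```

With a low fraction such as 0.5, most peaks pass the test and the first-ranked peak is usually retained, which is consistent with the identical rows in Table 2.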

The methods proposed in the present work have provided better results than the method based on the Hough transform that we have recently published;3,25 nevertheless, the relatively poor performance with the STARE database indicates the need for further improvement. In addition, the proposed method is computationally intensive due to the use of a large number of Gabor filters and two optimization techniques in the step of phase portrait analysis. On a Lenovo ThinkPad T61 computer with the Windows XP Professional operating system, a 2.5-GHz Core 2 Duo processor, and 1.96 GB RAM, the preprocessing steps took 11 s per image, the Gabor filtering procedure took 17 s, and the phase portrait analysis and subsequent steps to detect the ONH took 2,266 s. However, if the preprocessing steps and the Gabor filtering procedure are also used for the detection of blood vessels,34 the additional requirements for the detection of the ONH would only be the derivation and analysis of the phase portraits. Computational speed is an important issue in a practical clinical application; however, the present work is at the research stage, where the focus is on the quality and accuracy of the results. Furthermore, detection of the ONH is just one of the several image processing steps that we are designing. The final product for use in a clinical setting would need to include optimized programs and graphical user interfaces. Computational requirements and speed would depend upon the work load, the type of the computer used, and the algorithms used in the application; these details are beyond the scope of the present work.

Conclusion and Future Work

We have proposed a procedure for the automatic detection of the ONH in fundus images of the retina based on the use of Gabor filters and phase portrait analysis of the orientation of the blood vessels; these methods have not been studied in any of the published works on the detection of the ONH. The blood vessels of the retina were detected using Gabor filters and phase portrait modeling was applied to the orientation field to detect points of convergence of the vessels. The method was evaluated by using the distance from the detected center of the ONH to that marked independently by an ophthalmologist. With the inclusion of a step for intensity-based selection of the peaks in the node map, a successful detection rate of 100% was obtained with the 40 images in the DRIVE database.

Analysis of the results was performed in two different ways. One approach involved the assessment of the distances between the detected centers of the ONH and the corresponding points independently marked by an ophthalmologist. The second approach was based on FROC analysis, which has not been reported in any of the published works on the detection of the ONH except our own recent related work.3

The proposed methods performed well with the DRIVE images, but yielded lower sensitivity of detection with the STARE images. Further studies are required to incorporate additional characteristics of the ONH to improve the efficiency of detection. Additional constraints related to the characteristics of retinal vessels may be applied to improve the rate of successful detection of the ONH in images with poor quality, abnormal features, and pathological changes.

Computer-aided analysis of retinal images can assist in quantitative and standardized analysis of retinal pathology. Various types of retinopathy, including retinopathy of prematurity,12 diabetic retinopathy,13 and AMD4 may be detected and analyzed using techniques of image processing and pattern recognition. Accurate identification and localization of retinal lesions could lead to efficient diagnosis, treatment, and management of retinopathy. In procedures for the detection and analysis of retinal pathology, normal structures could be initially located and identified and subsequently used as landmarks for reference. The methods proposed in the present work for the detection of the ONH could contribute as above in an overall scheme for computer-aided diagnosis of retinal pathology.

Acknowledgments

This work was supported by the Natural Sciences and Engineering Research Council of Canada.

References

- 1. Youssif AAHAR, Ghalwash AZ, Ghoneim AASAR. Optic disc detection from normalized digital fundus images by means of a vessels’ direction matched filter. IEEE Trans Med Imag. 2008;27(1):11–18. doi: 10.1109/TMI.2007.900326.

- 2. Walter T, Klein JC: Segmentation of color fundus images of the human retina: Detection of the optic disc and the vascular tree using morphological techniques. Medical Data Analysis, Lecture Notes in Computer Science, Volume 2199, Springer: Berlin, Pages 282-287, 2001

- 3. Zhu X, Rangayyan RM, Ells AL: Detection of the optic disc in fundus images of the retina using the Hough transform for circles. J Digit Imaging, in press, 2009

- 4. Acharya R, Tan W, Yun WL, Ng EYK, Min LC, Chee C, Gupta M, Nayak J, Suri JS. The human eye. In: Acharya R, Ng EYK, Suri JS, editors. Image Modeling of the Human Eye. Norwood: Artech House; 2008. pp. 1–35.

- 5. Michaelson IC, Benezra D. Textbook of the Fundus of the Eye. 3rd ed. Edinburgh: Churchill Livingstone; 1980.

- 6. Park M, Jin JS, Luo S: Locating the optic disc in retinal images. In: Proceedings of the International Conference on Computer Graphics, Imaging and Visualisation, page 5, Sydney, QLD, Australia, July, 2006. IEEE

- 7. Tobin KW, Chaum E, Govindasamy VP, Karnowski TP. Detection of anatomic structures in human retinal imagery. IEEE Trans Med Imag. 2007;26(12):1729–1739. doi: 10.1109/TMI.2007.902801.

- 8. Li H, Chutatape O. Automated feature extraction in color retinal images by a model based approach. IEEE Trans Biomed Eng. 2004;51(2):246–254. doi: 10.1109/TBME.2003.820400.

- 9. Fleming AD, Goatman KA, Philip S, Olson JA, Sharp PF. Automatic detection of retinal anatomy to assist diabetic retinopathy screening. Phys Med Biol. 2007;52:331–345. doi: 10.1088/0031-9155/52/2/002.

- 10. Early Treatment Diabetic Retinopathy Study Research Group. Grading diabetic retinopathy from stereoscopic color fundus photographs—An extension of the modified Airlie House classification (ETDRS report number 10). Ophthalmology. 1991;98:786–806.

- 11. Sun H, Nathans J. The challenge of macular degeneration. Sci Am. 2001;285(4):68–75. doi: 10.1038/scientificamerican1001-68.

- 12. Ells A, Holmes JM, Astle WF, Williams G, Leske DA, Fielden M, Uphill B, Jennett P, Hebert M. Telemedicine approach to screening for severe retinopathy of prematurity: A pilot study. Ophthalmology. 2003;110(11):2113–2117. doi: 10.1016/S0161-6420(03)00831-5.

- 13. Patton N, Aslam TM, MacGillivray T, Deary IJ, Dhillon B, Eikelboom RH, Yogesan K, Constable IJ. Retinal image analysis: Concepts, applications and potential. Prog Retin Eye Res. 2006;25(1):99–127. doi: 10.1016/j.preteyeres.2005.07.001.

- 14. Osareh A, Mirmehdi M, Thomas B, Markham R: Comparison of colour spaces for optic disc localisation in retinal images. In: Proceedings 16th International Conference on Pattern Recognition. Quebec City, Quebec, Canada, 2002, pp 743–746

- 15. Hoover A, Kouznetsova V, Goldbaum M. Locating blood vessels in retinal images by piecewise threshold probing of a matched filter response. IEEE Trans Med Imag. 2000;19(3):203–210. doi: 10.1109/42.845178.

- 16. Hoover A, Goldbaum M. Locating the optic nerve in a retinal image using the fuzzy convergence of the blood vessels. IEEE Trans Med Imag. 2003;22(8):951–958. doi: 10.1109/TMI.2003.815900.

- 17. Structured Analysis of the Retina, http://www.ces.clemson.edu/~ahoover/stare/, accessed on March 24, 2008

- 18. Staal J, Abràmoff MD, Niemeijer M, Viergever MA, Ginneken B. Ridge-based vessel segmentation in color images of the retina. IEEE Trans Med Imag. 2004;23(4):501–509. doi: 10.1109/TMI.2004.825627.

- 19. DRIVE: Digital Retinal Images for Vessel Extraction, http://www.isi.uu.nl/Research/Databases/DRIVE/, accessed on March 24, 2008

- 20. ter Haar F: Automatic localization of the optic disc in digital colour images of the human retina. Master’s thesis, Utrecht University, Utrecht, the Netherlands, 2005

- 21. Lalonde M, Beaulieu M, Gagnon L. Fast and robust optic disc detection using pyramidal decomposition and Hausdorff-based template matching. IEEE Trans Med Imag. 2001;20(11):1193–1200. doi: 10.1109/42.963823.

- 22. Barrett SF, Naess E, Molvik T. Employing the Hough transform to locate the optic disk. Biomed Sci Instrum. 2001;37:81–86.

- 23. Chrástek R, Skokan M, Kubecka L, Wolf M, Donath K, Jan J, Michelson G, Niemann H: Multimodal retinal image registration for optic disk segmentation. In: Methods of Information in Medicine, German BVM-Workshop on Medical Image Processing, volume 43. Germany: Schattauer GmbH, 2004, pp 336–342

- 24. Chrástek R, Wolf M, Donath K, Niemann H, Paulus D, Hothorn T, Lausen B, Lämmer R, Mardin CY, Michelson G. Automated segmentation of the optic nerve head for diagnosis of glaucoma. Med Image Anal. 2005;9(4):297–314. doi: 10.1016/j.media.2004.12.004.

- 25. Zhu X, Rangayyan RM: Detection of the optic disc in images of the retina using the Hough transform. In: Proceedings of the 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society. Vancouver, BC, Canada, August 20–24, 2008. IEEE, pages 3546–3549

- 26. Sekhar A, Al-Nuaimy W, Nandi AK: Automated localisation of retinal optic disk using Hough transform. In: 5th IEEE International Symposium on Biomedical Imaging: From Nano to Macro, ISBI 2008, 2008, pp 1577–1580

- 27. Sinthanayothin C, Boyce JF, Cook HL, Williamson TH. Automated localisation of the optic disc, fovea, and retinal blood vessels from digital colour fundus images. Br J Ophthalmol. 1999;83(8):902–910. doi: 10.1136/bjo.83.8.902.

- 28. Carmona EJ, Rincon M, García-Feijoó J, Martínez de-la Casa JM. Identification of the optic nerve head with genetic algorithms. Artif Intell Med. 2008;43(3):243–259. doi: 10.1016/j.artmed.2008.04.005.

- 29. Hussain AR: Optic nerve head segmentation using genetic active contours. In: Proceeding International Conference on Computer and Communication Engineering. Kuala Lumpur, Malaysia, 2008, IEEE, pp 783–787

- 30. Foracchia M, Grisan E, Ruggeri A. Detection of optic disc in retinal images by means of a geometrical model of vessel structure. IEEE Trans Med Imag. 2004;23(10):1189–1195. doi: 10.1109/TMI.2004.829331.

- 31. Kim SK, Kong HJ, Seo JM, Cho BJ, Park KH, Hwang JM, Kim DM, Chung H, Kim HC: Segmentation of optic nerve head using warping and RANSAC. In: Proceedings of the 29th Annual International Conference of the IEEE Engineering in Medicine and Biology Society. Lyon, France, 2007, IEEE, pp 900–903

- 32. Ying H, Zhang M, Liu JC: Fractal-based automatic localization and segmentation of optic disc in retinal images. In: Proceedings of the 29th Annual International Conference of the IEEE Engineering in Medicine and Biology Society. Lyon, France, 2007, IEEE, pp 4139–4141

- 33. Park J, Kien NT, Lee G: Optic disc detection in retinal images using tensor voting and adaptive mean-shift. In: IEEE 3rd International Conference on Intelligent Computer Communication and Processing, ICCP. Romania: Cluj-Napoca, 2007, pp 237–241

- 34. Rangayyan RM, Ayres FJ, Oloumi F, Oloumi F, Eshghzadeh-Zanjani P: Detection of blood vessels in the retina with multiscale Gabor filters. J Electron Imaging 17(2):023018:1–7, 2008

- 35. Rao AR, Jain RC. Computerized flow field analysis: Oriented texture fields. IEEE Trans Pattern Anal Mach Intell. 1992;14(7):693–709. doi: 10.1109/34.142908.

- 36. Rangayyan RM, Zhu X, Ayres FJ: Detection of the optic disc in images of the retina using Gabor filters and phase portrait analysis. In: Vander Sloten J, Verdonck P, Nyssen M, Haueisen J Eds. IFMBE Proceedings 22: Proceedings of 4th European Congress for Medical and Biomedical Engineering. Belgium: Antwerp, 2008, pp 468–471

- 37. Image Processing and Analysis in Java, http://rsbweb.nih.gov/ij/, accessed on September 3, 2008

- 38. Gonzalez RC, Woods RE. Digital Image Processing. 2nd ed. Upper Saddle River: Prentice Hall; 2002.

- 39. Soares JVB, Leandro JJG, Cesar RM, Jr, Jelinek HF, Cree MJ. Retinal vessel segmentation using the 2-D Gabor wavelet and supervised classification. IEEE Trans Med Imag. 2006;25(9):1214–1222. doi: 10.1109/TMI.2006.879967.

- 40. Rangayyan RM, Ayres FJ. Gabor filters and phase portraits for the detection of architectural distortion in mammograms. Med Biol Eng Comput. 2006;44(10):883–894. doi: 10.1007/s11517-006-0088-3.

- 41. Rangayyan RM. Biomedical Image Analysis. Boca Raton: CRC; 2005.

- 42. Ayres FJ, Rangayyan RM: Design and performance analysis of oriented feature detectors. J Electron Imaging 16(2):023007:1–12, 2007

- 43. Manjunath BS, Ma WY. Texture features for browsing and retrieval of image data. IEEE Trans Pattern Anal Mach Intell. 1996;18(8):837–842. doi: 10.1109/34.531803.

- 44. Swanson C, Cocker KD, Parker KH, Moseley MJ, Fielder AR. Semiautomated computer analysis of vessel growth in preterm infants without and with ROP. Br J Ophthalmol. 2003;87(12):1474–1477. doi: 10.1136/bjo.87.12.1474.

- 45. Ayres FJ, Rangayyan RM. Reduction of false positives in the detection of architectural distortion in mammograms by using a geometrically constrained phase portrait model. Int J Comput Assist Radiol Surg. 2007;1(6):361–369. doi: 10.1007/s11548-007-0072-x.

- 46. Wylie CR, Barrett LC. Advanced Engineering Mathematics. 6th ed. New York: McGraw-Hill; 1995.

- 47. Ayres FJ, Rangayyan RM. Characterization of architectural distortion in mammograms. IEEE Eng Med Biol Mag. 2005;24(1):59–67. doi: 10.1109/MEMB.2005.1384102.

- 48. Egan JP, Greenberg GZ, Schulman AI. Operating characteristics, signal detectability, and the method of free response. J Acoust Soc Am. 1961;33(8):993–1007. doi: 10.1121/1.1908935.

- 49. Edwards DC, Kupinski MA, Metz CE, Nishikawa RM. Maximum likelihood fitting of FROC curves under an initial-detection-and-candidate-analysis model. Med Phys. 2002;29:2861–2870. doi: 10.1118/1.1524631.