Abstract

Key words: Imaging informatics, computer-assisted instruction, medical informatics applications

Background

According to the American Cancer Society’s Survey, 28,900 new cases of oral cancer emerged in 2000.1 The death rate associated with oral cancer is particularly high because in more than half of the cases, the cancer is discovered late in its development.2 In these instances, radiography plays a key role in the diagnosis. Dentists trained in radiographic interpretation can determine the presence of tumors or growths, and can even distinguish malignant tumors from benign tumors based on their radiographic presentation. Interpretation of lesions in the jaws, therefore, is a vital professional skill for dental practitioners. Dentists’ encounters with non-routine radiographic pathology are limited because of the small size of patient pools and the relatively low occurrence rate of certain disease processes. However, meaningful oral and maxillofacial radiographic pathology, including oral cancer, does exist and is diagnosed and treated each year.1 Furthermore, dentists often lack confidence in their ability to interpret radiographic images accurately.3 This lack of confidence could lead to a failure to recognize, or a misdiagnosis of, significant pathology, including cancer. Dental faculty face the challenge of finding teaching cases from various sources because they often do not have their own. There is a real need to enhance radiographic interpretation and diagnosis skills for both dental students and current practitioners.3

Interpretation of radiographic dental images is a decision-making process that requires broad knowledge of, and in-depth familiarity with, the underlying pathology and its radiographic manifestations. It is a significant part of the radiology curriculum taught in every dental school. Many meaningful teaching cases are not used to their full potential because they are not properly annotated, archived, and shared. Rare oral disease processes may occur so infrequently that the student’s sole exposure is the depiction in a textbook. Currently, teaching cases for the training of dentists are developed on an as-needed basis and are generally created and maintained locally. This process necessarily limits the pool of teaching cases to the population served by that dental health center, and often limits access to the students who work with a particular instructor. Some areas are not sufficiently demographically diverse to account for differences between ethnic groups, population ages, or other factors. Most radiology interpretation courses at the pre-doctoral level are given in large lecture format.

Computer-based learning promises to offer students individualized and interactive learning. Some interesting examples of computer-based teaching programs are BrainStorm at Stanford University,4 Digital Anatomist at University of Washington, Seattle,5 and HeartLab at Harvard Medical School.6 Existing multimedia tools for dental radiography, such as NewMentor Clinical Radiography,7 UTHSCSA Image Tool,8 and OralMax,9 are all CD-ROM based and designed to assist dental students and professionals with various aspects of radiology, but they do not specifically target skill development in interpreting lesions on radiographic images. The content of these tools is static, and several were not designed for interactivity or assessment.

The Healthcare Informatics Program at the University of Wisconsin–Milwaukee (UWM) and the Dental Informatics Program at Marquette University School of Dentistry (MUSoD) have been collaborating to test the feasibility of developing and implementing a web-based tele-educational system for dental radiology. The goal of this study is to provide unique computer-based tools for an educational program that improves dental students’ skills in interpreting radiographic images.

Methods

A ‘user view’ defines what is required of an information system from the perspective of a particular job or business area. The project group identified the major user views for this image archive application: the annotator view, the case demo view (teacher), the test designer view, the student view, and the data operator view. The multiple user views were analyzed using a centralized and integrated approach.10 The requirements for data types and sizes were analyzed, and transaction requirements, including data entry, update, deletion, and queries, were also assessed. Based on this information, the initial database size was estimated. Another requirement for the image archive is compatibility with multiple image formats, which allows images from different outside systems to be imported with minimal effort. The archive will support popular image formats such as JPEG, GIF, and TIFF. More significantly, it will support image files conforming to the DICOM format. DICOM,11 a standard for the transmission of medical images and their associated information, was jointly developed by the American College of Radiology and the National Electrical Manufacturers Association. DICOM support will ease integration with imaging equipment from different vendors, picture archiving and communication systems (PACS), and other DICOM-supporting medical information systems. An American Dental Association (ADA) dental informatics group is working on the development of a subset of DICOM standards specifically for dental imaging. Planned security measures range from password protection to physical backup. Patient identities are protected as required by the Health Insurance Portability and Accountability Act (HIPAA).
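As an illustration of the multi-format requirement, the sketch below guesses a file's format from its leading bytes. It is a minimal example and not the archive's actual import code; the function name `sniff_image_format` is hypothetical. The signatures are the standard ones: JPEG files begin with the bytes FF D8 FF, GIF files with `GIF87a` or `GIF89a`, TIFF files with `II*\0` or `MM\0*`, and DICOM Part 10 files carry the marker `DICM` after a 128-byte preamble.

```python
from typing import Optional

# DICOM Part 10 files start with a 128-byte preamble followed by b"DICM".
DICOM_PREAMBLE_LEN = 128


def sniff_image_format(header: bytes) -> Optional[str]:
    """Guess the image format from the first bytes of a file.

    Hypothetical helper for illustration; returns None if no known
    signature matches.
    """
    # Check DICOM first: it is the most specific signature.
    if header[DICOM_PREAMBLE_LEN:DICOM_PREAMBLE_LEN + 4] == b"DICM":
        return "DICOM"
    if header.startswith(b"\xff\xd8\xff"):
        return "JPEG"
    if header.startswith((b"GIF87a", b"GIF89a")):
        return "GIF"
    # Little-endian ("II") and big-endian ("MM") TIFF headers.
    if header.startswith((b"II*\x00", b"MM\x00*")):
        return "TIFF"
    return None
```

In practice the caller would read at least the first 132 bytes of an uploaded file and pass them to this function before deciding how to import the image.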

To prove the feasibility of the online training method, we set out to develop a prototype system that would allow access through the UWM and MUSoD campus intranets or from anywhere in the world at any time.12 To determine user needs, we conducted formal market research, which included one-on-one interviews, focus groups, surveys, and a literature search. To evaluate dental students’ needs for the dental radiology training tool, we conducted a pilot study among dental students at MUSoD in January 2007. A questionnaire was used to collect feedback from 36 volunteer students. Their feedback will be used to design the system. The system should include a regional dentistry imaging archive and two applications, namely an annotation tool and a training tool. The image archive application also includes image viewing and manipulation tools. The new training method will provide dental school faculty and students with access to a web-based archive of dental cases, thus reducing the barrier to accessing teaching cases.

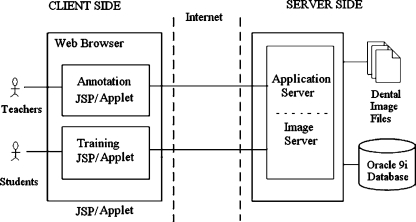

The system’s client–server architecture allows users at any location to access the dental image archive (see Fig. 1). Both the annotation tool and the training tool are web-based (JSP/Applet) programs that allow users to annotate and retrieve a case through a series of systematic, user-friendly steps. On the client side of the dental annotation and training tools are the teachers’ and students’ web browsers, which access the web-based interfaces of the application. A Java applet loads automatically on the client side to provide interactive features for the annotation and training processes. Client stubs encapsulate all procedures needed to establish and perform remote queries and to receive query results, and they can be used concurrently by multiple clients. The two client stubs are ApplicationClient and ImageClient; their server-side counterparts in the common framework are the ApplicationServer and the ImageServer. On the server side, case data are stored in an Oracle database and images are stored as files on the operating system (OS) file system. The application is hosted on a JSP-enabled Apache web server, which is capable of serving dynamic web pages and processing web-form data. The ApplicationServer responds to queries for case data. The ImageServer serves as a gateway to the image files and safeguards them by verifying that users are authorized before processing clients’ requests for images.

Fig 1.

System architecture.
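The gateway role of the ImageServer described above can be sketched as follows. This is an illustrative Python sketch under stated assumptions, not the authors' Java implementation: the class `ImageGateway`, the session-token lookup, and the path check are all hypothetical, and the only behavior taken from the text is that a request is served only after the user is verified as authorized.

```python
from dataclasses import dataclass, field
from pathlib import Path
from typing import Dict, Set


class AccessDenied(Exception):
    """Raised when a request is unauthorized or leaves the image store."""


@dataclass
class ImageGateway:
    """Hypothetical stand-in for the ImageServer's gatekeeping role."""
    image_root: Path
    sessions: Dict[str, str] = field(default_factory=dict)   # token -> user name
    authorized_users: Set[str] = field(default_factory=set)

    def resolve(self, session_token: str, image_name: str) -> Path:
        """Return the file path for an image, or raise AccessDenied."""
        user = self.sessions.get(session_token)
        if user is None or user not in self.authorized_users:
            raise AccessDenied("unknown session or unauthorized user")
        root = self.image_root.resolve()
        candidate = (root / image_name).resolve()
        # Assumed extra safeguard: refuse names that escape the image store.
        if root not in candidate.parents:
            raise AccessDenied("requested path is outside the image store")
        return candidate
```

Keeping the image files behind a single entry point like this means that clients never see file system paths directly; they only present a session and an image name.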

Results

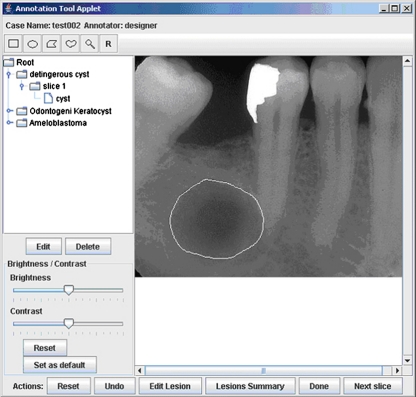

A prototype of the annotation tool was developed (see Fig. 2). Graphical annotation involves electronically defining and outlining regions of interest (ROI) directly on the image. The location and shape of each ROI are stored for future retrieval and review. To aid the annotator in visualizing the image, controls for brightness, contrast, and zoom are available. The annotation tool supports the DICOM format by using a public domain Java image-processing package, ImageJ.13 ImageJ was developed at the National Institutes of Health and can handle DICOM images. In addition, ImageJ is written in Java, which makes it well suited to the Java-based platform, and it provides standalone tools for many of the needed image-processing functions, such as zooming in and out and changing contrast and brightness.

Fig 2.

Screenshot of the annotation tool.
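The brightness and contrast controls mentioned above can be illustrated with a simple linear transform on 8-bit gray values. This is a sketch under stated assumptions and not ImageJ's implementation: the function name `adjust_pixel`, the pivot at mid-gray (128), and the 0 to 255 range are choices made for the example.

```python
def adjust_pixel(value: int, brightness: int = 0, contrast: float = 1.0) -> int:
    """Apply a linear brightness/contrast adjustment to one 8-bit gray value.

    Contrast scales the distance from mid-gray (128); brightness is then
    added as an offset. The result is clamped to the range 0..255.
    """
    adjusted = contrast * (value - 128) + 128 + brightness
    return max(0, min(255, round(adjusted)))


def adjust_row(row, brightness=0, contrast=1.0):
    """Adjust every pixel in one image row (a list of gray values)."""
    return [adjust_pixel(v, brightness, contrast) for v in row]
```

For example, doubling the contrast pushes a value of 200 past the top of the range, so it is clamped to 255, while a value of 128 is left unchanged.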

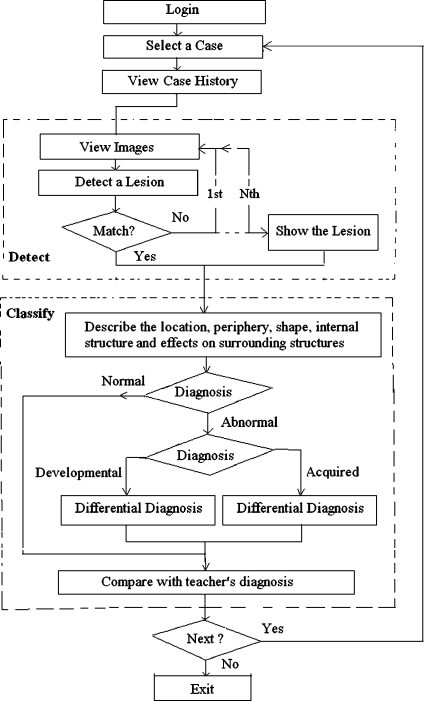

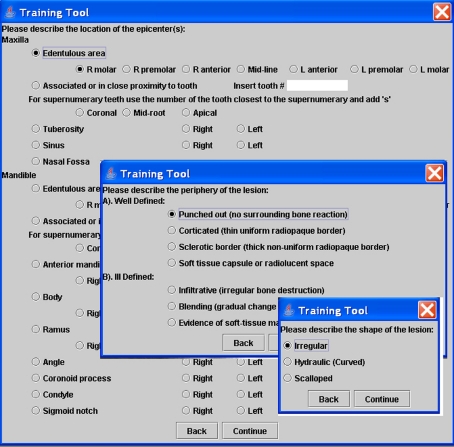

The training tool tests the student’s skill level in two steps. The first step requires the student to detect the abnormality. The second step requires the student to describe the abnormality and classify it into a broad category such as “tumor” or “cyst”. Different lesions have specific radiographic features. The radiographic signs that dentists interpret in dental images include radiographic density, margin characteristics, shape, location and distribution, size, internal architecture, and effects on surrounding tissue. The training tool allows students to interpret dental images in a workflow similar to clinical practice (see Fig. 3). Students must first detect the lesion before going on to describe it and render a broad classification of the underlying pathologic process. During the interpretation, the training tool provides immediate feedback, which includes the correct radiographic descriptions of the lesions and the subsequent diagnoses based on clinical findings and/or pathology results.

Fig 3.

Training tool work flow.
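The ordering constraint in this workflow (detection must succeed before description and classification) can be sketched as a small state machine. The class and method names below are hypothetical, and allowing the student to retry after a missed detection is an assumption made for the example; the text states only that detection precedes classification and that feedback is immediate.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Step(Enum):
    DETECT = auto()
    DESCRIBE_AND_CLASSIFY = auto()
    DONE = auto()


@dataclass
class TrainingSession:
    """Hypothetical model of one student working through one case."""
    reference_category: str      # e.g. "cyst", taken from the faculty annotation
    step: Step = Step.DETECT

    def submit_detection(self, mark_inside_lesion: bool) -> str:
        if self.step is not Step.DETECT:
            raise RuntimeError("detection has already been completed")
        if not mark_inside_lesion:
            # Assumption: the student stays on the detection step and retries.
            return "Mark is outside the annotated lesion. Try again."
        self.step = Step.DESCRIBE_AND_CLASSIFY
        return "Lesion detected. Describe and classify it."

    def submit_classification(self, category: str) -> str:
        if self.step is not Step.DESCRIBE_AND_CLASSIFY:
            raise RuntimeError("the lesion must be detected first")
        self.step = Step.DONE
        if category.strip().lower() == self.reference_category.lower():
            return f"Correct: {self.reference_category}."
        return f"Reference classification: {self.reference_category}."
```

Raising an error when classification is attempted too early mirrors the clinical ordering: a lesion that has not been located cannot be classified.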

The training tool is self-guided and allows students to improve on the recognition and interpretation of dental images. The tool supports the processes of both detecting abnormalities visible in dental images and rendering a broad classification of those abnormalities. After logging into the training system, the student first reviews a list of cases with general case information. After the student selects a case for training, all data pertaining to the patient’s medical and dental history is displayed.

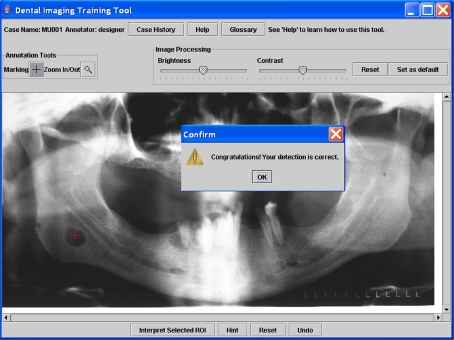

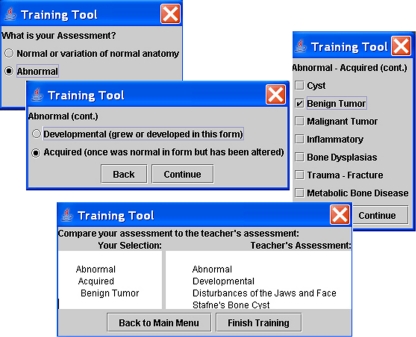

Before student instruction, case authors, usually faculty, use the annotation tool to annotate the lesions graphically in the dental image. During training, students place a mark as an ROI on the digital dental image to identify the abnormality. The student’s goal is to place the mark within the circle that the faculty annotator drew around the lesion. The training tool can directly compare the instructor’s and the student’s graphical annotations of the lesion (see Fig. 4). The tool allows the user to review the images using the zoom in/out feature and to adjust the contrast and brightness using an intensity window at the top of the screen. After detecting the abnormality, the student proceeds to describe the lesion (see Fig. 5) and formulate a general classification of the abnormality (see Fig. 6). Feedback comparing the teacher’s assessment with the student’s assessment is provided immediately.

Fig 4.

Detection of the region of interest in the dental image.

Fig 5.

Describe the location, periphery and shape of the lesion.

Fig 6.

Diagnosis of the lesion.
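The detection check described above, in which the student's mark must fall within the circle annotated by the faculty, amounts to a point-in-circle test. The sketch below is a minimal illustration; the class name `CircleROI`, the pixel coordinate convention, and the choice to count a mark exactly on the boundary as a hit are assumptions rather than details from the tool.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class CircleROI:
    """Hypothetical circular region of interest in image pixel coordinates."""
    cx: float
    cy: float
    radius: float

    def contains(self, x: float, y: float) -> bool:
        """True if the point (x, y) lies inside or on the circle."""
        return math.hypot(x - self.cx, y - self.cy) <= self.radius


# Usage: compare a student's mark with the faculty annotation.
faculty_roi = CircleROI(cx=100, cy=100, radius=20)
student_hit = faculty_roi.contains(110, 110)   # about 14.1 px from the center
student_miss = faculty_roi.contains(130, 100)  # 30 px from the center
```

If zooming is applied in the viewer, the student's click would need to be converted back to image coordinates before this test is run.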

To evaluate the effectiveness of the dental radiology training tool, the prototype system was demonstrated at a technology exposition during the March 2007 Annual Meeting of the American Dental Education Association (ADEA). Dental educators were able to use the tool and provide comments. Fifteen conference attendees, primarily dental school faculty, completed a survey reporting their reactions to the system. The survey was a subset of the Questionnaire for User Interface Satisfaction (QUIS).14 Survey items are rated on a scale of 0 (least favorable response) to 9 (most favorable response). A total of 27 scaled items were asked. Respondents were also given opportunities to provide written comments. Items were designed to measure five areas of the tool and user attitude toward the tool: overall user reactions (six items), screen design (six items), terminology and system information (six items), learning (six items), and system capabilities (five items). The attendees rated the tool very favorably. The overall mean rating was 7.72, well above the midpoint (4.5) of the scale. Learning had the highest rating, at 8.27. The learning area includes the following items:

- Learning to operate the system (difficult to easy)

- Exploration of features by trial and error (discouraging to encouraging)

- Remembering names and use of commands (difficult to easy)

- Tasks can be performed in a straightforward manner (never to always)

- Help messages on the screen (unhelpful to helpful)

- Supplemental reference materials (confusing to clear)

“Screen Design” had the lowest rating, at 6.60, which suggests that the organization of information on the screen can be further improved. In short, this evaluation study confirmed the need for web-based training. Dental faculty felt that the dental radiology training method has potential and should be considered a valuable approach for dental education.
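The kind of summary reported above can be reproduced with a few lines of code. The sketch below is illustrative only: the ratings are hypothetical placeholders rather than the study's data, and pooling all item ratings to obtain the overall mean is an assumption, since the text does not state how the overall figure was computed. The midpoint of the 0 to 9 scale is (0 + 9) / 2 = 4.5, as quoted in the text.

```python
from statistics import mean
from typing import Dict, List

SCALE_MIN, SCALE_MAX = 0, 9   # QUIS items are rated from 0 to 9


def scale_midpoint() -> float:
    """Midpoint of the rating scale."""
    return (SCALE_MIN + SCALE_MAX) / 2


def summarize(ratings: Dict[str, List[int]]) -> Dict[str, float]:
    """Mean rating per area, plus an 'overall' mean pooled over all ratings."""
    summary = {area: mean(values) for area, values in ratings.items()}
    summary["overall"] = mean(v for values in ratings.values() for v in values)
    return summary


# Hypothetical ratings, NOT the survey data from this study.
example = {"Learning": [8, 9, 8], "Screen design": [6, 7, 7]}
result = summarize(example)
above_midpoint = result["overall"] > scale_midpoint()
```

Comparing each area mean against the midpoint and against the other areas is what identifies the strongest and weakest aspects of the interface.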

Discussions

The ADA also provides continuing dental education online. For example, there is an online course, “Common Jaw Lesions”, in the “Oral Radiology” category. ADA Continuing Education courses focus on knowledge acquisition. These courses post textbook information online and include post-test multiple-choice questions. Our system directly targets skills development. Two critical skills for interpreting radiological dental images are detection and classification. Detection is the most important skill for radiologists because the lesion must be located in the image before any classification can be made. Because our system requires the application of detection and classification skills, reflecting the workflow in real clinical practice, we believe that it is more useful and intuitive than ADA continuing education courses. In addition, our training tool is interactive. Our system provides a powerful image viewer that allows users to manipulate images, for example by zooming and adjusting contrast, while providing graphical annotation. Because our system is web-based, it will be more convenient for dentists to improve their skills in interpreting digital images at any time and from anywhere. Finally, our dental image database will allow users to explore a large collection of dental cases.

This tool is not freeware. We are currently conducting further development and evaluation with a group of users in dental schools. The development of a computer-based dental education application is a complex task that requires collaboration among dental faculty, software developers, and educational and cognitive psychologists. The preliminary evaluation study yielded suggestions such as “It is a great idea for a study tool but it should be simplified.” and “Interface could have been brighter colored.” User acceptance remains a major obstacle for computer-based training applications, and adequate training in the use of such applications is needed. Hardware is also a constraint: on the user side, a regular personal computer has a low-resolution monitor, which is sufficient for annotating dental images but does not meet the requirements for radiographic diagnosis of digital medical images. In the future, a system for displaying the training tool should have a high-spatial-resolution monitor of at least 5 megapixels.

Conclusions

The web-based training method has the potential to be a valuable approach for education in dental radiology. It can supplement the current method of teaching, without increasing faculty teaching load, to further reinforce and improve dental students’ skills in interpreting dental radiographic images. The web-based training method provides an alternative to lecture-based course presentations and simulates a one-on-one teacher–student learning environment.

Acknowledgment

This project was supported by a grant from the Wisconsin Initiative for Biomedical and Health Technologies (WIBHT).

References

- 1. Oral Cancer Foundation. http://www.oralcancerfoundation.org/

- 2. Greenlee RT, Hill-Harmon M, Murray T, Thun M. Cancer statistics. CA Cancer J Clin. 2001;51(1):15–36. doi: 10.3322/canjclin.51.1.15.

- 3. Stheeman SE, Mileman PA, ’t Hof MA, Stelt PF. Room for improvement? The accuracy of dental practitioners who diagnose bony pathoses with radiographs. Oral Surg Oral Med Oral Pathol Oral Radiol Endod. 1996;81:251–254. doi: 10.1016/S1079-2104(96)80425-2.

- 4. Hsu HL: Interactivity of human-computer interaction and personal characteristics in a hypermedia learning environment. Unpublished doctoral dissertation, Stanford University, 1996

- 5. Rosses C, Shapiro LG, Brinkley JF: The digital anatomist foundational model: principles for defining and structuring its concept domain. Proceedings of the AMIA Fall Symposium, pp. 820-824. Orlando, Florida, 1998

- 6. Bergeron BP, Greenes RA. Clinical skill-building simulations in cardiology: HeartLab and EKGLab. Comput Methods Programs Biomed. 1989;30(2-3):111–126. doi: 10.1016/0169-2607(89)90063-1.

- 7. NewMentor Clinical Radiography [homepage on the Internet] [cited 2009 March 10]. Available from: http://www.newmentor.com/

- 8. UTHSCSA Image Tool [homepage on the Internet] [cited 2009 March 10]. Available from: http://ddsdx.uthscsa.edu/dig/itdesc.html

- 9. OralMax [homepage on the Internet] [cited 2009 March 10]. Available from: http://www.oralmax.net/

- 10. Connolly TM, Begg CE: Database solutions: a step-by-step approach to building databases. Harlow; Reading, MA: Addison-Wesley, c2000

- 11. Digital imaging communications in medicine DICOM Part 3. NEMA Standards Publications, National Electrical Manufacturers Association, Rosslyn, VA, 2000

- 12. Wu M, Koenig L, Zhang X, Lynch J, Wirtz T: Web-based Training Tool for Interpreting Dental Radiographic Images, American Medical Informatics Association Annual Symposium Proceeding. 2007:1159-1159

- 13. ImageJ [homepage on the Internet] [cited 2007 May 18]. Available from: http://rsb.info.nih.gov/ij/

- 14. Slaughter L, Norman KL, Shneiderman B: Assessing users’ subjective satisfaction with the Information System for Youth Services (ISYS). Proc. of Third Annual Mid-Atlantic Human Factors Conference: March 26-28, 1995; Blacksburg, VA