Abstract

Today, all types of data are used in various ways to provide useful information. PACS audit data have not typically been used as a data source, although they include information that can be used for various purposes such as process improvement (PI). In a typical radiology workflow, examinations do not show up on a radiologist’s unread worklist until the examination has been verified. An issue with clinical access to radiology reports was determined to be partly due to delays in the verification step. A PI goal was created to reduce this wait time. PACS audit data were mined using an in-house application to provide workflow time span information, and the particular span from arrived to verified was measured. The report also allowed specific examinations and users to be identified, and these data were used as a PI educational tool. The end result was a dramatic reduction in examinations taking longer than 1 h to be verified, which reduced the time for report production and enhanced patient care.

Key words: PACS, auditing, data mining, databases, information management, medical informatics applications, quality control, quality assurance

Background

Gathering audit data from a picture archive and communications system (PACS) can provide numerous benefits to an organization. Although one might typically think of computer audit data in terms of security or application events,1 there are many other events whose information we can mine. With medical data, we need to capture information on who merged a patient or examination, who marked an examination as dictated, and when an examination was moved to the archive. We also need to capture non-change events such as who viewed an examination as specified by the Health Insurance Portability and Accountability Act (HIPAA)2 in 2004. This act required organizations to audit the access and use of their protected health information (PHI). Most PACS vendors began providing audit data around that time, but many sites remain unaware of its full potential.

This data can be stored and presented in many different ways. In this case, the data are stored in a stand-alone database and reporting system, although a web interface could be created to provide more ubiquitous access. Today, the Holy Grail of reports is often a “Digital Dashboard”3–5 that can provide deidentified data for trending and workflow analysis. In this project, the data were used to examine the radiology department's need to provide the proper diagnosis as quickly as possible. Process improvement (PI) can be supported in many areas as described in the Discussion, and one of them became an obvious concern.

The diagnostic workflow process can be broadly broken down into three categories: image acquisition, image verification, and image interpretation. The image verification step generally occurs after the examinations are sent from the modality and arrive on the PACS. At this point, the images are reviewed by the radiographer on a PACS display, and presentation manipulations and retake decisions are made. When appropriate, the examination is verified and made available to the radiologist for reading. The concern observed by the radiology administrator and radiographer supervisors was the time taken to move from arrived to verified. The problem appeared consistently, with clinicians asking for a report on an examination that had not yet appeared in the radiologist’s worklist. A PI goal was made to bring the percentage of examinations taking longer than 1 h to verify to less than 10% of the total.

Although most current radiology information systems (RIS) can provide procedure time data, they cannot provide data on when the examination arrived on the PACS and was verified unless the RIS and PACS are tightly integrated. Many institutions today have separate RIS and PACS and must look to the PACS to get this data. Traditionally, there has not been an easy way to measure this arrived-to-verified time, so an in-house application for reading PACS audit logs was used to support the PI goal described here.

Materials and Methods

Two radiology imaging departments at LSU Health Science Center-Shreveport were used for this study: computed radiography (CR)/direct radiography (DR) (General Radiology) and CT/MR. General Radiology performed around 9,000 examinations per month, and there were about 2,000 computed tomography (CT) and 500 magnetic resonance (MR) examinations each month. The actual times to verify an examination after it arrived on PACS were gathered using the in-house PACS Auditor application. This was done over a period of 20 months from January 2007 through August 2008 for the CR/DR data. The CT/MR data were collected over 16 months from January 2008 through April 2009. The number of examinations that took longer than 1 h to verify was accumulated for each period, and the percentage of these examinations was calculated.
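The monthly percentage and its comparison with the PI goal can be sketched as follows. This is a minimal illustration rather than the PACS Auditor's own code: the function name and the `GOAL_PERCENT` constant are assumptions, and the two sample rows are the "All" CR/DR totals reported in Table 1 for January 2007 and August 2008.

```python
# PI goal stated in the Background: fewer than 10% of examinations over 1 h.
GOAL_PERCENT = 10.0


def percent_over_limit(total, over_limit):
    """Percentage of examinations whose arrived-to-verified time exceeded the limit."""
    return round(100.0 * over_limit / total, 2)


# (total examinations, examinations taking > 1 h to verify), taken from Table 1.
monthly = {"01/07": (8727, 1716), "08/08": (8055, 291)}

for period, (total, over) in monthly.items():
    pct = percent_over_limit(total, over)
    status = "meets goal" if pct < GOAL_PERCENT else "above goal"
    print(period, pct, status)
# 01/07 19.66 above goal
# 08/08 3.61 meets goal
```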

The PACS Audit Loader program was developed in Microsoft Visual Studio .NET 2005, and Microsoft Access 2003/2007 was used for the PACS Auditor database. The project runs on a Dell Dimensions 9200 computer with a dual-core 2.66-GHz Intel Pentium processor, 2 GB of RAM, and a 320-GB RAID 1 (mirrored) hard drive array. The GE PACS software version is Centricity 2.0 CSR1 SP5.

The data studied are provided by the PACS from the event notification manager (ENM) table. It includes the Examination Change (EC) event type with corresponding status values to indicate ordered, arrived, verified, dictated, completed, canceled, and reference only. The combination of an EC event with a particular status value then indicates a step in the workflow process of the radiology department. These steps/events, each with their own corresponding record(s) and data fields, are extracted along with many other events to an XML file. By looking at the EC event record, its status, and its date/time stamp, the time span can be determined between any of the steps in the workflow process. The application includes the discrete spans “ordered to arrived” (OA), “arrived to verified” (AV), “verified to dictated” (VD), and “dictated to completed” (DC), as well as the total “ordered to completed” (OC) and “arrived to completed” (AC) spans. The specific parameter measured in this study was the AV time span.
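The span calculation described above can be sketched in a few lines. This is an illustration under stated assumptions, not the PACS Auditor implementation: the `(status, timestamp)` record layout, the `compute_spans` name, and the choice of the earliest timestamp when a status repeats are all hypothetical. The span codes and status names are those listed in the text.

```python
from datetime import datetime, timedelta

# Span code -> (starting status, ending status), as named in the text.
SPANS = {
    "OA": ("ordered", "arrived"),
    "AV": ("arrived", "verified"),
    "VD": ("verified", "dictated"),
    "DC": ("dictated", "completed"),
    "OC": ("ordered", "completed"),
    "AC": ("arrived", "completed"),
}


def compute_spans(events):
    """Return {span code: timedelta} for one examination.

    events: iterable of (status, timestamp) pairs taken from EC records.
    A span is produced only when both of its endpoint events exist.
    Assumption: if a status occurs more than once, the earliest stamp is used.
    """
    first_seen = {}
    for status, stamp in events:
        if status not in first_seen or stamp < first_seen[status]:
            first_seen[status] = stamp
    spans = {}
    for code, (start, end) in SPANS.items():
        if start in first_seen and end in first_seen:
            spans[code] = first_seen[end] - first_seen[start]
    return spans


# Illustrative examination with no dictated or completed event yet.
exam_events = [
    ("ordered", datetime(2008, 3, 3, 8, 0)),
    ("arrived", datetime(2008, 3, 3, 9, 10)),
    ("verified", datetime(2008, 3, 3, 10, 25)),
]
exam_spans = compute_spans(exam_events)
print(exam_spans["AV"])                       # 1:15:00
print(exam_spans["AV"] > timedelta(hours=1))  # True
print(sorted(exam_spans))                     # ['AV', 'OA']
```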

The XML file was created daily on the PACS and exported to the Dell computer. An Imaging Informatics Professional gathered the XML files containing the audit information from the PACS server. These files were then run through the PACS Audit Loader program to verify and import the data into the PACS Auditor database.
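A loader of this kind might look like the sketch below. The actual export schema and the checks performed by the PACS Audit Loader are not described in the text, so the element name `event`, every attribute name, and the rule of rejecting records with a missing field are assumptions made purely for illustration.

```python
import xml.etree.ElementTree as ET
from datetime import datetime

# Hypothetical export layout; the real PACS XML schema is not given in the text.
SAMPLE_XML = """<events>
  <event type="EC" accession="A100" status="arrived" user="User01" time="2008-03-03T09:10:00"/>
  <event type="EC" accession="A100" status="verified" user="User02" time="2008-03-03T10:25:00"/>
  <event type="LOGON" user="User02" time="2008-03-03T07:55:00"/>
  <event type="EC" accession="A101" status="arrived" user="User01"/>
</events>"""

# Fields an EC record must carry before it is imported (assumed rule).
REQUIRED = ("accession", "status", "user", "time")


def load_ec_events(xml_text):
    """Return (rows, rejected): validated EC rows and a count of incomplete ones."""
    rows, rejected = [], 0
    for node in ET.fromstring(xml_text).iter("event"):
        if node.get("type") != "EC":
            continue  # other event types are outside this sketch
        if any(node.get(name) is None for name in REQUIRED):
            rejected += 1  # incomplete record: fails verification, not imported
            continue
        rows.append({
            "accession": node.get("accession"),
            "status": node.get("status"),
            "user": node.get("user"),
            "time": datetime.fromisoformat(node.get("time")),
        })
    return rows, rejected


ec_rows, rejected_count = load_ec_events(SAMPLE_XML)
print(len(ec_rows), rejected_count)  # 2 1
```

In practice the validated rows would be written to the audit database rather than kept in a list.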

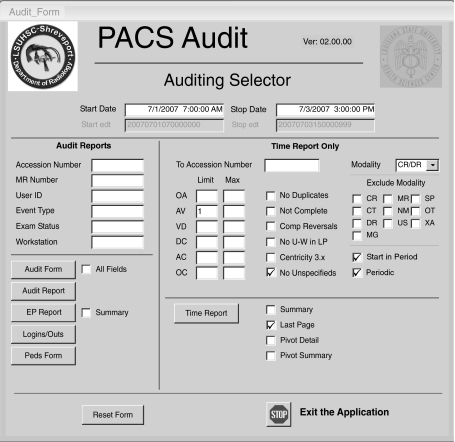

The PACS Auditor was a tool created in 2004 to enable HIPAA-related audits and quick lookups regarding who verified or dictated an examination.6 This first version looked at static events and displayed related data and could list all the events for an examination, patient, or user. Any time span values had to be calculated by hand and could not be used effectively for the number of examinations being reviewed here. In October of 2007, a set of Time Reports shown in Figure 1 was added to the PACS Auditor which allowed the mining of the time span data such as arrived to verified.7

Fig. 1. PACS Auditor report generation screen.

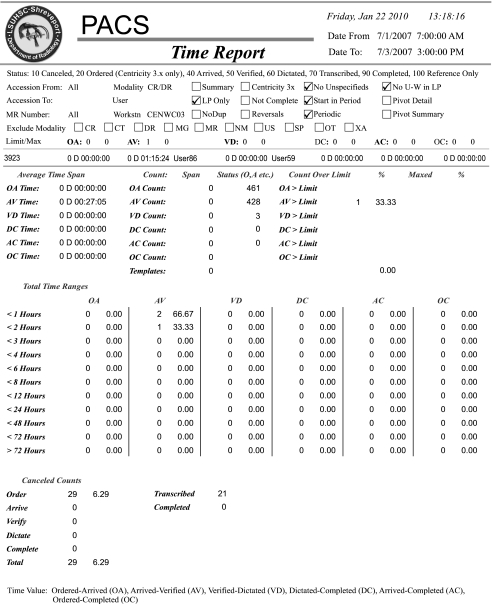

Reports could now be generated that could show aggregate times for all the spans and could break the reports down by date range, modality, and even shift. The reports also allowed the selection of a time limit for each of the spans so that any examinations over the limit could be displayed and counted. By setting the time limit for the arrived to verified span to 1 h, the number of examinations over this limit could easily be retrieved. Figure 2 shows a narrow selection of data that includes one > 1 h AV span that can be used to describe the report.

Fig. 2. Time Span report showing aggregate display as well as one specific examination exceeding the limit criteria.

The report header shows when the report was run along with all the particular parameters selected for that report. Detail reports can be selected, but with typically thousands of records, it becomes less meaningful unless the range is very narrow. Aggregate data becomes more reliable when periods greater than 2 weeks are selected as outlier data skew will be minimized. The reports also have the ability to set a maximum value for a span. This causes any potential outlier data to be eliminated from the counts and averages. The user can select the Last Page check box to create a one-page report showing only aggregate data. The main body of the report shows the aggregate information derived from the selected data. This includes average times for each of the spans described above. It shows the total span count and also counts over any limit value applied. Each time span's data are shown on a separate line in the report.

Below that, the time spans are broken down into incremental ranges (< 1 h, < 2 h, and so on up to and over 72 h). This breakout capability provides a very good trend analysis for the department's workflow. By viewing the counts and percentages for each time span, bottlenecks and inefficiencies can be detected and acted upon. The report provides the ability to go from this aggregate data and drill down to the specific examinations that are causing time lags to occur.
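The aggregate portion of the report (count, average, over-limit count, maximum-value filter, and range breakout) can be approximated as below. This is a sketch under assumptions: the `summarize` function, its parameter names, and the range edges are hypothetical, since the text only states that the ranges run from < 1 h up to and over 72 h. Each span is counted in the first range it fits, so the ranges are not cumulative.

```python
from datetime import timedelta


def summarize(spans, limit_hours=1, max_hours=None, edges=(1, 2, 4, 8, 24, 72)):
    """Aggregate one span type (for example AV) given a list of timedeltas.

    limit_hours: spans longer than this are counted as over the limit.
    max_hours:   if set, longer spans are treated as outliers and dropped.
    edges:       assumed upper bounds, in hours, of the range breakout.
    """
    if max_hours is not None:
        spans = [s for s in spans if s <= timedelta(hours=max_hours)]
    count = len(spans)
    over = sum(1 for s in spans if s > timedelta(hours=limit_hours))
    average = sum(spans, timedelta()) / count if count else None

    last_label = f">= {edges[-1]} h"
    ranges = {f"< {e} h": 0 for e in edges}
    ranges[last_label] = 0
    for s in spans:
        hours = s.total_seconds() / 3600
        for e in edges:
            if hours < e:
                ranges[f"< {e} h"] += 1
                break
        else:  # no edge was large enough
            ranges[last_label] += 1

    return {
        "count": count,
        "over_limit": over,
        "percent_over": round(100.0 * over / count, 2) if count else 0.0,
        "average": average,
        "ranges": ranges,
    }


# Illustrative values only; they are not taken from the study data.
av_spans = [timedelta(minutes=20), timedelta(minutes=30), timedelta(minutes=75)]
report = summarize(av_spans, limit_hours=1)
print(report["count"], report["over_limit"], report["percent_over"])  # 3 1 33.33
print(report["average"])                                               # 0:41:40
print(report["ranges"]["< 1 h"], report["ranges"]["< 2 h"])            # 2 1
```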

The one examination that took more than an hour to verify is displayed on a line above the aggregate data and below the header information starting with the accession number 3923. This detail line shows the value of each time span available for that examination, as well as the user that verified and dictated the examination. This information can be presented to the radiographer to support the PI goals. Time spans can only be calculated if there is an event for both sides of the span, for example, an arrived event and a verified event. Since we know the date and time for each event record, we can calculate the time span between them. The line indicates the AV span took 1:15 and was verified by User86.

Below that, the AV aggregate line shows an average time span of 27:05 min for a count of three spans, with one span being over limit (33.33%). Looking at the Total Time Ranges breakout under the AV column below, two examinations are counted in the < 1-h line and one in the < 2-h line. In a typical month, there could be multiple examinations over the limit. In the report runs supporting this project, there were typically one or more pages of individual examinations listed before the last page provided the aggregate data. There would normally be counts and averages for all spans, and span limits and maximums can be set for any integer hour value. Canceled counts are also displayed at the bottom, in the time span in which they occurred.

Results

Table 1 shows the CR/DR counts and percentages by period (left column) starting with January of 2007 at the bottom and coming up to August of 2008. The columns show the total procedure counts, the number taking longer than 1 h to verify, and the percentage of the total. The data for all CR/DR areas are shown in the first three columns, and the next two sets simply show a breakout of the ER and 2nd floor General Radiology sections. There are also three other sections not displayed. The percentages start as high as 26% and drop to under 5%.

Table 1. CR/DR Total, ER and General Radiology Counts, and Percent Greater than 1 h

| Period | CR/DR All: Total | CR/DR All: > 1 h | CR/DR All: Percent | ER (CEN051): Total | ER (CEN051): > 1 h | ER (CEN051): Percent | 2nd Floor (CEN050): Total | 2nd Floor (CEN050): > 1 h | 2nd Floor (CEN050): Percent |
|---|---|---|---|---|---|---|---|---|---|
| 08/08 | 8,055 | 291 | 3.61 | 4,417 | 211 | 4.78 | 1,485 | 77 | 5.19 |
| 07/08 | 8,220 | 239 | 2.91 | 4,329 | 128 | 2.96 | 1,392 | 79 | 5.68 |
| 06/08 | 8,216 | 273 | 3.32 | 4,505 | 181 | 4.02 | 1,355 | 66 | 4.87 |
| 05/08 | 8,258 | 317 | 3.84 | 4,591 | 196 | 4.31 | 1,375 | 90 | 6.55 |
| 04/08 | 8,207 | 202 | 2.46 | 4,297 | 96 | 2.23 | 1,549 | 86 | 5.55 |
| 03/08 | 7,567 | 190 | 2.51 | 4,270 | 118 | 2.76 | 1,209 | 45 | 3.72 |
| 02/08 | 8,282 | 254 | 3.07 | 4,338 | 136 | 3.14 | 1,461 | 91 | 6.23 |
| 01/08 | 8,720 | 577 | 6.62 | 4,713 | 354 | 7.51 | 1,497 | 140 | 9.35 |
| 12/07 | 8,103 | 993 | 12.25 | 4,830 | 558 | 11.55 | 1,303 | 290 | 22.26 |
| 11/07 | 8,772 | 1,195 | 13.62 | 4,887 | 755 | 15.45 | 1,694 | 278 | 16.41 |
| 10/07 | 9,141 | 1,414 | 15.47 | 4,843 | 794 | 16.39 | 1,709 | 341 | 19.95 |
| 09/07 | 8,659 | 1,429 | 16.5 | 4,905 | 876 | 17.86 | 1,512 | 251 | 16.6 |
| 08/07 | 8,920 | 1,275 | 14.29 | 4,583 | 868 | 18.94 | 1,733 | 238 | 13.73 |
| 07/07 | 8,696 | 1,539 | 17.7 | 4,611 | 997 | 21.62 | 1,648 | 352 | 21.36 |
| 06/07 | 8,560 | 1,713 | 20.01 | 4,646 | 1,006 | 21.65 | 1,628 | 296 | 18.3 |
| 05/07 | 9,415 | 1,798 | 19.1 | 4,908 | 1,071 | 21.82 | 1,829 | 299 | 16.35 |
| 04/07 | 8,657 | 1,645 | 19 | 4,722 | 1,021 | 21.62 | 1,746 | 304 | 17.41 |
| 03/07 | 9,244 | 2,032 | 21.98 | 4,932 | 1,320 | 26.76 | 1,843 | 423 | 22.95 |
| 02/07 | 8,290 | 1,658 | 20 | 4,552 | 942 | 20.69 | 1,896 | 368 | 19.41 |
| 01/07 | 8,727 | 1,716 | 19.66 | 4,855 | 1,134 | 23.36 | 1,569 | 237 | 15.11 |

Table 2 shows the CT/MR counts and percentages starting in January of 2008 at the bottom and coming up to April of 2009. The columns depict the same data as described above for each section. The percentages start as high as 59% and drop to under 10%.

Table 2. CT/MR Counts and Percent Greater than 1 h

| Period | CT (Hospital): Total | CT (Hospital): > 1 h | CT (Hospital): Percent | MR (Hospital): Total | MR (Hospital): > 1 h | MR (Hospital): Percent |
|---|---|---|---|---|---|---|
| 04/09 | 1,935 | 134 | 6.93 | 535 | 35 | 6.54 |
| 03/09 | 2,055 | 88 | 4.28 | 560 | 27 | 4.82 |
| 02/09 | 1,992 | 82 | 4.12 | 462 | 36 | 7.79 |
| 01/09 | 1,846 | 93 | 5.04 | 507 | 66 | 13.02 |
| 12/08 | 1,934 | 128 | 6.62 | 528 | 127 | 24.05 |
| 11/08 | 1,883 | 211 | 11.21 | 463 | 79 | 17.44 |
| 10/08 | 2,148 | 271 | 12.62 | 514 | 92 | 17.9 |
| 09/08 | 2,168 | 197 | 9.09 | 543 | 107 | 19.71 |
| 08/08 | 2,215 | 333 | 15.03 | 581 | 185 | 31.84 |
| 07/08 | 2,154 | 1,196 | 55.52 | 547 | 257 | 46.98 |
| 06/08 | 2,217 | 1,199 | 54.08 | 517 | 304 | 58.8 |
| 05/08 | 2,084 | 1,072 | 51.44 | 505 | 273 | 54.06 |
| 04/08 | 1,967 | 1,119 | 56.89 | 515 | 270 | 52.43 |
| 03/08 | 1,934 | 1,076 | 55.64 | 464 | 250 | 55.07 |
| 02/08 | 1,857 | 1,112 | 59.88 | 431 | 221 | 51.28 |
| 01/08 | 2,040 | 1,204 | 59.02 | 541 | 281 | 51.94 |

Discussion

The radiographer staff was informed of the PI goal and that audits would be done monthly. These reports were produced at the end of each month and provided the ability to show the AV time as well as who verified the examination. The data was then reviewed with the radiographer staff to drive the PI workflow and provide an impetus for change.

A review of the data quickly shows the strong improvements this process enabled. Prior to the start of this process, the percentage of examinations taking over 1 h ran between 15% and 30% in CR/DR and up to 60% in CT/MR. These figures reflect the earlier lack of a mechanism to measure these times. In the first several months of using the PI process for CR/DR, October through January, the number decreased rapidly and reached a fairly stable plateau of less than 5% that continues today. The CT/MR process shows marked improvement as well, and both departments were successful in reaching the PI goal. The benefits of an easy-to-use and consistent mechanism for tracking these times, as provided by the Auditor database, are evident. The Process Improvement plan will be rolled out to all the departmental areas to help ensure that they can benefit from the process.

Audit record counts can easily be in the tens of thousands per day. Although Access provides a solid venue for creating forms and reports, the data would benefit from being stored in a server-based SQL environment. A web interface for this data would make it more easily available to other users, but being PHI, this would need to be controlled. The use of an Access front-end database that includes the forms and reports separate from the back-end database that includes the data is standard practice. This allows the front end to be provided to appropriate users, and access to the back-end data can be controlled by institutional policies.

There may be many factors that influence what would be considered an appropriate AV time, but this project used 1 h for its measurement. Note that any of the time spans can have limits set in the same way, and there are many other uses for the audit data. The audit data for an examination has been correlated to image reject data to provide monthly counts. Reports showing procedure counts for ACGME reporting were created by connecting the audit data to the in-house PACS database. The radiology department decided to create American College of Radiology (ACR) section groupings so that this type of data could be analyzed. By tying the audit data to these groupings, the report could easily display counts by procedure for the appropriate ACR section. Another application was to determine which procedures were done on pediatric patients in response to the Image Gently campaign.8 A query was designed to calculate age at the time of the examination by comparing the patient's date of birth to the event date and provide a result set of patients 18 years and younger for CR/DR examinations. This result set was exported to another database designed specifically for the radiology department to monitor proper exposure, collimation, and positioning. This allowed the department to view, validate, and audit a percentage of the examinations done and report on issues and resolutions as part of its PI processes. Work is currently underway on providing anonymized information for queries done by researchers and others with no need to have access to the PHI. A report to show workstation utilization by looking at logon/logoff times is also in progress.
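The pediatric query described above rests on a simple age-at-examination calculation, sketched here. The function names, the record layout, and the sample dates are hypothetical; only the rule itself (compare date of birth with the event date and keep patients 18 years and younger) comes from the text.

```python
from datetime import date


def age_at_exam(date_of_birth, exam_date):
    """Whole years of age on the examination date."""
    years = exam_date.year - date_of_birth.year
    # Subtract one year if the birthday has not yet occurred in the exam year.
    if (exam_date.month, exam_date.day) < (date_of_birth.month, date_of_birth.day):
        years -= 1
    return years


def pediatric_exams(exams, max_age=18):
    """Keep examinations whose patient was max_age years old or younger."""
    return [e for e in exams if age_at_exam(e["dob"], e["exam_date"]) <= max_age]


# Illustrative records only; accession values and dates are made up.
sample_exams = [
    {"accession": "A200", "dob": date(1990, 6, 15), "exam_date": date(2008, 6, 14)},
    {"accession": "A201", "dob": date(1989, 6, 15), "exam_date": date(2008, 6, 15)},
    {"accession": "A202", "dob": date(2001, 1, 2), "exam_date": date(2008, 3, 3)},
]
selected = pediatric_exams(sample_exams)
print([e["accession"] for e in selected])  # ['A200', 'A202']
```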

The ability to extract and use audit data, particularly in conjunction with other processes, will continue to be valuable. Whether this data is obtained through a tightly integrated RIS/PACS system or individually from a PACS database, it can be used to support many different clinical goals.

References

- 1. NIST Computer Security Response Center, “Audit Trails”, http://csrc.nist.gov/publications/nistbul/itl97-03.txt Accessed 14 June 2009.

- 2. HIPAA, http://www.cms.hhs.gov/HIPAAGenInfo/, 45 CFR Parts 160, 162 and 164, ‘Health Insurance Reform: Security Standards; Final Rule’, 02/20/2003 Accessed 12 January 2006.

- 3. Morgan MB, Branstetter BF, Lionetti D, Chang PJ. The radiology digital dashboard: effects on report turnaround time. J Digit Imaging. 2008;21:1:50–58. doi: 10.1007/s10278-007-9008-9.

- 4. Stone G, Lekht A, Burris N, Williams C. Data collection and communications in the public health response to a disaster: rapid population estimate surveys and the Daily Dashboard in post-Katrina New Orleans. J Public Health Manag Pract. 2007;13:5:453–460. doi: 10.1097/01.PHH.0000285196.16308.7d.

- 5. Minnigh TR, Gallet J. Maintaining quality control using a radiological digital X-ray dashboard. J Digit Imaging. 2009;22:1:84–88. doi: 10.1007/s10278-007-9098-4.

- 6. Gregg B, D’Agostino HD, Gonzalez Toledo EC. Creating an IHE ATNA based audit repository. J Digit Imaging. 2006;19:4:307–315. doi: 10.1007/s10278-006-0927-7.

- 7. Gregg B. Maximizing the power of centricity PACS audit data. Presentation at GE User Summit, August 20, 2008, Washington, DC.

- 8. Amis ES, Butler PF, Applegate KE, Birnbaum SB, Brateman LF, Hevezi JM, Mettler FA, Morin RL, Pentecost MJ, Smith GG, Strauss KJ, Zeman RK. American College of Radiology white paper on radiation dose in medicine. J Am Coll Radiol. 2007;4:272–284. doi: 10.1016/j.jacr.2007.03.002.