Abstract

We conducted three experiments to reproduce and extend Perone and Courtney's (1992) study of pausing at the beginning of fixed-ratio schedules. In a multiple schedule with unequal amounts of food across two components, they found that pigeons paused longest in the component associated with the smaller amount of food (the lean component), but only when it was preceded by the rich component. In our studies, adults with mild intellectual disabilities responded on a touch-sensitive computer monitor to produce money. In Experiment 1, the multiple-schedule components differed in both response requirement and reinforcer magnitude (i.e., the rich component required fewer responses and produced more money than the lean component). Effects shown with pigeons were reproduced in all 7 participants. In Experiment 2, we removed the stimuli that signaled the two schedule components, and participants' extended pausing was eliminated. In Experiment 3, to assess sensitivity to reinforcer magnitude versus fixed-ratio size, we presented conditions with equal ratio sizes but disparate magnitudes and conditions with equal magnitudes but disparate ratio sizes. Sensitivity to these manipulations was idiosyncratic. The present experiments obtained schedule control in verbally competent human participants and, despite procedural differences, we reproduced findings with animal participants. We showed that pausing is jointly determined by past conditions of reinforcement and stimuli correlated with upcoming conditions.

Keywords: postreinforcement pause, fixed-ratio schedules, multiple schedules, stimulus control, incentive shift, screen touch, humans

When operant behavior produces a reinforcer, a pause in responding often extends beyond the period required to consume the reinforcer. There is reason to believe that pausing is “an almost universal phenomenon,” as Priddle-Higson, Lowe, and Harzem (1976, p. 347) put it. Pausing occurs under all simple schedules of reinforcement, including those in which reinforcement is contingent on the number of responses (whether fixed or variable) and those in which reinforcement is contingent on a single response emitted after a fixed or variable period (see reviews by Harzem & Harzem, 1981; Harzem, Lowe, & Priddle-Higson, 1978; Schlinger, Blakely, & Kaczor, 1990; Shull, 1979; Zeiler, 1977).

Two explanations of pausing have received attention. One holds that pausing represents anticipation of the response effort or time required to obtain the next reinforcer. This account predicts that increasing the frequency or magnitude of reinforcement should reduce pausing. Supporting data have come from research with fixed-ratio (FR), fixed-interval (FI), and variable-ratio (VR) schedules (e.g., Blakely & Schlinger, 1988; Crossman, 1971; Felton & Lyon, 1966; Griffiths & Thompson, 1973; Inman & Cheney, 1974; Killeen, 1969; Lowe & Harzem, 1977; Powell, 1968, 1969; Rider & Kametani, 1984; Schlinger et al., 1990).

A second account points to control by the past reinforcer. Harzem and Harzem (1981) proposed that pausing is an unconditioned inhibitory aftereffect of reinforcement, an effect that increases with the magnitude of the reinforcer (also see Staddon, 1974). In contrast to an anticipatory process, this view predicts that pausing should be longer after large reinforcers than after small ones. Again, considerable support is available from research with both ratio and interval schedules (e.g., Davey, Harzem, & Lowe, 1975; Harzem, Lowe, & Davey, 1975; Hatten & Shull, 1983; Jensen & Fallon, 1973; Lowe, Davey, & Harzem, 1974; Priddle-Higson et al., 1976; Staddon, 1970).

Perone, Perone, and Baron (1987) suggested that the contradictory results arose from unappreciated procedural differences that affected the degree to which excitatory and inhibitory factors could contribute to experimental outcomes. The critical variable, they argued, is whether the procedure allowed stimulus control of the behaviors leading to the different reinforcer magnitudes. Perone and Courtney (1992) tested this idea. Pigeons gained access to grain by pecking a key under an FR 80 schedule. Within each session, half of the ratios ended with a small reinforcer (1-s or 1.5-s access to grain, depending on the pigeon). These were the lean components. The other half of the ratios—the rich components—ended with a large reinforcer (4.5 s to 7 s). The rich and lean components were presented quasirandomly, yielding four types of transition: lean-to-lean, lean-to-rich, rich-to-rich, and rich-to-lean. There were two phases. In the mixed-schedule phase, the response key was lit white throughout the session. In the multiple-schedule phase, the rich and lean components were accompanied by different key colors.

In the mixed schedule, pauses generally were short (less than 5 s), but they were longer after the large reinforcers. Under the multiple schedule, pauses were longer when the key color signaled that the upcoming reinforcer would be small. Importantly, the effect of signaling the upcoming reinforcer depended on whether the past reinforcer was large or small, with relatively brief pauses after small reinforcers and long pauses (averaging about 35 s) after large reinforcers. Thus, when discriminative stimuli were absent, only the past reinforcer influenced pausing. When stimuli were present, both the past reinforcer and the upcoming reinforcer influenced pausing.

The basic finding, relatively extended pausing when the transition from a rich component to a lean component is signaled, appears to have some generality. It has been obtained with different species (rats and monkeys in addition to pigeons), different reinforcers (condensed milk, food pellets, and cocaine in addition to grain), and different methods of differentiating the rich and lean schedules (low- and high-effort response force requirements in addition to high and low reinforcer magnitudes; Baron, Mikorski, & Schlund, 1992; Galuska, Wade-Galuska, Woods, & Winger, 2007; Wade-Galuska, Perone, & Wirth, 2005). Together, these studies provide strong evidence for Perone et al.'s (1987) hypothesis that pausing is jointly controlled by two factors that exert their effects at the interface defined by the end of one schedule component and the start of the next. Translated to simple FR schedules, pausing is under joint control of the just-obtained reinforcer and the discriminable work or delay requirement for the next reinforcer.

The present series of experiments investigated such joint control of pausing in human participants. Although the pause–respond pattern is a common outcome when laboratory animals are trained under FR schedules, this outcome typically has not been reproduced in humans (e.g., Buskist, Bennett, & Miller, 1981; Lowe, 1978; Lowe, Harzem, & Bagshaw, 1978; Lowe, Harzem, & Hughes, 1978; Matthews, Shimoff, Catania, & Sagvolden, 1977). The absence of pausing in human participants may be attributable to procedural differences across species: The response requirements and reinforcer magnitudes in the typical human experiment may not be functionally equivalent to those in the typical animal experiment. An illuminating exception is Miller's (1968) experiment in which classic pause–respond patterns were obtained. Both the response and the reinforcer were unconventional, however. Loud vocal utterances (90 dB–102 dB depending on the participant) were negatively reinforced according to an FR schedule by momentarily reducing the manual force (from 10 lb to 1 lb) required to pull a plunger on a concurrent schedule of positive reinforcement. Pauses in vocal responding were directly related to the size of the FR schedule, a common finding in animal research (e.g., Powell, 1968).

The approach taken here was admittedly and unabashedly exploratory. We began with the fundamental assumption that the process identified by Perone and Courtney (1992) is general, and then sought to discover the operational definitions of “rich” and “lean” schedule conditions that would suffice to generate relatively long pausing in the rich-to-lean transition to which our human participants were exposed.

GENERAL METHOD

Participants

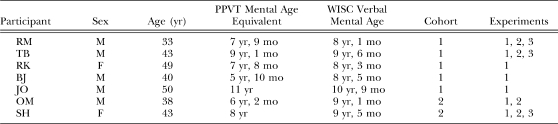

Seven verbally competent adults with mild intellectual disabilities participated. None was receiving psychotropic medications. The participants were recruited from among individuals who expressed interest in response to inquiries made in supervised residential facilities. Table 1 shows each participant's sex, biological age, mental-age scores on the Peabody Picture Vocabulary Test (PPVT), which is a standardized test of receptive language skills, and the verbal subscale of the Wechsler Intelligence Scale for Children, which assesses both receptive and expressive language skills. Although the participants were 33 to 50 years old, their test scores, which ranged from mental ages of 6 to 11 years on the PPVT and 8 to 11 years on the Wechsler, indicated that their verbal skills matched those of school-age children.

Table 1.

Participant characteristics: Sex, age, mental-age score on the Peabody Picture Vocabulary Test (PPVT Mental Age), and score on the Verbal subscale of the Wechsler Intelligence Scale for Children (WISC Verbal Score converted to mental age). Also shown are each participant's experimental cohort and the experiments in which he or she participated.

Apparatus

Each participant sat in a small, sound-attenuated room facing a 38-cm (15-in diagonal) color touch-screen monitor that was recessed into a wooden partition. Session events were presented, and responses recorded, by a computer located behind the partition. A Gerbrands belt feeder, also behind the partition, dispensed coins into a Plexiglas cup that protruded through a hole in the partition to the lower right side of the monitor. The operanda (here designated as “keys”) were 5-cm squares that could be presented in any of nine separate positions within a symmetrical 3 × 3 arrangement on the screen. Visual stimuli could be presented in each square. Auditory stimuli were presented through external speakers. Sessions were monitored through a one-way window. Sessions were controlled by a Macintosh® computer running the MTS software provided by William Dube (Dube, 1991).

Procedure

Sessions were conducted on most weekdays. Each session included 41 components arranged in a multiple (mult) schedule, with at least 20 rich and 20 lean components. The components changed semirandomly following each reinforcer delivery, producing 10 instances of each type of transition between components: lean-to-lean, lean-to-rich, rich-to-rich, and rich-to-lean.
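The session structure described above can be illustrated with a short sketch. The text does not say how the semirandom sequences were actually generated, so the approach below is only one possibility, and the names `random_composition`, `make_session`, and `count_transitions` are hypothetical. It relies on the observation that a 41-component sequence with exactly 10 transitions of each type must begin and end with the same component type, which then occupies 21 components in 11 runs while the other type occupies 20 components in 10 runs.

```python
import random


def random_composition(total, parts, rng):
    """Split `total` into `parts` positive integers, chosen uniformly at random."""
    cuts = sorted(rng.sample(range(1, total), parts - 1))
    bounds = [0] + cuts + [total]
    return [b - a for a, b in zip(bounds, bounds[1:])]


def make_session(rng=None, per_transition=10):
    """Return a list of 'rich'/'lean' labels with `per_transition` of each transition type.

    Assumption: this is an illustrative generator, not the procedure used in the study.
    """
    rng = rng or random.Random()
    first, second = rng.sample(["rich", "lean"], 2)
    # The opening type also closes the session, so it has one extra run.
    first_runs = random_composition(2 * per_transition + 1, per_transition + 1, rng)
    second_runs = random_composition(2 * per_transition, per_transition, rng)
    sequence = []
    for i, run_length in enumerate(first_runs):
        sequence += [first] * run_length
        if i < len(second_runs):
            sequence += [second] * second_runs[i]
    return sequence


def count_transitions(sequence):
    """Count each 'previous-to-next' transition type in a component sequence."""
    counts = {}
    for prev, nxt in zip(sequence, sequence[1:]):
        key = f"{prev}-to-{nxt}"
        counts[key] = counts.get(key, 0) + 1
    return counts
```

Calling `count_transitions(make_session())` should report 10 instances of each of the four transition types, with 21 components of one type and 20 of the other.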

Each component began with the presentation of two keys, one positioned in the left third and one in the right third of the screen. Except as noted for Participants SH and OM in Experiment 1 and all participants in Experiment 2, each schedule component was associated with a different color—red or yellow—counterbalanced across participants. To promote rapid development of discriminative control by the component stimuli, the procedure incorporated differential responding to the key colors: When the lean schedule was signaled (e.g., by yellow keys), only touches of the left key counted towards the FR requirement. When the rich schedule was signaled (e.g., by red keys), touching only the right key counted. Touching the “incorrect” key produced a tone and a screen blackout for 1 s. Each discrete touch to the “correct” key produced a 0.1-s “click” sound and disappearance of the keys. When the keys reappeared, their vertical positions were switched randomly between the top third and the bottom third of the display (i.e., both keys were either on the top or bottom). This was to ensure continued attention to the stimuli throughout the FR requirement.

Upon completion of the requirement, the screen went black, and the reinforcement operation commenced. In the rich component, a 2-s series of three tones was presented, and in the lean component, a single 2-s tone was presented. The reinforcers that followed the tones were coins (nickels, dimes, or quarters) or points exchangeable for money. Individual coins were dispensed into the plastic cup. Point delivery involved the advancement of a counter located in the top center of the screen; participants were told that, at the end of the session, they would receive a penny for each point. After the session, the participants could retain the cash they earned or use all or part of it to purchase snacks, including soft drinks, or nonedible items from a “store” in the laboratory.

Sessions were terminated if the participant had not completed all 41 components after 2 hr, if the participant became upset or refused to continue, or if the participant engaged in self-injury or other severe problem behaviors. Participants refused to continue in approximately 5% of the sessions; the data from incomplete sessions were not included in the analysis. Participant RM frequently bit his arms but did not produce tissue damage, and his sessions were not ended.

Experimental conditions remained in effect for a minimum of 10 sessions and until there was no visible trend in mean pause durations over the last five sessions.
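The stability criterion was applied by visual inspection, so any code version is necessarily an approximation. The sketch below is a hypothetical numerical proxy, not the rule used in the study: it treats a strictly rising or strictly falling run of session means across the last five sessions as a "trend." The function name `is_stable` and that definition of trend are assumptions made for illustration.

```python
def is_stable(session_means, min_sessions=10, window=5):
    """Hypothetical proxy for the stability criterion.

    `session_means` holds one mean pause duration (s) per session, in order.
    Assumption: a 'visible trend' is approximated as a strictly monotonic
    change across the last `window` sessions; the study used visual judgment.
    """
    if len(session_means) < max(min_sessions, window):
        return False
    last = session_means[-window:]
    diffs = [b - a for a, b in zip(last, last[1:])]
    rising = all(d > 0 for d in diffs)
    falling = all(d < 0 for d in diffs)
    return not (rising or falling)
```

A stricter proxy could, for example, also bound the slope of a fitted line, but the source gives no numerical threshold, so none is assumed here.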

EXPERIMENT 1

Our initial effort sought to identify experimental conditions sufficient to observe control of pausing by the joint action of past and upcoming conditions of reinforcement as reported by Perone and Courtney (1992) but in verbally competent adult humans. Eventually, each participant was exposed to a terminal condition in which schedule components differed in both FR size and reinforcer magnitude. In this double disparity procedure, the rich component consisted of a short FR leading to a large reinforcer, and the lean component consisted of a long FR leading to a small reinforcer. The participants fell into two cohorts, however, depending on whether the initial training procedure incorporated the previously described differential response to the key colors to ensure discriminative control. For ease of exposition, a combined Method and Results section will be presented for each cohort.

Method and Results

Cohort 1

The first cohort included 5 participants: RM, TB, RK, BJ, and JO (see Table 1). To establish FR responding, the ratio was set initially at FR 1 in both components of the multiple schedule (mult FR 1 FR 1). The experimenter demonstrated the first response and explained the payment arrangement. No additional demonstration or prompting was needed for any participant. The first session began with the components presented in strict alternation. After 20 reinforcers had been delivered, the components were alternated unpredictably for an additional 20 reinforcers. Responding readily came under control of the differential-response contingency: All participants touched the left key in the lean component and the right key in the rich. The ratio requirements were increased gradually within and across sessions until a mult FR 10 FR 10 schedule was reached.

From this point, the procedures differed slightly across the 5 participants. For Participants RM, TB, and RK, we increased the disparity between the two components by changing the ratio requirement or the reinforcer magnitude (or both) on an individual basis until the performance previously reported in animal participants—longer pauses in the rich-to-lean transition than in the other three transitions—was obtained. For these participants, at least one combination of ratio size and reinforcer magnitude was presented until responding stabilized prior to arriving at a combination that produced extended pausing in the rich-to-lean transition. The other 2 participants, BJ and JO, were placed directly on the procedures that reliably produced differential pausing in the first 3 participants, but with no prior condition in which stability was required.

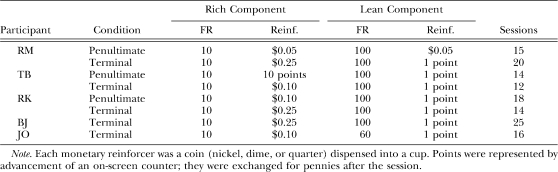

Table 2 shows the FR size and reinforcer magnitudes in the rich and lean components, and the number of sessions for the terminal condition, in which extended pausing occurred reliably in the rich-to-lean transition. In addition, for Participants RM, TB, and RK, this information is shown for the penultimate condition, that is, the condition immediately prior to the terminal condition. The double-disparity procedure was used in both of these conditions. In the penultimate condition, the rich component required completion of an FR 10 schedule to earn a nickel (Participant RM), 10 points (TB), or a dime (RK), and the lean component required an FR 100 to earn either the same reinforcer (a nickel; RM) or a lesser one (1 point; TB and RK). In the terminal condition, the rich component required an FR 10 to earn either a dime or quarter and the lean component required either an FR 60 (Participant JO) or an FR 100 to earn 1 point.

Table 2.

Experiment 1, Cohort 1: Fixed-ratio (FR) size and reinforcer magnitude (Reinf.) in the rich and lean components during the penultimate and terminal conditions. Also shown is the number of sessions per condition.

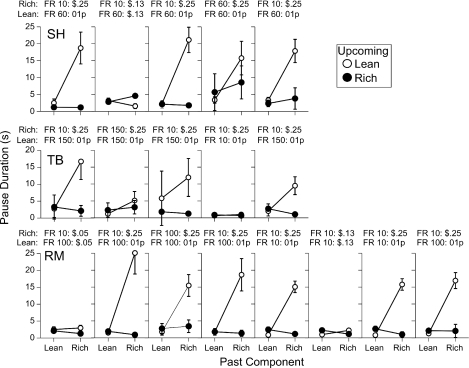

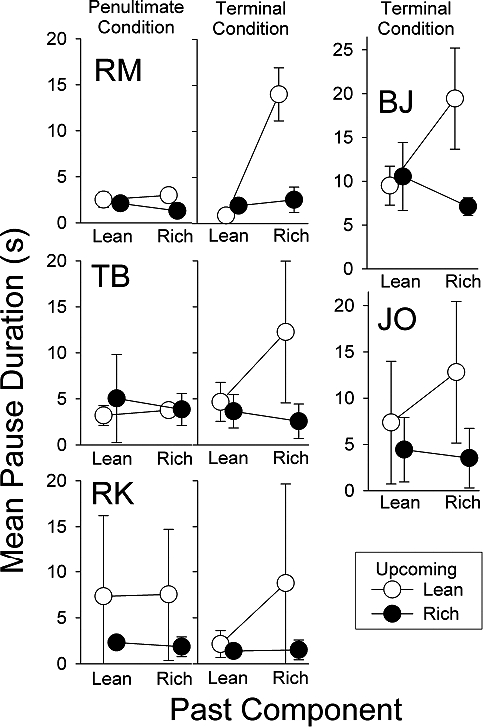

Figure 1 shows the means and standard deviations of the pauses in the final five sessions in the penultimate and terminal conditions for Participants RM, TB, and RK, and in the terminal condition for BJ and JO. All 5 participants showed the effectiveness of the double-disparity procedure, with extended pausing in the rich-to-lean transition when there were large differences between both the ratio requirements and the reinforcer magnitudes.

Fig 1.

Mean pause duration as a function of the past and upcoming schedule component for the participants of Cohort 1 in Experiment 1. For Participants RM, TB, and RK, the left panels show results from the penultimate condition and the center panels from the terminal condition. The right panels show results from the terminal condition for Participants BJ and JO, who were exposed to these conditions directly after training. Symbols represent means of the last five sessions, and vertical lines extend 1 standard deviation above and below the mean (in some cases, the line extends either 1 standard deviation above or 1 below the mean).

The left panel of Figure 1 shows that Participant RM paused briefly in all transitions under a mult FR 10 FR 100 schedule with equal reinforcer magnitudes of a nickel in the rich and lean components. Increasing the disparity between reinforcer magnitudes in the terminal condition (a quarter vs. 1 point; center panel) increased the mean rich-to-lean pause duration to nearly 15 s. Similar results were obtained with Participant TB: Changing the rich-component reinforcer from 10 points to a dime tripled the mean pause duration in the rich-to-lean transition even though the dime and points had the same monetary value. However, variability in pausing also increased from the penultimate to the terminal conditions. For Participant RK, the penultimate condition had a rich component with FR 10 leading to a dime and a lean component with FR 100 leading to 1 point. She paused substantially longer when the upcoming component was lean, regardless of the preceding component. Increasing the rich-component reinforcer to a quarter enhanced differential pausing in the rich-to-lean transition primarily by decreasing the mean duration and variability of pauses in the lean-to-lean transition, although the mean duration of the rich-to-lean pauses did increase slightly.

The rightmost panels of Figure 1 show results from the two participants (BJ and JO) who were exposed to the terminal procedure immediately after preliminary training. Pausing was longest in the rich-to-lean transition, although there was substantial variability in Participant JO's pause durations.
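The transition-wise summary plotted in Figure 1 can be sketched as follows. The function name `summarize_pauses` and the data layout (one component label and one pause per component, in presentation order) are assumptions for illustration. The sketch pools individual pauses within each transition type; the text does not specify whether the plotted standard deviations were computed over pooled pauses or over session means, so that choice is also an assumption.

```python
from collections import defaultdict
from statistics import mean, stdev


def summarize_pauses(components, pauses):
    """Mean and standard deviation of pause duration for each transition type.

    components: 'rich'/'lean' labels in presentation order.
    pauses: pause duration (s) at the start of each component.
    The first component has no preceding component, so its pause is skipped.
    """
    groups = defaultdict(list)
    for prev, nxt, pause in zip(components, components[1:], pauses[1:]):
        groups[f"{prev}-to-{nxt}"].append(pause)
    return {
        transition: (mean(values), stdev(values) if len(values) > 1 else 0.0)
        for transition, values in groups.items()
    }
```

Joint control by past and upcoming components would appear in this summary as a rich-to-lean mean that exceeds the means for the other three transitions.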

Cohort 2

To ensure that any failure to show extended pausing could not be attributed to lack of stimulus control by the component stimuli, the multiple-schedule procedures used with the first cohort incorporated a differential response requirement. Throughout the procedure, the participant had access to two keys; to advance the schedule the participant pressed the left key if the component was lean and the right key if it was rich. Procedures used with animals, however, have not incorporated this feature. With the second cohort, we asked whether the differential-response requirement was necessary for the double-disparity procedure to produce the pattern of results observed in Cohort 1: relatively extended pausing in the rich-to-lean transition and relatively brief pausing in the other three transitions.

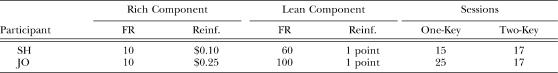

Two new participants, OM and SH, were exposed initially to the double-disparity conditions without the differential-response requirement. The procedure differed from that described in the General Method section in that the entire screen was illuminated with the component-specific color, and a single key was present on the left side during the lean component and on the right side during the rich component. The keys were black-square outlines filled with the background screen color. Otherwise, the procedure was the same as for Cohort 1 in that different colors and distinctive response-feedback sounds were associated with the two schedules. Preliminary training was the same as for Cohort 1. After pause durations stabilized under the new procedure, the differential-response requirement was added. The experimental conditions, and number of sessions in each, are shown in Table 3. Regardless of whether the differential-response requirement was imposed, the rich component had an FR 10 schedule leading to a coin and the lean component had an FR 60 or FR 100 schedule leading to 1 point.

Table 3.

Experiment 1, Cohort 2: Fixed-ratio (FR) size and reinforcer magnitude (Reinf.) in the rich and lean components in conditions without the differential response requirement (One-Key) and with the requirement (Two-Key). Also shown is the number of sessions per condition.

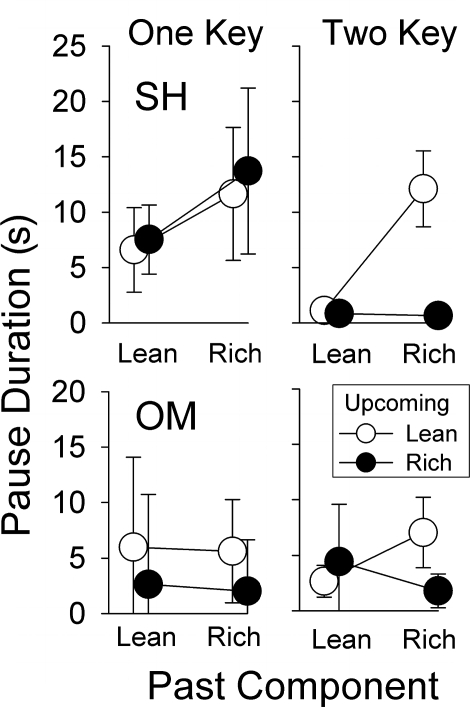

Figure 2 presents the mean pause durations without the differential-response requirement (“one-key” procedure) and with it (“two-key” procedure). Without the requirement (left panels), neither participant showed extended pausing in the rich-to-lean transition. Participant SH showed longer mean pause durations following the rich component regardless of the upcoming component. Participant OM showed longer mean pause durations when the upcoming component was lean regardless of the past component. The right panels show results obtained with the addition of the differential-response requirement. Both participants paused longest in the rich-to-lean transition, demonstrating joint control by the past and upcoming components. Participant SH's pausing in the other transitions, especially in the rich-to-rich transition, decreased markedly. Participant OM's pausing decreased in the lean-to-lean transition. These results are consistent with increased discriminability of the transition across the two types of components: Because completion of the FR schedule, and thus reinforcement, was contingent on different responses in the two components, the differential-response requirement ensured discriminative control by the component stimuli.

Fig 2.

Mean pause duration as a function of the past and upcoming schedule component for the participants of Cohort 2 in Experiment 1. The results are from the last five sessions of the conditions without (One Key) and with (Two Key) the differential response requirement. Other details as in Figure 1.

Analysis of the Determinants of Pause Duration

Relative frequency distributions

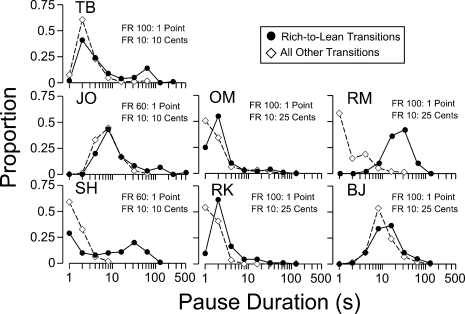

Arithmetic means can provide an incomplete picture of pausing on FR schedules, as the distribution of pause durations is often skewed (for a discussion, see Perone et al., 1987). Variables that increase mean pause duration, such as response requirement, do so by increasing the duration and frequency of long pauses, but modal pause duration may not be affected (e.g., Powell, 1968). Thus, frequency distributions provide a more complete description of pausing. Figure 3 presents relative frequency distributions of pauses in the rich-to-lean transition (filled circles) and the other transitions combined (unfilled diamonds), based on data from the last five sessions of the terminal condition. The abscissas are logarithmic to accommodate the large range in pause duration (see Baron & Herpolsheimer, 1999).

Fig 3.

Relative frequency (Proportion) distributions of pauses for each participant over the last five sessions of the terminal condition of Experiment 1.

All 7 participants showed more long pauses in the rich-to-lean transition relative to the other three transitions. For 4 participants (OM, RM, RK, and BJ), the modal pause durations for the rich-to-lean transition were longer than (to the right of) the modal pause for the other three transitions. The remaining 3 participants' modal pauses were similar for all transitions, but, as already noted, they showed a larger proportion of longer pauses in the rich-to-lean transitions than in the other three transitions. Thus, not all rich-to-lean transitions produced longer modal pauses, but longer pausing occurred primarily within the rich-to-lean transition. For 5 of 7 participants (i.e., all except RM and BJ), the longer mean pauses for the rich-to-lean transitions were due to the positive skew in the distributions of pauses.
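A relative frequency distribution on a logarithmic abscissa, of the kind shown in Figure 3, can be sketched as below. The bin boundaries and the number of bins per decade used for the figure are not reported, so the defaults here (`lo`, `hi`, `bins_per_decade`) and the function name `log_binned_proportions` are assumptions chosen only for illustration.

```python
import math


def log_binned_proportions(pauses, bins_per_decade=4, lo=0.1, hi=1000.0):
    """Proportion of pauses falling in each logarithmically spaced bin.

    Assumption: bin edges run from `lo` to `hi` seconds with equal width in
    log10 units; values outside that range are clipped into the end bins.
    Returns (bin_edges, proportions).
    """
    if not pauses:
        raise ValueError("at least one pause is required")
    n_bins = round(math.log10(hi / lo) * bins_per_decade)
    counts = [0] * n_bins
    for pause in pauses:
        clipped = min(max(pause, lo), hi)
        index = int(math.log10(clipped / lo) * bins_per_decade)
        counts[min(index, n_bins - 1)] += 1
    edges = [lo * 10 ** (i / bins_per_decade) for i in range(n_bins + 1)]
    return edges, [count / len(pauses) for count in counts]
```

Computing one distribution from the rich-to-lean pauses and another from the other three transitions combined would allow the comparison of modes and right tails described above.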

Factors influencing variable pausing in the rich-to-lean transition

The longer, but variable, pausing in the rich-to-lean transition is similar to results from animal participants under FR schedules (e.g., Baron & Herpolsheimer, 1999; Perone & Courtney, 1992). The cause of this variability in pausing is unknown. As Derenne and Baron (2001) pointed out, some theories appeal to the waxing and waning of response strength (e.g., Keller & Schoenfeld, 1950). Efforts to document this waxing and waning in the form of sequential dependencies in pause duration, however, have not demonstrated systematic relationships between short and long pauses (see Derenne & Baron, 2001).

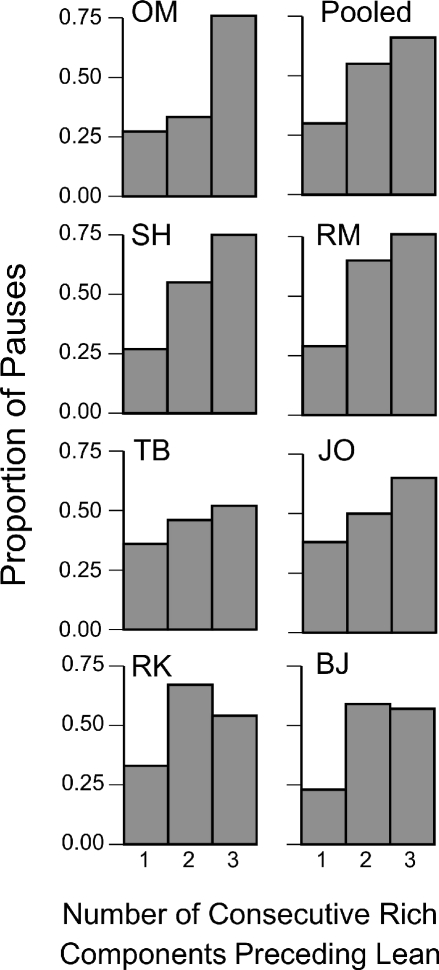

To explore factors that might account for variation in pauses in the rich-to-lean transition, we analyzed the pauses as a function of the immediately preceding context. That is, we asked whether a transition to the lean component that followed two or three consecutive rich components produced longer pauses than a transition that followed one rich component. Figure 4 shows, for each participant and for the pooled data over the last five sessions of the terminal condition, the proportion of rich-to-lean pauses that were greater than the overall median rich-to-lean pause as a function of the number of consecutive rich components immediately preceding the transition to lean. For all participants, the probability of a longer-than-overall-median pause was highest when the lean component was preceded by more than one rich component. Considering the pooled results, analysis of variance yielded a statistically significant main effect, F(2, 6) = 5.59, p = .002, and post-hoc t-tests revealed significant differences (p < .05) between both one and two consecutive rich components and one and three consecutive rich components, but not between two and three. At least in the present procedure, variability in pausing within the rich-to-lean transition may be attributable to variability in the number of consecutive rich components preceding the lean component.

Fig 4.

Proportion of rich-to-lean transitions in Experiment 1 with pauses longer than the overall median pause in all rich-to-lean transitions. The proportion appears as a function of the number of consecutive rich components that preceded the lean component. The Pooled graph combines the results from all 7 participants.
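The run-length analysis summarized in Figure 4 can be sketched as follows. The function name `long_pause_proportions` and the per-session data layout are hypothetical, and runs are counted within a session only, which is an assumption because the text does not say how session boundaries were treated. The sketch also reports every run length it encounters, whereas the figure groups transitions by one, two, or three consecutive rich components.

```python
from collections import defaultdict
from statistics import median


def long_pause_proportions(sessions):
    """Proportion of rich-to-lean pauses above the overall median, by run length.

    sessions: list of (components, pauses) pairs, one per session, where
    components holds 'rich'/'lean' labels in order and pauses holds the pause
    (s) at the start of each component.
    Returns {number of consecutive rich components before the lean one: proportion}.
    """
    records = []  # (run_length, pause) for every rich-to-lean transition
    for components, pauses in sessions:
        run = 0
        for component, pause in zip(components, pauses):
            if component == "rich":
                run += 1
            else:
                if run > 0:
                    records.append((run, pause))
                run = 0
    if not records:
        return {}
    cutoff = median([pause for _, pause in records])
    flags = defaultdict(list)
    for run, pause in records:
        flags[run].append(pause > cutoff)
    return {run: sum(f) / len(f) for run, f in sorted(flags.items())}
```

Passing the last five sessions of the terminal condition for one participant would give that participant's proportions; concatenating the session lists of all participants would give a pooled version.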

Discussion

We succeeded in demonstrating joint control of pausing by past and upcoming conditions of reinforcement in all 7 adult humans. Although the pattern of results in the terminal condition matches that obtained in pigeons by Perone and Courtney (1992), the procedure differed in several respects. One might regard these differences as limiting the generality of the results from the animal laboratory, but we view the present work as a systematic replication of Perone and Courtney's study. As such, the similarity in the results in the face of the procedural differences serves as evidence of process generality (Sidman, 1960; see also Baron, Perone, & Galizio, 1991a, 1991b).

First, our rich and lean components differed from one another in both ratio size and reinforcer magnitude. In Perone and Courtney's (1992) experiment, the ratio size was held constant and the rich and lean components differed only in reinforcer magnitude. On the surface, at least, it would appear that the production of extended pausing by our human participants required more extreme differences between the rich and lean components (as arranged by our double-disparity procedure). It is premature, however, to draw strong conclusions about the procedural variations across the experiments because the species, operanda, response topography, establishing operations, and reinforcers all differed. Moreover, pausing that is produced by disparities in reinforcement magnitude can be modulated by the size of the schedule requirement (i.e., even though the schedule requirement is the same in both components). Perone (2003) reported that, with schedule requirements below FR 60, pigeons did not show extended pausing in the rich-to-lean transition despite a seven-fold difference in reinforcer magnitude across components (1-s access to grain in the lean component, 7-s in the rich). Galuska et al. (2007) reported similar results in their study of rhesus monkeys responding under schedules that arranged a ten-fold difference in cocaine reinforcement across the components. Furthermore, the ratio size at which extended pausing appeared depended on the absolute levels of the drug doses: Pausing was more sensitive to ratio size when the lean and rich reinforcers were 0.003 mg/kg and 0.03 mg/kg than when they were 0.0056 mg/kg and 0.056 mg/kg. Taken together, these studies suggest that extended pausing is more likely when the overall experimental context is relatively lean (i.e., higher ratios or lower absolute levels of reinforcement).

That there should be species differences in the conditions sufficient to generate extended pausing should not be surprising given that notable individual differences are observed within species. Wade-Galuska et al. (2005) studied rats' pausing in a rich-to-lean transition defined solely in terms of the response force required to press a lever. Some rats showed extended pausing when the components differed by as little as 0.15 N (a response-force of 0.40 N was required in the lean component, 0.25 N was required in the rich), whereas others required a difference of 0.45 N (0.70 N in the lean, 0.25 N in the rich).

Given these complexities, it will take considerable research to identify the degree of difference between lean and rich components that is necessary to produce extended pausing. The studies to date, including our Experiment 1, were not designed to delineate boundary conditions but merely to demonstrate sufficient conditions.

A second procedural variation was our use of a differential-response requirement to promote discrimination of the component stimuli. Animal studies demonstrating joint control of pausing by the past and upcoming multiple-schedule components have not typically incorporated topographically distinct responding in the two components (Galuska et al., 2007; Perone, 2003; Perone & Courtney, 1992). The use of explicit contingencies to ensure stimulus control in laboratory studies of human behavior is not without precedent, however. In a study of sensitivity to concurrent schedules of monetary reinforcement, Madden and Perone (1999) provided college students with an observing response that briefly displayed stimuli (colors) correlated with the schedules. Observing these stimuli led to increased sensitivity as measured via the generalized matching equation (Baum, 1974), but special contingencies were needed to foster observing in 2 of 3 students: Specifically, whenever a reinforcer was delivered, the student could not collect it until she identified the color associated with the schedule that had provided the reinforcer. With this contingency in place, all 3 students observed the stimuli and demonstrated high levels of sensitivity to the reinforcement rates afforded by the schedules.

A third distinctive feature of our procedure is that the lean-component reinforcers differed from the rich-component reinforcers not only in monetary value (e.g., $0.01 vs. $0.25) but also in terms of whether money was delivered during (in the rich component) or after (in the lean component) the session. Thus, the reinforcers might be viewed as qualitatively different or as varying across dimensions other than monetary value. For example, the lean reinforcer might be viewed as delayed whereas the rich reinforcer was immediate. Thus it is important to consider whether the extended pausing in the rich-to-lean transition depended in some way on the delivery of points rather than coins in the lean component. The multiple-schedule procedure provides a within-participant, within-session assessment of this issue because it allows comparisons of pausing across all possible transitions between the lean and rich components. Extended pausing was unique to the rich-to-lean transition. Pausing in the lean-to-lean transition, as in the lean-to-rich and rich-to-rich transitions, was relatively brief. Clearly, the use of points in the lean component, per se, did not generate long pauses. It is worth noting that both points and coins are conditioned reinforcers that presumably derive their efficacy through later exchange for backup reinforcers—put another way, both coins and points are tokens. Unlike water and food, points exchangeable for coins and coins themselves do not differ in terms of their relevant deprivation conditions or establishing operations.

The variability in pause duration seems to be a signature phenomenon under FR schedules of reinforcement, and one that has defied analysis in operant terms (see Baron & Herpolsheimer, 1999; Derenne & Baron, 2001). This variability is seen in steady-state responding under both simple and multiple schedules. The analysis presented in Figure 4 indicates that the longest pauses (those that account for much of the increase in mean pause duration) occur when the transition to a lean component follows a series of rich components. These results reproduced findings with rats under a “single-disparity” procedure with small and large FR values (Baron & Herpolsheimer, 1999). The results are somewhat counterintuitive, as they seem at odds with a response-strength account of variation in pause duration. It seems reasonable to predict that responding should be at high strength after three short ratios leading to large reinforcers. Indeed, according to the account offered by Keller and Schoenfeld (1950), responding would be expected to be most probable (and pausing briefest) after a series of rich components, and least probable (and pausing longest) after a series of lean components. Nevertheless, the results contradict such a prediction.
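
An analysis of this kind can be organized as in the following minimal sketch, which sorts lean-component pauses by the number of consecutive rich components that immediately preceded them. The function name, the data layout (one component label and one pause per ratio), and the numbers are hypothetical and are not the authors' analysis code or data.

```python
from collections import defaultdict


def lean_pauses_by_rich_run(components, pauses):
    """Group lean-component pauses by the length of the preceding rich run.

    components[i] is "rich" or "lean"; pauses[i] is the pause (in seconds)
    at the start of that component. Lean components preceded by another
    lean component (run length 0) are left out.
    """
    grouped = defaultdict(list)
    run = 0  # consecutive rich components seen so far
    for component, pause in zip(components, pauses):
        if component == "lean":
            if run > 0:
                grouped[run].append(pause)
            run = 0
        else:
            run += 1
    return dict(grouped)


# Hypothetical session (illustrative values only).
components = ["rich", "lean", "rich", "rich", "rich",
              "lean", "lean", "rich", "rich", "lean"]
pauses = [1, 8, 1, 1, 1, 30, 2, 1, 1, 15]
by_run = lean_pauses_by_rich_run(components, pauses)
print(by_run)
```

Keying the result by run length allows the pauses after one, two, or three rich components to be compared directly.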

The distribution of pauses we observed was similar to distributions reported by Derenne and Baron (2001), Powell (1968), and others. Conditions that increase mean pause duration may do so by increasing the proportion of long pauses more than by increasing the mode of the distribution. Many pauses remained in the very short range. Thus, the rich–lean transition increases pausing in a manner analogous to increasing the response requirement under simple FR schedules (Powell, 1968).
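
The distinction between shifting the mode and lengthening the tail can be illustrated with a small numerical sketch. The pause durations below are hypothetical values chosen for illustration; they are not data from the present experiments.

```python
from statistics import mean, mode

# Hypothetical pause durations in seconds (illustrative only).
baseline = [2, 2, 3, 2, 4, 2, 3, 2, 3, 2]
# Same list, except that two pauses are replaced by long ones.
with_long_tail = [2, 2, 3, 2, 40, 2, 3, 2, 55, 2]


def summarize(pause_durations):
    """Return (mode, mean) for a list of pause durations."""
    return mode(pause_durations), mean(pause_durations)


print(summarize(baseline))
print(summarize(with_long_tail))
```

In this sketch the most common pause stays at 2 s in both lists, whereas the mean rises from 2.5 s to 11.3 s because of the two long pauses.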

EXPERIMENT 2

Of necessity, the procedures that proved sufficient to produce extended pausing by humans in the rich-to-lean transition differed somewhat from those used in studies with pigeons and rats. Practically, it is not possible to arrange identical procedures across species. It is possible, however, to arrange conditions that are functionally similar. The double-disparity procedure of Experiment 1 arranged a particular kind of rich-to-lean transition, one that differs formally from the procedures used in the animal studies to date but that generated a pattern of results in our participants that closely matched those reported in animal studies. Still, additional evidence is needed to strengthen the case that similar behavioral processes are operating across species.

Consider the results from Cohort 2, which demonstrated the importance of the discrimination of the rich and lean component stimuli. Without the differential response requirement in the presence of the component stimuli, participants did not show extended pausing in the rich-to-lean transition, presumably because they failed to discriminate the components. Only 2 participants were exposed to conditions with and without the differential response requirement, however, and this limitation is exacerbated by the use of an AB design. Perone and Courtney (1992) manipulated stimulus control by comparing pigeons' performances under multiple schedules (in which distinct stimuli accompany each schedule component) and mixed schedules (without such stimuli). Extended pausing in the rich-to-lean transition occurred only under the multiple schedule.

The evidence to date suggests that extended pausing depends not only on a transition from rich to lean conditions of reinforcement but also on discrimination of that transition. Experiment 2 was designed to assess the role of discriminative-stimulus control by comparing pausing under mixed and multiple schedules in an ABA design.

Method

Four participants who had served in Experiment 1 participated. Two were from Cohort 1 (RM and TB) and the others from Cohort 2 (SH and OM). The multiple-schedule conditions were as described previously for Cohort 1 in Experiment 1, but with the addition of reinforcer-specific visual stimuli that appeared on the on-screen response keys and accompanied reinforcer delivery. The stimuli were a photo of a quarter for the rich component and a black numeral “1” for the lean component; the dimensions of the numeral were 4 × 4 cm. In the rich component, completing an FR 10 schedule produced a quarter, a 2-s component-specific tone, and a photo of a quarter in the center of the screen. In the lean component, completing an FR 100 schedule (FR 60 for Participant SH) produced a 2-s component-specific tone and presentation of the numeral 1 in the center of the screen. After the session participants were paid $0.01 for each lean component they completed; the participants kept the quarters they obtained in the rich components.

Under the mixed schedule, the stimuli that had accompanied the component schedules were removed. In addition, rather than presenting two response keys, one on each side of the screen, a single response key was presented in the middle third of the screen. To ensure attention to the screen in the mixed schedule, the key's vertical position was changed unpredictably following a response (as it was under the multiple schedule). The key was a blue 5 × 5-cm square outlined in black against a blue background. Blue was not used in the multiple schedule procedures. Following the completion of each ratio, a 2-s tone and a visual display consisting of the blue square in the center of the screen were presented. The tone was different from that used under the multiple schedule. No instructions were given in Experiment 2.

Each condition was presented for at least 10 sessions and until there was no visible trend in mean pause duration. The multiple schedule was imposed first (for 13, 12, 17, or 10 sessions for Participants RM, TB, OM, and SH, respectively), followed by the mixed schedule (12, 11, 12, and 13 sessions), and, finally, reinstatement of the multiple schedule (16, 18, 36, and 13 sessions).

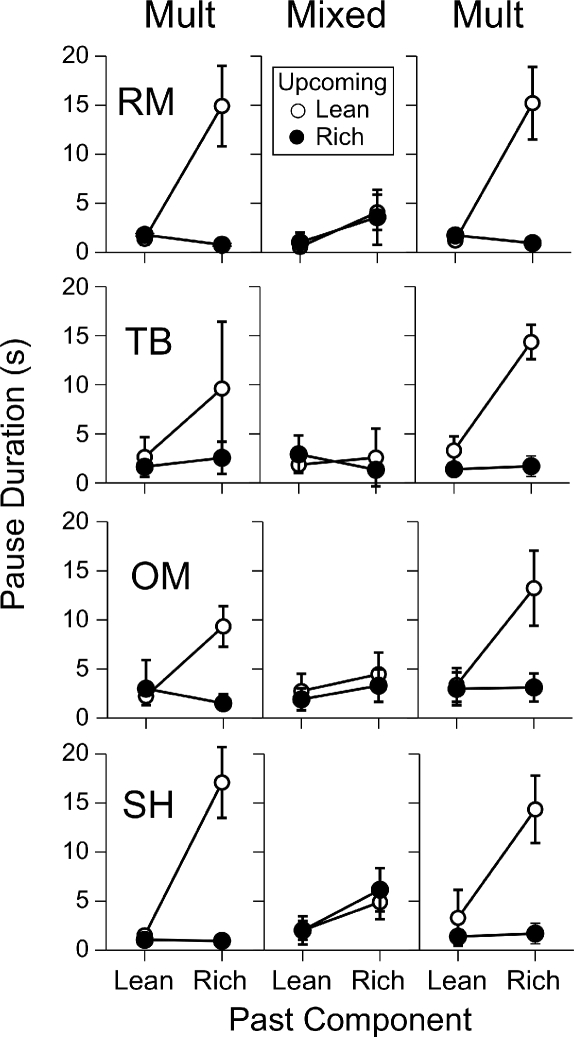

Results and Discussion

Figure 5 shows, for each participant, the means and standard deviations of the pause durations from the last five sessions of each condition. The multiple-schedule outcomes were similar to those of Experiment 1. Under the mixed schedules, all participants showed relatively brief, undifferentiated pauses. Participants RM, OM, and SH showed slightly longer pausing after rich schedules regardless of whether the upcoming schedule was rich or lean, indicating control by the past reinforcer alone. It is worth noting that, for the 2 participants who showed relatively shorter pausing in the first multiple-schedule condition (TB and OM), mean pause durations were longer following mixed-schedule exposure, and, for Participant TB, variability was greatly reduced.

Fig 5.

Mean pause duration as a function of the past and upcoming schedule component for the participants in Experiment 2. Results are from the last five sessions in conditions with the multiple (Mult) and mixed schedules. Other details as in Figure 1.
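
A summary of this kind, pause duration sorted by past and upcoming component, can be computed as in the following minimal sketch. The function names, the data layout, and the numbers are hypothetical; the use of the sample standard deviation is also an assumption, because the text does not state which form was used.

```python
from collections import defaultdict
from statistics import mean, stdev


def pauses_by_transition(components, pauses):
    """Group pauses by (past component)-to-(upcoming component).

    components[i] is "rich" or "lean"; pauses[i] is the pause at the start
    of that component. The first pause has no past component and is skipped.
    """
    groups = defaultdict(list)
    for past, upcoming, pause in zip(components, components[1:], pauses[1:]):
        groups[f"{past}-to-{upcoming}"].append(pause)
    return dict(groups)


def summarize(groups):
    """Return {transition: (mean, sample SD)}; SD is None for a single pause."""
    return {
        name: (mean(values), stdev(values) if len(values) > 1 else None)
        for name, values in groups.items()
    }


# Hypothetical session (illustrative values only).
components = ["rich", "lean", "lean", "rich", "rich", "lean", "rich", "lean"]
pauses = [1.0, 12.0, 2.0, 1.5, 1.0, 20.0, 1.5, 16.0]
groups = pauses_by_transition(components, pauses)
summary = summarize(groups)
print(summary)
```

With these illustrative values, the rich-to-lean pauses are 12, 20, and 16 s, giving a mean of 16 s and a sample standard deviation of 4 s, while the other three transition types contain only brief pauses.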

The dramatically different pattern of results across the mixed- and multiple-schedule conditions demonstrates that extended pausing was not a function of the schedule and reinforcer parameters per se. In addition, functional discriminative stimuli were necessary for the production of long pauses in the rich-to-lean transition. These results reproduce closely the results obtained from similar manipulations with pigeons (Perone & Courtney, 1992) and, as such, increase our confidence that similar processes are at work.

EXPERIMENT 3

Studies with animals have produced extended pausing in the rich-to-lean transition when the two components of a multiple schedule differed only in terms of reinforcer magnitude (defined in terms of pigeons' access to grain, Perone & Courtney, 1992, or rhesus monkeys' dosage of self-administered cocaine, Galuska et al., 2007) or response requirement (defined in terms of rats' FR size, Baron & Herpolsheimer, 1999; Baron et al., 1992, or rats' response-force requirement, Wade-Galuska et al., 2005). The multiple-schedule procedures that produced differential pausing in Experiments 1 and 2 differed from procedures used with animals in that both reinforcement magnitude and response requirement were less favorable in the lean component. This double-disparity procedure presumably adjusted for individual differences in sensitivity to these variables. Humans have shown individual differences in sensitivity to the reinforcer dimensions of magnitude and rate under concurrent variable-interval (VI) schedules (Dube & McIlvane, 2002). For some participants, relative response rate matched relative reinforcer rate but performance was insensitive to reinforcer magnitude. For other participants, relative response rate matched relative reinforcer magnitude but not reinforcer rate. Similar individual differences have been reported in children with behavior disorders (e.g., Neef & Lutz, 2001; Neef, Shade, & Miller, 1994).

Individual differences in sensitivity to reinforcer rate and reinforcer magnitude are not surprising, because studies of human participants generally do not involve deprivation of a primary reinforcer. The use of conditioned reinforcers that have been established prior to participation in the experiment may underlie differential sensitivity to reinforcement parameters. In Experiment 3, we sought to determine whether, in individual participants, extended pausing in the rich-to-lean transition was determined by reinforcer magnitude, response requirement, or both. To address this question, we presented conditions under which only one dimension differed across components, using the ratio size or reinforcer magnitude under which extended pausing had been demonstrated. Participants were exposed to at least one condition in which multiple-schedule components differed in terms of magnitude but not reinforcer rate or in terms of reinforcer rate but not magnitude.

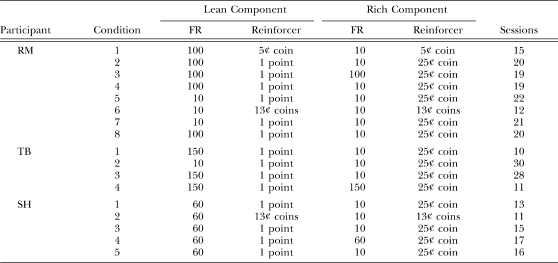

Method and Results

Participants RM, TB, and SH participated; all three also had participated in the first two experiments. Experimental conditions are presented in Table 4. Multiple-schedule stimuli and procedures were the same as in Experiment 2. One procedural element was new: Participants RM and SH were exposed to a condition in which the reinforcer was $0.13 (midway between $0.01 and $0.25). In this condition, the reinforcer involved delivery of 1 dime and 3 pennies into the Plexiglas cup to the right of the participant's computer monitor.

Table 4.

Experiment 3: Fixed-ratio (FR) size and reinforcer specifications in the lean and rich schedule components, and the number of sessions, in each experimental condition.

Figure 6 shows the means and standard deviations of the pause data from the last five sessions per condition. The bottom panels show results from Participant RM. In Conditions 2, 4, and 8, the double-disparity procedure generated extended pausing in the rich-to-lean transition. In Condition 6, when the components were identical in terms of both reinforcer magnitude and response requirement, pausing was brief in every transition. The remaining conditions for Participant RM were single-disparity conditions. In Condition 1, with equal reinforcer magnitude and disparate FRs of 100 and 10, pausing was brief in all transitions (these results are from Experiment 1 and also appear in Figure 1). In Conditions 3, 5, and 7, when the components had the same response requirement but a 25∶1 disparity in reinforcer magnitude, extended pausing occurred in the rich-to-lean transition. The outcomes are similar even though in Condition 3 both components had FR 100 schedules and in Conditions 5 and 7 both components had FR 10 schedules.

Fig 6.

Mean pause duration as a function of the past and upcoming schedule component for the participants in Experiment 3. Results are from the last five sessions of each condition, defined in terms of the FR size and the magnitude of the reinforcer (“$” indicates that coins were dispensed; “p” designates that points were presented and later exchanged for pennies). Other details as in Figure 1.

The top and center panels in Figure 6 show data from Participants SH and TB, respectively. In Conditions 1, 3, and 5 for both participants, the double-disparity condition reproduced outcomes similar to those from the same condition in the previous experiments, although, for Participant TB, differences among the four transition types were not as pronounced in Conditions 3 and 5 as in Condition 1. Participant TB's extended pausing was eliminated in the rich-to-lean transition when the response requirements were equated across components (at FR 150 and FR 10 in Conditions 2 and 4, respectively) even though the disparity in reinforcer magnitude across components was maintained at 25∶1.

For Participant SH, Condition 2 shows that equating the reinforcer at $0.13, while maintaining the disparate response requirements of FR 10 and FR 60, eliminated extended pausing in the rich-to-lean transition. In Condition 4, by contrast, equating the response requirements at FR 60 while maintaining a 25∶1 disparity in reinforcer magnitude produced extended pausing in the rich-to-lean transition. This pattern of results suggests greater sensitivity to reinforcer magnitude than that seen in Participant TB.

Discussion

The primary purpose of the manipulations in Experiment 3 was to assess the sensitivity of the participants' performances to manipulations of the kinds of reinforcer and response variables used in the earlier studies. Whereas all participants showed extended pausing in the double-disparity conditions, there were individual differences in sensitivity to disparity in reinforcer magnitude alone and to disparity in response requirement alone, as reflected in pausing at the rich-to-lean transition. Participants RM and SH were more sensitive to reinforcer magnitude and Participant TB to the ratio requirement. Note that the ratio requirement is confounded with the interreinforcer interval or rate of reinforcement under these schedules. Thus it is possible that some effects of increasing the ratio requirement are attributable to the increased interreinforcer interval or a reduced rate of reinforcement. Independent manipulation of the two variables will be required to untangle these influences. The limited number of manipulations reported here necessarily limits precise, quantitative description of the effects. The results do, however, provide additional evidence that the behavior of the participants in Experiments 1 and 2 was under the control of the experimenter-arranged environmental conditions.
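
The confound between ratio size and interreinforcer interval can be made concrete with a simple arithmetic sketch. It assumes a constant response rate once responding begins and a fixed pause; both assumptions, the function name, and the numerical values are hypothetical simplifications rather than measurements from the experiments.

```python
def interreinforcer_interval(fr_size, pause_s, responses_per_s):
    """Seconds from one reinforcer to the next under an FR schedule.

    Assumes a pause of pause_s seconds followed by fr_size responses
    emitted at a constant rate of responses_per_s.
    """
    return pause_s + fr_size / responses_per_s


# Hypothetical values: 2-s pause and 2 responses per second in both components.
rich_iri = interreinforcer_interval(fr_size=10, pause_s=2.0, responses_per_s=2.0)
lean_iri = interreinforcer_interval(fr_size=100, pause_s=2.0, responses_per_s=2.0)

print(rich_iri, lean_iri)            # seconds per reinforcer
print(60 / rich_iri, 60 / lean_iri)  # reinforcers per minute
```

Under these assumptions, raising the ratio from FR 10 to FR 100 lengthens the interreinforcer interval from 7 s to 52 s, so any effect of ratio size on pausing could in principle reflect the response requirement, the lower reinforcement rate, or both.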

GENERAL DISCUSSION

All 7 participants demonstrated differentially longer pausing at the beginning of the lean component of a two-component multiple schedule only when the lean component followed the rich component. The results reproduced those obtained with pigeons (Perone & Courtney, 1992), both in demonstrating longer pausing at the rich-to-lean transition and in showing that differences in pausing across the different types of transitions do not occur under mixed schedules. Moreover, as in previous studies demonstrating that the mean pause duration is a function of FR value, the relatively long mean pause duration following the rich-to-lean transition was the result of averaging skewed distributions of individual pause durations. The reproduction of these aspects of animal performance suggests the operation of the same behavioral processes across the human and animal preparations despite the procedural differences that were required by the use of different species.

Most previous studies of human performance on simple FR schedules have not reproduced the response patterning obtained in animals (e.g., Lowe, Harzem, & Hughes, 1978). As noted by Sidman (1960) and others (e.g., Baron et al., 1991a, 1991b), it is important to explore procedural adaptations before concluding that a phenomenon is species-bound. Discrepancies between human and animal performance might occur because there is no straightforward way to equate ratio requirement and reinforcer magnitude across the human and animal procedures. The reinforcer in animal studies, for example, is typically food, and the participants are food deprived. For humans, conditioned reinforcers typically are used, and the control of deprivation is far less precise or absent altogether. Experiment 3, which showed that the behavior of individual participants differed in sensitivity to manipulations of reinforcer magnitude and ratio requirement, provides support for the role of procedural adaptations in reproducing results across (and within) species.

The notion that pausing is generated by discriminated reductions in the value of the prevailing reinforcement conditions may help explain some of the disparities in outcome across human and animal research. Even when a simple FR schedule is arranged, the onset of each ratio represents a kind of rich-to-lean transition, because at that point the experimental environment is changed from a period in which the reinforcer is consumed to a period in which responding is required. Raising the FR size may increase pausing (e.g., Powell, 1968) because it serves to increase the disparity between the relatively rich and lean moments of the procedure: The transition from reinforcement to a high FR is worse than the transition from that same reinforcement to a low FR. Perone and Courtney's (1992) multiple-schedule procedure intensified this phenomenon by juxtaposing ratios leading to different magnitudes of reinforcement, thus allowing for different kinds of transitions within a session. In human research, the use of intrinsically weak reinforcers such as points may reduce the disparity between the relatively favorable moment of reinforcer delivery and the relatively unfavorable response requirements that follow delivery. When the reinforcer is weak, the operational difference between the relatively rich and lean moments of the procedure may not make a functional difference. The present experiments overcame this problem with a double-disparity procedure that juxtaposed components with a low work requirement leading to a coin of relatively high monetary value and components with a high work requirement leading to a point exchangeable for minimal monetary value.

Pausing controlled by the transition between schedules may reflect engagement in other behaviors that remove the participant from the aversive stimuli associated with the transition (e.g., turning away from the stimuli). In this way, pausing may be a form of escape. Support for this view comes from early studies of simple schedules. For example, in a classic study, pigeons' key pecks were maintained under an FR schedule (Azrin, 1961). A peck on another key—the escape key—initiated a signaled timeout during which the schedule of food reinforcement was suspended. Another peck on the escape key reinstated the original stimuli and the schedule. Because the birds pecked the escape key, Azrin concluded that schedules of positive reinforcement have aversive aspects. Other studies of FR schedules (Appel, 1963; Ator, 1980; Thompson, 1964, 1965), FI schedules (Brown & Flory, 1972; Cohen & Campagnoni, 1989), and progressive-ratio schedules (Dardano, 1973, 1974) have confirmed escape responding during the period immediately following reinforcer delivery.

The notion that pausing might function as a form of escape that can occur when an explicit escape contingency has not been arranged is supported by the fact that manipulation of schedule parameters affects pausing and escape similarly on simple FR schedules. Escape, like pausing, tends to be directly related to increases in FR size (Azrin, 1961; Appel, 1963; Thompson, 1964, 1965) and FI duration (Brown & Flory, 1972; Cohen & Campagnoni, 1989). More recent data from multiple schedules tie the aversiveness notion directly to the procedures used in the present study. Given the opportunity to peck an escape key that suspends the current schedule component and its associated stimulus, pigeons are most likely to do so when a lean component follows a rich one, that is, in the rich-to-lean transition that also generates extended pausing.

There are other potential functions of pausing. A number of species engage in adjunctive or schedule-induced behavior during the postreinforcement interruption in responding. The form of these behaviors depends on present environmental supports and can include aggression (e.g., Todd, Morris, & Fenza, 1989; see also Pitts & Malagodi, 1996, for an especially pertinent example) or excessive (compulsive) behaviors such as polydipsia, air licking, and wheel running. These behaviors are noteworthy in that they are characterized as nonadaptive; in their exaggerated forms they exceed the biological requirements of the organism (e.g., Falk, 1967). There is no consensus on the functional significance of adjunctive behaviors. They may be elicited by stimuli associated with a low probability of reinforcement or be escape behaviors that remove the animal from those stimuli. Thus, the stimuli comprising the context of transitions from relatively rich to relatively lean may elicit incompatible adjunctive behaviors or be part of an establishing operation for other incompatible behaviors. This view is consistent with the notion that the stimuli signaling a rich-to-lean transition are aversive. Pitts and Malagodi (1996) demonstrated that pigeons' aggressive behavior under intermittent schedules of reinforcement is an increasing function of the magnitude of reinforcement, and is a function of the past and upcoming reinforcer as well. Thus, larger reinforcers can increase the aversive properties of intermittent reinforcement schedules. This is paradoxical because animals prefer the larger magnitude conditions overall, but local contexts may be more aversive than under less preferred schedules with smaller reinforcer magnitudes.

An alternative to the aversiveness theory is that, at the signaled rich-to-lean transition, the value of the upcoming reinforcer is low relative to other, concurrently available reinforcers. The reinforcer for the FR response may be discounted as a function of delay or work requirement. As a result, activities such as grooming in animals, counting coins in humans, or other reinforcers become relatively more valuable and control alternative responding.

Derenne and Baron (2002) addressed this notion experimentally. Rats' lever presses were reinforced with food under an FR schedule, and a drinking tube was continuously available. Under experimental conditions, the bottle contained Milwaukee tap water, a 0.1% saccharin solution, or a 0.5% saccharin solution. Pausing under the FR schedule increased with saccharin concentration, and the majority of the pause was spent drinking. These results are in accord with the view that pausing is a function of the emission of other, presently more valued operant-response classes when the reinforcer for the instrumental (FR) response is devalued by the rich-to-lean context inherent in FR schedules.

The reproduction in humans of behavior patterns routinely demonstrated in basic animal studies can serve important functions in the translation or bridging from basic to applied research. The procedures used to reproduce behavior patterns across species always require modifications unique to the targeted species. The homology of the obtained results at the level of process does not require homology of form, but confidence in process generality is an inverse function of the extent of procedural variants. Laboratory research with humans can be invaluable in a translational research program because, unlike clinical studies, it allows control of at least some of these variants. Ultimately, homology of process must be assessed not on exact replication of procedural details, but on the ability to reproduce and demonstrate functional relations critical to the processes under study. In the present case, the critical functional relations involve the sensitivity of pausing to differences in the incentive value of the schedule conditions and the importance of the discriminability of shifts in that value.

The notion that schedules of positive reinforcement can, under some conditions, have aversive properties may be counterintuitive to practitioners. However, this view is of potential importance for understanding clinical problems that may be maintained by negative reinforcement. Maladaptive escape behaviors and negative emotional responses occasioned by normally benign environmental conditions are defining symptoms of a variety of clinical conditions such as mood, anxiety, and obsessive-compulsive disorders, and also of the disruptive behaviors of children diagnosed with behavior disorders. In studies of problem behaviors in intellectual and developmental disabilities, escape behaviors account for the largest single function of self-injurious and aggressive behaviors (e.g., Carr, 1977; Edelson, Taubman, & Lovaas, 1983; Iwata et al., 1994).

The relevance of the present findings to natural environments is not that FR schedules per se are found there in large measure. Rather, the general conditions that produce pausing (namely, discriminable transitions from more favorable to less favorable conditions) do arise in the daily lives of humans, and the behavioral processes that generate pausing may operate there. This study represents an initial step in translating basic research on schedules of reinforcement into a form potentially useful for understanding problem behaviors. No single study constitutes a full translation, such as basic research to specific treatment and understanding of treatment efficacy (United States National Advisory Mental Health Council, 2000). Rather, translational research entails a program of research directed at understanding the basic processes in clinically significant behaviors, with the ultimate goal of developing treatment. To this end, future applied research directed at specific practical problems (see Bejarano, Williams, & Perone, 2003), as well as additional basic research to better understand the potentially aversive aspects of positive reinforcement schedules, will be necessary.

Acknowledgments

This research was supported by grant number R01 HD044731 from NICHHD. We gratefully acknowledge Joe Spradlin for helpful comments, Pat White for editorial assistance, Rafael Bejarano, Adam Doughty, and Chad Galuska for intellectual assistance, Coleen Eisenbart for conducting the sessions, and Bill Dube for use of his software for arranging the experimental contingencies.

REFERENCES

- Appel J.B. Aversive aspects of a schedule of positive reinforcement. Journal of the Experimental Analysis of Behavior. 1963;6:423–428. doi: 10.1901/jeab.1963.6-423.

- Ator N.A. Mirror pecking and timeout under a multiple fixed-ratio schedule of food delivery. Journal of the Experimental Analysis of Behavior. 1980;34:319–328. doi: 10.1901/jeab.1980.34-319.

- Azrin N.H. Time-out from positive reinforcement. Science. 1961;133:382–383. doi: 10.1126/science.133.3450.382.

- Baron A, Herpolsheimer L.R. Averaging effects in the study of fixed-ratio response patterns. Journal of the Experimental Analysis of Behavior. 1999;71:145–153. doi: 10.1901/jeab.1999.71-145.

- Baron A, Mikorski J, Schlund M. Reinforcement magnitude and pausing on progressive-ratio schedules. Journal of the Experimental Analysis of Behavior. 1992;58:377–388. doi: 10.1901/jeab.1992.58-377.

- Baron A, Perone M, Galizio M. Analyzing the reinforcement process at the human level: Can application and behavioristic interpretation replace laboratory research. The Behavior Analyst. 1991a;14:95–105. doi: 10.1007/BF03392557.

- Baron A, Perone M, Galizio M. The experimental analysis of human behavior: Indispensable, ancillary, or irrelevant. The Behavior Analyst. 1991b;14:145–155. doi: 10.1007/BF03392565.

- Baum W.M. On two types of deviation from the matching law: Bias and undermatching. Journal of the Experimental Analysis of Behavior. 1974;22:231–242. doi: 10.1901/jeab.1974.22-231.

- Bejarano R, Williams D.C, Perone M. Pausing on multiple schedules: Toward a laboratory model of escape-motivated behavior. Experimental Analysis of Human Behavior Bulletin. 2003;21:18–20.

- Blakely E, Schlinger H. Determinants of pausing under variable-ratio schedules: Reinforcer magnitude, ratio size, and schedule configuration. Journal of the Experimental Analysis of Behavior. 1988;50:65–73. doi: 10.1901/jeab.1988.50-65.

- Brown T.G, Flory R.K. Schedule-induced escape from fixed-interval reinforcement. Journal of the Experimental Analysis of Behavior. 1972;17:395–403. doi: 10.1901/jeab.1972.17-395.

- Buskist W.F, Bennett R.H, Miller H.L. Effects of instructional constraints on human fixed-interval performance. Journal of the Experimental Analysis of Behavior. 1981;35:217–225. doi: 10.1901/jeab.1981.35-217.

- Carr E.G. The motivation of self-injurious behavior: A review of some hypotheses. Psychological Bulletin. 1977;84:800–816.

- Cohen P.H, Campagnoni F.R. The nature and determinants of spatial retreat in the pigeon between periodic grain presentations. Animal Learning and Behavior. 1989;17:39–48.

- Crossman E.K. The effects of fixed-ratio size in multiple and mixed fixed-ratio schedules. The Psychological Record. 1971;21:535–544.

- Dardano J.F. Self-imposed timeouts under increasing response requirements. Journal of the Experimental Analysis of Behavior. 1973;19:269–287. doi: 10.1901/jeab.1973.19-269.

- Dardano J.F. Response-produced timeouts under a progressive-ratio schedule with a punished reset option. Journal of the Experimental Analysis of Behavior. 1974;22:103–113. doi: 10.1901/jeab.1974.22-103.

- Davey G.C.L, Harzem P, Lowe C.F. The aftereffects of reinforcement magnitude and stimulus intensity. The Psychological Record. 1975;25:217–223.

- Derenne A, Baron A. Time-out punishment of long pauses on fixed-ratio schedules of reinforcement. The Psychological Record. 2001;51:39–51.

- Derenne A, Baron A. Preratio pausing: Effects of an alternative reinforcer on fixed- and variable-ratio responding. Journal of the Experimental Analysis of Behavior. 2002;77:273–282. doi: 10.1901/jeab.2002.77-273.

- Dube W.V. Computer software for stimulus control research with Macintosh computers. Experimental Analysis of Human Behavior Bulletin. 1991;9:28–30.

- Dube W.V, McIlvane W.J. Quantitative assessments of sensitivity to reinforcement contingencies in mental retardation. American Journal on Mental Retardation. 2002;107:136–145. doi: 10.1352/0895-8017(2002)107<0136:QAOSTR>2.0.CO;2.

- Edelson S.M, Taubman M.T, Lovaas O.I. Some social contexts of self-destructive behavior. Journal of Abnormal Child Psychology. 1983;11:299–311. doi: 10.1007/BF00912093.

- Falk J.L. Control of schedule-induced polydipsia: Type, size, and spacing of meals. Journal of the Experimental Analysis of Behavior. 1967;10:199–206. doi: 10.1901/jeab.1967.10-199.

- Felton M, Lyon D.O. The post-reinforcement pause. Journal of the Experimental Analysis of Behavior. 1966;9:131–134. doi: 10.1901/jeab.1966.9-131.

- Galuska C.M, Wade-Galuska T, Woods J.H, Winger G. Fixed-ratio schedules of cocaine self-administration in rhesus monkeys: Joint control of responding by past and upcoming doses. Behavioural Pharmacology. 2007;18:171–175. doi: 10.1097/FBP.0b013e3280d48073.

- Griffiths R.R, Thompson T. The post-reinforcement pause: A misnomer. The Psychological Record. 1973;23:229–235.

- Harzem P, Harzem A.L. Discrimination, inhibition, and simultaneous association of stimulus properties: A theoretical analysis of reinforcement. In: Harzem P, Zeiler M.D, editors. Advances in analysis of behaviour: Vol. 2. Predictability, correlation, and contiguity. New York: Wiley; 1981. pp. 81–124.

- Harzem P, Lowe C.F, Davey G.C.L. After-effects of reinforcement magnitude: Dependence on context. Quarterly Journal of Experimental Psychology. 1975;27:579–584.

- Harzem P, Lowe C.F, Priddle-Higson P.J. Inhibiting function of reinforcement: Magnitude effects on variable-interval schedules. Journal of the Experimental Analysis of Behavior. 1978;30:1–10. doi: 10.1901/jeab.1978.30-1.

- Hatten J.L, Shull R.L. Pausing on fixed interval schedules: Effects of the prior feeder duration. Behaviour Analysis Letters. 1983;3:101–111.

- Inman D.P, Cheney C.D. Functional variables in fixed ratio pausing with rabbits. The Psychological Record. 1974;24:193–202.

- Iwata B.A, Pace G.M, Dorsey M.F, Zarcone J.R, Vollmer T.R, Smith R.G. The functions of self-injurious behavior: An experimental-epidemiological analysis. Journal of Applied Behavior Analysis. 1994;27:215–240. doi: 10.1901/jaba.1994.27-215.

- Jensen C, Fallon D. Behavioral aftereffects of reinforcement and its omission as a function of reinforcement magnitude. Journal of the Experimental Analysis of Behavior. 1973;19:459–468. doi: 10.1901/jeab.1973.19-459.

- Keller F.S, Schoenfeld W.N. Principles of psychology: A systematic text in the science of behavior. New York: Appleton-Century-Crofts; 1950.

- Killeen P. Reinforcement frequency and contingency as factors in fixed-ratio behavior. Journal of the Experimental Analysis of Behavior. 1969;12:391–395. doi: 10.1901/jeab.1969.12-391.

- Lowe C.F. Determinants of human operant behavior. In: Zeiler M.D, Harzem P, editors. Advances in analysis of behaviour: Vol. 1. Reinforcement and the organization of behaviour. New York: Wiley; 1978. pp. 159–192.

- Lowe C.F, Davey G.C.L, Harzem P. Effects of reinforcement magnitude on interval and ratio schedules. Journal of the Experimental Analysis of Behavior. 1974;22:553–560. doi: 10.1901/jeab.1974.22-553.

- Lowe C.F, Harzem P. Species differences in temporal control of behavior. Journal of the Experimental Analysis of Behavior. 1977;28:189–201. doi: 10.1901/jeab.1977.28-189.

- Lowe C.F, Harzem P, Bagshaw M. Species differences in temporal control of behavior II: Human performance. Journal of the Experimental Analysis of Behavior. 1978;29:351–361. doi: 10.1901/jeab.1978.29-351.

- Lowe C.F, Harzem P, Hughes S. Determinants of operant behavior in humans: Some differences from animals. Quarterly Journal of Experimental Psychology. 1978;30:373–386. doi: 10.1080/14640747808400684.

- Madden G.J, Perone M. Human sensitivity to concurrent schedules of reinforcement: Effects of observing schedule-correlated stimuli. Journal of the Experimental Analysis of Behavior. 1999;71:303–318. doi: 10.1901/jeab.1999.71-303.

- Matthews B.A, Shimoff E, Catania A.C, Sagvolden T. Uninstructed human responding: Sensitivity to ratio and interval contingencies. Journal of the Experimental Analysis of Behavior. 1977;27:453–467. doi: 10.1901/jeab.1977.27-453.

- Miller L.K. Escape from an effortful situation. Journal of the Experimental Analysis of Behavior. 1968;11:619–627. doi: 10.1901/jeab.1968.11-619.

- Neef N.A, Lutz M.N. Assessment of variables affecting choice and application to classroom interventions. School Psychology Quarterly. 2001;16:239–252.

- Neef N.A, Shade D, Miller M.S. Assessing influential dimensions of reinforcers on choice in students with serious emotional disturbance. Journal of Applied Behavior Analysis. 1994;27:575–583. doi: 10.1901/jaba.1994.27-575.

- Perone M. Negative effects of positive reinforcement. The Behavior Analyst. 2003;26:1–14. doi: 10.1007/BF03392064.

- Perone M, Courtney K. Fixed-ratio pausing: Joint effects of past reinforcer magnitude and stimuli correlated with upcoming magnitude. Journal of the Experimental Analysis of Behavior. 1992;57:33–46. doi: 10.1901/jeab.1992.57-33.

- Perone M, Perone C.L, Baron A. Inhibition by reinforcement: Effects of reinforcer magnitude and timeout on fixed-ratio pausing. The Psychological Record. 1987;37:227–238.

- Pitts R.C, Malagodi E.F. Effects of reinforcement amount on attack induced under a fixed-interval schedule in pigeons. Journal of the Experimental Analysis of Behavior. 1996;65:93–110. doi: 10.1901/jeab.1996.65-93.

- Powell R.W. The effect of small sequential changes in fixed-ratio size upon the post-reinforcement pause. Journal of the Experimental Analysis of Behavior. 1968;11:589–593. doi: 10.1901/jeab.1968.11-589.

- Powell R.W. The effect of reinforcement magnitude upon responding under fixed-ratio schedules. Journal of the Experimental Analysis of Behavior. 1969;12:605–608. doi: 10.1901/jeab.1969.12-605.

- Priddle-Higson P.J, Lowe C.F, Harzem P. Aftereffects of reinforcement on variable-ratio schedules. Journal of the Experimental Analysis of Behavior. 1976;25:347–354. doi: 10.1901/jeab.1976.25-347.

- Rider D.P, Kametani N.N. Interreinforcement time, work time, and the postreinforcement pause. Journal of the Experimental Analysis of Behavior. 1984;42:305–319. doi: 10.1901/jeab.1984.42-305.

- Schlinger H, Blakely E, Kaczor T. Pausing under variable-ratio schedules: Interaction of reinforcer magnitude, variable-ratio size, and lowest ratio. Journal of the Experimental Analysis of Behavior. 1990;53:133–139. doi: 10.1901/jeab.1990.53-133.

- Shull R.L. The postreinforcement pause: Some implications for the correlational law of effect. In: Zeiler M.D, Harzem P, editors. Advances in analysis of behaviour: Vol. 1. Reinforcement and the organization of behaviour. New York: Wiley; 1979. pp. 193–221.

- Sidman M. Tactics of scientific research. New York: Basic Books; 1960.

- Staddon J.E.R. Effect of reinforcement duration on fixed-interval responding. Journal of the Experimental Analysis of Behavior. 1970;13:9–11. doi: 10.1901/jeab.1970.13-9.

- Staddon J.E.R. Temporal control, attention, and memory. Psychological Review. 1974;81:375–391.

- Thompson D.M. Escape from S+ associated with fixed-ratio reinforcement. Journal of the Experimental Analysis of Behavior. 1964;7:1–8. doi: 10.1901/jeab.1964.7-1.

- Thompson D.M. Punishment by S+ associated with fixed-ratio reinforcement. Journal of the Experimental Analysis of Behavior. 1965;8:189–194. doi: 10.1901/jeab.1965.8-189.

- Todd J.T, Morris E.K, Fenza K.M. Temporal organization of extinction-induced responding in preschool children. The Psychological Record. 1989;39:117–130.

- United States National Advisory Mental Health Council Behavioral Science Workgroup. Translating behavioral science into action: Report of the National Advisory Mental Health Council Behavioral Science Workgroup. Bethesda, MD: National Institutes of Health/National Institute of Mental Health; 2000. NIH Publication No. 00-4699.

- Wade-Galuska T, Perone M, Wirth O. Effects of past and upcoming response-force requirements on fixed-ratio pausing. Behavioural Processes. 2005;68:91–95. doi: 10.1016/j.beproc.2004.10.001.

- Zeiler M.D. Schedules of reinforcement: The controlling variables. In: Honig W.K, Staddon J.E.R, editors. Handbook of operant behavior. Englewood Cliffs, NJ: Prentice-Hall; 1977. pp. 201–232.