Abstract

Patients suffering from addiction persist in consuming substances of abuse, despite negative consequences or absence of positive consequences. One potential explanation is that these patients are impaired at flexibly adapting their behavior to changes in reward contingencies. A key aspect of adaptive decision-making involves updating the value of behavioral options. This is thought to be mediated via a teaching signal expressed as a reward prediction error (PE) in the striatum. However, to exert control over adaptive behavior, value signals need to be broadcast to higher executive regions, such as prefrontal cortex. Here we used functional MRI and a reinforcement learning task to investigate the neural mechanisms underlying maladaptive behavior in human male alcohol-dependent patients. We show that in alcohol-dependent patients the expression of striatal PEs is intact. However, abnormal functional connectivity between striatum and dorsolateral prefrontal cortex (dlPFC) predicted impairments in learning and the magnitude of alcohol craving. These results are in line with reports of dlPFC structural abnormalities in substance dependence and highlight the importance of frontostriatal connectivity in addiction, and its pivotal role in adaptive updating of action values and behavioral regulation. Furthermore, they extend the scope of neurobiological deficits underlying addiction beyond the focus on the striatum.

Introduction

A hallmark of addiction is the continued use of substances despite adverse consequences (American Psychiatric Association, 1994; Kalivas and Volkow, 2005). One hypothesis is that addicts have difficulty integrating reinforcements to guide future behavior. A possible mechanism is abnormal computation of reward prediction errors (PEs), which reflect the difference between expected and experienced outcomes. The firing of dopaminergic midbrain neurons correlates with trial-by-trial changes in PEs (Schultz et al., 1997; Bayer and Glimcher, 2005). This finding is paralleled by human neuroimaging studies showing that activity in the ventral striatum (VS), a target area of dopaminergic midbrain neurons, correlates with PEs (McClure et al., 2003; O'Doherty et al., 2003; Pessiglione et al., 2006) and with expectancies of uncertain outcomes (Breiter et al., 2001). These error signals are used to update the reward values associated with stimuli or actions in the striatum (Reynolds et al., 2001; Frank and Claus, 2006). It is therefore conceivable that abnormal representation of PEs in the striatum of substance-dependent individuals prevents them from updating the value of behavioral options and thereby produces the observed impairments.

However, the representation of PE alone is insufficient for successful decision-making. Even if these signals are appropriately represented, they must impact on higher executive control processes such as those implemented in dorsolateral prefrontal cortex (dlPFC, BA9/46). The dlPFC is involved in a variety of higher order executive functions essential for goal-directed behavior and decision-making, such as integrating information over longer time scales (Barraclough et al., 2004), coding action-outcome conjunctions (Lee and Seo, 2007), action planning (Mushiake et al., 2006) and self-control (Knoch et al., 2006; Hare et al., 2009). Evidence from primate electrophysiology studies demonstrates that reward associations initially formed in striatum are subsequently used to guide learning in dlPFC (Pasupathy and Miller, 2005). Furthermore, studies of alcohol-dependent patients show dlPFC volume abnormalities (Makris et al., 2008b), while cocaine-dependent polysubstance abusers show reduced dlPFC cortical thickness associated with abnormal decision-making (Makris et al., 2008a). Altogether, these results suggest that impaired frontostriatal connectivity could disrupt propagation of value information to dlPFC and contribute to the observed behavioral impairments.

Recently, it has been shown that the striatum of smokers represents fictive error signals, although these signals appear not to be used to guide behavior (Chiu et al., 2008). First, we hypothesized that the impairment of substance-dependent individuals in adaptive behavior reflects abnormal frontostriatal connectivity, whereas the representation of PEs in the striatum remains intact. Second, we hypothesized that impaired functional coupling should be accompanied by observable behavioral dysfunction such as slower learning. Finally, given the role of the dlPFC in self-control during decision-making (Knoch et al., 2006; Hare et al., 2009), structural abnormalities in the dlPFC of substance abusers (Makris et al., 2008a,b), and the role of striatal dopamine in cue-induced craving (Heinz et al., 2004; Everitt and Robbins, 2005; Di Chiara and Bassareo, 2007), we hypothesized that frontostriatal coupling should be related to patients' ability to control their alcohol craving.

Materials and Methods

Subjects.

Twenty male abstinent alcohol-dependent patients (aged 26–57, mean 42.45 ± 1.8) and 16 healthy male subjects (aged 23–47, mean 37.8 ± 2.22) were included. Patients and controls were right-handed and of central European origin. Written informed consent was obtained after the procedure was fully explained. The study was approved by the Ethics Committee of the Charité–Universitätsmedizin Berlin. The patients were diagnosed as alcohol-dependent according to ICD-10 (Dilling et al., 1991) and DSM-IV (American Psychiatric Association, 1994) criteria and had no other neurological or psychiatric disorder. Before the experiment, patients had abstained from alcohol in an inpatient detoxification treatment program for at least 7 d (mean: 16.9 d) and had been free of psychotropic medication (e.g., benzodiazepines or chlormethiazole) for at least four half-lives. Healthy subjects had no history of psychiatric or neurological disorder.

Because smoking is also an addiction, analyses were statistically controlled for smoking. Groups did not differ in age or smoking behavior (age: t 34 = 1.66; smoking: χ² = 0.73; all p > 0.1). The severity of alcohol craving was assessed with the Alcohol Urge Questionnaire (Bohn et al., 1995) before fMRI acquisition. Three patients and two control subjects declined to report their current subjective craving. To control for general intelligence as a potential confound, subjects also performed a verbal intelligence test (WST; Schmidt and Metzler, 1992). Three patients declined to take this test.

Task description.

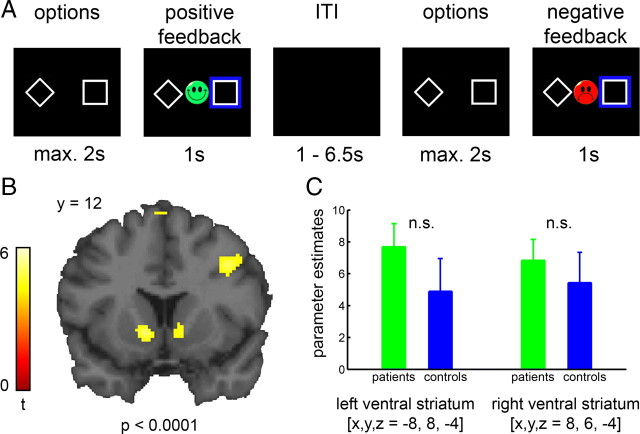

During fMRI acquisition, subjects performed a reward-guided decision-making task with dynamically changing response-outcome contingencies (Kahnt et al., 2009). In each trial, two abstract stimuli were presented on the left- and right-hand sides of the screen (Fig. 1 A) and subjects were asked to choose one with the left or right thumb on a response box as quickly as possible (maximum time: 2 s). Following the response, a blue box surrounded the chosen stimulus and feedback was presented for 1 s. The feedback was either a green smiling face for positive feedback or a red frowning face for negative feedback. Trials were separated by a variable interval of 1.5–6.5 s.

Figure 1.

Task description and PE-related activity in ventral striatum. A, Subjects chose one option by button press and were rewarded or punished according to probabilistic rules, which changed every 10 to 16 trials. Three rules were used: (1) right-hand responses rewarded with 80% and left-hand responses with 20% probability, otherwise punished; (2) the inverse rule; and (3) either response rewarded with 50% probability. B, BOLD activity in bilateral ventral striatum correlating with PE across all subjects. C, Parameter estimates of striatal activity correlating with PE. No group differences were observed (left: t 34 = 1.17, p = 0.25; right: t 34 = 0.64, p = 0.52).

Reward allocation was probabilistic. There were three types of reward allocation (i.e., block types): (1) left- and right-hand responses rewarded with 20% and 80% probability, respectively; (2) left- and right-hand responses rewarded with 80% and 20% probability, respectively; and (3) both responses rewarded with 50% probability. Block types changed, unpredictably for the subject, when two criteria were fulfilled: (1) a minimum of 10 trials and (2) a minimum of 70% correct responses in the entire block. If subjects did not reach the learning criteria within 16 trials, the task automatically advanced to the next block. The experiment comprised two runs of 100 trials each. Before entering the scanner, subjects performed a practice version of the task (without the reversal component) to familiarize themselves with its probabilistic character. Subjects were instructed to win as often as possible.
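The block-switching rule described above can be sketched as a small simulation. This is a minimal sketch, not the task code used in the study: the names (`BLOCK_TYPES`, `run_block`, the `choose` callback), the ±1 outcome coding, and the scoring of 50/50 blocks (where no response is treated as correct, so these blocks always run to the 16-trial limit) are assumptions made for illustration.

```python
import random

# Reward probability of a LEFT-hand response in each block type (from the text):
# "20/80": left 20%, right 80%; "80/20": left 80%, right 20%; "50/50": both 50%.
BLOCK_TYPES = {"20/80": 0.2, "80/20": 0.8, "50/50": 0.5}

MIN_TRIALS = 10     # criterion (1): at least 10 trials in the block
MIN_ACCURACY = 0.7  # criterion (2): at least 70% correct over the entire block
MAX_TRIALS = 16     # the block ends automatically after 16 trials


def correct_response(block):
    """Return the more frequently rewarded hand, or None for 50/50 blocks.

    ASSUMPTION: in 50/50 blocks no response is scored as correct.
    """
    p_left = BLOCK_TYPES[block]
    if p_left > 0.5:
        return "left"
    if p_left < 0.5:
        return "right"
    return None


def sample_outcome(block, response, rng):
    """Return +1 (reward) or -1 (punishment) for a response in the given block."""
    p_left = BLOCK_TYPES[block]
    p_reward = p_left if response == "left" else 1.0 - p_left
    return 1 if rng.random() < p_reward else -1


def block_should_end(n_trials, n_correct):
    """Switch when both criteria are met, or unconditionally after MAX_TRIALS."""
    if n_trials >= MAX_TRIALS:
        return True
    return n_trials >= MIN_TRIALS and n_correct / n_trials >= MIN_ACCURACY


def run_block(block, choose, rng):
    """Run one block with a choice function; return a list of (response, outcome)."""
    target = correct_response(block)
    history, n_correct = [], 0
    while True:
        response = choose(history)  # the choice function sees all trials so far
        outcome = sample_outcome(block, response, rng)
        history.append((response, outcome))
        n_correct += int(response == target)
        if block_should_end(len(history), n_correct):
            return history
```

With a responder that always presses left, an 80/20 block ends after exactly 10 trials (every response is correct), whereas a 20/80 block runs to the 16-trial limit: `len(run_block("80/20", lambda h: "left", random.Random(1)))` gives 10.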

Behavioral analyses—reinforcement learning model.

As a comprehensive measure of learning performance, learning speed was computed for each subject. It is defined as the average number of trials needed to reach the learning criterion of 4 correct responses within a sliding window of 5 trials during 20/80 and 80/20 blocks. We then analyzed behavioral and neural data using a standard reinforcement learning model (Sutton and Barto, 1998). Similar models have been used previously to study healthy and addicted subjects (O'Doherty et al., 2003; Samejima et al., 2005; Pessiglione et al., 2006; Cohen and Ranganath, 2007; Chiu et al., 2008; Kahnt et al., 2009). The model uses a PE ($\delta$) to update the action values ($Q$) associated with each response (left and right). The PE is defined as the difference between the received outcome $r_t$ and the expected outcome, which is the value of the chosen response:

$$\delta_t = r_t - Q_t(\text{chosen})$$

The action value of the chosen response is then updated according to

$$Q_{t+1}(\text{chosen}) = Q_t(\text{chosen}) + \alpha_{\text{outcome}}\,\delta_t$$

whereas the value of the unchosen response remains unchanged, $Q_{t+1}(\text{unchosen}) = Q_t(\text{unchosen})$. Here, $\alpha_{\text{outcome}}$ is one of two learning rates, for positive ($\alpha_{\text{win}}$) and negative ($\alpha_{\text{loss}}$) outcomes, which scale the effect of the PE on future action values. Model predictions were generated by soft-max action selection on the differences between the action values of both responses:

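$$P_t(\text{left}) = \frac{1}{1 + \exp\left(-\beta\,\left[Q_t(\text{left}) - Q_t(\text{right})\right]\right)}, \qquad P_t(\text{right}) = 1 - P_t(\text{left})$$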

Here, β is the inverse temperature controlling the stochasticity of the choices. Learning rates and the inverse temperature were estimated individually for each subject by fitting the model predictions to the subject's actual choices. Model fit was then compared between groups: we regressed the model predictions against the actual behavior and compared the standardized regression coefficients between groups with a two-sample t test. In addition, the individually estimated learning rates and inverse temperature were tested for group differences using a two-way ANCOVA (parameter × group, with smoking as a covariate).
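The model equations above can be written out as a short likelihood function and fitted per subject. The sketch below assumes outcomes coded as +1 (win) and −1 (loss), initial action values of zero, and a coarse grid search as a stand-in for whatever optimizer was actually used; none of these details is given in the text, and all function names are hypothetical.

```python
import math


def softmax_p_left(q_left, q_right, beta):
    """Two-option soft-max on the difference between action values."""
    return 1.0 / (1.0 + math.exp(-beta * (q_left - q_right)))


def negative_log_likelihood(choices, outcomes, alpha_win, alpha_loss, beta):
    """Sum of -log P(observed choice) under the model.

    choices: sequence of "left"/"right"; outcomes: +1 (win) or -1 (loss).
    ASSUMPTION: both action values start at 0.
    """
    q = {"left": 0.0, "right": 0.0}
    nll = 0.0
    for choice, r in zip(choices, outcomes):
        p_left = softmax_p_left(q["left"], q["right"], beta)
        p = p_left if choice == "left" else 1.0 - p_left
        nll -= math.log(max(p, 1e-12))         # guard against log(0)
        delta = r - q[choice]                  # PE: r_t - Q_t(chosen)
        alpha = alpha_win if r > 0 else alpha_loss
        q[choice] += alpha * delta             # only the chosen value is updated
    return nll


def fit_grid(choices, outcomes):
    """Coarse grid search; returns (nll, alpha_win, alpha_loss, beta) with lowest NLL."""
    alphas = [i / 10 for i in range(1, 10)]    # 0.1 ... 0.9
    betas = [0.5, 1, 2, 3, 5, 8]
    best = None
    for aw in alphas:
        for al in alphas:
            for b in betas:
                nll = negative_log_likelihood(choices, outcomes, aw, al, b)
                if best is None or nll < best[0]:
                    best = (nll, aw, al, b)
    return best
```

For a subject who always chose left and always won, the grid picks the largest learning rate for wins and the largest inverse temperature, since both make the repeated choice more probable; the loss learning rate is unconstrained in that case because no losses occurred.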

MRI acquisition and preprocessing.

Functional imaging was conducted on a 3.0 Tesla GE Signa scanner with an 8-channel head coil to acquire gradient echo T2*-weighted echo-planar images. The acquisition plane was tilted 30° from the anterior–posterior commissure line. In each session, 310 volumes (∼12 min) containing 29 slices (4 mm thick) were acquired. The imaging parameters were as follows: repetition time (TR) 2.3 s, echo time (TE) 27 ms, flip angle α = 90°, matrix size 96 × 96, and field of view (FOV) 260 mm, yielding an in-plane voxel resolution of 2.71 × 2.71 mm. We were unable to acquire data from the ventromedial part of the orbitofrontal cortex because of susceptibility artifacts at air–tissue interfaces.
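As a quick check of the acquisition numbers above, in-plane resolution follows from FOV divided by matrix size, and session duration from number of volumes times TR. The slab-coverage line assumes no inter-slice gap, which the text does not state.

```python
FOV_MM = 260      # field of view
MATRIX = 96       # in-plane matrix size (96 x 96)
TR_S = 2.3        # repetition time in seconds
N_VOLUMES = 310   # volumes per session
N_SLICES = 29
SLICE_MM = 4

in_plane_mm = FOV_MM / MATRIX          # about 2.71 mm per voxel edge
run_minutes = N_VOLUMES * TR_S / 60    # about 11.9 min per session
slab_mm = N_SLICES * SLICE_MM          # 116 mm nominal coverage (ASSUMPTION: no slice gap)

print(f"{in_plane_mm:.2f} mm, {run_minutes:.1f} min, {slab_mm} mm")
```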

Functional data were analyzed using SPM5 (Wellcome Department of Imaging Neuroscience, Institute of Neurology, London, UK). The first three volumes of each session were discarded. For preprocessing, images were slice time corrected, realigned, spatially normalized to a standard T2* template of the Montreal Neurological Institute (MNI), resampled to 2 mm isotropic voxels, and spatially smoothed using an 8 mm full-width at half-maximum (FWHM) Gaussian kernel.
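For reference, the 8 mm FWHM kernel corresponds to a Gaussian standard deviation of FWHM/(2√(2 ln 2)) ≈ 3.40 mm, or about 1.7 voxels at the 2 mm resampled resolution. The sketch below builds a normalized 1-D kernel for illustration; an isotropic 3-D Gaussian is separable, so it can be applied as this 1-D kernel along each axis. Truncating at 3σ is an assumption of this sketch and not necessarily what SPM5 does internally.

```python
import math


def fwhm_to_sigma(fwhm_mm):
    """Gaussian FWHM = 2 * sqrt(2 * ln 2) * sigma, hence sigma = FWHM / 2.3548..."""
    return fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def gaussian_kernel_1d(fwhm_mm, voxel_mm):
    """Normalized 1-D Gaussian kernel in voxel units, truncated at 3 sigma (ASSUMPTION)."""
    sigma_vox = fwhm_to_sigma(fwhm_mm) / voxel_mm
    radius = int(math.ceil(3 * sigma_vox))
    weights = [math.exp(-0.5 * (i / sigma_vox) ** 2) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]
```

For the settings in the text, `gaussian_kernel_1d(8, 2)` spans 13 voxels (a radius of 6 voxels around the center).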

fMRI data analysis.

To examine neural responses that correlate with ongoing PEs, we set up a general linear model (GLM) with a parametric design. The stimulus functions for reward and loss feedback were parametrically modulated by the trial-wise PE and convolved with a hemodynamic response function (HRF) to provide the regressors for the GLM. These regressors were orthogonalized with respect to the regressors for reward and loss trials and simultaneously regressed against the blood oxygenation level-dependent (BOLD) signal in each voxel. Individual contrast images were computed for PE-related responses and taken to a second-level random-effects analysis using one-sample t tests. Between-group whole-brain comparisons were conducted using two-sample t tests. All whole-brain analyses were thresholded at p < 0.0001 uncorrected with an extent threshold of 50 contiguous voxels.
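The construction of a PE-modulated regressor can be sketched as follows: a stick function at each feedback onset, weighted by the mean-centred trial-wise PE, is convolved with an HRF and orthogonalized with respect to the unmodulated onset regressor. This is an illustration under stated assumptions, not SPM5's implementation: the double-gamma shape only mimics SPM's canonical HRF defaults, onsets are binned to whole scans rather than SPM's finer microtime grid, and orthogonalization is done by Gram-Schmidt on the convolved regressors. One such pair would be built for reward and one for loss feedback.

```python
import math


def canonical_hrf(tr, length_s=32.0):
    """Double-gamma HRF sampled once per TR, scaled to unit sum.

    ASSUMPTION: SPM-like default shape (peak gamma with shape 6, undershoot
    gamma with shape 16, undershoot ratio 1/6, time unit of 1 s).
    """
    def gamma_pdf(t, shape):
        return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)

    times = [i * tr for i in range(int(length_s / tr) + 1)]
    h = [gamma_pdf(t, 6) - gamma_pdf(t, 16) / 6.0 for t in times]
    total = sum(h)
    return [v / total for v in h]


def convolve(signal, kernel):
    """Causal discrete convolution, truncated to the length of `signal`."""
    out = [0.0] * len(signal)
    for i, s in enumerate(signal):
        if s == 0.0:
            continue
        for j, k in enumerate(kernel):
            if i + j >= len(signal):
                break
            out[i + j] += s * k
    return out


def orthogonalize(x, y):
    """Gram-Schmidt: remove from x its projection onto y."""
    scale = sum(a * b for a, b in zip(x, y)) / sum(b * b for b in y)
    return [a - scale * b for a, b in zip(x, y)]


def pe_regressors(n_scans, tr, onsets_s, pes):
    """Onset regressor and PE-modulated regressor for one feedback type."""
    onset_stick = [0.0] * n_scans
    pe_stick = [0.0] * n_scans
    mean_pe = sum(pes) / len(pes)
    for onset, pe in zip(onsets_s, pes):
        idx = int(round(onset / tr))   # ASSUMPTION: onsets binned to the nearest scan
        onset_stick[idx] += 1.0
        pe_stick[idx] += pe - mean_pe  # mean-centred parametric modulator
    hrf = canonical_hrf(tr)
    onset_reg = convolve(onset_stick, hrf)
    pe_reg = orthogonalize(convolve(pe_stick, hrf), onset_reg)
    return onset_reg, pe_reg
```

After orthogonalization the PE regressor carries only variance that the onset regressor cannot explain, so its parameter estimate reflects trial-by-trial PE modulation rather than the average feedback response.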

To test for region-specific between-group differences in the ventral striatum, we extracted each subject's parameter estimates from a region of interest (ROI) defined by the clusters in bilateral ventral striatum in which activity correlated significantly with PE across all subjects of both groups (Fig. 1 B). Parameter estimates were averaged across voxels and then entered into a two-sample t test.
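The ROI comparison reduces to averaging each subject's parameter estimates over the ROI voxels and applying a pooled-variance (Student's) two-sample t test; with 20 patients and 16 controls this gives the 34 degrees of freedom reported. A minimal sketch with hypothetical function names:

```python
import math
from statistics import mean, variance


def roi_average(voxel_betas):
    """Average one subject's parameter estimates across ROI voxels."""
    return mean(voxel_betas)


def two_sample_t(a, b):
    """Student's two-sample t test with pooled variance; returns (t, df)."""
    na, nb = len(a), len(b)
    df = na + nb - 2
    pooled = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / df
    t = (mean(a) - mean(b)) / math.sqrt(pooled * (1 / na + 1 / nb))
    return t, df
```

Each group's list would contain one `roi_average` value per subject; the p value then follows from the t distribution with `df` degrees of freedom.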

Functional connectivity analysis.

To investigate alterations in the functional connectivity of the ventral striatum in addiction, we used the psychophysiological interaction (PPI) term (Friston et al., 1997; Pessoa et al., 2002). For each subject, the entire time series over the experiment was extracted from the clusters of the ventral striatum in which activity significantly correlated with PE (p < 0.0001 uncorrected, k = 50) and collapsed over both hemispheres (because the time courses were highly correlated; r = 0.83, p < 0.01). To create the PPI regressors, we multiplied these normalized time series with condition vectors containing ones for 6 TRs after each feedback type and zeros otherwise. This time window was selected to capture the entire hemodynamic response function, which peaks after 3 TRs and is back at baseline about 8 TRs after stimulus onset. The method used here relies on correlations in the observed BOLD time series and makes no assumptions about the nature of the neural events contributing to the BOLD signal (Kahnt et al., 2009). These regressors were used as covariates in a separate regression, which also included regressors for reward and loss trials convolved with an HRF to account for feedback-related activity. The resulting parameter estimates represent the extent to which activity in each voxel correlates with activity in the ventral striatum. Individual contrast images for functional connectivity during win > loss feedback were then computed and entered into one- and two-sample t tests.
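The PPI regressor described above can be sketched as follows. Averaging the left and right seed time series, z-scoring as the normalization step, and starting each 6-TR window at the feedback scan itself are assumptions of this sketch; the text specifies only that the time series were collapsed over hemispheres, normalized, and multiplied by a 6-TR condition vector. Function names are hypothetical.

```python
from statistics import mean, pstdev

WINDOW_TRS = 6  # ones for 6 TRs after each feedback event (from the text)


def zscore(x):
    """Normalize a time series to zero mean and unit (population) SD."""
    m, s = mean(x), pstdev(x)
    return [(v - m) / s for v in x]


def condition_vector(n_scans, feedback_scans, window=WINDOW_TRS):
    """1 for `window` scans starting at each feedback scan (ASSUMPTION), else 0."""
    vec = [0.0] * n_scans
    for start in feedback_scans:
        for i in range(start, min(start + window, n_scans)):
            vec[i] = 1.0
    return vec


def ppi_regressor(seed_left, seed_right, feedback_scans):
    """Seed time series (hemispheres averaged, z-scored) times the condition vector."""
    seed = zscore([(l + r) / 2 for l, r in zip(seed_left, seed_right)])
    cond = condition_vector(len(seed), feedback_scans)
    return [s * c for s, c in zip(seed, cond)]
```

Separate regressors would be built from the win and the loss feedback scans, entered into the same GLM, and their parameter estimates contrasted (win > loss).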

To further examine group differences, the strength of functional connectivity during reward and loss feedback was extracted for each subject from an ROI defined by the significant cluster (p < 0.0001 uncorrected, k = 50) in the contrast controls > patients (functional connectivity during win > loss). The differential connectivity strength (win > loss) between the ventral striatum and dlPFC was then used to predict individual learning speed and self-reported craving (Alcohol Urge Questionnaire).
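The brain-behavior analysis amounts to taking each subject's win-minus-loss connectivity estimate from the dlPFC ROI and correlating it with learning speed or craving. The sketch below uses Pearson's correlation and the standard conversion of r to a t statistic with n − 2 degrees of freedom; the text does not name the correlation coefficient, so Pearson's r is an assumption, and the function names are hypothetical.

```python
import math
from statistics import mean


def differential_connectivity(beta_win, beta_loss):
    """Per-subject connectivity modulation: win minus loss parameter estimate."""
    return [w - l for w, l in zip(beta_win, beta_loss)]


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic for H0: rho = 0, with n - 2 degrees of freedom."""
    return r * math.sqrt((n - 2) / (1 - r * r))
```

A negative r between `differential_connectivity(...)` and trials-to-criterion corresponds to the reported pattern: weaker feedback-related modulation, slower learning.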

Results

Behavioral results

There was no group difference in general intelligence (t 31 = 0.57, p = 0.57; patients (n = 17): 104.59 ± 15.26; controls (n = 16): 107.5 ± 13.76). During the reinforcement learning task, patients needed significantly more trials than controls to meet the learning criterion (patients: 7.98 ± 0.19, controls: 7.42 ± 0.15, t 34 = 2.25, p < 0.05). This group difference was also significant when learning speed was computed using an alternative criterion of 4 correct responses within a sliding window of 6 trials (t 34 = 2.89, p < 0.01).
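The learning-speed measure can be sketched as the first trial within a block at which at least 4 of the most recent 5 (or, for the alternative criterion, 6) responses are correct, averaged over 20/80 and 80/20 blocks. How partial windows at the start of a block and blocks that never reach criterion were handled is not stated; this sketch counts partial windows and skips such blocks, both assumptions.

```python
def trials_to_criterion(correct, n_needed=4, window=5):
    """Trials until `n_needed` of the last `window` responses are correct.

    correct: booleans for successive trials within one block.
    Returns None if the criterion is never reached.
    """
    for t in range(len(correct)):
        recent = correct[max(0, t - window + 1): t + 1]  # partial window early on (ASSUMPTION)
        if sum(recent) >= n_needed:
            return t + 1
    return None


def learning_speed(blocks, n_needed=4, window=5):
    """Mean trials-to-criterion over blocks that reached criterion (ASSUMPTION)."""
    counts = [trials_to_criterion(b, n_needed, window) for b in blocks]
    reached = [c for c in counts if c is not None]
    return sum(reached) / len(reached) if reached else None
```

The alternative criterion is obtained by calling the same functions with `window=6`.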

The fit of the model to subjects' behavior did not differ significantly between groups (t 34 = 0.74, p = 0.47). A two-way ANCOVA on the model parameters showed neither a significant main effect of group (F (1,33) = 0.2, p = 0.66) nor a significant group × parameter interaction (F (2,66) = 0.15, p = 0.86). Thus, the model fitted behavior equally well in both groups and the estimated parameters did not differ, suggesting that similar cognitive parameters underlie PE computation in both groups, which is an important prerequisite for comparing model-based fMRI results between groups.

fMRI results

Across all subjects, individually generated trial-wise PE correlated significantly with BOLD signal in midbrain, bilateral ventral striatum, orbitofrontal cortex, anterior cingulate cortex and dorsolateral prefrontal cortex (supplemental Table S1, available at www.jneurosci.org as supplemental material). Activity in striatum peaked at the ventral intersection between caudate and putamen (MNI [x, y, z], left [−8, 8, −4], t 35 = 5.2, p < 0.0001; right [8, 6, −4], t 35 = 4.76, p < 0.0001; Fig. 1 B), consistent with activations in the nucleus accumbens (Breiter et al., 1997).

In accordance with previous results in smokers (Chiu et al., 2008), a whole-brain group comparison revealed no significant differences between groups at the whole-brain threshold (p < 0.0001 uncorrected). To further probe group differences in PE-related activity in the ventral striatum, we performed an ROI analysis. No between-group differences were observed even at a liberal threshold (left: t 34 = 1.17, p = 0.25; right: t 34 = 0.64, p = 0.52, Fig. 1 C). Thus, patients appear to represent PEs in the ventral striatum and other brain regions with a magnitude similar to that of healthy controls.

Given the absence of group differences in striatal PE signals, we next examined the alternative hypothesis that frontostriatal connectivity is altered, by computing PPIs using the striatal cluster defined in the PE analysis. Across all subjects, whole-brain connectivity analysis showed significant functional connectivity of the ventral striatum during win > loss trials with bilateral dorsolateral prefrontal cortex, orbitofrontal cortex, parietal cortex, precuneus and midbrain (supplemental Table S2, available at www.jneurosci.org as supplemental material).

When comparing controls with patients, functional connectivity of the ventral striatal seed during win versus loss trials revealed a significant group difference in the right dlPFC ([52, 28, 30], t 34 = 5.12, p < 0.0001, Fig. 2 A). Within the control group, frontostriatal connectivity was significantly stronger during win than during loss feedback (t 15 = 8.78, p < 0.001), a feedback-related modulation that was absent in the patient group (t 19 = 1.45, p = 0.16, Fig. 2 B). No other brain region showed a significant group difference (supplemental Fig. S2, available at www.jneurosci.org as supplemental material). We performed two control analyses to rule out the possibility that this group difference reflects a general difference in connectivity. First, task-independent connectivity did not reveal any significant group difference in connectivity between VS and dlPFC. Second, a PPI model with modulation by button press (right vs left) did not reveal significant group differences in the dlPFC. These results suggest that the group difference in frontostriatal connectivity is specific to modulation by feedback (win > loss).

Figure 2.

Impaired frontostriatal connectivity in addiction. A, Functional connectivity analysis with ventral striatum as seed region revealed group differences in dlPFC during win compared with loss trials. B, Functional connectivity in controls distinguishes significantly between win and loss trials (t 15 = 8.78, p < 0.001). This feedback-related modulation in functional connectivity was absent in patients (t 19 = 1.45, p = 0.16). Asterisks indicate statistical significance. C, Relationship between learning speed and frontostriatal connectivity modulation. The smaller the feedback-related connectivity modulation, the more trials were required to learn the rule (r = −0.38, p < 0.05). D, Relationship between reported craving and frontostriatal connectivity modulation. The greater the reported craving, the less pronounced the connectivity modulation (r = −0.52, p < 0.01). Solid lines in C and D represent best-fitting regression lines.

Finally, to provide face validity for our PPI results, we investigated whether frontostriatal connectivity correlated with behavioral learning performance and with patients' ability to control alcohol craving. The differential modulation of connectivity correlated significantly with the number of trials needed to learn the new rule after reversals (r = −0.38, p < 0.05; Fig. 2 C). Repeating the correlation analysis with the alternative learning criterion (4 correct responses within a sliding window of 6 trials) yielded a similar result (r = −0.39, p < 0.05). Furthermore, the stronger the outcome-dependent modulation of frontostriatal connectivity, the weaker the reported craving (r = −0.52, p < 0.01, Fig. 2 D), a relationship that was significantly stronger in patients than in controls (z = 2.73, p < 0.01; see also supplemental Fig. S1, available at www.jneurosci.org as supplemental material).
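The text does not name the test behind the group comparison of correlations (z = 2.73). One common choice for two independent correlations is Fisher's r-to-z test, sketched below as an assumption rather than as the procedure actually used; because the group-wise r and n values are not reported here, none are plugged in.

```python
import math


def fisher_z(r):
    """Fisher's variance-stabilizing transform of a correlation coefficient."""
    return math.atanh(r)


def compare_independent_correlations(r1, n1, r2, n2):
    """z test for H0: rho1 == rho2 in two independent samples; returns (z, two-tailed p)."""
    z = (fisher_z(r1) - fisher_z(r2)) / math.sqrt(1 / (n1 - 3) + 1 / (n2 - 3))
    p = math.erfc(abs(z) / math.sqrt(2))  # two-tailed p under the standard normal
    return z, p
```

For example, r = 0.5 versus r = 0.0 with 28 subjects per group gives z of about 1.94 and a two-tailed p of about 0.05.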

Discussion

In this study we show that abnormal decision-making in alcohol dependence is associated with impaired frontostriatal connectivity rather than with a deficit in the representation of PE per se. Dorsolateral prefrontal cortex has been suggested to integrate motivational information (such as reward history) and cognitive information (such as contextual representations) (Sakagami and Watanabe, 2007), functions that appear to rely in part on interactions with the striatum and other subcortical structures (Cools et al., 2007; Delgado et al., 2008). Furthermore, a recent study has shown volume abnormalities in the dlPFC of patients with alcohol dependence (Makris et al., 2008b). Even though PPI does not provide any information about directionality, our results suggest that in addiction, whereas PEs are accurately coded in the ventral striatum, abnormal signal propagation between VS and dlPFC leads to impairments in modifying and controlling behavior following reinforcement. This is in line with a hallmark of addiction, namely, the inability to take the consequences of behavior into account when making choices, even though patients are aware of these consequences.

Relative to controls, alcohol-dependent patients had impaired modulation of frontostriatal connectivity by valence. Enhanced connectivity during reward contexts provides a mechanism that enables reinforcement of the current action in the dlPFC by striatal reward signals. Conversely, relatively weak connectivity during unrewarded behavior would be expected to lessen the impact of the associated action plan in dlPFC. Our findings demonstrate that a lack of modulation of frontostriatal interactions is predictive of both maladaptive drug-related (i.e., patients' ability to control their alcohol craving) and non-drug-related (i.e., learning performance) behavior. A recent study by Hare et al. (2009) has shown that functional connectivity between dlPFC and value-sensitive brain regions is associated with self-control in the face of appealing but unhealthy food items. Also, activity in the dlPFC increases when subjects are asked to actively regulate reward expectations using cognitive strategies (Delgado et al., 2008). In line with this, a study in which transcranial magnetic stimulation (TMS) temporarily disrupted activity in the right dlPFC demonstrated that subjects, although aware of the unfairness of an offer, were unable to use this information in their decisions (Knoch et al., 2006). Studies with transcranial direct current stimulation (tDCS) and repetitive TMS targeting dlPFC have shown that modulating dlPFC activity significantly reduces not only risk-taking behavior (Fecteau et al., 2007) but also craving for alcohol (Boggio et al., 2008), cocaine (Camprodon et al., 2007) and food (Uher et al., 2005). Furthermore, Makris et al. (2008a) have demonstrated that cortical abnormalities in the dlPFC of polysubstance abusers predict restricted behavioral repertoires, a defining feature of addiction.

In summary, our results provide evidence that disrupted functional coupling between striatum and prefrontal cortex is associated with core symptoms of addiction. This mechanism is related to both deficits in reward guided decision-making and patients' inability to control their drug-craving. Finally, these observations extend the scope of neurobiological deficits underlying addiction above and beyond the dopaminergic striatum.

Footnotes

This study was supported by the German Research Foundation (Deutsche Forschungsgemeinschaft, HE2597/4-3 and 7-3) and by the Bernstein Center for Computational Neuroscience Berlin (Bundesministerium für Bildung und Forschung Grants 01GQ0411 and 01GS08159).

References

- American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders (DSM-IV). Washington, DC: American Psychiatric Association; 1994.

- Barraclough DJ, Conroy ML, Lee D. Prefrontal cortex and decision making in a mixed-strategy game. Nat Neurosci. 2004;7:404–410. doi: 10.1038/nn1209.

- Bayer HM, Glimcher PW. Midbrain dopamine neurons encode a quantitative reward prediction error signal. Neuron. 2005;47:129–141. doi: 10.1016/j.neuron.2005.05.020.

- Boggio PS, Sultani N, Fecteau S, Merabet L, Mecca T, Pascual-Leone A, Basaglia A, Fregni F. Prefrontal cortex modulation using transcranial DC stimulation reduces alcohol craving: a double-blind, sham-controlled study. Drug Alcohol Depend. 2008;92:55–60. doi: 10.1016/j.drugalcdep.2007.06.011.

- Bohn MJ, Krahn DD, Staehler BA. Development and initial validation of a measure of drinking urges in abstinent alcoholics. Alcohol Clin Exp Res. 1995;19:600–606. doi: 10.1111/j.1530-0277.1995.tb01554.x.

- Breiter HC, Gollub RL, Weisskoff RM, Kennedy DN, Makris N, Berke JD, Goodman JM, Kantor HL, Gastfriend DR, Riorden JP, Mathew RT, Rosen BR, Hyman SE. Acute effects of cocaine on human brain activity and emotion. Neuron. 1997;19:591–611. doi: 10.1016/s0896-6273(00)80374-8.

- Breiter HC, Aharon I, Kahneman D, Dale A, Shizgal P. Functional imaging of neural responses to expectancy and experience of monetary gains and losses. Neuron. 2001;30:619–639. doi: 10.1016/s0896-6273(01)00303-8.

- Camprodon JA, Martínez-Raga J, Alonso-Alonso M, Shih MC, Pascual-Leone A. One session of high frequency repetitive transcranial magnetic stimulation (rTMS) to the right prefrontal cortex transiently reduces cocaine craving. Drug Alcohol Depend. 2007;86:91–94. doi: 10.1016/j.drugalcdep.2006.06.002.

- Chiu PH, Lohrenz TM, Montague PR. Smokers' brains compute, but ignore, a fictive error signal in a sequential investment task. Nat Neurosci. 2008;11:514–520. doi: 10.1038/nn2067.

- Cohen MX, Ranganath C. Reinforcement learning signals predict future decisions. J Neurosci. 2007;27:371–378. doi: 10.1523/JNEUROSCI.4421-06.2007.

- Cools R, Sheridan M, Jacobs E, D'Esposito M. Impulsive personality predicts dopamine-dependent changes in frontostriatal activity during component processes of working memory. J Neurosci. 2007;27:5506–5514. doi: 10.1523/JNEUROSCI.0601-07.2007.

- Delgado MR, Gillis MM, Phelps EA. Regulating the expectation of reward via cognitive strategies. Nat Neurosci. 2008;11:880–881. doi: 10.1038/nn.2141.

- Di Chiara G, Bassareo V. Reward system and addiction: what dopamine does and doesn't do. Curr Opin Pharmacol. 2007;7:69–76. doi: 10.1016/j.coph.2006.11.003.

- Dilling H, Mombour W, Schmidt MH. Internationale Klassifikation psychischer Störungen: ICD-10 Kapitel V (F). Klinisch-diagnostische Leitlinien. Bern: Huber; 1991.

- Everitt BJ, Robbins TW. Neural systems of reinforcement for drug addiction: from actions to habits to compulsion. Nat Neurosci. 2005;8:1481–1489. doi: 10.1038/nn1579.

- Fecteau S, Pascual-Leone A, Zald DH, Liguori P, Théoret H, Boggio PS, Fregni F. Activation of prefrontal cortex by transcranial direct current stimulation reduces appetite for risk during ambiguous decision making. J Neurosci. 2007;27:6212–6218. doi: 10.1523/JNEUROSCI.0314-07.2007.

- Frank MJ, Claus ED. Anatomy of a decision: striato-orbitofrontal interactions in reinforcement learning, decision making, and reversal. Psychol Rev. 2006;113:300–326. doi: 10.1037/0033-295X.113.2.300.

- Friston KJ, Buechel C, Fink GR, Morris J, Rolls E, Dolan RJ. Psychophysiological and modulatory interactions in neuroimaging. Neuroimage. 1997;6:218–229. doi: 10.1006/nimg.1997.0291.

- Hare TA, Camerer CF, Rangel A. Self-control in decision-making involves modulation of the vmPFC valuation system. Science. 2009;324:646–648. doi: 10.1126/science.1168450.

- Heinz A, Siessmeier T, Wrase J, Hermann D, Klein S, Grüsser-Sinopoli SM, Flor H, Braus DF, Buchholz HG, Gründer G, Schreckenberger M, Smolka MN, Rösch F, Mann K, Bartenstein P. Correlation between dopamine D-2 receptors in the ventral striatum and central processing of alcohol cues and craving. Am J Psychiatry. 2004;161:1783–1789. doi: 10.1176/appi.ajp.161.10.1783.

- Kahnt T, Park SQ, Cohen MX, Beck A, Heinz A, Wrase J. Dorsal striatal-midbrain connectivity in humans predicts how reinforcements are used to guide decisions. J Cogn Neurosci. 2009;21:1332–1345. doi: 10.1162/jocn.2009.21092.

- Kalivas PW, Volkow ND. The neural basis of addiction: a pathology of motivation and choice. Am J Psychiatry. 2005;162:1403–1413. doi: 10.1176/appi.ajp.162.8.1403.

- Knoch D, Pascual-Leone A, Meyer K, Treyer V, Fehr E. Diminishing reciprocal fairness by disrupting the right prefrontal cortex. Science. 2006;314:829–832. doi: 10.1126/science.1129156.

- Lee D, Seo H. Mechanisms of reinforcement learning and decision making in the primate dorsolateral prefrontal cortex. Ann N Y Acad Sci. 2007;1104:108–122. doi: 10.1196/annals.1390.007.

- Makris N, Gasic GP, Kennedy DN, Hodge SM, Kaiser JR, Lee MJ, Kim BW, Blood AJ, Evins AE, Seidman LJ, Iosifescu DV, Lee S, Baxter C, Perlis RH, Smoller JW, Fava M, Breiter HC. Cortical thickness abnormalities in cocaine addiction—a reflection of both drug use and a pre-existing disposition to drug abuse? Neuron. 2008a;60:174–188. doi: 10.1016/j.neuron.2008.08.011.

- Makris N, Oscar-Berman M, Jaffin SK, Hodge SM, Kennedy DN, Caviness VS, Marinkovic K, Breiter HC, Gasic GP, Harris GJ. Decreased volume of the brain reward system in alcoholism. Biol Psychiatry. 2008b;64:192–202. doi: 10.1016/j.biopsych.2008.01.018.

- McClure SM, Berns GS, Montague PR. Temporal prediction errors in a passive learning task activate human striatum. Neuron. 2003;38:339–346. doi: 10.1016/s0896-6273(03)00154-5.

- Mushiake H, Saito N, Sakamoto K, Itoyama Y, Tanji J. Activity in the lateral prefrontal cortex reflects multiple steps of future events in action plans. Neuron. 2006;50:631–641. doi: 10.1016/j.neuron.2006.03.045.

- O'Doherty JP, Dayan P, Friston K, Critchley H, Dolan RJ. Temporal difference models and reward-related learning in the human brain. Neuron. 2003;38:329–337. doi: 10.1016/s0896-6273(03)00169-7.

- Pasupathy A, Miller EK. Different time courses of learning-related activity in the prefrontal cortex and striatum. Nature. 2005;433:873–876. doi: 10.1038/nature03287.

- Pessiglione M, Seymour B, Flandin G, Dolan RJ, Frith CD. Dopamine-dependent prediction errors underpin reward-seeking behaviour in humans. Nature. 2006;442:1042–1045. doi: 10.1038/nature05051.

- Pessoa L, Gutierrez E, Bandettini P, Ungerleider L. Neural correlates of visual working memory: fMRI amplitude predicts task performance. Neuron. 2002;35:975–987. doi: 10.1016/s0896-6273(02)00817-6.

- Reynolds JN, Hyland BI, Wickens JR. A cellular mechanism of reward-related learning. Nature. 2001;413:67–70. doi: 10.1038/35092560.

- Sakagami M, Watanabe M. Integration of cognitive and motivational information in the primate lateral prefrontal cortex. Ann N Y Acad Sci. 2007;1104:89–107. doi: 10.1196/annals.1390.010.

- Samejima K, Ueda Y, Doya K, Kimura M. Representation of action-specific reward values in the striatum. Science. 2005;310:1337–1340. doi: 10.1126/science.1115270.

- Schmidt K, Metzler P. Wortschatztest (WST). Weinheim: Beltz; 1992.

- Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275:1593–1599. doi: 10.1126/science.275.5306.1593.

- Sutton R, Barto A. Reinforcement learning: an introduction. Cambridge, MA: MIT Press; 1998.

- Uher R, Yoganathan D, Mogg A, Eranti SV, Treasure J, Campbell IC, McLoughlin DM, Schmidt U. Effect of left prefrontal repetitive transcranial magnetic stimulation on food craving. Biol Psychiatry. 2005;58:840–842. doi: 10.1016/j.biopsych.2005.05.043.