Abstract

We propose a new, fully automated, velocity-based algorithm that identifies fixations from eye-movement records of both eyes, using individual-specific thresholds. The algorithm is based on robust minimum covariance determinant (MCD) estimators and control chart procedures, and it is conceptually simple and computationally attractive. To determine fixations, it uses velocity thresholds based on the natural within-fixation variability of both eyes. It improves over existing approaches by automatically identifying fixation thresholds that are specific to (a) each eye, (b) the x- and y-directions, (c) tasks, and (d) individuals. We applied the proposed Binocular-Individual Threshold (BIT) algorithm to two large datasets collected on eye trackers with different sampling frequencies, and computed descriptive statistics of fixations for large samples of individuals across a variety of tasks, including reading, scene viewing, and search on supermarket shelves. Our analysis shows considerable differences in the characteristics of fixations not only between these tasks, but also between individuals.

Keywords: Eye tracking, Fixation detection, Saccade thresholds, Reading, Search, Scene viewing

The volume of eye-tracking applications in vision research, human factors, psychology, engineering, and business research has grown exponentially in recent years (Duchowski, 2003; Henderson & Hollingworth, 1998; Poole & Ball, 2006; Rayner, 1998; Wedel & Pieters, 2007). Sequences of eye fixations are basic components of eye movements that researchers in these fields use to gain an understanding of visual behavior. Various algorithms have been proposed to identify eye fixations from the recordings of the point of regard (POR) that eye-tracking equipment provides (e.g., Urruty, Lew, Ihadeddene, & Simovici, 2007). Most algorithms commonly used in eye-movement research identify fixations based on thresholds of velocity, distance or dispersion, duration, angle, and/or acceleration of the POR. In practice, dispersion-based methods have most often been implemented in commercial eye-tracking software, such as Tobii Clearview (described in Nyström & Holmqvist, 2010). Recently, however, velocity-based algorithms have gained increasing interest because they are more transparent and more accurate in identifying the precise onset and offset of saccades (Nyström & Holmqvist, 2010). For instance, the EyeLink 1000 uses a combined velocity and acceleration algorithm (Stampe, 1993). In this article, we propose a new velocity-based algorithm for the fully automated identification of fixations and saccades from records of the point of regard, which addresses important challenges faced by currently available algorithms.

First, virtually all algorithms for determining fixations from eye-movement recordings have used the POR of a single eye (for reviews, see Duchowski, 2003; Salvucci & Goldberg, 2000), and most eye-movement research to date has relied on such monocular data. Exceptions are Duchowski et al. (2002), Engbert and Kliegl (2003), and Engbert and Mergenthaler (2006). Duchowski et al. (2002) presented a binocular velocity- and acceleration-based algorithm that allows for the measurement of vergence eye movements and is therefore applicable to 3-D eye-movement data. In addition, these authors used binocular information to reduce noise in the eye-tracking data by averaging the positions of the left and right eye. The velocity-based algorithm of Engbert and Kliegl (2003), later updated by Engbert and Mergenthaler (2006), calculates fixations and saccades for each eye separately. In a second step, binocular saccades are defined as saccades occurring in the left and right eye with a temporal overlap. Application of this algorithm to reading revealed the importance of binocular coordination (Nuthmann & Kliegl, 2009). Liversedge, White, Findlay, and Rayner (2006) and Vernet and Kapoula (2009) also recently documented the importance of binocular coordination of eye movements during reading, and called for more research in this emerging field (see also Kloke & Jaschinski, 2006). Our algorithm for identifying fixations therefore accommodates binocular viewing.

Second, in most fixation identification algorithms to date, the thresholds used to identify fixations and distinguish them from saccades in eye-movement records are fixed a priori across individuals and often even across tasks. Exceptions are two-state hidden Markov models (Rothkopf & Pelz, 2004; Salvucci & Goldberg, 2000) and the velocity-based algorithms of Nyström and Holmqvist (2010), Engbert and Kliegl (2003), and Engbert and Mergenthaler (2006). Fixed, a priori thresholds have two drawbacks: the resulting fixation data may be sensitive to the specific thresholds chosen, and potential differences in the characteristics of fixations between directions of movement of the POR, stimuli, and individuals are ignored. This matters because the characteristics of fixations and their role in information processing may vary systematically between tasks and individuals (Rayner, Li, Williams, Cave, & Well, 2007), and they may also vary with the instrumentation. It thus seems crucial to allow fixation thresholds to vary not only between tasks, but also between individuals. For example, in the open-source ILAB program for eye-movement analysis (Gitelman, 2002), eye blinks are removed automatically and fixations are calculated using the dispersion-based algorithm of Widdel (1984). Gitelman reports that this algorithm is sensitive to the choice of thresholds defining a fixation, including the maximum horizontal and vertical eye movements and the minimum fixation duration (Karsh & Breitenbach, 1983), and the user has to set these thresholds based on the task, stimuli, and equipment. Two-state hidden Markov models avoid these problems and determine fixations and saccades probabilistically, based on the different distributions of velocities during fixations and saccades. An advantage of these models is that all parameters are estimated from the eye-tracking data, so that differences between researchers are avoided. 
However, they are computationally unattractive, requiring relatively long computation times, and they are more difficult to implement, which is why they have rarely been used in practice. Another solution is provided by the velocity-based algorithms of Engbert and Kliegl (2003), Engbert and Mergenthaler (2006), and Nyström and Holmqvist (2010), which use the variability of the eye-tracking data to determine individual- and task-specific velocity thresholds. Nyström and Holmqvist iteratively update velocity thresholds based on the variability of the POR until a convergence criterion (set to 1°/s) is reached. Engbert and his colleagues set velocity thresholds at a multiple λ (λ = 6 in Engbert and Kliegl, and λ = 5 in Engbert and Mergenthaler) of a median-based estimate of the standard deviation of the POR velocity. In addition to individual- and task-specific thresholds, the algorithm of Engbert and his colleagues also determines velocity thresholds that are specific to the eye (left vs. right) and to the horizontal and vertical directions. These algorithms are computationally attractive, but researchers still need to set specific parameters, i.e., the convergence criterion in the algorithm of Nyström and Holmqvist, or λ in the algorithms of Engbert and his colleagues. In addition, these algorithms do not fully exploit the statistical relationships between the left and right eye, and between the horizontal and vertical directions of eye movements. Yet such correlational information is valuable in classifying eye-movement data into fixations and saccades. Our Binocular-Individual Threshold (BIT) algorithm is therefore a parameter-free fixation identification algorithm that automatically identifies task- and individual-specific velocity thresholds by optimally exploiting the statistical properties of the eye-movement data across eyes and directions of eye movement.
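For contrast with BIT, the direction-specific threshold of Engbert and colleagues can be sketched in a few lines of Python. This is a simplified illustration under our own assumptions, not their published code: the function name is hypothetical, velocities are plain first differences (their papers smooth velocities with a moving average over neighboring samples), and λ = 6 follows Engbert and Kliegl (2003). For each direction, σ is the median-based estimate √(med(v²) − med(v)²) and η = λσ; a sample is a candidate saccade if (v_x/η_x)² + (v_y/η_y)² > 1. Because this ellipse is axis-aligned, any covariance between x- and y-velocities is ignored.

```python
import numpy as np


def engbert_kliegl_saccade_candidates(pos, lam=6.0):
    """Flag velocity samples of one eye that fall outside an axis-aligned ellipse.

    pos : (T, 2) array of x and y positions of a single eye.
    lam : multiplier of the median-based velocity SD (6 in Engbert & Kliegl, 2003).
    Returns a boolean array of length T - 1 (one flag per velocity sample).
    """
    pos = np.asarray(pos, dtype=float)
    # Simplification: plain first differences instead of a moving-average velocity.
    vel = np.diff(pos, axis=0)
    # Median-based SD estimate per direction: sqrt(med(v^2) - med(v)^2).
    sigma = np.sqrt(np.median(vel**2, axis=0) - np.median(vel, axis=0) ** 2)
    eta = lam * sigma  # separate thresholds for the x and y directions
    # Axis-aligned ellipse: x and y are treated as independent.
    return ((vel / eta) ** 2).sum(axis=1) > 1.0
```

Applied separately to each eye, this yields the per-eye, per-direction thresholds described above, but no term links the two eyes or the two directions.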

Differences in fixations and saccades between individuals and tasks

In two influential reviews of research on eye movements in information processing, Rayner (1998, 2009) provided eye-movement statistics for a range of tasks. Among others, he reported mean fixation durations of 225–250 ms for (silent) reading, 180–275 ms for visual search, and 260–330 ms for scene viewing. Saccade lengths were about 2° for reading, 3° for visual search, and 4° for scene viewing. These average eye-movement statistics have been shown to vary systematically between individuals and tasks.

Andrews and Coppola (1999) recorded eye movements (sampling frequency 100 Hz) of 15 individuals across various tasks and image types (simple textured patterns, complex natural scenes, visual search displays, and text). Horizontal and vertical differences in POR location were computed at each time point to determine eye velocity. A saccadic eye movement was detected when the eye displacement exceeded 0.2° at a velocity of at least 20°/s, with the same thresholds for all individuals. The epochs between saccades were labeled as fixations (procedures for dealing with blinks and other outliers are not described in the article). Average fixation durations ranged from 150 to 220 ms during reading, from 190 to 230 ms during visual search, and from 200 to 400 ms during scene viewing. Saccade sizes ranged from 3 to 7° during scene viewing, 3 to 8° during reading, and 4 to 7° during visual search. Thus, mean fixation durations tended to be shorter and saccade sizes larger than those reported by Rayner (1998, 2009).

Rayner et al. (2007) provided the first systematic study of eye-movement patterns for a larger sample of participants (n = 74) and a larger number of stimuli, using the EyeLink II tracker, which samples eye movements at 250 Hz. This tracker uses manufacturer-set thresholds for displacement, velocity, and acceleration of 0.10°, 30°/s, and 8,000°/s², respectively, to define fixations and saccades for all tasks and individuals. As is common, fixations with durations more than three standard deviations above the mean were removed. Rayner et al. found average fixation durations of 254 ms during (English) reading, 210 ms during visual search, and 280 ms during scene viewing, among others. Average saccade lengths were 2.19°, 5.45°, and 5.21°, respectively, for these three tasks. Cultural differences (between Chinese and bilingual individuals) were observed, mostly when reading Chinese text. The fixation durations were in the same range as those reported by Rayner (1998, 2009), whereas the saccade sizes were somewhat larger than Rayner's but similar to those reported by Andrews and Coppola (1999).

Over, Hooge, Vlaskamp, and Erkelens (2007) investigated fixation duration and saccade size during the time course of search in two experiments. Participants in the first experiment did not know the target's conspicuity, whereas participants in the second experiment did. In both experiments, the authors used an EyeLink I tracker (sampling frequency 250 Hz) and identified fixations using a velocity-based algorithm, with different parameter settings in the two experiments. In experiment 1, they identified saccades based on a velocity threshold of 30°/s, and defined the start and end of a saccade as the points at which velocity was at least two standard deviations higher than the velocity during the preceding fixation (see also van der Steen & Bruno, 1995). Saccades with amplitudes smaller than 0.5° were discarded, and the fixations before and after such a saccade were merged into one. Fixations shorter than 20 ms were also discarded. In their second experiment, the authors used a velocity threshold of 50°/s, and discarded saccades with amplitudes smaller than 0.1° and fixations shorter than 50 ms. In both experiments, the authors found that saccade amplitude decreased and fixation duration increased during the time course of search (although this effect was stronger in the first experiment). In the first experiment, from the second to the last fixation, durations increased from 173 to 252 ms (a 43% increase), and saccade amplitudes decreased from 7.7° to 5.3° (a 35% decrease). The results of the second experiment are reported in normalized values and show an 18% increase in fixation duration and an average 23% decrease in saccade amplitude. These results support a coarse-to-fine search pattern in terms of eye-movement statistics.

Nakatani and van Leeuwen (2008) distinguished between the durations of initial fixations and refixations on objects. They used the EyeLink I tracker and a velocity-based algorithm to identify fixations. Before applying the algorithm, bad segments of the eye-movement records were removed. Although the authors do not give the exact parameter settings of their algorithm, they mention that thresholds were set to detect saccades larger than 0.6° and fixations longer than 100 ms. Participants were presented with sequences of letters and digits and performed several category (letter/digit) and location (odd/even row) judgment tasks. To identify multimodal distributions of fixation durations, the authors applied a logarithmic (ln) transformation to the fixation durations, which reduced the skewness of their distribution. They observed multimodal distributions of fixation durations caused by differences in the durations of first versus subsequent fixations, and these differences were larger than the differences in fixation durations between tasks. The multimodality was explained by the fact that a single fixation on an object was longer (6.09 ln(ms), corresponding to 420 ms, SD = 0.54 ln(ms)) than the first fixation in a sequence of two or more fixations on an object (5.12 ln(ms), corresponding to 167 ms, SD = 0.59 ln(ms)). The second fixation in a two-fixation sequence was of intermediate length (5.83 ln(ms), corresponding to 339 ms, SD = 0.55 ln(ms)).

Castelhano and Henderson (2008) also distinguished initial from later fixations in picture viewing. They monitored eye movements at a sampling rate of 1,000 Hz using a Generation 5.5 Stanford Research Institute Dual Purkinje Image Eyetracker. Fixations were identified using a velocity-based algorithm with a velocity threshold of 6.58°/s (see Henderson, McClure, Pierce, & Schrock, 1997). Fixations shorter than 90 ms were removed from the analysis. They observed significant differences between picture types (longest for photographs, followed by line drawings and 3D renderings). Generally, they found the first five fixations to be shorter than the last five fixations, whereas the first five saccades tended to be shorter than the last five saccades (experiment 1).

These studies all report systematic differences in fixation durations and saccade lengths across individuals and tasks. In addition, Foulsham, Kingstone, and Underwood (2008) recently found that saccade directions also vary systematically across tasks. In scene perception tasks, the dominant saccade direction followed the orientation of the scene, with predominantly horizontal saccades for natural scenes (they used the EyeLink II tracker with the manufacturer settings mentioned above).

Taken together, these studies reveal systematic differences in fixation durations and saccade lengths between individuals, stimuli, and tasks, and from early to later exposure. However, different studies use different algorithms and settings to identify fixations and saccades from eye-movement records, and different procedures to filter out bad samples, blinks, and other anomalies. It is reassuring that, despite these variations in algorithms and settings, overall findings have been consistent across eye-movement studies. Yet prior research has also found differences between individuals, stimuli, tasks, and eye-movement directions, which raises the question of whether the thresholds used to separate fixations from saccades should vary accordingly. Possibly with the exception of an early study by Harris, Hainline, Abramov, Lemerise, and Camenzuli (1988), who used extremely laborious manual coding of the complete sequence of fixations and saccades, most studies, including those summarized above, assume constant thresholds to define saccades and fixations. In addition, most studies define fixations based on the eye-movement recordings of a single eye, or on the average of both eyes. However, recent studies using new generations of affordable binocular eye-tracking systems report significant disparities between the movements of the two eyes (Kloke & Jaschinski, 2006; Liversedge et al., 2006; Nuthmann & Kliegl, 2009; Vernet & Kapoula, 2009). Using the recordings of both eyes can provide much-needed insight into the coordination of binocular viewing, as well as information for determining fixation thresholds, which may even differ between the right and left eye of a specific individual.

In this study, we propose an algorithm for identifying fixations from eye-movement records, described in the next section, that alleviates some of the limitations of previous approaches and is conceptually simple and computationally attractive. It is based on principles of robust statistics (see Engbert and Kliegl (2003) and Engbert and Mergenthaler (2006) for an application of robust methods to the identification of micro-saccades) and on control chart techniques. Our algorithm identifies fixations from the POR after automatically eliminating outliers due to blinks and recording anomalies. Based on the natural variability of the POR within fixations, it identifies fixation thresholds that are specific to each eye, to the direction of eye movements, to tasks, and to individuals. Because the algorithm appropriately accommodates differences in fixation thresholds between individuals, tasks, and eyes, it can contribute to knowledge accumulation and theory development in the emerging fields of binocular eye-movement coordination and individual differences in eye movements.

Using the proposed algorithm, we compute eye-movement statistics for both eyes in samples of about 70 participants across a variety of common tasks and stimuli (two reading tasks, static scene viewing, and search). These descriptive statistics reveal large individual differences in fixation durations and saccade sizes.

BIT: Binocular-Individual Threshold algorithm

Our fixation identification algorithm is velocity-based and improves over extant algorithms in three ways. First, it accommodates binocular viewing and uses information about the covariation between the movements of both eyes to identify fixations and saccades. Second, it estimates, rather than presets, the velocity threshold for detecting fixations and saccades, and it allows the threshold to differ between eye-movement directions, tasks, and individuals. Third, it accommodates the inherent stochasticity of eye movements, such that not every record that exceeds the threshold is labeled as a saccade.

To determine individual-, task-, and eye-specific thresholds, we use techniques from robust statistics (Maronna, Martin, & Yohai, 2006) that enable us to estimate velocity thresholds from the individual-level variability of the eye-movement recordings within a fixation. These velocity thresholds are input to Shewhart quality control chart procedures (Shewhart, 1931), which classify each POR as a saccade or a fixation. The intuition is that the algorithm first determines the variability of the POR of both eyes within a fixation for a specific individual, then determines when the velocity of the POR exceeds this within-fixation variability, and labels the corresponding epochs as saccades. The next two sections describe the components of the BIT algorithm.

Using robust statistics to determine within-fixation variability of the POR

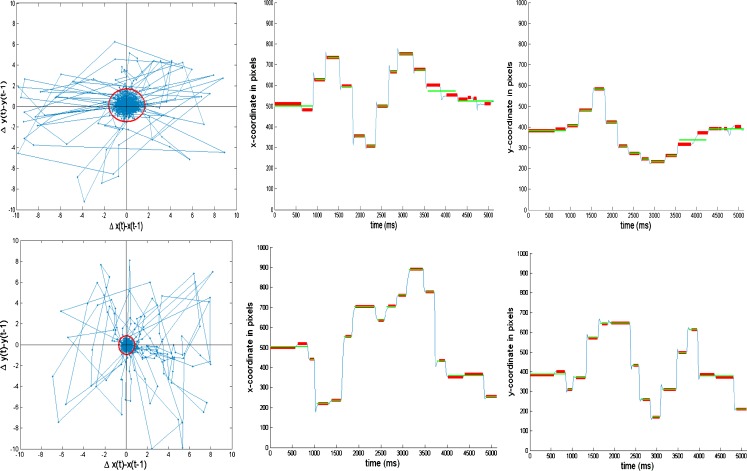

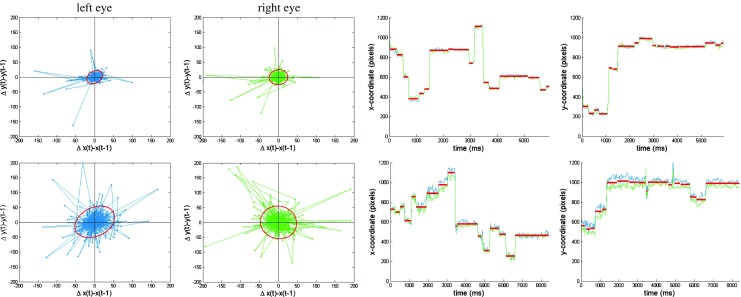

Figure 1 shows trace plots of the POR of two individuals (separately for the left and right eye) searching for a target in a static image, measured with a Tobii® 2150 eye tracker sampling at 50 Hz, which images the pupils of the observer's eyes together with the corneal reflections to estimate eye position. Although the data in Fig. 1 were collected on a specific eye tracker (study 1), we emphasize that the proposed algorithm is independent of the machine and sampling frequency, and it can easily be applied to POR records from other eye-tracking equipment with different precision and sampling frequency. To demonstrate this and to provide further substantive insights, we also applied the proposed fixation identification algorithm to data from a different eye tracker with higher precision and a higher sampling frequency (study 2).

Fig. 1.

Illustration of the BIT algorithm on two observed PORs during a search task. The four top plots correspond to participant 71 and the four bottom plots to participant 11. The two left scatter plots show the velocities of the POR, expressed as differenced x- and y-coordinates, for the left and right eye, respectively. The red ellipses in these plots represent the estimated threshold for distinguishing fixations from saccades (i.e., velocities outside the ellipse are labeled as candidate saccades). The two right plots show the POR of both eyes (blue for the left eye, green for the right eye) in x- and y-coordinates, respectively, with estimated fixation positions superimposed (in red).

The observed process $z_t = (x_t^{\mathrm{left}}, y_t^{\mathrm{left}}, x_t^{\mathrm{right}}, y_t^{\mathrm{right}})^\top$ consists of the x-y locations of the POR of the left and right eyes during exposure to the stimulus, for time samples t = 1,…,T. Figure 1 shows spells of time in which the POR is relatively stable, presumably fixations, as well as larger jumps, presumably saccades. The natural within-fixation variability of the POR, caused by tremor, drift, and micro-saccades (Rayner, 1998), is typically small relative to the variability of the displacement of the POR between fixations, which is caused by saccades. As illustrated in Fig. 1, the within-fixation variability of the POR is not constant across individuals; the two individuals selected represent extremes of the spectrum. Both individuals exhibit a positive covariance between the x- and y-coordinates of the left eye, but not of the right eye. The variability of the POR of the individual shown in the top panel is considerably smaller than that of the individual shown in the bottom panel. The average fixation durations are 321 ms (SD = 241 ms) and 456 ms (SD = 406 ms) for the top and bottom panel, respectively. In addition, the correspondence between the PORs of the left and right eye is substantially greater for the individual in the top panel than for the individual in the bottom panel. The variability of the POR of the latter individual is so large that some researchers might consider removing this individual from the data. Yet, in the absence of external evidence that these data are invalid, we wish to retain them. Therefore, the algorithm assumes the within-fixation variability of the displacement of the POR to have a (multivariate normal) distribution with individual-, eye-, and task-specific means and covariance matrices.

To estimate individual-specific means, variances, and covariances of the within-fixation velocity of the POR, the PORs are first differenced: $\Delta(z_t) = z_t - z_{t-1}$, for t = 2,…,T. These $\Delta(z_t)$ reflect not only velocities of the POR caused by within-fixation variability, but also displacements of the POR during saccades. To obtain reliable estimates of the mean and covariance of the within-fixation variability of the POR velocity, we apply robust statistical methods (Maronna et al., 2006). These methods treat abrupt increases in $\Delta(z_t)$ as "outliers" relative to the within-fixation distribution of $\Delta(z_t)$. A well-established method for obtaining robust estimates of the mean and covariance when a possibly large number of outliers is present is the Minimum Covariance Determinant (MCD) method (Rousseeuw, 1984). MCD finds the subset H containing a fraction 1 − α of the observations whose covariance matrix has the lowest determinant, det(Σ), where

$$\Sigma = \frac{1}{|H| - 1}\sum_{t \in H}\big(\Delta(z_t) - \mu\big)\big(\Delta(z_t) - \mu\big)^\top, \qquad \mu = \frac{1}{|H|}\sum_{t \in H}\Delta(z_t).$$

We set α = 0.25, which defines the maximum fraction of outliers in the data, based on our review of published studies reporting the percentage of saccades in POR records. The final robust estimates of location and covariance are the sample average and covariance matrix of the remaining 1 − α of the points. Although MCD is computationally intensive, involving an iterative procedure, Rousseeuw and Van Driessen (1999) developed an efficient algorithm that converges rapidly, even for large T. We used their approach to obtain robust estimates of the mean and covariance of the eye-, individual-, and task-specific within-fixation variability.
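The MCD step can be illustrated with a stripped-down version of the concentration steps (C-steps) at the core of the Rousseeuw and Van Driessen (1999) FAST-MCD algorithm. This is a sketch under our own assumptions, not the authors' implementation: the function name, the number of random starts, and the stopping rule are hypothetical choices, and it omits the consistency correction and the subsampling refinements of the full algorithm. Each C-step refits the mean and covariance to the h = ⌈(1 − α)n⌉ rows with the smallest Mahalanobis distances, which can never increase det(Σ). In practice, a tested library implementation such as scikit-learn's `MinCovDet` (whose `support_fraction` corresponds to 1 − α) would be preferable.

```python
import numpy as np


def mcd_estimate(d, alpha=0.25, n_starts=50, max_csteps=50, seed=0):
    """Raw MCD location and scatter of the rows of d (simplified FAST-MCD sketch).

    d     : (n, p) array, e.g. the n = T - 1 differenced PORs with p = 4.
    alpha : maximum fraction of outliers; the fit uses h = ceil((1 - alpha) * n) rows.
    Returns the mean and covariance of the lowest-determinant h-subset found.
    """
    d = np.asarray(d, dtype=float)
    n, p = d.shape
    h = int(np.ceil((1.0 - alpha) * n))
    rng = np.random.default_rng(seed)
    best_det, best_mu, best_cov = np.inf, None, None
    for _ in range(n_starts):
        # Random elemental start: p + 1 rows give a (generically) nonsingular covariance.
        idx = rng.choice(n, size=p + 1, replace=False)
        mu, cov = d[idx].mean(axis=0), np.cov(d[idx], rowvar=False)
        if np.linalg.det(cov) <= 0:
            continue  # degenerate start subset
        det = np.inf
        for _ in range(max_csteps):
            # C-step: keep the h rows closest to the current fit (Mahalanobis distance).
            diff = d - mu
            md2 = np.einsum("ij,jk,ik->i", diff, np.linalg.inv(cov), diff)
            idx = np.argsort(md2)[:h]
            mu, cov = d[idx].mean(axis=0), np.cov(d[idx], rowvar=False)
            new_det = np.linalg.det(cov)
            # C-steps never increase the determinant; stop once it stops decreasing.
            converged = new_det >= det
            det = new_det
            if converged or det <= 0:
                break
        if 0 < det < best_det:
            best_det, best_mu, best_cov = det, mu, cov
    return best_mu, best_cov
```

For BIT, `d` would hold the T − 1 differenced four-dimensional PORs of one individual in one task; the returned mean and covariance then play the roles of μ and Σ in the control chart.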

We need these reliable estimates of the (eye-, individual-, and task-specific) within-fixation POR velocities to identify saccades, that is, POR velocities that are "extreme" relative to the within-fixation variability. For this, we use a Shewhart control chart procedure, which is explained next.

Identification of saccades and blinks

The multivariate Shewhart control procedure is a popular statistical process-control technique for objectively detecting unusual points of variability in process data. Such charts have been used to identify anomalies in various disciplines, for example in health care to identify increases in infection rates (Benneyan, Lloyd, & Plsek, 2003), in neuroscience to identify small changes in blood-oxygenation-level-dependent (BOLD) contrast in fMRI studies (Friedman & Glover, 2006), and in manufacturing to quickly identify fallout during the production process (Montgomery, 1997). The multivariate Shewhart control chart assumes that the data are generated from a multivariate distribution with target mean μ and covariance Σ. The procedure flags extreme data points that are unlikely to have been generated by the target distribution, i.e., data points $\Delta(z_t)$ whose probability of being observed is smaller than a pre-specified value p. In the case of (x-y) POR data, for large T, the variable

$$w_t = \big(\Delta(z_t) - \mu\big)^\top \Sigma^{-1} \big(\Delta(z_t) - \mu\big)$$

has approximately a chi-square distribution with four ($x^{\mathrm{left}}$, $y^{\mathrm{left}}$, $x^{\mathrm{right}}$, $y^{\mathrm{right}}$) degrees of freedom (Montgomery, 1997)¹. Hence, the multivariate Shewhart control procedure flags those velocities $\Delta(z_t)$ for which $w_t > c$, where $c = \chi^2_{4,1-p}$ is the upper control limit, i.e., the $(1-p)$ quantile of the chi-square distribution with four degrees of freedom.²
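Writing c for the upper control limit and p for the pre-specified tail probability, the four-degrees-of-freedom case has a closed-form survival function, so the control limit can be computed without tables. The values of p below are purely illustrative; the value used by the authors is not stated in this section.

$$
P\left(\chi^2_4 > x\right) = e^{-x/2}\left(1 + \frac{x}{2}\right)
\quad\Longrightarrow\quad
c \ \text{solves}\ e^{-c/2}\left(1 + \frac{c}{2}\right) = p .
$$

$$
p = 0.01:\ c \approx 13.28, \qquad p = 0.001:\ c \approx 18.47 .
$$

As a check for p = 0.01: with c = 13.28, c/2 = 6.64 and $e^{-6.64} \times 7.64 \approx 0.0100$.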

To apply this procedure to detect saccades from the variability in the PORs of eye-movement data, we use, for each individual and task, the robustly estimated mean and covariance (see Sect. 2.1). For each velocity $\Delta(z_t)$ we compute $w_t$, defined above, which we use to determine whether the POR at each time t = 2,…,T is a candidate saccade. The leftmost plots in Fig. 1 illustrate this for the two individuals. The points on the "control ellipse" are the velocities $\Delta(z_t)$ for which $w_t = c$, the threshold given by the upper control limit. Hence, points that lie inside the control ellipse, i.e., velocities for which $w_t \le c$, indicate PORs with velocities consistent with the within-fixation variability for that individual and task. In contrast, velocities that lie outside the control ellipse, i.e., velocities for which $w_t > c$, are highly unlikely to be due to within-fixation variability and are marked as candidate saccades.
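The classification into candidate saccades can be sketched as follows, given a robust mean and covariance of the within-fixation velocities (for example from an MCD fit). The names `chi2_4_upper_limit` and `candidate_saccades` and the default tail probability p = 0.01 are hypothetical, since this section does not state the value the authors used. For four degrees of freedom the chi-square survival function is e^(−c/2)(1 + c/2), so the upper control limit c can be found by bisection without a statistics library.

```python
import math

import numpy as np


def chi2_4_upper_limit(p, tol=1e-10):
    """Return c with P(chi2_4 > c) = p, using the closed form exp(-c/2) * (1 + c/2)."""
    lo, hi = 0.0, 200.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if math.exp(-mid / 2) * (1 + mid / 2) > p:
            lo = mid  # tail probability still too large: the limit lies further out
        else:
            hi = mid
    return 0.5 * (lo + hi)


def candidate_saccades(z, mu, cov, p=0.01):
    """Flag differenced PORs that fall outside the control ellipse.

    z       : (T, 4) array of PORs (x_left, y_left, x_right, y_right).
    mu, cov : robust within-fixation mean and covariance of the velocities.
    Returns w (length T - 1), the boolean candidate flags, and the control limit c.
    """
    dz = np.diff(np.asarray(z, dtype=float), axis=0)  # Delta(z_t), t = 2..T
    diff = dz - mu
    w = np.einsum("ij,jk,ik->i", diff, np.linalg.inv(cov), diff)
    c = chi2_4_upper_limit(p)
    return w, w > c, c
```

For example, `chi2_4_upper_limit(0.01)` is approximately 13.28, the 99% quantile of the chi-square distribution with four degrees of freedom.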

However, these points will not always correspond to saccades, because unlikely variations in the velocity of the POR may also be due to blinks and other anomalies that prevent accurate detection of the POR. Therefore, to decide whether a candidate at time t, whose distance exceeds the threshold, is a saccade, we use a one-step-ahead forecast (Kumar, 2007). For this, we compute the velocity of the POR from t − 1 to t + 1 and measure it as a deviation from the within-fixation variability in the same way. Suppose this deviation also exceeds the threshold. Then the average velocity from t − 1 to t + 1 is larger than the within-fixation variability, so the POR does not return to the vicinity of its prior location and its acceleration continues. Thus, the POR at time t can be classified as a saccade. Otherwise, the POR at t is classified as a blink or outlier, because after time point t the POR returns to its location at t − 1. Subsequently, all remaining points are classified as possible fixation time points. Finally, a sequence of candidate fixation time points is classified as a fixation if at least three consecutive time points (i.e., 60 ms) are classified as fixation points. This sequence may be interrupted by at most three consecutive blinks or missing data points.
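The one-step-ahead check and the grouping of fixation points can be sketched as below. This is an illustrative reading of the steps above, not the published implementation. Several details are our own assumptions:

- The labels `'F'`, `'S'` and `'B'` are hypothetical.
- The velocity from t − 1 to t + 1 is scaled as half the two-step change, which gives an average per-sample velocity.
- The first and last samples receive the default handling noted in the comments.
- A fixation is taken to need at least three samples labelled `'F'` within a run, rather than strictly three uninterrupted ones.

```python
import numpy as np

def label_samples(por, sq_dist, threshold):
    """Label each POR sample 'F' (fixation candidate), 'S' (saccade) or 'B'
    (blink, outlier or missing data).

    por:       (T, 4) array of [x_left, y_left, x_right, y_right]; NaN marks missing data
    sq_dist:   function giving the squared control-ellipse distance of a velocity vector
    threshold: the same cutoff as used for the control ellipse
    """
    por = np.asarray(por, dtype=float)
    n = len(por)
    labels = ["F"] * n  # sample 0 has no velocity and is left as 'F'
    for t in range(1, n):
        v = por[t] - por[t - 1]
        if np.isnan(v).any():
            labels[t] = "B"  # missing data at t or t - 1
            continue
        if sq_dist(v) <= threshold:
            continue  # inside the control ellipse
        # Candidate saccade: one-step-ahead check over t - 1 .. t + 1.
        # Assumed scaling: average per-sample velocity, i.e. half the two-step change.
        moving_on = False
        if t + 1 < n:  # no look-ahead at the last sample, so it becomes 'B'
            v_ahead = (por[t + 1] - por[t - 1]) / 2.0
            moving_on = (not np.isnan(v_ahead).any()) and sq_dist(v_ahead) > threshold
        labels[t] = "S" if moving_on else "B"
    return labels


def group_fixations(labels, min_fix=3, max_gap=3):
    """Return (start, end) sample indices of fixations: runs containing at least
    min_fix 'F' samples, interrupted by at most max_gap consecutive 'B' samples.
    Any 'S' sample, or a longer run of 'B' samples, ends the current run."""
    fixations = []
    start = end = None
    n_fix = gap = 0
    for i, lab in enumerate(list(labels) + ["S"]):  # sentinel closes the last run
        if lab == "F":
            if start is None:
                start = i
            end, n_fix, gap = i, n_fix + 1, 0
        elif lab == "B" and start is not None and gap < max_gap:
            gap += 1
        else:
            if start is not None and n_fix >= min_fix:
                fixations.append((start, end))
            start, end, n_fix, gap = None, None, 0, 0
    return fixations
```

In practice, `sq_dist` would be the same quadratic form as in the control-ellipse test, for example `lambda v: float((v - mu) @ np.linalg.solve(sigma, v - mu))`. Read literally, the rule can also flag the sample that immediately follows an isolated spike. How the original MATLAB code handles such cases is not described in this section.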

The crucial difference between the proposed procedure and commonly used velocity-based procedures is that we determine a control ellipse from the observed variability of the POR within fixations. This allows for thresholds that may differ between the x- and y-directions and that may vary not only across tasks but also across eyes and individuals. Importantly, these thresholds are set automatically by the procedure. We are not the first to propose a velocity-based algorithm that determines thresholds based on the individual- and task-specific variability of the POR (Engbert & Kliegl, 2003; Engbert & Mergenthaler, 2006; Nyström & Holmqvist, 2010). However, our algorithm optimally uses the statistical information available in the variability of both eyes.

The algorithm by Nyström and Holmqvist applies only to the POR of one eye (or the average of both eyes). It also does not take direction-specific velocities into account, which leads to control circles. Like ours, the algorithm by Engbert and his colleagues uses information from both eyes and determines direction-specific thresholds, leading to control ellipses. Yet their approach does not take into account the relationship between the two eyes, or between horizontal and vertical movements, and implicitly assumes that these measures are independent. Our MCD procedure accounts for the covariance of within-fixation variability. As a result, in contrast to the algorithm by Engbert and his colleagues, the control ellipse can have an arbitrary orientation for any task, eye, or individual. Furthermore, because it builds on well-established statistical procedures, our procedure fully exploits this information to determine candidate saccades.

Allowing for covariance between the left and right eye, and between horizontal and vertical movements, is essential given the findings of Liversedge et al. (2006), Nuthmann and Kliegl (2009) and Foulsham et al. (2008). The results of Liversedge et al. (2006) and Nuthmann and Kliegl (2009) suggest that the movements of the left and right eye are possibly correlated during reading fixations. On average, they find the disparity between the left and right eye to be larger at the start of a fixation than at its end. As reported by Liversedge et al. (2006), there could be a positive correlation (both eyes move in the same direction but with different velocities), a negative correlation (both eyes move in opposite directions), or no correlation (one eye moving alone). The results of Foulsham et al. (2008) show that the directions of saccades are non-random and that the dominant saccade direction follows the orientation of the scene. This suggests that velocity thresholds for determining candidate saccades might also be direction-specific, which our algorithm allows for.

As illustrated in Fig. 1, within-fixation variability varies strongly across individuals leading to very different control ellipses. In addition, the shapes of these control ellipses also vary across individuals because the covariance of the velocities of the POR in the x- and y-direction is different for these different individuals. For example, for the POR record of the participant shown in the top plots in Fig. 1, the within-fixation variability has a positive covariance in the x- and y-directions, indicating that if the eye moves towards the right it also tends to move upwards, while if it moves towards the left it tends to move down. Note that the POR for the participant shown in the plots in the bottom panel does not exhibit such a pattern. While the cutoffs used in most velocity-based approaches imply “control circles” (see Engbert and Kliegl (2003) and Engbert and Mergenthaler (2006) for exceptions), the proposed algorithm allows for direction-specific elliptical control regions, which results in direction-specific velocity thresholds. For instance, for the individual presented at the top in Fig. 1, velocity thresholds are larger for changes in the velocity of the POR in the upper-right quadrant compared to changes in the lower-right quadrant.
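The effect of covariance on direction-specific thresholds can be made concrete with a small two-dimensional example. The covariance matrix below is made up, with a positive x–y covariance like the top participant in Fig. 1. The threshold of 9 is arbitrary. The "diagonal" case keeps the same variances but drops the covariance, mimicking an independence assumption. The numbers are not taken from the study.

```python
import numpy as np

def boundary_radius(direction, sigma, threshold):
    """Distance from the ellipse centre to the boundary {v: v' Sigma^{-1} v = threshold}
    along the given direction (a velocity threshold for movements in that direction)."""
    u = np.asarray(direction, dtype=float)
    u = u / np.linalg.norm(u)
    return float(np.sqrt(threshold / (u @ np.linalg.inv(sigma) @ u)))


# Hypothetical x- and y-velocity covariance of one eye, with positive covariance
sigma_full = np.array([[1.0, 0.6], [0.6, 1.0]])
sigma_diag = np.diag(np.diag(sigma_full))  # same variances, covariance ignored

for name, s in [("full", sigma_full), ("diagonal", sigma_diag)]:
    up_right = boundary_radius([1, 1], s, 9.0)
    down_right = boundary_radius([1, -1], s, 9.0)
    print(name, round(up_right, 2), round(down_right, 2))
# full: about 3.79 up-right vs 1.90 down-right; diagonal: 3.00 in both directions
```

With positive covariance, the threshold along the upper-right diagonal is twice as large as along the lower-right diagonal. An axis-aligned ellipse cannot express this asymmetry.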

Thus, the proposed algorithm is a velocity-based algorithm that automatically determines direction-, task-, eye- and individual-specific thresholds to identify fixations and saccades from the POR. It also removes outliers, missing data, blinks and other anomalies in the eye-movement recording. The algorithm was implemented in MATLAB, and the code is freely available from the personal webpage of the first author (http://www.bm.ust.hk/~mark/staff/rlans.html). Because it is programmed in MATLAB, the algorithm lends itself to incorporation into the open-source Eyelink Toolbox (Cornelissen, Peters, & Palmer, 2002).

We investigated this algorithm in two empirical applications to POR records collected on two eye trackers with different sampling rates and accuracies.

Study 1

Experimental setup

Participants

Seventy-one participants were recruited from the undergraduate student population at a major university in the Netherlands. They received course credit for their participation. All participants had normal uncorrected vision or vision corrected with contact lenses or glasses. They ranged in age between 18 and 24 years and were native Dutch speakers.

Apparatus

Eye movements were monitored using a Tobii® 2150 tracker, sampling infrared corneal reflections at 50 Hz, with a spatial resolution of 0.35° and an accuracy of 0.5°. Stimuli were presented on the 21-inch LCD monitor of the eye-tracker, controlled by a Dell PC with a display resolution of 1600 × 1200 pixels. Participants responded by pressing the space bar on the keyboard. Participants were tested in a sound-attenuated, dimly lit room. They could move their head freely within a virtual box of about 13 × 13 inches, while cameras tracked their head and eye-focus position. The eye-tracking system compensates for head movements, with a resulting accuracy of 0.75–1.75 degrees. Viewing was binocular, and the positions of the participant’s left and right eye were recorded separately.

Tasks and procedures

All participants performed the following four tasks: (1) Dutch reading (one out of two texts), (2) English reading (one out of two texts), (3) scene viewing (two advertisements), and (4) visual search (two brands on retail shelves). In all tasks, the stimuli were common, natural stimuli that participants would encounter in day-to-day life. Exposure to all stimuli was self-controlled, as in real-life exposures. The exposure tasks resemble tasks previously used in reading (Rayner, 1998), scene perception (Andrews & Coppola, 1999; Castelhano & Henderson, 2008) such as ad viewing (Rayner, Rotello, Stewart, Keir, & Duffy, 2001; Wedel & Pieters, 2000), and search on store shelves (van der Lans, Pieters, & Wedel, 2008). All instructions and experimental stimuli were shown on the Tobii eye-tracker. Participants continued to the next stimulus by pressing the spacebar on the computer’s keyboard.

Prior to these tasks, the eye-tracker was calibrated for each participant using the manufacturer’s five-point calibration procedure. Participants were seated comfortably at a distance of about 60 cm from the screen and were free to move their heads during calibration, as recommended by Tobii’s user manual. Before calibration, participants were carefully instructed to fixate each of the five points, which were presented sequentially on the screen for about two seconds each. If calibration was successful, the experiment started; otherwise, the eyes were recalibrated until calibration was successful.
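The velocities in the tables below are expressed in °/s, which requires converting the POR to visual angle. The sketch below gives an approximate conversion under stated assumptions:

- The POR is reported in screen pixels.
- Pixels are square, and the screen is 21 inches diagonally at 1600 × 1200 pixels.
- Viewing distance is a fixed 60 cm. In practice participants could move their heads, so the true distance varied.
- The pixel of interest lies at the screen centre.

Velocities are first differences between successive 50-Hz samples, scaled to seconds. The function names are hypothetical.

```python
import math

def degrees_per_pixel(diag_inch=21.0, res=(1600, 1200), distance_cm=60.0):
    """Visual angle (deg) subtended by one pixel at the screen centre."""
    diag_px = math.hypot(*res)
    pixel_cm = diag_inch * 2.54 / diag_px  # assumes square pixels
    return math.degrees(2 * math.atan(pixel_cm / (2 * distance_cm)))


def velocity_deg_per_s(p_now, p_prev, deg_per_px, rate_hz=50.0):
    """First-difference velocity (deg/s) between two successive POR samples in pixels."""
    return tuple((a - b) * deg_per_px * rate_hz for a, b in zip(p_now, p_prev))


dpp = degrees_per_pixel()  # roughly 0.0255 deg per pixel, i.e. about 39 pixels per degree
vx, vy = velocity_deg_per_s((110, 100), (100, 100), dpp)  # a 10-pixel step in one sample
```

Under these assumptions, a 10-pixel step between successive samples corresponds to roughly 12.7°/s. This is well inside the roughly 60°/s horizontal thresholds reported below.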

The two Dutch reading tasks each contained 18 sentences, arranged in five and three paragraphs, respectively (in Arial font). The average sentence length was 13.9 and 9.9 words, respectively. Sentences ranged from two to twenty words in the first text and from two to sixteen words in the second. The English reading tasks were translations of the two Dutch tasks and contained the same number of paragraphs, with 18 and 17 sentences, respectively. Average sentence length was 14.9 and 11.5 words, respectively, ranging from five to 19 words in the first text and from two to 18 words in the second. An example of a sentence used was: “In the future, it will also be possible to receive your boarding pass with a barcode on your mobile phone.” Participants silently read the entire text at their own pace and pressed a key to proceed to the next task. Average reading time was 52.6 s (SD = 23.0) for the Dutch text and 56.2 s (SD = 19.6) for the English text. In the reading tasks, given the average font size and viewing distance, about six characters subtended one degree of visual angle. Following previous research on reading (Rayner & Pollatsek, 1989, p. 117), return sweeps (i.e., when the eye moves back to the beginning of the next line) were removed from the saccade data after the algorithm was applied.

The scenes used were two color photographs of print advertisements from pro-environment campaigns. The ads were scanned and cropped so that they were shown in full screen. Participants were asked to explore the ads at their leisure, as they would normally do at home or in a waiting room, and to press the spacebar to proceed to the next task. On average, participants viewed the ads for 7.9 s (SD = 4.4).

In the visual search task, participants were asked to find a product (a brand of shampoo and a brand of margarine) on an image of a retail shelf that contained packages of various brands. The shampoo shelf contained 43 different brands, each represented by one package. The margarine shelf contained nineteen different brands, with an average of 6.3 packages per brand. The target items were located center right and bottom left, respectively. Participants were asked to press the spacebar to proceed to the next task once they had located the target brand. On average, participants took 6.7 s (SD = 4.3) to find the shampoo and 8.6 s (SD = 2.7) to find the margarine brand.

Results

Within-fixation variability

Tables 1 and 2 provide the robust estimates of the means and standard deviations of the x- and y-velocities of the POR of the left and right eye, and their correlations, for each of the 12 tasks. That is, they provide the estimates of the elements of the mean vector μ (Table 1) and covariance matrix ∑ (Table 2), estimated with the MCD procedure. These correspond to the variability of the POR during fixations (i.e., excluding outliers classified as candidate saccades). Table 3 shows the velocity thresholds determined by our algorithm for the different tasks and individuals. These quantities are of interest because they reflect the within-fixation variability of the POR per eye and are directly related to the Shewhart control limits used to identify fixations. We examined how these control limits in the x- and y-directions, as well as their correlations, vary across eyes, tasks and individuals. For this purpose, we fitted a linear mixed effects model to the measures presented in Table 2, with eye, task and individual as predictors, using the WinBUGS software (Lunn, Thomas, Best, & Spiegelhalter, 2000).³ We used the procedure of Gelman and Pardoe (2006) to compute the part of the variation that is attributable to eyes, individuals, and tasks. These results are summarized in Table 4.

Table 1.

Robust estimates of the mean changes (μ) of the POR during fixations in x- and y-directions for different tasks (in °/s)

| Task | Left eye, mean x | Left eye, mean y | Right eye, mean x | Right eye, mean y |
|---|---|---|---|---|
| Reading English 1 | .49 (.57) | .17 (.37) | .31 (.54) | .12 (.41) |
| Reading English 2 | .28 (.42) | .12 (.57) | -.03 (.47) | .08 (.42) |
| Reading Dutch 1 | .50 (.52) | .25 (.30) | .12 (.42) | .27 (.30) |
| Reading Dutch 2 | .65 (.40) | .04 (.45) | .35 (.34) | .23 (.49) |
| Reading Overall | .50 (.49) | .14 (.42) | .22 (.47) | .18 (.42) |
| Search Shampoo | .02 (.98) | .27 (.79) | -.02 (.57) | .26 (.87) |
| Search Margarine | .26 (.61) | .32 (.69) | -.34 (.57) | .02 (.58) |
| Search Overall | .12 (.85) | .29 (.75) | -.15 (.58) | .16 (.77) |
| Ad Exploration 1 | .08 (.87) | .50 (.91) | -.38 (.77) | .27 (1.01) |
| Ad Exploration 2 | .23 (.74) | .20 (.76) | -.04 (.76) | .35 (.69) |
| Ad Exploration Overall | .15 (.81) | .36 (.85) | -.21 (.78) | .31 (.87) |
| Overall | .26 (.74) | .26 (.71) | -.05 (.66) | .22 (.72) |

Note: Standard deviations across individuals in parentheses. Positive (negative) values correspond to movements to the right (left) for the x-coordinates, and up (down) for the y-coordinates

Table 2.

Robust estimates of standard deviations (in °/s) and correlations (∑) of changes in the POR during fixations in the x- and y-directions for different tasks; l and r denote the left and right eye

| Task | sd(xl) | sd(yl) | r(xl, yl) | sd(xr) | sd(yr) | r(xr, yr) | r(xl, xr) | r(yl, yr) | r(xl, yr) | r(yl, xr) |
|---|---|---|---|---|---|---|---|---|---|---|
| Reading English 1 | 19.0 (5.46) | 25.6 (8.41) | .08 (.12) | 19.2 (5.18) | 25.5 (7.56) | –.14 (.11) | .13 (.05) | .01 (.04) | –.01 (.04) | –.01 (.03) |
| Reading English 2 | 17.8 (3.88) | 23.4 (6.25) | .12 (.12) | 18.6 (5.06) | 23.3 (6.63) | –.14 (.12) | .13 (.07) | .01 (.04) | .00 (.04) | .01 (.05) |
| Reading Dutch 1 | 18.6 (4.16) | 23.5 (4.69) | .07 (.11) | 19.4 (4.90) | 23.6 (6.67) | –.16 (.12) | .13 (.04) | .02 (.05) | .00 (.03) | .00 (.04) |
| Reading Dutch 2 | 19.6 (5.83) | 25.5 (8.55) | .10 (.11) | 19.7 (5.51) | 26.3 (8.12) | –.10 (.09) | .13 (.10) | .02 (.05) | .01 (.03) | –.01 (.04) |
| Reading Overall | 18.9 (5.02) | 24.8 (7.41) | .09 (.12) | 19.3 (5.12) | 24.9 (7.37) | –.13 (.10) | .13 (.07) | .02 (.04) | .00 (.03) | .00 (.04) |
| Search Shampoo | 15.8 (4.12) | 19.9 (5.56) | –.05 (.13) | 15.5 (3.27) | 18.6 (6.64) | –.17 (.13) | .10 (.17) | .07 (.21) | –.01 (.09) | .00 (.09) |
| Search Margarine | 17.2 (5.15) | 17.8 (5.16) | .07 (.12) | 16.9 (4.70) | 18.5 (5.33) | –.06 (.11) | .13 (.21) | .05 (.23) | –.00 (.07) | .00 (.09) |
| Search Overall | 16.4 (4.59) | 19.0 (5.46) | .00 (.14) | 16.1 (3.95) | 18.5 (6.09) | –.12 (.13) | .11 (.19) | .06 (.21) | –.01 (.08) | .00 (.09) |
| Ad Exploration 1 | 19.8 (6.28) | 21.6 (8.04) | .12 (.13) | 19.9 (6.88) | 21.6 (9.72) | –.08 (.15) | .12 (.13) | .02 (.08) | .00 (.10) | –.02 (.10) |
| Ad Exploration 2 | 19.0 (4.93) | 23.0 (6.91) | .01 (.13) | 18.5 (4.70) | 22.4 (8.72) | –.12 (.12) | .14 (.09) | .04 (.08) | –.02 (.07) | .00 (.07) |
| Ad Exploration Overall | 19.4 (5.65) | 22.3 (7.50) | .07 (.14) | 19.2 (5.93) | 22.0 (9.21) | –.10 (.14) | .13 (.11) | .03 (.08) | –.01 (.09) | –.01 (.09) |
| Overall | 18.4 (5.30) | 22.3 (7.30) | .06 (.14) | 18.4 (5.35) | 22.1 (8.20) | –.12 (.12) | .13 (.13) | .03 (.12) | –.00 (.07) | –.00 (.07) |

Note: Standard deviations across individuals in parentheses

Table 3.

Velocity thresholds for the left and right eye in horizontal (x) and vertical (y) directions (in °/s)

| Task | Left eye, horizontal | Left eye, vertical | Right eye, horizontal | Right eye, vertical |
|---|---|---|---|---|
| Reading English 1 | [–61.3 ; 62.3] | [–83.1 ; 83.5] | [–62.2 ; 62.8] | [–82.9 ; 83.1] |
| Reading English 2 | [–57.6 ; 58.2] | [–76.0 ; 76.3] | [–60.5 ; 60.5] | [–75.7 ; 75.9] |
| Reading Dutch 1 | [–60.0 ; 61.0] | [–76.2 ; 76.7] | [–63.0 ; 63.2] | [–76.5 ; 77.1] |
| Reading Dutch 2 | [–63.1 ; 64.4] | [–82.9 ; 83.0] | [–63.7 ; 64.4] | [–85.3 ; 85.8] |
| Reading Overall | [–61.0 ; 62.0] | [–80.6 ; 80.8] | [–62.6 ; 63.0] | [–80.8 ; 81.2] |
| Search Shampoo | [–51.4 ; 51.4] | [–64.5 ; 65.0] | [–50.5 ; 50.4] | [–60.3 ; 60.8] |
| Search Margarine | [–55.7 ; 56.2] | [–57.6 ; 58.2] | [–55.3 ; 54.6] | [–60.2 ; 60.2] |
| Search Overall | [–53.2 ; 53.5] | [–61.5 ; 62.1] | [–52.5 ; 52.2] | [–60.0 ; 60.4] |
| Ad Exploration 1 | [–64.3 ; 64.5] | [–69.8 ; 70.8] | [–65.1 ; 64.4] | [–70.0 ; 70.6] |
| Ad Exploration 2 | [–61.6 ; 62.1] | [–74.6 ; 75.0] | [–60.2 ; 60.2] | [–72.5 ; 73.2] |
| Ad Exploration Overall | [–63.0 ; 63.3] | [–72.2 ; 72.9] | [–62.7 ; 62.3] | [–71.3 ; 71.9] |

Note: Lower (left) and upper (right) thresholds given in square brackets

Table 4.

Explained variation of POR during fixation across individuals, eyes (left vs. right) and tasks

| Measure | Individuals | Tasks | Eye (left vs. right) |
|---|---|---|---|
| Mean x | .21 | .04 | .03 |
| Mean y | .16 | –.06 | –.02 |
| sd(x) | .83 | .05 | –.00 |
| sd(y) | .77 | .08 | –.00 |
| r(xl, yl) | .26 | .06 | – |
| r(xr, yr) | .29 | .01 | – |
| r(xl, xr) | .57 | –.00 | – |
| r(yl, yr) | .61 | .01 | – |
| r(xl, yr) | .39 | –.01 | – |
| r(yl, xr) | .25 | –.01 | – |

Note: Reported values are median posterior estimates of the explained variance in the linear mixed effects model fitted in WinBUGS, computed with the procedure of Gelman and Pardoe (2006). As with the adjusted R², this procedure can produce negative values when a predictor explains so little that the estimated residual variance exceeds the variance of the data (see Gelman and Pardoe (2006), p. 244)
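The explained-variance measure in Table 4 comes from Gelman and Pardoe (2006). As we read their definition, consider a batch of quantities θ with residuals ε. The measure is R² = 1 − E[V(ε)] / E[V(θ)], where V is the finite-sample variance across the batch and E averages over posterior draws. The sketch below computes this from posterior draws; the function name and array layout are our own assumptions, not a WinBUGS output format. It also shows how a negative value can arise.

```python
import numpy as np

def explained_variance(theta_draws, eps_draws):
    """Bayesian R^2 in the spirit of Gelman and Pardoe (2006).

    theta_draws: (S, J) posterior draws of the J quantities being explained
    eps_draws:   (S, J) posterior draws of the corresponding residuals
    R^2 = 1 - E[V(eps)] / E[V(theta)], with V the finite-sample variance over J
    and E the mean over the S posterior draws.
    """
    v_eps = np.var(np.asarray(eps_draws, dtype=float), axis=1, ddof=1).mean()
    v_theta = np.var(np.asarray(theta_draws, dtype=float), axis=1, ddof=1).mean()
    return 1.0 - v_eps / v_theta


theta = [[1.0, 2.0, 3.0, 4.0]]
print(explained_variance(theta, [[0.5, -0.5, 0.5, -0.5]]))  # small residuals: positive
print(explained_variance(theta, [[3.0, -3.0, 3.0, -3.0]]))  # residuals too large: negative
```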

Table 1 shows that, across all tasks, the mean within-fixation velocities are small but significantly different from zero, except for the x-direction of the right eye. For reading tasks only, the right-eye velocities in the x-direction appear smaller than the left-eye velocities (0.22°/s versus 0.50°/s). As expected, Table 2 shows that the velocities of the left and right eye are significantly positively correlated, in both the horizontal (.13) and the vertical direction (.03). However, these correlations are rather small. This suggests that the left and right eye each contain distinct information for determining fixations.

The mean fixation variability μ is relatively stable across tasks and individuals (see Tables 1 and 4). Most of the variation in this measure is random. Only 21% (horizontal x-direction) and 16% (vertical y-direction) can be explained by individual differences, while the effect sizes of eye and task are negligible. Furthermore, parameter estimates of the linear mixed effects model show that these means are not significantly different from zero, except for the mean horizontal movement of the left eye during reading. These results suggest that, in this study, it is not necessary to allow for non-zero mean velocities in the x- and y-directions within fixations when identifying fixations and saccades. They also support the previous finding that this parameter is effectively zero (Engbert & Kliegl, 2003; Engbert & Mergenthaler, 2006).

Whereas the mean of the POR during fixations does not vary systematically across tasks, the within-fixation variation ∑ does, albeit not by much (the explained variance equals .05 for the x-direction and .08 for the y-direction; see Table 4). Within-fixation variability is significantly smaller for the search tasks than for reading and scene viewing, and it does not differ significantly between reading and scene viewing.

Individual differences

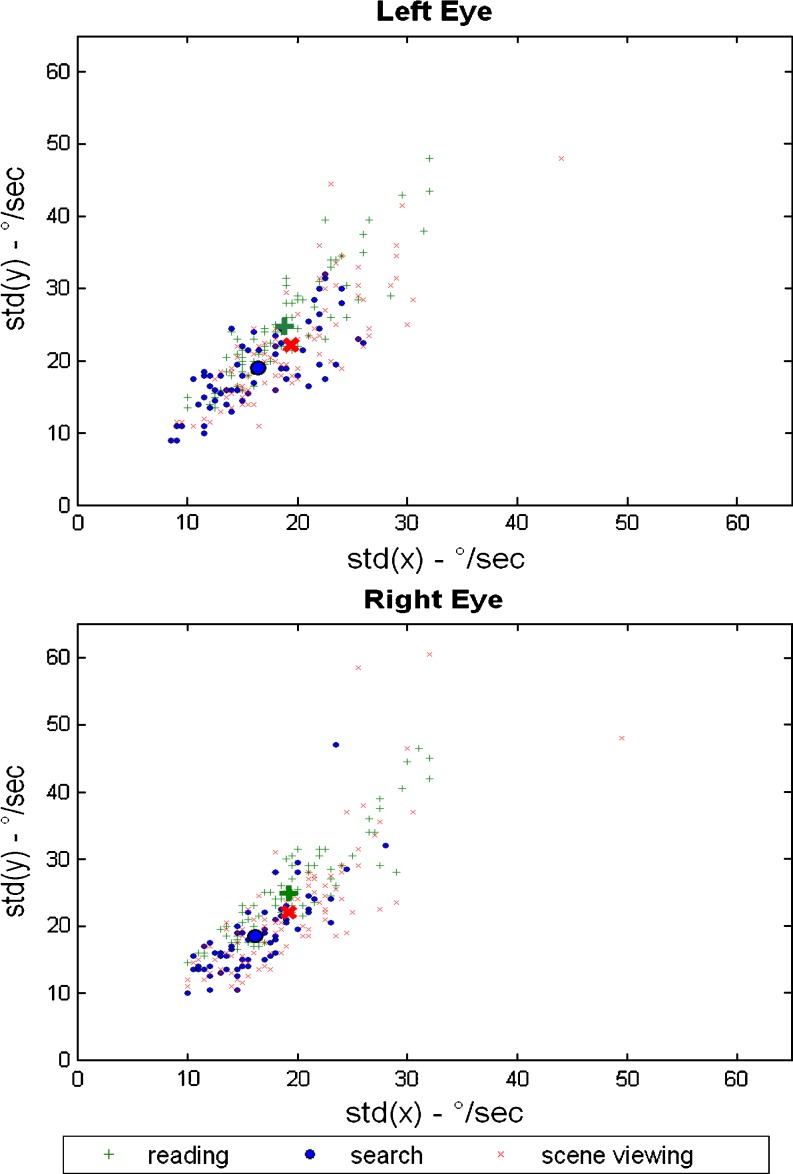

There are significant differences between tasks in fixation variability, although these account for only 5% of the variability in the x-direction and 8% in the y-direction. Furthermore, left vs. right eye does not account for any variation in within-fixation variability (see Table 4). In contrast, individual differences are much more important, accounting for 83% of the variation in the x-direction and 77% in the y-direction. Figure 2 illustrates the importance of individual differences for the left eye (top plot) and the right eye (bottom plot). In the figure, the average standard deviations in the x- and y-directions are plotted for the three tasks, as well as for each individual. The figure illustrates that differences in within-fixation variability are substantially larger between individuals than between tasks.

Fig. 2.

Individual-specific and average standard deviations of the within-fixation variability in eye velocity in the x- and y-directions across tasks. The scatter plots show the average standard deviation of the first-order differences of the POR in the x- and y-directions for each task (large symbols) and the individual-level standard deviations (small symbols), for the left eye (top panel) and the right eye (bottom panel)

Table 2 shows between-individual standard deviations of the within-fixation variability ranging from a minimum of 3.27°/s to a maximum of 9.72°/s. This implies that velocity thresholds for determining fixations should differ substantially across individuals. For instance, for reading, the average robust estimate of the within-fixation variation for the left and right eye, respectively, is 18.9°/s and 19.3°/s in the x-direction and 24.8°/s and 24.9°/s in the y-direction. This leads to velocity thresholds for the left eye of –61.0°/s to 62.0°/s in the x-direction and –80.6°/s to 80.8°/s in the y-direction. For the right eye, the thresholds are –62.6°/s to 63.0°/s in the x-direction and –80.8°/s to 81.2°/s in the y-direction (see Table 3).
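Some arithmetic relates the thresholds in Table 3 to the standard deviations in Table 2. This is our own consistency check, not a computation reported in the study. For the entries below, the half-width of each threshold interval is about 3.25 times the corresponding within-fixation standard deviation, and the interval midpoints lie close to the mean velocities in Table 1. The multiplier is inferred from rounded table values, so it should be read as approximate. We do not claim that this is how the threshold is defined inside the algorithm.

```python
# (lower, upper) thresholds from Table 3 and the matching sd from Table 2, in deg/s
rows = {
    "Reading, left x": ((-61.0, 62.0), 18.9),
    "Reading, left y": ((-80.6, 80.8), 24.8),
    "Reading, right x": ((-62.6, 63.0), 19.3),
    "Reading, right y": ((-80.8, 81.2), 24.9),
    "Shampoo, left x": ((-51.4, 51.4), 15.8),
    "Ad 1, left y": ((-69.8, 70.8), 21.6),
}

for name, ((lower, upper), sd) in rows.items():
    half_width = (upper - lower) / 2
    midpoint = (upper + lower) / 2
    print(f"{name:17s} midpoint {midpoint:5.2f}  half-width/sd {half_width / sd:.3f}")
```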

For participant 71 (shown in Fig. 1), the robust estimate of the within-fixation variability is 10.1°/s in the x-direction for both the left and right eye. In the y-direction, it is 13.4°/s for the left eye and 14.5°/s for the right eye. This leads to velocity thresholds that are almost 50% smaller than those for the average participant. In contrast, the within-fixation variability of participant 11 (also shown in Fig. 1) is 31.5°/s and 32.0°/s in the x-direction and 37.8°/s and 42.0°/s in the y-direction for the left and right eye, respectively. This is about three times that of participant 71. These results show that different thresholds are required to define the fixations of different individuals, which the proposed algorithm provides automatically.

Descriptive statistics of eye-movement characteristics

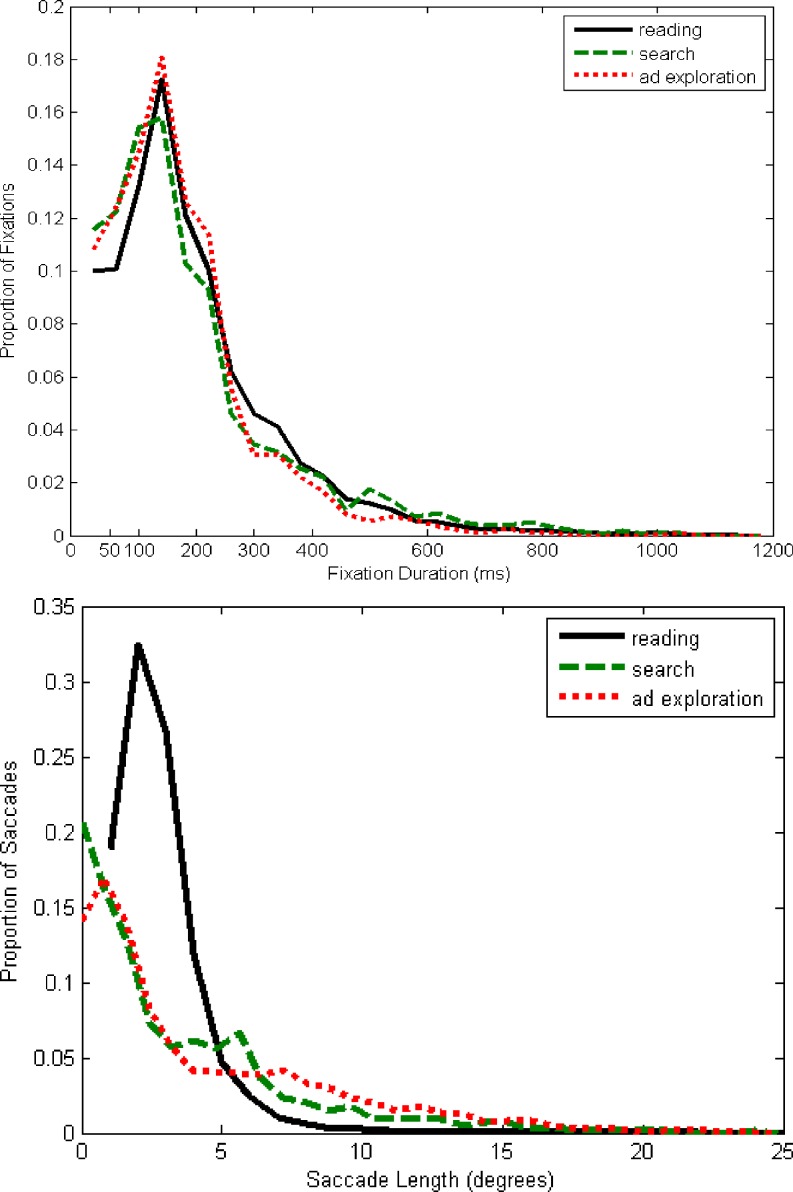

Table 5 presents the descriptive statistics of the fixations defined by our BIT algorithm for the sample of 71 participants, for each of the 12 tasks. We show the average number of fixations, the mean, median and standard deviation of fixation duration (in ms), and the mean, median and standard deviation of saccade size (in degrees, see Rayner et al., 2007). In addition, Fig. 3 presents the frequency distributions of fixation durations and saccade lengths as identified by the algorithm.
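The statistics in Table 5 can be computed from the detected fixations along the lines of the sketch below. This is not the authors' code, and it makes three assumptions:

- A fixation's duration is its number of samples times 20 ms (the 50-Hz rate of Study 1), consistent with three samples corresponding to 60 ms.
- A fixation's location is the mean POR over its samples.
- Saccade size is the Euclidean distance, in degrees, between successive fixation locations.

The exact definition used in the study (following Rayner et al., 2007) may differ. The function names are hypothetical.

```python
import math
import statistics

SAMPLE_MS = 1000 / 50  # 20 ms per sample at the 50-Hz rate of Study 1


def summarize(values):
    """Mean, median and sample SD (NaN when fewer than two values)."""
    sd = statistics.stdev(values) if len(values) > 1 else float("nan")
    return statistics.mean(values), statistics.median(values), sd


def fixation_and_saccade_stats(fixations, positions):
    """fixations: list of (start, end) sample indices, inclusive.
    positions: per-sample (x, y) POR in degrees (hypothetical input, e.g. binocular average).
    Returns the fixation durations (ms) and saccade sizes (deg)."""
    durations = [(end - start + 1) * SAMPLE_MS for start, end in fixations]
    # Assumed fixation location: mean POR over the fixation's samples
    centres = []
    for start, end in fixations:
        xs, ys = zip(*positions[start:end + 1])
        centres.append((statistics.fmean(xs), statistics.fmean(ys)))
    # Assumed saccade size: distance between successive fixation locations
    saccades = [math.dist(a, b) for a, b in zip(centres, centres[1:])]
    return durations, saccades
```

Reporting the median alongside the mean matters here because both fixation durations and saccade sizes are right-skewed, so the mean exceeds the median.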

Table 5.

Characteristics of fixation durations and saccade sizes for different tasks

| Task | No. of fixations (mean) | Fixation duration, mean (ms) | Fixation duration, median (ms) | Fixation duration, SD (ms) | Saccade length, mean (°) | Saccade length, median (°) | Saccade length, SD (°) |
|---|---|---|---|---|---|---|---|
| Reading English 1 | 203 (65) | 261 (41) | 229 (28) | 150 (50) | 1.77 (.35) | 1.60 (.31) | 1.20 (.34) |
| Reading English 2 | 175 (68) | 251 (36) | 223 (32) | 135 (43) | 1.95 (.49) | 1.70 (.37) | 1.45 (.54) |
| Reading Dutch 1 | 192 (86) | 250 (45) | 220 (23) | 141 (62) | 2.03 (.63) | 1.88 (.68) | 1.41 (.41) |
| Reading Dutch 2 | 169 (60) | 261 (54) | 229 (34) | 149 (67) | 2.06 (.42) | 1.83 (.45) | 1.52 (.26) |
| Reading Overall | 185 (69) | 257 (44) | 226 (29) | 145 (56) | 1.94 (.47) | 1.74 (.46) | 1.38 (.40) |
| Search Shampoo | 23 (14.0) | 245 (43) | 214 (42) | 149 (50) | 3.41 (.95) | 2.25 (1.01) | 3.34 (1.03) |
| Search Margarine | 26 (7.5) | 267 (57) | 207 (67) | 190 (67) | 4.50 (1.49) | 3.31 (1.60) | 4.21 (1.40) |
| Search Overall | 24 (11.8) | 254 (50) | 211 (43) | 166 (60) | 3.86 (1.31) | 2.69 (1.38) | 3.70 (1.26) |
| Ad Exploration 1 | 21 (10.6) | 230 (46) | 206 (41) | 124 (48) | 5.37 (1.17) | 4.11 (1.89) | 4.75 (1.03) |
| Ad Exploration 2 | 37 (15.9) | 236 (35) | 214 (24) | 127 (47) | 4.40 (.83) | 2.90 (.89) | 4.17 (.71) |
| Ad Exploration Overall | 29 (15.6) | 233 (41) | 210 (34) | 125 (47) | 4.90 (1.13) | 3.52 (1.61) | 4.47 (.93) |

Note: Standard deviations across individuals in parentheses

Fig. 3.

Frequency distributions of the fixation durations (top panel) and saccade lengths (bottom panel)

Overall, fixation durations are shortest for the scene viewing (ad exploration) task (mean 233 ms). Fixations in reading (mean 257 ms) are slightly, but not significantly, longer than in search (mean 254 ms). Fixation durations are most variable for search (SD = 166 ms), followed by reading (SD = 145 ms). Our results also show that fixation durations are skewed to the right, with median fixation durations much shorter than their means. In general, the average fixation durations identified by our algorithm are similar to those in previous accounts summarized above. For instance, like Rayner (2009), we find that fixation durations for reading are in the same range as those for visual search (Rayner: silent reading, 225–250 ms; visual search, 180–250 ms). Rayner reports slightly longer fixation durations for scene perception (260–330 ms). However, in another study he and his coauthors reported significantly shorter fixation durations in ad processing (234 ms for fixations on text vs. 251 ms for fixations on pictorials; Rayner, Miller, & Rotello, 2008), which are relatively close to what we find in our study. The top panel of Fig. 3 contains the distributions of the fixation durations across the three tasks. The figure shows that fixation durations have a skewed distribution, comparable to, for example, Fig. 2 of Henderson and Hollingworth (1998). Like Henderson and Hollingworth, we find that the modal fixation duration is the same across tasks. However, unlike Henderson and Hollingworth, we find a substantially higher proportion of long fixation durations and a lower proportion of fixations below 100 ms in the reading task. This may be due to our specific reading tasks, which included reading in a foreign language; this may require more time for comprehension and lead to longer fixations.
In addition, unlike some prior research, our scene viewing tasks involve advertisements, which are multimodal stimuli composed of both pictorial and textual information. This may explain why we do not replicate previous findings that fixation durations during reading tend to be somewhat shorter than those in scene viewing.

Saccade size was largest for the scene viewing task (mean 4.90°) and smallest for the reading task (mean 1.94°), with saccade lengths during search in between (mean 3.86°). As with fixation durations, these saccade sizes are similar to those reported in previous studies, suggesting that the algorithm identifies appropriate velocity thresholds. Saccade sizes are highly variable, with standard deviations of similar magnitude to the means, and they vary significantly across tasks. Saccade lengths are also skewed to the right: except for reading, median lengths are more than 1° shorter than the corresponding means (see Table 5). This is shown in the bottom panel of Fig. 3, which represents the distribution of saccade lengths across the three tasks. Like Henderson and Hollingworth (1998), we find that the modal saccade length for reading is substantially higher than for scene viewing (ad exploration).

Individual differences were much more important than task differences in accounting for within-fixation variability of the POR. Nevertheless, a linear mixed effects model shows that task differences play a significant role in explaining the characteristics of fixations and, especially, saccades. For fixation durations, individual differences account for 49% of the variation, but task differences still account for a significant 5%. For saccade lengths, individual differences account for only 12%, with 61% due to task differences. These results are in line with the findings of Rayner et al. (2007) and Castelhano and Henderson (2008), and they are the first to quantify the size of individual and task effects on fixations and saccades.

Importance of individual specific threshold velocities

Because individual differences in within-fixation variability and their effects on velocity-based thresholds are large, we investigate their influence on fixation and saccade characteristics further. Table 6 compares the characteristics of fixations and saccades computed by our algorithm with those computed by an alternative algorithm. The alternative uses only task-specific velocity thresholds for both eyes, which is the common procedure in current velocity-based algorithms. For the alternative algorithm, we used the average of the individual-specific velocity thresholds, as presented in Table 3, as the fixation thresholds for all individuals. Table 6 presents statistics for three eye-movement measures: average number of fixations, mean fixation duration, and average saccade size. It reports four statistics:

- the average difference;
- the mean absolute deviation (MAD): for each measure, the mean across individuals of the absolute difference between the values computed with the two methods;
- the mean absolute percentage deviation (MAPD): the MAD expressed as a percentage of the mean of the measure in question;
- the correlation between the values of each measure computed with the two methods.
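The comparison statistics in Table 6 can be computed per measure from two vectors of participant-level values, as in the sketch below. The text's definition of MAPD, "the MAD expressed as a percentage of the mean", can be read in two ways, so the sketch returns both. One reading is the per-participant relative deviation, averaged. The other is the MAD divided by the mean of the measure. Which one underlies Table 6 is an assumption. Table 6 reports MAPD as a proportion (e.g., .21), so no factor of 100 is applied. Function and key names are hypothetical.

```python
import numpy as np

def compare_methods(individual, pooled):
    """Agreement between a per-participant measure computed with individual-specific
    thresholds (individual) and with task-average thresholds (pooled)."""
    a = np.asarray(individual, dtype=float)
    b = np.asarray(pooled, dtype=float)
    diff = a - b
    mad = np.abs(diff).mean()
    return {
        "mean_difference": diff.mean(),
        "MAD": mad,
        # Two readings of MAPD (assumptions): per-participant relative deviation,
        # averaged, and MAD relative to the mean of the measure
        "MAPD_per_participant": np.mean(np.abs(diff) / np.abs(a)),
        "MAD_over_mean": mad / a.mean(),
        "correlation": np.corrcoef(a, b)[0, 1],
    }


stats = compare_methods([100, 200, 300], [110, 190, 300])  # toy numbers, not study data
```

In this toy example, the mean difference is zero while the MAD is not. This mirrors the pattern discussed below, where aggregate means agree but individual-level values diverge.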

Table 6.

Comparison of eye-movement measures computed with algorithms that use individual- and task-specific versus only task-specific velocity thresholds

| Measure | Statistic | Overall | Reading | Search | Ad exploration |
|---|---|---|---|---|---|
| Number of fixations | Mean difference | –1.53 | –2.78 | –.32 | –1.26 |
| | MAD | 19.27 | 46.15 | 4.16 | 5.82 |
| | MAPD | .21 | .27 | .18 | .18 |
| | Correlation | .90 | .55 | .92 | .84 |
| Fixation durations (ms) | Mean difference | 1.24 | –.02 | –2.14 | 4.65 |
| | MAD | 56.98 | 71.15 | 55.85 | 45.26 |
| | MAPD | .22 | .26 | .22 | .19 |
| | Correlation | .14 | –.08 | .38 | .07 |
| Saccade size (°) | Mean difference | –.01 | –.00 | –.06 | .02 |
| | MAD | .56 | .34 | .63 | .70 |
| | MAPD | .17 | .18 | .17 | .16 |
| | Correlation | .89 | .59 | .82 | .69 |

Note: The reported statistics are as follows. Mean difference: the overall mean of the eye-movement measure computed using individual-specific velocity thresholds, minus the overall mean computed using the average velocity threshold across individuals, within a specific task. MAD: mean absolute deviation between the measures computed using individual and average velocity thresholds. MAPD: mean absolute percentage deviation between the measures computed using individual and average velocity thresholds. Correlation: correlation coefficient between the measures computed using individual and average velocity thresholds

As shown in Table 6, the overall differences in the reported means of the three eye-movement measures are very small between algorithms that incorporate or ignore individual differences in within-fixation variation of the POR. The average differences are –1.53 fixations, 1.24 ms of fixation duration, and –0.01° of saccade size. Table 6 shows that this holds by and large for all tasks. That the aggregate effect is small on average is reassuring, given that prior studies have used thresholds that are fixed across individuals and report eye-movement statistics averaged across samples.

However, and importantly, inspection of the correspondence of eye-movement measures at the individual level reveals large and significant differences for all eye-movement measures. At the individual level, the mean absolute deviation (MAD) of the estimated number of fixations ranges from 4.16 for search to 46.15 for reading. This corresponds to an overall mean absolute percentage deviation (MAPD) of 21%. The magnitude of this difference increases with the length of the task, reading generally being the longest task. Moreover, the correlations between the numbers of fixations computed by the two algorithms can be low: for reading it is only .55, although the overall correlation is high (.90). This reveals the importance of accounting for individual-specific, and not just task-specific, fixation thresholds, in particular when interest focuses on individual-level characterization of the eye-movement process.

A similar pattern is found for average fixation durations. At the individual level, the MAD is 56.98 ms overall and as much as 71.15 ms for reading. Similarly, the correlations between the average fixation durations computed with our algorithm and with the alternative are low, ranging from .28 for reading to only .20 for ad exploration. The results for average saccade sizes follow a similar pattern, with absolute differences ranging from .34° (18% MAPD) for reading to .70° (16% MAPD) for ad exploration. Although the overall correlation between computed average saccade sizes is relatively high (.89), the correlation is only .59 for reading (a mere 35% shared variance).

These results imply that if the goal is to characterize aggregate eye-movement patterns across samples of individuals and/or differences between tasks and stimuli, using only task-specific fixation thresholds will yield approximately unbiased results. This applies to prior research that used thresholds constant across individuals. However, our results also reveal that if the goal is to characterize eye-movement patterns at the individual level, it is essential to allow for individual differences in the variability of the POR. Doing so is even more important when the focus is on understanding the coordination of binocular viewing.

Importance of binocular information

The algorithm can easily be applied to the POR of only one eye (see Footnote 1 in Sect. 2.2). However, as Table 2 suggests, using the POR of only one eye may discard valuable information for identifying fixations. Such a loss of information is likely because the correlation between the velocities of the two eyes is low. Table 7 reports the average difference, the mean absolute deviation (MAD), the mean absolute percentage deviation (MAPD), and the correlation between the eye-movement measures computed with the algorithm applied to the POR of both eyes versus the POR of only the left eye. The results in Table 7 clearly show a bias in all three measures: when using information from one eye only, the algorithm overestimates fixation durations by 19% and saccade lengths by 16%, and consequently finds 14% fewer fixations overall. The reason is that the POR of the second eye provides additional evidence for the algorithm to qualify high velocities of the POR as potential saccades. The results in Table 7 also explain why our algorithm finds fixation durations and saccade lengths that are somewhat shorter than previously reported measures: previous research mostly uses velocity thresholds based on a single eye, which on average produces slightly longer fixation durations and longer saccades.
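A small numerical sketch can show why a second eye adds evidence. It assumes, purely for illustration, that velocity noise is independent between the eyes, so that the joint squared Mahalanobis distance of a binocular velocity sample is the sum of the two monocular distances and is compared with a chi-square cutoff with 4 rather than 2 degrees of freedom. This is not a description of how the BIT algorithm combines the eyes; the distances of 7.0 and the 1% tail probability are hypothetical values.

```python
import math

def chi2_cutoff_2df(alpha):
    # Upper-tail quantile for 2 df: P(X > x) = exp(-x / 2).
    return -2.0 * math.log(alpha)

def chi2_cutoff_4df(alpha):
    # Upper-tail quantile for 4 df: P(X > x) = exp(-x / 2) * (1 + x / 2),
    # a decreasing function of x, solved here by bisection.
    lo, hi = 0.0, 200.0
    for _ in range(200):
        mid = (lo + hi) / 2
        if math.exp(-mid / 2) * (1 + mid / 2) > alpha:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

alpha = 0.01
d2_left, d2_right = 7.0, 7.0  # hypothetical squared distances for one velocity sample
print(d2_left > chi2_cutoff_2df(alpha))             # False: one eye alone stays below 9.21
print(d2_left + d2_right > chi2_cutoff_4df(alpha))  # True: pooled evidence exceeds 13.28
```

In this toy case, a velocity that looks like noise in either eye alone becomes a candidate saccade once both eyes are considered.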

Table 7.

Comparison of eye-movement measures computed with the algorithm using the POR of both eyes versus only the left eye

| Measure | Statistic | Overall | Reading | Search | Ad exploration |
|---|---|---|---|---|---|
| Number of fixations | Mean difference | 13.65 | 33.37 | 3.00 | 3.47 |
| | MAD | 13.79 | 33.37 | 3.19 | 3.71 |
| | MAPD | .14 | .17 | .13 | .12 |
| | Correlation | .99 | .96 | .98 | .98 |
| Fixation durations (ms) | Mean difference | –44.69 | –57.74 | –46.07 | –32.24 |
| | MAD | 47.64 | 57.75 | 52.53 | 35.40 |
| | MAPD | .19 | .21 | .22 | .16 |
| | Correlation | .79 | .92 | .61 | .78 |
| Saccade size (°) | Mean difference | –.46 | –.34 | –.47 | –.56 |
| | MAD | .51 | .34 | .52 | .65 |
| | MAPD | .16 | .19 | .16 | .14 |
| | Correlation | .97 | .93 | .95 | .89 |

Note: The reported statistics are: Mean difference: the overall mean of the eye-movement measure in question computed using individual-specific velocity thresholds based on both eyes, minus the overall mean computed using individual-specific velocity thresholds based on only the left eye; MAD: mean absolute deviation between the measures computed using velocity thresholds based on both eyes and on only the left eye; MAPD: mean absolute percentage deviation between the measures computed using velocity thresholds based on both eyes and on only the left eye; Correlation: correlation coefficient between the measures computed using velocity thresholds based on both eyes and on only the left eye

Study 2

Description and experimental setup

As discussed in the description of our algorithm, it is independent of the eye-tracking machine and its sampling frequency. The data analyzed so far were collected on an eye tracker with a relatively low sampling frequency (50 Hz) and relatively low accuracy, because it compensates for head movements. Such an eye tracker is popular in applied research, but basic research requires a tracker with higher sampling frequency and accuracy (supplemented, for instance, by a chinrest or bite bar to immobilize the head during tracking). Therefore, we also demonstrate the performance of the algorithm on data collected with a different machine, a Fourward Technologies Generation V dual-Purkinje eye tracker (Buena Vista, VA). With a bite bar, it has an accuracy of <10 min of arc and a precision of about 1 min of arc, at a sampling rate of 200 Hz. The eye-tracking data we use were published by van der Linde, Rajashekar, Bovik, and Cormack (2009) as the DOVES database. Among other things, this dataset contains the raw POR records of one eye of 29 observers who viewed a total of 101 scenes, each for 5 s. For a more detailed description, we refer to the original publication of this dataset by van der Linde, Rajashekar, Bovik, and Cormack (2009).

The DOVES database contains the raw POR data of only one eye. We therefore applied our algorithm to these single-eye records (as explained above, our algorithm can easily be applied to the POR of one eye). On the other hand, the accuracy of the eye tracker in this study is much higher (see Fig. 4 for two examples of the POR in this study). Next, we present the results of applying our algorithm to the DOVES database. Although this is not our primary purpose, this dataset also allows us to compare the performance of our algorithm with the one applied by van der Linde et al. (2009). They use the algorithm recommended by the manufacturer, which defines a fixation as a period during which the POR remains within a diameter of 1° for at least 100 ms.
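The manufacturer's exact implementation is not described here, so the function below is only our own greedy sketch of the stated rule: a run of consecutive samples counts as a fixation if all pairwise distances stay within 1° and it lasts at least 100 ms, which is 20 samples at 200 Hz. The function name `diameter_fixations` and its parameters are hypothetical.

```python
import math

def diameter_fixations(xs, ys, rate_hz=200.0, max_diameter=1.0, min_duration_ms=100.0):
    """Greedy dispersion-based fixation detection (illustrative sketch).

    A fixation is a run of consecutive samples whose pairwise distances are all
    <= max_diameter (degrees) and that lasts at least min_duration_ms.
    Returns (start, end) sample indices with end exclusive.
    """
    points = list(zip(xs, ys))
    min_len = max(1, round(min_duration_ms * rate_hz / 1000.0))
    fixations = []
    start = 0
    while start < len(points):
        end = start + 1
        # Extend the run while the next sample stays within max_diameter of every sample in it.
        while end < len(points) and all(
            math.dist(points[end], p) <= max_diameter for p in points[start:end]
        ):
            end += 1
        if end - start >= min_len:
            fixations.append((start, end))
            start = end  # continue after the detected fixation
        else:
            start += 1  # run too short: slide the start forward by one sample
    return fixations
```

Under a rule of this kind, consecutive fixations whose samples all lie within 1° of one another are reported as a single fixation.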

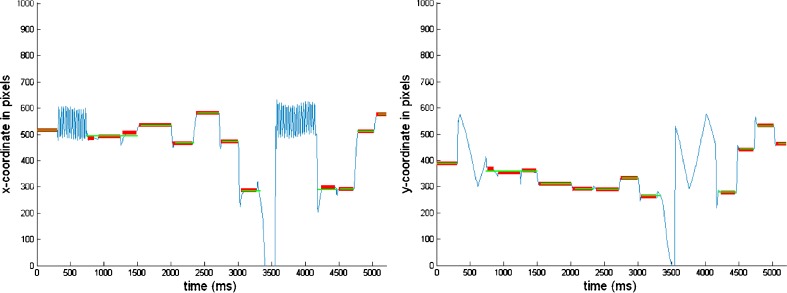

Fig. 4.

Illustrating the BIT algorithm on observed PORs for two individuals in the DOVES database. The three plots at the top correspond to participant 2 and the bottom plots to participant 21, both viewing picture 2. The left scatter plots show the velocities of the POR, expressed as differenced x- and y-coordinates. The red ellipses in these plots represent the estimated thresholds for distinguishing fixations from saccades (i.e., velocities outside the ellipse are labeled as candidate saccades). The two right plots in each row show the x- and y-coordinates of the POR, respectively, with estimated fixation positions superimposed (red: the proposed algorithm, which determines individual-specific velocity thresholds; green: the manufacturer's algorithm as used by van der Linde et al. (2009))

Results

Within-fixation variability

Figure 4 presents the variability of the POR of two observers. The two left plots show the velocity of the POR and the individual-specific velocity thresholds computed by our algorithm. The right plots show the x- and y-coordinates of the POR and the fixations identified by our algorithm (red) and those reported by van der Linde et al. (green). Clearly, and as expected, the within-fixation variation in this study is much lower than in study 1, which leads to much smaller thresholds for identifying fixations and saccades. On average, our algorithm finds standard deviations of the POR within fixations of 2.77° (median: 1.68°) in the horizontal direction and 1.94° (median: 1.62°) in the vertical direction. Furthermore, within-fixation variation of the POR in the horizontal and vertical directions is on average unrelated in this study (average correlation: –.02). However, there are substantial differences in the within-fixation variability of the POR across individuals and tasks (SD = 7.84 in the x-direction and SD = 1.51 in the y-direction). For instance, in Fig. 4 the within-fixation variabilities of participant 2 (plots at the top of Fig. 4) are 2.10° and 2.00° in the horizontal and vertical direction, respectively, while for participant 21 (plots at the bottom of Fig. 4) these values are .95° and 1.12°. These results again show the importance of allowing for individual differences in velocity thresholds to identify fixations and saccades.
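The ellipses in Fig. 4 can be approximated with a short, dependency-free sketch: estimate a robust center and covariance of the sample-to-sample velocities (differenced x- and y-coordinates) with a simplified minimum covariance determinant (MCD) estimator, and label velocities outside a chi-square tolerance ellipse as candidate saccades. This is our own illustration, not the BIT implementation: the coverage fraction `h_frac = 0.75`, the tail probability `alpha = 0.01`, the number of random starts, and the omission of the usual reweighting step are all assumptions. It handles a single eye, as in the DOVES data.

```python
import math
import random

def mean_cov(points):
    """Sample mean and 2x2 covariance (sxx, sxy, syy) of (vx, vy) pairs."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points) / (n - 1)
    syy = sum((p[1] - my) ** 2 for p in points) / (n - 1)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / (n - 1)
    return (mx, my), (sxx, sxy, syy)

def det2(cov):
    sxx, sxy, syy = cov
    return sxx * syy - sxy * sxy

def sq_mahalanobis(p, mean, cov):
    """Squared Mahalanobis distance of p from mean under a 2x2 covariance."""
    sxx, sxy, syy = cov
    dx, dy = p[0] - mean[0], p[1] - mean[1]
    return (syy * dx * dx - 2 * sxy * dx * dy + sxx * dy * dy) / det2(cov)

def mcd_2d(points, h_frac=0.75, n_starts=20, max_steps=50, seed=0):
    """Simplified MCD: random 3-point starts refined by concentration (C-)steps."""
    rng = random.Random(seed)
    h = max(3, int(h_frac * len(points)))
    best_mean, best_cov, best_det = None, None, math.inf
    for _ in range(n_starts):
        mean, cov = mean_cov(rng.sample(points, 3))
        if det2(cov) <= 1e-12:
            continue  # collinear start; try another
        prev_det = math.inf
        for _ in range(max_steps):
            # C-step: refit on the h points closest to the current fit.
            closest = sorted(points, key=lambda p: sq_mahalanobis(p, mean, cov))[:h]
            new_mean, new_cov = mean_cov(closest)
            d = det2(new_cov)
            if d <= 1e-12 or d >= prev_det:
                break  # converged (determinant no longer decreases) or degenerate
            mean, cov, prev_det = new_mean, new_cov, d
        if prev_det < best_det:
            best_mean, best_cov, best_det = mean, cov, prev_det
    # Consistency factor for bivariate normal data:
    # c = h_frac / P(chi2_4 <= q), where q is the h_frac quantile of chi2_2.
    q = -2.0 * math.log(1.0 - h_frac)
    c = h_frac / (1.0 - math.exp(-q / 2.0) * (1.0 + q / 2.0))
    return best_mean, tuple(c * s for s in best_cov)

def candidate_saccades(xs, ys, alpha=0.01):
    """Flag velocities outside the (1 - alpha) tolerance ellipse."""
    vel = [(x1 - x0, y1 - y0) for x0, x1, y0, y1 in zip(xs, xs[1:], ys, ys[1:])]
    center, cov = mcd_2d(vel)
    cutoff = -2.0 * math.log(alpha)  # chi2 quantile with 2 df
    return [sq_mahalanobis(v, center, cov) > cutoff for v in vel], center, cov
```

Because the ellipse center is estimated rather than fixed at zero, the sketch can also capture a systematic drift in mean velocity.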

The within-fixation variability is much lower in this study, suggesting that our algorithm picks up not only individual and task differences but also differences due to instrumentation. Furthermore, for some individual-task combinations we find extremely high within-fixation variability of the POR. We manually investigated these individual-task combinations and found extremely high noise during some periods of the eye-tracking recordings in study 2. Figure 5 presents such a case, in which participant 6 is viewing image 2. Our algorithm finds a within-fixation variability of 90.3 in the horizontal x-direction and 14.3 in the vertical y-direction, indicating very high noise. This shows that our algorithm is useful not only to identify fixations but also to identify potential recording problems during eye tracking.
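The manual screening described above could be supported by a simple automatic rule. The sketch below flags individual-task combinations whose estimated within-fixation variability lies far above that of the other recordings. The rule (median plus three scaled median absolute deviations) and the function name `flag_noisy_trials` are our assumptions, not part of the BIT algorithm.

```python
import statistics

def flag_noisy_trials(sd_by_trial, k=3.0):
    """Return keys whose within-fixation variability exceeds median + k * scaled MAD.

    sd_by_trial: dict mapping an (individual, task) key to its estimated
    within-fixation variability in one direction.
    """
    values = list(sd_by_trial.values())
    med = statistics.median(values)
    # 1.4826 * MAD estimates the standard deviation for normally distributed values.
    mad = 1.4826 * statistics.median([abs(v - med) for v in values])
    cutoff = med + k * mad
    return sorted(key for key, sd in sd_by_trial.items() if sd > cutoff)
```

For example, with hypothetical values `{("A", 1): 2.1, ("B", 1): 0.95, ("C", 1): 90.3, ("D", 1): 1.7, ("E", 1): 1.5}`, only `("C", 1)` is flagged. A median-based cutoff is used so that the noisy recordings themselves do not inflate the threshold.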

Fig. 5.

Using the BIT algorithm to identify PORs with relatively high noise. The blue lines in these plots represent the x- (left) and y- (right) coordinates of the POR of participant 6 while viewing the second image. Estimated fixation positions are superimposed on these plots (red: the proposed algorithm, which determines individual-specific velocity thresholds; green: the manufacturer's algorithm as used by van der Linde et al. (2009)). Our algorithm reports a high within-fixation variability (90.3 in the x-direction and 14.3 in the y-direction), indicating high noise of the POR

In study 1, we found that the mean of the within-fixation variability was not significantly different from zero. Interestingly, in this study the overall means of the within-fixation variability are on average positive and significantly different from zero (mean x-direction: .19°, mean y-direction: .02°). The mean in the horizontal x-direction in particular is substantial, suggesting that the eye tends to drift slightly to the right during a fixation. This effect is illustrated in the bottom-left plot of Fig. 4, where the center of the ellipse lies slightly to the right (mean x-direction: .20°). Allowing for a non-zero mean of the within-fixation variability is therefore important in this specific study, in which immobilizing the head (here with a bite bar) may have caused a systematic within-fixation movement of the eye.

Descriptive statistics of eye-movement characteristics

Using the BIT algorithm, we find on average 16.3 (SD = 2.6) fixations per 5-s viewing task. The average fixation duration is 257 ms (median = 255 ms, SD = 46.3 ms). This is somewhat longer than the fixation durations during the ad exploration task in study 1, and somewhat shorter than those reported by Rayner (2009) for scene perception (260–330 ms). The average saccade length is 2.7° (median = 2.73°, SD = .92°). This is much shorter than what we found in study 1 during ad exploration, and also much shorter than what Rayner (2009) reported for scene perception (4–5°).
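Descriptive statistics of this kind can be derived from a list of detected fixations. In the following sketch each fixation is an (onset, offset, x, y) tuple in temporal order; treating saccade size as the Euclidean distance between consecutive fixation positions is an assumption, as is the function name `describe`.

```python
import math
import statistics

def describe(fixations):
    """Summarize one trial.

    fixations: list of (onset_ms, offset_ms, x_deg, y_deg) tuples in temporal order.
    """
    durations = [off - on for on, off, _, _ in fixations]
    # Assumption: saccade size = distance between consecutive fixation positions.
    sizes = [
        math.hypot(x1 - x0, y1 - y0)
        for (_, _, x0, y0), (_, _, x1, y1) in zip(fixations, fixations[1:])
    ]
    return {
        "n_fixations": len(fixations),
        "mean_duration_ms": statistics.mean(durations),
        "median_duration_ms": statistics.median(durations),
        "mean_saccade_deg": statistics.mean(sizes) if sizes else float("nan"),
    }
```

Within a fixed 5-s trial, an algorithm that reports fewer fixations will tend to report longer average fixation durations, since roughly the same viewing time is divided among fewer fixations.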

Table 8 compares the eye-movement characteristics found by our algorithm with those found by the algorithm used by van der Linde et al. (2009), who used standard manufacturer settings. Their eye-movement characteristics differ from ours. The manufacturer algorithm systematically finds fewer fixations than ours (an average of 12.1 fixations, 4.13 fewer than our algorithm), leading to longer fixation durations (mean difference = –172.54 ms) and longer saccades (mean difference = –.74°). The mean fixation duration found by the standard algorithm was 429 ms, which is longer than durations reported in previous studies. Visual inspection of the individual PORs with the identified fixations superimposed shows that the standard algorithm merges fixations that our algorithm separates. Although there are no absolute standards for the definition and computation of oculomotor measures in the study of cognitive processes (Inhoff & Radach, 1998), the descriptive statistics and visual inspection suggest that our algorithm identifies fixations and saccades better than the manufacturer algorithm applied by van der Linde et al. (2009). The right-hand plots in Fig. 4 illustrate two examples. For participant 2 (top plots in Fig. 4), the standard algorithm does a reasonable job at the beginning of the task, but it identifies only two fixations during the last 1,500 ms, whereas our algorithm suggests six. Similarly, for participant 21, the standard algorithm identifies only one fixation during the first second and two during the final second, whereas our algorithm identifies two and three, respectively.