Abstract

Relational rules such as ‘same’ or ‘different’ are mastered by humans and non-human primates and are considered a form of abstract conceptual thinking because they require relational learning beyond perceptual generalization. Here, we investigated whether an insect, the honeybee (Apis mellifera), can form a conceptual representation of an above/below spatial relationship. In experiment 1, bees were trained with differential conditioning to choose a variable target located above or below a black bar that acted as a constant referent throughout the experiment. In experiment 2, two visual stimuli were aligned vertically: one was the referent, which was kept constant throughout the experiment, and the other was the target, which was variable. In both experiments, the distance between the target and the referent, and their location within the visual field, were systematically varied. In both cases, bees succeeded in transferring the learned concept to novel stimuli while preserving the trained spatial relation, thus showing an ability to manipulate this relational concept independently of the physical nature of the stimuli. Bees did not use the absolute location of the referent in the visual field as a low-level cue to solve the task. The honeybee is thus capable of conceptual learning despite having a miniature brain, showing that such an elaborate form of learning is not a prerogative of vertebrates.

Keywords: above/below relationship, concept learning, rule learning, visual cognition, honeybee, Apis mellifera

1. Introduction

In cognitive sciences, concepts or categories constitute representations of typical entities or situations encountered in an individual's world [1–3]. They promote cognitive economy by sparing individuals from having to learn every particular instance of such entities or situations. Instead, individuals generate broad classifications of items so that they can transfer appropriate responses to novel instances that fulfil the basic criteria defining the concept or category.

Categorical classification is thought to be governed to some extent by perceptual similarities. In other words, it is possible to classify different items within a class based on specific features that define the category. For instance, a robin, a sparrow and even a dodo are positive instances of the category ‘bird’ because they share specific features, such as a beak, feathers and wings, that group them together in the same class. A higher level of classification corresponds to grouping stimuli not necessarily on the basis of perceptual similarity but on the basis of relational rules linking different instances together. Relational rules such as ‘same’ or ‘different’, ‘more than’ or ‘less than’ are mastered by humans and non-human primates (e.g. [4–6]) and are considered a form of abstract conceptual cognition because they involve learning beyond perceptual generalization.

For many animals that must operate in complex natural environments, spatial concepts such as ‘right’, ‘left’, ‘above’ and ‘below’ are crucial for moving and orienting appropriately in their environment. Currently, however, relatively few studies have investigated if and how animals master conceptual spatial relations such as above/below or left/right. The few studies performed so far have focused on pigeons [7] and non-human primates such as chimpanzees [8], baboons [9] or capuchins [10]. From the perspective of comparative animal cognition, it is thus relevant to ask whether mastering a spatial concept such as above/below is a prerogative of vertebrates traditionally characterized as efficient learners, such as the species cited above, or whether it can also be found in a miniature brain. We have therefore focused on an insect that, despite its phylogenetic distance from vertebrates, is capable of efficient learning and retention: the honeybee Apis mellifera [11].

Honeybees are appealing for studying whether abstract rule learning occurs in an insect because they learn and memorize a variety of complex visual cues to identify food sources, including flowers. While foraging in the field, bees learn to associate visual, olfactory, mechanosensory and spatial cues of flowers with flower rewards of nectar and pollen [11,12]. Their visual capacities can be studied because free-flying bees can be trained to choose specific visual targets, on which the experimenter offers a drop of sucrose solution as the equivalent of a nectar reward (see review in [11]). After training, bees can be tested with different stimuli in order to determine the visual strategies employed for stimulus recognition. Using this protocol, it has been shown that bees are capable of higher-order forms of visual learning that have been studied mainly in vertebrates [11,13,14]: they categorize both artificial patterns [15,16] (see [17] for a review) and pictures of natural scenes [18], they exhibit top-down modulation of their visual perception [19], and they master both delayed matching-to-sample and delayed non-matching-to-sample discriminations by following a rule of sameness and of difference, respectively [20,21], as well as a rule of numerosity [22]. These performances indicate that bees can effectively deal with relational concepts.

Here, we studied whether honeybees learn an above/below relationship between visual stimuli and transfer it to novel stimuli that are perceptually different from those used during training. We thus asked whether conceptual learning based on spatial relationships is possible in a brain that, owing to its small number of neurons (fewer than 1 million, compared with 100 billion in humans), could be considered limited in its computational capacities.

2. Material and methods

(a). General procedure

Free-flying honeybees A. mellifera, Linnaeus, from a single colony located 30 m from the test site were allowed to collect 0.2 M sucrose solution from a gravity feeder. Individual bees marked with a colour spot on the thorax were trained to fly into a Y-maze located 10 m from the feeder to collect 1 M sucrose solution delivered on the back walls of the apparatus [23]. The maze, covered by an ultraviolet-transparent Plexiglas ceiling, was placed on an outdoor table and illuminated by natural daylight. Only one bee was present in the Y-maze at a time to ensure independent decision making.

The entrance of the Y-maze led to a decision chamber, where the honeybee could choose between the two arms of the maze. Each arm was 40 × 20 × 20 cm (L × H × W). The back walls of the maze (20 × 20 cm) were placed 15 cm from the decision chamber and were covered by a white, ultraviolet (UV)-reflecting background on which the stimulus patterns were presented.

Bees were trained using a differential conditioning protocol, in which one stimulus, presented on one of the back walls of the Y-maze, was rewarded with 1 M sucrose solution while a different stimulus was penalized with 60 mM quinine solution [24,25]. Solutions were delivered by means of a transparent micropipette (5 mm diameter) located in the centre of each visual target. The pipette subtended a visual angle of 1.9° as seen from the decision chamber and was thus below the angular threshold for visual detection established for honeybees [26]. Moreover, as both maze arms presented the same pipette, it could not be used as a discrimination cue.

Each training trial consisted of one visit of the experimental bee to the maze, ending with its uptake of sucrose solution (i.e. a foraging bout). If the bee chose the rewarded stimulus, it could drink sucrose solution ad libitum. If, however, it chose the non-rewarded stimulus, it was allowed to taste the quinine solution and then to fly to the alternative arm presenting the rewarded stimulus to imbibe the sucrose solution. The bee then flew back to the hive and returned to the maze 3–5 min later for the next training trial. During its absence, the sides of the rewarded and punished stimuli were interchanged following a pseudorandom sequence (i.e. the same side was not rewarded more than twice in succession) to avoid positional (side) learning. On each training trial, only the first choice of the bee was recorded for statistical analysis. Acquisition curves were obtained by calculating the frequency of correct choices per block of five trials.
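
The pseudorandom side schedule and the block-wise acquisition score described above can be illustrated with a short Python sketch. It is not the authors' procedure: it assumes that "not rewarded more than twice" means no more than two consecutive trials on the same side, that first choices are coded 1 (correct) or 0 (incorrect), and the helper names `side_sequence` and `block_percentages` are hypothetical.

```python
import random

def side_sequence(n_trials, max_run=2, seed=None):
    """Hypothetical helper: list of 'L'/'R' reward sides in which no side
    occurs more than max_run times in succession."""
    rng = random.Random(seed)
    sides = []
    for _ in range(n_trials):
        side = rng.choice("LR")
        # If the last max_run trials were all on this side, switch to the other side.
        if len(sides) >= max_run and all(s == side for s in sides[-max_run:]):
            side = "R" if side == "L" else "L"
        sides.append(side)
    return sides

def block_percentages(first_choices, block_size=5):
    """Percentage of correct first choices (1 = correct, 0 = incorrect)
    in consecutive blocks of block_size trials."""
    percentages = []
    for start in range(0, len(first_choices), block_size):
        block = first_choices[start:start + block_size]
        percentages.append(100.0 * sum(block) / len(block))
    return percentages

# Example with invented outcomes for ten trials (two blocks of five).
print(side_sequence(10, seed=1))
print(block_percentages([0, 1, 0, 1, 1, 1, 1, 0, 1, 1]))  # [60.0, 80.0]
```

Flipping the side only when a third consecutive repeat would occur keeps the schedule random otherwise; a stricter scheme could also balance the total number of left and right rewards.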

Following the last acquisition trial, non-rewarded tests were performed with fresh, non-rewarded stimuli. During each test, the first choice was recorded and contacts with the surface of the targets were counted cumulatively for 45 s. The choice proportion for each of the two test stimuli was then calculated. Each test was done twice, interchanging the sides of the stimuli to control for side preferences. Three refresher trials with the reinforced training stimuli were interspersed between tests to ensure that foraging motivation did not decay owing to non-rewarded test experiences.

(b). Stimuli

Stimuli were presented on a white UV-reflecting paper of constant quality covering the back walls of the maze. Pairs of vertically aligned stimuli were presented on each back wall. By definition, the ‘referent’ was the stimulus that was below or above and acted as a reference for the ‘target’, which was located above or below it, respectively. In experiment 1, the referent was always a horizontal black bar, 10 cm long, above or below which different targets were presented. In experiment 2, the referent was either a black disc 3 cm in diameter or a 4 × 4 cm black cross, depending on the group of bees considered. In both experiments, the targets were variable, so that the bee had to learn the relationship rule rather than a specific pattern. Within a trial or a test, stimulus pairs in the left and right arms of the maze were identical except for their spatial relationship, thus excluding the use of low-level cues for discrimination such as differences in the centre of gravity, black area or total pattern frequency [27].

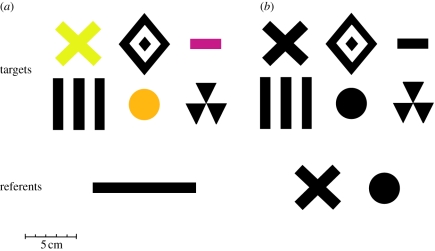

In experiment 1 (figure 1a), six targets were presented above the black bar used as referent. Three of the targets (a 5 × 5 cm vertical grating, a 4 × 4 cm radial three-sectored pattern and a 5 × 6 cm concentric diamond pattern) were achromatic ‘black’ and were printed with a high-resolution laser printer. The other three targets (a 4 × 4 cm cross, a disc 3 cm in diameter and a 1 × 3 cm small bar) were chromatic and were cut from HKS-N coloured papers 3N, 71N and 26N, respectively (K + E Stuttgart, Stuttgart–Feuerbach, Germany), which appeared yellow, ochre and purple to the human eye. All colours were well above the discrimination threshold and thus distinguishable by bees according to both the Colour Opponent Coding space [28] and the Hexagon colour space [29] models of bee visual processing.

Figure 1.

Stimuli used in the experiments. (a) Experiment 1: during training, the targets were presented above and below the referent bar. (b) Experiment 2: only achromatic patterns were used. During training, the targets were presented above and below the referent pattern that was a cross or a disc depending on the group of bees trained.

The black bar used as referent subtended a visual angle of 37° to the centre of the maze's decision chamber. In their largest extension, the targets subtended visual angles ranging from 12° to 22°. The grating stripes, the cross bars and the black and white diamonds that composed the concentric diamond pattern were all 1 cm wide, which corresponded to a visual angle of 4° from the decision chamber. Sectors in the radial pattern covered 2 cm in their largest extension, thus subtending a visual angle of 8° to the bees' eye when deciding between stimuli. An angular threshold of 5° has been reported for the detection of chromatic or achromatic discs of varying size [26]. In a different experiment [30], in which horizontal and vertical black and white gratings of varying spatial frequency had to be discriminated, single stripes could be resolved if they subtended a threshold angle of 2.3°. These angular values ensure that our disc and striped stimuli were detectable and resolvable by honeybees [26,30].
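
The visual angles quoted above are consistent with the standard relation θ = 2·arctan(s / 2d) for an object of extent s viewed face-on from distance d. The Python sketch below is only a check of that arithmetic, assuming d = 15 cm (the wall-to-decision-chamber distance) and an object centred on the line of sight; an object placed off-centre on the back wall would subtend a slightly smaller angle. The function name `visual_angle_deg` is hypothetical.

```python
import math

def visual_angle_deg(size_cm, distance_cm=15.0):
    """Full visual angle (degrees) subtended by an object of extent size_cm,
    centred on the line of sight at distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))

# Object sizes taken from the text; a 15 cm viewing distance is assumed for all.
for label, size in [("pipette (0.5 cm)", 0.5),
                    ("referent bar (10 cm)", 10.0),
                    ("1 cm stripe", 1.0),
                    ("2 cm radial sector", 2.0)]:
    print(f"{label}: {visual_angle_deg(size):.1f} deg")
# pipette (0.5 cm): 1.9 deg
# referent bar (10 cm): 36.9 deg
# 1 cm stripe: 3.8 deg
# 2 cm radial sector: 7.6 deg
```

Rounded, these values match the 1.9°, 37°, 4° and 8° given in the text.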

In experiment 2 (figure 1b), only achromatic, black stimuli were used. The referent was either a cross or a disc as described for experiment 1. The six stimuli used as targets were as described for experiment 1, except that for experiment 2 all stimuli were achromatic.

(c). Experiment 1

Compound stimuli consisted of a target (figure 1a) placed above or below a referent black bar (figures 1a and 2a). One group of bees (n = 4) was trained to choose the rewarded ‘target above bar’ spatial relation and to avoid the penalized ‘target below bar’ spatial relation; another group of bees (n = 4) was trained with reversed contingencies (i.e. target above bar penalized and target below bar rewarded). Each bee was trained for 30 trials using five of the six available target stimuli. During training, each of the five targets was presented six times in a randomized sequence. From one trial to the next, the side of reward, the target, its distance to the referent bar and the bar's location were pseudorandomized (figure 2a); only the fact that the ‘target above bar’ relation was rewarded and the ‘target below bar’ relation was penalized was kept constant for bees of the ‘above group’, while the opposite was true for the ‘below group’. Following training, each bee was presented with a non-rewarded transfer test with a novel target located above or below the referent. The transfer-test target had not been used during training and was thus novel to the individual bee (figure 1a). The stimulus selected as the transfer-test target varied randomly between bees.

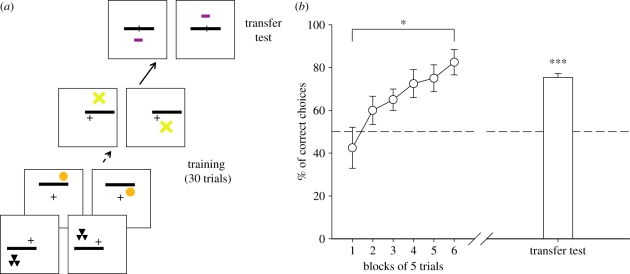

Figure 2.

Experiment 1. (a) Example of the conditioning and testing procedure. Half of the bees were rewarded on the ‘target above bar’ relation whereas the other half was rewarded on the ‘target below bar’ relation. The transfer test was not rewarded. (b) Acquisition curve during training (percentage of correct choices as a function of blocks of five trials) and performance (cumulative choices during 45 s test) in the non-rewarded transfer test (white bar). Data shown are means and s.e.m. (n = 8). Bees succeeded in learning the rule based on the above versus below relationship and in transferring the concept to novel stimuli (*p < 0.05; ***p < 0.001).

(d). Experiment 2

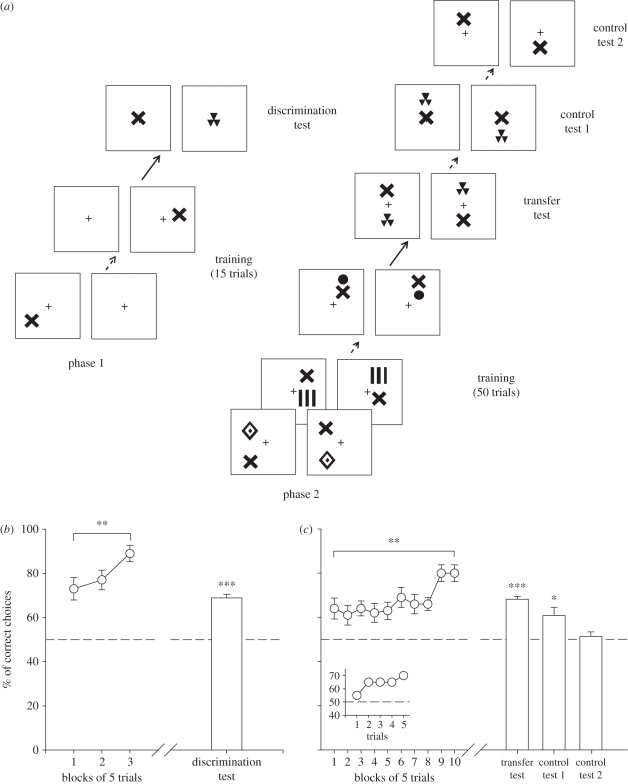

In a pre-training phase (Phase 1), bees were subjected to an absolute conditioning protocol [23] for 15 trials, in which the stimulus subsequently used as the constant referent throughout the experiment was rewarded with sucrose solution when presented in one arm of the maze. The other arm presented a white background alone, associated with quinine solution. This absolute conditioning was performed to encourage the bees to focus on the referent during the subsequent training phase, in which the above/below relationship was trained relative to it. For one group of bees, the referent was a cross (n = 10), while for another group it was a disc (n = 10). The target used within each group was varied pseudorandomly between trials (figure 3a). After pre-training, a discrimination test opposing the referent and a pattern chosen randomly from the set of available targets (‘test pattern’) was performed to verify that bees did indeed discriminate the referent (figure 3a). This pattern was not used during the following training phase.

Figure 3.

Experiment 2. (a) Example of the conditioning and testing procedure. Half of the bees were rewarded on the ‘target above referent’ relation whereas the other half was rewarded on the ‘target below referent’ relation. The referent pattern was either the disc or the cross depending on the group of bees trained. The transfer test was not rewarded. (b) Phase 1 (pre-training): acquisition curve during pre-training (percentage of correct choices as a function of blocks of five trials) and performance (cumulative choices during 45 s test) in the non-rewarded discrimination test (white bar). Data shown are means and s.e.m. (n = 20). Bees learned to choose the referent pattern and to discriminate it from other patterns used as targets in the subsequent training phase (**p < 0.01; ***p < 0.001). (c) Phase 2 (training): acquisition curve during training (percentage of correct choices as a function of blocks of five trials) and performance (cumulative choices during 45 s test) in the non-rewarded tests (white bars). Data shown are means and s.e.m. (n = 20 for acquisition curve and transfer test, and n = 8 for controls 1 and 2). The inset shows acquisition performance during the first five trials, which make up the first training block. Bees learned the concept of above/below and transferred it to novel stimuli. Controls 1 and 2 show that the spatial location of the referent on the background was not used as a discrimination cue to resolve the task (*p < 0.05; **p < 0.01; ***p < 0.001).

After pre-training, bees were subjected to a 50-trial training phase (Phase 2) to select either the ‘target above referent’ (above group) or the ‘target below referent’ relationship (below group) in a differential conditioning task. Bees in the above group were rewarded whenever a variable target was above the referent (disc or cross depending on the group of bees); for these bees, the same stimuli presented in a reversed relationship (i.e. with the target always below the referent) were penalized with quinine solution. Bees in the below group were trained with the reversed contingencies. From one trial to the next, the positions of, and distance between, the target and the referent were constantly varied, keeping only the above/below relationship constant as the predictor of reward and punishment (figure 3a). Excluding the pattern used for the test following pre-training (see above) and the pattern used as referent, all four remaining patterns (figure 1b) were used as targets during training. Each of the four patterns was therefore presented 12 or 13 times in a random sequence during the 50 trials.
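
The target schedules described in the methods (five targets presented six times each over 30 trials in experiment 1; four targets presented 12 or 13 times each over 50 trials in experiment 2) can be generated as a balanced, shuffled sequence. The Python sketch below is an assumption about how such a schedule might be built, not the authors' documented procedure; the function `balanced_schedule` and the pattern labels "A" to "D" are hypothetical placeholders.

```python
import random
from collections import Counter

def balanced_schedule(targets, n_trials, seed=None):
    """Shuffled list of n_trials target labels in which every target
    appears either floor(n_trials / k) or ceil(n_trials / k) times."""
    rng = random.Random(seed)
    base, extra = divmod(n_trials, len(targets))
    # Randomly choose which targets receive one additional presentation.
    order = list(targets)
    rng.shuffle(order)
    schedule = []
    for i, target in enumerate(order):
        schedule.extend([target] * (base + (1 if i < extra else 0)))
    rng.shuffle(schedule)  # randomize the presentation order across trials
    return schedule

# Experiment 2-like case: four placeholder targets over 50 trials.
counts = Counter(balanced_schedule(["A", "B", "C", "D"], 50, seed=3))
print(sorted(counts.values()))  # [12, 12, 13, 13]
```

Because 50 = 4 × 12 + 2, two targets receive 13 presentations and two receive 12; with five targets over 30 trials every target appears exactly six times.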

After training was completed, bees were subjected to a transfer test in which the test pattern was introduced as the target in an above/below relationship (figure 3a). Additionally, eight of the 20 bees were subjected to two further controls aimed at verifying that bees used the relative position of both target and referent, and not just the fact that in most cases the referent appeared in the lower or upper visual field in the above or below group, respectively. In control 1, the referent was positioned in the middle of the background for both the rewarded and the punished stimulus (figure 3a) so that its absolute position could not help discrimination. Because a target was still present in this test, the above/below relationships were preserved. In control 2, the referent was presented alone in the lower versus the upper part of the background (figure 3a) to determine whether its absolute position was an orientation cue used by the bees, instead of its position relative to the target, which was now absent.

(e). Statistical analysis

Performance of balanced groups during acquisition was compared using repeated-measures ANOVA with group and trial block as factors. The dependent variable was the percentage of correct first choices of each individual bee in each block of five trials. Performance during the tests was analysed in terms of the proportion of correct choices per test, producing a single value per bee to exclude pseudoreplication. A binomial test was used to analyse performance in terms of the bees' first choices, while a one-sample t-test was used to test the null hypothesis that the proportion of correct choices cumulated during the 45 s test did not differ from a theoretical value of 50 per cent. Comparisons between groups and between tests were made using independent two-sample t-tests and paired-samples t-tests, respectively.
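
The two test statistics named above can be sketched with the Python standard library alone. This is an illustration under stated assumptions, not the authors' analysis code: the binomial test assumes chance p = 0.5 and offers both a two-sided and a one-sided (upper-tail) version because the text does not state the sidedness; only the t statistic and its degrees of freedom are computed, and a p-value would then be read from Student's t distribution (e.g. with a statistics package). The function names and all example data are invented.

```python
import math
import statistics

def binomial_p(k, n, two_sided=True):
    """Exact binomial test of k successes in n trials against chance p = 0.5."""
    total = 2 ** n
    lower = sum(math.comb(n, i) for i in range(0, k + 1)) / total  # P(X <= k)
    upper = sum(math.comb(n, i) for i in range(k, n + 1)) / total  # P(X >= k)
    if not two_sided:
        return upper  # one-sided: more correct choices than expected by chance
    return min(1.0, 2 * min(lower, upper))

def one_sample_t(values, mu0=50.0):
    """t statistic and degrees of freedom for H0: mean(values) == mu0."""
    n = len(values)
    se = statistics.stdev(values) / math.sqrt(n)  # standard error of the mean
    return (statistics.mean(values) - mu0) / se, n - 1

# Invented example: 8 of 8 bees make a correct first choice.
print(binomial_p(8, 8))                   # 0.0078125
print(binomial_p(8, 8, two_sided=False))  # 0.00390625
# Invented per-bee percentages of correct cumulative choices in one test.
t, df = one_sample_t([70, 72, 75, 78, 80, 74, 77, 76])
print(f"t({df}) = {t:.2f}")
```

Computing one value per bee before testing, as the text describes, is what keeps n equal to the number of bees rather than the number of contacts.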

3. Results

(a). Experiment 1

To test whether honeybees can extract an abstract above/below relationship and classify visual instances based on this relative spatial relationship, we used target stimuli presented above or below a horizontal bar (figure 1a). Honeybees were individually trained in a differential conditioning procedure in which one spatial relation (e.g. target above bar) was associated with sucrose solution while the other relation (e.g. target below bar) was associated with quinine solution (figure 2a). One group of bees was rewarded on the ‘target above bar’ relation while another group was rewarded on the ‘target below bar’ relation. After training, bees were subjected to a non-rewarded transfer test in which a novel target (not used during training) was presented above or below the bar (figure 2a). This experiment allows two alternative hypotheses to be tested. If bees responded on a strictly perceptual basis, performance should deteriorate in the transfer test because of the introduction of the unknown target stimulus. If, by contrast, performance in the transfer test remained high, this would indicate that the bees had formed an abstract above/below concept to solve the novel visual problem.

Both groups of bees (above group and below group) learned the task, as their performance increased significantly over the six blocks of five trials (repeated-measures ANOVA; n = 8 bees; block effect: F5,30 = 3.5, p < 0.05). There was no group effect (F1,6 = 0.1, p = 0.81), showing that acquisition was not influenced by the particular spatial relationship (above or below) rewarded. Data from both groups were therefore pooled and presented as a single curve in figure 2b.

In the transfer test, performance was also independent of the particular spatial relationship (above or below) rewarded (two-sample t-test, t6 = 0.55, p = 0.60), so results of both groups were pooled and presented as a single bar (figure 2b). Here and henceforth, the test performances shown correspond to the cumulative choices of the bees during the 45 s test; similar performance levels were obtained when considering the first choice in the tests (not shown). Despite the introduction of an unknown stimulus as the target, bees chose the correct spatial relationship in 75.4 ± 1.8 per cent of cases (mean ± s.e.; n = 8 bees). This performance was significantly different from chance whether the first choice (binomial test: p < 0.005) or the cumulative choices during 45 s (one-sample t-test against 50%, t7 = 12.57, p < 0.001) were considered, showing that bees were able to classify visual instances on the basis of a conceptual representation of the above/below relationship.

(b). Experiment 2

In this experiment, we further tested whether bees can learn the above/below concept, but instead of using a salient horizontal bar as the referent, we used two stimuli positioned one above the other, so that one acted as the target and the other as the referent. For one group of bees, the referent was a cross (n = 10), while for another group it was a disc (n = 10; figure 1b). The target used within each group varied from trial to trial in order to promote the extraction of an abstract above/below relationship.

In phase 1 (pre-training), bees were rewarded for choosing the referent stimulus alone (cross or disc, depending on the group; figure 3a). During the three blocks of five pre-training trials, bees quickly learned to choose the arm presenting the referent, independently of the stimulus used (cross or disc; n = 20; block effect: F2,36 = 5.6, p < 0.01; group effect: F1,18 = 2.7, p = 0.12); data for both groups were therefore pooled and presented as a single curve in figure 3b. In the discrimination test, honeybees discriminated between the referent and the alternative stimulus significantly above chance, both for the first choice (binomial test: p < 0.001) and for the cumulative choices (n = 20; t19 = 11.2, p < 0.001; figure 3b), irrespective of the referent trained (t18 = 0.3, p = 0.78).

After pre-training, bees were trained in phase 2 to select either the ‘target above referent’ (above group) or the ‘target below referent’ relationship (below group) in a differential conditioning task in which the referent was constant and the targets were variable. Over 10 blocks of five trials, bees significantly improved their correct choices (n = 20; block effect: F9,144 = 3.1, p < 0.005) irrespective of the group considered (above/below effect: F1,16 = 0.9, p = 0.36; cross/disc effect: F1,16 = 3.4, p = 0.08; figure 3c). Performance of the groups was therefore pooled and presented as a single curve in figure 3c. Performance in the first block was already significantly above chance (t19 = 2.8, p = 0.011) because some bees rapidly learned to make correct choices after their first choice. The inset in figure 3c shows the choice performance for the first five acquisition trials, which make up the first block. The data indicate that bees started by choosing randomly and then rapidly improved their performance to 60–70% correct choices.

After training was completed, bees were subjected to a transfer test in which a novel stimulus was introduced as the target in an above/below relationship (figure 3a). In the transfer test, bees significantly preferred the spatial relationship for which they had been trained (n = 20; first choice: p < 0.001; cumulative choices: t19 = 13.0, p < 0.001; figure 3c), irrespective of the group considered (above/below: t18 = 0.4, p = 0.70; cross/disc: t18 = 0.6, p = 0.55). The bees were therefore able to learn the above/below concept even in this more difficult version of the task.

In addition, eight of the 20 bees were subjected to two further controls aimed at verifying that bees used the relative position of both target and referent, and not just the fact that in most cases the referent appeared in the upper or lower visual field in the below or above group, respectively. In control 1, the referent was located in the middle of the background for both the rewarded and the non-rewarded stimulus pairs so that it could not help the bees choose between them. In this control, bees appropriately chose the stimulus pair presenting the spatial relationship for which they had been trained (figure 3c; n = 8; first choice: p < 0.05; cumulative choices: t7 = 2.9, p < 0.05), irrespective of the group considered (above/below: t6 = 1.1, p = 0.33; cross/disc: t6 = 1.9, p = 0.10). Performance in this test did not differ from that in the transfer test (t7 = 1.6, p = 0.16). In control 2, only the referent was presented, in the upper or the lower part of the background, to determine whether its absolute position was an orientation cue used by the bees instead of its position relative to the target, which was now absent. Bees showed no preference between the two referent locations (figure 3c; n = 8; first choice: p = 0.40; cumulative choices: t7 = 0.7, p = 0.50), irrespective of the group considered (above/below: t6 = 0.08, p = 0.94; cross/disc: t6 = 0.4, p = 0.74). Performance in this test was therefore significantly different from that in the transfer test (t7 = 4.6, p < 0.005) and in control 1 (t7 = 2.6, p < 0.05). Together, these results show that the honeybee can extract a conceptual above/below relationship from a set of training stimuli and transfer this concept to newly encountered stimuli.

4. Discussion

The present work shows that honeybees learn a conceptual spatial relationship based on an above/below relationship between stimuli, irrespective of the physical nature of the stimuli. In both experiments, bees transferred an appropriate response to novel instances of the trained concept in spite of variations in the distance separating the referent and the target and in their spatial location within the visual field, despite the fact that targets were variable and randomized during training, and despite the introduction of novel stimuli in the tests. None of these manipulations affected the performance of the bees, which learned to choose stimuli based on an above/below relationship.

Several examples of perceptual categorical learning have been provided for honeybees (see [17] for review). Honeybees can indeed categorize visual stimuli based on single features, such as bilateral symmetry [15], global orientation [31] and concentric and radial organization [31], and on configurations of features [16,32]. This capacity also applies to natural pictures, which bees can categorize into classes such as closed and radial flower types, plant stems and landscapes [18]. In all these examples, perceptual similarity plays a critical role, as bees classify stimuli based on their physical similarity to a prototype or because they present the basic perceptual features that define the category [33]. Examples of conceptual relational learning, in which the animal's response is driven not by the physical similarity of stimuli but by an abstract relationship or rule binding items irrespective of their physical nature, are, however, rare in the honeybee. So far, bees have been reported to learn both a concept of sameness and one of difference [20,21], which allowed them to solve a delayed matching-to-sample (DMTS) and a delayed non-matching-to-sample (DNMTS) problem, respectively. In these experiments, the bees' response was not driven by stimulus similarity, as bees matched (DMTS) or non-matched (DNMTS) colours with achromatic gratings (and vice versa) and even colours with odours [20]. A DMTS procedure was also used to demonstrate a capacity for numerosity discrimination in honeybees [22]. In this case, bees had to choose the novel stimulus containing the same number of items as the sample. The authors controlled for low-level cues, such as cumulative area and edge length, configuration identity and illusory shape similarity formed by the elements. Their results showed that honeybees can match visual stimuli as long as the number of items does not exceed four.

In a different set-up, inspired by field experiments on honeybee navigation [34], bees were trained to fly into a tunnel to find a food reward after a given number of landmarks [35]. The shape, size and positions of the landmarks were changed across testing conditions in order to exclude confounding factors. As in the DMTS experiment, bees showed a stronger preference for landing after the correct number of landmarks in non-rewarded tests and showed the same limit of four in their counting capacity.

Our results add another example of conceptual learning to the relatively small set of examples documenting this capacity in honeybees. Our findings demonstrate that bees can process above and below spatial relations between visual stimuli and provide, to our knowledge, the first example of above/below conceptual learning in an invertebrate. This ability therefore appears to be present in a variety of animals, although until now it had been studied only in pigeons [7], chimpanzees [8], baboons [9] and capuchins [10]. Our results also support the hypothesis that the learning of conceptual spatial relations is independent of language abilities [36] and challenge the idea that a relatively large vertebrate brain is necessary to perform such high-level cognitive tasks.

Acknowledgements

We thank two anonymous reviewers and L. Chittka for helpful comments and corrections. M.G. and A.A.-W. thank the French Research Council (CNRS) and the University Paul Sabatier (Project APIGENE) for generous support. A.A.-W. was supported by a Travelling Fellowship of The Journal of Experimental Biology, and by the University Paul Sabatier. A.G.D. acknowledges the ARC DP0878968 and DP0987989 for funding support.

Footnotes

One contribution to a Special Feature ‘Information processing in miniature brains’.

References

- 1. Zayan R., Vauclair J. 1998. Categories as paradigms for comparative cognition. Behav. Process. 42, 87–99. (doi:10.1016/S0376-6357(97)00064-8)

- 2. Thagard P. 2005. Mind: introduction to cognitive science. Cambridge, MA: MIT Press.

- 3. Zentall T. R., Wasserman E. A., Lazareva O. F., Thompson R. K. R., Rattermann M. J. 2008. Concept learning in animals. Comp. Cogn. Behav. Rev. 3, 13–45.

- 4. Boysen S. T., Berntson G. G. 1995. Responses to quantity: perceptual versus cognitive mechanisms in chimpanzees (Pan troglodytes). J. Exp. Psychol. Anim. Behav. Process. 21, 82–86. (doi:10.1037/0097-7403.21.1.82)

- 5. Murphy G. L. 2002. The big book of concepts. Cambridge, MA: MIT Press.

- 6. Wright A. A., Katz J. S. 2006. Mechanisms of same/different concept learning in primates and avians. Behav. Process. 72, 234–254. (doi:10.1016/j.beproc.2006.03.009)

- 7. Kirkpatrick-Steger K., Wasserman E. A. 1996. The what and the where of the pigeon's processing of complex visual stimuli. J. Exp. Psychol. Anim. Behav. Process. 22, 60–67. (doi:10.1037/0097-7403.22.1.60)

- 8. Hopkins W. D., Morris R. D. 1989. Laterality for visual-spatial processing in two language-trained chimpanzees (Pan troglodytes). Behav. Neurosci. 103, 227–234. (doi:10.1037/0735-7044.103.2.227)

- 9. Dépy D., Fagot J., Vauclair J. 1999. Processing of above/below categorical spatial relations by baboons (Papio papio). Behav. Process. 48, 1–9. (doi:10.1016/S0376-6357(99)00055-8)

- 10. Spinozzi G., Lubrano G., Truppa V. 2004. Categorization of above and below spatial relations by tufted capuchin monkeys (Cebus apella). J. Comp. Psychol. 118, 403–412. (doi:10.1037/0735-7036.118.4.403)

- 11. Giurfa M. 2007. Behavioral and neural analysis of associative learning in the honeybee: a taste from the magic well. J. Comp. Physiol. A 193, 801–824. (doi:10.1007/s00359-007-0235-9)

- 12. von Frisch K. 1967. The dance language and orientation of bees. Cambridge, MA: Belknap Press.

- 13. Zhang S. W. 2006. Learning of abstract concepts and rules by honeybees. Int. J. Comp. Psychol. 19, 318–341.

- 14. Avarguès-Weber A., Deisig N., Giurfa M. 2010. Visual cognition in social insects. Annu. Rev. Entomol. 56, 423–443.

- 15. Giurfa M., Eichmann B., Menzel R. 1996. Symmetry perception in an insect. Nature 382, 458–461. (doi:10.1038/382458a0)

- 16. Avarguès-Weber A., Portelli G., Benard J., Dyer A. G., Giurfa M. 2010. Configural processing enables discrimination and categorization of face-like stimuli in honeybees. J. Exp. Biol. 213, 593–601. (doi:10.1242/jeb.039263)

- 17. Benard J., Stach S., Giurfa M. 2006. Categorization of visual stimuli in the honeybee Apis mellifera. Anim. Cogn. 9, 257–270. (doi:10.1007/s10071-006-0032-9)

- 18. Zhang S., Srinivasan M. V., Zhu H., Wong J. 2004. Grouping of visual objects by honeybees. J. Exp. Biol. 207, 3289–3298. (doi:10.1242/jeb.01155)

- 19. Zhang S., Srinivasan M. 1994. Prior experience enhances pattern discrimination in insect vision. Nature 368, 330–332. (doi:10.1038/368330a0)

- 20. Giurfa M., Zhang S., Jenett A., Menzel R., Srinivasan M. V. 2001. The concepts of ‘sameness’ and ‘difference’ in an insect. Nature 410, 930–933. (doi:10.1038/35073582)

- 21. Zhang S. W., Bock F., Si A., Tautz J., Srinivasan M. V. 2005. Visual working memory in decision making by honey bees. Proc. Natl Acad. Sci. USA 102, 5250–5255. (doi:10.1073/pnas.0501440102)

- 22. Gross H. J., Pahl M., Si A., Zhu H., Tautz J., Zhang S. 2009. Number-based visual generalisation in the honeybee. PLoS ONE 4, e4263. (doi:10.1371/journal.pone.0004263)

- 23. Giurfa M., Hammer M., Stach S., Stollhoff N., Muller-Deisig N., Mizyrycki C. 1999. Pattern learning by honeybees: conditioning procedure and recognition strategy. Anim. Behav. 57, 315–324. (doi:10.1006/anbe.1998.0957)

- 24. Chittka L., Dyer A. G., Bock F., Dornhaus A. 2003. Psychophysics: bees trade off foraging speed for accuracy. Nature 424, 388. (doi:10.1038/424388a)

- 25. Avarguès-Weber A., de Brito Sanchez M. G., Giurfa M., Dyer A. G. 2010. Aversive reinforcement improves visual discrimination learning in freely-flying honeybees. PLoS ONE 5, e15370. (doi:10.1371/journal.pone.0015370)

- 26. Giurfa M., Vorobyev M., Kevan P., Menzel R. 1996. Detection of coloured stimuli by honeybees: minimum visual angles and receptor specific contrasts. J. Comp. Physiol. A 178, 699–709.

- 27. Ernst R., Heisenberg M. 1999. The memory template in Drosophila pattern vision at the flight simulator. Vis. Res. 39, 3920–3933. (doi:10.1016/S0042-6989(99)00114-5)

- 28. Backhaus W. 1991. Color opponent coding in the visual system of the honeybee. Vis. Res. 31, 1381–1397. (doi:10.1016/0042-6989(91)90059-E)

- 29. Chittka L. 1992. The colour hexagon: a chromaticity diagram based on photoreceptor excitations as a generalized representation of colour opponency. J. Comp. Physiol. A 170, 533–543. (doi:10.1007/BF00199331)

- 30. Srinivasan M. V., Lehrer M. 1988. Spatial acuity of honeybee vision and its spectral properties. J. Comp. Physiol. A 162, 159–172. (doi:10.1007/BF00606081)

- 31. Van Hateren J. H., Srinivasan M. V., Wait P. B. 1990. Pattern recognition in bees: orientation discrimination. J. Comp. Physiol. A 167, 649–654.

- 32. Stach S., Benard J., Giurfa M. 2004. Local-feature assembling in visual pattern recognition and generalization in honeybees. Nature 429, 758–761. (doi:10.1038/nature02594)

- 33. Homa D. 1984. On the nature of categories. In The psychology of learning and motivation: advances in research and theory, vol. 18 (ed. Bower G. H.), pp. 49–94. New York, NY: Academic Press.

- 34. Chittka L., Geiger K. 1995. Can honey bees count landmarks? Anim. Behav. 49, 159–164. (doi:10.1016/0003-3472(95)80163-4)

- 35. Dacke M., Srinivasan M. V. 2008. Evidence for counting in insects. Anim. Cogn. 11, 683–689. (doi:10.1007/s10071-008-0159-y)

- 36. Quinn P. C. 2007. On the infant's prelinguistic conception of spatial relations. In The emerging spatial mind (eds Plumert J. M., Spencer J. P.), pp. 117–141. Oxford, UK: Oxford University Press.