Abstract

Background

In the era of evidence based medicine, with systematic reviews as its cornerstone, adequate quality assessment tools should be available. There is currently a lack of a systematically developed and evaluated tool for the assessment of diagnostic accuracy studies. The aim of this project was to combine empirical evidence and expert opinion in a formal consensus method to develop a tool to be used in systematic reviews to assess the quality of primary studies of diagnostic accuracy.

Methods

We conducted a Delphi procedure to develop the quality assessment tool by refining an initial list of items. Members of the Delphi panel were experts in the area of diagnostic research. The results of three previously conducted reviews of the diagnostic literature were used to generate a list of potential items for inclusion in the tool and to provide an evidence base upon which to develop the tool.

Results

A total of nine experts in the field of diagnostics took part in the Delphi procedure. The Delphi procedure consisted of four rounds, after which agreement was reached on the items to be included in the tool which we have called QUADAS. The initial list of 28 items was reduced to fourteen items in the final tool. Items included covered patient spectrum, reference standard, disease progression bias, verification bias, review bias, clinical review bias, incorporation bias, test execution, study withdrawals, and indeterminate results. The QUADAS tool is presented together with guidelines for scoring each of the items included in the tool.

Conclusions

This project has produced an evidence based quality assessment tool to be used in systematic reviews of diagnostic accuracy studies. Further work to determine the usability and validity of the tool continues.

Background

Systematic reviews aim to identify and evaluate all available research evidence relating to a particular objective. [1] Quality assessment is an integral part of any systematic review. If the results of individual studies are biased and these are synthesised without any consideration of quality then the results of the review will also be biased. It is therefore essential that the quality of individual studies included in a systematic review is assessed in terms of potential for bias, lack of applicability, and, inevitably to a certain extent, the quality of reporting. A formal assessment of the quality of primary studies included in a review allows investigation of the effect of different biases and sources of variation on study results.

Quality assessment is as important in systematic reviews of diagnostic accuracy studies as it is in any other review. [2] However, diagnostic accuracy studies have several unique design features that differ from standard intervention evaluations. Their aim is to determine how good a particular test is at detecting the target condition, and they usually have the following basic structure. A series of patients receive the test (or tests) of interest, known as the "index test(s)", and also a reference standard. The results of the index test(s) are then compared to the results of the reference standard. The reference standard should be the best available method to determine whether or not the patient has the condition of interest. It may be a single test, clinical follow-up, or a combination of tests. Both the terms "test" and "condition" are interpreted in a broad sense. The term "test" is used to refer to any procedure used to gather information on the health status of an individual. This can include laboratory tests, surgical exploration, clinical examination, imaging tests, questionnaires, and pathology. Similarly, "condition" can be used to define any health status, including the presence of disease (e.g. influenza, alcoholism, depression, cancer), pregnancy, or different stages of disease (e.g. an exacerbation of multiple sclerosis).

Diagnostic accuracy studies allow the calculation of various statistics that provide an indication of "test performance" – how good the index test is at detecting the target condition. These statistics include sensitivity, specificity, positive and negative predictive values, positive and negative likelihood ratios, diagnostic odds ratios and receiver operating characteristic (ROC) curves.
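To illustrate how these statistics follow from the 2x2 cross-classification of index test results against the reference standard, the sketch below computes the single-threshold measures. It is our own minimal example rather than part of QUADAS: the class name `TwoByTwo` and the example counts are hypothetical, zero cells are not handled, and ROC curves are omitted because they require results at several test thresholds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TwoByTwo:
    """Counts from cross-classifying the index test against the reference standard."""
    tp: int  # index positive, reference positive
    fp: int  # index positive, reference negative
    fn: int  # index negative, reference positive
    tn: int  # index negative, reference negative

    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    def ppv(self) -> float:
        # Positive predictive value: share of index-positives who have the condition
        return self.tp / (self.tp + self.fp)

    def npv(self) -> float:
        # Negative predictive value: share of index-negatives who do not have the condition
        return self.tn / (self.tn + self.fn)

    def lr_positive(self) -> float:
        return self.sensitivity() / (1 - self.specificity())

    def lr_negative(self) -> float:
        return (1 - self.sensitivity()) / self.specificity()

    def diagnostic_odds_ratio(self) -> float:
        # Equivalent to lr_positive() / lr_negative(); zero cells are not handled here
        return (self.tp * self.tn) / (self.fp * self.fn)


table = TwoByTwo(tp=90, fp=20, fn=10, tn=180)
print(f"sensitivity {table.sensitivity():.2f}, specificity {table.specificity():.2f}")
print(f"LR+ {table.lr_positive():.1f}, LR- {table.lr_negative():.3f}, DOR {table.diagnostic_odds_ratio():.0f}")
```

With these hypothetical counts, sensitivity and specificity are both 0.90 and the diagnostic odds ratio is 81. The predictive values, unlike sensitivity and specificity, also depend on the prevalence of the target condition in the study sample.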

Unique design features mean that the criteria needed to assess the quality of diagnostic test evaluations differ from those needed to assess evaluations of therapeutic interventions. [2] It is also important to use a standardised approach to quality assessment. This should avoid the choice of a quality assessment tool that is biased by preconceived ideas. [1] Although several checklists for the assessment of the quality of studies of diagnostic accuracy exist, none of these have been systematically developed or evaluated, and they differ in terms of the items that they assess. [3]

The aim of this project was to use a formal consensus method to develop and evaluate an evidence based quality assessment tool, to be used for the quality assessment of diagnostic accuracy studies included in systematic reviews.

Methods

We followed the approach suggested by Streiner and Norman to develop the quality assessment tool [4]. Jadad et al [5] also adopted this approach to establish a scale for assessing the quality of randomised controlled studies. This procedure involves the following stages: (1) preliminary conceptual decisions; (2) item generation; (3) assessment of face validity; (4) field trials to assess consistency and construct validity; and lastly (5) the generation of the refined instrument.

Preliminary conceptual decisions

We decided that the quality assessment tool was required to:

1. be used in systematic reviews of diagnostic accuracy

2. assess the methodological quality of a diagnostic study in generic terms (relevant to all diagnostic studies)

3. allow consistent and reliable assessment of quality by raters with different backgrounds

4. be relatively short and simple to complete

'Quality' was defined to include both the internal and external validity of a study: the degree to which estimates of diagnostic accuracy have not been biased, and the degree to which the results of a study can be applied to patients in practice.

We conducted a systematic review of existing systematic reviews of diagnostic accuracy studies to investigate how quality was incorporated into these reviews. The results of this review are reported elsewhere. [9,10] Based on these results, we decided that the quality assessment tool needed to have the potential to be used:

• as criteria for including/excluding studies in a review or in primary analyses

• to conduct sensitivity/subgroup analysis stratified according to quality

• as individual items in meta-regression analyses

• to make recommendations for future research

• to produce a narrative discussion of quality

• to produce a tabular summary of the results of the quality assessment

The implication for the development of the tool was that it needed to be able to distinguish between high and low quality studies. Component analysis, in which the effect of each individual quality item on estimates of test performance is assessed, was adopted as the best approach to incorporate quality into systematic reviews of diagnostic studies. [6,7] We decided not to use QUADAS to produce an overall quality score because of the problems associated with the use of such scores. [8] The quality tool was developed taking these aspects into consideration.
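Component analysis can be illustrated with a deliberately simple sketch: for a single QUADAS item, pool the log diagnostic odds ratio (DOR) separately in studies scored "yes" and in studies scored "no", and compare the two as a relative DOR. This is our own simplification, assuming fixed-effect inverse-variance pooling with the Woolf variance; the paper does not specify a model, and a regression model would usually be preferred in practice. All function names and example data are hypothetical.

```python
import math
from typing import Mapping, Sequence

Study = tuple[int, int, int, int]  # (tp, fp, fn, tn) for one primary study


def log_dor(study: Study) -> tuple[float, float]:
    """Log diagnostic odds ratio and its approximate (Woolf) variance."""
    cells = tuple(float(c) for c in study)
    if 0 in cells:
        # Add 0.5 to every cell when any cell is zero (a common continuity correction)
        cells = tuple(c + 0.5 for c in cells)
    tp, fp, fn, tn = cells
    return math.log((tp * tn) / (fp * fn)), 1 / tp + 1 / fp + 1 / fn + 1 / tn


def pooled_log_dor(studies: Sequence[Study]) -> float:
    """Fixed-effect inverse-variance average of the studies' log DORs."""
    if not studies:
        raise ValueError("no studies in this subgroup")
    estimates = [log_dor(s) for s in studies]
    weights = [1 / var for _, var in estimates]
    return sum(w * y for w, (y, _) in zip(weights, estimates)) / sum(weights)


def relative_dor(studies: Sequence[Study], scores: Sequence[Mapping[int, str]], item: int) -> float:
    """DOR in studies scored "no" on `item` divided by DOR in studies scored "yes".

    Studies scored "unclear" are left out of this comparison (one possible choice).
    """
    yes = [s for s, sc in zip(studies, scores) if sc[item] == "yes"]
    no = [s for s, sc in zip(studies, scores) if sc[item] == "no"]
    return math.exp(pooled_log_dor(no) - pooled_log_dor(yes))


studies = [(90, 20, 10, 180), (95, 5, 5, 95)]
scores = [{10: "yes"}, {10: "no"}]  # hypothetical answers to QUADAS item 10 only
print(f"relative DOR for item 10: {relative_dor(studies, scores, 10):.2f}")
```

A relative DOR above 1 would suggest that studies failing the item report higher accuracy; here the hypothetical study scored "no" has a DOR of 361 against 81 for the study scored "yes". Keeping items separate in this way, rather than summing them, is what allows the effect of each potential bias to be examined.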

Item generation

We produced an initial list of possible items for inclusion in the quality assessment tool, incorporating the results of two previously conducted systematic reviews. Full details of these are reported elsewhere. [3,10,11] The first review examined the methodological literature on diagnostic test assessment to identify empirical evidence for potential sources of bias and variation. [11] The results from this review were summarised according to the number of studies providing empirical, theoretical or no evidence of bias. The second looked at existing quality assessment tools to identify all possibly relevant items and investigated the evidence on which those items are based. [3] The results from this review were summarised according to the proportion of tools that included each item; this formed the basis for the initial list. We phrased each proposed item for the checklist as a question.

Assessment of face validity

The main component of the development of the tool was the assessment of face validity. We chose to use a Delphi procedure for this component. Delphi procedures aim to obtain the most reliable consensus amongst a group of experts by a series of questionnaires interspersed with controlled feedback. [12,13] We felt that the Delphi procedure was the optimum method to obtain consensus on the items to be included in the tool as well as the phrasing and scoring of items. This method allowed us to capture the views of a number of experts in the field and to reach a consensus on the final selection of items for the tool. As each round of the procedure is completed independently, the views of each expert panel member can be captured without others influencing their choices. However, at the same time, consensus can be reached by the process of anonymously feeding back the responses of each panel member in a controlled manner in subsequent rounds.

As diagnostic accuracy research is a specialised field, we decided to include a small number of experts on the panel, rather than a larger number of participants who may have had more limited knowledge of the area. Eleven experts were contacted and asked to become panel members.

The Delphi procedure

General features

Each round of the Delphi procedure included a report of the results from the previous round to provide a summary of the responses of all panel members. We also provided details on how we reached decisions regarding which items to include in, or exclude from, the tool based on the results of the previous round. We reported all other decisions made, for example how to handle missing responses and rephrasing of items, together with the justification for the decisions. These decisions were made by the steering group, comprising the authors who were not panel members (PW, AR, JR, JK), and we asked panel members whether they supported the decisions made. When deciding whether to include an item in the quality assessment tool, we asked panel members to consider the results from the previous round, the comments from the previous round (where applicable), and the evidence provided for each item.

Delphi Round 1

We sent the initial list of possible items for inclusion in the quality assessment tool, divided into four categories (Table 1 [see Additional file 1]), to all panel members. The aim was to collect each panel member's opinion regarding the importance of each item. To help panel members in their decision-making, we summarised the evidence from the reviews for each item. The aims of the quality assessment tool and its desired features were presented. We asked members of the panel to rate each item for inclusion in the quality assessment tool on a five-point Likert scale (strongly agree, moderately agree, neutral, moderately disagree, strongly disagree). We also gave them the opportunity to comment on any of the items included in the tool, to suggest possible rephrasing of questions and to highlight any items that may have been omitted from the initial list.

Delphi Round 2

We used the results of round 1 to select items for which there were high levels of agreement for inclusion in, or exclusion from, the final quality assessment tool. Categories/items rated as "strongly agree" by at least 75% of the panel members who replied in this round were selected for inclusion in the tool. Categories/items that were not rated as "strongly agree" by any panel member were excluded. All remaining categories/items were put forward to be re-rated in round 2; items already selected for inclusion or exclusion were not rated again as part of round 2.
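The round 1 selection rule can be written as a small decision function, shown here as a sketch. It assumes that missing responses have already been dropped, so that percentages are taken over the members who replied; the function name and labels are hypothetical. The 75% threshold is checked with integer arithmetic so that, for example, 6 of 8 respondents counts as exactly 75%.

```python
from typing import Sequence

STRONGLY_AGREE = "strongly agree"


def round1_decision(ratings: Sequence[str]) -> str:
    """Classify one category/item from the Likert ratings of members who replied."""
    if not ratings:
        raise ValueError("no ratings supplied")
    n_strong = sum(r == STRONGLY_AGREE for r in ratings)
    # "At least 75%" is n_strong / n >= 3/4, rearranged to avoid floating point
    if 4 * n_strong >= 3 * len(ratings):
        return "include"
    if n_strong == 0:  # nobody rated it "strongly agree"
        return "exclude"
    return "re-rate in round 2"


eight_replies = ["strongly agree"] * 6 + ["moderately agree"] * 2
print(round1_decision(eight_replies))
```

With eight respondents, as in round 1, six "strongly agree" ratings are therefore enough for inclusion, while a single "strongly agree" is enough to keep an item in play for round 2.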

For the round 2 questionnaire, rather than rating each item on the 5-point Likert scale, we asked panel members to indicate whether they thought that a category or item should be included or excluded from the quality assessment tool. In addition, we asked panel members to answer yes or no to the following questions:

1. Would you like to see a number of "key items" highlighted in the quality assessment tool?

2. Do you endorse the Delphi procedure so far? If no, please give details of the aspects of the procedure which you do not support and list any suggestions you have for how the procedure could be improved.

3. As part of the third round, instructions on how to complete the quality assessment will be provided to you. As we do not want to ask you to invest too much time, the instructions will be drawn up by the steering group. In the third round you will only be asked if you support the instructions and if not, what you would like to change. Do you agree with this procedure?

We described the methods proposed to validate the tool and asked panel members to indicate whether or not they agreed with these methods, and also to suggest any additional validation methods.

Delphi Round 3

We used the results of round 2 to further select items for inclusion in, or exclusion from, the quality assessment tool. All categories rated as "include" by more than 80% of the panel members were selected for inclusion in the tool. Items scored "include" by 75% of the panel members were re-rated as part of round 3. All other items were removed from the tool, and comments regarding rephrasing were incorporated while revising the tool.
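A sketch of the round 2 rule follows. It rests on two assumptions of ours: that the "more than 80%" threshold applies to items as well as categories, and that "by 75%" means at least 75% but not more than 80%. Under that reading, and with eight respondents as in round 2, the only share in the re-rating band is exactly 6 of 8, so 7 or 8 "include" votes lead to inclusion. The function name is hypothetical.

```python
from typing import Sequence


def round2_decision(votes: Sequence[str]) -> str:
    """Classify one category/item from the include/exclude votes of members who replied."""
    if not votes:
        raise ValueError("no votes supplied")
    n, k = len(votes), sum(v == "include" for v in votes)
    if 5 * k > 4 * n:    # more than 80% voted "include"
        return "include"
    if 4 * k >= 3 * n:   # at least 75%, but not more than 80%
        return "re-rate in round 3"
    return "remove"


for k in range(9):  # every possible count with eight respondents
    print(k, round2_decision(["include"] * k + ["exclude"] * (8 - k)))
```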

We presented all items selected for inclusion in the tool at this stage and asked panel members to indicate if they agreed with the proposed phrasing of the items, and if not, to suggest alternative phrasings. As for the round 2 questionnaire, we asked panel members to indicate whether they thought that each item to be re-rated should be included or excluded from the quality assessment tool.

We proposed a scoring system and asked panel members to indicate whether they agreed with it. The proposed system was straightforward: all items would be scored as "yes", "no" or "unclear". We presented further details of the proposed validation methods and again asked panel members to indicate whether they agreed with these methods. We highlighted the aims of the quality assessment tool and asked panel members whether, taking these into consideration, they endorsed the Delphi procedure. We also asked members whether they used the evidence provided from the reviews and the feedback from previous rounds in deciding which items to select for inclusion in the tool. If they did not use this information, we asked them to explain why not.

Lastly, we asked panel members if they would like to see the development of topic- and design-specific items in addition to the generic section of the tool. If they answered yes to this question, we asked them whether they would like to see these items developed through a further Delphi procedure and, if so, whether they would like to be a member of the panel for this procedure. We decided to name the tool the "QUADAS" (Quality Assessment of Diagnostic Accuracy Studies) tool. We produced a background document to accompany QUADAS, covering the items selected for inclusion in the tool up to this point, and asked panel members to comment on it.

Delphi Round 4

We used the results of round 3 to select the final items for inclusion in, or exclusion from, the quality assessment tool. All items rated as 'include' by at least 75% of the panel members were selected for inclusion in the tool. All other items were removed from the tool. We considered comments on the phrasing of items and rephrased items accordingly. The final version of the background document to accompany QUADAS was presented.
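Because a percentage threshold must be met by a whole number of votes, it can help to state the minimum count explicitly. The sketch below, with hypothetical function names, rounds the threshold up using exact fractions: with nine respondents, as in round 3, 75% is 6.75 votes, so at least 7 "include" votes are needed.

```python
import math
from fractions import Fraction


def minimum_votes(n_respondents: int, share: Fraction = Fraction(3, 4)) -> int:
    """Smallest number of "include" votes that reaches `share` of the respondents."""
    # Exact arithmetic: Fraction(3, 4) * 9 == Fraction(27, 4), rounded up to 7
    return math.ceil(share * n_respondents)


def round3_decision(n_include: int, n_respondents: int) -> str:
    return "include" if n_include >= minimum_votes(n_respondents) else "remove"


print(minimum_votes(9))  # 7
```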

Field trials to assess consistency and construct validity

We are currently in the process of evaluating the consistency, validity and usability of QUADAS. The validation process will include piloting the tool on a small sample of published studies, focussing on the assessment of the consistency and reliability of the tool. The tool will also be piloted in a number of diagnostic reviews. Regression analysis will be used to investigate associations between study characteristics and estimates of diagnostic accuracy in primary studies.
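The paper does not state which statistic will be used to assess the consistency and reliability of QUADAS ratings. Cohen's kappa is one common measure of chance-corrected agreement between two reviewers scoring the same items as "yes", "no" or "unclear"; the sketch below assumes that choice, and the function name is hypothetical.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(rater_a: Sequence[str], rater_b: Sequence[str]) -> float:
    """Chance-corrected agreement between two raters' answers to the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected if each rater answered independently at their own marginal rates
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a.keys() | counts_b.keys()) / n**2
    if expected == 1:
        raise ValueError("kappa is undefined when both raters use a single identical category")
    return (observed - expected) / (1 - expected)


reviewer_1 = ["yes", "yes", "no", "unclear"]
reviewer_2 = ["yes", "no", "no", "unclear"]
print(f"kappa = {cohens_kappa(reviewer_1, reviewer_2):.2f}")
```

In this hypothetical example the reviewers agree on three of four items (75%), but because some agreement is expected by chance, kappa is 7/11, or about 0.64.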

Generation of the refined instrument

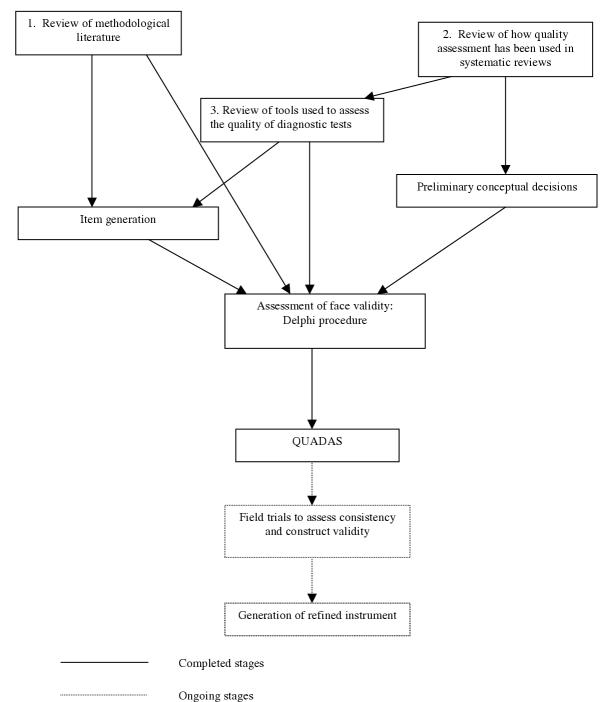

If necessary, we will use the results of the evaluations outlined above to adapt QUADAS. The steps involved in the development of QUADAS are illustrated in Figure 1.

Figure 1.

Flow chart of the tool development process.

Results

Item generation

The systematic reviews produced a list of 28 possible items for inclusion in the quality assessment tool. These are shown in Table 1 [see Additional file 1] together with the results of the systematic reviews on sources of bias and variation, and on existing quality assessment tools. The evidence from the review on sources of bias and variation was summarised as the number of studies reporting empirical evidence (E), theoretical evidence (T) or absence (A) of bias or variability. The number of studies providing each type of evidence is shown in columns 2–4 of Table 1 [see Additional file 1]. The results from the review of existing quality assessment tools were summarised as the proportion of tools covering each item. The proportions were grouped into four categories, I (75–100%), II (50–74%), III (25–49%) and IV (0–24%), and are shown in the final column of Table 1 [see Additional file 1]. For some items, evidence from the reviews was only available for a combination of items rather than for each item individually (e.g. setting and disease prevalence and severity); for these items the evidence for the combination is provided. Table 1 [see Additional file 1] also shows the QUADAS item to which each item in this table corresponds. Evidence from the first review was not available for a number of items; these items were classed as "na".
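The grouping of tool coverage into categories I–IV can be expressed as a simple banding function. The published bands are stated in whole percentages, so a share such as 74.5% falls between them; this sketch assumes each lower boundary is inclusive, which places such shares in the lower band. The function name and inputs (number of tools covering an item, total number of tools) are hypothetical.

```python
from fractions import Fraction


def coverage_category(n_covering: int, n_tools: int) -> str:
    """Band the share of existing tools that include an item into categories I-IV."""
    if n_tools <= 0 or not 0 <= n_covering <= n_tools:
        raise ValueError("need n_tools > 0 and 0 <= n_covering <= n_tools")
    share = Fraction(n_covering, n_tools)  # exact, so 3 of 4 tools is exactly 75%
    if share >= Fraction(3, 4):
        return "I"    # 75-100%
    if share >= Fraction(1, 2):
        return "II"   # 50-74%
    if share >= Fraction(1, 4):
        return "III"  # 25-49%
    return "IV"       # 0-24%


print(coverage_category(3, 4), coverage_category(74, 100), coverage_category(0, 10))
```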

Assessment of face validity: The Delphi procedure

Nine of the eleven people invited to take part in the Delphi procedure agreed to do so. The names of the panel members are listed at the end of this paper.

Delphi Round 1

Eight of the nine people who agreed to take part in the procedure returned completed questionnaires. The ninth panel member did not have time to take part in this round. Following the results of this round, six items were selected for inclusion, one item was removed from the tool, and the remaining items were put forward to be re-rated as part of round 2. Items selected for inclusion were:

1. Appropriate selection of patient spectrum

2. Appropriate reference standard

3. Absence of partial verification bias

4. Absence of review bias (both test and diagnostic)

5. Clinical review bias

6. Reporting of uninterpretable/indeterminate/intermediate results

The item removed from the tool was:

1. Test utility

Panel members made a number of suggestions regarding rephrasing of items. We considered these and made changes where appropriate. Based on some of the comments received we added an additional item to the category "Spectrum composition". This item was "What was the study design?". This item was rated for inclusion in the tool as part of round 2.

Delphi Round 2

Of the nine people invited to take part in round 2, eight returned completed questionnaires. Based on the results of this round, a further four items were selected for inclusion in the tool:

1. Absence of disease progression bias

2. Absence of differential verification bias

3. Absence of incorporation bias

4. Reporting of study withdrawals.

Panel members did not achieve consensus on a further five items; these were re-rated as part of round 3:

1. Reporting of selection criteria

2. Reporting of disease severity

3. Description of index test execution

4. Description of reference standard execution

5. Independent derivation of cut-off points

All other items, including the new item added based on feedback from round 1, were excluded from the process. Based on the comments from round 2, we proposed the following additional items which were included in the round 3 questionnaire:

1. Are there other aspects of the design of this study which cause concern about whether or not it will correctly estimate test accuracy?

2. Are there other aspects of the conduct of this study which cause concern about whether or not it will correctly estimate test accuracy?

3. Are there special issues concerning patient selection which might invalidate test results?

4. Are there special issues concerning the conduct of test which might invalidate test results?

Since none of the panel members were in favour of highlighting a number of key items in the quality assessment tool, this approach was not followed. At this stage, five of the panel members reported that they endorsed the Delphi procedure so far, one did not and two were unclear. The member who did not endorse the Delphi procedure stated that "I fundamentally believe that it is not possible to develop a reliable discriminatory diagnostic assessment tool that will apply to all, or even the majority of diagnostic test studies." One of the comments from a panel member who was "unclear" also related to the problem of producing a quality assessment tool that applies to all diagnostic accuracy studies. The other related to the process used to derive the initial list of items and the problems of suggesting additional items. All panel members agreed to let the steering group produce the background document to accompany the tool. The feedback suggested that there was some confusion regarding the proposed validation methods. These were clarified and re-rated as part of round 3.

Delphi Round 3

All nine panel members invited to take part in round 3 returned completed questionnaires. Agreement was reached on items to be included in the tool following the results of this round.

Three of the five items re-rated as part of this round were selected for inclusion. These were:

1. Reporting of selection criteria

2. Description of index test execution

3. Description of reference standard execution

The other two items and the additional items rated as part of this round were not included in the tool.

The panel members agreed with the scoring system proposed by the steering group. Each of the proposed validation steps was approved by at least 7/9 of the panel members, so these methods will be used to validate the tool. Five of the panel members indicated that they would like to see the development of design- and topic-specific criteria. Of these, four stated that they would like to see this done via a Delphi procedure. The development of these elements will take place after the generic section of the tool has been evaluated.

At this stage, all but one of the panel members stated that they endorsed the Delphi procedure. This member remained unclear as to whether he/she endorsed the procedure and stated that "all my reservations still apply". These reservations related to earlier comments regarding the problems of developing a quality assessment tool which can be applied to all studies of diagnostic accuracy. Seven of the panel members reported using the evidence provided from the systematic reviews to help decide which items to include in QUADAS. Of the two who did not use the evidence, one stated that he/she was too busy and the other that there was no new information in the evidence. Seven of the panel members reported using the feedback from earlier rounds of the Delphi procedure. Of the two who did not, one stated that he/she was "not seeking conformity with other respondents"; the other did not explain why. The two panel members who did not use the feedback were different from the two who did not use the evidence provided by the reviews. These responses suggest that the evidence provided by the reviews did contribute towards the production of QUADAS.

Delphi Round 4

The fourth and final round did not include a questionnaire, although panel members were given the opportunity to feed back any additional comments. Only one panel member provided further feedback. This related mainly to the broadness of the first item included in the tool, and to the fact that several items relate to the reporting of the study rather than directly to its quality.

The QUADAS tool

The tool is structured as a list of 14 questions which should each be answered "yes", "no", or "unclear". The tool is presented in Table 2. A more detailed description of each item together with a guide on how to score each item is provided below.

Table 2.

The QUADAS tool

| Item | Question | Yes | No | Unclear |
|---|---|---|---|---|
| 1. | Was the spectrum of patients representative of the patients who will receive the test in practice? | ( ) | ( ) | ( ) |
| 2. | Were selection criteria clearly described? | ( ) | ( ) | ( ) |
| 3. | Is the reference standard likely to correctly classify the target condition? | ( ) | ( ) | ( ) |
| 4. | Is the time period between reference standard and index test short enough to be reasonably sure that the target condition did not change between the two tests? | ( ) | ( ) | ( ) |
| 5. | Did the whole sample or a random selection of the sample, receive verification using a reference standard of diagnosis? | ( ) | ( ) | ( ) |
| 6. | Did patients receive the same reference standard regardless of the index test result? | ( ) | ( ) | ( ) |
| 7. | Was the reference standard independent of the index test (i.e. the index test did not form part of the reference standard)? | ( ) | ( ) | ( ) |
| 8. | Was the execution of the index test described in sufficient detail to permit replication of the test? | ( ) | ( ) | ( ) |
| 9. | Was the execution of the reference standard described in sufficient detail to permit its replication? | ( ) | ( ) | ( ) |
| 10. | Were the index test results interpreted without knowledge of the results of the reference standard? | ( ) | ( ) | ( ) |
| 11. | Were the reference standard results interpreted without knowledge of the results of the index test? | ( ) | ( ) | ( ) |
| 12. | Were the same clinical data available when test results were interpreted as would be available when the test is used in practice? | ( ) | ( ) | ( ) |
| 13. | Were uninterpretable/ intermediate test results reported? | ( ) | ( ) | ( ) |
| 14. | Were withdrawals from the study explained? | ( ) | ( ) | ( ) |
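Because QUADAS deliberately avoids an overall quality score, one way to record the ratings is as a per-item answer for each study, from which a tabular summary (the count of each answer per item) can be produced. The sketch below assumes that layout; the `Answer` enum, the `summarise` function and the short item labels are our own hypothetical abbreviations of the questions in Table 2, not part of the tool.

```python
from collections import Counter
from enum import Enum
from typing import Mapping


class Answer(Enum):
    YES = "yes"
    NO = "no"
    UNCLEAR = "unclear"


# Hypothetical short labels for the 14 questions in Table 2 (not part of QUADAS itself)
ITEM_LABELS = {
    1: "Representative spectrum",
    2: "Selection criteria described",
    3: "Acceptable reference standard",
    4: "Acceptable delay between tests",
    5: "Partial verification avoided",
    6: "Differential verification avoided",
    7: "Incorporation avoided",
    8: "Index test execution described",
    9: "Reference standard execution described",
    10: "Index test results blinded",
    11: "Reference standard results blinded",
    12: "Relevant clinical information",
    13: "Uninterpretable results reported",
    14: "Withdrawals explained",
}


def summarise(assessments: Mapping[str, Mapping[int, Answer]]) -> dict[int, Counter]:
    """Count yes/no/unclear answers per item across studies; no overall score is formed."""
    table = {item: Counter() for item in ITEM_LABELS}
    for study, answers in assessments.items():
        missing = set(ITEM_LABELS) - set(answers)
        if missing:
            raise ValueError(f"{study}: no answer for items {sorted(missing)}")
        for item in ITEM_LABELS:
            table[item][answers[item]] += 1
    return table


all_yes = {item: Answer.YES for item in ITEM_LABELS}
summary = summarise({"Study A": all_yes, "Study B": {**all_yes, 4: Answer.NO}})
for item, label in ITEM_LABELS.items():
    c = summary[item]
    print(f"{item:>2}. {label:<40} yes={c[Answer.YES]} no={c[Answer.NO]} unclear={c[Answer.UNCLEAR]}")
```

Requiring an answer for every item is a design choice of this sketch: it makes "unclear" an explicit rating rather than something inferred from a missing entry.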

Users' guide to QUADAS

1. Was the spectrum of patients representative of the patients who will receive the test in practice?

a. What is meant by this item

Differences in demographic and clinical features between populations may produce measures of diagnostic accuracy that vary considerably; this is known as spectrum bias. It refers more to the generalisability of results than to the possibility that the study may produce biased results. Reported estimates of diagnostic accuracy may have limited clinical applicability (generalisability) if the spectrum of tested patients is not similar to the patients in whom the test will be used in practice. The spectrum of patients refers not only to the severity of the underlying target condition, but also to demographic features and to the presence of differential diagnosis and/or co-morbidity. It is therefore important that diagnostic test evaluations include an appropriate spectrum of patients for the test under investigation and also that a clear description is provided of the population actually included in the study.

b. Situations in which this item does not apply

This item is relevant to all studies of diagnostic accuracy and should always be included in the quality assessment tool.

c. How to score this item

Studies should score "yes" for this item if you believe, based on the information reported or obtained from the study's authors, that the spectrum of patients included in the study was representative of those in whom the test will be used in practice. The judgement should be based on both the method of recruitment and the characteristics of those recruited. Studies which recruit a group of healthy controls and a group known to have the target disorder will be coded as "no" on this item in nearly all circumstances. Reviewers should pre-specify in the protocol of the review what spectrum of patients would be acceptable taking factors such as disease prevalence and severity, age, and sex, into account. If you think that the population studied does not fit into what you specified as acceptable, the item should be scored as "no". If there is insufficient information available to make a judgement then it should be scored as "unclear".

2. Were selection criteria clearly described?

a. What is meant by this item

This refers to whether studies have provided a clear definition of the criteria used as in- and exclusion criteria for entry into the study.

b. Situations in which this item does not apply

This item is relevant to all studies of diagnostic accuracy and should always be included in the quality assessment tool.

c. How to score this item

If you think that all relevant information regarding how participants were selected for inclusion in the study has been provided then this item should be scored as "yes". If study selection criteria are not clearly reported then this item should be scored as "no". In situations where selection criteria are partially reported and you feel that you do not have enough information to score this item as "yes", then it should be scored as "unclear".

3. Is the reference standard likely to correctly classify the target condition?

a. What is meant by this item

The reference standard is the method used to determine the presence or absence of the target condition. To assess the diagnostic accuracy of the index test, its results are compared with the results of the reference standard, and indicators of diagnostic accuracy are then calculated. The reference standard is therefore an important determinant of the estimated diagnostic accuracy of a test. Estimates of test performance are based on the assumption that the index test is being compared to a reference standard which is 100% sensitive and specific. If there are any disagreements between the reference standard and the index test, then it is assumed that the index test is incorrect. Thus, from a theoretical point of view, the choice of an appropriate reference standard is very important.
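The consequence of an imperfect reference standard can be made concrete with a short calculation. The sketch below computes the sensitivity and specificity of the index test that would be observed, on average, when it is judged against an imperfect reference standard. It assumes that the two tests err independently given true disease status (conditional independence); when their errors are correlated the bias can differ in size or direction. The function name and all input values are hypothetical.

```python
def apparent_accuracy(prevalence, se_index, sp_index, se_ref, sp_ref):
    """Expected sensitivity and specificity of the index test when judged against an
    imperfect reference standard, assuming conditional independence of the two tests."""
    p, q = prevalence, 1 - prevalence
    both_pos = p * se_index * se_ref + q * (1 - sp_index) * (1 - sp_ref)
    ref_pos = p * se_ref + q * (1 - sp_ref)
    both_neg = p * (1 - se_index) * (1 - se_ref) + q * sp_index * sp_ref
    ref_neg = p * (1 - se_ref) + q * sp_ref
    # Every disagreement is counted against the index test, as the text describes
    return both_pos / ref_pos, both_neg / ref_neg


se, sp = apparent_accuracy(prevalence=0.2, se_index=0.90, sp_index=0.90, se_ref=0.95, sp_ref=0.95)
print(f"apparent sensitivity {se:.2f}, apparent specificity {sp:.2f}")
```

In this hypothetical case an index test with true sensitivity and specificity of 0.90, judged against a reference standard that is 95% sensitive and specific, appears to have a sensitivity of about 0.76 and a specificity of about 0.89.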

b. Situations in which this item does not apply

This item is relevant to all studies of diagnostic accuracy and should always be included in the quality assessment tool.

c. How to score this item

If you believe that the reference standard is likely to correctly classify the target condition or is the best method available, then this item should be scored "yes". Making a judgement as to the accuracy of the reference standard may not be straightforward. You may need experience of the topic area to know whether a test is an appropriate reference standard or, if a combination of tests is used, you may have to consider carefully whether these were appropriate. If you do not think that the reference standard was likely to have correctly classified the target condition, then this item should be scored as "no". If there is insufficient information to make a judgement, then this should be scored as "unclear".

4. Is the time period between reference standard and index test short enough to be reasonably sure that the target condition did not change between the two tests?

a. What is meant by this item

Ideally, the results of the index test and the reference standard are collected on the same patients at the same time. If this is not possible and a delay occurs, misclassification due to spontaneous recovery or to progression to a more advanced stage of disease may occur. This is known as disease progression bias. The length of the time period which may cause such bias will vary between conditions. For example, a delay of a few days is unlikely to be a problem for chronic conditions; however, for many infectious diseases a delay of only a few days between performance of the index test and the reference standard may be important. This type of bias may also occur in chronic conditions in which the reference standard involves clinical follow-up of several years.

b. Situations in which this item does not apply

This item is likely to apply in most situations.

c. How to score this item

When to score this item as "yes" depends on the target condition. For conditions that progress rapidly even a delay of several days may be important. For such conditions this item should be scored "yes" if the delay between performance of the index test and the reference standard is very short, a matter of hours or days. However, for chronic conditions disease status is unlikely to change within a week, a month, or even longer. In such conditions longer delays between performance of the index test and the reference standard may be scored as "yes". You will have to make judgements regarding what is considered "short enough". You should think about this before starting work on a review, and define what you consider to be "short enough" for the specific topic area that you are reviewing. If you think the time period between performance of the index test and the reference standard was long enough for disease status to have changed between the two tests then this item should be scored "no". If insufficient information is provided this item should be scored "unclear".
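Defining "short enough" in advance amounts to fixing a review-specific threshold and applying it uniformly. The sketch below assumes the delay is extracted in days and encodes the three-way scoring rule; the function name and both threshold values are hypothetical placeholders that a review team would replace with its own pre-specified definitions.

```python
from typing import Optional

# Hypothetical review-specific definitions of "short enough", fixed before
# data extraction. These values are illustrative, not recommendations.
THRESHOLDS_DAYS = {
    "acute infection": 2,
    "chronic condition": 90,
}


def score_delay(delay_days: Optional[float], short_enough_days: float) -> str:
    """Score item 4 against a pre-specified threshold.

    delay_days: reported interval between index test and reference standard,
        or None when the study does not report it.
    short_enough_days: the review's definition of "short enough".
    """
    if delay_days is None:
        return "unclear"  # insufficient information reported
    if delay_days <= short_enough_days:
        return "yes"
    return "no"
```

The same five-day delay would then score "no" in a hypothetical review of an acute infection but "yes" in a review of a chronic condition, which is the context dependence the item describes.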

5. Did the whole sample, or a random selection of the sample, receive verification using a reference standard?

a. What is meant by this item

Partial verification bias (also known as work-up bias, (primary) selection bias, or sequential ordering bias) occurs when not all of the study group receive confirmation of the diagnosis by the reference standard. If the results of the index test influence the decision to perform the reference standard, biased estimates of test performance may arise. If patients are randomly selected to receive the reference standard, estimates of overall diagnostic performance are, in theory, unbiased. In most cases, however, this selection is not random, possibly leading to biased estimates of the overall diagnostic accuracy.
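A worked example shows the size of the effect. The sketch below uses an entirely hypothetical cohort in which every index-positive patient is verified but only a random 10% of index-negative patients are. It compares the naive estimate from verified patients with the Begg and Greenes correction, a known adjustment that is not part of QUADAS and that is only valid when the decision to verify depends on the index test result alone.

```python
def naive_accuracy(tp, fp, fn, tn):
    """Sensitivity and specificity computed from verified patients only."""
    return tp / (tp + fn), tn / (tn + fp)


def begg_greenes_accuracy(tp, fp, fn, tn, n_index_pos, n_index_neg):
    """Begg-Greenes style correction for partial verification.

    tp, fp, fn, tn: 2x2 counts among patients who received the reference standard.
    n_index_pos, n_index_neg: all patients testing positive / negative on the
        index test, whether verified or not.
    Assumes verification depends only on the index test result.
    """
    # Probability of disease for each index test result, from verified patients.
    p_disease_if_pos = tp / (tp + fp)
    p_disease_if_neg = fn / (fn + tn)
    # Expected diseased / non-diseased counts in the full tested sample.
    diseased_pos = p_disease_if_pos * n_index_pos
    diseased_neg = p_disease_if_neg * n_index_neg
    healthy_pos = (1 - p_disease_if_pos) * n_index_pos
    healthy_neg = (1 - p_disease_if_neg) * n_index_neg
    sensitivity = diseased_pos / (diseased_pos + diseased_neg)
    specificity = healthy_neg / (healthy_neg + healthy_pos)
    return sensitivity, specificity


# Hypothetical cohort of 1000: 200 diseased, true sensitivity 0.8, specificity 0.9,
# giving 240 index positives (160 TP, 80 FP) and 760 index negatives (40 FN, 720 TN).
# All positives are verified; a random 10% of negatives are (4 FN, 72 TN).
VERIFIED = dict(tp=160, fp=80, fn=4, tn=72)
```

Here the naive estimates are roughly 0.98 for sensitivity and 0.47 for specificity, while the correction recovers 0.8 and 0.9. When selection for verification also depends on factors other than the index test result, this correction no longer removes the bias.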

b. Situations in which this item does not apply

Partial verification bias generally only occurs in diagnostic cohort studies in which patients receive the index test before the reference standard. In situations where the reference standard is assessed before the index test, you should first decide whether verification bias could possibly occur, and if not, how to score this item. This may depend on how quality will be incorporated in the review. There are two options: either score this item as "yes", or remove it from the quality assessment tool.

c. How to score this item

If it is clear from the study that all patients, or a random selection of patients, who received the index test went on to receive verification of their disease status using a reference standard, then this item should be scored "yes". This item should be scored "yes" even if the reference standard was not the same for all patients. If some of the patients who received the index test did not receive verification of their true disease state, and the selection of patients to receive the reference standard was not random, then this item should be scored "no". If this information is not reported by the study then it should be scored "unclear".

6. Did patients receive the same reference standard regardless of the index test result?

a. What is meant by this item

Differential verification bias occurs when some of the index test results are verified by a different reference standard. This is especially a problem if these reference standards differ in their definition of the target condition, for example histopathology of the appendix and natural history for the detection of appendicitis. This usually occurs when patients testing positive on the index test receive a more accurate, often invasive, reference standard than those with a negative test result. The link (correlation) between a particular (negative) test result and verification by a less accurate reference standard will affect measures of test accuracy in a similar way to partial verification, but less seriously.
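The mechanism described above can be illustrated with hypothetical numbers. The sketch assumes that index-positive patients receive an error-free reference standard (such as histopathology), while index-negative patients are verified by follow-up that detects only a fixed fraction of their disease and never labels a disease-free patient as diseased. Both assumptions, and the 50% detection rate used below, are illustrative.

```python
def observed_accuracy(tp, fp, fn, tn, followup_detection):
    """Accuracy estimates when index-negative patients get a weaker reference standard.

    tp, fp, fn, tn: true 2x2 counts (index test against true disease status).
    followup_detection: fraction of truly diseased index-negative patients that
        the weaker reference standard correctly identifies. It is assumed to
        never label a disease-free patient as diseased, and index-positive
        patients are assumed to receive an error-free reference standard.
    """
    detected_fn = fn * followup_detection  # still counted as false negatives
    missed_fn = fn - detected_fn           # misclassified as true negatives
    sensitivity = tp / (tp + detected_fn)
    specificity = (tn + missed_fn) / (tn + missed_fn + fp)
    return sensitivity, specificity


# Hypothetical: 100 diseased (80 TP, 20 FN) and 900 non-diseased (810 TN, 90 FP),
# so the true sensitivity is 0.80 and the true specificity is 0.90.
sens, spec = observed_accuracy(tp=80, fp=90, fn=20, tn=810, followup_detection=0.5)
```

With these inputs the observed sensitivity rises to 80/90 (about 0.89) and the observed specificity to 820/910 (about 0.90). The missed cases move from the false-negative cell to the true-negative cell, so sensitivity is inflated far more than specificity.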

b. Situations in which this item does not apply

Differential verification bias is possible in all types of diagnostic accuracy studies.

c. How to score this item

If it is clear that patients received verification of their true disease status using the same reference standard then this item should be scored as "yes". If some patients received verification using a different reference standard this item should be scored as "no". If this information is not reported by the study then it should be scored as "unclear".

7. Was the reference standard independent of the index test (i.e. the index test did not form part of the reference standard)?

a. What is meant by this item

When the result of the index test is used in establishing the final diagnosis, incorporation bias may occur. This incorporation will probably increase the agreement between the index test results and the outcome of the reference standard, and hence lead to overestimates of the various measures of diagnostic accuracy. It is important to note that knowledge of the results of the index test alone does not automatically mean that these results are incorporated in the reference standard. For example, a study investigating MRI for the diagnosis of multiple sclerosis could have a reference standard composed of clinical follow-up, CSF analysis and MRI. In this case the index test forms part of the reference standard. If the same study used a reference standard of clinical follow-up, and the results of the MRI were known when the clinical diagnosis was made but were not specifically included as part of the reference standard, then the index test does not form part of the reference standard.
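The MRI example can be turned into a small illustration of why incorporation inflates accuracy. The sketch assumes a hypothetical composite rule in which a patient is classed as diseased if any component is positive, and compares a composite of follow-up and CSF analysis with the same rule after MRI is added as a third component. The six patients are invented, and real composite reference standards usually use more elaborate rules.

```python
def sens_spec(index, reference):
    """Sensitivity and specificity of index results against a reference (lists of bools)."""
    pairs = list(zip(index, reference))
    tp = sum(i and r for i, r in pairs)
    fn = sum((not i) and r for i, r in pairs)
    tn = sum((not i) and (not r) for i, r in pairs)
    fp = sum(i and (not r) for i, r in pairs)
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical patients: (MRI, clinical follow-up, CSF analysis), True = positive.
PATIENTS = [
    (True, True, True),
    (True, False, False),
    (False, True, False),
    (False, False, False),
    (True, False, False),
    (False, False, False),
]
mri = [m for m, _, _ in PATIENTS]
# Composite reference standard that does not use the index test.
independent_ref = [fu or csf for _, fu, csf in PATIENTS]
# Same "any component positive" rule with the index test incorporated.
incorporated_ref = [m or fu or csf for m, fu, csf in PATIENTS]

independent = sens_spec(mri, independent_ref)
incorporated = sens_spec(mri, incorporated_ref)
```

Against the independent composite, MRI has a sensitivity and specificity of 0.5 each. Once MRI is incorporated, sensitivity rises to 0.75 and specificity to 1.0, because under this rule a positive MRI can never be a false positive.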

b. Situations in which this item does not apply

This item will only apply when a composite reference standard is used to verify disease status. In such cases it is essential that a full definition is provided of how disease status is verified and which tests form part of the reference standard. For studies in which a single reference standard is used this item will not be relevant and should either be scored "yes" or be removed from the quality assessment tool.

c. How to score this item

If it is clear from the study that the index test did not form part of the reference standard then this item should be scored as "yes". If it appears that the index test formed part of the reference standard then this item should be scored as "no". If this information is not reported by the study then it should be scored as "unclear".

8. Was the execution of the index test described in sufficient detail to permit replication of the test?

9. Was the execution of the reference standard described in sufficient detail to permit its replication?

a. What is meant by these items

A sufficient description of the execution of the index test and the reference standard is important for two reasons. Firstly, variation in measures of diagnostic accuracy can sometimes be traced back to differences in the execution of the index test or reference standard. Secondly, a clear and detailed description (or citations) is needed to implement a certain test in another setting. If tests are executed in different ways then this would be expected to affect test performance. The extent of this effect would depend on the type of test being investigated.

b. Situations in which these items do not apply

These items are likely to apply in most situations.

c. How to score these items

If the study reports sufficient details or citations to permit replication of the index test and reference standard then these items should be scored "yes". In other cases these items should be scored "no". In situations where details of test performance are partially reported and you feel that you do not have enough information to score an item as "yes", then it should be scored "unclear".

10. Were the index test results interpreted without knowledge of the results of the reference standard?

11. Were the reference standard results interpreted without knowledge of the results of the index test?

a. What is meant by these items

These items are similar to "blinding" in intervention studies. Interpretation of the results of the index test may be influenced by knowledge of the results of the reference standard, and vice versa. This is known as review bias, and may lead to inflated measures of diagnostic accuracy. The extent to which this may affect test results will be related to the degree of subjectivity in the interpretation of the test result. The more subjective the interpretation, the more likely it is that the interpreter will be influenced by the results of the reference standard when interpreting the index test, and vice versa. It is therefore important to consider the topic area that you are reviewing and to determine whether the interpretation of the index test or reference standard could be influenced by knowledge of the results of the other test.

b. Situations in which these items do not apply

If, in the topic area that you are reviewing, the index test is always performed first then interpretation of the results of the index test will usually be without knowledge of the results of the reference standard. Similarly, if the reference standard is always performed first (for example, in a diagnostic case-control study) then the results of the reference standard will be interpreted without knowledge of the index test. However, if test results can be interpreted at a later date, after both the index test and reference standard have been completed, then it is still important for a study to describe whether the interpretation of each test was performed blind to the results of the other test. In situations where one form of review bias does not apply there are two possibilities: either score the relevant item as "yes" or remove it from the list. If tests are entirely objective in their interpretation then test interpretation is not susceptible to review bias. In such situations review bias may not be a problem and these items can be omitted from the quality assessment tool. Another situation in which this form of bias may not apply is when test results are interpreted in an independent laboratory. In such situations it is unlikely that the person interpreting the test results will have knowledge of the results of the other test (either index test or reference standard).

c. How to score these items

If the study clearly states that the test results (index or reference standard) were interpreted blind to the results of the other test then these items should be scored as "yes". If this does not appear to be the case they should be scored as "no". If this information is not reported by the study then it should be scored as "unclear".

12. Were the same clinical data available when test results were interpreted as would be available when the test is used in practice?

a. What is meant by this item

The availability of clinical data during interpretation of test results may affect estimates of test performance. In this context clinical data are defined broadly to include any information relating to the patient obtained by direct observation, such as age, sex and symptoms. Knowledge of such factors can influence the test result if the test involves an interpretative component. If clinical data will be available when the test is interpreted in practice then these data should also be available when the test is evaluated. If, however, the index test is intended to replace other clinical tests, then clinical data should not be available, or should be available for all index tests. It is therefore important to determine what information will be available when test results are interpreted in practice before assessing studies for this item.

b. Situations in which this item does not apply

If the index test is fully automated and involves no interpretation then this item may not be relevant and can be omitted from the quality assessment tool.

c. How to score this item

If clinical data would normally be available when the test is interpreted in practice and similar data were available when interpreting the index test in the study then this item should be scored as "yes". Similarly, if clinical data would not be available in practice and these data were not available when the index test results were interpreted then this item should be scored as "yes". If this is not the case then this item should be scored as "no". If this information is not reported by the study then it should be scored as "unclear".

13. Were uninterpretable/intermediate test results reported?

a. What is meant by this item

A diagnostic test can produce an uninterpretable/indeterminate/intermediate result with varying frequency depending on the test. These results are often not reported in diagnostic accuracy studies; the uninterpretable results are simply removed from the analysis. This may lead to a biased assessment of the test characteristics. Whether bias will arise depends on the possible correlation between uninterpretable test results and the true disease status. If uninterpretable results occur randomly and are not related to the true disease status of the individual then, in theory, they should not have any effect on test performance. Whatever the cause of uninterpretable results, it is important that they are reported so that their impact on test performance can be determined.
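Reporting uninterpretable results lets a reviewer see how much they matter. One common way to do this, which is not prescribed by QUADAS, is to recompute accuracy after excluding them, after counting them as negative, and after counting them as positive. The sketch below does this for hypothetical counts in which uninterpretable results are more frequent among diseased patients; the function name and policy labels are illustrative.

```python
def accuracy(diseased, healthy, policy):
    """Sensitivity and specificity under a policy for uninterpretable results.

    diseased, healthy: (positive, negative, uninterpretable) counts of index test
        results among patients with and without the target condition.
    policy: "exclude", "as_negative" or "as_positive".
    """
    d_pos, d_neg, d_unint = diseased
    h_pos, h_neg, h_unint = healthy
    if policy == "exclude":
        # Uninterpretable results silently dropped from the analysis.
        return d_pos / (d_pos + d_neg), h_neg / (h_neg + h_pos)
    if policy == "as_negative":
        return (d_pos / (d_pos + d_neg + d_unint),
                (h_neg + h_unint) / (h_pos + h_neg + h_unint))
    if policy == "as_positive":
        return ((d_pos + d_unint) / (d_pos + d_neg + d_unint),
                h_neg / (h_pos + h_neg + h_unint))
    raise ValueError(f"unknown policy: {policy!r}")


# Hypothetical counts: 20 of 100 diseased but only 5 of 100 non-diseased
# patients have an uninterpretable result.
DISEASED = (70, 10, 20)
HEALTHY = (10, 85, 5)
```

Excluding the uninterpretable results gives a sensitivity of 0.875, whereas the two alternative policies give 0.70 and 0.90. Because the uninterpretable results are related to disease status, the choice of policy changes the estimates; without reporting, a reader cannot tell which situation applies.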

b. Situations in which this item does not apply

This item is relevant to all studies of diagnostic accuracy and should always be included in the quality assessment tool.

c. How to score this item

If it is clear that all test results, including uninterpretable/indeterminate/intermediate results, are reported then this item should be scored "yes". If you think that such results occurred but have not been reported then this item should be scored "no". If it is not clear whether all study results have been reported then this item should be scored "unclear".

14. Were withdrawals from the study explained?

a. What is meant by this item

Withdrawals occur when patients leave the study before the results of either or both of the index test and reference standard are known. If patients lost to follow-up differ systematically from those who remain, for whatever reason, then estimates of test performance may be biased.

b. Situations in which this item does not apply

This item is relevant to all studies of diagnostic accuracy and should always be included in the quality assessment tool.

c. How to score this item

If it is clear what happened to all patients who entered the study, for example if a flow diagram of study participants is reported, then this item should be scored as "yes". If it appears that some of the participants who entered the study did not complete the study, i.e. did not receive both the index test and reference standard, and these patients were not accounted for then this item should be scored as "no". If it is not clear whether all patients who entered the study were accounted for then this item should be scored as "unclear".
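The check behind this item is a simple reconciliation of participant flow. The sketch below assumes a reviewer has extracted the number of patients enrolled, the number who received both the index test and the reference standard, and the number of withdrawals the study explains. The function name and these inputs are hypothetical, and in practice judging whether an explanation is adequate still requires reading the study.

```python
from typing import Optional


def score_withdrawals(enrolled: Optional[int], completed: Optional[int],
                      explained: int = 0) -> str:
    """Score item 14 from extracted participant-flow counts.

    enrolled: patients who entered the study (None if not reported).
    completed: patients who received both the index test and the reference
        standard (None if not reported).
    explained: withdrawals the study accounts for.
    """
    if enrolled is None or completed is None:
        return "unclear"  # cannot tell whether all patients were accounted for
    if completed + explained > enrolled:
        raise ValueError("more patients accounted for than were enrolled")
    return "yes" if completed + explained == enrolled else "no"
```

For instance, 120 enrolled patients with 110 completers and 10 explained withdrawals would score "yes", while only 4 explained withdrawals would leave 6 patients unaccounted for and score "no".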

Discussion

This project has produced an evidence based tool for the quality assessment of studies of diagnostic accuracy. The tool is now available to all reviewers involved in systematic reviews of studies of diagnostic accuracy. The final tool consists of a set of 14 items, phrased as questions, each of which should be scored "yes", "no" or "unclear". The tool is simple and quick to complete and does not incorporate a quality score. There are a number of reasons for not incorporating a quality score into QUADAS. Quality scores are only necessary if the reviewer wants to use an overall indicator of quality to weight the meta-analysis, or as a continuous variable in a meta-regression. Since quality scores are very rarely used in these ways, we see no need to introduce such a score. Choices on how to weight items and calculate quality scores are generally fairly arbitrary, so it would be impossible to produce an objective quality score. Furthermore, quality scores ignore the fact that the importance of individual items, and the direction of the potential biases associated with them, may vary according to the context in which they are applied. [6,7] Applying quality scores without considering the individual quality items may therefore dilute or entirely miss potential associations. [8]
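Without a summary score, results are naturally presented item by item. The sketch below assumes each study's assessment is stored as a list of 14 responses ("yes", "no" or "unclear", item 1 first) and tallies the responses per item across studies instead of summing them. The data layout, function name and study labels are hypothetical.

```python
from collections import Counter

RESPONSES = ("yes", "no", "unclear")
N_ITEMS = 14


def item_profile(assessments):
    """Count yes/no/unclear responses per QUADAS item across studies.

    assessments: mapping of study label -> list of 14 responses (item 1 first).
    Returns a dict: item number -> Counter of responses.
    No overall quality score is computed; each item is kept separate.
    """
    profile = {item: Counter() for item in range(1, N_ITEMS + 1)}
    for study, responses in assessments.items():
        if len(responses) != N_ITEMS:
            raise ValueError(f"{study}: expected {N_ITEMS} responses, got {len(responses)}")
        for item, response in enumerate(responses, start=1):
            if response not in RESPONSES:
                raise ValueError(f"{study}, item {item}: invalid response {response!r}")
            profile[item][response] += 1
    return profile


# Two hypothetical studies.
profile = item_profile({
    "study A": ["yes"] * 14,
    "study B": ["no"] + ["unclear"] * 13,
})
```

Keeping the item-level profile lets a reviewer relate specific items, such as blinding or verification, to study results, which is exactly the information a single summed score would discard.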

Experts in the area used evidence provided by systematic reviews of the literature on diagnostic accuracy studies to produce the quality assessment tool. This is the first tool that has been systematically developed in the field of diagnostic accuracy studies. A further strength of this tool is that it will be subjected to a thorough evaluation. Any problems with the tool highlighted by this evaluation will be addressed with the aim of improving the tool. The tool is currently being piloted in 15 reviews of diagnostic accuracy studies covering a wide range of topics, including the diagnosis of multiple sclerosis, the diagnosis of urinary tract infection in children under 5, the diagnosis of urinary incontinence, and myocardial perfusion scintigraphy. We are collecting feedback on reviewers' experience of the use of QUADAS through a structured questionnaire. Anyone interested in helping pilot the tool can contact the authors for a questionnaire. Other proposed work to evaluate the tool includes an assessment of the consistency and reliability of the tool.

There are a number of limitations to this project and to the QUADAS tool. The main problem relates to the development of a single tool that can be applied to all diagnostic accuracy studies. The objective of this project was not to produce a tool to cover everything, but to produce a quality assessment tool that can be used to assess the quality of primary studies included in systematic reviews. We appreciate that different aspects of quality will be applicable to different topic areas and for different study designs. However, QUADAS is the generic part of what in practice may be a more extensive tool incorporating design and topic specific items.

We plan to do further work to develop these design and topic specific sections. We anticipate that certain items may need to be added for certain topic or design specific areas, while in other situations some of the items currently included in QUADAS may not be relevant and may need to be removed. Possible areas where the development of topic specific items may be considered include screening, clinical examination, biochemical tests, imaging evaluations, invasive procedures, questionnaire scales, pathology and genetic markers. Possible design specific areas include diagnostic case-control studies and diagnostic cohort studies. We plan to use a Delphi procedure to develop these sections. The Delphi panel will be larger than the panel used to develop the generic section and will include experts in each of the topic specific areas as well as experts in the methodology of diagnostic accuracy studies. Anyone interested in becoming a member of the Delphi panel for these sections can contact the authors.

One problem in using the QUADAS tool lies in the distinction between the reporting of a study and its quality. Inevitably the assessment of quality relates strongly to the reporting of results; a well-conducted study will score poorly on a quality assessment if its methods and results are not reported in sufficient detail. The recent publication of the STARD document [14] may help to improve the quality of reporting of studies of diagnostic accuracy. The assessment of study quality in future papers should therefore be less limited by the poor quality of reporting that is currently a problem in this area. Currently, studies which fail to report on aspects of quality, for example whether reviewers were aware of the results of the reference standard when interpreting the results of the index test, are generally scored as not having met the relevant quality item. This is often justified on the grounds that faulty reporting generally reflects faulty methods. [15]

Another factor to consider when using QUADAS is the difference between bias and variability. Bias limits the validity of study results, whereas variability may affect their generalisability. QUADAS includes items which cover bias, variability and, to a certain extent, the quality of reporting. The majority of items included in QUADAS relate to bias (items 3, 4, 5, 6, 7, 10, 11, 12 and 14), with two items relating to variability (items 1 and 2) and three to reporting (items 8, 9 and 13).
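The classification above can be encoded directly, for example to group item-level results by the type of problem they address when summarising a review. The mapping below simply restates the item numbers given in the text; the grouping helper is a hypothetical convenience.

```python
# Item -> category, as classified in the text above.
ITEM_CATEGORY = {
    1: "variability", 2: "variability",
    3: "bias", 4: "bias", 5: "bias", 6: "bias", 7: "bias",
    8: "reporting", 9: "reporting",
    10: "bias", 11: "bias", 12: "bias",
    13: "reporting",
    14: "bias",
}


def items_by_category(mapping):
    """Invert an item -> category mapping into category -> sorted item list."""
    grouped = {}
    for item, category in sorted(mapping.items()):
        grouped.setdefault(category, []).append(item)
    return grouped


GROUPED = items_by_category(ITEM_CATEGORY)
```

The grouping yields nine bias items, two variability items and three reporting items, which together account for all 14 items of the tool.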

Conclusions

This project has produced the first systematically developed evidence based quality assessment tool to be used in systematic reviews of diagnostic accuracy studies. Further work to validate the tool is in progress.

Competing interests

None declared.

Authors' contributions

All authors contributed towards the conception and design of the study and read and approved the final manuscript. PW and AWSR participated in the performance of the Delphi procedure, the analysis and interpretation of data, and drafted the article. PMMB, JBR and JK provided supervision for the study and JK and PB obtained the funding for the study.

Supplementary Material

Table 1 – Initial list of items together with review evidence. Table 1 contains the list of 28 possible items for inclusion in the quality assessment tool which were rated using the Delphi procedure. It also contains the results of the systematic reviews on sources of bias and variation, and existing quality assessment tools.

Acknowledgements

We would like to thank Professor Colin Begg, Professor Patrick Bossuyt, Jon Deeks, Professor Constantine Gatsonis, Dr Khalid Khan, Dr Jeroen Lijmer, Mr David Moher, Professor Cynthia Mulrow, and Dr Gerben Ter Riet for taking part in the Delphi procedure.

The work was commissioned and funded by the NHS R&D Health Technology Assessment Programme. The views expressed in this review are those of the authors and not necessarily those of the Standing Group, the Commissioning Group, or the Department of Health.

Contributor Information

Penny Whiting, Email: pfw2@york.ac.uk.

Anne WS Rutjes, Email: a.rutjes@amc.uva.nl.

Johannes B Reitsma, Email: j.reitsma@amc.uva.nl.

Patrick MM Bossuyt, Email: p.m.bossuyt@amc.uva.nl.

Jos Kleijnen, Email: jk13@york.ac.uk.

References

- Glasziou P, Irwig L, Bain C, Colditz G. Systematic reviews in health care: A practical guide. Cambridge: Cambridge University Press; 2001.

- Deeks J. Systematic reviews of evaluations of diagnostic and screening tests. In: Egger M, Davey Smith G, Altman D, editors. Systematic Reviews in Health Care: Meta-analysis in context. Second edition. London: BMJ Publishing Group; 2001.

- Whiting P, Rutjes A, Dinnes J, Reitsma JB, Bossuyt P, Kleijnen J. A systematic review of existing quality assessment tools used to assess the quality of diagnostic research. Submitted.

- Streiner DL, Norman GR. Health measurement scales: a practical guide to their development and use. Oxford: Oxford University Press; 1995.

- Jadad AR, Moore A, Carroll D, Jenkinson C, Reynolds DJ, Gavaghan DJ, McQuay HJ. Assessing the quality of reports of randomised clinical trials: is blinding necessary? Control Clin Trials. 1996;17:1–12. doi: 10.1016/0197-2456(95)00134-4.

- Juni P, Altman DG, Egger M. Assessing the quality of controlled trials. BMJ. 2001;323:42–46. doi: 10.1136/bmj.323.7303.42.

- Juni P, Witschi A, Bloch RM, Egger M. The hazards of scoring the quality of clinical trials for meta-analysis. JAMA. 1999;282:1054–1060. doi: 10.1001/jama.282.11.1054.

- Greenland S. Invited Commentary: A critical look at some popular meta-analytic methods. Am J Epidemiol. 1994;140:290–296. doi: 10.1093/oxfordjournals.aje.a117248.

- Whiting P, Dinnes J, Rutjes AWS, Reitsma JB, Bossuyt PMM, Kleijnen J. A systematic review of how quality assessment has been handled in systematic reviews of diagnostic tests. Submitted.

- Whiting P, Rutjes AWS, Dinnes J, Reitsma JB, Bossuyt PMM, Kleijnen J. The development and validation of methods for assessing the quality and reporting of diagnostic studies. Health Technol Assess.

- Whiting P, Rutjes AWS, Reitsma JB, Glas A, Bossuyt PM, Kleijnen J. A systematic review of sources of variation and bias in studies of diagnostic accuracy. Ann Intern Med. In press.

- Kerr M. The Delphi Process. Remote and Rural Areas Research Initiative, NHS in Scotland; 2001. Available online at http://www.rararibids.org.uk/documents/bid79-delphi.htm.

- The Delphi Technique in Pain Research. Scottish Network for Chronic Pain Research; 2001. Available online at http://www.sncpr.org.uk/delphi.htm.

- Bossuyt P, Reitsma J, Bruns D, Gatsonis C, Glasziou P, Irwig L, Moher D, Rennie D, de Vet H, Lijmer J. The STARD statement for reporting studies of diagnostic accuracy: explanation and elaboration. Clin Chem. 2003;49:7–18. doi: 10.1373/49.1.7.

- Schulz KF, Chalmers I, Hayes RJ, Altman DG. Empirical evidence of bias: dimensions of methodological quality associated with estimates of treatment effects in controlled trials. JAMA. 1995;273:408–412. doi: 10.1001/jama.273.5.408.
