Abstract

Objective: Substantial variations in adherence to guidelines for human immunodeficiency virus (HIV) care have been documented. To evaluate the effectiveness of computerized clinical reminders (CRs) in improving quality of care, ten HIV CRs were implemented at two pilot and eight study sites. The aim of this study was to identify human factors barriers to the use of these CRs.

Design: CR use was observed at eight outpatient clinics for one day each, and semistructured interviews were conducted with physicians, pharmacists, nurses, and case managers.

Measurements: Data consisted of detailed handwritten field notes on how providers interpreted and acted on the CRs, together with responses to interview questions.

Results: Barriers present at more than one site were (1) workload during patient visits (8 of 8 sites), (2) time to document when a CR was not clinically relevant (8 of 8 sites), (3) inapplicability of the CR due to context-specific reasons (9 of 26 patients), (4) limited training on how to use the CR software for rotating staff (5 of 8 sites) and permanent staff (3 of 8 sites), (5) perceived reduction of quality of provider–patient interaction (3 of 23 permanent staff), and (6) the decision to use paper forms to enable review of resident physician orders prior to order entry (2 of 8 sites).

Conclusion: Six human factors barriers to the use of HIV CRs were identified. Reducing these barriers has the potential to increase use of the CRs and thereby improve the quality of HIV care.

Although much has been accomplished in improving the care of patients with human immunodeficiency virus (HIV) over the last decade, there is room for continued improvement. The national HIV Costs and Services Utilization Study (HCSUS) documented substantial variations in care for subgroups of patients and practices. The largest provider of HIV services in the United States, the Veterans Health Administration (VHA), is no exception. For example, a third of VHA patients and almost half of non-VHA patients failed to receive optimal antiretroviral therapy.1 These shortfalls are greater among vulnerable subgroups such as African American and poor patients, in both antiretroviral therapy and opportunistic infection (OI) prophylaxis.2,3

The potential for computerization to improve clinical care has been appreciated for some time.4 In particular, computerized clinical reminders (CRs) have been advocated as a strategy to improve compliance with established clinical guidelines. With CRs, the software rather than the human initiates the human–machine interaction. CRs take advantage of preexisting electronic patient information to alert the provider when an action is recommended. In addition, CRs are “real-time” decision aids in that they prompt providers to consider guideline-based advice when a patient record, and ideally the patient, is in front of the provider. As such, they have the potential to improve quality of care in that they augment the limited memory resource of a time-pressured, frequently interrupted clinician who provides intermittent care to multiple patients. In HIV care, clinical reminders were associated with more timely initiation of recommended practices.5,6

Computerized clinical reminders in the VHA run on top of a complex electronic infrastructure. The VHA began developing an integrated electronic database for national use in the 1970s. The Decentralized Hospital Computer Program (DHCP) was first deployed in 1983 and was renamed the Veterans Health Information Systems and Technology Architecture (VistA) in 1996 to reflect the evolution of the architecture. As early as 1986, a nursing special user interest group began focusing on order entry software, and the first release of Order Entry Results Reporting (OE/RR) followed in 1990. The Computerized Patient Record System (CPRS), which incorporates order entry and other functionality in a graphical user interface (GUI), was first released in 1996. Clinical reminders display on the primary screen of the CPRS software, whose use was mandated nationally in the VHA in 1999.7

CPRS has extensive functionality, including ordering and reviewing laboratory tests, medications, and diagnostic tests, and documenting progress and procedures. A summary list of the CRs that are "due" for an individual patient displays automatically on the primary screen of the CPRS software, and further details about individual CRs can be viewed on demand. A due CR becomes "satisfied" either through an action the system can observe (e.g., ordering a laboratory test), so that documentation is a by-product of following the reminder advice, or, when the provider judges the reminder not clinically relevant, through an option selected in a dialog box.

Results regarding the effectiveness of CRs in practice are mixed.8,9,10,11,12 In a review of controlled clinical trials published between 1974 and 1998, 43 of 65 studies found that the intervention improved physician performance.13 In the Veterans Administration, 275 resident physicians at 12 VA medical centers were randomly assigned to a reminder or a control group; the impact of CRs on practice was significant but declined over 17 months, even though the reminders remained active.14 The reasons for this variation in effectiveness remain unclear, but findings from an exploratory focus group suggest that there may be "human factors" barriers to use, particularly related to efficiency, usefulness, information content, user interface, and workflow.15

We asked: What are the human factors barriers to the effective use of ten HIV clinical reminders? This report describes barriers identified through direct ethnographic observation16 and semistructured interviews at eight infectious disease outpatient clinics (two pilot and six study sites). These barriers might generalize to other clinical reminders and have implications for the design and use of computerized clinical reminders.

Methods

Clinical reminders

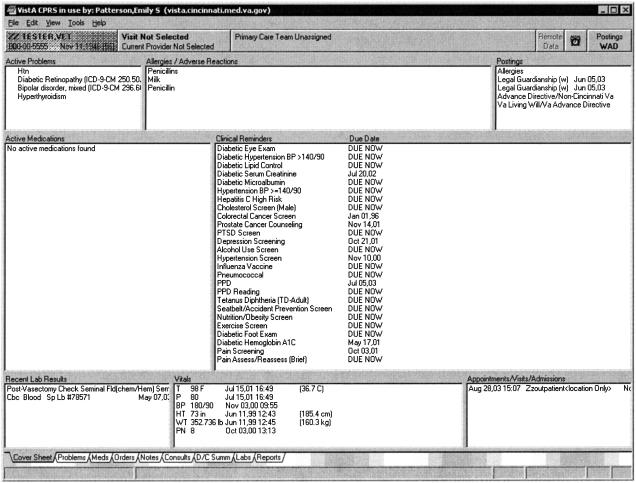

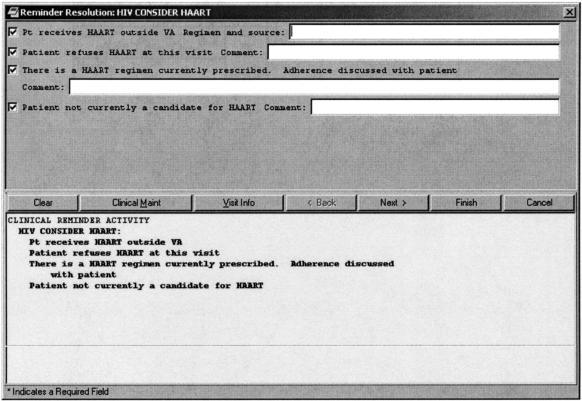

Clinical reminders that are "due" for a patient, based on the available data, appear on the primary "coversheet" screen of the CPRS software (Figure 1). This screen presents allergies, medications, laboratory results, vital signs, appointments/admissions, crisis notes, adverse reactions, warnings, and clinical reminders in a condensed single-window view. Clicking the alarm clock icon in the upper right-hand corner of the coversheet opens further information about the clinical reminders, including potentially applicable reminders that are not currently due. "Due" CRs are rendered "satisfied" through actions observable to the computer system (e.g., ordering a laboratory test), in which case documentation is a by-product of following the reminder advice. When a reminder is judged not to be clinically relevant, the provider can remove it through the following sequence: click the "notes" tab at the bottom of the coversheet; start a new note, which includes the standard "sign in" process; open the "reminders" drawer, which separates reminders into due, applicable, and other categories; and click the individual reminder. A dialog box, such as the one shown in Figure 2 for one of the HIV clinical reminders in this study, can then be used to document why the reminder does not apply. Once this dialog box is completed, the reminder's status changes so that it is no longer "due." How long the reminder remains satisfied before it again becomes "due" depends on which option is selected.

Figure 1.

CPRS coversheet in which “due” clinical reminders are displayed for a fictional patient.

Figure 2.

Dialog box used to document inapplicability of reminder to consider HAART.
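The due/satisfied behavior described above can be summarized as a small state model. The Python sketch below is illustrative only: the class and method names are hypothetical, and the dialog option names and suppression periods in `DIALOG_OPTION_DAYS` are invented placeholders, since the actual CPRS options and their durations are not specified here.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from enum import Enum
from typing import Optional


class Status(Enum):
    DUE = "due"
    SATISFIED = "satisfied"


# HYPOTHETICAL dialog options and suppression periods (in days). The real
# CPRS options and how long each one satisfies a reminder are not given here.
DIALOG_OPTION_DAYS = {
    "treated_elsewhere": 365,
    "unable_to_tolerate": 180,
    "patient_refuses": 90,
}


@dataclass
class Reminder:
    name: str
    satisfied_until: Optional[date] = None  # None means never satisfied

    def status(self, today: date) -> Status:
        # "Due" unless an action or a dialog entry satisfies it through today.
        if self.satisfied_until is not None and today <= self.satisfied_until:
            return Status.SATISFIED
        return Status.DUE

    def record_observable_action(self, today: date, valid_days: int) -> None:
        # E.g., ordering a lab test: documentation is a by-product of acting.
        self.satisfied_until = today + timedelta(days=valid_days)

    def document_not_applicable(self, today: date, option: str) -> None:
        # The selected dialog option determines when the reminder is due again.
        self.satisfied_until = today + timedelta(days=DIALOG_OPTION_DAYS[option])


# Usage: a provider documents that the patient refuses HAART.
haart = Reminder("Consider HAART")
visit = date(2002, 1, 15)
haart.document_not_applicable(visit, "patient_refuses")
print(haart.status(visit + timedelta(days=30)))  # Status.SATISFIED
print(haart.status(visit + timedelta(days=91)))  # Status.DUE
```

Keeping a single `satisfied_until` date makes the key property explicit: whichever path satisfies the reminder, it silently returns to "due" once that date passes.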

Ten guideline-driven reminders were developed as part of a larger project to improve quality of HIV care. Each one uses electronic record (VistA) data to identify patients potentially receiving suboptimal care, such as single-drug antiretroviral therapy or no prescription for antiretroviral drugs despite immune deterioration and high viral load. The HIV clinical reminders are intended to reduce the likelihood that physicians will forget to screen a patient for related diseases (5 of 10 reminders), miss that patient data have crossed a threshold for an intervention (3 of 10), or forget to perform a periodic action in a treatment plan (2 of 10). Specifically, the HIV CRs (Table 1) remind providers to:

Screen for related diseases (hepatitis A, B, C, toxoplasmosis, syphilis);

Initiate medication regimens when immune function (CD4+ count) falls below recommended thresholds (Highly Active Anti-Retroviral Therapy [HAART], Pneumocystis carinii pneumonia [PCP] prophylaxis, Mycobacterium avium complex [MAC] prophylaxis); and

Monitor immune function, viral load, and lipids at recommended intervals.

Table 1.

Guidelines and Implementation for HIV CRs

| Guideline | Clinical Reminder Implementation |
|---|---|
| Every HIV patient should have a hepatitis A titer at least once | Due once if HIV. Satisfied by disease classification code, lab report, or entry of patient refusal. |
| Every HIV patient should have a hepatitis B titer at least once | Due once if HIV. Satisfied by disease classification code, lab report, or entry of patient refusal. |
| Every HIV patient should have a hepatitis C titer at least once | Due once if HIV. Satisfied by disease classification code, lab report, or entry of patient refusal. |
| Every HIV patient should have a toxoplasma titer at least once | Due once if HIV. Satisfied by lab report, or entry of acute illness, or patient refusal. |
| Every HIV patient should have a VDRL at least once | Due once if HIV. Satisfied by lab report or entry of patient refusal. |
| Patients with CD4 t-cell count < 350 or VL > 30,000 should be recommended for HAART | Due for patient with last CD4 < 350 or last VL > 30,000 who is not on HAART medications. Satisfied by active HAART medications or entry that patient is treated elsewhere, unable to tolerate, or refuses. |
| Patients with CD4 < 200 should be on PCP prophylaxis | Due for patient with CD4 < 200 or CD4% < 15 within the previous six months who is not on PCP prophylaxis medications. Satisfied by active PCP prophylaxis medications or entry that patient is treated elsewhere, unable to tolerate, or refuses. |
| Patients with last CD4 < 51 should be on MAC prophylaxis | Due if HIV and last CD4 < 51. Satisfied by active MAC prophylaxis medications or entry that patient is treated elsewhere, unable to tolerate, or refuses. |
| Patients on therapy need CD4 t-cell count and viral load monitored every three months; others, every six months | Due every three months if on therapy. Due every six months if not on therapy. Satisfied by the CD4 and VL lab values in the last three to six months, or entry of acute illness, outside results, or patient refusal. |
| Every patient should have a lipid panel done once and patients on protease inhibitors should have a lipid panel every 18 months | Due once if HIV and every 18 months if HIV positive, no lipid panel lab value, and life expectancy > 6 months. Satisfied by lipid panel lab value. |
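The trigger conditions in Table 1 can be read as simple predicates over a patient's recent data. The Python sketch below encodes the HAART, PCP prophylaxis, MAC prophylaxis, and CD4/viral load monitoring rules as stated in the table. It is a simplification under stated assumptions: the `PatientSnapshot` fields are hypothetical stand-ins for the VistA data, "on therapy" is assumed to mean on HAART, and the provider-entered resolutions (treated elsewhere, unable to tolerate, refuses) are not modeled.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PatientSnapshot:
    """HYPOTHETICAL simplified view of the record data a reminder reads."""
    last_cd4: Optional[int] = None  # most recent CD4 count
    last_vl: Optional[int] = None   # most recent viral load
    cd4_counts_6mo: List[int] = field(default_factory=list)  # previous six months
    cd4_pcts_6mo: List[float] = field(default_factory=list)  # previous six months
    on_haart: bool = False
    on_pcp_prophylaxis: bool = False
    on_mac_prophylaxis: bool = False
    months_since_cd4_vl: Optional[int] = None  # None: never measured


def haart_due(p: PatientSnapshot) -> bool:
    # Last CD4 < 350 or last VL > 30,000, and not on HAART medications.
    low_cd4 = p.last_cd4 is not None and p.last_cd4 < 350
    high_vl = p.last_vl is not None and p.last_vl > 30_000
    return (low_cd4 or high_vl) and not p.on_haart


def pcp_due(p: PatientSnapshot) -> bool:
    # CD4 < 200 OR CD4% < 15 within the previous six months,
    # and not on PCP prophylaxis medications.
    low = any(c < 200 for c in p.cd4_counts_6mo) or any(
        pct < 15 for pct in p.cd4_pcts_6mo
    )
    return low and not p.on_pcp_prophylaxis


def mac_due(p: PatientSnapshot) -> bool:
    # Last CD4 < 51; active MAC prophylaxis satisfies the reminder.
    return p.last_cd4 is not None and p.last_cd4 < 51 and not p.on_mac_prophylaxis


def monitoring_due(p: PatientSnapshot) -> bool:
    # CD4 and viral load every 3 months on therapy (assumed here: on HAART),
    # otherwise every 6 months.
    interval = 3 if p.on_haart else 6
    return p.months_since_cd4_vl is None or p.months_since_cd4_vl >= interval


# Borderline case: count above 200 but percentage below 15.
borderline = PatientSnapshot(last_cd4=210, cd4_counts_6mo=[210], cd4_pcts_6mo=[14.0])
print(pcp_due(borderline))  # True
```

Because the PCP rule combines the count and the percentage with OR, a CD4 count of 210 with a CD4 percentage of 14 makes the reminder due; this is the kind of borderline case that providers judged inapplicable (Table 4).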

Setting

We observed primary care providers for all outpatient clinic hours for one day each at two pilot and six study sites after the clinical reminders were implemented, from October 2001 to October 2002. The two pilot sites were selected based on ease of access, and the six study sites were selected based on IRB approval and participation in a larger, randomized 16-site study.17 This larger study was designed to evaluate the independent effects of these computerized clinical reminders and of a group-based quality improvement intervention based on the Institute for Healthcare Improvement (IHI) breakthrough series model.18 Five of the eight observed sites (two pilot sites and three other sites) implemented the clinical reminders as the sole intervention and were instructed not to modify the reminders locally during the study period. The other three observed sites received the quality improvement intervention in addition to implementing the clinical reminders. This intervention included training on the ten HIV clinical reminders, three face-to-face learning sessions, and weekly interactive conference calls on quality improvement techniques. All clinics were dedicated to infectious disease (ID) patients, and all care providers had been using CPRS, the software on which the clinical reminders display, for more than a year, but the clinics varied in size and work organization. Table 2 shows the number of HIV physicians, including attending, fellow, and resident physicians, and the approximate number of HIV patients at each site. No intern physicians provided care at any of the sites. Table 2 also shows the number and roles of the personnel who were interviewed and observed at each site.

Table 2.

Site and Participant Data

| Site | No. HIV Attending Physicians | No. HIV Patients | Interviewed Personnel | Observed Personnel | No. Observed Patients |
|---|---|---|---|---|---|
| 1 (pilot) | 1 | 150 | 2 attendings, 1 fellow | 2 attendings, 2 fellows, 3 residents, 1 medical student | 5 |
| 2 (pilot) | 16 | 500 | 1 attending, 2 physician's assistants, 1 research nurse, 1 pharmacist, 1 nurse, 1 clerk | 2 attendings, 1 fellow, 1 resident | 2 |
| 3 | 3 | 300 | 2 attendings, 2 nurses, 1 pharmacist, 1 social worker, 1 clerk | 2 attendings | 5 |
| 4 | 4 | 500 | 2 attendings, 3 case managers, 1 pharmacist, 1 nurse, 1 dietitian, 1 clerk | 1 fellow, 3 case managers, 1 dietitian | 4 |
| 5 | 11 | 650 | 2 attendings, 2 fellows, 1 case manager, 1 immunology coordinator, 1 pharmacist, 1 nurse | 1 attending, 2 fellows, 1 case manager | 6 (and walkthroughs of 8 without patient present) |
| 6 | 7 | 450 | 1 attending, 1 fellow, 2 residents, 1 psychologist, 2 psych interns, 1 case manager, 1 pharmacist, 1 nurse, 1 clerk | 1 fellow, 1 resident | 2 |
| 7 | 2 | 200 | 2 attendings, 2 nurse practitioners, 1 case manager, 1 clinic coordinator, 1 HIV coordinator, 1 pharmacist, 1 dietitian | 2 attendings, 1 nurse practitioner | 5 |
| 8 | 2 | 250 | 2 attendings, 1 physician's assistant, 1 pharmacist, 1 nurse | 1 attending | 3 |

Conceptual Framework

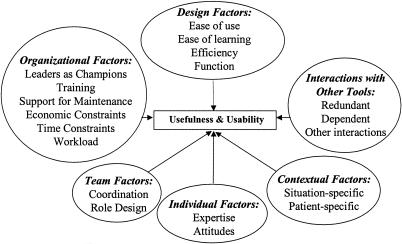

The conceptual framework that guided the data collection and analysis is summarized in Figure 3. Potential barriers to the use of clinical reminders were identified from prior research on barriers to use of Bar Code Medication Administration (BCMA)19 and of computerized decision support systems in aviation,20 an emergency call center,21 and space shuttle mission control.22 The potential barriers include design, organizational, team, individual, contextual, and tool interaction factors. Drawing on this conceptual framework, 19 barriers to the effective use of clinical reminders were hypothesized and explicitly investigated during this study (Table 3).

Figure 3.

Conceptual framework guiding data collection and analysis.

Table 3.

Hypothesized Barriers to Use of HIV Clinical Reminders

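| Hypothesized Barrier |
|---|
| Poor support for reminder use from leadership: no champion |
| Poor support for reminder use from leadership: no monitoring of use |
| Poor support for reminder use from leadership: no reinforcement as a priority |
| Poor support for reminder use from leadership: no maintenance of software |
| Inadequate training for permanent clinic staff |
| Inadequate training for rotating clinic staff |
| Goal conflicts with reminder use: workload |
| Goal conflicts with reminder use: economic |
| Goal conflicts with reminder use: performance (efficacy of care) |
| Goal conflicts with reminder use: quality of patient–provider interaction |
| Goal conflicts with reminder use: timeliness |
| Goal conflicts with reminder use: training |
| Goal conflicts with reminder use: keeping current with best practices |
| Goal conflicts with reminder use: documentation |
| Goal conflicts with reminder use: HIV expertise/experience |
| Poor coordination with other practitioners |
| Interactions with paper-based systems |
| Between-clinic interactions |
| Context-specific barriers |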

Data Collection

Two observers, trained in ethnographic field observation in complex settings,23 conducted all observations, spending one day at each of the two pilot and six study sites. Each observation period covered the entire clinic session, which ranged from 3 to 5 hours. To minimize the effect of observation on behavior, we informed the participants that no one, including their supervisors, would be given information that could trigger a punitive response, because data were deidentified for site, person, place, and time, and quality of care was not measured. We scheduled the observations to sample times from two weeks to one year after batch implementation of the clinical reminders.

Verbal response data from the semistructured interviews,24 conducted opportunistically throughout the day with all accessible clinic personnel, were collected as handwritten, deidentified notes. The first question asked of the host physician was: "How would you characterize your clinic?" When the host physician asked for clarification, examples were given, such as "Is this a large clinic? Do you only see patients with infectious diseases?" In addition, the host physician was asked to describe how a typical patient is processed on a typical day to elicit the standard workflow. All interviewees were asked to describe their role in providing patient care, what they perceived to be barriers to the use of clinical reminders in general and of the specific clinical reminders being evaluated, and what training they had received on the use of clinical reminders in general and the HIV clinical reminders in particular. As interviewees raised issues, the interviewer asked questions to clarify trade-offs and assess the tractability of the barriers.

Notes from both the observations and the interviews were transcribed into electronic format by the investigator within two days of data collection, to minimize loss of data from memory and the introduction of inaccurate information during transcription.

Analysis

The observational data were transformed into a standard activity protocol format that records observed verbalizations and behavior separately from less reliable inferences about internal cognitive processing.25 The protocols were analyzed for usefulness and usability problems, which were typically indicated by a user's surprise at how the clinical reminders responded to an action.

The verbal response data from the semistructured interviews were organized for each site into a template that was designed to target specific questions as well as support the discovery of unanticipated insights. Specifically, the template had the following section headings:

summary of main findings for the site,

demographic information about the clinic and providers,

normal workflow (the expected sequence of activities for a patient on a typical clinical day),

training,

self-reported barriers for each individual reminder,

barriers to HIV reminders and clinical reminders in general,

other data, and

reactions to the debriefing interview with the host physician where highlights of the preliminary findings were presented.

Finally, data from the observations and interviews at all the sites were collectively analyzed. The specific analytic technique used was process tracing analysis,26 which iteratively uses a conceptual framework to generate top-down questions as well as abstracts data bottom-up into emerging themes. Barriers were classified as “present” if they were observed or self-reported during the interviews and “unable to tell if present” if they were not. For example, at the first site, the lack of a clinical champion was classified as a barrier because neither of the two attending physicians promoted the use of the CRs. The first attending physician stated that he did not “buy into” the clinical reminders because they did not provide laboratory data, they missed subtleties, they did not fit the practice of preparing on Friday for a Monday clinic or of outside-clinic phone interactions with patients, they did not work with their paper charts, there were no computers in patient rooms, and the clinic nurse did not use the CPRS software upon which the reminders ran. The second attending physician stated that he thought that clinical reminders were “worse than nothing if they only work intermittently.” Finally, a fellow physician stated that he thought reminders were very important and always looked at them, but without the support of leadership, he felt he could not encourage the residents or medical students to do so.

Results

Six of the 19 hypothesized barriers were judged to reduce the effectiveness of the HIV clinical reminders at more than one site.

Barrier 1: Workload

The primary barrier to the use of the HIV clinical reminders was the additional workload, judged by how often it was self-reported during the interviews and offered during the observations as an explanation for not using the clinical reminders. At all sites, at least one provider reported not using the clinical reminders when busy. At some sites, physicians reported using the clinical reminders only when they had additional time during the clinic day.

Barrier 2: Time to Document Why CR Did Not Apply

All sites reported that a significant barrier was the lack of time to follow the documentation procedures within the CR for noting why the reminder's advice was not followed. Although the clinical reminders were removed automatically if the recommended actions were taken, in many observed situations providers judged a reminder not to be clinically relevant, in which case they had to document an explanation to remove it, usually by selecting a radio button in a dialog box. At all sites, at least one provider never satisfied reminders that were not clinically relevant and therefore required data entry, such as when a patient had received a vaccine at another hospital. At all eight sites, at least one provider conducted documentation activities, including satisfying clinical reminders that were not clinically relevant (when this was done), after the patient had left the room. At one of the two pilot sites and three of the six study sites, at least one provider conducted documentation activities after the clinic closed, usually within 36 hours. At two of the study sites, case managers documented clinical reminders that were not clinically relevant when the physicians had not done so.

Barrier 3: Inapplicability to the Situation

The third barrier to following the advice of a clinical reminder was inapplicability to the specific situational context. For example, a recommendation to begin Highly Active Anti-Retroviral Therapy (HAART) was not followed because the patient had experienced multiple intolerances to the medication in the past. Excluding observations at sites where clinical reminders were not generally used, nine of 26 patients had HIV clinical reminders that the observed providers believed were inappropriate for the situation. The reasons for inapplicability are shown in Table 4.

Table 4.

Inapplicable Reminders Due to Situation-specific Factors

| Site | Extent of General CR Use | Proportion of Cases in Which CRs Were Not Relevant | Explanation for Why HIV CR Was Considered Inappropriate |
|---|---|---|---|
| 1 (pilot) | Never used | N/A | N/A |
| 2 (pilot) | Viewed by all but not removed when inappropriate | 1/2 | Did not want to start patient on HAART because of multiple past intolerances to medication. |
| 3 | Used by some | 2/5 | Patient 1: CD4 t-cell count of 210 and 14%. Although the CD4 percentage was below 15%, the count was above the borderline of 200, which was the physician's preferred indicator. In addition, there was no downward trend in the patient's t-cell count, and the physician wanted to minimize the patient's medications. Patient 2: Hepatitis (Hep) A had already been ordered. Hep C had always been positive, so there was no need to test. |
| 4 | Used by some | 1/4 | PCP prophylaxis reminder present even though CD4 t-cell count of 350 was above the 200 borderline (although it might have been below 200 in the last six months). |
| 5 | Used by some | 3/9 | Patient 1: Previously tested positive for Hep C; although CD4 t-cell count was 309 and 18%, did not want to begin HAART because patient was not compliant with medication regimens. Patient 2: Tested for Hep C in 1999; the result was negative, and there were no ongoing risk factors. Patient 3: PCP prophylaxis reminder triggered because CD4 was 9% even though the CD4 t-cell count was above the borderline (320). In addition, Bactrim had already been ordered (although it is likely that the patient did not fill the prescription). |
| 6 | Never used | N/A | N/A |
| 7 | Never used | N/A | N/A |
| 8 | Used by some | 0/2 | N/A |

Barrier 4: Training

At five of the eight sites, limited knowledge of how to use the clinical reminder software in general was a barrier (Table 5). The levels in Table 5 represent a synthesized aggregate judgment, based on the interview and observational data, of site providers' knowledge of how to use the clinical reminder software. Table 5 also describes the typical use of CPRS and paper-based systems at each site, as this might influence knowledge of how to use the clinical reminder software. At only two sites were resident physicians observed to know how to remove clinical reminders judged not to be clinically relevant or to view details about a particular clinical reminder on demand. At every site, permanent clinic staff members were judged to have a better understanding of how to use computerized clinical reminders than rotating staff members, specifically the resident and fellow physicians. Nevertheless, there are opportunities to improve training on clinical reminder use even for permanent staff, as permanent staff at three of the eight sites knew only how to view active clinical reminders on the primary CPRS display.

Table 5.

Knowledge of HIV Clinical Reminder Use

| Site | CPRS and Paper-based Systems Use | Knowledge of Permanent Staff | Knowledge of Rotating Staff |
|---|---|---|---|
| 1 (pilot) | CPRS: notes, orders, labs (physicians fill out paper forms, case manager reviews, clerk enters in CPRS). Paper: orders, labs, overview, lab results, shadow charts | 2 | 1 |
| 2 (pilot) | CPRS: notes, orders, labs. Paper: lab results | 4 | 2 |
| 3 | CPRS: notes, orders, labs. Paper: overview | 4 | N/A |
| 4 | CPRS: notes, orders, labs. Paper: overview | 5 | 1 |
| 5 | CPRS: notes, orders, labs. Paper: vital signs, reminders | 5 | 3 |
| 6 | CPRS: notes, orders, labs (physicians fill out paper forms, case manager and pharmacist review, clerk enters in CPRS). Paper: orders, labs, reminders, shadow charts | 2 | 1 |
| 7 | CPRS: notes, orders, labs. Paper: vital signs, shadow charts | 2 | N/A |
| 8 | CPRS: notes, orders, labs. Paper: none | 4 | 3 |

Knowledge levels: 1 = not aware that CRs exist; 2 = can only view active CRs on primary CPRS display; 3 = knows how to document that a CR is not relevant and view details about CRs; 4 = competent CR use, including knowledge of what triggers individual CRs to become active; 5 = super-user knowledge, including how long a CR will be removed based on the selected dialog box option, how to tailor CRs for the site, and how to troubleshoot.

This barrier is likely to generalize to the use of all clinical reminders, because the gaps in knowledge concerned the clinical reminder software in general rather than the HIV reminders in particular. During our interviews, several physicians told us that their facility's basic formal training class on CPRS did not include training on computerized clinical reminders.

Barrier 5: Quality of Provider–Patient Interaction

The fifth barrier to use was a perceived reduction of quality of provider–patient interaction when interacting with the computerized clinical reminders. This barrier was reported by three of the 23 permanent staff (14 attending physicians, four case managers, three physician's assistants, and two nurse practitioners) who were interviewed or observed at the pilot and study sites. The specific interview data regarding this barrier included:

Physician's assistant: “I do all of my computer work after the patient leaves because I don't want to interact more with the computer than the patient.”

Attending physician: “The main reason why I do not use the clinical reminders is that while I am with the patient, I will cut and paste to start a new progress note. I will do orders while the patient is there—meds, labs. I don't like to do reminders while with the patient because I feel that it takes you away from the patient. I know it doesn't take long, but I feel like, as it is, I spend more time looking at the computer than in the patient's eyes.”

Attending physician: “Using the clinical reminders takes too much time away from my time with my patients.”

Although one attending physician was not categorized as having this barrier because he normally used clinical reminders, he reported that he did not use them during a patient's first visit. He explained that he wanted the first visit to focus exclusively on discussions about the disease and how to take the medications, and he felt that the clinical reminders might interfere with those discussions. Rotating physicians generally had little experience with computerized clinical reminders and often were not the primary decision makers regarding whether to use clinical reminders at the site, so their data were not included in the analysis of this barrier.

Barrier 6: Use of Paper Forms

Likely because of the specialized expertise needed to treat infectious diseases, only three of the eight sites used resident physicians to provide care. At two of these sites, the clinical reminders were not part of the standard workflow. Instead of entering orders directly into CPRS, the physicians filled out paper forms, which were reviewed by a case manager (and, at one site, also by a pharmacist) before a clerk entered them. At both sites, the review was conducted on a paper-based system that did not incorporate the clinical reminders, and the clerk did not review clinical reminders when entering orders.

Discussion

Clinical reminders (CRs) have the potential to improve clinical care, but have had mixed effectiveness in practice. We identified the following barriers to the effective use of ten HIV CRs: (1) workload (8 of 8 sites), (2) lack of time to follow documentation procedures within the CR noting why the reminder was not followed (8 of 8 sites), (3) inapplicability of the CR for context-specific reasons (9 of 26 patients), (4) limited training on how to use the CR software for rotating (5 of 8 sites) and permanent staff (3 of 8 sites), (5) perceived reduction of quality of provider–patient interaction (3 of 23 permanent staff), and (6) the use of paper forms to write resident physician orders (2 of 8 sites).

Few of the identified barriers are inherent to computerized clinical reminders. It is likely that design revisions, modified organizational policies, and "best practices" training could reduce many of them. Although generating recommendations to address these barriers is a challenging and creative task, we developed a preliminary list to illustrate the kinds of approaches that might be taken (Table 6). For example, users who did not feel that they had enough time to use the existing dialog box to document why the advice of a clinical reminder was not followed could be provided with a "disable" feature. With such a feature, a physician could indicate with a single right-click during a patient session that a reminder is inappropriate, and then document later why it does not apply. Even if the documentation is not completed before the next patient encounter, the likelihood that the next provider will erroneously follow the reminder's advice is reduced. As another example, two sites used paper-based order forms so that attending physicians or case managers could check orders before a clerk entered them. With the current software design, the same check could be performed within the provider order entry software by having residents enter orders that are then signed by an attending physician or case manager. None of these recommendations has been evaluated for whether it actually reduces the identified barriers, and making the proposed changes might introduce new, unexpected problems. Several of these recommendations have been implemented in the current version of the HIV clinical reminders, which has been installed voluntarily at approximately 30 VA medical centers not included in this study.

Table 6. Recommendations to Reduce Barriers

| Category | Barrier | Recommendation |
|---|---|---|
| Design: efficiency | Inefficient interaction when a CR does not apply. | Add a single button-click “disable” feature that allows documentation of why a CR does not apply at a later time. |
| Design: usability | Ordering new medications satisfied the intent of the reminders but did not resolve them, because those medications were not yet included in the reminder logic. | Add an “Appropriate medication prescribed” option to resolve the reminder. |
| Design: usability | Reminders confusingly remained displayed as DUE even after labs were ordered. | Distinguish among reminders that have not been acted upon, ordered labs, drawn labs, and received results; have different reminders employ consistent rules. |
| Design: usability | Reminders did not always apply given the context of a particular patient. | Add a “Not indicated in provider's judgment” option to resolve the reminder. |
| Design: usability | In borderline cases, providers did not want to order medications yet but wanted to reconsider the action at the next visit. | Create a “snooze” feature that dismisses reminders until a specific time in the future. |
| Design: usability | Users were uncertain how long the reminders would be turned off for each dialog option. | Display how long each option will satisfy the reminder. |
| Design: usability | There is no way to “undo” an action. | Add an “undo” function. |
| Design: usefulness | Reminders did not always match local practice. | Support sites in turning off and tailoring individual reminders. |
| Design: usefulness | One site wanted to add a reminder because yearly TB screens for HIV patients are sometimes forgotten. | Support the local design of reminders consistent with standard clinical reminder design guidelines. |
| Organizational: time constraints | CRs are not used when staff are busy. | Devise systems for preclinic preparation to make visits more efficient; lengthen the time allocated for patient visits. |
| Organizational: workflow | CRs are used less when physicians are experiencing workflow bottlenecks. | Redesign workflow to reduce bottlenecks, for example by delegating time-consuming tasks to others or by reducing the time residents wait to speak to attending physicians. |
| Organizational: workload | Workload cited as the primary barrier. | Reduce workload for end users; demonstrate how clinical reminders can make some processes, such as documentation, more efficient. |
| Organizational: training | Permanent staff were better trained on CRs than rotating staff (residents, fellows). | Improve CR training for rotating staff; provide contact information for computer support personnel. |
| Team: role design | Documentation of nonapplicable CRs delegated to nonprimary care physicians. | Institute a systematic process for communicating to delegates the rationale for not following recommended actions. |
| Individual: attitudes | Dissatisfied with the quality of patient–provider interaction. | Reconfigure the physical workspace so that patients can see computer screens and/or the physician can maintain eye contact with the patient while using the software. |
| Contextual: patient- and situation-specific | CRs did not apply. | “Sharpen” the automated logic that triggers the reminder to reduce false alarms, for example by improving electronic access to laboratory results from other hospitals. |
| Interactions with other systems: redundant | Paper-based systems used to detect erroneous decisions by residents. | Have resident physicians enter orders that are then signed by senior personnel; add new features. |

This study has several limitations. The data were collected from a small proportion of VA facilities (8 of 163 medical centers) and an even smaller proportion of possible providers and patients.

Data on the specific versions of CPRS and VistA in use at the time of the observations were not collected. Differences between implementations may nevertheless have affected how the reminders were implemented and experienced by the study clinicians.

The study also did not include an objective, independent evaluation of the usability of the HIV reminders, such as a provider survey. This omission reflected the desire to minimize burden on the observed providers, the small number of observed providers, the inability to randomly sample providers because of access limitations, and the lack of a comparison group in the study design. A national provider survey is planned for future research to identify barriers, including usability problems, to the use of all clinical reminders in the VA.

No objective workload data were collected; such data would have helped validate the finding that workload is a barrier to the effective use of the clinical reminders. Future research is planned to investigate the relationship between objective and self-reported workload data.

Some of the identified barriers, such as limited training on the provider order entry system, might generalize to other clinical reminders. Others, such as the use of paper-based forms to review the work of resident physicians, might be unique to HIV patient care. The findings might also not generalize to non-VA clinics, or possibly to other VA clinics, because of differences in the informatics infrastructure in which the clinical reminders are embedded.

Finally, our redesign recommendations have not been evaluated for how well they reduce barriers to use, and they might have new, unintended consequences for how the clinical reminders are used.

Conclusion

Six human factors barriers to the use of ten HIV CRs were identified at multiple infectious disease clinics. Some or all of these barriers might help explain the mixed results regarding the effectiveness of computerized clinical reminders in prior studies. Additional research is needed to determine whether these barriers generalize to other CRs. This study shows how human factors knowledge and methods can generate new insights into the use of computerized tools in patient care. These insights could make computerized clinical reminders more effective at improving patient care and could increase their adoption by primary care providers.

This research was supported by the Department of Veterans Affairs, Veterans Health Administration, Health Services Research and Development Service (HIS 99-042, HIT-01-090). The views expressed in this article are those of the authors and do not necessarily represent the views of the Department of Veterans Affairs. A VA HSR&D Advanced Career Development Award supported Dr. Asch, and a VA HSR&D Merit Review Entry Program Award supported Dr. Patterson. The authors thank Sam Bozzette, Candice Bowman, Debbie Fetters, and Henry Anaya for sharing their findings on the effects of clinical reminder use and of a quality improvement intervention on clinical outcomes. The authors thank Sophia Chang and Vandana Sundaram from the Center for Quality Management of the VA Public Health Strategic Health Care Group for their collaboration on the technical development, implementation, and field training of the HIV clinical reminders. The authors thank Peter Glassman for reviewing this report and providing helpful suggestions.

References

1. Bozzette SA, Berry SH, Duan N, et al. The care of HIV-infected adults in the United States. HIV Cost and Services Utilization Study Consortium. N Engl J Med. 1998;339(26):1897–904.
2. Asch S, McCutchan A, Gifford A, et al. Underuse of primary prophylaxis for mycobacterium avium complex (MAC) in a representative sample of HIV-infected patients in care in the U.S.A.: who is missing out? Presented at the World AIDS Conference, June 1998.
3. Shapiro MF, Morton SC, McCaffrey DF, et al. Variations in the care of HIV-infected adults in the United States: results from the HIV Cost and Services Utilization Study. JAMA. 1999;281(24):2305–15.
4. Bates DW, Cohen M, Leape LL, Overhage M, Shabot MM, Sheridan T. Reducing the frequency of errors in medicine using information technology. J Am Med Inform Assoc. 2001;8(4):299–308.
5. Kitahata MM, Dillingham PW, Chaiyakunapruk N, et al. Electronic human immunodeficiency virus (HIV) clinical reminder system improves adherence to practice guidelines among the University of Washington HIV study cohort. Clin Infect Dis. 2003;36(6):803–11.
6. Safran C, Rind DM, Davis RB, et al. Guidelines for the management of HIV infection in a computer-based medical record. Lancet. 1995;346:341–6.
7. Brown SH, Lincoln MJ, Groen P, Kolodner RM. VistA: The U.S. Department of Veterans Affairs National Scale Hospital Information System. Int J Med Inform. 2003;69:135–56.
8. Reisman Y. Computer-based clinical decision aids. A review of methods and assessment of systems. Medical Informatics. 1996;21(3):179–97.
9. Davis DA, Thomson MA, Oxman AD, Haynes RB. Changing physician performance. A systematic review of the effect of continuing medical education strategies. JAMA. 1995;274(9):700–5.
10. Shiffman RN, Liaw Y, Brandt CA, Corb GJ. Computer-based guideline implementation systems: a systematic review of functionality and effectiveness. J Am Med Inform Assoc. 1999;6(2):104–14.
11. Harpole LH, Khorasani R, Fiskio J, Kuperman GJ, Bates DW. Automated evidence-based critiquing of orders for abdominal radiographs: impact on utilization and appropriateness. J Am Med Inform Assoc. 1997;4(6):511–21.
12. Rocha BH, Christenson JC, Evans RS, Gardner RM. Clinicians' response to computerized detection of infections. J Am Med Inform Assoc. 2001;8(2):117–25.
13. Hunt DL, Haynes RB, Hanna SE, Smith K. Effects of computer-based clinical decision support systems on physician performance and patient outcomes: a systematic review. JAMA. 1998;280(15):1339–46.
14. Demakis JG, Beauchamp C, Cull WL, et al. Improving residents' compliance with standards of ambulatory care: results from the VA cooperative study on computerized reminders. JAMA. 2000;284(11):1411–6.
15. Krall MA, Sittig DF. Clinician's assessments of outpatient electronic medical record alert and reminder usability and usefulness requirements. Proc AMIA Symp. 2002:400–4.
16. Hutchins E. Cognition in the Wild. Cambridge, MA: MIT Press; 1995.
17. Bozzette SA, Phillips B, Asch S, et al. Quality enhancement research initiative for HIV/AIDS: framework and plan. Medical Care. 2000;38(6 Suppl 1):I60–9.
18. Kilo CM, Kabcenell A, Berwick DM. Beyond survival: toward continuous improvement in medical care. New Horizons. 1998;6(1):3–11.
19. Patterson ES, Cook RI, Render ML. Improving patient safety by identifying side effects from introducing bar coding in medication administration. J Am Med Inform Assoc. 2002;9(5):540–53.
20. Sarter N, Woods DD, Billings CE. Automation surprises. In: Salvendy G, ed. Human Factors/Ergonomics. 2nd ed. New York: Wiley; 1997.
21. Salembier P, Pavard B, Benchekroun H. Design of cooperative systems in complex dynamic environments. In: Cacciabue C, Hoc JM, Hollnagel E, eds. Expertise and Technology: Cognition & Human-Computer Cooperation. New York: Lawrence Erlbaum Associates; 1995.
22. Malin J, Schreckenghost D, Woods D, et al. Making Intelligent Systems Team Players: Case Studies and Design Issues. NASA Technical Memo 104738; 1991.
23. Hutchins E. Cognition in the Wild. Cambridge, MA: MIT Press; 1995.
24. Cooke NJ. Varieties of knowledge elicitation techniques. International Journal of Human-Computer Studies. 1994;41:801–49.
25. Jordan B, Henderson A. Interaction analysis: foundations and practice. J Learning Sciences. 1995;4(1):39–103.
26. Woods DD. Process tracing methods for the study of cognition outside of the experimental psychology laboratory. In: Klein G, Orasanu J, Calderwood R, eds. Decision Making in Action: Models and Methods. Norwood, NJ: Ablex Publishing Corporation; 1993:228–51.