Abstract

Objective: The aim of this study was to investigate the impact of a program of repeated assessments, feedback, and training on the quality of coded clinical data in general practice.

Design: A prospective uncontrolled intervention study was conducted in a general practice research network.

Measurements: Percentage of recorded consultations with a coded problem title and percentage of patients receiving a specific drug (e.g., tamoxifen) who had the relevant morbidity code (e.g., breast cancer) were calculated. Annual period prevalence of 12 selected morbidities was compared with parallel data derived from the fourth National Study of Morbidity Statistics from General Practice (MSGP4).

Results: The first two measures showed variation between practices at baseline, but on repeat assessments all practices improved or maintained their levels of coding. The period prevalence figures also were variable, but over time rates increased to levels comparable with, or above, MSGP4 rates. Practices were able to provide time and resources for feedback and training sessions.

Conclusion: A program of repeated assessments, feedback, and training appears to improve data quality in a range of practices. The program is likely to be generalizable to other practices but needs a trained support team to implement it, which has implications for cost and resources.

As part of establishing a primary care research network in North Staffordshire, we developed and introduced to a group of general practices a simple program of assessment, feedback, and training with respect to the primary care electronic patient record (EPR). We initiated a descriptive study to investigate the impact of the assessment, feedback, and training on data quality, which we report here.

Background

Information for Health,1 the UK Government's information technology strategy for the National Health Service, has a target that by 2005 there will be full implementation of the person-based electronic health record (EHR). It will be a lifelong record of a patient's health and health care; provide the basis for patient-held records and a summary for 24-hour access; and be used for audit, planning, and research. It will be a combination of the primary care EPR and linked information from other record systems. The strategy states that the primary care EPR will need to be “accurate, complete, relevant, up to date, and accessible.” To achieve the National Health Service's aspirations, many practices will need to have training and support to improve data quality of their EPRs. Methods to do this will need to be readily available and easy to implement.

There have been a number of studies that have investigated the validity of computerized morbidity recording in primary care,2,3,4,5,6,7,8,9,10 and they contain a range of methods for approaching this issue. The methods include comparing the electronic data with a “gold standard” such as paper records or prescribing data,2,3,4,6,7,8,9 comparing practice disease prevalence with published prevalence data,3,5,6,10 and, more recently, “triangulating” morbidity coding with other data in the EPR.5 A recent systematic review11 summarized the methods used in 52 studies. While published reports have served to indicate what might be achieved and illustrated methods by which to assess that achievement, no simple generalizable methods of assessing and improving data quality on a routine basis in a clinical setting have been tested in a range of practices.

Research Question

Can a program of assessment, feedback, and training improve the quality of data recorded in a general practice information system?

Methods

Study Setting

The North Staffordshire General Practice (GP) Research Network was established by the Primary Care Sciences Research Centre (PCSRC) at Keele University, the North Staffordshire Health Authority, and local general practices in 1997. The network practices were selected by their previous active involvement in local audit projects related to computerized data. They, therefore, represented a relatively computer-aware group of practices. The aim of the network was to collect coded consultation data for epidemiologic purposes and for local health planning. For this, a complete and correctly coded computerized record of consultations was essential. Therefore, we needed to assess and, where applicable, improve data quality in the participating practices.

The study reported here was carried out in seven of the network practices. These were the first practices to be recruited to the network whose anonymized data are being used for epidemiologic studies. All the practices use the Egton Medical Information System (EMIS) as their clinical computer system. The total registered population represented by these practices was 59,337 as of December 31, 1999. All morbidity data assigned a code on the practices' computers use the Read clinical classification (Read codes).12 The Read codes are a hierarchy of morbidity, symptom, and process codes and enable primary health care workers to code consultations by either the presenting symptom, the working or established diagnosis, or the procedure undertaken. The local research ethical committee approved the study as an audit.

Measures of Coding Quality

Consultation coding

A face-to-face contact is defined as a medical encounter between a patient and a doctor or nurse at the primary care center or during a home visit. A consultation is the addressing of a problem during a face-to-face contact, and one or more may be addressed during a single contact and may subsequently be recorded in the EPR. A coded consultation is one that has been recorded and has also been assigned a Read code problem title. These definitions are based on the way data are captured in practices using the EMIS computer, so one or more consultations may be addressed, recorded, and coded during a contact.

The measure of consultation coding quality was the proportion of recorded consultations that were coded consultations. It provides an estimate of the completeness with which consultations between patients and the practice have been coded. A high percentage is a basic requirement if contact data are to be used for epidemiologic studies of incidence and prevalence or health care use. The denominator is defined as all consultations during face-to-face contacts recorded in the EPR in the assessment period (2 weeks initially, 4 weeks for the final assessments). The numerator is the number of those consultations with a Read-coded problem title assigned. Consultations were grouped according to place (primary care center, home visit) and health care professional consulted (doctor, nurse) to report these separately. The separate analysis for nurses was introduced to provide specific feedback to this group.
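The completeness measure defined above can be illustrated with a minimal sketch. It assumes a hypothetical `Consultation` record and hypothetical group labels; it is not the extraction procedure used with the EMIS system, only a restatement of the numerator and denominator for the three reported groups.

```python
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple, Optional


class Consultation(NamedTuple):
    """Hypothetical record of one consultation recorded in the EPR."""
    place: str                 # "centre" or "home_visit" (illustrative labels)
    professional: str          # "doctor" or "nurse" (illustrative labels)
    read_code: Optional[str]   # Read-coded problem title, or None if uncoded


def reporting_group(c: Consultation) -> str:
    # Home visits are reported for all health professionals together;
    # primary care center consultations are split by doctor and nurse.
    if c.place == "home_visit":
        return "home visits (all professionals)"
    return f"centre ({c.professional})"


def coding_completeness(consultations: Iterable[Consultation]) -> Dict[str, float]:
    """Percent of recorded consultations with a Read-coded problem title, per group."""
    recorded = defaultdict(int)  # denominator: all recorded consultations
    coded = defaultdict(int)     # numerator: those with a coded problem title
    for c in consultations:
        group = reporting_group(c)
        recorded[group] += 1
        if c.read_code:
            coded[group] += 1
    return {g: 100.0 * coded[g] / recorded[g] for g in recorded}


sample = [
    Consultation("centre", "doctor", "H33.."),
    Consultation("centre", "doctor", None),
    Consultation("centre", "nurse", "663.."),
    Consultation("home_visit", "doctor", "G58.."),
]
completeness = coding_completeness(sample)
```

In this invented sample, half of the doctors' primary care center consultations carry a coded problem title, so that group is reported at 50%.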

Morbidity Coding by Prescribed Drug

Pairs of common general practice diseases and drugs were identified by the study team where the drug is almost exclusively prescribed for a small number of selected morbidities (Table 1). All patients currently being prescribed the drugs were identified and their records searched for the relevant morbidity codes having ever been recorded in the EPR. For each drug or drug group the percentage of patients with one of the selected morbidity codes was determined. For this measure, the morbidity code had only to have been recorded once in the EPR, at any time, and may have been entered by a range of health care workers inputting data from consultations, hospital letters, or primary care paper records. Morbidity codes recorded in this way enable patients to be identified for a “virtual” computer-based disease register, and this measure assesses the register's completeness.

Table 1.

Selected Drugs and Drug Groups with Paired Morbidities

| Drug/Drug Group | BNF Code | Morbidity | Read Code 4 | Read Code 5 |
|---|---|---|---|---|
| Thyroxine | 6.2.1 | Congenital hypothyroidism | C13. | C03.. |
| | | Acquired hypothyroidism | C13. | C04.. |
| | | History of hypothyroidism | 1432 | 1432. |
| Allopurinol | 10.1.4 | Gout | C53. | C34.. |
| Selegiline | 4.9.1 | Parkinson's disease | F22. | F12.. |
| Tamoxifen | 8.3.4 | Malignant neoplasm male breast | | B35.. |
| | | Malignant neoplasm female breast | | B34.. |
| | | Breast cancer | B1D. | |
| | | History of breast cancer | 1424 | 1424. |
| Sodium valproate | 4.8.1 | Epilepsy | F34. | F25.. |
| | | History of epilepsy | 1473 | 1473. |
| Hydroxocobalamin | 9.1.2 | Pernicious anemia | D121 | D010. |
| | | Other vitamin B12 deficiency | D122 | D011. |
| | | Vitamin B12 deficiency | | C2621 |
| | | B complex deficiency NOS | C413 | |
| Digoxin | 2.1.1 | Heart failure | G64. | G58.. |
| | | Cardiac dysrhythmias | | G57.. |
| | | Cardiomyopathy | G64. | G55.. |
| | | Paroxysmal supraventricular tachycardia | G66. | |
| | | Atrial fibrillation | G67. | |
| | | Ectopic beats | G68. | |
| Amiodarone | 2.3.2 | Cardiac dysrhythmias | | G57.. |
| | | Paroxysmal supraventricular tachycardia | G66. | |
| | | Atrial fibrillation | G67. | |
| Inhaled steroids [BNF chapter search] | 3.2 | Chronic obstructive pulmonary disease | H4.. | H3... |
| | | History of Asthma | | 14B4. |
| Fluoxetine | 4.3.3 | Neuroses & other mental disorders | | E2... |
| | | Nonorganic psychoses | E2.. | E1... |
| | | Neuroses | E3.. | |
| | | Other mental disorders | E4.. | Egton306 |
| Glaucoma drugs [BNF chapter search with removals] | 11.6 | Glaucoma | F54. | F45.. |
| | | Congenital glaucoma | N141 | P3200 |
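The drug-morbidity measure described above can be sketched as follows. This is an illustration under stated assumptions, not the search used in the study: the function name and data layout are hypothetical, only two of the drug groups are shown, and matching on a code prefix (the 5-byte code with trailing dots removed) is an assumed way of including child codes in the Read hierarchy.

```python
from typing import Dict, Set, Tuple

# Illustrative subset of the drug-morbidity pairs (5-byte Read codes with
# trailing dots stripped). Prefix matching is an assumption made here so
# that more specific child codes also count as a match.
PAIRED_CODE_PREFIXES: Dict[str, Tuple[str, ...]] = {
    "thyroxine": ("C03", "C04", "1432"),
    "allopurinol": ("C34",),
}


def register_completeness(
    patients_on_drug: Dict[str, Set[str]], prefixes: Tuple[str, ...]
) -> float:
    """Percent of patients on a drug with a paired morbidity code ever recorded.

    patients_on_drug maps a (hypothetical) patient identifier to the set of
    all Read codes recorded at any time in that patient's EPR.
    """
    if not patients_on_drug:
        raise ValueError("no patients are currently prescribed this drug")
    matched = sum(
        1
        for codes in patients_on_drug.values()
        if any(code.startswith(prefixes) for code in codes)
    )
    return 100.0 * matched / len(patients_on_drug)


# Invented example: four patients currently prescribed thyroxine.
thyroxine_patients = {
    "p1": {"C04.."},           # acquired hypothyroidism
    "p2": {"1432.", "G20.."},  # history of hypothyroidism, hypertension
    "p3": {"G20.."},           # no paired morbidity code
    "p4": set(),               # nothing coded
}
thyroxine_pct = register_completeness(
    thyroxine_patients, PAIRED_CODE_PREFIXES["thyroxine"]
)
```

The patients without a match (here `p3` and `p4`) correspond to the records that practices were asked to review after feedback.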

Annual Period Prevalence Comparisons

The prevalence of 12 selected conditions was compared with parallel data derived from the fourth National Study of Morbidity Statistics from General Practice (MSGP4).13 For MSGP4 and in our study, prevalence is defined as the number of patients who consult at least once during a year for the condition per 10,000 patient years at risk. This enabled the network estimates of period prevalence to be compared with an external reference, validated for its completeness of capture of consultation data. MSGP4 is the largest such published database of consulting incidence and prevalence in U.K. general practice. The morbidities were chosen to represent a range of conditions that are managed in general practice and for which comparative data could be identified in MSGP4. Table 2 shows the morbidity and process codes used for each of the chosen morbidities. The records were searched for the selected codes recorded in the EPR during the 12 months prior to the date of the assessment.

Table 2.

Conditions Selected for Period Prevalence Comparison with MSGP4

| MSGP4 ICD Codes | Read Term | Additional Read Terms | Read Code 4 | Read Code 5 |
|---|---|---|---|---|
| 250 | Diabetes mellitus | | C2.. | C10.. |
| | | Diabetes monitoring | 66A. 9OLA | 66A.. 9OLA |
| | | History of diabetes | 1434 | 1434. |
| | | Diabetic retinopathy | F521 | F420. |
| 401 | Essential hypertension | | G31. | G20.. |
| | | Hypertension monitoring | 6627 – 6629 | 6627. – 6629. |
| | | | 662F – 662H | 662F. – 662H. |
| | | | 662O – 662P | 662O. – 662P. |
| | | | 9OIA 9N03 | 9OIA. 9N03. |
| 430–438 | Cerebrovascular accident | | G71. – G75.. | G60.. – G68.. |
| | | | | G6y.. G6z.. |
| | | History of stroke | 14A7 | 14A7. |
| | | Stroke monitoring | 662M | 662M. |
| 410–414 | Ischemic heart disease | | G4.. | G3... |
| | | Cardiac drug side effect | 6625 | 6625. |
| | | Cardiac treatment changed | 6626 662D 662E 662J | 6626. 662D. 662E. 662J. |
| | | Angina control | 662K | 662K. |
| | | CHD monitoring | 662N – 662Z | 662N. 662Z. |
| 410 | Acute myocardial infarct | | G41. | G30.. |
| 413 | Angina | | G44. | G33.. |
| 427 | Cardiac dysrhythmias | | G66. – G69. | G57.. |
| 428 | Heart failure | | G6A. | G58.. |
| 493 | Asthma | | H43. | H33.. |
| | | Asthma monitoring | 663. | 663.. |
| | | Seen in asthma clinic | 9N1d | 9N1d. |
| | | Monitoring check done | 9OJA | 9OJA. |
| | | History of asthma | 14B4 | 14B4. |
| 372 | Conjunctivitis | Acute | F5B1 | F4C0. |
| | | Chronic | F5B2 | F4C1. |
| | | Allergic | F5B7 | |
| 455 | Hemorrhoids | | G94. | G84.. |
| 715 | Osteoarthritis | | M26. | N05.. |
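The period prevalence definition used for the comparison with MSGP4 can be restated as a short sketch. The record layout, function names, and example data are hypothetical, and the code set is matched exactly, whereas a real search would also need to include child codes in the Read hierarchy.

```python
from datetime import date, timedelta
from typing import Iterable, Set, Tuple

# Hypothetical record layout: (patient identifier, Read code, date recorded).
Record = Tuple[str, str, date]

# 5-byte Read codes listed for diabetes mellitus in the table above
# (exact matches only in this sketch).
DIABETES_CODES: Set[str] = {"C10..", "66A..", "1434.", "F420."}


def patients_consulting(
    records: Iterable[Record], codes: Set[str], assessment_date: date
) -> int:
    """Distinct patients with a selected code in the 12 months before assessment."""
    window_start = assessment_date - timedelta(days=365)
    return len(
        {
            patient
            for patient, code, recorded_on in records
            if code in codes and window_start < recorded_on <= assessment_date
        }
    )


def prevalence_per_10000(n_patients: int, patient_years_at_risk: float) -> float:
    """Annual period prevalence per 10,000 patient years at risk."""
    if patient_years_at_risk <= 0:
        raise ValueError("patient years at risk must be positive")
    return 10_000 * n_patients / patient_years_at_risk


# Invented example records.
records = [
    ("p1", "C10..", date(1999, 6, 1)),
    ("p1", "66A..", date(1999, 9, 1)),   # same patient, counted once
    ("p2", "1434.", date(1997, 1, 1)),   # outside the 12-month window
    ("p3", "H33..", date(1999, 5, 5)),   # not a diabetes code
    ("p4", "F420.", date(1999, 12, 1)),
]
n_diabetes = patients_consulting(records, DIABETES_CODES, date(1999, 12, 31))
rate = prevalence_per_10000(n_diabetes, patient_years_at_risk=100.0)
```

A patient is counted once however many qualifying codes are recorded in the year, which matches the definition of consulting at least once for the condition.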

Study Phases

Baseline Assessment

On joining the network, all practices were visited by a GP Research Fellow appointed to the project (a GP in one of the participating practices), an information technology (IT) officer, and the project manager. The data validation procedures were explained in detail and practices then signed a formal agreement with PCSRC. Through this agreement, the project team was bound to specific data extraction, confidentiality, and anonymization procedures. In turn, practice staff agreed to work toward recording and coding all face-to-face patient contacts, attend feedback sessions, and participate in training or other agreed strategies to improve data quality; the practice receives a modest payment to support this activity. The baseline assessments for all the measures were performed between January and August 1998 and were carried out by the project team. The timing of the assessments for individual practices was staggered, depending on their recruitment date. The anonymized data were analyzed at PCSRC using Microsoft databases, and presentations were designed for feedback to the practices.

Feedback and Training

After the baseline assessments, the practices were visited individually. The results were presented at a practice meeting in which the full primary health care team was represented. There was a simple check that the number of consultations recorded in the assessment period for each health professional reasonably reflected the practice's estimate of consultations for that professional. Consultation coding by practice, and by groups of professionals and individuals within practices, was presented graphically. Feedback on drug-morbidity pairs was at practice level with interpractice comparisons, as were comparisons of annual period prevalence with MSGP4. During the feedback, there was detailed discussion on Read code awareness, data entry by clinical and secretarial staff, the use of Read codes in practice, the setup of structured data entry systems (templates, which can be used when monitoring long-term conditions such as diabetes), and how coding could be improved. Agreement was reached on training needs in these areas and how they could be met. Practices were given data in which coding was not complete for drug-morbidity pairs and were asked to review the morbidity coding of these health records. Training consisted of a range of one- to two-hour sessions on the specific needs identified.

Repeat Cycles of Assessment, Feedback, and Training

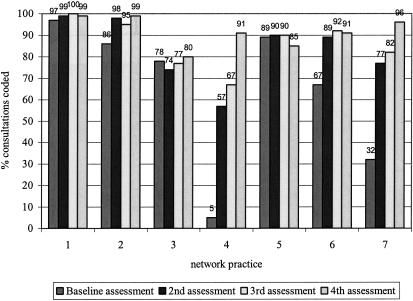

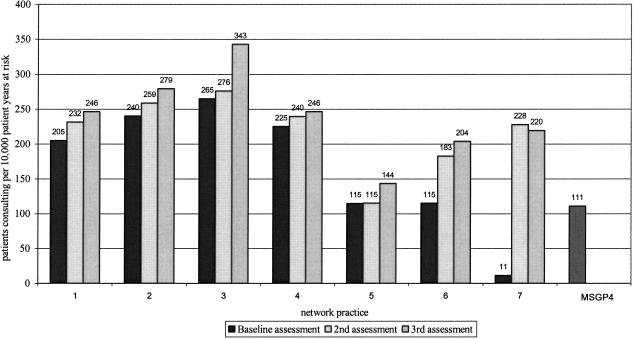

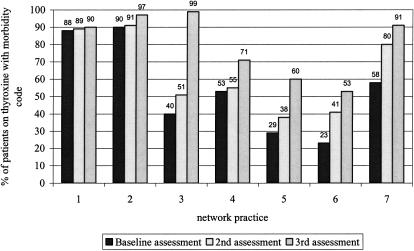

After the initial feedback and training, repeat assessments were carried out over the next two years using the three measures used at baseline. These were fed back to the practices as before and further support and training arranged as needed. Examples of the charts used in the last feedback session are shown in Figures 1–3. This rolling program continues, and practices new to the network join it.

Figure 1.

Percentage of doctors' consultations coded per assessment by practice (feedback presentation).

Figure 3.

Annual period prevalence of diabetes per assessment by practice and MSGP4 (feedback presentation).

Analysis

We present in this report the analysis of consultation coding completeness across four assessments (baseline and three repeats), and of the recording of diagnostic codes according to prescribed drug and of annual period prevalence compared with MSGP4 across three assessments (baseline and two repeats). For each assessment, the analysis consisted of (1) the percentage of consultations recorded in the EPR that were assigned a Read-coded problem title, stratified by primary care center consultation with a doctor, primary care center consultation with a nurse, or home visit; (2) the percentage of patients prescribed each of the selected drugs or drug types who had the relevant morbidity code, analyzed across all practices and across all drugs; and (3) the period prevalence of each selected condition per 10,000 patient years at risk.

Further, to assess improvement from the baseline to the fourth assessment in the level of consultation coding completeness across all practices by doctors, by nurses, and for home visits, Mantel-Haenszel tests were performed. A Mantel-Haenszel test was also used to assess differences in level of coding completeness between doctors and nurses at the 4th assessment. The Mantel-Haenszel test takes into account the stratification by practice but returns an overall p-value.
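To make the stratified comparison concrete, the following is a minimal sketch of a Mantel-Haenszel chi-square statistic computed across practices. It is not the software used in the study: the function name and counts are hypothetical, no continuity correction is applied (the text does not say whether one was used), and the p-value uses the chi-square distribution with one degree of freedom.

```python
import math
from typing import Iterable, Tuple

# One 2x2 table per practice (stratum), given as
# (coded at final, uncoded at final, coded at baseline, uncoded at baseline).
Stratum = Tuple[int, int, int, int]


def mantel_haenszel_test(strata: Iterable[Stratum]) -> Tuple[float, float]:
    """Return (chi-square statistic, p-value) pooled over strata.

    Assumptions of this sketch: no continuity correction, 1 degree of freedom.
    """
    observed = expected = variance = 0.0
    for a, b, c, d in strata:
        n = a + b + c + d
        if n < 2:
            continue  # a stratum with fewer than two observations adds nothing
        observed += a
        expected += (a + b) * (a + c) / n
        variance += (a + b) * (c + d) * (a + c) * (b + d) / (n * n * (n - 1))
    if variance == 0:
        raise ValueError("no informative strata")
    chi2 = (observed - expected) ** 2 / variance
    # For 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Invented counts for two practices, not taken from the study data.
chi2, p_value = mantel_haenszel_test([(90, 10, 60, 40), (80, 20, 50, 50)])
```

Each practice contributes its own expected count and variance, so differences in baseline coding levels between practices do not drive the pooled result.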

To assess the change in recording of diagnostic codes according to prescribed drug across assessments, logistic regression was used, using SPSS for Windows 10.0.14 Here the patients identified as receiving the drugs are the units of analysis, and whether they had the appropriate morbidity code is the outcome measure. Practice, type of drug, and assessment number were the independent variables. Patients could have been included in more than one assessment, so the observations are not independent, and a repeated measures approach may be preferable. However, although patients were identifiable for feedback, statistical analysis was anonymized, so this alternative analysis was not possible. The analyses thus are indications of the extent of change in coding levels across the series of assessments. Finally, a logistic regression was also performed on data using the third assessment only to assess variation by practice and by drug or drug type in recording of morbidity codes.

Results

Baseline Assessment

Table 3 shows the results for completeness of consultation coding by practice. The baseline assessment of coding completeness for all primary care center consultations with a doctor ranged from 5% to 97% between practices. Nurses showed lower levels of coding, with nurses in practice 6 not using the computer at all for recording consultations. The baseline results for coding of home visits show a variable level of coding with home visits rarely recorded in the EPR in practices 3 and 5 and not recorded at all in practice 6.

Table 3.

Completeness of Face-to-face Consultation Coding by Practice

| Type of Consultation | Practice | %* (n†) Coded Baseline Assessment | %* (n†) Coded 2nd Assessment | %* (n†) Coded 3rd Assessment | %* (n†) Coded 4th Assessment |
|---|---|---|---|---|---|
| Primary care center consultations for doctors only | 1 | 97 (1,196) | 99 (1,452) | 100 (1,274) | 99 (1,368) |
| | 2 | 86 (857) | 98 (681) | 95 (839) | 99 (828) |
| | 3 | 78 (598) | 74 (909) | 77 (868) | 80 (878) |
| | 4 | 5 (798) | 57 (488) | 67 (824) | 91 (836) |
| | 5 | 89 (469) | 90 (475) | 90 (999) | 85 (623) |
| | 6 | 67 (796) | 89 (1,089) | 92 (1,195) | 91 (1,075) |
| | 7 | 32 (1,888) | 77 (1,504) | 82 (1,491) | 96 (1,769) |
| Primary care center consultations for nurses only | 1 | 98 (370) | 94 (367) | 97 (539) | 87 (662) |
| | 2 | 72 (363) | 82 (462) | 89 (497) | 94 (497) |
| | 3 | 52 (436) | 54 (388) | 81 (474) | 76 (475) |
| | 4 | 21 (480) | 12 (834) | 38 (596) | 59 (488) |
| | 5 | 37 (229) | 34 (202) | 75 (140) | 89 (109) |
| | 6 | - (0)‡ | - (0)‡ | - (0)‡ | 37 (380) |
| | 7 | 6 (494) | 7 (462) | 83 (574) | 86 (806) |
| Home visits for all health professionals | 1 | 99 (104) | 100 (98) | 92 (61) | 92 (74) |
| | 2 | 85 (100) | 91 (93) | 84 (102) | 97 (76) |
| | 3 | 100 (2) | 71 (7) | 65 (51) | 77 (71) |
| | 4 | 4 (50) | 33 (60) | 20 (107) | 61 (98) |
| | 5 | 100 (7) | 95 (21) | 100 (9) | 67 (2) |
| | 6 | - (0)‡ | 100 (1) | 100 (2) | 95 (30) |
| | 7 | 41 (133) | 84 (136) | 88 (105) | 93 (118) |

*Percent of recorded consultations that were coded.

†Number of consultations recorded on computer during assessment period. Assessment periods were for two or four weeks; the number of recorded consultations for the four-week assessments is shown in italics with the mean two-week figure given.

‡No consultations were recorded on computer at these assessments.

Table 4 refers to morbidity coding by prescribed drug and shows the median number of patients on each drug or drug group at baseline assessment and the range across the seven practices. Amiodarone and selegiline were the least frequently prescribed, and inhaled steroids were the most frequent. Table 4 also shows the baseline completeness of relevant morbidity coding with respect to each drug or drug group. Inhaled steroids had the highest level of morbidity coding (77.9%), and amiodarone had the lowest (24.6%).

Table 4.

Completeness of Morbidity Coding per Assessment by Selected Drug or Drug Group and Number of Patients by Prescribed Drug or Drug Group

Assessment columns: Percent Patients on Drug with a Paired Morbidity Code, All Practices Combined (Range across Practices).

| Drug/Drug Group | No. Patients on Drug by Practice,* Median (Range across Practices) | Baseline Assessment | 2nd Assessment | 3rd Assessment |
|---|---|---|---|---|
| Thyroxine | 134 (41–295) | 57.1 (22.7–89.9) | 68.5 (38.5–91.4) | 82.4 (52.7–9.3) |
| Allopurinol | 36 (8–72) | 48.0 (8.3–87.5) | 56.8 (33.8–82.0) | 68.0 (35.3–100.0) |
| Selegiline | 6 (1–26) | 48.7 (0.0–80.0) | 81.2 (0.0–96.3) | 84.1 (0.0–100.0) |
| Tamoxifen | 45 (11–84) | 46.6 (2.4–100.0) | 71.3 (25.0–100.0) | 85.2 (35.3–100.0) |
| Sodium valproate | 17 (9–46) | 49.7 (13.0–80.0) | 59.1 (35.7–82.4) | 71.4 (33.3–92.3) |
| Hydroxocobalamin | 23 (2–71) | 35.2 (0.0–52.9) | 50.4 (0.0–90.9) | 52.9 (8.7–91.7) |
| Digoxin | 78 (25–124) | 53.2 (21.8–92.9) | 64.9 (41.0–100.0) | 76.8 (60.0–100.0) |
| Amiodarone | 7 (1–17) | 24.6 (0.0–57.1) | 49.3 (0.0–100.0) | 62.5 (44.4–100.0) |
| Inhaled steroids | 498 (134–876) | 77.9 (26.9–90.2) | 82.2 (32.0–95.3) | 89.2 (50.5–97.6) |
| Fluoxetine | 198 (24–235) | 64.1 (12.5–92.9) | 68.7 (21.1–93.7) | 68.3 (30.0–91.0) |
| Glaucoma drugs | 58 (12–141) | 37.2 (9.2–75.9) | 55.2 (23.1–94.4) | 66.8 (31.3–95.9) |

*At baseline assessment.

For those drugs least frequently prescribed, some caution is needed in interpreting the range across practices. However, tamoxifen showed the largest variation in completeness across the practices (2.4% to 100%). Table 5 then illustrates the completeness of coding separately for each practice for all drugs or drug groups combined, with a range of 29.3% to 88.4% at the baseline assessment.

Table 5.

Completeness of Morbidity Coding per Assessment by Practice

Assessment columns: Percent Patients with a Morbidity Code for Each Practice, All Drugs/Drug Groups Combined (Range across Drugs/Drug Groups).

| Practice | Baseline Assessment | 2nd Assessment | 3rd Assessment |
|---|---|---|---|
| 1 | 85.1 (45.5–90.2) | 90.9 (67.4–95.3) | 92.1 (62.9–100.0) |
| 2 | 88.4 (52.4–100.0) | 91.7 (52.4–100.0) | 95.6 (78.6–100.0) |
| 3 | 70.0 (16.7–85.7) | 75.7 (16.7–94.4) | 91.2 (44.4–100.0) |
| 4 | 74.8 (25.0–88.7) | 72.7 (20.0–88.0) | 83.2 (60.0–92.3) |
| 5 | 29.3 (0.0–72.2) | 36.8 (0.0–74.2) | 51.4 (0.0–88.9) |
| 6 | 44.3 (10.5–67.3) | 55.4 (15.7–76.5) | 67.9 (8.7–85.0) |
| 7 | 40.7 (2.4–65.5) | 63.9 (33.7–96.3) | 78.0 (51.7–93.6) |

Table 6 shows the combined prevalence estimates of the selected morbidities compared with MSGP4 rates and the range of the estimates across practices. The baseline assessment shows a wide variation among practices and overall prevalence figures that are often below MSGP4 rates. This is not true for diabetes or hypertension, for which the combined figures are closer to or higher than MSGP4 rates.

Table 6.

Annual Period Prevalence of Selected Morbidities

Values are annual period prevalence per 10,000 person-years at risk: combined practice rates (range across practices) and MSGP4 rates.

| Morbidity | Baseline Assessment | 2nd Assessment | 3rd Assessment | MSGP4 |
|---|---|---|---|---|
| Diabetes mellitus | 153 (11–265) | 226 (115–276) | 242 (144–343) | 111 |
| Essential hypertension | 382 (2–818) | 497 (173–884) | 673 (311–1090) | 412 |
| Cerebrovascular accident | 32 (2–82) | 44 (9–93) | 53 (17–86) | 66 |
| Ischemic heart disease | 118 (2–293) | 173 (75–326) | 230 (69–351) | 170 |
| Acute myocardial infarct | 10 (2–25) | 17 (11–31) | 22 (9–43) | 29 |
| Angina | 56 (5–143) | 70 (14–168) | 88 (29–155) | 114 |
| Cardiac dysrhythmias | 29 (6–83) | 44 (19–95) | 47 (29–61) | 45 |
| Heart failure | 47 (5–151) | 53 (5–145) | 58 (30–100) | 89 |
| Asthma | 306 (6–614) | 395 (158–645) | 501 (215–699) | 425 |
| Conjunctivitis | 113 (4–282) | 190 (29–267) | 223 (189–282) | 415 |
| Hemorrhoids | 36 (7–85) | 52 (11–85) | 65 (49–90) | 103 |
| Osteoarthritis | 101 (31–220) | 126 (50–240) | 167 (80–270) | 315 |

Feedback and Training

There were no difficulties in arranging feedback and training with the practices, as they readily provided practice time and resources. Feedback of completeness of consultation coding at practice, professional group, and individual levels enabled us to highlight gaps and identify training needs at each level. The project team was then able to design simple training programs that were tailored to the specific needs of individuals, professional groups, and the practice as a whole. GPs and nurses needed training on the use of Read codes and on effective data entry. Systems training was given on refining structured data entry so that if a template was chosen for recording data, an appropriate Read coded problem title would be assigned to the consultation record. Feedback on drug-morbidity coding and MSGP4 comparisons identified incompleteness of the disease registers and training needs for secretarial staff in Read code awareness and interpretation of hospital letters and paper notes. It also led to the development of simple practice data entry protocols to support coding of letters and paper notes. At each subsequent feedback session, more detailed and complex training needs were identified and addressed.

Repeat Assessments

Table 3 and Figure 1 show that the level of consultation coding by doctors improved, or was maintained, in all the practices, with doctors in five of the seven practices coding at above 90% by the fourth assessment. Coding by nurses was similarly improved or maintained but with nurses in only one practice coding at above 90% by their final assessment (Table 3). Home visits showed a more variable picture partly because of a continuing low level of recording home visits in some practices (Table 3). Across all practices, the level of coding increased from baseline to fourth assessment from 60% to 93% (doctors), 45% to 77% (nurses), and 64% to 84% (home visits). Overall, there was a significant improvement in levels of coding for all three (Mantel-Haenszel tests, all p < 0.001). However, at the fourth assessment, doctors were coding at higher levels than nurses (p < 0.001).

Tables 4 and 5 show a generally improved level of morbidity coding for all the drug groups and for all the practices. Across all practices, the level of morbidity coding increased from 64% (baseline) to 82% (third assessment). An example of the improvement for thyroxine across practices is given in Figure 2. By the third assessment, patients on inhaled steroids still had the highest level of paired morbidity coding, and those on hydroxocobalamin had the lowest. Three of the practices reached coding levels of above 90%.

Figure 2.

Completeness of morbidity coding for patients on thyroxine per assessment by practice (feedback presentation).

The logistic regression analysis, after adjusting for practice and type of drug, showed that a patient prescribed one of the drugs had 1.67 (95% CI 1.55–1.80) times the odds of having the relevant diagnostic code at the second assessment compared with baseline. This had improved by the third assessment to an odds ratio of 3.14 (95% CI 2.90–3.41) as compared with baseline. However, there was still variation between practices by the third assessment (p < 0.001). Practice 5 (OR 0.21; 95% CI 0.17–0.27 compared with practice 7, the arbitrary reference practice with which the others were compared) and practice 6 (OR 0.49; 95% CI 0.41–0.57) were the worst performing practices at the third assessment after adjusting for drug type. By contrast, a patient recorded as having been prescribed one of the drugs in practice 2 had almost 6 times the odds of having the relevant morbidity code recorded compared with similar patients in practice 7 (OR 5.75; 95% CI 4.16–7.95). There also was variation by drug type after adjusting for practice (p < 0.001). Patients taking inhaled steroids were most likely to have a relevant morbidity code recorded (OR 4.50; 95% CI 3.57–5.68 compared with patients on glaucoma drugs) at the third assessment, followed by those on tamoxifen (OR 3.08; 95% CI 2.03–4.66). Patients taking amiodarone (OR 0.79; 95% CI 0.45–1.37) or hydroxocobalamin (OR 0.53; 95% CI 0.36–0.79) were least likely to have a relevant morbidity code recorded in their records.

Table 6 shows a general increase in the estimates of prevalences, combined across all seven practices. By the third assessment, the practices had a prevalence at or higher than MSGP4 for 5 of 12 morbidities compared with 1 of 12 morbidities at baseline. The increase for diabetes across practices is shown in Figure 3. However, the prevalences for conjunctivitis, hemorrhoids, and osteoarthritis remain lower than MSGP4 rates by the final assessment.

Discussion

This study describes the introduction of a program for assessment of coding quality combined with regular feedback, training, and reassessment. It has shown that, at baseline assessment, even practices highly motivated to record electronically had variable levels of consultation coding and completeness of disease registers. However, by the final assessment, all practices showed improvements in, or maintained previous levels of, consultation coding by both doctors and nurses and in the completeness of their disease registers. We conclude that the program is likely to have been an important factor in this improvement. This was not a controlled trial, however, so changes may have occurred for other reasons. Even so, no other National Health Service or monetary incentives to improve quality of coding were operating during the study. The practical implementation of this program at each of the seven practices suggests this method of improving data quality should be feasible to introduce to other practices.

A possible bias was whether all face-to-face contacts were recorded on the practice computer. For this to be true, at least one consultation would have to be recorded for each face-to-face contact. It is likely that this occurred because, at the time of the study, six of the practices were “paperless,” the policy being to record face-to-face contacts only on the computer and not in the paper notes. As a check, the practices were able to confirm, at the feedback sessions, that the total number of consultations recorded for each health professional was what would have been expected for that assessment period. The recording of home visits on computer still needs improvement for several practices, although most did improve over the course of the study.

In using consultations rather than contacts as the unit of assessment, the level of coding does include coding of multiple-consultation contacts. However, the study did not address the question of whether all problems addressed during a contact were recorded and coded. This would have needed a “gold standard,” such as a video recording of the contact.3 We plan to research recording and coding of multiple problems during a contact in the future.

The assessment period increased from 2 to 4 weeks at the fourth assessment (third for nurses) to allow for the possibility of a doctor or nurse being absent for part but not all of an assessment period. The numbers of consultations in the 2-week assessment periods were large enough to have confidence that the percentages given are close to the actual coding percentages around that time period. For example, a sample of 385 would give a potential error in the assessment percentage of between −5 and +5% for a true coding level of 50%.
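The sample-size example above can be checked with a short calculation. The sketch assumes a 95% confidence level (z = 1.96) and the normal approximation to the binomial, neither of which is stated explicitly in the text; the function names are illustrative.

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Half-width of the approximate confidence interval for a proportion.

    Assumes the normal approximation to the binomial; z = 1.96 corresponds
    to a 95% confidence level (an assumption of this sketch).
    """
    return z * math.sqrt(p * (1 - p) / n)


def sample_size_for_margin(p: float, margin: float, z: float = 1.96) -> int:
    """Smallest sample size whose margin of error does not exceed `margin`."""
    return math.ceil(z ** 2 * p * (1 - p) / margin ** 2)


# A true coding level of 50% with 385 recorded consultations.
moe = margin_of_error(0.5, 385)
n_needed = sample_size_for_margin(0.5, 0.05)
```

Under these assumptions the margin is just under 0.05 and 385 is the smallest sample that achieves it. A true level of 50% is the worst case, so the same number of consultations gives a narrower margin at higher or lower coding levels.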

Practices were able to accommodate assessment, feedback, and training sessions, and the support team had little difficulty in arranging and undertaking them. Further research is needed to assess the impact of the program on the practice staff and its acceptability to them. Crucial to the program of feedback and data collection was the support team based at PCSRC, linked to the network practices. In addition to the study program, they were able to extend training and problem solving to other related areas to develop the use of the computer in the practices. The time devoted to the program was equivalent to that of a half-time data quality facilitator. We continue to perform annual assessments of these practices, and training is available to new staff as part of the data quality program for the network.

The assessment measures we used have been described and used in previous studies: consultation coding,3,4,5,6 morbidity coding to prescribed drug,3,4,5,8,9 and prevalence comparison with MSGP4.3,5,6,10 Our study is the first to use these measures in a practical program designed to be used for feedback and improvement as much as for the actual assessment of quality. Two studies have assessed the impact of feedback on the quality of consultation recording.15,16 These studies suggested variable effects of a feedback-only strategy.

Completeness of consultation coding was the most practical measure of individual performance but was subject to the bias discussed above. Analysis at the practitioner level allowed us to account for and address individual behaviors, such as low coding by a GP registrar in one assessment and nurses using templates that do not enter a Read code. Feedback on template use identified the need for practices to undertake training that enabled them to institute automatic coding of problem titles whenever templates were used.

Feedback on morbidity coding paired to prescribed drugs showed that the match was not precise, and 100% may not be a reasonable absolute target. New health records often were not coded, as there was a delay between the start of prescribing and the summarizing of old records. Drugs were sometimes used for morbidities that had been coded but had not been searched for, for example, fluoxetine for premenstrual syndrome. Morbidities sometimes were not coded because it was difficult to establish a firm diagnosis, as treatment had been started when the recording in paper notes was, at best, cursory. Hydroxycobalamin was a good example of this. However, the drug-morbidity pairing is a simple measure to perform, enables individual records to be updated, and allows practices to benchmark themselves against their peers. It also is possible to use new drug-morbidity pairings to assess coding for other morbidities.
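The drug-morbidity pairing measure can be sketched in a few lines. This is an illustrative sketch only, not the search used in the study: the function name, the patient identifiers, and the placeholder code labels are all hypothetical, and the toy data are invented to show the calculation rather than to report any result.

```python
from typing import Dict, List, Set, Tuple


def paired_coding(
    prescribed: Set[str],
    codes_by_patient: Dict[str, Set[str]],
    target_codes: Set[str],
) -> Tuple[float, List[str]]:
    """Return (percentage of prescribed patients with a target code,
    sorted list of patients lacking one, for record review)."""
    if not prescribed:
        return 0.0, []
    # A patient is unmatched when none of their codes is a target code.
    unmatched = sorted(
        pid for pid in prescribed
        if not (codes_by_patient.get(pid, set()) & target_codes)
    )
    matched = len(prescribed) - len(unmatched)
    return 100.0 * matched / len(prescribed), unmatched


# Toy data (hypothetical): four patients prescribed a drug such as tamoxifen.
prescribed = {"p1", "p2", "p3", "p4"}
codes_by_patient = {
    "p1": {"BREAST_CANCER"},                  # placeholder labels, not Read codes
    "p2": {"BREAST_CANCER", "HYPERTENSION"},
    "p3": {"HYPERTENSION"},
}
pct, to_review = paired_coding(prescribed, codes_by_patient, {"BREAST_CANCER"})
print(pct, to_review)  # prints: 50.0 ['p3', 'p4']
```

Returning the unmatched patients alongside the percentage reflects the point made above: the measure is useful both for benchmarking and for identifying individual records to update.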

The comparison of prevalences to MSGP4 is useful as it provides a standard figure against which to assess between-practice variation. The problem is that absolute figures for prevalence are themselves subject to variation and are not timeless “gold standards.” First, prevalence may change over time; MSGP4 figures are from 1991/1992, the latest published data. Second, differences in case finding, screening, and case definition, especially true for diabetes and hypertension, occur over time and between practices. The between-practice variation could be attributable to differing practice special interests and referral patterns. Third, GPs can vary in coding practice; e.g., one person's painful knee is another's knee osteoarthritis, so no group of GPs can be held to be a “gold standard.” These are important problems to solve for primary care epidemiology, and research is needed into the usefulness of standard definitions of morbidity and the interpretation of morbidity codes.

Conclusion

This study describes an assessment program that appeared to improve data quality in primary care electronic records and that enables consultation morbidity data to be used for epidemiologic research in primary care. The method is likely to be generalizable but needs a well-resourced team to carry it out. There is room for improvement in the precision of the measures used and a need for more research into the impact on practice staff.

The authors thank all the general practitioners, practice nurses, and staff of the general practices who belong to the network for their participation in this study. The North Staffordshire General Practice Research Network was established and funded by the North Staffordshire Health Authority and North Staffordshire general practice fundholder savings. The Network is now funded by grants from the NHS(E), the North Staffordshire Primary Care Research Consortium, and the Staffordshire Moorlands Primary Care Research Consortium.

References

- 1. NHS Executive. Information for Health: An Information Strategy for the Modern NHS 1998–2005. A National Strategy for Local Implementation. London: Department of Health, 1998.

- 2. Mant D, Tulloch A. Completeness of chronic disease registration in general practice. BMJ (Clin Res Ed). 1987; 294:223–4.

- 3. Pringle M, Ward P, Chilvers C. Assessment of the completeness and accuracy of computer medical records in four practices committed to recording data on computer. Br J Gen Pract. 1995; 45:537–41.

- 4. Scobie S, Basnett I, McCartney P. Can general practice data be used for needs assessment and health care planning in an inner-London district? J Public Health Med. 1995; 17:475–83.

- 5. Hassey A, Gerrett D, Wilson A. A survey of validity and utility of electronic patient records in a general practice. BMJ. 2001; 322:1401–5.

- 6. Pearson N, O'Brien J, Thomas H, Ewings P, Gallier L, Bussey A. Collecting morbidity data in general practice: the Somerset morbidity project. BMJ. 1996; 312:1517–20.

- 7. Thiru K, de Lusignan S, Hague N. Have the completeness and accuracy of computer medical records in general practice improved in the last five years? The report of a two-practice pilot study. Health Informatics Journal. 1999; 5:224–32.

- 8. Coulter A, Brown S, Daniels A. Computer held chronic disease registers in general practice: a validation study. J Epidemiol Community Health. 1989; 43:25–8.

- 9. Bottomley A. Methodology for assessing the prevalence of angina in primary care using practice based information in northern England. J Epidemiol Community Health. 1997; 51:87–9.

- 10. Hollowell J. The general practice research database: quality of morbidity data. Population Trends. 1997; 87:36–40.

- 11. Thiru K, Hassey A, Sullivan F. Systematic review of scope and quality of electronic patient record data in primary care. BMJ. 2003; 326:1070–2.

- 12. Chisholm J. The Read clinical classification. BMJ. 1990; 300:1092.

- 13. Royal College of General Practitioners, Office of Population Census and Surveys, Department of Health. Morbidity Statistics from General Practice: Fourth National Study 1991–1992. London: HMSO, 1995.

- 14. SPSS for Windows, Rel. 10.0.7. Chicago: SPSS Inc, 2000.

- 15. Gilliland A, Mills K, Steels K. General Practitioner records on computer—handle with care. Family Practice. 1992; 9:441–50.

- 16. de Lusignan S, Stephens P, Adal N, Majeed A. Does feedback improve the quality of computerized medical records in primary care? J Am Med Inform Assoc. 2002; 9:395–401.