Abstract

Recent behavioral studies in both humans and rodents have found evidence that performance in decision-making tasks depends on two different learning processes: one encoding the relationship between actions and their consequences and a second involving the formation of stimulus–response associations. These learning processes are thought to govern goal-directed and habitual actions, respectively, and have been found to depend on homologous corticostriatal networks in these species. Thus, recent research using comparable behavioral tasks in both humans and rats has implicated homologous regions of cortex (medial prefrontal cortex/medial orbital cortex in humans and prelimbic cortex in rats) and of dorsal striatum (anterior caudate in humans and dorsomedial striatum in rats) in goal-directed action, and a further region of dorsal striatum (posterior lateral putamen in humans and dorsolateral striatum in rats) in the control of habitual actions. These learning processes have been argued to be antagonistic or competing because their control over performance appears to be all or none. Nevertheless, evidence has started to accumulate suggesting that they may at times compete and at others cooperate in the selection and subsequent evaluation of actions necessary for normal choice performance. It appears likely that cooperation or competition between these sources of action control depends not only on local interactions in dorsal striatum but also on the cortico-basal ganglia network within which the striatum is embedded and that mediates the integration of learning with basic motivational and emotional processes. The neural basis of the integration of learning and motivation in choice and decision-making is still controversial and we review some recent hypotheses relating to this issue.

Keywords: decision-making, instrumental conditioning, prefrontal cortex, dorsal striatum, nucleus accumbens, amygdala

INTRODUCTION

Although much has been discovered about the anatomy and pharmacology of the basal ganglia, there has been much less research systematically investigating their functions in adaptive behavior. The considerable interest in neuropathology, particularly of the dorsal striatum and its midbrain dopaminergic afferents, associated with disorders, such as obsessive compulsive disorder (Saint-Cyr et al, 1995), Parkinson's (Antonini et al, 2001) and Huntington's diseases (Joel, 2001), Tourette's syndrome (Marsh et al, 2004), and so on, has implicated this structural network in various aspects of action control. However, historically, these functions have been argued to be limited to the bottom-up regulation of motor movement through output to the pallidum, thalamus, and to motor and premotor cortices (Mink, 1996). More recently, however, interest has turned to the potential role of the basal ganglia in the top-down or executive control of motor movement (Miller, 2008). This interest has largely been fueled by new, more detailed, models of the neuroanatomy that have linked feedforward inputs through the corticostriatal circuit with these feedback functions through a network of partially closed cortico-basal ganglia loops (Alexander and Crutcher, 1990b; Nambu, 2008).

In addition, this recent approach has led to integration of some previously poorly characterized aspects of basal ganglia function with motor control, particularly its role in decision-making based on affective or reward processes. Considerable research has long suggested that the ventral striatum has a critical role in the motivational control of action, a suggestion captured in the characterization of this region as a limbic–motor interface (Kelley, 2004; Mogenson et al, 1980). However, recent research has also implicated the dorsal striatum in the control of decision-making regulated by reward, particularly the role of the caudate or dorsomedial region of the striatum in the integration of reward-related processes with action control (Balleine et al, 2007c). This research suggests that the striatum has a much broader role in the control of executive functions than previously suspected and, indeed, appears to be centrally involved in functions long argued to depend solely on the regions of prefrontal cortex (Balleine et al, 2007a; Lauwereyns et al, 2002; Tanaka et al, 2006).

Reconciling the motor with the non-motor, cognitive aspects of basal ganglia function in the production and control of adaptive behavior has become a matter of considerable speculation and debate, and a number of theories have been advanced based around the new and developing description of the neuroanatomy and neuropharmacology of basal ganglia and its composite subregions (Bar-Gad et al, 2003; Hazy et al, 2007; Joel et al, 2002; McHaffie et al, 2005). However, it must be conceded that, although promissory, much of this theorizing has been driven by these structural advances with relatively little consideration of evidence (or lack of evidence) regarding the functions of the circuitry involved (Nambu, 2008).

In this review, we describe recent research from our laboratories that addresses this issue focusing particularly on collaborative projects through which we have started to assess homologous functions of corticostriatal circuitry in both executive and motor learning processes and in the motivational control of action in rodents and humans. We begin by considering behavioral evidence for multiple sources of action control and recent evidence implicating regions of dorsal striatum in the cognitive and sensorimotor control of actions. We then consider the evidence for two, apparently independent, sources of motivational control mediated by reward and by stimuli that predict reward and the role of distinct cortico-ventral striatal networks in these functions. Finally, we consider the role of basal ganglia generally in the integration of the learning and motivational processes through which courses of action are acquired, selected, and implemented to determine adaptive decision-making and choice.

MULTIPLE SOURCES OF ACTION CONTROL IN RODENTS AND HUMANS: GOALS AND HABITS

There is now considerable evidence to suggest that the performance of reward-related actions in both rats and humans reflects the interaction of two quite different learning processes, one controlling the acquisition of goal-directed actions, and the other the acquisition of habits. This evidence suggests that, in the goal-directed case, action selection is governed by an association between the response ‘representation' and the ‘representation' of the outcome engendered by those actions, whereas in the case of habit learning, action selection is controlled through learned stimulus–response (S–R) associations without any associative link to the outcome of those actions. As such, actions under goal-directed control are performed with regard to their consequences, whereas those under habitual control are more reflexive in nature, by virtue of their control by antecedent stimuli rather than their consequences. It should be clear, therefore, that goal-directed and habitual actions differ in two primary ways: (1) they differ in their sensitivity to changes in the value of the consequences previously associated with the action; and (2) they differ in their sensitivity to changes in the causal relationship between the action and those consequences. Generally, two kinds of experimental test have been used to establish these differences, referred to as outcome devaluation and contingency degradation, respectively.

Outcome Devaluation

It is now nearly 30 years since it was first demonstrated that rats are capable of encoding the consequences of their actions. In an investigation of the learning processes controlling instrumental conditioning in rats, Adams and Dickinson (1981) were able to demonstrate that, after they were trained to press a lever for sucrose, devaluing the sucrose by pairing its consumption with illness (induced by an injection of lithium chloride) caused a subsequent reduction in performance when the rats were again allowed to lever press in an extinction test; ie, in the absence of any feedback from the delivery of the now devalued outcome. In the absence of this feedback during the test, the reduction in performance suggested that the rats encoded the lever press–sucrose association during training and were able to integrate that learning with the experienced change in the value of the sucrose to alter their subsequent performance.

This demonstration was of central importance because, at the time, the available evidence suggested that lever-press acquisition was controlled solely by sensorimotor learning, involving a process of S–R association. Adams and Dickinson's (1981) finding was the first direct evidence to contradict this view and to suggest that animals are capable of a more elaborate form of encoding based on the response–outcome (R–O) association. It is important to recognize that evidence of R–O encoding did not necessarily imply that animals could not or did not learn by S–R association and, indeed, subsequent evidence has suggested that both processes can be encouraged depending on the training conditions. Adams (1981) found early on that the influence of devaluation on lever pressing was dependent on the amount of training; that, after a period of overtraining, the lever pressing by rats appeared to be no longer sensitive to devaluation. This was consistent with the view that R–O learning dominated performance early after acquisition but gave way to an S–R process, as performance became more routine or habitual (see also Dickinson, 1994; Dickinson et al, 1995).

Importantly, very similar effects have been found in human subjects. In a recent study, for example, we (Tricomi et al, 2009) trained human subjects to press different buttons to gain access to symbols corresponding to small quantities of two different snack foods (one of which they were given to eat at the end of the session). When allowed to eat a particular snack food until satiated, thereby selectively devaluing that snack food, undertrained subjects subsequently reduced their performance of the action associated with the devalued snack food compared with that of an action associated with a non-devalued snack food in an extinction test. In contrast, after overtraining, performance was no longer sensitive to snack food devaluation and subjects responded similarly on both the action associated with the devalued outcome and the action associated with the non-devalued outcome.

Devaluation effects have also been demonstrated when assessed using choice between different actions. Rats trained on two different action–outcome associations have been found to alter their choice between actions in an extinction test after one or other outcome has been devalued either by taste aversion learning (Colwill and Rescorla, 1985) or by specific satiety induced by a period of free consumption of one or other training outcome (Balleine and Dickinson, 1998a, 1998b). Valentin et al (2007) reported a similar devaluation effect on choice performance in humans, using a training procedure in which subjects were allowed to make stochastic choices between two icons, one paired with a high probability chocolate milk or low probability orange juice and, in a second pair of icons, one paired with high probability tomato juice and the other the low probability orange juice. When subsequently sated on the chocolate milk or tomato juice, their choice of the specific icon associated with the now devalued outcome was reduced, whereas choice of the icon paired with the other non-devalued outcome was not.

Thus, whether assessed using an undertrained free operant action or by means of choice between two actions, both rodents and humans show sensitivity to outcome devaluation. Furthermore, in both species overtraining produces insensitivity to outcome devaluation suggesting that performance has become habitual. These findings suggest, therefore, that both rodents and humans are capable of goal-directed and habitual forms of behavioral control.

Contingency Degradation

In addition to differences in associative structure, demonstrated by differential sensitivity to devaluation, the R–O and S–R learning processes appear also to be driven by different learning rules. Traditionally, S–R learning was argued to reflect the operation of a contiguity learning rule, subsequently referred to as Hebbian learning. As one might expect, this proposed that S–R learning is governed by contiguous activation of sensory and motor processes (Hebb, 1949). On a pure contiguity account (Guthrie, 1935) that is all that is required whereas, according to the S–R/reinforcement view (Hull, 1943), the association of contiguously active sensory and motor processes is strengthened by a reinforcement-related signal that does not itself form part of the associative structure and acts merely as a catalyst to increase the associative strength between S and R. On this latter view, every time an action is reinforced its association with any prevailing, contiguously active, situational stimuli is increased. This view predicts, naturally enough, that S–R learning should be constrained to the stimuli under which training is conducted and, indeed, in an interesting demonstration, Killcross and Coutureau (2003) found evidence of contextual control of S–R learning induced by overtraining one action in one context and undertraining a different action in a different context. When tested in the overtrained context the action was insensitive to outcome devaluation; when tested in the undertrained context, it was not and showed the normal sensitivity to devaluation indicative of control by an R–O learning process. By extension, the training of actions under distinct discriminative stimuli should also be anticipated to restrict S–R control to the performance assessed under that particular stimulus relative to other stimuli, consistent with aspects of previous reports (Colwill and Rescorla, 1990; De Wit et al, 2006).

In contrast, R–O learning is not determined by simple contiguity between action and outcome. This has been demonstrated in a variety of studies, first by Hammond (1980) and later in a number of better-controlled demonstrations (Balleine and Dickinson, 1998a; Colwill and Rescorla, 1986; Dickinson and Mulatero, 1989; Williams, 1989). Thus, for example, when action–outcome contiguity is maintained, non-contiguous delivery of the outcome of an action causes a selective reduction in the performance of that action relative to actions not paired with that outcome. Generally, if a specific outcome is more probable given performance of a specific action, then the strength of the R–O association increases. If the outcome has an equal or greater probability of delivery in the absence of the action, then the R–O association declines. This increase and decrease in the strength of association suggests that, unlike S–R learning, R–O learning is sensitive to the contingency between R and O rather than R–O contiguity. In contrast, S–R learning, being contiguity driven, should be insensitive to non-contiguous outcome delivery. In one study, Dickinson et al (1998) found that, compared with relatively undertrained rats, overtrained rats were insensitive to a shift in contingency induced by the imposition of an omission schedule; ie, when rats were asked to withhold the performance of an action to earn a sucrose outcome, undertrained rats were able to do so, whereas overtrained rats were not. Thus, reversal of the contingency from positive (O depends on R) to negative (O depends on withholding R) affected undertrained actions, sensitive to outcome devaluation, but did not affect overtrained actions.
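The contingency rule described above is often formalized as the difference between the probability of the outcome given the action and its probability in the absence of the action. The text does not name or define such a measure, so the following Python sketch is only an illustration under that assumption; the function name `delta_p` and the example probabilities are hypothetical.

```python
def delta_p(p_outcome_given_action: float, p_outcome_given_no_action: float) -> float:
    """Illustrative contingency measure (an assumption, not taken from the text):
    P(outcome | action) minus P(outcome | no action)."""
    for p in (p_outcome_given_action, p_outcome_given_no_action):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
    return p_outcome_given_action - p_outcome_given_no_action


# Hypothetical schedules, chosen only to illustrate the three cases in the text.
positive = delta_p(0.5, 0.0)   # outcome depends on the action
degraded = delta_p(0.5, 0.5)   # unpaired outcomes are as likely as paired ones
omission = delta_p(0.0, 0.5)   # outcome depends on withholding the action
```

On this reading, a positive value corresponds to conditions under which the R–O association should strengthen, and a value of zero or below to conditions under which it should decline. A purely contiguity-driven S–R process would be expected to ignore the second argument.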

Although the influence of overtraining on contingency sensitivity has not been assessed in human subjects, there is considerable evidence that rat actions and human causal judgments exhibit a comparable sensitivity to the degradation of the action–outcome contingency produced by the delivery of unpaired outcomes. Recall that an instrumental action can be rendered causally ineffective by delivering unpaired outcomes with the same probability as paired ones. In fact, just as the rate of lever pressing by rats declines systematically as the difference in the probability of the paired and unpaired outcomes is reduced (Hammond, 1980), human performance declines similarly when the outcomes are given a nominal value (Shanks and Dickinson, 1991) and, more importantly, so do judgments regarding the causal efficacy of actions (Shanks and Dickinson, 1991; Wasserman et al, 1983).

Summary

These behavioral studies provide consistent evidence of two different associative processes through which actions can be acquired and controlled. One of these, R–O learning, reflects the formation of associations between actions and their consequences or outcomes, a process that explains the sensitivity of newly acquired actions and of choice between distinct courses of action to changes in the value of their consequences and in the contingent relationship between performance of the action and delivery of its associated outcome. Given the clear and substantial regulation by their consequences, the rapid and relatively flexible deployment of actions controlled by the R–O association is clearly consistent with the claim that this learning process is critical to the acquisition and performance of goal-directed action specifically and to executive control of decision-making and choice between courses of action more generally. The second, S–R learning, process involves the formation of an association between the response and antecedent stimuli. Performance controlled by the S–R association is, therefore, stimulus- and, often, contextually bound, and relatively automatic, appearing impulsive or habitual and unregulated by its consequences.
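The contrast between the two processes can be made concrete with a deliberately simplified sketch. It assumes, purely for illustration, that goal-directed response strength is the product of an R–O association and the current value of the outcome, whereas habitual response strength depends only on an S–R association. The function names, the multiplicative rule, and the numerical values are all hypothetical and are not drawn from the studies reviewed.

```python
def goal_directed_strength(ro_association: float, outcome_value: float) -> float:
    """Toy R-O controller: response tendency scales with current outcome value."""
    return ro_association * outcome_value


def habitual_strength(sr_association: float) -> float:
    """Toy S-R controller: response tendency ignores the outcome entirely."""
    return sr_association


# Hypothetical values: 1.0 = outcome valued, 0.0 = outcome fully devalued.
VALUED, DEVALUED = 1.0, 0.0

gd_before = goal_directed_strength(0.8, VALUED)
gd_after = goal_directed_strength(0.8, DEVALUED)   # drops after devaluation
habit_before = habitual_strength(0.8)
habit_after = habitual_strength(0.8)               # unchanged by devaluation
```

In this toy scheme only the goal-directed controller changes its output in an extinction test after devaluation, which is the behavioral signature used throughout the studies described above.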

One might anticipate that these distinct learning and behavioral processes would have quite distinct neural determinants and recent research has confirmed this prediction. In the following section, we review evidence suggesting that homologous regions of the cortical-dorsal striatal network are involved in these learning processes in rats and humans, findings that have been established using many of the same behavioral tests described above.

ROLE OF THE CORTICOSTRIATAL NETWORK IN GOAL-DIRECTED AND HABIT LEARNING IN RATS AND HUMANS

Neural Substrates of Goal-Directed Learning

In rats, two components of the corticostriatal circuit in particular have been implicated in goal-directed learning: the prelimbic region of prefrontal cortex—see Figure 1a—and the area of dorsal striatum to which this region of cortex projects: the dorsomedial striatum—Figure 1d (Groenewegen et al, 1990; McGeorge and Faull, 1989; Nauta, 1989). The networks described in this section are illustrated in Figure 2a. Lesions of either of these regions prevents the acquisition of goal-directed learning, rendering performance habitual even during the early stages of training, as assessed using either an outcome devaluation paradigm or contingency degradation (Balleine and Dickinson, 1998a; Corbit and Balleine, 2003b; Yin et al, 2005). Importantly, prelimbic cortex, although necessary for initial acquisition, does not appear to be necessary for the expression of goal-directed behavior; lesions of this area do not impair goal-directed behavior if they are induced after initial training (Ostlund and Balleine, 2005). On the other hand, dorsomedial striatum does appear to be critical for both the learning and expression of goal-directed behavior; lesions of this area impair such behavior if performed either before or after training (Yin et al, 2005).

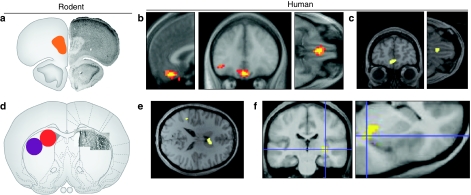

Figure 1.

(a) Photomicrograph of an NMDA-induced cell body lesion of prelimbic prefrontal cortex (right hemisphere) and approximate region of lesion-induced damage (orange oval; left hemisphere) found to abolish the acquisition of goal-directed action in rats (cf. Balleine and Dickinson, 1998a, 1998b; Corbit and Balleine, 2003a, 2003b; Ostlund and Balleine, 2005). (b) Region of human vmPFC (here medial OFC) exhibiting a response profile consistent with the goal-directed system. Activity in this region during action selection for a liquid food reward was sensitive to the current incentive value of the outcome, decreasing in activity during the selection of an action leading to a food reward devalued through selective satiation compared to an action leading to a non-devalued food reward. From Valentin et al (2007). (c) Regions of human vmPFC (medial prefrontal cortex and medial OFC) exhibiting sensitivity to instrumental contingency and thereby exhibiting response properties consistent with the goal-directed system. Activation plots show areas with increased activity during sessions with a high contingency between responses and rewards compared with sessions with low contingency. From Tanaka et al (2008). (d) Photomicrographs of NMDA-induced cell-body lesions of dorsomedial and dorsolateral striatum (right hemisphere) with the approximate region of lesion-induced damage illustrated using red and purple circles, respectively (left hemisphere). This lesion of dorsomedial striatum has been found to abolish acquisition and retention of goal-directed learning (cf. Yin et al, 2005), whereas this lesion of dorsolateral striatum was found to abolish the acquisition of habit learning (Yin et al, 2004). (e) Region of human anterior dorsomedial striatum exhibiting sensitivity to instrumental contingency from the same study described in panel c.
(f) Region of posterior lateral striatum (posterior putamen) exhibiting a response profile consistent with the behavioral development of habits in humans. From Tricomi et al (2009).

Figure 2.

(a) Evidence reviewed in the text suggests that distinct neural networks mediate the acquisition of goal-directed actions and habits and the role of goal values and of Pavlovian values in the motivation of performance. On this view, habits are encoded in a network involving sensory-motor (SM) cortical inputs to dorsolateral striatum (DL), with feedback to cortex through substantia nigra reticulata/internal segment of the globus pallidus (SNr/GPi) and posterior thalamus (PO) and are motivated by midbrain dopaminergic inputs from substantia nigra pars compacta (SNc). A parallel circuit linking medial prefrontal cortex (MPC), dorsomedial striatum (DM), SNr, and mediodorsal thalamus (MD) mediates goal-directed actions that may speculatively involve a dopamine-mediated reward process. Finally, choice between actions can be facilitated both by the value of the goal or outcome associated with an action, likely involving amygdala inputs to ventral striatum, MPC and DM, and by Pavlovian values mediated by a parallel ventral circuit involving orbitofrontal cortex (OFC) and ventral striatal (VS) inputs into the habit and goal-directed loops. (b) Various theories have been advanced, based on rat and primate data, regarding how limbic, cortical, and midbrain structures interact with the striatum to control performance (see text). Here, dopaminergic (DA) feedforward and feedback processes are illustrated involving VTA-accumbens shell and core and SNc-dorsal striatal networks. The involvement of the BLA in reward processes is illustrated, as is the hypothesized involvement of the infralimbic cortex (IL) and central nucleus of the amygdala in the reinforcement signal derived from SNc afferents on dorsolateral striatum.

The finding that parts of rat prefrontal cortex contribute to action–outcome learning raises the question of whether there exists a homologous region of the primate prefrontal cortex that contributes to similar functions. A number of fMRI studies in humans have found evidence that a part of the ventromedial prefrontal cortex (vmPFC) is involved in encoding the expected reward attributable to chosen actions, which might suggest this region as a candidate area for a possible homolog. In a typical example, Daw et al (2006) used a four-armed bandit task in which subjects on each trial could choose one of the four bandits to obtain ‘points' that could be obtained on the different bandits in varying amounts (that would later be converted into money). The amount of reward expected on a given bandit once it had been chosen was estimated using a reinforcement learning (RL) algorithm, which took into account the history of past rewards obtained on that bandit to generate a prediction about the future rewards likely attainable on a given trial. Activity in vmPFC (medial orbitofrontal cortex extending dorsally up the medial wall of prefrontal cortex) was found to correlate with the expected reward value derived from the RL model for the chosen action across trials. A similar finding has been obtained in a number of other studies using similar paradigms and approaches (Hampton et al, 2006; Kim et al, 2006; Tanaka et al, 2004). These findings suggest that human vmPFC is involved in encoding value signals relevant for reward-based action selection; however, the above studies did not deploy the behavioral assays necessary to determine whether such value signals are goal-directed or habitual.
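To make the notion of a trial-by-trial expected-reward signal concrete, the following Python sketch implements a generic delta-rule learner with softmax choice on a four-armed bandit. It is not the model fitted in the studies cited above, whose details are not given here; the learning rate `alpha`, the inverse temperature `beta`, the reward means, and the Gaussian reward noise are all assumptions made for illustration.

```python
import math
import random


def softmax(values, beta):
    """Convert action values into choice probabilities (beta = inverse temperature)."""
    peak = max(values)  # subtract the maximum for numerical stability
    exps = [math.exp(beta * (v - peak)) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def update_value(value, reward, alpha):
    """Delta rule: move the estimate toward the reward by a fraction alpha."""
    return value + alpha * (reward - value)


def simulate_bandit(reward_means, n_trials=200, alpha=0.2, beta=0.1, seed=0):
    """Return final value estimates and the expected value of each chosen arm.

    The list of chosen-arm values is the kind of trial-by-trial regressor that
    could be compared with a neural signal; all parameters here are hypothetical.
    """
    rng = random.Random(seed)
    values = [0.0] * len(reward_means)
    chosen_values = []
    for _ in range(n_trials):
        probs = softmax(values, beta)
        choice = rng.choices(range(len(values)), weights=probs)[0]
        chosen_values.append(values[choice])  # expectation before feedback
        reward = rng.gauss(reward_means[choice], 1.0)
        values[choice] = update_value(values[choice], reward, alpha)
    return values, chosen_values


final_values, chosen_values = simulate_bandit([20.0, 40.0, 60.0, 80.0])
```

Note that a learner of this kind produces the same value signal whether behavior is goal-directed or habitual, which is why the correlational findings described above cannot by themselves distinguish the two forms of control.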

To address this issue, Valentin et al (2007) performed an outcome devaluation procedure in humans, while subjects were scanned with fMRI during the performance of instrumental actions for food rewards. Following an initial (moderate) training phase, one of these foods was devalued by feeding the subject to satiety on that food. The subjects were then scanned again while they were re-exposed to the instrumental choice procedure in extinction. By testing for regions of the brain showing a change in activity during selection of a devalued action compared with that elicited during selection of a valued action from pre- to post-satiety, it was possible to isolate areas showing sensitivity to the learned action–outcome associations. The regions found to show such a response profile were medial OFC, as well as an additional part of central OFC. The aforementioned results would therefore appear to implicate at least a part of vmPFC in encoding action–outcome and not S–R associations—see Figure 1b. However, another possibility that cannot be ruled out on the basis of the Valentin et al (2007) study alone is that this region may instead contain a representation of the discriminative stimulus (in this case the fractals), used to signal the different available actions, and that the learned associations in this region may be formed between these stimuli and the outcomes obtained. In other words, the design of the Valentin et al (2007) study does not allow us to rule out a possible contribution of vmPFC to purely Pavlovian stimulus-driven valuation signals (see section ‘The corticolimbic-ventral striatal network and the motivation of decision making' for further discussion of this point).

Direct evidence of a role for vmPFC in encoding action-related value signals was provided by Gläscher et al (2009). In this study, the possible contribution of discriminative stimuli in driving expected-reward signals in vmPFC was probed using a specific design in which, in an ‘action-based' condition, subjects had to choose between performing one of two different physical motor responses (rolling a trackerball vs pressing a button) in the absence of explicit discriminative stimuli signaling those actions. Subjects were given monetary rewards on a probabilistic basis according to their choice of the different physical actions, and the rewards available on the different actions changed over time. Similar to the results found in studies where both discriminative stimulus information and action-selection components are present, in this task, activity in vmPFC was found to track the expected reward corresponding to the chosen action. These results suggest that activity in vmPFC does not necessarily depend on the presence of discriminative stimuli, indicating that this region contributes to encoding of action-related value signals above and beyond any contribution this region might make to encode stimulus-related value signals.

Further evidence that human vmPFC has a role in goal-directed learning, and in encoding action–outcome-based value signals specifically, has come from a study by Tanaka et al (2008)—see Figure 1c. In this study, rather than using outcome devaluation, areas exhibiting sensitivity to the contingency between actions and outcomes were assessed. As described earlier, sensitivity to action–outcome contingency is another key feature besides sensitivity to changes in outcome value that distinguishes goal-directed learning from its habitual counterpart. To study this process in humans, Tanaka et al (2008) abandoned the traditional trial-based approach typically used in experiments using humans and nonhuman primates, in which subjects are cued to respond at particular times in a trial, for the unsignaled, self-paced approach more often used in studies of associative learning in rodents in which subjects themselves choose when to respond. Subjects were scanned with fMRI while, in different sessions, they responded on four different free operant reinforcement schedules which varied in the degree of contingency between responses shown and rewards obtained. Consistent with the findings from outcome devaluation (Valentin et al, 2007), activity in two subregions of vmPFC (medial OFC and medial prefrontal cortex) as well as one of the target areas of these structures in the human striatum, the anterior caudate nucleus (Haber et al, 2006; Öngür and Price, 2000), was elevated on average across a session when subjects were performing on a high-contingency schedule compared with when they were performing on a low-contingency schedule—see Figure 1e.
Moreover, in the subregion of vmPFC identified on the medial wall, activity was found to vary not only with the overall contingency averaged across a schedule, but also with a locally computed estimate of the contingency between action and outcome that tracks rapid changes in contingency over time within a session, implicating this specific subregion of medial prefrontal cortex in the on-line computation of contingency between actions and outcomes. Finally, activation of medial prefrontal cortex also tracked a measure of subjective contingency; ie, the ratings of the subjects regarding the causal efficacy of their actions. This rating, taken after each trial block, positively correlated (approximately 0.6) with measures of objective contingency, suggesting that the medial vmPFC-caudate network may interact directly with medial prefrontal cortex to influence causal knowledge.

Neural Substrates of Habit Learning

The finding that medial prefrontal cortex and its striatal efferents contribute to goal-directed learning in both rats and humans raises the question as to where in the corticostriatal network habitual S–R learning processes are implemented. Considerable earlier, although behaviorally indirect, evidence from studies using tasks that are nominally procedural and could potentially involve S–R learning (largely simple skill learning in humans or maze learning in rats) has implicated a region of dorsal striatum lateral to the caudate nucleus—referred to as dorsolateral striatum in rat or putamen in primates—in S–R encoding (see Figure 2a). More direct evidence, based on the criteria described in section ‘Multiple sources of action control in rodents and humans: goals and habits,' was provided in a study by Yin et al (2004). Rats with lesions to a region of dorsolateral striatum were found to remain goal-directed even after extensive training which, in sham-lesioned controls, led to clear habitization; ie, whereas actions in lesioned rats remained sensitive to outcome devaluation, those of sham controls did not. This increased sensitivity to the consequences of actions was observed both with outcome devaluation and contingency degradation procedures; in the latter case, overtrained rats were unable to adjust their performance of an action when responding caused the omission of reward delivery, whereas inactivation of dorsolateral striatum rendered rats sensitive to this omission contingency (Yin et al, 2006). This finding suggests that this region of dorsolateral striatum has a critical role in the habitual control of behavior in rodents—see Figure 1d.

To establish whether a similar area of striatum also contributes to such a process in humans, Tricomi et al (2009) scanned subjects with fMRI while they performed on a variable interval schedule for food rewards in which one group of subjects was overtrained to induce behavioral habitization. In the group that was given this procedure, activity in a region of lateral striatum (caudo-ventral putamen) was found to show an increased activation on the third day of training when an outcome devaluation test revealed subjects' responding to be habitual, compared with the first day of training when responding in undertrained subjects was shown to be goal-directed—see Figure 1f. These findings provide evidence to suggest that this region of posterolateral putamen in humans may correspond functionally to the area of striatum found to be critical for habitual control in rodents. Additional hints of a role for human caudoventral striatum in habitual control can be gleaned from fMRI studies of ‘procedural' sequence learning (Jueptner et al, 1997a; Lehéricy et al, 2005). Such studies have reported a transfer of activity within striatum from the anterior striatum to posterior striatum as a function of training. Although these earlier studies did not formally assess whether behavior was habitual by the time that activity in posterolateral striatum had emerged, these studies did show that, by this time, sequence generation was insensitive to dual-task interference, a behavioral manipulation potentially consistent with habitization.

Summary

The evidence reviewed in this section, summarized in Figure 1, suggests that there is considerable overlap in the corticostriatal circuitry that mediates goal-directed and habitual actions in humans and rodents. Rodent studies have implicated prelimbic cortex and its striatal efferents on dorsomedial striatum as a key circuit responsible for goal-directed learning. In a series of fMRI studies, vmPFC has been found to be involved in encoding reward predictions based on goal-directed action–outcome associations in humans, suggesting that this region of cortex in the primate prefrontal cortex is a likely functional homolog of prelimbic cortex in the rat. Furthermore, the area of anterior caudate nucleus found in humans to be modulated by contingency would seem to be a candidate homolog for the region of dorsomedial striatum implicated in goal-directed control in the rat. Finally, the evidence reviewed here supports the suggestion that a region of dorsolateral striatum in rodents and of the putamen in humans is involved in the habitual control of behavior, which when taken together with the findings on goal-directed learning reviewed previously, provides converging evidence that the neural substrates of these two systems for behavioral control are relatively conserved across mammalian species.

THE CORTICOLIMBIC-VENTRAL STRIATAL NETWORK AND THE MOTIVATION OF DECISION MAKING

The findings described above provide evidence of distinct sources of action control governed by R–O and S–R learning processes. Given the differences in associative structure and in the neural systems controlling these types of learning, it should not be surprising to discover that they are also governed by different motivational processes. Generally, these processes can be differentiated into the influence of the encoded reward-value of the outcome of an action, derived from direct consummatory experience, and the influence of Pavlovian predictors of reward. Experienced reward determines the performance of goal-directed actions and, hence, reflects the control of actions by outcome values. Where there is a significant degree of stimulus control over action selection, however, it is possible to observe the influence of Pavlovian stimuli associated with reward on performance; ie, stimuli that predict rewarding events can enhance the influence of action selection resulting in an increased tendency to perform selected actions and with increased vigor. As such, this motivational influence is referred to here as that based on Pavlovian values.

Neural Basis of Outcome Values

It is now well established that the reward processes that establish outcome values depend on the ability of animals to evaluate the affective and motivationally relevant properties of the goal or outcome of goal-directed actions (Balleine, 2001, 2004; Dickinson and Balleine, 1994, 2002). It is one of the more striking properties of goal-directed actions that performance is determined by the experienced reward value of the outcomes associated with actions and, unlike habits or other reflexes, is not directly affected by shifts in primary motivation (Dickinson and Balleine, 1994). For example, rodents do not immediately alter their choice of an action associated with a more (or less) calorific outcome when deprivation is increased (or decreased) and do so only after they have experienced the outcome value in that new deprivation state (Balleine, 1992; Balleine et al, 1995; Balleine and Dickinson, 1994). The influence of this form of reward or incentive learning on goal-directed actions has been reported following wide-ranging shifts in motivational state induced by: (i) specific satiety-induced outcome devaluation (Balleine and Dickinson, 1998b); (ii) taste aversion-induced outcome devaluation (Balleine and Dickinson, 1991); (iii) shifts from water deprivation to satiety (Lopez et al, 1992); (iv) changes in outcome value mediated by drug states and withdrawal (Balleine et al, 1994; Balleine et al, 1995; Hellemans et al, 2006; Hutcheson et al, 2001); (v) changes in the value of thermoregulatory reward (Hendersen and Graham, 1979); and (vi) sexual reward (Everitt and Stacey, 1987; Woodson and Balleine, 2002) (see Balleine, 2001; Balleine, 2004; Balleine, 2005; Dickinson and Balleine, 2002 for reviews).

Current theories suggest, therefore, that outcome values are established by associating the specific sensory features of outcomes with emotional feedback (Balleine, 2001; Dickinson and Balleine, 2002). Given these theories, one might anticipate that neural structures implicated in associations of this kind would have a critical role in goal-directed action. The amygdala, particularly its basolateral region (BLA), has long been argued to mediate sensory-emotional association, and recent research has established the involvement of this area in goal-directed action in rodents. The BLA has itself been heavily implicated in a variety of learning paradigms that have an incentive component (Balleine and Killcross, 2006); for example, this structure has long been thought to be critical for fear conditioning and has recently been reported to be involved in a variety of feeding-related effects, including sensory-specific satiety (Malkova et al, 1997), the control of food-related actions (see below), and in food consumption elicited by stimuli associated with food delivery (Holland et al, 2002; Petrovich et al, 2002). And, indeed, in several recent series of experiments clear evidence has emerged for the involvement of BLA in incentive learning. In one series, we found that lesions of the BLA rendered the instrumental performance of rats insensitive to outcome devaluation, apparently because they were no longer able to associate the sensory features of the instrumental outcome with its incentive value (Balleine et al, 2003; Corbit and Balleine, 2005). This suggestion was confirmed using post-training infusions of the protein-synthesis inhibitor, anisomycin, after exposure to an outcome after a shift in primary motivation. In this study, evidence was found to suggest that the anisomycin infusion blocked both the consolidation and the reconsolidation of the stimulus–affect association underlying incentive learning (Wang et al, 2005).
More recently, we have found direct evidence for the involvement of an opioid receptor-related process in the basolateral amygdala in encoding outcome value based on sensory–affect association. In this study, infusion of naloxone into the BLA blocked the assignment of an increase in the value of a sugar solution when it was consumed in an increased state of food deprivation without affecting palatability reactions to the sucrose. Naloxone infused into the ventral pallidum or accumbens shell had the opposite effect, reducing palatability reactions without affecting outcome value (Wassum et al, 2009).

With respect to the encoding of outcome values, the effects of amygdala manipulations on feeding have been found to involve connections between the amygdala and the hypothalamus (Petrovich et al, 2002) and, indeed, it has been reported that neuronal activity in the hypothalamus is primarily modulated by chemical signals associated with food deprivation and food ingestion, including various macronutrients (Levin, 1999; Seeley et al, 1996; Wang et al, 2004; Woods et al, 2000). Conversely, through its connections with visceral brain stem, midline thalamic nuclei, and associated cortical areas, the hypothalamus is itself in a position to modulate motivational and nascent affective inputs into the amygdala. Together with the findings described above, these inputs, when combined with the amygdala's sensory afferents, provide the basis for a simple feedback circuit linking this sensory information with motivation/affective feedback to determine outcome value. Nevertheless, it is not clear from this structural perspective how changes in outcome value encoded on the basis of this feedback act to influence the selection and initiation of specific courses of action.

The BLA projects to a variety of structures in the cortico-basal ganglia network implicated in the control of goal-directed action, such as the prelimbic cortex, mediodorsal thalamus, and dorsomedial striatum (see Figure 2b). However, the roles of the BLA and these associated regions in action control differ in important ways (Ostlund and Balleine, 2005; Ostlund and Balleine, 2008), raising the question of how the outcome values established in the BLA make contact with the basal ganglia network critical for goal-directed motor control. Evidence suggests that BLA activity is necessary for encoding outcome values in insular cortex, particularly its gustatory region (Balleine and Dickinson, 2000), and that these values are then distributed to regions of prefrontal cortex and striatum to control action (Condé et al, 1995; Rodgers et al, 2008). With regard to the latter, insular cortex projects to the ventral striatum, particularly to the core of the nucleus accumbens (NACco) (Brog et al, 1993) and lesions of the NACco have been found (i) to impair instrumental performance (Balleine and Killcross, 1994) and, in contrast to more medial regions, such as the shell and central pole (de Borchgrave et al, 2002), (ii) to reduce sensitivity to outcome devaluation (Corbit et al, 2001). However, these lesions have no effect on the sensitivity of rats to the degradation of the instrumental contingency (Corbit et al, 2001), suggesting that the NACco influences goal-directed performance, but does not affect goal-directed learning. Generally, therefore, the NACco, as a component of the limbic cortico-basal ganglia network, appears to mediate the ability of the incentive value of rewards to affect instrumental performance, but does not have a direct role in action–outcome learning per se (Balleine and Killcross, 1994; Cardinal et al, 2002; Corbit et al, 2001; de Borchgrave et al, 2002; Parkinson et al, 2000).
Thus, as has long been argued, the NACco appears to have a central role in the translation of motivation into action by bringing changes in outcome value to bear on performance consistent with its description as the limbic–motor interface (Mogenson et al, 1980).

In humans, the evidence on the role of the amygdala in outcome valuation is somewhat ambiguous, although broadly compatible with the aforementioned evidence from the rodent literature. Although some studies have reported amygdala activation in response to the receipt of rewarding outcomes, such as pleasant tastes or monetary reward (Elliott et al, 2003; O'Doherty et al, 2003; O'Doherty et al, 2001a; O'Doherty et al, 2001b), other studies have suggested that the amygdala is more sensitive to the intensity of a stimulus than to its value (Anderson et al, 2003; Small et al, 2003), as the amygdala responds equally to positively and negatively valenced stimuli matched for intensity. These latter findings could suggest a more general role for the amygdala in arousal rather than valuation per se, although, alternatively, the findings are also compatible with the possibility that both positive and negative outcome valuation signals are present in the amygdala (correlating positively and negatively with outcome values, respectively), and that such signals are spatially intermixed at the single neuron level (Paton et al, 2006). Indeed, in a follow-up fMRI study by Winston et al (2005), BOLD responses in amygdala were found to be driven best by an interaction between valence and intensity (that is, by stimuli of high intensity and high valence), rather than by one or other dimension alone, suggesting a role for this region in the overall value assigned to an outcome, which would be a product of its intensity (or magnitude) and its valence.

Even clearer evidence for the presence of outcome valuation signals has been found in human vmPFC (particularly in medial orbitofrontal cortex) and adjacent central orbitofrontal cortex. Specifically, activity in medial orbitofrontal cortex correlates with the magnitude of monetary outcome received (O'Doherty et al, 2001a), and medial along with central orbitofrontal cortex correlates with the pleasantness of the flavor or odor of a food stimulus (Kringelbach et al, 2003; Rolls et al, 2003). Furthermore, activity in these regions decreases as the hedonic value of that stimulus decreases as subjects are sated on it (Kringelbach et al, 2003; O'Doherty et al, 2000; Small et al, 2003). De Araujo et al (2003) found that activity in caudal orbitofrontal cortex correlated with the subjective pleasantness of water in thirsty subjects, and, moreover, that insular cortex was active during the receipt of water when subjects were thirsty compared with when they were sated, suggesting the additional possible involvement of at least a part of insular cortex in some features of outcome valuation in humans. Further evidence of a role for medial orbitofrontal cortex in encoding the values of goals has come from a study by Plassmann et al (2007) who used an economic auction mechanism to elicit subjects' subjective monetary valuations for different goal objects, which were pictures of food items one of which subjects would later have the opportunity to consume depending on their assigned valuations. Activity in medial orbitofrontal cortex was found to correlate with subjective valuations for the different food items. 
Although in this case such value signals are present at the time of choice and hence reflect ‘goal-values' rather than outcome values, these findings are consistent with a contribution of this region to encoding the value of goals even if those goals are not currently being experienced (as would be required for a region linking action and outcome representations at the time of choice).

Neural Basis of Pavlovian Values

In contrast to goal-directed actions, responses controlled by S–R learning are directly affected by shifts in primary motivation. For example, Dickinson et al (1995) found that, when relatively undertrained to lever press for food reward, rats only altered lever-press performance when sated after they had experienced the change in outcome value in that state. In contrast, when overtrained, their performance was reduced directly by satiety suggesting that the vigor of habitual actions is more dependent on the activational effects of motivational state than undertrained actions. In addition, Holland (2004) found that, as overtrained actions became less sensitive to changes in outcome value, they become increasingly sensitive to the effects of Pavlovian reward-related stimuli on response vigor. This dissociation suggests that the influence of outcome values and of Pavlovian values provide distinct sources of motivation for the performance of actions, the former controlling the performance of goal-directed actions and the latter actions that are under significant stimulus control, whether they have simply been trained under discriminative stimuli or have been overtrained and become habitual. Two other behavioral observations support this argument. First, we have found that, when actions are performed in a chain, performance of the most distal action is controlled by the outcome value and not by Pavlovian values, whereas the most proximal action is motivated primarily by Pavlovian values and not by outcome values (Corbit and Balleine, 2003a). Second, Rescorla (1994)—and later Holland (2004)—found that the influence of a Pavlovian stimulus on lever-press responding was not affected by earlier devaluation of the outcome associated with that stimulus (see also Balleine and Ostlund, 2007d). 
Hence, reward-related stimuli affect the performance of instrumental actions irrespective of the value of the outcome that the stimulus predicts, an effect consistent with the argument that Pavlovian values exert their effects on actions through stimulus, as opposed to outcome, control. Indeed, this same insensitivity to outcome devaluation has been reported for actions that are acquired and maintained not by primary reward but by stimuli previously associated with reward; ie, so called conditioned reinforcers. Thus, lever pressing trained with a conditioned reinforcer is maintained even when the outcome associated with the stimulus earned by the action has been devalued (Parkinson et al, 2005).

The observation by Rescorla (1994) was particularly important because it provided a very clear demonstration of the fact that not only the general excitatory influence but also very specific effects of reward-related stimuli on action selection are unaffected by outcome devaluation. This latter, specific effect of stimuli on action selection (an effect referred to as outcome-specific Pavlovian-instrumental transfer, or simply specific transfer) is demonstrated when two actions trained with different outcomes are performed in the presence of a stimulus paired with one or other of those outcomes; in this situation only the action that earned the same outcome as the stimulus is influenced by that cue. It is also important to note here that we have recently established evidence of a comparable effect in human subjects. In a similar manner to findings in rats, a Pavlovian stimulus associated with a specific outcome (eg, orange juice, chocolate milk, or Coca-Cola) only elevated the performance of actions that earned the outcome predicted by that stimulus and did not affect actions trained with other outcomes (Bray et al, 2008).

There are two important aspects of the neural bases of Pavlovian values to consider relating to (1) the neural bases of predictive learning generally and (2) the neural processes through which predictive learning influences decision making. These are illustrated in Figure 2a and b.

With regard to the former, using manipulations of the Pavlovian stimulus–outcome contingency, Ostlund and Balleine (2007b) found that the orbitofrontal cortex in rats has an important role in establishing the predictive validity of Pavlovian cues with regard to specific outcomes. Thus, in control rats, although conditioned responding to a cue declined when its outcome was also delivered unpaired with that cue, responding to a stimulus predicting a different outcome was unaffected. In contrast, rats with lesions of lateral orbitofrontal cortex reduced responding to both stimuli, suggesting that these animals were unable to discriminate the relative predictive status of cues with respect to their specific outcomes. Likewise, single-unit recording studies in both rodents and nonhuman primates have implicated neurons in a network of brain regions including the orbitofrontal cortex, and its striatal target area, the ventral striatum, notably the accumbens shell (Fudge and Emiliano, 2003; Haber, 2003), in encoding stimulus–reward associations. For example, Schoenbaum et al (1998) used a go/no-go reversal task in rats in which, on each trial, one of two different odor cues signaled whether or not a subsequent nose poke by the rat in a food well would result in the delivery of an appetitive sucrose solution or an aversive quinine solution. Neurons in the orbitofrontal cortex were found to discriminate between cues associated with the positive and negative outcomes, and some were also found to show an anticipatory response related to the expected outcome after the animal had placed its head in the food well, but immediately before the outcome was delivered.
Cue-related anticipatory responses have also been found in orbitofrontal cortex relating to the behavioral preference of monkeys for a predicted outcome; ie, the responses of the neurons to the cue paired with a particular outcome depend on the relative preference of the monkey for that outcome compared with another outcome presented in the same block of trials (Tremblay and Schultz, 1999).

Furthermore, bilateral lesions of orbitofrontal cortex, or crossed unilateral lesions of the orbitofrontal cortex in one hemisphere with the amygdala (a region connecting strongly to both the orbitofrontal cortex and the ventral striatum (Alheid, 2003; Ongür and Price, 2000)) in the other hemisphere, result in impairments in the modulation of conditioned Pavlovian responses following changes in the value of the associated outcome induced by an outcome devaluation procedure in both rats and monkeys (Baxter et al, 2000; Hatfield et al, 1996; Malkova et al, 1997; Ostlund and Balleine, 2007b; Pickens et al, 2003). Perhaps unsurprisingly given the connectivity between OFC and ventral striatum, the activity of some neurons in the latter region has, similar to that of neurons in the orbitofrontal cortex, been found to reflect expected reward in relation to the onset of a stimulus presentation, and to track progression through a task sequence ultimately leading to reward (Cromwell and Schultz, 2003; Day et al, 2006; Shidara et al, 1998). Some studies report that lesions of a part of the ventral striatum, the nucleus accumbens core, can impair learning or expression of Pavlovian approach behavior (Parkinson et al, 1999), or that infusion of a dopaminergic agonist into accumbens can also impair such learning (Parkinson et al, 2002), suggesting an important role for ventral striatum in facilitating the production of at least some classes of Pavlovian conditioned associations.

Consistent with these findings in rodents and nonhuman primates, functional neuroimaging studies in humans have also revealed activations in both of these areas during both appetitive and aversive Pavlovian conditioning in response to Pavlovian cue presentation. For example, Gottfried et al (2002) reported activity in orbitofrontal cortex and ventral striatum (as well as the amygdala) following presentation of visual stimuli predictive of the subsequent delivery of both a pleasant and an unpleasant odor. Gottfried et al (2003) aimed at investigating the nature of such predictive representations to establish whether these responses were related to the sensory properties of the unconditioned stimulus irrespective of its underlying reward value, or whether they were directly related to the reward value of the associated unconditioned stimulus. To address this, Gottfried et al (2003) trained subjects to associate visual stimuli with one of two food odors, vanilla and peanut butter, while being scanned with fMRI. Subjects were then removed from the scanner and fed to satiety on a food corresponding to one of the food odors to devalue that odor, similar to the devaluation procedures described earlier during studies of instrumental action selection. Following the devaluation procedure, subjects were then placed back in the scanner and presented with the conditioned stimuli again in a further conditioning session. Neural responses to presentation of the stimulus paired with the devalued odor were found to decrease in orbitofrontal cortex and ventral striatum (and amygdala) from before to after the satiation procedure, whereas no such decrease was evident for the stimulus paired with the non-devalued odor. These results suggest that the orbitofrontal cortex and ventral striatum encode the value of the event predicted by a stimulus and not merely its sensory features.

Learning Pavlovian Values

The finding of expected value signals in the brain raises the question of how such signals are learned in the first place. An influential theory by Rescorla and Wagner (1972) suggests that the learning of Pavlovian predictions is mediated by the degree of surprise engendered when an outcome is presented, or more precisely the difference between what is expected and what is received. Formally this is called a prediction error, which in the Rescorla–Wagner formulation can take on either a positive or negative sign depending on whether an outcome is greater than expected (which would lead to a positive error signal), or less than expected (which would lead to a negative error). This prediction error is then used to update predictions associated with a particular stimulus or cue in the environment, so that if this cue always precedes, for example, a reward (and hence is fully predictive of reward) eventually the expected value of the cue will converge to the value of the reward, at which point the prediction error is zero and no further learning will take place.
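The update rule described above can be illustrated with a minimal sketch. It assumes a single cue, a fixed learning rate (here called alpha, with an arbitrary value of 0.2), and a reward of magnitude 1; the function name and all parameter values are illustrative assumptions and are not taken from any of the studies reviewed.

```python
def rescorla_wagner(rewards, alpha=0.2, v0=0.0):
    """Return per-trial expected values and prediction errors for one cue.

    alpha (learning rate) and v0 (initial expectation) are illustrative
    assumptions, not parameters drawn from the studies discussed here.
    """
    v = v0
    values, errors = [], []
    for r in rewards:
        delta = r - v          # prediction error: received minus expected
        values.append(v)       # expectation held before the outcome
        errors.append(delta)
        v += alpha * delta     # move the expectation toward the outcome
    return values, errors


# A cue that is always followed by reward (magnitude 1.0).
values, errors = rescorla_wagner([1.0] * 50)

# Omitting the reward once the cue is well learned gives a negative error.
_, omission_errors = rescorla_wagner([0.0], v0=values[-1])
```

Under these assumptions the error is largest on the first trial and shrinks toward zero as the expected value approaches the reward value, whereas the unexpected omission produces an error of negative sign.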

Initial evidence for prediction error signals in the brain emerged from the work of Wolfram Schultz and colleagues who observed such signals by recording from the phasic activity of dopamine neurons in awake behaving nonhuman primates undergoing simple Pavlovian or instrumental conditioning tasks with reward (Hollerman and Schultz, 1998; Mirenowicz and Schultz, 1994; Schultz, 1998; Schultz et al, 1997). These neurons, particularly those present in the ventral tegmental area in the midbrain, project strongly to the ventral corticostriatal circuit, including the ventral striatum and orbitofrontal cortex (Oades and Halliday, 1987). The response profile of these dopamine neurons closely resembles a specific form of prediction error derived from the temporal difference learning rule, in which predictions of future reward are computed at each time interval within a trial, and the error signal is generated by computing the difference in successive predictions (Montague et al, 1996; Schultz et al, 1997). Similar to the temporal difference prediction error signal, these neurons increase their firing when a reward is presented unexpectedly; decrease their firing from baseline when a reward is unexpectedly omitted; and respond initially at the time of the US before learning is established but shift back in time within a trial to respond instead at the time of presentation of the CS once learning has taken place. Further evidence in support of this hypothesis has come from recent studies using fast-scan cyclic voltammetry assays of dopamine release in the ventral striatum during Pavlovian reward conditioning, in which the timing of dopamine release in ventral striatum was also found to exhibit a shifting profile, occurring initially at the time of reward presentation but gradually shifting back to occur at the time of presentation of the reward-predicting cue (Day and Carelli, 2007).
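The three signatures listed above can be reproduced in a small simulation. What follows is only a sketch of one common textbook form of temporal difference learning, TD(0), with one value weight per time step since CS onset; it is not the specific model of Montague et al (1996) or Schultz et al (1997). The trial length, CS and US times, learning rate, and discount factor are all arbitrary assumptions, and time steps before CS onset are assumed to carry no prediction because the CS arrives unpredictably.

```python
def td_trial(w, cs, us, n_steps, alpha=0.3, gamma=1.0, reward=1.0):
    """Run one trial of TD(0), updating w in place.

    w[k] is the predicted future reward k steps after CS onset.
    Returns the prediction error at every time step of the trial.
    All parameter values are illustrative assumptions.
    """
    def value(t):
        # No prediction is available before the CS appears.
        return w[t - cs] if t >= cs else 0.0

    deltas = [0.0] * n_steps
    for t in range(1, n_steps):
        r = reward if t == us else 0.0
        # Error = reward now + discounted new prediction - previous prediction.
        delta = r + gamma * value(t) - value(t - 1)
        deltas[t] = delta
        if t - 1 >= cs:
            w[t - 1 - cs] += alpha * delta
    return deltas


CS, US, N_STEPS = 2, 6, 10
weights = [0.0] * (N_STEPS - CS)

first_trial = td_trial(weights, CS, US, N_STEPS)      # before learning
for _ in range(500):
    late_trial = td_trial(weights, CS, US, N_STEPS)   # after learning
# Reward omitted on a copy of the learned weights.
omission_trial = td_trial(list(weights), CS, US, N_STEPS, reward=0.0)
```

In this toy setting the error on the first trial occurs at the time of the US, after training it occurs at the time of the CS with essentially none at the US, and omitting the reward after training yields a negative error at the time the US was expected.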

To test for evidence of a temporal difference prediction error signal in the human brain, O'Doherty et al (2003) scanned human subjects while they underwent a classical conditioning paradigm in which associations were learned between arbitrary visual fractal stimuli and a pleasant sweet taste reward (glucose). The specific trial history that each subject experienced was next fed into a temporal difference model to generate a time series that specified the model-predicted prediction error signal that was then regressed against the fMRI data for each individual subject to identify brain regions correlating with the model-predicted time series. This analysis revealed significant correlations with the model-based predictions in a number of brain regions, most notably in the ventral striatum (ventral putamen bilaterally) as well as weaker correlations with this signal in orbitofrontal cortex. Consistent with these findings, numerous other studies have found evidence implicating ventral striatum in encoding prediction errors (Abler et al, 2006; McClure et al, 2003; O'Doherty et al, 2004). In a recent study by Hare et al (2008), the role of ventral striatum and orbitofrontal cortex in encoding prediction errors for free rewards was tested against a number of other types of valuation-related signals while subjects performed a simple decision-making task. Activity in ventral striatum was found to correlate specifically with reward prediction errors, whereas activity in medial orbitofrontal cortex was found to correspond more to the valuation of the goal of the decision, perhaps consistent with the aforementioned role for this region in goal-directed learning.
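The logic of this kind of model-based analysis can be sketched in a few lines. The example below is a deliberately simplified illustration and not the pipeline used in the studies cited: it uses a trial-level Rescorla–Wagner error in place of a full temporal difference model, omits hemodynamic convolution and all fMRI preprocessing, and regresses the model-derived error against a synthetic signal generated purely for demonstration. The trial history, learning rate, noise level, and the slope and intercept used to build the synthetic signal are all arbitrary assumptions.

```python
import random


def prediction_errors(rewards, alpha=0.2):
    """Trial-by-trial prediction errors from a simple delta rule (assumed model)."""
    v, errors = 0.0, []
    for r in rewards:
        delta = r - v
        errors.append(delta)
        v += alpha * delta
    return errors


def ols_fit(x, y):
    """Ordinary least squares for a single regressor; returns (slope, intercept)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical trial history: 1.0 = reward delivered, 0.0 = reward omitted.
trial_history = [1.0, 1.0, 0.0, 1.0, 1.0, 1.0, 0.0, 1.0] * 5
regressor = prediction_errors(trial_history)

# Synthetic "regional signal" that scales with the error plus noise.
# This is made-up demonstration data, not real imaging data.
rng = random.Random(0)
signal = [0.5 + 2.0 * pe + rng.gauss(0.0, 0.1) for pe in regressor]

slope, intercept = ols_fit(regressor, signal)
```

A reliably positive slope in a given region is what such an analysis treats as evidence that activity there covaries with the model-predicted prediction error.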

The studies discussed above demonstrate that prediction error signals are present during learning of Pavlovian stimulus–reward associations, a finding consistent with the tenets of a prediction error-based account of associative learning. However, merely demonstrating the presence of such signals in either dopamine neurons or in target areas of these neurons, such as the striatum, during learning does not establish whether these signals are causally related to learning or merely an epiphenomenon. The first study in humans aiming to uncover a causal link was that of Pessiglione et al (2006) who manipulated systemic dopamine levels by delivering a dopamine agonist and antagonist while subjects were being scanned with fMRI during performance of a reward-learning task. Prediction error signals in striatum were boosted following administration of the dopaminergic agonist, and diminished following administration of the dopaminergic antagonist. Moreover, behavioral performance followed the changes in striatal activity and was increased following administration of the dopamine agonist and decreased following administration of the antagonist. These findings support, therefore, a causal link between prediction error activity in striatum and the degree of Pavlovian conditioning.

The Influence of Pavlovian Values on Decision Making

Over and above the role of ventral striatum in acquiring Pavlovian predictions, this region has also been implicated in the way Pavlovian values alter choice, particularly in studies of Pavlovian-instrumental transfer. In both rodents and humans, however, this influence appears not to involve the accumbens core directly but the surrounding accumbens shell/ventral putamen region of ventral striatum. In rats, considerable evidence suggests that the amygdala has a role not only in Pavlovian conditioning proper (Ostlund and Balleine, 2008) but also in the way that Pavlovian cues influence the vigor of responses, involving the central nucleus of the amygdala (Hall et al, 2001; Holland and Gallagher, 2003), and in the way that these cues influence choice, the latter dependent on basolateral amygdala. In one study, Corbit and Balleine (2005) doubly dissociated the influence of cues on choice and on response vigor; lesions of amygdala central nucleus abolished the increase in vigor induced by these cues without affecting their biasing influence on choice. In contrast, lesions of basolateral amygdala abolished the effect on choice without affecting the increase in vigor. In humans, evidence has emerged linking the degree of activity in the right amygdala across subjects with the extent to which a Pavlovian conditioned cue modulates the vigor of responding (Talmi et al, 2008). However, as yet, no study has assessed the functions of different amygdalar subnuclei on the motivational control of behavior as has been carried out in rodents.

Circuitry involving amygdala has recently been implicated in these effects of Pavlovian values on choice. The basolateral amygdala projects to mediodorsal thalamus (Reardon and Mitrofanis, 2000), to orbitofrontal cortex, and to both the core and shell regions of the nucleus accumbens (Alheid, 2003) and, in fact, lesions of each of these regions appear to influence the outcome-specific form of Pavlovian-instrumental transfer (Ostlund and Balleine, 2005; Ostlund and Balleine, 2007b; Ostlund and Balleine, 2008) in subtly different ways. Lesions of mediodorsal thalamus and of orbitofrontal cortex have similar effects. When rats are trained on two actions for different outcomes, a stimulus associated with one of the outcomes is usually observed to bias choice and to elevate only the action trained with the outcome predicted by that stimulus. In lesioned rats, however, the stimuli were found to elevate performance indiscriminately; ie, lesioned rats increased their performance of both actions equally (Ostlund and Balleine, 2007b; Ostlund and Balleine, 2008). In contrast, lesions of ventral striatum have a very different effect. Although lesions of the accumbens core have been reported to affect the vigor of responses in the presence of Pavlovian cues, Corbit et al (2001) found that these lesions had no effect on the biasing effects of these cues on choice performance. Thus, rats with lesions of the accumbens core that were trained on two actions for different outcomes were just as sensitive as sham-lesioned rats to the effects of a stimulus associated with one of the two outcomes; ie, similar to shams, they increased responding of the action that in training earned the outcome predicted by the stimulus and did not alter performance of the other action. In contrast, lesions of the accumbens shell completely abolished outcome-selective Pavlovian-instrumental transfer. Although other evidence suggested that the rats learned both the Pavlovian S–O and instrumental R–O relationships to which they were exposed, the Pavlovian values predicted by the stimuli had no effect either on the vigor of responding or on their choice between actions.

In humans, Talmi et al (2008) reported that BOLD activity in the central nucleus accumbens (perhaps analogous to the core region in rodents) was engaged when subjects were presented with a reward-predicting Pavlovian cue while performing an instrumental response, which led to an increase in the vigor of responding, consistent with the effects of general Pavlovian-instrumental transfer. In a study of outcome-specific Pavlovian-instrumental transfer in humans using fMRI, Bray et al (2008) trained subjects on instrumental actions, each leading to one of four unique outcomes. In a separate, earlier Pavlovian training session, subjects were trained to associate different visual stimuli with the subsequent delivery of one of these outcomes. Specific transfer was then assessed by inviting subjects to choose between pairs of instrumental actions that, in training, were associated with the different outcomes, in the presence of a Pavlovian visual cue that predicted one of those outcomes. Consistent with the effects of specific transfer, subjects were biased in their choice toward the action leading to the outcome predicted by the Pavlovian stimulus. In contrast to the region of accumbens activated in the general transfer design of Talmi et al (2008), specific transfer produced BOLD activity in a region of ventrolateral putamen: this region was less active in trials in which subjects chose the action incompatible with the Pavlovian cue compared with trials in which they chose the compatible action, or indeed with other trials in which a Pavlovian stimulus paired with neither outcome was presented. These findings could suggest a role for this ventrolateral putamen region in linking specific outcome–response associations with Pavlovian cues and suggest that, on occasions when an incompatible action is chosen, activity in this region may be inhibited. Given the role of this more lateral aspect of the ventral part of the striatum in humans in specific Pavlovian-instrumental transfer, it might be tempting to draw parallels between the functions of this area in humans and those of the shell of the accumbens implicated in specific transfer in rodents. For the moment, such suggestions must remain speculative until more fine-grained studies of this effect are conducted in humans, perhaps making use of higher resolution imaging protocols to better differentiate between different ventral striatal (and indeed amygdala) subregions.

THE INTEGRATION OF ACTION CONTROL AND MOTIVATION IN DECISION MAKING

How learning and motivational processes are integrated to guide performance is a critical issue that has received relatively little attention to date. Some speculative theories have been advanced within the behavioral, computational, and neuroscience literatures, although there is not at present a significant body of experimental results to decide how this integration is achieved.

Behavioral Studies

Although the fact that both the outcome itself and stimuli that predict that outcome can exert a motivational influence on performance suggests that these processes exert a complementary influence on action selection, the growing behavioral and neural evidence that these sources of motivation are independent, reviewed above, suggests that the behavioral complementarity of these processes is achieved through an integrative network rather than through a common representational or associative process. This kind of conclusion has in fact long been the source of a number of two-process theories of instrumental conditioning that have been advanced in the behavioral literature. These positions have typically argued for the integration of some form of Pavlovian and instrumental learning process. Although this is not the place to review the variety of theories advanced along these lines (see Balleine and Ostlund, 2007d), the evidence reviewed above, arguing for distinct learning and motivational processes mediating goal-directed and habitual actions, suggests that this complementarity may be based on integration across the S–R and R–O domains in the course of normal performance. Although this may be achieved in a variety of ways, some recent evidence suggests that it is accomplished through a distributed outcome representation involving, on the one hand, the outcome as a goal and, on the other, the outcome as a stimulus with which actions can become associated. That outcomes might have this kind of dual role is particularly likely in self-paced situations where actions and outcomes occur intermittently, sometimes preceding, sometimes following one another over time. Thus, in experiments in which food outcomes are used both as discriminative stimuli and as goals, the delivery of the food can be arranged such that it selects (always precedes) one action and independently serves as an outcome of (always follows) a different action. Devaluation of that outcome was found to influence the action with which it was associated as a goal but did not affect the selection of the action for which the outcome served as a discriminative stimulus (De Wit et al, 2006; Dickinson and de Wit, 2003). This kind of finding encourages the view that, in the ordinary course of events, stimuli and goals exert complementary control over action selection and initiation, respectively.

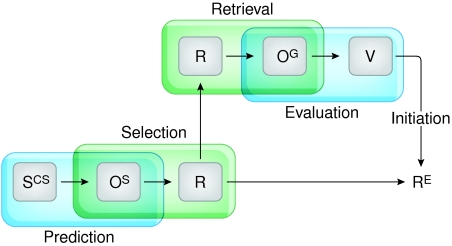

This position is summarized in Figure 3. Here, the outcome controls actions in two ways: (1) through a form of S–R association in which the stimulus properties of the outcome can select an action with which they are associated, ie, through an OS–R association; and (2) through the standard R–O association in which any selected action retrieves the outcome as a goal, ie, an R–OG association. Thus, given this form of O–R association, anything that retrieves the outcome, such as the Pavlovian S–O association in specific transfer experiments, should act to select the R with which the O is associated (as a stimulus). Selection of that specific R then acts to retrieve the O with which the R is associated for evaluation, now as a goal, allowing the OS and OG to work together to control selection and initiation of the action in performance. That something like this is going on is supported by much of the behavioral evidence already discussed above. Thus, outcome devaluation affects the ability of neither discriminative cues nor Pavlovian cues to influence performance and only reduces actions that are dependent on outcome value for initiation (Colwill and Rescorla, 1990; Holland, 2004; Rescorla, 1994). Likewise, reinstatement of an action by outcome delivery after a period of extinction is unaffected by devaluation of the outcome, although the rate of subsequent performance is significantly reduced, suggesting that the OS selection process is initially engaged normally and the evaluative OG process only later acts to modify the rate of performance (Balleine and Ostlund, 2007d; Ostlund and Balleine, 2007a).

Figure 3.

Associations that current evidence suggests are formed between various stimuli (S), actions (R), and outcomes (O) or goals (G) and goal values (V) during the course of acquisition or performance of goal-directed action. (i) Current research provides evidence for both R–OG and OS–R associations in the control of performance. Whereas the OS–R association does not directly engage or influence changes in outcome value, the R–OG association directly activates an evaluative process (V). Performance (RE) relies on both R–O and O–R processes. The necessity of the evaluative pathway in response initiation can be overcome by increasing the contribution of the selection process either by presenting a stimulus separately trained with the outcome (ie, SCS–OS as in Pavlovian-instrumental transfer experiments) or by strengthening the OS–R association itself by selective reinforcement through overtraining.

Furthermore, this account provides a very natural explanation of the acquisition of habits and their dependence on overtraining. Thus, if an OS process usually exerts control over action selection, then it requires only the assumption that this selection process grows sufficiently strong with overtraining to initiate actions without the need for evaluation or, more likely, before evaluation is completed. Thus, habitual actions have the quality of being impulsive; they are implemented before the actor has had time adequately to evaluate their consequences. On this view, therefore, the Pavlovian and outcome-related motivational processes associated with habits and actions are intimately related through their joint influence over the final common path to action. Nevertheless, although these data and this general theoretical approach point to a simple integrative process, they do not provide much in the way of constraints as to how such a process is implemented. Several views of this have, however, been developed in both the computational and neuroscience literatures.

Computational Theories of Action Selection

A particularly influential class of models for instrumental conditioning has arisen from a subfield of computational theories collectively known as reinforcement learning (RL) (Sutton and Barto, 1998). The core feature of such RL models is that, to choose optimally between different actions, an agent needs to maintain internal representations of the expected reward available from each action and then choose the action with the highest expected value. Also central to these algorithms is the notion of a prediction error signal that is used to learn and update expected values for each action through experience, just as was the case for Pavlovian conditioning described earlier. In one such model, the actor/critic, action selection is conceived as involving two distinct components: a critic, which learns to predict future reward associated with particular states in the environment, and an actor, which chooses specific actions to move the agent from state to state according to a learned policy (Barto, 1992, 1995). The critic encodes the value of particular states in the world and as such has the characteristics of the Pavlovian reward prediction signal described above. The actor stores a set of probabilities for each action in each state of the world and chooses actions according to those probabilities. The goal of the model is to modify the policy stored in the actor such that, over time, those actions associated with the highest predicted reward are selected more often. This is accomplished by means of the aforementioned prediction error signal, which computes the difference in predicted reward as the agent moves from state to state.
This signal is then used to update value predictions stored in the critic for each state, but also to update action probabilities stored in the actor such that if the agent moves to a state associated with greater reward (and thus generates a positive prediction error), then the probability of choosing that action in future is increased. Conversely, if the agent moves to a state associated with less reward, this generates a negative prediction error and the probability of choosing that action again is decreased.
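
The actor/critic scheme described above can be illustrated with a minimal sketch. It is not taken from any of the cited studies. It assumes a hypothetical task with a single state and two actions whose reward probabilities (0.2 and 0.8), learning rates, and softmax choice rule are chosen purely for illustration. Each trial ends after one action, so the value of the next state is taken to be zero and the prediction error reduces to the obtained reward minus the critic's current prediction.

```python
import math
import random


def softmax(prefs):
    """Convert action preferences into choice probabilities."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def run_actor_critic(n_trials=2000, alpha_critic=0.1, alpha_actor=0.1, seed=0):
    """Simulate an actor/critic learner on a one-state, two-action task.

    All task parameters here are illustrative assumptions.
    Returns the critic's final state value and the actor's final
    choice probabilities.
    """
    rng = random.Random(seed)
    reward_prob = [0.2, 0.8]  # hypothetical reward probability per action
    value = 0.0               # critic: predicted reward for the single state
    prefs = [0.0, 0.0]        # actor: preference for each action

    for _ in range(n_trials):
        # Actor chooses an action according to its current policy.
        probs = softmax(prefs)
        action = 0 if rng.random() < probs[0] else 1

        # Environment delivers a probabilistic reward.
        reward = 1.0 if rng.random() < reward_prob[action] else 0.0

        # Prediction error: obtained reward minus predicted reward
        # (the next state is terminal, so its value is zero).
        delta = reward - value

        # The same error signal updates both the critic and the actor.
        value += alpha_critic * delta
        prefs[action] += alpha_actor * delta

    return value, softmax(prefs)


if __name__ == "__main__":
    final_value, final_probs = run_actor_critic()
    print("critic value:", round(final_value, 3))
    print("actor choice probabilities:", [round(p, 3) for p in final_probs])
```

In this sketch a positive prediction error raises the preference for the action just taken and a negative error lowers it, so the better-rewarded action should come to be chosen more often, while the critic's value drifts toward the average reward earned under the current policy.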