Abstract

Neurophysiological experiments in primates, alongside neuropsychological and functional magnetic resonance investigations in humans, have significantly enhanced our understanding of the neural architecture of decision making. In this review, I consider the more limited database of experiments that have investigated how dopamine and serotonin activity influences the choices of human adults. These include those experiments that have involved the administration of drugs to healthy controls, experiments that have tested genotypic influences upon dopamine and serotonin function, and, finally, some of those experiments that have examined the effects of drugs on the decision making of clinical samples. Pharmacological experiments in humans are few in number and face considerable methodological challenges in terms of drug specificity, uncertainties about pre- vs post-synaptic modes of action, and interactions with baseline cognitive performance. However, the available data are broadly consistent with current computational models of dopamine function in decision making and highlight the dissociable roles of dopamine receptor systems in the learning about outcomes that underpins value-based decision making. Moreover, genotypic influences on (interacting) prefrontal and striatal dopamine activity are associated with changes in choice behavior that might be relevant to understanding exploratory behaviors and vulnerability to addictive disorders. Manipulations of serotonin in laboratory tests of decision making in human participants have provided less consistent results, but the information gathered to date indicates a role for serotonin in learning about bad decision outcomes, non-normative aspects of risk-seeking behavior, and social choices involving affiliation and notions of fairness. 
Finally, I suggest that the role played by serotonin in the regulation of cognitive biases, and representation of context in learning, points toward a role in the cortically mediated cognitive appraisal of reinforcers when selecting between actions, potentially accounting for its influence upon the processing of salient aversive outcomes and social choice.

Keywords: dopamine, serotonin, psychiatry and behavioral sciences, value, choice, social

INTRODUCTION

Decision making encompasses a range of functions through which motivational processes make contact with action selection mechanisms to express one behavioral output rather than any of the available alternatives. Research into the cognitive neuroscience of decision making has expanded dramatically over the last 15–20 years, starting with revived interest in the role of the ventromedial prefrontal cortex and its interconnected circuits in the capacity to optimize decisions over the longer term (Bechara et al, 1996; Damasio, 1994), but is now manifested in the burgeoning field of ‘neuroeconomics' (Glimcher et al, 2008).

Clinical interest in decision making centers on the possibility that impairment in decision making (perhaps reflecting altered reward evaluation or other distortions in the psychological metrics that influence human choice) might constitute models of dysfunctional action selection in psychiatric disorders. Decision making has been a research target in substance and alcohol misuse disorders (Bechara et al, 2001; Ersche et al, 2008; Rogers et al, 1999b; Rogers and Robbins, 2001), unipolar depression and bipolar disorder (Chandler et al, 2009; Murphy et al, 2001), suicidality (Dombrovski et al, 2010; Jollant et al, 2005), and impulsive personality disorders (Bazanis et al, 2002; Kirkpatrick et al, 2007). Adjunctive work has explored the idea that impairments in decision making can serve as markers for likely relapse (Adinoff et al, 2007; Bechara et al, 2001) and facilitate the exploration of therapeutic interventions (Rahman et al, 2006; Robbins and Arnsten, 2009).

Scope of Review

This review is confined to those experiments that have, in some way, investigated the role of dopamine and serotonin function in human subjects (both healthy non-clinical controls and those drawn from clinical populations) using laboratory models of decision making. I will also consider some experiments that have examined the influence of relevant genotypes (eg, dopamine transporter (DAT), DRD2, DRD4, COMT, 5-HTTLPR, and TPH).

Neurophysiological investigations have dramatically improved our understanding of the functions of the midbrain dopamine neurones, with the application of quantitative models of their role in reinforcement learning and in decision making under uncertainty (Schultz, 2006, 2008). Contemporary functional magnetic resonance imaging (fMRI) studies of decision making in human volunteers involve parameterized models that interpret changes in blood-oxygenation-level-dependent (BOLD) signal in terms of (phasic and tonic) activity of the midbrain dopamine neurones and their limbic, striatal, and cortical projection structures (D'Ardenne et al, 2008; Knutson and Wimmer, 2007; O'Doherty et al, 2007).

From a wider perspective, the targets of the mesolimbic and striatal system may also constitute the two components of a reinforcement-learning system that underpins decision making. For example, this includes a distinction between an ‘actor', which controls and selects between behaviors, and a ‘critic', which computes the value of those actions (Sutton and Barto, 1998). This perspective has received support from fMRI investigation of instrumental learning indicating BOLD signal within the dorsal striatum as reflecting the operation of the actor and signal within the ventral striatum as reflecting the critic (O'Doherty et al, 2004). Although most fMRI investigations of decision making in human subjects have focused on elucidating the operational mechanisms of the ‘critic' in the acquisition of the values underpinning choice, recent experiments indicate that activity within cortical and sub-cortical structures innervated by dopamine and serotonin implements critical and non-normative constraints on human choice. These include, to take just a few examples, the representation of the multiple values underlying decisions (Hare et al, 2008, 2010; Smith et al, 2010), as well as subjective distortions of outcome probabilities (Tobler et al, 2008) and how individual differences in risk attitudes are complemented by value-based signal changes within prefrontal areas (Tobler et al, 2007). These studies form the backdrop for the pharmacological experiments in human subjects considered here. The review will include investigations of principally non-social decision making (most usually involving monetary reinforcers) but, in the case of serotonin, choices involving social reinforcers as well.
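The actor/critic distinction described above can be made concrete with a minimal sketch of a single learning step. It assumes one state, a scalar prediction error shared by both components, and hypothetical learning rates (`alpha_critic`, `alpha_actor`); none of these names or values comes from the cited studies.

```python
from typing import List, Tuple


def actor_critic_step(
    state_value: float,
    preferences: List[float],
    action: int,
    reward: float,
    alpha_critic: float = 0.1,  # hypothetical critic learning rate
    alpha_actor: float = 0.2,   # hypothetical actor learning rate
) -> Tuple[float, List[float], float]:
    """Apply one learning step after `action` produced `reward`.

    Returns the updated state value, the updated action preferences, and the
    prediction error that drove both updates.
    """
    # Critic: compare the outcome with the value predicted for this state.
    prediction_error = reward - state_value
    new_value = state_value + alpha_critic * prediction_error

    # Actor: the same error adjusts the preference for the action just taken.
    new_preferences = list(preferences)
    new_preferences[action] += alpha_actor * prediction_error
    return new_value, new_preferences, prediction_error


# One rewarded choice of action 0, starting from a naive learner.
value, prefs, delta = actor_critic_step(0.0, [0.0, 0.0], action=0, reward=1.0)
```

In this toy version the critic only evaluates and the actor only selects, yet both learn from the same error signal, which is the division of labor the fMRI findings are taken to support.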

Although I consider the roles of serotonin in decision making, I will not include too much about delay-dependent impulsive choice (‘temporal discounting'); this material is reviewed in detail elsewhere (Winstanley et al, 2004a). Both dopamine and serotonin interact with other neuromodulators to influence choice (Doya, 2008). However, I will not consider proposals that phasic activity of the locus coeruleus, releasing noradrenaline, codes decision outcomes (to optimize performance and exploit predictable rewards), whereas fluctuations in tonic activity allow current behavior to be relinquished (to facilitate exploration) (Aston-Jones and Cohen, 2005; Dayan and Yu, 2006; Yu and Dayan, 2005). Nor will the review consider the role of the gamma-aminobutyric acid (GABA) and cannabinoid systems in predominantly operant-based studies of risk taking (Lane et al, 2008), or in experiments showing the influence of CB1 receptor activity in decisions based on expected value (Rogers et al, 2007). Finally, I will not consider neuropeptides, and their interactions with other modulators, in social choices (Baumgartner et al, 2008; Fehr, 2009; Skuse and Gallagher, 2009). The approach will be narrative with some, hopefully fresh, suggestions about serotonin to finish.

Background: Dopamine and Serotonin in Reinforcement Processing

Discussions of dopamine and serotonin frequently center on their apparent opponency. Daw et al (2002) helpfully summarize much of the evidence frequently cited in this context. This includes similarities between the behavioral sequelae of serotonin depletion and amphetamine administration (Lucki and Harvey, 1979; Segal, 1976), and findings that administration of agonists and antagonists of dopamine and serotonin produce broadly opposite effects on unconditioned behaviors (eg, feeding) and responding for conditioned reward, self-stimulation, and performance of conditioned place-preference tests (Fletcher et al, 1993, 1995, 1999). Serotonin activity can also depress dopamine activity within the ventral tegmental area and substantia nigra, as well as dopamine release within the nucleus accumbens and striatum (Daw et al, 2002; Kapur and Remington, 1996).

Notwithstanding the above observations, however, dopamine and serotonin also act synergistically in aspects of reinforcement processing. For example, the behavioral and reinforcing effects of cocaine in rats are potentiated by treatment with selective serotonin-reuptake blockers (SSRIs) (Cunningham and Callahan, 1991; Kleven and Koek, 1998; Sasaki-Adams and Kelley, 2001), and brain self-stimulation thresholds can also be dose dependently altered with SSRI treatment (Harrison and Markou, 2001). Similarly, tryptophan depletion can block the rewarding effects of cocaine in human subjects (Aronson et al, 1995). Most recently, tonic activity of serotonergic neurones within the dorsal raphe nucleus appears to code the magnitude of expected and received rewards, whereas phasic activity of the dopamine neurones of the substantia nigra pars compacta codes differences between these values (Nakamura et al, 2008).

Finally, there are situations in which serotonin seems to facilitate the activity of dopamine in controlling behavior, exemplified by the findings that the capacity of amphetamine to enhance the ability to tolerate longer delays to rewards (on the basis of presented conditioned stimuli) can be abolished by global depletions of serotonin in the rat (Winstanley et al, 2005). Therefore, dopamine and serotonin probably play complementary, rather than simply opponent, roles in the reinforcement and control processes that underpin choice behaviors (see also Cools et al, 2010).

DOPAMINE AND DECISION MAKING

Dopamine is likely to exert a multi-faceted influence upon decision making through the activity of its forward afferents along the mesolimbic, striatal, and cortical pathways, with the nucleus accumbens playing a pivotal role in action selection (Everitt and Robbins, 2005). In rats, interactions between D1 receptor activity within the anterior cingulate cortex (ACC) and the core of the nucleus accumbens mediate decisions to expend greater effort to obtain larger rewards (Hauber and Sommer, 2009; Salamone et al, 1994; Schweimer and Hauber, 2006), perhaps reflecting cost–benefit calculations about the net value of candidate actions (Gan et al, 2010; Phillips et al, 2007). The specificity of the dopamine contribution to decision making is underpinned by the demonstration of a double dissociation of changes in effort vs delay discounting following the administration of the mixed D2 receptor antagonist, haloperidol, and the serotonin synthesis inhibitor, para-chlorophenylalanine (pCPA), indicating greater involvement of the D2 family of receptors in the former and of serotonin activity in the latter forms of behavioral choice (Denk et al, 2005). In addition, the influence of dopamine upon these forms of decision-making function is likely to involve complex interactions with other neurotransmitter systems, such as glutamate, that also play a pivotal role in motivation and reinforcement (Floresco et al, 2008).

In comparison, experimental investigation of dopamine's role in different forms of decision making in human subjects has been surprisingly limited. Clinical evidence suggests that chronic substance misusers show significant impairments in the capacity to decide between probabilistic outcomes, reflecting possible disturbances of dopaminergic, and possibly serotonergic, modulation of fronto-striatal systems (Ersche et al, 2008; Paulus et al, 2003; Rogers et al, 1999b). Equally though, such deficits may reflect pre-existing disturbances including, for example, reductions in D2 receptor expression within the nucleus accumbens that promote the heightened impulsivity associated with drug-seeking behavior and, presumably, variability in decision-making function (Besson et al, 2010; Dalley et al, 2007; Jentsch and Taylor, 1999; Jentsch et al, 2002).

Other evidence suggests that impairments in decision making associated with certain neuropsychiatric and neurological conditions are mediated by dopaminergic mechanisms. Most obviously, administration of dopamine therapies (including, for example, drugs that act upon the D2 family of receptors) appears to induce problems with gambling and other compulsive behaviors in a minority of Parkinsonian patients (Dodd et al, 2005; Voon et al, 2006a, 2006b; Weintraub et al, 2006) (see below). Experimental evidence has also shown that administration of amphetamine and the D2 receptor antagonist, haloperidol, can prime cognitions that promote gambling behavior as well as enhance its reinforcement value in samples of individuals with gambling problems (Zack and Poulos, 2004, 2007, 2009). Finally, the mixed dopamine and noradrenaline agent, methylphenidate, has also been shown to ameliorate the risk-taking behavior of children with a diagnosis of DSM-IV attention-deficit-hyperactivity disorder (ADHD) when asked to place ‘bets' on their previous decisions being correct (DeVito et al, 2008), as well as reducing the number of impulsive decisions in the performance of delay-discounting tasks (Shiels et al, 2009).

Dopaminergic agents also appear to improve decision making in adult clinical populations. Methylphenidate, in comparison with single placebo treatments, has been shown to reduce the heightened tendency to take risks—manifested again as a tendency to wager more reward on previous choices being correct (Rahman et al, 1999)—in patients with diagnoses of fronto-temporal dementia (Rahman et al, 2006). fMRI experiments with healthy subjects suggest that this effect reflects a shift in the distribution of activity toward amygdala and para-hippocampal sites in response to uncertainty (Schlosser et al, 2009).

In healthy adults, Sevy et al (2006) used the Iowa Gambling Task to test optimal longer-term decision making following ingestion of a mixture of branched-chain amino acids (BCAA) that depleted the tyrosine substrate for dopamine synthesis. Tyrosine depletion impaired performance of the Iowa Gambling Task by enhancing the weight attributed to recent compared with less recent outcomes (Sevy et al, 2006). These results complement other data suggesting that tyrosine depletion, achieved through ingestion of a BCAA mixture, reduced the weight decision makers place upon the magnitude of bad outcomes when making decisions under conditions of uncertainty for monetary rewards (Scarná et al, 2005).

DOPAMINE'S COMPUTATIONAL ROLE IN DECISION MAKING

Dopamine plays a critical role in predicting rewards in Pavlovian and instrumental forms of learning and, in the latter case, updating the value of actions on the basis of this learning. In the now classic formulation, delivery of unexpected rewards induces phasic increases in the activity of midbrain dopamine neurones, whereas the omission of expected rewards produces depressions in their activity, thus instantiating positive and negative prediction errors (Schultz, 2004, 2007). Several features of this system can be represented by formal theories of reinforcement learning (Sutton and Barto, 1998). As learning proceeds, phasic responses of dopamine neurones shift away from the delivery of predicted rewards toward the onset of environmental stimuli associated with those rewards, perhaps implementing a form of temporal difference learning (Hollerman and Schultz, 1998; O'Doherty et al, 2003).
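The shift of the prediction error from reward delivery to cue onset can be illustrated with a minimal temporal difference sketch. The trial layout (a cue followed by a fixed delay and then reward), the learning rate, and the discount factor are illustrative assumptions, not parameters taken from the cited studies.

```python
def simulate_td(n_trials=200, n_steps=5, alpha=0.2, gamma=1.0, reward=1.0):
    """Train on cue -> delay -> reward trials.

    Returns the prediction error at cue onset and at reward delivery for each
    trial, plus the learned value of every time step after cue onset.
    """
    values = [0.0] * n_steps  # predicted future reward at each step after the cue
    cue_errors, reward_errors = [], []
    for _ in range(n_trials):
        # The cue arrives unpredictably, so the pre-cue baseline value is 0 and
        # the error at cue onset equals the discounted value of the first step.
        cue_errors.append(gamma * values[0])
        for t in range(n_steps):
            last = t == n_steps - 1
            outcome = reward if last else 0.0
            next_value = 0.0 if last else values[t + 1]
            delta = outcome + gamma * next_value - values[t]
            values[t] += alpha * delta
            if last:
                reward_errors.append(delta)
    return cue_errors, reward_errors, values


cue_errors, reward_errors, values = simulate_td()
# Withholding the expected reward after training yields a negative error.
omission_error = 0.0 - values[-1]
```

On the first trial the error occurs only at reward delivery; after training it has moved to cue onset, and omitting the reward produces a negative error, mirroring the three signatures described in the text.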

Pessiglione et al (2006) provided preliminary evidence that manipulation of dopamine function through single doses of 100 mg of 3,4-dihydroxy-phenylalanine (L-DOPA) (plus 25 mg of benserazide) or 1 mg of the mixed D2 dopamine antagonist, haloperidol, influenced the performance of an instrumental learning task involving both monetary gains and losses (Pessiglione et al, 2006). Participants who were treated with L-DOPA earned more money than the participants who received haloperidol, by virtue of making responses that, on average, accrued a higher rate of large gains and a higher rate of low losses.

In addition, the magnitudes of the BOLD signals associated with both positive prediction errors (increases in signal associated with the delivery of winning outcomes) and negative prediction errors (decreases in signal associated with losing outcomes) were enhanced within the ventral striatum bilaterally and left posterior putamen following treatment with L-DOPA compared with haloperidol. Although there are no explicit comparisons between the task performance or BOLD signals of participants who received L-DOPA or haloperidol and participants who received placebo, this experiment demonstrates that the learning mechanisms of value-based decision making can be altered by dopaminergic agents in young, healthy adults.

At the current time, Pessiglione et al (2006) remains the only experiment to have tested the effects of a dopaminergic agent on formally derived estimates of prediction errors during performance of an instrumental decision-making task in young healthy adults. However, Menon et al (2007) tested the effects of single doses of amphetamine (0.4 mg/kg) and haloperidol (0.04 mg/kg) against a placebo treatment on the BOLD expression of prediction error signals derived with a temporal difference learning model, during completion of a simple aversive conditioning procedure. As expected, participants' prediction errors were reliably expressed as BOLD responses within the ventral striatum following treatment with placebo, but abolished entirely following treatment with haloperidol. By contrast, amphetamine treatment, which facilitates dopamine release, produced larger BOLD prediction error signals within the ventral striatum, putamen, and globus pallidus (Menon et al, 2007).

The Role of Distinct Dopamine Receptor Systems in Decision Making

The above results raise the question as to which dopamine receptor sub-types might mediate these effects. The most sophisticated model proposed to answer this question, strengthened by its incorporation of the role of dopamine in action control, has been formulated by Michael Frank and his co-workers (Frank et al, 2004; Frank and O'Reilly, 2006).

According to this model, activity in the striatal direct pathway facilitates the execution of responses identified in the cortex (‘Go signals'), whereas activity in the indirect pathway suppresses competing responses (‘No-Go signals'), and the influence of these pathways is facilitated by the relative distribution of D1 and D2 receptors. Several reviews of this research are already available (Frank et al, 2007b; Frank and Hutchison, 2009). However, in essence, the Frank model proposes that increased dopamine activity elicited by positive reinforcers influences striatal outflow to pallidal-thalamic nuclei by facilitating synaptic plasticity within the ‘direct' pathway by actions at D1 receptors while inhibiting activity within the ‘indirect' pathway by (post-synaptic) actions at D2 receptors. Equally, dips in dopamine activity elicited by negative reinforcers produce the opposite pattern of changes, suppressing behavioral output.
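The opposing effects of dopamine bursts and dips on the two pathways can be sketched as a toy update rule. This is a deliberately reduced illustration, not the published neural network model: the weight representation, the starting values, and the scaling parameters `burst_gain` and `dip_gain` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PathwayWeights:
    go: float = 0.5    # direct-pathway support for a response
    nogo: float = 0.5  # indirect-pathway suppression of that response

    @property
    def net(self) -> float:
        """Net tendency to emit the response (positive favors execution)."""
        return self.go - self.nogo


def learn(weights, prediction_error, burst_gain=1.0, dip_gain=1.0, alpha=0.1):
    """Update both pathways after one outcome.

    A positive error (dopamine burst) strengthens Go and weakens No-Go;
    a negative error (dopamine dip) does the opposite. `burst_gain` and
    `dip_gain` are hypothetical knobs scaling how effective bursts and dips are.
    """
    gain = burst_gain if prediction_error > 0 else dip_gain
    step = alpha * gain * prediction_error  # signed change
    return PathwayWeights(go=weights.go + step, nogo=weights.nogo - step)


start = PathwayWeights()
weak_bursts = dict(burst_gain=0.2, dip_gain=1.0)  # bursts attenuated
weak_dips = dict(burst_gain=1.0, dip_gain=0.2)    # dips attenuated
after_win = learn(start, +1.0, **weak_bursts)
after_loss = learn(start, -1.0, **weak_dips)
```

Attenuating bursts slows the growth of the net Go tendency after good outcomes, whereas attenuating dips slows the growth of No-Go suppression after bad outcomes, which is the asymmetry the model exploits.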

An initial neuropsychological test of the model involved patients with Parkinson's disease with a discrimination learning procedure. Patients (and senior non-clinical controls) were shown three pairs of patterns that varied in their reinforcement. In one pair, one pattern (A) was reinforced 80% of the time (but punished 20% of the time), whereas the other pattern (B) was punished 80% of the time (but reinforced 20% of the time). In the other two pairs, these differences were progressively degraded such that, in the second pair, one pattern (C) was reinforced 70% of the time, whereas the other pattern (D) was punished 70% of the time. In the final pair, one pattern (E) was reinforced 60% of the time, whereas the other pattern (F) was punished 60% of the time. During training, patients learnt to choose reliably the pattern most frequently reinforced within each pair (Frank et al, 2004). Learning was subsequently assessed in a series of transfer tests in which patients were shown new pairs comprising one of the patterns (A and B) that had been predominantly reinforced or punished, paired with other patterns encountered during training. Differences in learning about good and learning about bad outcomes were manifested in the tendency of decisions to select or avoid these patterns. Frank et al (2004) confirmed the central prediction of the model: that the depleted dopamine state in unmedicated Parkinson's patients impaired learning from the positive outcomes of their decisions (by attenuating phasic increases in dopamine activity), but enhanced learning from negative outcomes, while shifts to the medicated state reversed this pattern of impairments by facilitating learning from good outcomes (and also by diminishing the dips in dopamine activity that facilitate learning from negative outcomes) (Frank et al, 2004).
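The structure of the discrimination task and its transfer test can be laid out as a short sketch. The reinforcement probabilities for patterns D and F are assumed to be the complements of their stated punishment rates, and the function names and scoring scheme are illustrative rather than taken from Frank et al (2004).

```python
from itertools import product

# Probability that choosing each pattern is reinforced during training.
# Values for D and F assume reinforcement on all non-punished trials.
P_REINFORCED = {"A": 0.8, "B": 0.2, "C": 0.7, "D": 0.3, "E": 0.6, "F": 0.4}
TRAINING_PAIRS = [("A", "B"), ("C", "D"), ("E", "F")]


def transfer_pairs():
    """Novel test pairs: A or B against each pattern from the other pairs."""
    others = [pattern for pair in TRAINING_PAIRS[1:] for pattern in pair]
    return list(product(["A", "B"], others))


def transfer_scores(choices):
    """Score transfer choices.

    `choices` maps each test pair to the pattern that was selected.
    Returns (choose_A, avoid_B): the proportion of A pairs on which A was
    chosen and the proportion of B pairs on which B was not chosen.
    """
    a_pairs = [pair for pair in choices if pair[0] == "A"]
    b_pairs = [pair for pair in choices if pair[0] == "B"]
    choose_a = sum(choices[pair] == "A" for pair in a_pairs) / len(a_pairs)
    avoid_b = sum(choices[pair] != "B" for pair in b_pairs) / len(b_pairs)
    return choose_a, avoid_b


# A hypothetical chooser that always picks the more frequently reinforced pattern.
ideal_choices = {pair: max(pair, key=P_REINFORCED.get) for pair in transfer_pairs()}
ideal_scores = transfer_scores(ideal_choices)
```

Separating the two scores is what allows learning from good outcomes (choosing A) to be dissociated from learning from bad outcomes (avoiding B) in the same participants.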

Pharmacological tests of Frank et al's model are hampered by the limited specificity of the agents that can be administered to human subjects and the inevitable uncertainty about their pre- vs post-synaptic modes of action (Frank and O'Reilly, 2006; Hamidovic et al, 2008). Nonetheless, some evidence that the D2 family of receptors might play a role in learning from bad outcomes is provided by the demonstration that small single doses (0.5 mg) of the D2/D3 receptor agonist, pramipexole, impair the acquisition of a response bias toward the most frequently rewarded choice in a probabilistic reward learning task, consistent with a hypothesized pre-synaptic diminution of the phasic signalling of positive prediction errors (Pizzagalli et al, 2008). This behavioral effect of low doses of pramipexole may also involve increased feedback-related neuronal responses to unexpected rewards, probably originating within the ACC region, and possibly reflecting the altered integration of decision outcomes over an extended reinforcement history (Santesso et al, 2009).

Small doses of pramipexole may not produce marked or generalized changes in other aspects of decision making. Using a within-subject, cross-over, placebo-controlled design, Hamidovic et al (2008) tested the effects of two relatively low doses of pramipexole (0.25 and 0.50 mg) against placebo on the performance of a number of tasks purported to tap, among other things, behavioral inhibition (Newman et al, 1985), response perseveration (Siegel, 1978), risk taking (Lejuez et al, 2002), and impulsivity (as delay discounting) (Richards et al, 1999). Pramipexole significantly reduced positive affect compared with placebo and increased sedation; however, neither dose significantly altered performance of any task compared with placebo. This might suggest that the changes observed by Pizzagalli et al (2008) and Santesso et al (2009) reflect the influence of pramipexole upon the acquisition of action–value relationships, consistent with the proposed role of D2/D3 receptors in learning from decision outcomes (Frank et al, 2004, 2007b). Such learning would have been attenuated in the within-subject, cross-over design of Hamidovic et al (2008), in which the same subjects completed the same tasks, but following different pharmacological treatments.

Frank and O'Reilly (2006) compared single 1.25 mg doses of the D2 agonist, cabergoline, and 2 mg doses of haloperidol on healthy adults' performance of the discrimination task described above to test effects on learning from positive or negative outcomes. Like haloperidol, cabergoline shows considerably greater affinity for D2 compared with D1 receptors, and so the comparison of an agonist and antagonist at the same receptor systems represents a good pharmacological strategy. As in unmedicated Parkinson's disease patients, cabergoline impaired learning from good outcomes, but tended to enhance learning from bad outcomes, whereas, like medicated Parkinson's disease patients, haloperidol enhanced learning from good outcomes, but impaired learning from bad outcomes. Cabergoline and haloperidol act as agonist and antagonist at D2 receptors, respectively. Therefore, Frank and O'Reilly (2006) interpreted their findings as reflecting predominantly pre-synaptic actions. Thus, cabergoline diminished phasic bursts of dopamine following good outcomes, retarding Go learning; whereas haloperidol increased dopamine bursts following good outcomes, improving Go learning.

This interpretation is consistent with what we know about the effects of pre-synaptic dopamine receptor activity (Grace, 1995). It is also consistent with the reduced state positive affect and increased sedation following low doses of pramipexole reported by Hamidovic et al (2008). On this view, activity at pre-synaptic autoreceptors might have diminished the firing of midbrain dopamine neurones and, perhaps, reduced noradrenergic activity through depressed firing of the locus coeruleus (Samuels et al, 2006). Frank and O'Reilly (2006) also observed that cabergoline treatment produced larger effects in participants with low memory span who showed both improved Go learning and No-Go inhibition of RT, which might argue for both pre- and post-synaptic effects. It is also worth noting that Riba et al (2008) found that single doses of 0.5 mg pramipexole induced riskier choices of lotteries in healthy volunteers alongside reductions in positive BOLD signal changes within the ventral striatum in response to unexpected winning outcomes. This suggests that it may be possible to dissociate preference for risk and heightened Go learning (Riba et al, 2008).

Genotypic Variation in Receptor Expression and Decision Making

The hypothesis that D1 and D2 receptors play dissociable roles in the reinforcement learning that underlies value-based decision making raises the important question as to whether individual differences in their expression might influence people's choices in these forms of value-based choice. Consistent with the claim that low doses of D2 agonists impair learning from the bad outcomes of decisions, Cools et al (2009) found that 1.25 mg of the drug, bromocriptine, improved the prediction of rewards relative to punishments during the performance of an adapted probabilistic outcome (reward vs punishment) discrimination task in participants with low baseline dopamine synthesis capacity (as measured with the uptake of the positron emission tomography tracer [18F]fluorometatyrosine) while impairing it in participants with high baseline dopamine synthesis capacity in the striatum.

Other evidence that genotypic variation influences the operation of dissociable mechanisms involved in learning from good and bad outcomes is provided by the influence upon decision making of the rs907094 polymorphism of the DARPP-32 genotype, which heightens D1 synaptic plasticity within the striatum, and the C957T polymorphism of the DRD2 receptor gene, which regulates D2 mRNA translation and stability (Duan et al, 2003) and post-synaptic D2 receptor density in the striatum (Frank et al, 2007a). Individuals who were homozygotes for the A allele of the DARPP-32 gene showed enhanced learning from good outcomes compared with carriers of the G allele; by contrast, individuals who were T/T homozygotes of the DRD2 gene were selectively better than carriers of the C allele when learning from bad outcomes, consistent with the claim that the D1 and D2 receptors influence distinct forms of Go vs No-Go learning (Frank et al, 2007a). Two further polymorphisms (rs2283265 and rs1076560) associated with reduced pre-synaptic to post-synaptic D2 receptor expression showed independent changes in learning from the bad compared to good outcomes of their decisions (Frank and Hutchison, 2009).

Several other experiments have highlighted D2 receptor density, focusing upon the Taq 1A (ANKK1-Taq 1A) polymorphism. Carriers of the A1+ allele (ie, A1A1 and A1A2) show a reduction in D2 receptor density of up to 30% compared with A2 homozygotes (Ritchie and Noble, 2003; Ullsperger, 2010). The personality trait of extraversion in A1 carriers is associated with altered BOLD responses within the striatum following the delivery of decision outcomes (Cohen et al, 2005) as well as decreased rostral ACC signals following bad outcomes (Klein et al, 2007). This latter activity is likely to relate to the requirements to adjust behavior following bad outcomes, as evidenced by observations that switches from one choice to another by A1 carriers are associated with reduced signal within an orbito-frontal area and ventral striatum, as well as altered signalling within ACC when integrating across preceding negative outcomes (Jocham et al, 2009). By contrast, other polymorphisms of the DRD2 gene (eg, −141C Ins/Del) are associated with heightened striatal BOLD responses to rewards during performance of a card guessing game (Forbes et al, 2009).

Cortical and sub-cortical dopamine seems to involve reciprocal functional relationships (Roberts et al, 1994), raising the question as to whether genotypic influences on prefrontal dopamine might also influence aspects of choice behavior. Several experiments suggest they do. Carriers of the met/met allele of the catechol-O-methyltransferase (COMT) gene (associated with reduced clearance of prefrontal dopamine) showed enhanced BOLD signals within dorsal prefrontal regions and the ventral striatum during the anticipation of rewards (Yacubian et al, 2007). Evidence that the correspondence between striatal BOLD and expected value was disturbed in carriers of the met/met COMT allele and the 10-repeat DAT allele, and in carriers of the val/val allele and the 9-repeat DAT allele, suggests that genotypic interactions that influence cortical and striatal dopamine also govern learning about decision outcomes (Dreher et al, 2009; Yacubian et al, 2007). By contrast, carriers of the val/val allele (associated with enhanced clearance of prefrontal dopamine) show enhanced prediction error signalling expressed as BOLD signals within the striatum, alongside rapid changes in BOLD expressions of learning rate within dorsomedial prefrontal cortex (Krugel et al, 2009). Collectively, these data suggest that dopamine activity within the prefrontal cortex influences action-value learning mechanisms within the striatum (see also Camara et al, 2010).

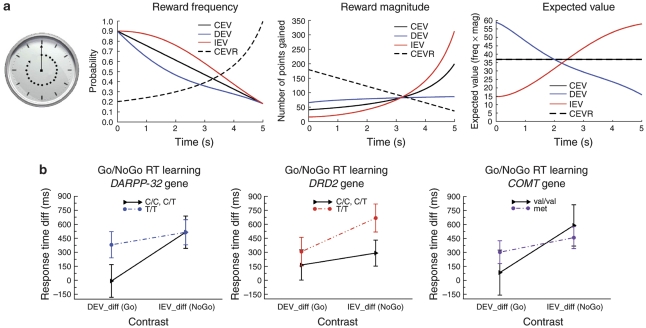

The conjoint influence of cortical and striatal dopamine function upon value-based decision making has been shown further in an elegant experiment testing the effects of genotypic variation in the COMT gene, and in the DARPP-32 and DRD2 genes (governing the expression of D1 and D2 receptors) (Frank et al, 2009). In three conditions, participants observed a clock arm that completed a revolution over 5 s (Figure 1), and stopped the clock with a key press to win points, delivered with a probability and magnitude that varied as a function of the key-press latency.

Figure 1.

Representation of the action selection task used by Frank et al (2009). (a) Participants observed a clock arm that completed a revolution over 5 s, and could stop the clock with a key press in an attempt to obtain rewards. Rewards were delivered with a probability and magnitude that varied as a function of response times (RTs), defining changing expected values. There were four conditions (one not shown here). In the expected value decreases (DEV) condition, expected values declined with slower RTs, such that performance benefited from Go learning to produce quicker responses. In the expected value increases (IEV) condition, expected values increased such that performance benefited from No-Go learning to produce adaptively slower responding. Finally, in the (control) CEV condition, expected values remained constant as a function of RTs. (b) Mean RTs (plus standard error bars) in the IEV relative to CEV conditions for carriers of the T/T compared with the C/C and C/T alleles of the DARPP-32 and DRD2 genes, and carriers of the met allele compared with the met/val and val/val alleles of the COMT gene.

In the DEV condition, the expected value of responses declined with response times (RTs) (such that performance benefited from Go learning to speed RTs further). In the IEV condition, the expected value of responses increased with RTs (such that performance benefited from No-Go learning to produce adaptively slower responding). In the CEV condition, the expected value of responses remained constant as a function of RT. Carriers of the DARPP-32 T/T allele showed faster RTs in the DEV relative to CEV condition, but similar RTs in the IEV condition, indicating enhanced Go learning. By contrast, individuals with the DRD2 T/T genotype, with highest striatal D2 receptor density, showed marginally slower RTs in the IEV condition, tending to indicate enhanced No-Go learning; these individuals showed similar RTs in the DEV condition, indicating little effect upon Go learning (see Figure 1).

These findings have been elegantly incorporated into a revised computational model, suggesting that genotypic variations of D1 receptor expression (DARPP-32 gene), and D2 receptor expression (DRD2 gene), regulate the tendency to ‘exploit' known action valuations based on learning from good and bad outcomes, respectively (Frank et al, 2009). By contrast, individuals who carry the met allele of the COMT gene (associated with reduced uptake of synaptic dopamine) exhibit an enhanced ability to shift to alternative behaviors following negative outcomes, as expressed in the learning rate parameters of the computational model, instantiating the proposal that the COMT genotype can regulate exploration as a function of uncertainty about decision outcomes (Frank et al, 2009).

MORE ON THE DECISION MAKING OF PARKINSON'S DISEASE

Parkinson's disease has provided a helpful naturalistic experimental test of the above theoretical framework, most dramatically expressed in the minority of patients who exhibit a dopamine dysregulation syndrome involving compulsive problems, usually characterized by repetitive but subjectively rewarding behaviors (Dagher and Robbins, 2009; Dodd et al, 2005; Voon et al, 2006a, 2007, 2010). However, neuropsychological investigations have also indicated that samples of patients without these striking clinical features also exhibit changes in performance of the Iowa Gambling Task (Perretta et al, 2005), in the processing of other forms of reward-related choices (Cools et al, 2003; Frank et al, 2004; Rowe et al, 2008) and in impulsivity in delay-discounting paradigms (Voon et al, 2009).

Strikingly, it has been apparent for some time that the magnitude of cognitive and learning impairments in Parkinson's disease is dependent on medication status (Swainson et al, 2000). Impairments in planning and attentional control functions seem to be improved when tested in the ‘on' state of L-DOPA medication compared with the ‘off' state, whereas other problems, for example, in reward-related learning, seem to be made worse (Cools et al, 2001; Frank et al, 2004). Consistent with this, Cools et al (2003) showed that L-DOPA therapy disrupted neural signals within the ventral striatum of Parkinson's disease patients when processing errors as part of a probabilistic reversal learning procedure (Cools et al, 2003). Overall, the extant data suggest that impulse control disorders observed in a minority of Parkinson's disease patients following dopamine therapies involve chronic overstimulation of a wider neural circuit incorporating the ventromedial prefrontal cortex, the ventral striatum, and its pallidal-thalamic outputs (Dagher and Robbins, 2009), perhaps contributing to the pathological and compulsive spectrum of behaviors seen in vulnerable patients (Voon et al, 2007).

In the context of decision making for motivationally significant outcomes, Frank and O'Reilly (2006) elaborated their model that chronic overstimulation of (post-synaptic) D2 receptors of the indirect pathway through the administration of dopamine agonists, such as pramipexole, interferes with the capacity to learn from bad outcomes of decisions, while variably increasing the tendency to overweight good outcomes, promoting reward-seeking behaviors. To explore this issue clinically, van Eimeren et al (2009) compared the effects of stimulation of D2/D3 receptors through administration of pramipexole with the effects of being ‘off' and ‘on' L-DOPA in Parkinson's disease patients without a history of gambling problems, using a simulated roulette game. Pramipexole increased the magnitude of positive neural responses within the orbito-frontal cortex of Parkinson's patients following winning outcomes while attenuating negative changes following the delivery of losing outcomes, highlighting the potential involvement of a wider prefrontal cortical circuit in the genesis of gambling and compulsive problems following dopamine treatment in Parkinson's disease (van Eimeren et al, 2009).

The use of formal reinforcement learning models has tended to provide more precise evidence that dopamine therapies increase learning from positive outcomes compared with learning from bad outcomes. Thus, Rutledge et al (2009) found that learning in Parkinson's disease patients on the basis of positive prediction errors was enhanced in the ‘on' state compared with the ‘off' state. Treatment status made no difference in learning from negative outcomes (Rutledge et al, 2009). The most detailed investigation has been provided by Voon et al (2010), who compared the performance of three separate groups: Parkinson's patients with problem gambling and shopping behaviors, matched Parkinson's disease controls ‘on' and ‘off' dopamine therapy, and finally, matched normal volunteers, as a part of an fMRI protocol.

Using a reinforcement learning model, dopamine agonists increased the rate of learning from good outcomes in susceptible patients with Parkinson's disease, consistent with the proposed overweighting of positive outcomes (eg, Frank et al, 2004). Learning from bad outcomes was slowed in those patients without a history of compulsive problems, consistent with previous findings in similar experiments (Frank et al, 2004), and this was accompanied by attenuated negative prediction errors within the ventral striatum and anterior insula (Voon et al, 2010). The remaining puzzles include the need to understand how chronic administration of dopamine agonists that predominantly act upon D2 and D3 receptors is able to facilitate learning from good outcomes that is, according to Frank et al (2004), predominantly mediated by activity at D1 receptors in patients who are vulnerable to compulsive problems (Voon et al, 2010). One possibility is that the tendency to experience these problems reflects excessive pre-synaptic dopamine release (Steeves et al, 2009) or the facilitation of D1 receptor activity via chronic overstimulation of D3 receptors (Berthet et al, 2009) (see Voon et al, 2010 for discussion).
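The distinction between learning from good and bad outcomes can be made concrete with a minimal sketch of a value-learning rule that uses separate learning rates for positive and negative prediction errors. This is a generic illustration, not the model fitted by Voon et al (2010) or Rutledge et al (2009); the function name, the parameter values, and the win/loss coding of +1 and -1 are all assumptions made for the example.

```python
import random


def mean_learned_value(alpha_gain, alpha_loss, p_win=0.5, n_trials=5000, seed=1):
    """Average learned value of one option under asymmetric learning rates.

    alpha_gain scales updates after positive prediction errors and
    alpha_loss scales updates after negative ones. All parameter names
    and defaults are illustrative assumptions.
    """
    rng = random.Random(seed)
    value = 0.0
    running_total = 0.0
    for _ in range(n_trials):
        outcome = 1.0 if rng.random() < p_win else -1.0  # win or loss
        delta = outcome - value                          # prediction error
        alpha = alpha_gain if delta > 0 else alpha_loss  # asymmetric rate
        value += alpha * delta
        running_total += value
    return running_total / n_trials


# Same outcome sequence (same seed), different learning-rate profiles.
balanced = mean_learned_value(alpha_gain=0.1, alpha_loss=0.1)
gain_biased = mean_learned_value(alpha_gain=0.3, alpha_loss=0.05)
print(f"balanced learner:    {balanced:+.2f}")
print(f"gain-biased learner: {gain_biased:+.2f}")
```

With wins and losses equally likely, the balanced learner settles near zero, whereas the learner that weights positive prediction errors more heavily comes to overvalue the same option. This is one simple way in which enhanced learning from good outcomes could promote reward-seeking choices.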

Summary

The extant experiments that investigate the effects of dopaminergic challenges upon human decision making are relatively few in number, and their interpretation is subject to a number of complicating factors. These include (i) uncertainties about whether given dosages have pre- vs post-synaptic modes of action; (ii) lack of specificity of the licensed medications used in experiments with human subjects for D1 vs D2 receptor subtypes; and (iii) interaction of effects with ‘baseline' cognitive performance. There are also some surprising gaps; in particular, the limited evidence that treatments with dopamine agents influence the BOLD expression of prediction errors in non-clinical healthy adult volunteers when these errors are specified using formal reinforcement learning models.

Nonetheless, the information provided by human psychopharmacology experiments is broadly consistent with the basic proposal that dopamine activity can influence decisions by modulating what is learnt about the value of their outcomes. Furthermore, D1 and D2 receptor activity appears to exert complementary influences upon learning from good decision outcomes compared with bad decision outcomes, although the existing human studies do not provide much information about whether these effects are pre- vs post-synaptic, or how D1 and D2 activity influences subsequent response selection in pallidal-thalamic pathways. By contrast, prefrontal dopamine activity, possibly involving long-hypothesized interactions with striatal dopamine (Roberts et al, 1994), seems to be involved in more strategic shifts in decision making involving, for example, exploratory behaviors also mediated by interactions with noradrenergic influences within medial prefrontal sites (Ullsperger, 2010).

Experiments with Parkinson's disease indicate that dopamine medications influence the decision making of patients, as they do other forms of cognitive activity (Cools et al, 2001; Swainson et al, 2000), by altering dopamine activity toward or away from optimal levels within fronto-striatal loops. This is also consistent with the idea that compulsive problems associated with dopamine medications in a minority of patients reflect overstimulation of reinforcement circuits that enhance the value of rewards (Dagher and Robbins, 2009; Frank et al, 2004). The evidence that dopamine therapies enhance the positive value of good outcomes is somewhat stronger than the evidence that they reduce negative changes in the value of bad outcomes in Parkinson's disease. Finally, the striking convergence of findings involving genotypic variation in COMT activity and D2 receptor function links powerfully to the notion that decision making involves interactions between prefrontal and striatal dopamine neuromodulation, and with animal data suggesting that expression of D2 receptors (in the striatum) confers vulnerability to substance and impulse control disorders (Dalley et al, 2007).

SEROTONIN AND DECISION MAKING

An established tradition of experiments indicates a role for serotonin in the modulation of non-rewarded behavior (Soubrie, 1986) and various impulse control functions (Schweighofer et al, 2007; Winstanley et al, 2004a; Wogar et al, 1993). Converging evidence from human clinical populations indicates that impulsive behaviors, especially those involving impulsive violent actions, are frequently associated with markers of serotonergic dysfunctions (Booij et al, 2010; Brown et al, 1979; Coccaro et al, 2010), confirming that this neurotransmitter plays a central role in selecting actions giving rise to motivationally significant reinforcers. On the other hand, the contributions of serotonin to decision making—whether impulsive or not—are likely to be complex and involve multiple receptor systems. Thus, in the context of different forms of impulsive behaviors, the ability to tolerate delays before larger rewards can be undermined by depletions of serotonin (Wogar et al, 1993), whereas failures to withhold activated responses may involve heightened serotonin activity within the prefrontal cortex (Dalley et al, 2002). Similarly, problems with controlling premature responding can be increased or decreased by the activation of 5-HT2A and 5-HT2C receptors, respectively (following global serotonin depletions produced by di-hydroxytryptamine) (Winstanley et al, 2004b). This suggests that serotonin modulates multiple cognitive, affective and response-based mechanisms in decision making via activity in multiple receptor systems.

Several early experiments tested the effects of reducing central serotonin activity through dietary tryptophan depletion on the decision making of healthy controls using cognitive tasks that had been validated in samples of neurological patients. These experiments show both the sensitivity of decision-making tasks to tryptophan depletion and also the volatility of their findings. Originally, Rogers et al (1999b) showed that tryptophan depletion reduced the proportion of choices with the highest probability of positive reinforcement. As this pattern of choices was also shown by patients with focal lesions of the ventromedial and orbital regions of the frontal lobes (Rogers et al, 1999b), these findings suggested that serotonin modulates decision-making functions mediated by these cortical regions and their striatal afferents (Rogers et al, 1999a). However, Talbot et al (2006) subsequently found precisely the reverse effect in the form of increased choice of the most reinforced option using the same behavioral paradigm (Talbot et al, 2006). As neither effect was associated with marked increases in ‘bets' placed against the outcomes of these decisions, these effects suggest that serotonin modulates the selection of actions with probabilistic outcomes (see below).

Other evidence gathered using a range of choice tasks suggests that genotypic influences on serotonin activity can also influence decision-making functions in both healthy and clinical populations. Thus, carriers of the ss allele of the 5-HTTLPR gene have shown enhanced attention toward probability cues during the performance of a risky choice task compared with carriers of the ll allele (Roiser et al, 2006), fewer risky choices in a financial investment task (Kuhnen and Chiao, 2009), and smaller impairments in probabilistic reversal tasks following tryptophan depletion (Finger et al, 2007), especially under aversive reinforcement conditions (Blair et al, 2008). Notably, female ss carriers have also shown poorer decisions under conditions of decision ambiguity (Stoltenberg and Vandever, 2010), poor performance of the Iowa gambling game compared with carriers of the ll allele (Homberg et al, 2008), and especially in OCD samples (da Rocha et al, 2008).

These findings reflect other evidence that the effects of tryptophan depletion upon cognitive functions are enhanced in female compared with male participants (Harmer et al, 2003). At the current time, it is unclear whether the apparently larger impact of the short-form allele in the decision making of female participants reflects gender-related differences in choice behavior that enhance the effects of the transporter genotype, or gender-related differences in the functionality of the serotonin system (Nishizawa et al, 1997). However, observations from neurological patients and fMRI experiments in healthy adults suggest that decision-making functions may also be lateralized toward the right hemisphere in male participants, but toward the left hemisphere in female participants (Bolla et al, 2004; Tranel et al, 2005), perhaps reflecting the use of different cognitive strategies (holistic/gestalt-type processing vs analytic, verbal) across the genders (Tranel et al, 2005). This raises the possibility that genotypic variation of serotonergic function (eg, involving the short-form allele of the 5-HTTLPR gene) has an apparently greater impact in female participants through altered modulation of their preferred strategies for making value-based choices.

Clarifying these relationships will be constrained by complex relationships between genotypic variation in 5-HTTLPR and underlying serotonin pre- and post-synaptic activity (David et al, 2005; Reist et al, 2001; Smith et al, 2004; Williams et al, 2003), whereas the mechanisms that mediate between genotype and individual differences in decision-making function remain unknown. It is also unclear why carriers of the short-form allele should show worse performance of the Iowa Gambling Task while being more sensitive to aversive stimuli that presumably include bad decision outcomes (Hariri et al, 2005). Perhaps some of these varied phenomena reflect other genotypic influences, including, for example, the finding that carriers of the TPH2 haplotype, associated with reduced tryptophan hydroxylase activity, show reduced risky choice of large, unlikely over small, likely reinforcers (Juhasz et al, 2010).

Serotonin and Risky Choice

Given the clinical association between altered serotonin function and risky behaviors, surprisingly few experiments have examined the role of serotonin in risky choices associated with larger rewards. Mobini et al (2000a, 2000b) found that while global serotonergic depletions altered delay discounting (as one might expect), it did not alter probabilistic discounting, suggesting that serotonin may only influence responses to risk where choice of the less likely options can be reinforced with larger rewards (Mobini et al, 2000a). Long et al (2009) tested for the effects of tryptophan depletion using a tightly controlled paradigm in which macaques were given choices between safe behavioral options and risky behavioral options that carried variable pay-offs (Long et al, 2009). Tryptophan depletion reduced choice of the most probably rewarded option—as Rogers et al (1999a, 1999b) had found originally in humans—but, critically, this behavior was associated with a reduction in the magnitude of the pay-offs needed to elicit switches from choices of the safe option toward the risky option, consistent with an induced increase in preference for risky decisions (Long et al, 2009).

Two recent experiments with human subjects confirm that serotonin activity may also influence risky decisions, specifically those involving aspects of non-normative choice. First, 14 days of tryptophan supplements, enhancing serotonin activity, reduced the reflection effect, manifested as shifts between risk-seeking choices (when confronted with certain losses and options associated with larger losses or no losses) and risk-avoidant choices (when confronted with certain gains and options associated with larger gains or no gains at all) (Murphy et al, 2009). In addition, tryptophan supplements increased choices of gambles with small negative expected values, raising the possibility that serotonin mediates aspects of loss aversion. Second, shifts between risk-avoidant behavior when dilemmas are framed in terms of gains and risk-seeking behavior when framed in terms of losses (Tversky and Kahneman, 1981) were enhanced in carriers of the ss allele of the 5-HTTLPR genotype, such that their few risk-seeking selections for positively framed dilemmas involved stronger functional interactions between the amygdala and the prefrontal cortex (Roiser et al, 2009).

Finally, while serotonin may modulate risky choices through its influences upon learning from negative outcomes (Evers et al, 2005; see below), it is also likely that serotonin can alter choice behaviors via processing of positive reward signals when making decisions, consistent with the evidence of functional interactions between midbrain dopamine neurones and the serotonergic neurones of the raphe (Nakamura et al, 2008) and evidence that serotonin activity can facilitate aspects of dopamine-mediated reward processing (Aronson et al, 1995). Thus, Rogers et al (2003) showed that tryptophan depletion reduced healthy volunteers' attentional processing of reward cues, but not probability or punishment cues, when deciding between risky actions associated with uncertain outcomes, highlighting a role for serotonin in auxiliary cognitive activities—this time, attentional—in decision-making functions (Rogers et al, 2003).

COMPUTATIONAL MODELS OF SEROTONIN

Computational accounts of the role of serotonin in decision making and, by implication, of its role in reinforcement learning, are much less well developed than those of dopamine, and have not been systematically tested. In rats, global depletions of serotonin have been shown to increase the value of a hyperbolic discounting parameter for the value of future rewards (Mobini et al, 2000a; Wogar et al, 1993). In humans, Tanaka et al (2004, 2007) have articulated a model in which serotonin is proposed to modulate reinforcement value signals across different time scales within the ventral striatum and the dorsal striatum, respectively. Healthy adults completed a delayed-discounting procedure as part of an fMRI protocol following ingestions of amino-acid drinks instantiating three conditions: tryptophan depletion (leading to short-term reduced serotonin activity in the usual way; as above), acute tryptophan loading (intended to produce short-term increased central serotonin activity), and a neutral tryptophan-balanced amino acid preparation.
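The hyperbolic discounting parameter mentioned for the rat experiments can be illustrated with the standard hyperbolic form, in which a reward of a given amount delivered after a delay is valued at amount / (1 + k × delay). The sketch below is illustrative only: the reward amounts, delays, and values of k are hypothetical and are not taken from Mobini et al (2000a) or Wogar et al (1993).

```python
def hyperbolic_value(amount, delay, k):
    """Hyperbolically discounted value: amount / (1 + k * delay)."""
    if delay < 0 or k < 0:
        raise ValueError("delay and k must be non-negative")
    return amount / (1.0 + k * delay)


def prefers_larger_later(small, small_delay, large, large_delay, k):
    """True if the larger, later reward has the higher discounted value."""
    return hyperbolic_value(large, large_delay, k) > hyperbolic_value(
        small, small_delay, k
    )


# Hypothetical choice: 1 unit after 1 time step vs 3 units after 10 time steps.
shallow = prefers_larger_later(1.0, 1, 3.0, 10, k=0.05)  # shallow discounting
steep = prefers_larger_later(1.0, 1, 3.0, 10, k=2.0)     # steep discounting
print(shallow, steep)  # True False
```

A larger k devalues delayed rewards more steeply, so the same pair of options flips from a preference for the larger, later reward to a preference for the smaller, sooner one.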

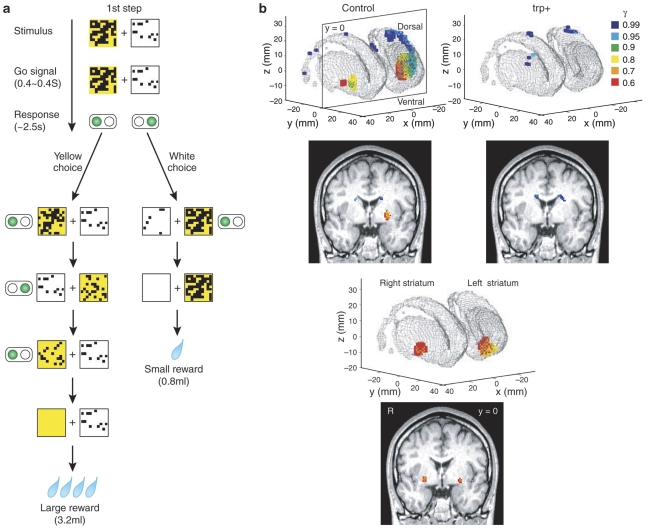

Participants were shown one white square (associated with a small reward) and one yellow square (associated with a large reward), each occluded by variable numbers of black patches, displayed side by side on a screen (Figure 2). Over subsequent trials, participants selected the white or yellow square to remove the occluding black patches progressively until the whole of the square was exposed, at which point they received a liquid reward. The (randomly varying) number of black patches removed at each step was such that the delay before a small reward was usually shorter than the delay before a large reward. Thus, participants needed to choose between the more immediate but small reward (white) and the more delayed but large reward (yellow) by comparing the number of black patches on the two squares. Tanaka et al (2007) modelled the value of anticipated reward at a number of delay-discounted rates.

Figure 2.

Task and striatal value signals at different delays as modelled by Tanaka et al (2007) in three groups of participants who underwent tryptophan depletion, tryptophan loading, and a neutral tryptophan-balanced condition. (a) Task sequence for decision-making task, in which participants seek to remove occluding black squares to obtain rewards at different delays. In this example, choosing the white square would deliver a small amount of juice (0.8 ml) in two steps. Choosing the yellow square delivers a larger amount in four steps. The position of the squares (left or right) was changed randomly at each step. (b) Voxels within the striatum in the three-dimensional mesh surface showing a significant correlation (P<0.001 uncorrected for multiple comparisons, n=12 subjects) with V(t) modelled at different delay running from short to long coded (red:orange:yellow:green:cyan:blue). Red- to yellow-coded voxels, correlated with reward prediction at shorter time scales, are seen located in the ventral part of the striatum, whereas the green- to blue-coded voxels, correlated with reward prediction at longer time scales, are located in the dorsal part of the striatum (dorsal putamen and caudate body).

Although tryptophan depletion did not enhance preferences for smaller, sooner reward over the larger, delayed reward—as it had in a previous experiment (Schweighofer et al, 2008)—it enhanced BOLD signals associated with short-term value signals within the ventral striatum while tryptophan loading enhanced the BOLD signal associated with longer-term reward valuations within the dorsal striatum (Figure 2) (Tanaka et al, 2007). These data suggest that serotonin modulates the delay-dependent component of values within learning systems that mediate goal-directed vs habit-based action selection (Balleine and O'Doherty, 2010).
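The idea of valuing the same anticipated reward at several discount rates can be sketched with simple exponential discounting, where a reward that is a given number of steps away is worth reward × gamma^steps. This is a simplified illustration rather than the model of Tanaka et al (2007): the set of discount factors and the size of the large reward are hypothetical, and only the small reward (0.8 ml in two steps) follows the example in the Figure 2 caption.

```python
def discounted_value(reward, steps_to_reward, gamma):
    """Exponentially discounted value of a reward that is some steps away."""
    return reward * gamma ** steps_to_reward


GAMMAS = [0.3, 0.6, 0.9]  # hypothetical short to long time scales
SMALL = (0.8, 2)          # (ml of juice, steps), as in the Figure 2 example
LARGE = (3.2, 4)          # four steps as in Figure 2; the amount is assumed

preferences = {}
for gamma in GAMMAS:
    v_small = discounted_value(*SMALL, gamma)
    v_large = discounted_value(*LARGE, gamma)
    preferences[gamma] = "large" if v_large > v_small else "small"
    print(f"gamma={gamma}: small={v_small:.3f} large={v_large:.3f}")
```

At the shortest time scale the smaller, sooner reward carries the higher value, whereas at longer time scales the larger, delayed reward does. A value signal computed at one discount factor therefore dissociates from a signal computed at another, which is what allows correlates of short-term and long-term reward prediction to be sought separately.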

The proposal that serotonin codes reward value across different delays, or reinforcement horizons, is also implicit in other models. Adopting the opponency between dopamine and serotonin into models of temporal difference learning, Daw et al (2002) have suggested that a tonic serotonin signal codes the long-run average reward rate of actions (as opposed to the phasic dopamine signal of reward prediction errors), whereas a phasic serotonin signal codes the punishment prediction error (as opposed to the signal of average punishment rate provided by tonic dopamine activity) (Daw et al, 2002). Relatedly, and incorporating the always salient role of altered serotonin function in lapses of behavioral control, Dayan and Huys (2008) have proposed that failures of behavioral control following reductions in serotonin activity, which can be induced experimentally through tryptophan depletion or identified clinically through, for example, the dysphoric states associated with depression (Smith et al, 1997), can produce pervasive increases in the size of negative prediction errors that, in turn, engender negative affective states in vulnerable individuals (Dayan and Huys, 2008).

Experiments with humans confirm that manipulations of serotonin influence both learning from aversive events and adjusting behavior appropriately. Tryptophan depletion alters stimulus-reward learning as instantiated in simple or probabilistic reversal discrimination paradigms (Cools et al, 2008a; Rogers et al, 1999a). Both tryptophan depletion and single doses of the SSRI citalopram (perhaps acting pre-synaptically to reduce serotonin release), impair probabilistic reversal learning, possibly by increasing the signal evoked by bad outcomes within the ACC region and precipitating inappropriate switches away from the maximally rewarded response option (Chamberlain et al, 2006; Evers et al, 2005).

On the other hand, serotonin may also modulate learning depending upon the delays between actions and their outcomes. Tanaka et al (2009) asked groups of healthy adults to complete a monetary decision-making task in which choices were associated with good and bad outcomes delivered immediately or after an interval of three trials. Separate groups of subjects participated in the three conditions of tryptophan depletion, tryptophan loading, and tryptophan balance. Their performance was captured using a temporal difference reinforcement learning model that included a term for the time scales that constrained participants' ability to link good and bad outcomes efficiently to their behavioral choices (as an ‘eligibility trace'). Tryptophan depletion specifically retarded learning about delayed bad outcomes. As there were no other changes in learning about immediate bad outcomes or immediate or delayed good outcomes, these data highlight serotonin's role in learning about aversive outcomes at longer time intervals, perhaps contributing to problems with behavioral control under aversive conditions (Blair et al, 2008; Tanaka et al, 2009).
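The role of an eligibility trace in linking a delayed outcome to the choice that produced it can be shown with a deliberately stripped-down sketch. It keeps only the trace mechanism and omits the rest of the temporal difference model used by Tanaka et al (2009); the function name, the update rule (outcome weighted directly by the trace), and all parameter values are assumptions made for illustration.

```python
def trace_weighted_learning(choices, outcomes, n_actions, learning_rate, trace_decay):
    """Update action values with decaying eligibility traces.

    On every trial all traces decay by trace_decay, the chosen action's
    trace is incremented, and the outcome delivered on that trial is
    credited to each action in proportion to its current trace.
    """
    values = [0.0] * n_actions
    traces = [0.0] * n_actions
    for choice, outcome in zip(choices, outcomes):
        traces = [trace_decay * e for e in traces]
        traces[choice] += 1.0
        values = [v + learning_rate * outcome * e for v, e in zip(values, traces)]
    return values


# Action 0 is chosen once; its bad outcome (-1) arrives three trials later.
choices = [0, 1, 1, 1]
outcomes = [0.0, 0.0, 0.0, -1.0]
long_trace = trace_weighted_learning(choices, outcomes, 2, 0.1, trace_decay=0.9)
short_trace = trace_weighted_learning(choices, outcomes, 2, 0.1, trace_decay=0.3)
print(f"action 0 value, slow trace decay: {long_trace[0]:.4f}")
print(f"action 0 value, fast trace decay: {short_trace[0]:.4f}")
```

When the trace decays quickly, almost none of the delayed bad outcome is attributed to the earlier choice, so little is learnt about it. Note that the intervening choices of action 1 also absorb some of the blame in this toy version, which is a known limitation of simple trace-based credit assignment.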

Serotonin and Social Choice

Serotonin probably plays a pivotal role in social choices. Evidence gleaned from clinical populations suggests that the mechanisms through which antidepressants have their therapeutic effects can include improvements in social function (Tse and Bond, 2002a, 2002b), whereas use of drugs such as 3,4-methylenedioxymethamphetamine that can enhance serotonin activity also heighten the reward value of social contact (Parrott, 2004). Laboratory experiments involving healthy adult volunteers show that treatments that augment serotonin activity (eg, administration of SSRIs) can influence psychometric and task-based measures of pro-social behaviors (Knutson et al, 1998; Raleigh et al, 1980; Young and Leyton, 2002), with additional indications that genotype variation in the 5-HTTLPR mediates attention toward social rewards (Watson et al, 2009).

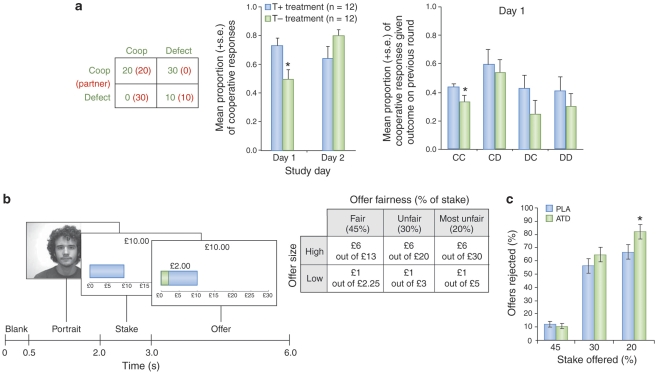

Social decision making has been investigated using a number of game theoretic models that offer ways to understand how values are used in social exchanges (Behrens et al, 2009; Kishida et al, 2010; Yoshida et al, 2008) and there is now accumulating evidence that serotonin plays a significant role in modulating social exchanges, as well as their appraisal in terms of fairness and reciprocity. In the first experiment of this kind, Wood et al (2006) showed that tryptophan depletion reduces cooperative responses of healthy (never-depressed) adults while playing an iterated, sequential Prisoner's Dilemma game on the first occasion that participants played the game (day 1; see Figure 3). This result is especially intriguing as the social partner in the game played a strict tit-for-tat strategy (Wood et al, 2006). Tit-for-tat strategies are highly effective in eliciting cooperative behaviors through their clarity and the capacity to forgive occasional failures of cooperation (Axelrod and Hamilton, 1981). Therefore, this represents a strong test of whether serotonin activity might influence cooperative responding. Critically, tryptophan-depleted participants also failed to show an enhanced probability of future cooperative behavior following exchanges that involved mutually cooperative outcomes (Figure 3), suggesting that temporarily reduced serotonin activity diminishes the reward value attributed to mutual cooperation (Wood et al, 2006).
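The tit-for-tat strategy played by the social partner can be written down in a few lines. The sketch below assumes the textbook form of the strategy (cooperate on the first round, then copy the other player's previous move); the exact ordering of moves in the sequential game used by Wood et al (2006) may differ, and the function names are hypothetical.

```python
def tit_for_tat(participant_history):
    """Cooperate first, then copy the participant's previous move."""
    return "C" if not participant_history else participant_history[-1]


def partner_responses(participant_moves):
    """Tit-for-tat replies to a sequence of participant moves ('C' or 'D')."""
    responses = []
    for round_index in range(len(participant_moves)):
        # The partner only sees moves made before the current round.
        responses.append(tit_for_tat(participant_moves[:round_index]))
    return responses


moves = ["C", "D", "C", "C"]
replies = partner_responses(moves)
print(replies)  # ['C', 'C', 'D', 'C']
```

The single defection in round two is punished once, in round three, and cooperation resumes as soon as the participant cooperates again. This is the sense in which the strategy is both clear and forgiving.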

Figure 3.

Changes in social behavior consequent to tryptophan depletion in healthy control adults. (a) Pay-off matrix for the four outcomes in the iterated, sequential Prisoner's Dilemma (PD) game used by Wood et al (2006). Mean proportion of cooperative response following tryptophan depletion (T−) and the control treatment (T+) on the first and second study days. Conditional probability of making a cooperative choice given the four possible outcomes of immediately previous rounds of the game (CC=participant cooperates–partner cooperates; CD=participant cooperates–partner defects; DC=participant defects–partner cooperates; DD=participant defects–partner defects). (b) Example task display for the single-shot Ultimatum Game used by Crockett et al (2008), with combination of fair/unfair offers for high/low monetary amounts. Mean percentage of fair/unfair offers rejected following tryptophan depletion (ATD) and control treatment (placebo). *p=0.01 difference between treatments.

Serotonin can also influence social choices involving more complicated notions of fairness as instantiated by the Ultimatum Game (UG). In the UG, one social partner (the ‘proposer') offers to split an amount of money with the recipient, who is asked to accept or reject on the basis that acceptance means that the recipient and the proposer receive the offered amount and the remainder, respectively, whereas rejection ensures that neither partner receives anything. Substantial evidence indicates that people will act irrationally (in the sense of forgoing certain gains) by rejecting what are perceived as unfair offers (eg, of less than 30%) (Guth et al, 1982). Such rejection of offers in the UG is mediated by activity within the ACC and the insula (Sanfey et al, 2003) and the right prefrontal cortex (Knoch et al, 2006; Koenigs and Tranel 2007). Tryptophan depletion increased the number of unfair offers rejected (Crockett et al, 2008), suggesting that serotonin mediates social decisions that center around notions of fairness. Convergent evidence is provided by observations that the number of rejections of unfair offers is associated with low serotonin platelet activity (Emanuele et al, 2008). Finally, the effects of tryptophan depletion on rates of rejection of unfair offers and delay discounting have been shown to correlate, suggesting a common neural mechanism (Crockett, in press).
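The pay-off rule of the Ultimatum Game, and the sense in which rejection forgoes a certain gain, can be expressed as a short sketch. The stake and offer used here are hypothetical and the function name is an assumption; only the accept/reject rule follows the standard description of the game.

```python
def ultimatum_payoffs(stake, offer, accepted):
    """Return (proposer_payoff, recipient_payoff) for one Ultimatum Game round."""
    if not 0 <= offer <= stake:
        raise ValueError("offer must lie between 0 and the stake")
    if accepted:
        return (stake - offer, offer)  # proposer keeps the remainder
    return (0, 0)                      # rejection leaves both with nothing


# Hypothetical unfair offer: 2 out of a stake of 10 (20% of the total).
if_accepted = ultimatum_payoffs(stake=10, offer=2, accepted=True)
if_rejected = ultimatum_payoffs(stake=10, offer=2, accepted=False)
print(if_accepted, if_rejected)  # (8, 2) (0, 0)
```

Rejecting the offer costs the recipient the 2 units that acceptance would have guaranteed, which is why rejection is treated as non-normative in purely monetary terms even though it also removes the proposer's larger share.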

Summary

To date, the vast majority of experiments testing the influence of serotonin in human decision making have used tryptophan depletion or, occasionally, tryptophan supplementation. These experiments show that manipulations of serotonin influence decision making in a number of overlapping domains as exemplified by consistent effects in probabilistic reversal learning and choices involving social reinforcers. The effects of genotypic variation in the serotonin transporter are mixed and much less consistent than the most comparable experiments involving, for example, the DRD2 genotype. Strikingly, the application of formal models of reinforcement learning (paralleling an earlier literature in rats; Mobini et al, 2000b) provides strong evidence that serotonin helps integrate delays into the value of rewards within the striatum, as well as playing a role in editing, in a time-dependent manner, how much is learnt about bad outcomes (as indicated by Tanaka and co-workers in their elegant experiments). The idea that serotonin underpins multi-faceted aspects of outcome values also highlights its probable involvement in non-normative aspects of human choice, including shifts between risk-averse and risk-seeking behaviors, and with social decisions where rewards can involve broader affective, as well as motivational influences (see below).

DOPAMINE/SEROTONIN INTERACTIONS IN DECISION MAKING

To date, there have been very few direct comparisons of the role of dopamine and serotonin in the decision-making behavior of any species (see Campbell–Meiklejohn et al, in press). As noted above, Denk et al (2005) report that administration of pCPA impaired delay discounting in rats but not effort-based decision making, whereas administration of haloperidol produced the reverse pattern of behavioral changes. Using an analog of the Iowa Gambling Task, Zeeb et al (2009) explored the effects of systemic dopaminergic and serotonergic manipulations on gambling-like behaviors in rats. The administration of amphetamine and the 5-HT1A receptor agonist 8-OH-DPAT (acting pre-synaptically) reduced the selection of optimal responses that maximized reward opportunities per unit time. By contrast, administration of the D2 receptor antagonist, eticlopride, improved task performance, suggesting that serotonin and dopamine may play different roles in decisions involving degrees of risk and mixed outcomes (Zeeb et al, 2009).

In the human context, a few studies have examined the effects of genotypes that influence dopamine and serotonin function on valuation and decision-making measures. Zhong et al (2009) cleverly examined genotypic variation in the dopamine and serotonin transporter and risk attitudes in 350 subjects using a simple elicitation procedure. This study found that carriers of the 9-repeat allele of the DAT1 gene (that results in lowered extracellular dopamine levels) show more risk-seeking choices when deciding about gains, whereas carriers of the 10-repeat allele of the STin2 gene (resulting in higher extracellular serotonin levels) show more risk seeking for losses (Zhong et al, 2009). Consistent with this, these genotypes contribute to risky decisions as evidenced by observations that carriers of the 7-repeat allele of the DRD4 gene took more risks than individuals without this allele, whereas carriers of the s/s genotype of the 5-HTTLPR gene made 28% fewer risky choices than carriers of the s/l and l/l genotypes (Kuhnen and Chiao, 2009). It is possible that these genotypes confer risk for clinical disorders associated with decision-making problems such as gambling (Comings et al, 1999), as indicated by observations that administration of L-DOPA increased the number of risky actions in carriers of the 7-repeat allele of the DRD4 gene (with no significant history of gambling behavior) compared with non-carriers (Eisenegger et al, 2010).

FUTURE DIRECTIONS FOR RESEARCH

At the current time, formal computational models of dopamine and serotonin in value-based decision making have not been extensively tested using direct pharmacological challenges, or even individual genotypic differences, in human subjects. Nonetheless, the available evidence is broadly in line with what we know about the role of dopamine function in reinforcement learning as derived from neurophysiological studies in primates and inferred from fMRI experiments in human subjects.

Notwithstanding the problems associated with combining drug treatments that have variable physiological and pharmacological effects (Mitsis et al, 2008), future priorities include the need to examine how dopamine modulation influences the wider constituent components of value (such as uncertainty and delay) in human choice and their multiplicative combination with other features of prospects to be manifested as ‘risk'. In particular, we need to know more about how the dopamine (and serotonin) systems deploy different kinds of value representations to make choices depending upon decision makers' present behavioral priorities and the relative reliability of the information available to them.

Serotonin and the Idea of ‘Appraised' Values

The absence of direct comparisons between the effects of dopamine and serotonin challenges in human subjects means that it is also difficult at the current time to identify differences in the way these neuromodulators influence decision-making function. However, I propose that serotonin exerts a pervasive influence upon decision making by mediating the appraised meanings attached to good and bad decision outcomes. Although speculative, there are several strands of evidence that highlight the potential for serotonin to influence human choice in this way.

First, the negative cognitive biases associated with depression are likely influenced by serotonergic (and noradrenergic) rather than dopaminergic mechanisms, and negative cognitive biases can be modelled by the temporary reduction in serotonin activity achieved by tryptophan depletion in healthy non-clinical adults (Harmer, 2008). Moreover, treatments that increase serotonin activity can have the effect of reversing these biases so that they can enhance the processing of positive emotional signals and memory for positive information (Harmer et al, 2004). Thus, disturbances in serotonin function, achieved by pharmacological challenge or through psychopathology, may influence the parameters that determine outcome values in reinforcement learning models, including longer-term reward rates (Daw et al, 2002) or delay parameters (Doya, 2008), precisely by influencing the cognitive appraisal of dilemmas giving rise to these outcomes and the appraisal of the decision outcomes themselves.
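To make the notion of such parameters concrete, the following Python sketch shows, in generic textbook form, how a delay (discount) parameter and a longer-term average reward rate can enter the computation of outcome values. It is an assumption-laden illustration and not a reproduction of the specific models in the cited papers; the function names, the symbol names (`gamma`, `avg_reward`) and the numerical values are all hypothetical.

```python
def discounted_value(reward, delay, gamma):
    """Exponentially discounted value of a reward delivered after `delay` steps.

    `gamma` (between 0 and 1) is the discount factor: smaller values mean
    that delayed rewards lose value more steeply.
    """
    return reward * gamma ** delay


def average_reward_td_error(reward, avg_reward, v_current, v_next):
    """Prediction error in an average-reward formulation of TD learning.

    The running estimate of the long-term reward rate (`avg_reward`) is
    subtracted from each obtained reward before the value difference is added.
    """
    return reward - avg_reward + v_next - v_current


def prefers_delayed(gamma, small=5.0, small_delay=1, large=10.0, large_delay=5):
    """True if the larger, later reward has the higher discounted value."""
    sooner = discounted_value(small, small_delay, gamma)
    later = discounted_value(large, large_delay, gamma)
    return later > sooner


# With shallow discounting the larger, later reward wins; with steep
# discounting the smaller, sooner reward wins.
patient_choice = prefers_delayed(gamma=0.9)
impulsive_choice = prefers_delayed(gamma=0.6)
```

In this toy example, changing `gamma` alone reverses the preference between a smaller, sooner and a larger, later reward, and raising `avg_reward` makes the same obtained reward yield a more negative prediction error. Either change would alter choice without any change in the outcomes themselves, which is the sense in which a shift in appraisal could be expressed through model parameters.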

Moreover, if serotonin activity can influence the activity of positive vs negative cognitive biases, it should not be surprising that tryptophan depletion and other manipulations that enhance such biases find greater expression in the processing of negative choice outcomes (Cools et al, 2008b; Dayan and Huys, 2008), as aversive events more frequently warrant reappraisal. Indeed, this proposal is reminiscent of an older theory suggesting that activity of the dorsal raphe nucleus might mediate coping responses to such events (Deakin, 1998; Deakin and Graeff, 1991), and that variation in the functioning of the ascending serotonin system confers vulnerability to psychological disorders involving depression and anxiety (Deakin and Graeff, 1991). Accordingly, variability in the way that serotonin furnishes the appraised meaning of choices and their outcomes may contribute to disorders involving altered action selection.

Second, the above perspective is consistent with other data showing that serotonergic innervation of hippocampal structures mediates learning about the context of aversive and emotionally significant events (Wilkinson et al, 1996). As the meaning of dilemmas and their outcomes can be altered by their context (Tversky and Kahneman, 1981), one might expect that manipulations that depress serotonin activity, such as tryptophan depletion, can have variable results depending upon the salient meaning of the choices and outcomes promoted by their environmental/experimental context (Rogers et al, 1999b; Talbot et al, 2006).

Third, the potential of serotonin to mediate the appraisal of decisions and outcomes may explain its apparently central role in determining social choices since these are quintessentially subject to interpretations in terms of their interpersonal significance. Appraisals of social choices frequently incorporate complex judgments about others' intentions that might promote the development of cooperative relationships or judgments about fairness (Frith and Singer, 2008; Singer and Lamm, 2009). Consequently, we might expect that the deleterious effects of tryptophan depletion on the development of social cooperative relationships (Wood et al, 2006) and the enhanced rejection of what are deemed to be unfair offers in a social exchange (Crockett et al, 2008) should be accompanied by changes in the social appraisals that influence these behaviors. Recently, Behrens et al (2009) have noted that social behaviors are supported by at least two networks of neural systems: one mediating reinforcement learning and another that supports ‘mentalizing’ operations or inferences about the intentions and mental states of social partners involving, for example, activity in dorsomedial prefrontal areas, the temporoparietal junction and superior temporal regions (Frith, 2007). I propose that serotonin activity can influence social choices by modulating the evaluative functions over social outcomes within this wider circuitry.

This is not to say that dopamine does not influence the representation of decision outcomes within cortical regions, or that serotonin activity cannot influence value representations within the sub-cortical or striatal sites that are also subject to dopaminergic modulation. In both cases, we know these things to be true (Schweimer and Hauber, 2006; Tanaka et al, 2007). However, it is striking that single doses of the dopamine D2 agonist, bromocriptine, in healthy volunteers, as well as L-DOPA administration in patients with Parkinson's disease, disrupted BOLD signals within the ventral striatum following bad outcomes as part of probabilistic reversal learning or prediction tasks (Cools et al, 2007, 2009) while, by contrast, tryptophan depletion enhanced BOLD signals within the dorsomedial prefrontal cortex following negative outcomes (Evers et al, 2005). Therefore, future research will need to identify the broader psychological processes influenced by serotonin compared with dopamine in computing context-dependent values.

RD Rogers has no biomedical financial interests or potential conflicts of interest.

References

- Adinoff B, Rilling LM, Williams MJ, Schreffler E, Schepis TS, Rosvall T, et al. Impulsivity, neural deficits, and the addictions: the ‘oops’ factor in relapse. J Addict Dis. 2007;26(Suppl 1):25–39. doi: 10.1300/J069v26S01_04.

- Aronson SC, Black JE, McDougle CJ, Scanley BE, Jatlow P, Kosten TR, et al. Serotonergic mechanisms of cocaine effects in humans. Psychopharmacology (Berl). 1995;119:179–185. doi: 10.1007/BF02246159.

- Aston-Jones G, Cohen JD. Adaptive gain and the role of the locus coeruleus–norepinephrine system in optimal performance. J Comp Neurol. 2005;493:99–110. doi: 10.1002/cne.20723.

- Axelrod R, Hamilton WD. The evolution of cooperation. Science. 1981;211:1390–1396. doi: 10.1126/science.7466396.

- Balleine BW, O'Doherty JP. Human and rodent homologies in action control: corticostriatal determinants of goal-directed and habitual action. Neuropsychopharmacology. 2010;35:48–69. doi: 10.1038/npp.2009.131.

- Baumgartner T, Heinrichs M, Vonlanthen A, Fischbacher U, Fehr E. Oxytocin shapes the neural circuitry of trust and trust adaptation in humans. Neuron. 2008;58:639–650. doi: 10.1016/j.neuron.2008.04.009.

- Bazanis E, Rogers RD, Dowson JH, Taylor P, Meux C, Staley C, et al. Neurocognitive deficits in decision-making and planning of patients with DSM-III-R borderline personality disorder. Psychol Med. 2002;32:1395–1405. doi: 10.1017/s0033291702006657.

- Bechara A, Dolan S, Denburg N, Hindes A, Anderson SW, Nathan PE. Decision-making deficits, linked to a dysfunctional ventromedial prefrontal cortex, revealed in alcohol and stimulant abusers. Neuropsychologia. 2001;39:376–389. doi: 10.1016/s0028-3932(00)00136-6.

- Bechara A, Tranel D, Damasio H, Damasio AR. Failure to respond autonomically to anticipated future outcomes following damage to prefrontal cortex. Cereb Cortex. 1996;6:215–225. Seminal demonstration that focal lesions of the ventromedial prefrontal cortex are associated with clear changes in value-based decision making optimized over the longer term.

- Behrens TE, Hunt LT, Rushworth MF. The computation of social behavior. Science. 2009;324:1160–1164. doi: 10.1126/science.1169694.

- Berthet A, Porras G, Doudnikoff E, Stark H, Cador M, Bezard E, et al. Pharmacological analysis demonstrates dramatic alteration of D1 dopamine receptor neuronal distribution in the rat analog of L-DOPA-induced dyskinesia. J Neurosci. 2009;29:4829–4835. doi: 10.1523/JNEUROSCI.5884-08.2009.

- Besson M, Belin D, McNamara R, Theobald DE, Castel A, Beckett VL, et al. Dissociable control of impulsivity in rats by dopamine d2/3 receptors in the core and shell subregions of the nucleus accumbens. Neuropsychopharmacology. 2010;35:560–569. doi: 10.1038/npp.2009.162.

- Blair KS, Finger E, Marsh AA, Morton J, Mondillo K, Buzas B, et al. The role of 5-HTTLPR in choosing the lesser of two evils, the better of two goods: examining the impact of 5-HTTLPR genotype and tryptophan depletion in object choice. Psychopharmacology (Berl). 2008;196:29–38. doi: 10.1007/s00213-007-0920-y.

- Bolla KI, Eldreth DA, Matochik JA, Cadet JL. Sex-related differences in a gambling task and its neurological correlates. Cereb Cortex. 2004;14:1226–1232. doi: 10.1093/cercor/bhh083.

- Booij L, Tremblay RE, Leyton M, Seguin JR, Vitaro F, Gravel P, et al. Brain serotonin synthesis in adult males characterized by physical aggression during childhood: a 21-year longitudinal study. PLoS One. 2010;5:e11255. doi: 10.1371/journal.pone.0011255.

- Brown GL, Goodwin FK, Ballenger JC, Goyer PF, Major LF. Aggression in humans correlates with cerebrospinal fluid amine metabolites. Psychiatry Res. 1979;1:131–139. doi: 10.1016/0165-1781(79)90053-2.

- Camara E, Kramer UM, Cunillera T, Marco-Pallares J, Cucurell D, Nager W, et al. The effects of COMT (Val108/158Met) and DRD4 (SNP -521) dopamine genotypes on brain activations related to valence and magnitude of rewards. Cereb Cortex. 2010;20:1985–1996. doi: 10.1093/cercor/bhp263.

- Campbell-Meiklejohn D, Wakeley J, Herber TV, Cook J, Scollo P, Kar Ray M, et al. Serotonin and dopamine play complementary roles in gambling to recover losses: Implications for pathological gambling. Neuropsychopharmacology (in press).