Abstract

While health care facilities recognize the need for dedicated picture archiving and communication system (PACS) staff at the time of the initial implementation of PACS, they often do not plan for ongoing or increasing PACS support needs as a PACS matures. This article reviews trends in a health care system’s PACS support data over 4 years to show how PACS support needs evolve over time. PACS support items were logged and categorized over this period, and the health care system used them to become more proactive in system support and to adjust staffing levels accordingly. This article details how PACS support needs change over the life of a PACS installation and offers a model for health care facilities planning for future PACS support needs.

Key words: PACS, digital imaging, support

Background

As the picture archiving and communication system (PACS) has grown to become a more mature health care information technology, the importance of having dedicated PACS support staff has been recognized.1 However, numerous job titles and descriptions are applied to PACS support personnel,2 and the responsibilities carried out by these individuals can vary significantly from site to site.3,4 In an attempt to bring some level of standardization to the industry, PACS certification has emerged in recent years.5,6 While certification assists health care facilities in their selection of PACS support staff, there is little information to help facilities determine how many individuals are needed to support their PACS.7 Furthermore, even less information is available to help facilities understand how their support needs may change after a PACS is installed.

With today’s economic downturn,8 it is essential that health care facilities correctly staff their PACS support functions to avoid unnecessary labor costs while ensuring that users’ needs are met and system uptime is not compromised. PACS support staffing errors can be very costly for health care facilities. Facilities often pay for very expensive vendor support contracts because they have not staffed their PACS support activities properly. Because these facilities lack specific data on their support needs, they are unable to cut these costs by hiring and staffing PACS support appropriately in-house.

Given the potentially high cost of incorrectly staffing PACS support, it is surprising that the PACS literature contains little information on how to staff a PACS beyond initial implementation. Likewise, very little has been published on the specific issues health care organizations encounter once a PACS goes live, or on the staffing required to address those issues as they arise. Such information could help facilities better understand how PACS support staffing may change over the life of the PACS and staff appropriately without incurring unnecessary costs.

The objective of this article is to help address this gap in the literature by examining one health care system’s PACS support history over 4 years and how staffing was adjusted in response to trends in that support data.

Methods

The Main Line Health System (MLHS) implemented a single-enterprise PACS in the summer of 2003. Initially, the system was implemented to support both radiology and invasive and noninvasive cardiology across the entire health system. Later, the system was expanded to support additional departments including perinatology and neurodiagnostics. In 2008, the MLHS PACS archived approximately 550,000 studies and had over 2,500 users on the system.

Beginning at implementation, reported PACS issues were recorded and categorized. Issues were reported by end users through a centralized help desk or directly to the PACS support team. In both cases, the issues were logged in a computerized help desk system. The PACS support team categorized all issues regardless of how they were reported (e.g., if a user reported a problem as an application issue but the PACS team determined that it was related to user training, the PACS team changed the category of the ticket to application training). Thus, issues that were initially miscategorized were correctly categorized for the data used in this study. The PACS support team consisted of three individuals at the beginning of the study period. A fourth individual was added in August 2006 and a fifth individual was added in December 2008.

Tickets were assigned to one of the following categories: application training issues, new user training, application issues, hardware issues, printing issues, network-related issues, modality-related issues, interface issues (i.e., radiology information system [RIS], speech recognition, advanced visualization), and other items.
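The recategorization rule described above (the PACS team's determination overrides the category the user reported) can be sketched as a small data model. This is a minimal illustration, assuming a ticket stores both the reported and the reviewed category; the class and field names are hypothetical and do not describe the MLHS help desk system.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Category(Enum):
    """The nine ticket categories listed in the Methods section."""
    APPLICATION_TRAINING = "application training"
    NEW_USER_TRAINING = "new user training"
    APPLICATION = "application"
    HARDWARE = "hardware"
    PRINTING = "printing"
    NETWORK = "network"
    MODALITY = "modality"
    INTERFACE = "interface"  # e.g., RIS, speech recognition, advanced visualization
    OTHER = "other"


@dataclass
class Ticket:
    ticket_id: int
    reported_category: Category             # what the user (or help desk) said
    final_category: Optional[Category] = None  # set after PACS team review

    def recategorize(self, category: Category) -> None:
        """Record the PACS team's determination of the true category."""
        self.final_category = category

    @property
    def category(self) -> Category:
        """Category used for analysis: the team's review wins over the report."""
        if self.final_category is not None:
            return self.final_category
        return self.reported_category
```

For example, a ticket opened as an application issue and later judged to be a training question is counted under training:

```python
t = Ticket(1, Category.APPLICATION)
t.recategorize(Category.APPLICATION_TRAINING)
print(t.category.value)  # application training
```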

On-call (i.e., after-hours) issues were included in overall issue totals; however, they were also tracked separately to investigate on-call coverage trends. An issue was classified as on-call if it was reported between 6 p.m. and 7 a.m. Monday through Friday, or at any time on weekends and holidays.
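The on-call rule can be expressed as a short classification function. This is a sketch under stated assumptions: the holiday set is hypothetical (the article does not list which holidays were observed), and a report at exactly 6:00 p.m. is treated as on-call while one at exactly 7:00 a.m. is not.

```python
from datetime import date, datetime, time
from typing import AbstractSet

# Hypothetical holiday calendar; replace with the facility's actual list.
HOLIDAYS = frozenset({date(2008, 1, 1), date(2008, 12, 25)})

DAY_START = time(7, 0)   # 7 a.m.: regular coverage begins
DAY_END = time(18, 0)    # 6 p.m.: on-call coverage begins


def is_on_call(reported_at: datetime,
               holidays: AbstractSet[date] = HOLIDAYS) -> bool:
    """Return True if a ticket falls inside the on-call window."""
    if reported_at.date() in holidays:
        return True                      # holidays: on-call all day
    if reported_at.weekday() >= 5:      # Saturday = 5, Sunday = 6
        return True                      # weekends: on-call all day
    t = reported_at.time()
    # Weekdays: on-call from 6 p.m. up to, but not including, 7 a.m.
    return t >= DAY_END or t < DAY_START
```

A Wednesday evening report (`datetime(2008, 12, 3, 19, 30)`) is classified as on-call, while a report at 10 a.m. the same day is not.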

For significant system issues that affected a majority of users or all users at a single site, the PACS team followed a standardized process of reporting these issues as quickly as possible to the affected departments and users, to keep users informed of the nature of the issues, provide workarounds where appropriate, and give updates on progress toward resolution. To some degree, this practice reduced duplicate reports beyond the initial ones.

The initial year and a half of data collection is not included in this article, as this was a significant period of learning and expansion of the system. Instead, this article focuses on the reported issue data collected between January 1, 2005 and December 31, 2008, when the system was completely implemented and established and staff were fully trained and routinely using the full capabilities of the PACS.

Results

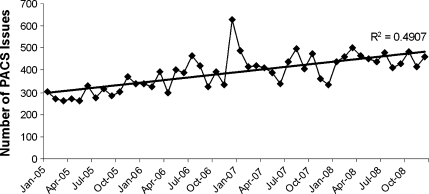

Figure 1 shows the month-by-month number of PACS issues reported from the start of 2005 through the end of 2008, along with a simple linear regression of the data. The linear regression shows a clear upward trend in the volume of issues over the life of the PACS. Between 2005 and 2008, the average monthly issue volume rose by over 47% and the average on-call issue volume rose by over 92%.

Fig 1.

Number of PACS issues addressed by the PACS team on a monthly basis between January 2005 and December 2008.
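The trend line in Figure 1 and the year-over-year comparison can be reproduced with ordinary least squares and a relative-change calculation. This is a minimal sketch: the article does not say which software produced its regression, and the monthly counts used below are hypothetical, not MLHS data.

```python
from statistics import mean


def linear_trend(counts):
    """Ordinary least-squares fit of monthly counts against month index 0..n-1.

    Returns (slope, intercept); a positive slope indicates upward growth.
    """
    if len(counts) < 2:
        raise ValueError("need at least two months of data")
    xs = range(len(counts))
    x_bar, y_bar = mean(xs), mean(counts)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, counts))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def percent_change(old, new):
    """Relative change from old to new, in percent (e.g., 2005 vs 2008 average)."""
    return (new - old) / old * 100.0
```

With hypothetical counts `[300, 310, 320, 330]`, `linear_trend` returns a slope of 10 issues per month and an intercept of 300; `percent_change(200, 300)` returns 50.0.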

Over this period, the mean number of issues reported per month was 390; the standard deviation of 79 reflects the substantial variability of the data over time.

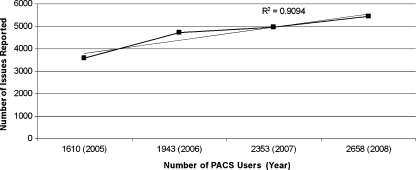

Figure 2 presents the annual number of PACS issues reported versus the total number of system users at the end of each year. As expected, the linear regression shows a strong correlation between the number of issues reported and the number of users on the system.

Fig 2.

Number of PACS issues reported annually versus the total number of PACS users at the end of each year.
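The strength of the relationship in Figure 2 can be quantified with the Pearson correlation coefficient. The sketch below is illustrative only; the article does not report a coefficient, and with just four annual points any such value should be read cautiously.

```python
from math import sqrt
from statistics import mean


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    x_bar, y_bar = mean(xs), mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    syy = sum((y - y_bar) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)
```

Squaring the result gives the coefficient of determination (R²) that spreadsheet trend lines typically display.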

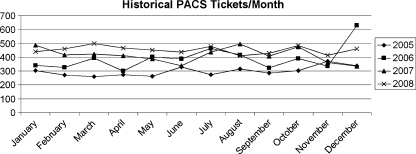

Figure 3 compares the monthly volume of PACS issues across years. As expected, the volume of issues declines into early summer as summer vacations increase. The period from July into August shows some increase in ticket volume, due to new physician residents starting at MLHS in July. Ticket volumes again typically drop off in November and December because of end-of-year holidays and associated vacations. The large number of issues in December 2006 was due to a major system upgrade in which the software was upgraded by two versions, the database was upgraded, and nearly all system hardware was replaced.

Fig 3.

Yearly comparison of PACS issues on a monthly basis.

Figure 4 shows application training issues as a percentage of all PACS issues on a monthly basis over time. Because users were still relatively new to the PACS in early 2005, nearly 18% of all issues were related to application training. This percentage declined over the course of 2005 to under 10% of all issues by the end of the year, and continued to decline in subsequent years to under 4% during the last quarter of 2008. The slight rise in percentage during the first several months of 2007 was related to users learning the new functionality introduced with the system upgrade in December 2006.

Fig 4.

Percentage of monthly PACS issues related to user application training over time.
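Monthly category percentages such as those in Figures 4, 6, and 8 come from dividing each month's count in one category by that month's total. A minimal sketch, assuming tickets are available as (month, category) pairs; the month keys and category labels in the example are hypothetical.

```python
from collections import Counter


def monthly_share(tickets, category):
    """Percentage of each month's tickets that fall in `category`.

    `tickets` is an iterable of (month, category) pairs, where month is any
    sortable key such as "2005-01". Returns {month: percentage}.
    """
    totals = Counter()
    hits = Counter()
    for month, cat in tickets:
        totals[month] += 1
        if cat == category:
            hits[month] += 1
    return {m: 100.0 * hits[m] / totals[m] for m in sorted(totals)}
```

For example, if January has one training ticket out of two and February has one out of four, the result is `{"2005-01": 50.0, "2005-02": 25.0}`.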

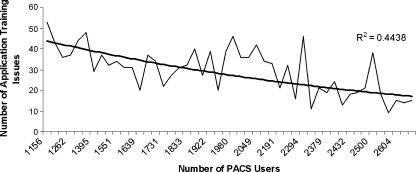

Figure 5 shows the number of PACS application training issues reported each month versus the total number of system users at the end of the month. As evident in the exponential regression, there is a decline in the volume of application training issues even as the number of users on the system grows.

Fig 5.

Number of PACS issues related to user application training versus total number of PACS users at the end of each month.
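The exponential regressions in Figures 5 and 7 fit a curve of the form y = a·e^(bx), where x is the number of users. One common way to fit this curve is least squares on ln(y), sketched below; the article does not state which fitting method its software used, so this is an assumption.

```python
from math import exp, log
from statistics import mean


def fit_exponential(xs, ys):
    """Fit y = a * exp(b * x) by least squares on ln(y).

    Requires every y > 0. Returns (a, b); b < 0 indicates decline
    (as in Figure 5), b > 0 indicates growth (as in Figure 7).
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    if any(y <= 0 for y in ys):
        raise ValueError("exponential fit needs strictly positive y values")
    lys = [log(y) for y in ys]
    x_bar, ly_bar = mean(xs), mean(lys)
    sxy = sum((x - x_bar) * (ly - ly_bar) for x, ly in zip(xs, lys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    b = sxy / sxx
    a = exp(ly_bar - b * x_bar)
    return a, b
```

Because the fit is done on a log scale, months with zero issues in a category cannot be included without adjustment.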

Figure 6 shows application issues as a percentage of all PACS issues on a monthly basis over time. This figure is generally the opposite of Figure 4: as the share of application training issues diminishes, the share of application issues increases. This reflects the transition from end user training to troubleshooting and resolving actual application issues over the life of the PACS.

Fig 6.

Percentage of monthly PACS issues related to the PACS application over time.

Figure 7 presents the number of PACS application issues reported each month versus the total number of system users at the end of the month. As evident in the exponential regression, there is an increase in the number of application issues over time as the number of users on the system grows.

Fig 7.

Number of PACS issues related to the PACS application versus total number of PACS users at the end of each month.

Finally, Figure 8 shows hardware issues as a percentage of all PACS issues on a monthly basis. After a higher-than-normal share of hardware issues in early 2005, the percentage of hardware-related issues settled around a mean of 5.4% (standard deviation = 0.02).

Fig 8.

Percentage of monthly PACS issues related to hardware failures over time.

Because the remaining issue categories each represent smaller and relatively consistent percentages, they are not presented in this article.

In terms of duplicate issues, the data was analyzed for individual days on which the same issue was reported at least three times, regardless of whether these reports came from one or multiple users. In 2005, there were 12 days on which duplicate issues were reported; in 2006, there were 19 days; in 2007, there were 6 days; and in 2008, there were 11 days. The increase in 2006 was attributed to the major system upgrade that took place in December of that year. Given the relatively small number of such days in any year, and the relatively small number of duplicate reports on those days compared to the overall volume of issues reported in any given month or year, the duplication of reported issues did not affect the overall data trends. Duplicate issues reported over more than 1 day were not assessed, given the difficulty of determining retrospectively whether the issues were a continuation of an unresolved issue, a recurrence of a previously resolved issue, or a new issue with the same symptoms.
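The same-day duplicate check described above can be sketched as a count of (day, issue) pairs. This is a minimal illustration, assuming each report already carries an issue key that identifies "the same issue"; how reports were matched in the study is not described, so that key is a hypothetical input.

```python
from collections import Counter
from datetime import date


def duplicate_days(reports, threshold=3):
    """Return sorted days on which any single issue was reported >= threshold times.

    `reports` is an iterable of (day, issue_key) pairs. Counting is per day and
    ignores who reported the issue, so one user reporting three times counts.
    """
    counts = Counter(reports)
    return sorted({day for (day, _issue), n in counts.items() if n >= threshold})


def days_per_year(days):
    """Tally duplicate-report days by calendar year."""
    return Counter(d.year for d in days)
```

A day counts once even if several different issues crossed the threshold on it, which matches counting "days where duplicate issues were reported" rather than counting duplicate issues.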

Discussion

When the enterprise PACS was first installed at MLHS, PACS support staffing was established at four individuals, on the assumption that this would be sufficient to get the PACS project underway and would allow one support team member at each major campus, with a fourth individual who could supervise the group, plan for future growth, manage upgrades and new implementations, etc. It was also assumed that, after the PACS was fully implemented, the support workload would remain relatively stable over time. As a result, after the PACS had been implemented and operational across the enterprise for about a year, the PACS support team was reduced by one member because it was felt that, with a consistent (or potentially even diminishing) workload, that resource could be better utilized performing other non-PACS-related support tasks. This decision was made partly because of the lack of PACS support requirement information in the literature and the lack of any significant MLHS PACS support trending data at the time.

At the start of 2005, when the data included in this article begins, the MLHS PACS support team consisted of only three individuals. Not only were these individuals responsible for all day-to-day support of the PACS, but they were also responsible for all new user training; setup, training, and testing associated with integrating new imaging modalities to PACS; maintaining and testing interfaces to other information systems, such as a hospital information system (HIS), RIS, and speech recognition system; planning for future growth and system needs; etc. While the data in this article focuses strictly on support needs, it is important to keep in mind that a PACS support team has many other responsibilities that fall outside of support.

As the data in the previous section highlights, PACS support needs do not diminish or even remain stable, but continue to grow over time. What does change over time is the type of support required.

Early on, there was a much larger need for end user application support. Initially, many of the reported issues centered on one of the following application support areas:

Training radiologists, cardiologists, and other diagnostic physicians on using specific functionality within the PACS. In particular, there was a lot of focus on customizing the system. This included building custom user-specific filters to locate data more effectively, developing individual hanging/display protocols, customizing hotkeys or mouse shortcuts for individuals, and helping these users locate specific functionality within the system.

Training attending physicians on utilizing the functionality of the PACS Web applications that accompanied the system. This training was more straightforward in terms of providing basic instruction on how to perform common tasks within the Web applications; however, unlike the diagnostic physician users, the attending physicians were not directly trained by the system vendor and, therefore, typically had shorter initial training sessions.

Training technologists and other departmental staff not only on system functionality, but also on workflow associated with using the PACS. This included how to scan paper documents into the system and when to do so, how to address QA issues on the PACS, how to link combination studies (e.g., computed tomography of the chest, abdomen, and pelvis), and how to address cases where bad images or studies were accidentally sent to the PACS. These issues tended to focus as much on process and workflow as on specific functionality within the PACS.

All of these initial user training issues are typical when any new technology is introduced within an organization. By the end of 2005, the PACS team had helped most users become comfortable with the system and had addressed most of these training issues. Over that year, the team also leveraged its experience in repeatedly helping end users resolve certain issues to provide more proactive support, holding targeted training sessions for technologists, radiologists, superusers, etc. By holding these sessions and developing training documentation as a reminder of how to perform common tasks in the system, the PACS team reduced the overall number of application training issues reported over time. The team also incorporated lessons learned from its analysis of the reported issue data to improve training as new users were added to the system. For instance, the PACS team customized its Web training for attending physicians so that it took the same amount of time but focused on the items for which users had consistently requested additional support. As a result, even as the number of users of the system continued to grow, the number of application training-related issues declined.

As the data presented in the previous section show, the PACS team's work shifted toward troubleshooting and resolving actual application issues that users could not resolve on their own. Among the more complex application issues that arose over time were:

Addressing differences in Digital Imaging and Communications in Medicine (DICOM) data from various modality vendors—while the DICOM standard is very specific about the format data must take, it allows vendors to place data in different fields, including their own proprietary fields. As radiologists build more complex display protocols based on specific DICOM data, these differences in DICOM datasets require investigation and mapping. Similarly, not all vendors send every DICOM data element. As a result, labels may not appear as desired on all images, or window/level settings may differ between similar modalities from different vendors. In certain instances, DICOM elements are present in some procedure types produced by a modality but not in other procedure types produced by the same modality because of differences in imaging protocols. These inconsistencies change the appearance of images and require investigation and standardization of protocols to produce consistent images and DICOM data. Initially, many of these DICOM differences are not noticed by the physicians reading the studies; however, as those physicians become more comfortable with the system and as more prior studies become available within the PACS for comparison, these differences are noticed and raised as issues.

Identifying and addressing additional data—in some instances, additional images are added to a study that has already been stored on PACS and reported by a physician. These images may be bad images that were rejected by the technologist at the modality, good images that arrived after the physician had already read the study, or images that belong to another study or patient. In some cases, the technologist recognizes that these images have been added but cannot do anything with them because the study has already been reported. In other cases, the technologist does not realize that the images have been sent to PACS, or knows that they were sent but cannot locate them because they are filed under the incorrect patient or study. As a result, the PACS team needs to review logs and studies to determine where bad data may have gone and move or remove the incorrect data using back-end tools to ensure that the database remains accurate. Not only does the PACS team need to respond to items that were reported, but it also needs to identify cases where incorrect data was added to studies and was not reported. Locating the bad data, ensuring that it is correctly placed within PACS, and ensuring that it is reviewed by a physician in a timely manner can take significant time.

Adding and supporting additional functionality and customization—as the PACS matures, end users continually look for new functionality or customization that allows them to work more efficiently or to do more with the system than when they first began using it. Making these changes often requires significant time to configure the application and test the impact of the changes. Even more time is consumed when such changes introduce issues into the system that were not seen during testing in the test environment. Often, these issues do not arise, or are not noticed, immediately in production. When they are identified, the PACS team may spend significant time troubleshooting the issues, finding the underlying cause, and working to resolve them. In some instances, these issues need to be escalated to the PACS vendor’s development team. When this happens, significant time may be spent capturing relevant log files, testing new patches or system changes, and rolling out these changes into the production system. Even though the vendor resources may spend the majority of time on the issue, the in-house PACS support team still spends a substantial amount of time working with the vendor resources, updating affected users, testing changes, etc.

Addressing system changes and issues associated with system upgrades or updates—over time, a PACS will be updated or upgraded many times. Furthermore, even if the application itself is not updated, there are regular updates to operating systems, antivirus applications, and other software that may run on the PACS servers and workstations. Each of these changes can introduce issues or eliminate functionality that existed prior to the update. Even though these updates are tested in a test environment, no test environment can fully replicate the production environment. Therefore, despite testing, issues always arise in production when updates are applied, and again, these issues may not be immediately apparent. Removing the updates is an option; however, this is only a temporary solution and prevents the needed changes in the updates from being applied. As a result, the PACS team can spend significant time discovering, analyzing, and addressing issues that arise when updates or upgrades are made to the system. At the time of a major upgrade, numerous issues can be identified simultaneously, and it can be difficult to determine their causes because so many changes are made at once. Even with minor upgrades, the PACS support team can spend significant time trying to determine the cause of an issue that appears after the upgrade. Often, these items need to be passed back to the PACS vendor’s developers, and significant time is spent working with those developers on a resolution. Moreover, implementing the resolution for one problem may introduce another problem.

Addressing issues that arise due to system growth—for a large PACS, new modalities are continually integrated into the system, new workstations are added, and new users begin using the system. All of this growth may lead to application performance issues that were not seen when the PACS was first implemented. These issues may be difficult to diagnose at first because the problems appear gradually and inconsistently, but they become more significant as system expansion continues. Therefore, the PACS team may spend significant time trying to determine the cause of these issues, and because they are caused by increased system demands, there is often no easy solution, which can lead to frustration for end users. In some cases, database upgrades or optimization are needed. In other cases, the system needs to be reconfigured to better handle system demands. In still other cases, upgrades, hardware updates, or system redesigns are required. In any case, resolving these issues is highly complex.

Addressing interface changes—when a PACS goes live, it may have only some basic HL7 interfaces to a RIS or HIS. Over time, the requirements for sending data to or receiving data from a RIS, HIS, or other information systems, such as a speech recognition system or electronic medical record, may substantially increase. Just as the PACS is continually updated and changed over time, all of these other interfaced systems are also changing. This has the potential to introduce problems into existing interfaces. Again, these interface or system changes are tested in a test environment but it is not possible to fully capture the production systems in the test environment and, invariably, issues arise. Given that multiple systems are involved, there may be substantial time spent by the PACS team trying to determine the origin of the issue and even more time trying to have multiple vendors work out resolutions to the issues between their systems. Even for established interfaces, there may be problems introduced as new departments, procedures, users, etc. are created in those systems. Or users may begin to look at specific data fields provided through those interfaces that they had not looked at previously and begin to notice issues that may have historically existed between the systems.
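The DICOM consistency problem described in the first item of this list, where some sources omit elements that others send, lends itself to a simple presence comparison. The sketch below assumes each source's headers have already been reduced to a set of DICOM element keywords (in practice these could be read with a DICOM toolkit such as pydicom); the source labels and sample sets are hypothetical.

```python
def missing_elements(headers_by_source):
    """Report which DICOM elements some sources send but others omit.

    `headers_by_source` maps a source label (e.g., "Vendor A CT") to the set
    of DICOM element keywords present in a sample of its images.
    Returns {keyword: sorted list of sources lacking that keyword}.
    """
    all_keywords = set().union(*headers_by_source.values())
    gaps = {}
    for keyword in sorted(all_keywords):
        lacking = sorted(
            src for src, kws in headers_by_source.items() if keyword not in kws
        )
        if lacking:
            gaps[keyword] = lacking
    return gaps
```

For example, if one CT vendor sends `ViewPosition` and another does not, the result flags `ViewPosition` as missing from the second vendor, which is the kind of gap that can break a display protocol built on that element.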

It should be pointed out that, in many cases, these more complex issues existed from the initial implementation of the PACS or sometime shortly after the implementation. However, as users and the PACS support team were focused on the more basic functionality of the system, these items often went unnoticed or were identified but not given higher priority for resolution due to more urgent user training needs. Furthermore, the PACS support team gradually became more skilled in identifying and addressing system issues and were better able to handle these issues as they were freed up from providing a significant amount of application training.

Ultimately, not only did the volume of issues increase over time, but the amount of work required to address those issues increased as well, because it typically takes more time to resolve application issues than to answer application questions or show users how to use specific functionality within the system.

MLHS replaced workstation and server hardware about every 3 years to maintain consistent uptime. Although the number of hardware failures per month grew over the study period along with the overall issue volume, this growth can be attributed to MLHS continuing to add workstations, servers, and other hardware throughout the period. For example, the number of workstations increased by over 35% and the number of servers by over 47% during the period of study. Thus, even though the percentage of hardware issues remained relatively consistent between 2005 and 2008, the absolute volume of hardware issues increased, adding to the volume of more complex issues to be addressed by the PACS support team.

As both the volume and the complexity of issues increased, the PACS team also found itself able to troubleshoot and provide first-level support at a level equivalent to its PACS vendor, thanks to the additional knowledge the team had acquired about the system. This allowed MLHS to use cooperative support with the PACS vendor, further lowering overall PACS support costs. Under cooperative support, MLHS PACS staff took first call on all PACS issues, handled all application training items, managed all hardware replacement, monitored and restarted PACS services and applications, collected all system logs, restarted information system and modality interfaces, deployed application and operating system patches, etc. (i.e., only issues requiring second-level or developer support were passed to the vendor). Over the 4 years of this study, MLHS saved $872,611 in support costs by moving to a cooperative support program. In fact, MLHS continued to decrease its support costs over the length of this study through greater utilization of cooperative support. For example, annual support costs were approximately $117,000 lower in 2008 than in 2005, even though substantially more hardware and software was installed in 2008.

In addition to the cost savings, MLHS saw improved turnaround times in response to issues. Although specific response times were not collected, improvements were clearly seen in cases where vendor field engineers previously needed to be dispatched to the site. MLHS PACS support staff could immediately begin troubleshooting and resolving issues, whereas under the full PACS vendor support model, if a field engineer needed to be dispatched, there was typically a delay of anywhere from an hour to several days while awaiting the field engineer's arrival on site. Similarly, although the PACS vendor was extremely prompt in handling issues reported to it, there were always some small delays while the MLHS PACS team collected information, contacted the PACS vendor, conveyed that information to the vendor support personnel, and waited for the vendor support personnel to connect remotely to the system. Although these delays may have been only a few minutes, for significant issues, eliminating them by having MLHS PACS staff immediately respond to and resolve the issues was extremely valuable in maintaining system uptime and performance.

Furthermore, because MLHS staff were on site and continually working with the system, it was much easier to determine whether solutions were effective. While the PACS vendor would monitor the system for some period of time after a solution was implemented, its staff were not continually monitoring or using the system. As a result, if symptoms of a problem recurred, it was very unlikely that the vendor would be aware of them unless they happened very shortly after the resolution of the issue. When the MLHS staff took over more support responsibilities, they were continually using the system, could spot trends that had led to issues previously, and could proactively address those items before they became problems for the end users.

However, the additional support responsibilities assumed by MLHS also increased the workload on the PACS team. Because of these increased PACS support demands and the PACS support trend data shown in this article, MLHS restored the fourth PACS support position in the summer of 2006. A portion of the cost savings realized through expanded use of the PACS vendor’s cooperative support program was used to fund the additional MLHS PACS support position.

As the PACS support needs became more complex, the skill set required to support those needs also changed over time and MLHS expanded the basic PACS support position into a two-tiered support structure with the creation of a senior-level support position. The senior-level support staff is responsible for the more complex PACS issues that previously were only handled by the PACS vendor support staff.

The largest overall spike in support items immediately followed a major system upgrade in December 2006 that also included replacement of nearly all system hardware. Because the system was upgraded two full versions at that time, many users faced a significant learning curve with a new interface, Web application, functionality, etc. This was not surprising given the magnitude of the change. However, a major upgrade to just the cardiology portion of the system at the end of January 2008 did not produce a comparable spike in subsequent months. This upgrade certainly involved a much smaller population of end users than the 2006 enterprise upgrade, but some increase in support volume was still anticipated, both because of the response in 2006 and because the cardiology user interface changed more significantly than the enterprise user interface had in 2006. The absence of a spike may have been due to the additional preparation put in place to address some of the issues that arose during the 2006 upgrade.

As support issue volumes continued to rise, MLHS added another member to the PACS support team in December 2008. In response to the PACS support issue growth trends, MLHS has added a PACS support team member roughly every 2.5 years. This growth was not budgeted in the original plans for the enterprise PACS. However, the support data analysis has been instrumental in helping MLHS plan for appropriate PACS staff growth and also supports planning for future MLHS PACS staffing needs.

One item that was not analyzed in this study was the amount of time required to address each issue and whether this changed over the course of the study. This information was collected for each issue; however, the time spent was recorded manually by each member of the PACS support team when closing an issue. As a result, this information was highly subjective and varied significantly not only by the individual who closed a particular issue, but also by how long the issue remained open, how many individuals worked on it, and how much vendor involvement was needed to resolve it. For future studies of PACS support trends, we recommend that these data be collected more quantitatively and included in the analysis, as they could further quantify specific PACS support needs.
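One way to collect time data more quantitatively, sketched here under our own assumptions, is to derive elapsed time from timestamps recorded automatically when an issue is opened and closed, and to log hands-on effort per staff member separately, so that multiple contributors and time spent waiting on the vendor do not collapse into a single manual estimate. The `IssueLog` record and its fields (`opened`, `closed`, `effort_minutes`, `vendor_wait`) are hypothetical and do not describe the MLHS tracking system.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class IssueLog:
    """Hypothetical support-issue record; field names are illustrative only."""
    issue_id: str
    opened: datetime   # timestamp set automatically when the issue is logged
    closed: datetime   # timestamp set automatically when the issue is closed
    # Hands-on minutes logged by each staff member who worked the issue.
    effort_minutes: dict[str, int] = field(default_factory=dict)
    # Total time the issue sat waiting on the vendor.
    vendor_wait: timedelta = timedelta(0)

    def elapsed(self) -> timedelta:
        """Calendar time from open to close, taken from the timestamps."""
        return self.closed - self.opened

    def internal_open_time(self) -> timedelta:
        """Elapsed time minus time spent waiting on the vendor."""
        return self.elapsed() - self.vendor_wait

    def total_effort(self) -> int:
        """Sum of hands-on minutes across all contributors."""
        return sum(self.effort_minutes.values())

    def contributors(self) -> int:
        """Number of staff members who logged effort on the issue."""
        return len(self.effort_minutes)


if __name__ == "__main__":
    # Illustrative issue: two staff members, 20 hours waiting on the vendor.
    issue = IssueLog(
        "EXAMPLE-1",
        opened=datetime(2008, 3, 3, 8, 0),
        closed=datetime(2008, 3, 4, 10, 30),
        effort_minutes={"tech_a": 45, "tech_b": 30},
        vendor_wait=timedelta(hours=20),
    )
    print(issue.elapsed())             # 1 day, 2:30:00
    print(issue.internal_open_time())  # 6:30:00
    print(issue.total_effort())        # 75
    print(issue.contributors())        # 2
```

Separating calendar time, vendor wait, and per-person effort keeps each measure objective, whereas a single number entered at closing mixes all three.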

An additional consideration for future PACS support studies would be an objective assessment of the complexity of reported PACS issues. Through experience, the MLHS PACS support team found that certain PACS issues were more complex than others, but these data were not recorded in this study. In a follow-up study, the complexity of PACS issues could be assessed by defining weighted criteria and scoring each issue against them. Relevant criteria could include the length of time required to address an issue; whether the issue needed to be escalated internally within the hospital's support organization or externally to the vendor (e.g., whether the vendor needed to pull in developers or its support staff could resolve the issue); the number of individuals required to address the issue; and the number of devices or systems affected by the issue. Subjective scores, obtained by asking members of the support team to rate the complexity of each issue they address, could accompany these objective measures. Together, such subjective and objective scores would help determine how the complexity of PACS issues changes over the lifetime of a PACS implementation.
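A weighted complexity score of the kind proposed above could be computed as a weighted sum of normalized criteria, optionally blended with team members' ratings. The sketch below is only an illustration: the weights, the caps used for normalization, the four-level escalation scale, the 1 to 5 rating scale, and the 70/30 objective/subjective blend are all hypothetical choices, not values derived from the MLHS data.

```python
from dataclasses import dataclass, field
from statistics import mean

# Hypothetical weights for the objective criteria named in the text (sum to 1.0).
WEIGHTS = {"hours": 0.3, "escalation": 0.3, "people": 0.2, "systems": 0.2}

# Hypothetical caps used to scale raw counts into the range 0..1.
CAPS = {"hours": 40.0, "people": 5.0, "systems": 10.0}

# Assumed escalation scale, ordered from least to most complex.
ESCALATION_LEVELS = {
    "none": 0.0,               # resolved by front-line support staff
    "internal": 1 / 3,         # escalated within the hospital support organization
    "vendor_support": 2 / 3,   # escalated to vendor support staff
    "vendor_developer": 1.0,   # vendor had to pull in developers
}


@dataclass
class Issue:
    hours: float        # time required to address the issue
    escalation: str     # key into ESCALATION_LEVELS
    people: int         # individuals required to address the issue
    systems: int        # devices or systems affected
    subjective: list[int] = field(default_factory=list)  # 1-5 ratings from the team


def scaled(value: float, cap: float) -> float:
    """Clamp value / cap into the range [0, 1]."""
    return max(0.0, min(value / cap, 1.0))


def objective_score(issue: Issue) -> float:
    """Weighted sum of the normalized objective criteria (0..1)."""
    parts = {
        "hours": scaled(issue.hours, CAPS["hours"]),
        "escalation": ESCALATION_LEVELS[issue.escalation],
        "people": scaled(issue.people, CAPS["people"]),
        "systems": scaled(issue.systems, CAPS["systems"]),
    }
    return sum(WEIGHTS[name] * parts[name] for name in WEIGHTS)


def complexity(issue: Issue, objective_share: float = 0.7) -> float:
    """Blend objective and subjective scores; fall back to objective if unrated."""
    obj = objective_score(issue)
    if not issue.subjective:
        return obj
    subj = (mean(issue.subjective) - 1) / 4  # rescale 1..5 ratings to 0..1
    return objective_share * obj + (1 - objective_share) * subj


if __name__ == "__main__":
    routine = Issue(hours=0.5, escalation="none", people=1, systems=1)
    hard = Issue(hours=20, escalation="vendor_developer", people=5, systems=1, subjective=[4, 5])
    print(round(complexity(routine), 5))  # 0.06375
    print(round(complexity(hard), 4))     # 0.7315
```

Normalizing each criterion before weighting keeps a single large raw value (e.g., hours) from dominating the score; averaging scores by quarter or year would then show whether issue complexity rises as the PACS matures.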

Conclusion

While many health care organizations successfully staff for PACS support at the start of a PACS implementation, the evolving needs of PACS support are often not considered or addressed beyond that point. As a result, health care organizations may find themselves lacking adequate PACS support staff as a PACS matures. This shortfall may lead to higher costs from relying on more expensive vendor support resources over time; to delays in providing PACS support to end users, which in turn may delay patient care; or to dissatisfied end users, ultimately resulting in inefficient system use, poor system adoption, requests to replace an existing PACS with a different system at a high replacement cost, or other related issues.

This article highlights the need for health care organizations to analyze their PACS support trends over time for staffing purposes. Both the volume and the type of PACS issues change over time, and health care organizations must be proactive in addressing their changing PACS support needs to prevent costly or harmful PACS support mistakes and, ultimately, to ensure the success of the PACS implementation within their organizations.

References

1. Treitl M, et al. IT services in a completely digitized radiological department: value and benefit of an in-house departmental IT group. Eur Radiol. 2004;15(7):1485–1492. doi: 10.1007/s00330-004-2609-5.

2. Stockman T. A case study in developing a radiology information technology (RIT) specialist position for supporting digital imaging. Radiol Manage. 2006;28(4):46–49.

3. Nagy P, et al. Defining the PACS profession: an initial survey of skills, training, and capabilities for PACS administrators. J Digit Imaging. 2005;18(4):252–259. doi: 10.1007/s10278-005-8146-1.

4. Mazurowski J. Informatics management and the PACS. Radiol Manage. 2004;26(1):41–42.

5. Langlotz C. From the chair: the top 10 myths about imaging informatics certification (January 2008—SIIM News). J Digit Imaging. 2008;21(1):1–2. doi: 10.1007/s10278-007-9100-1.

6. Socia CW. Credentialing imaging informatics professionals: creation of items for the CIIP examination. J Digit Imaging. 2006;19(Suppl 1):6–9. doi: 10.1007/s10278-006-0931-y.

7. Honea R. How many people does it take to operate a picture archiving and communication system. J Digit Imaging. 2001;14(2 Suppl 1):40–43. doi: 10.1007/BF03190292.

8. Horton R. The global financial crisis: an acute threat to health. Lancet. 2009;373(9661):355–356. doi: 10.1016/S0140-6736(09)60116-1.