Abstract

What benefit will new technologies offer if they are used inadequately or not at all? This work presents a meta-synthesis of technology-adoption findings from four innovative monitoring intervention research studies with older adults and their informal and/or formal caregivers. Each study employed mixed-methods analyses that led to an understanding of the key variables that influenced adoption of telephone and Internet-based wireless remote monitoring technologies by elders and their caregivers. The studies were all conducted in “real world” homes ranging from solo residences to multi-story independent living residential buildings. Insights gained came from issues not found in controlled laboratory environments but in the complex interplay of family-elder-staff dynamics around balancing safety and independence. Findings resulted in an adoption-of-technology model for remote monitoring of elders’ daily activities, derived from evidence-based research, to advance both practical and theoretical development in the field of gerontechnology.

Keywords: Gerontechnology, Aging, Telecare, Aging-in-place, Behavioral monitoring

Telehealth Adoption-of-Technology Conceptual Framework

Adoption of technology is the most critical factor associated with usage. What good is the most sophisticated, well-developed technology intervention if the end-user does not use it? And what makes the end-user want to use it? The author’s program of research has been consistently refining an adoption-of-technology model to inform an understanding of the factors that make elders and their caregiver end-users want to use or not use a telehealth technology-based intervention. This article focuses on a telehealth subset concerned with geriatric personal/behavioral/activity monitoring that occurs at home using various sensors and equipment that send signals offsite for data management and trigger response functions. This field is distinct from remote patient monitoring of physiological parameters to guide medical therapy, which is considered to be telemedicine. The model’s conceptual underpinnings were formed by adapting the diffusion-of-innovation theory, which suggests factors leading to the adoption, or non-adoption, of innovations (Ryan and Gross 1943; Rogers 1995), and by integrating aspects from the trans-theoretical behavioral-change model (Prochaska 1997), which suggests there are certain background, predisposing, enabling, and situational barriers that affect whether one adopts and uses an innovation.

Southon et al. (1997) present a convincing case about the organizational impediments that block technology usage and diffusion, and warn developers to give equal attention to the human as well as the technical aspects of program implementation. As Mair and Whitten (2000) noted in their meta-analysis of telemedicine studies, there is a paucity of data examining end-users’ perceptions. To address this weakness in the literature, both qualitative and quantitative methods were included in each project to increase understanding of end-users’ perspectives, including non-adopters’ perspectives. The field was also advanced by conducting this research in “real world” settings with community-dwelling older adults at home and in independent-living residences, assessing for the first time a range of informal and professional caregivers, including nurse practitioners and housing staff (multiple end-users). An adapted adoption-of-technology model is needed due to the special nature of technologies that passively monitor older adults. Most adoption-of-technology models are based on the assumption that the elder will need to overcome personal anxieties, learn new information, and remember how to operate the technology as the sole end-user (Jay and Willis 1992; Ellis and Allaire 1999; Czaja et al. 2006). Passive remote-monitoring technologies differ because they do not place computer engagement demands on an elder and thus can be used with a broad range of older adults, including those with memory loss. However, monitoring systems overseeing elders at home are increasing in complexity, with multiple end-users who may differ in their acceptance and use of the technology.

The intent of this paper is to present a brief synopsis of four pioneering studies in the elder activity-monitoring field, highlighting the findings that contributed to the adoption-of-technology model specific to this emerging field, and ending with delineation of the model. Three of the studies were with community-dwelling older adults and their family caregivers; the fourth occurred in independent-living residential housing with involvement of both family caregivers and a team of residence staff and clinicians. Two studies used telephone-based technologies and two used wireless-sensor technologies and the Internet. Each of the studies has had its qualitative implementation and quantitative study outcomes published in the research literature. Thus, only an overview will be given here, with project citations provided for more specific details. This manuscript will focus on the technology-adoption issues experienced and elucidate how the variables contributing to the model originated from experiential evidence-based research findings.

Telephone Linked Care (TLC) Eldercare Home Monitoring

During the early nineties there were many elders on a Massachusetts waiting list for homemaker assistance because, when they were evaluated, they were not frail enough to qualify for formal services. With a shortage of case workers and high labor costs, intermittent in-home evaluations were not possible. Policymakers worried that over time these frail elders would functionally decline and actually be eligible for care without anyone knowing about it until they experienced a crisis situation that triggered a formal re-evaluation. The question arose whether technology could be designed to monitor an elder’s functional status and alert the case manager when declines occurred. A request for proposals was issued to address this situation. In response, a telecommunications research team proposed to develop and test the reliability and validity of an innovative automated interactive voice response (IVR) telephone monitoring system for assessing changes in the functional status of 20 disabled homebound elders enrolled in a homecare program. The technology employed a digitally recorded human voice scripted to query the elder in a conversational style, using their regular telephone, about each of their activities of daily living. Using the telephone keypad, the elder would press 1 for “yes” and 2 for “no” in response to the activity queries. Each session resulted in scores per query section and a summed total and lasted less than 10 min (Mahoney et al. 1999).
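The scoring step described above (keypad responses tallied per query section and then summed) can be illustrated with a minimal sketch. This is not the study's actual software: the section names, the sample responses, and the assumption that a "yes" keypress always indicates difficulty with an activity are hypothetical choices made only for illustration.

```python
from typing import Dict, List

# Keypad codes described in the text: 1 = "yes", 2 = "no".
YES, NO = 1, 2


def score_session(responses: Dict[str, List[int]]) -> Dict[str, int]:
    """Count "yes" keypresses per query section and add a summed total.

    Assumption (not from the source): every query is worded so that "yes"
    indicates difficulty, so a higher score means more impairment.
    """
    scores: Dict[str, int] = {}
    for section, keys in responses.items():
        for key in keys:
            if key not in (YES, NO):
                raise ValueError(f"unexpected keypress {key!r} in section {section}")
        scores[section] = sum(1 for key in keys if key == YES)
    # The right-hand side is evaluated before "total" is added to the dict.
    scores["total"] = sum(scores.values())
    return scores


# Hypothetical session: four ADL queries and three IADL queries.
session = {"ADL": [2, 2, 1, 2], "IADL": [1, 1, 2]}
print(score_session(session))
```

Comparing the total from one call with the total from an earlier call is one simple way such a system could flag a functional decline for the case manager, although the source does not state the alerting rule that was used.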

Worker Fear of Technology Substitution

To test the system, several trials were planned. First, the system call would be repeated within 24 h to assess test-retest reliability, that is, whether it would capture the same information the same way. Second, the system would be compared to a case manager asking the same questions over the telephone, to control for telephone usage, and then to an in-person case manager assessment to determine similarities and differences in outcomes by setting. Collaboration with a home care agency was therefore necessary; one was approached, and the administrators readily agreed to participate. However, 9 months passed without recruiting one eligible elder participant who was able to use the telephone, understand the questions, and press the correct keys. Another home care agency was tried for 3 months with similar zero recruitment. The author was asked to join the project and address the under-recruitment, and in the process discovered an organized resistance to technology adoption. The project had been formally blackballed by the union representatives for the case managers because they perceived the technology to be a threat to their jobs. Despite the project presentations suggesting the technology would help manage large caseloads, the union leaders did not believe it. Instead, they reported that this technology, if successful, would be used as a substitute for their workers. The case managers had been instructed to refer only ineligible elders to the project so it appeared they were cooperating, when in reality they were not. Another study orientation session was conducted that included the union representatives, along with the case managers, in which the study personnel readily admitted that this technology would not be able to replace an in-person assessment, and that the outcome data would probably reflect that and help support, not substitute for, case managers making home visits. They remained unconvinced and reported that any need to follow up on a technology-generated alert score would add to the case manager’s workload. The variables influencing technology adoption started to emerge. Ultimately, due to the strong negative perceptions about technology-based elder monitoring, the project had to be relocated to a non-unionized agency in order to conduct the study. Word had spread among the union steward representatives across the region, and no other union shop would test this technology.

By contrast, the non-union agency staff readily believed that the technology would be useful to them as described in the presentation. Case managers volunteered to arrange their assessment calls to be in tandem with the automated calls, and helped to recruit participants. The study proceeded without further adoption problems. Notably, the test results indicated that the IVR system showed excellent test-retest reliability with a weighted kappa of 0.76 for activities of daily living (ADL) items and 0.83 for instrumental activities of daily living (IADL) items, good agreement between the personal and automated telephone assessments (ADL weighted kappa 0.68; IADL 0.80), and, as expected, poorer agreement with the case manager (ADL kappa 0.53; IADL 0.34). Overall, the IVR was similar to the in-person telephone assessment, but neither captured as many impairments as the in-home case manager. Thus study findings, as anticipated, did support the use of the case manager when in-home assessments were needed, and limited the technology to routine monitoring of the wait list with alerts to the case manager when functional declines occurred. The adoption issues and lessons learned were: the necessity of anticipating fears of technology substitution for people, perception of increased workload, clearly demonstrating a match to their perceived needs, and thoroughly analyzing the context of the setting prior to initiating technology research.
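For readers unfamiliar with the agreement statistic reported above, the following is a minimal sketch of a weighted Cohen's kappa. The source does not state which weighting scheme was used, so linear disagreement weights are an assumption here, and the ratings in the usage example are invented for illustration rather than taken from the study.

```python
from typing import Sequence


def weighted_kappa(rater_a: Sequence[int], rater_b: Sequence[int], n_categories: int) -> float:
    """Linearly weighted Cohen's kappa for ordinal ratings coded 0..n_categories-1.

    Computed as 1 - (observed weighted disagreement / expected weighted
    disagreement), with disagreement weight |i - j| / (n_categories - 1).
    """
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("both raters must supply the same non-zero number of ratings")
    if n_categories < 2:
        raise ValueError("at least two categories are required")

    n = len(rater_a)
    k = n_categories

    # Joint proportion table: observed[i][j] = share of cases rated i by A and j by B.
    observed = [[0.0] * k for _ in range(k)]
    for a, b in zip(rater_a, rater_b):
        observed[a][b] += 1.0 / n

    row = [sum(observed[i]) for i in range(k)]                       # marginals for rater A
    col = [sum(observed[i][j] for i in range(k)) for j in range(k)]  # marginals for rater B

    obs_dis = 0.0
    exp_dis = 0.0
    for i in range(k):
        for j in range(k):
            weight = abs(i - j) / (k - 1)
            obs_dis += weight * observed[i][j]
            exp_dis += weight * row[i] * col[j]

    if exp_dis == 0.0:
        raise ValueError("kappa is undefined when both raters use a single category")
    return 1.0 - obs_dis / exp_dis


# Invented example: two assessments of four elders on a 3-level scale.
automated = [0, 1, 2, 2]
in_person = [0, 1, 2, 1]
print(round(weighted_kappa(automated, in_person, 3), 2))  # about 0.71
```

With only two categories the linear weights reduce to ordinary (unweighted) kappa, which is why the same function covers yes/no items as well as graded ones.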

REACH for Telephone Linked Care (TLC)

Established in 1995, the Resources for Enhancing Alzheimer’s Caregiver Health (REACH) Project was funded by the National Institutes of Health through the National Institute on Aging and the National Institute of Nursing Research to characterize and test the most promising behavioral, social, technological, or environmental interventions for enhancing family dementia caregiving. Interventions using a randomized controlled design and intention-to-treat analyses were carried out at six sites (Birmingham, Boston, Memphis, Miami, Palo Alto, Philadelphia), with 1229 elder/family caregiver dyads. All sites used a common measurement battery and study outcome points, with rigorous protocol oversight and outcome analyses conducted by external evaluators (Schulz et al. 2003).

The REACH for TLC Boston site-specific intervention was designed to help reduce caregiver stress due to bothersome behavioral problems related to Alzheimer’s disease by employing telephone technology. Once again, the IVR telephone technology was employed, but this time the automated outgoing assessment call was vastly expanded to offer multiple components. The system design gave caregivers control over, and choice of, five different technology-based intervention modules, available 24 h a day, seven days a week, for 12 months through their ordinary home touch-tone telephone, which linked to an automated computer-mediated interactive voice response (IVR) telecommunications system. Caregivers had access to 1) a program that monitored behavioral problems and caregivers’ stress levels, which could alert their health providers when parameters were exceeded, as well as offer specific advice depending on the behavioral problems; 2) a voice mail system that acted as a public bulletin board for shared problem-solving strategies among caregivers; 3) a private mail box for confidential correspondence and replies; 4) a voice mail triage geriatric nurse practitioner who responded to individual questions or linked the caregiver to the appropriate expert from our expert panel of consultants; and 5) an 18-minute automated conversation individualized for the care recipient to stimulate pleasant memories as a distraction strategy, and to provide respite time for the caregiver (Mahoney et al. 1998).

Different Debugging Standards and Version Updates—Challenges to Technology Stability

Originally, participants were going to be given the choice of either using the keypad (press 1 for “yes”, or 2 for “no”) or speaking their responses (“yes”, “no”). Because some participants spoke English as their second language, there was a need to see how voice recognition performed with this population (Zafar et al. 1999). Despite the commercial rhetoric of the high accuracy of voice interpretation, our testing revealed a 40% rate of misinterpretation by the program, which increased to 60% when used with people who had foreign accents. From a research perspective, this was much too poor a performance for valid and reliable data and for the users to tolerate (given that the voice response frequently gave wrong referral paths).

Once the program was developed, an equal effort was given to the debugging phase. The subcontractor had a debugging standard of 80%, but a standard of 100% for the REACH for TLC prototype was established. Although this set a standard higher than the industry, the pilot and focus group testing indicated that the Alzheimer’s caregivers were under such demands that they had minimal tolerance for even minor computer program “glitches” (Mahoney 1998). The project coordinator also served as the data manager during this phase, and she assumed major responsibility for testing, identifying the bugs, and overseeing the debugging and monitoring for system problems through daily surveillance checks. One needs to consider debugging to be an ongoing process, because software developers release upgrades without complete re-testing of the new versions. We used six software programs in the development phase, the majority of which were upgraded during the implementation phase. This introduced several new incompatibilities (Mahoney 2000). According to Friedman and Wyatt (1997), one needs to study the resource in the laboratory and in the field. They note that completely different questions arise when an information resource is still in the lab, versus when it is in the field, and it can take many years for an installed resource to stabilize within an environment. Other researchers have even suggested dividing the analysis of an informatic system’s function from the study of its impact, due to the high probability of problems with newly developed technology (Hopinsak et al. 1997).

Lack of Relative Advantage

Once we had our debugged system, we entered the participant recruitment phase and confronted significant barriers to study participation. There were 18 other studies competing for family caregivers and elders with Alzheimer’s disease in the Boston area at the same time. In this region, caregivers were extremely sophisticated about assessing research offerings. The relative advantage of the technology over other trial studies continually had to be identified, and frequently was deemed inferior to pharmaceutical-sponsored studies that offered more financial compensation and the potential of a cure by the simple ingestion of a pill rather than advice via technology. The greatest barrier, however, was the pharmaceutical companies’ ban prohibiting caregivers from participating in another Alzheimer’s disease-related study, even non-drug interventions. The second major barrier was resistance by some Alzheimer’s support group leaders who were the gatekeepers controlling access to the groups for presentations on the project. Several deemed the technology a-humanistic and of no value to their members, without letting the members even learn about the project. To gain further insight into the recruitment and technology adoption issues, we conducted a sub-study and analyzed the barriers, facilitators, and financial costs associated with recruiting our sample of 100 dyads (Tarlow and Mahoney 2000; Mahoney et al. 2001).

Successful Outcomes Among Participants

Despite the technical development and recruitment issues, we were able to execute the project successfully and quantitatively demonstrate positive technology-related outcomes on the reduction of caregiver distress and satisfaction with the technology (Mahoney et al. 2003). In brief, we found that wives caring for spouses in middle-stage Alzheimer’s disease exhibited a significant intervention effect in the reduction of the bothersome nature of caregiving (p=0.02). Also, caregivers who exhibited low mastery at baseline significantly improved (p=0.04). Usage of the system was primarily in the first 4 months and consisted of very short encounters (3 min), with the stress monitoring and distraction conversation used the most and the voice mail system the least. End-users did not delve deeply into the wealth of counseling and 12 preventive-health personalized-wellness modules that were available. Rather, they focused on strategies for managing elders’ current bothersome behaviors, which were immediate needs given the demands on caregivers’ time. They did highly value the multi-component nature of the intervention that gave them the ability to tailor the technology-mediated offerings to their individual needs. The capacity of the technology to offer relevant, brief interchanges on demand through their regular telephone, with the ability to be interrupted and returned to the point of disconnection, was noted to be greatly beneficial. Study results also indicated that caregivers’ providers were not overwhelmed by system-generated alerts, which indicated caregivers’ increasing stress levels, because they were few in number and highly specific to a behavioral problem area that the clinicians believed was important.

The uniqueness of the REACH trial was the rigor of a randomized controlled multi-site study of 15 different interventions to reduce family caregivers’ stress, with outcome analyses conducted by an NIH-designated coordinating center to ensure non-biased findings. From the adoption perspective, REACH for TLC had the most difficulty recruiting participants, but once they were enrolled, the site had the least attrition, and the low-dose but 24/7 multi-modal availability of IVR-mediated counseling compared favorably to the high-dose, in-person intensive personal counseling that was offered at other sites (Gitlin et al. 2003). The caregiver vigilance measure was tested for the first time with a large sample and demonstrated favorable psychometrics and practical utility as a stress measure; its use of time in minutes made it well suited as a technology-related outcome (Mahoney 2003; Mahoney et al. 2003a, b).

The Worker Interactive Networking (WIN) Project

Working-family caregivers of frail and memory-impaired older adults could receive support through either a low-technology Internet support group and/or the high-technology component that linked the caregiver at work from their desktop to home via a remote computational-monitoring system. This system, called WINcare, was funded as an “innovative high-technical risk application”. It assessed care recipients at home and alerted family caregivers via computers at work if there was a critical change in events of concern to them during their working hours. Caregivers then remotely checked on activities, rather than having to go home to see, for example, if their memory-impaired family member arose, ate, or took medications. Parameters customized to each situation were established, and the caregiver received an alert if any or all were exceeded (Mahoney 2004). This study was considered high risk because it was the first deployment of X10 motion sensors in a real-world work environment and, while low in cost, the sensors are prone to signal interference. In year one, efforts focused on discovering the nuances needed to make signal and system operations reliable and stable.
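The idea of caregiver-customized parameters that trigger an alert when exceeded can be sketched as follows. This is only an illustration under stated assumptions: the source does not describe how WINcare represented its parameters, so the rule structure, the event names ("out_of_bed", "medication_taken"), and the deadline times are all hypothetical.

```python
from dataclasses import dataclass
from datetime import time
from typing import Dict, List


@dataclass(frozen=True)
class Rule:
    """One caregiver-defined parameter (hypothetical structure)."""
    event: str      # activity the sensors are expected to detect, e.g. "out_of_bed"
    deadline: time  # alert if the activity has not been observed by this time of day


def due_alerts(rules: List[Rule], first_seen: Dict[str, time], now: time) -> List[str]:
    """Return the events whose deadline has passed without any sensor observation."""
    alerts: List[str] = []
    for rule in rules:
        not_observed = rule.event not in first_seen
        if not_observed and now >= rule.deadline:
            alerts.append(rule.event)
    return alerts


# Hypothetical configuration for one care recipient.
rules = [
    Rule("out_of_bed", time(9, 0)),
    Rule("medication_taken", time(10, 0)),
]
# Sensor log so far today: the care recipient got up at 8:15.
first_seen = {"out_of_bed": time(8, 15)}

print(due_alerts(rules, first_seen, now=time(10, 30)))  # ['medication_taken']
```

Keeping each rule tied to a single observable event mirrors the text's point that parameters were customized to each situation; a caregiver concerned only about medications would simply configure one rule.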

Several human factors sub-studies were conducted to inform the presentation style and user preferences for 1) the user-training manual for low-literacy readers; 2) the screen and color formatting; 3) recruitment materials. Extensive system reliability and validity testing followed. The system was successfully deployed in 2002 and enrolled 27 dyads of working caregivers and their care recipients who stayed alone during the workday.

Worker Equity And Pre-conceptions Are Influential

The formative evaluation provided rich insights into technology adoption. As the WIN project’s field component progressed, it became evident that there were difficulties in recruiting participants for the study, particularly the home monitoring component. Plans called for 60 participants to be randomized into two groups: either exclusive use of an on-line caregiver discussion group, or participation in both the discussion group and a home-monitoring system. After two of the four participating companies’ directors of employee benefit programs vehemently resisted randomization in favor of giving employees choice and maintaining equity of benefits, randomization was broken, and all participants were offered use of the home monitoring system. Thus, individuals who had previously been assigned only to the discussion group were now offered the opportunity for home monitoring, as well. The seven individuals in this cohort, however, were not willing to adopt the home-monitoring technology. A telephone-administered survey to examine why these participants did not want to participate in the home monitoring was developed and included questions designed to provide feedback about the discussion group, as well.

Findings revealed that the main reasons study participants declined use of the home-monitoring technology related to concerns about the care-recipient’s home environment and loss of familial privacy. They used terms that suggested the system would “see them” and “know about their personal activities” despite repeated assurance it could not. Additionally, installation concerns arose. More than half of the respondents (67%) verbalized that they felt installation would be a hassle. One-third agreed that they would feel invaded by technology and that the equipment would be in the way in the home. The majority of the participants (67%) expressed concerns that their privacy would be lost, with “big brother” watching them (50%). Moreover, concerns that their relatives would find home monitoring intrusive predominated, with 100% agreeing with this statement. Decliners had strong negative perceptions.

Caregivers’ perceptions about home-monitoring technology are important to capture and understand. Among the respondents, many more were interested in the home-monitoring component than were able to adopt it due to care-recipient and family resistance. We anticipated difficulties arising from the dual recruitment and the need for consent by both caregivers and care recipients, but underestimated the negative influence of other family members. Multiple stakeholders increase the difficulty in gaining consensus over the adoption of technology.

Interestingly, participants’ outcomes from this project revealed positive changes in workers’ morale, work satisfaction, and caregiver security. The main barrier to their more frequent use of the technology was being too busy at work. There was no perceived intrusiveness of the technology, elder isolation, or reduction in personal contact. The number of telephone contacts made by caregivers not only remained the same, but the quality of the conversations improved. Caregivers reported their conversations changed from nagging their parent about whether they ate, took their pills, etc.—since they already knew from the monitoring—to sharing information about the kids and pleasant events that they normally would not have time to do. The on-line discussion group was compared to the home-monitoring group, with the home-monitoring group demonstrating more positive outcomes on reduction of caregiver vigilance and satisfaction with technology (Mahoney et al. 2008).

Automated Technology for Elder Assessment, Safety, and Environment (AT EASE)

The Automated Technology for Elder Assessment, Safety, and Environment (AT EASE) research project was designed to assess the feasibility of using off-the-shelf X10 motion-sensor technology in senior independent-living facilities; to conduct systematic sensor reliability and validity testing; to elicit staff, residents’, and families’ concerns about aging-in-place; and to develop and pilot test sensor monitoring technologies to address their concerns.

Independent living residences (ILRs) are burgeoning in the U.S.A. Anecdotal evidence is growing that elders may appear intact during pre-admission interviews, but upon relocation become confused. Others, as they age-in-place, are at risk for physical as well as cognitive impairments. Given the limited staffing in ILRs, monitoring technologies may offer a means to ensure residents’ safety. The study was conducted in a Boston suburb using two ILR high-rise complexes containing a total of 300 units. During the year-one development phase, eight focus groups comprising 26 residents, staff, and family members were conducted to identify concerns that could be addressed by technology. Among many concerns, the theme of being independent arose, and the groups identified ways to achieve this via technology.

The Meaning of Independence

The findings from the qualitative research highlighted the importance of place, and of how that place is labeled, to the residents’ perception of independence. Because these residences were called independent living residences, the name validated to the residents that they were indeed “living independently,” although the majority used supportive housekeeping and meal services. Having this perception of “living independently” was the most highly regarded and desired state. Residents feared being a burden on their family members and others. Being asked to leave these premises presented an unwelcome reality that their independence was lost, and confirmed a significant decline in their trajectory of life and well-being. For elders, it was at that nexus point that activity-monitoring technology became the preferred means to reduce family and building-staff safety concerns and prolong their aging-in-place. Residents were willing to trade off privacy for protection, specifically protection of their independence (Mahoney and Goc 2009).

Stakeholders’ Needs

From the families’ perspective, they primarily wanted to know that their loved one was “OK” and “up and about,” which the discussions revealed was a proxy for “not dead”. They greatly feared walking into the apartment and finding their parent deceased. Building staff also wanted a means to check unobtrusively on residents to ensure they were not sick, incapacitated, or injured from a fall. Typically, the receptionist would watch the mailboxes to see who didn’t retrieve their mail or attend breakfast, and then check with neighbors to learn who was ill. Local perceptions about privacy and intrusiveness of wellness checks are important to understand. Residents on some floors instituted their own morning door knocks to check if someone was “up and about” or not. Residents on other floors felt this routine was intrusive and declined. Building superintendents noted they had on average three toilet overflows per month and requested that monitoring include water sensing. The facilities were serviced by four consulting nurse practitioners who, in addition to the other expressed safety and well-being concerns, endorsed monitoring of medications.

The previously tested monitoring system used in the WIN project was modified to incorporate the new features requested by the multiple stakeholders. However, field testing of the X10 motion sensors revealed poor reliability and validity, with 149 confounding wireless signals from other residents’ microwaves, lamps, emergency call buttons, and the building’s complex heating and ventilation systems. Ultimately, the system was completely reprogrammed and converted to the Zigbee wireless platform. This was an expensive and time-consuming change, but it eliminated signal interference and was essential to stabilize operations and eliminate false alerts. Off-the-shelf Zigbee-based wireless motion and water sensors were used to monitor the elders’ functional health patterns and bathroom water overflows for a four-month period per participant. Pre- and post-measures were obtained with 10 “sets” of end-users: the management staff, elders, family, and affiliating nurse practitioners (n=29).

Tailor the Technology to Needs

Findings from this study indicate that ILR monitoring technologies need to be customized to the concerns of the key stakeholders in order to promote adoption and buy-in. Participants preferred easily accessible and readable reports, with passive observation and reporting. Users preferred few alert functions, and limited but significant alert notifications. There was no end-user tolerance for system downtime or testing. Tailoring technologies to the resident and facility was feasible and recommended. This project demonstrated for the first time that stratified Internet-based reporting, targeted to multiple family and staff-authorized end-users, was achievable while maintaining confidentiality and privacy. It also contributed several innovations or firsts for the field: first continuous 24/7 real-time monitoring during a 12-month total project period with multiple end-users; first to report actual incidence data on field testing of the reliability and validity problems with X10 wireless signals in ILRs; first user of a Zigbee platform in the field; first to refresh and manage routine Web data postings every 15 min and alerts within 5 min of triggering; first to stratify and tailor reporting to different end-users; first to demonstrate feasibility of integrating water sensors into activity monitoring; and first to integrate wireless videocam monitoring of medication-taking.

Residents reported no change post-intervention on their assessments of whether the system addressed their needs (yes), was intrusive (no), or would substitute for staff (no). Unexpectedly, there was a categorical drop from a pre-intervention “strongly agree” to a post-intervention “somewhat agree” that using the system made them feel more secure. The data show that the participants had positive expectations, which is probably why they enrolled, and while the intervention met some, it could not meet all of their security needs, such as, ironically, watching over them 24/7, because there was no visual component beyond seeing a medication bottle.

Family members reported a slight worsening of their family member’s health, a significantly different worsening of their emotional health, and a slight increase in their level of concern at post-interview. The time needed to check on their relative decreased slightly. The major focus group issue of family concern (whether their family member was “OK”, moving about the house safely) dropped from a pre-intervention worry rate of 50% to 20% post-intervention. From the adoption perspective, we saw that the 60% who reported a good to very high match with their relatives’ health or safety needs correspondingly found the system very useful. Those reporting it was somewhat (10%) to not very useful (20%) did not see a match to their particular needs. For example, one respondent noted “X was hospitalized, and when he came home he had to have a homemaker who was in the apartment almost full time.” Similarly, another mentioned that, “A health care worker [is there now] every morning (bathing and dressing).” Thus, having someone present eliminated the need for remote monitoring. Interestingly, several of those who used the technology recommended the addition of a visual component that could be activated, upon receipt of an alert, to enable them to distinguish a normal activity, such as oversleeping, from a more serious event, such as a stroke, that would require immediate attention.

All of the staff (100%) rated their concern for resident wellness and safety at a moderate level, with the majority (60%) rating the AT EASE system as high to very high in addressing their concerns. The survey also asked whether the alerts would be seen as adding work. One respondent reported that the system reduced workload, while the majority (80%) noted no change. Using this technology as a substitute for staff was not a concern (100%). The vast majority (80%) would recommend this system as is. Comments indicated that the system was one more measure of security for residents about whom the staff had concerns. The toilet-overflow issue was clearly addressed by the water sensor, which successfully detected the problem (100%) when it occurred in three of the 10 study units. It met the need for early awareness in a clearly perceptible way that supported its purpose and usefulness. The cost of a stand-alone toilet water sensor that shuts off an overflow was less than $100, and it was immediately adopted post-project to save the $8,000 in ceiling and unit repair costs per episode.
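The cost comparison reported above can be made concrete with a short calculation. The following is an illustrative sketch only: the figures are those stated in the text, while the function names, the assumption of one sensor per study unit, and the assumption that each detected episode would otherwise have incurred the full repair cost are ours and were not part of the study.

```python
# Illustrative arithmetic only. Figures are taken from the text; the
# one-sensor-per-unit and full-repair-per-episode assumptions are ours.
SENSOR_COST_MAX = 100   # "less than $100" per sensor, used as an upper bound
REPAIR_COST = 8_000     # ceiling and unit repair cost per overflow episode
UNITS = 10              # study units
EPISODES = 3            # units in which an overflow occurred


def net_savings(units, episodes,
                sensor_cost=SENSOR_COST_MAX, repair_cost=REPAIR_COST):
    """Repair costs avoided minus the outlay for one sensor per unit."""
    return episodes * repair_cost - units * sensor_cost


def break_even_episodes(units,
                        sensor_cost=SENSOR_COST_MAX, repair_cost=REPAIR_COST):
    """Prevented episodes needed to cover the sensor outlay."""
    return units * sensor_cost / repair_cost


print(net_savings(UNITS, EPISODES))   # 23000
print(break_even_episodes(UNITS))     # 0.125
```

Under these assumptions, a single prevented episode would more than cover the cost of equipping all 10 units, which is consistent with the immediate post-project adoption described above.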

Limits to Technology

Although medication taking was an issue, the nurse practitioners preferred to make a personal visit to those they were concerned about. A visit not only encompassed the task of reviewing medication taking but also allowed them to integrate an in-home assessment of other factors influencing health and wellbeing. For example, they might assess dietary intake based on the foods they saw in the refrigerator, offer safety counseling if clutter was accumulating, and conduct a depression screen if emotional changes were evident. This is something remote-monitoring technology cannot do: capture the gestalt that a skilled nurse practitioner in a therapeutic relationship can assess, adaptively responding to subtle patient and environmental cues that something is wrong. We also found that the nurse practitioners were affiliated with three organizations, each of which sent out numerous routine daily emails containing information that was not relevant to their practices. They controlled the perceived “information overload” and the excessive time demand of going through the emails by not reading them. The nurses responded only to page alerts because these were specific to their clinical practice. The nurse practitioners desired information tailored to their needs, and when they did not get it, they “worked around” the problem of information overload.

Resident User Profiles

The research findings indicated that the resident who would most benefit from home monitoring is a recently relocated older adult and/or one with new-onset cognitive impairment who exhibits forgetfulness and a proclivity to fall, to not take medications, and/or to not walk enough. Residents who indicate some type of vulnerability or at-risk situation that generates family or professional caregivers’ concern also fit the profile. End-user profiles indicated that those most satisfied with home monitoring perceived a good fit with their needs and found that it addressed their concerns. The majority of family and professional caregivers reported being very busy and preferred a passive role, depending on system-generated alerts to notify them of variances. Consequently, the multiple formats and in-depth, real-time reporting features were generally underutilized. Nurse clinicians greatly feared information overload and controlled it by ignoring emails and limiting responses to active pages.

Technology Model for Remote Monitoring of Elders’ Activities

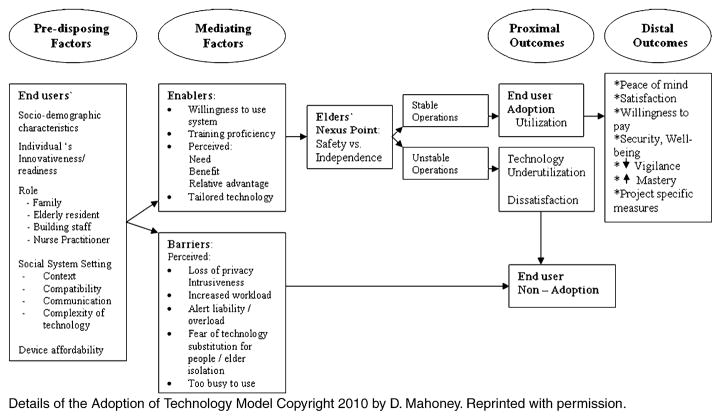

Data from these four studies informed the development and refinement of the model. The variables that appear are those that remained influential to adoption across the studies. As Fig. 1 portrays, the model starts with the background socio-demographic characteristics of the target end-user(s), the potential roles, and the contextual setting. Higher levels of education and income have been consistent key personal characteristics of adopters, while age, minority status, and gender have not. The individual’s innovativeness captures one’s readiness to try new technology and relates to early, laggard, and non-adopter patterns. Roles are different but intertwined, and are complicated by multiple stakeholders needing to reach consensus on adoption. The social-system setting is of key importance. The contextual aspects of the setting, how well the technology offering is communicated to potential end-users, and whether it is compatible with their culture need to be determined and addressed to avoid sabotage, as described previously. Because the technologies were provided for free during the research studies, cost was not a relevant factor. Device affordability is included as an initial consideration for when there is an actual cost to use a commercial system.

Fig. 1. Adoption of technology model for remote monitoring of elders’ daily activities

Next, the mediating factors exert a critical influence. Following the enabling path in the upper box, one’s willingness to learn and use, coupled with the ability to perceive technology benefits tailored to personal needs, leads to the pivotal nexus point for older adults. At this point, elders (or, if they are cognitively impaired, their legal guardians) determine the acceptability of the technology-mediated trade-off between their safety and independence. When the trade-off is acceptable, the technology is implemented and, given favorable technology operations, this leads to end-user adoption as a proximal outcome and response variables as distal outcomes. Variables such as “peace of mind” and those under it are listed because they have been tested with positive findings. Accommodations are made for other “project-specific” measures underneath. Should the technology operations be unstable, negative outcomes are likely which, if unresolved, will thwart diffusion and result in end-user dissatisfaction and technology underutilization and/or non-adoption. The lower third path follows the identified barriers which, if not overcome, directly result in end-users’ non-adoption.
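The paths described above can be summarized as a simple decision sequence. The sketch below is a hypothetical rendering intended only to make the ordering of the model's decision points explicit; it is not a validated scoring tool. All names (`Outcome`, `adoption_path`, and the parameters) are our own labels. Two branches are assumptions that the text does not state directly: that an unacceptable safety-independence trade-off ends in non-adoption, and that unstable operations that are subsequently resolved still lead to adoption.

```python
from enum import Enum


class Outcome(Enum):
    ADOPTION = "end-user adoption"
    UNDERUTILIZATION = "dissatisfaction, underutilization and/or non-adoption"
    NON_ADOPTION = "non-adoption"


def adoption_path(barriers_overcome: bool,
                  willing_to_learn_and_use: bool,
                  perceives_tailored_benefit: bool,
                  tradeoff_acceptable: bool,
                  operations_stable: bool,
                  problems_resolved: bool = False) -> Outcome:
    """Trace one end-user through the model's paths (hypothetical sketch)."""
    # Lower path: barriers that are not overcome lead directly to non-adoption.
    if not barriers_overcome:
        return Outcome.NON_ADOPTION
    # Enabling path: willingness and perceived benefit are both required
    # to reach the nexus point.
    if not (willing_to_learn_and_use and perceives_tailored_benefit):
        return Outcome.NON_ADOPTION
    # Nexus point: the elder (or legal guardian) judges the trade-off between
    # safety and independence. Non-adoption here is our assumption.
    if not tradeoff_acceptable:
        return Outcome.NON_ADOPTION
    # Implementation: unstable operations, if unresolved, thwart diffusion.
    if not operations_stable and not problems_resolved:
        return Outcome.UNDERUTILIZATION
    return Outcome.ADOPTION


print(adoption_path(True, True, True, True, True).value)
```

Representing each mediating factor as a yes/no flag is a simplification; in the studies these factors were assessed through surveys, interviews, and focus groups rather than as binary values.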

Limitations and Future Research

The contributing studies were small in sample size for quantitative modeling, but sufficient in size for testing technical feasibility and operations, especially since the systems were initial versions and the studies were conducted in real-world settings. These studies contribute empirically generated data, with consistent quantitative and qualitative responses across them, to support the variables included in the adoption model. Future research is recommended to formally test this model when similar home-monitoring technologies are more mature and commonplace, thus making large-scale studies more realistic and affordable.

Discussion

Critical to adoption is the ability of the targeted end-user to readily perceive the value of usage, with direct benefits to him or her. Simply asking whether the end-user intended to use the system was the best predictor of adoption. This, coupled with how proficient they became with system training, gave certainty to their responsiveness. Interestingly, commonly hypothesized barriers such as negative attitudes towards computers, anxiety, perceived intrusiveness of technology, and demographic characteristics such as age and gender did not exhibit statistical significance across these studies. Rather, (mis)perceptions about being watched by “big brother”, losing one’s job to the technology, or having too many technology alerts to respond to were the key barriers to adoption. Among the adopters, the reliability and stability of the technical operations directly influenced their outcome impressions. Caregivers appreciated the system designs that offered multiple options, allowing them to tailor data observation and alert notification to their preferences. Although monitoring technologies allow for the sharing of a wealth of data almost continuously, end-users feared “information overload”, and data reduction strategies that gave few, but true, alerts were highly regarded. Project time and effort spent in reducing false alerts was worthwhile and essential to success.

There are unique challenges associated with gerontechnology research. Finding funding for refinement of existing systems or required incremental upgrades that do not exhibit “breakthrough” innovations is difficult but necessary (Alwan and Mahoney 2006). There is also a tension between capturing contextual variables that operate in the real world and the scale of research that realistically can be conducted within controlled experimental settings (Czaja and Sharit 2003). Despite the developmental issues, academic researchers have highlighted the need for theoretical developments in technology-intervention research (Shulz et al. 2002). The model presented in this paper begins to respond to the theory-building need and establishes a foundation for future research efforts.

Finally, the research and commercialization of geriatric home/activity/behavioral monitoring technologies are made more complex by the multiple stakeholders and the strong likelihood of differing philosophical viewpoints. Those who viewed technology as a positive force that can help humanity were adopters; others who viewed technology as dehumanizing refused to participate. Across these reported studies, the findings consistently negated the dehumanizing assumptions and supported the positive aspects. Being able to provide evidence-based positive outcomes to address critics’ subjective negative presumptions about the ethics of personal monitoring technologies is critical to move the field forward and reduce barriers to innovation and diffusion of new technologies (Mahoney et al. 2007).

Acknowledgments

This paper was supported through the Jacque Mohr Research Professorship at the MGH Institute of Health Professions. Credit is shared with the variety of research teams who participated in the development and testing of the monitoring technologies. Funding for the original studies was provided by the Boston Foundation for ElderCare (1993–4), National Institutes of Health, National Institute on Aging (U01AG13255, 1995–2002) for REACH for TLC-Telephone Linked Care, Department of Commerce, Technology Opportunity Program (2001–2004) for Worker Interactive Networking, and Automated Technology for Elder Assessment, Safety, and Environment by the Alzheimer’s Association Sensors for Seniors with Alzheimer’s Disease (ETAC, 2004–2007), and by the National Institutes of Health, National Institute of Nursing Research, for Nursense: Caregiver Vigilance Through Elder E-Monitoring (R21NR009262, 2005–2008). All the studies had received full board approval by a nationally certified Institutional Review Board (IRB) for human studies in accordance with the ethical standards promulgated in the 1964 Declaration of Helsinki prior to commencing and received ongoing reviews and annual IRB approvals over the course of their study periods.

Biography

Diane Feeney Mahoney PhD, APRN, BC, FAAN is the Jacque Mohr Professor of Geriatric Nursing Research and Director of Gerontechnology at MGH Institute of Health Professions, Boston, MA, USA. She received her doctorate with preparation as a social scientist from the Heller School for Social Policy and Management in 1989 and her Master’s degree as a gerontological nurse practitioner in 1980. Dr. Mahoney’s research focuses on the adoption and use of technologies by older adults, baby boomers, family caregivers and nurses. She is the author of over 100 peer reviewed publications and is a fellow in the Gerontological Society of America and the American Academy of Nursing.

Footnotes

The author reports no conflict of interest or disclosure of other sources of financial support.

References

- Alwan M, Mahoney DF. Federal Government Grants for Aging Services Technology Research: Problems and Recommendations. Center for Aging Services Technology (CAST), Funding Aging Services Technology (FAST) publication; 2006. Available via American Association of Homes and Services for Aging www.agingtech.org/resourcelink.aspx. Cited 14 April 2010.

- Czaja SJ, Sharit J. Practically relevant research: capturing real world tasks, environments, and outcomes. The Gerontologist. 2003;43(1):9–18. doi: 10.1093/geront/43.suppl_1.9.

- Czaja SJ, Charness N, Fisk AD, Hertzog C, Nair SN, Rogers WA, et al. Factors predicting the use of technology: findings from the Center for Research and Education on Aging and Technology Enhancement (CREATE). Psychology and Aging. 2006;21(2):333–352. doi: 10.1037/0882-7974.21.2.333.

- Ellis ER, Allaire AJ. Modeling computer interest in older adults: the role of age, education, computer knowledge, and computer anxiety. Human Factors. 1999;41:345–355. doi: 10.1518/001872099779610996.

- Friedman P, Wyatt JC. Evaluation methods in medical informatics. NY: Springer-Verlag; 1997. p. 9.

- Gitlin LN, Belle SH, Burgio LD, Czaja SJ, Mahoney D, Gallagher-Thompson D, et al. Effect of multicomponent interventions on caregiver burden and depression: The REACH multisite initiative at six month follow-up. Psychology and Aging. 2003;18(3):361–374. doi: 10.1037/0882-7974.18.3.361.

- Hopinsak G, Knirsch CA, Jain NL, Pablos-Mendez A. Automated tuberculosis detection. Journal of the American Medical Informatics Association. 1997;4(5):376–381. doi: 10.1136/jamia.1997.0040376.

- Jay G, Willis SL. Influence of direct computer experience on older adults’ attitude toward computers. Journal of Gerontology: Psychological Sciences. 1992;47:P250–257. doi: 10.1093/geronj/47.4.p250.

- Mahoney DF. A content analysis of an Alzheimer’s family caregivers virtual focus group. American Journal of Alzheimer’s Disease. 1998;13(6):309–316.

- Mahoney D. Developing technology applications for intervention research: a case study. Computers in Nursing. 2000;18(6):260–264.

- Mahoney D. Vigilance: evolution and definition for caregivers of family members with Alzheimer’s Disease. Journal of Gerontological Nursing. 2003;29(8):24–30. doi: 10.3928/0098-9134-20030801-07.

- Mahoney D. Linking home care and the workplace through innovative wireless technology: the Worker Interactive Networking (WIN) project. Home Health Care Management and Practice. 2004;16(5):417–428.

- Mahoney D, Goc K. Tensions in independent living facilities for elders: a model of connected disconnections. Journal of Housing for the Elderly. 2009;23(3):166–184. doi: 10.1080/02763890903035522.

- Mahoney D, Tarlow B, Sandaire J. A computer mediated intervention for Alzheimer’s caregivers. Computers in Nursing. 1998;16(4):208–216.

- Mahoney D, Tennstedt S, Friedman R, Heeren T. An automated telephone system for monitoring the functional status of community-residing elders. The Gerontologist. 1999;39(2):229–234. doi: 10.1093/geront/39.2.229.

- Mahoney D, Tarlow B, Jones R, Tennstedt S, Kasten L. Factors affecting the use of a telephone-based computerized intervention for caregivers of people with Alzheimer’s disease. Journal of Telemedicine and Telecare. 2001;7:139–148. doi: 10.1258/1357633011936291.

- Mahoney D, Jones R, Coon D, Mendelsohn A, Gitlin L, Ory M. The caregiver vigilance scale: application and validation in the Resources for Enhancing Alzheimer’s Caregiver Health (REACH) project. American Journal of Alzheimer’s Disease and Other Dementias. 2003a;18(1):39–48. doi: 10.1177/153331750301800110.

- Mahoney D, Tarlow B, Jones R. Effects of an automated telephone support system on caregiver burden and anxiety: findings from the REACH for Telephone-Linked Care intervention study. The Gerontologist. 2003b;43(4):556–567. doi: 10.1093/geront/43.4.556.

- Mahoney D, Purtilo R, Webbe F, Alwan M, Bharucha A, Becker A. In-home monitoring of persons with dementia: ethical guidelines for technology research and development. Alzheimer’s and Dementia. 2007;3(3):217–226. doi: 10.1016/j.jalz.2007.04.388.

- Mahoney D, Mutchler P, Tarlow B, Liss E. “Real world” implementation lessons and outcomes from the worker interactive networking (WIN) project: Workplace based online caregiver support and remote monitoring of elders at home. Telemedicine and e-Health. 2008;14(3):224–234. doi: 10.1089/tmj.2007.0046.

- Mair F, Whitten P. Systematic review of studies of patient satisfaction with telemedicine. British Medical Journal. 2000;320:1517–1520. doi: 10.1136/bmj.320.7248.1517.

- Prochaska JO. A revolution in health promotion: Smoking cessation as a case study. In: Resnick RJ, Rozensky RH, editors. Health psychology through the lifespan: Practice and research opportunities. Washington, DC: APA; 1997. pp. 361–375.

- Rogers EM. Diffusion of innovations. 4th ed. New York: The Free Press; 1995.

- Ryan B, Gross NC. The diffusion of hybrid seed corn in two Iowa communities. Rural Sociology. 1943;8:15–24.

- Schulz R, Burgio L, Burns R, Eisodorfer C, Gallagher-Thompson D, Gitlin L, et al. Resources for Enhancing Alzheimer’s Caregiver Health (REACH): overview, site-specific outcomes, future directions. The Gerontologist. 2003;43(4):514–520. doi: 10.1093/geront/43.4.514.

- Shulz R, Lustig A, Handler S. Technology-based caregiver intervention research: current status and future directions. Gerontechnology. 2002;2(1):15–47.

- Southon F, Sauer C, Dampney C. Fit failure and the house of horrors: toward a configurational theory of IS project failure. Journal of the American Medical Informatics Association. 1997;4:349–366.

- Tarlow B, Mahoney D. The cost of recruiting Alzheimer’s disease caregivers for research. Journal of Aging and Health. 2000;12(4):490–510. doi: 10.1177/089826430001200403.

- Zafar A, Overhage JM, McDonald CJ. Continuous speech recognition for clinicians. Journal of the American Medical Informatics Association. 1999;6(3):195–204. doi: 10.1136/jamia.1999.0060195.