Abstract

Patient safety is a global challenge that requires knowledge and skills in multiple areas, including human factors and systems engineering. In this chapter, numerous conceptual approaches and methods for analyzing, preventing and mitigating medical errors are described. Given the complexity of healthcare work systems and processes, we emphasize the need for increasing partnerships between the health sciences and human factors and systems engineering to improve patient safety. Those partnerships will be able to develop and implement the system redesigns that are necessary to improve healthcare work systems and processes for patient safety.

1. PATIENT SAFETY

A 1999 Institute of Medicine (IOM) report brought medical errors to the attention of the healthcare community and the American public (Kohn, Corrigan, & Donaldson, 1999). Based on studies conducted in Colorado, Utah and New York, the IOM estimated that between 44,000 and 98,000 Americans die each year as a result of medical errors, which by definition can be prevented or mitigated. The Colorado and Utah study showed that adverse events occurred in 2.9% of hospitalizations (Thomas, et al., 2000). In the New York study, adverse events occurred in 3.7% of hospitalizations (T. A. Brennan, et al., 1991). The 2001 IOM report “Crossing the Quality Chasm” emphasized the need to improve the design of healthcare systems and processes for patient safety. The report proposed six aims for improvement: health care should be (1) safe, (2) effective, (3) patient-centered, (4) timely, (5) efficient, and (6) equitable (Institute of Medicine Committee on Quality of Health Care in America, 2001). This chapter focuses on the safety aim, i.e. how to avoid injuries to patients from the care that is intended to help them. However, the improvement aims are related to each other. For instance, safety, timeliness and efficiency can be linked: inefficient processes can create delays in care and, therefore, injuries to patients that could have been prevented.

Knowledge that healthcare systems and processes may be unreliable and produce medical errors and harm patients is not new. Using the critical incident technique, Safren and Chapanis (1960a, 1960b) collected information from nurses and identified 178 medication errors over 7 months in one hospital. The most common medication errors were: drug to wrong patient, wrong dose of medication, drug overdose, omitted drug, wrong drug and wrong administration time. The most commonly reported causes for these errors were: failure to follow checking procedures, written miscommunication, transcription errors, prescriptions misfiled and calculation errors. We have known for a long time that preventable errors occur in health care; however, it is only recently that patient safety has received adequate attention. This increased attention has been fueled by tragic medical errors.

From the Josie King Foundation website (http://www.josieking.org/page.cfm?pageID=10):

Josie was 18 months old…. In January of 2001 Josie was admitted to Johns Hopkins after suffering first and second degree burns from climbing into a hot bath. She healed well and within weeks was scheduled for release. Two days before she was to return home she died of severe dehydration and misused narcotics…

The death of Josie King has been attributed primarily to a lack of communication among the different healthcare providers involved in her care and a lack of consideration for her parents’ concerns (King, 2006).

On February 7, 2003, surgeons at Duke University Hospital transplanted organs of an incompatible blood type into a teenager, Jesica Santillan. The organs were from a donor with blood Type A; Jesica Santillan had Type O, and people with Type O can accept transfusions or tissues only from Type O donors. Jesica Santillan died two weeks after she received the wrong heart and lungs in one transplant operation and then suffered brain damage and complications after a second transplant operation. A root cause analysis of the error showed that lack of redundancy for checking ABO compatibility was a key factor in the error (Resnick, 2003). Soon after this error, Duke Medical Center implemented a new organ transplantation procedure that required the transplant surgeon, the transplant coordinator, and the procuring surgeon to each validate ABO compatibility and other key data (Resnick, 2003).
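The idea of redundant ABO checking can be illustrated with a minimal Python sketch. It is not Duke's actual procedure or software: the three role names come from the text above, while the `Verification` record, the function names, and the simplified ABO table (which ignores Rh and every other clinical factor) are assumptions made purely for illustration.

```python
from dataclasses import dataclass

# Simplified ABO rules: recipient type -> donor types it can accept.
# Assumption for illustration only; real matching involves far more than ABO.
ACCEPTABLE_DONORS = {
    "O": {"O"},
    "A": {"A", "O"},
    "B": {"B", "O"},
    "AB": {"A", "B", "AB", "O"},
}

# Roles named in the text as independent validators.
REQUIRED_ROLES = {"transplant surgeon", "transplant coordinator", "procuring surgeon"}


def abo_compatible(donor: str, recipient: str) -> bool:
    """Return True if the donor ABO type is acceptable for the recipient."""
    return donor in ACCEPTABLE_DONORS[recipient]


@dataclass(frozen=True)
class Verification:
    """One person's independent reading of the donor and recipient types."""
    role: str
    donor_type: str
    recipient_type: str


def cleared_to_proceed(verifications: list[Verification]) -> bool:
    """Proceed only if every required role has checked and every check passes."""
    roles_seen = {v.role for v in verifications}
    if not REQUIRED_ROLES <= roles_seen:
        return False  # at least one required independent check is missing
    return all(abo_compatible(v.donor_type, v.recipient_type) for v in verifications)


# Hypothetical example: a Type A donor and a Type O recipient, checked by all roles.
checks = [Verification(role, "A", "O") for role in sorted(REQUIRED_ROLES)]
print(cleared_to_proceed(checks))  # False: Type O can accept only Type O
```

The design point is that a single missed or mistaken check cannot clear the transplant on its own: the sketch fails closed when any required role is absent or any reading is incompatible.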

These tragedies of medical errors emphasize the most important point made by the Institute of Medicine in its various reports on patient safety (Institute of Medicine Committee on Quality of Health Care in America, 2001; Kohn, et al., 1999): systems and processes of care need to be redesigned to prevent and/or mitigate the impact of medical errors.

A major area of patient safety is medication errors and adverse drug events (ADEs) (Institute of Medicine, 2006). A series of studies by Leape, Bates and colleagues showed that medication errors and ADEs are frequent (D.W. Bates, Leape, & Petrycki, 1993), that only about 1% of medication errors lead to ADEs (D. W. Bates, Boyle, Vander Vliet, et al., 1995), that various system factors, such as inadequate availability of patient information, affect medication safety (L.L. Leape, et al., 1995), and that medication errors and ADEs are more frequent in intensive care units, primarily because of the volume of medications prescribed and administered (Cullen, et al., 1997). Medication safety is a worldwide problem. For instance, a Canadian study of medication errors and ADEs found that 7.5% of hospital admissions resulted in ADEs; about 37% of the ADEs were preventable and 21% resulted in death (Baker, et al., 2004).

Patient safety has received attention from international health organizations. In 2004, the World Health Organization (WHO) launched the World Alliance for Patient Safety. The World Alliance for Patient Safety has targeted the following patient safety issues: prevention of healthcare-associated infections, hand hygiene, surgical safety, and patient engagement [http://www.who.int/patientsafety/en/]. For instance, the WHO issued guidelines to ensure the safety of surgical patients. The implementation of these guidelines was tested in an international study of 8 hospitals located in Jordan, India, the US, Tanzania, the Philippines, Canada, England, and New Zealand (Haynes, et al., 2009). Each of the 8 hospitals used a surgical safety checklist that identified best practices during the following surgery stages: sign in (e.g., verifying patient identity and surgical site and procedure), time out (e.g., confirming patient identity) and sign out (e.g., review of key concerns for the recovery and care of the patient). Overall results showed that the intervention was successful: the death rate decreased from 1.5% to 0.8% and the complication rate decreased from 11% of patients to 7% of patients after introduction of the checklist. However, the effectiveness of the intervention varied significantly across the hospitals: 4 of the 8 hospitals displayed significant decreases in complications; 3 of these 4 hospitals also had decreases in death rates. To fully assess the implementation of this patient safety intervention and its effectiveness, one would have to understand the specific context or system in which the intervention was implemented, as well as the specific processes that were redesigned because of the intervention. This type of analysis would call for expertise in the area of human factors and systems engineering.
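To put the checklist results in perspective, the before and after rates quoted above can be converted into absolute and relative reductions. The short Python sketch below uses only the rounded percentages given in the text; the function names are illustrative, and the results are unadjusted figures rather than the statistical analysis reported by Haynes et al.

```python
def absolute_reduction(before: float, after: float) -> float:
    """Difference between the two rates, in the same units as the rates."""
    return before - after


def relative_reduction(before: float, after: float) -> float:
    """Reduction expressed as a fraction of the baseline rate."""
    return (before - after) / before


# Rates quoted in the text: (before checklist, after checklist).
rates = {
    "deaths": (0.015, 0.008),
    "complications": (0.11, 0.07),
}

for outcome, (before, after) in rates.items():
    arr = absolute_reduction(before, after)
    rrr = relative_reduction(before, after)
    print(f"{outcome}: absolute reduction {arr:.1%}, relative reduction {rrr:.0%}")
```

With these inputs the relative reductions come out at roughly 47% for deaths and 36% for complications, while the absolute reductions are 0.7 and 4 percentage points. Both views matter when interpreting a safety intervention.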

Some care settings or care situations are particularly prone to hazards, errors and system failures. For instance, in intensive care units (ICUs), patients are vulnerable, their care is complex and involves multiple disciplines and varied sources of information, and numerous activities are performed in patient care; all of these factors increase the likelihood and impact of medical errors. A study of medical errors in a medical ICU and a coronary care unit showed that about 20% of the patients admitted to the units experienced an adverse event and that 45% of the adverse events were preventable (Rothschild, et al., 2005). The most common errors involved in preventable adverse events were: prevention and diagnostic errors, medication errors, and preventable nosocomial infections. Various work system factors are related to patient safety problems in ICUs, such as not having daily rounds by an ICU physician (Pronovost, et al., 1999) and inadequate ICU nursing staffing and workload (Carayon & Gurses, 2005; Pronovost, et al., 1999). Bracco et al. (2000) found a total of 777 critical incidents in an ICU over a 1-year period: 31% were human-related incidents (human errors) that were evenly distributed among planning, execution, and surveillance. Planning errors had more severe consequences than other problems. The authors recommended timely, appropriate care to avoid planning and execution mishaps. Computerized provider order entry (CPOE) may greatly enhance the timeliness of medication delivery by increasing the efficiency of the medication process and shortening the time between prescribing and administration.

Several studies have examined types of error in ICUs. Giraud et al. (1993) conducted a prospective, observational study to examine iatrogenic complications. Thirty-one percent of the admissions had iatrogenic complications, and human errors were involved in 67% of those complications. The risk of ICU mortality was about two-fold higher for patients with complications. Donchin et al. (1995) estimated a rate of 1.7 errors per ICU patient per day. A human factors analysis showed that most errors could be attributed to poor communication between physicians and nurses. Cullen and colleagues (1997) compared the frequency of ADEs and potential ADEs in ICUs and non-ICUs. Incidents were reported directly by nurses and pharmacists and were also detected by daily review of medical records. The rate of preventable ADEs and potential ADEs in ICUs was 19 events per 1,000 patient days, nearly twice the rate in non-ICUs. However, when adjusting for the number of drugs used, no differences were found between ICUs and non-ICUs. In the ICUs, ADEs and potential ADEs occurred mostly at the prescribing stage (28% to 48% of the errors) and at the administration stage (27% to 56%). The rate of preventable and potential ADEs (calculated over 1,000 patient-days) was actually significantly higher in the medical ICU (2.5%) than in the surgical ICU (1.4%) (Cullen, Bates, Leape, & The Adverse Drug Event Prevention Study Group, 2001; Cullen, et al., 1997). In a systems analysis of the causes of these ADEs, Leape et al. (1995) found that the majority of systems failures (representing 78% of the errors) were due to impaired access to information, e.g., availability of patient information and order transcription. Cimino et al. (2004) examined medication prescribing errors in nine pediatric ICUs. Before the implementation of various interventions, 11.1% of the orders had at least one prescribing error. After the implementation of the interventions (dosing assists, communication/education, and floor stocks), the rate of prescribing errors went down to 7.6% (a relative decrease of about 32%).

The research on patient safety in ICUs shows that errors are frequent in ICUs. However, this may be due to the volume of activities and tasks (Cullen, et al., 1997). ICU patients receive about twice as many drugs as those on general care units (Cullen, et al., 2001). System-related human errors seem to be particularly prevalent in ICUs. Multiple suggestions have been made for reducing errors in ICUs, such as improving communication between nurses and physicians (Donchin, et al., 1995); improving access to information (L.L. Leape, et al., 1995); providing timely, appropriate care (Bracco, et al., 2000); and integrating various types of computer technology, including CPOE (Varon & Marik, 2002).
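As a check on the change in prescribing error rate reported for the study by Cimino et al. (2004), the relative change can be worked out from the two rounded percentages given in the text:

```latex
\[
\text{relative decrease}
  = \frac{11.1\% - 7.6\%}{11.1\%}
  = \frac{3.5}{11.1}
  \approx 0.32,
\qquad
\frac{7.6\%}{11.1\%} \approx 0.68 .
\]
```

That is, the post-intervention rate is about 68% of the baseline rate, which corresponds to a relative decrease of roughly 32% and an absolute decrease of 3.5 percentage points.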

Another high-risk care process is transition of care. In today’s healthcare system, patients are experiencing an increasing number of transitions of care. Transitions occur when patients are transferred from one care setting to another, from one level or department to another within a care setting, or from one care provider to another (Clancy, 2006). This time of transition is considered an interruption in the continuity of care for patients and has been defined as a gap, or a discontinuity, in care (Beach, Croskerry, & Shapiro, 2003; R.I. Cook, Render, & Woods, 2000). Each transition requires the transfer of all relevant information from one entity to the next, as well as the transfer of authority and responsibility (Perry, 2004; Wears, et al., 2004; Wears, et al., 2003). Concerns for patient safety arise when any or all of these elements are not effectively transferred during the transition (e.g., incorrect or incomplete information is transferred or confusion exists regarding responsibility for patients or orders) (Wears, et al., 2003). Transitions may be influenced by poor communication and inconsistency in care (Schultz, Carayon, Hundt, & Springman, 2007), both of which have been identified as factors threatening the quality and safety of care that patients receive (Beach, et al., 2003; JCAHO, 2002). Poor transitions can have a negative impact on patient care, such as delays in treatment and adverse events.
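The three elements that a transition must transfer (information, authority and responsibility) can be made concrete with a small Python sketch. The `Handoff` record, its field names and the example items are hypothetical and serve only to illustrate how gaps in a transition might be enumerated; they do not represent an established handoff tool.

```python
from dataclasses import dataclass, field


@dataclass
class Handoff:
    """Hypothetical record of one transition of care."""
    sender: str
    receiver: str
    # Maps each relevant information item to whether it was actually transferred.
    information_items: dict[str, bool] = field(default_factory=dict)
    authority_transferred: bool = False
    responsibility_acknowledged: bool = False


def handoff_gaps(handoff: Handoff) -> list[str]:
    """List the elements of the transition that were not effectively transferred."""
    gaps = [
        f"information not transferred: {item}"
        for item, transferred in handoff.information_items.items()
        if not transferred
    ]
    if not handoff.authority_transferred:
        gaps.append("authority not transferred")
    if not handoff.responsibility_acknowledged:
        gaps.append("responsibility not acknowledged by receiver")
    return gaps


# Illustrative example: one information item is missing and responsibility is unclear.
example = Handoff(
    sender="inpatient unit",
    receiver="long-term care facility",
    information_items={"medication list": True, "pending test results": False},
    authority_transferred=True,
    responsibility_acknowledged=False,
)
for gap in handoff_gaps(example):
    print(gap)
```

An empty list from `handoff_gaps` would indicate that, in this simplified view, all three elements were transferred; any entry marks a potential discontinuity in care.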

Several studies have documented possible associations between transitions and increased risks of patients experiencing an adverse event, particularly in patient transitions from the hospital to home or long-term care (Boockvar, et al., 2004; Coleman, Smith, Raha, & Min, 2005; Forster, et al., 2004; Moore, Wisnivesky, Williams, & McGinn, 2003) or at admission to hospital (Tam, et al., 2005). Associations have been found between medical errors and increased risk for rehospitalization resulting from poor transitions between the inpatient and outpatient setting (Moore, et al., 2003). Transitions involving medication changes from hospital to long-term care have been shown to be a likely cause of adverse drug events (Boockvar, et al., 2004). Patients prescribed long-term medication therapy with warfarin were found at higher risk for discontinuation of their medication after elective surgical procedures (Bell, et al., 2006). Although transitions have been shown to be critical points at which failure may occur, they may also be considered as critical points for potential recovery from failure (Clancy, 2006; Cooper, 1989). If reevaluations take place on the receiving end, certain information that was not revealed or addressed previously may be discovered or errors may be caught at this point (Perry, 2004; Wears, et al., 2003).

Despite the increased attention towards patient safety, it is unclear whether we are actually making any progress in improving patient safety (Charles Vincent, et al., 2008). Several reasons for this lack of progress or lack of measurable progress include: lack of reliable data on patient safety at the national level (Lucian L. Leape & Berwick, 2005; Charles Vincent, et al., 2008) or at the organizational level (Farley, et al., 2008; Shojania, 2008; Charles Vincent, et al., 2008), difficulty in engaging clinicians in patient safety improvement activities (Hoff, 2008; Charles Vincent, et al., 2008), and challenges in redesigning and improving complex healthcare systems and processes (Lucian L. Leape & Berwick, 2005; Weinert & Mann, 2008). The latter reason for limited improvement in patient safety is directly related to the discipline of human factors and systems engineering. The 2005 report by the National Academy of Engineering and the Institute of Medicine clearly articulated the need for increased involvement of human factors and systems engineering to improve healthcare delivery (Reid, Compton, Grossman, & Fanjiang, 2005). In the rest of the chapter, we will examine various conceptual frameworks and approaches to patient safety; this knowledge is important as we need to understand the “basics” of patient safety in order to implement effective solutions that do not have negative unintended consequences. We then discuss system redesign and related issues, including the role of health information technology in patient safety. The final section of the chapter describes various human factors and systems engineering tools that can be used for improving patient safety.

2. CONCEPTUAL APPROACHES TO PATIENT SAFETY

Different approaches to patient safety have been proposed. In this section, we describe conceptual frameworks based on models and theories of human error and organizational accidents (section 2.1), frameworks that focus on the patient care process and system interactions (section 2.2), and models that link healthcare professionals’ performance to patient safety (section 2.3). In the last part of this section (section 2.4), we describe the SEIPS [Systems Engineering Initiative for Patient Safety] model of work system and patient safety that integrates many elements of these other models (Carayon, et al., 2006).

2.1 Human Errors and Organizational Accidents

The 1999 report by the IOM on “To Err is Human: Building a Safer Health System” highlighted the role of human errors in patient safety (Kohn, et al., 1999). There is a rich literature on human error and its role in accidents. The human error literature has been very much inspired by the work of Rasmussen (Rasmussen, 1990; Rasmussen, Pejtersen, & Goodstein, 1994) and Reason (1997), which distinguishes between latent and active failures. Latent conditions are “the inevitable ‘resident pathogens’ within the system” that arise from decisions made by managers, engineers, designers and others (Reason, 2000, p.769). Active failures are actions and behaviors that are directly involved in an accident: (1) action slips or lapses (e.g., picking up the wrong medication), (2) mistakes (e.g., because of lack of medication knowledge, selecting the wrong medication for the patient), and (3) violations or work-arounds (e.g., not checking patient identification before medication administration). In the context of health care and patient safety, a distinction is made between the “sharp” end (i.e. the work of practitioners and other people who are in direct contact with patients) and the “blunt” end (i.e. the work of healthcare management and other organizational staff) (R.I. Cook, Woods, & Miller, 1998), which is roughly similar to the distinction between active failures and latent conditions.

Vincent and colleagues (2000; 1998) have proposed an organizational accident model based on the research by Reason (1990, 1997). According to this model, accidents or adverse events happen as a consequence of latent failures (i.e. management decisions, organizational processes) that create conditions of work (i.e. workload, supervision, communication, equipment, knowledge/skill), which in turn produce active failures. Barriers or defenses may prevent active failures from turning into adverse events. This model defines 7 categories of system factors that can influence clinical practice and may result in patient safety problems: (1) institutional context, (2) organizational and management factors, (3) work environment, (4) team factors, (5) individual (staff) factors, (6) task factors, and (7) patient characteristics.

Another application of Rasmussen’s conceptualization of human errors and organizational accidents focuses on the temporal process by which accidents may occur. Cook and Rasmussen (2005) describe how safety may be compromised when healthcare systems operate at almost maximum capacity. Under such circumstances, operations begin to migrate towards the marginal boundary of safety, thereby putting the system at greater risk for accidents. This migration is influenced by management pressure towards efficiency and the gradient towards least effort, which result from the need to operate at maximum capacity.

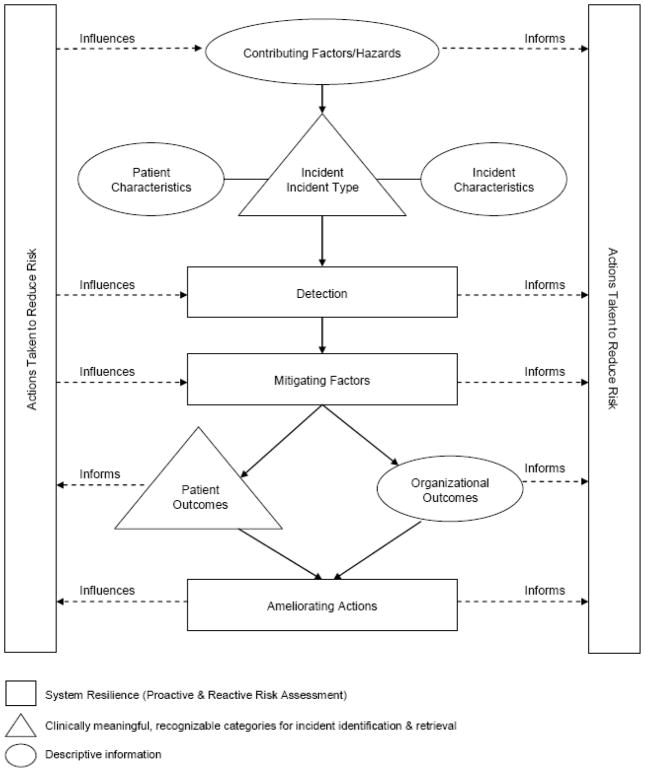

An extension of the human error and organizational accidents approach is illustrated by the work done by the World Alliance for Patient Safety to develop an international classification and a conceptual framework for patient safety. The International Classification for Patient Safety of the World Health Organization’s World Alliance for Patient Safety is a major effort at standardizing the terminology used in patient safety (Runciman, et al., 2009; The World Alliance For Patient Safety Drafting Group, et al., 2009). The conceptual framework for the international classification can be found in Figure 1 (The World Alliance For Patient Safety Drafting Group, et al., 2009). Patient safety incidents are at the core of the conceptual framework; incidents can be categorized into healthcare-associated infection, medication and blood/blood products, for instance (Runciman, et al., 2009). The conceptual framework shows that contributing factors or hazards can lead to incidents; incidents can be detected, mitigated (i.e. preventing or moderating patient harm), or ameliorated (i.e. actions occurring after the incident to improve or compensate for harm).

Figure 1.

Conceptual Framework for the International Classification for Patient Safety of the World Health Organization’s World Alliance for Patient Safety (The World Alliance For Patient Safety Drafting Group, et al., 2009)

These different models of human errors and organizational accidents are important in highlighting (1) different types of errors and failures (e.g., active errors versus latent failures; sharp end versus blunt end), (2) the key role of latent factors (e.g., management and organizational issues) in patient safety (Rasmussen, et al., 1994; Reason, 1997), (3) error recovery mechanisms (Runciman, et al., 2009; The World Alliance For Patient Safety Drafting Group, et al., 2009), and (4) deterioration over time that can lead to accidents (R. Cook & Rasmussen, 2005). These models help to unveil the basic mechanisms and pathways that lead to patient safety incidents. It is also important to examine patient care processes and the various interactions that occur along the patient journey that can create the hazards leading to patient safety incidents.

2.2 Patient Journey and System Interactions

Patient safety is about the patient after all (P. F. Brennan & Safran, 2004). Patient centeredness is one of the six improvement aims of the Institute of Medicine (Institute of Medicine Committee on Quality of Health Care in America, 2001): patient-centered care is “care that is respectful of and responsive to individual patient preferences, needs, and values” and care that ensures “that patient values guide all clinical decisions” (page 6). Patient-centered care is very much related to patient safety. For instance, to optimize information flow and communication, experts recommend that families be engaged in a relationship with physicians and nurses that fosters exchange of information as well as decision making that considers family preferences and needs (Stucky, 2003). Patient-centered care may actually be safer care.

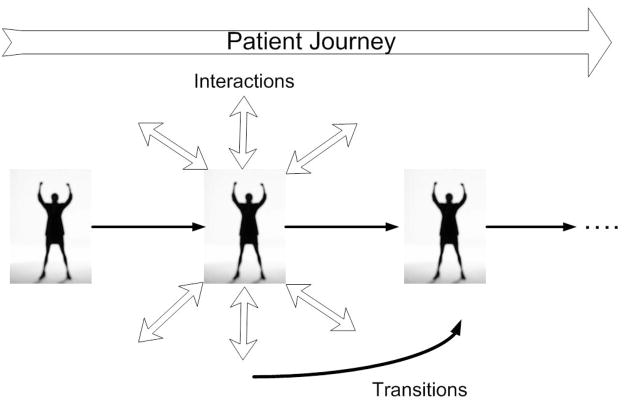

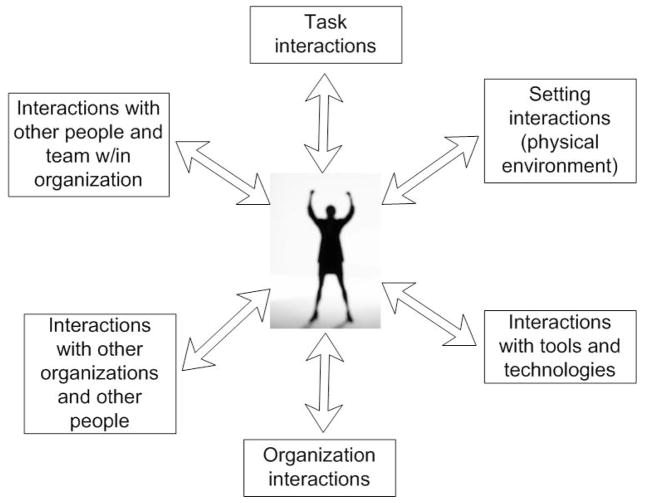

Care to patients is provided through a myriad of interactions between various individuals: the patients themselves, their families and friends, healthcare providers, and various other staff. These interactions involve a multitude of organizations, such as hospitals, large clinical practices, physician offices, nursing homes, pharmacies, home care agencies, and ambulatory surgery centers. These interactions among various individuals and organizations are a unique feature of ‘production’ within healthcare. As explained by Gaba (2000), health care is a system of complex interactions and tight coupling that make it vulnerable to normal accidents. Care is ‘produced’ during a myriad of interactions with varying levels of success, i.e. with various levels of quality and safety. These interactions occur over time, and therefore produce transitions of care that influence each other and accumulate over the journey of the patient care process. Figure 2 depicts the patient journey, showing various interactions occurring at each step of the patient care process and the transitions of care or patient handoffs happening over time. A patient handoff occurs when patient care requires a change in care setting or provider. Each handoff in the patient journey involves various interactions of the patient and the healthcare provider with a task (typically information sharing), other people, tools and technologies, and a physical, social and organizational context (see Figure 3). The growing number of transitions of care, due in part to an increasing number of shift changes and increased fragmentation of care, along with a heightened focus on patient safety, demonstrates the need for safe and effective interactions to achieve successful patient handoffs.

Figure 2.

Patient Journey or Patient Care Process

Figure 3.

Human Factors Model of Interactions (adapted from Wilson (2000) and Carayon and Smith (2000))

Example

Let us examine the example of a patient care process to illustrate various interactions and the patient journey. See Table 1 for a description of a patient care process that shows several instances of interactions:

Table 1.

Example of a Patient Care Process

| A 68-year-old male with a history of COPD (Chronic Obstructive Pulmonary Disease) and poor pulmonary function is scheduled to have surgery to remove a lesion from his liver. Prior to coming to the work-up clinic located at the hospital for his pre-operative work-up, the patient was to have a pulmonary function test, but due to a bad cough, had to reschedule. There was some confusion as to whether he should have the test done at the facility near his home or have it done the day of his work-up visit. Several calls were made between the midlevel provider *, the facility’s pulmonary department, the patient, and the patient’s family to coordinate the test, which was then conducted the day before the work-up visit. The results from this test were faxed to the work-up clinic. However, the patient also had an appointment with his cardiologist back home prior to the work-up and the records from the cardiologist were not sent to the hospital by the day of the work-up. |

| The midlevel provider begins by contacting the patient’s cardiologist to request that his records be sent as soon as possible. The midlevel provider is concerned about the patient’s pulmonary status and would like someone from the hospital’s pulmonary department to review the patient’s test results and evaluate his condition for surgery. After some searching, the midlevel determines the number to call and pages pulmonary. In the meantime, the midlevel provider checks the patient’s online record, discovering that the patient did not have labs done this morning as instructed, and informs the patient that he must have his labs done before he leaves the hospital. Late in the day and after several pages, the midlevel provider hears back from the pulmonary department stating that they cannot fit the patient in yet today, but can schedule him for the following morning. Since the patient is from out of town, he would prefer to see his regular pulmonary physician back home. The midlevel provider will arrange for this appointment and be in touch with the patient and his family about the appointment and whether or not they will be able to go ahead with the surgery as planned in less than two weeks. |

* A midlevel provider is a medical provider who is not a physician but is licensed to diagnose and treat patients under the supervision of a physician; it is typically a physician assistant or a nurse practitioner (The American Heritage® Stedman’s Medical Dictionary, 2nd Edition, 2004, Published by Houghton Mifflin Company).

The example illustrates several types of interactions:

- Task interactions: results of the pulmonary function test faxed to the clinic, and the midlevel provider’s communication with the patient’s cardiologist
- Interpersonal interactions between the midlevel provider, the pulmonary department and the patient and his family in order to coordinate the pulmonary function test
- Interactions between the midlevel provider and several tools and technologies, such as the fax machine used to receive test results and the patient’s online record
- Interactions between the midlevel provider and outside organizations, such as the patient’s cardiologist.

The example also shows several types of patient handoffs:

- Handoff from the patient’s cardiologist to the midlevel provider
- Handoff between the midlevel provider and the pulmonary department
- Handoff between the midlevel provider and the patient’s own pulmonary physician.

The High Reliability Organizing (HRO) approach developed by the Berkeley group (K. H. Roberts & R. Bea, 2001; K. H. Roberts & R. G. Bea, 2001) and the Michigan group (Weick & Sutcliffe, 2001) emphasizes the need for mindful interactions. Throughout the patient journey, we need to build systems and processes that allow various process owners and stakeholders to enhance mindfulness. Five HRO principles influence mindfulness: (1) tracking small failures, (2) resisting oversimplification, (3) sensitivity to operations, (4) resilience, and (5) deference to expertise (Weick & Sutcliffe, 2001). First, patient safety may be enhanced in an organizational culture and structure that is continuously preoccupied with failures. This would encourage reporting of errors and near misses, and learning from these failures. Second, understanding the complex, changing and uncertain work systems and processes in health care would allow healthcare organizations to have a more nuanced and realistic understanding of their operations and to begin to anticipate potential failures by designing better systems and processes. Third, patient safety can be enhanced by developing a deep understanding of both the sharp and blunt ends of healthcare organizations. Fourth, since errors are inevitable, systems need to allow people to detect, correct and recover from those errors. Finally, because healthcare work systems and processes are complex, the application of the requisite variety principle would lead to diversity and ‘migration’ to expertise. These five HRO principles can enhance transitions of care and interactions throughout the patient journey.

Examining the patient journey and the various vulnerabilities that may occur throughout the interactions of the patient care process provides important insights regarding patient safety. Another important view on patient safety focuses on the healthcare professionals and their performance.

2.3 Performance of Healthcare Professionals

Patient safety is about the patient, but requires that healthcare professionals have the right tools and environment to perform their tasks and coordinate their effort. Therefore, it is important to examine patient safety models that focus on the performance of healthcare professionals.

Bogner (2007) proposed the “Artichoke” model of systems factors that influence behavior. The interactions between providers and patients are the core of the system and represent the means of providing care. Several system layers influence these interactions: ambient conditions, physical environment, social environment, organizational factors, and the larger environment (e.g., legal-regulatory-reimbursement). Karsh et al. (2006) have proposed a model of patient safety that defines various characteristics of performance of the healthcare professional who delivers care. The performance of the healthcare professional can be categorized into (1) physical performance (e.g., carrying, injecting, charting), (2) cognitive performance (e.g., perceiving, communicating, analyzing, awareness) and (3) social/behavioral performance (e.g., motivation, decision-making). Performance can be influenced by various characteristics of the work system, including characteristics of the ‘worker’ and his/her patients and their organization, as well as the external environment.

Efforts targeted at improving patient safety, therefore, need to consider the performance of healthcare providers and the various work system factors that hinder their ability to do their work, i.e. performance obstacles (Carayon, Gurses, Hundt, Ayoub, & Alvarado, 2005; A. Gurses, Carayon, & Wall, 2009; A. P. Gurses & Carayon, 2007).

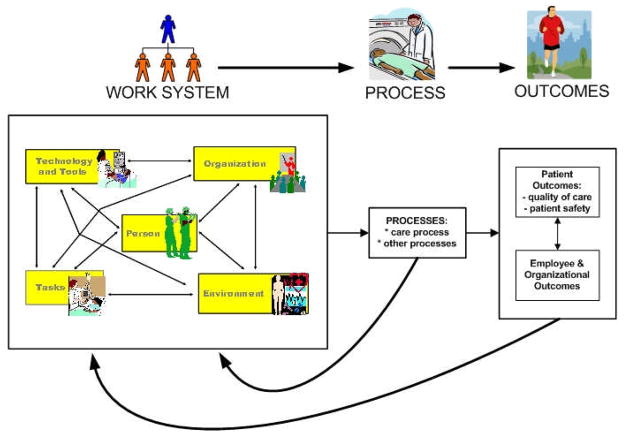

2.4 SEIPS Model of Work System and Patient Safety

The various models reviewed in previous sections emphasize specific aspects such as human error, patient care process and performance of healthcare professionals. In this section, we describe the SEIPS [Systems Engineering Initiative for Patient Safety] model of work system and patient safety as a conceptual framework that integrates many of the aspects described in other models (Carayon, et al., 2006). See Figure 4 for a graphical representation of the SEIPS model of work system and patient safety.

Figure 4.

SEIPS Model of Work System and Patient Safety (Carayon, et al., 2006)

The SEIPS model is based on Donabedian’s (1978) model of quality. According to Donabedian (1978), quality can be conceptualized with regard to structure, process or outcome. Structure is defined as the setting in which care occurs and has been described as including material resources (e.g., facilities, equipment, money), human resources (e.g., staff and their qualifications) and organizational structure (e.g., methods of peer review, methods of reimbursement) (Donabedian, 1988). Process is “what is actually done in giving and receiving care” (Donabedian, 1988, page 1745). Patient outcomes are measured as the effects on health status of patients and populations (Donabedian, 1988). The SEIPS model is organized around the Structure-Process-Outcome model of Donabedian; it expands the ‘structure’ element by proposing the work system model of Smith and Carayon (Carayon & Smith, 2000; Smith & Carayon-Sainfort, 1989) as a way of describing the structure or system that can influence processes of care and outcomes. The SEIPS model also expands the outcomes by considering not only patient outcomes (e.g., patient safety) but also employee and organizational outcomes. In light of the importance of performance of healthcare professionals (see previous section), it is important to consider the impact of the work system on both patients and healthcare workers, as well as the potential linkage between patient safety and employee safety.

According to the SEIPS model of work system and patient safety (see Figure 4), patient safety is an outcome that results from the design of work systems and processes. Therefore, in order to improve patient safety, one needs to examine the specific processes involved and the work system factors that contribute either positively or negatively to processes and outcomes. In addition, patient safety is related to numerous individual and organizational outcomes. ‘Healthy’ healthcare organizations focus not only on the health and safety of their patients, but also on the health and safety of their employees (Murphy & Cooper, 2000; Sainfort, Karsh, Booske, & Smith, 2001).

3. PATIENT SAFETY AND SYSTEM REDESIGN

As emphasized throughout this chapter, medical errors and preventable patient harm can be avoided by a renewed focus on the design of work systems and processes. This type of system redesign effort requires competencies in engineering and health sciences. Redesigning a system can be challenging, especially in healthcare organizations that have limited technical infrastructure and technical expertise in human factors and systems engineering (Reid, et al., 2005).

3.1 Levels of System Design

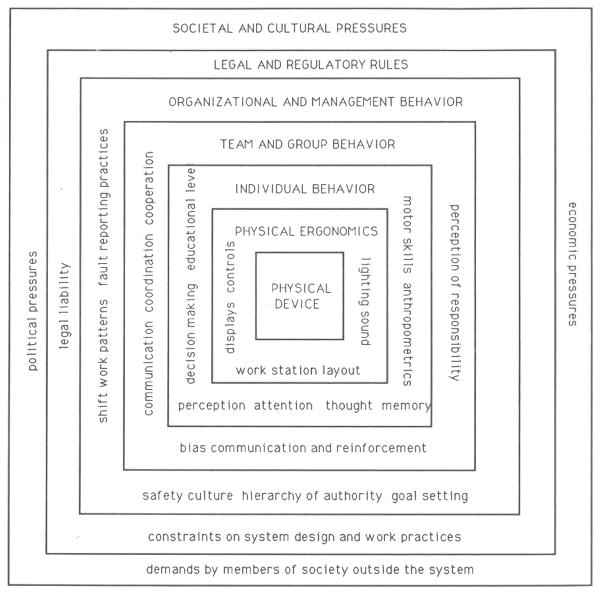

There is increasing recognition in the human factors literature of the different levels of factors that can contribute to human error and accidents (B. Karsh & Brown, 2009; Rasmussen, 2000). If the various factors are aligned ‘appropriately’ like ‘slices of Swiss cheese’, accidents can occur (Reason, 1990). Table 2 summarizes different approaches to the levels of factors contributing to human error. For instance, Moray (1994) has proposed a hierarchical systems model that defines multiple levels of human-system interaction (see Figure 5). The levels of system design are organized hierarchically as follows: physical devices, physical ergonomics, individual behavior, team and group behavior, organizational and management behavior, legal and regulatory rules, and societal and cultural pressures.

Table 2.

Levels of System Design

| AUTHORS | SYSTEM FACTORS CONTRIBUTING TO HUMAN ERROR |
|---|---|
| Rasmussen (2000): levels of a complex socio-technical system | Work; Staff; Management; Company; Regulators/associations; Government |
| Moray (1994): hierarchical systems approach that includes several layers | Physical device; Physical ergonomics; Individual behavior; Team and group behavior; Organizational and management behavior; Legal and regulatory rules; Societal and cultural pressures |
| Johnson (2002): four levels of causal factors that can contribute to human error in healthcare | Level 1 factors that influence the behavior of individual clinicians (e.g., poor equipment design, poor ergonomics, technical complexity, multiple competing tasks); Level 2 factors that affect team-based performance (e.g., problems of coordination and communication, acceptance of inappropriate norms, operation of different procedures for the same tasks); Level 3 factors that relate to the management of healthcare applications (e.g., poor safety culture, inadequate resource allocation, inadequate staffing, inadequate risk assessment and clinical audit); Level 4 factors that involve regulatory and government organizations (e.g., lack of national structures to support clinical information exchange and risk management) |
| For comparison, levels of factors contributing to quality and safety of patient care (Berwick, 2002; Institute of Medicine Committee on Quality of Health Care in America, 2001) | Level A – experience of patients and communities; Level B – microsystems of care, i.e. the small units of work that actually give the care that the patient experiences; Level C – health care organizations; Level D – health care environment |

Figure 5.

Hierarchical Model of Human-System Interaction (Moray, 1994)

It is interesting to draw a parallel between the different levels of factors contributing to human error and the levels identified to deal with quality and safety of care (Berwick, 2002; Institute of Medicine Committee on Quality of Health Care in America, 2001). The 2001 IOM report on Crossing the Quality Chasm defines four levels at which interventions are needed in order to improve the quality and safety of care in the United States: Level A-experience of patients and communities, Level B-microsystems of care, i.e. the small units of work that actually give the care that the patient experiences, Level C-health care organizations, and Level D-health care environment. These levels are similar to the hierarchy of levels of factors contributing to human error (see Table 2). Models and methods of human factors engineering can be particularly useful because of their underlying systems approach and capacity to integrate variables at various levels (Hendrick, 1991; Luczak, 1997; Zink, 2000). Hendrick (1997) has defined a number of ‘levels’ of human factors or ergonomics:

- human-machine: hardware ergonomics
- human-environment: environmental ergonomics
- human-software: cognitive ergonomics
- human-job: work design ergonomics
- human-organization: macroergonomics

Research at the first three levels has been performed in the context of quality of care and patient safety. Much still needs to be done at the levels of work design and at the macroergonomic level in order to design healthcare systems that produce high-quality safe patient care.

The levels of system design are interdependent and related to each other. In order to implement changes toward patient safety, it may be necessary to align incentives and motivation between the different levels. The case study of a radical change in a medical device manufacturer described by Vicente (2003) shows how improvements in the design of a medical device for patient safety did not occur until incentives between the different levels were aligned. A medical device manufacturer implemented a human factors approach after a number of events occurred and various pressures were put on the company. The events included several programming errors with a patient-controlled analgesia (PCA) pump sold by the company; some of the errors led to overdeliveries of analgesic and patient deaths. After multiple pressures from the FDA, various professional associations (e.g., ISMP), the government (e.g., Department of Justice) and public opinion (e.g., coverage in the lay press), the company finally established a human factors program in 2001. This case study shows that what happens at one level (e.g., manufacturer of the medical device) is related to other lower (e.g., patient deaths related to pump programming errors) and higher (e.g., regulatory agency) levels.

Patient safety improvement efforts should be targeted at all levels of system design. In addition, we need to ensure that incentives at various levels are aligned to encourage and support safe care.

3.2 Competencies for System Redesign

System redesign for patient safety requires competencies in (1) health sciences and (2) human factors and systems engineering.

As an example of the application of human factors and systems engineering to patient safety, Jack and colleagues (2009) developed, implemented and tested a redesign of the hospital discharge process. As was discussed earlier, transitions of care (e.g., patient discharge) are particularly vulnerable and have been related to numerous patient safety problems. Therefore, a team at Boston Medical Center redesigned the hospital discharge process by improving information flow and coordination. Three components of the discharge process were changed: (1) in-hospital discharge process, (2) care plan post-hospital discharge, and (3) follow-up with patient by pharmacist. Changes in the redesigned in-hospital discharge process included: communication with the patient (i.e. patient education and information about follow-up care), organization of post-discharge services and appointments for follow-up care, review of medication plan and other elements of the discharge plan, and transmission of discharge summary to appropriate parties (e.g., primary care provider of the patient). At the time of discharge, the patient was provided with a comprehensive written discharge plan. Post-discharge, a pharmacist followed up with the patient. This system redesign effort considers all important steps of the discharge process involved in the transition of care and the many interactions that occur in the discharge process (see Figure 3).

Patients who received the ‘intervention’ were less likely to be re-admitted or to visit the emergency department 30 days post-discharge. They were also more likely to visit their primary care provider. Survey data showed that patients involved in the redesigned discharge process felt more prepared for the discharge.

The study by Jack et al. (2009) is an interesting example of how system and process redesign can lead to benefits in quality and safety of care. It also shows that system redesign for patient safety requires knowledge in health sciences and human factors and systems engineering. In the Boston Medical Center study, the expertise in these various domains was distributed across members of the research team.

3.3 Challenges of System Redesign

It is important to emphasize that achieving patient safety is a constant process, similar to continuous quality improvement (Shortell et al., 1992). Safety cannot be ‘stored’; safety is an emergent system property that is created dynamically through various interactions between people and the system during the patient journey (see Figures 2 and 3). Some anticipatory system design can be performed using human factors knowledge (Carayon, Alvarado, & Hundt, 2003, 2006). Much is already known about various types of person/system interactions (e.g., usability of technology, appropriate task workload, teamwork) that can produce positive individual and organizational outcomes. However, health care is a dynamic complex system where people and system elements continuously change, therefore requiring constant vigilance and monitoring of the various system interactions and transitions.

When changes are implemented in healthcare organizations, opportunities are created to improve and recreate awareness and learning in order to foster mindfulness in interactions. Potentially adverse consequences to patients can occur when system interactions are faulty, inconsistent, error-laden or unclear between providers and those receiving or managing care (Bogner, 1994; Carayon, 2007; C. Vincent, et al., 1998). In order to maintain patient safety in healthcare organizations, healthcare providers, managers and other staff need to continuously learn (Rochlin, 1999), while reiterating or reinforcing their understanding as well as their expectations of the system of care being provided, a system that is highly dependent on ongoing interactions between countless individuals and, sometimes, organizations.

3.4 Role of Health Information Technology in Patient Safety

In healthcare, technologies are often seen as an important solution to improve quality of care and reduce or eliminate medical errors (David W. Bates & Gawande, 2003; Kohn, et al., 1999). These technologies include organizational and work technologies aimed at improving the efficiency and effectiveness of information and communication processes (e.g., computerized provider order entry and electronic medical record) and patient care technologies that are directly involved in the care processes (e.g., bar coding medication administration). For instance, the 1999 IOM report recommended adoption of new technology, like bar code administration technology, to reduce medication errors (Kohn, et al., 1999). However, implementation of new technologies in health care has not been without troubles or work-arounds (see, for example, the studies by Patterson et al. (2002) and Koppel et al. (2008) on potential negative effects of bar coding medication administration technology). Technologies change the way work is performed (Smith & Carayon, 1995) and because healthcare work and processes are complex, negative consequences of new technologies are possible (Battles & Keyes, 2002; R.I. Cook, 2002).

When looking for solutions to improve patient safety, technology is not necessarily the only solution. For instance, a study of the implementation of nursing information computer systems in 17 New Jersey hospitals showed many problems experienced by hospitals, such as delays and lack of software customization (Hendrickson, Kovner, Knickman, & Finkler, 1995). On the other hand, at least initially, nursing staff reported positive perceptions, in particular with regard to documentation (more readable, complete and timely). However, a more scientific quantitative evaluation of the quality of nursing documentation following the implementation of bedside terminals did not confirm those initial impressions (Marr, et al., 1993). This latter result was due to the low use of bedside terminals by the nurses. This technology implementation may have ignored the impact of the technology on the tasks performed by the nurses. Nurses may have needed time away from the patient’s bedside in order to organize their thoughts and collaborate with colleagues (Marr, et al., 1993). This study demonstrates the need for a systems approach to understand the impact of technology. For instance, instead of using the “leftover” approach to function and task allocation, a human-centered approach to function and task allocation should be used (Hendrick & Kleiner, 2001). This approach considers the simultaneous design of the technology and the work system in order to achieve a balanced work system. One possible outcome of this allocation approach would be to rely on human and organizational characteristics that can foster safety (e.g., autonomy provided at the source of the variance; human capacity for error recovery), instead of completely ‘trusting’ the technology to achieve high quality and safety of care.

Whenever implementing a technology, one should examine the potential positive AND negative influences of the technology on the other work system elements (Battles & Keyes, 2002; Kovner, Hendrickson, Knickman, & Finkler, 1993; Smith & Carayon-Sainfort, 1989). In a study of the implementation of an Electronic Medical Record (EMR) system in a small family medicine clinic, a number of issues were examined: impact of the EMR technology on work patterns, employee perceptions related to the EMR technology and its potential/actual effect on work, and the EMR implementation process (Carayon, Smith, Hundt, Kuruchittham, & Li, 2009). Employee questionnaire data showed the following impact of the EMR technology on work: increased dependence on computers was found, as well as an increase in quantitative workload and a perceived negative influence on performance occurring at least in part from the introduction of the EMR (Hundt, Carayon, Smith, & Kuruchittham, 2002). It is important to examine for what tasks technology can be useful to provide better, safer care (Hahnel, Friesdorf, Schwilk, Marx, & Blessing, 1992).

The human factors characteristics of the new technologies’ design (e.g., usability) should also be studied carefully (Battles & Keyes, 2002). An experimental study by Lin et al. (2001) showed the application of human factors engineering principles to the design of the interface of an analgesia device. Results showed that the new interface led to the elimination of drug concentration errors, and to the reduction of other errors. A study by Effken et al. (1997) shows the application of a human factors engineering model, i.e. the ecological approach to interface design, to the design of a haemodynamic monitoring device.

The new technology may also bring its own ‘forms of failure’ (Battles & Keyes, 2002; R.I. Cook, 2002; R. Koppel, et al., 2005; Reason, 1990). For instance, bar coding medication administration technology can prevent patient misidentifications, but the possibility exists that an error during patient registration may be disseminated throughout the information system and may be more difficult to detect and correct than with conventional systems (Wald & Shojania, 2001). A study by Koppel et al. (2005) describes how the design and implementation of computerized provider order entry in a hospital contributed to 22 types of medication errors that were categorized into: (1) information errors due to fragmentation and systems integration failure (e.g., failure to renew antibiotics because of delays in reapproval), and (2) human-machine interface flaws (e.g., wrong patient selection or wrong medication selection).

In addition, the manner in which a new technology is implemented is as critical to its success as its technological capabilities (K. D. Eason, 1982; Smith & Carayon, 1995). End user involvement in the design and implementation of a new technology is a good way to help ensure a successful technological investment. Korunka and his colleagues (C. Korunka & Carayon, 1999; Christian Korunka, Weiss, & Karetta, 1993; C. Korunka, Zauchner, & Weiss, 1997) have empirically demonstrated the crucial importance of end user involvement in the implementation of technology to the health and well-being of end users. The implementation of technology in an organization has both positive and negative effects on the job characteristics that ultimately affect individual outcomes (quality of working life, such as job satisfaction and stress; and perceived quality of care delivered or self-rated performance) (Carayon & Haims, 2001). Inadequate planning when introducing a new technology designed to decrease medical errors has led to technology falling short of achieving its patient safety goal (Kaushal & Bates, 2001; Patterson, et al., 2002). The most common reason for failure of technology implementations is that the implementation process is treated as a technological problem, and the human and organizational issues are ignored or not recognized (K. Eason, 1988). When a technology is implemented, several human and organizational issues are important to consider (Carayon-Sainfort, 1992; Smith & Carayon, 1995). According to the SEIPS model of work system and patient safety (Carayon, et al., 2006), the implementation of a new technology will have an impact on the entire work system, which will result in changes in processes of care and will therefore affect both patient and staff outcomes.

3.5 Link between Efficiency and Patient Safety

System redesign for patient safety should not be achieved at the expense of efficiency. On the contrary, it is important to recognize the possible synergies that can be obtained by patient safety and efficiency improvement efforts.

Efficiency issues related to access to intensive care services and crowding in emergency departments have been studied by Litvak and colleagues (McManus, et al., 2003; Rathlev, et al., 2007). Patients are often refused a bed in an intensive care unit; ICUs are well-known bottlenecks to patient flow. A study by McManus et al. (2003) shows that scheduled surgeries (as opposed to unscheduled surgeries and emergencies) can have a significant impact on rejections to the ICU. Although counterintuitive, this result demonstrates that scheduled surgeries can contribute to erratic patient flow and intermittent periods of extreme overload, and can thereby have a negative impact on ICUs. This clearly outlines the relationship between the efficiency of the scheduling process and the workload experienced by ICU staff, which is a well-known contributor to patient safety (Carayon & Alvarado, 2007; Carayon & Gurses, 2005). More broadly, Litvak et al. (2005) propose that unnecessary variability in healthcare processes contributes to nursing stress and patient safety problems. System redesign efforts aimed at removing or reducing unnecessary variability can improve both efficiency and patient safety.
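The effect of scheduling variability on ICU rejections can be illustrated with a deliberately simple sketch. The code below is not the model used by McManus et al. (2003) or Litvak et al. (2005); the function name `count_rejections`, the fixed length of stay, the bed capacity and the two admission schedules are all hypothetical values chosen only for illustration. It compares a smoothed schedule with a clustered schedule that requests the same total number of admissions.

```python
def count_rejections(daily_demand, capacity, length_of_stay):
    """Count admission requests refused because no bed is free.

    Toy assumptions (not from the cited studies): every admitted patient
    stays exactly `length_of_stay` days, and requests that cannot be met
    on the day they arrive are rejected rather than queued.
    """
    discharge_days = []  # one entry per occupied bed: the day it frees up
    rejected = 0
    for day, demand in enumerate(daily_demand):
        # Free the beds of patients whose stay has ended.
        discharge_days = [d for d in discharge_days if d > day]
        free_beds = capacity - len(discharge_days)
        admitted = min(demand, free_beds)
        rejected += demand - admitted
        discharge_days.extend([day + length_of_stay] * admitted)
    return rejected


# Hypothetical schedules: both request 20 admissions over 10 days.
smooth_schedule = [2] * 10
clustered_schedule = [4, 0] * 5

smooth_rejections = count_rejections(smooth_schedule, capacity=6, length_of_stay=3)
clustered_rejections = count_rejections(clustered_schedule, capacity=6, length_of_stay=3)

print("Rejections with smooth schedule:", smooth_rejections)
print("Rejections with clustered schedule:", clustered_rejections)
```

Under these toy assumptions, the smoothed schedule fits within capacity while the clustered schedule produces rejections, even though total demand is identical. Real ICU flow involves variable lengths of stay and unscheduled arrivals, so this sketch only conveys the direction of the effect.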

Improving the efficiency of care processes can have very direct impact on patient safety. For instance, the delay between prescription of an antibiotic medication and its administration to septic shock patients is clearly related to patient outcomes (Kumar, et al., 2006): each hour of delay in administration of antibiotic medication is associated with an average increase in mortality of 7.6%. Therefore, improving the efficiency and timeliness of the medication process can improve quality and safety of care.

4. HUMAN FACTORS AND SYSTEMS ENGINEERING TOOLS FOR PATIENT SAFETY

The need for human factors and systems engineering expertise is pervasive throughout healthcare organizations. For instance, knowledge about work system and physical ergonomics can be used for understanding the relationship between employee safety and patient safety. This knowledge will be important for the employee health department of healthcare organizations. Purchasing departments of healthcare organizations need to have knowledge about usability and user-centered design in order to ensure that the equipment and devices are ergonomically designed. Given the major stress and workload problems experienced by many nurses, nursing managers need to know about job stress and workload management. Risk managers are on the front line of patient safety accidents; they need to understand human errors and other mechanisms involved in accidents. With the push toward health information technology, issues of technology design and implementation are receiving increasing attention. People involved in the design and implementation of those technologies need to have basic knowledge about interface design and usability, as well as sociotechnical system design. Biomedical engineers in healthcare organizations and medical device manufacturers design, purchase and maintain various equipment and technologies and, therefore, need to know about usability and user-centered design. The operating room is an example of a healthcare setting in which teamwork coordination and collaboration are critical for patient safety; human factors principles of team training are very relevant for this type of care setting.

We believe that improvements in the quality and safety of health care can be achieved by better integrating human factors and systems engineering expertise throughout the various layers and units of healthcare organizations. Some of the barriers to the widespread dissemination of this knowledge in healthcare organizations include: lack of recognition of the importance of systems design in various aspects of healthcare, technical jargon and terminology of human factors and systems engineering, and need for development of knowledge regarding the application of human factors and systems engineering in healthcare.

Numerous books provide information on human factors methods (Salvendy, 2006; Stanton, Hedge, Brookhuis, Salas, & Hendrick, 2004; Wilson & Corlett, 2005). Human factors methods can be classified as: (1) general methods (e.g., direct observation of work), (2) collection of information about people (e.g., physical measurement of anthropometric dimensions), (3) analysis and design (e.g., task analysis, time study), (4) evaluation of human-machine system performance (e.g., usability, performance measures, error analysis, accident reporting), (5) evaluation of demands on people (e.g., mental workload), and (6) management and implementation of ergonomics (e.g., participative methods). This shows the diversity of human factors methods available to address various patient safety problems. In this section, we describe selected human factors methods that have been used to evaluate high-risk care processes and technologies.

4.1 Human Factors Evaluation of High-Risk Processes

Numerous methods can be used to evaluate high-risk processes in health care. FMEA (Failure Modes and Effects Analysis) is one method that can be used to analyze, redesign and improve healthcare processes to meet the Joint Commission’s National Patient Safety Goals. The National Patient Safety Center of the VA has adapted the industrial FMEA method to healthcare (DeRosier, Stalhandske, Bagian, & Nudell, 2002). FMEA or other proactive risk assessment techniques have been applied to a range of healthcare processes, such as blood transfusion (Burgmeier, 2002), organ transplant (Richard I. Cook, et al., 2007), medication administration with implementation of smart infusion pump technology (Wetterneck, et al., 2006), and use of computerized provider order entry (Bonnabry, et al., 2008).
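As a rough illustration of how a proactive risk assessment can prioritize failure modes, the sketch below computes the risk priority number (RPN) used in conventional industrial FMEA, i.e. the product of severity, occurrence and detection scores. This is a generic sketch, not the healthcare adaptation cited above, which may score hazards differently. The class `FailureMode`, the function `rank_failure_modes`, the 1 to 10 scales and the three example failure modes with their scores are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailureMode:
    """One potential failure in a process step (illustrative structure)."""
    step: str
    description: str
    severity: int    # 1 (negligible harm) to 10 (catastrophic harm)
    occurrence: int  # 1 (rare) to 10 (almost certain)
    detection: int   # 1 (almost always detected) to 10 (almost never detected)

    def rpn(self) -> int:
        """Risk priority number: severity x occurrence x detection."""
        for score in (self.severity, self.occurrence, self.detection):
            if not 1 <= score <= 10:
                raise ValueError("scores must be between 1 and 10")
        return self.severity * self.occurrence * self.detection


def rank_failure_modes(modes):
    """Return failure modes ordered from highest to lowest RPN."""
    return sorted(modes, key=lambda mode: mode.rpn(), reverse=True)


# Hypothetical failure modes and scores, for illustration only.
EXAMPLE_MODES = [
    FailureMode("scan patient wristband", "wristband unreadable, scan skipped", 8, 4, 6),
    FailureMode("program infusion pump", "wrong drug concentration entered", 9, 3, 7),
    FailureMode("document administration", "dose documented before it is given", 6, 5, 8),
]

for mode in rank_failure_modes(EXAMPLE_MODES):
    print(f"{mode.rpn():4d}  {mode.step}: {mode.description}")
```

In practice the scores come from a multidisciplinary team that has observed the actual process, and the ranking is a starting point for discussion rather than a definitive ordering of risks.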

Proactive risk analysis of healthcare processes needs to begin with a good understanding of the actual process. This often involves extensive data collection and analysis about the process. For instance, Carayon and colleagues (2007) used direct observations and interviews to analyze the vulnerabilities in the medication administration process and the use of bar coding medication administration technology by nurses. Such data collection and process analysis was guided and informed by the SEIPS model of work system and patient safety (Carayon, et al., 2006) (see Figure 4) in order to ensure that all system characteristics were adequately addressed in the process analysis.

The actual healthcare process may differ from organizational procedures for numerous reasons. For instance, a procedure may not have been updated after some technological or organizational change, or the procedure may have been written by people who do not have a full understanding of the work and its context. A key concept in human factors engineering is the difference between the ‘prescribed’ work and the ‘real’ work (Guerin, Laville, Daniellou, Duraffourg, & Kerguelen, 2006; Leplat, 1989). Therefore, whenever analyzing a healthcare process, one needs to gather information about the ‘real’ process and the associated work system characteristics in its actual context.

4.2 Human Factors Evaluation of Technologies

As discussed in a previous section, technologies are often presented as solutions to improve patient safety and prevent medical errors (Kohn, et al., 1999). Technologies can lead to patient safety improvements only if they are designed, implemented and used according to human factors and systems engineering principles (Sage & Rouse, 1999; Salvendy, 2006).

At the design stage, a number of human factors tools are available to ensure that technologies fit human characteristics and are usable (Mayhew, 1999; Nielsen, 1993). Usability evaluation and testing methods are increasingly used by manufacturers and vendors of healthcare technologies. Healthcare organizations are also more likely to request information about the usability of technologies they purchase. Fairbanks and Caplan (2004) describe examples of how poor interface design of technologies used by paramedics can lead to medical errors. Gosbee and Gosbee (2005) provide practical information about usability evaluation and testing at the stage of technology design.

At the implementation stage, it is important to consider the rich literature on technological and organizational change that lists principles for ‘good’ technology implementation (C. Korunka & Carayon, 1999; Ch. Korunka, Weiss, & Zauchner, 1997; Smith & Carayon, 1995; Weick & Quinn, 1999). For instance, a review of literature by Karsh (2004) highlights the following principles for technology implementation to promote patient safety:

- top management commitment to the change
- responsibility and accountability structure for the change
- structured approach to the change
- training
- pilot testing
- communication
- feedback
- simulation
- end user participation

Even after a technology has been implemented, it is important to continue to monitor its use in the ‘real’ context and to identify potential problems and work-arounds. About 2–3 years after the implementation of bar coding medication administration (BCMA) technology in a large academic medical center, a study of nurses’ use of the technology showed a range of work-arounds (Carayon, et al., 2007). For instance, nurses had developed work-arounds to be able to administer medications to patients in isolation rooms: it was very difficult for nurses to use the BCMA handheld device wrapped in a plastic bag; therefore, the medication was often scanned and documented as administered before the nurse entered the patient room and administered the medication. This type of work-around results from a lack of fit between the context (i.e. patient in isolation room), the technology (i.e. BCMA handheld device) and the nurses’ task (i.e. medication administration). Some of these interactions may not be anticipated at the stage of designing the technology and may be ‘visible’ only after the technology is in use in the real context. This emphasizes the need to adopt a ‘continuous’ technology change approach that identifies problems associated with the technology’s use (Carayon, 2006; Weick & Quinn, 1999).

5. CONCLUSION

Improving patient safety involves major system redesign of healthcare work systems and processes (Carayon, et al., 2006). This chapter has outlined important conceptual approaches to patient safety; we have also discussed issues about system redesign and presented examples of human factors and systems engineering tools that can be used to improve patient safety. Additional information about human factors and systems engineering in patient safety is available elsewhere (see, for example, Carayon (2007) and Bogner (1994)).

Improving patient safety requires knowledge and skills in a range of disciplines, in particular health sciences and human factors and systems engineering. This is in line with the main recommendation by the NAE/IOM report on “Building a Better Delivery System. A New Engineering/Health Care Partnership” (Reid, et al., 2005). A number of partnerships between engineering and health care have grown and emerged since the publication of the NAE/IOM report. However, more progress is required, in particular in the area of patient safety. We need to train clinicians in human factors and systems engineering and to train engineers in health systems engineering; this major education and training effort should promote collaboration between the health sciences and human factors and systems engineering in various patient safety improvement projects. An example of this educational effort is the yearly week-long course on human factors engineering and patient safety taught by the SEIPS [Systems Engineering Initiative for Patient Safety] group at the University of Wisconsin-Madison [http://cqpi.engr.wisc.edu/seips_home/]. Similar efforts and more extensive educational offerings are necessary to train future healthcare leaders, professionals and engineers.

Acknowledgments

This publication was partially supported by grant 1UL1RR025011 from the Clinical & Translational Science Award (CTSA) program of the National Center for Research Resources, National Institutes of Health (PI: M. Drezner) and by grant 1R01 HS015274-01 from the Agency for Healthcare Research and Quality (PI: P. Carayon, co-PI: K. Wood).

Biography

Pascale Carayon is Procter & Gamble Bascom Professor in Total Quality and Associate Chair in the Department of Industrial and Systems Engineering and the Director of the Center for Quality and Productivity Improvement (CQPI) at the University of Wisconsin-Madison. She received her Engineer diploma from the Ecole Centrale de Paris, France, in 1984 and her Ph.D. in Industrial Engineering from the University of Wisconsin-Madison in 1988. Her research examines systems engineering, human factors and ergonomics, sociotechnical engineering and occupational health and safety, and has been funded by the Agency for Healthcare Research and Quality, the National Science Foundation, the National Institutes of Health (NIH), the National Institute for Occupational Safety and Health, the Department of Defense, various foundations and private industry. Dr. Carayon leads the Systems Engineering Initiative for Patient Safety (SEIPS) at the University of Wisconsin-Madison (http://cqpi.engr.wisc.edu/seips_home).

Contributor Information

Pascale Carayon, Procter & Gamble Bascom Professor in Total Quality in the Department of Industrial and Systems Engineering, University of Wisconsin-Madison.

Kenneth E. Wood, University of Wisconsin-Madison, Senior Director of Medical Affairs and Director of Critical Care Medicine and Respiratory Care at the University of Wisconsin Hospital and Clinics.

References

- Baker GR, Norton PG, Flintoft V, Blais R, Brown A, Cox J, et al. The Canadian adverse events study: The incidence of adverse events among hospital patients in Canada. Canadian Medical Association Journal. 2004;170(11):1678–1686. doi: 10.1503/cmaj.1040498.

- Bates DW, Boyle DL, Vander Vliet MB, et al. Relationship between medication errors and adverse drug events. Journal of General Internal Medicine. 1995;10(4):199–205. doi: 10.1007/BF02600255.

- Bates DW, Gawande AA. Improving safety with information technology. The New England Journal of Medicine. 2003;348(25):2526–2534. doi: 10.1056/NEJMsa020847.

- Bates DW, Leape LL, Petrycki S. Incidence and preventability of adverse drug events in hospitalized adults. Journal of General Internal Medicine. 1993;8(6):289–294. doi: 10.1007/BF02600138.

- Battles JB, Keyes MA. Technology and patient safety: A two-edged sword. Biomedical Instrumentation & Technology. 2002;36(2):84–88. doi: 10.2345/0899-8205(2002)36[84:TAPSAT]2.0.CO;2.

- Beach C, Croskerry P, Shapiro M. Profiles in patient safety: emergency care transitions. Academic Emergency Medicine. 2003;10(4):364–367. doi: 10.1111/j.1553-2712.2003.tb01350.x.

- Bell CM, Bajcar J, Bierman AS, Li P, Mamdani MM, Urbach DR. Potentially unintended discontinuation of long-term medication use after elective surgical procedures. Archives of Internal Medicine. 2006;166(22):2525–2531. doi: 10.1001/archinte.166.22.2525.

- Berwick DM. A user’s manual for the IOM’s ‘Quality Chasm’ report. Health Affairs. 2002;21(3):80–90. doi: 10.1377/hlthaff.21.3.80.

- Bogner MS. The artichoke systems approach for identifying the why of error. In: Carayon P, editor. Handbook of Human Factors in Health Care and Patient Safety. Mahwah, NJ: Lawrence Erlbaum; 2007. pp. 109–126.

- Bogner MS, editor. Human Error in Medicine. Hillsdale, NJ: Lawrence Erlbaum Associates; 1994.

- Bonnabry P, Despont-Gros C, Grauser D, Casez P, Despond M, Pugin D, et al. A risk analysis method to evaluate the impact of a computerized provider order entry system on patient safety. Journal of the American Medical Informatics Association. 2008;15(4):453–460. doi: 10.1197/jamia.M2677.

- Boockvar K, Fishman E, Kyriacou CK, Monias A, Gavi S, Cortes T. Adverse events due to discontinuations in drug use and dose changes in patients transferred between acute and long-term care facilities. Archives of Internal Medicine. 2004;164(5):545–550. doi: 10.1001/archinte.164.5.545.

- Bracco D, Favre JB, Bissonnette B, Wasserfallen JB, Revelly JP, Ravussin P, et al. Human errors in a multidisciplinary intensive care unit: a 1-year prospective study. Intensive Care Medicine. 2000;27(1):137–145. doi: 10.1007/s001340000751.

- Brennan PF, Safran C. Patient safety. Remember who it’s really for. International Journal of Medical Informatics. 2004;73(7–8):547–550. doi: 10.1016/j.ijmedinf.2004.05.005.

- Brennan TA, Leape LL, Laird NM, Hebert L, Localio AR, Lawthers AG, et al. Incidence of adverse events and negligence in hospitalized patients. Results of the Harvard Medical Practice Study I. New England Journal of Medicine. 1991;324(6):370–376. doi: 10.1056/NEJM199102073240604.

- Burgmeier J. Failure mode and effect analysis: An application in reducing risk in blood transfusion. The Joint Commission Journal on Quality Improvement. 2002;28(6):331–339. doi: 10.1016/s1070-3241(02)28033-5.

- Carayon-Sainfort P. The use of computers in offices: Impact on task characteristics and worker stress. International Journal of Human Computer Interaction. 1992;4(3):245–261.

- Carayon P. Human factors of complex sociotechnical systems. Applied Ergonomics. 2006;37:525–535. doi: 10.1016/j.apergo.2006.04.011.

- Carayon P, editor. Handbook of Human Factors in Health Care and Patient Safety. Mahwah, New Jersey: Lawrence Erlbaum Associates; 2007.

- Carayon P, Alvarado C. Workload and patient safety among critical care nurses. Critical Care Nursing Clinics. 2007;8(5):395–428. doi: 10.1016/j.ccell.2007.02.001.

- Carayon P, Gurses A. Nursing workload and patient safety in intensive care units: A human factors engineering evaluation of the literature. Intensive and Critical Care Nursing. 2005;21:284–301. doi: 10.1016/j.iccn.2004.12.003.

- Carayon P, Gurses AP, Hundt AS, Ayoub P, Alvarado CJ. Performance obstacles and facilitators of healthcare providers. In: Korunka C, Hoffmann P, editors. Change and Quality in Human Service Work. Vol. 4. Munchen, Germany: Hampp Publishers; 2005. pp. 257–276.

- Carayon P, Hundt AS, Karsh BT, Gurses AP, Alvarado CJ, Smith M, et al. Work system design for patient safety: The SEIPS model. Quality & Safety in Health Care. 2006;15(Supplement I):i50–i58. doi: 10.1136/qshc.2005.015842.

- Carayon P, Smith MJ. Work organization and ergonomics. Applied Ergonomics. 2000;31:649–662. doi: 10.1016/s0003-6870(00)00040-5.

- Carayon P, Smith P, Hundt AS, Kuruchittham V, Li Q. Implementation of an Electronic Health Records system in a small clinic. Behaviour and Information Technology. 2009;28(1):5–20.

- Carayon P, Wetterneck TB, Hundt AS, Ozkaynak M, DeSilvey J, Ludwig B, et al. Evaluation of nurse interaction with bar code medication administration technology in the work environment. Journal of Patient Safety. 2007;3(1):34–42.

- Cimino MA, Kirschbaum MS, Brodsky L, Shaha SH. Assessing medication prescribing errors in pediatric intensive care units. Pediatric Critical Care Medicine. 2004;5(2):124–132. doi: 10.1097/01.PCC.0000112371.26138.E8.

- Clancy CM. Care transitions: A threat and an opportunity for patient safety. American Journal of Medical Quality. 2006;21(6):415–417. doi: 10.1177/1062860606293537.

- Coleman EA, Smith JD, Raha D, Min SJ. Posthospital medication discrepancies - Prevalence and contributing factors. Archives of Internal Medicine. 2005;165:1842–1847. doi: 10.1001/archinte.165.16.1842.

- Cook R, Rasmussen J. “Going solid”: A model of system dynamics and consequences for patient safety. Quality & Safety in Health Care. 2005;14:130–134. doi: 10.1136/qshc.2003.009530.

- Cook RI. Safety technology: Solutions or experiments? Nursing Economic$. 2002;20(2):80–82.

- Cook RI, Render M, Woods DD. Gaps in the continuity of care and progress on patient safety. British Medical Journal. 2000;320:791–794. doi: 10.1136/bmj.320.7237.791.

- Cook RI, Woods DD, Miller C. A Tale of Two Stories: Contrasting Views of Patient Safety. Chicago, IL: National Patient Safety Foundation; 1998.

- Cook RI, Wreathall J, Smith A, Cronin DC, Rivero O, Harland RC, et al. Probabilistic risk assessment of accidental ABO-incompatible thoracic organ transplantation before and after 2003. Transplantation. 2007;84(12):1602–1609. doi: 10.1097/01.tp.0000295931.39616.25.

- Cooper JB. Do short breaks increase or decrease anesthetic risk? Journal of Clinical Anesthesia. 1989;1(3):228–231. doi: 10.1016/0952-8180(89)90047-0.

- Cullen DJ, Bates DW, Leape LL, The Adverse Drug Event Prevention Study Group. Prevention of adverse drug events: A decade of progress in patient safety. Journal of Clinical Anesthesia. 2001;12:600–614. doi: 10.1016/s0952-8180(00)00226-9.

- Cullen DJ, Sweitzer BJ, Bates DW, Burdick E, Edmondson A, Leape LL. Preventable adverse drug events in hospitalized patients: A comparative study of intensive care and general care units. Critical Care Medicine. 1997;25(8):1289–1297. doi: 10.1097/00003246-199708000-00014.

- DeRosier J, Stalhandske E, Bagian JP, Nudell T. Using health care Failure Mode and Effect Analysis: The VA National Center for Patient Safety’s prospective risk analysis system. Joint Commission Journal on Quality Improvement. 2002;28(5):248–267, 209. doi: 10.1016/s1070-3241(02)28025-6.

- Donabedian A. The quality of medical care. Science. 1978;200:856–864. doi: 10.1126/science.417400.

- Donabedian A. The quality of care. How can it be assessed? Journal of the American Medical Association. 1988;260(12):1743–1748. doi: 10.1001/jama.260.12.1743.

- Donchin Y, Gopher D, Olin M, Badihi Y, Biesky M, Sprung CL, et al. A look into the nature and causes of human errors in the intensive care unit. Critical Care Medicine. 1995;23(2):294–300. doi: 10.1097/00003246-199502000-00015.

- Eason K. Information Technology and Organizational Change. London: Taylor & Francis; 1988.

- Eason KD. The process of introducing information technology. Behaviour and Information Technology. 1982;1(2):197–213.

- Effken JA, Kim MG, Shaw RE. Making the constraints visible: Testing the ecological approach to interface design. Ergonomics. 1997;40(1):1–27. doi: 10.1080/001401397188341.

- Fairbanks RJ, Caplan S. Poor interface design and lack of usability testing facilitate medical error. Joint Commission Journal on Quality and Safety. 2004;30(10):579–584. doi: 10.1016/s1549-3741(04)30068-7.

- Farley DO, Haviland A, Champagne S, Jain AK, Battles JB, Munier WB, et al. Adverse-event-reporting practices by US hospitals: Results of a national survey. Quality & Safety in Health Care. 2008;17(6):416–423. doi: 10.1136/qshc.2007.024638.

- Forster AJ, Clark HD, Menard A, Dupuis N, Chernish R, Chandok N, et al. Adverse events among medical patients after discharge from hospital. Canadian Medical Association Journal. 2004;170(3):345–349.

- Giraud T, Dhainaut JF, Vaxelaire JF, Joseph T, Journois D, Bleichner G, et al. Iatrogenic complications in adult intensive care units: A prospective two-center study. Critical Care Medicine. 1993;21(1):40–51. doi: 10.1097/00003246-199301000-00011.

- Gosbee JW, Gosbee LL, editors. Using Human Factors Engineering to Improve Patient Safety. Oakbrook Terrace, Illinois: Joint Commission Resources; 2005.

- Guerin F, Laville A, Daniellou F, Duraffourg J, Kerguelen A. Understanding and Transforming Work - The Practice of Ergonomics. Lyon, France: ANACT; 2006.

- Gurses A, Carayon P, Wall M. Impact of performance obstacles on intensive care nurses’ workload, perceived quality and safety of care, and quality of working life. Health Services Research. 2009. doi: 10.1111/j.1475-6773.2008.00934.x.

- Gurses AP, Carayon P. Performance obstacles of intensive care nurses. Nursing Research. 2007;56(3):185–194. doi: 10.1097/01.NNR.0000270028.75112.00.

- Hahnel J, Friesdorf W, Schwilk B, Marx T, Blessing S. Can a clinician predict the technical equipment a patient will need during intensive care unit treatment? An approach to standardize and redesign the intensive care unit workstation. Journal of Clinical Monitoring. 1992;8(1):1–6. doi: 10.1007/BF01618079.