Abstract

Objective

Correlation of white matter microstructure with various cognitive processing tasks and with overall intelligence has been previously demonstrated. We investigate the correlation of white matter microstructure with various higher-order auditory processing tasks, including interpretation of speech-in-noise, recognition of low-pass frequency filtered words, and interpretation of time-compressed sentences at two different values of compression. These tests are typically used to diagnose auditory processing disorder (APD) in children. Our hypothesis is that task performance will correlate with white matter microstructure in tracts connecting the temporal, frontal, and parietal lobes, as well as in callosal pathways, since previous functional imaging studies have shown activation in temporal, frontal, and parietal regions during higher-order auditory processing tasks. Additionally, we hypothesize that the regions displaying correlations will vary according to the task, as each task uses a different set of skills.

Design

Diffusion tensor imaging (DTI) data were acquired in a cohort of 17 normal-hearing children ages 9-11. Fractional anisotropy (FA), a measure of white matter fiber tract integrity and organization, was computed and correlated on a voxelwise basis with performance on the auditory processing tasks, controlling for age, sex, and full-scale IQ.

Results

Correlations of white matter FA diverged depending on the particular auditory processing task. Positive correlations were found between FA and speech-in-noise in white matter adjoining prefrontal areas, and between FA and filtered words in the corpus callosum. Regions exhibiting correlations with time-compressed sentences varied depending on the degree of compression: the greater degree of compression (with the greatest difficulty) resulted in correlations in white matter adjoining prefrontal areas (dorsal and ventral), while the smaller degree of compression (with less difficulty) resulted in correlations in white matter adjoining audio-visual association areas and the posterior cingulate. Only the time-compressed sentences with the lowest degree of compression resulted in positive correlations in the centrum semiovale; all the other tasks resulted in negative correlations.

Conclusion

Performance on higher-order auditory processing tasks was found to depend on brain anatomical connectivity in normal-hearing children ages 9-11. Results support a previously hypothesized dual-stream (dorsal and ventral) model of auditory processing, and suggest that higher-order processing tasks rely less on the dorsal stream related to articulatory networks, and more on the ventral stream related to semantic comprehension. Results also show that the regions correlating with auditory processing vary according to the specific task, indicating that the neurological bases for the various tests used to diagnose APD in children may be partially independent.

Keywords: Auditory Processing, Auditory Processing Disorder, Children, Diffusion Tensor Imaging

Introduction

There is increasing interest in investigating brain connectivity and how this connectivity (whether anatomical, functional, or effective) correlates with cognitive function. Diffusion tensor imaging (DTI) is a powerful method of measuring white matter microstructure in vivo and hence estimating anatomical connectivity between brain regions (see (Basser & Jones, 2002) for a review). A common parameter used to estimate white matter structural integrity and connectivity is fractional anisotropy (FA). FA is a parameter ranging from zero, in the case of completely isotropic diffusion, to one, in the case of completely anisotropic diffusion. In white matter regions diffusion anisotropy is expected, since water is freer to diffuse in a direction parallel to the axon than perpendicular to it. Increased FA may represent increased myelination, increased fiber organization, or increased axonal caliber.
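For orientation, the conventional definition of FA in terms of the three eigenvalues of the diffusion tensor is reproduced below. This is the standard textbook expression rather than a formula stated in this paper; the symbols $\lambda_1, \lambda_2, \lambda_3$ (tensor eigenvalues) and $\bar\lambda$ (their mean) are notation assumed here for illustration.

```latex
\mathrm{FA} \;=\; \sqrt{\frac{3}{2}}\;
\frac{\sqrt{(\lambda_1-\bar\lambda)^2+(\lambda_2-\bar\lambda)^2+(\lambda_3-\bar\lambda)^2}}
     {\sqrt{\lambda_1^2+\lambda_2^2+\lambda_3^2}},
\qquad
\bar\lambda \;=\; \frac{\lambda_1+\lambda_2+\lambda_3}{3}
```

With equal eigenvalues the numerator vanishes and FA = 0 (isotropic diffusion); with a single non-zero eigenvalue the expression evaluates to FA = 1 (completely anisotropic diffusion), matching the range described above.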

In specific areas, FA has been shown to be correlated with intelligence in normal children (Schmithorst, Wilke, Dardzinski, & Holland, 2005). FA has also been shown to be correlated in specific areas with function on a variety of specific cognitive tasks, such as reaction time on a go no-go task (Liston et al., 2006), measures of impulse control (Olson et al., 2009), or reading ability (Klingberg et al., 2000; Qiu, Tan, Zhou, & Khong, 2008).

Here we investigate possible correlations between FA values and performance on higher-order auditory processing tasks including: speech in noise, time-compressed sentences, and low-pass filtered words. These tests are often used to diagnose auditory processing disorder (APD) in children, who have normal peripheral hearing but nevertheless suffer from deficits in performance on higher-order processing tasks. The diagnosis of auditory processing disorder is considered controversial, due in part to the types of tasks used to diagnose this condition (Cacace & McFarland, 1998, 2005; Friel-Patti, 1999; McFarland & Cacace, 2002, 2003; Moore, 2006). The measures used have been criticized in terms of their reliance on other processing constructs, including language and attention.

The three measures we focus on here all involve manipulations of language stimuli. Therefore, these particular stimuli are likely to tap more complex functions than would processing of nonmeaningful stimuli typically used to assess basic auditory functioning (e.g., tones, clicks). Although imaging reports that have employed these specific tests (the SCAN-C, BKB-SIN, Time Compressed Sentence Test) are not yet available, differences in regional activation have been associated with tasks that employed elements found in these higher-order auditory processing tests. The regional differences suggest that these particular diagnostic tests are likely to reflect different neural aspects of higher-order auditory processing. For example, other speech-in-noise tasks correlate with measures obtained from otoacoustic emissions (de Boer & Thornton, 2007; Kim, Frisina, & Frisina, 2006). Otoacoustic emission amplitudes are suppressed with either white noise or competing speech presented to the contralateral ear (Timpe-Syverson & Decker, 1999). These types of changes in otoacoustic emissions are attributed to the control of the efferent pathways that descend through the brainstem to the cochlea. Listening to verbal material in the context of noise is also associated with cortical activation in the temporal and anterior insula regions of the cortex (Schmidt et al., 2008; Wong, Uppunda, Parrish, & Dhar, 2008; Zekveld, Heslenfeld, Festen, & Schoonhoven, 2006). Frontal results for speech-in-noise tasks have been more variable. Schmidt and colleagues (2008) reported more right inferior frontal activation when items were presented without background noise as compared to with background noise. In contrast, in a second study (Zekveld et al., 2006), the left inferior frontal cortex responded to both intelligible and unintelligible speech in noise.

Studies that have used low-pass filtered stimuli (e.g., (Gandour et al., 2003; Meyer, Steinhauer, Alter, Friederici, & von Cramon, 2004; Plante, Creusere, & Sabin, 2002; Plante, Holland, & Schmithorst, 2006)) indicate effects in the dorsolateral prefrontal, anterior insula, and superior temporal regions for listening to filtered vs. unfiltered sentences. Although the temporal activation seems to reflect basic auditory processing of filtered speech (Plante et al., 2002), frontal areas appear to differentially contribute to tasks that involve making decisions about low-pass filtered speech (Plante et al., 2002; Plante et al., 2006). The right lateralization of temporal activation seen in these studies is likely due to hemispheric bias for spectral versus temporal acoustic information (Dogil et al., 2002; Obleser, Eisner, & Kotz, 2008; Poeppel, 2003). Obleser and colleagues (2008) showed that listening to spectrally-altered sentences resulted in right lateralized temporal activation whereas listening to temporally-altered sentences resulted in left lateralized temporal activation.

Each of the tasks places greater demands on attention than would occur if the linguistic stimuli were presented alone and unadulterated. Auditory attention is known to involve multiple waves of temporally and spatially distinct activity (see (M.-H. Giard, Fort, Mochetant-Rostaing, & Pernier, 2000) for a review). The demands of focusing on speech, involved with directing attention to particular targets in the face of either noise (Schmidt et al., 2008) or competing stimuli (e.g. (Christensen, Antonucci, Lockwood, Kittleson, & Plante, 2008; Hugdahl et al., 2000; Jancke, Buchanan, Lutz, & Shah, 2001; Thomsen, Rimol, Ersland, & Hugdahl, 2004)), are associated with changes in activation in frontal, temporal, and parietal areas. Activation even within the primary auditory cortex has been shown to be modulated by attentional demands (Johnson & Zatorre, 2005). There is also evidence that auditory attention effects can extend to the level of the cochlea. Small but reliable changes in otoacoustic emission amplitudes have been measured in response to evoking stimuli that were either attended or unattended (M. H. Giard, Collet, Bouchet, & Pernier, 1994; Maison, Micheyl, & Collet, 2001). Similarly, emission amplitudes are reduced when the listener’s attention is focused on a visual task (Avan & Bonfils, 1991; Froehlich, Collet, Chanal, & Morgon, 1990; Froehlich, Collet, & Morgon, 1993; Puel, Bonfils, & Pujol, 1988). Both brainstem and otoacoustic emissions are affected when subjects attend or do not attend to the evoking stimuli in the context of contralateral noise (de Boer & Thornton, 2007; Ikeda, Hayashi, Sekiguchi, & Era, 2006). These data implicate both white matter pathways within the cerebrum as well as those projecting to the brainstem, particularly when tasks require heightened attention to the stimuli.

These functional imaging studies lead to two predictions concerning the role of white matter pathways in measures of central auditory processing. First, both brainstem and cortical pathways should be essential for the processing that is fundamental to tests used in the diagnosis of auditory processing disorders. Therefore, we would expect that the “auditory pathways” involved in these tasks might reasonably extend beyond those between the cochlea and primary auditory cortex. Second, connections between frontal, parietal, and temporal cortices (as well as callosal pathways) may support the types of higher-order auditory processing reflected by the tests, including features such as speech in noise, filtered speech, or increased attentional demand. The degree to which each of the three tests correlates with different white matter regions would indicate the degree to which these tests could be seen as reflecting different constructs underlying higher auditory processing. Furthermore, knowledge of how brain connectivity in normal children is related to performance on these tasks is an essential first step to understanding the locus of these deficits in children with APD.

Materials and Methods

Subjects

The subject population consisted of 17 normally developing children with normal hearing (11 M, 6 F). Mean age +/- SD = 10.17 +/- 1.0 years (range 9.0 – 11.6 years). Mean Wechsler Full-scale IQ +/- SD = 112.9 +/- 10.6 (range 99 – 134). Institutional Review Board approval, informed consent from one parent, and assent from each subject were obtained prior to all experiments. Anatomical brain scans were read as normal by a board-certified radiologist.

Audiological testing

Normal hearing in both ears was verified via standard pure-tone audiometry. Subjects were tested in a soundproof audiometry booth. The tests (described below) were pre-recorded (on CD) and administered via a Technics Digital Audio system (Panasonic, Secaucus, NJ) through a calibrated audiometer. Several tests of higher-order auditory processing skills were administered as follows.

SCAN-C Filtered Words

The filtered words test is a subtest of the SCAN-C test for auditory processing disorders in children (Keith, 2000). The Filtered Words subtest enables the examiner to assess a child’s ability to understand distorted speech. The child is asked to repeat words that sound muffled. The test stimuli consist of one-syllable words that have been low-pass filtered at 1000 Hz with a roll-off of 32 dB per octave. Three practice and 20 test words are presented monaurally in each ear sequentially. Normative data for SCAN-C (for both left and right ears) are available at one-year intervals from 5 years 0 months to 9 years 11 months, and as one combined age group for 10 years 0 months to 11 years 11 months.

BKB-SIN Speech in Noise

The BKB-SIN Test is modified for use in children from a previously developed SIN test (QuickSIN Speech-in-Noise test, Etymotic Research, 2001) and uses the Bamford-Kowal-Bench sentences (Bench, Kowal, & Bamford, 1979) spoken by a male talker in four-talker babble. Sentences are presented by a target talker at various SNR levels ranging from +21 dB to -6 dB. The BKB-SIN contains 18 List Pairs, of which the first eight were used for this test. Each List Pair consists of two lists of eight to ten sentences each. The first sentence in each list has four key words, and the remaining sentences have three. A verbal “ready” cue precedes each sentence, and the subject repeats each sentence heard. The key words in each sentence are scored as correct or incorrect. The sentences are pre-recorded at signal-to-noise ratios that decrease in 3-dB steps. List Pairs 1-8 have ten sentences in each list, with one sentence at each SNR of: +21, +18, +15, +12, +9, +6, +3, 0, -3, and -6 dB. Each list in the pair is individually scored, and the results of the two lists are averaged to obtain the List Pair score. Results are compared to normative data to obtain the SNR loss. SNR loss refers to the increase in signal-to-noise ratio required by a listener to obtain 50% correct words, sentences, or words in sentences, compared to normal performance. Published reports indicate a wide range of SNR loss in persons with similar pure tone hearing losses; the measurement of SNR loss cannot be reliably predicted from the pure tone audiogram (Killion & Niquette, 2000; Taylor, 2003).

Time-Compressed Sentences

The Time Compressed Sentence Test (Beasley & Freeman, 1977; Keith, 2002) recording consists of a 1000 Hz calibration tone, a practice list of ten sentences presented at normal rates of speech (0% time compression), two lists of ten sentences with 40% time compression and two lists of ten sentences with 60% time compression. All time compressed test sentences are preceded by two practice sentences. The sentences are recorded with 5 second intervals to allow subjects time to respond. The sentences are taken from the Manchester University Test A, modified for word familiarity in the United States. In scoring, three points are given for each sentence repeated correctly, and one point deducted for each section of the sentence (subject, object, or predicate) misinterpreted or not heard. Test results are interpreted using tables that provide (a) cut-off scores for -1.5 and -2 SD of performance, (b) z-scores, and (c) percentile ranks. Examiners can also determine standard scores for direct comparison with other standardized tests such as intelligence tests and language measures such as the Clinical Evaluation of Language Function. Normative data was collected on 160 children in age groups from 6 years to 11 years 11 months (Keith, 2002).
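The scoring rule described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the test publisher's scoring procedure: the function names are hypothetical, and it assumes that the score for one compression rate is the sum over both ten-sentence lists (20 sentences, maximum 60 points).

```python
def score_sentence(sections_missed: int) -> int:
    """Three points for a correct sentence, minus one point for each
    section (subject, object, or predicate) misinterpreted or not heard."""
    if not 0 <= sections_missed <= 3:
        raise ValueError("each sentence has exactly three scored sections")
    return 3 - sections_missed


def score_condition(sections_missed_per_sentence) -> int:
    """Total raw score for one compression rate (hypothetical helper)."""
    return sum(score_sentence(m) for m in sections_missed_per_sentence)


# Hypothetical child: 17 sentences fully correct, two with one section
# missed, and one with two sections missed.
errors = [0] * 17 + [1, 1, 2]
total = score_condition(errors)
print(total)  # 60 - 4 = 56
```

The raw total would then be looked up in the normative tables to obtain the cut-off classification, z-score, or percentile rank.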

MRI Scans

Scans were acquired on a Siemens 3T Trio full-body magnetic resonance imaging system. EPI-DTI scan parameters were: TR = 6000 ms, TE = 87 ms, FOV = 25.6 × 25.6 cm, slice thickness = 2 mm, matrix = 128 × 128, b-value = 1000 s/mm². One scan was acquired without diffusion weighting, and 12 diffusion-weighted scans were acquired, with different diffusion gradient directions. In addition, T1-weighted whole-brain 3-D MP-RAGE anatomical scans were acquired for each subject at 1 mm isotropic resolution. Visual analysis was used to inspect the DTI data for gross head motion (causing misregistration) and gross artifacts caused by motion during application of the diffusion-sensitizing gradients.

Spatial normalization and whole-brain segmentation were performed for each subject using procedures in SPM5 (Wellcome Dept. of Cognitive Neurology, London, UK) applied to the T1-weighted anatomical images. Pediatric templates for prior probabilities of gray and white matter distribution (Wilke, Schmithorst, & Holland, 2003) were used to improve segmentation accuracy of our pediatric images. Unlike results obtained using an adult template, neither affine scaling parameters nor white matter probability maps correlate with age using a pediatric template (Wilke, Schmithorst, & Holland, 2002). The segmentation results were output in native space and the spatial transformation into standardized Montreal Neurological Institute (MNI) space was accomplished by normalizing the white matter probability maps to the white matter pediatric template. This procedure was used to ensure maximum accuracy for normalization of the white matter. The white-matter probability maps from each subject were then transformed into the MNI space, and resampled to 2 mm isotropic resolution, to match the resolution of the DTI images. (The transformation parameters found for normalization of the white matter were also used for normalization of the DTI parametric maps.)

The DTI tensor components were computed from each DTI dataset. Fractional anisotropy (FA) maps were then computed from the tensor components. The maps were transformed into MNI space (using the same transformation parameters as found from normalization of the white matter). For additional accuracy (in case of slightly different subject positioning due to motion in the time between the DTI and whole-brain acquisitions), the FA maps were co-registered (using a rigid-body transformation) to the white matter probability maps for each subject. For each subject, analysis was restricted to voxels with a white matter posterior probability of > 0.9 from the SPM segmentation results, as well as FA > 0.25. Globally, analysis was restricted to voxels in which the above criteria were met for at least half (9) of the subjects. A total of 45221 voxels met the criteria and were retained for further analysis. The strict thresholds used for restricting the subset of voxels analyzed minimize the risk of spurious results due to partial volume effects and imperfect spatial normalization, at the cost of only being able to examine larger white matter tracts.
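The voxel-inclusion rule described above can be illustrated with a short NumPy sketch. It is a minimal illustration under stated assumptions, not the code used in the study: the function name `analysis_mask`, the array layout (subjects along the first axis, all maps already in the same normalized space), and the toy data are all hypothetical.

```python
import numpy as np


def analysis_mask(wm_prob, fa, wm_thresh=0.9, fa_thresh=0.25, min_subjects=None):
    """Return a boolean volume of voxels retained for group analysis.

    wm_prob, fa: arrays of shape (n_subjects, x, y, z).
    A voxel counts for a subject if its white matter probability exceeds
    wm_thresh AND its FA exceeds fa_thresh; it is retained globally if it
    counts for at least min_subjects subjects (default: at least half).
    """
    n_subjects = wm_prob.shape[0]
    if min_subjects is None:
        min_subjects = (n_subjects + 1) // 2  # 9 when n_subjects == 17
    per_subject = (wm_prob > wm_thresh) & (fa > fa_thresh)
    return per_subject.sum(axis=0) >= min_subjects


# Toy example: 17 subjects, a 2 x 2 x 2 volume.
wm = np.zeros((17, 2, 2, 2))
fa = np.zeros((17, 2, 2, 2))
wm[:9, 0, 0, 0] = 0.95
fa[:9, 0, 0, 0] = 0.30   # criteria met in 9 subjects: retained
wm[:8, 1, 1, 1] = 0.95
fa[:8, 1, 1, 1] = 0.30   # criteria met in only 8 subjects: excluded
mask = analysis_mask(wm, fa)
print(int(mask.sum()))  # prints 1
```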

Data was analyzed using the General Linear Model (GLM) with age, sex, and full-scale IQ entered as nuisance variables, and performance on the auditory processing tests as the regressor of interest. T-score maps from the GLM were converted into Z-score maps, and filtered with a Gaussian filter of width 3 mm. However, to prevent “bleeding” of regions with significant effects into surrounding gray matter or CSF, the filtering was restricted to voxels inside the white matter mask (Schmithorst et al., 2005). A threshold of Z = 6.5 with spatial extent threshold of 150 voxels (= approximately 1.2 cc) was used. Since the filter width used was not significantly bigger than a single voxel, standard Gaussian random field theory would not provide a sufficiently accurate estimate of corrected p-values (for comparisons involving multiple voxels). Hence a Monte Carlo simulation, based on the method of (Ledberg, Akerman, & Roland, 1998), was used to estimate corrected p-values. Noise images were created from Principal Component Analysis of the data, and used to estimate the intrinsic spatial autocorrelations. The Monte Carlo simulation was performed, using those parameters, to simulate random noise with the same characteristics as present in the data. The cluster statistics from the Monte Carlo simulation were stored and used to estimate the significance of the found clusters in the data. A double-tailed threshold of p < 0.05 was used for significance.
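As a rough illustration of the voxelwise GLM step only (the masked smoothing, T-to-Z conversion, and Monte Carlo cluster correction are not shown), the following NumPy sketch computes a t-statistic for the regressor of interest at each voxel with nuisance covariates in the model. The function name, the array shapes, and the synthetic data are assumptions made for the example and are not taken from the study.

```python
import numpy as np


def glm_t_for_score(fa, score, nuisance):
    """Voxelwise t-statistic for `score`, adjusting for nuisance covariates.

    fa:       (n_subjects, n_voxels) FA values at the analysed voxels
    score:    (n_subjects,) auditory test score (regressor of interest)
    nuisance: (n_subjects, k) covariates, e.g. age, sex, full-scale IQ
    """
    n = fa.shape[0]
    # Design matrix: intercept, nuisance covariates, regressor of interest last.
    X = np.column_stack([np.ones(n), nuisance, score])
    beta, *_ = np.linalg.lstsq(X, fa, rcond=None)
    resid = fa - X @ beta
    dof = n - np.linalg.matrix_rank(X)
    sigma2 = (resid ** 2).sum(axis=0) / dof          # residual variance per voxel
    contrast = np.zeros(X.shape[1])
    contrast[-1] = 1.0
    var_c = contrast @ np.linalg.pinv(X.T @ X) @ contrast
    t = beta[-1] / np.sqrt(sigma2 * var_c)
    return t, dof


# Synthetic data: 17 subjects, 3 nuisance covariates, 2 voxels.
rng = np.random.default_rng(0)
nuisance = rng.normal(size=(17, 3))
score = rng.normal(size=17)
noise = 0.01 * rng.normal(size=(17, 2))
fa = np.column_stack([0.4 + 0.05 * score, 0.4 - 0.05 * score]) + noise
t, dof = glm_t_for_score(fa, score, nuisance)
print(dof)  # 17 subjects - 5 model parameters = 12
```

With 17 subjects, an intercept, three nuisance covariates, and one regressor of interest, the model leaves 12 residual degrees of freedom, which is the value a T-to-Z conversion would use.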

ROIs were defined to be identical to each cluster found to exhibit a significant correlation of FA with the auditory processing scores. For each ROI, the average FA values were computed for each subject. The centroid of each region was also computed; a transformation from MNI coordinates to Talairach coordinates was performed using the non-linear mni2tal procedure outlined in (http://www.nil.wustl.edu/labs/kevin/man/answers/mnispace.html). Using the Talairach Daemon (Lewis et al.), the cortical gray matter region nearest to the centroid was found. However, the white matter regions are significantly larger than a single voxel, and thus also adjoin gray matter regions other than those determined by the Talairach Daemon to be nearest the centroids. The partial correlation coefficients (controlling for age, sex, and IQ) between FA and the auditory scores are given. Results of these analyses are included in Table 2.
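A partial correlation of the kind reported for each ROI can be computed by regressing the covariates out of both variables and correlating the residuals. The sketch below shows that general technique in NumPy; it is not the authors' implementation, and the function name and synthetic inputs are hypothetical.

```python
import numpy as np


def partial_corr(x, y, covariates):
    """Correlation between x and y after removing the linear effect of
    the covariates (plus an intercept) from both."""
    n = len(x)
    Z = np.column_stack([np.ones(n), covariates])
    rx = x - Z @ np.linalg.lstsq(Z, x, rcond=None)[0]   # residual of x
    ry = y - Z @ np.linalg.lstsq(Z, y, rcond=None)[0]   # residual of y
    return float(rx @ ry / np.sqrt((rx @ rx) * (ry @ ry)))


# Synthetic check: y is an exact linear function of x plus covariate effects,
# so the partial correlation should be 1 (up to rounding error).
rng = np.random.default_rng(1)
covs = rng.normal(size=(17, 3))          # stand-ins for age, sex, IQ
roi_fa = rng.normal(size=17)             # stand-in for mean ROI FA
test_score = 3.0 * roi_fa + covs @ np.array([1.0, -2.0, 0.5])
r = partial_corr(roi_fa, test_score, covs)
```

Because raw BKB-SIN scores decrease with better performance, a negative partial correlation computed this way for that test corresponds to a positive relationship with performance.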

Table 2.

Correlations of FA values for the regions in Figures 1-4 with task performance for the auditory processing tasks of Bamford-Kowal-Bench Speech-in-Noise (BKBSIN), Time-compressed sentences at 40% compression (TC40), Time-compressed sentences at 60% compression (TC60), and SCAN-C low-pass filtered words (SCAN-C).

| Region | Partial R | X, Y, Z (Talairach coordinates) | Nearest Gray Matter (Brodmann’s Area) |
|---|---|---|---|
| BKBSIN* | | | |
| Right Prefrontal | -0.79 | -26 18 30 | Middle Frontal Gyrus (BA 9) |
| Left Prefrontal | -0.76 | 29 16 28 | Middle Frontal Gyrus (BA 9) |
| Right Centrum Semiovale | 0.85 | -24 -33 36 | Cingulate Gyrus (BA 31) |
| Left Centrum Semiovale | 0.65 | 26 -26 40 | Postcentral Gyrus (BA 3) |
| TC40 | | | |
| Left Occipital | 0.83 | 30 -70 16 | Precuneus (BA 31) |
| Right Occipito-Temporal | 0.76 | -21 -51 27 | Cingulate Gyrus (BA 31) |
| Right Centrum Semiovale | 0.80 | -22 -26 42 | Cingulate Gyrus (BA 31) |
| Left Centrum Semiovale | 0.92 | 23 -33 45 | Postcentral Gyrus (BA 3) |
| TC60 | | | |
| Right Inferior Prefrontal | 0.71 | -20 38 7 | Anterior Cingulate (BA 32) |
| Left Superior Frontal | 0.81 | 21 9 37 | Cingulate Gyrus (BA 31) |
| Left Centrum Semiovale | -0.69 | 26 -30 43 | Postcentral Gyrus (BA 3) |
| SCAN-C | | | |
| Right Inferior Prefrontal | 0.85 | -15 49 -6 | Medial Frontal Gyrus (BA 10) |
| Genu of Corpus Callosum | 0.85 | 0 11 23 | Anterior Cingulate (BA 33) |
| Left Occipito-Temporal | 0.81 | 29 -64 17 | Posterior Cingulate (BA 31) |
| Right Occipito-Temporal | 0.71 | -26 -50 26 | Cingulate Gyrus (BA 31) |
| Left Parietal | 0.88 | 36 -41 34 | Parietal (BA 40) |
| Left Centrum Semiovale | -0.76 | 28 -14 38 | Precentral Gyrus (BA 6) |
| Right Centrum Semiovale | -0.77 | -29 -21 36 | Postcentral Gyrus (BA 3) |

*Correlations are with raw BKB-SIN scores (SNR at 50% correct performance); thus negative correlations indicate positive correlations with test performance, and vice versa.

Results

Auditory Processing Tests

Scores for the auditory processing tests are as follows. BKB-SIN (SNR for 50% comprehension): mean = 0.5 dB, σ = 0.89 dB, min = -1 dB, max = 2 dB. SCAN-C filtered words: mean = 35.7, σ = 2.0, min = 31, max = 39. Time-compressed sentences at 40% compression: mean = 58.9, σ = 1.05, min = 57, max = 60. Time-compressed sentences at 60% compression: mean = 53.35, σ = 3.06, min = 49, max = 58.

A matrix of correlation coefficients between the four auditory processing test scores and age, sex, and IQ is given in Table 1. The only correlations which even reached a nominal (p < 0.05) level of significance are the correlation between age and time-compressed sentences at 40% compression (R = 0.52), and the correlation between performance on the SCAN-C filtered words and BKB-SIN (R = -0.55; the sign is negative since lower BKB-SIN scores represent improved performance).

Table 1.

Correlation matrix between the variables of age, sex, and full-scale IQ, and performance on auditory processing tests.

| | AGE | SEX | IQ | SCAN-C | BKBSIN | TC40 | TC60 |
|---|---|---|---|---|---|---|---|
| AGE | 1.000 | 0.060 | 0.010 | 0.370 | -0.43 | 0.520* | -0.03 |
| SEX | 0.060 | 1.000 | -0.16 | 0.240 | -0.13 | 0.330 | -0.05 |
| IQ | 0.010 | -0.16 | 1.000 | 0.050 | -0.36 | 0.080 | 0.390 |
| SCAN-C | 0.370 | 0.240 | 0.050 | 1.000 | -0.56* | 0.460 | -0.09 |
| BKBSIN | -0.43 | -0.13 | -0.36 | -0.56* | 1.000 | -0.30 | -0.03 |
| TC40 | 0.520* | 0.330 | 0.080 | 0.460 | -0.30 | 1.000 | -0.20 |
| TC60 | -0.03 | -0.05 | 0.390 | -0.09 | -0.03 | -0.20 | 1.000 |

(* = significant at nominal p < 0.05, uncorrected for multiple comparisons).

DTI Results

DTI results are summarized in Table 2 for each task, with centroids of each region and partial correlations with task performance given.

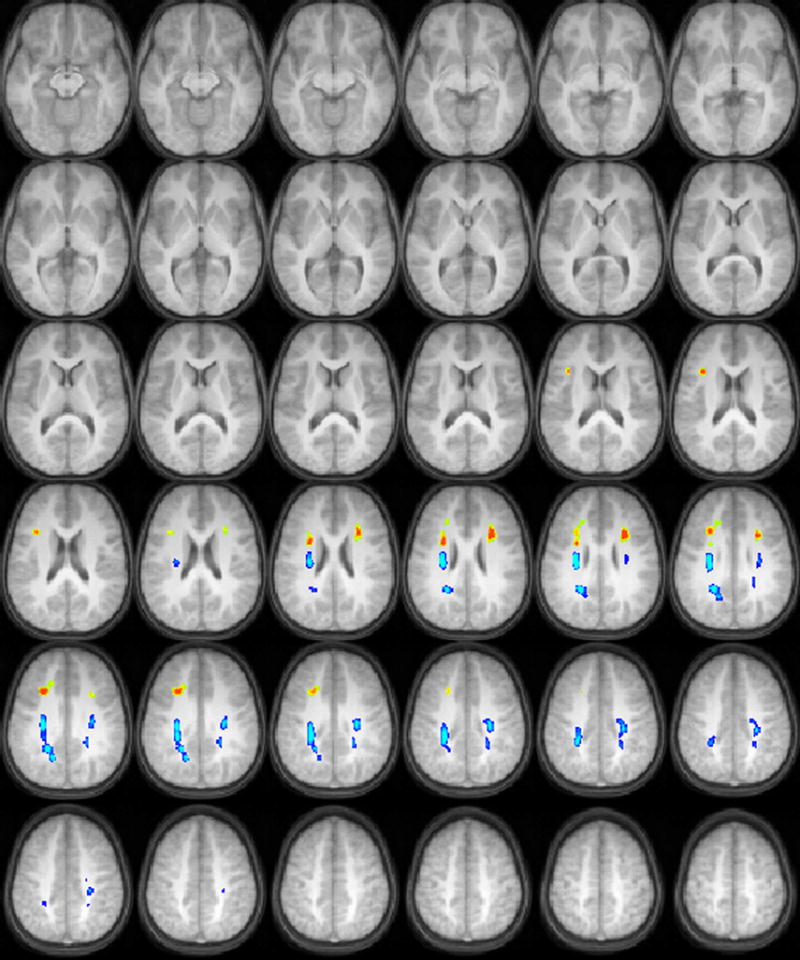

For the BKB-SIN task, negative correlations of FA with SNR at 50% comprehension (equivalent to positive correlations with task performance) were found in white matter in the left and right prefrontal cortices, while positive correlations of FA with SNR (equivalent to negative correlations with task performance) were found in the centrum semiovale bilaterally, which includes both ascending and descending sensory and motor fiber pathways (Figure 1).

Figure 1.

Regions with significant correlations of white matter fractional anisotropy with audiologic performance on a speech-in-noise test (orange = positive correlation, blue = negative correlation) in a cohort of 17 children ages 9-11 years old. All images in radiologic orientation.

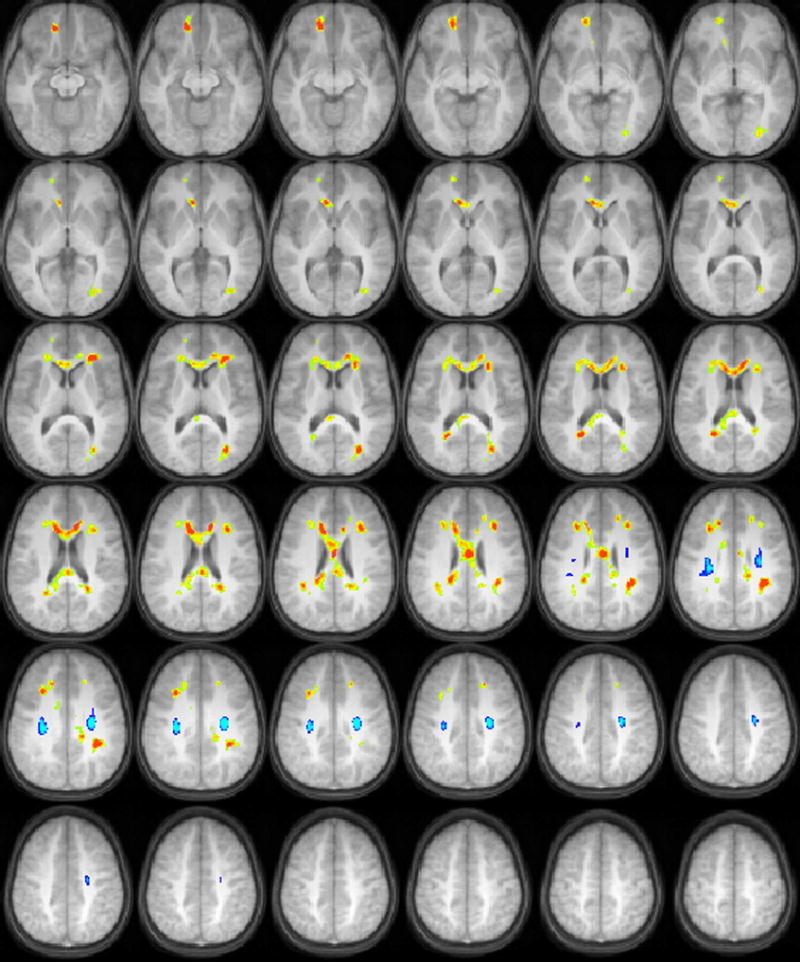

For the SCAN-C filtered words, positive correlations of FA with task performance were found in the corpus callosum, the right prefrontal cortex, and in occipito-temporal white matter bilaterally. Negative correlations of FA with task performance were found in the centrum semiovale bilaterally (Figure 2).

Figure 2.

Regions with significant correlations of white matter fractional anisotropy with audiologic performance on a test of recognition of low-pass filtered words (orange = positive correlation, blue = negative correlation) in a cohort of 17 children ages 9-11 years old. All images in radiologic orientation.

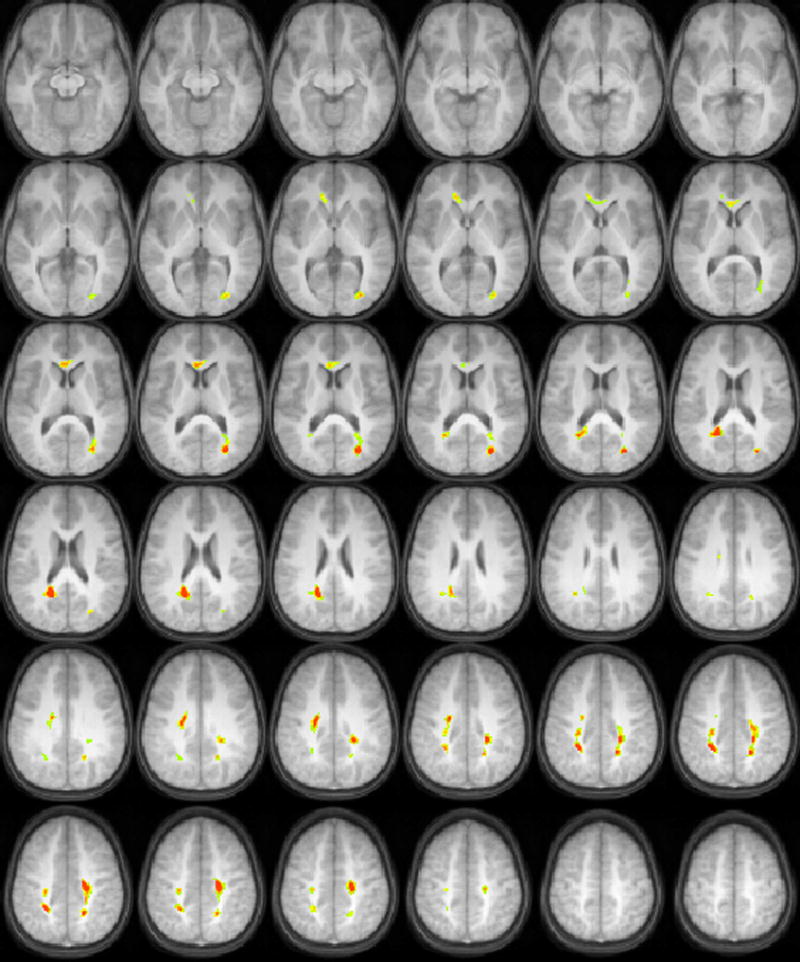

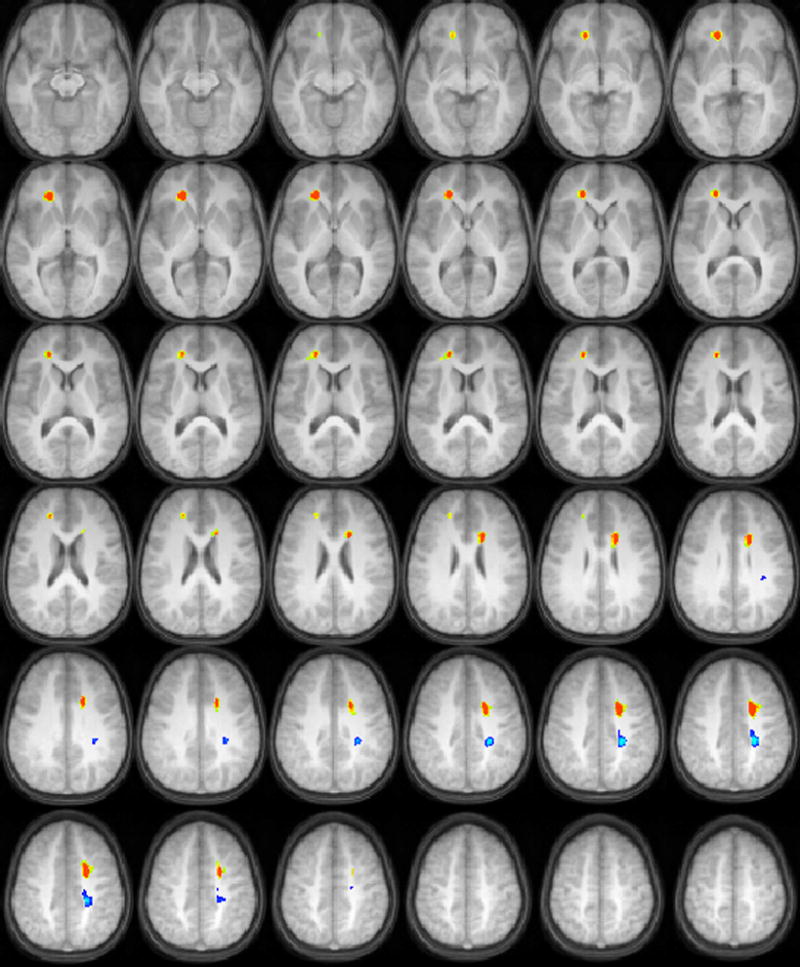

For the time-compressed sentences at 40% compression, positive correlations of FA with task performance were found in occipito-temporal white matter bilaterally, and in the anterior centrum semiovale bilaterally (Figure 3). For the time-compressed sentences at 60% compression (Figure 4), positive correlations of FA with task performance were found in the right inferior prefrontal cortex, while negative correlations were found in the posterior centrum semiovale. In the right hemisphere, the region reached into more inferior regions than in the left hemisphere; the dorsolateral prefrontal cortex was adjacent to the white matter regions in the left hemisphere, while the ventrolateral prefrontal cortex was adjacent to the white matter regions in the right hemisphere.

Figure 3.

Regions with significant correlations of white matter fractional anisotropy with audiologic performance on a test of recognition of time-compressed sentences at 40% compression (orange = positive correlation, blue = negative correlation) in a cohort of 17 children ages 9-11 years old. All images in radiologic orientation.

Figure 4.

Regions with significant correlations of white matter fractional anisotropy with audiologic performance on a test of recognition of time-compressed sentences at 60% compression (orange = positive correlation, blue = negative correlation) in a cohort of 17 children ages 9-11 years old. All images in radiologic orientation.

Discussion

The overall pattern of results suggests that intra-cortical white matter pathways as well as pathways running between cortical areas and the brainstem support the behaviors tested by the higher order auditory tasks used in this study. Two results are particularly salient. The first is that all tasks correlated with FA of fibers within the centrum semiovale. However, FA in this region and task performance was positively correlated for one task (TC40) and negatively correlated for the remaining three (TC60, SCAN-C, BKBSIN). Secondly, the remaining white matter regions that correlated with task performance were largely independent between tasks. This directly addresses an ongoing controversy concerning whether tests for APD measure independent or dependent constructs (Domitz & Schow, 2000; McFarland & Cacace, 2002). The DTI data supports the idea that the neurological bases for each of these tasks are at least partially independent.

Each of the tasks we studied requires effortful processing of linguistic information. What distinguishes these tasks is the manner in which processing was made effortful. For each test, the child’s task is to repeat the input. However, the input was varied in terms of whether sentences or words were presented (altering memory load and semantic support) and in terms of how the speech input was altered (added noise, filtering, or time compression). The results can be considered in light of these sources of task variation.

Correlations of FA with task performance were found in dorsal prefrontal cortex (for the BKBSIN task, the centroid was nearest the middle frontal gyrus, BA 9) as well as the right ventral prefrontal cortex (for the low-pass filtered words and the 60% time-compressed sentences; the centroids of the regions were nearest the medial frontal gyrus, BA 10, and the anterior cingulate, BA 32, respectively). The dorso-lateral aspect of the prefrontal cortex is involved in working memory (see (Owen, McMillan, Laird, & Bullmore, 2005) for a review) and such top-down processes are known to support retrieval of information from degraded speech (Hannemann, Obleser, & Eulitz, 2007), by manipulating the degraded stimuli within short-term memory, and then reconstructing them by processing within the context of semantic predictability (Obleser, Wise, Alex Dresner, & Scott, 2007). Therefore, it is not unexpected to see dorsally located prefrontal white matter correlate with our speech-in-noise task (BKBSIN), which shares these task characteristics. An alternative explanation is that the prefrontal cortex is involved with directing attention to relevant auditory features, including monitoring and selection (Lebedev, Messinger, Kralik, & Wise, 2004). Differences in the regions of prefrontal white matter identified for each task may reflect differences in how attentional resources must be marshaled under different listening conditions. FA in the right ventral prefrontal cortex correlated with task performance for the low-pass filtered words (SCAN-C) and the 60% time-compressed sentences (TC60); the centroids of these regions were nearest to the medial frontal gyrus and anterior cingulate cortex, respectively. This region of the prefrontal cortex may be involved with lexical selection among multiple word candidates (Kan & Thompson-Schill, 2004b). When individual low-pass filtered words were presented in the SCAN-C, it is possible that children had to select the actual word from their lexicon as opposed to similar-sounding lexical items. Selection within a set of closely related semantic items appears more closely associated with left than right prefrontal activation (Kan & Thompson-Schill, 2004a); however, for the SCAN-C, selection is not occurring from closely related semantic items but from phonetically similar items. The task may involve a conflict arising at the response level, necessitating inhibition of semantic associations. Conflicts at the response level and inhibition of targets have been found to recruit the right prefrontal cortex (Konishi et al., 1999; Milham et al., 2001).

The SCAN-C was also the only auditory processing task that displayed correlations with interhemispheric structures (the corpus callosum). In this case the subjects are likely using spectral information to help with lexical decision. As spectral information has been thought to be preferentially processed in the right hemisphere, interhemispheric transfer would play a key role in performance on this task. It should be pointed out, however, that the framework in which the left hemisphere is dominant for processing fast temporal information and the right hemisphere is dominant for processing spectral information (Dogil et al., 2002; Obleser et al., 2008; Poeppel, 2003; Schonwiesner, Rubsamen, & von Cramon, 2005; Zatorre, Belin, & Penhune, 2002) has recently been challenged. A new hypothesis has been proposed (Hickok & Poeppel, 2007) for multi-time resolution processing in which the hemispheres differ in terms of selectivity, with the right hemisphere dominant for integrating information over longer timescales and the left hemisphere being less selective in response to different integration timescales (Boemio, Fromm, Braun, & Poeppel, 2005). Our finding of increased FA in interhemispheric regions such as the corpus callosum would also be consistent with this framework, in which processing of suprasegmental information is carried out over longer intervals (~300 ms) and in the right hemisphere.

Positive correlations between task performance and FA in white matter adjoining occipital or occipital-temporal areas were found for the SCAN-C filtered words and the time-compressed sentences at the lowest degree of compression. While the centroids of these regions were found to be closer to the posterior cingulate or precuneus (BA 31) by the Talairach Daemon, visual inspection of the coverage of these regions together with the Talairach atlas revealed that these white matter regions were adjacent to Brodmann’s area 39 (middle temporal gyrus/middle occipital gyrus). This region is held in the model of Hickok and Poeppel (2007) to be the more posterior region of the ventral stream, corresponding to the lexical interface linking phonological and semantic information. Our interpretation for the time-compressed sentences is that better conversion from phonologic to semantic information, as reflected in higher FA values adjoining BA 39, results in better encoding and therefore better recall from working memory when subjects are tested. For the SCAN-C filtered words, working memory would not be expected to be a major factor affecting task performance. However, a more “automatic” conversion using the ventral stream would be expected to facilitate recognition of the words.

Of particular interest are the correlations found between FA in the centrum semiovale and task performance. For a few of these areas, the Talairach Daemon determined the nearest gray matter region to be the cingulate gyrus rather than the pre- or post-central gyrus (Table 2). However, visual inspection of the Talairach Atlas (Talairach & Tournoux, 1988) revealed the centroid of these regions to be very close anatomically to the position of the corticospinal tract as shown in the atlas (≤ 5 mm). Thus, it is likely these regions reflect connections with motor regions. While these fibers (in the centrum semiovale) do not connect directly to the auditory cortex, connections with motor regions are possibly related to descending control (efferent pathways) over the auditory system. Bidirectional connectivity is present between premotor areas and the auditory cortex, as shown in a tracer study (Romanski et al., 1999). The auditory cortex then projects to the superior olivary complex and inferior colliculus (Peterson & Schofield, 2007), and these corticofugal pathways reach into the inner ear via efferents in the medial olivocochlear bundle (Guinan, 2006). This explanation is consistent with the well-known phenomenon of the suppression of otoacoustic emissions at the level of the cochlea in the presence of contralateral noise (Timpe-Syverson & Decker, 1999), as well as the correlation between these emissions and performance on speech-in-noise tasks (de Boer & Thornton, 2007; Kim et al., 2006). However, further research will be necessary to confirm a relationship between connections with motor regions and top-down efferent processing.

Also, for our tasks, these correlations were negative for three of the four tasks; these three tasks were more difficult than the one task that showed a positive correlation. Therefore, even if our hypothesis of top-down efferent processing is correct, descending control of the cochlea alone, or modulation of ascending pathways from the superior olivary complex and inferior colliculus (Peterson & Schofield, 2007), may not be the predominant predictor of performance once a task has crossed a threshold of difficulty. Instead, it may be that other aspects of processing become more prominent at high difficulty levels.

A recently proposed framework for speech processing (Hickok & Poeppel, 2007) possibly offers further insight. This framework places the premotor cortex in the “dorsal stream” used for auditory-motor mapping, a framework supported by functional imaging studies showing activation in the premotor cortex during speech perception (e.g., Wilson, Saygin, Sereno, & Iacoboni, 2004). Indeed, some theories of speech perception hold that speech recognition is facilitated by articulatory representations in the dorsal pathway (Kluender & Lotto, 1999; Liberman & Whalen, 2000). Several studies support this interpretation. The precentral gyrus was observed to be active during speech perception (Skipper, Nusbaum, & Small, 2005), although at a lower level during auditory-only presentation than during audio-visual presentation. Repetitive transcranial magnetic stimulation (rTMS) studies have shown disruptions in speech perception when the left premotor cortex was stimulated prior to presentation of speech (Meister, Wilson, Deblieck, Wu, & Iacoboni, 2007); interestingly, however, phoneme perception was improved when the portion of the motor cortex representing the articulator producing a particular sound was stimulated just before the auditory presentation (D’Ausilio et al., 2009). Greater activation in the motor cortex has been observed for non-native speech sounds as compared to native speech sounds (Wilson & Iacoboni, 2006). A causally delayed relationship was found between the left posterior supramarginal gyrus and the premotor cortex (Londei et al., 2007) for listening to words and (pronounceable) non-words, although not for reversed words, again supporting the hypothesis (Warren, Wise, & Warren, 2005) that the presentation of “do-able” sounds causes the interfacing of auditory-sensory representations and the articulatory-motor component.

The authors further hypothesize that stored auditory vocal templates, based on experience of one’s own vocal output, are used in a template-matching process for interpretation of auditory input, which enables recognition of speech sounds despite variations due to the characteristics of the particular speaker (Kuhl, 2004). Such a template-matching process would need bidirectional transfer between auditory and motor regions, facilitating top-down in addition to bottom-up processes, a hypothesis supported by recent functional imaging studies (e.g., Kohler et al., 2002; Wilson et al., 2004), as well as a tracer study in primates (Romanski et al., 1999). Correlations between FA values in corticospinal pathways and performance on the auditory recognition tasks used in this study are consistent with this model, as cortico-cortical connections between premotor and auditory areas are utilized.

However, in the presence of significantly degraded speech, whether by loss of frequency content (such as the SCAN-C filtered words), extreme temporal speed (such as the time-compressed sentences at 60% compression), or the presence of noise (such as the speech presented over four-talker babble), the template-matching process may fail because the degraded speech is too far away in the auditory-motor space from a target. Humans cannot generate speech with the high-frequency content missing, talk as fast as the speech presented in the time-compressed sentences, or generate more than one voice at a time. We hypothesize that the auditory-motor template-matching process is useful when children start to learn to speak and understand language, or perhaps when individuals learn a new language with unfamiliar phonemes. However, over time, we hypothesize that children begin to use the more ventral processing stream for comprehension and speech recognition (Hickok & Poeppel, 2007). Children thus “directly” generate semantic information from the incoming auditory stream and rely less on the auditory-motor dorsal stream for speech perception. As the mean age of children included in our study (~10 years) falls within a period of active gray matter pruning (Giedd et al., 1999), connections to the auditory-motor dorsal stream may become less relevant and therefore weaker, as reflected in the lower FA values seen.

A positive correlation between FA in the centrum semiovale and task performance was seen, however, for the time-compressed sentences with the lowest degree of compression. The range of subject scores was very limited, with even the worst-performing subjects still able to recall 57 out of the 60 words. Our interpretation is that the cognitive aspects of this task are heavily loaded towards short-term memory, as interpretation of the speech is not that difficult at this level of compression. Therefore, the positive correlation with performance on this task reflects either auditory efferent control or subvocal articulatory representations carried by the dorsal pathway in order to maintain specific words in memory over a short period of time.

Our study has several limitations. The study sample is biased towards males. As differences have been shown in FA values between boys and girls (Schmithorst, Holland, & Dardzinski, 2008) and sex-related differences have been shown in the relation of FA values to cognitive function (Schmithorst, 2009), results cannot necessarily be taken as representative for girls. Additionally, our study sample is biased towards the higher end in terms of overall intelligence and cognitive function, and so caution must be used in generalizing to a larger population. The study is also limited by the small sample size (N = 17) and the restricted range of scores, especially for the time-compressed sentences with 40% compression. Keith (2000) showed increased performance with age for the SCAN-C filtered words, as well as for auditory figure ground (another subtest of the SCAN-C test, which measures ability to comprehend speech-in-noise). Our results, for the SCAN-C filtered words and the BKB-SIN speech-in-noise test, show correlations of magnitude around R = 0.4; these results are not significant due to the small sample size.
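
As a rough check on the last point, the significance of a correlation of this size can be worked out directly. The sketch below assumes a simple two-tailed Pearson test at α = 0.05 with no covariates, which may differ from the test actually applied; the function names are illustrative, and 2.131 is the standard tabulated critical t value for 15 degrees of freedom.

```python
import math


def t_from_r(r, n, n_covariates=0):
    """Convert a (partial) correlation r from n subjects into a t statistic.

    Degrees of freedom are n - 2 minus the number of covariates controlled for.
    """
    df = n - 2 - n_covariates
    t = r * math.sqrt(df) / math.sqrt(1.0 - r * r)
    return t, df


def critical_r(t_crit, df):
    """Smallest |r| that reaches significance for a given critical t value."""
    return t_crit / math.sqrt(t_crit ** 2 + df)


# Tabulated two-tailed critical value of t at alpha = 0.05 with 15 degrees of freedom.
T_CRIT_DF15 = 2.131

# Assumed inputs: R = 0.4 and N = 17, as quoted in the text, with no covariates.
t_stat, df = t_from_r(0.4, 17)
r_needed = critical_r(T_CRIT_DF15, df)

print(f"t({df}) = {t_stat:.2f}, critical t = {T_CRIT_DF15}")
print(f"|R| needed for significance at N = 17: {r_needed:.2f}")
```

Under these assumptions, R = 0.4 with N = 17 gives t of about 1.69, which is below the critical value; |R| would need to exceed roughly 0.48 to reach significance at this sample size.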

It is also important to note that the estimates of partial correlation coefficients were obtained post-hoc. As shown by Kriegeskorte, Simmons, Bellgowan, and Baker (2009), this can result in a biased estimator and overestimation of the effect sizes. Nevertheless, the partial correlations are indicative of a very robust effect, as it was detected even with our small sample size of 17 subjects. Additionally, the ROIs were determined from the filtered datasets, while the effect sizes were estimated using the unfiltered datasets, which likely reduces the effects of any existing bias.
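
The selection bias described by Kriegeskorte et al. can be illustrated with a toy simulation. The sketch below is not the analysis pipeline used in this study: it generates pure-noise "voxels" with no true relationship to a simulated score, selects the voxels with the largest correlations, and then compares the effect size estimated from the same data with the effect size estimated from an independent sample. Apart from the sample size of 17, all names and parameters (2000 voxels, the top 20 selected, the random seed) are assumptions chosen for illustration.

```python
import random
import statistics


def pearson_r(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def noise_dataset(rng, n_subjects, n_voxels):
    """Return (scores, voxels) of independent Gaussian noise.

    Every true voxel-score correlation is therefore zero.
    """
    scores = [rng.gauss(0.0, 1.0) for _ in range(n_subjects)]
    voxels = [[rng.gauss(0.0, 1.0) for _ in range(n_subjects)]
              for _ in range(n_voxels)]
    return scores, voxels


rng = random.Random(0)  # fixed seed so the sketch is reproducible
N_SUBJECTS, N_VOXELS, TOP_K = 17, 2000, 20  # hypothetical settings

# Selection sample: correlate every voxel with the score, keep the top voxels.
scores_a, voxels_a = noise_dataset(rng, N_SUBJECTS, N_VOXELS)
r_a = [pearson_r(v, scores_a) for v in voxels_a]
selected = sorted(range(N_VOXELS), key=lambda i: r_a[i], reverse=True)[:TOP_K]

# Circular estimate: effect size measured on the data used for selection.
circular_r = statistics.fmean([r_a[i] for i in selected])

# Independent estimate: the same voxel indices measured in a fresh sample.
scores_b, voxels_b = noise_dataset(rng, N_SUBJECTS, N_VOXELS)
independent_r = statistics.fmean(
    [pearson_r(voxels_b[i], scores_b) for i in selected]
)

print(f"circular estimate:    {circular_r:.2f}")
print(f"independent estimate: {independent_r:.2f}")
```

With no true effect present, the circular estimate is large simply because the voxels were chosen for having large correlations in that sample, while the independent estimate stays close to zero.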

In conclusion, the relation between brain anatomical connectivity and performance on higher-order auditory processing tasks was investigated in a cohort of normal-hearing children ages 9-11. Positive correlations between FA and task performance were seen in white matter adjoining prefrontal regions for the auditory processing tasks of speech in noise, time-compressed sentences at high (60%) compression, and low-pass filtered words. For those three tasks, negative correlations between performance and FA were found in the centrum semiovale. For the low-pass filtered words, positive correlations with FA were also found in the corpus callosum. For time-compressed sentences at low (40%) compression, positive correlations between performance and FA were found in the centrum semiovale. Our results support a dual-stream (dorsal and ventral) model of auditory processing, and suggest that higher-order processing tasks rely less on the dorsal stream related to articulatory networks, and more on the ventral stream related to semantic comprehension.

Acknowledgments

Support: This work was supported by the National Institute on Deafness and Other Communication Disorders at the National Institutes of Health (K25 DC008110).

References

- Avan P, Bonfils P. Analysis of possible interactions of an attentional task with cochlear mechanics. Hearing Research. 1992;57:269–275. doi: 10.1016/0378-5955(92)90156-h.

- Basser PJ, Jones DK. Diffusion-tensor MRI: theory, experimental design and data analysis - a technical review. NMR Biomed. 2002;15(7-8):456–467. doi: 10.1002/nbm.783.

- Beasley DS, Freeman BA. Time altered speech as a measure of central auditory processing. In: Keith RW, editor. Central Auditory Dysfunction. New York: Grune and Stratton; 1977.

- Bench J, Kowal A, Bamford J. The BKB (Bamford-Kowal-Bench) Sentence Lists for Partially-Hearing Children. Br J Audiol. 1979;13:108–112. doi: 10.3109/03005367909078884.

- Boemio A, Fromm S, Braun A, Poeppel D. Hierarchical and asymmetric temporal sensitivity in human auditory cortices. Nat Neurosci. 2005;8(3):389–395. doi: 10.1038/nn1409.

- Cacace AT, McFarland DJ. Central auditory processing disorder in school-aged children: a critical review. J Speech Lang Hear Res. 1998;41(2):355–373. doi: 10.1044/jslhr.4102.355.

- Cacace AT, McFarland DJ. The importance of modality specificity in diagnosing central auditory processing disorder. Am J Audiol. 2005;14(2):112–123. doi: 10.1044/1059-0889(2005/012).

- Christensen TA, Antonucci SM, Lockwood JL, Kittleson M, Plante E. Cortical and subcortical contributions to the attentive processing of speech. Neuroreport. 2008;19(11):1101–1105. doi: 10.1097/WNR.0b013e3283060a9d.

- D’Ausilio A, Pulvermuller F, Salmas P, Bufalari I, Begliomini C, Fadiga L. The motor somatotopy of speech perception. Curr Biol. 2009;19(5):381–385. doi: 10.1016/j.cub.2009.01.017.

- de Boer J, Thornton A. Effect of subject task on contralateral suppression of click evoked otoacoustic emissions. Hearing Research. 2007;233:117–123. doi: 10.1016/j.heares.2007.08.002.

- Dogil G, Ackermann H, Grodd W, Haider H, Kamp H, Mayer J, et al. The speaking brain: A tutorial introduction to fMRI experiments in the production of speech, prosody, & syntax. The Journal of Neurolinguistics. 2002;15:59–90.

- Domitz DM, Schow RL. A new CAPD battery--multiple auditory processing assessment: factor analysis and comparisons with SCAN. Am J Audiol. 2000;9(2):101–111. doi: 10.1044/1059-0889(2000/012).

- Friel-Patti S. Clinical Decision-Making in the Assessment and Intervention of Central Auditory Processing Disorders. Language, Speech, and Hearing Services in Schools. 1999;30:345–352. doi: 10.1044/0161-1461.3004.345.

- Froehlich P, Collet L, Chanal JM, Morgon A. Variability of the influence of a visual task on the active micromechanical properties of the cochlea. Brain Res. 1990;508(2):286–288. doi: 10.1016/0006-8993(90)90408-4.

- Froehlich P, Collet L, Morgon A. Transiently evoked otoacoustic emission amplitudes change with changes of directed attention. Physiol Behav. 1993;53(4):679–682. doi: 10.1016/0031-9384(93)90173-d.

- Gandour J, Wong D, Dzemidzic M, Lowe M, Tong Y, Li X. A cross-linguistic fMRI study of perception of intonation and emotion in Chinese. Hum Brain Mapp. 2003;18(3):149–157. doi: 10.1002/hbm.10088.

- Giard M-H, Fort A, Mochetant-Rostaing Y, Pernier J. Neurophysiological mechanism of auditory selective attention. Front Biosci. 2000;5:D84–94. doi: 10.2741/giard.

- Giard MH, Collet L, Bouchet P, Pernier J. Auditory selective attention in the human cochlea. Brain Res. 1994;633(1-2):353–356. doi: 10.1016/0006-8993(94)91561-x.

- Giedd JN, Blumenthal J, Jeffries NO, Castellanos FX, Liu H, Zijdenbos A, et al. Brain development during childhood and adolescence: a longitudinal MRI study. Nat Neurosci. 1999;2(10):861–863. doi: 10.1038/13158.

- Guinan JJ., Jr Olivocochlear efferents: anatomy, physiology, function, and the measurement of efferent effects in humans. Ear Hear. 2006;27(6):589–607. doi: 10.1097/01.aud.0000240507.83072.e7.

- Hannemann R, Obleser J, Eulitz C. Top-down knowledge supports the retrieval of lexical information from degraded speech. Brain Res. 2007;1153:134–143. doi: 10.1016/j.brainres.2007.03.069.

- Hickok G, Poeppel D. The cortical organization of speech processing. Nat Rev Neurosci. 2007;8(5):393–402. doi: 10.1038/nrn2113.

- Hugdahl K, Law I, Kyllingsbaek S, Bronnick K, Gade A, Paulson OB. Effects of attention on dichotic listening: an 15O-PET study. Hum Brain Mapp. 2000;10(2):87–97. doi: 10.1002/(SICI)1097-0193(200006)10:2<87::AID-HBM50>3.0.CO;2-V.

- Ikeda K, Hayashi A, Sekiguchi T, Era S. Attention effects at auditory periphery derived from human scalp potentials: displacement measure of potentials. Int J Neurosci. 2006;116(10):1187–1205. doi: 10.1080/00207450500516446.

- Jancke L, Buchanan TW, Lutz K, Shah NJ. Focused and nonfocused attention in verbal and emotional dichotic listening: an FMRI study. Brain Lang. 2001;78(3):349–363. doi: 10.1006/brln.2000.2476.

- Johnson JA, Zatorre RJ. Attention to simultaneous unrelated auditory and visual events: behavioral and neural correlates. Cereb Cortex. 2005;15(10):1609–1620. doi: 10.1093/cercor/bhi039.

- Kan IP, Thompson-Schill SL. Effect of name agreement on prefrontal activity during overt and covert picture naming. Cogn Affect Behav Neurosci. 2004a;4(1):43–57. doi: 10.3758/cabn.4.1.43.

- Kan IP, Thompson-Schill SL. Selection from perceptual and conceptual representations. Cogn Affect Behav Neurosci. 2004b;4(4):466–482. doi: 10.3758/cabn.4.4.466.

- Keith R. Development and Standardization of the SCAN-C: Test for Auditory Processing Disorders in Children-Revised. J Am Acad Audiology. 2000;11(8):438–445.

- Keith R. Standardization of the Time Compressed Sentence Test. J Educational Audiology. 2002;10:15–20.

- Killion MC, Niquette PA. What can the pure-tone audiogram tell us about a patient’s SNR loss? Hear J. 2000;53:46–53.

- Kim S, Frisina RD, Frisina DR. Effects of age on speech understanding in normal hearing listeners: Relationship between the auditory efferent system and speech intelligibility in noise. Speech and Communication. 2006;48:855–862.

- Klingberg T, Hedehus M, Temple E, Salz T, Gabrieli JD, Moseley ME, et al. Microstructure of temporo-parietal white matter as a basis for reading ability: evidence from diffusion tensor magnetic resonance imaging. Neuron. 2000;25(2):493–500. doi: 10.1016/s0896-6273(00)80911-3.

- Kluender KR, Lotto AJ. Virtues and perils of an empiricist approach to speech perception. J Acoust Soc Am. 1999;105:503–511.

- Kohler E, Keysers C, Umilta MA, Fogassi L, Gallese V, Rizzolatti G. Hearing sounds, understanding actions: action representation in mirror neurons. Science. 2002;297(5582):846–848. doi: 10.1126/science.1070311.

- Konishi S, Nakajima K, Uchida I, Kikyo H, Kameyama M, Miyashita Y. Common inhibitory mechanism in human inferior prefrontal cortex revealed by event-related functional MRI. Brain. 1999;122(Pt 5):981–991. doi: 10.1093/brain/122.5.981.

- Kriegeskorte N, Simmons WK, Bellgowan PS, Baker CI. Circular analysis in systems neuroscience: the dangers of double dipping. Nat Neurosci. 2009;12(5):535–540. doi: 10.1038/nn.2303.

- Kuhl PK. Early language acquisition: cracking the speech code. Nat Rev Neurosci. 2004;5(11):831–843. doi: 10.1038/nrn1533.

- Lebedev MA, Messinger A, Kralik JD, Wise SP. Representation of attended versus remembered locations in prefrontal cortex. PLoS Biol. 2004;2(11):e365. doi: 10.1371/journal.pbio.0020365.

- Ledberg A, Akerman S, Roland PE. Estimation of the probabilities of 3D clusters in functional brain images. Neuroimage. 1998;8(2):113–128. doi: 10.1006/nimg.1998.0336.

- Lewis JW, Talkington WJ, Walker NA, Spirou GA, Jajosky A, Frum C, et al. Human cortical organization for processing vocalizations indicates representation of harmonic structure as a signal attribute. J Neurosci. 2009;29(7):2283–2296. doi: 10.1523/JNEUROSCI.4145-08.2009.

- Liberman AM, Whalen DH. On the relation of speech to language. Trends Cogn Sci. 2000;4(5):187–196. doi: 10.1016/s1364-6613(00)01471-6.

- Liston C, Watts R, Tottenham N, Davidson MC, Niogi S, Ulug AM, et al. Frontostriatal microstructure modulates efficient recruitment of cognitive control. Cereb Cortex. 2006;16(4):553–560. doi: 10.1093/cercor/bhj003.

- Londei A, D’Ausilio A, Basso D, Sestieri C, Del Gratta C, Romani GL, et al. Brain network for passive word listening as evaluated with ICA and Granger causality. Brain Res Bull. 2007;72(4-6):284–292. doi: 10.1016/j.brainresbull.2007.01.008.

- Maison S, Micheyl C, Collet L. Influence of focused auditory attention on cochlear activity in humans. Psychophysiology. 2001;38(1):35–40.

- McFarland DJ, Cacace AT. Factor analysis in CAPD and the “unimodal” test battery: do we have a model that will satisfy? Am J Audiol. 2002;11(1):7–9. doi: 10.1044/1059-0889(2002/ltr01). author reply 9-12.

- McFarland DJ, Cacace AT. Potential problems in the differential diagnosis of (central) auditory processing disorder (CAPD or APD) and attention-deficit hyperactivity disorder (ADHD). J Am Acad Audiol. 2003;14(5):278–280. author reply 280.

- Meister IG, Wilson SM, Deblieck C, Wu AD, Iacoboni M. The essential role of premotor cortex in speech perception. Curr Biol. 2007;17(19):1692–1696. doi: 10.1016/j.cub.2007.08.064.

- Meyer M, Steinhauer K, Alter K, Friederici AD, von Cramon DY. Brain activity varies with modulation of dynamic pitch variance in sentence melody. Brain Lang. 2004;89(2):277–289. doi: 10.1016/S0093-934X(03)00350-X.

- Milham MP, Banich MT, Webb A, Barad V, Cohen NJ, Wszalek T, et al. The relative involvement of anterior cingulate and prefrontal cortex in attentional control depends on nature of conflict. Brain Res Cogn Brain Res. 2001;12(3):467–473. doi: 10.1016/s0926-6410(01)00076-3.

- Moore D. Auditory processing disorder (APD): Definition, diagnosis, neural basis, and intervention. Aud Med. 2006;4:4–11.

- Obleser J, Eisner F, Kotz SA. Bilateral speech comprehension reflects differential sensitivity to spectral and temporal features. J Neurosci. 2008;28(32):8116–8123. doi: 10.1523/JNEUROSCI.1290-08.2008.

- Obleser J, Wise RJ, Alex Dresner M, Scott SK. Functional integration across brain regions improves speech perception under adverse listening conditions. J Neurosci. 2007;27(9):2283–2289. doi: 10.1523/JNEUROSCI.4663-06.2007.

- Olson EA, Collins PF, Hooper CJ, Muetzel RL, Lim KO, Luciana M. White Matter Integrity Predicts Delay Discounting Behavior in 9- to 23-Year Olds: A Diffusion Tensor Imaging Study. J Cogn Neurosci. 2009 doi: 10.1162/jocn.2009.21107. In Press.

- Owen AM, McMillan KM, Laird AR, Bullmore E. N-back working memory paradigm: a meta-analysis of normative functional neuroimaging studies. Hum Brain Mapp. 2005;25(1):46–59. doi: 10.1002/hbm.20131.

- Peterson DC, Schofield BR. Projections from auditory cortex contact ascending pathways that originate in the superior olive and inferior colliculus. Hearing Research. 2007;232:67–77. doi: 10.1016/j.heares.2007.06.009.

- Plante E, Creusere M, Sabin C. Dissociating sentential prosody from sentence processing: activation interacts with task demands. Neuroimage. 2002;17(1):401–410. doi: 10.1006/nimg.2002.1182.

- Plante E, Holland SK, Schmithorst VJ. Prosodic processing by children: an fMRI study. Brain Lang. 2006;97(3):332–342. doi: 10.1016/j.bandl.2005.12.004.

- Poeppel D. The analysis of speech in different temporal integration windows: Cerebral lateralization as ‘asymmetric sampling in time’. Speech and Communication. 2003;41:425–455.

- Puel JL, Bonfils P, Pujol R. Selective attention modifies the active micromechanical properties of the cochlea. Brain Res. 1988;447(2):380–383. doi: 10.1016/0006-8993(88)91144-4.

- Qiu D, Tan LH, Zhou K, Khong PL. Diffusion tensor imaging of normal white matter maturation from late childhood to young adulthood: voxel-wise evaluation of mean diffusivity, fractional anisotropy, radial and axial diffusivities, and correlation with reading development. Neuroimage. 2008;41(2):223–232. doi: 10.1016/j.neuroimage.2008.02.023.

- Romanski LM, Tian B, Fritz J, Mishkin M, Goldman-Rakic PS, Rauschecker JP. Dual streams of auditory afferents target multiple domains in the primate prefrontal cortex. Nat Neurosci. 1999;2(12):1131–1136. doi: 10.1038/16056.

- Schmidt CF, Zaehle T, Meyer M, Geiser E, Boesiger P, Jancke L. Silent and continuous fMRI scanning differentially modulate activation in an auditory language comprehension task. Hum Brain Mapp. 2008;29(1):46–56. doi: 10.1002/hbm.20372.

- Schmithorst VJ. Developmental Sex Differences in the Relation of Neuroanatomical Connectivity to Intelligence. Intelligence. 2009;37:164–173. doi: 10.1016/j.intell.2008.07.001.

- Schmithorst VJ, Holland SK, Dardzinski BJ. Developmental differences in white matter architecture between boys and girls. Hum Brain Mapp. 2008;29(6):696–710. doi: 10.1002/hbm.20431.

- Schmithorst VJ, Wilke M, Dardzinski BJ, Holland SK. Cognitive functions correlate with white matter architecture in a normal pediatric population: A diffusion tensor MRI study. Hum Brain Mapp. 2005;26(2):139–147. doi: 10.1002/hbm.20149.

- Schonwiesner M, Rubsamen R, von Cramon DY. Hemispheric asymmetry for spectral and temporal processing in the human antero-lateral auditory belt cortex. Eur J Neurosci. 2005;22(6):1521–1528. doi: 10.1111/j.1460-9568.2005.04315.x.

- Skipper JI, Nusbaum HC, Small SL. Listening to talking faces: motor cortical activation during speech perception. Neuroimage. 2005;25(1):76–89. doi: 10.1016/j.neuroimage.2004.11.006.

- Talairach J, Tournoux P. In: Co-Planar Stereotaxic Atlas of the Human Brain. Rayport M, translator. New York: Thieme Medical Publishers; 1988.

- Taylor BJ. Speech-in-noise tests: How and why to include them in your basic test battery. Hear J. 2003;56(1):40–46.

- Thomsen T, Rimol LM, Ersland L, Hugdahl K. Dichotic listening reveals functional specificity in prefrontal cortex: an fMRI study. Neuroimage. 2004;21(1):211–218. doi: 10.1016/j.neuroimage.2003.08.039.

- Timpe-Syverson GK, Decker TN. Attention effects on distortion-product otoacoustic emissions with contralateral speech stimuli. J Am Acad Audiol. 1999;10(7):371–378.

- Warren JE, Wise RJ, Warren JD. Sounds do-able: auditory-motor transformations and the posterior temporal plane. Trends Neurosci. 2005;28(12):636–643. doi: 10.1016/j.tins.2005.09.010.

- Wilke M, Schmithorst VJ, Holland SK. Assessment of spatial normalization of whole-brain magnetic resonance images in children. Hum Brain Mapp. 2002;17(1):48–60. doi: 10.1002/hbm.10053.

- Wilke M, Schmithorst VJ, Holland SK. Normative pediatric brain data for spatial normalization and segmentation differs from standard adult data. Magn Reson Med. 2003;50(4):749–757. doi: 10.1002/mrm.10606.

- Wilson SM, Iacoboni M. Neural responses to non-native phonemes varying in producibility: evidence for the sensorimotor nature of speech perception. Neuroimage. 2006;33(1):316–325. doi: 10.1016/j.neuroimage.2006.05.032.

- Wilson SM, Saygin AP, Sereno MI, Iacoboni M. Listening to speech activates motor areas involved in speech production. Nat Neurosci. 2004;7(7):701–702. doi: 10.1038/nn1263.

- Wong PC, Uppunda AK, Parrish TB, Dhar S. Cortical mechanisms of speech perception in noise. J Speech Lang Hear Res. 2008;51(4):1026–1041. doi: 10.1044/1092-4388(2008/075).

- Zatorre RJ, Belin P, Penhune VB. Structure and function of auditory cortex: music and speech. Trends Cogn Sci. 2002;6(1):37–46. doi: 10.1016/s1364-6613(00)01816-7.

- Zekveld AA, Heslenfeld DJ, Festen JM, Schoonhoven R. Top-down and bottom-up processes in speech comprehension. Neuroimage. 2006;32(4):1826–1836. doi: 10.1016/j.neuroimage.2006.04.199.