Abstract

Purpose

The Institute of Medicine report on cooperative groups and the National Cancer Institute’s (NCI) report from the Operational Efficiency Working Group both recommend changes to the processes for opening a clinical trial. This paper provides evidence for the need for such changes by completing the first comprehensive review of all the time and steps required to open a phase III oncology clinical trial and discusses the effect of time to protocol activation on subject accrual.

Methods

The Dilts & Sandler (2006) method was utilized at four cancer centers, two cooperative groups, and NCI’s Cancer Therapy Evaluation Program (CTEP). Accrual data were also collected.

Results

Opening a phase III cooperative group therapeutic trial requires 769 steps, 36 approvals, and a median of approximately 2.5 years from formal concept review to study opening. Time to activation at one group ranged from 435 to 1604 days, and time to open at one cancer center ranged from 21 to 836 days. At centers, group trials were significantly more likely to have zero accruals (38.8%) than non-group trials (20.6%) (p≤.001). Of closed NCI CTEP-approved phase III clinical trials from 2000-2007, 39.1% resulted in <21 accruals.

Conclusions

The length, variability, and low accrual results demonstrate the need for the NCI clinical trial system to be reengineered. Improvements will be of only limited effectiveness if done in isolation; there is a need to return to the collaborative spirit with all parties creating an efficient and effective system. Recommendations put forth by the IOM and OEWG reports, if implemented, will aid this renewal.

Introduction

The Institute of Medicine (IOM) report entitled “A National Cancer Clinical Trials System for the 21st Century,” addresses four overarching goals 1. Two of those goals directly relate to clinical trial operational issues: improving the speed and efficiency of the design, activation, opening, and conduct of clinical trials (Goal I); and improving the means of prioritization, selection, support, and completion of cancer clinical trials (Goal III). Additionally, the report accepted the recommendations of the NCI’s Operational Efficiency Working Group (OEWG) report which includes specific timelines and milestones for performance in the opening of oncology clinical trials. This paper presents a comprehensive review of the evidence demonstrating the need for change in the existing NCI clinical trials system.

The focus of this research is primarily on phase III clinical trials. It can be argued that phase III clinical trials have the highest likelihood of being practice-changing as they can be used as the basis for the implementation of new treatment programs 2. Because of their complex nature and accrual requirements, almost all non-industry sponsored phase III clinical trials in the U.S. are designed and activated through the NCI cooperative group mechanism before they are opened at a cancer center. For clarity, we use the term “activate” to refer to the release of an approved protocol by a cooperative group to the oncology community and “open” to signify when the protocol has received local site IRB approval and has completed all other tasks required for the trial to be made available for patient accrual at that site.

The design and activation of phase III clinical trials requires an extensive development process, with multiple levels of internal oversight within a cooperative group and external oversight by organizations such as the NCI Cancer Therapy Evaluation Program (CTEP), the Food and Drug Administration (FDA), and the pharmaceutical company that has provided the investigational agent for the study. After activation by the cooperative group, the trial must then be evaluated locally at a site, adding additional layers of oversight and review. While the NCI cooperative group program has made major advances in the treatment of cancer, this program has been heavily scrutinized almost since its inception in 1955, with the first concern over its effectiveness voiced in 1959 3.

While such scrutiny is not novel, what is generally missing from such discussions is a detailed, evidence-based evaluation of the steps required for an idea to transit through the system and of the operational or “invisible” barriers faced by all parties in the design of phase III clinical trials 4. While pieces of this puzzle have been presented separately 4-7, this paper presents the first complete picture of the steps and time required to develop and open a phase III clinical trial that examines all participants in the process. It highlights the barriers pervasive across the entire system.

Additionally, a non-clinical cancer researcher might hypothesize that trials which have received such extensive review by multiple parties should have high acceptance in the oncology research community and therefore a high likelihood of achieving their accrual goals. We present data showing that the opposite is the case: at individual cancer centers there is a higher likelihood of zero accrual to a cooperative group trial than to non-cooperative group trials. Further, we present data showing that, of the NCI-CTEP approved trials closed in an eight-year period (2000-2007), about 6% resulted in zero accruals. Finally, we offer a discussion of the implications of this long development process as it relates to accrual performance.

Methods

To understand the oncology clinical trial development process fully, research was conducted at a total of eight institutions involved in the development or implementation of oncology clinical trials in the United States. The sites investigated were two NCI-supported cooperative groups (Cancer and Leukemia Group-B, CALGB; Eastern Cooperative Oncology Group, ECOG), four NCI-designated comprehensive cancer centers (Fox Chase Cancer Center, The Ohio State University Comprehensive Cancer Center, University of North Carolina – Lineberger Comprehensive Cancer Center, and Vanderbilt-Ingram Cancer Center), the National Cancer Institute’s Cancer Therapy Evaluation Program (NCI-CTEP), and the Central Institutional Review Board (CIRB) supported by the NCI. It is important to note that the cancer centers were not randomly selected; they were chosen due to their excellent scores on the clinical research management portion of their respective Cancer Center Support Grant (CCSG) applications.

For each organization, the Dilts & Sandler 4 case study and process map methodology was followed. An interdisciplinary team of experts from schools of medicine, engineering and management collected data from multiple sources through: 1) extensive staff interviews, 2) analysis of existing process documentation and records, 3) archival analysis of clinical trial initiation data, and 4) electronic mail and database records. Personal interviews involved questions targeting the research objectives of identifying processes and barriers to the opening of clinical trials. Archival data collection from written and electronic sources was chosen because 1) such documentation was broad and permitted multiple time frames, settings and events, and 2) archival records are more precise and have fewer reporting and selectivity biases than an individual’s recollection of steps. This process allows for triangulation among the collection of descriptive data (what was said was being done as uncovered in the interviews), normative data (what the policies and procedures documentation indicated should be done) and archival data (what the clinical trial record review showed was actually done). This three-part method resulted in capturing a complete understanding of the development process structure. The end result of this effort was a validated process map and timing data. The method was replicated at multiple institutions to ensure data consistency across sites. Additional, independent data were collected from CTEP for closed studies during the eight-year period from 2000-2007. This study was approved by the Vanderbilt University IRB (IRB# 060602) and supported by the NCI and Vanderbilt University.

The research has three primary outcome metrics: 1) process mapping, 2) timing analysis, and 3) accrual performance.

Process Mapping

This metric identified and mapped existing process steps required to activate or open an oncology clinical trial. The total development time is defined as beginning with the first formal submission of a concept or a letter of intent (LOI) to a cooperative group and ending with the opening of the study for patient accrual at a cancer center. These data were collected by means of more than 100 personnel interviews conducted across more than 30 onsite sessions, additional follow-up e-mail correspondence, and a series of at least two clarification teleconferences each with members of the cooperative groups, CTEP, and comprehensive cancer centers. Informal clarification interview sessions were conducted at each site to ensure that the data and identified processes were accurate prior to finalization of results.

There were multiple cross-validations of steps and timing at different institution hierarchy levels. The interviews were conducted in both individual and group settings with the input remaining anonymous. The interview process included both open- and closed-ended questions. Upon completion, the interviewees were asked to clarify specific acronyms, decision point names, position titles and responsibilities. Objective data stored in databases or e-mails were cross-checked wherever possible with other independent records. Final validation of the process was conducted at each site with all involved participants to ensure that the process was captured correctly and that the interfaces among the participants were correct.

Timing Analysis

Once the process map was complete and verified for accuracy, the calendar days needed for each of the major steps in the process map were collected. For example, a timing analysis of a single trial at one site required the review of archival data compiled from more than four hundred historical e-mail correspondences, 15 file reviews, and validation of timing data through queries to the clinical research management database. Evaluating both the process and the timing of each process allows for an understanding of the maximum yield, or output, that the process can achieve. Identifying this, and the identified process variation, allows the selection of more effective strategies for process and productivity improvements 8-9.

Accrual Performance

A retrospective analysis of therapeutic trials completely closed to enrollment was conducted at each of the institutions. Data were acquired from the various databases that were implemented at each of the institutions. Random clinical trials within the cohort were selected and checked for consistency against physical records.

Results

Results are divided into four segments: 1) number of steps, loops, and groups involved in activating and opening a phase III NCI-approved therapeutic clinical trial, 2) time to activate and open such studies, 3) accruals to all phases of CTEP approved therapeutic studies, and 4) the relationship between time to activate or open and likelihood of eventual accrual success.

Chutes and Ladders

For a representative cooperative group and comprehensive cancer center, activating a phase III cooperative group trial requires a minimum of 458 processing steps in a cooperative group and 216 at CTEP/CIRB, with an additional 95 needed at a comprehensive cancer center to open the trial after activation, for a total of at least 769 processing steps (Table 1). Processing steps are of two types: work steps, i.e., those that require activities be performed, and decision points, i.e., steps that require different routing of the study. There are two important caveats for these data. First, there is some “double-counting” of steps: for example, when one organization’s process step requires that a proposed trial be sent to a different organization, the receipt of this trial will be counted as a processing step in the receiving organization. This results in a small degree of overstatement of steps.

Table 1.

Process Steps, Potential Loops and Number of Stakeholders Involved in Activating and Opening a Phase III Cooperative Group Trial

| | Cooperative Groups** | CTEP & CIRB | Cancer Centers** | Total |
|---|---|---|---|---|
| Process Steps* | ≥ 458 | ≥ 216 | ≥ 95 | ≥ 769 |
| Working Steps* | ≥ 399 | ≥ 179 | ≥ 73 | ≥ 651 |
| Decision Points | 59 | 37 | 22 | 118 |
| Potential Loops | 26 | 15 | 8 | 49 |
| No. of Stakeholders Involved | 11 | 14 | 11 | 36 |

*Process steps denote the minimum number of steps required to complete the development of a clinical trial. The actual number of clinic process steps may be greater depending upon the outcomes of the decision points and the number of loops.

**A representative Cooperative Group and Comprehensive Cancer Center was used in calculating the number of process steps, potential loops, and stakeholders.
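
The totals in Table 1 can be checked with a few lines of arithmetic. The sketch below is illustrative only: the dictionary layout and the names `TABLE1` and `column_totals` are assumptions introduced here, while the counts are transcribed from the table. It shows that working steps plus decision points reproduce the 769 minimum processing steps.

```python
# Minimum counts transcribed from Table 1; the structure and names are hypothetical.
TABLE1 = {
    "Cooperative Group": {"working_steps": 399, "decision_points": 59,
                          "potential_loops": 26, "stakeholders": 11},
    "CTEP & CIRB": {"working_steps": 179, "decision_points": 37,
                    "potential_loops": 15, "stakeholders": 14},
    "Cancer Center": {"working_steps": 73, "decision_points": 22,
                      "potential_loops": 8, "stakeholders": 11},
}


def column_totals(table):
    """Sum each metric across the three organizations."""
    metrics = next(iter(table.values())).keys()
    return {m: sum(org[m] for org in table.values()) for m in metrics}


totals = column_totals(TABLE1)
# A processing step is either a working step or a decision point.
process_steps = totals["working_steps"] + totals["decision_points"]

print(process_steps, totals["potential_loops"], totals["stakeholders"])
```

Because these are minimum counts, each pass through one of the potential loops would add steps on top of this total.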

Second, it is possible in some parts of the process to bypass or repeat steps. Using the children’s game “Chutes & Ladders” as a comparison, such a bypass represents a ladder. For example, if a site accepted the results of a Central Institutional Review Board (CIRB), this would represent a ladder where the local IRB steps could be skipped. Another example would be a study that had expedited review, thus by-passing the full-board review steps. However, it is much more likely that the 769 steps identified are an accurate estimate of the minimum number of steps because virtually all trials are returned for reprocessing sometime during their design. There are a total of 49 points, termed “loops” or chutes, where a trial may be returned to an earlier part of the process flow. For example, if an IRB review requires a change in the science of a study, the proposed protocol can be returned to repeat some of the steps of scientific review.

Neither chutes nor ladders are inherently good or bad. From a positive perspective, a chute that requires a re-evaluation of a protocol due to a major scientific or safety flaw is a good thing. However, if this rework is repeated multiple times, it is a symptom of a deeper underlying flaw with either the protocol or the protocol design system itself. The OEWG report determined that for cooperative group phase III trials activated between 2006-2008, nearly all (97%) required two or more revision loops, and 34% required four or more revisions (p. 19).

Figure 1 shows the back-and-forth nature of the process for one representative phase III trial. This trial was selected because it had the most complete set of timing data. The horizontal rows on the figure are swim lanes and capture those steps undertaken by the study chair, cooperative group, CTEP, and CIRB respectively. Beginning on the upper left, it is straightforward to follow the steps of the design of the concept and protocol as it flows through activities of the various participants.

Figure 1.

Process Flow and Calendar Days Among Stakeholders for One Phase III Cooperative Group Trial

A listing of the various participants is shown in Table 2. A total of 36 separate groups or individuals may be required to approve a study before it is open to patient accrual. More importantly, there is significant overlap across the scope of reviews for scientific, data management, safety / ethics, regulatory, contracts/grants, and study startup – each of which has the potential to experience a chute to a former step in order to obtain complete consensus across all reviewing entities.

Table 2.

Reviews Required to Activate and Open a Phase III Cooperative Group Trial

| Cooperative Group | CTEP | Cancer Center | CCOP/ Affiliates | Others | |

|---|---|---|---|---|---|

| Scientific Review | Disease Site Committee | Steering / CRM | Protocol Review | Feasibility Review | Industry Sponsor |

| Executive Committee | PRC | Site Surveys | |||

| Protocol Reviews (2-4) | CTEP Final | ||||

|

| |||||

| Data Management | CRF Reviews (2-4) | CDE Review | |||

| Database Review | |||||

|

| |||||

| Safety / Ethics | Informed Consent | Local IRB | Informed Consent | CIRB | |

|

| |||||

| Regulatory | Regulatory Review | PMB Review | FDA | ||

| RAB Review | |||||

|

| |||||

| Contracts / Grants | Budget | Industry Sponsor | |||

| Language | |||||

|

| |||||

| Study Start-up | Start-up Review | Start-up Review | Start-up Review | ||

Abbreviations: CCC, Comprehensive Cancer Centers; CCOP, Community Clinical Oncology Program; CDE, Common Data Element; CIRB, Central Institutional Review Board; CRF, Case Report Form; CRM, Concept Review Meeting; CTCG, Clinical Trials Cooperative Group; CTEP, Cancer Therapy Evaluation Program; FDA, Food and Drug Administration; PMB, Pharmaceutical Management Branch; PRC, Protocol Review Committee; RAB, Regulatory Affairs Branch

Development Time and Variation in the Development Process

The time from submission of initial concept/LOI to study activation and opening is difficult to measure because, while the opening date is easily known, capturing the exact time when a concept is formalized can be problematic. The earliest formal measurable date was selected, usually the initial concept/LOI proposal sent by a PI to a cooperative group study committee. A total of 41 trials were evaluated (13 for CALGB; 28 for ECOG). Two comprehensive cancer centers supplied complete historic data with respect to time (n=58 and n=178) and two supplied small samples of studies (n=3 and n=4) (Table 3). The median time for CTEP and cooperative group processing is approximately 800 calendar days (CALGB=784; ECOG=808), with ranges of 537-1130 and 435-1604 days, respectively. For cancer centers, the median calendar time is approximately 120 days, with a range of 21-836 days, with cancer center B being considered an outlier due to its small sample size. These times are cumulative, as cancer centers cannot begin processing a phase III trial until it is activated by a cooperative group. The median calendar time from initial formal concept/LOI to opening for the first patient is therefore approximately 920 days, or approximately 2.5 years. However, the range is 456 to 2440 calendar days, or 1.25 to 6.7 years. To put these times in perspective, this median time is longer than it took to design, conduct, and publish the first CALGB trial 10.

Table 3.

Activation and Opening Times at Cooperative Groups and Cancer Centers

| | n | Median (calendar days) | Min-max (calendar days) |
|---|---|---|---|
| Cooperative Group* | | | |
| CALGB | 13 | 784 | (537 - 1130) |
| ECOG | 28 | 808 | (435 - 1604) |
| Cancer Center** | | | |
| Cancer Center A | 58 | 120 | (27 - 657) |
| Cancer Center B*** | 3 | 252 | (139 - 315) |
| Cancer Center C*** | 4 | 122 | (81 - 179) |
| Cancer Center D | 178 | 116 | (21 - 836) |

*Cooperative Group development time is the number of calendar days from the time the cooperative group receives an LOI/Concept from the study chair until the study is centrally activated.

**Cancer Center development time is the number of calendar days from the day the PI formally submits the trial for consideration until the study is open to patient enrollment at the local site.

***Cancer Centers B and C provided only samples of their portfolio of studies.
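
The cumulative timeline quoted in the text follows directly from the Table 3 values, because cancer center processing starts only after cooperative group activation. The sketch below reproduces that arithmetic; the variable names and the 365-day year are assumptions made for illustration, and adding the two medians is the same approximation the text uses rather than a true median of end-to-end times.

```python
DAYS_PER_YEAR = 365  # assumption: simple calendar-year conversion

# Approximate medians quoted in the text (calendar days).
group_median_days = 800
center_median_days = 120

# Min-max ranges from Table 3 (Cancer Center B is omitted as the stated outlier).
group_ranges = {"CALGB": (537, 1130), "ECOG": (435, 1604)}
center_ranges = {"A": (27, 657), "C": (81, 179), "D": (21, 836)}

# The stages are sequential, so the calendar days add.
total_median_days = group_median_days + center_median_days
shortest_days = (min(low for low, _ in group_ranges.values())
                 + min(low for low, _ in center_ranges.values()))
longest_days = (max(high for _, high in group_ranges.values())
                + max(high for _, high in center_ranges.values()))

for label, days in [("median", total_median_days),
                    ("shortest", shortest_days),
                    ("longest", longest_days)]:
    print(f"{label}: {days} days = {days / DAYS_PER_YEAR:.2f} years")
```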

One major issue related to the development of clinical trials is that they require the coordination and cooperation of multiple participants. For example, designing and activating a phase III cooperative group therapeutic non-pediatric trial requires evaluation and coordination between a cooperative group and CTEP. Multiple reviews by both parties can create a natural tension where one organization is waiting for the other organization to respond to a change. In its worst form, such tension can lead to blaming most start-up delay on the other organization’s inefficiencies. To investigate this issue, 28 studies activated between 2000-2005 were evaluated in depth with respect to time (Table 4). Interestingly, as can be seen from the last column in the table, no specific review or party consistently required longer time. Thus, the issue is not how to repair a single element of the system; rather it is how to reengineer the entire process to reduce rework while simultaneously increasing safety, quality and performance of the trial.

Table 4.

Calendar Days of Review by Different Stakeholders Requiring Response for Phase III Cooperative Group Trials Activated From 2000-2005

| Review | Reviewer | n* | CTEP / CIRB Review Time: median | (range) | Cooperative Group Response Time: median | (range) | Time Difference (coop minus CTEP) |
|---|---|---|---|---|---|---|---|
| Concept Review | | | | | | | |
| | CTEP | 14 | 60 | (15 - 104) | 72 | (1 - 368) | 12 |
| | CTEP | 4 | 48 | (19 - 66) | 36 | (22 - 84) | −12 |
| Concept Re-review | CTEP | 3 | 6 | (1 - 6) | 17 | (1 - 56) | 11 |
| Protocol Review | | | | | | | |
| Protocol Review Committee | CTEP | 33 | 32 | (5 - 69) | 32 | (1 - 188) | 0 |
| Protocol Re-Review | CTEP | 22 | 8 | (1 - 85) | 9 | (1 - 266) | 1 |
| CIRB Review | | | | | | | |
| CIRB Review | CIRB | 43 | 29 | (5 - 55) | 21 | (2 - 83) | −8 |
| Protocol Re-review after CIRB Approval*** | CTEP | 19 | 12 | (1 - 32) | 17 | (1 - 140) | 5 |
| Amendments Prior to Activation | | | | | | | |
| Protocol Re-Review | CTEP | 2 | 9 | (1 - 17) | 5 | (5 - 6) | −4 |
| CIRB Review | CIRB | 10 | 12 | (2 - 34) | 30 | (3 - 67) | 18 |
| Protocol Re-review after CIRB Approval*** | CTEP | 1 | 1 | (1 - 1) | 22 | (22 - 22) | 21 |

*Sample size consists of the number of reviews that were required for the 28 clinical trials followed in the sample.

**The Concept Evaluation Panel was designed to provide a mechanism for quick feedback on new concepts. This process was rolled out during the sampling period; concepts for trials were reviewed either at the Concept Review Meeting or by the Concept Evaluation Panel.

***Trials that require modifications after CIRB review require an additional consensus review by CTEP.
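
The last column of Table 4 is the cooperative group median minus the CTEP/CIRB median for each review step. The sketch below recomputes that column and counts how often each side took longer. The medians are transcribed from the table, but the row labels are hypothetical: the two concept review rows carry no label in the table as extracted, so they are distinguished here only by their sample sizes.

```python
# (review step, CTEP/CIRB median days, cooperative group median days, reported difference)
# Row labels are illustrative; the two concept review rows are unlabeled in Table 4.
TABLE4 = [
    ("Concept review (n=14)", 60, 72, 12),
    ("Concept review (n=4)", 48, 36, -12),
    ("Concept re-review", 6, 17, 11),
    ("Protocol Review Committee", 32, 32, 0),
    ("Protocol re-review", 8, 9, 1),
    ("CIRB review", 29, 21, -8),
    ("Protocol re-review after CIRB approval", 12, 17, 5),
    ("Amendment: protocol re-review", 9, 5, -4),
    ("Amendment: CIRB review", 12, 30, 18),
    ("Amendment: re-review after CIRB approval", 1, 22, 21),
]


def time_differences(rows):
    """Return (step, cooperative group median minus reviewer median) pairs."""
    return [(step, coop - reviewer) for step, reviewer, coop, _ in rows]


differences = time_differences(TABLE4)
group_longer = sum(1 for _, d in differences if d > 0)
reviewer_longer = sum(1 for _, d in differences if d < 0)
equal = sum(1 for _, d in differences if d == 0)

for step, d in differences:
    print(f"{step}: {d:+d} days")
print(group_longer, reviewer_longer, equal)
```

Comparing medians in this way ignores the wide ranges reported for each step, so it is a rough summary rather than a statistical test.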

Low Accruals Performance at Local Participating Institutions

Completing the arduous development process does not ensure accrual success. Data from the four comprehensive cancer centers highlight the poor accrual performance of cooperative group trials at academic medical centers (Table 5) 11. Accruals at comprehensive cancer centers occurred over a multi-year period, but there was not complete overlap in the time periods evaluated. Overall, 898 trials were in the sample: 394 cooperative group trials and 504 non-cooperative group trials. Of particular interest are those trials that were opened and closed to accrual with zero enrollments. Overall, 38.8% of cooperative group trials opened at cancer centers resulted in zero accruals, compared with 20.6% of non-cooperative group trials; thus a cooperative group trial was nearly twice as likely to have zero accrual at a cancer center as a non-cooperative group study (p≤.001). Expanding the range, 77.4% of cooperative group trials opened at these cancer centers accrued fewer than 5 patients.
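
The comparison of zero-accrual rates can be approximated from the published summary figures. The sketch below makes two assumptions that are not stated in the text: the zero-accrual counts are reconstructed by rounding the overall percentages in Table 5 against the group sizes, and the test is a Pearson chi-square on a 2x2 table without continuity correction (the text does not say which test produced the reported p-value). The function name `chi_square_2x2` is hypothetical.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (1 degree of freedom, no continuity correction)
    for the 2x2 table [[a, b], [c, d]]; returns (statistic, p_value)."""
    n = a + b + c + d
    statistic = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # With 1 degree of freedom the upper-tail probability is erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Assumption: counts reconstructed from the overall percentages in Table 5.
group_n, other_n = 394, 504
group_zero = round(0.388 * group_n)
other_zero = round(0.206 * other_n)

statistic, p_value = chi_square_2x2(group_zero, group_n - group_zero,
                                    other_zero, other_n - other_zero)
relative_risk = (group_zero / group_n) / (other_zero / other_n)

print(f"chi-square = {statistic:.1f}, p = {p_value:.2g}, "
      f"relative risk = {relative_risk:.2f}")
```

Under these assumptions the result is consistent with the reported p≤.001 and with the "nearly twice as likely" description, though the original analysis may have used a different test.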

Table 5.

Cancer Center Accrual to Cooperative Group Trials and Non-Cooperative Group Trials

| Accruals By Sponsor: | Cancer Center A | Cancer Center B | Cancer Center C | Cancer Center D | Overall |
|---|---|---|---|---|---|
| Time Period | 1/2001-7/2005 | 1/2000-9/2006 | 1/2000-12/2005 | 1/2000-4/2007 | |
| Cooperative Group Trials | | | | | |
| n= | 37 | 166 | 61 | 130 | 394 |
| zero | 27.0% | 38.6% | 29.5% | 46.9% | 38.8% |
| 1-4 | 37.8% | 38.6% | 39.3% | 38.5% | 38.6% |
| 5-10 | 13.5% | 13.9% | 19.7% | 7.7% | 12.7% |
| 11-15 | 8.1% | 4.2% | 6.6% | 0.0% | 3.6% |
| 16-20 | 2.7% | 0.0% | 1.6% | 4.6% | 2.0% |
| >20 | 10.8% | 4.8% | 3.3% | 2.3% | 4.3% |
| Non-Cooperative Group Trials | | | | | |
| n= | 111 | 157 | 43 | 193 | 504 |
| zero | 18.9% | 14.6% | 23.3% | 25.9% | 20.6% |
| 1-4 | 30.6% | 22.9% | 9.3% | 26.4% | 24.8% |
| 5-10 | 23.4% | 18.5% | 32.6% | 24.9% | 23.2% |
| 11-15 | 12.6% | 13.4% | 14.0% | 7.3% | 10.9% |
| 16-20 | 2.7% | 7.6% | 7.0% | 5.7% | 5.8% |
| >20 | 11.7% | 22.9% | 14.0% | 9.8% | 14.7% |

One argument that could be made to justify these results is that, because cooperative group trials are opened at multiple sites, it is reasonable to have low accruals at any individual site because of the high number of sites contributing to the single accrual goal. Because it is difficult to understand why any cancer center would open such a high number of adult cancer trials if they expect zero accruals, we decided to investigate this question from a more global perspective, i.e., looking at the aggregate, national accruals to such trials. Investigating accruals for all CTEP approved clinical trials completely closed to accrual from 2000-2007 showed that of the 911 trials of all phases in the sample period, 6.4% resulted in zero accruals (Table 6). With respect to phase III trials, 39.1% of trials closed to accrual with 20 or fewer enrollments.
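
The 39.1% figure for phase III trials is the sum of the accrual bins at or below 20 enrollments in Table 6. The sketch below reproduces that sum; the dictionary name and layout are assumptions for illustration, and the percentages are the rounded values printed in the table.

```python
# Phase III column of Table 6 (percent of the 41 closed phase III trials per accrual bin).
PHASE3_PCT = {
    "zero": 9.8,
    "1 to 4": 7.3,
    "5 to 10": 9.8,
    "11 to 15": 2.4,
    "16 to 20": 9.8,
    ">20": 60.9,
}

# Share of trials closing with 20 or fewer enrollments: every bin except ">20".
at_most_20 = round(sum(pct for label, pct in PHASE3_PCT.items() if label != ">20"), 1)
all_bins = round(sum(PHASE3_PCT.values()), 1)

print(at_most_20, all_bins)
```

Because the inputs are already rounded to one decimal place, the result is only as precise as the table itself.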

Table 6.

Accrual to NCI-CTEP Approved Clinical Trials Closed 2000-2007

| | Phase I | Phase I/II | Phase II | Phase III | Other | Pilot Studies | Total |
|---|---|---|---|---|---|---|---|
| n= | 186 | 73 | 549 | 41 | 34 | 28 | 911 |
| Zero | 5.9% | 8.2% | 4.3% | 9.8% | 29.5% | 10.7% | 6.4% |
| 1 to 4 | 9.1% | 12.3% | 4.3% | 7.3% | 8.9% | 0.0% | 6.0% |
| 5 to 10 | 12.4% | 4.1% | 7.3% | 9.8% | 2.9% | 10.7% | 8.1% |
| 11 to 15 | 11.8% | 11.0% | 7.1% | 2.4% | 2.9% | 7.1% | 8.0% |
| 16 to 20 | 8.6% | 5.5% | 9.5% | 9.8% | 2.9% | 17.9% | 9.0% |
| >20 | 52.2% | 58.9% | 67.5% | 60.9% | 52.9% | 53.6% | 62.5% |

The Impact of Long Development Times

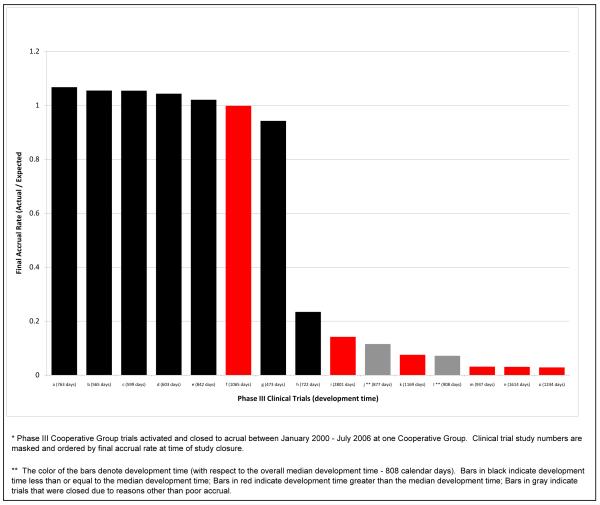

The relationship between accruals and development time was also investigated for phase III cooperative group trials closed to patient enrollment at one cooperative group between January 2000 and July 2006 (n=15). Figure 2 highlights the relationship between development time and accrual. Two of the trials were stopped due to scientific/safety reasons. Of the trials that required less than the median development time (n=7, shown in black), five achieved more than their expected minimum accrual by study closure, and one achieved 94% of its goal. On the other hand, of those trials with development time greater than the median (n=6), only one achieved greater than 90% of the expected accrual by study closure. The remaining trials closed with less than 15% of expected accrual. This relationship is not limited to cooperative group trials: additional analysis on a more complete data set from CTEP, the results of which are published in this issue of CCR, shows a strong negative statistical relationship between achievement of accrual goals and development time 12.

Figure 2.

Accrual Performance of Phase III Trials Closed to Accrual Stratified by Development Time (n=15*)

Discussion

The most recent call for reinvigorating the cooperative group system was issued in April 2010 with the publication of the IOM report “A National Cancer Clinical Trials System for the 21st Century” 1. While the results and the recommendations of this report may be controversial, the problems it outlines as well as its recommendations are not unique. Thirteen years ago, in 1997, an NCI report concluded, “The clinical trials methodologies used by the 11 cooperative groups and 51 cancer centers have created a system described as a ‘Tower of Babel’” 13. The problem persists today as evidenced by the summary results presented here and in recent comments alluding to the process of developing clinical trials as the “Ordinary Miracle of Cancer Clinical Trials” 14. Additional evidence of the problem can be seen in three additional studies. Go et al. 15, investigating a total of 495 ECOG phase II and III trials from 1977-2006, found that 27% of trials failed to complete accrual. Schroen et al. 16, in a study of 248 phase III trials open in 1993-2002 from five cooperative groups, discovered that 35% were closed due to inadequate accrual. Finally, in a cross-country study, Wang-Gillam et al. 17 found that the median time from submittal to opening for a phase II industry-sponsored thoracic oncology clinical trial was 239.5 days at a US institution and 112.5 days at an Italian institution.

Improvements to both the quality of the processes and to the time required to develop clinical trials should not be done in isolation. There is a need to rekindle the collaborative spirit of the cooperative group program. As is well stated in the IOM report, such collaboration would include uniformity of back-office support functions while simultaneously not impairing a group’s ability to be creative with respect to developing clinical trials around interesting and important scientific questions. Additionally, a general philosophy of facilitation rather than primarily oversight should be fostered throughout the NCI, cooperative groups, and cancer centers.

Clearly it is time to go beyond mere recommendations and begin the actual execution of changes in the existing system. Some changes are beginning with efforts such as the timelines developed and implemented by the NCI Operational Efficiency Working Group (OEWG) 18. However, this represents only one of many possible steps—and involves only some of the many participants that need to change—if the system of clinical trial development as a whole is to be reinvigorated.

We would be remiss if we did not address the other elephant in the room: financial resources. Financial incentives, or what is better characterized as removal of financial disincentives, for investigators and centers are a critical aspect of improving the cooperative group program. In 2004, the estimated median per patient site cost for a phase III cooperative group trial was $5,000 19 to $6,000 20, well above the per patient reimbursement rate of $2,000, which has remained constant since 1999 21. In 2008 the NCI increased the rate of reimbursement for a small minority of complex trials by an additional $1,000 22. However, even this increased rate is not adequate to completely cover all labor costs, per subject enrollment costs, and additional research-related paperwork and reporting requirements 23. The response by the cooperative group member sites has been dramatic, with 42% planning or considering limiting accruals to cooperative group trials 24 due to per-case reimbursement or staffing issues.

However, this does not imply that the call for increasing the cooperative group budgets will in itself successfully transform the existing system of cancer research. Recall that the recommendations from the 1997 report occurred while funding was increasing for cooperative groups. Simply adding additional resources to a system in need of repair does not ensure better performance; rather, it is time to revamp the entire system with a focus on effectiveness, efficiency, and financial stability.

The findings presented in this review demonstrate the “chutes and ladders” of the existing system: some aspects of a concept or protocol are able to bypass some process steps (a ladder) but, as shown in the OEWG report, virtually all studies are reprocessed and revised (a chute), sometimes up to six times before proceeding. While many of these chutes and ladders can be within the same organization, most are the result of interactions between organizations, thus it is the system that needs to change. It is critical that more ladders be built and more chutes be eliminated in the entire system to achieve the overarching goal of discovering better ways to prevent, control and cure cancer.

Footnotes

The authors indicate no conflicts of interest nor are there any disclaimers attached to this manuscript.

References

- 1.IOM (Institute of Medicine), editor. A National Cancer Clinical Trials System for the 21st Century: Reinvigorating the NCI Cooperative Group Program. The National Academies Press; Washington, DC: 2010. [PubMed] [Google Scholar]

- 2. Djulbegovic B, Kumar A, Soares HP, et al. Treatment success in cancer: new cancer treatment successes identified in phase 3 randomized controlled trials conducted by the National Cancer Institute-sponsored cooperative oncology groups, 1955 to 2006. Arch Intern Med. 2008;168:632–42. doi: 10.1001/archinte.168.6.632.

- 3. Gellhorn A. Invited remarks on the current state of research in clinical cancer. Cancer Chemotherapy Rep. 1959;5:1–12.

- 4. Dilts DM, Sandler AB. The “Invisible” Barriers to Clinical Trials: The Impact of Structural, Infrastructural, and Procedural Barriers to Opening Oncology Clinical Trials. J Clin Oncol. 2006;24:4545–52. doi: 10.1200/JCO.2005.05.0104.

- 5. Dilts DM, Sandler AB, Baker M, et al. Processes to Activate Phase III Clinical Trials in a Cooperative Oncology Group: The Case of the Cancer Leukemia Group B (CALGB). J Clin Oncol. 2006;24:4553–7. doi: 10.1200/JCO.2006.06.7819.

- 6. Dilts DM, Sandler AB, Cheng S, et al. The Steps and Time to Process Phase III Clinical Trials at the Cancer Therapy Evaluation Program. J Clin Oncol. 2009;27:1761–6. doi: 10.1200/JCO.2008.19.9133.

- 7. Dilts DM, Sandler AB, Cheng S, et al. Development of Clinical Trials in a Cooperative Group Setting: The Eastern Cooperative Oncology Group. Clin Cancer Res. 2008;14:3427–33. doi: 10.1158/1078-0432.CCR-07-5060.

- 8. Bohn R. Noise and learning in semiconductor manufacturing. Management Science. 1995;41:31–42.

- 9. Field J, Sinha K. Applying process knowledge for yield variation reduction: A longitudinal field study. Decision Sciences. 2005;36:159–86.

- 10. Schilsky RL. Personalizing Cancer Care: American Society of Clinical Oncology Presidential Address 2009. J Clin Oncol. 2009;27:3725–30. doi: 10.1200/JCO.2009.24.6827.

- 11. Dilts DM, Sandler AB, Cheng S, et al. Accrual to Clinical Trials at Selected Comprehensive Cancer Centers (abstract). American Society of Clinical Oncology. 2008;26:6543.

- 12. Cheng S, Dietrich M, Finnigan S, Dilts DM. A Sense of Urgency: Evaluating the Link between Clinical Trial Development Time and the Accrual Performance of CTEP-Sponsored Studies. Clin Cancer Res. 2010. doi: 10.1158/1078-0432.CCR-10-0133.

- 13. National Cancer Institute. Report of the National Cancer Institute Clinical Trials Program Review. 1997.

- 14. Steensma DP. Processes to activate phase III clinical trials in a cooperative oncology group: the elephant is monstrous. J Clin Oncol. 2007;25:1148; author reply 1148–9. doi: 10.1200/JCO.2006.09.5232.

- 15. Go RS, Meyer M, Mathiason MA, et al. Nature and outcome of clinical trials conducted by the Eastern Cooperative Oncology Group (ECOG) from 1977 to 2006. J Clin Oncol. 2010;28: abst 6069.

- 16. Schroen AT, Petroni GR, Wang H, et al. Challenges to accrual predictions to phase III cancer clinical trials: A survey of study chairs and lead statisticians of 248 NCI-sponsored trials. J Clin Oncol. 2009;27: abst 6562. doi: 10.1177/1740774511419683.

- 17. Wang-Gillam A, Williams K, Novello S, et al. Time to activate lung cancer clinical trials and patient enrollment: A representative comparison study between two academic centers across the Atlantic. J Clin Oncol. 2010. doi: 10.1200/JCO.2010.28.1824.

- 18. National Cancer Institute (NCI). Report of the Operational Efficiency Working Group: Compressing the Timeline for Cancer Clinical Trial Activation. National Cancer Institute; Bethesda, MD: Mar, 2010.

- 19. Emanuel E, Schnipper L, Kamin D, Levinson J, Lichter A. The costs of conducting clinical research. J Clin Oncol. 2003;21:4145–50. doi: 10.1200/JCO.2003.08.156.

- 20. C-Change. A Guidance Document for Implementing Effective Cancer Clinical Trials. Ver 1.2 ed. C-Change; Washington, DC: 2005. Executive Summary.

- 21. IOM (Institute of Medicine). Multi-center phase III clinical trials and NCI cooperative groups. Washington, DC: 2009.

- 22. Mooney M. Cooperative group clinical trials complexity funding: Model development & trial selection process. Clinical Trials and Translational Research Advisory Committee Meeting. Bethesda, MD: 2008.

- 23. American Cancer Society Cancer Action Network. Barriers to provider participation in clinical cancer trials: Potential policy solutions. Washington, DC: 2009.

- 24. Baer AR, Kelly CA, Bruinooge SS, Runowicz CD, Blayney DW. Challenges to National Cancer Institute–Supported Cooperative Group Clinical Trial Participation: An ASCO Survey of Cooperative Group Sites. Journal of Oncology Practice. 2010;6:1–4. doi: 10.1200/JOP.200028.