Abstract

A major goal of computational neuroscience is the creation of computer models for cortical areas whose response to sensory stimuli resembles that of cortical areas in vivo in important aspects. It is seldom considered whether the simulated spiking activity is realistic (in a statistical sense) in response to natural stimuli. Because certain statistical properties of spike responses were suggested to facilitate computations in the cortex, acquiring a realistic firing regimen in cortical network models might be a prerequisite for analyzing their computational functions. We present a characterization and comparison of the statistical response properties of the primary visual cortex (V1) in vivo and in silico in response to natural stimuli. We recorded from multiple electrodes in area V1 of 4 macaque monkeys and developed a large state-of-the-art network model for a 5 × 5-mm patch of V1 composed of 35,000 neurons and 3.9 million synapses that integrates previously published anatomical and physiological details. By quantitative comparison of the model response to the “statistical fingerprint” of responses in vivo, we find that our model for a patch of V1 responds to the same movie in a way that matches the statistical structure of the recorded data surprisingly well. The deviation between the firing regimen of the model and the in vivo data is on the same level as deviations among monkeys and sessions. This suggests that, despite strong simplifications and abstractions of cortical network models, they are nevertheless capable of generating realistic spiking activity. To reach a realistic firing state, it was not only necessary to include both N-methyl-d-aspartate and GABAB synaptic conductances in our model, but also to markedly increase the strength of excitatory synapses onto inhibitory neurons (>2-fold) in comparison to literature values, hinting at the importance of carefully adjusting the effect of inhibition for achieving realistic dynamics in current network models.

INTRODUCTION

Numerical simulations of detailed biophysical models of cortical microcircuits or even whole brain regions provide powerful tools to approach complex questions in neuroscience and are commonly regarded as a promising way to understand the mechanistic link from anatomical structure and physiological properties to computational functions of cortical circuits. In general, approaches along this line incorporate selected aspects of the known anatomy and physiology to replicate experimental data on emergent functional properties, such as the structure of preferred orientation maps of the primary visual cortex (Adorjan et al. 1999; Bartsch and van Hemmen 2001; Blumenfeld et al. 2006), direction selectivity maps (Ernst et al. 2001; Wenisch et al. 2005), and simple/complex cells (Chance et al. 1999; Tao et al. 2004; Wielaard et al. 2001), or to exemplify theoretical ideas about information processing in the brain (Diesmann et al. 1999; Maass et al. 2002; Vogels and Abbott 2005). However, these increasingly complex recurrent network models are often still a strong abstraction from reality, and it is not clear whether the responses of such network models exhibit at least a general likeness to their counterparts in reality.

Constraining the firing regimen of in silico models with that observed in vivo is important for at least two reasons: First, it will benchmark current models to achieve a realistic firing response and thus will further help to open new research directions because it will hint at current shortcomings of existing models. Second, it has been suggested theoretically that there might be a firing regimen or state that is favorable for ongoing computation within recurrent neural networks (Brunel 2000; Legenstein and Maass 2007; Vogels and Abbott 2005). One might thus postulate that, during evolution, the brain has shaped a particularly useful firing regimen that in some way supports the computational function of the neural tissue. Therefore achieving a realistic firing activity in cortical circuit models might be an important but rarely considered prerequisite to using these models for analyzing aspects of cortical computational functions. If a realistic firing regimen cannot be achieved easily, the validity of conclusions drawn from these model circuits might be compromised, or efforts have to be made to tune these models toward a realistic regimen. To address this issue, we ask whether a state-of-the-art network model of a cortical circuit is able to reproduce the characteristic firing regimen of the cortex.

We focus on the primary visual cortex (V1) in the anesthetized state, because the anatomical and neurophysiological details of V1 are relatively well known and its position in visual sensory processing is relatively well established. In contrast to awake and behaving monkeys, the visual input received by V1 during stimulation is easily constrained by the experimenter. Moreover, V1 already serves as a reference cortical area to study large-scale network models (Johansson and Lansner 2007; Kremkow et al. 2007), although many aspects of its computational organization and the underlying mechanisms remain poorly understood (Olshausen and Field 2005).

To compare the firing state of V1 in vivo with that of simulated responses of a cortical network model in silico, we first recorded spike responses with multielectrode arrays in V1 of four anesthetized monkeys while presenting seminatural movies of several-minute duration. Given the complex naturalistic stimuli, we thus expect that V1 will likely be in an operating regimen, where its computations are usually performed. We characterized this firing regimen by its “statistical fingerprint” using a number of salient statistical features, measuring the spike variability, the burst behavior, and the correlation structure. We compared this “statistical fingerprint” to that obtained from the response of a state-of-the-art cortical circuit model of a 5 × 5-mm patch of V1, comprising about 35,000 neurons and 3.9 million synapses situated in several hypercolumns. The developed spiking neuron network model is based on the cortical microcircuit model described in Haeusler and Maass (2007), which implements experimental data from Thomson et al. (2002) on lamina-specific connection probabilities and data from Markram et al. (1998) and Gupta et al. (2000) regarding stereotypical dynamic properties (such as paired pulse depression and paired pulse facilitation) of synaptic connections. We extended this cortical microcircuit model laterally and incorporated many anatomical properties of V1 in macaques to ensure the comparability to our in vivo recordings.

Our approach, using both electrophysiological recordings and model circuit simulation, provided us with the unique possibility to use the same movie stimuli for the model simulations and during in vivo recordings. Given this comparability, we were able to study whether the firing regimen of a model achieves a realistic state, and if not, whether a set of global parameters was sufficient to tune the model's firing regimen to become more realistic.

We found that the response of the detailed model circuit adopts a firing regimen that is remarkably similar to the in vivo response, with a deviation that is on average close to the deviations across different sessions and different monkeys. This close match was achieved by tuning only a few parameters: an overall synaptic weight scaling factor compensating for the reduced number of modeled neurons, the relative synaptic weight from excitatory to inhibitory neurons, and the relative strength of patchy lateral long-range excitatory weights. We found that the firing response statistics were not simply induced by the statistics of the complex input stimuli but instead depended significantly on the internal dynamics. This good fit suggests that current network models comprising realistic neuron dynamics, as well as realistic time courses of synaptic activation, which included short-term depression and facilitation, are capable of generating a similarly diverse network response behavior as can be observed in in vivo recordings.

Growing evidence suggests that computational functions of neural circuits are closely linked to their firing regimen. We therefore expect that the characterization of the firing regimen provided here and the possibility to use a few parameters to calibrate a complex model will greatly ease the analysis of the computational properties of realistic, detailed circuit models in the future.

METHODS

Experimental methods

ELECTROPHYSIOLOGICAL RECORDING.

The electrophysiological recordings were previously described in Montemurro et al. (2008), where the same data were analyzed from a different perspective. However, for completeness we include a detailed description here.

Four adult rhesus monkeys (Macaca mulatta) participated in these experiments. All procedures were approved by the local authorities (Regierungspräsidium) and were in full compliance with the guidelines of the European Community (EUVD 86/609/EEC) for the care and use of laboratory animals. Before the experiments, form-fitted head posts and recording chambers were implanted during an aseptic and sterile surgical procedure (Logothetis et al. 2002). To perform the neurophysiological recordings, the animals were anesthetized (remifentanil, 0.5–2 μg/kg/min), intubated, and ventilated. Muscle relaxation was achieved with a fast-acting paralytic, mivacurium chloride (5 mg/kg/h). Body temperature was kept constant, and lactated Ringer solution was given at a rate of 10 ml/kg/h. During the entire experiment, the vital signs of the monkey and the depth of anesthesia were continuously monitored (as described in Logothetis et al. 1999). For the protocol used in these experiments, we previously examined the concentration of all stress hormones (catecholamines) (Logothetis et al. 1999) and found them to be within the normal limits. Drops of 1% ophthalmic solution of anticholinergic cyclopentolate hydrochloride were instilled into each eye to achieve cycloplegia and mydriasis. Refractive errors were measured, and contact lenses (hard PMMA lenses, Wöhlk) with the appropriate dioptric power were used to bring the animal's eye into focus on the stimulus plane. The electrophysiological recordings were performed with electrodes that were arranged in a 4 × 4 square matrix (interelectrode spacing varied from 1 to 2.5 mm) and introduced in each experimental session into the cortex through the overlying dura mater by a microdrive array system (Thomas Recording, Giessen, Germany). Electrode tips were typically (but not always) positioned in the upper or middle cortical layers. The impedance of the electrodes varied from 300 to 800 kOhm.
Both spontaneous and stimulus-induced neural activity were collected and recorded for periods ≤6 min. Signals were amplified using an Alpha Omega amplifier system (Alpha Omega, Ubstadt-Weiher, Germany). The amplifying system filtered out the frequencies below 1 Hz. Recordings were performed in a darkened booth (Illtec, Illbruck Acoustic). The receptive fields of the electrode sites were plotted manually, and the position and size of each field were stored together with the stimulus parameters and the acquisition data. The visual stimulator was a dual processor Pentium II workstation running Windows NT (Intergraph, Huntsville, AL) and equipped with OpenGL graphics cards (3Dlabs Wildcat series). The resolution was set to 640 by 480 pixels. The refresh rate was 60 Hz, and the movie frame rate was 30 Hz. All image generation was in 24-bit true-color, using hardware double buffering to provide smooth animation. The 640 × 480 VGA output drove the video interface of a fiber-optic stimulus presentation system (Avotec, Silent Vision) and also drove the experimenter's monitor. The field of view (FOV) of the system was 30 H × 23 V degrees of visual angle and the focus was fixed at 2 diopters. The system's effective resolution, determined by the fiber-optic projection system, was 800 H × 225 V pixels (the fiber-optic bundle is 530 × 400 fibers). Binocular presentations were accomplished through two independently positioned plastic, fiber-optic glasses. Positioning was aided by a modified fundus camera (Zeiss RC250) that permitted simultaneous observation of the eye fundus. The fundus camera has a holder for the Avotec projector so that the centers of the camera lens and of the Avotec projector are aligned on the same axis. This process ensured the alignment of the stimulus center with the fovea of each eye. To ensure accurate control of stimulus presentation, a photo-diode was attached to the experimenter's monitor, permitting the recording of the exact presentation time of every single frame.

The visual stimuli were binocularly presented 3.5- to 6-min-long natural color movies (segments of the commercial movie “Star Wars”). During each of 10 recording sessions, the movie was repeated 12–40 times.

SPIKE DETECTION.

To extract spike times from the electrophysiological recordings, the 20.83 kHz neural signal was filtered in the high-frequency range of 500–3,500 Hz. The threshold for spike detection was set to 3.5 SD. A spike was recognized as such only if the previous spike had occurred >1 ms earlier. The detected spikes represent the spiking activity of a small population of cells rather than well-separated spikes from a single neuron. To separate this multiunit activity into single unit activity, we sorted the spikes according to their waveforms.
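The threshold-and-dead-time rule described above can be sketched as follows. This is a minimal illustration rather than the recording pipeline: it assumes that the trace has already been band-pass filtered, that the SD is estimated over the whole trace, that spikes are upward threshold crossings, and that the 1-ms rule is applied relative to the last accepted spike. The function name and its defaults are hypothetical.

```python
import statistics


def detect_spikes(filtered, fs_hz=20830.0, n_sd=3.5, dead_time_s=1e-3):
    """Return spike times (s) from an already band-pass-filtered trace.

    Assumptions (not stated in the text): upward threshold crossings,
    SD taken over the whole trace, dead time measured from the last
    accepted spike.
    """
    threshold = n_sd * statistics.pstdev(filtered)
    spike_times = []
    last_time = None
    for i in range(1, len(filtered)):
        crossed = filtered[i] >= threshold and filtered[i - 1] < threshold
        if not crossed:
            continue
        t = i / fs_hz
        # Accept only if the previous accepted spike is more than 1 ms old.
        if last_time is None or t - last_time > dead_time_s:
            spike_times.append(t)
            last_time = t
    return spike_times


# Toy trace: three pulses, the second only ~0.48 ms after the first.
trace = [0.0] * 20830
for idx in (1000, 1010, 5000):
    trace[idx] = 10.0
spikes = detect_spikes(trace)
```

In the toy trace, the second pulse falls inside the dead time of the first and is therefore discarded.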

For spike sorting, we used the method described by Quian Quiroga et al. (2004). The spike waveforms were extracted around the detection times as described above (in a region of 0.25 ms before to 0.5 ms after the detected spike). These spike forms were interpolated, and 10 wavelet features (with 4 scales) were extracted (Quian Quiroga et al. 2004). From this feature pool, the 10 features (selected with a KS test) were used as input for the clustering algorithm. We sorted the spikes using the superparamagnetic clustering algorithm of Quian Quiroga et al. (2004). For each electrode, a few reasonable clusters were selected by visual inspection of the spike waveforms, ensuring a reasonably distinguishable average waveform among clusters. After this initial selection, spikes that initially were not classified in a particular cluster (or that belonged to clusters that were not selected) were forced to belong to the nearest selected cluster (Mahalanobis distance; Quian Quiroga et al. 2004). A cluster that maintained very similar waveforms after this step was deemed to be a well-isolated cluster and was considered for further analysis. Otherwise the cluster was not considered further.

Model

In this section, we describe a data-based model developed to compare its spiking activity with the electrophysiological recordings from macaque. It consists of an input model [representing the retina and lateral geniculate nucleus (LGN) of the thalamus] and a model of a patch of V1, receiving and processing the spikes of the input model. In the following, we will first describe the V1 model and subsequently the input model.

V1 MODEL.

Our model for a 5 × 5-mm patch of area V1 consisted of 34,596 neurons and 3.9 million synapses. Various anatomical and physiological details were included in our model. The connectivity structure of the V1 model was similar to that of the generic cortical microcircuit model discussed in Haeusler and Maass (2007). The neurons of that model were equally distributed on three layers, corresponding to the cortical layers 2/3, 4, and 5. Each layer contained a population of excitatory neurons and a population of inhibitory neurons with a ratio of 4:1 (Beaulieu et al. 1992; Markram et al. 2004). The inter- and intralayer connectivity (probability and strength) was chosen according to experimental data from rat and cat cortex assembled in Thomson et al. (2002). Although there are differences, the connectivity structure in macaque is similar to that of the cat, in particular, if one identifies layer 2/3 and 4 in cat with 2-4B and 4C in macaque, respectively (Callaway 1998). In both species, the major geniculate input first reaches layer 4C. Layer 4C projects to layer 2-4B, which in turn projects further to layer 5 (and layer 6 via layer 5), where feedback connections are made to layers 2-4B (see Callaway 1998 for a review). Furthermore, the sublaminar organization, e.g., the structure built by cytochrome-oxidase blobs in layer 2/3 (Callaway 1998), was neglected for simplicity and for the lack of precise data. However, as described below, the V1 model contained, in addition to the microcircuit model of Haeusler and Maass (2007), a realistic thalamic input, a smooth orientation map, and patchy long-range connections in the superficial layer.

In contrast to Haeusler and Maass (2007), we set the relative number of neurons per layer to 33%. This partitioning corresponds to experimental data from macaques (Beaulieu et al. 1992; O'Kusky and Colonnier 1982; Tyler et al. 1998), although we slightly adjusted the relative number of neurons compared with the experimental values (where layer 4 has ∼33% more neurons), compensating for the fact that our model neglects the magnocellular and koniocellular pathways in favor of the parvocellular pathway (Callaway 1998). The three layers of the model can be identified with layers 2-4B, 4Cβ, and 5–6 in macaque V1. To avoid confusion with the terminology of Haeusler and Maass (2007), we will, nevertheless, call them layer 2/3, 4, and 5 in the following.

In macaque, each of these three layers contains ∼50,000 neurons under a surface area of 1 mm² (Beaulieu et al. 1992). In our model, we neglected that neuron density varies up to 1.5-fold between the layers (Beaulieu et al. 1992) and assumed instead that the neurons are uniformly distributed throughout the cortex. Thus for simplicity, we positioned all neurons on a cuboid grid with constant grid spacing. Using the experimentally measured neuron density, e.g., for layer 2/3, the grid spacing would be 20 μm in all directions. Because the simulation of such a dense network would take too much computation time, we diluted the neuron density by increasing the lateral grid spacing to 80 μm and the vertical spacing to about 200 μm.
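As a quick consistency check of these numbers, the sketch below counts the grid positions on a 5 × 5-mm patch with 80-μm lateral spacing. The number of horizontal sheets (9, i.e., 3 per layer) is not stated in the text; it is an assumption chosen here because it reproduces the reported total of 34,596 neurons. The function name is hypothetical.

```python
def grid_layout(side_um=5000, lateral_um=80, n_sheets=9):
    """Count neurons on a regular grid covering a square cortical patch.

    n_sheets (horizontal sheets stacked vertically) is an assumption,
    not a value given in the text.
    """
    per_side = side_um // lateral_um      # grid positions along one edge
    per_sheet = per_side * per_side       # neurons in one horizontal sheet
    return per_side, per_sheet, per_sheet * n_sheets


per_side, per_sheet, total = grid_layout()

# Dilution relative to 3 layers x ~50,000 neurons/mm^2 x 25 mm^2 of cortex.
dilution = (3 * 50_000 * 25) / total
```

Under these assumptions the grid has 62 positions per edge, and the model contains roughly 1 neuron for every 108 neurons in the corresponding cortical volume.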

We used a conductance-based single-compartment neuron model. Because of a considerable gain in computational speed, we used a neuron model suggested by Izhikevich (2003), which can be adjusted to express different firing dynamics (Izhikevich 2006). We randomly drew the parameters for each neuron in the network according to the bounds provided by Izhikevich et al. (2004). On the basis of these parameter distributions, the excitatory pools consisted of regular spiking cells, intrinsically bursting cells, and chattering cells, with a bias toward regular spiking cells. The inhibitory pools consisted of fast spiking neurons and low-threshold spiking neurons.
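For orientation, the sketch below integrates the two-variable neuron model of Izhikevich (2003) in its standard current-driven form with the commonly quoted regular-spiking parameters. It is an illustration only: the model described here is conductance based and draws its parameters randomly per neuron, whereas this sketch uses a constant input current, fixed parameters, and a simple forward-Euler step, all of which are assumptions made for brevity.

```python
def simulate_izhikevich(i_ext, t_ms=500.0, dt=0.5,
                        a=0.02, b=0.2, c=-65.0, d=8.0):
    """Forward-Euler integration of the Izhikevich (2003) neuron.

    v' = 0.04 v^2 + 5 v + 140 - u + I,   u' = a (b v - u),
    with reset v <- c, u <- u + d whenever v >= 30 mV.
    Returns the list of spike times in ms.
    """
    v = c
    u = b * v
    spikes = []
    for step in range(int(t_ms / dt)):
        v += dt * (0.04 * v * v + 5.0 * v + 140.0 - u + i_ext)
        u += dt * a * (b * v - u)
        if v >= 30.0:                      # spike cutoff and reset
            spikes.append((step + 1) * dt)
            v = c
            u += d
    return spikes


quiet = simulate_izhikevich(0.0)    # no input: the neuron stays at rest
firing = simulate_izhikevich(10.0)  # constant drive: repetitive firing
```

Other firing classes (intrinsically bursting, chattering, fast spiking, low-threshold spiking) are obtained by changing `a`, `b`, `c`, and `d`.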

In addition to the synaptic input from other neurons in the model, each neuron received synaptic background input, modeling the bombardment of each neuron with synaptic inputs from a large number of neurons that are not represented in our model. This synaptic background input causes a depolarization of the membrane potential and a lower membrane resistance, commonly referred to as the “high conductance state” (Destexhe et al. 2001). The conductances of the background input were modeled according to Destexhe et al. (2001) by Ornstein-Uhlenbeck processes with means gexc = 0.012 μS and ginh = 0.047 μS, standard deviations σexc = p1 × 0.003 μS and σinh = p1 × 0.0066 μS, and time constants τexc = 2.7 ms and τinh = 10.5 ms, where the indices exc/inh refer to excitatory and inhibitory background conductances, respectively. During the parameter optimization, we scaled the standard deviations of both processes by the common factor p1, which sets the amount of noise added to the conductance of a neuron.
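A background conductance of this kind can be generated with the exact discrete-time update of an Ornstein-Uhlenbeck process. The sketch below is a minimal illustration under stated assumptions: σ is treated as the stationary standard deviation (in μS), the scaling factor is fixed at p1 = 1, the time step of 0.1 ms is arbitrary, and negative excursions are not clipped. The function name is hypothetical.

```python
import math
import random


def ou_conductance(g_mean, sigma, tau_ms, n_steps, dt_ms=0.1, seed=0):
    """Sample an Ornstein-Uhlenbeck conductance trace (same unit as g_mean).

    Uses the exact update for a step of length dt:
    g <- g_mean + (g - g_mean) * exp(-dt/tau) + sigma * sqrt(1 - exp(-2 dt/tau)) * N(0, 1)
    """
    rng = random.Random(seed)
    decay = math.exp(-dt_ms / tau_ms)
    noise_amp = sigma * math.sqrt(1.0 - decay * decay)
    g = g_mean
    trace = []
    for _ in range(n_steps):
        g = g_mean + (g - g_mean) * decay + noise_amp * rng.gauss(0.0, 1.0)
        trace.append(g)
    return trace


p1 = 1.0  # noise scaling factor (tuned during optimization in the text)
g_exc = ou_conductance(0.012, p1 * 0.003, 2.7, n_steps=20000)
mean_exc = sum(g_exc) / len(g_exc)
sd_exc = math.sqrt(sum((g - mean_exc) ** 2 for g in g_exc) / len(g_exc))
```

Because the update is exact, the stationary mean and SD of the trace do not depend on the chosen time step.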

Short-term synaptic dynamics was implemented according to Markram et al. (1998), with synaptic parameters chosen as in Maass et al. (2002) to fit data from microcircuits in rat somatosensory cortex (based on Gupta et al. 2000 and Markram et al. 1998). For further details, we refer to Haeusler and Maass (2007).
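To illustrate how such a synapse behaves, the sketch below implements one common discrete-time form of this short-term plasticity model, in which the amplitude of the k-th response is A_k = w · u_k · R_k, with a utilization variable u (facilitation, time constant F) and a resource variable R (depression, time constant D). The recurrence is written from memory of the standard formulation and the parameter values are illustrative only; neither is taken from this paper, so both should be checked against the cited references.

```python
import math


def synaptic_amplitudes(spike_times_s, w, U, D, F):
    """Response amplitudes of a dynamic synapse for a presynaptic spike train.

    u_k = U + u_{k-1} (1 - U) exp(-dt / F)
    R_k = 1 + (R_{k-1} - u_{k-1} R_{k-1} - 1) exp(-dt / D)
    with u_1 = U and R_1 = 1; dt is the preceding interspike interval (s).
    """
    amplitudes = []
    u, r = U, 1.0
    for k, t in enumerate(spike_times_s):
        if k > 0:
            dt = t - spike_times_s[k - 1]
            u_prev, r_prev = u, r
            u = U + u_prev * (1.0 - U) * math.exp(-dt / F)
            r = 1.0 + (r_prev - u_prev * r_prev - 1.0) * math.exp(-dt / D)
        amplitudes.append(w * u * r)
    return amplitudes


train = [0.05 * k for k in range(5)]  # 20-Hz presynaptic train
# Illustrative parameter sets (assumed, not taken from this paper):
depressing = synaptic_amplitudes(train, w=1.0, U=0.5, D=1.1, F=0.05)
facilitating = synaptic_amplitudes(train, w=1.0, U=0.05, D=0.125, F=1.2)
```

With a large U and slow recovery the second response is smaller than the first (paired pulse depression); with a small U and slow decay of facilitation it is larger (paired pulse facilitation).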

Lateral connectivity structure.

The generic microcircuit model of Haeusler and Maass (2007) was based on data for a column of about 100 μm in diameter with uniform connectivity per layer and neuron type. Here we extended the model laterally to several millimeters. Thus connection probabilities in our model depend on the lateral distance. For inter- and intracortical connections, we generally used a bell-shaped (Gaussian) probability distribution for determining the lateral extent. The SD of the Gaussian was set to 200 μm for excitatory neurons (Blasdel et al. 1985; Buzas et al. 2006; Lund et al. 2003) and to 150 μm for inhibitory neurons to incorporate the observed occurrence of extremely narrow inhibitory dendritic and axonal spreads (70 μm; Lund et al. 2003). The arborization of excitatory neurons in layer 5 seems to be wider and more diffuse, with a spread of >500 μm laterally from the soma (Blasdel et al. 1985). Thus for these connections, we set the SD to 300 μm. Note that the value for the SD is about one half the expected maximal extent of 95% of the arborizations.

To ensure consistency with the connectivity data of Thomson et al. (2002), we scaled the Gaussian profiles such that the peak probabilities correspond to their experimentally measured connection probabilities. Therefore their connectivity pattern was locally preserved.
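The distance-dependent connection rule can be sketched as a Gaussian profile whose peak equals the locally measured connection probability. In the example below, the peak probability of 0.2 is a placeholder and not a value from Thomson et al. (2002); the function name is hypothetical.

```python
import math


def connection_probability(r_um, p_peak, sd_um):
    """Gaussian fall-off of connection probability with lateral distance.

    p_peak is the probability at zero lateral distance, so the local
    (within-column) connectivity is preserved.
    """
    return p_peak * math.exp(-(r_um ** 2) / (2.0 * sd_um ** 2))


p_local = connection_probability(0.0, p_peak=0.2, sd_um=200.0)
p_one_sd = connection_probability(200.0, p_peak=0.2, sd_um=200.0)
p_far = connection_probability(1000.0, p_peak=0.2, sd_um=200.0)
```

At a lateral distance of one SD the probability has dropped to about 61% of its peak, and at 1 mm it is negligible for an SD of 200 μm.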

According to Song et al. (2005), the number of bidirectional connections between excitatory neurons in layer 5 is four times higher than the number expected if connections in the two directions were formed independently of each other. We incorporated this increased probability into our model.

Patchy lateral long-range connections.

In cat and macaque, many pyramidal cells in layer 2/3 of the striate cortex (and also elsewhere in the cortex; Lund et al. 2003) have characteristic long-range projections that laterally target 80% excitatory and 20% inhibitory cells (McGuire et al. 1991), which can be 6 mm or more away (Buzas et al. 2006; Gilbert et al. 1996; Lund et al. 2003). Moreover, the targeted neurons tend to have a similar feature preference as their origin, resulting in patchy connections linking similar preferred orientations (Buzas et al. 2006; Gilbert et al. 1996). Combining anatomical reconstructions of neurons and optical imaging of orientation maps, Buzas et al. (2006) proposed a formula to calculate the bouton density ρ of a typical layer 2/3 pyramidal cell

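$$\rho(r, \Delta\phi) = \frac{1}{Z}\left[\exp\left(-\frac{r^{2}}{2\sigma_{1}^{2}}\right) + m\,\exp\left(-\frac{r^{2}}{2\sigma_{2}^{2}}\right)\exp\bigl(\kappa\cos\bigl(2(\Delta\phi - \mu)\bigr)\bigr)\right] \qquad (1)$$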

r is the lateral (Euclidean) distance between the pre- and the postsynaptic neuron, and Δφ is the difference of preferred orientations of the two neurons. Parameter μ is an offset in the orientation preference and parameter m is a scaling factor that accounts for the importance of the long-range orientation-dependent term against the local orientation independent term. SD σ1 and σ2 regulate the spatial width of the nonoriented and oriented term, respectively. Parameter κ signifies the “peakiness” of the density on the orientation axis. Z is a normalization constant. Because we defined preferred orientations in a hard-wired manner via “oriented” input connections (see APPENDIX A), we applied Eq. 1 for the lateral connections in layer 2/3, more specifically, for projections from excitatory cells targeting excitatory and inhibitory cells (McGuire et al. 1991).

Analogous to connections between other layers, we set σ1 = 200 μm for the local nonoriented term. We set μ = 0° and σ2 = 1,000 μm (estimated from the measurements of Buzas et al. 2006). We chose a higher value, κ = 20, than reported for the following reason. As described above, the neuron density of our circuit model is much smaller than in reality. We compensated for this neuron dilution by a noise process fed into each modeled neuron, which implicitly models activation of omitted neurons as described above (Destexhe et al. 2001). Because any (implicit) input from omitted neurons is independent of orientation preference, neurons in the circuit should have a strong bias toward orientation-preference-dependent connections. To account for this bias, we therefore increased κ.

Finally, the parameter m was set so that 58% of the excitatory synapses onto an excitatory neuron in layer 2/3 were long-range connections. As before, the connection probability was scaled, according to Thomson et al. (2002), by setting Z to appropriate values. Thus locally, i.e., for a neuron at the same lateral position (and orientation preference) such as a neuron located in the same layer beneath or above the presynaptic neuron, the connection probabilities were preserved. However, the weight distribution of the long-range connections was not constrained by Thomson et al. (2002). Hence, we simply scaled the recurrent weight reported by Thomson et al. (2002) and fitted the scaling factor to the in vivo data (see RESULTS). As the standard value, we used 1.0 for this parameter, i.e., the same average weight as in Thomson et al. (2002).

Distance-dependent synaptic delay.

Synaptic delays differ for inhibitory and excitatory neurons. They were set according to measurements by Gupta et al. (2000) (for details see Haeusler and Maass 2007). These delays stem from molecular processes of synaptic transmission. In addition, a second delay originating from finite spike propagation velocity of the fibers was included. This delay depends on the (Euclidean) distance between the pre- and the postsynaptic neuron. Girard et al. (2001) found a median conduction velocity of 0.3 m/s for the upper layers and 1 m/s for the lower layers of V1 in macaque monkeys. Thus we sampled the velocity for each excitatory synapse in layer 2/3 from a Gaussian distribution with mean 0.3 m/s and SD 0.5 m/s (with enforced lower and upper bounds of 0.05 and 5 m/s, respectively). For the other layers, the conduction velocities were drawn from a Gaussian distribution with mean 1 m/s and SD 0.9 m/s (with same bounds as before). Because of myelination, conduction velocities of inhibitory fibers are generally higher than for excitatory cells (Thomson et al. 2002). Lacking exact measurements in the literature for all inhibitory cells, we sampled the velocities from a distribution with mean and SD twice as high as for excitatory neurons in the deep layers (the enforced upper bound was set to 10 m/s).
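The distance-dependent part of the delay can be sketched as follows. How the bounds were enforced is not specified in the text; the sketch assumes rejection sampling (redrawing values outside the bounds), and clipping would be an equally plausible reading. Function names are hypothetical.

```python
import random


def sample_velocity(rng, mean_m_s, sd_m_s, lower=0.05, upper=5.0):
    """Draw a conduction velocity (m/s) from a bounded Gaussian.

    Assumption: out-of-bound draws are rejected and redrawn.
    """
    while True:
        v = rng.gauss(mean_m_s, sd_m_s)
        if lower <= v <= upper:
            return v


def conduction_delay_ms(distance_um, velocity_m_s):
    """Propagation delay in ms; 1 m/s equals 1000 um/ms."""
    return distance_um / (velocity_m_s * 1000.0)


rng = random.Random(0)
# Excitatory synapses in layer 2/3 (mean 0.3 m/s, SD 0.5 m/s).
upper_layer = [sample_velocity(rng, 0.3, 0.5) for _ in range(1000)]
# Excitatory synapses in the other layers (mean 1 m/s, SD 0.9 m/s).
lower_layer = [sample_velocity(rng, 1.0, 0.9) for _ in range(1000)]
# Inhibitory fibers: mean and SD doubled, upper bound 10 m/s.
inhibitory = [sample_velocity(rng, 2.0, 1.8, upper=10.0) for _ in range(1000)]

delay_1mm = conduction_delay_ms(1000.0, 1.0)
```

For example, a 1-mm connection conducted at 1 m/s adds 1 ms to the synaptic delay, whereas the same connection at the lower bound of 0.05 m/s adds 20 ms.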

Synaptic conductances.

A spike arriving at a synapse causes a change in the synaptic conductance in the postsynaptic neuron. The dynamics of the conductance depend on the receptor kinetics. Each excitatory synapse in our model contains α-amino-3-hydroxy-5-methyl-4-isoxazolepropionic acid (AMPA) receptors, which have relatively fast kinetics (modeled as exponential decay with time constant τAMPA = 5 ms, reversal potential 0 mV). A fraction fNMDA of all excitatory synapses additionally contain relatively slow N-methyl-d-aspartate (NMDA) receptors, which depend on the postsynaptic voltage (τNMDA = 150 ms, reversal potential 0 mV; Dayan and Abbott 2001; Gerstner and Kistler 2002), and therefore exhibit a superposition of conductance kinetics. The ratio of NMDA to AMPA receptors in a synapse was drawn from a Gaussian distribution with mean μNMDA/AMPA = 0.47 and SD σNMDA/AMPA = 0.2 μNMDA/AMPA (Myme et al. 2003).
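The superposition of fast and slow kinetics at a mixed excitatory synapse can be sketched as a sum of two exponentials. This is a simplified illustration: it interprets the NMDA-to-AMPA ratio as a ratio of peak conductances (an assumption) and omits the voltage dependence of the NMDA component, whose exact form is not given in the text. The function name is hypothetical.

```python
import math
import random


def excitatory_conductance(t_ms, w_ampa, nmda_ratio,
                           tau_ampa_ms=5.0, tau_nmda_ms=150.0):
    """AMPA and NMDA conductance components t_ms after a presynaptic spike.

    Returns (g_ampa, g_nmda); both are zero before the spike arrives.
    The NMDA voltage dependence is deliberately left out of this sketch.
    """
    if t_ms < 0.0:
        return 0.0, 0.0
    g_ampa = w_ampa * math.exp(-t_ms / tau_ampa_ms)
    g_nmda = w_ampa * nmda_ratio * math.exp(-t_ms / tau_nmda_ms)
    return g_ampa, g_nmda


# Per-synapse ratio: Gaussian with mean 0.47 and SD 0.2 * 0.47.
rng = random.Random(0)
ratio = max(0.0, rng.gauss(0.47, 0.2 * 0.47))

g_ampa_0, g_nmda_0 = excitatory_conductance(0.0, 1.0, 0.47)
g_ampa_50, g_nmda_50 = excitatory_conductance(50.0, 1.0, 0.47)
```

Right after the spike the AMPA component dominates, whereas 50 ms later almost all of the remaining conductance is carried by the slow NMDA component.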

Analogously, the inhibitory synapses were modeled as a mixture of GABAA and GABAB receptors. Whereas the GABAA kinetics were again modeled as a relatively fast exponential decay (τGABA-A = 5 ms, reversal potential −70 mV), the conductance kinetics of the GABAB receptors were implemented according to a model proposed by Destexhe et al. (1994) with parameter values taken from Thomson and Destexhe (1999) (reversal potential −90 mV). The GABAB-to-GABAA ratio of an individual inhibitory synapse was drawn from a uniform distribution between zero and a maximum ratio minh = 0.3.

INPUT MODEL.

The electrophysiological recordings were done during presentation of natural movies. Although our modeling effort was concentrated on the V1 model, we needed a sufficiently realistic transformation of movie stimulus to (V1 input) spike trains. Therefore the retina and the lateral geniculate nucleus (LGN) were modeled, according to Dong and Atick (1995), as a spatio-temporal filter bank with nonlinearities, which seems to be a good compromise between simplicity and realism (Gazeres et al. 1998). The filter bank converted time varying input signals on the retina, such as movies, into firing rates of LGN neurons. Note that this feedforward, rate-based model neglects any feedback connections from V1 to LGN (Callaway 1998). Moreover, we neglected that the ganglion cells typically react to color opponency rather than to pure luminance differences (Perry et al. 1984). Thus the color movie was converted to a grayscale movie.

Retina model.

The two-dimensional retinal inputs (movie frames) were filtered by “Mexican hat” (difference of Gaussians) spatial filters (Dong and Atick 1995; Enroth-Cugell and Robson 1966; Rodieck 1965). Filter sizes (describing the receptive fields of ganglion cells) were adapted to the geometry of parvocellular cells of macaque, where the SD of the Gaussian for center and surround were estimated to be σcenter = 0.0177° + 0.0019ε and σsurround ≈ 6.67 σcenter at eccentricity ε, respectively (in visual degrees; estimated from Fig. 4, A and B in Croner and Kaplan 1995). After the convolution of the stimulus luminance portrait with these kernels (yielding Scenter and Ssurround), the response of a retinal on-cell at visual field position r can be described by

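$$R_{\mathrm{ON}}(\mathbf{r}) = C(\mathbf{r})\,\bigl[S_{\mathrm{center}}(\mathbf{r}) - \omega\,S_{\mathrm{surround}}(\mathbf{r})\bigr]_{+} \qquad (2)$$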

Following Croner and Kaplan (1995), we set the ratio of center to surround ω = 0.642. The positive part of the center and surround interaction (indicated by the brackets […]+) was assigned to the response of an on-cell and, analogously, the absolute value of the negative part to the response of an off-cell (Dong and Atick 1995). For simplicity, we assumed that the origins of the center and surround summation fields are identical, although a recent study suggests that there might be an offset between them (Conway and Livingstone 2006).
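The receptive-field sizes and the on/off split described above can be sketched as follows. The sketch assumes that the response has the form of a local contrast multiplied by the rectified difference S_center − ω · S_surround, and that the off-cell response is scaled by the same contrast; the center and surround signals are taken as given numbers rather than computed by an actual image convolution. Function names are hypothetical.

```python
def sigma_center_deg(eccentricity_deg):
    """SD of the center Gaussian (visual degrees) at a given eccentricity."""
    return 0.0177 + 0.0019 * eccentricity_deg


def sigma_surround_deg(eccentricity_deg):
    """SD of the surround Gaussian, about 6.67 times the center SD."""
    return 6.67 * sigma_center_deg(eccentricity_deg)


def on_off_response(s_center, s_surround, contrast, omega=0.642):
    """Split the center-surround signal into on- and off-cell responses.

    The positive part drives the on-cell; the absolute value of the
    negative part drives the off-cell (assumed to share the contrast factor).
    """
    drive = s_center - omega * s_surround
    r_on = contrast * max(drive, 0.0)
    r_off = contrast * max(-drive, 0.0)
    return r_on, r_off


bright_center = on_off_response(s_center=1.0, s_surround=0.5, contrast=0.5)
dark_center = on_off_response(s_center=0.0, s_surround=1.0, contrast=1.0)
sigma_at_5deg = sigma_center_deg(5.0)
```

At any position only one of the two cell types responds: a bright center on a darker surround drives the on-cell, and the reverse configuration drives the off-cell.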

Fig. 4.

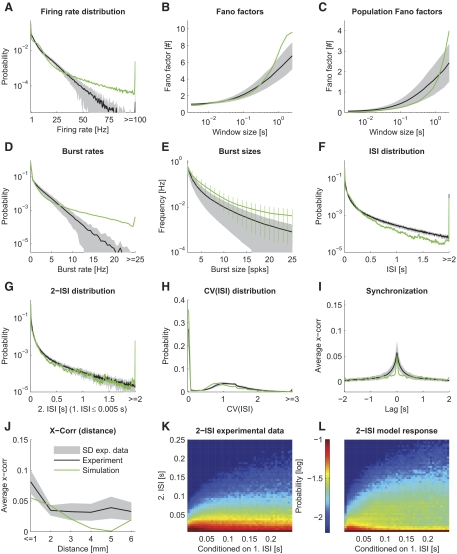

The spike statistics of the model in comparison to the statistics of the in vivo recordings. The means of the statistical features estimated on the in vivo data are plotted as black lines. The gray area indicates the SD between different sessions. We used a 25-s part of the movie “sw21” as stimulus for the model. The spike statistics of the simulated model with optimized parameters (adjusted relative weight scaling factors of the patchy lateral long-range connections and of the connections from excitatory to inhibitory neurons) are plotted in green. The goodness of fit is mean normalized deviation (MND) = 1.10. Each panel corresponds to a particular statistical feature analogous to Fig. 3. Note that the 2-ISI distributions (K and L) are plotted conditioned on the 1st ISI.

Applying the Difference-of-Gaussians model to the luminance of a stimulus results in a quantity called “contrast gain” (Croner and Kaplan 1995; Enroth-Cugell and Robson 1966; Rodieck 1965). To calculate the firing rate of ganglion cells, one has to multiply the “contrast gain” with the local contrast C(r) (as done in Eq. 2) if one neglects nonlinear saturation in the high contrast regimen that is typically not reached for the natural stimuli we used here. Locality is important because the concept of a global contrast, easily defined for full-field grating stimuli commonly used in experiments, is not applicable for real world images and movies (Tadmor and Tolhurst 2000). Following Tadmor and Tolhurst (2000), we estimated the local contrast using the same kernels as

| (3) |

where we additionally set the contrast to be zero in the case of darkness. Note that applying Eq. 3 results in a response RON(r) that is sparser than for a constant global contrast, because the response is now quadratic in the center and surround luminance difference (see Eq. 2).

LGN model.

The retinal output was filtered by the LGN model using a temporal kernel. The temporal kernel combines a phasic (taken from Dong and Atick 1995) and a tonic component (as in Gazeres et al. 1998), i.e., kLGN = kphasic + ktonic. For nonnegative times, the two components are

| (4) |

and

| (5) |

Parameter A = 0.3 is the fraction of tonic activation (with respect to the peak firing rate) for a given stimulus, integrated over a time window of τ = 15 ms. Parameter ωc = 5.5 s−1 defines the shape of the phasic kernel (Dong and Atick 1995).

The positive parts and the absolute values of the negative parts of the temporal convolutions were assigned to nonlagged and lagged cells, respectively. Altogether, there are four different time-varying rate outputs, i.e., that of any combination of nonlagged or lagged cells in the LGN with either on- or off-cells from the retina (Dong and Atick 1995). Following Gazeres et al. (1998), a so-called “switching Gamma renewal process” was used to convert these time-varying rates to spike trains. This process, which was suggested to fit experimental data from cat LGN X-cells (Gazeres et al. 1998), adopts a higher spike time regularity for high input rates (≥30 Hz; regularity parameter r = 5) and switches to a Poisson process for low rates (<30 Hz). The spontaneous background activity of each LGN neuron was set to a low value of 0.15 Hz. The peak LGN spike rate fmax was adjusted to achieve a mean firing rate of about 7 Hz under movie stimulation when the four input channels were combined. The 7-Hz mean rate was estimated from our electrophysiological data from macaque monkeys. Applying a typical 50-s movie section, we found that a mean rate of 7 Hz was achieved for fmax = 250 Hz. The peak response would be evoked by a dot of highest contrast filling the center region of a ganglion cell with optimal duration. This value is in good agreement with Gazeres et al. (1998), who reported peak rates in the range of 50–400 Hz.
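
The conversion from a time-varying rate to spikes can be illustrated with a minimal sketch. It assumes a time-rescaling scheme in which the shape of the next interval is chosen from the rate at the moment of the previous spike; the function name `switching_gamma_spikes`, the 1-ms bin width, and this particular switching rule are illustrative assumptions, not the implementation of Gazeres et al. (1998) or of the model described here.

```python
import random

def switching_gamma_spikes(rates, dt, r_high=5, switch_hz=30.0, seed=0):
    """Sketch of a switching Gamma renewal process (assumed scheme).

    rates: firing rate in Hz for each time bin; dt: bin width in s.
    Intervals are drawn in rescaled time (unit mean): Gamma with shape
    r_high when the rate is >= switch_hz, exponential (Poisson) otherwise.
    At most one spike is emitted per bin.
    """
    if not rates:
        return []
    rng = random.Random(seed)

    def draw(rate):
        shape = r_high if rate >= switch_hz else 1
        # gammavariate(shape, scale); scale = 1/shape gives unit mean
        return rng.gammavariate(shape, 1.0 / shape)

    spikes = []
    acc = 0.0                      # integrated rate since the last spike
    threshold = draw(rates[0])
    for i, rate in enumerate(rates):
        acc += rate * dt
        if acc >= threshold:
            spikes.append((i + 1) * dt)
            acc -= threshold       # carry over the overshoot
            threshold = draw(rate)
    return spikes
```

With a constant rate of 100 Hz this sketch yields interspike intervals with a coefficient of variation near 1/√5, whereas at 10 Hz the intervals are close to exponentially distributed.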

Input connectivity to V1.

The visual field is retinotopically arranged on the cortical surface. However, although there exists only one retinal ganglion cell per LGN cell corresponding to the same visual field position at all eccentricities in macaque, there is a considerable magnification in density of cortical neurons in V1 per degree of visual field (Schein and de Monasterio 1987; Tootell et al. 1982). Comparing several earlier studies, Schein and de Monasterio (1987) estimated the cortical magnification factor (CMF) at eccentricity ε to be (in mm cortex per degree of visual field)

| (6) |

This definition of the cortical magnification factor (Eq. 6) is very convenient: for a fixed eccentricity and distance between adjacent neurons (grid spacing), one can calculate the lateral extent of the network needed to cover a given visual field size. Note, however, that this estimate is only useful when the lateral extent of the network model can be regarded as small compared with the variation in lateral cell density.

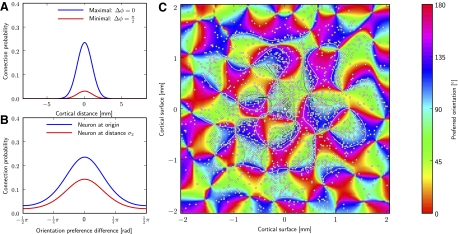

LGN neurons belonging to the parvocellular pathway typically project to layer 4Cβ of V1. There is still an ongoing debate about the extent to which oriented input shapes the orientation selectivity of neurons in the primary visual cortex and the extent to which local cortical processing is involved (see Teich and Qian 2006 for a review). In macaques, orientation selectivity is thought to arise from the interaction of cells with gradually shifted input characteristics across the sublaminae of layer 4C (Callaway 1998; Lund et al. 2003), whereas the inputs to a single cell might not be oriented in macaques as has been suggested for the cat (Hubel and Wiesel 1977). However, because we did not model sublaminae, we simplified the circuitry by nevertheless assuming that input connections to each neuron generate orientation tuning. This allows the definition of orientation maps in a straightforward “hard-wired” manner in our model. We used Kohonen's Self-Organizing Map algorithm (Kohonen 1982), which is known to generate orientation maps with good correspondence to V1 orientation maps (Brockmann et al. 1997; Erwin et al. 1995; Obermayer and Blasdel 1993; Obermayer et al. 1990, 1992), to create orientation maps across the cortical surface. See Fig. 1C for a typical orientation map generated by this algorithm (see APPENDIX A for details on the implementation of the algorithm).

Fig. 1.

Long-range connectivity of the V1 model. Long-range patchy connectivity of an example neuron implemented in a model circuit having 165 × 165 × 3 neurons in layer 2/3 positioned on a cuboid grid with a spacing of 25 μm. (Note that these dimensions differ from those used in the simulations of RESULTS; they are used here for better visualization.) A and B: conditional probability that the neuron (marked with a white square in the center of C) is connected to a neuron having lateral distance r or orientation selectivity φ, respectively. The connection probability to a postsynaptic neuron at 0 lateral distance and same orientation preference was scaled to experimental data (≈0.24%; Thomson et al. 2002). Blue and red curves show the connection probabilities for neurons that have aligned or orthogonal preferred orientation to the presynaptic neuron, respectively. C: connections established according to the probability distributions for a presynaptic neuron in the origin of the circuit (white square). Small white dots represent lateral positions of postsynaptic neurons. Colors code for orientation tuning of a neuron (generated by a self-organizing map). The conditional connection probabilities are indicated by contour lines. Note that the connection probability rises for regions with similar orientation as the presynaptic neuron (∼90°), thereby generating a patchy appearance. Only the oriented (long-range) part of Eq. 1 (2nd term) is used for establishing connections in this example plot. However, because the weighting factor is high (m = 10; see Eq. 1), only very few local connections will be added when considering both terms in the simulations. The orientation map additionally determines the orientation of thalamic input connections.

Based on the generated orientation preferences for each cortical position, the thalamic input connection probability to a cell in the circuit could thus be modeled as an oriented Gabor function, i.e., a two-dimensional Gaussian multiplied by a cosine function. The absolute value of the Gabor function corresponds to the connection probability of LGN neurons with a cortical cell positioned at the cortical equivalent position of the origin of the Gabor patch in the visual field. Positive and negative regions correspond to the connection probabilities of LGN on- and off-response cells, respectively. Lagged and nonlagged cells were equally likely to connect to cortical cells. Following Troyer et al. (1998), we expressed the Gabor function in parameters defining the number of subregions ns, the aspect ratio of the width and the height of the Gaussian envelope a, the orientation φ, the offset of the cosine ψ, and the frequency of the cosine f. Given these parameters, one calculates the SD of the Gaussian envelope as (Troyer et al. 1998)

| (7) |

while using coordinates rotated by φ. The advantage of using these parameters is that the frequency defines implicitly the size of the Gabor patch while the number of subregions is kept constant. Therefore the receptive fields of macaque V1, which are much smaller than those of the cat, can be easily included in this framework. We used data from Bredfeldt and Ringach (2002) and chose the frequency f from a Gaussian distribution with a mean of 3.7 deg−1 and a SD of 2.1 deg−1 (with an enforced minimum of 0.7 deg−1 and maximum of 8.0 deg−1). The number of subregions ns and phase shifts ψ were drawn from uniform distributions with ranges of (1.85, 2.65) and (0,2π), respectively (experimental values from cat as in Troyer et al. 1998).

To incorporate the smooth maps of preferred orientation φ and orientation preference q depending on cortical position u (see APPENDIX A), we set φ = φ(u) and the aspect ratio to a(u) = (amax − amin) q(u) + amin. We used values reported by Troyer et al. (1998) for the bounds amin = 3.8 and amax = 4.54 for excitatory neurons and for the generally less well-tuned inhibitory neurons, amin = 1.4 and amax = 2.0.
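
The position-dependent aspect ratio is a linear interpolation between the two bounds. The following sketch illustrates the arithmetic, assuming that q(u) is normalized to the interval [0, 1] (the exact range is defined in APPENDIX A and is taken as an assumption here); the function name `aspect_ratio` is hypothetical.

```python
def aspect_ratio(q, excitatory=True):
    """Aspect ratio a(u) = (a_max - a_min) * q(u) + a_min.

    q is the local orientation preference strength, assumed here to lie
    in [0, 1]. Bounds are the values quoted from Troyer et al. (1998).
    """
    a_min, a_max = (3.8, 4.54) if excitatory else (1.4, 2.0)
    return (a_max - a_min) * q + a_min
```

Under this assumption, a weakly tuned map position (q close to 0) receives the lower bound, and a strongly tuned position (q close to 1) receives the upper bound.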

Last, the overall connection probability defined by the Gabor functions was scaled to achieve an average number of 24 input synapses for both excitatory and inhibitory neurons, which is the estimated number of parvocellular afferent connections per cortical neuron in layer 4C of macaques (Peters et al. 1994). There is evidence that layer 6 receives occasional collaterals of the LGN input to layer 4 (Callaway 1998). Thus we set the connection probability to excitatory neurons in layer 5 (comprising layer 5 and layer 6 in our model) to 20% of that of the input to layer 4. These values are in good agreement with the data from Binzegger et al. (2004) estimated from cat. In macaques, layer 2/3 receives only koniocellular input (Callaway 1998). Because we omitted the koniocellular pathway in our model, layer 2/3 did not receive any thalamic input.

Because of finite conduction velocities of the fibers, signals from the retina reach V1 with a characteristic delay of about 30 ms (Maunsell et al. 1999). We sampled the delay of the LGN input synapses from a Gaussian distribution with mean 31 ms and SD 5 ms; delays falling below 24 ms or above 50 ms were reset to a value drawn uniformly from this range. These values were taken from Fig. 3 of Maunsell et al. (1999).
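
The delay sampling can be sketched as follows. The sketch assumes that out-of-range draws are replaced by a uniform draw from 24 to 50 ms, which is one reading of the procedure; the function name `sample_lgn_delay_ms` is hypothetical.

```python
import random

def sample_lgn_delay_ms(rng, mean=31.0, sd=5.0, lo=24.0, hi=50.0):
    """Draw one LGN-to-V1 synaptic delay in ms.

    Gaussian draw; values outside [lo, hi] are replaced by a uniform
    draw from [lo, hi] (assumed interpretation of the text).
    """
    delay = rng.gauss(mean, sd)
    if delay < lo or delay > hi:
        delay = rng.uniform(lo, hi)
    return delay

rng = random.Random(0)
delays = [sample_lgn_delay_ms(rng) for _ in range(1000)]
```

Because the lower bound lies only 1.4 SD below the mean, a noticeable fraction of draws is redistributed uniformly, which shifts the resulting mean delay slightly above 31 ms.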

Fig. 3.

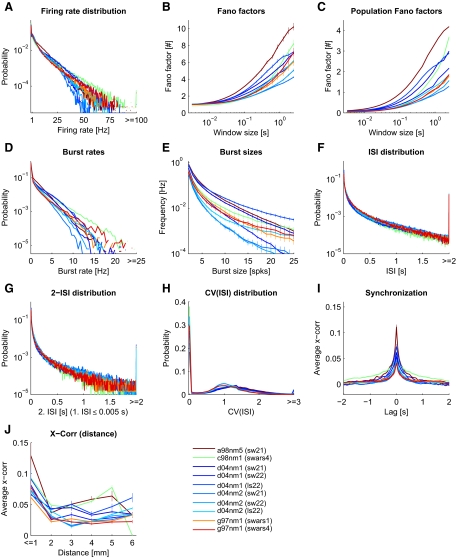

Spike statistics of the experimental data. Each panel corresponds to a particular statistical feature (see APPENDIX B for exact definitions). Data from 10 experimental sessions (from 4 monkeys) during movie stimulation are plotted separately in color code (1st monkey, brown; 2nd monkey, green; 3rd monkey, shades of blue; 4th monkey, shades of red). In the legend, the 1st 3 letters of a session code indicate the animal, and the 2nd 3 letters indicate the recording session. The shown segment of the presented movie (Star Wars) is indicated in brackets. All available trials are included. Values greater than the plot limits were included in the last bin (where applicable), resulting in a disproportionately large probability in the last bin. G: the conditional ISI distribution given that the 1st ISI is <5 ms. The full distribution is shown in K and L.

Top-down connections.

In addition to the thalamic input, V1 neurons receive multiple feedback connections from extra-striate cortical areas (Felleman and Essen 1991), especially from V2, where the feedback connections are almost as numerous as the feed-forward connections (see Sincich and Horton 2005 for a review). Feedback projections predominantly project to targets in the upper layers but also to layer 5 (Rockland and Virga 1989; Sincich and Horton 2005).

Because our model is restricted to V1 and we do not have any recordings from V2 available, we decided to not include any top-down input stream explicitly. However, implicitly, additional input to V1 neurons is included by modeling the “high conductance state” of each neuron, which reflects the synaptic background input arriving from distal neurons (Destexhe et al. 2001).

Comparing the V1 model to electrophysiological data

SETUP OF THE STIMULUS TO THE MODEL.

The stimulus presented to the V1 model during simulation resembled the one presented to the monkeys. We used a 10-s fragment of one of the movie segments (sw21) shown during the electrophysiological recordings as input movie for the model. However, modeling the whole 10 × 7° visual field was not feasible because of computational costs. Therefore we trimmed the movie frames to a smaller size, covering 3 × 3° of the visual field. The center of the extracted region was aligned to the center of a receptive field of one of the electrodes (channel 7) of a particular session (“d04nm1”). Because the diameter of the receptive field of that electrode was experimentally determined to be 1.2°, the reduced stimulus should at least contain all direct input information available for neurons recorded by that electrode. On the retina, this receptive field was centered at (0.69, −2.39°) eccentricity relative to the fovea. In the model, we nevertheless set the eccentricity to 5°, because otherwise the lateral extent (and therefore the number of neurons in the model) per visual degree would be prohibitively large (cf. Eq. 6). At 5° eccentricity, a V1 model covering 2.4 × 2.4° has a lateral extent of 5 × 5 mm cortical surface, and neurons are positioned on a virtual grid of size 62 × 62 × 9 if one assumes a lateral grid spacing of 80 μm. Vertically, the grid spacing corresponds to 200 μm. The visual field covered by the V1 model is somewhat smaller than the stimulus to avoid boundary effects in the input connectivity. For analogous reasons, the LGN neurons were set to cover an intermediate area of 3 × 3° (77 × 77 grid).
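
The grid dimensions follow from simple arithmetic, sketched below. The split into three vertical grid sheets per layer is an inference from the per-layer neuron count given in the caption of Fig. 2, not something stated explicitly in the text; all variable names are illustrative.

```python
# Lateral extent and grid spacing quoted in the text, in micrometers.
lateral_extent_um = 5000
lateral_spacing_um = 80
vertical_sheets = 9
model_layers = 3  # layers 2/3, 4C, and 5-6 (assumed equal number of sheets each)

# Number of grid points per lateral dimension (rounded down).
n_lateral = lateral_extent_um // lateral_spacing_um

# Total number of neurons and neurons per model layer.
n_total = n_lateral * n_lateral * vertical_sheets
n_per_layer = n_total // model_layers

print(n_lateral, n_total, n_per_layer)
```

The total is consistent with the roughly 35,000 neurons stated in the abstract, and the per-layer count matches the 11,532 neurons per layer mentioned in the caption of Fig. 2.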

ESTIMATING THE RELATIVE STRENGTH OF THE THALAMIC INPUT.

In the recorded spike trains, the mean firing rate of multiple trials (5-min duration) across monkeys and V1 electrode channels was on average 5.1 (4.8) Hz (SD) during movie stimulation and 1.9 (3.3) Hz during spontaneous activity (blank screen). Thus one could state that, because of the thalamic input, the mean firing rate of the circuit increases by about 3 Hz. From simultaneous extracellular recordings in LGN, we analogously find a mean firing rate of 7.1 (2.9) Hz during visual stimulation and 4.4 (2.1) Hz during absence of visual stimulation. Hence, in the LGN the movie stimulus increases the mean firing rate by about 60% of the spontaneous activity.

We used these values to determine the synaptic input weight scale (WIn,scale), i.e., the scaling factor of the peak conductances originating from LGN neurons, in the following manner: In the absence of all intercortical connections, the weight scaling factor of the input stream was set to the value achieving the closest match to a given target mean firing rate in each neuron population (minimal Euclidean distance). Assuming that the main input drive to V1 (during visual stimulation) is from the thalamus, we set the target mean rate for the circuit to 2 Hz, which roughly corresponds to the activity increase seen during visual stimulation in our experimental data.

EVALUATING THE DEVIATION BETWEEN MODEL RESPONSE AND IN VIVO RECORDINGS.

To compare the firing regimen of the model with that of the in vivo recordings, we evaluated the discrepancy between a set of 10 statistical features calculated from the model response and the recorded spike trains (see APPENDIX B). After estimating a statistical feature on the experimental data and the model response, their deviation was calculated using the Kullback-Leibler divergence or the mean squared error, depending on whether the feature resulted in an estimated probability distribution or not, respectively. This deviation was normalized by the average deviation seen in this feature when tested between any two experimental sessions (different monkeys or different movie stimuli). We call this experimental data weighted deviation the normalized deviation (ND) for each statistical feature. We report the normalized deviation averaged across all statistical features as a measure for the goodness of fit and abbreviate it in the following as mean ND (MND).

Note that by construction an MND value of 1 indicates that the deviation between the model response and the in vivo data (average over all sessions) equals (on average over the 10 statistical features) the average deviation between individual experimental sessions. We used only one model random seed for the evaluation of the fitting error for each parameter setting to reduce computational costs.
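
The structure of this measure can be sketched as follows. The sketch assumes that the model feature is compared with the session-averaged feature, that each unordered pair of sessions enters the normalization once, and that the Kullback-Leibler divergence is taken in one fixed direction with a small regularizer; these choices, like all function names, are illustrative assumptions and may differ from the definitions in APPENDIX B.

```python
import math
from itertools import combinations

def kl_divergence(p, q, eps=1e-12):
    """KL divergence D(p || q) between two histograms (normalized here)."""
    sp, sq = sum(p), sum(q)
    return sum((a / sp) * math.log((a / sp + eps) / (b / sq + eps))
               for a, b in zip(p, q) if a > 0)

def mse(x, y):
    """Mean squared error between two equally long feature vectors."""
    return sum((a - b) ** 2 for a, b in zip(x, y)) / len(x)

def normalized_deviation(model, sessions, is_distribution):
    """Model-to-data deviation divided by the mean session-to-session deviation."""
    dev = kl_divergence if is_distribution else mse
    mean_feature = [sum(col) / len(sessions) for col in zip(*sessions)]
    between = [dev(a, b) for a, b in combinations(sessions, 2)]
    return dev(model, mean_feature) / (sum(between) / len(between))

def mean_normalized_deviation(features):
    """features: list of (model, sessions, is_distribution) tuples."""
    nds = [normalized_deviation(m, s, d) for m, s, d in features]
    return sum(nds) / len(nds)
```

In this form, a feature that varies strongly between sessions has a large denominator and therefore contributes less to the MND than a feature that is conserved across sessions.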

To compensate for a lack of synaptic drive because of a much smaller neuron density in the model compared with reality, we introduce two scaling parameters WIn,scale and Wscale. The WIn,scale parameter, a multiplicative factor applied to all weights of the input connections, was set by a heuristic approach to approximately match the input strength observed in the experiments.

The second scaling parameter, the weight scale parameter Wscale, accounts for the recurrent synaptic drive adjustments and is a multiplicative factor applied to all recurrent weights. As this parameter is inherent to the model design, it cannot be constrained by literature values. Therefore to estimate the weight scale parameter Wscale, we used the value that minimizes the deviation of the model firing response statistics to the “statistical fingerprint” of the firing regimen of the in vivo recordings. To measure its deviation, we used the MND as described above. We restricted the analysis to the response of excitatory neurons only, because we expect that, because of the generally larger size of excitatory neurons, the experimental recordings were strongly biased toward spikes originating from excitatory cells.

SIMULATION TECHNIQUES.

All simulations were performed using the PCSIM simulation environment (Pecevski et al. 2009). It takes about 5 h on a quad core machine (2,664 MHz) to simulate the described model for 10 s of biological time (depending on the mean firing rate). All simulations were performed in a distributed fashion on a cluster of 30 such quad core machines.

RESULTS

We first established the “statistical fingerprint” of the spiking activity of the primary visual cortex (V1) under naturalistic stimulus conditions in vivo. The extracted statistical features provided the grounds for comparison with the simulated firing response of a detailed circuit model. Because we hypothesized that V1 works in a characteristic firing regimen favorable for its ongoing computations, we were particularly interested in features possibly characterizing a computationally advantageous regimen. For instance, such a regimen might consist of highly irregular firing and low correlations between neurons (Brunel 2000; Legenstein and Maass 2007). We therefore extracted 10 salient statistical features, which are sensitive to various aspects of the spiking response, such as response strength, response variability, spike correlations, bursting behavior, and the possible usage of spiking codes with nonlinear dependencies on consecutive spike intervals (see APPENDIX B for exact definitions).

Statistical characterization of the spike response in vivo to movies in monkey area V1

We first analyzed electrophysiological recordings from V1 of anesthetized macaque monkeys during stimulation with natural movies. The data comprised spike responses measured in 10 sessions (from 4 anesthetized macaque monkeys), each with 12–40 repeated presentations of a movie of 3.5–6 min length. In Fig. 2, D and E, typical spiking responses of selected neurons are depicted. We characterized the firing statistics of these experimental data using a set of 10 statistical features (Fig. 3). The same set of features was also used to characterize the model response as described below (Fig. 9). We found that spike responses of V1 under naturalistic stimulus conditions were typically highly variable over time and showed moderately low correlations between different neurons, with a smooth fall-off for long time lags. Firing rate and burst rate distributions followed exponential distributions, whereas burst size frequencies and ISI distributions exhibited a power-law structure. This general picture is consistent with previously published values.

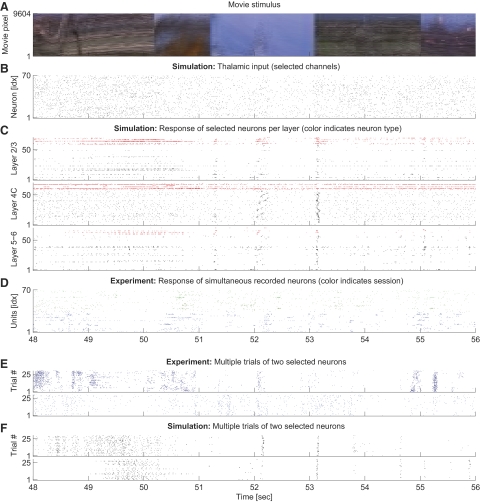

Fig. 2.

Spiking response to movie stimulus in model simulation and in vivo. A: movie input to the model circuit in true colors (in the model, we used a grayscale version of this movie). Pixels of the movie frames are lined up vertically. B: lateral geniculate nucleus (LGN) model response to the movie in A. Seventy input channels were randomly selected for plotting (in total, there are 4,900 LGN inputs). C: spike trains elicited by neurons in the V1 model in response to the LGN output from B are plotted in separate panels for each of the layers 2/3, 4C, and 5–6. For better visualization, 70 neurons (of 11,532) are randomly chosen from each layer. Inhibitory and excitatory neurons are colored red and black, respectively. Note the high variability in the statistical structure across neurons. D: spike trains of the spike-sorted experimental data in response to the same movie segment are shown. Different colors represent different sessions of the same monkey: green (blue), 2 trials of session d04nm1 (d04nm2). We show 2 trials to allow for an easier comparison of the statistical structure of the spike trains in vivo with the model response (C). Note that the receptive fields of some electrode channels lie outside of the depicted movie region of A. E and F: multiple trials of 2 selected neurons in experiment (E) and model (F). Note that trial-to-trial variability is comparable in silico and in vivo.

In detail, the exponentially distributed firing rates (Fig. 3A) exhibited exponents varying between monkeys and experimental trials in the range of −2.35 to −0.23 s (mean −0.81 s, SD 0.62 s). The overall mean firing rate of the experimental data averaged over the different sessions was 5.06 ± 0.75 (SD) Hz. The exponential distribution of firing rates is consistent with results from the V1 of cats (Baddeley et al. 1997).

Spike train variability is generally high. We tested for the variability in the spiking response using the distribution of Fano factors of individual neurons for multiple time scales (Teich et al. 1997) and the Fano factor of the network population spike response, which measures the response variability of the population code. For individual neurons (Fig. 3B), the Fano factor approached 1 on average for small window sizes on the order of 10 ms, indicating a Poisson process with stationary rates. On larger time scales, however, the Fano factor increased. This increase in variability could reflect the internal dynamics but might be partly induced by the movie stimulus, whose mean brightness often varies on a time scale on the order of seconds.

The population Fano factor (Fig. 3C), measuring the response variability of the neuron population, showed a similar time window dependence as the Fano factor of individual neurons. However, the absolute value of the population Fano factor was markedly smaller, indicating that the population response was less variable over time on short time scales. On a longer time scale, however, the Fano factor of the population response increased, indicating that the neurons in the recorded population tend to be active or silent together. This might hint at population burst-like activity, also evident when examining the concrete spike trains in the recordings (see Rasch et al. 2008 for a discussion of how these clusters of spikes relate to the local field potential fluctuations in the same data).
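
For illustration, the Fano factor for a given window size can be computed as the variance of the spike counts divided by their mean. The sketch below assumes non-overlapping windows and the population variance; the exact estimator is defined in APPENDIX B, and the function name `fano_factor` is hypothetical.

```python
def fano_factor(spike_times, window, duration):
    """Variance-to-mean ratio of spike counts in non-overlapping windows.

    spike_times, window, and duration share the same time unit.
    Returns NaN if no spike falls into any window.
    """
    n_bins = int(round(duration / window))
    counts = [0] * n_bins
    for t in spike_times:
        b = int(t / window)
        if 0 <= b < n_bins:
            counts[b] += 1
    mean = sum(counts) / n_bins
    if mean == 0:
        return float("nan")
    var = sum((c - mean) ** 2 for c in counts) / n_bins
    return var / mean
```

A perfectly regular train with one spike per window gives a Fano factor of 0, a stationary Poisson process gives a value near 1, and clustered firing gives larger values as the window grows. Pooling the spike times of all recorded neurons before counting gives a population version of the same quantity.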

The high variability of the in vivo data was also evident from the coefficient of variation of the interspike intervals [CV(ISI)], plotted in Fig. 3H. High probabilities were typically found for CV(ISI) values above 1, indicating a high variability in the spike response. Such a high peak value is consistent with previously published data (Holt et al. 1996; Shadlen and Newsome 1998; Softky and Koch 1993; Stevens and Zador 1998).
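
As a brief illustration, the CV(ISI) of a single spike train is the SD of its interspike intervals divided by their mean. The sketch below uses the population SD, which is an assumption; the function name `cv_isi` is hypothetical.

```python
def cv_isi(spike_times):
    """Coefficient of variation of the interspike intervals of one train."""
    times = sorted(spike_times)
    isis = [b - a for a, b in zip(times, times[1:])]
    if len(isis) < 2:
        return float("nan")
    mean = sum(isis) / len(isis)
    var = sum((x - mean) ** 2 for x in isis) / len(isis)
    return var ** 0.5 / mean
```

A Poisson process has a CV(ISI) of 1, so values above 1 indicate firing that is more irregular than Poisson, for instance because of bursts separated by long pauses.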

Spike bursts, i.e., abrupt events of high spiking activity, have been suggested to be an important aspect of neuronal coding of information. For instance, bursts might convey additional and independent information about the sensory inputs (Cattaneo et al. 1981; Lisman 1997). Thus we included two statistics to measure the occurrence of bursts in neuron spike trains, a feature rarely examined in the literature. Figure 3D shows the burst rate distribution, measuring the frequency of spiking events having at least two spikes within an (average) ISI of 5 ms. Figure 3E shows the average burst rate for different sizes of bursts (see APPENDIX B for exact definitions). Qualitatively, the burst rate distributions of different sessions looked similar, having exponential distributions. The exponent varied in the range of −5.07 s to −3.18 s (mean −4.54 s, SD 0.56 s). The average burst rate of all experimental data was 0.51 ± 0.17 (SD) Hz. However, in some sessions, there was a deviation from the exponential distribution and higher burst rates occurred more often than expected. Figure 3E shows that the burst rate as a function of the burst size can be described by a power law (a straight line in a log-log plot). We found exponents in the range of −3.52 to −2.29 (mean −2.88, SD 0.43).
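
Because a power law appears as a straight line in a log-log plot, its exponent can be estimated as the slope of a linear fit to the log-transformed data. The sketch below uses an ordinary least-squares fit; the fitting procedure actually used for the reported exponents is not stated here, so this is only one plausible choice, and the function name `loglog_slope` is hypothetical.

```python
import math

def loglog_slope(x, y):
    """Least-squares slope of log(y) versus log(x); nonpositive points are skipped."""
    pts = [(math.log(a), math.log(b)) for a, b in zip(x, y) if a > 0 and b > 0]
    n = len(pts)
    mx = sum(p[0] for p in pts) / n
    my = sum(p[1] for p in pts) / n
    sxx = sum((p[0] - mx) ** 2 for p in pts)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in pts)
    return sxy / sxx

# Synthetic burst rates that follow an exact power law of the burst size.
sizes = [2, 3, 4, 5, 6]
rates = [s ** -2.88 for s in sizes]
exponent = loglog_slope(sizes, rates)
```

On the synthetic data the fit recovers the exponent used to generate it. On measured histograms, empty bins have to be excluded (as done here by skipping nonpositive values), and the estimate depends on the chosen range of burst sizes.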

It is conceivable that a certain ISI distribution might be characteristic for the firing regimen of the cortex. ISI distributions (Fig. 3F) were very similar for different monkeys and different sessions. There was a high probability for the occurrence of long ISIs. The distribution of ISIs similarly followed a power law with an average exponent of −1.20 ± 0.19 (range from −1.47 to −0.98).

Additionally, to account for any local temporal correlations in the spike timings, we also estimated the two-ISI distribution, which is a two-dimensional distribution of the joint event of one ISI and the immediately following ISI (Fig. 3K). The ISI distribution for the following ISI when conditioned on a very short current ISI had a similar power-law shape as the marginal ISI distribution (Fig. 3G), although the occurrence of a short ISI following a short ISI was more likely. Similar to the full ISI distribution, we found a relatively low variability across sessions and monkeys.

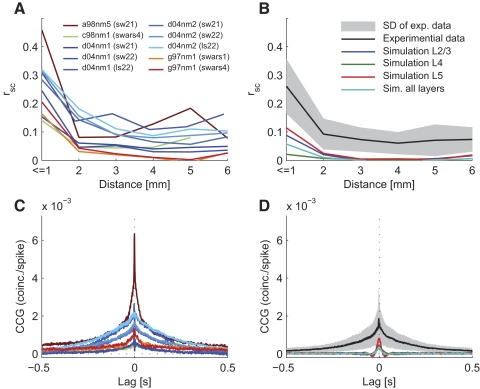

In general, we found that two neurons in V1 were on average correlated for lags up to about 250 ms having moderately low peak correlations. Other studies also reported low (signal) correlations between pairs of neurons for naturalistic stimuli (Reich et al. 2001; Yen et al. 2007) and even lower correlations in awake animals (Vinje and Gallant 2000). To be able to better compare our data to the literature, we calculated the shift-corrected cross-correlogram (Bair et al. 2001; Kohn and Smith 2005; Smith and Kohn 2008) and the noise correlations (rsc; Ecker et al. 2010) and found that the correlation structure in our data agreed very well with that of Smith and Kohn (Fig. 8). The strength of correlations however depended on the monkey and movie stimuli (Fig. 8, A and C). The mean value across all sessions was rsc = 0.26 ± 0.03 (SE) for neuron clusters nearer than 1 mm.

Fig. 8.

Correlation structure in the model and in vivo. For better comparability with literature values, correlations were plotted in terms of noise correlations (A and B) and shift-corrected cross-correlograms (CCG; C and D). Sessions of experimental data were plotted as in Fig. 2 (A and C). B and D: the corresponding statistics calculated on the model response (for optimized parameter settings). The correlation structure in the model response was calculated on each layer separately, showing a systematic difference in the strength of correlation in each layer (no layer information was available for the in vivo data). Correlations in the model were generally lower than in the in vivo data.

We further analyzed the cross-correlation for pairs of neurons as a function of their distance (Figs. 3J and 8A). In agreement with others (Smith and Kohn 2008), the cross-correlation was higher for neurons (clusters) recorded by the same electrode and decreased for longer electrode distances, where the correlation remained on a low level.

In summary, we computed a set of statistical features characterizing the “statistical fingerprint” of the spiking activity under seminatural movie stimulus conditions in vivo. Certain features of the obtained fingerprint, namely the high variability of ISIs, low cross-correlation, and the power-law distributions of burst events, suggest that V1 during movie stimulation might indeed reach an operating state that is favorable for recurrent neural networks performing computational tasks. The results presented here agree in general with published literature. However, because we characterized the firing regimen not only by a small set of mean values but instead by 10 different functions (or estimated probability distributions), we were able to quantify the deviation of the firing regimen of a simulated model from that exhibited in vivo in great detail. Moreover, the dataset provided us with the unique possibility to test the importance of physiologically meaningful parameters for optimizing the model response to closely reach a realistic state.

Quantification of the discrepancy between the firing regimen of a model for a patch of V1 and the firing state exhibited in vivo

Having characterized the V1 in vivo recordings, we proceeded with characterizing the simulated responses of the circuit model of V1 in silico. The V1 model was based on anatomical and physiological details of macaque monkeys and was built to model the neural activation in a 5 × 5-mm cortical patch of V1 (see methods for a detailed description of the V1 model). We simulated the model and recorded the spiking activity in response to 10 s of a typical movie segment (sw21) that had also been used for in vivo recordings.

Differences in the firing regimen in silico and in vivo were quantified by estimating the deviations in all statistical features. We calculated the MND between the model and the in vivo response (see methods for definitions). Note that MND = 1 indicates that the deviation of the model response to the mean response over all sessions equals the mean deviation between all pairs of sessions. Our measure thus relates to the deviation among individual experimental sessions. Moreover, the MND weights the importance of each statistical feature in a manner that features showing a high variability between experimental sessions are deemed less important and those features conserved across sessions are emphasized.

By setting parameters of the model to values derived from the literature (Table 1) and minimizing the fitting error with respect to the overall recurrent connections weight scale, which is inherent to the model design (Wscale; see methods), we found a mean normalized deviation of MND = 1.91 ± 0.01 (mean ± SE over identical networks and input but different random seeds for the statistical evaluation), indicating that the deviation is on average about twice as high as between experimental sessions and monkeys.

Table 1.

Parameters investigated for their optimization potential, together with their standard values (pst)

| | Parameter | Standard Value | Reference |
|---|---|---|---|
| p1 | Noise level scale | 1.0 | (Destexhe et al. 2001) |
| p2 | Fraction of synapses with NMDA | 0.9 | |
| p3 | NMDA-AMPA ratio | 0.47 | (Myme et al. 2003) |
| p4 | Width of inh. connections, μm | 150.0 | (Lund et al. 2003) |
| p5 | Max. fraction of GABAB conductance | 0.3 | |
| p6 | Inh. to exc. connections weight scale | 1.0 | (Thomson et al. 2002) |
| p7 | Exc. to inh. connections weight scale | 1.0 | (Thomson et al. 2002) |
| p8 | Long-range weight scale | 1.0 | |

Standard values for 5 of the parameters could be extracted from the literature. If no reference is given, the standard value was chosen heuristically.

Because we presented complex movie stimuli, it is not clear whether the firing regimen of the model was indeed generated by internal dynamics or was instead solely induced by the statistics of the input. To test the possibility of induced dynamics, we calculated the MND on the input spike trains generated by the LGN model (omitting the now meaningless lateral cross-correlation feature) and found a value of MND = 2.49. This value is considerably higher than for the model response. We repeated the statistical analysis for the model network after abolishing all recurrent connections, leaving only the input connections intact. By varying the strength of the synaptic input connections, we found a minimal value of MND = 4.37. Thus the fit of the firing statistics was much worse than with intact recurrent connections, implying that the recurrent dynamics of the network indeed shaped the firing response.

We concluded that, by simply optimizing an overall scale parameter (Wscale), the model dynamics shifted the statistical response properties toward those of the in vivo response. However, deviations from the realistic firing regimen in vivo were still considerable.
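The relation between the per-feature normalized deviation (ND) and the MND used throughout this section can be sketched as follows. This is a minimal illustration under stated assumptions: the actual per-feature deviation measure is defined in the methods and is not reproduced here, so the function simply takes precomputed deviations as input. The function names and the numbers are hypothetical.

```python
import numpy as np

def normalized_deviation(model_dev, between_session_dev):
    """ND per statistical feature.

    model_dev: deviation between model and in vivo data, one value per feature
               (how this deviation is measured is an assumption left open here).
    between_session_dev: average deviation between experimental sessions
               (and monkeys) for the same features.
    An ND of 1 means the model deviates from the data as much as two
    recording sessions deviate from each other on average.
    """
    model_dev = np.asarray(model_dev, dtype=float)
    between_session_dev = np.asarray(between_session_dev, dtype=float)
    return model_dev / between_session_dev

def mean_normalized_deviation(model_dev, between_session_dev):
    """MND: the ND averaged over all statistical features."""
    return float(np.mean(normalized_deviation(model_dev, between_session_dev)))

# Hypothetical deviations for three features (illustration only).
model_dev = [0.50, 0.10, 0.75]
session_dev = [0.25, 0.10, 0.25]
nd = normalized_deviation(model_dev, session_dev)
mnd = mean_normalized_deviation(model_dev, session_dev)
print(nd, mnd)
```

With this normalization, an MND near 2 (as found for the standard parameters) reads directly as "about twice the variability seen between sessions".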

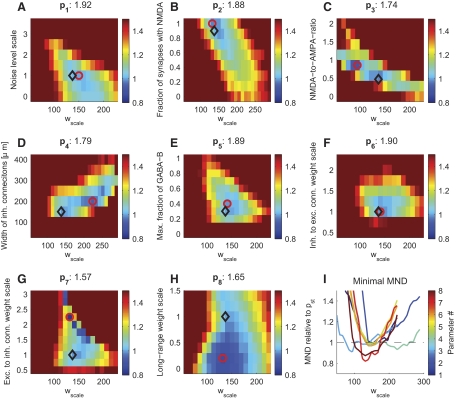

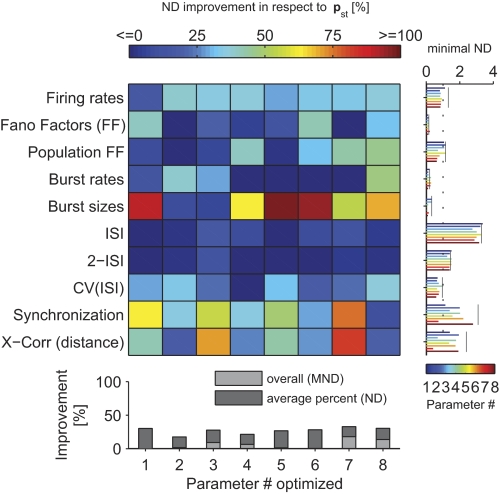

Improvement of the firing regimen when optimizing the model

Can the firing regimen of the model be adjusted by physiologically meaningful parameters to improve the fit to the in vivo data? Finding such parameters would shed light on which parameters exert control over certain statistics. We therefore chose eight physiologically meaningful parameters (see Table 1 for an overview) that we believed to influence the firing dynamics. We optimized the model with respect to each parameter and evaluated each parameter's ability to reduce the discrepancy between model and in vivo recordings. Unfortunately, simultaneous optimization of multiple parameters was computationally prohibitive. We therefore varied each parameter individually around the “standard” parameter values taken from the literature (pst), which we had used to establish the initial fingerprint of the model's response (see above). Because the optimal Wscale might change during the variation of a parameter, we additionally varied Wscale, resulting in two-dimensional landscapes (Fig. 5; see Table 2 for a summary of the quantitative results of the optimization). The effects of parameter optimizations on the improvement of each statistical feature are analyzed in Fig. 6.
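The two-dimensional search described above (one parameter varied jointly with Wscale, all others held at their standard values) can be sketched as a plain grid search. This is only an illustration: `toy_mnd` is a hypothetical stand-in for running the network simulation and computing the MND, and the grid ranges are invented.

```python
import numpy as np

def toy_mnd(p, w_scale):
    """Hypothetical objective standing in for 'simulate network, compute MND'.

    It has a single minimum (MND = 1.5 at p = 2.0, w_scale = 150) and a mild
    coupling between p and w_scale, so the best w_scale shifts with p.
    """
    return 1.5 + (p - 2.0) ** 2 + 1e-4 * (w_scale - 150.0 - 10.0 * (p - 2.0)) ** 2

def grid_search(objective, p_values, w_values):
    """Evaluate the objective on the full (p, w_scale) grid and locate the minimum."""
    landscape = np.array([[objective(p, w) for w in w_values] for p in p_values])
    i, j = np.unravel_index(np.argmin(landscape), landscape.shape)
    return landscape, p_values[i], w_values[j], landscape[i, j]

p_values = np.linspace(0.5, 3.0, 11)      # parameter grid (step 0.25)
w_values = np.linspace(100.0, 200.0, 21)  # Wscale grid (step 5)
landscape, best_p, best_w, best_mnd = grid_search(toy_mnd, p_values, w_values)
print(best_p, best_w, best_mnd)
```

The cost of such a search grows with the product of the grid sizes, which is one way to see why extending it to all eight parameters at once would be prohibitive.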

Fig. 5.

Improvement in the goodness of fit between in vivo and in silico firing regimens when varying physiologically meaningful general parameters. The improvement in MND with respect to the standard parameters is plotted in color code when varying 8 general parameters individually (see Table 1 for a description of the parameters). Each parameter was varied together with an overall scaling factor applied to all synaptic weights (Wscale), whereas the other parameters were held constant. Adjusting some of the parameters considerably improved the fit to in vivo data. For instance, the relative synaptic weights of excitatory to inhibitory neurons needed to be increased dramatically (G). The standard parameter values and the settings showing the best fit in the statistical properties (minimal MND) are indicated with black diamonds and red circles, respectively. The minimal MND values are indicated in the titles. I shows the minimal MND (relative to standard parameters) vs. Wscale for the 8 parameters.

Table 2.

Optimized parameter values

| Symbol | Parameter | Standard Value | Best Value | 5% Range Around Best Value | Corresp. Wscale | MND |
|---|---|---|---|---|---|---|
| p1 | Noise level scale | 1.00 | 0.76 | 0.42–1.10 | 160.4 | 1.92 |
| p2 | Fraction of synapses with NMDA | 0.90 | 0.95 | 0.78–1.13 | 156.5 | 1.88 |
| p3 | NMDA-to-AMPA ratio | 0.47 | 0.86 | 0.73–0.98 | 107.4 | 1.74 |
| p4 | Width of inh. connections, μm | 150 | 208 | 182–233 | 223.8 | 1.79 |
| p5 | Max. fraction of GABAB conductance | 0.30 | 0.40 | 0.25–0.56 | 150.8 | 1.89 |
| p6 | Inh. to exc. connections weight scale | 1.00 | 1.11 | 0.96–1.27 | 151.5 | 1.90 |
| p7 | Exc. to inh. connections weight scale | 1.00 | 2.25 | 2.14–2.36 | 146.1 | 1.57 |
| p8 | Long-range weight scale | 1.00 | 0.11 | 0–0.46 | 139.3 | 1.65 |

The best value (and the corresponding Wscale) for each parameter was inferred by grid search (see Fig. 5). The “5% range” indicates the range in which the MND changed by at most 5% (with respect to its best value) and was estimated using a quadratic fit around the best value (with fixed Wscale). MND, mean normalized deviation.
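One way to obtain such a “5% range” from a quadratic fit is sketched below. This is a hedged illustration rather than the procedure actually used: it assumes the range is taken around the minimum of the fitted parabola (the text does not say whether the fitted or the grid minimum was used), and the function name and sample data are hypothetical.

```python
import numpy as np

def five_percent_range(p_values, mnd_values, tol=0.05):
    """Fit MND(p) ~ a*p**2 + b*p + c and return the interval of p in which
    the fitted MND stays within (1 + tol) times its fitted minimum.

    Assumes Wscale is held fixed and the samples lie close to the minimum.
    """
    a, b, c = np.polyfit(p_values, mnd_values, deg=2)
    if a <= 0:
        raise ValueError("quadratic fit has no minimum (a <= 0)")
    p_best = -b / (2.0 * a)              # vertex of the parabola
    mnd_best = c - b ** 2 / (4.0 * a)    # fitted MND at the vertex
    if mnd_best <= 0:
        raise ValueError("fitted minimum must be positive")
    # Solve a * (p - p_best)**2 = tol * mnd_best for the half-width.
    half_width = np.sqrt(tol * mnd_best / a)
    return p_best, mnd_best, (p_best - half_width, p_best + half_width)

# Hypothetical, noise-free samples around a minimum at p = 1.0.
p_samples = np.linspace(0.5, 1.5, 11)
mnd_samples = 2.0 + 10.0 * (p_samples - 1.0) ** 2
p_best, mnd_best, (lo, hi) = five_percent_range(p_samples, mnd_samples)
print(p_best, mnd_best, lo, hi)
```

A narrow range, as for p7 in Table 2, corresponds to a strongly curved landscape; a wide one, as for p1, corresponds to a flat landscape in which the parameter is only weakly constrained.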

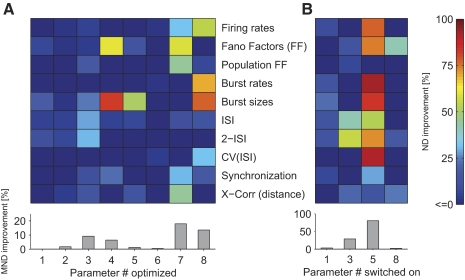

Fig. 6.

Effects of parameter optimization on individual statistical features. A: the percentage change in normalized deviation (ND) with respect to standard parameter settings when optimizing parameters p1, …, p8 individually is plotted in color code (see Table 1 for a description of the parameters). Individual parameters influence the statistical features differently. The bottom margin shows the improvement in MND (averaged over all statistical features). Same simulation data as in Fig. 5. B: impact of the inclusion of different components in the model. Selected components of the model: background noise (p1), NMDA conductances (p3), GABAB conductances (p5), or patchy long-range connections (p8). The improvements of the fit when including a component are plotted in color code (relative to the standard parameter settings, having all components included). Including GABAB conductances had the most pronounced effect, profoundly improving the fit to multiple statistics. When components were switched on and off, Wscale was again optimized with respect to MND.

We first chose a parameter varying the background noise strength (parameter p1). The background noise strength implicitly regulates how strongly neurons not modeled in the circuit affect the modeled neurons (see methods). The quality of the fit did not depend strongly on this parameter (Fig. 5A), suggesting that the background noise strength was of minor importance. Although varying the noise strength improved some individual statistical features with respect to the literature values (such as Fano factors, burst sizes, and ISI distributions; Fig. 6A), the effect was typically below 10% ND improvement. Indeed, even if we disabled the background noise, the fit to all statistical features simultaneously was compromised only negligibly (a 3% change in MND; Fig. 6B). This suggests that our network was already big enough to explicitly provide realistic synaptic background inputs to any neuron.

In our model, the lateral width of inhibitory connections was relatively small (SD 150 μm; see methods). We tested whether the fit could be improved by varying the lateral spread of the inhibitory connections (p4). However, this was not the case: a good range for this parameter lay between 150 and 250 μm, depending on the overall strength of the synapses (Wscale; Fig. 5D). Although the ND of burst sizes and Fano factors could be markedly improved (Figs. 6A and 7), these features had only a small influence on the MND because their variance between experimental sessions was high and, moreover, they were already well fitted by a model with parameters set to standard values (cf. Fig. 7, right marginal plot). Consequently, the MND could be improved by only ∼5% by optimizing the lateral connection width of inhibitory neurons, suggesting that our original value was adequate.

Fig. 7.

Optimization of individual statistical features (compared with optimizing the mean over all features). The improvement in ND relative to the standard parameters is plotted in color code. The ND of individual statistical features was always optimized with respect to Wscale. The parameters (listed in Table 1) have different impacts on the statistical features. The bottom margin indicates the cumulative ND improvements and the improvements in MND (where Wscale is optimized on MND instead of ND). Left margin shows the actual ND values for each parameter (color-coded bars). Note that an ND of 1 means a deviation equal to the average deviation between experimental sessions (and monkeys). Black lines indicate the values for standard parameters. Some statistical features were more difficult to fit, whereas others were less problematic (reaching a value well below 1).

In general, we expected the synaptic receptor composition to be critical for achieving a realistic regimen. Because NMDA conductances are activated on a slow time scale and thus might affect the variability of the model, especially on a longer time scale, we tested two parameters varying the amount of NMDA receptors in different ways: the fraction of synapses having NMDA receptors (p2) and the average NMDA-to-AMPA ratio of a synapse (having NMDA receptors) (p3). Because the latter ratio fluctuates considerably in the experimental literature (Myme et al. 2003) and NMDA receptor function might be influenced by anesthesia (Guntz et al. 2005), these parameters might need to be adjusted in the model. Remarkably, when NMDA conductances were not included in the model at all, the fit degraded significantly (MND about 20% worse), compromising mostly the fit to the ISI structure and the Fano factors, but also the fit to the lateral cross-correlation (Fig. 6B). This suggests that the NMDA conductances were a necessary component of the network model to achieve a realistic firing regimen, especially for the variability on a longer time scale. However, we also noticed that varying these parameters led to only minor improvements (within 10% change of MND with respect to the standard parameters; Fig. 5, B and C). Thus we concluded that the standard literature values for the NMDA-to-AMPA ratio and the fraction of synapses having NMDA receptors were already adequately chosen.

GABAB conductances are activated nonlinearly only in case of high presynaptic activity events (Thomson and Destexhe 1999) and furthermore exhibit relatively slow dynamics. We thus expected that the adjustment of the maximal fraction of GABAB conductances (p5) would affect the population spike structure. Indeed, we found that the GABAB conductances were critical in our model: including these conductances in the model dramatically improved the fit (about 80% improvement; Fig. 6B). One possible reason for this dependence on GABAB conductances could be the crucial lack of long-lasting inhibition or the lack of nonlinear activation of inhibitory neurons when GABAB conductances were absent.

The strong effect suggested that sufficient activation of inhibitory neurons was necessary for achieving a realistic firing state. However, similar to the NMDA conductances, varying the maximal fraction of GABAB conductances did not considerably improve the MND value with respect to standard parameters (Fig. 5E).

One might hypothesize that the balance of excitation and inhibition was not established appropriately in the network model. To vary the overall connection strength between neuron pools, we chose relative synaptic weight scaling factors from inhibitory to excitatory neurons (p6) and from excitatory to inhibitory neurons (p7) as parameters. Whereas varying the inhibitory to excitatory connection strengths did not yield any overall improvement (Fig. 5F), varying the reverse direction, the excitatory to inhibitory connection strengths, had a strong effect. Increasing p7 2.25-fold resulted in an 18% improvement of the fit to in vivo data (Fig. 5G), indicating the importance of correctly balancing inhibition and excitation for acquiring a realistic firing regimen. Judging from the discontinuity of the error landscape (Fig. 5G), a twofold increase in p7 seemed to switch the firing regimen into a new state, which was much more similar to the firing regimen in vivo.

This strong overall improvement in the MND was mainly mediated by the ND improvement in the correlation structure (lateral cross-correlation and synchronizations), which could be improved by about 40% in comparison to the simulation using standard literature values (Fig. 6A). Additionally, deviations in firing rate distribution and both Fano factors were also decreased by high percentages (Fig. 6A).

Finally, we chose the relative synaptic weight scaling factor of the patchy lateral long-range connections (p8) because it is not well constrained by the literature (see methods for details). We found that the initial weight scale was somewhat too high: decreasing the weight of the long-range connections improved the variability of the network response. Indeed, the removal of long-range connections decreased the MND only by 3% (Fig. 6B). The decrease of the MND by 14% when optimizing the relative strength of the long-range connections (Fig. 5H) was mainly mediated by improving the burst structure (>50% improvement in the burst sizes and the burst rate distribution), as well as the CV(ISI) distribution (∼35%; Fig. 6A). When inspecting the spike responses visually, we noticed a slow rhythmic bursting for high p8 values (near 1). These periodic population bursts were not seen after decreasing p8. The relative weight of the lateral long-range connections therefore had to be reduced to avoid the induction of population bursts, which resulted in a much better fit to responses in vivo, in particular by reducing the deviation in the statistical features sensitive to the burst structure.

In summary, for the majority of the selected parameters, the literature values could not be markedly improved on: the optimized MND deviated by <5% from the MND obtained with standard parameters. An intermediate effect was seen when varying the NMDA-to-AMPA ratio (p3), where the improvement with respect to the standard parameters reached 9%. The most striking improvements, however, were gained by varying the relative weight scaling factors of the long-range connections (p8) and of the excitatory to inhibitory connections (p7). Here the MND improved by 14 and 18%, respectively.

Next, we tested whether the fit could be further improved by varying the combination of the two most promising parameters together, i.e., the relative weight factors of excitatory to inhibitory connections and of patchy long-range connections, respectively, p7 and p8. By setting p7 to its best value (2.25) and again varying p8 (as in Fig. 5H), the goodness of fit improved further to MND = 1.19 (for p8 = 0.3). We simulated this optimized model for multiple trials (changing the random seed of the simulation) and found a mean MND value of 1.30 ± 0.01 (SE). This is a 32% improvement over the model using standard parameters.
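The percentage improvements quoted in this section follow from the MND values in Table 2 and the baseline of MND = 1.91 for standard parameters. The short check below reproduces that arithmetic; the function name is ours, and only MND values stated in the text and Table 2 are used.

```python
MND_STANDARD = 1.91  # MND with standard (literature) parameters

def improvement_percent(mnd, baseline=MND_STANDARD):
    """Relative reduction of the MND with respect to the baseline, in percent."""
    return 100.0 * (baseline - mnd) / baseline

# Best single-parameter MND values taken from Table 2.
table2_mnd = {"p3": 1.74, "p7": 1.57, "p8": 1.65}
for name, mnd in table2_mnd.items():
    print(f"{name}: {improvement_percent(mnd):.1f}%")

# Combined optimization of p7 and p8, mean over multiple trials.
combined = improvement_percent(1.30)
print(f"p7 + p8: {combined:.1f}%")
```

Rounded to whole percentages, these come out at 9% (p3), 18% (p7), 14% (p8), and 32% (p7 and p8 combined), matching the figures given in the text.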

Finally, if a longer, nonintersecting section of the movie (25 s) was tested with these optimized parameters, the MND value decreased to a value of 1.10. Varying other parameters while using the best value for p7 did not further improve the fit (data not shown).