Abstract

Natural behaviours, and hence neuronal populations, often combine multiple sensory cues to improve stimulus detectability or discriminability as we explore the environment. Here we review one such example of multisensory cue integration in the dorsal medial superior temporal area (MSTd) of the macaque visual cortex. Visual and vestibular cues about the direction of self-motion in the world (heading) are encoded by single multisensory neurons in MSTd. Most neurons tend to prefer lateral stimulus directions and, as they are broadly tuned, are most sensitive in discriminating heading directions around straight forward. Decoding of MSTd population activity shows that these neuronal properties can account for the fact that heading perception in humans and macaques is most precise for directions around straight forward, whereas heading sensitivity declines with increasing eccentricity of the reference direction. Remarkably, when heading is specified by both cues simultaneously, behavioural precision is improved in a manner that is predicted by statistically optimal (Bayesian) cue integration models. A subpopulation of multisensory MSTd cells with congruent visual and vestibular heading preferences also combines the cues near-optimally, establishing a potential neural substrate for behavioural cue integration.

Dora Angelaki is a Professor in the Departments of Anatomy & Neurobiology and Biomedical Engineering, and the Alumni Endowed Professor of Neurobiology at Washington University. Her general area of interest is computational, cognitive and systems neuroscience. Within this broad field, she specializes in the neural mechanisms of spatial orientation and navigation using humans and non-human primates as a model. She is interested in neural coding and how complex, cognitive behaviour is produced by neuronal populations. Dr Angelaki maintains a very active research laboratory funded primarily by the National Institutes of Health.

Patterns of image motion across the retina (‘optic flow’) can be strong cues to self-motion, as evidenced by the fact that optic flow alone can elicit the illusion of self-motion (Brandt et al. 1973; Berthoz et al. 1975; Dichgans & Brandt, 1978). Indeed, many visual psychophysical and theoretical studies have shown that optic flow also provides powerful cues to heading perception (Gibson, 1950) and have examined how heading can be computed from optic flow (see Warren, 2003 for review). Independent information about the motion of our head/body in space arises from the vestibular system, specifically the otolith organs that detect linear acceleration of the head (Fernandez & Goldberg, 1976a,b,c; Si et al. 1997). Indeed, heading discrimination thresholds during self-motion in darkness increase more than 10-fold after bilateral labyrinthectomy (Gu et al. 2007), indicating that vestibular signals are critical for precise heading perception.

Recent studies have investigated where in the brain optic flow and vestibular signals might interact. Optic flow-sensitive neurons have been found in the dorsal portion of the medial superior temporal area (MSTd, Tanaka et al. 1986; Duffy & Wurtz, 1991, 1995), ventral intraparietal area (VIP, Schaafsma & Duysens, 1996; Bremmer et al. 2002a,b), posterior parietal cortex (area 7a, Siegel & Read, 1997) and the superior temporal polysensory area (Anderson & Siegel, 1999). In particular, neurons in MSTd/VIP have large visual receptive fields and are selective for optic flow patterns similar to those seen during self-motion (MSTd, Tanaka et al. 1986, 1989; Duffy & Wurtz, 1991, 1995; Bradley et al. 1996; Lappe et al. 1996; VIP, Schaafsma & Duysens, 1996; Bremmer et al. 2002a). Importantly, electrical stimulation of MSTd or VIP has been reported to bias heading judgments that are based solely on optic flow (Britten & van Wezel, 1998, 2002; Zhang & Britten, 2003). MSTd/VIP neurons are also selective for motion in darkness, suggesting that they receive vestibular inputs (Duffy, 1998; Bremmer et al. 1999, 2002b; Schlack et al. 2002; Page & Duffy, 2003; Gu et al. 2006, 2007, 2008; Takahashi et al. 2007). The response modulation of MSTd neurons during inertial motion was indeed shown to be of labyrinthine origin, as MSTd cells were no longer tuned for heading following bilateral labyrinthectomy (Gu et al. 2007; Takahashi et al. 2007).

This review focuses on the multisensory properties of neurons in area MSTd, as they have been the subject of several recent studies (Gu et al. 2006, 2007, 2008, 2010; Fetsch et al. 2007; Takahashi et al. 2007; Morgan et al. 2008). In particular, we summarize (1) how population activity in MSTd predicts heading discrimination behaviour (Gu et al. 2010) and (2) how neuronal sensitivity changes when both visual and vestibular heading cues are presented simultaneously (Gu et al. 2008; Morgan et al. 2008).

Tuning of individual MSTd neurons

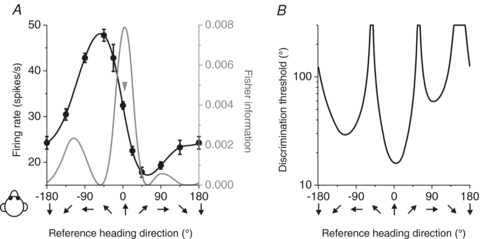

When tested with different heading directions in the horizontal plane, MSTd neurons were broadly tuned, as illustrated by the example neuron shown in Fig. 1A. The tuning curve can be used to estimate the directional information carried by the neuron, by computing Fisher information. Fisher information provides an upper limit on the precision with which any unbiased estimator can discriminate small variations in heading around some reference direction (Seung & Sompolinsky, 1993; Pouget et al. 1998).

Figure 1. Calculation of Fisher information and discrimination thresholds for an example neuron.

A, example tuning curve (black) and Fisher information (grey). Arrow indicates the direction corresponding to peak Fisher information. B, neuronal discrimination thresholds (computed from Fisher information) as a function of the reference heading.

Assuming Poisson statistics and independent noise among neurons, the contribution of each cell to Fisher information can be computed as the square of its tuning curve slope (at a particular reference heading) divided by the corresponding mean firing rate (Jazayeri & Movshon, 2006; Gu et al. 2010), as illustrated by the grey curve in Fig. 1A. The corresponding neuronal discrimination thresholds (corresponding to 84% correct performance, or d′ = √2) can be computed from the inverse of the square root of Fisher information, and are plotted as a function of reference heading in Fig. 1B. Note that maximum Fisher information, which corresponds to the minimum neuronal discrimination threshold, is seen close to the steepest point along the tuning curve (arrow in Fig. 1A), not at the point of peak response (see also Parker & Newsome, 1998; Purushothaman & Bradley, 2005; Jazayeri & Movshon, 2006; Gu et al. 2008, 2010). This is because Fisher information scales with the square of the derivative of the tuning curve.
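The calculation described above can be illustrated with a minimal sketch. The cosine-shaped tuning curve, its parameters and the function names are hypothetical and are not taken from the recorded data; the criterion d′ = √2 is assumed to be the value that matches 84% correct performance.

```python
import math

def tuning_curve(theta_deg, pref_deg=90.0, baseline=5.0, amplitude=30.0):
    """Hypothetical broad, cosine-like tuning curve: mean spike count per trial."""
    delta = math.radians(theta_deg - pref_deg)
    return baseline + 0.5 * amplitude * (1.0 + math.cos(delta))

def fisher_information(theta_deg, step_deg=0.01):
    """Poisson Fisher information, slope^2 / mean response, in units of 1/deg^2."""
    slope = (tuning_curve(theta_deg + step_deg)
             - tuning_curve(theta_deg - step_deg)) / (2.0 * step_deg)
    return slope ** 2 / tuning_curve(theta_deg)

def threshold_deg(theta_deg, d_prime=math.sqrt(2.0)):
    """Heading difference discriminable at the assumed criterion d'."""
    return d_prime / math.sqrt(fisher_information(theta_deg))

# Reference heading (1 deg grid) at which this model neuron is most informative.
headings = range(-180, 180)
best = max(headings, key=fisher_information)
print(best, round(threshold_deg(best), 1))
```

For this model neuron, which prefers 90 deg, Fisher information is essentially zero at the preferred heading and peaks on the flank of the tuning curve, in line with the point made in the text.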

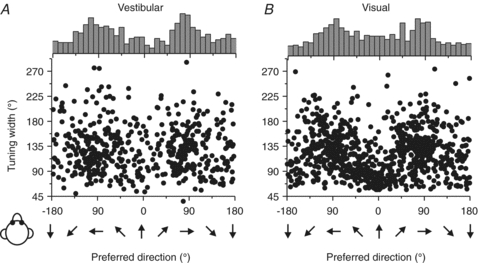

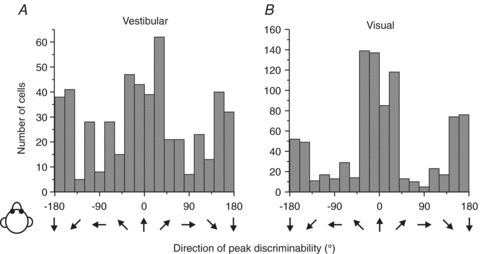

Across the population, heading preferences of MSTd neurons were distributed throughout the horizontal plane, with a tendency to cluster around lateral directions (±90 deg, Fig. 2; see also Gu et al. 2006, 2010). This was true for both the vestibular (Fig. 2A) and visual (Fig. 2B) responses, both of which also had large tuning widths (90–180 deg). The only exception was a group of narrowly tuned cells with visual heading preferences close to straight forward (for this group of cells, vestibular heading tuning is broadly distributed in both preferred direction and tuning width). As shown in Fig. 3, peak discriminability was often observed for reference headings ∼90 deg away from the heading that elicits peak firing rate, consistent with the broad tuning shown by most MSTd cells (Gu et al. 2010). Thus, whereas heading preferences were biased toward lateral motion directions, most MSTd cells had peak discriminability for directions near straight ahead.

Figure 2. MSTd population responses.

Scatter plots of each cell's tuning width in the vestibular (A; n = 511) and the visual (B; n = 882) conditions plotted versus preferred direction. The top histogram illustrates the marginal distribution of heading preferences. Replotted with permission from Gu et al. (2010).

Figure 3. Summary of peak discriminability for MSTd neurons.

Distributions of the direction of maximal discriminability in the vestibular (A; n = 511) and the visual (B; n = 882) conditions. Note that peak discriminability occurs most frequently around the forward (0 deg) and backward (±180 deg) directions. Replotted with permission from Gu et al. (2010).

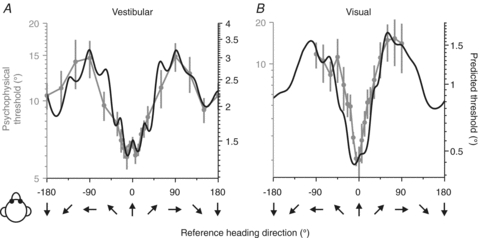

Decoding MSTd population activity: comparison with behaviour

Under the assumption of independent noise among neurons (Gu et al. 2010), the population Fisher information can be computed by summing the contributions from all MSTd cells with significant heading tuning. As expected from the distribution of peak discriminability (Fig. 3), the population Fisher information was maximal for headings near 0 deg (forward motion) and minimal for headings near ±90 deg (lateral motion). To create predictions that can be compared more directly with psychophysical data regarding heading discrimination, population Fisher information was transformed into predicted behavioural thresholds (with a criterion of d′ = √2; see Gu et al. 2010 for details), as illustrated for the vestibular and visual conditions in Fig. 4A and B. Predicted thresholds from the MSTd population activity (black curves, Fig. 4) are lowest for reference headings around straight forward in both the visual and vestibular stimulus conditions.
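The summation over neurons can be sketched as follows. This is an illustration under stated assumptions, not the analysis of Gu et al. (2010): the population is a hypothetical set of 100 cosine-tuned Poisson neurons whose preferences lie within 45 deg of leftward or rightward motion, and the criterion d′ = √2 is assumed.

```python
import math

def poisson_fisher(theta_deg, pref_deg, baseline=5.0, amplitude=30.0):
    """Fisher information (1/deg^2) of one hypothetical cosine-tuned Poisson neuron."""
    delta = math.radians(theta_deg - pref_deg)
    rate = baseline + 0.5 * amplitude * (1.0 + math.cos(delta))
    slope = -0.5 * amplitude * math.sin(delta) * math.pi / 180.0  # per degree
    return slope ** 2 / rate

def population_threshold(theta_deg, prefs_deg, d_prime=math.sqrt(2.0)):
    """Predicted threshold from summed Fisher information (independent noise assumed)."""
    total = sum(poisson_fisher(theta_deg, pref) for pref in prefs_deg)
    return d_prime / math.sqrt(total)

# Hypothetical population: heading preferences clustered around +/-90 deg.
prefs = ([-135.0 + 90.0 * i / 49 for i in range(50)]
         + [45.0 + 90.0 * i / 49 for i in range(50)])

forward = population_threshold(0.0, prefs)    # reference: straight ahead
lateral = population_threshold(90.0, prefs)   # reference: rightward
print(forward < lateral)  # True
```

Although no model neuron prefers forward motion, the predicted threshold is lower for the forward reference, because that is where most tuning curves are steepest.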

Figure 4. Comparison of predicted and measured heading thresholds as a function of reference direction.

Human behavioural thresholds (grey symbols with error bars) are compared with predicted thresholds (black) computed from neuronal responses in the vestibular (A; n = 248) and the visual (B; n = 472) conditions. Note that the ordinate scale for predicted and behavioural thresholds has been adjusted such that the minimum/maximum values align. This is because it is the shape of the dependence on reference heading that we want to compare; we cannot simply interpret the absolute values of predicted thresholds, as they depend on the correlated noise among neurons and the number of neurons that contribute to the decision, both of which remain unknown. Replotted with permission from Gu et al. (2010).

To quantify the precision with which subjects discriminate heading and to compare with the predictions based on MSTd population activity, a two-interval, two-alternative forced-choice psychophysical task was used. Each trial consisted of two sequential translations, a ‘reference’ and a ‘comparison’, and heading thresholds were measured around several reference directions in the horizontal plane (Gu et al. 2010). The subjects’ task was to report whether the comparison movement was to the right or left of the reference direction, and the task involved either inertial motion in darkness (‘vestibular’ condition) or optic flow with subjects stationary (‘visual’ condition). Inertial motion (vestibular) signals were provided by translating subjects on a motion platform, and optic flow (visual) signals were provided by a projector that was mounted on the platform and rear-projected images onto a screen in front of the subject. Choice data were pooled to construct a single psychometric function for each reference heading and fitted with a cumulative Gaussian function. Psychophysical threshold was defined as the standard deviation of the Gaussian fit (corresponding to ∼84% correct performance).
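The threshold definition above can be made concrete with a minimal sketch of a cumulative Gaussian fit. The choice counts are hypothetical, and the coarse maximum-likelihood grid search is an illustrative stand-in; the source does not specify the fitting procedure.

```python
import math
from statistics import NormalDist

# Hypothetical pooled data: comparison heading relative to the reference (deg),
# number of 'rightward' choices, and number of trials at each heading.
offsets_deg = [-8, -4, -2, -1, 0, 1, 2, 4, 8]
rightward = [0, 7, 20, 31, 43, 57, 69, 88, 99]
trials = [100] * len(offsets_deg)

def log_likelihood(mu, sigma):
    """Binomial log-likelihood of the choices under a cumulative Gaussian."""
    model = NormalDist(mu, sigma)
    total = 0.0
    for x, k, n in zip(offsets_deg, rightward, trials):
        p = min(max(model.cdf(x), 1e-9), 1.0 - 1e-9)  # keep log() finite
        total += k * math.log(p) + (n - k) * math.log(1.0 - p)
    return total

def fit_psychometric():
    """Grid search for the best-fitting bias (mu) and threshold (sigma), in deg."""
    candidates = [(0.1 * i, 0.5 + 0.1 * j)
                  for i in range(-20, 21) for j in range(96)]
    return max(candidates, key=lambda pair: log_likelihood(*pair))

bias_deg, threshold_deg = fit_psychometric()
print(f"bias = {bias_deg:.1f} deg, threshold = {threshold_deg:.1f} deg")
```

The fitted standard deviation is the threshold: a comparison heading one standard deviation to the right of the point of subjective equality is reported as rightward on about 84% of trials.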

As illustrated in Fig. 4 (grey data points with error bars), behavioural thresholds increased as the reference heading deviated away from straight forward (0 deg), and this was true for both the vestibular and visual tasks (see also Crowell & Banks, 1993). Human vestibular heading thresholds increase more than 2-fold as the reference heading moves from forward to lateral (Fig. 4A). This effect, while robust, was substantially smaller for the vestibular task than the visual task, for which thresholds increased nearly 10-fold with eccentricity (Fig. 4B). Subjects were always most sensitive for heading discrimination around straight forward (0 deg reference) and least sensitive for discrimination around side-to-side motions (±90 deg references) (Gu et al. 2010).

Because the psychophysical data were obtained under stimulus conditions quite similar to those of the physiology experiments, a direct comparison between neural predictions and behaviour is possible. Remarkably, the dependence of behavioural thresholds on heading direction (Fig. 4, grey) matched well the dependence predicted by the inverse of Fisher information (Fig. 4, black). Importantly, the lowest thresholds for straight forward headings do not occur because of a disproportionately large population of neurons that prefer forward motion, but rather because broadly-tuned MSTd neurons with lateral direction preferences have their peak discriminability (steepest tuning-curve slopes) for motion directions near straight ahead (Fig. 3).

The results of Fig. 4 also held true when two specific decoding methods, maximum likelihood estimation (Dayan & Abbott, 2001) and the population vector (Georgopoulos et al. 1986; Sanger, 1996), were used to compute predicted population thresholds (Gu et al. 2010). Thus, because most MSTd neurons have broad, cosine-like tuning curves for heading direction, the over-representation of lateral heading preferences in MSTd causes many neurons to have the steep slope of their tuning curves near straight ahead and the population precision to be highest during discrimination around forward directions.
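As an illustration of one of these decoders, maximum likelihood estimation under independent Poisson noise can be sketched as below. The population of 40 cosine-tuned neurons, its parameters and the 1 deg search grid are hypothetical; the population vector decoder is not shown.

```python
import math

# Hypothetical heading preferences clustered around +/-90 deg (lateral motion).
PREFS_DEG = ([-135.0 + 90.0 * i / 19 for i in range(20)]
             + [45.0 + 90.0 * i / 19 for i in range(20)])

def mean_count(theta_deg, pref_deg, baseline=5.0, amplitude=30.0):
    """Mean spike count of a hypothetical cosine-tuned neuron."""
    delta = math.radians(theta_deg - pref_deg)
    return baseline + 0.5 * amplitude * (1.0 + math.cos(delta))

def log_likelihood(theta_deg, counts):
    """Poisson log-likelihood of the counts, omitting heading-independent terms."""
    total = 0.0
    for count, pref in zip(counts, PREFS_DEG):
        expected = mean_count(theta_deg, pref)
        total += count * math.log(expected) - expected
    return total

def decode_ml(counts, grid=range(-180, 180)):
    """Heading on the grid that maximizes the likelihood of the observed counts."""
    return max(grid, key=lambda theta: log_likelihood(theta, counts))

# Noise-free check: feeding the mean responses to a 10 deg heading recovers 10 deg.
noise_free = [mean_count(10.0, pref) for pref in PREFS_DEG]
print(decode_ml(noise_free))  # 10
```

With noisy spike counts, the spread of the decoded headings across repeated trials provides a population threshold that can be compared with the Fisher information prediction.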

Behavioural evidence of cue integration: decreased psychophysical thresholds

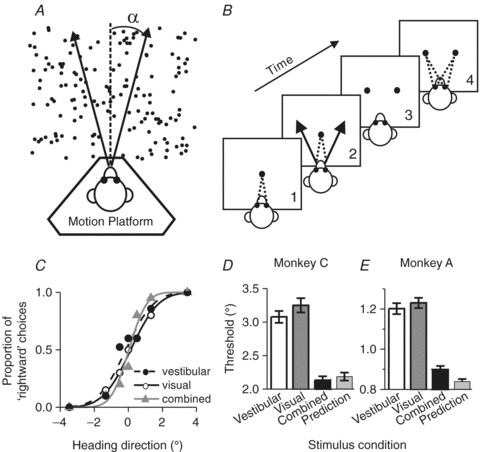

The results described above compared visual and vestibular heading thresholds with predictions from neural activity, but did not address the question of whether perceptual sensitivity to heading is increased when visual and vestibular cues are presented together. Improved sensitivity during cue integration has been observed in other multisensory tasks, such as visual–haptic size discrimination (Ernst & Banks, 2002) and visual–auditory spatial localization (Alais & Burr, 2004), and the results are generally consistent with optimal Bayesian inference (Knill & Pouget, 2004). To examine whether such optimal cue integration occurs for visual–vestibular heading perception, animals were trained to perform a multisensory heading discrimination task. The task was a one-interval version of the one described in the previous section: animals experienced a single movement trajectory (Fig. 5A) while fixating on a head-fixed target (Fig. 5B, panels 1 and 2). At the end of the movement stimulus, which lasted 2 s, the animal had to make a saccade to one of two targets, depending on whether his perceived heading was to the right (right target) or to the left (left target) of straight forward (Fig. 5B, panels 3 and 4). The monkey experienced the movement using visual cues alone (visual condition), non-visual cues alone (vestibular condition) or both cues presented simultaneously and congruently (‘combined’ condition).

Figure 5. Cue combination: heading discrimination task and behavioural performance.

A, task layout. Monkeys were seated on a motion platform and were translated within the horizontal plane to provide vestibular stimulation. A projector mounted on the platform displayed images of a 3-D star field, and thus provided optic flow information. B, task design. After fixating a visual target, the monkey experienced forward motion with a small leftward or rightward component, and subsequently reported his perceived heading (‘left’ vs. ‘right’) by making a saccadic eye movement to one of two targets. C, typical psychophysical performance (from a single session) under the three stimulus conditions: vestibular, visual and combined (Gu et al. 2008). D and E, comparison of the average psychophysical threshold obtained in the combined condition (black bars) relative to the single cue thresholds (vestibular: open bars; visual: dark grey bars) and compared to predicted thresholds based on statistically optimal cue integration (light grey bar). Replotted with permission from Gu et al. (2008).

Trained animals performed the task well using either visual or vestibular cues (Gu et al. 2007, 2008; Fetsch et al. 2009). Example behavioural data are shown in the form of psychometric functions in Fig. 5C. Note that the reliability of the visual cue was roughly equated with the reliability of the vestibular cue by reducing the coherence of the visual motion stimulus, such that visual and vestibular thresholds were approximately equal (Fig. 5C, open/filled circles and continuous/dashed curves). This balancing of the two cues is important, as it provides the best opportunity to observe improvement in performance under cue combination, assuming subjects perform as nearly optimal Bayesian observers (Ernst & Banks, 2002; Gu et al. 2008). In the combined condition (Fig. 5C, grey triangles), psychometric functions became steeper compared to unimodal stimulation and the monkey's heading threshold was reduced.

These observations were consistent across sessions and animals, as illustrated by the average behavioural thresholds from two animals in Fig. 5D, E (Gu et al. 2008). If the monkey combined the two cues optimally, as predicted by Bayesian cue integration principles (Ernst & Banks, 2002; Knill & Pouget, 2004), thresholds should be reduced by ∼30% under cue combination (Fig. 5D and E, light grey bar). Indeed, monkey behavioural thresholds were reduced under the combined stimulus condition relative to the single cue thresholds and were similar to the optimal predictions (Fig. 5D, E, black bars). When visual and vestibular cues were put in conflict while varying visual motion coherence, both macaques and humans adjusted the relative weighting of the two cues on a trial-by-trial basis, in agreement with the predictions of Bayes-optimal cue integration (Fetsch et al. 2009). Thus, visual/vestibular heading perception follows the principles of statistically optimal cue integration, as shown previously for other types of sensory interactions (van Beers et al. 1999; Ernst & Banks, 2002; Knill & Saunders, 2003; Alais & Burr, 2004; Hillis et al. 2004; Fetsch et al. 2009).
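The ∼30% figure follows from the standard prediction for two independent Gaussian cues (Ernst & Banks, 2002): the combined variance is the product of the single-cue variances divided by their sum. The sketch below works through that arithmetic; the function names and the example threshold of 2 deg are illustrative.

```python
import math

def optimal_combined_threshold(sigma_vestibular, sigma_visual):
    """Predicted threshold when two independent Gaussian cues are combined optimally."""
    var_ves = sigma_vestibular ** 2
    var_vis = sigma_visual ** 2
    return math.sqrt(var_ves * var_vis / (var_ves + var_vis))

def optimal_weights(sigma_vestibular, sigma_visual):
    """Weights (vestibular, visual), proportional to each cue's reliability 1/sigma^2."""
    rel_ves = 1.0 / sigma_vestibular ** 2
    rel_vis = 1.0 / sigma_visual ** 2
    total = rel_ves + rel_vis
    return rel_ves / total, rel_vis / total

# With matched single-cue thresholds, the prediction improves by a factor of sqrt(2).
matched = optimal_combined_threshold(2.0, 2.0)
reduction = 1.0 - matched / 2.0
print(f"{reduction:.1%}")  # 29.3%
```

When one cue is much less reliable than the other, the predicted combined threshold is only slightly below the better single-cue threshold, which is why matching the two cues gives the best chance of detecting the improvement. The weights shift toward the more reliable cue, as in the coherence manipulation of Fetsch et al. (2009).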

Neuronal evidence of cue integration: decreased neurometric thresholds

The demonstration of near-optimal cue integration in the monkey's behaviour provides a unique opportunity to search for the neural basis of statistically optimal inference. Approximately 60% of MSTd neurons were significantly tuned for heading under both the visual and vestibular stimulus conditions (Gu et al. 2006; Takahashi et al. 2007), making these multisensory neurons ideal candidates for generating the cue integration effects seen in behaviour. Interestingly, multisensory MSTd cells formed a continuum where at the two extremes (1) ‘Congruent’ neurons had similar visual/vestibular heading preferences, and thus signalled the same motion direction under both unimodal stimulus conditions, and (2) ‘Opposite’ neurons preferred nearly opposite directions of heading under visual and vestibular stimulus conditions (Gu et al. 2006, 2010).

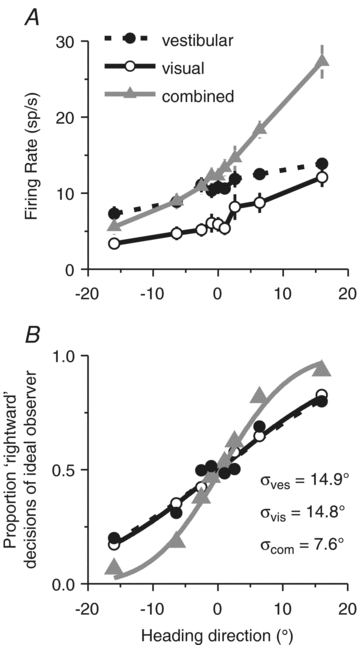

Multisensory MSTd neurons were first identified as having significant tuning under both the vestibular and visual conditions, then tested while the animal performed the multimodal heading discrimination task, as illustrated with a typical congruent MSTd cell in Fig. 6. This example neuron preferred rightward (positive) headings for both unimodal stimuli and its tuning was monotonic over the narrow range of heading directions sampled during the heading discrimination task (note that heading directions are referenced to either the real or simulated self-motion; thus, similar tuning in the visual and vestibular conditions defines a congruent cell). For almost all congruent cells, like this example neuron, heading tuning became steeper in the combined condition (Fig. 6A, grey triangles).

Figure 6. Cue combination: example MSTd neuron.

A, firing rates, and B, corresponding neurometric functions from an example congruent MSTd neuron. Negative angles: leftward headings; positive angles: rightward headings. Smooth curves in B show best-fitting cumulative Gaussian functions.

To compute neuronal thresholds that can be compared more directly with behavioural thresholds, neuronal responses were converted into neurometric functions using a Receiver Operating Characteristic (ROC) analysis (Green & Swets, 1966; Britten et al. 1992). These functions quantify the ability of an ideal observer to discriminate heading based on the activity of a single neuron (Fig. 6B). Neurometric data were fitted with cumulative Gaussian functions and neuronal threshold was defined as the standard deviation of the Gaussian fit. The smaller the threshold, the steeper the neurometric function and the more sensitive the neuron is to subtle variations in heading. Across the population, MSTd neurons were generally less sensitive than the monkeys’ behaviour, with only the most sensitive neurons rivalling behavioural thresholds (Gu et al. 2007, 2008). Thus, under the assumption that MSTd population activity is monitored by the animal in order to perform the heading task, decoding must either pool responses across many neurons or rely more heavily on the most sensitive neurons (Parker & Newsome, 1998).
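A minimal sketch of how an ROC analysis turns single-neuron responses into a neurometric function is given below. The spike counts are hypothetical, and comparing each heading with its mirror-image heading is an assumption made for illustration; the text above does not specify which response distributions were compared.

```python
def roc_area(responses_a, responses_b):
    """Probability that a draw from responses_a exceeds a draw from responses_b.

    Ties count as one half; this equals the area under the ROC curve.
    """
    wins = 0.0
    for a in responses_a:
        for b in responses_b:
            if a > b:
                wins += 1.0
            elif a == b:
                wins += 0.5
    return wins / (len(responses_a) * len(responses_b))

# Hypothetical spike counts per trial, keyed by heading (deg; positive = rightward),
# for a neuron that prefers rightward headings.
spike_counts = {
    -4.0: [8, 9, 7, 10, 8],
    -1.0: [10, 11, 9, 12, 10],
    1.0: [12, 11, 13, 12, 14],
    4.0: [15, 16, 14, 17, 15],
}

def neurometric_function(counts_by_heading):
    """Proportion of 'rightward' decisions by an ideal observer at each heading."""
    return {heading: roc_area(counts, counts_by_heading[-heading])
            for heading, counts in counts_by_heading.items()}

points = neurometric_function(spike_counts)
print(points[1.0], points[4.0])  # 0.9 1.0
```

The resulting proportions can then be fitted with a cumulative Gaussian, exactly as for behavioural choices, to obtain a neuronal threshold.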

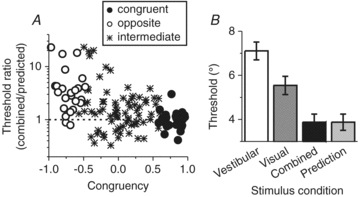

Congruent MSTd neurons, like the example in Fig. 6, were generally characterized by smaller neuronal thresholds in the combined condition (Fig. 6B, grey triangles), indicating that the neuron could discriminate smaller variations in heading when both visual and vestibular cues were provided. This improved sensitivity was characteristic only of congruent cells, as illustrated in Fig. 7A, which plots how well the combined threshold agreed with the statistically optimal prediction as a function of congruency index. The congruency index ranges from +1, when visual and vestibular tuning functions had a consistent slope, to −1, when they had opposite slopes. In this plot, the ordinate illustrates, for each neuron, the ratio of the neuronal threshold in the combined condition to the threshold expected if neurons combined cues optimally (Gu et al. 2008).
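The two quantities compared in Fig. 7A can be sketched as follows. The congruency index is written here as the product of the Pearson correlations between heading and firing rate in the two single-cue conditions. This is an assumption that reproduces the stated range of −1 to +1 and should be checked against the definition in Gu et al. (2008). The firing rates are hypothetical.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def congruency_index(headings, vestibular_rates, visual_rates):
    """Assumed definition: product of the two heading-rate correlations."""
    return pearson_r(headings, vestibular_rates) * pearson_r(headings, visual_rates)

def optimality_ratio(combined, vestibular, visual):
    """Measured combined threshold divided by the optimal prediction."""
    predicted = math.sqrt(vestibular ** 2 * visual ** 2
                          / (vestibular ** 2 + visual ** 2))
    return combined / predicted

headings = [-4.0, -2.0, 0.0, 2.0, 4.0]
vestibular = [10.0, 12.0, 14.0, 16.0, 18.0]   # rate rises with rightward heading
visual_same = [20.0, 23.0, 26.0, 29.0, 32.0]  # same slope sign: congruent
visual_flip = [32.0, 29.0, 26.0, 23.0, 20.0]  # opposite slope sign: opposite

print(round(congruency_index(headings, vestibular, visual_same), 3))  # 1.0
print(round(congruency_index(headings, vestibular, visual_flip), 3))  # -1.0
```

A ratio near 1 from `optimality_ratio` means that the neuron's combined threshold matches the optimal prediction, and values above 1 mean it falls short.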

Figure 7. Cue combination: MSTd population summary.

A, neuronal sensitivity under cue combination depends on congruency of visual and vestibular tuning. The ordinate in this scatter plot represents the ratio of the neuronal threshold measured in the combined condition to predicted threshold from optimal cue integration. The abscissa represents the Congruency Index of heading tuning for visual and vestibular responses, which quantifies the degree to which neuronal tuning is similar in the visual and vestibular conditions. Dashed horizontal line: threshold in the combined condition is equal to the prediction. B, comparison of the average neurometric threshold, computed from congruent cells only (with congruency index >0), in the combined condition (black bars) relative to the single cue thresholds (vestibular: open bars; visual: dark grey bars) and compared to predicted thresholds based on statistically optimal cue integration (light grey bar). Replotted with permission from Gu et al. (2008).

These findings illustrate that only congruent cells (i.e. neurons with large positive congruency index, black circles in Fig. 7A) had thresholds close to the optimal prediction (ratios near unity), as illustrated further by the average neuronal thresholds in Fig. 7B. Thus, the average neuronal threshold for congruent MSTd cells in the combined condition followed a pattern similar to the monkeys’ behaviour. In contrast, combined thresholds for opposite cells were generally much higher than predicted from optimal cue integration (Fig. 7A, open circles), indicating that these neurons became less sensitive during cue combination.

Conclusion

These findings support the hypothesis that area MSTd is part of the neural substrate involved in visual/vestibular multisensory heading perception. In support of this notion, preliminary results show that reversible inactivation of MSTd produces clear deficits in heading perception (Gu et al. 2009). But how widely distributed in the brain are these neural representations of multisensory self-motion information? Other cortical areas in which neurons integrate vestibular signals and optic flow (e.g. area VIP or the frontal pursuit area) might also contribute in meaningful ways, and a substantial challenge for the future will be to understand the specific roles that various brain regions play in the multisensory perception of self-motion. These findings also motivate additional questions that need to be addressed in the future. For example, what is the functional significance of ‘opposite’ neurons in MSTd and how do congruent and opposite cells work together to distinguish self-motion from object motion? Do multisensory neurons generally perform weighted linear summation of their unimodal inputs (Morgan et al. 2008)? How do the combination rules and/or weights that neurons apply to their unimodal inputs vary as the strength of the sensory inputs varies (Morgan et al. 2008)? Finally, how are these sensory signals read out from population responses and how much of the necessary computation takes place in sensory representations versus decision-making networks? The experimental paradigm of visual–vestibular integration for heading perception is a great model to address these and other critical questions regarding multisensory integration in the brain.

Acknowledgments

This work was supported by NIH grants EY019087 and EY016178.

Glossary

Abbreviations

- MSTd: dorsal medial superior temporal area

- VIP: ventral intraparietal area

References

- Alais D, Burr D. The ventriloquist effect results from near-optimal bimodal integration. Curr Biol. 2004;14:257–262. doi: 10.1016/j.cub.2004.01.029. [DOI] [PubMed] [Google Scholar]

- Anderson KC, Siegel RM. Optic flow selectivity in the anterior superior temporal polysensory area, STPa, of the behaving monkey. J Neurosci. 1999;19:2681–2692. doi: 10.1523/JNEUROSCI.19-07-02681.1999. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Berthoz A, Pavard B, Young LR. Perception of linear horizontal self-motion induced by peripheral vision (linear vection) basic characteristics and visual-vestibular interactions. Exp Brain Res. 1975;23:471–489. doi: 10.1007/BF00234916. [DOI] [PubMed] [Google Scholar]

- Bradley DC, Maxwell M, Andersen RA, Banks MS, Shenoy KV. Mechanisms of heading perception in primate visual cortex. Science. 1996;273:1544–1547. doi: 10.1126/science.273.5281.1544. [DOI] [PubMed] [Google Scholar]

- Brandt T, Dichgans J, Koenig E. Differential effects of central verses peripheral vision on egocentric and exocentric motion perception. Exp Brain Res. 1973;16:476–491. doi: 10.1007/BF00234474. [DOI] [PubMed] [Google Scholar]

- Bremmer F, Duhamel JR, Ben Hamed S, Graf W. Heading encoding in the macaque ventral intraparietal area (VIP) Eur J Neurosci. 2002a;16:1554–1568. doi: 10.1046/j.1460-9568.2002.02207.x. [DOI] [PubMed] [Google Scholar]

- Bremmer F, Klam F, Duhamel JR, Ben Hamed S, Graf W. Visual-vestibular interactive responses in the macaque ventral intraparietal area (VIP) Eur J Neurosci. 2002b;16:1569–1586. doi: 10.1046/j.1460-9568.2002.02206.x. [DOI] [PubMed] [Google Scholar]

- Bremmer F, Kubischik M, Pekel M, Lappe M, Hoffmann KP. Linear vestibular self-motion signals in monkey medial superior temporal area. Ann N Y Acad Sci. 1999;871:272–281. doi: 10.1111/j.1749-6632.1999.tb09191.x. [DOI] [PubMed] [Google Scholar]

- Britten KH, Shadlen MN, Newsome WT, Movshon JA. The analysis of visual motion: a comparison of neuronal and psychophysical performance. J Neurosci. 1992;12:4745–4765. doi: 10.1523/JNEUROSCI.12-12-04745.1992. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Britten KH, van Wezel RJ. Electrical microstimulation of cortical area MST biases heading perception in monkeys. Nat Neurosci. 1998;1:59–63. doi: 10.1038/259. [DOI] [PubMed] [Google Scholar]

- Britten KH, Van Wezel RJ. Area MST and heading perception in macaque monkeys. Cereb Cortex. 2002;12:692–701. doi: 10.1093/cercor/12.7.692. [DOI] [PubMed] [Google Scholar]

- Crowell JA, Banks MS. Perceiving heading with different retinal regions and types of optic flow. Percept Psychophys. 1993;53(3):325–337. doi: 10.3758/bf03205187. [DOI] [PubMed] [Google Scholar]

- Dayan P, Abbott LF. Theoretical Neuroscience. Cambridge, MA: MIT Press; 2001. [Google Scholar]

- Dichgans J, Brandt T. Visual-vestibular interaction: effects on self-motion perception and postural control. In: Teuber H-L, Held R, Leibowitz H, editors. Handbook of Sensory Physiology. Vol. 8. Berlin: Springer; 1978. pp. 753–804. [Google Scholar]

- Duffy CJ. MST neurons respond to optic flow and translational movement. J Neurophysiol. 1998;80:1816–1827. doi: 10.1152/jn.1998.80.4.1816. [DOI] [PubMed] [Google Scholar]

- Duffy CJ, Wurtz RH. Sensitivity of MST neurons to optic flow stimuli. I. A continuum of response selectivity to large-field stimuli. J Neurophysiol. 1991;65:1329–1345. doi: 10.1152/jn.1991.65.6.1329. [DOI] [PubMed] [Google Scholar]

- Duffy CJ, Wurtz RH. Response of monkey MST neurons to optic flow stimuli with shifted centers of motion. J Neurosci. 1995;15:5192–5208. doi: 10.1523/JNEUROSCI.15-07-05192.1995. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ernst MO, Banks MS. Humans integrate visual and haptic information in a statistically optimal fashion. Nature. 2002;415:429–433. doi: 10.1038/415429a. [DOI] [PubMed] [Google Scholar]

- Fernandez C, Goldberg JM. Physiology of peripheral neurons innervating otolith organs of the squirrel monkey. I. Response to static tilts and to long-duration centrifugal force. J Neurophysiol. 1976a;39:970–984. doi: 10.1152/jn.1976.39.5.970. [DOI] [PubMed] [Google Scholar]

- Fernandez C, Goldberg JM. Physiology of peripheral neurons innervating otolith organs of the squirrel monkey. II. Directional selectivity and force-response relations. J Neurophysiol. 1976b;39:985–995. doi: 10.1152/jn.1976.39.5.985. [DOI] [PubMed] [Google Scholar]

- Fernandez C, Goldberg JM. Physiology of peripheral neurons innervating otolith organs of the squirrel monkey. III. Response dynamics. J Neurophysiol. 1976c;39:996–1008. doi: 10.1152/jn.1976.39.5.996. [DOI] [PubMed] [Google Scholar]

- Fetsch CR, Turner AH, DeAngelis GC, Angelaki DE. Dynamic reweighting of visual and vestibular cues during self-motion perception. J Neurosci. 2009;29:15601–15612. doi: 10.1523/JNEUROSCI.2574-09.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fetsch CR, Wang S, Gu Y, Deangelis GC, Angelaki DE. Spatial reference frames of visual, vestibular, and multimodal heading signals in the dorsal subdivision of the medial superior temporal area. J Neurosci. 2007;27:700–712. doi: 10.1523/JNEUROSCI.3553-06.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Georgopoulos AP, Schwartz AB, Kettner RE. Neuronal population coding of movement direction. Science. 1986;233:1416–1419. doi: 10.1126/science.3749885.

- Gibson JJ. The Perception of the Visual World. Cambridge, MA, USA: Riverside Press; 1950.

- Green DM, Swets JA. Signal Detection Theory and Psychophysics. New York: Wiley; 1966.

- Gu Y, Angelaki DE, DeAngelis GC. Neural correlates of multisensory cue integration in macaque MSTd. Nat Neurosci. 2008;11:1201–1210. doi: 10.1038/nn.2191.

- Gu Y, DeAngelis GC, Angelaki DE. A functional link between area MSTd and heading perception based on vestibular signals. Nat Neurosci. 2007;10:1038–1047. doi: 10.1038/nn1935.

- Gu Y, DeAngelis GC, Angelaki DE. Contribution of visual and vestibular signals to heading perception revealed by reversible inactivation of area MSTd. Program No. 558.11. 2009 Neuroscience Meeting Planner. Chicago, IL: Society for Neuroscience; 2009. Online.

- Gu Y, Fetsch CR, Adeyemo B, DeAngelis GC, Angelaki DE. Decoding of MSTd population activity accounts for variations in the precision of heading perception. Neuron. 2010;66:596–609. doi: 10.1016/j.neuron.2010.04.026.

- Gu Y, Watkins PV, Angelaki DE, DeAngelis GC. Visual and nonvisual contributions to three-dimensional heading selectivity in the medial superior temporal area. J Neurosci. 2006;26:73–85. doi: 10.1523/JNEUROSCI.2356-05.2006.

- Hillis JM, Watt SJ, Landy MS, Banks MS. Slant from texture and disparity cues: optimal cue combination. J Vis. 2004;4:967–992. doi: 10.1167/4.12.1.

- Jazayeri M, Movshon JA. Optimal representation of sensory information by neural populations. Nat Neurosci. 2006;9:690–696. doi: 10.1038/nn1691.

- Knill DC, Pouget A. The Bayesian brain: the role of uncertainty in neural coding and computation. Trends Neurosci. 2004;27:712–719. doi: 10.1016/j.tins.2004.10.007.

- Knill DC, Saunders JA. Do humans optimally integrate stereo and texture information for judgments of surface slant? Vision Res. 2003;43:2539–2558. doi: 10.1016/s0042-6989(03)00458-9.

- Lappe M, Bremmer F, Pekel M, Thiele A, Hoffmann KP. Optic flow processing in monkey STS: a theoretical and experimental approach. J Neurosci. 1996;16:6265–6285. doi: 10.1523/JNEUROSCI.16-19-06265.1996.

- Morgan ML, DeAngelis GC, Angelaki DE. Multisensory integration in macaque visual cortex depends on cue reliability. Neuron. 2008;59:662–673. doi: 10.1016/j.neuron.2008.06.024.

- Page WK, Duffy CJ. Heading representation in MST: sensory interactions and population encoding. J Neurophysiol. 2003;89:1994–2013. doi: 10.1152/jn.00493.2002.

- Parker AJ, Newsome WT. Sense and the single neuron: probing the physiology of perception. Annu Rev Neurosci. 1998;21:227–277. doi: 10.1146/annurev.neuro.21.1.227.

- Pouget A, Zhang K, Deneve S, Latham PE. Statistically efficient estimation using population coding. Neural Comput. 1998;10:373–401. doi: 10.1162/089976698300017809.

- Purushothaman G, Bradley DC. Neural population code for fine perceptual decisions in area MT. Nat Neurosci. 2005;8:99–106. doi: 10.1038/nn1373.

- Sanger TD. Probability density estimation for the interpretation of neural population codes. J Neurophysiol. 1996;76:2790–2793. doi: 10.1152/jn.1996.76.4.2790.

- Schaafsma SJ, Duysens J. Neurons in the ventral intraparietal area of awake macaque monkey closely resemble neurons in the dorsal part of the medial superior temporal area in their responses to optic flow patterns. J Neurophysiol. 1996;76:4056–4068. doi: 10.1152/jn.1996.76.6.4056.

- Schlack A, Hoffmann KP, Bremmer F. Interaction of linear vestibular and visual stimulation in the macaque ventral intraparietal area (VIP). Eur J Neurosci. 2002;16:1877–1886. doi: 10.1046/j.1460-9568.2002.02251.x.

- Seung HS, Sompolinsky H. Simple models for reading neuronal population codes. Proc Natl Acad Sci U S A. 1993;90:10749–10753. doi: 10.1073/pnas.90.22.10749.

- Si X, Angelaki DE, Dickman JD. Response properties of pigeon otolith afferents to linear acceleration. Exp Brain Res. 1997;117:242–250. doi: 10.1007/s002210050219.

- Siegel RM, Read HL. Analysis of optic flow in the monkey parietal area 7a. Cereb Cortex. 1997;7:327–346. doi: 10.1093/cercor/7.4.327.

- Takahashi K, Gu Y, May PJ, Newlands SD, DeAngelis GC, Angelaki DE. Multimodal coding of three-dimensional rotation and translation in area MSTd: comparison of visual and vestibular selectivity. J Neurosci. 2007;27:9742–9756. doi: 10.1523/JNEUROSCI.0817-07.2007.

- Tanaka K, Fukada Y, Saito HA. Underlying mechanisms of the response specificity of expansion/contraction and rotation cells in the dorsal part of the medial superior temporal area of the macaque monkey. J Neurophysiol. 1989;62:642–656. doi: 10.1152/jn.1989.62.3.642.

- Tanaka K, Hikosaka K, Saito H, Yukie M, Fukada Y, Iwai E. Analysis of local and wide-field movements in the superior temporal visual areas of the macaque monkey. J Neurosci. 1986;6:134–144. doi: 10.1523/JNEUROSCI.06-01-00134.1986.

- van Beers RJ, Sittig AC, Denier van der Gon JJ. Integration of proprioceptive and visual position-information: An experimentally supported model. J Neurophysiol. 1999;81:1355–1364. doi: 10.1152/jn.1999.81.3.1355.

- Warren WH. Optic flow. In: Chalupa LM, Werner JS, editors. The Visual Neurosciences. Cambridge, MA: MIT Press; 2003. pp. 1247–1259.

- Zhang T, Britten KH. Microstimulation of area VIP biases heading perception in monkeys. Program No. 339.9. 2003 Neuroscience Meeting Planner. New Orleans, LA: Society for Neuroscience; 2003. Online.